Characterizing Student Engagement Moods for Dropout Prediction in Question Pool Websites

Abstract.

Problem-Based Learning (PBL) is a popular approach to instruction that helps students get hands-on training by solving problems. Question Pool websites (QPs) such as LeetCode, Code Chef, and Math Playground support PBL by supplying students with authentic, diverse, and contextualized questions. Nonetheless, empirical findings suggest that 40% to 80% of students registered in QPs drop out in less than two months. This research is the first attempt to understand and predict student dropouts from QPs by exploiting students' engagement moods. Adopting a data-driven approach, we identify five engagement moods for QP students, namely challenge-seeker, subject-seeker, interest-seeker, joy-seeker, and non-seeker. We find that students have collective preferences for answering questions in each engagement mood, and that deviating from those preferences significantly increases their probability of dropping out. Finally, this paper introduces a new hybrid machine learning model, which we call Dropout-Plus, for predicting student dropouts in QPs. Test results on a popular QP in China with nearly 10K students show that Dropout-Plus outperforms rival algorithms in dropout prediction in terms of accuracy, F1-measure, and AUC. We conclude with design suggestions for QP managers and online learning professionals to reduce student dropouts.

1. Introduction

Problem-Based Learning (PBL) is a student-centered approach to instruction in which students learn by solving problems (Pan et al., 2020). Question Pool websites (QPs) such as LeetCode, Code Chef, Timus, Jutge, and Math Playground support PBL by supplying students with a variety of questions, quizzes, and competitions in different subjects (Xia et al., 2019; Pereira et al., 2019; Wasik et al., 2018). However, empirical statistics from these websites show that 40% to 80% of students registered in QPs tend to drop out¹ before completing their second month of membership (LeetCode, 2019; Chef, 2019; Timus, 2019; Jutge, 2019; Playground, 2019). Practical insights into this phenomenon can therefore help educators and online learning professionals improve their QP designs and reduce dropouts.

¹ Depending on the research context, the terms attrition, churning, and dropping out are used interchangeably to denote similar concepts.

Nevertheless, most empirical studies to date on computer-mediated education focus only on dropouts from Massive Open Online Courses (MOOCs) and Community Question Answering (CQA) websites (Srba and Bielikova, 2016; Nagrecha et al., 2017; Mogavi et al., 2019; Chen et al., 2016). There is therefore a research gap in studying student dropouts on comparatively new platforms such as QPs. Our research aims to fill this gap by examining student dropouts in QPs through the lens of student engagement moods. In doing so, we draw the attention of Human-Computer Interaction (HCI) and Computer Supported Cooperative Work (CSCW) researchers to the importance of personalization in QPs. More formally, this work answers three research questions:

- RQ1: What are student engagement moods in QPs?
- RQ2: How are student engagement moods and dropout rates correlated?
- RQ3: Can student engagement moods help to predict student dropouts more precisely?

We use a probabilistic graphical model known as a Hidden Markov Model (HMM) to extract and visually distinguish different student engagement moods in QPs. We identify five dominant student engagement moods: (E1) challenge-seeker, (E2) subject-seeker, (E3) interest-seeker, (E4) joy-seeker, and (E5) non-seeker. We distinguish each mood by the data-driven behavioral patterns that emerge as students interact with QPs. We draw on the Hexad user types of Tondello et al. (Tondello et al., 2016) to name the extracted engagement moods, but the context and concepts we introduce are original and specific to QPs.

To the best of our knowledge, this work is the first to propose a typology of student behaviors in QPs. Using a data-driven approach, we identify distinctive behavioral patterns among QP students. For example, students in the challenge-seeker mood look for challenging questions commensurate with their high-level skills. Students in the subject-seeker mood are best described as mission- or task-oriented individuals. Interestingly, they do not search much for questions and often restrict themselves to a predefined study plan around specific subject matter and contexts. Students in this mood are more in need of a mentor, guide, or study plan to keep them focused and help them find their questions easily. Students in the interest-seeker mood rummage through a variety of topics to find questions of interest; however, unlike challenge-seekers, they do not chase challenging questions.

Furthermore, we notice that students in the joy-seeker and non-seeker moods are less committed than students in the other moods to studying and exercising their knowledge. Joy-seeker students have a high tendency to game the platform or misuse it for purposes other than education (Baker, 2005; d. Baker et al., 2008; Baker et al., 2004). Technically speaking, students who exploit the platform's properties rather than their knowledge or skills to succeed on an educational platform are considered to be gaming the platform (Baker, 2007). Finally, students in the non-seeker mood tend to leave the platform earlier than students in other moods. These students are seldom determined to answer any questions; they only check the platform to see what is new and whether any question happens to attract their attention. These findings underline the familiar point that a one-size-fits-all QP design does not suit all students (Teasley, 2017; Chung et al., 2017; Lessel et al., 2019).

We also find that students have collective preferences for answering questions in each engagement mood, and that deviating from those preferences significantly increases their probability of dropping out. Finally, building on the HMM findings and insights about student dropouts, we feed the HMM results to a Long Short-Term Memory (LSTM) recurrent neural network and test whether this improves the accuracy of dropout predictions compared with a plain LSTM model and five other baselines: XGBoost, Random Forest, Decision Tree (DT), Logistic Regression, and Support Vector Machine (SVM) (Vafeiadis et al., 2015; Nagrecha et al., 2017). Combining the HMM with the LSTM recurrent neural network improves the accuracy of dropout predictions. More precisely, we reach an accuracy of 78.22%, an F1-measure of 81.28%, and an AUC of 89.10%, which to date sets the bar for dropout prediction models in QPs.

Contributions. This work is important to HCI and CSCW because it presents the first typology and dropout prediction model for QP students. Furthermore, it reinforces the need for personalizing QP websites by revealing that different students have different preferences for selecting and answering QP questions. Such knowledge could help QPs to make more informed design decisions. We also provide some design suggestions for QP managers and online learning professionals to reduce student dropouts in QPs.

2. Background

Question Pool websites (QPs) provide students with a collection of questions for learning and practicing their knowledge online (Xia et al., 2019). QPs such as Timus, Jutge.org, Optil.io, Code Chef, and HDU virtual judge are among the most popular web-based platforms for programming education (Petit et al., 2012; Wasik et al., 2018; Xia et al., 2019; Wasik et al., 2016). These platforms usually include a large repository of programming questions from which students choose questions to answer. Students submit their solutions to the QP and wait for feedback on whether their code is correct. Dropout prediction is a challenging but necessary task for ensuring the sustainability and service continuity of these platforms.

In this paper, we concentrate on a popular and publicly accessible QP in China known as the HDU virtual judge platform (http://code.hdu.edu.cn/; henceforth HDU). The website originally belonged to Hangzhou Dianzi University's ACM team and is designed to provide students with hands-on exercises to hone their programming and coding skills (Xia et al., 2019). On average, HDU hosts more than one hundred students every day and receives more than 300K code submissions every month. It is also a familiar platform for HCI researchers (Xia et al., 2019). Figure 1 shows snapshots of the HDU website.

Following prior work (Dror et al., 2012; Rowe, 2013; Yang et al., 2018a), we formulate dropout prediction as a binary classification task. Our definition of dropout is similar to that of Mogavi et al. (Mogavi et al., 2019), which splits a dataset temporally into observation and inspection periods. Students whose number of solution submissions in the inspection period drops to less than 20% of their number in the observation period are considered dropped students. Since QP platforms usually do not have fixed start and end points as MOOCs do (Nagrecha et al., 2017), we set the observation and inspection periods as is done for CQA platforms (Mogavi et al., 2019; Dror et al., 2012; Pudipeddi et al., 2014). The observation and inspection windows are of equal length.
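The 20% dropout rule can be stated as a one-line labeling function. The sketch below is illustrative: the function name and the hypothetical per-student counts are ours, and only the strict "less than 20%" threshold comes from the text.

```python
def label_dropout(obs_submissions: int, insp_submissions: int,
                  threshold: float = 0.2) -> int:
    """Return 1 (dropped) if the inspection-period submission count falls
    below `threshold` times the observation-period count, else 0."""
    if obs_submissions <= 0:
        # Such students are excluded from the study (see Section 4).
        raise ValueError("student has no observation-period submissions")
    return int(insp_submissions < threshold * obs_submissions)


# Hypothetical students: (observation-period count, inspection-period count)
counts = {"s1": (50, 5), "s2": (50, 10), "s3": (20, 30)}
labels = {sid: label_dropout(o, i) for sid, (o, i) in counts.items()}
print(labels)  # {'s1': 1, 's2': 0, 's3': 0}
```

Student s2 sits exactly at 20% and is therefore labeled as continuing, because the rule requires strictly fewer submissions.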

3. Related Work

3.1. Engagement: Conceptualization and Detection Techniques

The HCI and CSCW literature is abundant with various conceptualizations of engagement (Dixon et al., 2019; Foley et al., 2020; Kelders and Kip, 2019; Aslan et al., 2019; Carlton et al., 2019; Thebault-Spieker et al., 2016). However, there is a lack of consensus on the definition of engagement (Barkley, 2009). The three most widely used conceptualizations of engagement moods are behavioral, emotional, and cognitive (Fredricks et al., 2004; Alyuz et al., 2017). In research on student learning, behavioral engagement refers to the attention, participation, and effort a student puts into his or her academic activities (Nguyen et al., 2016; Cappella et al., 2013; Alyuz et al., 2017). For example, students can be in off-task or on-task moods when doing their learning tasks (Alyuz et al., 2017). Emotional engagement refers to students' affective responses to learning activities and to the individuals involved in those activities (Nguyen et al., 2016; Park et al., 2011). For instance, students might be concerned about how their instructors perceive their performance. Finally, cognitive engagement concerns how intrinsically invested and motivated students are in their learning process (Nguyen et al., 2016). For example, students might make mental efforts to debate in an online forum (Hind et al., 2017).

The complexity of discovering student engagement moods has given rise to a diversity of data mining techniques (Bosch, 2016; Aslan et al., 2019; Waldrop et al., 2018; Moubayed et al., 2020). The K-means clustering algorithm is one of the most popular techniques researchers use to extract naturally occurring typologies of students' engagement moods (Moubayed et al., 2020; Saenz et al., 2011; Hamid et al., 2018). Saenz et al. use K-means and exploratory cluster analysis to extract different engagement moods for college students (Saenz et al., 2011). By comparing similarities and dissimilarities between different features, they characterize 15 clusters. Furtado et al. use a combination of hierarchical and non-hierarchical clustering algorithms to identify contributors' profiles on CQA platforms (Furtado et al., 2013). They categorize CQA contributors' behavior into 10 types based on how much and how well they contribute to the platform over time. However, K-means is most appropriate when Euclidean distance is a meaningful measure of similarity between feature vectors (He et al., 2015).

Latent variable models such as Hidden Markov Models (HMMs) are also popular. Faucon et al. use a semi-Markov model to simulate and capture the behavioral engagement of MOOC students (Faucon et al., 2016). They provide a graphical representation of the dynamics of transitions between states such as forum participation and video watching. Mogavi et al. combine an HMM and a Reinforcement Learning Model (RLM) to capture users' flow experience on a popular CQA platform (Mogavi et al., 2019). Flow experience is a positive mental state in psychology that occurs when the challenge level of an activity (e.g., answering a question, doing a task, or solving a homework problem) is commensurate with the user's skill level (Mogavi et al., 2019; Pace, 2004; Csikszentmihalyi, 1975). Our paper is the first to use an HMM to characterize students' engagement moods in QPs. Understanding students' engagement moods can help educators manage students' behaviors better and design more customized curricula (Shernoff and Csikszentmihalyi, 2009).

3.2. Dropouts: Prediction and Control

Educational Data Mining (EDM) is a relatively new discipline that has recently caught the attention of the HCI and CSCW communities (Costa et al., 2017; Juhaňák et al., 2019; Maldonado-Mahauad et al., 2018). One of EDM's primary interests is to know whether students will drop out soon or continue their studies until the end of their courses, or at least for a long time (Bassen et al., 2020; Kim et al., 2014; Romero and Ventura, 2016). Qiu et al. introduce a latent variable model called LadFG, based on students' demographics, forum activities, and learning behaviors, to predict students' success in completing the courses they start on XuetangX, one of the largest MOOC platforms in China (Qiu et al., 2016). They show that having friends on MOOCs can dramatically increase, by threefold, students' chances of receiving the final certificate of a course, but, surprisingly, being more active on the platform does not guarantee that a student will obtain the final certificate. Wang et al. propose a hybrid deep learning-based dropout prediction model that combines Convolutional Neural Network (CNN) and Recurrent Neural Network (RNN) architectures to improve the accuracy of dropout prediction in MOOCs (Wang et al., 2017). They show that their model can achieve high accuracy comparable to feature-engineered data mining methods. As another example, Xing et al. propose a simple deep learning-based model that uses features such as students' access times to the platform and their history of active days to predict dropouts (Xing and Du, 2018). They suggest estimating students' dropout probability weekly so that better measures can be taken to prevent dropouts. Student engagement is a key factor across all of these studies. In fact, engagement can be considered a basis for student retention, and a lack of it can undermine any positive learning outcomes (Zainuddin et al., 2020). Following this rationale, we use students' engagement moods to predict dropouts in QPs.

4. Data Description

After receiving approval from our local university's Institutional Review Board (IRB), we follow the ethical guidelines of AoIR (https://aoir.org/ethics/) in studying student behavior on Hangzhou Dianzi University's QP platform (known as HDU). The dataset we study includes nearly 10K student records from January 25th to July 15th, 2019 (172 days). We use the student data before April 21st as the observation period that feeds the prediction models, and the data after that date to inspect dropouts (similar to (Pudipeddi et al., 2014; Naito et al., 2018)). More than half of the students drop out of the platform during the inspection period. We exclude students with no submissions in the observation period from our study, both to avoid including students who had already dropped out and to alleviate the imbalance between the class labels of dropped and continuing students (see (Mogavi et al., 2019; Kwon et al., 2019)). Table 1 summarizes the main statistics of our dataset.

Table 1. Main statistics of our dataset.

| Measure | Total | Min | Max | Median | Mean | SD |
|---|---|---|---|---|---|---|
| Answer submissions | 1.2M | 1 | 62 | 5.23 | 7.14 | 18.53 |
| Accepted answers | 227K | 0 | 39 | 4.16 | 5.01 | 12.89 |
| Endurance (minutes) | N/A | 0 | 209.65 | 15.91 | 21.38 | 67.43 |
| Attendance gap (hours) | N/A | 1.18 | 673.38 | 134.22 | 192.48 | 125.68 |

Number of students = 9,941; number of dropped students = 5,261.
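The temporal split described above can be sketched as follows. This is a minimal illustration assuming each submission is recorded as a `(student_id, day)` pair; the record format, the function name, and the choice to assign April 21st itself to the inspection period are our assumptions. Under that assumption, both windows span 86 days, which matches the equal-length windows described in Section 2.

```python
from datetime import date

# Boundaries from the text; assigning April 21st to the inspection period is an assumption.
START, SPLIT, END = date(2019, 1, 25), date(2019, 4, 21), date(2019, 7, 15)


def split_periods(submissions):
    """Split (student_id, day) records into observation [START, SPLIT) and
    inspection [SPLIT, END] periods, keeping only students who submitted
    at least once during the observation period."""
    observation, inspection = {}, {}
    for student, day in submissions:
        if START <= day < SPLIT:
            observation.setdefault(student, []).append(day)
        elif SPLIT <= day <= END:
            inspection.setdefault(student, []).append(day)
    # Students with no observation-period activity are excluded entirely.
    inspection = {s: days for s, days in inspection.items() if s in observation}
    return observation, inspection


obs_days = (SPLIT - START).days       # Jan 25 .. Apr 20
insp_days = (END - SPLIT).days + 1    # Apr 21 .. Jul 15, inclusive
print(obs_days, insp_days, obs_days + insp_days)  # 86 86 172
```

Students who remain in `observation` but never appear in `inspection` have an inspection count of zero and are therefore labeled as dropped.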

5. Research Overview

We use an unsupervised HMM to decode student engagement moods and a supervised LSTM network to predict dropouts. Both components are trained on student data features from the observation period. The HMM inputs are simple observable features that indicate student performance, challenge, endurance, and attendance gap after each submission to the QP. The parameters of the HMM are estimated by iterating a standard Expectation Maximization (EM) algorithm known as Baum-Welch (Baum et al., 1970). Five dominant student engagement moods are identified: (E1) challenge-seeker, (E2) subject-seeker, (E3) interest-seeker, (E4) joy-seeker, and (E5) non-seeker. We run a user study with 26 local students to evaluate our HMM findings.

After decoding the student engagement moods with the HMM, we associate each platform question with the engagement moods in which students are most likely to submit solutions to it. We notice that students have collective preferences for answering questions in each engagement mood, and that deviating from those preferences significantly increases their probability of dropping out.

Finally, we feed the generated engagement moods and the questions' associativity features, along with other common features, to an LSTM network to predict student dropouts in the inspection period. All dropout predictions are reported based on 10-fold cross-validation.
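Here is a plain 10-fold split, sketched in pure Python. The paper does not say whether its folds were shuffled or stratified. This version shuffles once with a fixed seed and deals indices round-robin into folds, which is an assumption rather than the authors' procedure.

```python
import random


def k_fold_indices(n_samples: int, k: int = 10, seed: int = 0):
    """Yield (train_idx, test_idx) lists for k-fold cross-validation.

    Indices are shuffled once, then dealt round-robin into k folds, so every
    index lands in exactly one test fold and fold sizes differ by at most one.
    """
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    for i, test_idx in enumerate(folds):
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield sorted(train_idx), sorted(test_idx)


# With the 9,941 students of Table 1, each test fold holds 994 or 995 students.
sizes = [len(test) for _, test in k_fold_indices(9941)]
print(sorted(set(sizes)))  # [994, 995]
```

Reported metrics would then be averaged over the ten held-out folds.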

6. Students’ Engagement Moods (RQ1)

6.1. Model Construction

Hidden Markov Models (HMMs) are statistical tools for making inferences about latent (unobservable) variables by analyzing manifest (observable) features (Kokkodis, 2019; Shi et al., 2019; Chen et al., 2017). In education systems, HMMs have been used to detect various phenomena such as student social loafing (Zhang et al., 2017), flow zone engagement (Mogavi et al., 2019), and academic pathway choices (Gruver et al., 2019). The widespread use of HMMs in the literature and their capability to capture complex data structures (Gruver et al., 2019) motivate us to apply HMMs to distinguish between student engagement moods on QP platforms. We use the hmmlearn library in Python to train our model.

Inputs. In order to build an HMM, we utilize the manifest features for student performance, challenge, endurance, and attendance gap. We pick the manifest features by performing an extensive thematic analysis (Parsons et al., 2015; Ward et al., 2009) across the literature about student engagement (Hamari et al., 2016; Hoffman et al., 2019; Morgan et al., 2018). The features we use are as follows.

- Performance: The feedback the QP platform provides for each submission, for example, whether the answer is Wrong or Accepted.
- Challenge: The past acceptance rate of a question, which is often shown next to each question as a guide, serves as a proxy for its challenge.
- Endurance: Student endurance is the time a student spends on the platform answering questions and compiling code in one session. Following Hara et al. (Hara et al., 2018), we define a "session" on QP platforms as a period in which the interval between two consecutive submissions does not exceed one hour. We measure student endurance in minutes.
- Attendance gap: A student's attendance gap is the time interval between two consecutive sessions. We measure the attendance gap in hours.

We should mention here that these features serve only as cues to infer students’ cognitive (performance and challenge) and behavioral (endurance and attendance gap) engagement moods (Kuh, 2001; Handelsman et al., 2005; Ouimet and Smallwood, 2005; Langley, 2006).
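Below is a minimal sketch of the session rule together with the endurance and attendance-gap features. The one-hour threshold comes from the text. Two choices are our assumptions: measuring endurance as the span from the first to the last submission of a session, and measuring the attendance gap from the end of one session to the start of the next. The helper names are hypothetical.

```python
from datetime import datetime, timedelta

SESSION_GAP = timedelta(hours=1)  # max interval between submissions within a session


def sessionize(timestamps):
    """Group submission timestamps into sessions; a new session starts when
    the interval since the previous submission exceeds one hour."""
    sessions = []
    for ts in sorted(timestamps):
        if sessions and ts - sessions[-1][-1] <= SESSION_GAP:
            sessions[-1].append(ts)
        else:
            sessions.append([ts])
    return sessions


def endurance_minutes(sessions):
    # Assumed proxy: span from first to last submission in each session, in minutes.
    return [(s[-1] - s[0]).total_seconds() / 60 for s in sessions]


def attendance_gaps_hours(sessions):
    # Assumed definition: end of one session to start of the next, in hours.
    return [(nxt[0] - prev[-1]).total_seconds() / 3600
            for prev, nxt in zip(sessions, sessions[1:])]


t0 = datetime(2019, 3, 1, 9, 0)
stamps = [t0, t0 + timedelta(minutes=20), t0 + timedelta(minutes=50),
          t0 + timedelta(hours=5), t0 + timedelta(hours=5, minutes=30)]
sessions = sessionize(stamps)
print(len(sessions), endurance_minutes(sessions))  # 2 [50.0, 30.0]
```

Under this definition a single-submission session has zero endurance, which agrees with the minimum of 0 in Table 1. Every attendance gap also exceeds one hour, consistent with the reported minimum of 1.18 hours.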

Parameters. Hereafter, we use a four-element vector $o_t = (p_t, c_t, e_t, g_t)$ to refer to the student's observed performance, challenge, endurance, and attendance gap after each answer submission to the QP at time $t$. The HMM assumes that the observations are generated by an underlying sequence of hidden variables $q_t \in S$, where $S = \{s_1, s_2, \dots, s_N\}$ is the hidden state space. For convenience, we use a triplet $\lambda = (A, B, \pi)$ to denote the HMM we train to extract the student engagement moods. The transition matrix $A = [a_{ij}]$, with $a_{ij} = P(q_{t+1} = s_j \mid q_t = s_i)$, gives the probabilities of moving between engagement moods over time. The emission matrix $B = [b_j(o_t)]$, with $b_j(o_t) = P(o_t \mid q_t = s_j)$, gives the conditional probability that observation $o_t$ is emitted (generated) from engagement mood $s_j$. The vector $\pi = (\pi_1, \dots, \pi_N)$, with $\pi_i = P(q_1 = s_i)$, denotes the initial probabilities of being in each engagement mood of $S$. The initial probabilities are often assumed to be $\pi_i = 1/N$, where $N = |S|$ is the cardinality of the hidden state space (i.e., the number of engagement moods) (Mogavi et al., 2019).

Model training. We optimize the parameters $\lambda$ on student behavior in the observation period with a standard EM algorithm known as Baum-Welch (Baum et al., 1970). The aim is to find the parameters that maximize $P(O \mid \lambda)$, where $O = (o_1, o_2, \dots, o_T)$ is the observation sequence. To reduce the risk of EM getting stuck in a local maximum, we train the HMM from ten random seeds until they converge to the same (presumably global) maximum.
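Baum-Welch increases $P(O \mid \lambda)$ at each iteration. To make that objective concrete, the sketch below evaluates it with the scaled forward algorithm. It assumes discrete (symbol-indexed) observations for simplicity; the paper's hmmlearn model and its emission type are not reproduced here, and the toy parameters are illustrative only.

```python
import math


def forward_log_likelihood(obs, pi, A, B):
    """Return log P(O | lambda) for a discrete HMM lambda = (A, B, pi)
    using the scaled forward algorithm.

    obs: list of symbol indices; pi[i]: initial probability of state i;
    A[i][j]: P(q_{t+1}=j | q_t=i); B[i][k]: P(o_t=k | q_t=i).
    """
    n = len(pi)
    alpha = [pi[i] * B[i][obs[0]] for i in range(n)]
    log_lik = 0.0
    for t in range(len(obs)):
        if t > 0:
            alpha = [sum(alpha[i] * A[i][j] for i in range(n)) * B[j][obs[t]]
                     for j in range(n)]
        scale = sum(alpha)            # P(o_t | o_1..o_{t-1}, lambda)
        log_lik += math.log(scale)
        alpha = [a / scale for a in alpha]  # rescale to avoid underflow
    return log_lik


# Toy two-state model with uniform initial probabilities (pi_i = 1/N).
pi = [0.5, 0.5]
A = [[0.7, 0.3], [0.4, 0.6]]
B = [[0.9, 0.1], [0.2, 0.8]]
print(round(math.exp(forward_log_likelihood([0, 1], pi, A, B)), 4))  # 0.1915
```

With several random restarts, a reasonable practice is to keep the candidate $\lambda$ with the highest total log-likelihood over all students' sequences.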

The HMM representation is completed by choosing the best number of hidden states (Mogavi et al., 2019). Although this task appears conceptually simple, finding the best number of hidden states in a meaningful way is quite challenging (Van Der Aalst, 2011; Mogavi et al., 2019). The main reason is that an HMM with too few hidden states cannot adequately capture the underlying behavioral dynamics, whereas an HMM with too many hidden states is difficult to interpret (Okamoto, 2017). We therefore need a criterion to balance the two, and we use the conventional Akaike Information Criterion (AIC) (Shang and Cavanaugh, 2008) and Bayesian Information Criterion (BIC) (Chen et al., 1998) in our model training (also see (Mogavi et al., 2019)). Figure 2 plots AIC and BIC against the number of hidden states in our trained HMMs (2 to 10 hidden states are tested). We choose an HMM with $N = 5$ hidden states, since both AIC and BIC reach their lowest values at this setting. Smaller AIC and BIC values indicate a model that is more descriptive yet less complex (Mogavi et al., 2019).
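The state-count selection can be sketched with the standard definitions $\mathrm{AIC} = 2k - 2\ln L$ and $\mathrm{BIC} = k \ln n - 2\ln L$. The parameter count below assumes a discrete HMM with $M$ observation symbols. This is an assumption for illustration, since the paper's emission model may have a different number of free parameters. The log-likelihood values would come from the trained HMMs.

```python
import math


def hmm_num_params(n_states: int, n_symbols: int, learn_initial: bool = True) -> int:
    """Free parameters of a discrete HMM with N states and M observation symbols:
    transitions N(N-1), emissions N(M-1), plus N-1 initial probabilities
    unless pi is fixed (e.g., uniform)."""
    n, m = n_states, n_symbols
    k = n * (n - 1) + n * (m - 1)
    return k + (n - 1 if learn_initial else 0)


def aic(log_lik: float, k: int) -> float:
    return 2 * k - 2 * log_lik


def bic(log_lik: float, k: int, n_obs: int) -> float:
    return k * math.log(n_obs) - 2 * log_lik


def select_n_states(log_liks, n_symbols, n_obs, criterion="bic"):
    """Return the state count with the lowest AIC or BIC, plus all scores.
    log_liks maps N -> maximized log-likelihood of the trained N-state HMM."""
    scores = {}
    for n, ll in log_liks.items():
        k = hmm_num_params(n, n_symbols)
        scores[n] = bic(ll, k, n_obs) if criterion == "bic" else aic(ll, k)
    return min(scores, key=scores.get), scores
```

Because BIC's penalty grows with $\ln n$, it favors fewer states than AIC on large datasets. The text reports that both criteria agree on five states here.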

6.2. Clustering Analysis: Pattern Discovery

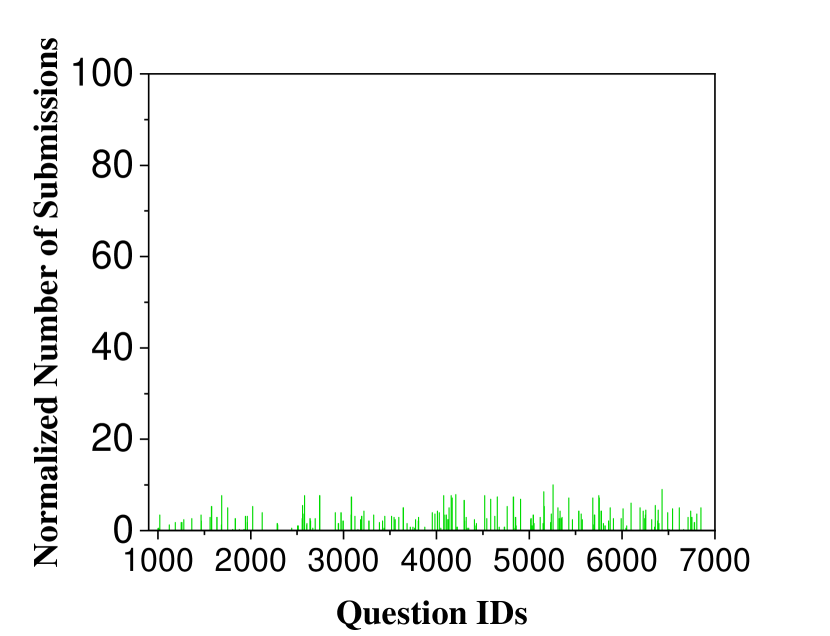

Following prior work, we use the data distributions within each hidden state to characterize and visualize the distinctions between engagement moods (Furtado et al., 2013; Mogavi et al., 2019; Babbie, 2016). The features we inspect here are the number of incorrect and accepted answers, the average ease of questions, the average time spent on the platform, the average time gap in attendance, and the number of repeated submissions. They are inspired by the manifest features introduced earlier, but instead of describing an individual student, they capture the collective behavior of all students in a given hidden state. The cumulative distribution functions (CDFs) of these features are plotted in Figure 3. We also use frequency plots of student submissions over question IDs to show the question-answering patterns in each hidden state (Figure 4). The number of submissions in each hidden state is normalized to a value between 0 and 100 to facilitate visualizing and comparing the patterns across hidden states. The distinguishing characteristics of each hidden state are as follows:

(E1) Challenge-seeker (Hidden State 1): As shown in Figure 3(c), students in this mood are best described by their tendency to look for more challenging questions, i.e., questions with the lowest acceptance rates. Figures 3(d) and 3(e) show that they also spend the longest average time on the platform and attend it more frequently than students in the other engagement moods. Furthermore, Figure 4(a) shows that challenge-seekers show more interest in the last questions on the platform.

(E2) Subject-seeker (Hidden State 2): As shown in Figure 4(b), students in this mood tend to answer specific sets of questions, usually working through questions of a specific context (e.g., greedy algorithms) sequentially. Furthermore, Figure 3(d) shows that subject-seekers rank second, after challenge-seekers, in time spent on the platform. Figures 3(a) and 3(b) show that students in the subject-seeker mood have the largest average number of incorrect answers on the platform, whereas their average number of accepted answers is quite similar to that of challenge-seekers (the distributions are also similar).

(E3) Interest-seeker (Hidden State 3): Figure 4(c) shows that students in this mood do not answer specific-context questions and regularly search for questions of interest; the question types they answer have the largest variance. The distribution of question easiness in Figure 3(c) shows no tangible difference from the other moods, except the challenge-seeker mood. As shown in Figure 3(b), students in this mood have the highest average number of accepted answers after those in the joy-seeker mood. Moreover, according to Figure 3(a), interest-seekers' distribution of incorrect answers is close to uniform, which sharply distinguishes them from the other moods.

(E4) Joy-seeker (Hidden State 4): As shown in Figures 3(c) and 3(f), students in this mood tend to answer the easiest questions on the platform in a highly repetitive manner. Interestingly, they choose their questions from a small, selective set of QP questions (probably those with compilation loopholes) (see Figure 4(d)). Their number of accepted answers is also the highest among all moods (see Figure 3(b)). Based on these signs, we conclude that these students are at the highest risk of gaming the platform (Baker et al., 2004; Baker, 2005) compared with the other mood groups. Finally, the distribution of incorrect answers for this mood resembles a mixture of two Gaussian distributions, in sharp contrast to the other mood groups (see Figure 3(a)).

(E5) Non-seeker (Hidden State 5): According to Figure 3(e), students in this mood are distinguished by the largest average attendance gap among all moods, which means that they seldom visit the platform. The least average time spent on the platform is another characteristic of this mood (see Figure 3(d)). As expected, these short visits result in the lowest numbers of incorrect answers, accepted answers, and repeated submissions (see Figures 3(a), 3(b), and 3(f)).
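The two per-state summaries behind Figures 3 and 4 can be sketched as follows: an empirical CDF of a feature, and per-question submission counts rescaled to 0 to 100. The paper does not state how the 0-100 normalization is done. Min-max scaling within a hidden state is our assumption, and the question IDs in the test are hypothetical.

```python
from bisect import bisect_right


def ecdf(values):
    """Return F(x) = fraction of values <= x (the empirical CDF)."""
    data = sorted(values)
    n = len(data)
    return lambda x: bisect_right(data, x) / n


def normalize_0_100(counts):
    """Min-max scale per-question submission counts within one hidden state
    to the range [0, 100] (assumed normalization)."""
    lo, hi = min(counts.values()), max(counts.values())
    span = hi - lo or 1  # avoid division by zero when all counts are equal
    return {q: 100 * (c - lo) / span for q, c in counts.items()}
```

Evaluating `ecdf` for each hidden state over a common grid of x values yields curves like those in Figure 3.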


6.3. HMM Evaluation: Experience Sampling

With the approval of our university's Institutional Review Board (IRB), we conduct a preliminary user study with 26 local students to evaluate the accuracy of the resolved hidden states. We recruit participants from our local universities through a combination of snowball and convenience sampling. The participants (9 females and 17 males) are all undergraduate students with computer majors, aged 18 to 23 (mean 19.46, SD 1.36). They took introductory programming courses (i.e., CS1 and CS2 (Hertz, 2010)) in the previous semester and now use the QP website to hone their problem-solving and algorithm design skills. Thirteen students have never used any QP before, and the others have less than one year of experience with QPs in subjects other than programming. All participants sign an informed consent form before the study begins. Due to the Covid-19 pandemic restrictions, participants take part in our study from the safety and comfort of their homes. As a token of appreciation, we compensate each participant's time with a small cash payment (HK$50) at the end of the study.

We use the event-focused version of the Experience Sampling Method (ESM) (Zirkel et al., 2015) to collect the participants' self-reported engagement moods after each answer submission to HDU. Participants report their engagement moods through a Google Form. After training each participant on the definition of each engagement mood, we ask them to identify their mood by choosing one of the options shown in Table 2. The study lasts two weeks, from September 18th to October 2nd, 2020, and 63 responses are recorded. For each self-reported engagement mood we receive, an HMM-based label is also generated from the student's observed data on HDU. The engagement mood labels from the HMM and the ESM agree in 76.19% of cases. This is more than 50 percentage points above a random labeler, whose expected accuracy over five moods is 20%.
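The agreement figure is simple percent agreement between paired labels. The sketch below shows how it is computed; the function name is ours. By our arithmetic, 76.19% of 63 responses corresponds to 48 matching labels. A uniform random labeler over five moods agrees with any fixed label sequence 20% of the time in expectation.

```python
def percent_agreement(hmm_labels, esm_labels):
    """Share (in %) of submissions where the HMM-decoded mood equals the
    participant's self-reported (ESM) mood."""
    if len(hmm_labels) != len(esm_labels) or not hmm_labels:
        raise ValueError("label lists must be non-empty and aligned")
    matches = sum(h == e for h, e in zip(hmm_labels, esm_labels))
    return 100 * matches / len(hmm_labels)


N_MOODS = 5
RANDOM_BASELINE = 100 / N_MOODS  # expected agreement of a uniform random labeler

# 48 matching labels out of 63 responses reproduces the reported figure.
print(round(100 * 48 / 63, 2))  # 76.19
```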

Interestingly, none of the participants reports an engagement mood other than the five we have extracted. However, we expect that more personalized and detailed engagement moods would emerge with a larger number of participants (Saenz et al., 2011).

Table 2. Engagement mood options offered in the ESM form and the share of the 63 recorded responses.

| Engagement Mood Options | Responses |
|---|---|
| (1) I am looking for a challenging question. (Challenge-seeker) | 46.03% |
| (2) I am looking for a specific question. (Subject-seeker) | 19.04% |
| (3) I am looking for an interesting (non-challenging) question. (Interest-seeker) | 12.69% |
| (4) I want to use the platform for purposes other than learning. (Joy-seeker) | 11.11% |
| (5) I want to solve a random question. (Non-seeker) | 11.11% |
| (6) None of the above (explain) | N/A |

7. Engagement Moods and Student Dropouts (RQ2)

Next, we use the optimized parameters $\lambda^*$ to find, for each student, the most probable engagement mood sequence $Q^* = (q_1^*, \dots, q_T^*)$ given their observation sequence $O = (o_1, \dots, o_T)$, i.e., the sequence that maximizes $P(Q \mid O, \lambda^*)$ (inferred with the Viterbi algorithm (Forney Jr, 2005)). Furthermore, we associate every question $k$ on the platform with a distribution $\phi_k = (\phi_{k,1}, \dots, \phi_{k,N})$, where $\phi_{k,i}$ is the probability that question $k$ receives an answer while students are in hidden state $s_i$. Here, the index $k$ refers to the identity number of the question on the platform. We define the average question mismatch as the probability that a question does not match the current engagement mood of a student, i.e., the average of $1 - \phi_{k,q_t}$ over the student's submissions. Illustratively, the question mismatch is the complement of the question's associativity measure, as represented in Figure 5(a).
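The decoding and mismatch steps can be sketched as follows. This is a minimal, pure-Python illustration, not the paper's hmmlearn pipeline. It assumes discrete observations and estimates each question's associativity $\phi_k$ as normalized counts of decoded states over that question's submissions; that estimator is our assumption. The toy parameters are illustrative.

```python
import math
from collections import Counter, defaultdict


def _log(p: float) -> float:
    return math.log(p) if p > 0 else float("-inf")


def viterbi(obs, pi, A, B):
    """Most probable hidden-state path argmax_Q P(Q | O, lambda) for a
    discrete HMM, computed in log space to avoid underflow."""
    n = len(pi)
    delta = [_log(pi[i]) + _log(B[i][obs[0]]) for i in range(n)]
    backpointers = []
    for o in obs[1:]:
        ptr, new_delta = [], []
        for j in range(n):
            best = max(range(n), key=lambda i: delta[i] + _log(A[i][j]))
            ptr.append(best)
            new_delta.append(delta[best] + _log(A[best][j]) + _log(B[j][o]))
        backpointers.append(ptr)
        delta = new_delta
    path = [max(range(n), key=lambda i: delta[i])]
    for ptr in reversed(backpointers):
        path.append(ptr[path[-1]])
    return path[::-1]


def question_associativity(question_state_pairs, n_states):
    """phi[k][i]: share of question k's answers submitted in decoded state i
    (assumed estimator: normalized counts)."""
    counts = defaultdict(Counter)
    for k, state in question_state_pairs:
        counts[k][state] += 1
    return {k: [c[i] / sum(c.values()) for i in range(n_states)]
            for k, c in counts.items()}


def average_mismatch(question_ids, states, phi):
    """Mean of 1 - phi[k][q_t] over one student's submissions."""
    values = [1 - phi[k][s] for k, s in zip(question_ids, states)]
    return sum(values) / len(values)


pi = [0.5, 0.5]
A = [[0.7, 0.3], [0.4, 0.6]]
B = [[0.9, 0.1], [0.2, 0.8]]
print(viterbi([0, 1], pi, A, B))  # [0, 1]
```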

In this section, we test the null hypothesis that the average question mismatch has no correlation with the percentage of student dropouts. The regression analysis in Figure 5(b) reveals a positive coefficient of …, and a Pearson's r of … with a significance of …, between the average question mismatch and student dropouts. Therefore, the null hypothesis is rejected in favor of the alternative hypothesis: as the average question mismatch increases, the percentage of students who drop out also increases. This suggests that students in each engagement mood have a collective preference for answering certain questions, and that deviating from that preference increases their risk of dropping out.
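The test above relates average question mismatch to the percentage of dropouts. The sketch below computes the corresponding statistics. Grouping students into equal-width mismatch bins before computing the dropout percentage is our assumption about how a "percentage of dropouts" per mismatch level could be formed. Converting the t statistic to a p-value requires a t distribution with n - 2 degrees of freedom, which is not shown.

```python
import math


def dropout_rate_by_bin(mismatch, dropped, n_bins=10):
    """Bin students by average question mismatch (equal-width bins on [0, 1])
    and return (bin centers, % of students dropped in each non-empty bin)."""
    bins = [[] for _ in range(n_bins)]
    for m, d in zip(mismatch, dropped):
        bins[min(int(m * n_bins), n_bins - 1)].append(d)
    centers = [(i + 0.5) / n_bins for i, b in enumerate(bins) if b]
    rates = [100 * sum(b) / len(b) for b in bins if b]
    return centers, rates


def pearson_r(x, y):
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def ols_slope(x, y):
    """Least-squares slope of y on x (the regression coefficient)."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def t_statistic(r, n):
    """t = r * sqrt((n - 2) / (1 - r^2)), with n - 2 degrees of freedom."""
    return r * math.sqrt((n - 2) / (1 - r * r))
```

A positive slope together with a significant r is what licenses rejecting the null hypothesis of no correlation.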

8. Dropout Prediction with Engagement Moods (RQ3)

We propose combining the engagement moods extracted by the HMM with an LSTM network to predict student dropouts in QPs more precisely. We refer to this hybrid machine learning architecture as Dropout-Plus (DP).

We use Keras in Python to build an attention-based Long Short-Term Memory (LSTM) network to detect student dropouts (Sato et al., 2019). Each time a student submits an answer to the QP, we feed the network two types of features: (1) the common features, i.e., non-handcrafted features acquired directly from student behavior, which are often shared across QP platforms and include the student's membership period, rank, nationality, acceptance rate, error type distribution, and average time gap between submissions; and (2) the preprocessed features rendered by the HMM. To feed the data into the network more effectively and reduce the effect of correlated features, we combine the features with a fully connected feed-forward neural network and use the resulting distributed feature representation to train our model (Yang et al., 2018b).
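The paper does not give DP's layer sizes or attention variant, so the NumPy sketch below only traces the data flow described above under assumed choices: per-submission common and HMM features are concatenated, fused by one dense ReLU layer, passed through a single LSTM, pooled with softmax attention over time steps, and mapped to a dropout probability. All sizes, parameter names, and the random untrained weights are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def init_params(n_in, n_fused, n_hidden, seed=0, scale=0.1):
    """Random (untrained) weights; all sizes are hypothetical."""
    rng = np.random.default_rng(seed)
    return {
        "W_f": rng.normal(0, scale, (n_in, n_fused)),           # feature-fusion layer
        "b_f": np.zeros(n_fused),
        "W_x": rng.normal(0, scale, (n_fused, 4 * n_hidden)),   # LSTM input weights
        "W_h": rng.normal(0, scale, (n_hidden, 4 * n_hidden)),  # LSTM recurrent weights
        "b": np.zeros(4 * n_hidden),
        "w_att": rng.normal(0, scale, n_hidden),                # attention scoring vector
        "w_out": rng.normal(0, scale, n_hidden),                # output layer
        "b_out": 0.0,
    }

def forward(common_feats, hmm_feats, p):
    """common_feats: (T, d_c), hmm_feats: (T, d_h), one row per submission.
    Returns (dropout probability, attention weights over the T submissions)."""
    x = np.concatenate([common_feats, hmm_feats], axis=1)
    z = np.maximum(0.0, x @ p["W_f"] + p["b_f"])       # dense fusion layer + ReLU
    n_hidden = p["W_h"].shape[0]
    h, c = np.zeros(n_hidden), np.zeros(n_hidden)
    hs = []
    for z_t in z:                                        # one LSTM step per submission
        i, f, o, g = np.split(z_t @ p["W_x"] + h @ p["W_h"] + p["b"], 4)
        c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)     # forget and input gates update the cell
        h = sigmoid(o) * np.tanh(c)                      # output gate
        hs.append(h)
    H = np.stack(hs)                                     # (T, n_hidden)
    scores = np.tanh(H) @ p["w_att"]                     # one score per submission
    alpha = np.exp(scores - scores.max())
    alpha /= alpha.sum()                                 # softmax attention weights
    context = alpha @ H                                  # attention-weighted summary
    return float(sigmoid(context @ p["w_out"] + p["b_out"])), alpha
```

In Keras, the corresponding pieces would be `Dense`, `LSTM(return_sequences=True)`, and an attention or weighted-pooling layer, trained with binary cross-entropy; the training loop is omitted here.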

We compare the dropout prediction performance of DP with five competitive baselines from the literature. Since no dropout prediction models existed for QPs before our work, we pick our baselines from previous MOOC and CQA studies (Nagrecha et al., 2017; Pudipeddi et al., 2014). To keep the comparisons relevant and unbiased, we exclude models for which it is unclear how to map MOOC or CQA features to QPs. The baselines are:

XGBoost: The Extreme Gradient Boosting (XGBoost) algorithm is one of the most widely used machine learning tools for classification and regression (Miri et al., 2020; Kwak et al., 2019; Wang et al., 2019b; Murauer and Specht, 2018). It builds a collection of base decision trees sequentially and sums their outputs to reduce bias (Chen and Guestrin, 2016). Each new tree is fit to the gradient of the loss with respect to the current ensemble's predictions, so that it corrects the errors left by the previous trees.
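To make "built sequentially and summed" concrete, here is a from-scratch sketch of first-order gradient boosting with depth-1 regression trees (stumps) on the logistic loss. This is a deliberate simplification: XGBoost itself also uses second-order gradient information, regularization, and deeper trees (Chen and Guestrin, 2016), and in practice one would call `XGBClassifier` from the `xgboost` package. The learning rate and tree count are arbitrary illustrative defaults.

```python
import numpy as np

def fit_stump(X, r):
    """Least-squares regression stump: best single split (feature, threshold) for targets r."""
    best = None
    for j in range(X.shape[1]):
        for thr in np.unique(X[:, j])[:-1]:          # keeps both sides non-empty
            left = X[:, j] <= thr
            lv, rv = r[left].mean(), r[~left].mean()
            sse = ((r[left] - lv) ** 2).sum() + ((r[~left] - rv) ** 2).sum()
            if best is None or sse < best[0]:
                best = (sse, j, thr, lv, rv)
    if best is None:                                 # no valid split: predict the mean
        m = r.mean()
        return lambda Z: np.full(len(Z), m)
    _, j, thr, lv, rv = best
    return lambda Z: np.where(Z[:, j] <= thr, lv, rv)

def gradient_boost(X, y, n_trees=50, lr=0.3):
    """Additive ensemble of stumps for y in {0, 1}; assumes both classes are present."""
    base = np.log(y.mean() / (1 - y.mean()))         # initial log-odds
    F = np.full(len(y), base)
    trees = []
    for _ in range(n_trees):
        p = 1 / (1 + np.exp(-F))
        residual = y - p                             # negative gradient of the log loss w.r.t. F
        tree = fit_stump(X, residual)
        F += lr * tree(X)                            # each tree corrects the current ensemble
        trees.append(tree)

    def predict_proba(Z):
        F_z = base + lr * sum(t(Z) for t in trees)   # sum the outputs of all trees
        return 1 / (1 + np.exp(-F_z))

    return predict_proba
```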

Random Forest: Random Forest is another decision tree-based ensemble algorithm with a rich literature in the HCI and CSCW communities (Rezapour and Diesner, 2017; Maity et al., 2017; Kim et al., 2019; Shin et al., 2020). Unlike XGBoost, however, Random Forest weights all of its decision trees equally, training each one on a bootstrap sample of the data, an ensemble technique known as bootstrap aggregation or bagging (Bishop, 2006; Breiman, 2001). In other words, every decision tree is built independently of the others, and the final classification is decided by majority voting (Bishop, 2006).
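For contrast with boosting, a minimal sketch of bootstrap aggregation with majority voting. To stay short it uses depth-1 classification trees and draws a random feature subset for each tree, which for a single-split tree coincides with Random Forest's per-split feature sampling (Breiman, 2001); real forests grow much deeper trees, and sklearn's `RandomForestClassifier` is the practical choice. Function names and defaults are illustrative.

```python
import numpy as np

def fit_classification_stump(X, y, features):
    """Depth-1 tree: the (feature, threshold) with the fewest misclassifications,
    predicting the majority 0/1 label on each side."""
    best = None
    for j in features:
        for thr in np.unique(X[:, j])[:-1]:
            left = X[:, j] <= thr
            lv = int(y[left].mean() >= 0.5)
            rv = int(y[~left].mean() >= 0.5)
            errors = (y[left] != lv).sum() + (y[~left] != rv).sum()
            if best is None or errors < best[0]:
                best = (errors, j, thr, lv, rv)
    if best is None:                              # no valid split: predict the majority label
        label = int(y.mean() >= 0.5)
        return lambda Z: np.full(len(Z), label)
    _, j, thr, lv, rv = best
    return lambda Z: np.where(Z[:, j] <= thr, lv, rv)

def bagged_stumps(X, y, n_trees=25, max_features=None, seed=0):
    rng = np.random.default_rng(seed)
    n, d = X.shape
    k = max_features or d
    trees = []
    for _ in range(n_trees):
        rows = rng.integers(0, n, size=n)                 # bootstrap sample, with replacement
        feats = rng.choice(d, size=k, replace=False)      # random feature subset
        trees.append(fit_classification_stump(X[rows], y[rows], feats))

    def predict(Z):
        votes = np.stack([t(Z) for t in trees])          # trees vote independently
        return (votes.mean(axis=0) >= 0.5).astype(int)    # majority vote (ties go to 1)

    return predict
```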

Decision Tree (DT): As the base model of both Random Forest and XGBoost, a DT sometimes outperforms both of them (Nagrecha et al., 2017). Its main drawback is instability: even a small change in the data can drastically reshape the tree. We nevertheless include DTs to obtain a more comprehensive set of baselines (Dror et al., 2012; Nagrecha et al., 2017; Pudipeddi et al., 2014).

Logistic Regression: As a popular statistical tool in HCI and CSCW quantitative analysis (Masaki et al., 2020; Che et al., 2018; Kim et al., 2019; Fortin et al., 2019), Logistic Regression in its basic form uses a logistic (sigmoid) function to model a binary dependent variable (Masaki et al., 2020). In our work, the dependent variable is whether or not a student drops out (Masaki et al., 2020).
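Concretely, the model estimates P(dropout = 1 | x) = sigmoid(w0 + w·x), with sigmoid(z) = 1 / (1 + exp(−z)). A minimal sketch fitting it by batch gradient descent on the mean log loss; the learning rate, iteration count, and optional L2 term are illustrative assumptions (sklearn's `LogisticRegression` uses its own solvers and applies L2 regularization by default).

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fit_logistic(X, y, lr=0.1, n_iter=2000, l2=0.0):
    """Batch gradient descent on the mean log loss; y is 1 for dropout, 0 otherwise."""
    Xb = np.hstack([np.ones((len(X), 1)), X])        # prepend an intercept column
    w = np.zeros(Xb.shape[1])
    for _ in range(n_iter):
        p = sigmoid(Xb @ w)                            # current P(dropout | x)
        grad = Xb.T @ (p - y) / len(y) + l2 * np.r_[0.0, w[1:]]   # intercept not penalized
        w -= lr * grad
    return w

def predict_proba(w, X):
    return sigmoid(w[0] + X @ w[1:])
```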

SVM (RBF Kernel): The Support Vector Machine (SVM) is another supervised algorithm that can be applied to both classification and regression (Bishop, 2006). An SVM seeks the hyperplane (decision boundary) that separates the data points into classes with the largest possible margin (Benerradi et al., 2019). The Gaussian Radial Basis Function (RBF) is one of the most common kernel functions researchers use to train SVMs (Wang et al., 2019a; Xuan et al., 2016; Liu et al., 2020).
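The Gaussian RBF kernel scores the similarity of two feature vectors as K(x, x') = exp(−γ‖x − x'‖²), where the width parameter γ is a hyperparameter. A short NumPy sketch of the kernel matrix an RBF SVM works with; this is the same form that sklearn's `SVC(kernel="rbf", gamma=...)` uses, although the γ value in any example is arbitrary.

```python
import numpy as np

def rbf_kernel_matrix(A, B, gamma=1.0):
    """K[i, j] = exp(-gamma * ||A[i] - B[j]||^2), the Gaussian RBF kernel."""
    A = np.asarray(A, dtype=float)
    B = np.asarray(B, dtype=float)
    sq_dists = (
        np.sum(A ** 2, axis=1)[:, None]
        + np.sum(B ** 2, axis=1)[None, :]
        - 2.0 * A @ B.T
    )
    return np.exp(-gamma * np.maximum(sq_dists, 0.0))   # clip tiny negatives from round-off
```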

We also run an ablation study (see Joseph et al., 2017; Gardiner, 1974) with each baseline, including or excluding the Engagement Features (EF) produced by the HMM. More precisely, EF comprises the student's engagement mood and the associativity features of the answered question after each submission during the observation period. In both settings, all models are trained on the common features introduced above (membership period, rank, nationality, acceptance rate, error type distribution, and average time gap between submissions). All baselines are implemented with the sklearn module in Python.

Table 3 summarizes the results of our analyses based on 10-fold cross-validation (Bishop, 2006; Rodriguez et al., 2009). DP outperforms all of the baselines. Moreover, every model performs better with the HMM Engagement Features (EF) than without them. In fact, adding EF raises the performance of all models close to that of DP, which suggests that information about engagement moods can improve dropout prediction.
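A sketch of the evaluation protocol, assuming shuffled (non-stratified) folds, a 0.5 decision threshold, and a hypothetical `fit(X_train, y_train)` interface that returns a scoring function; none of these details are specified in the paper. The metrics are accuracy, F1-measure, and AUC, with AUC computed as the probability that a random dropout is scored above a random non-dropout. The ablation simply reruns the same protocol, on the same folds, with and without the EF columns. sklearn's `StratifiedKFold`, `f1_score`, and `roc_auc_score` are tested equivalents.

```python
import numpy as np

def accuracy_f1_auc(y_true, scores, threshold=0.5):
    """Accuracy and F1 at a fixed threshold, plus rank-based (Mann-Whitney) AUC."""
    y_true = np.asarray(y_true).astype(int)
    scores = np.asarray(scores, dtype=float)
    y_pred = (scores >= threshold).astype(int)
    acc = float(np.mean(y_pred == y_true))
    tp = int(np.sum((y_pred == 1) & (y_true == 1)))
    fp = int(np.sum((y_pred == 1) & (y_true == 0)))
    fn = int(np.sum((y_pred == 0) & (y_true == 1)))
    f1 = 2 * tp / (2 * tp + fp + fn) if (tp + fp + fn) > 0 else 0.0
    pos, neg = scores[y_true == 1], scores[y_true == 0]
    if len(pos) == 0 or len(neg) == 0:
        auc = float("nan")                                      # undefined for single-class folds
    else:
        diff = pos[:, None] - neg[None, :]
        auc = float(np.mean((diff > 0) + 0.5 * (diff == 0)))   # ties count half
    return acc, f1, auc

def cross_validate(fit, X, y, k=10, seed=0):
    """Mean (accuracy, F1, AUC) over k shuffled folds.
    fit(X_train, y_train) must return a function mapping X_test to dropout scores."""
    X, y = np.asarray(X, dtype=float), np.asarray(y).astype(int)
    folds = np.array_split(np.random.default_rng(seed).permutation(len(y)), k)
    results = []
    for i in range(k):
        train = np.concatenate([folds[j] for j in range(k) if j != i])
        score_fn = fit(X[train], y[train])
        results.append(accuracy_f1_auc(y[folds[i]], score_fn(X[folds[i]])))
    return tuple(float(v) for v in np.nanmean(np.array(results), axis=0))

def ablation(fit, X_common, X_ef, y, k=10):
    """Same model and folds, trained without and with the engagement features (EF)."""
    return {
        "without_EF": cross_validate(fit, X_common, y, k),
        "with_EF": cross_validate(fit, np.hstack([X_common, X_ef]), y, k),
    }
```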

![Table 3](https://cdn.awesomepapers.org/papers/527b3ff3-c196-4f01-8316-9c4e99948626/x15.png)

9. Discussion

To the best of our knowledge, this research is the first to characterize students' engagement moods and to set a dropout prediction baseline for QP platforms. Exposing student engagement moods is the core of our work, and its implications can provide practical insights that help online learning professionals better manage students' behaviors and tailor their services accordingly.

9.1. Engagement Mood Dynamics

One of the difficulties of studying human behavior in HCI and CSCW is that people change their behaviors dynamically over time (dos Santos Junior et al., 2018; Sleeper et al., 2015; Alghamdi et al., 2019; Lee et al., 2019). Studying the dynamics of student engagement moods is therefore an essential part of understanding students' behavior.

Based on the engagement mood changes observed in our dataset, we calculated the transition probabilities between the different engagement moods, shown in Table 4. These probabilities therefore give a frequentist view of engagement mood transitions over a restricted time period (i.e., 172 days) (Spanos, 2019). As shown, the engagement moods are well distinguished by their probability distributions. Overall, Table 4 shows that students are most likely to enter the interest-seeker mood and least likely to enter the challenge-seeker mood.
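The frequentist estimate described above amounts to counting observed mood-to-mood transitions (including staying in the same mood) in the decoded trajectories and normalizing each row. A minimal sketch, assuming each student's trajectory is a list of integer mood indices ordered as in `MOODS`, a hypothetical encoding:

```python
import numpy as np

MOODS = ["challenge-seeker", "subject-seeker", "interest-seeker", "joy-seeker", "non-seeker"]

def transition_matrix(sequences, n_states=len(MOODS)):
    """Frequentist estimate: T[i, j] = (# i -> j transitions) / (# transitions out of i).
    Each sequence is one student's decoded list of mood indices."""
    counts = np.zeros((n_states, n_states))
    for seq in sequences:
        for a, b in zip(seq[:-1], seq[1:]):
            counts[a, b] += 1
    row_totals = counts.sum(axis=1, keepdims=True)
    # Moods never left keep an all-zero row instead of dividing by zero.
    return np.divide(counts, row_totals, out=np.zeros_like(counts), where=row_totals > 0)
```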

Interestingly, students in the challenge-seeker mood do not become joy-seekers or non-seekers. Likewise, joy-seeker students do not become challenge-seekers or subject-seekers: they look for easy questions, and the subjects do not seem to interest them. Furthermore, challenge-seeker students are most likely to become interest-seekers, whereas interest-seeker students never become challenge-seekers. This observation, however, may result from inappropriate question assignments. According to our findings, subject-seeker students are also not strongly inclined to change their moods, which seems reasonable given the directed, context-specific nature of the questions they choose to answer. Interest-seekers are more likely to become non-seekers, and vice versa. Since finding programming topics of interest is often the main intention behind students' explorations in QPs, a well-fitted question recommender system could benefit students' satisfaction and quality of experience (Mogavi et al., 2019; Xia et al., 2019). Finally, of all the resolved engagement moods, the joy-seeker mood is the one students are least likely to leave. This implies that platform resources are at high risk of being wasted unless joy-seeker students are identified and guided in time (Wasik et al., 2018).

![Table 4](https://cdn.awesomepapers.org/papers/527b3ff3-c196-4f01-8316-9c4e99948626/x16.png)

9.2. Dominant Engagement Moods

We define a student's dominant engagement mood as the mood that occurs most frequently in their engagement mood trajectory. As shown in Table 5, the majority of students are dominantly in the non-seeker mood. These students also have the highest average mismatch measure for the questions they answer and, as expected, the highest dropout rate of all engagement moods. Conversely, dominantly challenge-seeker students have the lowest average mismatch measure and the lowest dropout rate. Also, although students in the joy-seeker mood have the least tendency to change their moods, students who are dominantly in this mood have the second-highest dropout rate on the platform. They may get bored or encounter technical issues that make them stop gaming the platform.
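Following the definition above, a sketch of how a Table 5-style summary (share of students, average mismatch, and dropout rate per dominant mood) could be computed. Breaking ties in favor of the mood that appears first in the trajectory is our assumption; the paper does not state a tie-breaking rule. Function names are illustrative.

```python
from collections import Counter

import numpy as np

def dominant_mood(seq):
    """Most frequent mood in a trajectory; ties go to the mood encountered first."""
    return Counter(seq).most_common(1)[0][0]

def summarize_by_dominant_mood(sequences, avg_mismatch, dropped):
    """Per dominant mood: share of students, mean average-mismatch, dropout rate."""
    rows = {}
    for seq, mm, d in zip(sequences, avg_mismatch, dropped):
        rows.setdefault(dominant_mood(seq), []).append((mm, d))
    n = len(sequences)
    summary = {}
    for mood, vals in rows.items():
        vals = np.array(vals, dtype=float)
        summary[mood] = {
            "share": len(vals) / n,
            "avg_mismatch": float(vals[:, 0].mean()),
            "dropout_rate": float(vals[:, 1].mean()),
        }
    return summary
```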

![Table 5](https://cdn.awesomepapers.org/papers/527b3ff3-c196-4f01-8316-9c4e99948626/x17.png)

9.3. Design Suggestions

Based on our findings, we provide some design suggestions for online learning professionals to better control student dropouts in QPs.

Recommender systems. Current recommender systems do not consider when the right time is for a student to learn something; they only consider what students want to learn (Xia et al., 2019). The same problem applies to many educators, who do not know when the right time is to engage students in studying a specific topic. Our resolved engagement moods can help address this issue. For example, if a topic is difficult and challenging, we would recommend asking students to solve it when they are in a challenge-seeker mood. If students are in a joy-seeker mood, we would suggest that educators skip asking questions in that session, because doing so might result in adverse educational outcomes, such as students feeling frustrated or disliking the subject matter of the course (Baker et al., 2008). Combining learning path recommenders or personal teaching (coaching) styles with engagement mood detection would probably enhance the quality of education and deter dropouts, as students would feel more satisfied with what they learn and do (Suhre et al., 2007).

Strategic dropout management. Before our work, researchers had not paid much attention to who is dropping out (Pereira et al., 2019; Whitehill et al., 2017), but we think this is an important question to answer in order to manage student dropouts more strategically. In contrast with existing practice in dropout studies, we believe that not all dropouts are equally bad. For example, imagine an educator who is undecided about which interventions to apply to keep students from dropping out. She is unsure whether to customize the system to challenge-seekers' or joy-seekers' tastes. We would recommend prioritizing challenge-seekers, as joy-seekers would most probably game the system without learning anything. Joy-seekers might also mischievously harm other students' experiences and make them feel disappointed (Cheng et al., 2017; Baker et al., 2008). For more complex dropout scenarios, educators can also consider the number of students in each group and the transition probabilities between engagement moods to make better decisions.

Gamification and reinforcement tools. According to Skinner's refined Law of Effect, reinforced behaviors tend to be repeated in the future, while behaviors that are not reinforced tend to fade over time (Bhutto, 2011). Educators can therefore use gamification incentives such as badges, points, and leaderboards to steer students' behaviors (Anderson et al., 2013). We suggest that QP designers consider student engagement moods when designing their gamification mechanics (Tondello et al., 2019). For example, if educators are preparing students for difficult exams such as ACM programming contests, they can reinforce challenge-seekers by offering bigger gamification prizes (Zhang et al., 2016). Conversely, withholding reinforcement can help diminish non-productive behaviors such as staying in a joy-seeker mood. QP designers can also provide dedicated gamification mechanics or side games that satisfy students' playful intentions elsewhere.

Putting students into groups. At present, collaboration and social communication are largely missing from QPs; instead, all of the website functions only promote competency among students. Although competency can provide an initial motive that attracts students (Bartel et al., 2015), many educators believe that collaboration and social communication among students are also needed to better achieve the intended educational outcomes (Janssen and Kirschner, 2020; Tucker, 2016). We suggest that QPs also add affordances that support student communication and social collaboration. Student engagement moods can help identify initial common attitudes among students and form groups. For example, a subject-seeker student has a good chance of fitting well into a group with other subject-seeker students. This tendency is known as homophily in social networks (McPherson et al., 2001). Nevertheless, more in-depth studies are required to determine which combinations of student engagement moods work and match best together.

9.4. Limitations

As with any study, this study has several limitations and challenges. First, the platform data we analyzed is cross-sectional and restricted in size and time, but not in type. This is a commonplace issue in many related studies as well (Nagrecha et al., 2017; Mogavi et al., 2019). We also want to emphasize the difficulty of working with student data and the scarcity of datasets about QP platforms, which are relatively new research subjects in educational data mining. Regarding model limitations, although an HMM can successfully profile student behavior with a few hidden states, characterizing the resolved hidden states is often time-consuming. For example, in this research, we spent more than twelve hours carefully visualizing and comparing the probability distributions behind the hidden states, considering different aspects and features, before settling on our engagement mood typology. In addition, since hidden states are found by an estimation procedure, different platforms might yield different numbers of hidden states. In general, we expect these states to be semantically close to those introduced in this work. We view this as an opportunity for future work, as more complex engagement moods remain to be mined and compared with our extracted typology. Validating our work on other QP platforms, such as Timus, quera.ir, CodeChef, and the like, therefore remains future work. Moreover, it would be particularly interesting to examine the effect of the HMM's state space granularity on dropout prediction performance.

10. Conclusion

We used Hidden Markov Models (HMMs) to expose the underlying student engagement moods in QP platforms and showed that the mismatch between students' engagement moods and the types of questions they answer over time can significantly increase the dropout risk. Furthermore, we developed a novel and more accurate computational framework called Dropout-Plus (DP) to predict student dropouts and explain possible reasons why dropouts happen in QP platforms. However, we believe HCI and CSCW researchers still have a long way to go to fully understand dropouts on different educational platforms. Our future work includes predicting more precisely when students drop out and enriching the answers to the question “why do dropouts happen?” Finally, this study can help researchers and practitioners of online education platforms improve their work by understanding student dropouts more deeply, building better prediction models, and providing more customized services.

Acknowledgements.

This research has been supported in part by project 16214817 from the Research Grants Council of Hong Kong, and the 5GEAR and FIT projects from the Academy of Finland. It is also partially supported by the Research Grants Council of the Hong Kong Special Administrative Region, China under General Research Fund (GRF) Grant No. 16204420.

References


- Alghamdi et al. (2019) Najwa Alghamdi, Nora Alrajebah, and Shiroq Al-Megren. 2019. Crowd Behavior Analysis Using Snap Map: A Preliminary Study on the Grand Holy Mosque in Mecca. In Conference Companion Publication of the 2019 on Computer Supported Cooperative Work and Social Computing (Austin, TX, USA) (CSCW ’19). Association for Computing Machinery, New York, NY, USA, 137–141. https://doi.org/10.1145/3311957.3359473

- Alyuz et al. (2017) Nese Alyuz, Eda Okur, Utku Genc, Sinem Aslan, Cagri Tanriover, and Asli Arslan Esme. 2017. An Unobtrusive and Multimodal Approach for Behavioral Engagement Detection of Students. In Proceedings of the 1st ACM SIGCHI International Workshop on Multimodal Interaction for Education (Glasgow, UK) (MIE 2017). Association for Computing Machinery, New York, NY, USA, 26–32. https://doi.org/10.1145/3139513.3139521

- Anderson et al. (2013) Ashton Anderson, Daniel Huttenlocher, Jon Kleinberg, and Jure Leskovec. 2013. Steering User Behavior with Badges. In Proceedings of the 22nd International Conference on World Wide Web (Rio de Janeiro, Brazil) (WWW ’13). Association for Computing Machinery, New York, NY, USA, 95–106. https://doi.org/10.1145/2488388.2488398

- Aslan et al. (2019) Sinem Aslan, Nese Alyuz, Cagri Tanriover, Sinem E. Mete, Eda Okur, Sidney K. D’Mello, and Asli Arslan Esme. 2019. Investigating the Impact of a Real-Time, Multimodal Student Engagement Analytics Technology in Authentic Classrooms. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (Glasgow, Scotland Uk) (CHI ’19). Association for Computing Machinery, New York, NY, USA, 1–12. https://doi.org/10.1145/3290605.3300534

- Babbie (2016) R. Babbie. 2016. The Basics of Social Research. Cengage Learning. https://books.google.com/books?id=0CJTCwAAQBAJ

- Baker et al. (2008) Ryan Baker, Jason Walonoski, Neil Heffernan, Ido Roll, Albert Corbett, and Kenneth Koedinger. 2008. Why students engage in “gaming the system” behavior in interactive learning environments. Journal of Interactive Learning Research 19, 2 (2008), 185–224.

- Baker (2005) Ryan Shaun Baker. 2005. Designing intelligent tutors that adapt to when students game the system. Ph.D. Dissertation. Carnegie Mellon University Pittsburgh.

- Baker (2007) Ryan S.J.d. Baker. 2007. Modeling and Understanding Students’ off-Task Behavior in Intelligent Tutoring Systems. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (San Jose, California, USA) (CHI ’07). Association for Computing Machinery, New York, NY, USA, 1059–1068. https://doi.org/10.1145/1240624.1240785

- Baker et al. (2004) Ryan Shaun Baker, Albert T. Corbett, Kenneth R. Koedinger, and Angela Z. Wagner. 2004. Off-Task Behavior in the Cognitive Tutor Classroom: When Students “Game the System”. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (Vienna, Austria) (CHI ’04). Association for Computing Machinery, New York, NY, USA, 383–390. https://doi.org/10.1145/985692.985741

- Barkley (2009) E.F. Barkley. 2009. Student Engagement Techniques: A Handbook for College Faculty. Wiley. https://books.google.com/books?id=muAStyrwyZgC

- Bartel et al. (2015) Alexander Bartel, Paula Figas, and Georg Hagel. 2015. Towards a Competency-Based Education with Gamification Design Elements. In Proceedings of the 2015 Annual Symposium on Computer-Human Interaction in Play (London, United Kingdom) (CHI PLAY ’15). Association for Computing Machinery, New York, NY, USA, 457–462. https://doi.org/10.1145/2793107.2810325

- Bassen et al. (2020) Jonathan Bassen, Bharathan Balaji, Michael Schaarschmidt, Candace Thille, Jay Painter, Dawn Zimmaro, Alex Games, Ethan Fast, and John C. Mitchell. 2020. Reinforcement Learning for the Adaptive Scheduling of Educational Activities. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (Honolulu, HI, USA) (CHI ’20). Association for Computing Machinery, New York, NY, USA, 1–12. https://doi.org/10.1145/3313831.3376518

- Baum et al. (1970) Leonard E. Baum, Ted Petrie, George Soules, and Norman Weiss. 1970. A Maximization Technique Occurring in the Statistical Analysis of Probabilistic Functions of Markov Chains. The Annals of Mathematical Statistics 41, 1 (1970), 164–171. http://www.jstor.org/stable/2239727

- Benerradi et al. (2019) Johann Benerradi, Horia A. Maior, Adrian Marinescu, Jeremie Clos, and Max L. Wilson. 2019. Exploring Machine Learning Approaches for Classifying Mental Workload Using FNIRS Data from HCI Tasks. In Proceedings of the Halfway to the Future Symposium 2019 (Nottingham, United Kingdom) (HTTF 2019). Association for Computing Machinery, New York, NY, USA, Article 8, 11 pages. https://doi.org/10.1145/3363384.3363392

- Bhutto (2011) Muhammad Ilyas Bhutto. 2011. Effects of Social Reinforcers on Students’ Learning Outcomes at Secondary School Level. International Journal of Academic Research in Business and Social Sciences 1, 2 (2011), 71.

- Bishop (2006) Christopher M. Bishop. 2006. Pattern Recognition and Machine Learning. Information Science and Statistics. Springer.

- Bosch (2016) Nigel Bosch. 2016. Detecting Student Engagement: Human Versus Machine. In Proceedings of the 2016 Conference on User Modeling Adaptation and Personalization (Halifax, Nova Scotia, Canada) (UMAP ’16). Association for Computing Machinery, New York, NY, USA, 317–320. https://doi.org/10.1145/2930238.2930371

- Breiman (2001) Leo Breiman. 2001. Random forests. Machine Learning 45, 1 (2001), 5–32. https://doi.org/10.1023/a:1010933404324

- Cappella et al. (2013) Elise Cappella, Ha Yeon Kim, Jennifer W. Neal, and Daisy R. Jackson. 2013. Classroom Peer Relationships and Behavioral Engagement in Elementary School: The Role of Social Network Equity. American Journal of Community Psychology 52, 3-4 (Oct. 2013), 367–379. https://doi.org/10.1007/s10464-013-9603-5

- Carlton et al. (2019) Jonathan Carlton, Andy Brown, Caroline Jay, and John Keane. 2019. Inferring User Engagement from Interaction Data. In Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems (Glasgow, Scotland Uk) (CHI EA ’19). Association for Computing Machinery, New York, NY, USA, 1–6. https://doi.org/10.1145/3290607.3313009

- Che et al. (2018) Xunru Che, Danaë Metaxa-Kakavouli, and Jeffrey T. Hancock. 2018. Fake News in the News: An Analysis of Partisan Coverage of the Fake News Phenomenon. In Companion of the 2018 ACM Conference on Computer Supported Cooperative Work and Social Computing (Jersey City, NJ, USA) (CSCW ’18). Association for Computing Machinery, New York, NY, USA, 289–292. https://doi.org/10.1145/3272973.3274079

- Chef (2019) Code Chef. 2019. Code Chef. Retrieved January 24, 2019 from https://www.codechef.com/

- Chen et al. (1998) Scott Chen, Ponani Gopalakrishnan, et al. 1998. Speaker, environment and channel change detection and clustering via the bayesian information criterion. In Proc. DARPA broadcast news transcription and understanding workshop, Vol. 8. Virginia, USA, 127–132.

- Chen and Guestrin (2016) Tianqi Chen and Carlos Guestrin. 2016. XGBoost: A Scalable Tree Boosting System. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (San Francisco, California, USA) (KDD ’16). Association for Computing Machinery, New York, NY, USA, 785–794. https://doi.org/10.1145/2939672.2939785

- Chen et al. (2016) Yuanzhe Chen, Qing Chen, Mingqian Zhao, Sebastien Boyer, Kalyan Veeramachaneni, and Huamin Qu. 2016. DropoutSeer: Visualizing learning patterns in Massive Open Online Courses for dropout reasoning and prediction. In 2016 IEEE Conference on Visual Analytics Science and Technology (VAST). IEEE, 111–120.

- Chen et al. (2017) Yinghan Chen, Steven Andrew Culpepper, Shiyu Wang, and Jeffrey Douglas. 2017. A Hidden Markov Model for Learning Trajectories in Cognitive Diagnosis With Application to Spatial Rotation Skills. Applied Psychological Measurement 42, 1 (Sept. 2017), 5–23. https://doi.org/10.1177/0146621617721250

- Cheng et al. (2017) Justin Cheng, Michael Bernstein, Cristian Danescu-Niculescu-Mizil, and Jure Leskovec. 2017. Anyone Can Become a Troll: Causes of Trolling Behavior in Online Discussions. In Proceedings of the 2017 ACM Conference on Computer Supported Cooperative Work and Social Computing (Portland, Oregon, USA) (CSCW ’17). Association for Computing Machinery, New York, NY, USA, 1217–1230. https://doi.org/10.1145/2998181.2998213

- Chung et al. (2017) Chia-Fang Chung, Nanna Gorm, Irina A. Shklovski, and Sean Munson. 2017. Finding the Right Fit: Understanding Health Tracking in Workplace Wellness Programs. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems (Denver, Colorado, USA) (CHI ’17). Association for Computing Machinery, New York, NY, USA, 4875–4886. https://doi.org/10.1145/3025453.3025510

- Costa et al. (2017) Evandro B. Costa, Baldoino Fonseca, Marcelo Almeida Santana, Fabrísia Ferreira de Araújo, and Joilson Rego. 2017. Evaluating the effectiveness of educational data mining techniques for early prediction of students’ academic failure in introductory programming courses. Computers in Human Behavior 73 (2017), 247 – 256. https://doi.org/10.1016/j.chb.2017.01.047

- Csikszentmihalyi (1975) M Csikszentmihalyi. 1975. Beyond boredom and anxiety. San Francisco: Jossey-Bass. Well-being: The foundations of hedonic psychology (1975), 134–154.

- d. Baker et al. (2008) Ryan S. J. d. Baker, Albert T. Corbett, Ido Roll, and Kenneth R. Koedinger. 2008. Developing a generalizable detector of when students game the system. User Modeling and User-Adapted Interaction 18, 3 (Jan. 2008), 287–314. https://doi.org/10.1007/s11257-007-9045-6

- Dixon et al. (2019) Matt Dixon, Nalin Asanka Gamagedara Arachchilage, and James Nicholson. 2019. Engaging Users with Educational Games: The Case of Phishing. In Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems (Glasgow, Scotland Uk) (CHI EA ’19). Association for Computing Machinery, New York, NY, USA, 1–6. https://doi.org/10.1145/3290607.3313026

- dos Santos Junior et al. (2018) Edson B. dos Santos Junior, Carlos Simões, Ana Cristina Bicharra Garcia, and Adriana S. Vivacqua. 2018. What Does a Crowd Routing Behavior Change Reveal?. In Companion of the 2018 ACM Conference on Computer Supported Cooperative Work and Social Computing (Jersey City, NJ, USA) (CSCW ’18). Association for Computing Machinery, New York, NY, USA, 297–300. https://doi.org/10.1145/3272973.3274081

- Dror et al. (2012) Gideon Dror, Dan Pelleg, Oleg Rokhlenko, and Idan Szpektor. 2012. Churn Prediction in New Users of Yahoo! Answers. In Proceedings of the 21st International Conference on World Wide Web (Lyon, France) (WWW ’12 Companion). ACM, New York, NY, USA, 829–834. https://doi.org/10.1145/2187980.2188207

- Faucon et al. (2016) Louis Faucon, Lukasz Kidzinski, and Pierre Dillenbourg. 2016. Semi-Markov Model for Simulating MOOC Students. International Educational Data Mining Society (2016).

- Foley et al. (2020) Sarah Foley, Nadia Pantidi, and John McCarthy. 2020. Student Engagement in Sensitive Design Contexts: A Case Study in Dementia Care. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (Honolulu, HI, USA) (CHI ’20). Association for Computing Machinery, New York, NY, USA, 1–13. https://doi.org/10.1145/3313831.3376161

- Forney Jr (2005) G David Forney Jr. 2005. The viterbi algorithm: A personal history. arXiv preprint cs/0504020 (2005). http://arxiv.org/abs/cs/0504020

- Fortin et al. (2019) Pascal E. Fortin, Elisabeth Sulmont, and Jeremy Cooperstock. 2019. Detecting Perception of Smartphone Notifications Using Skin Conductance Responses. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (Glasgow, Scotland Uk) (CHI ’19). Association for Computing Machinery, New York, NY, USA, 1–9. https://doi.org/10.1145/3290605.3300420

- Fredricks et al. (2004) Jennifer A Fredricks, Phyllis C Blumenfeld, and Alison H Paris. 2004. School Engagement: Potential of the Concept, State of the Evidence. Review of Educational Research 74, 1 (March 2004), 59–109. https://doi.org/10.3102/00346543074001059

- Furtado et al. (2013) Adabriand Furtado, Nazareno Andrade, Nigini Oliveira, and Francisco Brasileiro. 2013. Contributor Profiles, Their Dynamics, and Their Importance in Five Q&a Sites. In Proceedings of the 2013 Conference on Computer Supported Cooperative Work (San Antonio, Texas, USA) (CSCW ’13). Association for Computing Machinery, New York, NY, USA, 1237–1252. https://doi.org/10.1145/2441776.2441916

- Gardiner (1974) W.L. Gardiner. 1974. Psychology: a story of a search. Brooks/Cole Pub. Co. https://books.google.fr/books?id=q50bAQAAMAAJ

- Gruver et al. (2019) Nate Gruver, Ali Malik, Brahm Capoor, Chris Piech, Mitchell L Stevens, and Andreas Paepcke. 2019. Using Latent Variable Models to Observe Academic Pathways. In Proceedings of The 12th International Conference on Educational Data Mining. EDM, 294–299. http://educationaldatamining.org/edm2019/proceedings/

- Hamari et al. (2016) Juho Hamari, David J. Shernoff, Elizabeth Rowe, Brianno Coller, Jodi Asbell-Clarke, and Teon Edwards. 2016. Challenging games help students learn: An empirical study on engagement, flow and immersion in game-based learning. Computers in Human Behavior 54 (2016), 170 – 179. https://doi.org/10.1016/j.chb.2015.07.045

- Hamid et al. (2018) Siti Suhaila Abdul Hamid, Novia Admodisastro, Noridayu Manshor, Azrina Kamaruddin, and Abdul Azim Abd Ghani. 2018. Dyslexia adaptive learning model: student engagement prediction using machine learning approach. In International Conference on Soft Computing and Data Mining. Springer, 372–384.

- Handelsman et al. (2005) Mitchell M Handelsman, William L Briggs, Nora Sullivan, and Annette Towler. 2005. A measure of college student course engagement. The Journal of Educational Research 98, 3 (2005), 184–192.

- Hara et al. (2018) Kotaro Hara, Abigail Adams, Kristy Milland, Saiph Savage, Chris Callison-Burch, and Jeffrey P. Bigham. 2018. A Data-Driven Analysis of Workers’ Earnings on Amazon Mechanical Turk. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (Montreal QC, Canada) (CHI ’18). ACM, New York, NY, USA, Article 449, 14 pages. https://doi.org/10.1145/3173574.3174023

- He et al. (2015) Xiaofei He, Xinbo Gao, Yanning Zhang, Zhi-Hua Zhou, Zhi-Yong Liu, Baochuan Fu, Fuyuan Hu, and Zhancheng Zhang. 2015. Intelligence Science and Big Data Engineering. Big Data and Machine Learning Techniques: 5th International Conference, IScIDE 2015, Suzhou, China, June 14-16, 2015, Revised Selected Papers. Vol. 9243. Springer.

- Hertz (2010) Matthew Hertz. 2010. What Do “CS1” and “CS2” Mean? Investigating Differences in the Early Courses. In Proceedings of the 41st ACM Technical Symposium on Computer Science Education (Milwaukee, Wisconsin, USA) (SIGCSE ’10). Association for Computing Machinery, New York, NY, USA, 199–203. https://doi.org/10.1145/1734263.1734335

- Hind et al. (2017) Hayati Hind, Mohammed Khalidi Idrissi, and Samir Bennani. 2017. Applying Text Mining to Predict Learners’ Cognitive Engagement. In Proceedings of the Mediterranean Symposium on Smart City Application (Tangier, Morocco) (SCAMS ’17). Association for Computing Machinery, New York, NY, USA, Article 2, 6 pages. https://doi.org/10.1145/3175628.3175655

- Hoffman et al. (2019) Beryl Hoffman, Ralph Morelli, and Jennifer Rosato. 2019. Student Engagement is Key to Broadening Participation in CS. In Proceedings of the 50th ACM Technical Symposium on Computer Science Education (Minneapolis, MN, USA) (SIGCSE ’19). ACM, New York, NY, USA, 1123–1129. https://doi.org/10.1145/3287324.3287438

- Janssen and Kirschner (2020) Jeroen Janssen and Paul A Kirschner. 2020. Applying collaborative cognitive load theory to computer-supported collaborative learning: towards a research agenda. Educational Technology Research and Development (2020), 1–23.

- Joseph et al. (2017) Kenneth Joseph, Wei Wei, and Kathleen M. Carley. 2017. Girls Rule, Boys Drool: Extracting Semantic and Affective Stereotypes from Twitter. In Proceedings of the 2017 ACM Conference on Computer Supported Cooperative Work and Social Computing (Portland, Oregon, USA) (CSCW ’17). Association for Computing Machinery, New York, NY, USA, 1362–1374. https://doi.org/10.1145/2998181.2998187

- Juhaňák et al. (2019) Libor Juhaňák, Jiří Zounek, and Lucie Rohlíková. 2019. Using process mining to analyze students’ quiz-taking behavior patterns in a learning management system. Computers in Human Behavior 92 (2019), 496–506. https://doi.org/10.1016/j.chb.2017.12.015

- Jutge (2019) Jutge. 2019. jutge. Retrieved January 24, 2019 from https://jutge.org/

- Kelders and Kip (2019) Saskia M. Kelders and Hanneke Kip. 2019. Development and Initial Validation of a Scale to Measure Engagement with EHealth Technologies. In Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems (Glasgow, Scotland, UK) (CHI EA ’19). Association for Computing Machinery, New York, NY, USA, 1–6. https://doi.org/10.1145/3290607.3312917

- Kim et al. (2019) Jina Kim, Kunwoo Bae, Eunil Park, and Angel P. del Pobil. 2019. Who Will Subscribe to My Streaming Channel? The Case of Twitch. In Conference Companion Publication of the 2019 on Computer Supported Cooperative Work and Social Computing (Austin, TX, USA) (CSCW ’19). Association for Computing Machinery, New York, NY, USA, 247–251. https://doi.org/10.1145/3311957.3359470

- Kim et al. (2014) Juho Kim, Philip J. Guo, Daniel T. Seaton, Piotr Mitros, Krzysztof Z. Gajos, and Robert C. Miller. 2014. Understanding In-Video Dropouts and Interaction Peaks in Online Lecture Videos. In Proceedings of the First ACM Conference on Learning @ Scale Conference (Atlanta, Georgia, USA) (L@S ’14). Association for Computing Machinery, New York, NY, USA, 31–40. https://doi.org/10.1145/2556325.2566237

- Kokkodis (2019) Marios Kokkodis. 2019. Reputation Deflation Through Dynamic Expertise Assessment in Online Labor Markets. In The World Wide Web Conference (San Francisco, CA, USA) (WWW ’19). ACM, New York, NY, USA, 896–905. https://doi.org/10.1145/3308558.3313479

- Kuh (2001) George D Kuh. 2001. Assessing what really matters to student learning inside the national survey of student engagement. Change: The Magazine of Higher Learning 33, 3 (2001), 10–17.

- Kwak et al. (2019) Il-Youp Kwak, Jun Ho Huh, Seung Taek Han, Iljoo Kim, and Jiwon Yoon. 2019. Voice Presentation Attack Detection through Text-Converted Voice Command Analysis. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (Glasgow, Scotland, UK) (CHI ’19). Association for Computing Machinery, New York, NY, USA, 1–12. https://doi.org/10.1145/3290605.3300828

- Kwon et al. (2019) Young D. Kwon, Dimitris Chatzopoulos, Ehsan ul Haq, Raymond Chi-Wing Wong, and Pan Hui. 2019. GeoLifecycle: User Engagement of Geographical Exploration and Churn Prediction in LBSNs. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 3, 3, Article 92 (Sept. 2019), 29 pages. https://doi.org/10.1145/3351250

- Langley (2006) D Langley. 2006. The student engagement index: A proposed student rating system based on the national benchmarks of effective educational practice. University of Minnesota: Center for Teaching and Learning Services (2006).

- Lee et al. (2019) Hyunsoo Lee, Uichin Lee, and Hwajung Hong. 2019. Commitment Devices in Online Behavior Change Support Systems. In Proceedings of Asian CHI Symposium 2019: Emerging HCI Research Collection (Glasgow, Scotland, United Kingdom) (AsianHCI ’19). Association for Computing Machinery, New York, NY, USA, 105–113. https://doi.org/10.1145/3309700.3338446

- LeetCode (2019) LeetCode. 2019. LeetCode. Retrieved January 24, 2019 from https://leetcode.com/

- Lessel et al. (2019) Pascal Lessel, Maximilian Altmeyer, Lea Verena Schmeer, and Antonio Krüger. 2019. “Enable or Disable Gamification?”: Analyzing the Impact of Choice in a Gamified Image Tagging Task. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (Glasgow, Scotland, UK) (CHI ’19). Association for Computing Machinery, New York, NY, USA, 1–12. https://doi.org/10.1145/3290605.3300380

- Liu et al. (2020) Zipeng Liu, Zhicheng Liu, and Tamara Munzner. 2020. Data-Driven Multi-Level Segmentation of Image Editing Logs. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (Honolulu, HI, USA) (CHI ’20). Association for Computing Machinery, New York, NY, USA, 1–12. https://doi.org/10.1145/3313831.3376152

- Maity et al. (2017) Suman Kalyan Maity, Aishik Chakraborty, Pawan Goyal, and Animesh Mukherjee. 2017. Detection of Sockpuppets in Social Media. In Companion of the 2017 ACM Conference on Computer Supported Cooperative Work and Social Computing (Portland, Oregon, USA) (CSCW ’17). Association for Computing Machinery, New York, NY, USA, 243–246. https://doi.org/10.1145/3022198.3026360

- Maldonado-Mahauad et al. (2018) Jorge Maldonado-Mahauad, Mar Pérez-Sanagustín, René F. Kizilcec, Nicolás Morales, and Jorge Munoz-Gama. 2018. Mining theory-based patterns from Big data: Identifying self-regulated learning strategies in Massive Open Online Courses. Computers in Human Behavior 80 (2018), 179–196. https://doi.org/10.1016/j.chb.2017.11.011

- Masaki et al. (2020) Hiroaki Masaki, Kengo Shibata, Shui Hoshino, Takahiro Ishihama, Nagayuki Saito, and Koji Yatani. 2020. Exploring Nudge Designs to Help Adolescent SNS Users Avoid Privacy and Safety Threats. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (Honolulu, HI, USA) (CHI ’20). Association for Computing Machinery, New York, NY, USA, 1–11. https://doi.org/10.1145/3313831.3376666

- McPherson et al. (2001) Miller McPherson, Lynn Smith-Lovin, and James M Cook. 2001. Birds of a feather: Homophily in social networks. Annual Review of Sociology 27, 1 (2001), 415–444.

- Miri et al. (2020) Pardis Miri, Emily Jusuf, Andero Uusberg, Horia Margarit, Robert Flory, Katherine Isbister, Keith Marzullo, and James J. Gross. 2020. Evaluating a Personalizable, Inconspicuous Vibrotactile (PIV) Breathing Pacer for In-the-Moment Affect Regulation. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (Honolulu, HI, USA) (CHI ’20). Association for Computing Machinery, New York, NY, USA, 1–12. https://doi.org/10.1145/3313831.3376757

- Mogavi et al. (2019) Reza Hadi Mogavi, Sujit Gujar, Xiaojuan Ma, and Pan Hui. 2019. HRCR: Hidden Markov-Based Reinforcement to Reduce Churn in Question Answering Forums. In PRICAI 2019: Trends in Artificial Intelligence. Springer International Publishing, 364–376. https://doi.org/10.1007/978-3-030-29908-8_29

- Morgan et al. (2018) Michael Morgan, Matthew Butler, Neena Thota, and Jane Sinclair. 2018. How CS Academics View Student Engagement. In Proceedings of the 23rd Annual ACM Conference on Innovation and Technology in Computer Science Education (Larnaca, Cyprus) (ITiCSE 2018). ACM, New York, NY, USA, 284–289. https://doi.org/10.1145/3197091.3197092

- Moubayed et al. (2020) Abdallah Moubayed, Mohammadnoor Injadat, Abdallah Shami, and Hanan Lutfiyya. 2020. Student Engagement Level in an e-Learning Environment: Clustering Using K-means. American Journal of Distance Education 34, 2 (March 2020), 137–156. https://doi.org/10.1080/08923647.2020.1696140

- Murauer and Specht (2018) Benjamin Murauer and Günther Specht. 2018. Detecting Music Genre Using Extreme Gradient Boosting. In Companion Proceedings of The Web Conference 2018 (Lyon, France) (WWW ’18). International World Wide Web Conferences Steering Committee, Republic and Canton of Geneva, CHE, 1923–1927. https://doi.org/10.1145/3184558.3191822

- Nagrecha et al. (2017) Saurabh Nagrecha, John Z. Dillon, and Nitesh V. Chawla. 2017. MOOC Dropout Prediction: Lessons Learned from Making Pipelines Interpretable. In Proceedings of the 26th International Conference on World Wide Web Companion (Perth, Australia) (WWW ’17). International World Wide Web Conferences Steering Committee, Republic and Canton of Geneva, CHE, 351–359. https://doi.org/10.1145/3041021.3054162

- Naito et al. (2018) Junpei Naito, Yukino Baba, Hisashi Kashima, Takenori Takaki, and Takuya Funo. 2018. Predictive modeling of learning continuation in preschool education using temporal patterns of development tests. In Thirty-Second AAAI Conference on Artificial Intelligence.

- Nguyen et al. (2016) Tuan Dinh Nguyen, Marisa Cannata, and Jason Miller. 2016. Understanding student behavioral engagement: Importance of student interaction with peers and teachers. The Journal of Educational Research 111, 2 (Oct. 2016), 163–174. https://doi.org/10.1080/00220671.2016.1220359

- Okamoto (2017) Kenji Okamoto. 2017. Analyzing Single Molecule FRET Trajectories Using HMM. In Hidden Markov Models. Springer New York, 103–113. https://doi.org/10.1007/978-1-4939-6753-7_7

- Ouimet and Smallwood (2005) Judith A Ouimet and Robert A Smallwood. 2005. Assessment Measures: CLASSE–The Class-Level Survey of Student Engagement. Assessment Update 17, 6 (2005), 13–15.

- Pace (2004) Steven Pace. 2004. A grounded theory of the flow experiences of Web users. International Journal of Human-Computer Studies 60, 3 (2004), 327–363. https://doi.org/10.1016/j.ijhcs.2003.08.005

- Pan et al. (2020) Zilong Pan, Chenglu Li, and Min Liu. 2020. Learning Analytics Dashboard for Problem-Based Learning. In Proceedings of the Seventh ACM Conference on Learning @ Scale (Virtual Event, USA) (L@S ’20). Association for Computing Machinery, New York, NY, USA, 393–396. https://doi.org/10.1145/3386527.3406751

- Park et al. (2011) Sira Park, Susan D. Holloway, Amanda Arendtsz, Janine Bempechat, and Jin Li. 2011. What Makes Students Engaged in Learning? A Time-Use Study of Within- and Between-Individual Predictors of Emotional Engagement in Low-Performing High Schools. Journal of Youth and Adolescence 41, 3 (Dec. 2011), 390–401. https://doi.org/10.1007/s10964-011-9738-3

- Parsons et al. (2015) Sophie Parsons, Peter M. Atkinson, Elena Simperl, and Mark Weal. 2015. Thematically Analysing Social Network Content During Disasters Through the Lens of the Disaster Management Lifecycle. In Proceedings of the 24th International Conference on World Wide Web (Florence, Italy) (WWW ’15 Companion). ACM, New York, NY, USA, 1221–1226. https://doi.org/10.1145/2740908.2741721

- Pereira et al. (2019) Filipe D. Pereira, Elaine Oliveira, Alexandra Cristea, David Fernandes, Luciano Silva, Gene Aguiar, Ahmed Alamri, and Mohammad Alshehri. 2019. Early Dropout Prediction for Programming Courses Supported by Online Judges. In Lecture Notes in Computer Science. Springer International Publishing, 67–72. https://doi.org/10.1007/978-3-030-23207-8_13

- Petit et al. (2012) Jordi Petit, Omer Giménez, and Salvador Roura. 2012. Jutge.Org: An Educational Programming Judge. In Proceedings of the 43rd ACM Technical Symposium on Computer Science Education (Raleigh, North Carolina, USA) (SIGCSE ’12). ACM, New York, NY, USA, 445–450. https://doi.org/10.1145/2157136.2157267

- Playground (2019) Math Playground. 2019. Math Playground. Retrieved January 24, 2019 from https://www.mathplayground.com/

- Pudipeddi et al. (2014) Jagat Sastry Pudipeddi, Leman Akoglu, and Hanghang Tong. 2014. User Churn in Focused Question Answering Sites: Characterizations and Prediction. In Proceedings of the 23rd International Conference on World Wide Web (Seoul, Korea) (WWW ’14 Companion). ACM, New York, NY, USA, 469–474. https://doi.org/10.1145/2567948.2576965

- Qiu et al. (2016) Jiezhong Qiu, Jie Tang, Tracy Xiao Liu, Jie Gong, Chenhui Zhang, Qian Zhang, and Yufei Xue. 2016. Modeling and Predicting Learning Behavior in MOOCs. In Proceedings of the Ninth ACM International Conference on Web Search and Data Mining (San Francisco, California, USA) (WSDM ’16). Association for Computing Machinery, New York, NY, USA, 93–102. https://doi.org/10.1145/2835776.2835842

- Rezapour and Diesner (2017) Rezvaneh Rezapour and Jana Diesner. 2017. Classification and Detection of Micro-Level Impact of Issue-Focused Documentary Films Based on Reviews. In Proceedings of the 2017 ACM Conference on Computer Supported Cooperative Work and Social Computing (Portland, Oregon, USA) (CSCW ’17). Association for Computing Machinery, New York, NY, USA, 1419–1431. https://doi.org/10.1145/2998181.2998201

- Rodriguez et al. (2009) Juan D Rodriguez, Aritz Perez, and Jose A Lozano. 2009. Sensitivity analysis of k-fold cross validation in prediction error estimation. IEEE Transactions on Pattern Analysis and Machine Intelligence 32, 3 (2009), 569–575.

- Romero and Ventura (2016) Cristóbal Romero and Sebastián Ventura. 2016. Educational data science in massive open online courses. Wiley Interdisciplinary Reviews: Data Mining and Knowledge Discovery 7, 1 (Sept. 2016), e1187. https://doi.org/10.1002/widm.1187

- Rowe (2013) Matthew Rowe. 2013. Mining user lifecycles from online community platforms and their application to churn prediction. In 2013 IEEE 13th International Conference on Data Mining. IEEE, 637–646.

- Saenz et al. (2011) Victor B. Saenz, Deryl Hatch, Beth E. Bukoski, Suyun Kim, Kye hyoung Lee, and Patrick Valdez. 2011. Community College Student Engagement Patterns. Community College Review 39, 3 (July 2011), 235–267. https://doi.org/10.1177/0091552111416643

- Sato et al. (2019) Koya Sato, Mizuki Oka, and Kazuhiko Kato. 2019. Early Churn User Classification in Social Networking Service Using Attention-Based Long Short-Term Memory. In Lecture Notes in Computer Science. Springer International Publishing, 45–56. https://doi.org/10.1007/978-3-030-26142-9_5

- Shang and Cavanaugh (2008) Junfeng Shang and Joseph E. Cavanaugh. 2008. Bootstrap variants of the Akaike information criterion for mixed model selection. Computational Statistics & Data Analysis 52, 4 (2008), 2004–2021. https://doi.org/10.1016/j.csda.2007.06.019