Change point detection and image segmentation for time series of astrophysical images

Abstract

Many astrophysical phenomena are time-varying, in the sense that their intensity, energy spectrum, and/or the spatial distribution of the emission suddenly change. This paper develops a method for modeling a time series of images. Under the assumption that the arrival times of the photons follow a Poisson process, the data are binned into 4D grids of voxels (time, energy band, and x-y coordinates), and viewed as a time series of non-homogeneous Poisson images. The method assumes that at each time point, the corresponding multi-band image stack is an unknown 3D piecewise constant function including Poisson noise. It also assumes that all image stacks between any two adjacent change points (in time domain) share the same unknown piecewise constant function. The proposed method is designed to estimate the number and the locations of all the change points (in time domain), as well as all the unknown piecewise constant functions between any pairs of the change points. The method applies the minimum description length (MDL) principle to perform this task. A practical algorithm is also developed to solve the corresponding complicated optimization problem. Simulation experiments and applications to real datasets show that the proposed method enjoys very promising empirical properties. Applications to two real datasets, the XMM observation of a flaring star and an emerging solar coronal loop, illustrate the usage of the proposed method and the scientific insight gained from it.

1 Introduction

Many phenomena in the high-energy universe are time-variable, from coronal flares on the smallest stars to accretion events in the most massive black holes. Often, this variability can just be seen “by-eye” but at other times, we need to use robust methods founded in statistics to distinguish random noise from significant variability. Realizing where the change has occurred is critical for subsequent scientific analyses, e.g., spectral fitting and light curve modeling. Such analyses must focus on those intervals in data space which are properly tied to the changes in the physical processes that generate the observed photons. Therefore, it is of importance to identify sources as well as to locate their spatial boundaries. Our goal is to detect change points in the time direction; that is, the times at which sudden changes happened during the underlying astrophysical process.

Change point detection in time series is well studied, and several algorithms employing different philosophies have been developed. For example, Aue & Horváth (2013) employed hypothesis testing to study structural break detection, using both non-parametric approaches like cumulative sum (CUSUM) and parametric methods like likelihood ratio statistic to deal with different kinds of structural breaks. Another likelihood-based approach commonly used to analyze astronomical time series is Bayesian Blocks (Scargle et al., 2013) which finds change points by fitting piecewise constant models between change points. A good example of the model driven approach is the Auto-PARM procedure developed by Davis et al. (2006). By modeling the piecewise-stationary time series, the procedure is able to simultaneously estimate the number of change points, their locations and the parametric model for each piece. Here the minimum description length (MDL) principle by Rissanen (1989, 2007) is applied in the model selection procedure. Davis & Yau (2013) proved the strong consistency of the MDL-based change point detection procedure. Another example is Automark by Wong et al. (2016), who developed an MDL-based methodology which detects the changes in observed emission from astronomical sources in 2-D time-wavelength space.

Dey et al. (2010) loosely classified image segmentation techniques into two categories: (i) image driven approaches and (ii) model driven approaches. Image driven segmentation techniques are mainly based on the discrete pixel values of the image. For example, the graph-based algorithm by Felzenszwalb & Huttenlocher (2004) treats pixels as vertices, and the weights of the edges are based on the similarity between the features of pixels. The evidence for a boundary between two regions can be measured based on the graph. Such methods work in many complicated cases as there is no underlying model for images. Model driven approaches rely upon the information of the structure of the image. These methods are based on the assumption that the pixels in the same region have similar characteristics. The Blobworld framework by Carson et al. (1999) assumes that the features of pixels are from a underlying multivariate Gaussian mixture model. Neighboring pixels whose features are from the same Gaussian distribution are grouped into the same region.

Here we follow the model driven approach. In order to develop a change point detection method for image time series data, we begin by specifying an underlying statistical model for the images between any two consecutive change points. In doing so we also study the statistical properties of the change point detection method. We assume the underlying Poisson rate for each of the images follows a piecewise constant function. Therefore, the region growing algorithm developed by Adams & Bischof (1994) for greyvalue images can be naturally applied.

Given the previous successes of applying the MDL principle (Rissanen, 1989, 2007) to other time series change point detection and image segmentation problems (e.g., Davis et al., 2006; Lee, 2000; Wong et al., 2016), here we also use MDL to tackle our problem of joint change point detection and image segmentation for time series of astronomical images. Briefly, MDL defines the best model as the one that produces the best lossless compression of the data. There are different versions of MDL, and the one we use is the so-called two-part code; a gentle introduction can be found in Lee (2001). When comparing with other versions of MDL such as normalized maximum likelihood, one advantage of the two-part version is that it tends to be more computationally tractable for complex problems such as the one this paper considers. It has also been shown to enjoy excellent theoretical and empirical properties in other model selection tasks (e.g., Aue & Lee, 2011; Lee, 2000; Davis et al., 2006; Davis & Yau, 2013) Based on MDL, we develop a practical algorithm that can be applied to simultaneously estimate the number and locations of the change points, as well as to perform image segmentation on each of the images.

2 Methodology

Our method is applied to 4-D data cubes where 2-D spatial slices in several energy passbands are stacked in time. Such data cubes are commonly available, though in high-energy astrophysics, data are usually obtained in the form of a list of photons. The list contains the two-dimensional spatial coordinates where the photons were recorded on the detector, the times they were recorded, and their energies or wavelengths. To facilitate our analysis, we bin these data into a 4-D rectangular grid of boxes. After the binning of the original data, we obtain a 4D table of photon counts indexed by the two-dimensional coordinates , time index and energy band . The dataset is thus a series of multi-band images with counts of photons as the values of the pixels. Since the emission times of photons can be considered a non-homogeneous Poisson process, and the grids do not overlap with each other, the counts in each pixel are independent, and the image slices are also independent.

We first partition these images into a set of non-overlapping region segments using a seeded region growing (SRG) method, and then merging adjacent segments to minimize MDL (see Section 2.1). The counts in each segment are modeled as Poisson counts (see Section 2.2; the implementation details of the algorithm are described in Section 2.3). We minimize the MDL criterion across the images by iteratively removing change points along the time axis and applying the SRG segmentation onto the images in each of the time intervals. Key pixels that are influential in how the segmentations and change points are determined are then identified through searching for changes in the fitted intensities (see Section 2.4). Such regions are the focus of follow-up analyses.

We list the variables, parameters, and notation used here in Table 1.

| Notation | Definition |

|---|---|

| number of pixels in each 2-D spatial image | |

| number of time bins | |

| number of energy bands | |

| duration of the time bin | |

| photon counts within the spatial pixel, the time interval and the energy range | |

| Poisson rate for the spatial pixel, the time interval and the energy range | |

| number of change points | |

| location of the change point | |

| number of region segments for the interval between two consecutive change points | |

| the area (number of pixels) of the region segment of the interval between two consecutive change points | |

| the “perimeter” (number of pixel edges between this and neighboring regions) of the region of the interval | |

| Poisson rate for the region segment and the energy range of the interval | |

| fitted Poisson rate for the region segment and the energy range of the interval |

2.1 Region Growing and Merging

As a first step in the analysis, a suitable segmentation method must be applied to the images to delineate regions of interest (ROIs). For this, we use the seeded region growing (SRG) method of Adams & Bischof (1994) to obtain a segmentation of the image. We choose SRG over other image segmentation algorithms for its speed and reliability (Fan & Lee, 2015). Also, it can be straightforwardly incorporated to the Poisson setting.

At the beginning of SRG, we select a set of seeds, manually or automatically, from the image. Each seed can be a single pixel or a set of connected pixels. A seed comprises an initial region. Then each region starts to grow outward until the whole image is covered. (See Section 2.3.2 offers some suggestions on the selection of initial seeds.)

At each step, the unlabelled pixels which are neighbors to at least one of the current regions comprise the set of candidates for growing the region. One of these candidates is selected to merge into the region, based on the Poisson likelihood that measures the similarity between a candidate pixel and the corresponding region. We repeat this process until all the pixels are labeled, thus producing an initial segmentation by SRG.

At the end of the SRG process, we are left with an oversegmentation, i.e., with the image split into a larger than optimal number of segments. We then merge these segments based on the largest reduction or smallest increase in the MDL criterion (see below). From this sequence of segmentations, we select the one that gives the smallest value of the MDL criterion as the final ROIs.

2.2 Modeling a Poisson Image Series

2.2.1 Input Data Type

We require that the data are binned into photon counts in an tensor , where is the photon counts within the spatial rectangular region, the time interval and the energy range . After binning, the data can be viewed as a time series of images in different energy bands. The values of each pixel are the photon counts in the different bands in the corresponding spatial region.

Notice that compared with Automark (Wong et al., 2016), we incorporate 2-D spatial information into the model, thus extending the analysis from two (wavelength/energy and time) to four dimensions (wavelength/energy, time, and projected sky location). We also relax the restriction that the bin sizes along any of the axes are held fixed. Thus, sharp changes are more easily detected.

As the data in high-energy astrophysics are photon counts, we use a Poisson process to model the data,

| (1) |

where .

Our goal is to infer model intensities from the observed counts data . We are especially interested in detecting significant changes of over time. If there are changes, we also want to estimate the number and locations of the change points.

2.2.2 Piecewise Constant Model

First consider a temporally homogeneous Poisson model without any change points. Then each image can be treated as an independent Poisson realization of the same, unknown, true image.

We model the image as a 3-dimensional piecewise constant function. That is, the 2-dimensional space of - coordinates is partitioned into non-overlapping regions such that all the pixels in a given region have the same Poisson intensity. Different energy bands share the same spatial partitioning. Rigorously, the Poisson parameter can be written as a summation of region-specific Poisson rates times the corresponding indicator functions of regions ( is 1 for pixel in region and 0 otherwise) in the following format:

| (2) |

Here means “the pixel in the region” and is the indicator function. is the index set of the pixels within the region, with . Also, is the Poisson rate for the band of the region. The partition of the image is specified by .

2.2.3 Adding Change Points to the Model

Now we allow the underlying Poisson parameter to change over time . We model as a piecewise constant function of .

Suppose these images can be partitioned into homogeneous intervals by change points

For the image, suppose that it belongs to the time interval; i.e., . For each given , let be a two-dimensional piecewise constant function with constant regions. Then can be represented by

| (3) |

where is the number of regions within the interval. Let . The partition of the images within interval is specified by . And the overall partition is . The Poisson rates is the value for the band in the region of the interval. Let . And let , and means “the pixel is in the region of the interval”.

2.2.4 Model Selection Using MDL

Given the observed images , we aim to obtain an estimate of . In other words, we want an estimate of the image partitions and the Poisson rates of the regions for each band. It is straightforward to estimate the Poisson intensities given the region partitioning, but the partitioning is a much more complicated model selection problem.

We will apply MDL to select the best fitting model. Loosely speaking, the idea behind MDL for model selection is to first obtain a MDL criterion for each possible model, and then define the best fitting model as the minimizer of this criterion. MDL defines the best model as the one that produces the best compression of the data. The criterion can be treated as the code length, or amount of hardware memory required to store the data.

First we present the MDL criterion for the homogeneous Poisson model, then follow it by the MDL criterion for the general case (i.e., with change points).

Following similar arguments as in Lee (2000) (see their Appendix B), the MDL criterion for segmenting homogeneous images is

where and are, respectively, the “area” (number of pixels) and “perimeter” (number of pixel edges) of region , and

| (5) |

is the maximum likelihood estimate of the Poisson rate in the corresponding region. Note that the indices of run over the region segments and the passbands .

For the Poisson model with change points, once the number of change points and the locations are specified, for each , and can be estimated independently. Using the previous argument, the MDL criterion for images within the same homogeneous interval is

| (6) | |||||

Then the overall MDL criterion for the model with change points is

| (7) | |||||

To sum up, using the MDL principle, the best-fit model is defined as the minimizer of the criterion (7). The next subsection presents a practical algorithm for carrying out this minimization.

2.2.5 Statistical Consistency

An important step to demonstrating the efficacy of our method is to establish its statistical consistency. That is, if it is shown that as the size of the data increases, the differences between the estimated model parameters and the true values decrease to zero, then the method can be said to be free of asymptotic bias, can be applied in the general case, and is elevated above a heuristic. We prove in Appendix A that the MDL-based model selection to choose the region partitioning, as well as the corresponding Poisson intensity parameters, is indeed strongly statistically consistent under mild assumptions of maintaining the temporal variability structure of .

2.3 Practical Minimization

2.3.1 An Iterative Algorithm

Given its complicated structure, global minimization of (Equation 7) is virtually infeasible when the number of images and the number of pixels are not small, because the time complexity of the exhaustive search is of order .

We iterate the following two steps to (approximately) minimize the MDL criterion (7).

-

1.

Given a set of change points, apply the image segmentation method to all the images belonging to the first homogeneous time interval and obtain the MDL best-fitting image for this interval. Repeat this for all remaining intervals. Calculate the MDL criterion (7).

-

2.

Modify the set of change points by, for example, adding or removing one change point. In terms of what modification should be made, we use the greedy strategy to select the one that achieves the largest reduction of the overall MDL value in (7).

For Step 1 we begin with a large set of change points (i.e., an over-fitted model). In Step 2 we remove one change point (i.e., merge two neighboring intervals) to maximize the reduction of the MDL value. The procedure stops and declares the optimization is done if no further MDL reduction can be achieved by removing change points. This is similar to backward elimination for statistical model selection problems. See Figure 1 for a flowchart of the whole procedure.

2.3.2 Practical Considerations

Here we list some practical issues that are crucial to the success of the above minimization algorithm.

Initial seed allocation for SRG: The selection of the initial seeds in SRG plays an important role in the performance of the algorithm. To obtain a good initial oversegmentation, there must be at least one seed within each true region. Currently we use all the local maxima as well as a subset of the square lattice as the initial seeds. Based on simulation results (see Section 3.2), when the number of initial seeds is inadequate, the SRG will underfit the images, which will in turn lead to an overfitting of the change points and will lead to an increased false positive rate. On the other hand, it could be time-consuming when the number of initial seeds is very large, especially for high resolution images. We developed an algorithm that allocates initial seeds automatically based on locating local maxima. However, an optimal selection of initial seeds almost certainly requires expert intervention, as it depends on the type of data that we work with. To reduce the chance of obtaining a poor oversegmentation with SRG, in practice one could try using different sets of initial seeds and select the oversegmentation that gives the smallest MDL value.

Counts per bin: The photon counts in the image pixels cannot be too small, otherwise the algorithm could fail to produce meaningful output; see Section 3.2. In some sense, small photon counts can be seen as low signal level, which means that the proposed method requires a minimum level of signal to operate with. Therefore, care must be exercised when deciding the size for the bin. As a rule of thumb, it should be enough to have around 100 counts for each pixel belonging to an astronomical object, while pixels from the background can have very low or even zero count.

Initial change point selection: Although the stepwise greedy algorithm is capable of saving a significant amount of computation time, it could still be time consuming if the initial set of change points is too large, as it might need many iterations to reach a local minimum. It is recommended to select the initial change points based on prior knowledge, if available, in order to accelerate the algorithm.

Computation Time: In each iteration, the main time-consuming part is to apply the SRG. When the total number of pixels and the number of seeds are large, during the process, the number of candidates is large. Therefore, the comparison among all the candidates and the following updating manner lead to most of the computation burden. As an example, we found that it takes about 40 minutes for applying SRG and merging once on images with about 200 seeds on a Linux machine with an octa-core 2.90 GHz Intel Xeon processor.

2.4 Highlighting the Key Pixels

After the change points are located, it is necessary to locate the pixels or regions that contribute to the estimation of the change points. The manner by which such key pixels are identified depends on the scientific context. Below we present two methods that are applicable to the real-world examples we discuss in Section 4

We focus here on images in a single passband, i.e., grey-valued images. For multi-band images, one can first transform a multi-band image into a single-band image by, for example, summing the pixel values in different bands, or by using only first principal component image of the multi-band image. Alternatively, one can also apply the method to each band individually, and merge the results from each band.

2.4.1 Based on Pixel Differences

The first method is to highlight key pixels based on the distribution of pixel differences before and after change points. The rationale is that a pixel with different fitted values before and after a change point is a strong indicator that it is a key pixel.

Suppose the fitted values for pixel in time intervals and are and , respectively. Given the Poisson nature of the data, we first apply a square-root transformation to normalize the fitted values. Define the difference for pixel as

| (8) |

A pixel is labelled as a key pixel if its is far away from the mean of all the differences. To be specific, pixel is labelled as a key pixel if

| (9) |

where and . Here is the median absolute deviation with , and is used to obtain a robust estimate (i.e., a measure that minimizes the effect of outliers) of the standard deviation of the ’s (see e.g., Rousseeuw & Croux, 1993). is the quantile of the standard normal distribution, and is the pre-specified significance level111 is related to the standard Normal error function, with exemplar values . We typically choose as our threshold.. Notice that by checking the sign of , we can deduce if pixel has increased or decreased after this change point.

2.4.2 Based on Region Differences

Another method to locate key pixels is to compare pairs of regions. For any region in the time interval , there must exist at least one region in time interval such that these two regions have overlapping pixels. We then test if the difference between the means of the pixels from these two regions is significant or not.

As before, we apply the square-root transformation to the pixels within each of the regions. Then we calculate the sample means and and sample variances and of these two groups of square-rooted values. Then we can for example test whether the difference between and is large enough with

| (10) |

See Section 4 for the applications of these two methods on some real data sets.

Lastly we note that the selection of , the significance level, deserves a more careful consideration. As in reality one may need to do comparisons for many change points and energy bands, this becomes a multiple-testing problem where the number of tests is large. Therefore, one should adjust the value of in order to control false positives.

3 Simulations

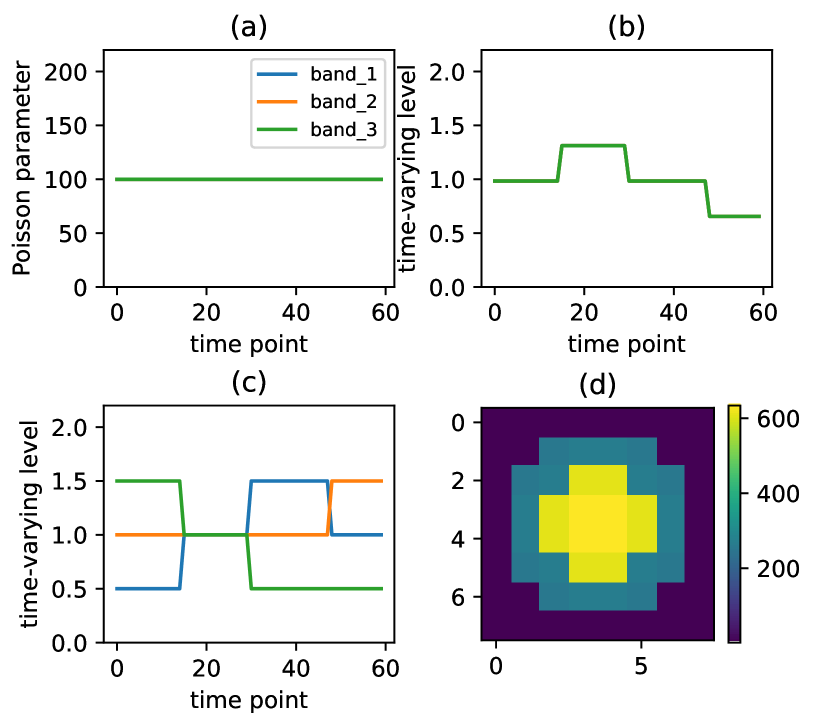

Two groups of simulations were conducted to evaluate the empirical performance of the proposed method. A specially designed was used for each of the experiments. For each experiment, we tested 13 signal levels, defined as the average number of photon counts per pixel. For each signal level, 100 datasets were generated according to (1), with . The number of spectral bands so and the number of time points .

3.1 Group 1: Single Pixel

The first group of experiments were designed to evaluate the ability of the proposed method for detecting change points, under the condition that there are no spatial variations. To be more specific, the size of the images is ; i.e., only one pixel. In other words, and the in is a dummy index. Three ’s of increasing complexity were used:

-

1.

is constant; i.e., no change point (as depicted in Figure 2 (a)).

-

2.

shows intensity changes but all three bands are identical at any given (see Figure 2 (b)).

-

3.

shows spectral changes (see Figure 2 (c)).

The first was used to study the level of false positives, while the remaining two were used to study false negatives.

3.1.1 No Change Point

As there is no change point in , this experiment is ideal for studying the relationship between the false positive rate and the signal level; recall the latter is defined as the average number of photon counts per pixel.

The results of this experiment (together with the next two experiments) are summarized as the blue curves in Figure 3. The figure captures how well the simulation recovers the location of the change points (top left), the number of change points (top right), the excess number of change points (false positives; bottom left), and the deficit in change points (false negatives; bottom right). The top left plot reports the fraction of simulated datasets for which the set of the fitted change points is identical to the set of true change points . The top right plot presents the fraction of simulated datasets for which the fitted number of change points equals to the true number of change points . The bottom left plot shows the average false positive rate, which is defined as the average number of falsely detected change points per possible location. The bottom right plot presents the fraction of simulated datasets for which contains , i.e., . One can see that the false discovery rate seems to be quite stable across different signal levels. Notice that the last curve is always 1 because is empty for this experiment.

3.1.2 Varying Intensity

In this experiment we introduced variation in by multiplying (a) and (b) in Figure 2 together. The results are reported as the green curve in Figure 3. One can see that when the signal level is small (), increasing the signal level leads to more false positives: this range of signals levels are too small to provide enough information for the detection of the true change points.

When is between and , the proposed method starts to be able to detect the true change points as the signal level increases. Also, the false positive rate begins to drop. One possible explanation is that for any two consecutive homogeneous time intervals, the location of the change point might not be clear when the signal level is relatively small.

As the signal level continues to increase, the proposed method becomes more successful in detecting the true change point locations. For example, when the average number of counts in each bin is greater than 100 (i.e., ), the signal is strong enough so that all the true change points can be detected successfully. We note that there are always some false positives due to the Poisson randomness, and it seems that the false positive rate stabilizes as the signal level increases.

3.1.3 Varying Spectrum

Here we allow different bands to change differently at the change points. The rate was obtained by multiplying (a) and (c) in Figure 2 together. The results, which are about the same as the previous experiment (varying intensity), are reported as the red curve in Figure 3. When the signal level is small (), the proposed method fails to detect the true change points. When is between and , as the signal level increases, the false positive rate begins to decrease while the true positive rate increases. When , all the true change points can be detected successfully, while the false positive rate stays at the same level as signal level increases.

3.2 Group 2: Spatial Structure

Instead of having a constant spatial signal (i.e., single pixel), in this second group of experiments a spatial varying structure is introduced to study the empirical performance of the proposed method. As before, three Poisson rate functions are considered. The size of the image is set to . To illustrate the importance of initial seed placement, we tested two allocation strategies: (i) we deliberately placed an inadequate number of initial seeds and (ii) we used every pixel as an initial seed.

3.2.1 No Change Point

There was no change point in this experiment and the spatial variation of is given in the bottom right plot of Figure 2. The results are reported as the blue curves in Figure 4. One can see that if the number of initial seeds is inadequate, the false positive rate increases as the signal level increases above . However, this does not happen when there are a large number of initial seeds; see the blue dotted curves in Figure 4. In fact, for this and the following two experiments, our method did not detect any false positive change points. This suggests that when the images are under-segmented, the method tends to place more false change points to compensate for data variability not explainable by image segmentation.

3.2.2 Varying Intensity

3.2.3 Varying Spectrum

3.3 Empirical Conclusions

The following empirical conclusions can be drawn from the above experimental results.

-

•

The method works well in all cases when the signal level is sufficiently large. As a rule of thumb, for binning of the original data, it would be ideal to have 100 counts or more for each bin covering an astronomical source.

-

•

It is important to place enough initial seeds when applying SRG; otherwise the false positive rate will increase with the signal level. See the second paragraph of Section 2.3.2 for some practical guidelines for initial seed selection.

4 Applications to Real Data

To illustrate the usage in the astrophysics field, we apply the proposed method on two real datasets, which are more complicated than those in the previous section. Specifically, we select these datasets with some obvious time-evolving variations to demonstrate the performance of our method.

4.1 XMM-Newton Observations of Proxima Centauri

Proxima Centauri (catalog ) is the nearest star to the Sun and as such is well suited for studies of coronal activity. Like our Sun, Proxima Centauri operates an internal dynamo, which generates a stellar magnetic field. In the standard model for stellar dynamos, the magnetic field lines wind up through differential rotation. When some of the magnetic field lines reconnect, the energy is released in the stellar flare. Such flares typically show a sudden rise in X-ray emission and a more gradual decay over several hours. In flares, flux and temperature are correlated such that a higher X-ray flux corresponds to a higher temperature and thus a higher energy of the average detected photons (see Güdel, 2004, for a review of X-ray emission in stellar coronae and further references).

Despite its proximity, Proxima Centauri and its corona are unresolved in X-ray observations; it is just the point-spread function (PSF) of the telescope that distributes the incoming flux over many pixels on the detector.

4.1.1 Data

We use a dataset from XMM-Newton (Obs.ID 0049350101), where Proxima Centauri was observed for 67 ks on 2001-08-12. Because of the high flux, the MOS cameras on XMM-Newton are highly piled-up and we restrict our analysis to the data from the PN camera. We obtained the data from the XMM-Newton science archive hosted by the European Space Agency (ESA)222https://www.cosmos.esa.int/web/xmm-newton/xsa. The data we received was processed by ODS version 12.0.0. Our analysis is based on the filtered PN event data from the automated reduction pipeline (PPS). Güdel et al. (2002) presented a detailed analysis and interpretation of this dataset.

In our analysis, we only used a subset of photons with spatial coordinates within , and it was binned as images of size . We used the temporal bins of width seconds to generate images. We binned the data into three energy bands, , and in eV.

4.1.2 Results

Figure 5 presents the light curves for different bands as well as the locations of the detected change points. As there is only a single source of photons with negligible background signal level, the detected change points coincide with the changes of the light curves. Many change points are detected for the abrupt increase and then decrease in brightness for all the bands at the time points between 42 and 50. The time interval between 15 and 36 is detected as a homogeneous time interval, and the variation in light curves within this interval is viewed as common Poisson variations. A few change points are detected for the time interval before time point 15 and the interval after 50. A piecewise constant model is used to fit these gradual changes in intensities.

The fitted images can be found in Figure 6. The source of most photons, a point source, is modeled by different piecewise constant models at all these time intervals. The center of the point source and the wings of the PSF region nearby were fitted by models with shapes like concentric circles.

To test our method for finding the regions of significant change, we also apply it here because we know what the answer should be. For this dataset, as the observations for different bands change simultaneously, we combine all the three bands to highlight the key pixels. That is, we highlight the regions for each band and take the intersection of these regions. Examples of the results based on the method in Section 2.4.2 can be found in Figure 6. The method indeed picks out the point source. With significance level , the abrupt increase in brightness of the source at time point 41 and 42, as well as the sudden decrease at time point 43 can be detected successfully. By modifying the significance level, different sensitivity can be achieved.

4.2 Isolated Evolving Solar Coronal Loop

Images of the solar corona constitute a legitimate Big Data problem. Several observatories have been collecting images in extreme ultra-violet (EUV) filters and in X-ray passbands for several decades, and analyzing them to pick out interesting changes using automated routines have been largely unsuccessful. Catalogs like the HEK (Heliophysics Events Knowledgebase Hurlburt et al., 2012; Martens et al., 2012) can detect and mark features of particular varieties, though these compilations remain beset by incompleteness (see, e.g., Aggarwal et al., 2018; Hughes et al., 2019; Barnes et al., 2017). In this context, our method provides a way to model solar features without limiting it to a particular feature set to identify and locate regions in images where something interesting has transpired. As a proof of concept, we apply the method to a simple case of an isolated coronal loop filling with plasma, as observed with the Solar Dynamics Observatory’s Atmospheric Imaging Assembly (SDO/AIA) filters (Pesnell et al., 2012; Lemen et al., 2012). Considerable enhancements must still be made in order to lower the computational cost before the method can be applied to full size images at faster than observed cadence; however, we demonstrate here that a well-defined region of interest can be selected without manual intervention for a dataset that consists of images in several filters.

4.2.1 Data

In particular, here we consider AIA observations carried out on 2014-Dec-11 between 19:12 UT and 19:23 UT, and focus on a pixel region located from disk center, in which a small, isolated, well-defined loop appeared at approximately 19:19 UT. This region was selected solely as a test case to demonstrate our method; the appearance of the loop is clear and unambiguous, with no other event occurring nearby to confuse the issue; see Figure 7. We apply our method to these data, downloaded using the SDO AIA Cutout Service,333https://www.lmsal.com/get_aia_data/ and demonstrate that the loop (and it alone) is detected and identified; see Figures 8, 9 and 10. AIA data are available in 6 filter bands, centered at 211, 94, 335, 193, 131, 171 Å. Here, we have limited our analysis to 3 bands: 94, 335, and 131 Å in which the isolated loop is easily discernible to the eye (a full analysis including all the filters does not change the results). Each filter consists of a sequence of 54 images, and while they are not obtained simultaneously, the difference in time between the bands is ignorable on the timescale over which the loop evolves.

4.2.2 Results

The fitted images for the 3-band case can be found in Figure 8. Notice that there is a loop-shaped object that is of interest. Based on the fitted result, this object starts to appear at time point c.36 and becomes brighter after that for the first band. In the second band, this object appears at time point c.26 and stays bright throughout the duration considered. However in the third band, the object becomes bright at time point c.36 and vanishes soon after time point c.38. The proposed method is able to catch these changes in different bands and to detect the corresponding change points.

After detecting these change points, we find the key pixels that contribute to the change points using the methods in Section 2.4.1. This method is appropriate to highlight the regions that change rapidly after the change point because different bands may not change in the same direction for this dataset. Here we apply this method on a single band, 94, as an example. We use . See Figure 9 for an illustration. We find that the method could highlight the loop-shape object which starts to appear at time point c.36, and also detect the region that becomes much brighter after time point c.38. We also compute the light curves of the intensities in a region comprised of the set of pixels formed from the union of all key pixels found at all change points in all the filters; see Figure 10. Notice that the event of interest is fully incorporated within the key pixels, with no spillover into the background, and the change points are post facto found to be reasonably located from a temporal perspective in that they are located where a researcher seeking to manually place them would do so. The first segment is characterized by steady emission in all three bands, the second segment shows the isolated loop beginning to form, the third segment catches the time when it reaches a peak, and the last segment tracks the slow decline in intensity.

5 Summary

We have developed an approach to model photon emissions by astronomical sources. Also, we propose a practical algorithm to detect the change points as well as to segment the astronomical images, based on the MDL principle for model selection. We test this method on a series of simulation experiments and apply it to two real astrophysical datasets. We are able to recover the time-evolving variations.

Based on the results of simulation experiments, it is recommended that the average number of photon counts within each bin should be from 100 to 1000 for pixels belonging to an astrophysical object, so that the proposed method is able to find change points and limit false positives.

For future work, it will be helpful to quantify the evidence of the existence of a change point by deriving a test statistic based on Monte Carlo simulations or other methods. Another possible extension is to relax the piecewise constant assumption and allow piecewise linear/quadratic modeling so that the method is able to capture more complicated and realistic patterns.

Appendix A Statistical Consistency

Here we prove that the MDL scheme is statistically consistent (see Section 2.2.5), thereby ensuring that the estimates of region segmentations and the Poisson intensities are reliable measures of the data. In the following, we assume that the size of the time bins , as increases to infinity. That is to say, first, we study that as these underlying nonhomogeneous Poisson processes are extending at the same rate, the size of the bins keeps fixed, which leads to increasing number of independent observations for any given part of this Poisson process. And by keeping the size of the bins fixed, we get rid of the case that the Poisson parameters keep varying as increases. Second, by setting , the Poisson parameter is numerically equal to the Poisson rate, which ease the arguments. The proof can be extended if we relax this assumption.

Given the above assumption, the photon counts have the following Poisson model,

| (A1) |

Given change points , we set and , and let to be the normalized change points. The consistency results are based on ’s being fixed as increases.

The observed images within the same interval follow the same corresponding piecewise constant model given change points . Let , , and where .

Let ’s within the interval follow the same corresponding two-dimensional piecewise constant model with constant regions. The partition of the images within interval is specified by a region assignment function . The Poisson parameters is the value for the band in the region of the interval. Let . That is to say,

| (A2) |

In all, for pixel ,

| (A3) |

For each , the log-likelihood function for regions assignment and Poisson parameters is

| (A4) |

As some of the terms in the log-likelihood function have nothing to do with the parameters to estimate, we remove these terms and write down the log-likelihood to be

| (A5) |

Define to be the parameter set for the interval, and to be the class of models can take value from. Then the log-likelihood for the interval can be written as

| (A6) |

Let be the normalized change point location vector, and be the parameter vector. Then vector can specify a model for this sequence of images. The MDL is derived to be

| (A7) | |||||

Here the “area” (number of pixels) and “perimeter” (number of pixel edges) of region are denoted by and .

In order to make sure that the change points are identifiable, we assume that there exists a such that . Therefore, the number of change points is bounded by . And there exists a constraint of where

| (A8) |

Then the estimation of the model based on MDL is given by

| (A9) |

Here is defined in (A7), and , where . And is defined as

| (A10) |

with denotes the estimated interval of the sequence of images.

We further define the log-likelihood formed by a portion of the observations in the interval by

| (A11) |

where and .

We denote

| (A12) |

to simplify the notation.

In this setting, an extension need to be made such that and can be slightly outside . It means that the estimated interval could cover a part of the observations that belong to the and true intervals. Based on the formula (3.4) in Davis & Yau (2013), for a real-value function on ,

| (A13) |

is used to denote

| (A14) |

for any pre-specified positive-valued sequences and , which cover to 0 as .

The following assumptions on true Poisson parameters are necessary.

Assumption 1.

| (A15) |

Assumption 2.

For two true neighboring regions and at the interval,

| (A16) |

Assumption 3.

For any two neighboring intervals and

| (A17) |

Proposition A.1 (v).

For and any fixed , there exists a such that,

| (A18) |

This proposition holds for due to the compactness of parameter space (Assumption 1) and bounded .

Proposition A.2.

For and any fixed ,

| (A19) |

where

| (A20) |

The estimated locations of change points are used to define the likelihood in practice. Therefore, the two ends of the interval might contain observations from the and true intervals, though the estimated change points are close to the true change points. It is necessary to control the effect at the two ends of the fitted interval.

Proposition A.3 (w).

For and any fixed and any sequence of integers that satisfies for some when is large enough, then

| (A21) |

Based on Lemma 1 in Davis & Yau (2013), Proposition A.3 holds when Proposition A.1(2) holds and the Assumption 4* in Davis & Yau (2013) is satisfied. And Assumption 4* is satisfied because an independent process, like the current setting, must be mixing.

It is necessary to discuss the identifiability of models in . First we define an oversegmentation compared with if leads to .

Proposition A.4.

For the interval, the true model satisfies . Also, is uniquely identifiable, which means that if there exists a such that almost everywhere for , then . And suppose there exists another model such that almost everywhere, then must be an oversegmentation compared with . And satisfies .

Proof.

Suppose on the contrary there exist a model that satisfies , and is neither the true model nor an oversegmentation of the true model. Then there exist two pixels and , such that they are neighboring pixels, . Therefore, by Assumption 2, we have

| (A22) |

Define

| (A23) |

where denotes the number of pixels in region given segmentation . And in a special case,

| (A24) |

Then for all possible , we have

| (A25) |

Lemma 1.

For any fixed ,

| (A28) |

See Proposition 1 and 2 in Davis & Yau (2013) for the proof.

Lemma 2.

Suppose the true parameters for interval is . And suppose a region segmentation is specified for estimation. Let

| (A29) |

Then

| (A30) |

where the supremum is defined in (A13). And if , we further have

| (A31) |

If is an oversegmentation than , then we have

| (A32) |

Proof.

| (A33) |

The first inequity is obtained by the definition of maximum likelihood estimator, and the last convergence comes from Lemma 1. As maximizes and , we have

| (A34) |

Now we give a preliminary result of the convergence when the number of change points is known.

Theorem 1.

(Theorem 1 in Davis & Yau (2013)) Let be the observed images specified by . And suppose the number of change points is known. The change points and parameters are estimated by

| (A35) |

Then and for each interval, the estimated must be an oversegmentation comparing to the true region segmentation.

We skip the proof of this theorem because it is quite similar to the proof of Theorem 1 in Davis & Yau (2013). Notice that we need to use Assumption 3 in the proof.

Corollary 1.

(Corollary 1 in Davis & Yau (2013)) Under the conditions of Theorem 1, if the number of change- points is unknown and is estimated from the data , then

-

1.

The number of change points cannot be underestimated. That is to say, almost surely when is large enough.

-

2.

When , must be a subset of the limit of for large enough .

-

3.

In each fitted interval, the region segmentation must be equal to or be an oversegmentation comparing with the corresponding true region segmentation.

See Corollary 1 in Davis & Yau (2013) for more details.

Theorem 2.

(Theorem 2 in Davis & Yau (2013)) Let be the true change points. And is the MDL-based result. Then , there exists a where such that

| (A36) |

See the proof of Theorem 2 in Davis & Yau (2013).

Lemma 3.

Suppose the true region segmentation is specified for the interval, then

| (A37) |

When the specific region segmentation is an oversegmentation compared with , then we have

| (A38) |

See Lemma 2 in Davis & Yau (2013) for more details.

Then we come to the main result.

Theorem 3.

Let be the observed images specified by . The estimator is defined by (A9). Then we have

| (A39) |

See Theorem 3 in Davis & Yau (2013) for more details.

References

- Adams & Bischof (1994) Adams, R., & Bischof, L. 1994, IEEE Transactions on Pattern Analysis and Machine Intelligence, 641

- Aggarwal et al. (2018) Aggarwal, A., Schanche, N., Reeves, K. K., Kempton, D., & Angryk, R. 2018, ApJS, 236, 15, doi: 10.3847/1538-4365/aab77f

- Astropy Collaboration et al. (2013) Astropy Collaboration, Robitaille, T. P., Tollerud, E. J., et al. 2013, A&A, 558, A33, doi: 10.1051/0004-6361/201322068

- Astropy Collaboration et al. (2018) Astropy Collaboration, Price-Whelan, A. M., Sipőcz, B. M., et al. 2018, AJ, 156, 123, doi: 10.3847/1538-3881/aabc4f

- Aue & Horváth (2013) Aue, A., & Horváth, L. 2013, Journal of Time Series Analysis, 34, 1, doi: 10.1111/j.1467-9892.2012.00819.x

- Aue & Lee (2011) Aue, A., & Lee, T. C. M. 2011, The Annals of Statistics, 39, 2912

- Barnes et al. (2017) Barnes, G., Schanche, N., Leka, K. D., Aggarwal, A., & Reeves, K. 2017, in Astroinformatics, ed. M. Brescia, S. G. Djorgovski, E. D. Feigelson, G. Longo, & S. Cavuoti, Vol. 325, 201–204, doi: 10.1017/S1743921316012758

- Carson et al. (1999) Carson, C., Belongie, S., Greenspan, H., & Malik, J. 1999, IEEE Transactions on Pattern Analysis and Machine Intelligence, 167

- Davis et al. (2006) Davis, R. A., Lee, T. C. M., & Rodriguez-Yam, G. A. 2006, Journal of the American Statistical Association, 101, 223

- Davis & Yau (2013) Davis, R. A., & Yau, C. Y. 2013, Electronic Journal of Statistics, 7, 381

- Dey et al. (2010) Dey, V., Zhang, Y., & Zhong, M. 2010, The International Society for Photogrammetry and Remote Sensing Symposium (ISPRS) TC VII Symposium-100 Years ISPRS, Vienna, Austria, XXXVIII, 31

- Fan & Lee (2015) Fan, M., & Lee, T. C. 2015, IET Image Processing, 9, 478, doi: https://doi.org/10.1049/iet-ipr.2014.0490

- Felzenszwalb & Huttenlocher (2004) Felzenszwalb, P. F., & Huttenlocher, D. P. 2004, International Journal of Computer Vision, 59(2), 167

- Freeland & Handy (2012) Freeland, S. L., & Handy, B. N. 2012, SolarSoft: Programming and data analysis environment for solar physics. http://ascl.net/1208.013

- Fruscione et al. (2006) Fruscione, A., McDowell, J. C., Allen, G. E., et al. 2006, in Society of Photo-Optical Instrumentation Engineers (SPIE) Conference Series, Vol. 6270, Society of Photo-Optical Instrumentation Engineers (SPIE) Conference Series, ed. D. R. Silva & R. E. Doxsey, 62701V, doi: 10.1117/12.671760

- Güdel (2004) Güdel, M. 2004, The Astronomy and Astrophysics Review, 12, 71, doi: 10.1007/s00159-004-0023-2

- Güdel et al. (2002) Güdel, M., Audard, M., Skinner, S. L., & Horvath, M. I. 2002, The Astrophysical Journal, 580, L73, doi: 10.1086/345404

- Hughes et al. (2019) Hughes, J. M., Hsu, V. W., Seaton, D. B., et al. 2019, Journal of Space Weather and Space Climate, 9, A38, doi: 10.1051/swsc/2019036

- Hurlburt et al. (2012) Hurlburt, N., Cheung, M., Schrijver, C., et al. 2012, Sol. Phys., 275, 67, doi: 10.1007/s11207-010-9624-2

- Lee (2000) Lee, T. C. M. 2000, Journal of the American Statistical Association, 259

- Lee (2001) —. 2001, International Statistical Review, 69, 169

- Lemen et al. (2012) Lemen, J. R., Title, A. M., Akin, D. J., et al. 2012, Sol. Phys., 275, 17, doi: 10.1007/s11207-011-9776-8

- Martens et al. (2012) Martens, P. C. H., Attrill, G. D. R., Davey, A. R., et al. 2012, Sol. Phys., 275, 79, doi: 10.1007/s11207-010-9697-y

- Pesnell et al. (2012) Pesnell, W. D., Thompson, B. J., & Chamberlin, P. C. 2012, Sol. Phys., 275, 3, doi: 10.1007/s11207-011-9841-3

- Rissanen (1989) Rissanen, J. 1989, Stochastic Complexity in Statistical Inquiry Theory (USA: World Scientific Publishing Co., Inc.)

- Rissanen (2007) —. 2007, Information and Complexity in Statistical Modeling, doi: 10.1007/978-0-387-68812-1

- Rousseeuw & Croux (1993) Rousseeuw, P. J., & Croux, C. 1993, Journal of the American Statistical Association, 88, 1273, doi: 10.1080/01621459.1993.10476408

- Scargle et al. (2013) Scargle, J. D., Norris, J. P., Jackson, B., & Chiang, J. 2013, The Astrophysical Journal, 764, 167, doi: 10.1088/0004-637x/764/2/167

- Wong et al. (2016) Wong, R. K. W., Kashyap, V. L., Lee, T. C. M., & van Dyk, D. A. 2016, Annals of Applied Statistics, 10, 1107, doi: 10.1214/16-AOAS933