Chanel-Orderer: A Channel-Ordering Predictor for Tri-Channel

Natural Images

Abstract

This paper shows a proof-of-concept that, given a typical 3-channel images but in a randomly permuted channel order, a model (termed as Chanel-Orderer) with ad-hoc inductive biases in terms of both architecture and loss functions can accurately predict the channel ordering and knows how to make it right. Specifically, Chanel-Orderer learns to score each of the three channels with the priors of object semantics and uses the resulting scores to predict the channel ordering. This brings up benefits into a typical scenario where an RGB image is often mis-displayed in the BGR format and needs to be corrected into the right order. Furthermore, as a byproduct, the resulting model Chanel-Orderer is able to tell whether a given image is a near-gray-scale image (near-monochromatic) or not (polychromatic). Our research suggests that Chanel-Orderer mimics human visual coloring of our physical natural world.

1 Introduction

The advent of digital imaging has transformed the way we capture, store, and process visual information. However, the reliance on electronic devices and software introduces various challenges, including the correct interpretation of image data. One such challenge is the proper ordering of the color channels in an image, which is critical for accurate representation and subsequent analysis. While the typical representation of color images is in the RGB (Red, Green, Blue) format, various systems and libraries may store images in the BGR (Blue, Green, Red) order, leading to confusion and incorrect display or processing.

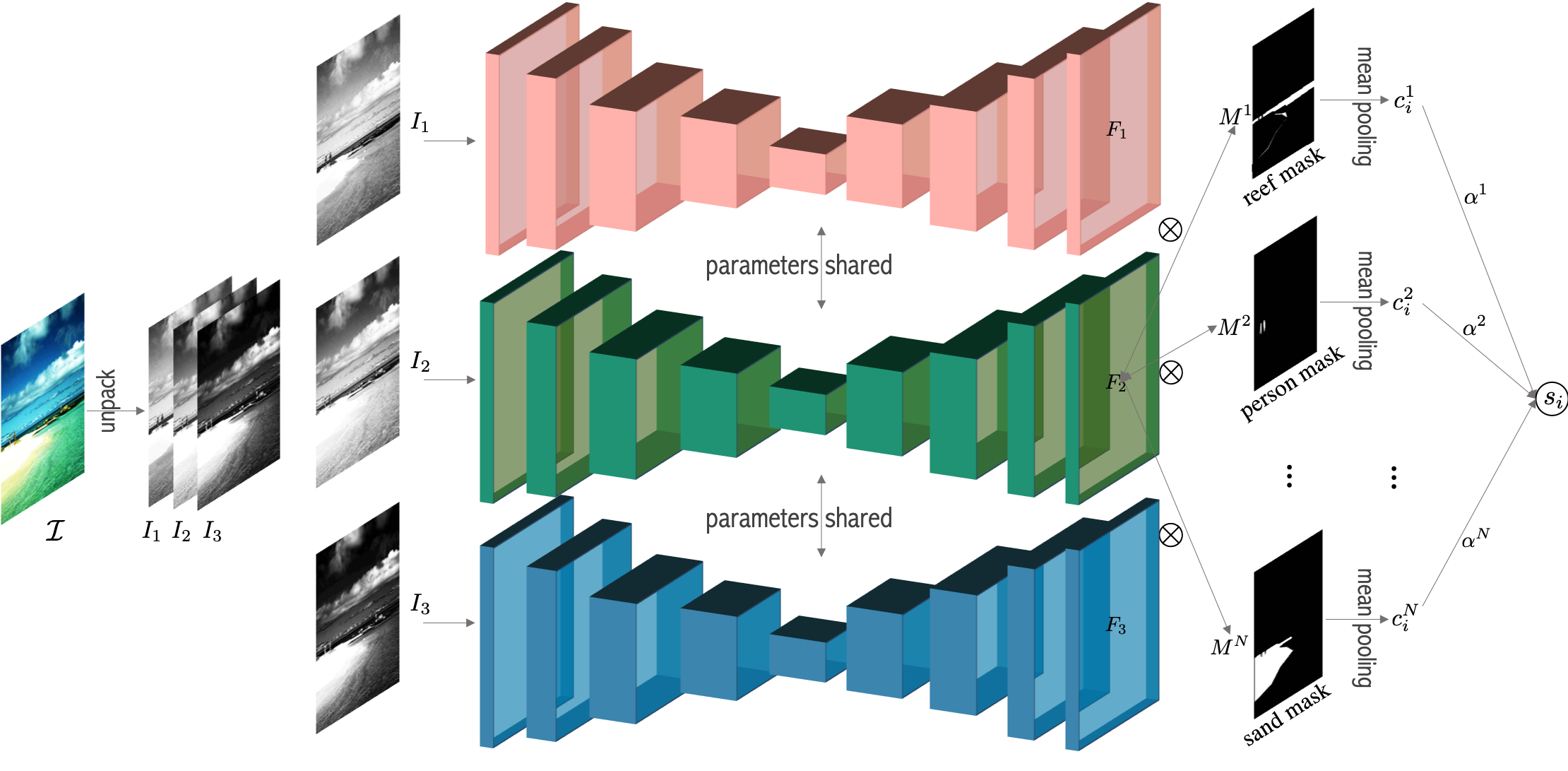

In this paper, we present a proof-of-concept that demonstrates the capability of a machine learning model, referred to as Chanel-Orderer, to accurately predict the correct channel order of a given image when the image’s channels are permuted. The model’s architecture and loss functions are designed to incorporate ad-hoc inductive biases that facilitate the learning of color representation of object semantics. As shown in Figure 1, by scoring each of the three channels based on these semantic priors, Chanel-Orderer is able to make accurate predictions about the original channel order. One may notice that the difficulty of this task lies in the ambiguity of image display when the channel order is shuffled: images even ordered in non-RGB format alone may seem valid but still weird; yet, when compared with the valid RGB counterpart, they do not look realistic. Our objective hence is to build a model that is able to overcome this difficulty and learns to restore the valid channel order by predicting the ordering.

An alternative straightforward workaround of this problem is to train a softmax classification model to predict all possible cases: RGB, RBG, GRB, GBR, BRG and BGR. However, our empirical findings suggests softmax models are inferior to our proposed model. This findings is align with the results from the prior work [9] which suggests that neural networks may take shortcuts to predict when inductive biases are not sufficiently infused throughout learning. In contrast, our proposed model (termed Chanel-Orderer) is designed with inductive biases in terms of both architectures and loss functions and empirically outperforms softmax models.

The benefits of Chanel-Orderer extend beyond the correction of channel order. In a typical scenario where an RGB image is mis-displayed in BGR order, Chanel-Orderer can correct the order to ensure the image is displayed correctly. This has implications for a wide range of applications, including image processing, computer graphics, and user interfaces.

Furthermore, as a byproduct of the model’s training, Chanel-Orderer also gains the ability to predict image monochromaticism (i.e. to predict whether a given image is a near-grayscale image or not). This is achieved by leveraging the model’s understanding of the semantic content of objects and their representation in color channels. Near-gray-scale images often have very similar values across all three color channels, which the model can grasp statistically and detect and classify accordingly.

The remainder of this paper is organized as follows. Section 2 details the proposed Chanel-Orderer model, including its architecture, loss functions, and the learning process. Section 3 presents the experimental setup and results, showcasing the model’s performance on various tasks, including channel order prediction and near-grayscale classification. Finally, Section 4 closes the paper by discussing limitations and potential future directions.

2 Methodology

We propose a channel-order predictor, Chanel-Orderer, that can predict the ordering of channels of a given 3-channel image with any of 3-permutations of , where denotes the red, green, blue channel of the image, respectively. Note that the channel ordering of an image can be determined by deciding the orderings of pairs of comparison: versus , versus and versus . We aim to design a parameterization model that can make these three pairwise decisions. We find that the design of such a model stems from two inductive biases in terms of loss function and network architecture.

2.1 Loss Inductive Bias

We first define the following partial order:

| (1) |

which suggests that ideally among the three channels, the red channel should be placed in the first channel, followed by the green channel and the blue channel .

Then, given a 3-channel image with any of 3-permutations , we formulate the model (parameterized by ) as a scoring function which outputs the ranking scores for each of the channels independently:

| (2) |

These scores are interpreted as the likeness scores that should obey the partial order (1). For example, if the groundtruth suggests according to the partial order (1), then we should enforce the model to output and such that ; otherwise, . By modifying the model to predict the probability of :

| (3) |

we can formulate the ordering prediction problem into three seperate binary classification problems ( versus , versus , versus ). Ideally, such a predicted probability distribution should get close to the desired probability distribution :

| (4) |

In Eq. (3), the scalar denotes temperature that rescales exponent to and the function should be an increasing differentiable function with regards to the score difference , e.g. the identity function as the simplest choice. However, we empirically find that the choice of the identity function leads to unstable optimization. In the next section, we show a better choice of that yields amenable optimization.

Formally, given any , we minimize the cross entropy loss between the predicted and the groundtruth over all the pairs of comparison (which is inherently a function of and ):

| (5) |

Plugging and into Eq (2.1) yields

| (6) |

Theorem 2.1.

Suppose the function is a monotonically increasing differentiable function. The loss function is an increasing function with regards to the score difference when and a decreasing function with regards to when , i.e.:

| (7) |

Proof.

| (8) |

When , and the derivative becomes

| (9) |

When , and the derivative becomes

| (10) |

∎

Remark.

When , and the loss function is a decreasing function with regard to , which suggests that the minimum of is attained when the score difference is largest. Hence, during training, the scoring function will adjust its learnable parameter to maximize the score and minimize the score . When , and the loss function is an increasing function with regard to , which suggests that the minimum of is attained when the score difference is smallest. During training, the scoring function will adjust its learnable parameter to minimize the score and maximize the score . Similar ranking spirit can be found in [3]. Theorem 2.1 sheds light on the design of Chanel-Orderer inference algorithm: the larger the value of is, the more likely should be placed in front among all channels (). In Section 2.3, we will show the specific algorithm design by virtue of this insight.

2.2 Architectural Inductive Bias

This section introduces two architectural inductive biases that are incorporated into the implementation of Chanel-Orderer: (1) the choice of and ; (2) the architectural design of the scoring function .

2.2.1 Choice of and

As mentioned earlier, the function should be an increasing differentiable function with regard to the score difference . The simplest choice is , which, however, leads to unstable optimization. We argue that this is because the distribution of does not fully overlap with the support of the sigmoid function. Here we propose another choice of that leads to amenable optimization.

According to Theorem 2.1, when , the derivative should be zero, as no ranking should be enforced and hence no updates should be performed to the learnable parameter . This observation suggests that :

| (11) |

The last implication holds by noting that when , the score difference since the scoring function is permutation-invariant. Therefore, any increasing differentiable function that passes through the origin can serve as a valid choice of . We choose , as it maps to a symmetric domain . To largely overlap the support of the sigmoid function, we further perform the division of which expands the range to the range . Empirically, we set such that the resulting range largely overlaps the definition domain of the sigmoid function, outside of which is the saturation region of the sigmoid function where gradients vanish.

2.2.2 Architecture of

To predict the ordering of channels of a given 3-channel image, it is important to first understand the semantics of the image. Different objects in the image have different surface colors, but objects of similar semantics or of the same categories tend to exhibit similar colors in their surfaces. For example, human faces and skin, regardless of identity, tend to be yellow or brown while mountains, regardless of shape and location, tend to be green-ish. The design of the architecture should take this prior knowledge into account. Hence, the key design of our proposed Chanel-Orderer is to exploit semantic segmentation masks to predict the ranking scores.

As shown in Figure 2, given a three-channel image, Chanel-Orderer first separates it into three channels, , and . Then, these three channels are separately and independently sent into a U-Net [19], which yields three feature maps , and . Each feature map captures general visual representation of each image channel. For each feature map , segmentation masks are applied to it followed by a mean pooling operation which yields the color representation for each semantic object , for . We concatenate them as a vector . Let denote the general prior weight for each object. Then the final score is given by the inner product between and :, . Note that the semantic segmentation masks can be obtained from ground-truth, or from the output of a pretrained segmentation model if ground-truth is unavailable [21, 23, 20, 22, 7, 5, 4, 13, 11, 6, 1, 12, 18]. The specific training procedure is summarized in Algorithm 1.

2.3 Inference

Recall that Theorem 2.1 implies that the larger the value of is, the more likely should be placed in front among all channels (). By virtue of this implication, we can use as the indicator of the channel ordering.

Specifically, given an image whose channels might be permuted in a wrong order, Chanel-Orderer applies its scoring function to each of the channels to obtain the scores, respectively: , , . And then label the channel with the largest score among the three as the red channel (Red), label the channel with the smallest score as the blue channel (Blue), and label the third one as the green channel (Green). See Algorithm 2 for the specific Python-like implementation.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/2f10e451-27c9-4ebd-98da-00f2eabdf58a/algorithms.png)

2.4 Detection of RGB against BGR

In most cases, we rarely encounter a scenerio where a model is expected to tell all possible permutation orders. Rather, in a typical scenario, an RGB image is often mis-displayed in BGR order. To tackle this particular situation, we slightly modify the proposed Chanel-Orderer for all possible permutations into a model variant that detects RGB against BGR.

We inherit the partial order from (1):

| (12) |

which suggests that ideally the red channel should be ranked ahead of the blue channel and therefore that RGB is preferable over BGR.

Given a tri-channel image , similarly as earlier, we first unpacks it into three channels, , and . Then, we concatenate and which yields and concatenate and which yields . After a few operations followed by a global average pooling, the scoring function is expected to score and (yielding and , respectively) to determine which ranks ahead of the other. To train the scoring function, a similar ranking loss function as in Eq. (2.1) can be applied. For inference, if , the given image is predicted as RGB; otherwise, it is predicted as BGR.

2.5 Detection of Near-Grayscale Images

In this section, we show our proposed Chanel-Orderer is promising in detecting near-gray images from RGB color images. Near-gray images are images which look monochromatic in general but have a few if not none pixels that are polychromatic (see Figure 3 for some examples). Such images, which often appear in posters or advertisements, are mostly photographed for aesthetic purpose: photographers who make such images use polychromatic imagery to highlight the objects in the images and use monochromatic imagery to render the rest. Prior to Chanel-Orderer, existing methods hinges upon statistic thresholding that are determined in a heuristic manner. Chanel-Orderer, in contrast, is data-driven and learns to predict the ranking scores , and whose relative values can inherently be used as indicators to determine whether a given image is polychromatic or monochromatic.

Specifically, given an image , we evaluate the ranking scores , for . And then we evaluate score differences between the three pairs which yields , , . Finally, we determine its monochromatism using the following rule: if (where is a predefined threshold), we decide it as a near-grayscale image; otherwise, it is decided as a polychromatic image.

3 Experiments

3.1 Benchmarks

We evaluate the proposed Chanel-Orderer on three challenging datasets including SiftFlow [14], PASCAL Context [15] and a customized face dataset referred to as CustoFace thereinafter. The first two benchmarks are used to evaluate the model capability on all-permutation ordering prediction, and the last one is used to evaluate the performance on the detection of RGB against BGR.

SiftFlow [14] includes 2,688 annotated images from a subset of the LabelMe database. The 256 × 256 pixel images are based on 8 different outdoor scenes, among them streets, mountains, fields, beaches, and buildings. All images belong to one of 33 semantic classes. For each test image, we permute its channels to obtain versions of it.

PASCAL Context [15] is an enhanced version of the PASCAL VOC 2010 object detection challenge, and it provides pixel-level labels for all the training images. The dataset encompasses over 400 classes (which includes the original 20 classes from PASCAL VOC, along with background classes from the segmentation dataset), categorized into three groups: objects, stuff, and hybrid categories. Due to the sparsity of many object categories in the dataset, a subset of 59 frequently occurring classes is commonly chosen for practical use.

CustoFace contains nearly 1,500 face images. All images are and contain human aligned faces across various races.

We use total accuracy and accuracies in RGB, RBG, GRB, GBR, BRG and BGR to measure the model performance.

3.2 Implementation Details

| Method | RGB | RBG | BGR | BRG | GBR | GRB | Overall |

| Shallow Model | 46.27 | 48.88 | 35.82 | 24.63 | 27.24 | 37.69 | 36.75 |

| Softmax Model | 85.07 | 84.70 | 85.07 | 84.33 | 82.46 | 84.45 | 84.64 |

| Chanel-Orderer-wo-Seg | 82.46 | 84.70 | 83.21 | 84.70 | 82.09 | 82.09 | 83.21 |

| Chanel-Orderer | 98.51 | 98.51 | 98.51 | 98.51 | 98.51 | 98.51 | 98.51 |

| Method | RGB | RBG | BGR | BRG | GBR | GRB | Overall |

| Shallow Model | 30.30 | 30.50 | 38.02 | 40.00 | 34.65 | 35.64 | 34.85 |

| Softmax Model | 77.42 | 74.06 | 75.25 | 74.06 | 67.52 | 71.68 | 73.33 |

| Chanel-Orderer-wo-Seg | 57.43 | 57.82 | 60.40 | 59.01 | 58.42 | 57.62 | 58.45 |

| Chanel-Orderer | 73.86 | 74.46 | 78.22 | 79.60 | 74.26 | 74.06 | 75.74 |

The proposed Chanel-Orderer consists of a U-Net architecture [19] with the four layers of encoders that maps an input into 32-channel, 64-channel, 128-channel and 256-channel sequentially, then with a four layers of decoders that map the encoded feature map back to 128-channel, 64-channel, 32-channel and 1-channel. The intermediate activation functions are ReLUs. The training batch size is set to and the total training epochs is . The initial learning rate is set to and decays with the factor of Throughout the entire training process, we use the Adam optimizer.

3.3 Performance Evaluation

3.3.1 Competing Methods

We compare our proposed Chanel-Orderer with other promising methods, including shallow models, Softmax models and other Chanel-Orderer variants.

Shallow models:

we construct color histograms [16] for each channel of images , and , and train a simple classifier to tell which should come first given a pair of channels. That is, for each , train the classifier to take as input the concatenated color histograms and output the probability that the -th channel ranks in the front of the -th channel according to the predefined partial order shown in Eq. (1).

Softmax models [2]:

in this model, we formulate the ordering prediction task as a multi-class classification task, that is, to train a classifier to predict which category a given image should fall into: RGB, RBG, GRB, GBR, BRG and BGR. For the detection of RGB against BGR, the classifier is to predict RGB or BGR only. For the detection of near-grayscale images, as the classifer outputs a categorical distribution over all categories, we use its entropy as an indicator of monochromatism (see the next section for the specifics).

Chanel-Orderer-wo-Seg:

our proposed Chanel-Orderer exploits the segmentation semantics to help make the ordering predictions. To investigate the effect of segmentation semantics, we perform an ablation study by removing the segmentation semantics. Specifically, we remove the element-wise multiplication between and and only leave the mean pooling operation upon . The resulting model is referred to as Chanel-Orderer-wo-Seg. We compare Chanel-Orderer against it for the ablation study on the effect of segmentation semantics.

3.3.2 Quantitative Results

The comparison results on SiftFlow are shown in Table 1. The Chanel-Orderer model achieves the best overall performance with the overal accuracy of 98.51%. It is the most robust model to changes in channel order since it maintains high accuracies across all channel orders. The Softmax Model also performs well with an overall average of 84.64%, indicating that it is less sensitive to channel order than the Shallow Model, which shows significant drops in performance with certain channel orders. The Chanel-Orderer-wo-Seg model performs similarly to the “Softmax Model” but slightly less robustly to channel order changes. Shallow Model has a wide range of performance scores, indicating high sensitivity to the input channel order. The highest accuracy is 48.88% for the RGB channel order, and the lowest is 24.63% for the BRG channel order. The overall average accuracy is 36.75%, which is the lowest among the models tested. Softmax Model performs significantly better than the Shallow Model, with a high degree of consistency across different channel orders. The overall average accuracy is 84.64%, with the lowest accuracy being 82.46% for the GBR channel order. Chanel-Orderer-wo-Seg also performs well, with an overall average accuracy of 83.21%. The performance is quite consistent, with the accuracy ranging from 82.09% to 84.70%. This suggests that the model is less sensitive to channel order changes compared to the Shallow Model. Chanel-Orderer has the highest overall average accuracy at 98.51%. It shows a very consistent performance across all channel orders, with the lowest accuracy being 98.51% and the highest being 98.51%. This indicates that the Chanel-Orderer model is highly robust to variations in channel order.

The comparison results on PASCAL-Context are shown in Table 2. Shallow Model has a varied performance across different channel orders, with the highest accuracy of 40.00% for the BRG channel order and the lowest of 30.30% for the RGB channel order. The overall average accuracy is 34.85%, which is the lowest among the models tested. This suggests that the Shallow Model is not only performing poorly overall but is also highly sensitive to the input channel order. Softmax Model shows better performance than the Shallow Model across all channel orders, with an average accuracy of 73.33%. The performance is relatively consistent, except for a noticeable drop when the channel order is GBR, where the accuracy drops to 67.52%. This indicates that while the Softmax Model is more robust to channel order changes than the Shallow Model, it is still somewhat affected by them. Chanel-Orderer-wo-Seg has an overall average accuracy of 58.45%, which is lower than the Softmax Model but higher than the Shallow Model. The performance is relatively stable across different channel orders, with a narrow range from 57.43% to 60.40%. This suggests that the model is designed to handle channel order variations to some extent, but it is not as effective as the Chanel-Orderer model. Chanel-Orderer has the highest overall average accuracy at 75.74%, which is significantly better than the other models. It also shows the most consistent performance across different channel orders, with a narrow range from 73.86% to 79.60%. This indicates that the Chanel-Orderer model is highly effective at dealing with channel order variations and is the most robust model in this comparison.

Detection of BGR against RGB.

We compare Chanel-Orderer with the Softmax model. As shown in Table 3, Chanel-Orderer achieves the accuracy of 93.85% whereas the Softmax model only achieves 51.63%. This suggests that without sufficient inductive biases either in terms of architecture or loss, the Softmax model is unable to take any shortcut to learn a valid mapping for classification. Chanel-Orderer, however, casts this problem as a ranking problem and makes use of the architectural and loss inductive biases to learn the ranking, and therefore achieves promising results on this task.

Detection of Near-Grayscale Images.

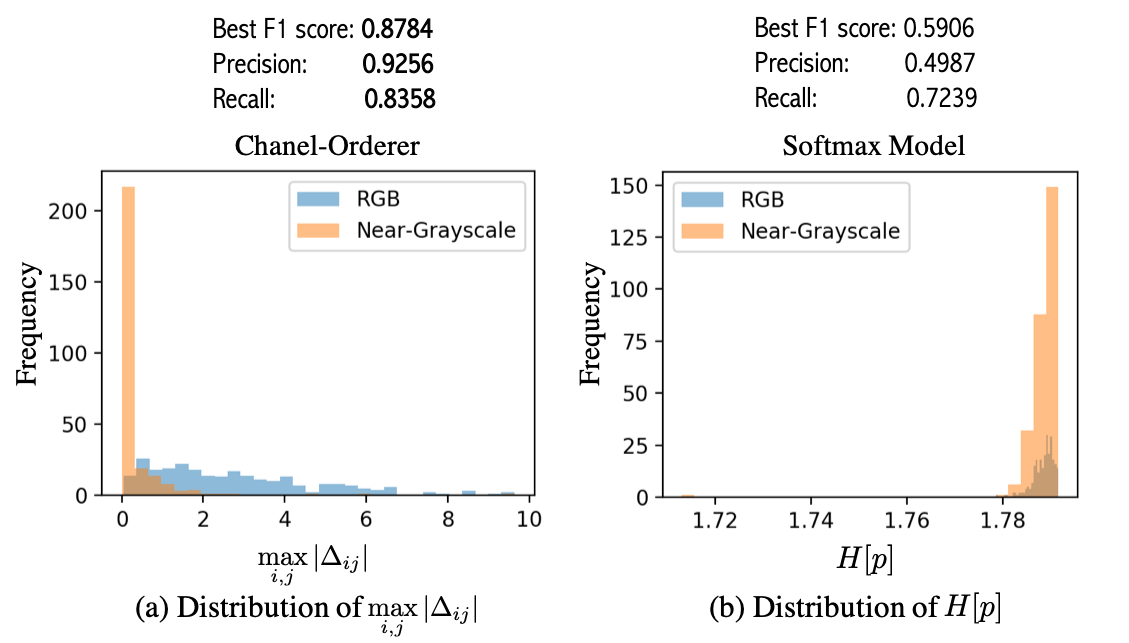

We compare Chanel-Orderer against the Softmax model in the detection of near-gray images. Recall that Chanel-Orderer uses the maximum absolute score difference as an indictor to detect near-grayscale images. If ( is a predefined threshold), the given image is detected as near-grayscale; otherwise, it is detected as RGB. On the other hand, the Softmax model outputs probabilities ( for ) for each color orderings. We use the softmax entropy as the indictor of monochromatism:

| (13) |

since if the softmax entropy is high, the softmax model has high epistemic uncertainty [24] about the channel ordering of a given image.

As shown in Figure 4, we observe that Chanel-Orderer outperforms the Softmax model by clear margins in this task: the maximum absolute score difference given by Chanel-Orderer can distinguish near-grayscale images from normal RGB images whereas the entropy given by Softmax model cannot. Consequently, Chanel-Orderer achieves F1-score of 0.8784 while Softmax model only achieves 0.5906. According to prior works [10, 8, 17] on softmax, neural networks trained by softmax loss tend to yield miscalibrated probabilities on the basis of information that is not meant for desired predictions to human intelligence.

3.4 Model Behavoir Analysis

The results from Table 1, Table 2 and Table 3 suggest that Chanel-Orderer consistently outperforms Softmax models in almost all cases. Softmax models cast the channel-ordering prediction into a classification problem whereas Chanel-Orderer tackles this problem in ranking spirit. This further suggests that ranking is more preferable as inductive bias than classification in this particular task. This can also be seen from the training progress: we observe, during training, that Chanel-Orderer converges much faster than Softmax models into smaller loss values, which validates the advantage of inductive biases incorporated into the model.

4 Conclusion

The advent of digital imaging has revolutionized our ability to capture, store, and process visual information, yet it has also introduced complexities such as the correct interpretation of image data. This paper presents Chanel-Orderer, a statistical ranking model designed to address the challenge of determining the correct channel order of color images, a task that is pivotal for accurate image representation and subsequent analysis. Through our proof-of-concept, we have demonstrated the model’s capability to accurately predict the original channel order of images, even when the channels are permuted, thereby mitigating issues related to incorrect display or processing.

Our approach, which leverages ad-hoc inductive biases in terms of loss function and architecture, has proven to be effective in scoring each color channel based on these semantic priors. Chanel-Orderer not only ensures the correct display of image channels but also extends its utility to predicting image monochromatism in a statistical prospective.

The implications of Chanel-Orderer’s success are far-reaching, touching upon various domains including image processing, computer graphics, and user interface design. By ensuring images are accurately represented, Chanel-Orderer contributes to an enhanced user experience, more reliable processing outcomes, and increased efficiency in the development of imaging applications.

Looking forward, there are several avenues for future research. First, we aim to generalize the model to accommodate a broader range of color spaces and channel configurations, expanding its applicability. Second, integrating Chanel-Orderer with existing imaging libraries and software ecosystems will be a key step towards streamlining image handling across diverse platforms. Finally, we are committed to improving the model’s robustness and accuracy to cater to the vast array of image conditions encountered in real-world scenarios.

| Method | Accuracy |

| Softmax Model | 51.63 |

| Chanel-Orderer | 93.85 |

Limitations.

While the Chanel-Orderer model has shown promise in addressing the challenge of correcting color channel order, it is essential to acknowledge its potential limitations. These limitations provide insights into areas for further research and development.

- Generalization: The model’s performance may be limited to specific types of images or datasets. As the model’s inductive biases are tailored to learn object semantics, it may struggle with images that include open-set semantic categories. Expanding the model’s training data and exploring more diverse image categories could enhance its generalization capabilities.

- Complexity: The complexity of the model’s architecture and the need for specialized training data may pose challenges for deployment in resource-constrained environments. Simplifying the model or developing lightweight versions could make it more accessible for a wider range of applications.

- Sensitivity to Image Quality: The model’s performance may be sensitive to the quality of the input images. Issues such as noise, compression artifacts, or pixelation may hinder its ability to accurately predict the original channel order. Improving the model’s robustness to such challenges is a critical area for future work.

Future work might focus on addressing these challenges for better performance.

References

- Bousselham et al. [2021] Walid Bousselham, Guillaume Thibault, Lucas Pagano, Archana Machireddy, Joe Gray, Young Hwan Chang, and Xubo Song. Efficient self-ensemble for semantic segmentation. arXiv preprint arXiv:2111.13280, 2021.

- Bridle [1989] John Bridle. Training stochastic model recognition algorithms as networks can lead to maximum mutual information estimation of parameters. Advances in neural information processing systems, 2, 1989.

- Burges et al. [2005] Chris Burges, Tal Shaked, Erin Renshaw, Ari Lazier, Matt Deeds, Nicole Hamilton, and Greg Hullender. Learning to rank using gradient descent. In Proceedings of the 22nd international conference on Machine learning, pages 89–96, 2005.

- Cai et al. [2023] Yuxuan Cai, Yizhuang Zhou, Qi Han, Jianjian Sun, Xiangwen Kong, Jun Li, and Xiangyu Zhang. Reversible column networks. In The Eleventh International Conference on Learning Representations, 2023.

- Chen et al. [2023] Zhe Chen, Yuchen Duan, Wenhai Wang, Junjun He, Tong Lu, Jifeng Dai, and Yu Qiao. Vision transformer adapter for dense predictions. In The Eleventh International Conference on Learning Representations, 2023.

- Cheng et al. [2022] Bowen Cheng, Ishan Misra, Alexander G Schwing, Alexander Kirillov, and Rohit Girdhar. Masked-attention mask transformer for universal image segmentation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 1290–1299, 2022.

- Fang et al. [2023] Yuxin Fang, Wen Wang, Binhui Xie, Quan Sun, Ledell Wu, Xinggang Wang, Tiejun Huang, Xinlong Wang, and Yue Cao. Eva: Exploring the limits of masked visual representation learning at scale. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 19358–19369, 2023.

- Gal and Ghahramani [2016] Yarin Gal and Zoubin Ghahramani. Dropout as a bayesian approximation: Representing model uncertainty in deep learning. In international conference on machine learning, pages 1050–1059. PMLR, 2016.

- Geirhos et al. [2018] Robert Geirhos, Patricia Rubisch, Claudio Michaelis, Matthias Bethge, Felix A. Wichmann, and Wieland Brendel. Imagenet-trained cnns are biased towards texture; increasing shape bias improves accuracy and robustness. CoRR, abs/1811.12231, 2018.

- Guo et al. [2017] Chuan Guo, Geoff Pleiss, Yu Sun, and Kilian Q Weinberger. On calibration of modern neural networks. In International conference on machine learning, pages 1321–1330. PMLR, 2017.

- Jain et al. [2023] Jitesh Jain, Anukriti Singh, Nikita Orlov, Zilong Huang, Jiachen Li, Steven Walton, and Humphrey Shi. Semask: Semantically masked transformers for semantic segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 752–761, 2023.

- Kirillov et al. [2023] Alexander Kirillov, Eric Mintun, Nikhila Ravi, Hanzi Mao, Chloe Rolland, Laura Gustafson, Tete Xiao, Spencer Whitehead, Alexander C Berg, Wan-Yen Lo, et al. Segment anything. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 4015–4026, 2023.

- Li et al. [2023] Feng Li, Hao Zhang, Huaizhe Xu, Shilong Liu, Lei Zhang, Lionel M Ni, and Heung-Yeung Shum. Mask dino: Towards a unified transformer-based framework for object detection and segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 3041–3050, 2023.

- Liu et al. [2009] Ce Liu, Jenny Yuen, and Antonio Torralba. Nonparametric scene parsing: Label transfer via dense scene alignment. In 2009 IEEE Conference on Computer Vision and Pattern Recognition, pages 1972–1979. IEEE, 2009.

- Mottaghi et al. [2014] Roozbeh Mottaghi, Xianjie Chen, Xiaobai Liu, Nam-Gyu Cho, Seong-Whan Lee, Sanja Fidler, Raquel Urtasun, and Alan Yuille. The role of context for object detection and semantic segmentation in the wild. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 891–898, 2014.

- Novak et al. [1992] Carol L Novak, Steven A Shafer, et al. Anatomy of a color histogram. In CVPR, pages 599–605, 1992.

- Pearce et al. [2021] Tim Pearce, Alexandra Brintrup, and Jun Zhu. Understanding softmax confidence and uncertainty. arXiv preprint arXiv:2106.04972, 2021.

- Ravi et al. [2024] Nikhila Ravi, Valentin Gabeur, Yuan-Ting Hu, Ronghang Hu, Chaitanya Ryali, Tengyu Ma, Haitham Khedr, Roman Rädle, Chloe Rolland, Laura Gustafson, et al. Sam 2: Segment anything in images and videos. arXiv preprint arXiv:2408.00714, 2024.

- Ronneberger et al. [2015] Olaf Ronneberger, Philipp Fischer, and Thomas Brox. U-net: Convolutional networks for biomedical image segmentation. CoRR, abs/1505.04597, 2015.

- Su et al. [2023] Weijie Su, Xizhou Zhu, Chenxin Tao, Lewei Lu, Bin Li, Gao Huang, Yu Qiao, Xiaogang Wang, Jie Zhou, and Jifeng Dai. Towards all-in-one pre-training via maximizing multi-modal mutual information. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 15888–15899, 2023.

- Wang et al. [2023a] Peng Wang, Shijie Wang, Junyang Lin, Shuai Bai, Xiaohuan Zhou, Jingren Zhou, Xinggang Wang, and Chang Zhou. One-peace: Exploring one general representation model toward unlimited modalities. arXiv preprint arXiv:2305.11172, 2023a.

- Wang et al. [2022] Wenhui Wang, Hangbo Bao, Li Dong, Johan Bjorck, Zhiliang Peng, Qiang Liu, Kriti Aggarwal, Owais Khan Mohammed, Saksham Singhal, Subhojit Som, et al. Image as a foreign language: Beit pretraining for all vision and vision-language tasks. arXiv preprint arXiv:2208.10442, 2022.

- Wang et al. [2023b] Wenhai Wang, Jifeng Dai, Zhe Chen, Zhenhang Huang, Zhiqi Li, Xizhou Zhu, Xiaowei Hu, Tong Lu, Lewei Lu, Hongsheng Li, et al. Internimage: Exploring large-scale vision foundation models with deformable convolutions. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 14408–14419, 2023b.

- Xu et al. [2023] Jianqing Xu, Shen Li, Ailin Deng, Miao Xiong, Jiaying Wu, Jiaxiang Wu, Shouhong Ding, and Bryan Hooi. Probabilistic knowledge distillation of face ensembles. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 3489–3498, 2023.