11email: [email protected], {wwangbw, yqsong}@cse.ust.hk22institutetext: The Hong Kong University of Science and Technology (Guangzhou),

Guangzhou, China

22email: [email protected], [email protected]

Chain-of-Choice Hierarchical Policy Learning for Conversational Recommendation

Abstract

Conversational Recommender Systems (CRS) illuminate user preferences via multi-round interactive dialogues, ultimately navigating towards precise and satisfactory recommendations. However, contemporary CRS are limited to inquiring binary or multi-choice questions based on a single attribute type (e.g., color) per round, which causes excessive rounds of interaction and diminishes the user’s experience. To address this, we propose a more realistic and efficient conversational recommendation problem setting, called Multi-Type-Attribute Multi-round Conversational Recommendation (MTAMCR), which enables CRS to inquire about multi-choice questions covering multiple types of attributes in each round, thereby improving interactive efficiency. Moreover, by formulating MTAMCR as a hierarchical reinforcement learning task, we propose a Chain-of-Choice Hierarchical Policy Learning (CoCHPL) framework to enhance both the questioning efficiency and recommendation effectiveness in MTAMCR. Specifically, a long-term policy over options (i.e., ask or recommend) determines the action type, while two short-term intra-option policies sequentially generate the chain of attributes or items through multi-step reasoning and selection, optimizing the diversity and interdependence of questioning attributes. Finally, extensive experiments on four benchmarks demonstrate the superior performance of CoCHPL over prevailing state-of-the-art methods.

Keywords:

Conversational Recommendation Hierarchical Reinforcement Learning Graph Representation Learning

(a) Binary choices

(b) Single-Type attributes

(c) Multi-Type attributes

1 Introduction

Compared to traditional recommender systems, conversational recommender system (CRS) [9] serves as an online salesperson who elicits user preferences [14, 8] through engaging question-and-answer dialogues [6, 19] to provide tailored recommendations. This conversational approach allows for explicit preference reasoning by actively asking questions about attributes and making desirable recommendations based on the user’s real-time feedback.

Recent research has explored different settings to create more realistic conversational recommendation scenarios. Existing studies allow CRS to ask either binary (yes/no) questions for each selected attribute [9, 6, 7, 4] or multiple choice questions falling into one selected attribute type (e.g., brand, color) in each round [18]. However, existing CRS using binary choices [4] or single-type attribute questions [18] can be repetitive and time-consuming, causing frustration for the user and decreasing the overall recommendation success rate. Consider an example illustrated in Figure 1, where the user wants shoes with the attributes “Basketball,” “Nike,” and “Black.” In Figure 1(a) and Figure 1(b), the question choices are restricted to one attribute type per round, necessitating at least three rounds to extract all the preferred attributes. Our work studies a more realistic scenario, namely Multi-Type-Attribute Multi-round Conversational Recommendation (MTAMCR) as depicted in Figure 1(c), which enables CRS to generate questions encompassing diverse yet dependent attribute types. By optimizing the combination of attribute types, we can enhance the efficiency of reasoning and questioning, ultimately increasing the success rate of recommendations.

Previous works [9, 6, 7, 4, 18, 20] have formulated conversational recommendation as a Markov Decision Process (MDP) and introduced reinforcement learning (RL) to choose the attributes and recommended items. However, these approaches encounter two main limitations when apply them to the new MTAMCR scenario: 1) Diversity of attributes: Previous works select the attribute instances within a single attribute type, allowing for exploring only one type of user’s attribute preference (e.g., color) per round. Thus, these methods need more rounds to discover and capture all the attributes of the user’s target item. 2) Dependency between attributes: Existing RL-based approaches directly select one or multiple attribute instances based on the top-K Q-values without considering the influence of previous attribute choices on subsequent attribute selections. As a result, such approaches usually neglect the dependency between selections, leading to suboptimal combinations of attributes.

To address the two limitations, we propose the chain-of-choice generation approach, as illustrated in Figure 2. This approach infers the next choice, considering the previous choices by predicting the user feedback. For example, if the user wants basketball shoes and the first choice in a given turn is “Nike,” selecting the color “Black” as the next choice is more effective than presenting a brand selection between “Nike” and “Adidas.” This is because we predict that the user would accept the attribute “Nike” since many individuals highly prefer Nike basketball shoes. Therefore, it is strategically advantageous to prioritize exploring color preferences rather than spending more time deliberating over brand preferences. With the chain of choices, we first proposed the Chain-of-Choice Hierarchical Policy Learning (CoCHPL) framework, which formulates MTAMCR as a hierarchical reinforcement learning task. In each turn, CoCHPL employs a long-term policy to select the option (i.e., ask or recommend) and generates the chain of choices (i.e., attributes or items) step by step via different short intra-option policies. To model the transitions of the choice chain and determine the optimal moment for terminating the current choice generation, we utilize learnable functions that predict feedback for the ongoing choice chain and infer the next state for the next choice selection.

Our major contributions can be summarized as follows:

-

1)

We introduce the MTAMCR scenario, which allows the CRS agent to ask questions under the same or different attribute types in each round, enabling more realistic and effective user interaction.

-

2)

We propose the CoCHPL framework for the MTAMCR scenario by formulating MTAMCR as a hierarchical RL task, which includes an option selection task and chain-of-choice generation tasks for different options (action types).

-

3)

We conduct extensive experiments on four benchmark datasets, and the results demonstrate a substantial improvement in both performance and generative capacity with all the comparison methods.

2 Related Work

2.1 Multi-round Conversational Recommendation

Multi-round conversational recommendation (MCR) is a prevalent setting in conversational recommendation, characterized by iterative questioning and recommendations until user satisfaction or a dialogue round limit is reached [4, 7, 15]. Early models like CRM [9] utilized reinforcement learning in single-round settings, later extended to MCR by EAR [6]. SCPR [7] considers MCR as interactive path reasoning on a graph, while UNICORN [4] proposes a unified framework based on a dynamic weighted graph. DAHCR [20] introduces a hierarchical approach for action type and attribute/item selection. MCMIPL [18] proposes Multiple-Choice MCR to enable multiple-choice questions within an attribute type per turn. Instead of single-type attribute queries per turn, our work introduces a more realistic setting, Multi-Type-Attribute Multi-round Conversational Recommendation.

2.2 The Options Framework

The options framework by Sutton et al.[11] extends the conventional reinforcement learning paradigm [16] by incorporating temporally extended actions, or options. Each option comprises an intra-option policy for action selection, a state transition function , and a termination function to decide when to terminate the current option. This hierarchical structure aids in efficient exploration and task decomposition. The option-critic architecture, introduced by [1], integrates policy gradient methods with the options framework[10], enabling concurrent learning of intra-option policies and termination conditions, and facilitating subgoal identification without additional reward schemes.

3 Preliminary

3.0.1 Multi-Type-Attribute Multi-round Conversational Recommendation.

Generally [6, 7, 18], CRS engages in a dialogue with users, asking questions about attributes or providing recommendations in each round. The users are assumed to have definite preferences toward specific attributes and items, and they can express preferences by accepting or rejecting the attributes or items mentioned by CRS. The conversation continues until a successful recommendation is made or a pre-set round limit is reached. Our focus is on a more realistic CRS paradigm, Multi-Type-Attribute Multi-round Conversational Recommendation, where the CRS can question the user about multiple types of attributes per turn.

In MTAMCR, we denote the user set by , the item set by , and the attribute set by . Each item is associated with attributes , with each attribute corresponding to a specific type. In each episode, there is a target item that is acceptable to the user . At the start of each session, a user targets an item and indicates a preferred attribute to the CRS. Then, in each turn , the CRS is free to query the user for attribute preference or recommend items from the candidates through multiple choice questions:

| (1) |

where represents a choice (i.e., attribute or item), and may comprise multiple attributes or items . The user responds to each choice in presented by the CRS, either accepting or rejecting it.

4 Chain-of-Choice Hierarchical Policy Learning

In this section, we present a detailed description of our method, CoCHPL, as illustrated in Figure 3, to explain the overview of the chain-of-choice generation. CoCHPL consists of four key components: an option-based MDP Environment to provide the dynamic state with the graph structure and candidate options and choices for the agent, a Dynamic-Graph State Representation module that encodes the graph-based state into a latent space to capture the key information and relationships, a Long Policy Over Options to determine the option (i.e., ask or recommend) per round, and a Short Intra-Option Policy to generate the chain of choices via multi-step reasoning and selection.

4.1 Option-based MDP Environment

By formulating the MTAMCR as a hierarchical reinforcement learning task, we model not only the low-level actions (choices) but also the high-level actions (options) within each turn, which helps the CRS learn different dimensions of temporal abstractions. The original environment consists of a state space , an action space , a transition function and a reward funtion . The Option-Based MDP environment extends the MDP environment to the tuple by introducing options , which are alternative action types that the agent can choose from at each turn.

To model the state transitions between the multiple choices within the same turn, we assume that the user answers the question choices one by one and introduces the timestep to describe the intrinsic state of turn as follows:

| (2) |

where , is the choice number in turn and , respectively. If the user responds to the first choice at turn , the state will transition from to .

4.1.1 State-Option Space

Before each turn , the CRS selects an option from to decide the action type and extend the state space. The state-option pair at timestep in turn contains , where , and . denotes all the accepted attributes, and denotes all the rejected items and attributes, and denotes the candidate items and attributes.

4.1.2 Action Space.

For at timestep , the candidate action space is for the option . We have where denotes all the items which contain all the accepted attributes. For another pair , the candidate attribute space and denotes the attributes of all the candidate items.

4.1.3 Transition.

For the state-option pair , after the selection of at timestep , if the CRS predicts that the user accepts , then will transiton to and . If rejected, are updated to . The next candidate action space is updated to . The transition is similar for another pair .

4.1.4 Reward.

To promote a universal and uniform distribution of rewards , a simplification of the reward structure is implemented as (1) : a slight reward when the user accepts the asked attribute, (2) : a slight penalty when the user rejects the asked attribute, (3) : a slight penalty when the user rejects the recommended item, (4) : a strong reward when the user accepts the recommended item.

4.2 Dynamic-Graph State Representation Learning

We explicitly employ a dynamic-graph state representation in the option-based MDP environment to incorporate user-preferred attributes and candidate items. This representation captures both the current conversation and its historical context, facilitating the modeling of conversational recommendation as an interactive path reasoning problem on a graph.

4.2.1 Graph Construction.

We denote the dynamic undirected graph as . The node set consists of the related user, items, and attributes. And is a adjacency matrix representing each node’s edge weight in . During each timestep in the conversation, dynamically changes the node and adjacency matrix as follows:

| (3) |

| (4) |

where denotes the user’s preference score for the item , calculated as:

| (5) |

where is the sigmoid function and are pre-trained embeddings initialized as [2]. We can also calculate the preference score for candidate attributes at the current state: . With the calculated weights, we can reduce the action space size for different options by selecting the top- candidates in the action selection section 4.3, 4.4.

4.2.2 State Representation.

By incorporating both the current conversation and the historical conversation data, we can create a comprehensive global knowledge graph denoted as . This knowledge graph captures the evolving preferences of users over time. To effectively leverage the correlation information among users, items, and attributes represented in the graph, we implement a two-layer graph convolutional network (GCN) [5, 17] as follows:

| (6) |

where is the layer number, denotes the neighbors of and denotes the degree matrix with . and are both trainable network parameters. To further extract the sequential information of the current conversation, we use a single-layer multi-head attention [12] network and calculate the final state representation by an average pooling:

| (7) |

| (8) |

After the sequential learning, the final state representation is generated, and denotes all parameters for the dynamic-graph state representation module.

4.3 Long Policy Leaning Over Options

After encoding the state with the dynamic graph, we propose a long-term policy denoted as to select the option at each turn. Our objective is to optimize the discounted return at turn directly. To achieve this, we express the expected Q-value of each option as follows:

| (9) |

where is the intra-option policy and is the estimated Q-value of executing an action in the context of a state-option pair which is introduced in section 4.4.

4.4 Short Intra-Option Policy Learning

With the state-option pair, we need a short-term policy to generate the choice chain at each turn. We propose that each option consists of a triple in which is a short-term intra-option policy to select the attribute or item at each timestep, is a feedback prediction function to predict user response for inferring the next timestep state, and is a termination function to determine when to exit the generation process of the current option.

As shown in Algorithm 1, during each timestep in turn , select a item or attribute as the choice , and then predict the feedback (i.e., acc/rej) via for inferring the next state . If determines to terminate the choice chain generation, the CRS will present the chain-of-choice question to the user. Otherwise, the generation will continue.

To optimize intra-option policy , we estimate the Q-value of selecting a choice in the context of a state-option pair , which can be calculated by:

| (10) |

where is the reward function and denotes the value function of executing option upon entering the next state as:

| (11) |

where the next state value is estimated by a weighted sum of the value of continuing the current option and the maximum value in the next state, with the weights determined by the termination value.

4.5 Model Training

As illustrated in Algorithm 1, in MTAMCR, the CRS needs to generate a complete chain of choices before interacting with the user and obtaining feedback. However, during the training stage, we can receive real feedback for each choice and reward immediately from users at each timestep , and accurately infer the next state , which speeds up the convergence of training. Each option has its own experience replay buffer, denoted by , and the experience is stored in the replay buffer . We sample a mini-batch of experiences from each after every turn and optimize the parameters of the whole network.

In this work, we implement the long policy over options and short intra-option policies to sample the probability distribution of the discrete action space with the Q-value function as follows:

| (12) |

where both and freeze the parameters for the stable training optimization and only need a learnable function to represent , and .We devise a dueling deep Q-network [13] to estimate the expected value of the triple at timestep and the Q-value of the tuple can be estimated by:

| (13) |

where and represent the parameters of the value and advantage functions, respectively, simplified as . The target value of during training can be obtained as Eq. (10), and we can optimize the parameters of the Q network by minimizing the MSE loss as follows:

| (14) |

Based on the Termination Gradient Theorem proposed by [1], the parameters of termination network can be optimized by increasing the gradient or minimizing the loss:

| (15) |

where is the estimated value of the next state. Intuitively, when the value of selecting the current option exceeds the estimated value, the probability of terminating the option is reduced.

For the feedback prediction function, we simply implement it by a multilayer perceptron (MLP). With the ground-truth state and reward, we optimize it by minimizing the following loss to minimize the squared difference between the predicted feedback for action and the ground-truth user feedback:

| (16) |

where takes the value of 1 when user accepts , and 0 otherwise.

5 Experiments

To demonstrate the superiority of CoCHPL in the MTAMCR scenario, we conduct experiments aimed at validating the following three research questions (RQs):

-

•

RQ1: With the comparison of state-of-the-art methods, how much does our model CoCHPL improve in overall performance?

-

•

RQ2: How do different components contribute to the performance?

-

•

RQ3: Does CoCHPL enhance attribute diversity and dependency within each conversational turn?

5.1 Experimental Settings

5.1.1 Datasets.

To fairly evaluate CoCHPL, we utilized four widely recognized benchmark datasets [18] that are commonly used for conversational recommendation tasks involving multiple choice questions, namely: LastFM, Yelp, Amazon-Book and MovieLens. The statistics of datasets are shown in Table 1.

-

•

LastFM is a popular online platform that focuses on music recommendations and social networking. The original attributes in LastFM were treated as attribute instances, and a clustering method was utilized to identify 34 attribute types.

-

•

Yelp serves for business recommendations. In the context of Yelp, a two-layer taxonomy was created by [6], comprising 29 attribute types and 590 attribute instances. The attribute types in the taxonomy represent different categories or aspects of businesses, such as price range, ambiance, service quality, and more.

-

•

Amazon-Book is widely used for book recommendations. Users and items with a minimum of 10 interaction records are retained. Entities and relations in the knowledge graph are considered as attribute instances and attribute types, respectively.

-

•

MovieLens is a rating dataset that contains user ratings for movies. Interactions with ratings above three are retained, and the knowledge graph entities and relations are considered as attribute instances and attribute types.

| LastFM | Yelp | Amazon-Book | MovieLens | |

| #Users | 1,801 | 27,675 | 30,291 | 20,892 |

| #Items | 7,432 | 70,311 | 17,739 | 16,482 |

| #Attributes | 8,438 | 590 | 988 | 1,498 |

| #Types | 34 | 29 | 40 | 24 |

| #Entities | 17,671 | 98,576 | 49,018 | 38,872 |

| #Relations | 4 | 3 | 2 | 2 |

| #Interactions | 76,693 | 1,368,606 | 478,099 | 454,011 |

| #Triples | 228,217 | 2,533,827 | 565,068 | 380,016 |

5.1.2 Baselines.

To benchmark our model’s performance, we compared it against eight established baselines:

-

•

Abs Greedy [3] only recommends items in each turn until the user accepts the recommendation or the total turn number exceeds the maximum limit.

-

•

Max Entropy [6] asks or recommends the top-scored instances with the maximum entropy with a certain probability.

-

•

CRM [9] introduces a policy that selects the action and terminates the conversation when the recommendation is rejected.

-

•

EAR [6] proposes a three-stage pipeline called Estimation–Action-Reflection for selecting actions and optimizing the model.

-

•

SCPR [7] employs graph-based path reasoning to retrieve actions and graph embedding to represent user preferences dynamically.

-

•

UNICORN [4] offers a comprehensive approach for selecting optimal actions, integrating both the conversation and recommendation components.

-

•

MCMIPL [18] focuses on single-type attribute selection in multiple-choice question settings and uses a multi-interest extractor for preference capture.

-

•

DAHCR [20] employ a director to select the action type and an actor to choose the asked attribute or recommended item.

To ensure a fair comparison in the MTAMCR scenario, we made two kinds of modifications to UNICORN, MCMIPL, and DAHCR:

-

•

Single-Type attributes (-S): Also known as attribue type-based multiple choice [18]. In each round, CRS selected one attribute type and then chose multiple attribute instances within that type.

-

•

Multi-Type attributes (-M): In each round, we selected the top-K attribute instances with the highest Q-values, which could be from different or the same attribute types.

| Model | LastFM | Yelp | Amazon-Book | MovieLens | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| SR@15 | AT | hDCG | SR@15 | AT | hDCG | SR@15 | AT | hDCG | SR@15 | AT | hDCG | |

| Abs Greedy[3] | 0.635 | 8.66 | 0.267 | 0.189 | 13.43 | 0.089 | 0.193 | 13.90 | 0.087 | 0.724 | 5.24 | 0.465 |

| Max Entropy[6] | 0.669 | 9.33 | 0.269 | 0.398 | 13.42 | 0.121 | 0.382 | 12.43 | 0.149 | 0.655 | 6.87 | 0.412 |

| CRM[9] | 0.580 | 10.79 | 0.224 | 0.177 | 13.69 | 0.070 | 0.354 | 12.81 | 0.127 | 0.626 | 7.43 | 0.433 |

| EAR[6] | 0.595 | 10.51 | 0.230 | 0.182 | 13.63 | 0.079 | 0.411 | 11.62 | 0.153 | 0.749 | 6.28 | 0.459 |

| SCPR[7] | 0.709 | 8.43 | 0.317 | 0.489 | 12.62 | 0.159 | 0.432 | 11.03 | 0.148 | 0.823 | 4.94 | 0.514 |

| UNICORN[4] | 0.788 | 7.58 | 0.349 | 0.520 | 11.31 | 0.203 | 0.498 | 10.42 | 0.179 | 0.863 | 4.62 | 0.523 |

| DAHCR[20] | 0.925 | 6.31 | 0.431 | 0.626 | 11.02 | 0.192 | 0.522 | 10.14 | 0.188 | 0.875 | 4.34 | 0.535 |

| UNICORN-S | 0.884 | 6.07 | 0.403 | 0.593 | 10.57 | 0.212 | 0.538 | 10.01 | 0.253 | 0.883 | 4.05 | 0.585 |

| MCMIPL-S | 0.942 | 5.44 | 0.431 | 0.631 | 10.25 | 0.225 | 0.556 | 9.57 | 0.264 | 0.879 | 3.92 | 0.581 |

| DAHCR-S | 0.933 | 5.67 | 0.429 | 0.668 | 10.15 | 0.232 | 0.572 | 9.45 | 0.272 | 0.895 | 3.89 | 0.603 |

| UNICORN-M | 0.892 | 5.96 | 0.426 | 0.634 | 10.35 | 0.233 | 0.584 | 9.69 | 0.261 | 0.887 | 4.10 | 0.587 |

| MCMIPL-M | 0.973 | 4.59 | 0.466 | 0.675 | 10.11 | 0.256 | 0.667 | 8.98 | 0.293 | 0.891 | 3.68 | 0.609 |

| DAHCR-M | 0.965 | 4.61 | 0.451 | 0.681 | 10.23 | 0.241 | 0.689 | 8.45 | 0.301 | 0.874 | 3.98 | 0.593 |

| CoCHPL | 0.991 | 3.75 | 0.493 | 0.846 | 9.36 | 0.267 | 0.775 | 7.61 | 0.330 | 0.908 | 2.85 | 0.628 |

5.1.3 Evaluation Metrics.

We evaluate the performance of our MCR recommendation system using three key metrics: (1) Success rate (SR@t) measures the cumulative ratio of successful recommendations within a maximum of turns. (2) Average turn (AT) evaluates the average number of terminated conversation turns for all sessions. Lower values indicate higher efficiency in the recommendation process. (3) hDCG@(T, K) [4] assesses the ranking performance of recommended items by considering the target item’s rank in the recommendation list, which extends the normalized discounted cumulative gain metric.

5.1.4 Training Details.

We set the maximum turns to 15, and the agent can recommend up to items or ask attribute-related questions at every turn. We pre-train the graph nodes with TransE [2], and train each dataset for episodes online. Adam optimizer with the learning rate and discount factor is set to be 0.999. For our method CoCHPL, the reward settings were as follows: , , , and . We found that the effect of retrieving accepted attributes was minimal in MovieLens, and then the reward for was adjusted to for it. The rewards for all state-of-the-art baselines remain unchanged as [18].

5.2 Experimental Results

5.2.1 Overall Performance (RQ1).

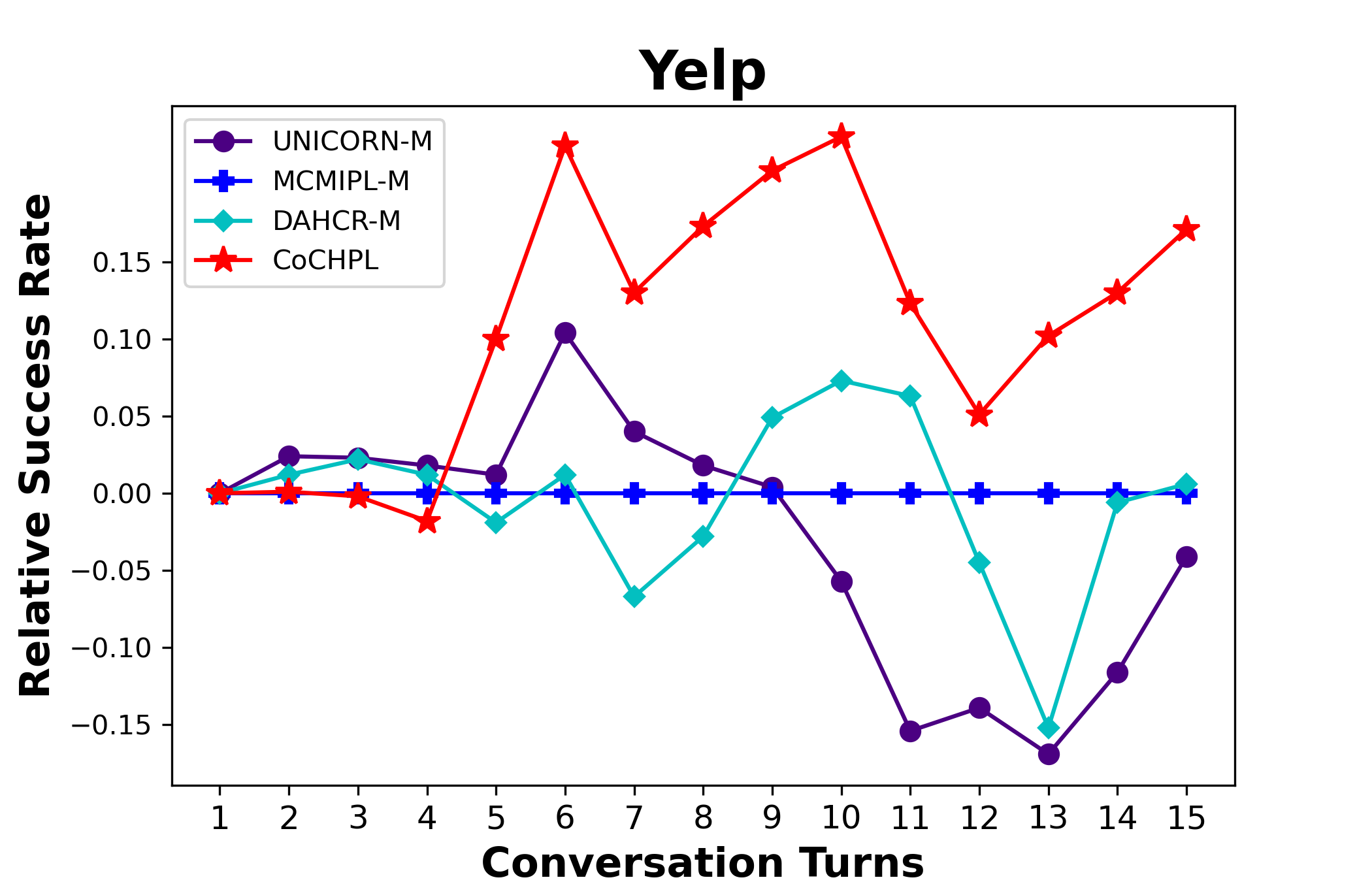

As detailed in Table 2, CoCHPL surpasses eight baselines across SR@15, AT, and hDCG metrics. Baselines with binary yes/no question settings exhibit lower efficiency and longer turn requirements for successful recommendations (Figure 1(a)). In single-type attributes scenarios, UNICORN-S, MCMIPL-S, and DAHCR-S show modest improvements, while in multi-type attributes contexts, UNICORN-M, MCMIPL-M, and DAHCR-M display enhanced success rates and reduced average turns, which indicates the effectiveness of our MTAMCR approach in preference capture. CoCHPL’s chain-of-choice generation leads to marked improvements in success rates and efficiency across benchmark datasets, notably reducing the number of turns for successful recommendations. In critical datasets like Yelp and Amazon-Book, CoCHPL demonstrates significant advancements over top methods with relative increases of , , and in SR@15, AT, and hDCG, respectively. For a clear comparison, we benchmarked against MCMIPL-M’s SR, with differences in SR among methods serving as a measure of relative success rate (Figure 4) and the comparison highlights the superior performance of CoCHPL, especially in Yelp and Amazon-Book, where extensive attribute preference extraction is essential.

| Yelp | Amazon-Book | |||||

|---|---|---|---|---|---|---|

| SR@15 | AT | hDCG | SR@15 | AT | hDCG | |

| CoCHPL | 0.846 | 9.36 | 0.267 | 0.775 | 7.61 | 0.330 |

| w/o long policy over options | 0.693 | 10.21 | 0.225 | 0.649 | 9.12 | 0.278 |

| w/o short intra-option policy (ask) | 0.655 | 10.29 | 0.221 | 0.612 | 9.54 | 0.270 |

| w/o short intra-option policy (rec) | 0.756 | 10.05 | 0.245 | 0.685 | 8.99 | 0.285 |

| w/o termination function | 0.798 | 9.67 | 0.253 | 0.744 | 8.34 | 0.302 |

| w/o feedback prediction function | 0.782 | 9.95 | 0.248 | 0.732 | 8.12 | 0.311 |

5.2.2 Ablation Study (RQ2).

We performed an ablation study on the Yelp and Amazon-Book datasets to assess the impact of different components on system performance as follows: (1) w/o policy over options: The CRS randomly selects options (action types) in each turn. (2) w/o intra-option policy (ask): The CRS chooses the top- attribute instances with the highest Q-value at every turn. (3) w/o intra-option policy (recommend): The CRS selected the top- items with the highest Q-value at each turn. (4) w/o termination function: The CRS asks for or recommends the maximum number of instances in each turn without appropriate termination. (5) w/o feedback prediction function: The CRS randomly predicts whether the user would accept or reject the previous choice. From Table 3, we can observe that short intra-option policy (ask) and long policy over options play crucial roles in performance improvement, which suggests that learning how to select options and effectively generate multiple-choice questions about attributes is essential in the MTAMCR scenario. Additionally, the significance of the termination and feedback prediction functions underscores the importance of learning appropriate termination conditions and the necessity of simulating user behavior during the chain-of-choice generation process.

5.2.3 Effect of Attribute Instances Number and Case Study (RQ3).

Our study, using Yelp and Amazon-Book datasets, assessed the reasoning capabilities of our model, CoCHPL, against three baselines: UNICRON-M, MCMIPL-M, and DAHCR-M. We varied the number of attribute instances requested from 1 to 5 per turn as shown in Figure 5. We noted that while the success rate of baseline models improved with an increased number of attribute instances, the marginal gains diminished over time, approaching a plateau. This trend suggests that these models tend to select numerous but less effective attribute instances, leading to suboptimal exploitation of user preferences. In contrast, CoCHPL demonstrated a nearly linear performance improvement in both datasets. A case study depicted in Figure 6 further reinforces CoCHPL’s superiority. Unlike DAHCR, which selects the top- attribute instances based on Q-values, often resulting in homogenous choices due to similar embeddings, CoCHPL dynamically predicts user feedback and recalculates Q-values after each selection, prioritizing the top- attribute for subsequent choices. Both evaluations demonstrate that CoCHPL effectively diversifies and deepens the dependency among attributes, significantly enhancing the overall recommendation quality.

(a) DAHCR-M

(b) CoCHPL

6 Conclusion

In this paper, we propose a more realistic CRS setting called Multi-Type-Attribute Multi-round Conversational Recommendation (MTAMCR). To optimize the questioning efficiency, we develop a novel framework, namely Chain-of-Choice Hierarchical Policy Learning (CoCHPL), for the MTAMCR scenario. Specifically, by formulating MTAMCR as a hierarchical RL task, we employed a long-term policy over action options to decide when to ask or recommend and two short-term intra-option policies to sequentially generate the optimal multi-type attribute and item chains with the assistance of the learnable termination and feedback prediction functions. Experimental results demonstrate that CoCHPL significantly outperforms state-of-the-art CRS methods in terms of both the reasoning ability in chains of choice and the success rate of recommendations.

6.0.1 Acknowledgements

This research was supported in part by the National Natural Science Foundation of China under Grant No. 62102110, 92370204, the Guangzhou Basic and Applied Basic Research Program under Grant No. 2024A04J3279, and the Education Bureau of Guangzhou Municipality.

References

- [1] Bacon, P.L., Harb, J., Precup, D.: The option-critic architecture. In: AAAI. p. 1726–1734 (2017)

- [2] Bordes, A., Usunier, N., Garcia-Durán, A., Weston, J., Yakhnenko, O.: Translating embeddings for modeling multi-relational data. In: NeurIPS. pp. 2787–2795 (2013)

- [3] Christakopoulou, K., Radlinski, F., Hofmann, K.: Towards conversational recommender systems. In: SIGKDD. pp. 815–824 (2016)

- [4] Deng, Y., Li, Y., Sun, F., Ding, B., Lam, W.: Unified conversational recommendation policy learning via graph-based reinforcement learning. In: SIGIR. pp. 1431–1441 (2021)

- [5] Kipf, T.N., Welling, M.: Semi-supervised classification with graph convolutional networks. In: ICLR (2017)

- [6] Lei, W., He, X., Miao, Y., Wu, Q., Hong, R., Kan, M.Y., Chua, T.S.: Estimation-action-reflection: Towards deep interaction between conversational and recommender systems. In: WSDM. pp. 304–312 (2020)

- [7] Lei, W., Zhang, G., He, X., Miao, Y., Wang, X., Chen, L., Chua, T.S.: Interactive path reasoning on graph for conversational recommendation. In: SIGKDD. pp. 2073–2083 (2020)

- [8] Lin, A., Zhu, Z., Wang, J., Caverlee, J.: Enhancing user personalization in conversational recommenders. In: WWW. pp. 770–778 (2023)

- [9] Sun, Y., Zhang, Y.: Conversational recommender system. In: SIGIR. pp. 235–244 (2018)

- [10] Sutton, R.S., McAllester, D., Singh, S., Mansour, Y.: Policy gradient methods for reinforcement learning with function approximation. In: NeurIPS (1999)

- [11] Sutton, R.S., Precup, D., Singh, S.: Between mdps and semi-mdps: A framework for temporal abstraction in reinforcement learning. Artificial intelligence 112(1-2), 181–211 (1999)

- [12] Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A.N., Kaiser, Ł., Polosukhin, I.: Attention is all you need. In: NeurIPS. pp. 6000–6010 (2017)

- [13] Wang, Z., Schaul, T., Hessel, M., Hasselt, H., Lanctot, M., Freitas, N.: Dueling network architectures for deep reinforcement learning. In: ICML (2016)

- [14] Xie, Z., Yu, T., Zhao, C., Li, S.: Comparison-based conversational recommender system with relative bandit feedback. In: SIGIR. pp. 1400–1409 (2021)

- [15] Xu, K., Yang, J., Xu, J., Gao, S., Guo, J., Wen, J.R.: Adapting user preference to online feedback in multi-round conversational recommendation. In: WSDM. pp. 364–372 (2021)

- [16] Zhang, W., Liu, H., Han, J., Ge, Y., Xiong, H.: Multi-agent graph convolutional reinforcement learning for dynamic electric vehicle charging pricing. In: SIGKDD. pp. 2471–2481 (2022)

- [17] Zhang, W., Liu, H., Liu, Y., Zhou, J., Xiong, H.: Semi-supervised hierarchical recurrent graph neural network for city-wide parking availability prediction. In: AAAI. pp. 1186–1193 (2020)

- [18] Zhang, Y., Wu, L., Shen, Q., Pang, Y., Wei, Z., Xu, F., Long, B., Pei, J.: Multiple choice questions based multi-interest policy learning for conversational recommendation. In: WWW. pp. 2153–2162 (2022)

- [19] Zhang, Y., Chen, X., Ai, Q., Yang, L., Croft, W.B.: Towards conversational search and recommendation: System ask, user respond. In: CIKM. pp. 177–186 (2018)

- [20] Zhao, S., Wei, W., Liu, Y., Wang, Z., Li, W., Mao, X.L., Zhu, S., Yang, M., Wen, Z.: Towards hierarchical policy learning for conversational recommendation with hypergraph-based reinforcement learning. In: IJCAI. pp. 2459–2467 (2023)