mainReference \newcitesappendixReference

Certifiably Robust Graph Contrastive Learning

Abstract

Graph Contrastive Learning (GCL) has emerged as a popular unsupervised graph representation learning method. However, it has been shown that GCL is vulnerable to adversarial attacks on both the graph structure and node attributes. Although empirical approaches have been proposed to enhance the robustness of GCL, the certifiable robustness of GCL is still remain unexplored. In this paper, we develop the first certifiably robust framework in GCL. Specifically, we first propose a unified criteria to evaluate and certify the robustness of GCL. We then introduce a novel technique, RES (Randomized Edgedrop Smoothing), to ensure certifiable robustness for any GCL model, and this certified robustness can be provably preserved in downstream tasks. Furthermore, an effective training method is proposed for robust GCL. Extensive experiments on real-world datasets demonstrate the effectiveness of our proposed method in providing effective certifiable robustness and enhancing the robustness of any GCL model. The source code of RES is available at https://github.com/ventr1c/RES-GCL.

1 Introduction

Graph structured data are ubiquitous in real-world applications such as social networks [1], finance systems [2], and molecular graphs [3]. Graph Neural Networks (GNNs) have emerged as a popular approach to learn graph representations by adopting a message passing scheme [4, 5, 6], which updates a node’s representation by aggregating information from its neighbors. Recently, graph contrastive learning has gained popularity as an unsupervised approach to learn node or graph representations [7, 8, 9]. The graph contrastive learning creates augmented views and minimizes the distances among positive pairs while maximizing the distances among negative pairs in the embedding space.

Despite the great success of GNNs, some existing works [10, 11, 12, 13] have shown that GNNs are vulnerable to adversarial attacks where the attackers can deliberately manipulate the graph structures and/or node features to degrade the model’s performance. The representations learned by Graph Contrastive Learning (GCL) are also susceptible to such attacks, which can lead to poor performance in downstream tasks with a small amount of perturbations [14, 15, 16]. To defend against graph adversarial attacks, several empirical-based works have been conducted in robust GNNs for classification tasks [17, 18] and representation learning with contrastive learning [14, 15]. Nevertheless, with the advancement of new defense strategies, new attacks may be developed to invalidate the defense methods [19, 20, 21, 22], leading to an endless arms race. Therefore, it is important to develop a certifiably robust GNNs under contrastive learning, which can provide certificates to nodes/graphs that are robust to potential perturbations in considered space. There are several efforts in certifiable robustness of GNNs under supervised learning, which requires labels for the certifiable robustness analysis [23, 24, 25, 26]. For instance, [23] firstly analyze the certifiable robustness against the perturbation on node features. Some following works [24, 25, 26] further study the certified robustness of GNN under graph topology attacks. However, the certifiable robustness of GCL, which is in unsupervised setting, is still rarely explored.

Certifying the robustness of GCL is rather challenging. First, quantifying the robustness of GCL consistently and reliably is a difficult task. Existing GCL methods [27, 14, 15] often use empirical robustness metrics (e.g., robust accuracy) that rely on the knowledge of specific downstream tasks (e.g., task types, node/graph labels) for evaluating GCL robustness. However, these criteria may not be universally applicable across different downstream tasks. The lack of clarity in defining and assessing robustness within GCL further compounds the difficulty of developing certifiably robust GCL methods. Second, the absence of labels in GCL makes it challenging to study certifiable robustness. Existing works [23, 28, 29, 25, 24] on certifiable robustness of GNNs are designed for supervised or semi-supervised settings, which are not applicable to GCL. They typically try to provide certificates by analyzing the worst-case attack on labeled nodes. However, it is difficult to conduct such analyses due to the absence of labels in GCL. Additionally, some works [29, 24] that rely on randomized smoothing to obtain robustness certificates can not be directly applied to GCL as they may require injecting noise into the whole graph during data augmentation, introducing too many spurious edges and hurting downstream task performance, particularly for large and sparse graphs. Third, it is unclear whether certifiable robustness of GCL can be converted into robust performance in downstream tasks. The challenge arises due to the misalignment of objectives between GCL and downstream tasks. For instance, GCL usually focuses on discriminating between different instances, while some downstream tasks may also require understanding the relations among instances. This discrepancy engenders a divide between unsupervised GCL and its downstream tasks, making it difficult to prove the robust performance of the certified robust GNN encoder in downstream tasks.

In this work, we propose the first certifiably robust GCL framework, RES (Randomized Edgedrop Smoothing). (i) To address the ambiguity in evaluating and certifying robustness of GCL, we propose a unified criteria based on the semantic similarity between node or graph representations in the latent space. (ii) To address the label absence challenges and avoid adding too many noisy edges for certifying the robustness in GCL, we employ the robustness defined at (i) for unlabeled data and introduce a novel technique called randomized edgedrop smoothing, which can transform any base GNN encoder trained by GCL into a certifiably robust encoder. Therein, some randomized edgedrop noises are injected into the graphs by stochastically dropping observed edges with a certain probability, preventing the introduction of excessive noisy edges and yielding effective and efficient performance. (iii) We theoretically demonstrate that the representations learned by our robust encoder can achieve provably robust performance in downstream tasks. (iv) Moreover, we present an effective training method that enhances the robustness of GCL by incorporating randomized edgedrop noise. Our experimental results show that GRACE [30] with our RES can outperform the state-of-the-art baselines against several structural attacks and also achieve 43.96% certified accuracy in OGB-arxiv dataset when the attacker arbitrarily adds at most 10 edges.

Our main contributions are: (i) We study a novel problem of certifying the robustness of GCL to various perturbations. We introduce a unified definition of robustness in GCL and design a novel framework RES to certify the robustness; (ii) Theoretical analysis demonstrates that the representations learned by our robust encoder can achieve provably robust performance in downstream tasks; (iii) We design an effective training method for robust GCL by incorporating randomize edgedrop noise; and (iv) Extensive empirical evaluations show that our method can provide certifiable robustness for downstream tasks (i.e., node and graph classifications) on real-world datasets, and also enhance the robust performance of any GNN encoder through GCL.

2 Related Work

Graph Contrastive Learning. GCL has recently gained significant attention and shows promise for improving graph representations in scenarios where labeled data is scarce [31, 32, 9, 30, 27, 15, 33, 32, 34, 35]. Generally, GCL creates two views through data augmentation and contrasts representations between two views. Several recent works [16, 36] show that GCL is vulnerable to adversarial attacks. Despite very few empirical works [9, 15, 14] on robustness of GCL, there are no existing works studying the certified robustness of GCL. In contrast, we propose to provide robustness certificates for GCL by using randomized edgedrop smoothing. More details are shown in Appendix A.1. To the best of our knowledge, our method is the first work to study the certified robustness of GCL.

Certifiable Robustness of GNNs. Several recent studies investigate the certified robustness of GNNs in the (semi)-supervised setting[23, 28, 29, 26, 24, 25, 37]. Zunger et al. [23] are the first to explore certifiable robustness with respect to node feature perturbations. Subsequent works[28, 26, 29, 24, 38] extend the analysis to certifiable robustness under topological attacks. More details are shown in Appendix A.2. Our work is inherently different from them: (i) we provide an unified definition to evalute and certify the robustness of GCL; (ii) we theoretically provide the certified robustness for GCL in the absence of labeled data, and this certified robustness can provably sustained in downstream tasks; (iii) we design an effective training method to enhance the robustness of GCL.

3 Backgrounds and Preliminaries

3.1 Graph Contrastive Learning

Notations. Let denote a graph, where is the set of nodes, is the set of edges, and is the set of node attributes with being the node attribute of . is the adjacency matrix of , where if nodes and are connected; otherwise . In this paper, we focus on unsupervised graph contrastive learning, where label information of nodes and graphs are unavailable during training for both node- and graph-level tasks. For the node-level task, given a graph , the goal is to learn a GNN encoder to produce representation for , which can be used to conduct prediction for node in downstream tasks. For the graph-level task, given a set of graph , the goal is to learn the latent representation for each graph , which can be used to predict downstream label of . For simplicity and clarity, in this paper, we uniformly denote a node or graph as a concatenation vector . The representation of from encoder is denoted as . More details are shown in Appendix E.2.

Graph Contrastive Learning. Generally, GCL consists of three steps: (i) multiple views are generated for each instance through stochastic data augmentation. Positive pairs are defined as two views generated from the same instance; while negative pairs are sampled from different instances; (ii) these views are fed into a set of GNN encoders , which may share weights; (iii) a contrastive loss is applied to minimize the distance between positive pairs and maximize the distance between negative pairs in latent space. To achieve the certified robustness of GCL to downstream tasks, we introduce latent class to formalize the contrastive loss for GCL, which is inspired by [39, 40].

Definition 1 (Latent Class).

We utilize the latent class to formalize semantic similarity of positive and negative pairs in GCL [39]. Consider a GNN encoder learnt by GCL. Let denote the set of all possible nodes/graphs in the input space. There exists a set of latent class, denoted as , where each sample is associated with a latent class . Each class is associated with a distribution over the latent space of samples belonging to class under . The distribution on is denoted as . Intuitively, captures how relevant is to class in the latent space under , similar to the meaning of class in supervised settings. The latent class is related to the specific downstream task. For instance, when the downstream task is a classification problem, the latent class can be interpreted as the specific class to which the instances belong.

Typically, GCL is based on the label-invariant augmentation intuition: the augmented operations preserve the nature of graphs and make the augmented positive views have consistent latent classes with the original ones [41]. Let , denote the positive and negative latent classes drawn from , and are the distributions to sample positive and negative samples, respectively. For each positive pair associated with negative samples , the widely-used loss for GCL, which is also known as InfoNCE loss [42], can be written as follows:

| (1) |

3.2 Threat Model

Attacker’s Goal. We consider an attacker conducts an evasion attack in GCL. Given a well-trained GNN encoder on a clean graph via GCL, an attacker aims to degrade the performance of in downstream tasks by injecting noise into the graph. For instance, an attacker may attempt to manipulate a social network by injecting fake edges, which could affect the performance of a well-trained in tasks such as community detection, node classification, or link prediction. The noise can take different forms, including adding/deleting nodes/edges or augmenting node features.

Attacker’s Knowledge and Capability. We assume that the attacker follows the grey-box setting to conduct an evasion attack. Specifically, the attacker has access to a benign GNN encoder trained on a clean dataset through GCL, and the training data to train downstream classifier is available to the attacker. The model architecture and other related information of the GNN encoder are unknown to the attacker. During inference phase, the attacker is capable of injecting noises to the graph within a given budget to degrade the performance of target nodes/graphs in downstream tasks. In this paper, we focus on perturbations on the graph structure , i.e, only structural noises such as adding new edges/nodes are injected into the graph as structure attack is more effective than feature attack [11, 24, 43]. We leave the extension to feature attack and defense of GCL as future work. We denote as the structural noise to a node/graph , where denote the number of nodes on the graph or -hop subgraph of node and indicates the addition of a noisy edge to in the -th entry, and its L0-norm indicates the number of noisy edges. We then have the perturbed version of by the attacker, denoted as .

3.3 Problem Statement

Our objective is to develop a certifiably robust GCL. We aim to train a GNN encoder that exhibits provably benign behaviors in downstream tasks. The problem can be formulated as follows:

Problem 1.

Given a GNN encoder , a node or graph . Let denote the perturbed version of by the attacker, where the structural noise with is injected into . Suppose that is the latent class of and is the downstream classifier. Our goal is to develop a certifiably robust GCL for such that for any , the representation of under can satisfy the following requirement in downstream tasks:

| (2) |

where is the worst-case margin of between and in the downstream task for any , and denotes the probability of the representation being classified into class by in the downstream task. Eq. (2) implies that for any within the budget , i.e., is a certifiably robust at when perturbing at most edges.

4 Certifying Robustness for GCL

In this section, we present the details of our RES, which aims to provide certifiable robustness for GCL models. There are mainly three challenges: (i) how to define the certified robustness of GCL; (ii) how to derive the certified robustness; and (iii) how to transfer the certified robustness of GCL to downstream tasks. To address these challenges, we first define certified robustness for GCL in Sec. 4.1. We then propose the RES method to derive the certificates and theoretically guarantee certifiable robustness in Sec. 4.2. Finally, we show that the certifiably robust representations learned from our approach are still provably robust in downstream tasks in Sec. 4.3.

4.1 Certified Robustness of GCL

To give the definition of certified robustness of GCL, we first give the conditions for successfully attacking the GNN encoder. The core idea behind it comes from supervised learning, where the objective is to judge whether the predictions of target nodes/graphs are altered under specific perturbations. Inspired by [40], we consider the following scenario of a successful attack against the GNN encoder.

Given a clean input , with positive and negative samples and used in the learning process of GCL, respectively, we consider , where represents structural noise on and is the attack target of . The attacker’s goal is to produce an adversarial example that can deceive the model into classifying as similar to . Formally, given a well-trained GNN encoder via GCL, we say that has been successfully attacked at if the cosine similarity is less than . This indicates that is more similar to than to in the latent space. Otherwise, we conclude that has not been successfully attacked. This definition of successful attack provides the basis for the definition of certified robustness of GCL. The formal definition of certified robustness problem for GCL is then given as

Definition 2 (Certified Robustness of GCL).

Given a well trained GNN encoder via GCL. Let be a clean input. is the positive sample of and denotes all possible negative samples of , which are sampled as discussed in Sec. 3.1. Suppose that is a perturbed sample obtained by adding structural noise to , where , and is a cosine similarity function. Then, is certifiably -robust at () if the following inequality is satisfied:

| (3) |

Similar to the supervised certified robustness, this problem is to prove that the point under the perturbation within budget is still the positive sample of . However, unlike the supervised learning, where we can estimate the prediction distribution of by leveraging the labels of the training data, it is difficult to estimate such a distribution in GCL because the label information is unavailable. To address this issue, we first consider a space for a sample , where each sample within it is the positive sample of . Formally, given a sample with the latent class from the probability distribution , is defined as

| (4) |

GCL maximizes the agreement of the positive pair in the latent space and assume access to similar data in the form of pairs that comes from a distribution given the latent class . Thus, it is natural to connect the latent class and the similarity between representations. The probability that is the positive sample of can be given by the following theorem.

Theorem 1.

4.2 Certifying Robustness by the Proposed Randomized EdgeDrop Smoothing (RES)

With the definition of certifiable robustness of GCL, we introduce our proposed Randomized Edgedrop Smoothing (RES), which provides certifiable robustness for GCL. RES injects randomized edgedrop noise by randomly dropping each edge of the input sample with a certain probability. We will also demonstrate the theoretical basis for the certifiable robustness of GCL achieved by RES.

Firstly, we define an randomized edgedrop noise for that remove each edge of with the probability . Formally, given a sample , the probability distribution of for is given as:

| (6) |

where denotes the connection status of -th entry of . Given a well-trained GNN encoder via GCL and a noise with the distribution in Eq. (6), the smoothed GNN encoder is defined as:

| (7) |

Here is the set of all nodes/graphs in the dataset. It implies will return the representation of whose latent class is the same as that of the most probable instance that is its positive sample. Therefore, consider the scenario where some noisy edges are injected into a clean input , resulting in a perturbed sample . With a smoothed GNN encoder learnt through our method, if we set to a large value, it is highly probable that the noisy edges will be eliminated from . Consequently, by running multiple randomized edgedrop on and , a majority of them will possess identical structural vectors, that is, , which implies and will have the same latent class and the robust performance of in downstream tasks. We can then derive an upper bound on the expected difference in vote count for each class between and . With this, we can theoretically demonstrate that is certifiably robust at against any perturbation within a specific attack budget. Specifically, let for simplicity of notation, denotes a lower bound on with confidence and denotes a similar upper bound, the formal theorem is presented as follows:

Theorem 2.

Let be a clean input and be its perturbed version, where . is the set of negative samples of . If for all :

| (8) |

where and denotes the number of remaining edges of after injecting . Then, with a confidence level of at least , we have:

| (9) |

The proof is in Appendix C.2. Theorem 2 theoretically guarantees that of is still the positive sample of with confidence if the worst-case margin of under is larger than , which indicates that the certified robustness of at is holds in this case. Moreover, it also paves us a efficient way to compute the certified perturbation size in practical (See in Sec. 5.2).

4.3 Transfer the Certified Robustness to Downstream Tasks

Though we have theoretically shown the capability of learning -certifiably robust node/graph representations with RES, it is unclear whether the certified robustness of the smoothed GNN encoder can be preserved in downstream tasks. To address this concern, we propose a theorem that establishes a direct relationship between the certified robustness of GCL and that of downstream tasks.

Theorem 3.

Given a GNN encoder trained via GCL and an clean input . and are the positive sample and the set of negative samples of , respectively. Let and denote the latent classes of and , respectively. Suppose is the downstream classifier that classify a data point into one of the classes in . Then, we have

| (10) |

The proof is in Appendix C.3. By applying Theorem 3, we can prove given ’s perturbed version , where , if and satisfy Eq. (8) and Eq. (9) in Theorem 2, we have

| (11) |

which implies provable -certified robustness retention of at in downstream tasks.

5 Practical Algorithms

In this section, we first propose a simple yet effective GCL method to train the GNN encoder robustly. Then we introduce the practical algorithms for the prediction and robustness certification.

5.1 Training Robust Base Encoder

Theorem 2 holds regardless how the base GNN encoder is trained. However, introducing randomized edgedrop noise solely to test samples during the inference phase could potentially compromise performance in downstream tasks, which further negatively impact the certified robustness performance based on Eq. 8. In order to make can classify and certify correctly and robustly, inspire by [45], we propose to train the base GNN encoder via GCL with randomized edgedrop noise. Our key idea is to inject randomized edgedrop noise to one augmented view and maximize the probability in Eq. 5 by using GCL to maximize the agreement between two views. Fig. 1 gives an illustration of general process of training the base GNN encoder via our RES. Given an input graph , two contrasting views and are generated from through stochastic data augmentations. Specifically, two views generated from the same instance are usually considered as a positive pair, while two views constructed from different instances are considered as a negative pair. After that, we inject the randomized edgedrop noise to one of the two views and obtain . Finally, two GNN encoders are used to generate embeddings of the views and a contrastive loss is applied to maximize the agreement between and . The training algorithm is summarized in Appendix D.

5.2 Prediction & Certification

Following [46, 24], we then present the algorithm to use the smoothed GNN encoder in the downstream tasks and derive the robustness certificates through Monte Carlo algorithms.

Prediction on Downstream Tasks. We draw samples of , corrupted by randomized edgedrop noises. Then we obtain their predictions via the downstream classifier. If is the class which has the largest frequency among the predictions, is returned as the final prediction.

Compute Robustness Certificates. One of robustness certificates is the certified perturbation size, which is the maximum attack budget that do not change the prediction of an instance no matter what perturbations within the budget are used. To derive the certified perturbation size of an instance , we need to estimate the lower bound and upper bound in Eq. 8. Since it is challenging to directly estimate them in GCL, inspired by Theorem 3, if the prediction of in downstream tasks is correct, will also be the positive sample of under , which indicates the connection between and the probability of correctly classified in the downstream tasks. Formally, given a label set for the downstream task, let denotes the lower bound of the probability that is correctly classified as in the downstream task, is the upper bound of the probability that is classified as for . we have the following probability bound by a confidence level at least :

| (12) |

where , and is the -th quantile of a beta distribution with shape parameter and . After that, we calculate based on Theorem 2 and the maximum that satisfies Eq. 8 is the certified perturbation size.

6 Experiments

In this section, we conduct experiments to answer the following research questions: (Q1) How robust is RES under various adversarial attacks? (Q2) Can RES effectively provide certifiable robustness for various GCL methods? (Q3) How does RES contribute to the robustness in GCL?

6.1 Experimental Setup

Datasets. We conduct experiments on 4 public benchmark datasets for node classification, i.e., Cora, Pubmed [47], Coauthor-Physics [48] and OGB-arxiv [49], and 3 widely used dataset for graph classification i.e., MUTAG, PROTEINS [50] and OGB-molhiv [49]. We use public splits for Cora and Pubmed, and for five other datasets, we perform a 10/10/80 random split for training, validation, and testing, respectively. The details and splits of these datasets are summarized in Appendix G.1.

Attack Methods. To demonstrate the robustness of RES to various structural noises, we evaluate RES on 4 types of structural attacks in an evasion setting, i.e., Random attack, Nettack [11], PRBCD [51], CLGA [16] for both node and graph classification. In our evaluation, we first train a GNN encoder on a clean dataset using GCL, and then subject RES to attacks during the inference phase by employing perturbed graphs in downstream tasks. Especially, CLGA is an poisoning attack methods for GCL. To align it with our evasion setting, we directly employ the poisoned graph generated by CLGA in downstream tasks for evaluation. The details of these attacks are given in Appendix G.2.

Compared Methods. We employ 4 state-of-the-art GCL methods, i.e., GRACE [30], BGRL [52], DGI [31] and GraphCL [9], as baselines. More specifically, GRACE, BGRL and DGI are for node classification, GraphCL and BGRL are for graph classification. We apply our RES on them to train the smoothed GNN encoders. Recall one of our goal is to validate that our method can enhance the robustness of GCL against structural noises, we also consider two robust GCL methods (i.e., Ariel [15] and GCL-Jaccard [12]) and two unsupervised methods ( i.e., Node2Vec [53] and GAE [54]) as the baselines. All hyperparameters of the baselines are tuned based on the validation set to make fair comparisons. The detailed descriptions of the baselines are given in Appendix G.3

| Dataset | Graph | Node2Vec | GAE | GCL-Jac. | Ariel | GRACE | RES-GRACE |

|---|---|---|---|---|---|---|---|

| Cora | Raw | 67.61.0 | 76.80.9 | 76.12.0 | 79.80.6 | 77.11.6 | 79.71.0 |

| Random | 57.70.7 | 74.20.9 | 74.12.0 | 76.30.6 | 74.52.1 | 79.11.2 | |

| CLGA | 64.70.7 | 72.71.3 | 73.71.2 | 76.60.4 | 74.92.0 | 78.21.0 | |

| PRBCD | 63.33.2 | 75.62.1 | 75.31.2 | 75.60.2 | 75.82.5 | 78.51.7 | |

| Pubmed | Raw | 66.42.0 | 78.40.4 | 78.22.7 | 78.01.1 | 79.52.9 | 79.51.2 |

| Random | 56.81.4 | 71.81.0 | 75.82.4 | 75.90.2 | 75.01.0 | 78.20.9 | |

| CLGA | 61.91.2 | 77.60.5 | 76.22.8 | 77.51.1 | 76.62.5 | 78.31.1 | |

| PRBCD | 55.91.5 | 74.82.5 | 73.12.0 | 73.51.6 | 73.22.3 | 78.81.7 | |

| Physics | Raw | 92.90.1 | 95.20.1 | 94.10.3 | 95.30.3 | 94.00.4 | 94.70.2 |

| Random | 85.70.3 | 93.70.1 | 93.40.3 | 93.80.1 | 92.60.5 | 94.20.3 | |

| PRBCD | 81.80.6 | 91.10.7 | 91.60.2 | 91.00.9 | 89.20.6 | 94.10.2 | |

| OGB-arxiv | Raw | 64.60.1 | 61.50.5 | 64.70.2 | 64.70.3 | 65.10.5 | 65.20.1 |

| Random | 52.40.1 | 57.40.5 | 59.00.1 | 59.40.4 | 59.00.2 | 60.00.1 | |

| PRBCD | 56.50.5 | 54.10.5 | 56.50.4 | 57.00.9 | 55.70.4 | 58.30.4 |

Evaluation Protocol. In this paper, we conduct experiments on both transductive node classification and inductive graph classification tasks. For each experiment, a 2-layer GCN is employed as the backbone GNN encoder. We adopt the common used linear evaluation scheme [31], where each model is firstly trained via GCL and then the resulting embeddings are used to train and test a -regularized logistic regression classifier. The certified accuracy and robust accuracy on test nodes are used to evaluate the robustness performance. Specifically, certified accuracy [46, 24] denotes the fraction of correctly predicted test nodes/graphs whose certified perturbation size is no smaller than the given perturbation size. Each experiment is conduct 5 times and the average results are reported.

6.2 Performance of Robustness

To answer Q1, we compare RES with the baselines on various noisy graphs. We also conduct experiments to demonstrate that RES can improve the robustness against different noisy levels, which can be found in Appendix I.2. We focus on node classification as the downstream task on four types of noisy graphs, i.e., raw graphs, random attack perturbed graphs, CLGA perturbed graphs and PRBCD perturbed graphs. The perturbation rate of noisy graphs is . The details of the noisy graphs are presented in Appendix G.2. GRACE is used as the target GCL method to train a GCN encoder. The smoothed version of GRACE is denoted as RES-GRACE. The results on Cora, Coauthor-Physics and OGB-arxiv are given in Table 1. From the table, we observe: (i) When no attack is applied on the clean graph, our RES-GRACE achieve state-of-the-art performance, especially on large-scale datasets, which indicates RES is beneficial to learn good representation by injecting random edgedrop noise to the graph. (ii) The structural noises degrade the performances of all baselines. However, its impact to RES-GRACE is negligible. RES-GRACE outperforms all baselines including two robust GCL methods, which indicates RES could eliminate the effects of the noisy edges. More results on graph classification are shown in Appendix I.1.

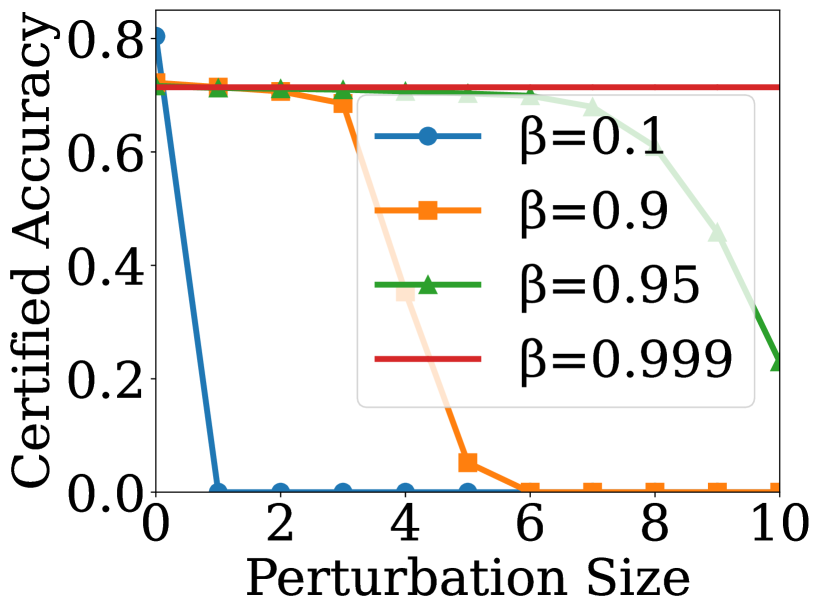

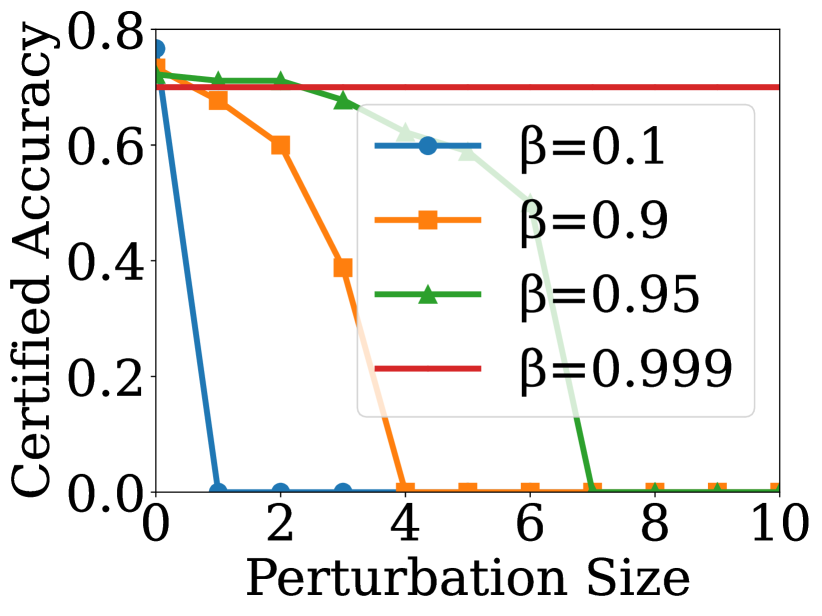

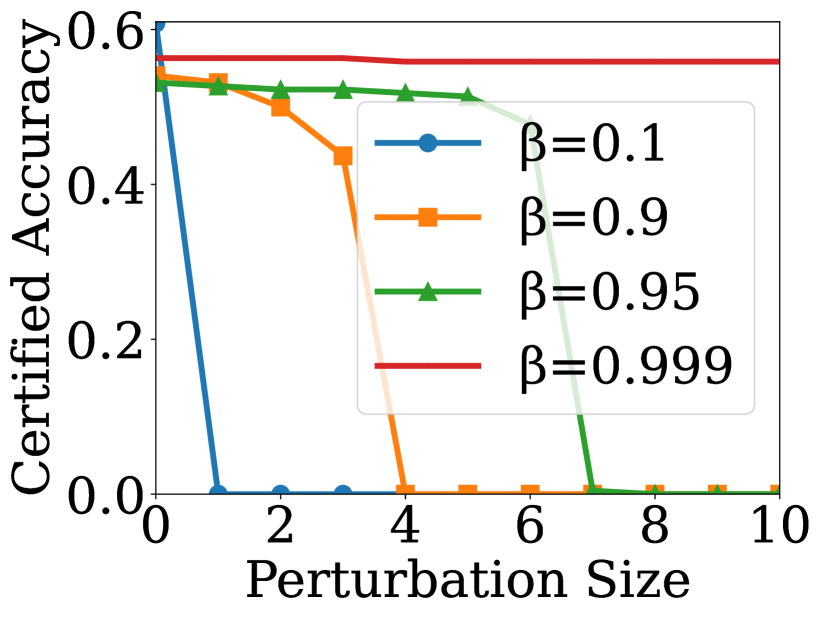

6.3 Performance of Certificates

To answer Q2, we use certified accuracy as the metric to evaluate the performance of robustness certificates. We choose GCN as the GNN encoder and employ our method on GRACE [30] and GraphCL [9] for node classification and graph classification, respectively. We select the overall test nodes as the target nodes and set , to compute the certified accuracy. The results for various are shown in Fig. 2, where the x-axis denotes the given perturbation size. From the figures, we can observe that controls the tradeoff between robustness and model utility. When is larger, the certified accuracy on clean graph is larger, but it drops more quickly as the perturbation size increases. Especially, when , the certified accuracy is nearly independent of the perturbation size. Our analysis reveals that when is low, more structural information is retained on the graph, which benefits the performance of RES under no attack, but also results in more noisy edges being retained on the noisy graph, leading to lower certified accuracy. Conversely, when is large, less structural information is retained on the graph, which may affect the performance of RES under no attack, but also results in fewer noisy edges remaining on the noisy graph, leading to higher certified accuracy for larger perturbation sizes.

6.4 Ablation Study

To answer Q3, we conduct several ablation studies to investigate the effects of our proposed RES method. More results of ablation studies are shown in Appendix J. We also investigate how hyperparameters and affect the performance of our RES, which can be found in Appendix K.

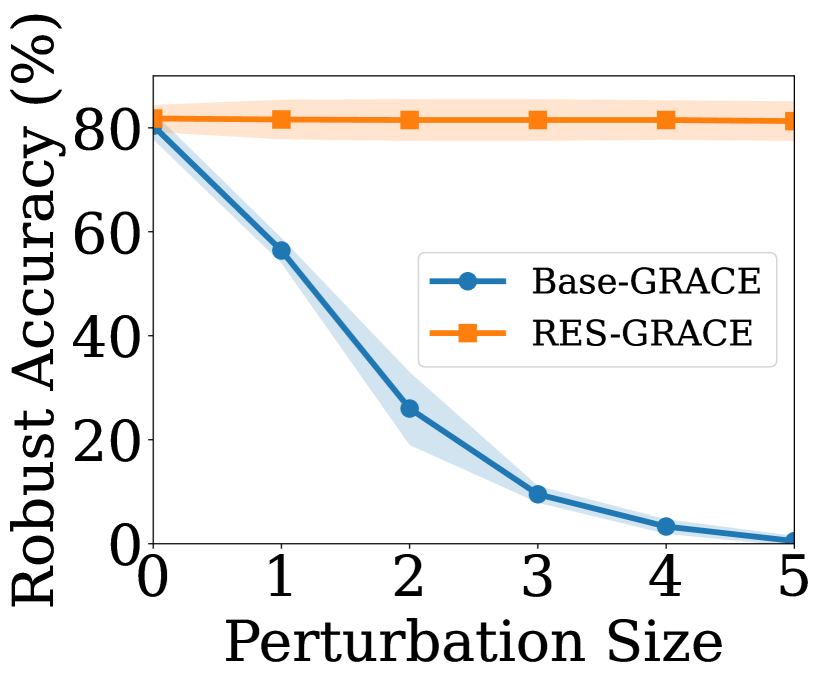

Our first goal is to demonstrate RES is more effective in GCL compared with vanilla binary randomized smoothing in [24], We implement a variant of our model, FLIP, which replaces the random edgedrop noise with binary random noise [24], thereby flipping the connection status within the graph with the probability . For our method, we set , , and . For FLIP, to ensure a fair comparison, we set and , and vary over and select the value which yields the best performance on the validation set of clean graphs. The target GCL method is selected as GRACE. We compare the robust accuracy of the two methods on the clean graph and noisy graph under Nettack with attack budget . The average robust accuracy and standard deviation on Cora and Pubmed are reported in Fig. 3 (a) and (b). We observe: (i) RES achieves better results on various graphs (i.e., clean and noisy graph) compared to FLIP and the base GCL method. (ii) FLIP performs much worse than the base GCL method and RES on the clean graph, suggesting that vanilla binary randomized smoothing is ineffective in GCL. That is because FLIP introduces excessive noisy edges, which in turn makes it challenging for GCL to learn accurate representations.

Second goal is to understand how RES contributes to the robustness of GCL. We implement several variants of our model by removing structural information in the training and testing phases, which are named RES, where and denote the number of removed structures in the training and testing phases, respectively. For our method, we set , and . We compare the robust accuracy on clean and noisy graphs and use PRBCD to perturb 10% of the total number of edges in the graph (before attack) for noisy graphs. The overall test set was selected as the target nodes. The results on Cora and OGB-arxiv are shown in Fig. 3 (c) and (d). We observe: (i) Our method significantly outperforms the ablative methods on various graphs, corroborating the effectiveness of randomized edgedrop smoothing for GCL. (ii) Our method and RES achieve better robust accuracy on various graphs compared to other ablative methods. This is because dropping edges in only one augmented view during training both alleviates over-fitting and increases the worst-case margin, which is helpful for robustness and model utility. (iii) RES and RES perform worse than other methods because of the absence of structural information in training and test phases, highlighting the importance of structural information in GCL. Moreover, the ablation studies on the effectiveness of our approach for training the GNN encoder are in Appendix J.1.

7 Conclusion

In this paper, we present the first work to study the certifiable robustness of GCL. We address the existing ambiguity in quantifying the robustness of GCL to perturbations by introducing a unified definition for robustness in GCL. Our proposed approach, Randomized Edgedrop Smoothing, injects randomized edgedrop noise into graphs to provide certified robustness for GCL on unlabeled data, while minimizing the introduction of spurious edges. Theoretical analysis establishes the provable robust performance of our encoder in downstream tasks. Additionally, we present an effective training method for robust GCL. Extensive empirical evaluations on various real-world datasets show that our method guarantees certifiable robustness and enhances the robustness of any GCL model.

8 Acknowledgements

This material is based upon work supported by, or in part by, the National Science Foundation (NSF) under grant number IIS-1909702, the Army Research Office (ARO) under grant number W911NF21-1-0198, and Department of Homeland Security (DNS) CINA under grant number E205949D. The findings in this paper do not necessarily reflect the view of the funding agencies.

References

- [1] Will Hamilton, Zhitao Ying, and Jure Leskovec. Inductive representation learning on large graphs. Advances in Neural Information Processing Systems, 30, 2017.

- [2] Daixin Wang, Jianbin Lin, Peng Cui, Quanhui Jia, Zhen Wang, Yanming Fang, Quan Yu, Jun Zhou, Shuang Yang, and Yuan Qi. A semi-supervised graph attentive network for financial fraud detection. In IEEE International Conference on Data Mining, pages 598–607, 2019.

- [3] Yuyang Wang, Jianren Wang, Zhonglin Cao, and Amir Barati Farimani. Molecular contrastive learning of representations via graph neural networks. Nature Machine Intelligence, 4(3):279–287, 2022.

- [4] Thomas N. Kipf and Max Welling. Semi-supervised classification with graph convolutional networks. In International Conference on Learning Representations, 2017.

- [5] Keyulu Xu, Weihua Hu, Jure Leskovec, and Stefanie Jegelka. How powerful are graph neural networks? In International Conference on Learning Representations, 2018.

- [6] Teng Xiao, Zhengyu Chen, Donglin Wang, and Suhang Wang. Learning how to propagate messages in graph neural networks. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining, pages 1894–1903, 2021.

- [7] Yanqiao Zhu, Yichen Xu, Feng Yu, Qiang Liu, Shu Wu, and Liang Wang. Deep Graph Contrastive Representation Learning. In ICML Workshop on Graph Representation Learning and Beyond, 2020.

- [8] Fan-Yun Sun, Jordan Hoffman, Vikas Verma, and Jian Tang. Infograph: Unsupervised and semi-supervised graph-level representation learning via mutual information maximization. In International Conference on Learning Representations, 2020.

- [9] Yuning You, Tianlong Chen, Yongduo Sui, Ting Chen, Zhangyang Wang, and Yang Shen. Graph contrastive learning with augmentations. In Advances in Neural Information Processing Systems, pages 5812–5823, 2020.

- [10] Hanjun Dai, Hui Li, Tian Tian, Xin Huang, Lin Wang, Jun Zhu, and Le Song. Adversarial attack on graph structured data. International Conference on Machine Learning, 2018.

- [11] Daniel Zügner, Amir Akbarnejad, and Stephan Günnemann. Adversarial attacks on neural networks for graph data. In the ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, pages 2847–2856, 2018.

- [12] Kaidi Xu, Hongge Chen, Sijia Liu, Pin-Yu Chen, Tsui-Wei Weng, Mingyi Hong, and Xue Lin. Topology attack and defense for graph neural networks: An optimization perspective. In IJCAI, pages 3961–3967, 2019.

- [13] Binghui Wang, Tianxiang Zhou, Minhua Lin, Pan Zhou, Ang Li, Meng Pang, Cai Fu, Hai Li, and Yiran Chen. Evasion attacks to graph neural networks via influence function. arXiv preprint arXiv:2009.00203, 2020.

- [14] Nikola Jovanović, Zhao Meng, Lukas Faber, and Roger Wattenhofer. Towards robust graph contrastive learning. arXiv preprint arXiv:2102.13085, 2021.

- [15] Shengyu Feng, Baoyu Jing, Yada Zhu, and Hanghang Tong. Adversarial graph contrastive learning with information regularization. In Proceedings of The Web Conference, pages 1362–1371, 2022.

- [16] Sixiao Zhang, Hongxu Chen, Xiangguo Sun, Yicong Li, and Guandong Xu. Unsupervised graph poisoning attack via contrastive loss back-propagation. In Proceedings of The Web Conference, pages 1322–1330, 2022.

- [17] Enyan Dai, Wei Jin, Hui Liu, and Suhang Wang. Towards robust graph neural networks for noisy graphs with sparse labels. In WSDM, pages 181–191, 2022.

- [18] Enyan Dai, Limeng Cui, Zhengyang Wang, Xianfeng Tang, Yinghan Wang, Monica Cheng, Bing Yin, and Suhang Wang. A unified framework of graph information bottleneck for robustness and membership privacy. arXiv preprint arXiv:2306.08604, 2023.

- [19] Yongqiang Chen, Han Yang, Yonggang Zhang, MA KAILI, Tongliang Liu, Bo Han, and James Cheng. Understanding and improving graph injection attack by promoting unnoticeability. In International Conference on Learning Representations, 2022.

- [20] Enyan Dai, Minhua Lin, Xiang Zhang, and Suhang Wang. Unnoticeable backdoor attacks on graph neural networks. In Proceedings of the ACM Web Conference 2023, pages 2263–2273, 2023.

- [21] Zhenting Wang, Hailun Ding, Juan Zhai, and Shiqing Ma. Training with more confidence: Mitigating injected and natural backdoors during training. Advances in Neural Information Processing Systems, 35:36396–36410, 2022.

- [22] Zhenting Wang, Kai Mei, Hailun Ding, Juan Zhai, and Shiqing Ma. Rethinking the reverse-engineering of trojan triggers. Advances in Neural Information Processing Systems, 35:9738–9753, 2022.

- [23] Daniel Zügner and Stephan Günnemann. Certifiable robustness and robust training for graph convolutional networks. In the ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, pages 246–256, 2019.

- [24] Binghui Wang, Jinyuan Jia, Xiaoyu Cao, and Neil Zhenqiang Gong. Certified robustness of graph neural networks against adversarial structural perturbation. In the ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, page 1645–1653, 2021.

- [25] Zhidong Gao, Rui Hu, and Yanmin Gong. Certified robustness of graph classification against topology attack with randomized smoothing. In GLOBECOM 2020-2020 IEEE Global Communications Conference, pages 1–6. IEEE, 2020.

- [26] Hongwei Jin, Zhan Shi, Venkata Jaya Shankar Ashish Peruri, and Xinhua Zhang. Certified robustness of graph convolution networks for graph classification under topological attacks. In Advances in Neural Information Processing Systems, pages 8463–8474, 2020.

- [27] Yanqiao Zhu, Yichen Xu, Feng Yu, Qiang Liu, Shu Wu, and Liang Wang. Graph contrastive learning with adaptive augmentation. In Proceedings of the Web Conference 2021, pages 2069–2080, 2021.

- [28] Aleksandar Bojchevski and Stephan Günnemann. Certifiable robustness to graph perturbations. Advances in Neural Information Processing Systems, 32, 2019.

- [29] Aleksandar Bojchevski, Johannes Gasteiger, and Stephan Günnemann. Efficient robustness certificates for discrete data: Sparsity-aware randomized smoothing for graphs, images and more. In International Conference on Machine Learning, pages 1003–1013, 2020.

- [30] Yanqiao Zhu, Yichen Xu, Feng Yu, Qiang Liu, Shu Wu, and Liang Wang. Deep Graph Contrastive Representation Learning. In ICML Workshop on Graph Representation Learning and Beyond, 2020.

- [31] Petar Velickovic, William Fedus, William L Hamilton, Pietro Liò, Yoshua Bengio, and R Devon Hjelm. Deep graph infomax. International Conference on Learning Representations, 2(3):4, 2019.

- [32] Kaveh Hassani and Amir Hosein Khasahmadi. Contrastive multi-view representation learning on graphs. In International Conference on Machine Learning, pages 4116–4126, 2020.

- [33] Yujie Mo, Liang Peng, Jie Xu, Xiaoshuang Shi, and Xiaofeng Zhu. Simple unsupervised graph representation learning. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 36, pages 7797–7805, 2022.

- [34] Teng Xiao, Zhengyu Chen, Zhimeng Guo, Zeyang Zhuang, and Suhang Wang. Decoupled self-supervised learning for graphs. Advances in Neural Information Processing Systems, 35:620–634, 2022.

- [35] Enyan Dai and Suhang Wang. Towards self-explainable graph neural network. In Proceedings of the 30th ACM International Conference on Information & Knowledge Management, pages 302–311, 2021.

- [36] Hangfan Zhang, Jinghui Chen, Lu Lin, Jinyuan Jia, and Dinghao Wu. Graph contrastive backdoor attacks. In Proceedings of the 40th International Conference on Machine Learning, 2023.

- [37] Enyan Dai, Tianxiang Zhao, Huaisheng Zhu, Junjie Xu, Zhimeng Guo, Hui Liu, Jiliang Tang, and Suhang Wang. A comprehensive survey on trustworthy graph neural networks: Privacy, robustness, fairness, and explainability. arXiv preprint arXiv:2204.08570, 2022.

- [38] Daniel Zügner and Stephan Günnemann. Certifiable robustness of graph convolutional networks under structure perturbations. In Proceedings of the 26th ACM SIGKDD international conference on knowledge discovery & data mining, pages 1656–1665, 2020.

- [39] Nikunj Saunshi, Orestis Plevrakis, Sanjeev Arora, Mikhail Khodak, and Hrishikesh Khandeparkar. A theoretical analysis of contrastive unsupervised representation learning. In International Conference on Learning Representations, pages 5628–5637, 2019.

- [40] Zekai Wang and Weiwei Liu. Robustness verification for contrastive learning. In International Conference on Machine Learning, pages 22865–22883, 2022.

- [41] Han Yue, Chunhui Zhang, Chuxu Zhang, and Hongfu Liu. Label-invariant augmentation for semi-supervised graph classification. In Advances in Neural Information Processing Systems.

- [42] Aaron van den Oord, Yazhe Li, and Oriol Vinyals. Representation learning with contrastive predictive coding. arXiv preprint arXiv:1807.03748, 2018.

- [43] Binghui Wang, Meng Pang, and Yun Dong. Turning strengths into weaknesses: A certified robustness inspired attack framework against graph neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2023.

- [44] Stuart Coles, Joanna Bawa, Lesley Trenner, and Pat Dorazio. An introduction to statistical modeling of extreme values, volume 208. Springer, 2001.

- [45] Mathias Lecuyer, Vaggelis Atlidakis, Roxana Geambasu, Daniel Hsu, and Suman Jana. Certified robustness to adversarial examples with differential privacy. In 2019 IEEE Symposium on Security and Privacy (SP), pages 656–672. IEEE, 2019.

- [46] Jeremy Cohen, Elan Rosenfeld, and Zico Kolter. Certified adversarial robustness via randomized smoothing. In International Conference on Machine Learning, volume 97, pages 1310–1320, 2019.

- [47] Prithviraj Sen, Galileo Namata, Mustafa Bilgic, Lise Getoor, Brian Galligher, and Tina Eliassi-Rad. Collective classification in network data. AI magazine, 29(3):93–93, 2008.

- [48] Oleksandr Shchur, Maximilian Mumme, Aleksandar Bojchevski, and Stephan Günnemann. Pitfalls of graph neural network evaluation. arXiv preprint arXiv:1811.05868, 2018.

- [49] Weihua Hu, Matthias Fey, Marinka Zitnik, Yuxiao Dong, Hongyu Ren, Bowen Liu, Michele Catasta, and Jure Leskovec. Open graph benchmark: Datasets for machine learning on graphs. In Advances in Neural Information Processing Systems, volume 33, pages 22118–22133, 2020.

- [50] Christopher Morris, Nils M. Kriege, Franka Bause, Kristian Kersting, Petra Mutzel, and Marion Neumann. Tudataset: A collection of benchmark datasets for learning with graphs. In ICML 2020 Workshop on Graph Representation Learning and Beyond (GRL+ 2020), 2020.

- [51] Simon Geisler, Tobias Schmidt, Hakan Şirin, Daniel Zügner, Aleksandar Bojchevski, and Stephan Günnemann. Robustness of graph neural networks at scale. Advances in Neural Information Processing Systems, 34:7637–7649, 2021.

- [52] Shantanu Thakoor, Corentin Tallec, Mohammad Gheshlaghi Azar, Mehdi Azabou, Eva L Dyer, Remi Munos, Petar Veličković, and Michal Valko. Large-scale representation learning on graphs via bootstrapping. In International Conference on Learning Representations, 2022.

- [53] Aditya Grover and Jure Leskovec. Node2vec: Scalable feature learning for networks. In the ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, 2016.

- [54] Thomas N Kipf and Max Welling. Variational graph auto-encoders. NIPS Workshop on Bayesian Deep Learning, 2016.

- [55] Ting Chen, Simon Kornblith, Mohammad Norouzi, and Geoffrey Hinton. A simple framework for contrastive learning of visual representations. In International Conference on Machine Learning, 2020.

- [56] Ralph Linsker. Self-organization in a perceptual network. Computer, 21(3):105–117, 1988.

- [57] Jordan T Ash, Surbhi Goel, Akshay Krishnamurthy, and Dipendra Misra. Investigating the role of negatives in contrastive representation learning. arXiv preprint arXiv:2106.09943, 2021.

- [58] Susheel Suresh, Pan Li, Cong Hao, and Jennifer Neville. Adversarial graph augmentation to improve graph contrastive learning. In Advances in Neural Information Processing Systems, 2021.

- [59] Zhenqin Wu, Bharath Ramsundar, Evan N Feinberg, Joseph Gomes, Caleb Geniesse, Aneesh S Pappu, Karl Leswing, and Vijay Pande. Moleculenet: a benchmark for molecular machine learning. Chemical science, 9(2):513–530, 2018.

- [60] Xu Zou, Qinkai Zheng, Yuxiao Dong, Xinyu Guan, Evgeny Kharlamov, Jialiang Lu, and Jie Tang. Tdgia: Effective injection attacks on graph neural networks. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining, pages 2461–2471, 2021.

- [61] Yongqiang Chen, Han Yang, Yonggang Zhang, Kaili Ma, Tongliang Liu, Bo Han, and James Cheng. Understanding and improving graph injection attack by promoting unnoticeability. In International Conference on Learning Representations, 2022.

- [62] Jean-Bastien Grill, Florian Strub, Florent Altché, Corentin Tallec, Pierre Richemond, Elena Buchatskaya, Carl Doersch, Bernardo Avila Pires, Zhaohan Guo, Mohammad Gheshlaghi Azar, et al. Bootstrap your own latent-a new approach to self-supervised learning. In Advances in neural information processing systems, pages 21271–21284, 2020.

- [63] R Devon Hjelm, Alex Fedorov, Samuel Lavoie-Marchildon, Karan Grewal, Phil Bachman, Adam Trischler, and Yoshua Bengio. Learning deep representations by mutual information estimation and maximization. In International Conference on Learning Representations, 2019.

- [64] Huijun Wu, Chen Wang, Yuriy Tyshetskiy, Andrew Docherty, Kai Lu, and Liming Zhu. Adversarial examples for graph data: Deep insights into attack and defense. In IJCAI, pages 4816–4823, 7 2019.

- [65] Yanqiao Zhu, Yichen Xu, Qiang Liu, and Shu Wu. An Empirical Study of Graph Contrastive Learning. arXiv.org, September 2021.

- [66] Sihang Li, Xiang Wang, An Zhang, Xiangnan He, and Tat-Seng Chua. Let invariant rationale discovery inspire graph contrastive learning. In Proceedings of the 39th International Conference on Machine Learning, 2022.

Appendix A Details of Related Works

A.1 Graph Contrastive Learning

GCL has recently gained significant attention and shows promise for improving graph representations in scenarios where labeled data is scarce [31, 32, 9, 30, 27, 15, 33, 32]. Generally, GCL creates two views through data augmentation and contrasts representations between two views. GraphCL [9] focuses on graph classification by exploring four types of augmentations including node dropping, edge perturbation, attribute masking and subgraph sampling. GRACE [30] and GCA [27] adapt SimCLR [55] to graphs to maximize the mutual information between two views for each node through a variety of data augmentation. DGI [31] applied InfoMax principle [56] to train a GNN encoder by maximizing the mutual information between node-level and graph-level representations. MVGRL [32] proposes to learn representations by maximizing the mutual information between the cross-view representations of nodes and graphs. Several recent works [16, 36] show that GCL is vulnerable to adversarial attacks. CLGA [16] is an unsupervised poisoning attacks for attack graph contrastive learning. In detail, the gradients of the adjacency matrices for both views are computed, and edge flipping is performed using gradient ascent to maximize the contrastive loss. Despite very few empirical works [9, 15, 14] on robustness of GCL, there are no existing works studying the certified robustness of GCL. In contrast, we propose to provide robustness certificates for GCL by using randomized edgedrop smoothing. To the best of our knowledge, our method is the first work to study the certified robustness of GCL.

A.2 Certifiable Robustness of Graph Neural Networks

Several recent studies investigate the certified robustness of GNNs in the supervised setting [23, 28, 29, 26, 24, 25, 37]. Zügner et al. [23] are the first to explore certifiable robustness with respect to node feature perturbations. Subsequent works[28, 26, 29, 24, 38] extend the analysis to certifiable robustness under topological attacks. For instance, Bojchevski et al. [28] propose a branch-and-bound algorithm to achieve tight bounds on the global optimum of certificates for topological attacks. Bojchevski et al. [29] further adapt the randomized smoothing technique to sparse settings, deriving certified robustness for GNNs. This approach involves injecting random noise into test samples to mitigate the negative effects of adversarial perturbations. Wang et al. [24] further refine this technique to provide theoretically tight robust certificates. Our work is inherently different from them: (i) existing work focues on the certified robustness of GNN under (semi)-supervised setting; while we provide a unified definition to evaluate and certify the robustness of GCL for unsupervised representation learning. (ii) we theoretically provide the certified robustness for GCL in the absence of labeled data, and this certified robustness can provably sustained in downstream tasks. (iii) we design an effective training method to enhance the robustness of GCL.

Appendix B Preliminary of Randomized Smoothing

One potential solution for achieving certified robustness in GNNs is binary randomized smoothing presented in [24]. Specifically, consider a noisy vector in the discrete space

| (B.1) |

where . This indicates that the connection status of the -th entry of will be flipped with probability and preserved with probability . Given a base node or graph classifier that returns the class label with highest label probability, the smoothed classifier is defined as:

| (B.2) |

where denotes the label set, and represents the XOR operation between two binary variables, which combines the structural information of both variables. is the probability that the base classifier predicts class when random noise is added to . According to [24], by injecting multiple to and returning the most likely class (the majority vote), if it is holds that:

| (B.3) |

where is the class with the highest label probability. Then we can guarantee there exists a certified perturbation size such that for any perturbation with , the predictions of will remain unchanged, that is, . Thus, is certifiably robust at when perturbing at most edges. For more deatils of the proof, please refer to [24].

However, directly applying the vanilla randomized smoothing approach to certify robustness in GCL is inapplicable. This approach may require injecting random noise into the entire graph during data augmentation, resulting in an excessive number of noisy/spurious edges that can significantly harm downstream task performance, particularly for large and sparse graphs. In contrast, our proposed method, Randomized Edgedrop Smoothing (RES), aims to prevent the introduction of excessive spurious edges in graphs. RES achieves this by injecting randomized edgedrop noises, where observed edges are randomly removed from the graphs with a certain probability. Additionally, we propose an effective training method for robust GCL. Our experimental results in Sec. 6.4 demonstrate that our method significantly outperforms the ablative method, FLIP, that directly applies vanilla randomized smoothing [24] in GCL. Specifically, on the clean Cora and Pubmed graphs, our method achieves accuracies of 82% and 85.6% respectively. On the Nettack perturbed [11] Cora and Pubmed graphs, our method achieves robust accuracies of 79.4% and 82.5%, respectively. In contrast, FLIP achieves only up to 27% and 42% robust accuracies on the Cora and Pubmed datasets, respectively. These results strongly validate the effectiveness of our RES approach,

Appendix C Detailed Proofs

C.1 Proof of Theorem 1

To begin, we provide a formalization of similarity in relation to the latent class.

Given a GNN encoder and an input sample , which consists of a positive sample and a negative sample an input sample with positive sample and negative sample , according to Def. 1, we assume that represent the latent classes of and in the latent space of , respectively. These latent classes are based on the distribution over . we assume that are the latent class of and in the latent space of , respectively, which are randomly determined based on the distribution on . Similar data are i.i.d. draws from the same class distribution , whereas negative samples originate from the marginal of , which are formalized as follow:

| (C.4) | ||||

Since classes are allowed to overlap and/or be fine-grained [39], this is a plausible formalization of "similarity". The formalization connects similarity with latent class, thereby offering a viable way to employ the similarity between samples under the latent space for defining the probability of being the positive sample of .

Lemma 1 (The Generalized Extreme Value Distribution [44]).

Let be a sequence of i.i.d samples from a common distribution function . Let , indicting the maximum of . Then if and are sequences of constants such that

| (C.5) |

where is a non-degenerate distribution function. It is a member of the generalized extreme value family of distributions, which belongs to either the Gumbel family (Type I), the Fréchet family (Type II) or the Reverse Weibull family (Type III) with their CDFs as follows:

| (C.6) | ||||

where , and are the scale, location and shape parameters, respectively.

Theorem 1. Let be a space around as defined in Eq. 4. Given an input sample and a GNN encoder learnt by GCL in Eq. 1, the probability of being the positive sample of is:

| (C.7) |

where is the cosine similarity function. , are Weibull shape and scale parameters [44].

Proof.

We first assume are i.i.d samples under the latent space . Then, suppose is the set of negative samples of . We assume there exists a continuous non-degenerate margin distribution , which is denoted as follows:

| (C.8) |

where , and is the cosine similarity function. indicates the half of the minimum distance between and .

According to Lemma C.6, we know that there exists in the three types of generalized extreme value family. And since Lemma 1 is applied to the maximum, refer to Eq. (C.8), we transfer the variable to , Since is bounded , let , the marginal distribution of series, for , can be Reverse Weibull family. Therefore, the asymptotic marginal distribution of fits into the Reverse Weibull distribution:

where is the shape parameter and is the scale parameter. Compared to Eq. (C.6), here, because is bound (). We use margin distances of the closest samples with to estimate the parameters and , which means to estimate of the distribution function .

Following Eq. (C.4), if the similarity is larger, is more possible to be from the same latent class as , which implies is the positive sample of . Since the distance between and can be denoted as , the probability of is the positive sample of can be written as:

| (C.9) | ||||

Let , and , since , we can rewrite Eq. (LABEL:eq:prob_margin_old) as (C.7) according to Lemma 1. ∎

C.2 Proof of Theorem 2

For simplicity of notation, let . Then, given a randomized edgedrop noise with the following probability distribution:

| (C.10) |

where is the -entry of noisy vector and is the connection status of -th entry of . Then, we let

| (C.11) |

where and denote the lower bound and the upper bound on , respectively. The formal theorem is presented as follows:

Theorem 2. Let be a clean input and be its perturbed version, where . is the set of negative samples of . If for all :

| (C.12) |

where

| (C.13) |

and denotes the number of remaining edges of after injecting , then with a confidence level of at least , we have:

| (C.14) |

Proof.

Suppose is the set of positive and negative samples of . For any , let for simplicity, we have:

| (C.15) | ||||

As stated by the law of total probability, we have:

| (C.16) | ||||

where represent the intersection of the edge sets and , that is, the set of edges shared in the two structure vectors. Therefore, for , it means that no noisy edge of exists in , which indicates that and are structural identical at all indices, i.e., . Therefore, we can then derive the following equality:

| (C.17) |

By multiplying in both sides of Eq. (C.17), we have:

| (C.18) |

Combining Eq. (C.16) with Eq. (C.18) results in:

| (C.19) | ||||

Since probabilities are non-negative, we can rewrite Eq. (C.19) into the following inequality:

| (C.20) | ||||

Applying the conjunction rule, we have:

| (C.21) | ||||

Since , and implies that remaining edges of and are identical after injecting the random masking noise, we can derive the following probability:

| (C.22) |

where denotes the number of remaining edges, and denotes the certified perturbation size. represents the probability that noise edges are all dropped and edges of are retained in . Therefore, we have:

| (C.23) |

Substituting Eq. (C.23) into Eq. (LABEL:eq:prob_inequality_3), then Eq. (LABEL:eq:prob_inequality_3) can be rewritten as

| (C.24) |

C.3 Proof of Theorem 3

In order to transfer the certified robustness of GCL to downstream tasks, we first introduce two loss functions, namely, unsupervised loss and supervised loss. Subsequently, we introduce a lemma to establish the relationship between GCL and downstream tasks. Finally, a theorem is proposed to prove that the certified robustness of GCL is provably preserved in downstream tasks.

Unsupervised Loss

Given an input sample with its positive sample and negative samples . Let be the GNN encoder based on GCL to obtain representations. The unsupervised loss for at point is defined as:

| (C.27) |

where is logistic loss according to [57, 39]. Note that this loss is essentially equivalent to the InfoNCE loss [42, 57] shown in Eq. 1, which is widely-used for GCL.

Supervised Loss

Linear evaluation, which learns a downstream linear layer after the base encoder, is a common way to evaluate the performance of GCL model in downstream tasks. Let be denoted as the set of latent classes, where . We consider the standard supervised learning tasks that classify a data point into one of the classes in . To connect the GCL task with the downstream classification task, the supervised loss of downstream classifier at is defined as:

| (C.28) |

where is the same as the loss function used in the unsupervised loss in Eq. (C.27). To evaluate the learned representations on downstream tasks, we typically fix and train a linear classifier on the top of the encoder . Therefore, the supervised loss of at at is defined as:

| (C.29) |

Lemma 2 (Connection between GCL and Downstream Tasks [39]).

Given a input sample with its positive sample and the set of negative samples . is the set of latent class with distribution . The latent class of is denoted as . Suppose any has the latent class . Then we have

| (C.30) |

where , which indicates the expectation that .

Proof.

Note that the above bound is similar to Lemma 4.3 in [39]. By leveraging our Theorem 2, we can establish the connection between GCL and downstream tasks, and use this connection to prove the transferability of the certified robustness of GCL to downstream tasks.

Theorem 3. Given a GNN encoder trained via GCL and an clean input . and are the positive sample and the set of negative samples of , respectively. Let and denote the latent classes of and , respectively. Suppose is the downstream classifier that classify a data point into one of the classes in . Then, we have

| (C.32) |

Proof.

Since is a clean input, according to Eq. (C.4) and Sec. 4.1, the positive pair a pair of similar data that come from the same class distribution and they have the following relationship:

| (C.33) |

Then, based on Theorem 1, we know that is monotonically increasing as increases. Therefore, we have

| (C.34) |

According to Eq. (LABEL:eq:sim_compare), we can obtain that:

| (C.35) |

and the equivalent form of Eq. (C.35) is given as:

| (C.36) |

where is the -normalization operation on vector . Then, we can obtain:

| (C.37) |

To relate the robustness of GCL to that of downstream tasks, we select the negative samples whose latent class is different from and obtain the following relationship:

| (C.38) |

Appendix D Training Algorithms

We summarize the training method of Sec. 5.1 for training smoothed GNN encoders in Algorithm 1. Specifically, at each training epoch, we first generate two augmented graphs and via and , respectively, where and are two graph augmentations sampled from an augmentation pool . The graph augmentation includes edge perturbation, feature masking, node dropping, etc. (line 3). Then we inject randomized edgedrop noise to one of the augmented graphs (line 4). From line 5 to line 10, we train the GNN encoder through GCL by maximizing the agreement of representations in these two views. In detail, we apply to obtain node or graph representations and from and , respectively (line 5), then we do gradient descent on based on Eq. (1).

Appendix E Discussions

E.1 Difference between RES and Edge-dropping Augmentations in [9] and [58]

Our RES is inherently different from the random edge-dropping augmentation in GraphCL [9] and the learnable edge-dropping augmentation in ADGCL [58]: (i) Random edge-dropping is an augmentation method to generate different augmented views and maximize the agreement between views, and the learnable edge-dropping [58] is also an augmentation method to enhance downstream task performance. However, RES is devised from the robustness perspective, providing certifiable robustness and enhancing the robustness of any GCL method. (ii) While random edge-dropping and learnable edge-dropping are only applied to augment graphs for GCL, RES extends beyond this. Following the generation of two augmented views as shown in Sec. 5.1, RES injects randomized edgedrop noise into one augmented view during GCL training. Then, it performs randomized edgedrop smoothing in the inference phase through Monte Carlo, as shown in Sec. 5.2. Specifically, for inference using RES, samples of are drawn by injecting randomized edge-drop noise to times. The final prediction is from Monte Carlo, selecting the predictions with the highest frequency in samples.

To demonstrate the effectiveness of RES, we further compare ADGCL with RES-GraphCL on MUTAG and PROTEINS. We also add RES-ADGCL into comparisons. More details are shown in Appendix. I.4.

E.2 Additional Details of Concatenation Vector

The concatenation vector is a vector to depict the structure of the node/graph for learning representations. For node-level tasks, it represents the connection status of any pair of nodes in the K-hop subgraph of the node . For graph-level tasks, it represents the connection status of any pair of nodes in the graph . To construct such a vector, we select the upper triangular part of the adjacency matrix of the K-hop subgraph of or the graph and flatten it into the vector, where each item in this vector can denote the connection status of any pair of nodes in the K-hop subgraph of the node or the graph .

The motivation for using this notation is that since we focus on perturbations on the graph structure in this paper, we treat the feature vector of as a constant and use the adjacency matrix of the K-hop subgraph of the node or the adjacency matrix of the graph to represent the structure of the node or graph. For simplicity and clarity, given a GNN encoder and the concatenation vector of the node or the graph as above, we then omit the node feature matrix and simply write the node ’s representation and the graph ’s representation as . Therefore, we use a unified notation to denote the node or the graph , and further facilitate our theoretical derivations.

E.3 Definition of Well-trained GNN encoders

The well-trained GNN encoder is defined as an encoder that can extract meaningful and discriminative representations by mapping the positive pairs closer in the latent space while pushing dissimilar samples away.

To evaluate whether a GNN encoder is well trained or not mathematically, we introduce criteria based on the similarity between node/graph representations in the latent space. For each positive pair with its negative samples , we clarify that is well-trained at if the following inequality is satisfied:

| (E.42) |

where is a cosine similarity function. This implies that can effectively discriminate from all its negative samples in and learn the meaningful representations for in the latent space. Therefore, based on Eq. (E.42), we can further extend the criteria for certifying robustness in GCL, which is shown in Definition 2.

E.4 Rationale behind Setting High Values for

The proposed robust encoder training method in Sec. 5.1 improves the model utility of GCL. Even setting a large for RES, we can still obtain high robust accuracy on clean graphs, further leading to high certified accuracies.

Specifically, as shown in Sec. 6.1, certified accuracy denotes the fraction of correctly predicted test nodes/graphs whose certified perturbation size is not smaller than the given perturbation size. It implies that these certified robust samples should also be correctly predicted by RES in the clean datasets. However, as the reviewer said, introducing randomized edgedrop solely to test samples during the inference could potentially hurt downstream task performance and further negatively impact the certified robustness based on Eq.(8). Thus, we propose robust encoder training for RES in Sec. 5.1 by injecting randomized edgedrop noise into one augmented view during GCL . It ensures the samples with randomized edgedrop noises align in latent class with clean samples under the encoder, thereby mitigating the negative impacts of such noises and further benefiting the robustness and certification of RES.

Appendix F Code

Our code is available at https://github.com/ventr1c/RES-GCL.

Appendix G Additonal Details of Experiment Settings

G.1 Dataset Statistics

For node classification, we conduct experiments on 4 public benchmark datasets: Cora, Pubmed [47], Amazon-Computers [48] and OGB-arxiv [49], Cora and Pubmed are small citation networks. Amazon-Computers is a network of goods represented as nodes, and edges between nodes represent that the two goods are frequently bought together. OGB-arxiv is a large-scale citation network. For graph classification, we use 3 well-known dataset: MUTAG, PROTEINS [50] and OGB-molhiv [49]. MUTAG is a collection of nitroaromatic compounds. PROTEINS is a set of proteins that are classified as enzymes or non-enzymes. OGB-molhiv is a dataset contains molecules, which is adopted from MoleculeNet [59]. Among these datasets, for Cora and Pubmed, we evaluate the models on the public splits. Regarding to the other 5 datasets, Coauthor-Physics, OGB-arxiv, MUTAG, PROTEINS and OGB-molhiv, we instead randomly select , , and nodes/graphs for the training, validation and test, respectively. The statistics details of these datasets are summarized in Table 2.

| Datasets | #Graphs | #Avg. Nodes | #Avg. Edges | #Avg. Feature | #Classes |

|---|---|---|---|---|---|

| Cora | 1 | 2,708 | 5,429 | 1,443 | 7 |

| Pubmed | 1 | 19,717 | 44,338 | 500 | 3 |

| Coauthor-Physics | 1 | 34,493 | 495,924 | 8415 | 5 |

| OGB-arxiv | 1 | 169,343 | 1,166,243 | 128 | 40 |

| MUTAG | 188 | 17.9 | 39.6 | 7 | 2 |

| PROTEINS | 1,113 | 39.1 | 145.6 | 3 | 2 |

| OGB-molhiv | 41,127 | 25.5 | 27.5 | 9 | 2 |

G.2 Attack Methods

One of our goals is to show RES is robust to various structural noises, we evaluate RES on 4 types of structural attacks in evasion setting, i.e., Random attack, Nettack [11], PRBCD [51], CLGA [16] for both node and graph classification. The procedure of the evasion attack against GCL in transductive node classification is shown in Algorithm 2. The details of these attacks are described following:

-

1.

Random Attack: We randomly add some noisy edges to the graphs for node classification and graph classification, respectively, until the attack budget is satisfied. Specifically, we consider two kinds of attack settings for node classification, that is, global random attack and targeted random attack. For global random attack, which is used in Sec. 6.2, we randomly inject some fake edges (10% in our setting) into the whole graph. For targeted attack, we randomly connect some fake edges to the direct neighbors of target nodes.

-

2.

Nettack [11]: It is a targeted attacks for node classification that manipulate the graph structure to mislead the prediction of target nodes.

-

3.

PRBCD [51]: It is a scalable global attack for node classification that aims to decrease the overall accuracy of the graph.

-

4.

CLGA [16]: It is an unsupervised gradient-based poisoning attack targeting graph contrastive learning for node classification. Since we focus on evasion attacks, we directly use the poisoned graph generated by CLGA in the downstream tasks to evaluate the performance and regard it as an evasion global attack.

Moreover, we further consider two graph injection attack methods, i.e., TDGIA [60] and AGIA [61], as baselines to demonstrate the robustness of RES. The details of experimental results are shown in Sec. I.3.

G.3 Compared Methods

We select four state-of-the-art GCL methods and employ RES on them to train smoothed GNN encoders:

-

1.

GRACE [30]: It is node-level GCL method which creates multiple augmented views by perturbing graph structure and masking node features. Then it encourages consistency between the same nodes in different views.

-

2.

BGRL [52]:Insipred by BYOL [62], it is performs graph contrastive learning that does not require negative samples. Specifically, it applies two graph encoders (i.e., online and targeted encoders), and update them iteratively to make the predicted representations closer to the true representations for each node.

- 3.

- 4.

Moreover, we also compare RES-GCL with several representative and state-of-the-art graph representation learning methods and robust GCLs against structural noises:

-

1.

Node2Vec [53]: It is a traditional unsupervised methods. Its key idea is to perform random walks on the graph to generate sequences of nodes that capture both the local and global structure of the graph.

-

2.

GAE [54]: It is a representative unsupervised learning method which learns a low-dimensional representation of a graph by encoding its nodes and edges into a vector space, and then decoding this representation back into a graph structure. It is trained to minimize the reconstruction error between the original graph and the reconstructed graph.

-

3.

GCL-Jaccard: It is implemented by removing dissimilar edges based on Jaccard similarity before and after the training phase, respectively, which is inspired from GCN-Jaccard [64].

-

4.

Ariel [15]: It is a robust GCL method which uses an additional adversarial graph view in graph contrastive learning to improve the robustness. An information regularizer is also applied to stabilize its performance.

G.4 Implementation details

A 2-layer GCN is employed as the backbone GNN encoder and a common used linear evaluation scheme [31] is adopt in the downstream tasks. More specifically, each GNN encoder is firstly trained via GCL method and then the resulting embeddings are used to train and test a -regularized logistic regression classifier. GRACE is implemented based on the source code published by authors 111https://github.com/CRIPAC-DIG/GRACE. BGRL and DGI methods are implemented based on PyGCL library [65]. All hyperparameters of all methods are tuned based on the validation set for fair comparison. All models are trained on an A6000 GPU with 48G memory.

G.5 Attack Settings

In this paper, we assume that the attacker can conduct attack in two settings: transductive node classification and inductive graph classification. These two settings are described below:

-

•

Transductive Setting: In this setting, test instances (nodes/graphs) are visible during both training the GNN encoder and inference in downstream tasks. Specifically, we first train a GNN encoder via GCL on a clean dataset that includes test nodes to generate node representations. Then, an attacker adds perturbations to the test nodes, causing to produce poor representations that degrade performance on downstream tasks. For example, an attacker may attempt to manipulate a social network by injecting fake edges, which could affect the performance of a well-trained in tasks such as community detection, influential node identification, or link prediction.

-

•

Inductive Setting: In this setting, test instances only appear in downstream tasks and are invisible during the training phase. This setting is similar to the transductive setting, but the test nodes/graphs are not seen during training. This scenario commonly arises in real-world applications such as new drug discovery, where an attacker may attempt to manipulate a new molecular graph in the test set to mislead the model, resulting in incorrect predictions in downstream tasks.

Appendix H Additional Results of the Performance of Certificates

In this section, we extend the experiments in Sec. 6.3 and present comprehensive results on the performance of robustness certificates. Our aim is to demonstrate that RES can effectively provide certifiable robustness for various GCL methods. We select GRACE, BGRL, DGI, and GraphCL as the target GCL methods and integrate them with RES. We perform experiments on Cora, Pubmed, Coauthor-Physics, and OGB-arxiv for node classification tasks, as well as MUTAG, PROTEINS, and OGB-molhiv for graph classification tasks. Certified accuracy is selected as the evaluation metric. Specifically, certified accuracy [46, 24] denotes the fraction of correctly predicted test nodes/graphs whose certified perturbation size is no smaller than the given perturbation size. The complete results are reported in Fig. 4 to Fig. 8.

Appendix I Additional Results of the Performance of Robustness