CEMFormer: Learning to Predict Driver Intentions from In-Cabin and External Cameras via Spatial-Temporal Transformers

Abstract

Driver intention prediction seeks to anticipate drivers’ actions by analyzing their behaviors with respect to surrounding traffic environments. Existing approaches primarily focus on late-fusion techniques, and neglect the importance of maintaining consistency between predictions and prevailing driving contexts. In this paper, we introduce a new framework called Cross-View Episodic Memory Transformer (CEMFormer), which employs spatio-temporal transformers to learn unified memory representations for an improved driver intention prediction. Specifically, we develop a spatial-temporal encoder to integrate information from both in-cabin and external camera views, along with episodic memory representations to continuously fuse historical data. Furthermore, we propose a novel context-consistency loss that incorporates driving context as an auxiliary supervision signal to improve prediction performance. Comprehensive experiments on the Brain4Cars dataset demonstrate that CEMFormer consistently outperforms existing state-of-the-art methods in driver intention prediction.

I Introduction

Over the past decade, Advanced Driver-Assistance Systems (ADAS) have emerged as an invaluable asset in the automotive industry, significantly enhancing driver safety by seamlessly collaborating with human operators to deliver comprehensive traffic information and timely alerts for hazardous maneuvers. ADAS technologies can aid drivers by identifying potential threats through passive assistance or providing proactive guidance to navigate through safety-critical scenarios by leveraging vehicle-to-everything (V2X) communications [1] and augmented reality (AR) [2].

In response to the growing demand for more advanced safety features, automotive manufacturers have started to develop cutting-edge ADAS capable of anticipating a driver’s intentions before they execute a maneuver, thereby preventing accidents. These sophisticated systems rely on a combination of sensors for comprehensive analysis. However, accurately predicting driver intentions remains a formidable challenge, primarily due to several contributing factors.

-

•

Complexity of real-world driving scenarios. Numerous factors can hinder a vehicle’s ability to perceive its surrounding traffic environment, such as weather, road conditions, lighting, or visibility. Furthermore, traffic situations are dynamic and subject to rapid changes, necessitating continuous monitoring and adaptation.

-

•

Constraints of temporal context Driver intention anticipation differs from offline video understanding tasks like action detection, which assume the entire video is accessible during inference. Instead, ADAS must process data causally and in real-time, introducing unique challenges.

-

•

Unpredictability of human behavior. Human drivers can exhibit unpredictable behavior in a wide range of driving situations, influenced by factors such as distractions, emotional states, inattention, lack of experience, or poor decision-making. This unpredictability can result in hazardous situations on the road, making it difficult for ADAS to accurately anticipate and respond to their actions.

Prior research on driver intention anticipation has explored various methods to address these challenges. For instance, following the introduction of the mobility digital twin concept [3], Liao et al. [4] further developed a driver digital twin to conduct online prediction of lane-change intentions of drivers in a personalized manner. Wang et al. [5] proposed a nonlinear auto-regressive neural network to predict the speed-tracking behaviors of various drivers. Gebert et al. [6] suggested employing 3D ResNet-101 models to predict driver intentions in an end-to-end fashion. Rong et al. [7] introduced a ConvLSTM-based auto-encoder to encode traffic scene motion and fuse features extracted from dual camera inputs using a deep-net classifier.

The aforementioned methods have made significant progress in the field of driver intention anticipation, but they do present certain limitations. Firstly, these approaches mainly focus on combining in-cabin and front-facing view information after processing the data separately during the early stages. This strategy may lead to suboptimal performance, as the individual processing steps might not be specifically designed for optimal integration of information from both sources. Secondly, these methods overlook the importance of maintaining consistency between their predictions and the prevailing driving context. Taking traffic context into account can help alleviate the challenge of reducing uncertainty in predicting driver behaviors. For example, if the vehicle occupies the rightmost lane, the system should not anticipate a lane change to the right.

To address these challenges, we introduce a cross-view episodic memory transformer, named CEMFormer, which effectively aggregates spatio-temporal features from both in-cabin and external cameras as well as historical memory features. The memory representations generated by CEMFormer efficiently support the anticipation of driver intentions. Our CEMFormer model contains three key designs: (1) a spatial cross-view encoder that combines spatial features from in-cabin and external camera views, (2) an episodic memory module that fuses spatial and temporal information through self-attention mechanisms [8, 9], and (3) a novel context-consistency loss that utilizes traffic context as supplementary training cues for enhanced prediction accuracy.

Our primary contributions are as follows:

-

•

We propose CEMFormer, a spatial-temporal transformer encoder that fuses multi-camera and multi-timestamp input into episodic memory representations, addressing the complexities of real-world driving scenarios and constraints of temporal context.

-

•

We develop a context-consistent loss that enhances the model’s ability to employ traffic context as an auxiliary supervision signal during training, reducing uncertainty in predicting driver intentions.

-

•

We assess the proposed CEMFormer on the Brain4Cars benchmark. Our CEMFormer consistently achieves superior performance compared to previous state-of-the-art methods. For instance, CEMFormer attains F1 score with approximately fewer parameters, outperforming the previous best method by 2.8 points. Furthermore, the lightweight model architecture allows for an inference speed of 15 FPS, making it suitable for real-time deployment.

II Methodology

II-A Preliminaries

Given multi-view streaming camera inputs and traffic-related context information, our objective is to predict a future event based on observations up to the present time. These future events fall into one of several predefined categories. We denote the input space as , the output space as , and the context space as . During training, a set of training samples is provided, where represents the observation at time . is the ground-truth label of the event that occurs at the end of the video at time . is the vector containing auxiliary context information, which is utilized during training. During inference, however, the algorithm processes each incoming video frame in an online manner. Specifically, the online prediction system receives at each time step, with the goal of predicting the event that will occur at time given only past and current observations , where .

We propose a novel transformer-based framework for online driver intention anticipation, designed to effectively aggregate spatio-temporal features from in-cabin and external cameras as well as episodic memory representations using attention mechanisms. As illustrated in Fig. 1, CEMFormer comprises encoder layers, each adopting the conventional structure from vision transformers [10] with some tailored designs.

left lane change

left most lane

right lane change

right most lane

left turn

( left most lane) right most lane

right turn

( right most lane) left most lane

II-B Multi-View Embeddings

Firstly, distinct patch projection layers are applied to each input view. Suppose we have the observation at time , which contains views:

| (1) |

where and represent the height and width of the input image from view , and denotes the number of channels. For simplicity, we omit in this paragraph. The image is then divided into a grid of patches, each with a size of , where is the patch size and is the number of patches. The patches are subsequently flattened and linearly projected to -dimensional tokens:

| (2) |

where is the projection matrix, and represents the position embedding. Inputs from multiple views are then concatenated into a combined sequence :

| (3) |

which is subsequently fed into the spatial-temporal encoder.

II-C Episodic Memory

Episodic memory pertains to the capacity to recall latent representations from the past time series [11]. CEMFormer incorporates this concept to process camera inputs online, allowing it to retain and reference prior context while facilitating information flow between iterations. Consequently, CEMFormer can make predictions based on both previous and current frames, resulting in enhanced robustness and accuracy.

Specifically, episodic memory embeddings are prepended to the input sequence:

| (4) |

The spatial-temporal encoder then aggregates the features from in-cabin and external cameras as well as episodic memory representations by stacking transformer blocks:

| (5) |

In the last layer, to ensure information flow between frames, the current episodic memory representations are passed to the input sequence of the next moment:

| (6) |

II-D Context-Consistency Loss

The episodic memory representations at the output of the spatial-temporal encoder are fed into a prediction head to generate the final outputs. Directly optimizing the standard cross-entropy loss can lead to incorrect predictions of some scenarios into categories that conflict with the traffic context. To address this issue, we propose a new context-consistency (CC) loss, which applies a penalty for making such wrong predictions. Specifically, let be the set of contradicting scenarios, which is a subset of false positive cases. The CC loss can be defined as:

| (7) |

where is the current traffic context, is a binary indicator, and is the predicted probability of event given by the model.

Following [12], we take advantage of the exponentially growing loss to encourage the model to predict early while ensuring that it does not over-fit the training data when there is insufficient context for anticipation. Combining the cross-entropy loss, the context-consistent loss, and the exponentially growing loss, we refer to the unified loss function as the joint Context-Consistent cross entropy loss:

| (8) |

Since the derivatives with respect to all parameters can be computed, we can effectively train the proposed CEMFormer using an off-the-shelf optimizer to minimize the loss function with back-propagation through time (BPTT) as in [13].

III Experiments and Results

| Data Source | Method | Param.(M) | Accuracy | |

|---|---|---|---|---|

| in-cabin only | Gebert et al. [6]∗ | 240.26 | 0.8310 0.0250 | 0.8170 0.0260 |

| Rong et al. [7]∗ | 46.22 | 0.7740 0.0002 | 0.7549 0.0002 | |

| CEMFormer (ours) | 86.6 | 0.8447 0.0598 | 0.8266 0.0540 | |

| external only | Gebert et al. [6]∗ | 240.26 | 0.5320 0.0500 | 0.4340 0.0900 |

| Rong et al. [7]∗ | 160.41 | 0.6087 0.0001 | 0.6638 0.0003 | |

| CEMFormer (ours) | 86.6 | 0.6475 0.0282 | 0.6631 0.0219 | |

| in-cabin external | Gebert et al. [6]∗ | 325.52 | 0.7550 0.0240 | 0.7320 0.0220 |

| Rong et al. [7]∗ | 212.92 | 0.8398 0.0001 | 0.8430 0.0001 | |

| CEMFormer (ours) | 87.3 | 0.8537 0.0295 | 0.8709 0.0023 |

III-A Dataset and Setup

We assess the performance of our proposed method for maneuver anticipation using the publicly available Brain4Cars dataset [14]. This dataset comprises 594 video clips, showcasing both in-cabin and forward-facing views of a vehicle111Though it was reported that the dataset includes 700 videos, a portion of them are missing in the public release.. The dataset encompasses five driver maneuver categories, which defines the output space for our experiments {go straight, left lane change, left turn, right lane change, right turn}. Lane changes and turns are annotated with the maneuver’s start time, corresponding to when the wheel touches the lane marking or when the vehicle begins to yaw at the intersection, respectively [12].

Based on the available traffic context information in the dataset, we define the traffic context vector , as a three-dimensional binary vector. Each dimension represents whether the ego vehicle is in the left-most lane, in the right-most lane, and near an intersection, respectively. Additionally, the set of contradicting scenarios in Eq. 7 is formally defined as (left lane change, ), (right lane change, ), (left turn, ), (right turn, ), (left turn, ), (right turn, ), and is visualized in Fig. 2.

To ensure the reliability of the results, we employ a 5-fold cross-validation in all our experiments, which is consistent with previous studies. The final evaluation metrics include the average accuracy and F1 score, along with their standard deviations.

| Module | Accuracy | ||

|---|---|---|---|

| EM | CC | ||

| ✗ | ✗ | 0.7640 0.0059 | 0.7599 0.0161 |

| ✗ | ✓ | 0.7751 0.0424 | 0.8041 0.0296 |

| ✓ | ✗ | 0.8176 0.0051 | 0.8143 0.0262 |

| ✓ | ✓ | 0.8537 0.0295 | 0.8709 0.0023 |

| K | Accuracy | |

|---|---|---|

| 2 | 0.8304 0.0187 | 0.8623 0.0010 |

| 4 | 0.8537 0.0295 | 0.8709 0.0023 |

| 8 | 0.8511 0.0473 | 0.8681 0.0172 |

III-B Implementation Details

For our experiments, we initialize our model using the DINO ViT-B/16 [15] pre-trained weighs, which was trained on ImageNet [16]. We adopt AdamW [17] as the optimizer with a weight decay of 0.05 and apply a cosine learning rate scheduler [18] with the base learning rate set to . Both the in-cabin and external vehicle camera streams have a resolution of . With a patch size of , this results in a total of 392 patches. We empirically set the number of memory tokens (Ablation study is in Sec. III-D). For data augmentation, we divide each video into segments of equal duration and randomly sample one frame from each segment, where is the video length in seconds, drawing inspiration from [19]. As a frame-level data augmentation strategy, we also employ simple random crop with random horizontal flip, introduced in [20]. Owing to the limited size of the Brain4Cars dataset, we only fine-tune the multi-head self-attention layers in the spatial-temporal encoder, as recommended in [21]. Our model is trained for 200 epochs on a single NVIDIA RTX 3090 Ti GPU with a batch size of 10.

III-C Comparison with State-of-the-Art

Tab. I presents the comparison results on the Brain4Cars test set. We compare CEMFormer to two other widely used end-to-end methods [6, 7], as they have outperformed traditional machine learning approaches in driver intention prediction tasks222For a fair comparison, we do not consider results from [12] as part of its training data is not accessible.. CEMFormer achieves the highest accuracy of 85.37% and an F1 score of 87.09% with the multi-view inputs. Furthermore, we observe that CEMFormer surpasses the other two methods in terms of the number of parameters. These results indicate that the proper use of an episodic memory-guided architecture allows the model to learn complex spatial-temporal relationships from both in-cabin and external driving views. This is in contrast to previous work such as [6], which claimed that outside views were not helpful for driver intention prediction tasks. Additionally, our model significantly reduces the number of parameters compared to previous methods while maintaining a compact size, even when incorporating additional views. This demonstrates the scalability of the proposed model.

Multi-View with Context Consistency

Multi-View with Episodic Memory

Single-View with EM and CC

Single-View with EM and CC

Multi-View with Context Consistency

Cross-View with Episodic Memory

Single-View with EM and CC

Multi-View with EM and CC

III-D Ablation Study

Module Analysis

To investigate the effect of different modules, we conduct ablation experiments to further verify the impact of the episodic memory (EM) and the context-consistency loss (CC) by modifying one component while keeping the other one fixed. Both in-cabin and external camera views are used in the experiments. According to the results in Tab. II, the episodic memory mechanism contributes a 4.42% improvement in F1 score compared to the baseline model. Meanwhile, the context-consistency loss results in a 5.44% improvement in F1 score. The two components complement each other in terms of performance and variance, and when used together, they achieve an accuracy of 85.37% and an F1 score of 87.09%. We also visualize the contribution of each module in Sec. III-E.

Influence of Episodic Memory Tokens

We present the results of selecting the number of episodic memory tokens based on the comparison experiment in Tab. III. The best performance in accuracy and F1 score is achieved when . We also notice that larger values of lead to higher variance. The parameter is task-specific and is influenced by the number of input views and the complexity of the task.

Model Latency

We evaluate the latency of the proposed CEMFormer model with both single-view or double-view inputs. The latency is measured on an RTX 3090 Ti GPU. The results presented in Tab. IV demonstrates that the CEMFormer model achieves satisfactory real-time performance, with single-view and double-view inputs achieving 22.08 and 15.56 frames per second, respectively. This performance level indicates that the model’s computational cost is suitable for real-time applications.

| Data Source | Parameters | Frames per second (FPS) |

|---|---|---|

| Single-view | 86.6M | 22.08 |

| Dual-view | 87.3M | 15.56 |

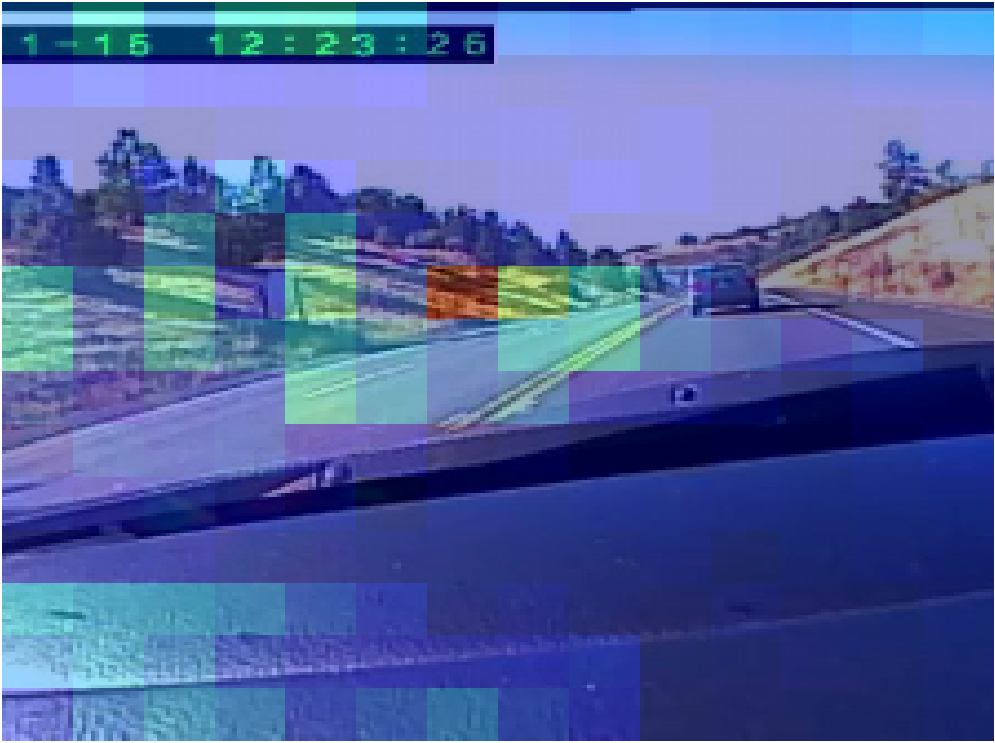

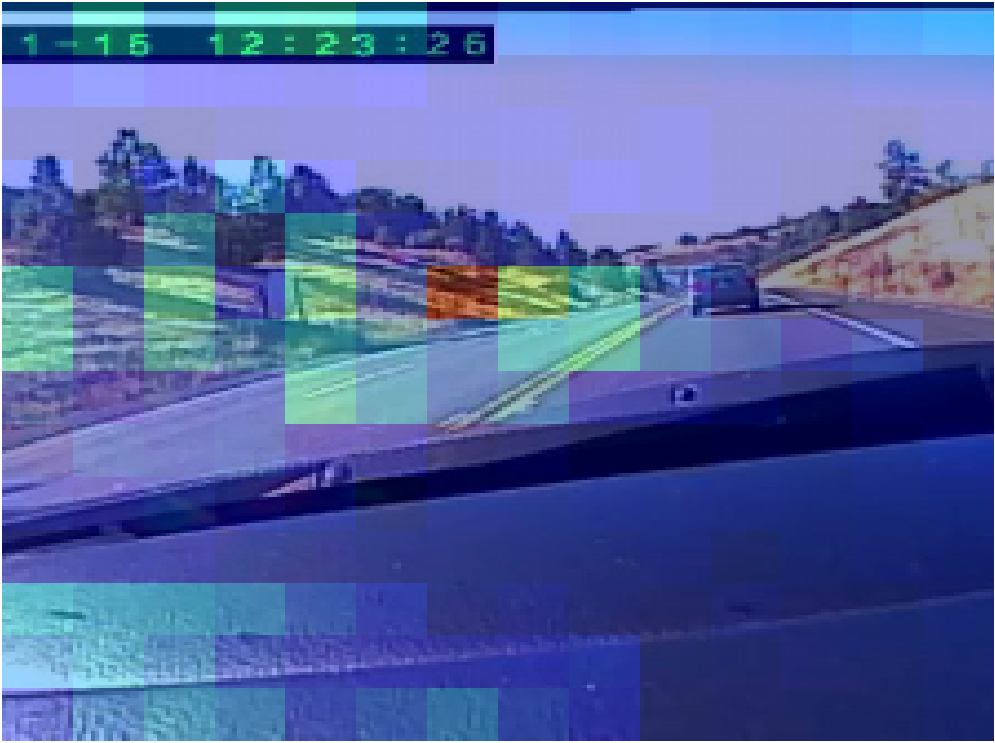

III-E Qualitative Results & Interpretability

To offer a thorough understanding of the CEMFormer model’s performance during online inference, we present detailed visualizations that demonstrate how the individual transformer modules contribute to identifying crucial regions within multi-view streams. The well-trained CEMFormer model performs remarkably in most scenarios, as illustrated in Fig. 3 using one episode as an example.

We visualize attention maps for both in-cabin and external views in the last layer of the spatial-temporal encoder. Attention scores are employed to generate attention maps, which are then superimposed onto the original images. These attention scores are reshaped according to their original spatial positions to produce the attention maps, with red representing the highest level of focus on a specific region. Images displayed in the same row show results from the same model. The following observations can be made based on the visualization results:

Episodic Memory

Comparing the first and last rows, it becomes evident that when episodic memory is unavailable, image frames are encoded independently, preventing the model from fusing historical information. In contrast, applying episodic memory allows the model to fuse historical data, gradually reducing uncertainty as the temporal context increases. This observation holds for both in-cabin and external views.

Context Consistency

Comparing the second and last rows, we can see that without context consistency, the attention map appears more divergent, indicating suboptimal performance. This observation supports our claim that the context consistency loss functions as a regularizer, assisting the model in reducing uncertainty and concentrating on the most important regions. This consistency is observed in both in-cabin and external views.

Multi-View

When comparing single-view attention maps to those of multiple views, the latter exhibits superior outcomes. In the in-cabin view, the single-view model’s focus is inconsistent, initially concentrating on the driver’s face before shifting to the steering wheel. In contrast, the cross-view results prioritize the driver’s face correctly. Likewise, in the external view, the single-view results pay more attention to off-road regions, while the cross-view results focus on the road—particularly the center line.

IV Related Work

Video Understanding Models

Video understanding models are designed to enable computers to comprehend and interpret video content in a manner similar to that of humans. These models process videos as sequences of images and employ various techniques, such as handcrafted features [22, 23], recurrent neural networks [24, 25], convolutional neural networks[26, 27], and transformer-based architectures [28, 29], to extract and analyze the spatio-temporal features from videos. They then utilize this data to make predictions about the video content.

Assistive Features for Vehicles

Modern vehicles are equipped with cameras and other sensors that continuously monitor the surrounding environment. These sensors, using multi-sensory fusion, provide various assistive features such as lane keeping, forward collision avoidance, and adaptive cruise control. These features not only warn drivers of potentially hazardous maneuvers but also enhance the overall driving experience. While driver monitoring for distraction and drowsiness has been extensively researched [30, 31, 32], our work focuses on building next-generation ADAS capable of anticipating maneuvers before they occur [33]. This capability will not only improve current ADAS and driver monitoring techniques but also significantly enhance driver safety.

V Discussion and Conclusion

In this work, we have proposed CEMFormer, a framework designed to predict driver intentions using in-cabin and external camera inputs. The model efficiently aggregates spatial-temporal information and employs the novel context-consistency loss to incorporate driving context as an auxiliary supervision signal during training. Despite these advancements, there are some limitations and future directions worth exploring.

The design of the episodic memory module is based on the assumption that the most informative contextual cues appear shortly (typically less than 5 seconds) before the maneuver [34]. This assumption may not always be accurate, which could limit the model’s predictive capabilities in certain scenarios.

We observed a modest accuracy improvement when incorporating external data into in-cabin camera data. One possible explanation for this limited improvement could be that when both in-cabin and external cameras are available, the prediction relies predominantly on the in-cabin data, which provides information about the driver’s behavior, such as head and eye movements. The external view offers supplementary traffic information, which might not be directly related to predicting driver intentions. As a result, the advantage of combining the two data streams may be diminished by the noise introduced by irrelevant information. Alternatively, it is possible that processing the forward-facing camera data is more challenging than the in-cabin camera data due to the dynamic nature of the traffic environment.

Moreover, we demonstrated that incorporating traffic context information in the form of a finite set of traffic context encodings can enhance driver intention prediction performance. However, leveraging traffic navigation data collected from High Definition (HD) maps could potentially provide even greater benefits, as it delivers centimeter-level offline location services for ADAS and minimizes environmental interference with real-time streaming camera data [35]. Consequently, future work could involve incorporating additional sensors or integrating with existing HD map systems to further improve the proposed CEMFormer model.

In conclusion, our work contributes to ongoing efforts to improve traffic safety and paves the way for further advancements in driver intention prediction and personalized driving assistance systems.

Acknowledgment

This work is funded by the Digital Twin Roadmap of InfoTech Labs, Toyota Motor North America. The contents of this paper only reflect the views of the authors, who are responsible for the facts and the accuracy of the data presented herein. The contents do not necessarily reflect the official views of Toyota Motor North America.

References

- [1] Z. Wang, X. Liao, X. Zhao, K. Han, P. Tiwari, M. J. Barth, and G. Wu, “A Digital Twin Paradigm: Vehicle-to-Cloud Based Advanced Driver Assistance Systems,” in 2020 IEEE 91st Vehicular Technology Conference (VTC2020-Spring), May 2020, pp. 1–6, iSSN: 2577-2465.

- [2] Z. Wang, K. Han, and P. Tiwari, “Augmented Reality-Based Advanced Driver-Assistance System for Connected Vehicles,” in 2020 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Oct. 2020, pp. 752–759, iSSN: 2577-1655.

- [3] Z. Wang, R. Gupta, K. Han, H. Wang, A. Ganlath, N. Ammar, and P. Tiwari, “Mobility Digital Twin: Concept, Architecture, Case Study, and Future Challenges,” IEEE Internet of Things Journal, vol. 9, no. 18, pp. 17 452–17 467, Sep. 2022, conference Name: IEEE Internet of Things Journal.

- [4] X. Liao, X. Zhao, Z. Wang, Z. Zhao, K. Han, R. Gupta, M. J. Barth, and G. Wu, “Driver Digital Twin for Online Prediction of Personalized Lane Change Behavior,” IEEE Internet of Things Journal, pp. 1–1, 2023, conference Name: IEEE Internet of Things Journal.

- [5] Z. Wang, X. Liao, C. Wang, D. Oswald, G. Wu, K. Boriboonsomsin, M. J. Barth, K. Han, B. Kim, and P. Tiwari, “Driver Behavior Modeling Using Game Engine and Real Vehicle: A Learning-Based Approach,” IEEE Transactions on Intelligent Vehicles, vol. 5, no. 4, pp. 738–749, Dec. 2020, conference Name: IEEE Transactions on Intelligent Vehicles.

- [6] P. Gebert, A. Roitberg, M. Haurilet, and R. Stiefelhagen, “End-to-end Prediction of Driver Intention using 3D Convolutional Neural Networks,” in IEEE Intelligent Vehicles Symposium (IV), Jun. 2019, pp. 969–974, iSSN: 2642-7214.

- [7] Y. Rong, Z. Akata, and E. Kasneci, “Driver Intention Anticipation Based on In-Cabin and Driving Scene Monitoring,” in IEEE 23rd International Conference on Intelligent Transportation Systems (ITSC), Sep. 2020, pp. 1–8.

- [8] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, Ł. Kaiser, and I. Polosukhin, “Attention is All you Need,” in Advances in Neural Information Processing Systems, vol. 30. Curran Associates, Inc., 2017.

- [9] X. Cao, W. Ye, E. Sizikova, X. Bai, M. Coffee, H. Zeng, and J. Cao, “Vitasd: Robust vision transformer baselines for autism spectrum disorder facial diagnosis,” in ICASSP 2023-2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2023, pp. 1–5.

- [10] A. Dosovitskiy, L. Beyer, A. Kolesnikov, D. Weissenborn, X. Zhai, T. Unterthiner, M. Dehghani, M. Minderer, G. Heigold, S. Gelly, J. Uszkoreit, and N. Houlsby, “An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale,” in International Conference on Learning Representations, 2021.

- [11] M. Sandler, A. Zhmoginov, M. Vladymyrov, and A. Jackson, “Fine-Tuning Image Transformers Using Learnable Memory,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 12 155–12 164.

- [12] A. Jain, A. Singh, H. S. Koppula, S. Soh, and A. Saxena, “Recurrent Neural Networks for driver activity anticipation via sensory-fusion architecture,” in IEEE International Conference on Robotics and Automation (ICRA), May 2016, pp. 3118–3125.

- [13] S. Hochreiter and J. Schmidhuber, “Long Short-Term Memory,” Neural Computation, vol. 9, no. 8, pp. 1735–1780, Nov. 1997.

- [14] A. Jain, H. S. Koppula, S. Soh, B. Raghavan, A. Singh, and A. Saxena, “Brain4Cars: Car That Knows Before You Do via Sensory-Fusion Deep Learning Architecture,” Jan. 2016, arXiv:1601.00740 [cs].

- [15] M. Caron, H. Touvron, I. Misra, H. Jégou, J. Mairal, P. Bojanowski, and A. Joulin, “Emerging Properties in Self-Supervised Vision Transformers,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 9650–9660.

- [16] J. Deng, W. Dong, R. Socher, L.-J. Li, K. Li, and L. Fei-Fei, “ImageNet: A large-scale hierarchical image database,” in 2009 IEEE Conference on Computer Vision and Pattern Recognition, Jun. 2009, pp. 248–255, iSSN: 1063-6919.

- [17] I. Loshchilov and F. Hutter, “Decoupled Weight Decay Regularization,” in International Conference on Learning Representations, 2019.

- [18] Ilya Loshchilov and Frank Hutter, “SGDR: Stochastic Gradient Descent with Warm Restarts,” in International Conference on Learning Representations, 2017.

- [19] L. Wang, Y. Xiong, Z. Wang, Y. Qiao, D. Lin, X. Tang, and L. Van Gool, “Temporal Segment Networks: Towards Good Practices for Deep Action Recognition,” in Proceedings of the European Conference on Computer Vision (ECCV), B. Leibe, J. Matas, N. Sebe, and M. Welling, Eds. Cham: Springer, 2016, pp. 20–36.

- [20] H. Touvron, M. Cord, and H. Jégou, “DeiT III: Revenge of the ViT,” in European Conference on Computer Vision (ECCV), S. Avidan, G. Brostow, M. Cissé, G. M. Farinella, and T. Hassner, Eds. Cham: Springer, 2022, pp. 516–533.

- [21] H. Touvron, M. Cord, A. El-Nouby, J. Verbeek, and H. Jégou, “Three Things Everyone Should Know About Vision Transformers,” in European Conference on Computer Vision (ECCV), S. Avidan, G. Brostow, M. Cissé, G. M. Farinella, and T. Hassner, Eds. Cham: Springer Nature Switzerland, 2022, pp. 497–515.

- [22] I. Laptev, M. Marszalek, C. Schmid, and B. Rozenfeld, “Learning realistic human actions from movies,” in IEEE Conference on Computer Vision and Pattern Recognition, Jun. 2008, pp. 1–8, iSSN: 1063-6919.

- [23] X. Peng, C. Zou, Y. Qiao, and Q. Peng, “Action Recognition with Stacked Fisher Vectors,” in Proceedings of the European Conference on Computer Vision (ECCV), D. Fleet, T. Pajdla, B. Schiele, and T. Tuytelaars, Eds. Cham: Springer International Publishing, 2014, pp. 581–595.

- [24] L. Sun, K. Jia, K. Chen, D.-Y. Yeung, B. E. Shi, and S. Savarese, “Lattice Long Short-Term Memory for Human Action Recognition,” in Proceedings of the IEEE International Conference on Computer Vision, 2017, pp. 2147–2156.

- [25] D. Li, Z. Qiu, Q. Dai, T. Yao, and T. Mei, “Recurrent Tubelet Proposal and Recognition Networks for Action Detection,” in Proceedings of the European Conference on Computer Vision (ECCV), 2018, pp. 303–318.

- [26] C. Feichtenhofer, H. Fan, J. Malik, and K. He, “SlowFast Networks for Video Recognition,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2019, pp. 6202–6211.

- [27] S. Zhao, Y. Ma, Y. Gu, J. Yang, T. Xing, P. Xu, R. Hu, H. Chai, and K. Keutzer, “An End-to-End Visual-Audio Attention Network for Emotion Recognition in User-Generated Videos,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 34, Apr. 2020, pp. 303–311, number: 01.

- [28] M. Xu, Y. Xiong, H. Chen, X. Li, W. Xia, Z. Tu, and S. Soatto, “Long Short-Term Transformer for Online Action Detection,” in Advances in Neural Information Processing Systems, vol. 34. Curran Associates, Inc., 2021, pp. 1086–1099.

- [29] Y. Li, C.-Y. Wu, H. Fan, K. Mangalam, B. Xiong, J. Malik, and C. Feichtenhofer, “MViTv2: Improved Multiscale Vision Transformers for Classification and Detection,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 4804–4814.

- [30] M. Rezaei and R. Klette, “Look at the Driver, Look at the Road: No Distraction! No Accident!” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2014, pp. 129–136.

- [31] M. Ahmed, S. Masood, M. Ahmad, and A. A. Abd El-Latif, “Intelligent Driver Drowsiness Detection for Traffic Safety Based on Multi CNN Deep Model and Facial Subsampling,” IEEE Transactions on Intelligent Transportation Systems, vol. 23, no. 10, pp. 19 743–19 752, Oct. 2022.

- [32] Y. Ma and Z. Wang, “ViT-DD: Multi-Task Vision Transformer for Semi-Supervised Driver Distraction Detection,” in 2023 IEEE Intelligent Vehicles Symposium (IV), Anchorage, USA, Jun. 2023, arXiv:2209.09178 [cs].

- [33] Y. Liu, Z. Wang, K. Han, Z. Shou, P. Tiwari, and J. H. L. Hansen, “Vision-Cloud Data Fusion for ADAS: A Lane Change Prediction Case Study,” IEEE Transactions on Intelligent Vehicles, vol. 7, no. 2, pp. 210–220, Jun. 2022.

- [34] B. Morris, A. Doshi, and M. Trivedi, “Lane change intent prediction for driver assistance: On-road design and evaluation,” in 2011 IEEE Intelligent Vehicles Symposium (IV), Jun. 2011, pp. 895–901, iSSN: 1931-0587.

- [35] K. Tang, X. Cao, Z. Cao, T. Zhou, E. Li, A. Liu, S. Zou, C. Liu, S. Mei, E. Sizikova, and C. Zheng, “THMA: Tencent HD Map AI System for Creating HD Map Annotations,” Dec. 2022, arXiv:2212.11123 [cs].