[name=Theorem]theorem \declaretheorem[name=Proposition]proposition \declaretheorem[name=Assumption,numberwithin=section]assumption

Causal Estimation of Exposure Shifts with Neural Networks

Abstract.

A fundamental task in causal inference is estimating the effect of a distribution shift in the treatment variable. We refer to this problem as shift-response function (SRF) estimation. Existing neural network methods for causal inference lack theoretical guarantees and practical implementations for SRF estimation. In this paper, we introduce Targeted Regularization for Exposure Shifts with Neural Networks (tresnet), a method to estimate SRFs with robustness and efficiency guarantees. Our contributions are twofold. First, we propose a targeted regularization loss for neural networks with theoretical properties that ensure double robustness and asymptotic efficiency specific to SRF estimation. Second, we extend targeted regularization to support loss functions from the exponential family to accommodate non-continuous outcome distributions (e.g., discrete counts). We conduct benchmark experiments demonstrating tresnet’s broad applicability and competitiveness. We then apply our method to a key policy question in public health to estimate the causal effect of revising the US National Ambient Air Quality Standards (NAAQS) for from 12 to 9 . This change has been recently proposed by the US Environmental Protection Agency (EPA). Our goal is to estimate the reduction in deaths that would result from this anticipated revision using data consisting of 68 million individuals across the U.S.111The code is available at https://github.com/NSAPH-Projects/tresnet

1. Introduction

The field of causal inference has seen immense progress in the past couple of decades with the development of targeted doubly-robust methods yielding desirable theoretical efficiency guarantees on estimates for various causal effects (Van der Laan et al., 2011; Kennedy, 2016). These advancements have recently been incorporated into the neural network (NN) literature for causal inference via targeted regularization (TR) (Shi et al., 2019; Nie et al., 2021). TR methods produce favorable properties for causal estimation by incorporating a regularization term into a supervised neural network model. However, it remains an open task to develop a NN method that specifically targets the causal effect of a shift in the distribution for a continuous exposure/treatment variable (Muñoz and Van Der Laan, 2012). We call this problem shift-response function (SRF) estimation. Many scientific questions can be formulated as an SRF estimation task (Muñoz and Van Der Laan, 2012). Some notable examples in the literature include estimating the health effects from shifts to the distribution of environmental, socioeconomic, and behavioral variables (e.g., air pollution, income, exercise habits) (Muñoz and Van Der Laan, 2012; Díaz and Hejazi, 2020; Smith et al., 2023).

One of our objectives is to develop a neural network technique that addresses a timely and highly prominent regulatory question. Specifically, the EPA is currently considering whether or not to revise the National Ambient Air Quality Standards (NAAQS), potentially lowering the acceptable annual average concentration from 12 to 11, 10, or 9 . We anticipate that the revision to the NAAQS will ultimately result in a shift to the distribution of concentrations. Our goal is to estimate, for the first time, the reduction in deaths that would result from this anticipated shift using causal methods for SRFs.

Contributions. We develop a novel method, called Targeted Regularization for Exposure Shifts with Neural Networks (tresnet), which introduces two necessary and generalizable methodological innovations to the TR literature. First, we use a TR loss targeting SRFs, ensuring that our estimates retain the properties expected from TR methods such as asymptotic efficiency and double robustness (Kennedy, 2016). Given standard regularity conditions, these properties guarantee that the SRF is consistently estimated when either the outcome model or a density-ratio model for the exposure shift is correctly specified, achieving the best possible efficiency rate when both models are consistently estimated. Second, tresnet accommodates non-continuous outcomes belonging to the exponential family of distributions (such as mortality counts) that frequently arise in real-world scenarios, including our motivating application. In addition to its suitability for our application, we evaluate the performance of tresnet in a simulation study tailored for SRF estimation, demonstrating improvements over neural network methods not specifically designed for SRFs.

Our results contribute to the public debate informing the US Environmental Protection Agency (EPA) on the effects of modifying air quality standards. A preview of the results (fully developed in Section 5) is presented in Figure 1. The figure presents the estimated reduction in deaths (%) resulting from various shifts to the distribution of across every ZIP-code in the contiguous US between 2000 and 2016. These shifts limit the maximum concentration of to the cutoff value for every ZIP-code that exceeds the cutoff, and otherwise leaves ZIP-codes that do not exceed the cutoff unchanged. We vary the cutoff in this SRF between 6 and 16 (x-axis). The y-axis represents the % reduction in deaths that corresponds with each cutoff threshold. Notably, a NAAQS threshold of 9 , would have had the effect of decreasing elder mortality by 4%. These findings present a data-driven perspective on the potential health benefits of the EPA’s proposal.

Related work

Neural network-specific methods in causal inference can broadly be categorized into those focusing on estimating individualized effects (e.g., (Bica et al., 2020; Yoon et al., 2018)) and those aimed at understanding marginal effects from a population. Various articles in the latter category – to which our work belongs – use semiparametric theory to derive theoretical guarantees tied to a specific target causal estimand. Such guarantees are generally focused on double robustness and asymptotic efficiency (Kennedy, 2016; Bang and Robins, 2005; Robins, 2000; Bickel et al., 1993). The efficient influence function (EIF) plays a pivotal role in this domain by characterizing the best possible asymptotic efficiency of estimators. The EIF is also recognized as Neyman orthogonal scores within the double machine learning literature (Kennedy, 2022).

Targeted regularization (TR) has emerged as a tool to incorporate EIF-based methods within deep learning. Notably, the dragonnet (Shi et al., 2019) introduced TR in the context of binary treatment effects, demonstrating its potential for causal inference. Subsequently, the vcnet (Nie et al., 2021) extended the application of TR to the estimation of exposure-response functions (ERFs), showcasing its utility in estimating dose-response curves for continuous treatments and highlighting the adaptability of TR in addressing complex causal questions. Our work builds on the TR literature recognizing its unexplored potential for SRF estimation. Indeed, EIF methods have been applied to scenarios closely related to SRFs outside the neural-network literature under the stochastic intervention and modified treatment policy frameworks (Muñoz and Van Der Laan, 2012; Díaz et al., 2021). However, their direct integration with targeted regularization has not been previously undertaken in the literature – a gap which we address in this work.

Relating to the application, recent studies have investigated the causal effects of air pollution on mortality using ERFs (Wu et al., 2020; Bahadori et al., 2022; Josey et al., 2023). Although these methods inform policy regarding the marginal effects of air pollution on mortality, they cannot adequately address questions suited for SRFs, which are crucial for analyzing the impact of air quality regulation changes. The distinction between ERFs and SRFs, and the significance of SRFs for our application and policy implications, are elaborated in Sections 2 and 5.

2. Problem Statement: The Causal Effect of an Exposure Shift

We let denote a unit from the target population, where is a continuous exposure variable (also known as the treatment), is the outcome of interest, and are covariates. We assume a sample of size and denote it as . For instance, in the application of interest, which is described in detail in Section 5, represents a zip code location in a given year, represents its annual average concentration of (), is the number of deaths, and includes demographic and socioeconomic variables. For simplicity, we will omit the subscript unless required for clarity or when describing statistical estimators from samples.

We assume the following general non-parametric structural causal model (SCM)(Pearl, 2009):

| (1) | ||||

where are exogenous variables. The functions are deterministic and unknown. We will avoid measure-theoretic formulations to keep the presentation simple.

The quantities are known as potential outcomes. Potential outcomes can be divided into two parts. The factual outcome corresponds with the observed outcome when . The counterfactual outcomes are hypothetical results at any point where . We will typically refer to as the counterfactual, as SRFs are tooled for predicting the potential outcome from unobserved exposures (i.e. the counterfactuals), unless specifically stated otherwise.

There are two functions that are pivotal in defining and estimating causal effects. First, the outcome function represents the expected (counter)factual outcome conditional on covariates, expressed as

Unless otherwise specified, all expectations are taken from the data distribution in Equation 1. The second key function, the (generalized) propensity score, is the distribution of the exposure conditional on the covariates, denoted as . Note that is also used generically to represent any density function with the given arguments; the propensity score being a special case.

Exposure shifts

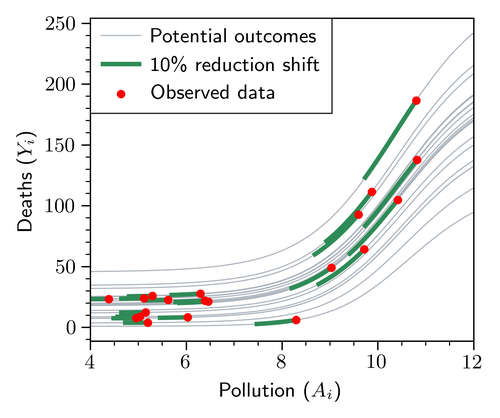

An exposure shift alters a unit’s exposure from its observed value to a modified value . To help build some intuition, one example of an exposure shift is a cutoff shift, defined as , which caps the exposure to a maximum threshold . Another example is a percent reduction shift in which, for instance, a value of would signify a reduction to the observed exposures. These shifts are depicted in Figure 2. As in our application of interest, developed in detail in Section 5, represents the (hypothetical) annual exposure to that would have occurred in a particular zip code and year if an alternate policy (relative to the current NAAQS) had been implemented.

Formally, we define an exposure shift as a counterfactual exposure distribution , replacing the propensity score in the observed data distribution. We do not assume any advanced knowledge of the shifted distribution nor the analytical form of the shift. Instead, we can observe samples from the shifted distribution, realized as pairs of unshifted-shifted exposures . An alternative representation is that of a modified treatment policy (Díaz et al., 2021), where the exposure shift can arise from a (possibly unknown) stochastic or deterministic transform – .

Importantly, while and may seem like different objects due to the notation, they in fact refer to the same data element – the exposure or treatment. The tilde notation “” is helpful to emphasize whether it’s being sampled from the shifted or unshifted distributions.

The estimand of interest: the SRF

The quantity of interest is the expected counterfactual outcome induced by the exposure shift after replacing with :

| (2) |

The SRF is a key estimand in the context of exposure shifts, as it allows us to estimate the effect of a policy-relevant shift in the distribution of a continuous exposure.

Under some regularity conditions, established later in this section, the estimand can be rewritten using the importance sampling formula as

| (3) | ||||

We will derive estimators of using estimators of and in Section 3.

Comparison with traditional causal effects

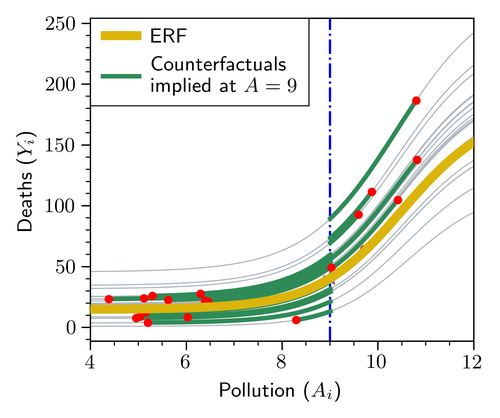

Exposure-response functions are the most common estimands in the causal inference literature for continuous exposures. Also known as a dose-response curve, an ERF can be described as the mapping .

One can consider ERFs as a special case of an SRF in which, for each treatment value , the quantity of interest is the average counterfactual outcome where the exposure is assigned to for all units. That is, it can be seen as a limiting case of an SRF when is a degenerate point-mass distribution at for all units. This observation is the primary reason why SRFs are better suited for our motivating application as ERFs do not allow us to consider scenarios where each unit is given a different exposure value shifted from its observed value. A visual example is shown in Figure 2(c). At each point of the curve, an ERF describes the average outcome when all units experience the same value. By contrast, SRFs allow us to estimate the average outcome when each unit experiences a different value, as would occur under the NAAQS revision.

Moreover, ERFs require extrapolating the outcome estimates to regions where the positivity condition fails (Imbens and Rubin, 2015), that is, regions of the joint exposure-covariate space where no data is available (see Figure 2(c)). Extrapolation to such regions is unnecessary to estimate SRFs efficiently. This insight is supported by our simulation and experiment results in Section 4.

Causal identification

The target estimand can be expressed as a functional of the observable data distribution under standard assumptions, which are:

[Unconfoundedness] There are no backdoor paths from to in the SCM in Equation 1, implying that for all ; {assumption}[Positivity] There are constants such that for all such that .

The first assumption ensures that . This result is sometimes known as causal identification since it allows us to express the causal effect of on as a function of the observed data. A formal proof is given in the appendix. The second assumption implies that the density ratio in Equation 3 is well-defined and behaved. Notice that the notion of positivity used here, as required by SRFs, is generally weaker than the typical positivity assumption in the standard causal inference literature requiring for all (Muñoz and Van Der Laan, 2012).

Multiple shifts

Under the framework outlined so far, it is possible to estimate the effect of multiple exposure shifts simultaneously. We simply let denote the set of finite exposure shifts of interest and adopt the vectorized notation , , and .

3. Estimating SRFs with Targeted Regularization for Neural Nets

As we have suggested earlier, an estimator of the SRF can be derived from estimators of the outcome and density ratio functions. Using TR, we will obtain an estimator that “converges efficiently” to the true at a rate in accordance with the prevailing semiparametric efficiency theory surrounding robust causal effect estimation (Kennedy, 2022).

Additional notation

To describe the necessary theoretical results based on semiparametric theory, we require some additional notation. Given a family of functions , denote . The sample Rademacher complexity is denoted as where are independent and identically distributed Rademacher random variables satisfying . The Rademacher complexity measures the “degrees of freedom” of an estimating class of functions. We use and to denote stochastic boundedness and convergence in probability, respectively. Given a random variable , denote . We also let denote the empirical distribution of given an iid sample . It follows that . Lastly, let denote an element of the augmented data generating process.

The efficient influence function (EIF) The EIF is a fundamental function in semiparametric theory (Kennedy, 2022). It is denoted as the function for an observation . The EIF characterizes the gradient of the target estimand with respect to a small perturbation in the data distribution. The form of the EIF is specific to the target estimand. For the SRF in Equation 2, the EIF is given by

| (4) |

We provide a formal derivation in the appendix. The reader can refer to Tsiatis (2006) and Kennedy (2022) for a more comprehensive introduction to the nature and utility of influence functions. The importance of EIFs for causal estimation relies on the following two properties. First, the best possible variance among the family of regular, asymptotically linear estimators of is bounded below by . This lower bound is known as the efficiency rate. Second, the EIF can be constructed as a function of . As it turns out, if a tuple of estimators satisfies the empirical estimating equation (EEE) given by , then achieves the efficiency rate asymptotically.

The following observation offers an interpretation to the EEE. If satisfy the EEE, then can be decomposed in terms of a debiasing component of the residual error and a plugin estimator for the marginalized average of the mean response:

| (5) |

This heuristic is crucial for the development of the TR loss in the next section.

3.1. Targeted Regularization for SRFs

An immediate approach to obtain a doubly-robust estimator satisfying the EEE would be to use the right-hand side of Equation 5 as a definition of an estimator given nuisance function estimators and . Such an estimator is called the augmented inverse-probability weighting (AIPW) estimator for exposure shifts in analogy to the AIPW estimators for traditional average causal effects (ATE and ERF) (Robins, 2000; Robins et al., 2000). However, it’s been noted in the causal inference literature that, empirically, relying on the debiasing term of Equation 5 causes AIPW-type estimators to have suboptimal performance in finite samples (Shi et al., 2019; Van der Laan et al., 2011). Instead, targeted learning seeks an estimator that, by construction, satisfies the EEE without requiring the debiasing term. TR achieves this goal by learning a perturbed outcome model using a special regularization loss.

Generalized TR for outcomes in the exponential family. We will derive the TR loss for SRFs while also extending the TR framework to accommodate non-continuous outcomes from the exponential family of distributions.

First, we say that the outcome follows a conditional distribution from the exponential family if for some function . The family’s canonical link function is defined by the identity . For all distributions in the exponential family, is invertible (McCullagh, 2019). Exponential families allow us to consider the usual mean-squared error and logistic regression as special cases. They also enable modeling count outcomes as in our motivating application. In this setting, we set and – the canonical environment for Poisson regression. The following theorem forms the basis for the TR estimator.

Theorem 3.1.

Let denote a perturbation parameter and define

| (6) | ||||

Then if and only if

Proof.

A key property of an exponential family is (McCullagh, 2019). Noting that for all , and using the chain rule, we have:

| (7) | ||||

The fact that satisfies the empirical estimating equation follows trivially from the fact that . The first term is zero because of the above results while the last two terms cancel each other by definition. ∎

Notice that the condition in the theorem is achieved at the local minima of . Motivated by this observation, we next define the total TR loss as

where and are the empirical risk functions used to learn and (details in Section 3.2), is a hyperparameter, and is a regularization weight satisfying . The latter condition is needed to ensure statistical consistency, as first discussed by Nie et al. (2021) for ERF estimation.

Since only appears in the regularization term, the full loss in Equation 8 preserves the result that upon optimization Then, the TR estimator is defined as the solution of the optimization problem:

| (8) | ||||

In the theorem below we introduce the main result establishing the double robustness and consistency properties of our TR estimator:

Theorem 3.2.

Let and be classes of functions such that and . Suppose assumptions 2 and 2 hold, and that the following regularity conditions hold: (i) , , ; (ii) either one model is correctly specified ( or ), or both function classes have a vanishing complexity and ; (iii) the loss function in Equation 6 is Lipschitz; (iv) and are twice continuously differentiable. Then, the following statements are true:

-

(1)

The outcome and density ratio estimators of TR are consistent. That is, and .

-

(2)

The estimator satisfies whenever and .

Theorem 3.2 shows that the TR learner of the SRF achieves “optimal” root- convergence when or when either or vanishes. Using standard arguments involving concentration inequalities, the Lipschitz assumption placed on the loss function can be relaxed by assuming that the loss function has a vanishing Rademacher complexity (Wainwright, 2019).

Proof.

The proof strategy follows Nie et al. (2021) but is tailored to the SRF case and the more general exponential family of loss functions. We proceed in two steps.

Step 1: showing consistency of the outcome and density ratio models. Denote the population risk as

where is the loss function in Equation 6 without the targeted regularization. The minimizers of are denoted and . They are the estimators of under infinite data. We will now bound the risk loss, showing that the risk of the finite-sample estimators minimizing Equation 6 is sufficiently close to that of . Precisely, we shall show that

| (9) |

To prove this fact, we first note that

| (10) | ||||

The first inequality is because of the definition of the risk minimizer and for the second one we added and subtracted the empirical risk and rearranged the terms.

Observe that the second and third terms in the last expression are empirical processes, suggesting we can use the uniform law of large numbers (ULLN). Indeed, the regularity assumptions on the Rademacher complexity provide a sufficient condition (Wainwright, 2019). Additionally, the order of the Rademacher complexity is preserved under Lipschitz transforms, then, using that the loss is Lipschitz, it follows that the class has a Rademacher complexity of order . Further, uniform boundedness is also preserved under Lispschitz transformations. Thus, the uniform law of large numbers applies, implying that the last two terms are . Note that the Lipschitz condition can be relaxed by the direct assumption that satisfies the boundedness and complexity conditions for the ULLN (Wainwright, 2019).

We now bound the first term. We begin by adding and subtracting the regularization terms, and compute:

| (11) | ||||

Inequality (a) is due to being a minimizer for the regularized risk; (b) is the result of being bounded below since the exponential family of distributions is log-concave and ; (c) uses the uniform law of large numbers from the Rademacher complexity and the uniform boundedness, and the fact that is Lipschitz; (d) follows from . Combining Equation 10 and Equation 11, we get .

The result now follows from observing that the population risk has a unique minimizer up to the reparameterization of the network weights. Hence, and .

Step 2: Proving convergence and efficiency of . Direct computation gives

| (12) | ||||

where (a) is by the property of the targeted regularization, namely, ; (b) is because of the uniform concentration of the empirical process, again using the vanishing Rademacher complexity and uniform boundedness; (c) integrates over ; (d) uses the definition of and the importance sampling formula with . Since the link function is continuously differentiable, invertible and strictly monotone, then by the mean value theorem there exists such that

From the uniform boundedness and smoothness of the link function, we have that for some constant . Then, using the above result in the last term of Equation 12, we obtain

| (13) | ||||

To complete the proof, we will show that . Letting be as in the Taylor expansion above, we can re-arrange the targeted regularization condition such that

Hence, we can write with the closed-form expression

Observe now that is uniformly lower bounded since the denominator is uniformly bounded in a neighborhood of the solution due to the assumption , and is strictly monotone and continuously differentiable. Hence, there is such that

| (14) | ||||

where (a) uses the uniform concentration of the empirical process and (b) again uses the uniform boundedness of . The proof now follows from combining equations (12), (13), and (14). ∎

| Spline-based varying coefficients | Piecewise linear varying coefficients | |||||||

|---|---|---|---|---|---|---|---|---|

| Dataset | aipw | outcome | tresnet∗ | vcnet | aipw | drnet | tresnet∗ | drnet+tr |

| ihdp | 3.15 (0.37) | 2.19 (0.06) | 0.61 (0.03) | 0.63 (0.03) | 1.18 (0.14) | 2.36 (0.06) | 0.15 (0.02) | 0.19 (0.02) |

| news | 1.5 (0.19) | 3.65 (0.04) | 0.18 (0.02) | 0.28 (0.03) | 0.99 (0.12) | 0.99 (0.1) | 0.17 (0.01) | 0.26 (0.03) |

| sim-B | 4.1 (0.57) | 0.5 (0.05) | 0.26 (0.03) | 0.29 (0.04) | 1.46 (0.2) | 1.6 (0.2) | 0.14 (0.02) | 0.16 (0.02) |

| sim-N | 5.69 (0.64) | 0.52 (0.05) | 0.32 (0.02) | 0.32 (0.03) | 1.81 (0.25) | 0.95 (0.06) | 0.14 (0.01) | 0.15 (0.01) |

| tcga-1 | 1.13 (0.08) | 0.63 (0.02) | 0.8 (0.01) | 0.87 (0.03) | 0.76 (0.05) | 0.62 (0.02) | 0.61 (0.02) | 0.69 (0.03) |

| tcga-2 | 0.76 (0.09) | 0.24 (0.02) | 0.18 (0.01) | 0.24 (0.02) | 0.36 (0.05) | 0.17 (0.01) | 0.12 (0.0) | 0.16 (0.01) |

| tcga-3 | 0.83 (0.11) | 0.38 (0.03) | 0.1 (0.01) | 0.15 (0.02) | 0.59 (0.06) | 0.59 (0.04) | 0.08 (0.01) | 0.15 (0.02) |

| experiment | outcomew/poisson loss∗ | outcomew/mse loss | tresnetw/poisson loss∗ | tresnetw/mse loss |

|---|---|---|---|---|

| ihdp | 18.82 (3.18) | 3726.92 (357.31) | 2.04 (0.08) | 10986.43 (211.99) |

| news | 3.41 (0.25) | 372.24 (52.02) | 0.33 (0.05) | 1187.94 (100.8) |

| sim-B | 1222.61 (1269.53) | 8433.98 (1327.22) | 1113.58 (1270.49) | 16902.41 (1233.54) |

| sim-N | 50.72 (4.45) | 2491.63 (310.36) | 4.6 (0.22) | 18062.85 (243.2) |

| tcga-1 | 184.89 (6.67) | 6682.91 (474.32) | 40.56 (19.2) | 16307.15 (232.64) |

| tcga-2 | 48.3 (1.44) | 5854.08 (483.41) | 398.82 (143.96) | 16112.02 (186.99) |

| tcga-3 | 18.27 (2.73) | 14381.06 (516.25) | 12.92 (2.3) | 8565.17 (447.13) |

3.2. Architectures for Estimating the Outcome and Density Ratio Functions

Neural network architectures for nuisance function estimation have been widely investigated in causal inference (see Farrell et al. (2021) for a review). We use the architectures proposed in the TR literature as a building block, particularly for continuous treatments (Nie et al., 2021). Nevertheless, previous work in TR has not yet investigated the architectures required for SRF estimation. In particular, we require a new architecture to estimate the density ratio . Rather, previous works have primarily focused on architectures for estimating propensity scores as required by traditional causal effect estimation (e.g. for estimating ATEs and ERFs).

We describe a simple yet effective architecture for estimating and . To keep the notation simple, we will write to denote generic output from a neural network indexed by weights . The architecture has three components, illustrated in Figure 3. The first one maps the confounders to a latent representation . This component will typically be a multi-layer perception (MLP). The second and third components are the outcome and density-ratio heads, which are functions of and the treatment. We describe all three components in detail below.

Outcome model Recall that we assume the outcome follows a conditional distribution from the exponential family. That is, with an invertible link function satisfying . We can identify the canonical parameter with the output of the neural network and learn by minimizing the empirical risk

| (15) |

Next, we need to select a functional form for the neural network. An MLP parameterization with the concatenated inputs of –the naïve choice–would likely result in the effect of being lost in intermediate computations. Instead, we adopt the varying coefficient approach by setting (Nie et al., 2021; Chiang et al., 2001). With this choice, the weights of each layer are dynamically computed as a function of obtained from a linear combination of basis functions spanning the set of admissible functions on . The weights of the linear combination are themselves a learnable linear combination of the hidden outputs from the previous layer. We refer the reader to Nie et al. (2021) for additional background on varying-coefficient layers. Our experiments suggest TR is beneficial for different choices of basis functions.

Estimation of via classification. The density ratio head is trained using an auxiliary classification task. For this purpose, we use an auxiliary classification task where the positive labels are assigned to the samples from and the negative labels to the samples with . This loss can be written as:

| (16) | ||||

where is the binary classification loss and are the label predictions on the logit scale, which coincide with the log-density ratios (Sugiyama et al., 2012). To capture the effect of more effectively, we propose parameterizing the network using the varying-coefficient structure discussed in the previous section with . To our knowledge, we are the first to consider a varying-coefficient architecture for conditional density ratio estimation.

4. Simulation study

We conducted simulation experiments to validate the design choices of tresnet. The so-called fundamental problem of causal inference is that the counterfactual responses are never observed in real data. Thus, we must rely on widely used semi-synthetic datasets to evaluate the validity of our proposed estimators. First, we consider two datasets introduced by Nie et al. (2021), which are continuous-treatment adaptions to the popular datasets ihdp (Hill, 2011) and news (Newman, 2008). We also apply our methods to (Nie et al., 2021)’s fully simulated data, sim-N. In addition, we consider the fully simulated dataset described in (Bahadori et al., 2022), which features a continuous treatment and has been previously used for calibrating models in air pollution studies. Finally, we also consider three variants of the tcga dataset presented in Bica et al. (2020). The three variants consist of three different dose specifications as treatment assignments and the corresponding dose-response as the outcome.

The datasets described here have been employed without substantial modifications from the original source studies to facilitate fair comparison. Note that for each of these synthetic and semi-synthetic datasets, we have access to the true counterfactual outcomes, which allows us to compute sample SRFs exactly. We will consider the estimation task of 20 equally-spaced percent reduction shifts between 0-50% from the current observed exposures. More specifically, for values of in .

Evaluation metric and task. Given a semi-synthetic dataset , consider an algorithm that produces an estimator of given an exposure shift and random seed . To evaluate the quality of the estimator, we use the mean integrated squared error This metric is a natural adaption of an analogous metric commonly used in dose-response curve estimation (Bica et al., 2020).

Experiment 1: Does targeting SRFs specifically improve their estimation (compared to no targeting or standard ERF targeting?) We evaluate two variants of tresnet against alternative SRF estimators, including vcnet (Nie et al., 2021) and drnet (Schwab et al., 2020), two prominent methods used in causal TR estimation for continuous treatments. Since these methods were not designed specifically for SRFs, we expect that their performance for this task can be improved by adapting TR through their baseline architectures.

The first variant of tresnet uses varying-coefficient layers based on splines—see the discussion in Section 3.2 for background. We compare this variant, named tresnet, with the following baselines:

-

(1)

, the AIPW-type estimator for SRFs discussed in Section 3.1, wherein we fit separate outcome and density ratio models which are then substituted into Equation 5;

-

(2)

, which uses the same outcome model as tresnet, but without the TR and density ratio heads;

-

(3)

vcnet, which uses a similar overall architecture as tresnet, but with a TR designed for ERFs rather than for SRFs.

The second variant of tresnet uses varying coefficients based on piecewise linear functions instead of splines. This variant, tresnet, is compared against the following analogous baselines:

-

(1)

, which is the piecewise analogue to ;

-

(2)

drnet, based on Schwab et al. (2020), functioning as the piecewise linear analogue to ;

-

(3)

, which is the analogue to vcnet that can be constructed by adding a density ratio head and TR designed for ERFs rather than SRFs.

Table 1(a) shows the results of this experiment. For both architectures, the tresnet variants achieve the best performance. tresnet is somewhat better than vcnet, which have comparable architectures although tresnet uses a different TR implementation specific for SRF estimation rather than ERF estimation. Likewise, tresnet outperforms . These moderate but consistent performance improvements suggest the importance of SRF-specific forms of TR. We also see strong advantages against outcome-based predictions and AIPW estimators, suggesting that the TR loss and the shared learning architecture is a boon to performance. These results are compatible with observations from previous work in the TR literature (Nie et al., 2021; Shi et al., 2019).

Experiment 2: Does TR improve estimation when count-valued outcomes are observed? For these experiments, we used the spline-based variant and evaluate whether tresnet with the Poisson-specific TR, explained in Section 3, performs better than the mean-squared error (MSE) loss variant when the true data follows a Poisson distribution. This evaluation is important since our application consists of count data requiring a Poisson model which are widely used to investigate the effects of on health (Wu et al., 2020; Josey et al., 2022). We construct similar semi-synthetic datasets as in Experiment 1, however in this experiment the outcome-generating mechanism samples from a Poisson distribution rather than a Gaussian distribution. The results of this experiment are clear–using the correct exponential family for the outcome model is critical, regardless of whether TR is implemented.

5. Main Application: The Effects of Stricter Air Quality Standards

We implemented tresnet for count data to estimate the health benefits caused by shifts to the distribution of that would result from lowering the NAAQS—the regulatory threshold for the annual-average concentration of enforced by the EPA.

Data. The dataset is comprised of Medicare recipients222Access to Medicare data is restricted without authorization by the Centers for Medicare & Medicaid Services since it contains sensitive health information. The authors have completed the required IRB and ethical training to handle these datasets. from 2000–2016, involving 68 million unique individuals. The data includes measurements on participant race/ethnicity, sex, age, Medicaid eligibility, and date of death, which are subsequently aggregated to the annual ZIP-code level. The exposure measurements are extracted from a previously fit ensemble prediction model (Di et al., 2019). The confounders include measurements on meteorological information, demographics, and the socioeconomic status of each ZIP-code. Calendar year and census region indicators are also included to account for spatiotemporal trends. To compile our dataset, we replicated the steps and variables outlined by Wu et al. (2020).

Exposure shifts. We consider two types of shifts, cutoff shits and percent reduction shift, each providing different perspectives and insights. The counterfactuals implied from these scenarios are illustrated in figures 2(a) and 2(b), respectively. First, a cutoff shift, parameterized by a threshold , encapsulates scenarios in which every ZIP-code year that exceeded some threshold are truncated to that maximum threshold. Mathematically, the shift is defined by the transformation . To be more succinct, the exposure shift defines a counterfactual scenario. For this application, the threshold is evaluated at equally spaced points starting with 15 moving down to 6 . We expect that at 15 there will be little to no reduction in deaths since of observations fall below that range. We can contemplate the proposed NAAQS levels through assuming that full compliance to the new regulation holds for incompliant ZIP-codes. The exposure shift should otherwise not affect already compliant ZIP-codes. Second, we onsider percent reduction shifts. This scenario assumes that all ZIP-code years reduce their pollution levels proportionally from their observed value. More precisely, the shift is defined as . We considered a range of percent reduction shifts between . We can interpret these shifts in terms of the NAAQS by mapping each percent reduction to a compliance percentile. For instance, Figure 4 shows that, under a 30% overall reduction in historical values, approximately 82% would comply with a NAAQS of 9 .

Implementation. We implement tresnet using varying-coefficient splines as in Section 4. We select a NN architecture using the out-of-sample prediction error from a 20/80% test-train split to choose the number of hidden layers (1-3 layers) and hidden dimensions (16, 64, 256). We found no evidence of overfitting in the selected models. To account for uncertainty in our estimations, we train the model on 100 bootstrap samples, each with random initializations, thereby obtaining an approximate posterior distribution. Deep learning ensembles have been previously shown to approximate estimation uncertainty well in deep learning tasks (Izmailov et al., 2021).

Results. Figure 1 in the introduction presents the effects of shifting the distribution at various cutoffs on the expected reduction in deaths. The slope is steeper at stricter/lower cutoffs, likely because lower cutoffs affect a larger fraction of the observed population and reduce the overall . For instance, figure Figure 1 shows that had no ZIP-code years exceeded 12 , the observed death counts would have decreased by around 1%. If the cutoff is lowered to 9 , then deaths could have fallen by around 4%. The slope becomes increasingly steeper as the threshold is reduced, suggesting an increasing benefit from lowering the standard concentration level. Another way to interpret this result is to say that there is a greater gain to reducing mortality caused by from lowering the concentration level from 10 to 8 than there is from lowering it from 12 to 10 , despite the obvious observation that both contrasts examine a reduction of 2 .

The results of the percent-reduction shift are presented in Figure 5. The decrease in deaths is approximately linear with respect to the percent decrease in . As such, the SRF shows an approximate 0.5% decrease in deaths resulting from a 10% decrease in . This result is consistent with previous causal estimates of the marginal effect of exposure on elder mortality (Wu et al., 2020). Percent reduction offers a complementary view to the cutoff shift response function as it might pertain to policymakers decisions on the future of the NAAQS.

6. Discussion and Limitations

The results of Section 5 demonstrate the significant need to establish proper estimators when addressing the pressing public health question regarding the potential health benefits of lowering the NAAQS in the United States. In response to this question, we introduce the first causal inference method to utilize neural networks for estimating SRFs. Furthermore, we have extended this method to handle count data, which is crucial for our application in addition to other public health and epidemiology contexts.

There are numerous opportunities to improve to our methodology. First, our uncertainty assessment of the SRF relies on the bootstrap and ensembling of multiple random seeds. While these methods are used often in practice, future research could explore the integration of tresnet with Bayesian methods to enhance uncertainty quantification. Second, our application of the methodology focuses on exposure shifts representing complementary viewpoints to the possible effects of the proposed EPA rules on the NAAQS. However, it does not determine the most probable exposure shift resulting from the new rule’s implementation, based on historical responses to changes in the NAAQS. Subsequent investigations should more carefully consider this aspect of the analysis. The assessment of annual average concentrations at the ZIP-code level is based on predictions rather than on actual observable values, introducing potential attenuation bias stemming from measurement error. Nonetheless, previous studies on measurement error involving clustered air pollution exposures have demonstrated that such attenuation tends to pull the causal effect towards a null result (Josey et al., 2022; Wei et al., 2022).

It is essential to recognize that the SRF framework places additional considerations on the analyst designing the exposure shift. This newfound responsibility can be seen as both a disadvantage and an advantage. However, it highlights the need for an explicit and meticulous statement of the assumptions underlying the considered exposure shifts in order to mitigate the potential misuse of SRF estimation techniques.

Acknowledgements.

The work was supported by the National Institutes of Health (R01-AG066793, R01-ES030616, R01-ES034373), the Alfred P. Sloan Foundation (G-2020-13946), and the Ren Che Foundation supporting the climate-smart public health project in the Golden Lab. Computations ran on the FASRC Cannon cluster supported by the FAS Division of Science Research Computing Group at Harvard University.References

- (1)

- Bahadori et al. (2022) Taha Bahadori, Eric Tchetgen Tchetgen, and David Heckerman. 2022. End-to-End Balancing for Causal Continuous Treatment-Effect Estimation. In International Conference on Machine Learning. 1313–1326.

- Bang and Robins (2005) Heejung Bang and James M Robins. 2005. Doubly robust estimation in missing data and causal inference models. Biometrics 61, 4 (2005), 962–973.

- Bica et al. (2020) Ioana Bica, James Jordon, and Mihaela van der Schaar. 2020. Estimating the effects of continuous-valued interventions using generative adversarial networks. Advances in Neural Information Processing Systems 33 (2020), 16434–16445.

- Bickel et al. (1993) Peter J Bickel, Chris AJ Klaassen, Peter J Bickel, Ya’acov Ritov, J Klaassen, Jon A Wellner, and YA’Acov Ritov. 1993. Efficient and adaptive estimation for semiparametric models. Vol. 4. Springer.

- Chiang et al. (2001) Chin-Tsang Chiang, John A Rice, and Colin O Wu. 2001. Smoothing spline estimation for varying coefficient models with repeatedly measured dependent variables. J. Amer. Statist. Assoc. 96, 454 (2001), 605–619.

- Di et al. (2019) Qian Di, Heresh Amini, Liuhua Shi, Itai Kloog, Rachel Silvern, James Kelly, M Benjamin Sabath, Christine Choirat, Petros Koutrakis, Alexei Lyapustin, et al. 2019. An ensemble-based model of PM2. 5 concentration across the contiguous United States with high spatiotemporal resolution. Environment international 130 (2019), 104909.

- Díaz and Hejazi (2020) Iván Díaz and Nima S Hejazi. 2020. Causal mediation analysis for stochastic interventions. Journal of the Royal Statistical Society Series B: Statistical Methodology 82, 3 (2020).

- Díaz et al. (2021) Iván Díaz, Nicholas Williams, Katherine L Hoffman, and Edward J Schenck. 2021. Nonparametric causal effects based on longitudinal modified treatment policies. J. Amer. Statist. Assoc. (2021), 1–16.

- Farrell et al. (2021) Max H Farrell, Tengyuan Liang, and Sanjog Misra. 2021. Deep neural networks for estimation and inference. Econometrica 89, 1 (2021), 181–213.

- Hill (2011) Jennifer L Hill. 2011. Bayesian nonparametric modeling for causal inference. Journal of Computational and Graphical Statistics 20, 1 (2011), 217–240.

- Imbens and Rubin (2015) Guido W. Imbens and Donald B. Rubin. 2015. Causal Inference for Statistics, Social, and Biomedical Sciences: An Introduction. Cambridge University Press.

- Izmailov et al. (2021) Pavel Izmailov, Sharad Vikram, Matthew D Hoffman, and Andrew Gordon Gordon Wilson. 2021. What are Bayesian neural network posteriors really like?. In International conference on machine learning. 4629–4640.

- Josey et al. (2023) Kevin P Josey, Scott W Delaney, Xiao Wu, Rachel C Nethery, Priyanka DeSouza, Danielle Braun, and Francesca Dominici. 2023. Air Pollution and Mortality at the Intersection of Race and Social Class. New England Journal of Medicine 388, 15 (2023).

- Josey et al. (2022) Kevin P Josey, Priyanka DeSouza, Xiao Wu, Danielle Braun, and Rachel Nethery. 2022. Estimating a Causal Exposure Response Function with a Continuous Error-Prone Exposure: A Study of Fine Particulate Matter and All-Cause Mortality. Journal of Agricultural, Biological and Environmental Statistics (2022), 1–22.

- Kennedy (2016) Edward H Kennedy. 2016. Semiparametric theory and empirical processes in causal inference. Statistical causal inferences and their applications in public health research (2016), 141–167.

- Kennedy (2022) Edward H Kennedy. 2022. Semiparametric doubly robust targeted double machine learning: a review. arXiv preprint arXiv:2203.06469 (2022).

- McCullagh (2019) Peter McCullagh. 2019. Generalized linear models. Routledge.

- Muñoz and Van Der Laan (2012) Iván Díaz Muñoz and Mark Van Der Laan. 2012. Population intervention causal effects based on stochastic interventions. Biometrics 68, 2 (2012), 541–549.

- Newman (2008) David Newman. 2008. Bag of words data set. UCI Machine Learning Respository 289 (2008).

- Nie et al. (2021) Lizhen Nie, Mao Ye, Dan Nicolae, et al. 2021. VCNet and Functional Targeted Regularization For Learning Causal Effects of Continuous Treatments. In International Conference on Learning Representations.

- Paszke et al. (2019) Adam Paszke, Sam Gross, Francisco Massa, Adam Lerer, James Bradbury, Gregory Chanan, Trevor Killeen, Zeming Lin, Natalia Gimelshein, Luca Antiga, et al. 2019. Pytorch: An imperative style, high-performance deep learning library. Advances in neural information processing systems 32 (2019).

- Pearl (2009) Judea Pearl. 2009. Causality. Cambridge university press.

- Robins (2000) James M Robins. 2000. Robust estimation in sequentially ignorable missing data and causal inference models. In Proceedings of the American Statistical Association, Vol. 1999.

- Robins et al. (2000) James M Robins, Andrea Rotnitzky, and Mark van der Laan. 2000. On profile likelihood: comment. J. Amer. Statist. Assoc. 95, 450 (2000), 477–482.

- Schwab et al. (2020) Patrick Schwab, Lorenz Linhardt, Stefan Bauer, Joachim M Buhmann, and Walter Karlen. 2020. Learning counterfactual representations for estimating individual dose-response curves. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 34. 5612–5619.

- Shi et al. (2019) Claudia Shi, David Blei, and Victor Veitch. 2019. Adapting neural networks for the estimation of treatment effects. Advances in neural information processing systems 32 (2019).

- Smith et al. (2023) Matthew J Smith, Rachael V Phillips, Miguel Angel Luque-Fernandez, and Camille Maringe. 2023. Application of targeted maximum likelihood estimation in public health and epidemiological studies: a systematic review. Annals of Epidemiology (2023).

- Sugiyama et al. (2012) Masashi Sugiyama, Taiji Suzuki, and Takafumi Kanamori. 2012. Density ratio estimation in machine learning. Cambridge University Press.

- Tsiatis (2006) Anastasios A Tsiatis. 2006. Semiparametric theory and missing data. (2006).

- Van der Laan et al. (2011) Mark J Van der Laan, Sherri Rose, et al. 2011. Targeted learning: causal inference for observational and experimental data. Vol. 4. Springer.

- Wainwright (2019) Martin J Wainwright. 2019. High-dimensional statistics: A non-asymptotic viewpoint. Vol. 48. Cambridge university press.

- Wei et al. (2022) Yaguang Wei, Xinye Qiu, Mahdieh Danesh Yazdi, Alexandra Shtein, Liuhua Shi, Jiabei Yang, Adjani A Peralta, Brent A Coull, and Joel D Schwartz. 2022. The impact of exposure measurement error on the estimated concentration–response relationship between long-term exposure to PM 2.5 and mortality. Environmental Health Perspectives 130, 7 (2022), 077006.

- Wu et al. (2020) X Wu, D Braun, J Schwartz, MA Kioumourtzoglou, and F Dominici. 2020. Evaluating the impact of long-term exposure to fine particulate matter on mortality among the elderly. Science advances 6, 29 (2020), eaba5692.

- Yoon et al. (2018) Jinsung Yoon, James Jordon, and Mihaela Van Der Schaar. 2018. GANITE: Estimation of individualized treatment effects using generative adversarial nets. In International conference on learning representations.

Appendix A Additional proofs

Causal identification

We first show the identification result from Section 2, which establishes that the outcome function is equal to the conditional expectation of the outcome given the treatment and covariates. As a corollary, the SRF estimand can be estimated from observed data.

Proposition A.1.

Suppose Section 2 holds. Then .

Proof.

Corollary A.2.

The SRF is identified by the data distribution.

Proof.

From the definitions and the importance sampling formula, we have

which shows identification as the right-hand side only involves an expectation over the observed data distribution by Proposition A.1. ∎

Derivation of the EIF

When we fit a statistical model to a dataset, each data point contributes to the estimated parameters of the model. The efficient influence function (EIF) effectively measures how sensitive an estimate is to the inclusion or exclusion of individual data points. In other words, it tells us how much a single data point can influence the estimate of a causal effect. Formally, the EIF is defined as the canonical gradient (Van der Laan et al., 2011) of the targeted parameter. Before defining it properly, we need to introduce a few objects and notations. First, we let be a smooth parametric submodel, that is, a parameterized family of distributions such that . Next, we adopt the following notation convention

where the subscript indicates that the density is from the data distribution is taken from . The score function for each member of the submodel is defined as

Similarly, we can define the SRF estimand at each member of the submodel as

It follows that is the target SRF.

Under a slight abuse of notation to reduce clutter and avoid multiple integrals, we denote here and in the proofs the integral of the relevant variables (understood from context) using a single operator .

The EIF of the non-parametric model is the canonical gradient of the SRF estimand, which is characterized by the equation

| (17) |

This condition is also known as pathwise differentiability.

The following results establish that the EIF of the SRF is given by .

Proof.

We need to show that equation 17 holds. Starting on the left hand side, and using properties of logarithms, we have

| (19) | ||||

Observe now that (also due to logarithmic properties) we can factorize into three parts:

| (20) |

where , , and are the score functions for the outcome, shifted exposure, and covariates, respectively. Combining this decomposition with Equation 19, we can rewrite the left-hand side of (17) as

| (21) | ||||

For the next step of the proof, we will recursively use the two following identities. For any arbitrary function , and for any two subsets of measurements and (e.g. and ), we have

| (22) | ||||

Using these identities and evaluating at , we can manipulate the first term in (21) as

where we used the importance sampling formula for the second equality. Now, for the second term in (21), also evaluated at , we have

where we again center the integrand and recursively apply the identities of (22). Finally, for the third term in (21) we have

Combining these three terms above proves the condition in (17) holds, thus completing the proof. ∎

Appendix B Another Example of SRF vs ERF

The following examples show a simple case where the SRF and ERF estimands are different, thereby demonstrating why the ERF is not a useful estimate of the effect of an exposure shift. Consider a setting in which with , . Now consider the exposure shift induced by for some . Using the SRF formulation, we find that . On the other hand, the ERF is for all . Therefore, estimators of the two estimands return two different estimates. Moreover, the ERF is identically zero for every treatment value. Thus, it cannot be used to approximate the value of the effect of the exposure shift, even when is correctly specified.

Appendix C Hardware/Software/Data Access

We ran all of our experiments both in the simulation study and the application section using Pytorch (Paszke et al., 2019) on a high-performance computing cluster equipped with Intel 8268 ”Cascade Lake” processors. Due to the relatively small size of the datasets, hardware limitations, and the large number of simulations required, we did not require the use of GPUs. Instead we found that using only CPUs run in parallel sufficed. Reproducing the full set of experiments takes approximately 12 hours with 100 parallel processes, each with 4 CPU cores and 8GB of RAM. Each process runs a different random seed for the experiment configuration.

The code for reproducibility is provided on the submission repository along with the data sources for the simulation experiments. The datasets for these experiments were obtained from the public domain and were adapted from the GitHub repositories shared by Nie et al. (2021) and Bica et al. (2020) as explained in the experiment details section. The data for the application was purchased from https://resdac.org/. Due to a data usage agreement and privacy concerns, manipulation of these data requires IRB approval under which the authors have completed the training and for which reason the data cannot be shared with the public.