∎

22email: [email protected]

Causal Clustering for 1-Factor Measurement Models on Data with Various Types

Abstract

The tetrad constraint is a condition of which the satisfaction signals a rank reduction of a covariance submatrix and is used to design causal discovery algorithms that detects the existence of latent (unmeasured) variables, such as FOFC Kummerfeld and Ramsey (2016). Initially such algorithms only work for cases where the measured and latent variables are all Gaussian and have linear relations (Gaussian-Gaussian Case). It has been shown that a unidimentional latent variable model implies tetrad constraints when the measured and latent variables are all binary (Binary-Binary case). This paper proves that the tetrad constraint can also be entailed when the measured variables are of mixed data types and when the measured variables are discrete and the latent common causes are continuous, which implies that any clustering algorithm relying on this constraint can work on those cases. Each case is shown with an example and a proof. The performance of FOFC on mixed data is shown by simulation studies and is compared with some algorithms with similar functions.

Keywords:

Causal Discovery Latent Variable1 Introduction

Tetrad constraints is a condition of which the satisfaction signals a rank reduction of a covariance submatrix and is used to design causal discovery algorithms that detects the existence of latent (unmeasured) variables, such as FOFC Kummerfeld and Ramsey (2016). Initially such algorithms only work for cases where the measured and latent variables are all Gaussian and have linear relations (Gaussian-Gaussian Case). It has been shown that the tetrad constraints also hold for the case where the measured variables are all binary and they are only connected with one binary latent common cause (Binary-Binary case)Danks and Glymour (2013). Here we prove that the tetrad constraint can also be entailed when the measured variables are of mixed data types and when the measured variables are discrete and the latent common causes are continuous, which implies that any clustering algorithm relying on this constraint can work on those cases. In section 2, we briefly introduce the tetrad constraint and FOFC as an example of algorithms designed based on it. In section 3, we describe each case for which tetrad constraint can work with a proof and an example. In section 4, we discribe the performance of FOFC on simulation studies: in section 4.1, we describe the performance of FOFC on simuated data corresponding to cases in section3; in section 4.2 we compare FOFC using rank correlation and tetrachoric correlation; in section 4.3 we compare FOFC with MGM and MGM-FCI-MAX, two algorithms studying the structure of mixed data.

2 FindOneFactorClusters

We use directed acyclic graphs to represent causal relations between variables. Given a directed edge in the graph, a node is defined as parent if the edge comes from it; the child of the directed edge is the node that it goes into. A directed path is a sequence of connected edges where the source of the latter one is the target of the first one. Given a directed path, the parent of the first edge is the ancestor of the child of the last edge and the target of the last edge is the descendant of its ancestor. Using a directed graph to represent causal relation, each edge represents one direct causal relation relative to the variables in the graph, with the direction from the cause (parent) to the effect (child). We say a causal relation is direct relative to a set of variables if that causal relation cannot be interrupted by interfering any subset of .

We assume the joint probability distribution on the variables respects the Markov condition, i.e., all variables conditioned on their parents are independent from the set of all of their non-descendants. We also assume Faithfulness, which states that all conditional independence relations that hold in the population are consequences of the Markov condition from the underlying true causal graph.

A trek between two variables, and , is defined as either a directed path between and , or two directed paths starting from a common third variable , the two paths intersecting only at , with one path ending at and another at .

The FindOneFactorClusters (FOFC) algorithm aims to find the pure 1-factor measurement models, where each measured variable shares one latent cause and there are no other causal connections between the measured variables Kummerfeld and Ramsey (2016). A set of variables V is causally sufficient when every cause of any two variables in V is also in V. Given a set of measured indicators O, and a causally sufficient set of variables V containing O s.t. no strict subset of V containing O is causally sufficient, then a pure 1-factor measurement models for V is a measurement model in which each observed indicator has at most one latent parents, no observed parents, and no correlated errors. For instance, figure 1 is a pure 1-factor measurement model where to are measured variables and is latent.

If both the measured and latent variables are Gaussian and each measured variable can be written as a linear function of the latent variable plus a noise independent from other measured and latent variables, we can derive the vanishing tetrad constraint

among any four measured variables. In fact, any three measured Gaussian variables in a pure 1-factor measurement model entail a vanishing tetrad with another Gaussian variable no matter how they are partitioned. In this case, the three measured variables are called a pure triple.

FOFC finds a pure 1-factor measurement model by first identifying all pure triples. Then for every pure triple the algorithm expands each triple into a cluster by merging triples with overlapping variables. Finally it outputs the cluster with the largest size, deletes all the clusters that have overlapping variables with the largest one then outputs the largest one that remains.Cox and Wermuth (2002)

3 Working Cases and Proofs

3.1 Binary-Binary

Consider a case of Figure 1, where both the latent and measured variables are binary. According to the lemma inPearl (1988) Danks and Glymour (2013):

Lemma 1.

If , and are all binary variables, 111 denotes that and are independent conditioning on if and only if .

for a group of binary variables sharing a single common binary cause, three vanishing tetrad constraints are satisfied:

As long as there are at least four measured binary variables sharing the same unique binary latent common cause, and no measured variables are caused by other measured variables, FOFC will find that any three of them is a pure triple and manage to merge the triples into a pure cluster.

3.2 Continuous-Mixed

For a case where the latent and some measured variables are Gaussian while others are binary, it is often assumed that the binary variable is generated by partitioning the values of the Gaussian variable into two categories. For the convenience of calculation, we assume all Gaussian variables are standardized. For instance, for a binary variable which can take value zero or one, we assume that it is generated by a standard normal variable such that if , and otherwise. It will be shown in later sections that, for two binary variables, and , that are generated in this way, their joint distribution is decided by the joint distribution between and (see 2), the Gaussian variables that generates each of them. Therefore, the binary variable can be viewed as a child of a Gaussian variable and four such variables form a pure 1-factor measurement model. If only one of the measured variables is binary and the other three are linearly related to the latent, then the measured variables still satisfies vanishing tetrad constraints, but in general this constraint is violated. However, in simulations with mixed types FOFC identifies clusters with two or more continuous (and linear) and two or more binary variables most of the time. This section will introduce why tetrad constraints are not entailed when there are two or more binary measured variables, and describe conditions where tetrad constraints approximately hold when there are two or more binary variables.

3.2.1 One Binary Variable

For a binary variable generated as described above, its covariance with a Gaussian :

where is the pdf of standard normal variable. For any other that forms a pure 1-factor measurement model, we have:

Since forms a pure triple with other measured Gaussian variables, replacing with does not change the pure property of those triples, i.e., adding another variable to the triple creates a vanishing tetrad regardless of the partition. Therefore, FOFC is able to identify them and correctly cluster with other measured Gaussian variables that form a pure 1-factor measurement model with .

We can generalize this case: when is some function of and some independent noise , i.e., while other variables in the model are still linear Gaussian, the vanishing tetrad is still entailed by pure triple. If we denote the latent common cause as such that for all , we have:

| (1) |

Then the tetrad constraint holds for any partition:

3.2.2 More than One Binary Variable

When several measured variables are binary while the latent variable is continuous, the measured variables would fail to form pure triples that entail the tetrad constraints. Assume two of the measured variables, and , are binary and are generated by two standard Gaussian variables and as described before, and let be the cdf of the joint standard bivariate normal distribution between and and the correlation between and , we get the covariance between and :

| (2) |

Theoretically, FOFC is not entailed to build the right cluster, but the accuracy of FOFC clustering of measured variables with mixed types is too high to be chance. Similar to the last case, we can generalize this case: when is some function of and some noise , i.e., and is some function of and some noise , i.e., while other variables in the model are still linear Gaussian, one tetrad constraint is entailed. If we denote the latent common cause as such that for all , we have:

Then the tetrad constraint holds for the partition where the correlation between and is not included:

3.3 Continuous-Binary

For the case when the latent variable is Gaussian and measured variables are binary, we assume that the measured variables are generated with the same mechanism as described in the last section. As shown in figure 2, the mediate variables to forms a pure 1-factor measurement model.. Each mediate Gaussian variable can be written as a linear function of the latent variable :

where is constant and represents the extra cause of that is independent from . As mentioned before, the measured binary variable if for some constant and otherwise. From the section 3.2.2 we know that the covariance between binary variables generated in this way does not reliably allow any three measured variables to form a pure triple, and FOFC is not entailed to work in this case. However, simulation tests show it is nonetheless very accurate. This phenomenon is going to be discussed in the next two sections, from median dichotomy () to non-median dichotomy.

3.3.1 Median Dichotomy

Suppose a binary variable is generated from a median dichotomy, i.e., . When every measured variables is generated from a median dichotomy, the covariance matrix of the measured data behaves quite similarly to a covariance matrix of the Gaussian variables. Given two standard Gaussian variables and with correlation , one property of bivariate normal distribution formed by and is that:

where is the pdf of standard bivariate normal distributionMeyer (2013). Then the covariance between two measured binary variables is:

| (3) |

When the covariance can be written as :

| (4) |

The last step is based on the Taylor expansion of . Equation(3) indicates that the covariance between the two binary variables with median dichotomy is proportional to the correlation between the two mediate Gaussian variables. If , the difference between and is less than 10% Cox and Wermuth (2002). Since the covariance between the measured binary variables is approximately proportional to the correlation between the mediate Gaussian variables, the property that the a partition of any four mediate variables satisfies the tetrad constraints approximately holds on the measured binary variables. In fact, the performance of FOFC on binary data with median dichotomy is almost as good as on Gaussian data.

3.3.2 Non-median Dichotomy

Recall that we assume the binary variable is generated from a standard Gaussian variable with a cutoff value , such that if and otherwise. This section discusses the situation where . When the , FOFC is still reliable for building the right cluster. Recall the covariance between two binary variables:

| (5) | ||||

When is small and the absolute value of and are smaller than 1 , is approximately 1 and is apporximately 0. The covariance can be approximated:

| (6) | ||||

The final step of the approximation entails the binary variable with non-median dichotomy to satisfy the tetrad constraints.

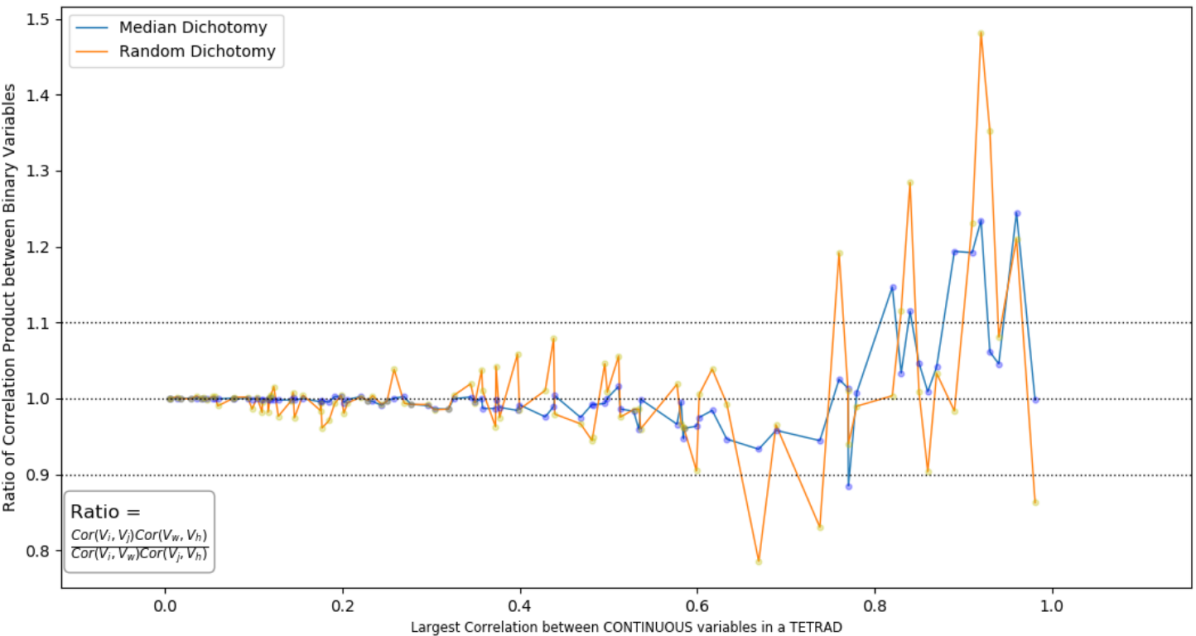

Figure 3 shows how well binary variables derived from normal Gaussian with various dichotomies satisfy the tetrad constraints: in this figure, four binary variables whose original Gaussian variables form a pure 1-factor measurement model.are randomly partitioned twice; the y axis is the ratio of the product of correlation between these two partitions and x axis the largest absolute value the correlation the pairs of Gaussian variables that generates the binary variables in the partition can have. For the sake of convenience, all correlations here are positive since what matters is not being positive or negative but the absolute value. A ratio of 1 is when tetrad constraint holds. We can see that binary variables with median dichotomy satisfy the tetrad constraints with slight variations when the (absolute value of) the correlation between the standard Gaussian variables is less than 0.8; the product ratio of binary variables with non-median dichotomy fluctuates around “1” and become less and less stable as the correlation between the standard Gaussian variables increases, suggesting a susceptibility to external noises and complex connections between mediate Gaussian variables even if each of them forms a pure 1-factor measurement model..

The figure 3 is designed to plot Ratio versus the Largest Absolute Value of Continuous Correlation because, besides the absolute value of cutoff, (the absolute value of) continuous correlation is the only other thing that influences how well binary variables satisfy tetrad constraints. As mentioned in setion 3.3.1, the covariance between two binary variables is closer to proportional to the correlation of the two Gaussians that generate them when the Gaussian correlation is small. The closer to proportional the binary covariance is to the Gaussian correlation, the closer the binary tetrad containing the pair of binary variables satisfies the tetrad constraint (so the ratio is closer to 1) if other conditions are the same. In other words, after two partitions, the largest absolute value among the four continuous correlations determines how close the ratio is to ‘1’.

3.4 Continuous-Discrete

FOFC works effectively on discrete variables with randomly many categories as long as each of them is discretized from a Gaussian variable in the same way the binary variables are generated in the last section. Roughly speaking, as the number of categories increases the performance of FOFC increases. As will be discussed later in this section, the reason behind such performance varies with the number of possible values the variables can take. All the variables discussed in this section are derived from standard Gaussian variables with the same discretization mechanism shown in the Figure 24:

for a discrete variable , there is a standard Gaussian variable such that iff .

Remark.

For any two discrete variables and that are derived from standard Gaussian variable and with linear discretization, such that has k possible values and has g possible values, their covariance is:

where is the correlation between and and denotes the cutoff value of such that when .

This remark can be easily seen by a small proof by induction in the appendix.

According to the remark, if the absolute value of every cutoff of each variable is small enough (smaller than 1), using the same idea of section 2.3.3 Non-median Dichotomy, the covariance between any two discrete variables with and possible values can be approximated:

| (7) | ||||

Recall that is the covariance between and . As mentioned in section 2.3.3, when is not too large. To see whether the tetrat constraints approximately hold between discrete variables generated through linear discretization from continuous variables in a pure 1-factor measurement model.when their correlations are not too large, let us check the tetrads between discrete variables in figure 2 with arbitrary number of categories. More specifically, variable has many categories:

Similarly,

And it’s easy to see:

So the tetrad constraints approximately hold between discrete variables generated through linear discretization from continuous variables in a pure 1-factor measurement model.when their correlations are not too large.

3.4.1 Discrete Variables with Large Number of Categories Behave similarly to the Continuous

As shown above, the tetrad constraints approximately hold between discrete variables generated from continuous variables in a pure 1-factor measurement model.. The satisfaction of the tetrad constraints requires that correlations between the continuous variables are not too large and also the absolute values of cutoffs () are small (to enable the approximation in 2.6 and 2.9).

On the other hand, the more categories a standard Gaussian variable is discretized into, the more similarly the resulting discrete variable behaves to the original Gaussian. Figure 4, which is a plot of the mean of ratio of the correlation between two discrete variables over the correlation between the two original Gaussian variables versus the number of categories a discrete variable takes, illustrates this feature.

The figure is generated by first calculating the mean of ratio: A 9x2 Matrix M is initialized to set the number of categories each pair of discrete variables are taking. represents 9 pairs of variables; one of the two variables in the ith pair takes possible values and another . The value of each entry of is set up like this: ) and is a random number between and . Let denote the variable that has more categories in th pair and the number of categories takes. The setup of entails that if .

For a pair of discrete variables of which the numbers of possible values are and , we calculate the correlation of the pair given the correlation between the Gaussian variables, as an element in ContCor, from which they are derived. Two cutoff arrays, one of length and another of , were initialized such that each array has an increasing order and every two adjacent elements differ from a random number between 0 and 1. The two cutoff arrays are then centered to get a zero mean222the centering operation is just to make sure that some cutoffs are negative and some are positive; there is no difference in the final result depending on whether the cutoff array is centered or not. Given the correlation between the two standard Gaussian variables, the variance of each discrete variable is first calculated, then their covariance using the formula in remark, then their correlation, which is then divided by the Gaussian correlation to get the ratio. For every pair of discrete variables, such a ratio for every element in ContCor is calculated, then all the ratios are summed up and divided by the length of ContCor to get the mean of ratio. After collecting the mean of ratio for every pair of variable, the figure is plotted such that the x axis is the second column of and for a point with as the x-coordinate, its y-coordinate is the mean of ratio for the ith pair.

As shown in this figure, the correlation between the discrete variables approaches the original Gaussian correlation closer and closer as the number of category increases. In fact, when the larger number of category exceeds six, the difference between the discrete correlation and continuous correlation is less than 10%.

4 Test Results

This section presents the performance of FOFC on simulation data: the first part of the performance presented here is about FOFC running on data with various types and comparison of various correlations: sample variance, tetrachoric correlation and rank correlation; the second part is a comparison of performance between FOFC and a clustering algorithm working on data with mixed types called MGMSedgewick et al. (2018).

4.1 Performance on Continuous and Discrete data

4.1.1 General Setup of Simulation

This section presents the performance of FOFC on Gaussian data, binary data and data with more than two possible values discretized from the original continuous variables. The original continuous data is simulated from a graph with five latent variables each having four measured children. In the simulation study, the number of edges connecting the latents can be zero, one, three, six, seven or nine. Figure 6 shows one example where the graph has 5 pure 1-factor measurement models.with 6 edges connecting the five latents. These numbers are chosen to represent cases from those in which all latents are independent from each other to those in which the latents are densely connected. Note that the ability of the algorithm to detect impurities of the model, i.e., one measured variable is directly caused by more than one latent variables or measured variables directly causes other measured variables, is tested but is not explicitly shown, because such ability is found to be stable regardless of the data type. The graphs used for the simulation contains impurities, such as figure 5, where there are three pairs of measured variables having direct causal relations.

After the Gaussian data is simulated, if asked to discretize, the system will generate random cutoffs for each variable to linearly discretize the variable into the number of categories set up beforehand. The discrete data shown in this section belong to the following situations, each situation under three sample sizes(100, 500 and 2000):

-

•

binary variable from median dichotomy

-

•

binary variable from non-median dichotomy

-

•

data with THREE possible values

-

•

data with FOUR possible values

-

•

data with FIVE possible values

-

•

data with SIX possible values

-

•

data with EIGHT possible values

For the sake of convenience, the simulation is set up so that, when running on the discretized data, all variables included have the same number of possible values. This should not raise a concern because as proven in section 3.4, whether the measured discrete variables take the same number of possible values or not does not influence whether they satisfy the tetrad constraints.

4.1.2 Precision and Recall

The two indexes used to evaluate the performance of the algorithm are the very common ones: precision and recall. The performance for each index is shown by a figure.

One expects FOFC to identify latent variables through measured ones and ultimately recover the connection between the latents. Precision measures that, for each group of variables clustered by the algorithm, how accurate it is for them to actually form a pure 1-factor measurement model.: for an estimated cluster, the system will first find the true cluster that contains the largest number of the variables in this estimated cluster and calculate the percentage of variables in the estimated cluster contained in the true cluster as an individual precision for this single estimated cluster. In the best case where all the variables in an estimated cluster indeed forms the same pure 1-factor measurement model., the individual precision will be one; in the worst case the individual precision will be where is the number of variables in the estimated cluster. The system calculates the precision for each estimated cluster, then calculate the mean of all individual precisions as the final precision for the simulation.

Figure 7,9 and 11 shows the precision of FOFC performed on datasets with different sizes. The dataset with sample size 100 and 500 is simulated from graphs where there are 3, 6, or 9 edges connecting the five latent variables(such as figure 6). With sample size 2000, FOFC is tested on simulations where the true graph is in six different conditions. As mentioned before, the difference between the six conditions is the density of the connection between the five latent variables. The connection is introduced as the title of each of the six sub-figures. For each sub-figure, the x-axis is the type of data the algorithm runs on: 0 means the data is Gaussian; 2 indicates binary data with median dichotomy; binary data with non-median dichotomy; 3 data with three possible values; 4 data with four possible values, etc; the y-axis is the mean of final precision of the 40 simulations. Note that the 40 simulations are based on the same true graph.

The precision of FOFC increases as the sample size increases. The reliably high precision in the figure 11 indicates that, regardless of the situation of the data, if the algorithm detects a latent common cause from a group of variables by clustering them together, it is safe to say that these variables indeed share a latent common cause, forming a pure 1-factor measurement model..

Precision measures the accuracy of FOFC on different data types whereas recall measures the sensitivity, i.e., for each pure 1-factor measurement model.(or true cluster) in the true graph, how likely it is for the algorithm to actually put all the variables it contains into an estimated cluster: for an true cluster, the system will first find the estimated cluster that contains the largest number of the variables in this true cluster and calculate the percentage of variables in the true cluster contained in the estimated cluster as an individual recall for this single true cluster. In the best case where all the variables in an true cluster indeed are grouped into the same estimated cluster, the individual recall will be one; in the worst case the individual recall will be 0 when all variables in the true cluster are determined as independent from others. The system calculates the recall for each true cluster, then calculate the mean of all individual recalls as the final recall for the simulation.

Figure 8, 10 and 12 show the recall of the FOFC tested on the datasets from which the precisions with the corresponding sample sizes shown above are calculated. The connection between the five latent variables is introduced as the title of each of the six sub-figures. For each sub-figure, the x-axis has the same meaning as before; the y-axis is the mean of final recall from the 40 simulations each time.

The recall of the FOFC running on each data type is similar to precision except for the binary data with non-median dichotomy. As the number of edges connecting the five latent variables increases, the recall for non-median binary data decreases from 0.8 to less than 0.4, showing that the algorithm gradually loses the ability to identify pure clusters using the tetrad constraints. The high precision and low recall indicate that, when the data is non-median binary, FOFC can form correct clusters but can only recover some of them while falsely leaves many variables outside of clusters which they form in the true graph. A typical output of the algorithm in this situation is shown in figure 13 when the true graph is figure 6. Since the algorithm is run on binary data instead of the original continuous data, all the variables in figure 6 represents the Gaussian variable and in figure 13 the binary variable discretized from the original. in figure 13 represents the latent variable the algorithm postulated for each estimated cluster. Another thing worth mentioning is that, although FOFC is designed to study the latent variables, the algorithm itself does not calculate the connections between the latent variables and will not put edges between them.

As pointed in section 3.3.2, when the binary data is discretized with a random number between zero and one as the cutoff value whereas the original Gaussian variable has a mean of zero, although the correlation between the binary variables of which the parents form a pure 1-factor measurement model.approximately satisfy the tetrad constraints, the clustering decision is disturbed by the connections between the latent variables. The disturbance becomes inevitable for at least two reasons. First, the absolute value of the correlation shrinks after the dichotomy, making the algorithm more likely to judge two variable as independent even if they are not, not to mention testing the tetrad constraints. Second, the variables only approximately satisfy the tetrad constraints; as mentioned before, multiple factors can influence how well the constraints are satisfied, such as how large a cutoff value is. The variables not belonging to the same cluster will have non-zero correlation when the latent variables are not independent, making it harder for the algorithm to test whether certain group of variables satisfy the tetrad constraints or not.

4.2 Testing Rank Correlation and Tetrachoric Correlation

Rank correlation and tetrachoric correlation calculate the correlation between discrete variables. Rank correlation measures the ordinal association between two discrete variables. The tetrachoric correlation is the specific case of polychoric correlation: given two discrete variables are generated from two Guassian variable, the polychoric correlation estimates the association between the underlying Guassian variables from the discrete variables, and tetrachoric correlation is the case where the discrete variables are binary.

This section presents the performance of FOFC when the rank and tetrachoric correlation are used in the algorithm. That is, instead of using correlations, the variations use Pearson rank correlations or tetrachoric correlations. The setup of the true graph and how the precision and recall are calculated are the same as before. For a graph with 3, 6 or 9 edges connecting the five latent variables, 40 simulations are generated with 500 data points each time.

Figure 15 and 14 compares the recall and precision of FOFC running with rank correlation with the result we get before. As before, the x-axis denotes how many categories the variables are discretized into; the y-axis is the final mean of 40 simulations of either recall or precision, depending on the graph. The meaning of each line is explained on the top of the graph, which consists of two part: what kind of correlation is calculated and the number of edges connecting the latent variables in the graph from which the data is generated. For instance, the “Rank-3” in figure 15 means that this line denotes the result calculating rank correlation when the five latent variables are connected with 3 edges in the true graph.

Note that each graph includes the performance of FOFC on Gaussian variables (it is the statistics corresponding to the 0 on the x-axis). Since in this case the variable is continuous, the variation is always calculated as correlation (so, even if in figure 15 the red line “Rank-3” starts with the point (0, 0.91), this 0.91 recall is for the FOFC running a continuous variables, therefore the variation used here is correlation) . Comparing to the perfomance of FOFC in the last section with the same sample size, we see that the only statistics higher in the rank correlation is the precision for the case where variables have three possible values. In any other cases, including the recall of rank correlation for the variables taking three possible values, the algorithm performs better when calculating the correlation. One possible explanation is that the rank correlation does not reflect linearity, which is crucial to the performance of FOFC when the variables are discretized.

Figure 17 and 16 compares the recall and precision of FOFC running with tetrachoric correlation with the result we get before. In each graph, the y-axis has the same meaning as before, and the x-axis denotes the type of the variable: whether it’s continuous, binary from median dichotomy or non-median dichotomy. Similar to rank correlation, the recall and precision of FOFC with tetrachoric correlation decrease as the variable goes from continuous to non-median dichotomy.

4.3 Comparing FOFC with MGM and MGM-FCI-MAX

4.3.1 Introduction of MGM

MGM is an algorithm learning graphical structure over continuous and discrete variablesSedgewick et al. (2018). Unlike FOFC, which only relies on correlation, MGM uses a pairwise graphical modelLee and Hastie (2015):

where there continuous variables and discrete varaibles .

Using the pseudolikelihood method, MGM estimates the parameters and use them to build an undirected graph:

-

•

an edge is added between two nodes and representing continuous variables and if

-

•

an edge is added between two nodes and representing continuous variable and discrete variable if it’s not the case where for all values of

-

•

an edge is added between two nodes and representing discrete variables and if for all values of and

The undirected graph is built with the idea that the absence of an edge between two nodes and means that variables and are independent conditioning on all other variables. This undirected graph is then pruned using an independence test. For instance, when running on a dataset with three variables where the true graph is figure 18, the MGM will first generate figure 19 since any two variables are dependent given the third one. The final output will be a undirected graph with the edge between and removed since they are marginally independent.

4.3.2 Customized Recall and Precision Test

The recall and precision used to evaluate the FOFC are the same as before, but customized for MGM.

MGM does not decide whether some variables share a latent common cause, but we can interpret its output in that way: if some variables form a clique, this clique is equivalent to an estimated cluster from FOFC. Therefore, the recall can be calculated by checking whether all variables from a true cluster form a clique in the MGM output. Similar to the calculation for FOFC, individual recall is calculated for each true cluster, which will be either 1 if a clique is formed or 0 if not. After this step, final recall is calculated as the mean of individual recall.

The precision for MGM is expected to measure that, for each group of variables forming a clique, how accurate it is for them to actually form a pure 1-factor measurement model.in the true graph. In order to do that, the system will first check if the variables forming a pure 1-factor measurement model.in the true graph forms a clique in the MGM output, then will build groups of variables called fake clusters. Each fake cluster has the same size as each true cluster, but variables in it do not belong to the same pure 1-factor measurement model.in the true graph. For instance, given the true graph figure 20, the system will not only check whether variables in a true cluster, such as form a clique in the MGM output, it will also check whether variables in a fake cluster, such as , form a clique.

The precision is calculated based on the formula of precision:

where stands for “true positive” and “false positive”. For each MGM output, the number of true clusters that are cliques in the output is treated as the and the number of fake clusters that are cliques in the output is treated as the . Therefore, for each MGM output, in the best case the precision will be 1 and worse case 0. If the MGM output is a complete graph, the precision is 0.5 since all true and fake clusters are all cliques, i.e., .

Figure 20 shows the graph used for simulation. After the Gaussian data is generated, it will first be discretized with random cutoff value, then mixed with the original Gaussian data such that in each pure cluster, half of the measured variables are continuous and the other half are discrete. Figure 21 shows the result of the comparison. For each sub-figure the x-axis is the number of possible values the discrete variable in the mixed dataset can take: to distinguish the two algorithms, means the discrete variable can take possible values and the mixed dataset is run by FOFC; means possible values and is run by MGM; the y-axis is the mean of precision (recall) of 40 simulations with 2000 data points each time. Note that the 40 simulations are based on the same true graph.

To make the comparison explicit, the performances of each algorithm on the same mixed dataset are put next to each other. The blue bar refers to FOFC and the orange MGM.

Figure 21 shows that the recall of FOFC and MGM are high, but MGM is better than FOFC. It can be caused by the fact that FOFC is susceptible to the shrinking of correlation due to discretization. The difference between the precision of FOFC and MGM is rather obvious.

The precision of MGM being between 0.6 and 0.7 indicates that, when the latent variables are not independent, MGM has a hard time deciding whether variables are from the same pure cluster or not. In fact, the precision is consistently 0.5 when the true graph has two pure 1-factor measurement models.and the one latent variable is a direct cause of another. If the latent variables are independent, the MGM performs as well as, or slightly better in terms of recall than the FOFC.

4.4 Comparing FOFC with MGM-FCI-MAX

MGM-FCI-MAX uses the output of MGM, an undirected graph, as the input of FCI-MAX, which applies the edge orientation rule with a “maximum probability-based search technique” Raghu et al. (2018). The setup of simulation is the same as section 4.2. Since the output of MGM-FCI-MAX is also an undirected graph (because of the setup of simulation), the precision and recall for MGM-FCI-MAX is calculated in the same way as for MGM.

Fig 22 and Fig 23 show that the precision and recall of MGM-FCI-MAX is higher than FOFC only when the discrete variables in the dataset are binary. The precision of MGM-FCI-MAX decreases as the number of categories the discrete variables has increases. It can be explained by the fact that the conditional independence test used in MGM-FCI-MAX is designed specifically for mixed data, while as the number of categories increases for the discretized variable, the variable behaves similar to the continuous, as shown in 4.

5 Appendix: Proof for the Remark in Section 3.4

Remark.

For any two discrete variables and that are derived from standard Gaussian variable and with linear discretization, such that has k possible values and has g possible values, their covariance is:

where is the correlation between and and denotes the cutoff value of such that when .

Proof.

We are going to prove the it by induction.

Basic case: when k=g=2,

| (8) | ||||

Induction Hypothesis: assume when and ,

for a ==== *

Now let (shown in Figure 25) and .

Let denote the probability of when has categories. Similarly, denotes the joint probability of and when has categories, and the joint probability of and when has categories (of course, it means that the number of categories of remains the same). According to the formula:

can be further reformulated by analyzing the increment of number of categories of :

-

1.

As shown in figure 25, the increment of categories does not change the former cutoff values, therefore when

-

2.

From figure 25 it is easy to see that

| (9) | ||||

Notice that (I) is just when has categories (, ); some simple calculation gives (II) . By Induction Hypothesis, we get:

It can be similarly proven that holds when and .∎∎

References

- Cox and Wermuth (2002) Cox DR, Wermuth N (2002) On some models for multivariate binary variables parallel in complexity with the multivariate gaussian distribution. Biometrika 89(2):462–469, URL http://www.jstor.org/stable/4140591

- Danks and Glymour (2013) Danks D, Glymour C (2013) Linearity properties of bayes nets with binary variables. CoRR abs/1301.2263, URL http://arxiv.org/abs/1301.2263, 1301.2263

- Kummerfeld and Ramsey (2016) Kummerfeld E, Ramsey J (2016) Causal clustering for 1-factor measurement models. In: Proceedings of the 22Nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, ACM, New York, NY, USA, KDD ’16, pp 1655–1664, DOI 10.1145/2939672.2939838, URL http://doi.acm.org/10.1145/2939672.2939838

- Lee and Hastie (2015) Lee JD, Hastie TJ (2015) Learning the structure of mixed graphical models. Journal of Computational and Graphical Statistics 24(1):230–253, URL http://arxiv.org/abs/1301.2263, 1301.2263

- Meyer (2013) Meyer C (2013) The bivariate normal copula. Communications in Statistics - Theory and Methods 42(13):2402–2422, DOI 10.1080/03610926.2011.611316, URL https://doi.org/10.1080/03610926.2011.611316, https://doi.org/10.1080/03610926.2011.611316

- Pearl (1988) Pearl J (1988) Probabilistic Reasoning in Intelligent Systems: Networks of Plausible Inference. Morgan Kaufmann Publishers Inc., San Francisco, CA, USA

- Raghu et al. (2018) Raghu VK, Ramsey J, Morris A, Manatakis DV, Sprites P, Chrysanthis PK, Glymour C, Benos PV (2018) Comparison of strategies for scalable causal discovery of latent variable models from mixed data. In: International Journal of Data Science and Analytics

- Sedgewick et al. (2018) Sedgewick A, Buschur K, Shi I, Ramsey JD, Raghu VK, Manatakis DV, Zhang Y, Bon J, Chandra D, Karoleski C, Sciurba FC, Spirtes P, Glymour C, Benos PV (2018) Mixed graphical models for integrative causal analysis with application to chronic lung disease diagnosis and prognosis. Bioinformatics 35(7):1204–1212