CATRO: Channel Pruning via Class-Aware

Trace Ratio Optimization

Abstract

Deep convolutional neural networks are shown to be overkill with high parametric and computational redundancy in many application scenarios, and an increasing number of works have explored model pruning to obtain lightweight and efficient networks. However, most existing pruning approaches are driven by empirical heuristic and rarely consider the joint impact of channels, leading to unguaranteed and suboptimal performance. In this paper, we propose a novel channel pruning method via class-aware trace ratio optimization (CATRO) to reduce the computational burden and accelerate the model inference. Utilizing class information from a few samples, CATRO measures the joint impact of multiple channels by feature space discriminations and consolidates the layer-wise impact of preserved channels. By formulating channel pruning as a submodular set function maximization problem, CATRO solves it efficiently via a two-stage greedy iterative optimization procedure. More importantly, we present theoretical justifications on convergence of CATRO and performance of pruned networks. Experimental results demonstrate that CATRO achieves higher accuracy with similar computation cost or lower computation cost with similar accuracy than other state-of-the-art channel pruning algorithms. In addition, because of its class-aware property, CATRO is suitable to prune efficient networks adaptively for various classification subtasks, enhancing handy deployment and usage of deep networks in real-world applications.

Index Terms:

Deep Model, Pruning, Compression, Subtask, Trace Ratio.I Introduction

In the past few years, deep convolution neural networks (CNNs) have achieved impressive performance in computer vision tasks, especially image classification [1, 2]. However, deep models often have an enormous number of parameters, which requires colossal memory and massive amounts of computations. These requirements not only increase infrastructure costs but also impose a great challenge to deploy models in resource-constrained environments, including mobile devices, embedded systems, and autonomous robots. Therefore, it is significant to obtain deep models with high accuracy but relatively low computations used in various scenarios. Pruning is an effective way to accelerate and compress deep networks by removing less important connections in the network. Channel pruning [3, 4, 5], as a hardware-friendly method, directly reduces redundancy computation by removing channels in convolutional layers and is widely used in practice.

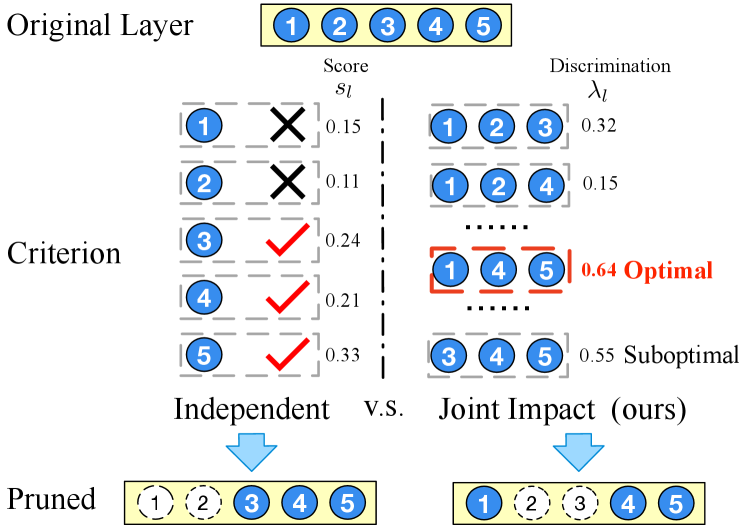

Among recent advances in channel pruning, most approaches are driven by empirical heuristic and rarely consider the channel dependency. Many pruning methods directly measure the importance of individual channels by the weights of filters [3, 6, 4], and others introduce some intuitive losses [7, 8, 5] about reconstruction, discrimination, sparsity, etc. However, neglecting the dependency among channels leads to suboptimal pruning results. For example, even multiple channels are important when examined independently, keeping them all in a pruned network may still be redundancy because of similar extracted features from different channels. On the contrary, two or more individually unimportant channels may have boosted impact when working together. Such joint impact have been moderately studied in machine learning methods [9, 10, 11] but is rarely exploited in channel pruning. CCP [12] moves one step ahead with only pairwise channel correlations in pruning, and it remains demanding and challenging for effective utilizations and rigorous investigations of multiple channel joint impact.

In this paper, we develop a novel channel pruning method. As shown in Fig. 1, our method benefits from taking joint impact of channels into account and is superior to most existing methods pruning channels independently. The proposed method extends network discriminative power [8, 13] for multi-channel setting and find channels by solving a combinatorial optimization problem. To be more specific, we firstly transfer the channel combination optimization into a graph trace optimization, and then we solve the trace optimization in a global view to obtain a result with the consideration of the joint impact. By formulating the pruning objective into a submodular set function maximization problem, we further show a theoretical lower boundary guarantee on accuracy and convergence under an assumption about the relationship between discrimination and accuracy.

In addition, different from most approaches measuring the channel importance by the filter weights, the discrimination in CATRO is based on input samples and class-aware in the optimization steps. This enables CATRO to adaptively mine subsets of data samples and handle various pruning tasks with the same original network. For example, suppose we have a large traffic sign classification model that works perfectly in the whole world, and we need to deploy a tiny model on an autonomous vehicle operated in a small town, where only a subset types of signs are on the road. This kind customization and compression combination tasks are ubiquitous in the real world and require efficient solutions. We name such application scenarios classification subtask compression and showcase that CATRO is quite suitable in these scenarios.

To the best of our knowledge, CATRO is the first to exploit the multi-channel joint impact to find the optimal combination of preserved channels in pruning and the first to use submodularity for effective and theoretically guaranteed pruning performance. We summarize our contributions as follows:

-

•

We introduce graph-based trace ratio as discrimination criteria to consider the multi-channel joint impact for channel pruning.

-

•

We formulate the pruning to a submodular maximization problem, provide theoretical guarantees on convergence of the pruning step and performance of pruned networks, and solve it with an efficient two-stage algorithm.

-

•

We demonstrate in extensive experiments that our method achieves superior performance compared to existing channel pruning methods by the efficiency and accuracy of the pruned networks.

II Related Work

II-A Channel Pruning

The recent advances of weight pruning can be mainly categorized into unstructured pruning and channel pruning. Unstructured pruning can effectively prune the over-parameterized deep neural networks (DNNs) but results in irregular sparse matrices, which require customized hardwares and libraries to support the practical speedup, leading to a limited inference acceleration in most practical cases [14, 15]. Recent work concentrates on channel pruning [16, 4, 17, 18, 19, 20, 21, 22, 23, 24, 25], which prunes the whole channel and results in structured sparsity. The compacted DNN after channel pruning can be directly deployed on the existing prevalent CNN acceleration frameworks without dedicated hardware/libraries implementation. Early work [26] proposes a straightforward pruning heuristic, penalizing weights with -norm group lasso regularization and then eliminate the least -norm of channels. Network Slimming [16] introduces a factor in the batch normalization layer with respect to each channel and evaluates the importance of channels by the magnitude of the factor. Then, many criteria are proposed to evaluate the importance of neuron. SCP [6] measures the relative importance of a filter in each layer by calculating the -norm and -norm, respectively. FPGM [4] removes the filters minimizing the sum of distances to others. Variational CNN pruning [27] provides a Bayesian model compression technique, which approximates batch-norm scaling parameters to a factorized normal distribution using stochastic variational inference. GBN [28] introduces a gate for each channel and performs a backward method to optimize the gates. Then it independently prunes channels by the rank of gates. Similarily, DCPH [23] designs a channelwise gate to enable or disable each channel. Greedy algorithm is also applied to identify the subnetwork inside the original network [29]. Recently, MFP [22] introduces a new set of criteria to consider the geometric distance of filters. These works evaluate and prune the importance of channels individually. Another work [13] leverage Fisher’s linear discriminant analysis (LDA) to prune the last layer of the DNN, which, however is empirical heuristic and suffers from the expensive cost of cross-layer tracing. CCP [12] exploits the inter-channel dependency but stops with only pairwise correlations. It prunes channels without global consideration either. SCOP [30] utilize samples to set up a scientific control and then prunes the neural network in the group by introducing generated input features. Recent prevailing AutoML-based pruning approaches [31, 32, 33, 34, 35, 36] can achieve a high FLOPs reduction of DNNs. For instance, AMC [31] leverages deep reinforcement learning (DRL) to let the agent optimizes the policy from extensive experiences by repeatedly pruning and evaluating for DNNs. DNCP [21] uses a set of learnable architecture parameters, and calculates the probability of each channel being retained based on these parameters. However, they usually requires tremendous computation budgets due to the large search space and trial-and-error manner.

II-B Submodular

In recent years, the combinatorial selection based on submodular functions has been one of the most promising methods in machine learning and data mining. It has been a surge of interest in lots of computer tasks, such as visual recognition [37], segmentation [38], clustering [39, 40], active learning [41] and user recommendation [42]. The submodular set function maximization [43], which is to maximize a submodular function, is one of the most basic and important problem. There exist several algorithms for solving nonnegative and monotone submodular function maximization. Under the uniform matroid constraint [43], a standard greedy algorithm gives an approximation [44, 45, 46] in polynomial time, and is shown to be the best possible approximation unless [44]. There are also many works investigating more effective algorithms [47, 48] and many other works investigating the maximization of a submodular set function under different constraints [49, 50, 51]. Although submodularity has been widely used in many tasks and widely studied, it has not been explored in model compression as far as we known.

III Methodology

III-A Problem Reformulation

For a CNN with a stack of convolution (CONV) layers and weight parameters , given a set of image samples from classes and the corresponding labels , channel pruning aims to find binary channel masks with the following objective:

| (1) | ||||

| s.t. |

where is the loss function (e.g., cross-entropy for classification), is the channel number of the -th layer, is the FLOPs function, and is a given FLOPs constraint.

Most methods prune channels with some surrogate layer-wise criteria instead of directly solving Eq.(1). Following this setting and with the goal of keeping channels in layer , we can express the pruning objective as follows:

| (2) | ||||

| s.t. |

where is the feature map of input in the -th layer, and is elementwise multiplication with broadcasting. A variety of have been proposed [7, 8, 4, 5], which may not be completely equivalent to solving Eq.(1) but effective in practice.

Alternatively, we can formulate Eq.(2) as a matrix projection problem. For clarity, we denote the -th element in a vector (or an ordered set) as . We build an ordered set of the channel index with and an indicator matrix , where denotes the one-hot vector with its -th element as one. Therefore, Eq.(2) can been expressed as follows:

| (3) | ||||

| s.t. |

For an input , we denote the pruned feature map in layer with by .

III-B Trace Ratio Criterion for Channel Pruning

In order to obtain effective criteria for model pruning, inspired by the classical Fisher Linear Discriminant Analysis (LDA) [52], we dig into the feature discrimination for measuring multi-channel joint impact. As a simple example, Fig. 2 visualizes two feature spaces from the -th layer in a well-trained ResNet-20 model trained on CIFAR-10. The red and blue points represent features of images containing ships and airplanes, respectively. We have an intuitive feeling that the features produced by channels in the right figure are more discriminative, and a classifier built on that space is more likely to outperform that on the left space. For deep CNNs with more layers, we hypothesis the discrimination effects accumulate and the classifier performance gap enlarges. We describe the relation between space discrimination and classifier accuracy in Assumption 1.

Assumption 1

For a classification task, if samples are more discriminatory in feature space than in feature space , it holds that the accuracy of a classifier built on , namely , is no less than that of the classifier built on with large probability, i.e., , where means “no less with probability ”.

The above assumption enables us to concretely measure and optimize the discriminations. Suppose denotes data samples from the training set with classes and denotes the corresponding class label. We build two graph weight matrices and to depict the prior knowledge about data sample relationship. reflects within-class relationship, where each element has a larger value if samples and belong to the same class, and smaller otherwise. Analogically, represents the between-class relationship, where is larger if samples and belong to different classes. Without any other priors, we directly use the Fisher score [52] as the graph metric for its simplicity and efficiency:

| (6) | |||||

| (7) |

where is the number of samples in the -th class, and .

Given Assumption 1 and the prior matrices in Eq.(6) and Eq.(7), a natural criterion for channel pruning is to keep channels that reduce the discrimination of samples within the same class () and enlarge that of samples from different classes (). Therefore, we can replace Eq.(3) by

| (8) | ||||

| s.t. |

As is a diagonal matrix with and is a diagonal matrix with , and are two Laplace matrices. Based on the Laplace matrix properties, Eq.(8) is mathematically equivalent to the following trace optimization:

| (9) | ||||

| s.t. |

where is the aggregated feature map for in layer , and denotes the trace. Equivalently, our goal is to find the optimal channel index set with the maximum trace ratio criterion for discrimination:

| (10) |

where and . Since , for all satisfying we have

| (11) | |||||

| (12) |

Therefore, to optimize Eq.(9) is further equivalent to optimize the following optimization:

| (13) | ||||

To be more clear, the objective in Eq.(9) is the left-hand side terms of the inequation in Eq.(11), and the optimal solution of Eq.(9) makes the equality of the inequation in Eq.(11) hold (by the definition of ). And similarly, given , the objective in Eq.(13) is the left-hand side terms of the inequation in Eq.(12), and the solution of Eq.(13) makes the equality of the inequation in Eq.(12) hold. Lastly, the equality of the inequation in Eq.(11) holds if and only if the equality of the inequation in Eq.(12) holds. Thus, the optimal solution of Eq.(9) makes equality of the inequation in Eq.(11) hold, makes equality of the inequation in Eq.(12) hold, and is also the optimal solution of Eq.(13). In contrast, the optimal solution of Eq.(13) makes equality of the inequation in Eq.(12) hold, makes equality of the inequation in Eq.(11) hold, and is also the optimal solution of Eq.(9).

Noting that solving Eq.(13) naturally considers the multi-channel joint impacts inherited in .

III-C Iterative Layer-wise Optimization

To solve Eq.(13), we introduce a submodular set optimization, provide theoretical guarantees on the pruned model accuracy, and propose a layer-wise pruning procedure.

Firstly, we introduce as the discrimination score for channel in layer given a specified ratio , and as the index set function given an index set . When , we notice that Eq.(13) is equivalent to the following one:

| (14) | ||||

| s.t. |

We introduce two nice properties of set functions and show satisfies them.

Definition 1

(Submodularity): A set function is submodular if for any subset and any element , it has .

Definition 2

(Monotonicity): A set function is monotone if for any subset and any element , it has .

Lemma 1

Given a , is a set function with monotonicity and submodularity.

Proof:

Suppose , where is the number of channels in layer . For any , we have

and

Therefore, is a set function with monotonicity and submodularity. ∎

Secondly, to maximize with a fixed , we show that sequentially selecting the indexes with the largest score obtains a model with a guaranteed accuracy according to Theorem 1.

Theorem 1

Given a , maximizing by sequentially selecting elements with the highest scores from , with representing the selected index set, results a model that, with a large probability , has a lower boundary on the accuracy related to the optimal index set , i.e.,

where is the monotone non-decreasing mapping from to accuracy under Assumption 1.

Proof:

Suppose the optimal solution is , the index set obtained by sequentially selected top elements is . Let represent the index set at step of the sequencital selection. Since is monotonic and submodular, following the similar proof [43], we have the inequalities:

and then we have

When is obtained, we can calculate a discrimination:

Thus, can be regarded as a description of discrimination. Under the assumption 1, let is the monotone non-decreasing mapping from to the accuracy , we have such an accuracy lower boundary with a large probability :

We can further express the boundary as

∎

Finally, we consider to iteratively optimize the discrimination ratio. Suppose the current index set is and the discrimination ratio is . After maximizing with the index sequential selection procedure, we get a new index set and a new ratio . From Theorem 2, we have .

Theorem 2

The obtained by iteratively maximizing is monotonic increasing and converges to the optimal value.

Proof:

Under the specific indicator matrix , where denotes the one-hot vector with its -th element as one. we have the following equivalent optimizations:

The first equivalence relation comes from the definition of trace and , the second equivalence relation comes from the monotonicity of exponential function and logarithmic function, and the last comes from the definition of .

Suppose the current index set (of the iteration round ) is and the discrimination is , and after maximizing we get the next index set . Let

then

which means is a monotonic non-increasing function. In addition, because of the definition of .

Let be a piecewise logarithmic linear approximation of at point , namely

then we know , and , which means is also monotonic non-increasing.

Let , we have

which is the same value as the discrimination score calculated with the next index set .

Since is a monotonic non-increasing function with optimal (i.e., the largest) satisfying , we have

for . This shows when .

In addition, the definition of guarantees that can only take a finite number of different values during the iterative optimization process.

Thus, the discrimination obtained by iteratively maximizing is monotonic increasing until it converges to the optimal value. ∎

Input: sampled data and their labels ; Original CNN model with CONV layers; Original and pruned channel number and for all layers; Stop criterion

Output: Channel masks for all layers

The whole layer-wise pruning procedure, given the channel budgets for each layer, is shown in Algorithm 1.

Theorems 2 and 1 show that, given a well-trained unpruned network and channel numbers , the pruning procedure in Algorithm 1 leads to monotonically increasing feature discrimination value until reaching the optimal solution (by Theorem 2) and obtains the pruned network with an accuracy lower boundary proportional to the best-pruned network with large probability (by Theorem 1).

Note that the objective in each layer is not a simply linear sum of each channel. Determining the value of in incorporates the joint-channel impact and depends on other channels. With the update of , channels selected in the previous rounds may be unselected. In other words, the set of channels is not built by step-by-step greedy inclusion.

Compared to training-based pruning, the time complexity of our layer-wise pruning procedure is linear to the number of sampled data, which is usually much smaller than (and increased sub-linearly to) the number of original training data, and it only performs inference once and no back-propagation. Theoretically, the convergence of the pruning step is guaranteed; Empirically, it only uses three to four iterations to converge to the pruned network structures, taking a negligible amount of time.

III-D Greedy Architecture Optimization

We further propose an approximate algorithm to determine the architecture of the pruned model, i.e., to find the optimal channel numbers , as a prerequisite of the layer-wise pruning stage shown in Algorithm 1. Besides the discrimination criteria, we also consider the FLOPs constraint, aiming to quickly estimate the effects of local channel number changes on the whole model’s power and efficiency.

Input: sampled data and their labels ; Original CNN model with CONV layers; Initial channel number ; Step size ; FLOPs constraint

Output: Channel numbers for all layers

We first introduce a network discrimination approximation function with given , which is . Taking the suboptimal solution in Theorem 1 and ignoring the effects of in other layers, we have its derivative as follows:

| (15) |

where is the descending sorted elements of . The two approximations come from the sequentially selection of element in Theorem 1 and Taylor expansion, respectively.

On the other hand, to measure the network computational efficiency, we define the FLOPs function of layer as , where , , and is the -th layer’s kernel size, feature map width, and feature map height, respectively. The FLOPs function for the entire network is , and its derivative for is as follows:

| (16) |

As a tradeoff to jointly considering computations and discriminations, we define the following function to evaluate the effect of changing each channel number :

| (17) |

For the global architecture optimization, we manipulate the channel numbers without selecting the channels. Therefore, we take a greedy coordinate ascent strategy to find the optimal channel numbers, which is describe in Algorithm 2. It is worth noting that, similar to the layer-wise pruning procedure, this algorithm only performs inference once without backpropagation. After that, it only needs to recalculate a small portion of variables in each iteration.

III-E Overall Pruning Procedure

We take the two stages together to build CATRO, the proposed channel pruning method. As illustrated in Fig. 3, CATRO first employs Algorithm 2 (Greedy Selection for Channel Numbers) to select the channel numbers for each layer and then uses Algorithm 1 (Layer-wise Pruning with Trace Ratio Criterion) to prune each layer. It fine-tunes the pruned network as the last step on all available training data, similarly to other pruning methods.

IV Experiments

| Depth | Method | CIFAR-10 | CIFAR-100 | ||||

|---|---|---|---|---|---|---|---|

| Accuracy | Acc. Drop | FLOPs (Drop ) | Accuracy | Acc. Drop | FLOPs (Drop ) | ||

| 20 | LCCL [53] | 91.68% | 1.06% | 2.61E7 (36.0%) | 64.66% | 2.87% | 2.73E7 (33.1%) |

| SFP [6] | 90.83% | 1.37% | 2.43E7 (42.2%) | 64.37% | 3.25% | 2.43E7 (42.2%) | |

| FPGM [4] | 91.09% | 1.11% | 2.43E7 (42.2%) | 66.86% | 0.76% | 2.43E7 (42.2%) | |

| CNN-FCF [54] | 91.13% | 1.07% | 2.38E7 (41.6%) | - | - | - | |

| Taylor FO [55] | 91.52% | 0.48% | 2.83E7 (32.7%) | - | - | - | |

| PScratch [56] | 90.55% | 1.20% | 2.06E7 (50.0%) | - | - | - | |

| DHP [57] | 91.54% | 1.00% | 2.14E7 (48.2%) | - | - | - | |

| SCOP [30] | 90.75% | 1.44% | 1.83E7 (55.7%) | - | - | - | |

| Ours | 91.76% | 0.98% | 1.95E7 (52.7%) | 68.61% | 0.99% | 2.02E7 (51.0%) | |

| 32 | LCCL [53] | 90.74% | 1.59% | 4.76E7 (31.2%) | 67.39% | 2.69% | 4.32E7 (37.5%) |

| SFP [6] | 92.08% | 0.55% | 4.03E7 (41.5%) | 68.37% | 1.40% | 4.03E7 (41.5%) | |

| TAS [32] | 93.16% | 0.73% | 3.50E7 (49.4%) | 72.41% | -1.80% | 4.25E7 (38.5%) | |

| FPGM [4] | 92.31% | 0.32% | 4.03E7 (41.5%) | 68.52% | 1.25% | 4.03E7 (41.5%) | |

| CNN-FCF [54] | 92.18% | 0.25% | 3.99E7 (42.2%) | - | - | - | |

| LFPC [19] | 92.12% | 0.51% | 3.27E7 (49.4%) | - | - | - | |

| SCOP [30] | 92.13% | 0.53% | 3.00E7 (55.8%) | - | - | - | |

| LFPC [19] | 92.12% | 0.51% | 3.27E7 (49.4%) | - | - | - | |

| DCPH [23] | 92.85% | -0.49% | 4.82E7 (30.0%) | 69.51% | -0.63% | 4.82E7(30.1%) | |

| MFP [22] | 91.85% | 0.78% | 3.23E7 (53.2%) | - | - | - | |

| Ours | 93.17% | 0.77% | 2.98E7 (56.1%) | 71.71% | 0.24% | 3.81E7 (43.8%) | |

| 56 | LCCL [53] | 92.81% | 1.54% | 7.81E7 (37.9%) | 68.37% | 2.96% | 7.63E7 (39.3%) |

| SFP [6] | 93.35% | 0.24% | 5.94E7 (52.6%) | 68.79% | 2.61% | 5.94E7 (52.6%) | |

| AMC [31] | 91.90% | 0.90% | 6.29E7 (50.0%) | - | - | - | |

| DCP [8] | 93.49% | 0.31% | 6.27E7 (50.0%) | - | - | - | |

| TAS [32] | 93.69% | 0.77% | 5.95E7 (52.7%) | 72.25% | 0.93% | 6.12E7 (51.3%) | |

| FPGM [4] | 93.49% | 0.10% | 5.94E7 (52.6%) | 69.66% | 1.75% | 5.94E7 (52.6%) | |

| CNN-FCF [54] | 93.38% | -0.24% | 7.20E7 (42.8%) | - | - | - | |

| GAL [58] | 93.38% | 0.12% | 7.83E7 (37.6%) | - | - | - | |

| CCP [12] | 93.50% | 0.04% | 6.73E7 (47.0%) | - | - | - | |

| LFPC [19] | 93.24% | 0.35% | 5.91E7 (52.9%) | 70.83% | 0.58% | 6.08E7 (51.6%) | |

| SCP [5] | 93.23% | 0.46% | 6.10E7 (51.5%) | - | - | - | |

| HRank [18] | 93.17% | 0.09% | 6.27E7 (50.0%) | - | - | - | |

| DHP [57] | 93.58% | -0.63% | 6.47E7 (49.0%) | - | - | - | |

| PScratch [56] | 93.05% | 0.18% | 6.35E7 (50.0%) | - | - | - | |

| LeGR [59] | 93.70% | 0.20% | 5.89E7 (53.0%) | - | - | - | |

| DCPH [23] | 93.26% | -0.60% | 8.76E7 (30.2%) | 71.31% | -0.41% | 8.78E7(30.0%) | |

| MFP [22] | 93.56% | 0.03% | 5.94E7 (52.6%) | - | - | - | |

| Ours | 93.87% | 0.03% | 5.83E7 (54.0%) | 72.13% | 0.67% | 5.64E7 (55.5%) | |

| 110 | LCCL [53] | 93.44% | 0.19% | 1.68E8 (34.2%) | 70.78% | 2.01% | 1.73E8 (31.3%) |

| SFP [6] | 93.86% | -0.18% | 1.21E8 (52.3%) | 71.28% | 2.86% | 1.21E8 (52.3%) | |

| TAS [32] | 94.33% | 0.64% | 1.19E8 (53.0%) | 73.16% | 1.90% | 1.20E8 (52.6%) | |

| FPGM [4] | 93.85% | -0.17% | 1.21E8 (52.3%) | 72.55% | 1.59% | 1.21E8 (52.3%) | |

| CNN-FCF [54] | 93.67% | -0.09% | 1.44E8 (43.1%) | - | - | - | |

| GAL [58] | 92.74% | 0.76% | 1.30E8 (48.5%) | - | - | - | |

| LFPC [19] | 93.07% | 0.61% | 1.01E8 (60.0%) | - | - | - | |

| HRank [18] | 93.36% | 0.87% | 1.06E8 (58.2%) | - | - | - | |

| DHP [57] | 93.39% | -0.06% | 1.62E8 (36.3%) | - | - | - | |

| PScratch [56] | 93.69% | -0.20% | 1.53E8 (40.0%) | - | - | - | |

| DCPH [23] | 94.11% | -0.73% | 1.77E8 (30.1%) | 72.79% | -0.26% | 1.77E8(30.0%) | |

| MFP [22] | 93.31% | 0.37% | 1.21E8 (52.3%) | - | - | - | |

| Ours | 94.41% | 0.41% | 1.00E8 (60.8%) | 74.04% | 0.45% | 1.12E8 (56.1%) | |

IV-A Datesets and Implementation Details

Datasets

We conducted our experiments on four datasets: CIFAR-10, CIFAR-100 [60], ImageNet [61], and GTSRB [62]. Both CIFAR-10 and CIFAR-100 contain 50K training images and 10K test images, and they have 10 and 100 classes, respectively. ImageNet contains 1.2M training images and 50K test images for 1000 classes. GTSRB, which is used to evaluate pruning performance for classification subtasks, contains 39,209 training images and 12,630 test images of 43 types of traffic signs.

Implementation details

For CIFAR-10/100 and GTSRB, we trained the original model 300 epochs using SGD with momentum 0.9, weight decay parameter 0.0005, batch size 256, and the initial learning rate of 0.1 dropped the factor of 0.1 after the 80th, 150th and 250th epochs. The step size is 1. We finetuned 200 epochs for pruned models with an initial learning rate of 0.01 dropped by the factor of 0.1 after half epochs for fair comparisons. The models are trained on GPU Tesla P40 using Pytorch. For quantitative performance comparisons, all our models do not prune the first convolution layer, and the minimal number of channels is three as the same with the input images. The numbers of channels are directly set to the minimum values. For the CIFAR-100 classification subtask compression, we moved about epochs from finetune phrase to the pruning phrase after pruning each block while keeping the total number of backward the same. In order to reduce overfitting, we limited the maximal number of channels with half of the original model. For the GTSRB classification subtask compression, we further limited the maximal number of channels with of the original model for the ultra-high compression ratios. For all subtask compression experiments, to get the high compression ratio, the first convolution layer was pruned in the same way as the other layers. For the ImageNet, we trained 90 epochs, and the learning rate was decayed by the factor of 0.1 after the 30th, 60th epochs.

Hyperparameters

The key hyperparameters are and number of data samples. We studied different numbers of samples on CIFAR-10/100. Results showed that CATRO are robust within a large range of sample number. We chose the value of with the following considerations. Firstly, should be a positive value to avoid cutting off the network or layer-collapse issues. Secondly, should be small enough to have a large search space. We empirically set the to be 3, and we set all layers’ as the same value for ease of use. We found out it may influence some layers’ pruning ratios, but the overall compression ratios are similar with almost the same accuracy performance. In practice, the minimum initial channel number is not sensitive to the overall channel number selection algorithm since most pruned layers are larger than the .

IV-B Quantitative Performance Comparisons

ResNet on CIFAR

We compared the classification performance of our proposed method with several state-of-the-art channel pruning approaches on CIFAR-10 and CIFAR-100 with ResNet-20/32/56/110 [2]. As different baselines use different training efforts or strategies, the original accuracy values can be different. We reported both the pruned accuracy and the accuracy drop from all baselines’ original papers to draw fair comparisons. Results in Table I clearly demonstrate the superiority of our CATRO over other channel pruning approaches. For CIFAR-10, CATRO consistently outperformed other channel pruning algorithms while achieving or approaching the highest FLOPs reduction ratios in ResNet-20/32/56/110. In the case of ResNet-110, CATRO reduced FLOPs with a notably high accuracy and reduced FLOPs with the highest accuracy on CIFAR-10 and CIFAR-100, respectively.

MobileNet on CIFAR

We further validated CATRO on the compact MobileNet-v2 models. Results are present on Table II, and CATRO reduced FLOPs with a notably high accuracy .

| Depth | Method | Top-1 | Top-5 | FLOPs Drop | ||

|---|---|---|---|---|---|---|

| Accuracy | Acc. Drop | Accuracy | Acc. Drop | |||

| 34 | SFP [6] | 71.83% | 2.09% | 90.33% | 1.29% | 41.1% |

| FPGM [4] | 72.54% | 1.38% | 91.13% | 0.49% | 41.1% | |

| ABCPruner [34] | 70.98% | 2.30% | - | - | 41.0% | |

| Ours | 72.75% | 0.50% | 91.13% | 0.41% | 42.9% | |

| 50 | FPGM [4] | 75.59% | 0.56% | 92.27% | 0.24% | 42.2% |

| HRank [18] | 74.98% | 1.17% | 92.33% | 0.51% | 43.7% | |

| SCOP [30] | 75.95% | 0.40% | 92.70% | 0.02% | 45.3% | |

| LeGR [59] | 75.70% | 0.20% | 92.79% | 0.20% | 42.0% | |

| CNN-FCF [54] | 75.68% | 0.47% | 92.68% | 0.19% | 46.0% | |

| MFP [22] | 75.67% | 0.48% | 92.81% | 0.06% | 42.2% | |

| Ours | 75.98% | 0.14% | 92.79% | 0.08% | 45.8% | |

ResNet on ImageNet

Results on ImageNet supported our findings on CIFAR, and we show the results of ResNet-34/50 in Table III, where CATRO achieved the best accuracies with better or similar FLOPs drops. For ResNet-34, with fewer FLOPs ( FLOPs reduction), CATRO achieved better classification accuracies compared to others. For ResNet-50, CATRO achieved better or competitive classification accuracies compared to many state-of-the-art methods.

IV-C Investigations on Determining Channel Numbers

Fig. 4 showcases the procedure of greedy architecture optimization (described in Section III-D) for ResNet-110 on CIFAR-10 for better investigations of CATRO. A green point at represents that at the -th iteration in Algorithm 2 our method decided to increase the retaining number of the -th layer by one. The channel numbers of the pruned and original network are visualized on the plane in green and gray, respectively. As shown in the figure, the proposed optimization method focused more on deeper layers (i.e., layers close to the output) at the beginning (e.g., the first 300 iterations) and gradually expanded other layers, which is consistent with the state that features in neural networks have a tendency to transfer from general to special [63, 64]. After 2126 iterations, the optimization procedure ended up with a config of network architecture with the desired FLOPs (1.0E8). The resulted network had 21 narrow bottleneck layers (with channels) in the first half of the network and 37 fully recovered layers.

IV-D Results on Classification Subtask Compression

| Depth | Method | 5-Class | 10-Class | 20-Class | |||

|---|---|---|---|---|---|---|---|

| Acc. (Drop ) | FLOPs (Drop ) | Acc. (Drop ) | FLOPs (Drop ) | Acc. (Drop ) | FLOPs (Drop ) | ||

| 32 | Direct [3] | 91.40% (1.80%) | 1.11E7 (83.63%) | 91.00% (1.70%) | 1.11E7 (83.63%) | 83.05% (3.70%) | 1.11E7 (83.63%) |

| FPGM [4] | 91.80% (1.60%) | 1.11E7 (83.63%) | 91.40% (1.30%) | 1.11E7 (83.63%) | 83.05% (3.70%) | 1.11E7 (83.63%) | |

| Ours | 93.60% (-0.40%) | 1.09E7 (83.92%) | 92.00% (0.70%) | 1.07E7 (84.22%) | 84.45% (2.30%) | 1.08E7 (84.07%) | |

| 56 | Direct [3] | 94.00% (1.20%) | 2.02E7 (84.07%) | 92.70% (2.10%) | 2.02E7 (84.07%) | 85.95% (1.80%) | 2.02E7 (84.07%) |

| FPGM [4] | 94.20% (1.00%) | 2.02E7 (84.07%) | 92.90% (1.90%) | 2.02E7 (84.07%) | 86.15% (1.60%) | 2.02E7 (84.07%) | |

| Ours | 95.00% (0.20%) | 1.95E7 (84.62%) | 93.90% (0.90%) | 1.97E7 (84.46%) | 86.55% (1.20%) | 1.98E7 (84.38%) | |

We evaluated image classification subtask compressions to demonstrate the efficacy of CATRO for both general purposes (CIFAR-100) and practical applications (GTSRB).

CIFAR-100

CIFAR-100 is a benchmark dataset for general classification with 100 classes. Without losing generality, we directly selected the top 5/10/20 classes as subtasks of interests and pruned tiny networks for each specific subtask. We compared CATRO with a data-independent channel pruning method (‘Direct’, which uses that -norm [3]) and a training-based channel pruning method (FPGM [4]). As shown in Table IV, with a high compression ratio (84% FLOPs drop), our method consistently outperformed others with a distinct gap in all settings.

| Drop Ratio | Method | Limit Speed Signs | Direction Signs | ||||

|---|---|---|---|---|---|---|---|

| Accuracy | Acc. Drop | FLOPs (Drop ) | Accuracy | Acc. Drop | FLOPs (Drop ) | ||

| 97% | Direct [3] | 95.75% | 4.00% | 9.60E5 (97.67%) | 98.19% | 1.02% | 9.60E5 (97.67%) |

| Ours | 96.97% | 2.98% | 9.40E5 (97.72%) | 98.59% | 0.62% | 9.08E5 (97.80%) | |

| 90% | Direct [3] | 99.23% | 0.52% | 4.08E6 (90.10%) | 98.47% | 0.74% | 4.08E6 (90.10%) |

| Ours | 99.39% | 0.36% | 3.71E6 (90.75%) | 99.04% | 0.17% | 3.79E6 (90.80%) | |

GTSRB

We used GTSRB to demonstrate the efficacy of CATRO for practical usages, e.g., in intelligent traffic and driver-assistance systems. To mimic the real-world application scenarios, we chose two subtasks from GTSRB (limit-speed-sign recognition and direction-sign recognition) and evaluated the pruning methods with two ultra-high compression ratios (90% and 97%). Table V shows the results of pruned ResNet-20 and clearly indicates the better performance of CATRO in terms of both the accuracy and the model size. Fig. 5 visualizes the pruned ResNet-20 models from CATRO for different tasks. As shown in the figure, we can find out that different channels contributed to different tasks, and CATRO obtained specific pruned models for each task by selecting different channels. To be more specific, some of the selected/preserved channel indexes in Fig. 5 are the same (the squares filled with both red and blue) while each subtask has its own specific channels (the squares filled with only red or only blue).

Study with task exchange on GTSRB

To investigate the subtask-oriented channel selection, we further explored a task-exchange experiment. We compared the original CATRO model on one target task (e.g., Direction Signs) with a task-exchange CATRO model, which is pruned (i.e., channel selection) on another task (e.g., Limited Speed Signs) and fine-tuned on the target task (Direction Signs). These two models are named by “DS-DS” and “LSS-DS”, respectively. We expected the model pruned and fine-tuned on the same task would perform better, and the results shown in Table VI confirms it. Comparing “LSS-DS” and “DS-DS”, we find the accuracy of Direct-Sign with task-exchanging channels drops . Similarly, we can find the accuracy drop by comparing “LSS-LSS” and “DS-LSS”. The results demonstrate that CATRO is a task-oriented pruning approach and its selected/pruned channels are based on the specific subtask.

IV-E Ablation Studies

| Network | Method | Predefined Pruning Ratio or Structure | FLOPs | Accuracy | Acc. Drop |

|---|---|---|---|---|---|

| ResNet-56 | SFP [6] | 40% | 5.94E7 | 93.35% | 0.24% |

| FPGM [4] | 40% | 5.94E7 | 93.49% | 0.10% | |

| CATRO-W/O Algorithm 2 | 40% | 5.94E7 | 93.83% | 0.08% | |

| CATRO (Ours) | – | 5.83E7 | 93.87% | 0.03% | |

| ResNet-20 | Taylor FO [55] | Model-A (Table 4 in [55]) | 2.83E7 | 91.52% | 0.48% |

| CATRO-W/O Algorithm 2 | Model-A (Table 4 in [55]) | 2.83E7 | 92.30% | 0.44% | |

| SFP [6] | 30% | 2.43E7 | 90.83% | 1.37% | |

| FPGM [4] | 30% | 2.43E7 | 91.09% | 1.11% | |

| CATRO-W/O Algorithm 2 | 30% | 2.43E7 | 91.68% | 1.06% | |

| CATRO (Ours) | – | 1.95E7 | 91.76% | 0.98% |

Investigations with fixed compression ratio

To evaluate the effectiveness of the proposed trace ratio criterion in layer-wise pruning, we provided ablation studies with fixed compressions ratio (either uniform pruning or by given structures). This ablation study followed the settings of the baselines, and we replaced Algorithm 2 (searching the pruning ratios of each layer) in CATRO by directly using the same pruning ratios as baselines. The results in the Table VII clearly demonstrated the effectiveness of the proposed criterion. On one hand, under the same compression ratio strategy, CATRO (even without the global architecture optimization) clearly outperformed the baselines on both accuracies and accuracy drops. On the other hand, incorporating the architecture optimization further boosted the effects of CATRO and led to a more compact but powerful network. For example, 0.04% accuracy improvement with 1.1E6 FLOPs reduction for ResNet-56, and 0.08% accuracy improvement with 4.8E6 FLOPs reduction for ResNet-20.

Investigations on different layer groups

| Network | Layer Group | Acc. (Drop ) | ||

|---|---|---|---|---|

| Shallow | Middle | Deep | ||

| ResNet-110 | 93.67% (1.15%) | |||

| 93.80% (1.02%) | ||||

| 94.26% (0.56%) | ||||

| 94.40% (0.42%) | ||||

| 93.97% (0.85%) | ||||

| CATRO (Ours) | 94.41% (0.41%) | |||

| ResNet-56 | 93.03% (0.87%) | |||

| 93.27% (0.63%) | ||||

| 93.52% (0.38%) | ||||

| 93.73% (0.17%) | ||||

| 93.50% (0.40%) | ||||

| CATRO (Ours) | 93.87% (0.03%) | |||

| ResNet-32 | 92.92% (1.02%) | |||

| 92.94% (1.00%) | ||||

| 92.98% (0.96%) | ||||

| 93.04% (0.90%) | ||||

| 93.00% (0.94%) | ||||

| CATRO (Ours) | 93.17% (0.77%) | |||

| ResNet-20 | 91.19% (1.55%) | |||

| 91.30% (1.44%) | ||||

| 91.48% (1.26%) | ||||

| 91.64% (1.10%) | ||||

| 91.54% (1.20%) | ||||

| CATRO (Ours) | 91.76% (0.98%) | |||

To further figure out to which extent the feature discriminations and the proposed criteria benefit channel pruning in CATRO, we conducted additional ablation studies on the layer-wise pruning method in different parts of the network. To be more specific, for each ResNet model, we grouped all layers into the Shallow-Group (the first one-third conv layers), the Middle-Group (the second one-third conv layers), and the Deep-Group (the last one-third conv layers). With the same channel numbers obtained by Algorithm 2, we replaced the layer-wise pruning (Algorithm 1) in CATRO by the common pruner in different layer groups. As shown in Table VIII, we can find that removing trace criterion in either shallow or deep stages leads to accuracy drop, and removing trace criterion in the Deep-Group leads to more accuracy drop compared to the Shallow-Group. Therefore, we can conclude that using discrimination in either shallow or deep stages both help and the proposed feature discrimination criteria has its superiority over common baselines. This also lead to an open and exciting topic on better utilizing the discrimination or combining it with other pruning strategies, which we will explore in future work.

IV-F Discussions

Studies on number of data samples used in CATRO

The number of data samples used in the trace ratio calculation is a key factor. In order to investigate the effects of the numbers of data samples, we studied different numbers of samples on CIFAR-10 and CIFAR-100. Results are shown in Fig. 6, and we found that more data provided limited gains with enlarging samples. Furthermore, we found that CATRO needed fewer samples for each class with more classes, which may be due to the help of more negative samples from other classes. Since time complexity of CATRO is independent to the number of training samples or batches, a reasonable number of data is a number that is small but represents the data distribution. In our experiments, we empirically randomly sampled 5,120/25,600 samples for CIFAR-10/CIFAR-100, which is marked with star in Fig. 6.

Pruning time complexity of CATRO

The main difference in pruning time lies in the trace ratio computation steps. The time complexity is linear to the number of sampled data which is usually much smaller than original training data. We provided a detailed time analysis on different phases of the training schedule on a machine with Tesla P40 GPU and E5-2630 CPU. We conducted the experiment for ResNet-110 on CIFAR-10 and used 5,120 out of the 50K samples. The one-time forward propagation took about (including feature map transfer from GPU to CPU), and the average trace optimization for one layer on CPU took about . The time of Algorithm 2 is linear to the time of updating . Note that in Algorithm 2 can be calculated in parallel in advance, Algorithm 2 only took about to perform the greedy search on the CPU, while common training forward and backward propagation on GPU took about per epoch on average. Overall, the pruning time for CATRO was about . Therefore, CATRO needs much less time for the pruning step than other training-based methods, e.g., GBN [28], which takes 10 epochs for each tock phase with and many rounds of tock phases. As CATRO has similar fine-tuning time to other pruning methods and much less pruning time, it is a much more efficient pruning method.

V Conclusion

In this paper, we present CATRO, a novel channel pruning method via class-aware trace ratio optimization. By investigating and preserving the joint impact of channels, which is rarely considered in existing pruning methods, CATRO can coherently determine the network architecture and select channels in each layer, both in an efficient non-training-based manner. Both empirical studies and theoretical justifications have been presented to demonstrate the effectiveness of our proposed CATRO and its superiority over other state-of-the-art channel pruning algorithms.

Acknowledgment

This work was supported in part by the China Postdoctoral Science Foundation (No. 2020M680563), and in part by NSFC under Grant No. 52131201.

References

- [1] A. Krizhevsky, I. Sutskever, and G. E. Hinton, “Imagenet classification with deep convolutional neural networks,” in Advances in neural information processing systems (NeurIPS), 2012, pp. 1097–1105.

- [2] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in 2016 IEEE Conference on Computer Vision and Pattern Recognition. IEEE Computer Society, 2016, pp. 770–778.

- [3] H. Li, A. Kadav, I. Durdanovic, H. Samet, and H. P. Graf, “Pruning filters for efficient convnets,” International Conference on Learning Representations, ICLR, 2017.

- [4] Y. He, P. Liu, Z. Wang, Z. Hu, and Y. Yang, “Filter pruning via geometric median for deep convolutional neural networks acceleration,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2019.

- [5] M. Kang and B. Han, “Operation-aware soft channel pruning using differentiable masks,” in International Conference on Machine Learning. PMLR, 2020, pp. 5122–5131.

- [6] Y. He, G. Kang, X. Dong, Y. Fu, and Y. Yang, “Soft filter pruning for accelerating deep convolutional neural networks,” in International Joint Conference on Artificial Intelligence (IJCAI), 2018.

- [7] J.-H. Luo, J. Wu, and W. Lin, “Thinet: A filter level pruning method for deep neural network compression,” in Proceedings of the IEEE international conference on computer vision, 2017, pp. 5058–5066.

- [8] Z. Zhuang, M. Tan, B. Zhuang, J. Liu, Y. Guo, Q. Wu, J. Huang, and J. Zhu, “Discrimination-aware channel pruning for deep neural networks,” in Neural Information Processing Systems, 2018, pp. 875–886.

- [9] B. Krishnapuram, A. Harternink, L. Carin, and M. A. Figueiredo, “A bayesian approach to joint feature selection and classifier design,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 26, no. 9, pp. 1105–1111, 2004.

- [10] F. Nie, S. Xiang, Y. Jia, C. Zhang, and S. Yan, “Trace ratio criterion for feature selection.” in AAAI, vol. 2, 2008, pp. 671–676.

- [11] K. Wang, R. He, L. Wang, W. Wang, and T. Tan, “Joint feature selection and subspace learning for cross-modal retrieval,” IEEE transactions on pattern analysis and machine intelligence, vol. 38, no. 10, pp. 2010–2023, 2015.

- [12] H. Peng, J. Wu, S. Chen, and J. Huang, “Collaborative channel pruning for deep networks,” in International Conference on Machine Learning, 2019, pp. 5113–5122.

- [13] Q. Tian, T. Arbel, and J. J. Clark, “Task dependent deep lda pruning of neural networks,” Computer Vision and Image Understanding, vol. 203, p. 103154, 2021.

- [14] S. Han, J. Pool, J. Tran, and W. Dally, “Learning both weights and connections for efficient neural network,” in Advances in Neural Information Processing Systems (NIPS), 2015, pp. 1135–1143.

- [15] S. Han, H. Mao, and W. J. Dally, “Deep compression: Compressing deep neural networks with pruning, trained quantization and huffman coding,” International Conference on Learning Representations (ICLR), 2016.

- [16] Z. Liu, J. Li, Z. Shen, G. Huang, S. Yan, and C. Zhang, “Learning efficient convolutional networks through network slimming,” in Proceedings of the IEEE International Conference on Computer Vision, 2017.

- [17] X. Dai, H. Yin, and N. Jha, “Nest: A neural network synthesis tool based on a grow-and-prune paradigm,” IEEE Transactions on Computers, 2019.

- [18] M. Lin, R. Ji, Y. Wang, Y. Zhang, B. Zhang, Y. Tian, and L. Shao, “Hrank: Filter pruning using high-rank feature map,” in IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2020.

- [19] Y. He, Y. Ding, P. Liu, L. Zhu, H. Zhang, and Y. Yang, “Learning filter pruning criteria for deep convolutional neural networks acceleration,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 2009–2018.

- [20] Y. Guan, N. Liu, P. Zhao, Z. Che, K. Bian, Y. Wang, and J. Tang, “Dais: Automatic channel pruning via differentiable annealing indicator search,” IEEE Transactions on Neural Networks and Learning Systems, 2022.

- [21] Y.-J. Zheng, S.-B. Chen, C. H. Ding, and B. Luo, “Model compression based on differentiable network channel pruning,” IEEE Transactions on Neural Networks and Learning Systems, 2022.

- [22] Y. He, P. Liu, L. Zhu, and Y. Yang, “Filter pruning by switching to neighboring cnns with good attributes,” IEEE Transactions on Neural Networks and Learning Systems, 2022.

- [23] Z. Chen, T.-B. Xu, C. Du, C.-L. Liu, and H. He, “Dynamical channel pruning by conditional accuracy change for deep neural networks,” IEEE transactions on neural networks and learning systems, vol. 32, no. 2, pp. 799–813, 2021.

- [24] Z. Tang, L. Luo, B. Xie, Y. Zhu, R. Zhao, L. Bi, and C. Lu, “Automatic sparse connectivity learning for neural networks,” IEEE Transactions on Neural Networks and Learning Systems, 2022.

- [25] J. Peng, B. Tang, H. Jiang, Z. Li, Y. Lei, T. Lin, and H. Li, “Overcoming long-term catastrophic forgetting through adversarial neural pruning and synaptic consolidation,” IEEE Transactions on Neural Networks and Learning Systems, 2021.

- [26] W. Wen, C. Wu, Y. Wang, Y. Chen, and H. Li, “Learning structured sparsity in deep neural networks,” in Advances in neural information processing systems (NeurIPS), 2016, pp. 2074–2082.

- [27] C. Zhao, B. Ni, J. Zhang, Q. Zhao, W. Zhang, and Q. Tian, “Variational convolutional neural network pruning,” in CVPR, 2019, pp. 2780–2789.

- [28] Z. You, K. Yan, J. Ye, M. Ma, and P. Wang, “Gate decorator: Global filter pruning method for accelerating deep convolutional neural networks,” Neural Information Processing Systems (NeurIPS), 2019.

- [29] M. Ye, C. Gong, L. Nie, D. Zhou, A. Klivans, and Q. Liu, “Good subnetworks provably exist: Pruning via greedy forward selection,” in International Conference on Machine Learning, 2020.

- [30] Y. Tang, Y. Wang, Y. Xu, D. Tao, C. Xu, C. Xu, and C. Xu, “SCOP: scientific control for reliable neural network pruning,” in Neural Information Processing Systems, 2020.

- [31] Y. He, J. Lin, Z. Liu, H. Wang, L.-J. Li, and S. Han, “Amc: Automl for model compression and acceleration on mobile devices,” in Proceedings of the European Conference on Computer Vision (ECCV), 2018.

- [32] X. Dong and Y. Yang, “Network pruning via transformable architecture search,” in Neural Information Processing Systems, 2019.

- [33] Z. Liu, H. Mu, X. Zhang, Z. Guo, X. Yang, K. Cheng, and J. Sun, “Metapruning: Meta learning for automatic neural network channel pruning,” in International Conference on Computer Vision. IEEE, 2019, pp. 3295–3304.

- [34] M. Lin, R. Ji, Y. Zhang, B. Zhang, Y. Wu, and Y. Tian, “Channel pruning via automatic structure search,” Proceedings of the Twenty-Ninth International Joint Conference on Artificial Intelligence, 2020.

- [35] N. Liu, X. Ma, Z. Xu, Y. Wang, J. Tang, and J. Ye, “Autocompress: An automatic dnn structured pruning framework for ultra-high compression rates,” in AAAI, 2020.

- [36] B. Li, B. Wu, J. Su, and G. Wang, “Eagleeye: Fast sub-net evaluation for efficient neural network pruning,” in ECCV, 2020.

- [37] J. Zheng, Z. Jiang, and R. Chellappa, “Submodular attribute selection for visual recognition,” IEEE transactions on pattern analysis and machine intelligence, vol. 39, no. 11, pp. 2242–2255, 2016.

- [38] Y. Zhang, X. Chen, J. Li, W. Teng, and H. Song, “Exploring weakly labeled images for video object segmentation with submodular proposal selection,” IEEE Transactions on Image Processing, vol. 27, no. 9, pp. 4245–4259, 2018.

- [39] M.-Y. Liu, O. Tuzel, S. Ramalingam, and R. Chellappa, “Entropy-rate clustering: Cluster analysis via maximizing a submodular function subject to a matroid constraint,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 36, no. 1, pp. 99–112, 2013.

- [40] J. Shen, X. Dong, J. Peng, X. Jin, L. Shao, and F. Porikli, “Submodular function optimization for motion clustering and image segmentation,” IEEE transactions on neural networks and learning systems, vol. 30, no. 9, pp. 2637–2649, 2019.

- [41] K. Wei, R. K. Iyer, and J. A. Bilmes, “Submodularity in data subset selection and active learning,” in International Conference on Machine Learning, 2015.

- [42] A. Ashkan, B. Kveton, S. Berkovsky, and Z. Wen, “Optimal greedy diversity for recommendation,” in Proceedings of the Twenty-Fourth International Joint Conference on Artificial Intelligence, Q. Yang and M. J. Wooldridge, Eds. AAAI Press, 2015, pp. 1742–1748.

- [43] G. L. Nemhauser, L. A. Wolsey, and M. L. Fisher, “An analysis of approximations for maximizing submodular set functions—i,” Mathematical programming, vol. 14, no. 1, pp. 265–294, 1978.

- [44] U. Feige, “A threshold of ln n for approximating set cover,” Journal of the ACM (JACM), vol. 45, no. 4, pp. 634–652, 1998.

- [45] G. Calinescu, C. Chekuri, M. Pál, and J. Vondrák, “Maximizing a submodular set function subject to a matroid constraint,” in International Conference on Integer Programming and Combinatorial Optimization. Springer, 2007, pp. 182–196.

- [46] C. Chekuri, T. Jayram, and J. Vondrák, “On multiplicative weight updates for concave and submodular function maximization,” in Proceedings of the 2015 Conference on Innovations in Theoretical Computer Science, 2015, pp. 201–210.

- [47] A. Badanidiyuru, B. Mirzasoleiman, A. Karbasi, and A. Krause, “Streaming submodular maximization: Massive data summarization on the fly,” in Proceedings of the 20th ACM SIGKDD international conference on Knowledge discovery and data mining, 2014, pp. 671–680.

- [48] S. Buschjäger, P.-J. Honysz, L. Pfahler, and K. Morik, “Very fast streaming submodular function maximization,” in Joint European Conference on Machine Learning and Knowledge Discovery in Databases, 2021.

- [49] R. K. Iyer and J. A. Bilmes, “Submodular optimization with submodular cover and submodular knapsack constraints,” Advances in neural information processing systems, vol. 26, pp. 2436–2444, 2013.

- [50] N. Buchbinder, M. Feldman, J. Naor, and R. Schwartz, “Submodular maximization with cardinality constraints,” in Proceedings of the twenty-fifth annual ACM-SIAM symposium on Discrete algorithms. SIAM, 2014, pp. 1433–1452.

- [51] O. Sadeghi and M. Fazel, “Online continuous dr-submodular maximization with long-term budget constraints,” in International Conference on Artificial Intelligence and Statistics. PMLR, 2020, pp. 4410–4419.

- [52] C. M. Bishop et al., Neural networks for pattern recognition. Oxford university press, 1995.

- [53] X. Dong, J. Huang, Y. Yang, and S. Yan, “More is less: A more complicated network with less inference complexity,” in IEEE Conference on Computer Vision and Pattern Recognition, 2017.

- [54] T. Li, B. Wu, Y. Yang, Y. Fan, Y. Zhang, and W. Liu, “Compressing convolutional neural networks via factorized convolutional filters,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2019, pp. 3977–3986.

- [55] P. Molchanov, A. Mallya, S. Tyree, I. Frosio, and J. Kautz, “Importance estimation for neural network pruning,” in IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019.

- [56] Y. Wang, X. Zhang, L. Xie, J. Zhou, H. Su, B. Zhang, and X. Hu, “Pruning from scratch,” in The Thirty-Fourth AAAI Conference on Artificial Intelligence, 2020.

- [57] Y. Li, S. Gu, K. Zhang, L. V. Gool, and R. Timofte, “DHP: differentiable meta pruning via hypernetworks,” in ECCV, 2020.

- [58] S. Lin, R. Ji, C. Yan, B. Zhang, L. Cao, Q. Ye, F. Huang, and D. Doermann, “Towards optimal structured cnn pruning via generative adversarial learning,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2019, pp. 2790–2799.

- [59] T. Chin, R. Ding, C. Zhang, and D. Marculescu, “Towards efficient model compression via learned global ranking,” in 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020.

- [60] A. Krizhevsky, “Learning multiple layers of features from tiny images,” Citeseer, Tech. Rep., 2009.

- [61] J. Deng, W. Dong, R. Socher, L.-J. Li, K. Li, and L. Fei-Fei, “Imagenet: A large-scale hierarchical image database,” in 2009 IEEE conference on computer vision and pattern recognition, 2009, pp. 248–255.

- [62] S. Houben, J. Stallkamp, J. Salmen, M. Schlipsing, and C. Igel, “Detection of traffic signs in real-world images: The German Traffic Sign Detection Benchmark,” in International Joint Conference on Neural Networks, no. 1288, 2013.

- [63] J. Yosinski, J. Clune, Y. Bengio, and H. Lipson, “How transferable are features in deep neural networks?” Advances in Neural Information Processing Systems, 2014.

- [64] W. Hu, Q. Zhuo, C. Zhang, and J. Li, “Fast branch convolutional neural network for traffic sign recognition,” IEEE Intelligent Transportation Systems Magazine, vol. 9, no. 3, pp. 114–126, 2017.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/ee03c0df-8d7a-406a-9d61-2104da223c21/x7.png) |

Wenzheng Hu received the B.S. degree from the Department of Automation, Beihang University, Beijing, China, in 2013, and Ph.D. degrees in control science and engineering from Tsinghua University, Beijing, China, in 2019. He is a Postdoc in the State Key Laboratory of Automotive Satefy and Energy, Tsinghua University, Beijing, China, and he is a researcher at Kuaishou Technology Co., Ltd., P.R.China. His research interests include machine learning, deep learning and intelligent transportation systems. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/ee03c0df-8d7a-406a-9d61-2104da223c21/ZhengpingChe.jpg) |

Zhengping Che received the Ph.D. degree in Computer Science from the University of Southern California, Los Angeles, CA, USA, in 2018, and the B.Eng. degree in Computer Science from Tsinghua University, Beijing, China, in 2013. He is now with AI Innovation Center, Midea Group. His research interests lie in the areas of deep learning, computer vision, and time series analysis with applications to robot learning. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/ee03c0df-8d7a-406a-9d61-2104da223c21/LiuNing.jpg) |

Ning Liu received the Ph.D. degree in computer engineering from the Northeastern University, Boston, MA, USA, in 2019. He is a researcher at Midea Group. His current research interests lie in deep learning, deep model compression and acceleration, deep reinforcement learning, and edge computing. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/ee03c0df-8d7a-406a-9d61-2104da223c21/x8.png) |

Mingyang Li received the Master degree from in Department of Automation, Tsinghua University, Beijing, China, in 2020. He is currently an engineer in KE Holdings Inc., Beijing, China. His research interests include machine learning and computer vision. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/ee03c0df-8d7a-406a-9d61-2104da223c21/JianTang.jpg) |

Jian Tang (F’2019) received his Ph.D degree in Computer Science from Arizona State University in 2006. He is an IEEE Fellow and an ACM Distinguished Member. He is with Midea Group. His research interests lie in the areas of AI, IoT, Wireless Networking and Mobile Computing. Dr. Tang has published over 180 papers in premier journals and conferences. He received an NSF CAREER award in 2009. He also received several best paper awards, including the 2019 William R. Bennett Prize and the 2019 TCBD (Technical Committee on Big Data) Best Journal Paper Award from IEEE Communications Society (ComSoc), the 2016 Best Vehicular Electronics Paper Award from IEEE Vehicular Technology Society (VTS), and Best Paper Awards from the 2014 IEEE International Conference on Communications (ICC) and the 2015 IEEE Global Communications Conference (Globecom) respectively. He has served as an editor for several IEEE journals, including IEEE Transactions on Big Data, IEEE Transactions on Mobile Computing, etc. In addition, he served as a TPC co-chair for a few international conferences, including the IEEE/ACM IWQoS’2019, MobiQuitous’2018, IEEE iThings’2015. etc.; as the TPC vice chair for the INFOCOM’2019; and as an area TPC chair for INFOCOM 2017-2018. He was also an IEEE VTS Distinguished Lecturer, and the Chair of the Communications Switching and Routing Committee of IEEE ComSoc. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/ee03c0df-8d7a-406a-9d61-2104da223c21/x9.png) |

Changshui Zhang (M’02–SM’15–F’18) received the B.S. degree in mathematics from Peking University, Beijing, China, in 1986, and the M.S. and Ph.D. degrees in control science and engineering from Tsinghua University, Beijing, China, in 1989 and 1992, respectively. In 1992, he joined the Department of Automation, Tsinghua University, where he is currently a Professor. He has authored more than 200 articles, and he is a member of the Standing Council of the Chinese Association of Artificial Intelligence. Dr. Zhang is currently an Associate Editor of the Pattern Recognition Journal and the IEEE TPAMI, and he is also a Fellow member of IEEE. His current research interests include pattern recognition and machine learning. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/ee03c0df-8d7a-406a-9d61-2104da223c21/x10.png) |

Jianqiang Wang received the B.Tech. and M.S. degrees in automotive application engineering from the Jilin University of Technology, Changchun, China, in 1994 and 1997, respectively, and the Ph.D. degree in vehicle operation engineering from Jilin University, Changchun, in 2002. He is currently a Professor with the School of Vehicle and Mobility, Tsinghua University, Beijing, China. He has authored more than 40 journal papers and is currently a coholder of 30 patent applications. His research focuses on intelligent vehicles, driving assistance systems, and driver behavior. Dr. Wang was a recipient of the Best Paper Awards in IEEE Intelligent Vehicles (IV) Symposium 2014, IEEE IV 2017, 14th ITS Asia-Pacific Forum, the Distinguished Young Scientists of National Science Foundation China in 2016, and the New Century Excellent Talents in 2008. |