Can Bad Teaching Induce Forgetting? Unlearning in Deep Networks Using an Incompetent Teacher

Abstract

Machine unlearning has become an important area of research due to an increasing need for machine learning (ML) applications to comply with the emerging data privacy regulations. It facilitates the provision for removal of certain set or class of data from an already trained ML model without requiring retraining from scratch. Recently, several efforts have been put in to make unlearning to be effective and efficient. We propose a novel machine unlearning method by exploring the utility of competent and incompetent teachers in a student-teacher framework to induce forgetfulness. The knowledge from the competent and incompetent teachers is selectively transferred to the student to obtain a model that doesn’t contain any information about the forget data. We experimentally show that this method generalizes well, is fast and effective. Furthermore, we introduce the zero retrain forgetting (ZRF) metric to evaluate any unlearning method. Unlike the existing unlearning metrics, the ZRF score does not depend on the availability of the expensive retrained model. This makes it useful for analysis of the unlearned model after deployment as well. We present results of experiments conducted for random subset forgetting and class forgetting on various deep networks and across different application domains. Source code is at: https://github.com/vikram2000b/bad-teaching-unlearning

Introduction

Machine learning (ML) models are being widely deployed for various applications across different organizations. These models are often trained with large-scale user data. Modern data regulatory frameworks such as European Union GDPR (Voigt and Von dem Bussche 2017), and California Consumer Privacy Act (CCPA) (Goldman 2020) provide for citizens the right to be forgotten. It mandates deletion-upon-request of user data. The regulations also require that user consent must be obtained prior to data collection. This consent for the use of an individual’s data in these ML models may be withdrawn at any point of time. Thus, a request for data deletion can be made to the ML model owner. The owner company (of the ML model) is legally obligated to remove the models/algorithms derived from using that particular data. As the ML models usually memorize the training samples (Feldman 2020; Carlini et al. 2019), the company either needs to retrain the model from scratch by excluding the requested data or somehow erase the user’s information completely from the ML model parameters. The algorithms supporting such information removal are known as machine unlearning methods. Machine unlearning also offers a framework to prove data removal from the updated ML model.

The unlearning methods can be practically applied in the following ways: (i) forgetting single-class or multiple classes of data (Tarun et al. 2021), (ii) forgetting a cohort of data from a single class (Golatkar, Achille, and Soatto 2020a, b), (iii) forgetting a random subset of data from multiple classes (Golatkar et al. 2021). In this paper, we investigate the utility of teacher-student framework with knowledge distillation to develop a robust unlearning method that can support all the three modes, i.e. single/multiple class-level, sub-class level and random subset-level unlearning. Another important question we raise is how well the unlearned model has generalized the forgetting? Recent studies suggest that the unlearning methods may lead to privacy leakage in the models (Chen et al. 2021). Therefore, it is important to validate whether the unlearned models are susceptible to privacy attacks such as membership inference attacks. Moreover, the trade-off between the amount of unlearning and privacy exposure also should be investigated for better decision-making on the part of the model owner. We propose a new metric to evaluate the generalization ability of the unlearning method.

The existing unlearning methods for deep networks put several constraints over the training procedure. For example, (Golatkar et al. 2021) train an additional mixed-linear model along with the actual model which is used in their unlearning method. Similarly, (Golatkar, Achille, and Soatto 2020a, b) strictly require SGD to be used in optimization during model training. These restrictions and the need for other prior information make these methods less practical for real-world applications. We present a method that does not require any prior information about the training procedure. We do not train any extra models to assist in the unlearning. Furthermore, we aim to keep the unlearning process efficient and fast in comparison to the high computational costs of the existing methods.

We make the following key contributions:

-

1.

We present a teacher-student framework, consisting of competent and incompetent teachers. The selective knowledge transfer to the student results in the unlearned model. The method works for both single-class and multiple class unlearning. It also works effectively for multiple class random-subset forgetting.

-

2.

We propose a new retrained model-free evaluation metric called zero retrain forgetting (ZRF) metric to robustly evaluate the unlearning method. This also helps in assessing the generalization in the unlearned model on the forget data.

-

3.

Our method works on different modalities of deep networks such as CNN, Vision transformers, and LSTM. Unlike the existing methods, our method doesn’t put any constraints over the training procedure. We also demonstrate the wide applicability of our method by conducting experiments in different domains of multimedia applications including image classification, human activity recognition, and epileptic seizure detection.

Related Work

Machine Unlearning. Bourtoule et al. (Bourtoule et al. 2021) proposed to partition the training dataset into non-overlapping shards and create multiple models for the disjoint sets. They store the weakly learned models to deal with multiple data removal requests. Ginart et al. (Ginart et al. 2019) adopted the definition of differential privacy to introduce the probabilistic notion of unlearning. It expects high similarity between the output distributions of the unlearned model and the retrained model without using the deletion data. Several subsequent works (Mirzasoleiman, Karbasi, and Krause 2017; Izzo et al. 2021; Ullah et al. 2021) follow this approach in presenting theoretical guarantees in their respective problem settings. We also follow this definition of unlearning in our work. Guo et al. (Guo et al. 2020) give a certified data removal framework to enable data deletion in linear and logistic regression. Neel et al. (Neel, Roth, and Sharifi-Malvajerdi 2021) apply gradient descent to achieve unlearning in convex models. The difference between differential privacy and machine unlearning is studied in (Sekhari et al. 2021). Unlearning in random forests (Brophy and Lowd 2021) and Bayesian setting (Nguyen, Low, and Jaillet 2020) are also studied. These methods are designed specifically for convex problems and are unlikely to work in deep learning models. Our work is aimed at performing unlearning in deep networks.

Unlearning in Deep Networks. Golatkar et al. (Golatkar, Achille, and Soatto 2020a) presented one of the early works in deep machine unlearning. They introduced a scrubbing method to remove the information from the network weights. The method impose a condition of SGD based optimization during training. The subsequent work (Golatkar, Achille, and Soatto 2020b) proposed a neural tangent kernel (NTK) based method to approximate the training process. The additional approximated model is used to estimate the network weights for the unlearned model. (Golatkar et al. 2021) train a mixed-linear model along with the original model. The linearized model is specific to different deep networks and requires fine-tuning to work properly. Moreover, all these methods suffer from high computational costs, constraints on the training process, and limitations of the approximation methods. Tarun et al. (Tarun et al. 2021) proposed an efficient class-level machine unlearning method. However, it does not support random subset forgetting. In our work, we do not need to train any additional model to support unlearning. Our method does not demand the use of any specific optimization technique during training or any other prior information about the training process. (Chundawat et al. 2023; Graves, Nagisetty, and Ganesh 2021; Tarun et al. 2022) are some other notable works.

Preliminaries

Let the complete (multimedia) dataset be with number of samples, where is the sample, and is the corresponding class label. The set of samples to forget is denoted as . In class-level unlearning, corresponds to all the data samples present in a single or multiple classes. In random-subset unlearning, may either consist of a random subset of data samples from a single class or multiple classes. The information exclusive to these data points need to be removed from the model. The set of remaining samples to be retained is denoted by . The information about these samples are to be kept unchanged in the model. and together represent the whole training set and are mutually exclusive, i.e. and . Each data point is assigned an unlearning label, , which is if the sample belongs to and if it belongs to . The subset used for unlearning is , is total number of samples, and is unlearning label corresponding to each sample .

The model trained from scratch without observing the forget samples is called the retrained model or the gold model in this paper. In the proposed teacher-student framework, the competent teacher is the fully trained model or the original model. The competent teacher has observed and learned from the complete data . Let denote the competent/smart teacher with parameters . It takes as input and outputs the probabilities . The incompetent teacher is a randomly initialized model. Let be the incompetent/dumb teacher with parameters and output probabilities . The student is a model initialized with parameters i.e., the same as the competent teacher. It returns the output probabilities . It is to be noted that the student is initialized with all the information present in the original model (). The incompetent teacher is used to remove the requested information (about the forget data ) from this model. The Kullback-Leibler (KL) divergence (Kullback and Leibler 1951) is used as a measure of similarity between two probability distributions. For two distributions and , the KL-divergence is defined by

Proposed Method

Unlearning with Competent/Incompetent Teachers

We aim to remove the information about the requested data-points by using two teachers (competent and incompetent) and one student. The student is initialized with knowledge about the complete data i.e., the parameters of the fully trained model. The idea is to selectively remove the information about the forget samples from this model. At the same time, the information pertaining to the retain set should not to be disturbed. Thus, the unlearning objective is to remove the information about while retaining the information about . We achieve this by using a pair of (competent/smart () and incompetent/dumb ()) teachers to manipulate the student () as depicted in Figure 1. The bad knowledge about from the incompetent teacher is passed on to the student which helps the student to forget samples. Such an approach consequently induces random knowledge about the forget set in the student instead of completely making their prediction accuracy zero. This serves as a protection against the risk of information exposure about the samples to forget. The bad (random) inputs from may invariably corrupt some of the information about the retain set in the student. Therefore, we selectively borrow correct knowledge related to from the competent teacher as well. In this manner, both the incompetent and competent teachers help the student forget and retain the corresponding information, respectively.

For a student , incompetent/dumb teacher , and competent/smart teacher , we define the KL-Divergence between and in Eq. 1.

| (1) |

where corresponds to the data class. Similarly, the KL-Divergence between the fully trained competent teacher and student is given in Eq. 2.

| (2) |

The unlearning objective can be formulated as in Eq. 3.

| (3) |

where is the unlearning label and is a data sample. The data samples used by the proposed unlearning method consists of all the samples from and a small subset of samples of . The student is then trained to optimize the loss function for all these samples. The intuition behind optimizing over is that we selectively transfer bad knowledge about forget data from by minimizing KL-Divergence between and and the accurate knowledge corresponding to is fed from by minimizing KL-Divergence between and . The student learns to mimic for , thus removing information exclusively pertaining to those samples while retaining all the generic information which can be obtained by other samples of same class.

Zero Retrain Forgetting Metric

The effectiveness of an unlearning method is evaluated employing several metrics in the literature. Some frequently used metrics are ‘accuracy on forget set and retain set’ (Golatkar, Achille, and Soatto 2020a; Tarun et al. 2021; Golatkar et al. 2021; Chundawat et al. 2023), relearn time (Tarun et al. 2021), membership inference attacks (Golatkar et al. 2021; Graves, Nagisetty, and Ganesh 2021), activation distance (Golatkar, Achille, and Soatto 2020a; Golatkar et al. 2021), Anamnesis Index (Chundawat et al. 2023), and layer-wise distance (Tarun et al. 2021). Excluding the forget and retain set accuracy, all of the remaining metrics in the literature require a retrained model i.e., training a model from scratch without using the forget set. These metrics can only be interpreted with reference to such a retrained model. Such dependency on the retrained model for unlearning evaluation would lead to higher time and computational costs. Simply measuring the performance on and does not reveal whether the information is actually removed from the network weights. Thus it is not a comprehensive measure of unlearning.

We propose a novel ‘Zero Retrain Forgetting Metric’ (ZRF) to enable evaluation of unlearning methods free from dependence on the retrained model. It measures the randomness in the model’s prediction by comparing them with the incompetent teacher . We calculate the Jensen–Shannon (JS) divergence(Lin 1991) between an unlearned model and the incompetent teacher as below.

| (4) |

where . The ZRF metric is defined as

| (5) |

where is sample from with a total of samples. The ZRF compares the output distribution for the forget set in the unlearned model with the output of a randomly initialized model, which is our incompetent teacher in most of the cases. The ZRF score lies between 0 and 1. The score will be close to 1 if the model behaviour is completely random for the forget samples and it will be close to 0 if the model shows some specific pattern.

What is an ideal ZRF score? Suppose there is a class aeroplanes that contains images of Boeing aircraft along with other aircraft models in the training set. If we unlearn Boeing aircraft, we don’t expect the model to now classify them as animals, vegetables or any other totally unrelated class. We still expect most of these unlearned images to be classified as aeroplanes. This comes from the intuition that the model must have been designed and trained with generalization in mind. An unlearning method that makes the performance much worse than the generalization error for aeroplanes is not actually unlearning. It is just teaching the model to be consistently incorrect when it sees a Boeing aeroplane. The ZRF score will be 0 when the model almost always classifies a Boeing aircraft as an animal or some other totally different class. The ZRF will be 1 if the model always classifies all classes with same random probability for Boeing aircraft. Both of these ( or ) are not the desirable outcomes. We expect the unlearned model to have a generalization performance similar to that of a model trained without the Boeing aircraft. It will have some random predicted logits since the Boeing aircraft class was not overfitted during training.

An ideal value of ZRF score depends on the model, dataset and the forget set. Ideally, the optimal ZRF value is what a model trained without the forget set would have. But in practical scenarios we do not have access to the retrained model. So, a good proxy for the ideal ZRF value could be the ZRF value obtained on a test set. The test set by definition is a set about which the model has never learned anything specifically. It is equivalent to saying, a set that the model has unlearned perfectly.

| Super | Sub | Accuracy ( , ) | ZRF | JS- | Mem. Attack Prob | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Class | Class | Acc. | Orig. | Retrain | Our | Orig. | Retrain | Our | Div | Orig. | Retrain | Our | |

| ResNet18 | Veh2 | Rocket | 85.78 | 85.79 | 85.050.61 | 0.87 | 0.93 | 0.99 | 0.04 | 0.98 | 0.52 | 0.00 | |

| 82 | 3 | 20.40 | |||||||||||

| Veg | MR | 85.82 | 85.38 | 84.790.51 | 0.88 | 0.93 | 0.99 | 0.04 | 0.99 | 0.41 | 0.00 | ||

| 78 | 4 | 10.36 | |||||||||||

| People | Baby | 85.67 | 85.73 | 85.180.54 | 0.84 | 0.87 | 0.98 | 0.05 | 1.0 | 0.84 | 0.58 | ||

| 93 | 82 | 770.34 | |||||||||||

| ED | Lamp | 85.83 | 86.28 | 84.740.32 | 0.88 | 0.94 | 0.98 | 0.03 | 0.98 | 0.43 | 0.01 | ||

| 77 | 14 | 50.29 | |||||||||||

| NS | Sea | 85.63 | 85.46 | 84.580.22 | 0.84 | 0.87 | 0.98 | 0.07 | 0.99 | 0.88 | 0.42 | ||

| 97 | 83 | 840.68 | |||||||||||

| ViT | Veh2 | Rocket | 94.89 | 95.35 | 94.840.71 | 0.91 | 0.96 | 0.99 | 0.03 | 0.99 | 0.47 | 0.01 | |

| 98 | 9 | 170.2 | |||||||||||

| Veg | MR | 94.94 | 94.8 | 94.590.65 | 0.93 | 0.98 | 0.99 | 0.02 | 0.99 | 0.25 | 0.01 | ||

| 93 | 4 | 170.54 | |||||||||||

| People | Baby | 94.91 | 95.26 | 94.450.69 | 0.90 | 0.92 | 0.99 | 0.06 | 1.0 | 0.91 | 0.14 | ||

| 96 | 92 | 770.33 | |||||||||||

| ED | Lamp | 94.93 | 94.86 | 94.990.85 | 0.91 | 0.96 | 0.99 | 0.03 | 1.0 | 0.57 | 0.02 | ||

| 94 | 13 | 210.29 | |||||||||||

| NS | Sea | 94.91 | 94.93 | 94.310.46 | 0.90 | 0.92 | 0.99 | 0.06 | 1.0 | 0.97 | 0.12 | ||

| 96 | 85 | 79.54 | |||||||||||

Experiments

Datasets used. We evaluate our proposed method on image classification: CIFAR10 (Krizhevsky 2009), CIFAR100 (Krizhevsky 2009), epileptic seizure recognition (Andrzejak et al. 2001), and activity recognition (Anguita et al. 2013) datasets.

Models used. We use ResNet18, ResNet34, MobileNetv2, Vision Transformer, and AllCNN models for learning and unlearning in image classification tasks. We use a 3-layer DNN model for unlearning in epileptic seizure recognition. We use an LSTM model for unlearning in activity recognition task. All the experiments were performed on NVIDIA Tesla V100 (32 GB) with Intel Xeon processors. The experiments are implemented in PyTorch 1.5.0. The KL temperature is set to 1 for all the experiments.

Evaluation Measures. We use the following metrics for our analysis of the proposed unlearning method. 1) Accuracy on forget & retain set: The accuracy of the unlearned model on and sets should be similar to the retrained model. 2) Membership inference attack: A membership inference attack is performed to check if any information about the forget samples is still remaining in the model. The attack probabilities should be lower on the forget set in the unlearned model. 3) Activation distance: This is an average of the L2-distance between the unlearned model and retrained model’s predicted probabilities on the forget set. A lesser activation distance represents better unlearning. 4) JS-Divergence: JS-Divergence between the predictions of the unlearned and retrained model when coupled with activation distance gives a more complete picture on unlearning. Lesser the divergence, better the unlearning. 5) ZRF score: We introduce this metric to remove the dependence on the retrained model for evaluating the machine unlearning method.

Baseline Models. We use the unlearning method from (Graves, Nagisetty, and Ganesh 2021) for a comparative analysis. This best fits our problem statement i.e., unlike most other methods, this method achieves unlearning in an already trained model without putting any constraints on the training procedure. We also use the retrained model for comparison. We perform two types of unlearning: (i) sample unlearning, and (ii) class unlearning. We present the experiments and analysis for each of them below.

Forget Acc. Vs Information Exposure Trade-off

Machine unlearning of a specific class or cohort often leads to a decrease in accuracy or performance on forget set. Although, it is an expected result when the forget set is orthogonal to the retain set i.e., there are no samples in the retain set similar to the ones in the forget set. But this may not be true when retain set contains data points similar to the forget set samples. The accuracy may drop slightly, may not drop at all, or even increase in some cases. An unlearning method should bring the forget set performance closer to the gold (retrained) model instead of simply reducing it. If the performance of the unlearned model deviates a lot from the gold model, it could lead to Streisand effect. This effect refers to unexpected behaviour of the model on forget samples which may leak information about that data. The leak could be in the form of being consistently & maximally incorrect about only the forget samples, signalling that a deliberate effort was made to forget a selected set of samples. The aim should be to avoid this in order to ensure that the information about the forget set has been properly erased. For example, as mentioned earlier in the aeroplanes example, when method unlearns Boeing aircraft, if it is maximally wrong whenever it sees any Boeing aircraft image and classifies it as sea, animals, mushroom, etc., it will be suspicious. Other unseen aeroplanes will not be classified incorrectly so consistently. That means it has not really erased the information of Boeing aircraft. That information still exists which the model uses to be deliberately incorrect about the forget set.

Sample Unlearning

CIFARSuper20. The CIFAR100 is made up of 20 super classes i.e., there are different variants for each of these 20 classes. We merge all classes of the CIFAR100 into their super classes and convert it into a 20 class set named CIFARSuper20. Each class in CIFARSuper20 have 5 sub-classes, which are actual classes of CIFAR100. We conduct experiments on CIFARSuper20 by forgetting one sub-class from each super class. This setup makes unlearning more difficult than a regular scenario as we need to unlearn a sample/class without damaging the information of another sample/class that looks quite similar to it (for example, forget baby from people super class consisting of baby, boy, girl, man, woman).

We present unlearning results on ResNet18, ResNet34, and Vision Transformer. We use pretrained models to train/fine-tune for 5 epochs using Adam optimizer with a batch size of 256. The learning rate is 0.001 for final layer and 0.0001 for pretrained weight layers. A learning plateau with patience of 3 and reduce factor 0.5 is used. We conduct multiple runs (5 times) of our algorithm which didn’t show any significant variation in performance (refer Table 1). Therefore, we report the results of single run for all the models in this paper.

We unlearn various sub-classes from a super-class. We use 30% of retain data and a single epoch of unlearning for all models. The learning rate of 0.0001 is used for unlearning. Table 1 shows unlearning results on ResNet18 and Vision Transformer (Dosovitskiy et al. 2021). The evaluation is performed on all the metrics discussed earlier. It can be observed in Table 1 that performance of our method is very close to that of the retrained model. There is very low probability of membership inference attack on our unlearned model. The accuracy of our method on the forget and retain set when forgetting rocket images from vehicles is almost same as the retrained models. The membership inference attack probability on the model for samples from rocket class drops to 0.002 from 0.982 after unlearning. The JS-Divergence between predictions of the retrained model and our model is 0.04 in the forget set. This implies the output distribution of unlearned model is very close to the retrained model. The ZRF score of our model becomes 0.99 from 0.87 after unlearning, thus indicating effective forgetting. Furthermore, Table 4 shows the unlearning results in ResNet34 with different types of teachers.

| Forget Set | Accuracy | Original | Retrain | Amnesiac | Our | Activation Distance | ||

| Amnesiac | Our | |||||||

| RN18+ | Rocket | 85.78 | 85.79 | 84.79 | 85.05 | 0.70 | 0.67 | |

| C20 | 82 | 3 | 4 | 2 | ||||

| Dataset | Baby | 85.67 | 85.73 | 84.64 | 85.18 | 0.65 | 0.65 | |

| 93 | 82 | 78 | 77 | |||||

| DNN+ | 50 | 71.91 | 72.26 | 76.04 | 82.69 | 0.77 | 0.47 | |

| Seizure | samples | 98 | 70 | 30 | 74 | |||

| Dataset | 100 | 71.91 | 73.39 | 75.17 | 79.26 | 0.69 | 0.42 | |

| samples | 96 | 73 | 40 | 70 | ||||

| LSTM+ | Person | 90.46 | 84.01 | 89.45 | 87.27 | 0.52 | 0.14 | |

| HAR | #1 | 100 | 99.13 | 53.03 | 94.24 | |||

| Dataset | Person | 90.46 | 89.68 | 87.04 | 86.6 | 0.49 | 0.13 | |

| #3 | 99.41 | 99.41 | 75.95 | 95.31 | ||||

RN18: ResNet18, C20: CIFAR20, HAR: Human Action Recognition

Epileptic Seizure Detection. The dataset consists of the status of seizure in medical patients. There are a total of 178 predictor variables and 5 classes. A 3-layer DNN is trained for classification. The model is trained for 50 epochs using Adam Optimizer with learning rate of 0.01 and plateau with patience 10 and reduce factor 0.1. We unlearn 50 and 100 randomly selected data points. The results are presented in Figure 2(a) and Figure 2(b). We observe that the proposed method performance is close to the retrained model. The accuracy on the forget set indicates that we have indeed effectively unlearned the forget set as the forget accuracy is reduced from around 100% to a generalized performance. For example, in case of forgetting 100 samples, the accuracy on the forget set drops from 90% to 74% in our method which is close the 70% accuracy of the retrained model.

Human Activity Recognition. This is a task of classifying the activity of a person using the readings collected from smartphone sensors that an individual is carrying with her. The observation were taken from 30 different persons. The dataset contains 6 different types of activities which can be classified using time-series data with sensors giving 9 readings at each time-step. An LSTM Model with 2 dense layers after each LSTM is trained to predict the activity. The model is trained for 50 epochs using Adam Optimizer with learning rate of 0.01 and plateau with patience 10 and reduce factor 0.1. Table 2 contains the results of forgetting person 1 and person 3. Detailed results and effects of various parameters on unlearning are present in the supplementary material.

| # | Acc. | Orig. | Retrain | UNSIR | Our | |

|---|---|---|---|---|---|---|

| RN18+ | 1 | 77.86 | 78.32 | 71.06 | 78.46 | |

| C10 | 81.01 | 0 | 0 | 4.22 | ||

| Dataset | 2 | 78.00 | 79.15 | 73.61 | 79.22 | |

| 78.65 | 0 | 0 | 9.94 | |||

| RN18+ | 1 | 78.68 | 78.37 | 75.36 | 77.00 | |

| Pre+C100 | 83.00 | 0 | 0 | 0 | ||

| Dataset | 20 | 77.84 | 79.73 | 75.38 | 77.78 | |

| 82.84 | 0 | 0 | 3.90 | |||

| AllCNN+ | 1 | 82.64 | 85.90 | 73.90 | 81.74 | |

| C10 | 91.02 | 0 | 0 | 9.16 | ||

| Dataset | 2 | 84.27 | 85.21 | 80.76 | 77.68 | |

| 79.74 | 0 | 0 | 5.64 | |||

| MNv2+ | 1 | 77.43 | 78 | 75.76 | 78.22 | |

| Pre+C100 | 90 | 0 | 0 | 0 | ||

| Dataset | 20 | 76.47 | 77 | 76.27 | 76.65 | |

| 81.70 | 0 | 0 | 13.65 |

Comparison with Amnesiac learning (Graves, Nagisetty, and Ganesh 2021). We compare our result with Amnesiac learning which fine-tunes the model with random labels on forget samples. Table 2 shows the comparison between both the methods. We compare the activation distance and accuracy on the forget and retain set. A lower activation distance indicates closeness to the retrained model. This subsequently indicates better unlearning and an accuracy closer to the retrained model is desired on forget and retain set. The activation distance for our method is very low compared to Amnesiac method in most of the cases (refer Table 2). Amnesiac method causes too much damage in the forget set of epileptic seizure and human activity recognition dataset, indicating Streisand effect. The accuracy in epileptic seizure dataset (forget set of 50 samples) is 98% for the original model, 70% for retrained model, 74% for our method, and 30% for amnesiac method. The Amnesiac method damages the performance on forget set by a huge margin. It reduces the forget set accuracy to 30% which otherwise should be close to 70%. It should also be noted that activation distance from retrained model is 0.47 for our method and 0.77 for Amnesiac method. Amnesiac method is causing undesired effects and the generated model is very different from the retrained model. Our method, besides being more effective and robust, requires access to only a subset of retain data. We use only 30% of retain data to obtain the results in our method. Our method is faster than Amnesiac method, more effective even when limited data is available for use.

Class Unlearning

We also demonstrate full-class (single and multiple classes) unlearning capability of our method. We show results on CIFAR10 and CIFAR100 with ResNet18, AllCNN, and MobileNetv2 models. Class-level unlearning results are compared with an existing method with configuration as in (Tarun et al. 2021). The model update in our method is performed for 1 epoch using 30% of the retain data. The learning rate at the time of unlearning is set to 0.001. Table 3 gives a performance comparison between the proposed and the existing methods. The accuracy on the retain set in CIFAR10 single-class forgetting is 71.06% for UNSIR, 78.32% for the retrained model, and 78.46% for our method. The results are quite similar in all three methods. The accuracy on forget set is zero in the retrained model and UNSIR but our method retains some accuracy on the forget set. This is because the method learns from a randomly initialized teacher which does random predictions and predicts each class with 10% probability and forget model learns the same. This in turn leads to better protection against the risk of privacy exposure.

| Super | Sub | Acc. | Orig. | Retrain | Our | |

|---|---|---|---|---|---|---|

| Class | Class | Model | Model | Method | ||

| ResNet34 | Veh2 | Rocket | 86.36 | 85.32 | 85.8 | |

| 88 | 4 | 1 | ||||

| Veg | MR | 86.41 | 85.92 | 85.61 | ||

| 83 | 2 | 5 | ||||

| ResNet18 | Veh2 | Rocket | 86.36 | 85.32 | 85.86 | |

| 88 | 4 | 15 | ||||

| Veg | MR | 86.41 | 85.92 | 85.83 | ||

| 83 | 2 | 1 | ||||

| Random | Veh2 | Rocket | 86.36 | 85.32 | 86.04 | |

| 88 | 4 | 5 | ||||

| Veg | MR | 86.41 | 85.92 | 83.37 | ||

| 83 | 2 | 11 |

| Super- | Acc. | Orig. | Ret. | (RI) | (P) | JS-Div | |

|---|---|---|---|---|---|---|---|

| Sub | (RI) | (P) | |||||

| Veh2- | 85.8 | 85.8 | 85.1 | 85.1 | 0.04 | 0.02 | |

| Rocket | 82 | 3 | 2 | 2 | |||

| Veg- | 85.8 | 85.4 | 84.8 | 85.4 | 0.04 | 0.08 | |

| MR | 78 | 4 | 1 | 3 | |||

Using a Simpler Model as an Incompetent Teacher

Our method does not place any constraints on the architecture of the incompetent teacher. Preferably the architecture should be kept same as the student for proper transfer of information. But it can be replaced with smaller models without significantly affecting the results. As the teacher is initialized with random weights, such behaviour can be obtained by a significantly smaller model, or even hard coded algorithms to generate random predictions. A cheaper teacher can make the unlearning process faster without compromising in the quality of unlearning. We replace the incompetent teacher with (i) a small randomly initialized Neural Network, and (ii) a random prediction generator. The random prediction generator first assigns equal probability to all classes and then a adds Gaussian noise to the predictions. The performance with these teachers is shown in Table 4. With ResNet34 as teacher, the performance on (forget class: Rocket) is 1% while using the same model as teacher, 15% while using ResNet18, and 5% while using a random predictor as a teacher. Similarly, the performance on retain set while using ResNet34 as teacher is 85.8%, 85.86% while using ResNet18, and 86.04% while using a random predictor as a teacher. There is a negligible change in performance when we use simpler models as teachers. Thus, it can be used to reduce the computational costs without much loss in the performance.

Using Partially Retrained Model as an Incompetent Teacher

A partially trained (PT) model on a subset of retain data can be used as an incompetent teacher in the proposed framework. Similarly, smaller models trained on a small subset of the retain data can also serve as an incompetent teacher. We show the results of using PT models as incompetent teachers to induce forgetting. Similarly, we further investigate the effectiveness of PT teacher on CIFARSuper20 in Table 5. The teacher is trained for 1 epoch on 50% of the data. The accuracy on forget set Rocket is 3% for retrained model, 2% for our method with PT teacher and 2% for RI teacher. The accuracy on retain set for Rocket is 85.79% for retrained model, 85.07% for our method with PT teacher and 85.05% for our method with RI teacher. Besides, the JS-Divergence between retrained model & RI model based unlearning is 0.04 and 0.02 in case of PT teacher model based unlearning. This shows that in addition to accuracy improvement, PT teacher based unlearning may also give output distribution more similar to the retrained model.

Efficiency Analysis

We compare the run-time comparison of the retrained model, the existing methods, and the proposed methods. The random weights based setup is faster than retraining and more than faster than Amnesiac learning (Graves, Nagisetty, and Ganesh 2021). The method is faster when cheaper unlearning teachers are used. The proxy model based setup is about faster than retraining method. The ideal trade-off between efficiency and performance can be obtained by using smaller models partially trained on retain data but they come with the expense of additional training. This (partial training) further comes with a trade-off between computational cost and closeness of the model to the retrained model. The right amount of partial training should be decided. We observed that the cheap randomly initialized models are more efficient and generally perform well in most cases.

Conclusion

We present a novel and general teacher-student framework for machine unlearning. A pair of competent and incompetent teachers is used to selectively transfer knowledge into the student network to obtain the unlearned model. Our work supports single & multiple classes forgetting, sub-class forgetting and random samples forgetting. The effectiveness is evaluated in various application domains and modality of networks. We also introduce a new evaluation metric ZRF that is free from the need of having a retrained model for reference. This metric would be useful in real world scenarios where retrained models are not available or very expensive to obtain. Several possible efficient teachers are also explored to reduce the computational complexity. Future work could focus at the intersection of efficiency and privacy guarantees which may be in the form of either developing better evaluation measures or developing new class of unlearning techniques.

Acknowledgements

This research is supported by the National Research Foundation, Singapore under its Strategic Capability Research Centres Funding Initiative. Any opinions, findings and conclusions or recommendations expressed in this material are those of the author(s) and do not reflect the views of National Research Foundation, Singapore.

References

- Andrzejak et al. (2001) Andrzejak, R. G.; Lehnertz, K.; Mormann, F.; Rieke, C.; David, P.; and Elger, C. E. 2001. Indications of nonlinear deterministic and finite-dimensional structures in time series of brain electrical activity: Dependence on recording region and brain state. Physical Review E, 64(6): 061907.

- Anguita et al. (2013) Anguita, D.; Ghio, A.; Oneto, L.; Parra Perez, X.; and Reyes Ortiz, J. L. 2013. A public domain dataset for human activity recognition using smartphones. In Proceedings of the 21th international European symposium on artificial neural networks, computational intelligence and machine learning, 437–442.

- Bourtoule et al. (2021) Bourtoule, L.; Chandrasekaran, V.; Choquette-Choo, C. A.; Jia, H.; Travers, A.; Zhang, B.; Lie, D.; and Papernot, N. 2021. Machine unlearning. In 2021 IEEE Symposium on Security and Privacy (SP), 141–159. IEEE.

- Brophy and Lowd (2021) Brophy, J.; and Lowd, D. 2021. Machine Unlearning for Random Forests. In International Conference on Machine Learning, 1092–1104. PMLR.

- Carlini et al. (2019) Carlini, N.; Liu, C.; Erlingsson, Ú.; Kos, J.; and Song, D. 2019. The secret sharer: Evaluating and testing unintended memorization in neural networks. In 28th USENIX Security Symposium (USENIX Security 19), 267–284.

- Chen et al. (2021) Chen, M.; Zhang, Z.; Wang, T.; Backes, M.; Humbert, M.; and Zhang, Y. 2021. When machine unlearning jeopardizes privacy. In Proceedings of the 2021 ACM SIGSAC Conference on Computer and Communications Security, 896–911.

- Chundawat et al. (2023) Chundawat, V. S.; Tarun, A. K.; Mandal, M.; and Kankanhalli, M. 2023. Zero-Shot Machine Unlearning. IEEE Transactions on Information Forensics and Security.

- Dosovitskiy et al. (2021) Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; Uszkoreit, J.; and Houlsby, N. 2021. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. ICLR.

- Feldman (2020) Feldman, V. 2020. Does learning require memorization? a short tale about a long tail. In Proceedings of the 52nd Annual ACM SIGACT Symposium on Theory of Computing, 954–959.

- Ginart et al. (2019) Ginart, A.; Guan, M. Y.; Valiant, G.; and Zou, J. 2019. Making AI Forget You: Data Deletion in Machine Learning. In Advances in neural information processing systems, 3513–3526.

- Golatkar et al. (2021) Golatkar, A.; Achille, A.; Ravichandran, A.; Polito, M.; and Soatto, S. 2021. Mixed-Privacy Forgetting in Deep Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 792–801.

- Golatkar, Achille, and Soatto (2020a) Golatkar, A.; Achille, A.; and Soatto, S. 2020a. Eternal sunshine of the spotless net: Selective forgetting in deep networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 9304–9312.

- Golatkar, Achille, and Soatto (2020b) Golatkar, A.; Achille, A.; and Soatto, S. 2020b. Forgetting outside the box: Scrubbing deep networks of information accessible from input-output observations. In European Conference on Computer Vision, 383–398. Springer.

- Goldman (2020) Goldman, E. 2020. An Introduction to the California Consumer Privacy Act (CCPA). Santa Clara Univ. Legal Studies Research Paper.

- Graves, Nagisetty, and Ganesh (2021) Graves, L.; Nagisetty, V.; and Ganesh, V. 2021. Amnesiac Machine Learning. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 35, 11516–11524.

- Guo et al. (2020) Guo, C.; Goldstein, T.; Hannun, A.; and Van Der Maaten, L. 2020. Certified Data Removal from Machine Learning Models. In International Conference on Machine Learning, 3832–3842. PMLR.

- Izzo et al. (2021) Izzo, Z.; Smart, M. A.; Chaudhuri, K.; and Zou, J. 2021. Approximate data deletion from machine learning models. In International Conference on Artificial Intelligence and Statistics, 2008–2016. PMLR.

- Krizhevsky (2009) Krizhevsky, A. 2009. Learning multiple layers of features from tiny images. Technical report, CIFAR, University of Toronto.

- Kullback and Leibler (1951) Kullback, S.; and Leibler, R. A. 1951. On information and sufficiency. The annals of mathematical statistics, 22(1): 79–86.

- Lin (1991) Lin, J. 1991. Divergence measures based on the Shannon entropy. IEEE Transactions on Information theory, 37(1): 145–151.

- Mirzasoleiman, Karbasi, and Krause (2017) Mirzasoleiman, B.; Karbasi, A.; and Krause, A. 2017. Deletion-robust submodular maximization: Data summarization with “the right to be forgotten”. In International Conference on Machine Learning, 2449–2458. PMLR.

- Neel, Roth, and Sharifi-Malvajerdi (2021) Neel, S.; Roth, A.; and Sharifi-Malvajerdi, S. 2021. Descent-to-delete: Gradient-based methods for machine unlearning. In Algorithmic Learning Theory, 931–962. PMLR.

- Nguyen, Low, and Jaillet (2020) Nguyen, Q. P.; Low, B. K. H.; and Jaillet, P. 2020. Variational bayesian unlearning. Advances in Neural Information Processing Systems, 33.

- Sekhari et al. (2021) Sekhari, A.; Acharya, J.; Kamath, G.; and Suresh, A. T. 2021. Remember what you want to forget: Algorithms for machine unlearning. Advances in Neural Information Processing Systems, 34.

- Tarun et al. (2021) Tarun, A. K.; Chundawat, V. S.; Mandal, M.; and Kankanhalli, M. 2021. Fast Yet Effective Machine Unlearning. arXiv preprint arXiv:2111.08947.

- Tarun et al. (2022) Tarun, A. K.; Chundawat, V. S.; Mandal, M.; and Kankanhalli, M. 2022. Deep Regression Unlearning. arXiv preprint arXiv:2210.08196.

- Ullah et al. (2021) Ullah, E.; Mai, T.; Rao, A.; Rossi, R. A.; and Arora, R. 2021. Machine unlearning via algorithmic stability. In Conference on Learning Theory, 4126–4142. PMLR.

- Voigt and Von dem Bussche (2017) Voigt, P.; and Von dem Bussche, A. 2017. The eu general data protection regulation (gdpr). A Practical Guide, 1st Ed., Cham: Springer International Publishing.

| No. of | % | Accuracy | Original | Retrained | Our | ZRF | JS-Div | ||

|---|---|---|---|---|---|---|---|---|---|

| epochs | of | Model | Model | Method | Original | Retrained | Ours | ||

| 1 | 100% | 90.46 | 84.01 | 88.43 | 0.57 | 0.72 | 0.79 | 0.03 | |

| 100 | 99.13 | 94.52 | |||||||

| 50% | 90.46 | 84.01 | 88.7 | 0.58 | 0.72 | 0.69 | 0.02 | ||

| 100 | 99.13 | 97.69 | |||||||

| 30% | 90.46 | 84.01 | 88.77 | 0.58 | 0.72 | 0.67 | 0.02 | ||

| 100 | 99.13 | 98.27 | |||||||

| 10% | 90.46 | 84.01 | 90.19 | 0.58 | 0.72 | 0.62 | 0.01 | ||

| 100 | 99.13 | 100 | |||||||

| 2 | 100% | 90.46 | 84.01 | 88.22 | 0.57 | 0.71 | 0.79 | 0.02 | |

| 100 | 99.13 | 94.81 | |||||||

| 50% | 90.46 | 84.01 | 86.7 | 0.58 | 0.72 | 0.75 | 0.02 | ||

| 100 | 99.13 | 96.83 | |||||||

| 30% | 90.46 | 84.01 | 87.75 | 0.58 | 0.72 | 0.72 | 0.03 | ||

| 100 | 99.13 | 93.95 | |||||||

| 10% | 90.46 | 84.01 | 89.07 | 0.57 | 0.71 | 0.65 | 0.02 | ||

| 100 | 99.13 | 99.71 | |||||||

| 5 | 100% | 90.46 | 84.01 | 88.67 | 0.57 | 0.71 | 0.87 | 0.04 | |

| 100 | 99.13 | 89.91 | |||||||

| 50% | 90.46 | 84.01 | 86.90 | 0.56 | 0.71 | 0.84 | 0.04 | ||

| 100 | 99.13 | 90.49 | |||||||

| 30% | 90.46 | 84.01 | 84.29 | 0.57 | 0.71 | 0.83 | 0.05 | ||

| 100 | 99.13 | 91.07 | |||||||

| 10% | 90.46 | 84.01 | 85.71 | 0.57 | 0.71 | 0.75 | 0.04 | ||

| 100 | 99.13 | 92.22 | |||||||

Appendix A Additional Ablation Studies

We study the effect of various hyper parameters in the unlearning method for the Human Activity Recognition dataset. We discuss the results achieved at different epochs of unlearning and at different learning rates below.

Effect of Different Number of Epochs

Without loss of generality, we unlearn the data related to user-1 from the activity dataset. Table 6 shows how varying the size of the data used for retraining (% of ) and the number of epochs for fine-tuning effects unlearning performance. The learning rate is fixed at 0.001. From Table 6 we observe that using more data samples from result in better randomization in the outputs for in the unlearned model. For example, when only a single epoch is run and 100% of is used, the ZRF score is 0.79. This goes down to 0.69 if only 50% of is used. The ZRF score further declines to 0.67 and 0.62 if only and of is used, respectively. Increasing the number of epochs has a similar effect to that of increasing the number of samples from . For example, with of , the score ZRF is 0.62 after 1 epoch, 0.65 after 2 epochs and 0.75 after 5 epochs, respectively. The decision of selecting the number of epochs and % of for retraining would depend on the degree of desired efficiency and the amount of available for use.

Effect of Different Learning Rates

Table 7 shows the effect of using different learning rate during the brief retraining period. The number of epochs and the subset of are fixed at 2 and , respectively. Increasing the learning rate increases the amount of randomization in the updated model. The ZRF score steadily goes down from 0.96 (learning rate 0.1) to 0.59 (learning rate 0.0001).

Evidently, our method is highly flexible with respect to the amount of randomization desired by the model owner or the customer. Increasing the number of epochs, portion of the retain data or the learning rate increases the ZRF score. As explained in Section 4 in the main paper, we want the ZRF score to be as close to the retrained model as possible. The ZRF score closest to the retrained model is obtained with 30% retain data, 2 epochs and a learning rate of 0.001. The JS-Divergence in this case between the retrained model and the model obtained by our method is 0.03. This indicates that the output distributions of the retrained model and the model obtained using our method are very similar.

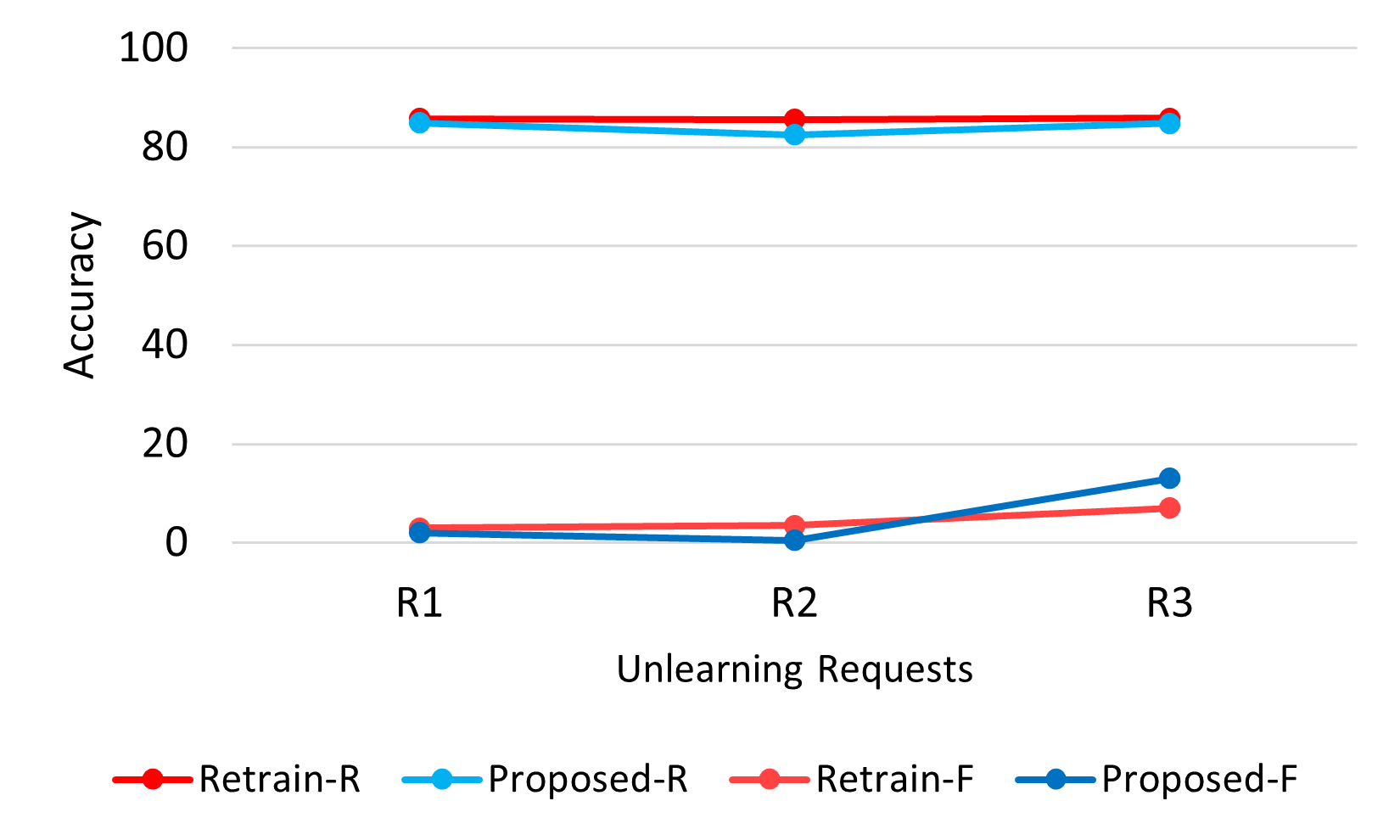

Appendix B Sequential Unlearning

We conduct experiments to simulate a real life scenario where unlearning may be requested repeatedly. Figure 3 showcases the performance of our method in the following scenario: we forget sub-classes Rocket, Mushrooms, and Lamp one after the other. We observe that our method performs really well here. Even after multiple requests of unlearning, there is hardly any degradation in performance which indicates the robustness of our method.

Appendix C Partially Retrained Model as a Competent Teacher: Additional Experiments

A partially trained (PT) model on a subset of retain data can be used as an incompetent teacher in the proposed framework. Similarly, smaller models trained on a small subset of the retain data can also serve as the incompetent teacher. We show the results of using PT models as incompetent teachers to induce forgetting. Table 8 shows results of class unlearning using PT teacher. The teacher is trained for 2 epochs on of the retain data. The accuracy on forget set with ResNet18 is 0% for retrained model, UNSIR, and our method with PT teacher. Whereas, it is 4.22% in our method with randomly initialized (RI) teacher. The accuracy on retain set for ResNet18 is 78.32% for retrained model, 78.6% for our method with PT teacher, 78.46% for our method with RI teacher, and 71.06% for UNSIR. The performance is closer to the retrained model when PT teacher is used instead of RI teacher.

| Learning | Accuracy | Original | Retrained | Our | ZRF | JS-Div | ||

|---|---|---|---|---|---|---|---|---|

| Rate | Model | Model | Method | Original | Retrained | Ours | ||

| 0.1 | 90.46 | 84.01 | 72 | 0.57 | 0.71 | 0.96 | 0.07 | |

| 100 | 99.13 | 82.13 | ||||||

| 0.01 | 90.46 | 84.01 | 86.19 | 0.57 | 0.72 | 0.93 | 0.07 | |

| 100 | 99.13 | 88.76 | ||||||

| 0.001 | 90.46 | 84.01 | 87.75 | 0.58 | 0.72 | 0.72 | 0.03 | |

| 100 | 99.13 | 93.95 | ||||||

| 0.0001 | 90.46 | 84.01 | 90.4 | 0.57 | 0.71 | 0.59 | 0.01 | |

| 100 | 99.13 | 100 | ||||||

Appendix D Efficiency Analysis: More Details

Figure 4 shows the total run-time comparison between the retrained model, the existing and the proposed methods. Our methods takes substantially less amount of time for unlearning in comparison to both the retrained method and the Amnesiac learning method (Graves, Nagisetty, and Ganesh 2021). Our method is only behind UNSIR (Tarun et al. 2021) in run time efficiency. However, the UNSIR only supports class-level unlearning whereas, the proposed method supports both sample-level and class-level unlearning.

| Model | Accuracy | Original | Retrained | UNSIR | Ours(R) | Ours(P) |

|---|---|---|---|---|---|---|

| Model | Model | (Tarun et al. 2021) | ||||

| ResNet18 | 77.86 | 78.32 | 71.06 | 78.46 | 78.6 | |

| 81.01 | 0 | 0 | 4.22 | 0 | ||

| AllCNN | 82.64 | 85.90 | 73.90 | 81.74 | 83.76 | |

| 91.02 | 0 | 0 | 9.16 | 0.5 |