Can a Machine Feel Vibrations?: A Framework for Vibrotactile Sensation and Emotion Prediction via a Neural Network

Abstract

Vibrotactile signals offer new possibilities for conveying sensations and emotions in various applications. Yet, designing tactile icons (i.e., Tactons) that evoke specific feelings often requires a trial-and-error process and user studies. To support haptic design, we propose a framework for predicting sensory and emotional ratings from vibration signals. We created 154 Tactons and conducted a study (n=36) to collect acceleration data from smartphones together with user ratings of roughness, valence, and arousal. We converted the Tacton signals into two-channel spectrograms reflecting the spectral sensitivities of mechanoreceptors, then input them into VibNet, our dual-stream neural network. The first stream captures sequential features using recurrent networks, while the second captures temporal-spectral features using 2D convolutional networks. VibNet outperformed baseline models, with 82% of its predictions falling within the standard deviations of the ground-truth user ratings for two new Tacton sets. We discuss the efficacy of our mechanoreceptive processing and dual-stream neural network and present future research directions.

Index Terms:

Vibrotactile Perception, Tactile Icon, Neural Network.

I Introduction

Tactile icons (i.e., Tactons) play a crucial role in a variety of haptic devices, such as wearable devices [1, 2], VR/AR controllers [3, 4, 5], and smartphones [6]. They serve a wide range of functions, from alerting users to events or system states [7, 8] to mediating sensations and emotions [9, 10], thus creating immersive interactions and enhancing user experiences. Moreover, Tactons support diverse user scenarios, such as communicating emotions with others [11], sending emotional messages to children with autism [12], and expressing feelings between long-distance partners [13].

Various design approaches have sought to create effective Tactons for conveying and communicating sensations and emotions. Common approaches include parameter-based design [14, 15], which systematically varies the design parameters of a vibrotactile signal, and metaphor-based design, which creates Tactons that evoke specific metaphors in users [16, 17]. For both design approaches, designers need to run multiple experiments with many users to assess subjective sensations and emotions elicited by Tactons. Through these user studies, designers identify effective design parameters for delivering targeted sensations and emotions, using the findings to create Tactons suitable for specific applications.

Despite their usefulness, these approaches require costly and effortful user studies, which limit how many new Tactons designers can test. For instance, if a designer wants to create a new Tacton with a complex rhythmic structure that has not been previously investigated, additional user studies are required to evaluate the sensations and emotions it elicits. While some guidelines exist on the effects of design parameters on sensations or emotions (e.g., the effect of frequency on roughness [18]), designers still resort to repeated trial and error and extensive user testing until they find a Tacton that conveys the desired sensations and emotions.

To address these challenges, we ask: Can we predict the sensations and emotions that users feel from Tactons based on the vibration accelerations produced by a haptic device's vibrotactile actuator, and thereby accelerate Tacton design? In this paper, we introduce a framework for predicting the sensory and emotional ratings of Tactons with three components: (1) haptic data augmentation, (2) mechanoreceptive processing, and (3) a neural network designed to mimic human tactile perception and cognition mechanisms. To construct a haptic dataset of accelerations paired with the corresponding ratings of Tactons (roughness, valence, and arousal, each on a scale of 0 to 100), we first designed 154 Tactons that cover a wide range of design parameters, including carrier frequency, envelope frequency, duration, amplitude, rhythmic structure, and complex waveforms. We then conducted a user study with 36 participants using three iPhone models of different masses and sizes to reflect the use of commercial haptic devices. Next, we augmented the dataset with a set of haptic data augmentation methods that account for human vibrotactile perception, such as the Just Noticeable Difference (JND) for vibrations. Finally, we processed the augmented data into two-channel spectrograms using biomimetic mechanoreceptive channel filters based on two human vibrotactile receptors (Meissner and Pacinian Corpuscles) and the Short-Time Fourier Transform (STFT).

We trained a neural network, VibNet, using the accelerations and two-channel spectrograms, structured as two parallel input streams. The first stream feeds 1D acceleration data into Gated Recurrent Units (GRUs) [19], a type of recurrent neural network, to capture sequential features of Tactons. This approach is grounded in human vibrotactile perception of temporal and rhythmic patterns [20, 21, 22, 15, 14]. The second stream feeds the two-channel 2D spectrograms into Convolutional Neural Networks (CNNs), specifically ResNet [23], to capture temporal-spectral features perceived by vibration-relevant mechanoreceptive channels in the skin [24, 25]. Through these two streams, VibNet predicts roughness, valence, and arousal ratings, which are the primary sensory and emotional dimensions for Tactons.

We evaluated the trained model on two new Tacton sets based on [26]: one containing 24 Tactons designed by varying signal parameters and the other containing 24 complex Tactons. Our model outperformed baseline machine learning models, such as Long Short-Term Memory (LSTM) and Transformer, achieving lower root mean square errors (RMSEs) between the predictions and the ground-truth ratings. On average, 82% of our model's predictions fell within the standard deviation of the ground-truth ratings across the three dimensions of sensation and emotion for all 48 Tactons in the two test sets. Based on these results, we present the implications of our framework and discuss directions for future research on predicting vibrotactile sensations and emotions. Our contributions include:

- Sensory and emotional ratings for 154 Tactons varying in six design parameters, and corresponding 1D accelerations measured from three commercial devices.
- A biomimetic framework including haptic data augmentation, mechanoreceptive processing, and a neural network (VibNet), with an end-to-end implementation.
- A demonstration of the efficacy of our method in predicting the sensation and emotion evoked by new unseen Tactons.

II Related Work

We review prior research on design parameters for Tactons in haptics, studies on vibrotactile perception, sensation, and emotion, and computational models for haptic stimuli.

II-A Design Parameters and Libraries for Tactons

Over the decades, extensive research has explored myriad design parameters for Tactons to effectively convey and communicate information and emotions in various haptic user interfaces [27]. Prior studies have investigated low-level parameters of Tactons, such as carrier frequency [28, 29], envelope frequency [21, 20], duration [30, 14], and amplitude [28, 29], as well as the superposition of multiple sinusoids [31, 32] and combinations of multiple parameters [14, 20]. Other studies have proposed high-level parameters of Tactons, such as rhythmic structure [22, 33, 34] (including the evenness of pulses and note length), the interval between vibrations [35], and sound waveform or timbre [9, 36]. Designers can create Tactons by systematically varying these parameters (i.e., parameter-based design approach). To construct our haptic dataset, we select four common low-level Tacton parameters (carrier frequency, envelope frequency, duration, and amplitude) and one high-level parameter, rhythmic structure.

In addition to creating Tactons from scratch, haptic designers can create new Tactons by transforming libraries from other modalities into vibration libraries or by modifying template Tactons from existing vibration libraries [37]. Past studies have proposed various vibration libraries together with their associated user feelings or metaphors (i.e., metaphor-based design approach). For example, van Erp and Spapé created 59 Tactons by transforming auditory melodies into vibrations and examined their perceptual impacts [38]. Disney Research introduced FeelEffects, a library of over 40 Tactons, and investigated the semantic and parametric spaces of these Tactons [39]. Seifi et al. proposed VibViz, a library of 120 Tactons with subjective ratings and descriptive tags on their physical, sensory, emotional, usage, and metaphoric attributes [16]. These libraries consist of Tactons with complex waveforms, such as intricate rhythmic structures in the time domain, frequency spectra that vary over time, and various durations. To enhance the diversity of our haptic dataset, we include 40 Tactons adapted from the open-source vibration library VibViz for rendering on iPhones.

II-B Studies on Vibrotactile Perception, Sensation, and Emotion

Investigating how humans perceive vibrations is a fundamental aspect of the haptics field. Prior research has studied the neurophysiological processes underlying tactile perception, identifying four types of mechanoreceptors in human skin that contribute to the perception of touch [40]. These four mechanoreceptive tactile channels differ in characteristics such as perception properties, spectral sensitivities, and sensory adaptation rates [41, 24]. Among these four channels, the Meissner Corpuscle (RA1) and Pacinian Corpuscle (RA2) are more strongly activated by vibrotactile stimuli than the Merkel Disk (SA1) and Ruffini Ending (SA2). Drawing on the properties and spectral sensitivities of the two mechanoreceptive channels most related to vibrations (RA1 and RA2), we propose a mechanoreceptive processing approach that converts vibration signals into two-channel spectrograms.

Previous studies have also explored the perceptibility and discriminability of vibrations. Psychophysics researchers have examined the detection thresholds, or Absolute Limens (AL), for vibrations and uncovered a U-shaped threshold curve across frequencies, with the highest sensitivity occurring around 200 Hz (between 150 Hz and 300 Hz) [42, 43]. Other studies have investigated the discrimination thresholds, or Just Noticeable Differences (JND), between vibrations [29, 44, 45, 46]. JNDs for vibration intensity generally range from 10% to 30%, while JNDs for vibration frequency typically fall between 15% and 30% [24]. Some studies have also explored the relative impact of these parameters; increasing the duration of vibrations has been shown to decrease the JNDs for vibration intensity [47, 48]. We use these established findings on detection and discrimination thresholds for vibrations to augment our vibration signals while ensuring that the augmented vibrations remain perceptually indistinguishable to users.

The sensations and emotions elicited by haptic stimuli are also important research topics in HCI. Past research has developed sensory and emotional lexicons associated with haptic stimuli [49, 50, 51, 52]. Researchers have collected user ratings based on Russell’s circumplex model of affect, which defines two key emotional dimensions: valence and arousal [53]. Guest et al. identified 26 sensory attributes and 14 emotional attributes for describing touch and demonstrated that valence and arousal are primary factors in haptic emotional experience [54]. Among sensory attributes, researchers identified roughness as the primary dimension for capturing the perceptual properties of real textures or materials, with low variability among individual users. Studies on Tactons reported analogous findings: participants associated Tactons with roughness effectively but often faced challenges in associating hardness with Tactons, and sensory attributes like wet/dry or hot/cold were not relevant to vibrations [15, 16]. Additionally, while temporal attributes such as tempo, energy, and rhythm are relevant for describing certain vibration patterns, they are not considered primary sensory dimensions, as they are context-dependent and less generalizable across different tactile scenarios. Building on these established frameworks, we select roughness as the primary sensory dimension for Tactons and valence and arousal as the two primary emotional dimensions.

To inform the design of Tactons that convey specific sensations and emotions, previous studies have sought to derive sensory and emotional spaces for Tactons and identify effective design parameters. After creating one or more sets of Tactons, designers typically recruit participants to gather subjective responses to these Tactons. The sensory and emotional spaces are then visualized using averaged ratings for each Tacton, or statistical tests are applied to determine which design parameters significantly impact the sensations and emotions. Seifi and MacLean designed 14 Tactons and demonstrated that rhythmic structure influenced all three dimensions (roughness, valence, and arousal) and that carrier frequency affected arousal [15]. Yoo et al. explored emotional spaces with three different Tacton sets (25, 36, and 24 patterns) and provided design guidelines for four sinusoidal parameters [14]. Seifi et al. collected roughness, valence, and arousal ratings for 120 Tactons and developed the VibViz visualization to assist haptic designers [16]. While these studies examined sensory and emotional spaces through controlled laboratory user studies (i.e., offline studies), recent research has explored crowdsourced user studies (i.e., online studies) to reduce the effort and time needed to collect sensory and emotional ratings of Tactons [55, 26]. However, these studies still rely on researchers and designers to evaluate Tactons, which limits their scalability. To address this limitation, we collect sensory and emotional ratings for 154 Tactons, to our knowledge the largest Tacton set studied to date, and develop a model that predicts roughness, valence, and arousal ratings to enable rapid prototyping of effective Tacton candidates.

II-C Computational Models for Haptic Stimuli

Computational models that predict subjective evaluations of haptic stimuli or compare different haptic stimuli can help designers and researchers enhance user experiences and accelerate the design process in various applications. Previous research has developed models to predict haptic perceptions and sensations for objects or textured surfaces. These studies used robots or rigid tools to collect haptic data generated from physical interactions, such as accelerations, forces, and speeds. The resulting models predict sensory attributes elicited from objects [56, 57, 58], textured surfaces [59, 60], or perceptual similarities between textured surfaces [61]. While these models primarily focus on real objects or textured surfaces, we propose a model that predicts the sensations and emotions elicited by Tactons conveyed through a single vibrotactile actuator in haptic interfaces, which generates 1D accelerations.

Past studies have also proposed computational models for predicting subjective evaluations of vibrations rendered on a vibrotactile actuator. Park and Kuchenbecker introduced algorithms to transform three-axis accelerations collected from human interactions with textured surfaces into one-axis accelerations that can be played on a vibrotactile actuator [62]. They then developed a model to assess the perceptual similarities between the haptic stimuli generated from human interactions with textured surfaces and the converted one-axis accelerations. Other researchers proposed models for evaluating and comparing perceptual qualities between original and compressed vibration signals [63, 64, 65]. Recent work introduced a model to predict perceptual dissimilarities between Tactons by simulating the neural transmission from mechanoreceptors in the skin to the brain [20]. These studies primarily focused on predicting perceptual distinguishability, either between real-world haptic stimuli and vibrations or between different Tactons. In contrast, we propose a computational model that predicts the sensations and emotions elicited by Tactons. We note that unless the perceptual differences between Tactons are extremely subtle (i.e., the Tactons are hard to distinguish), users may perceive their sensory and emotional attributes differently. This highlights the need for vibrotactile sensation and emotion prediction models, as perceptual distinguishability models alone may not capture the nuanced mappings between vibrations and user-rated sensations and emotions. Our approach enables efficient prediction for new and unique Tactons that may not closely resemble existing vibrations in the dataset.

III Haptic Dataset Construction

We conducted a user study to record acceleration data from 154 Tactons rendered on three commercial devices (iPhones) and to collect sensory and emotional ratings of the Tactons (Figure 1).

III-A Tacton Design

We created a set of 154 Tactons to build a haptic dataset for training the neural network (Figure 2). Informed by prior literature on Tacton design, the set comprises Tactons varying in sinusoidal parameters (54 Tactons) and rhythmic structures (60 Tactons), plus complex Tactons that evoke multiple attributes such as sensations, emotions, and metaphors (40 Tactons).

The 54 Tactons (V1–V54) systematically varied in signal parameters, including three carrier frequencies (80 Hz, 155 Hz, and 230 Hz), three envelope frequencies (0 Hz, 4 Hz, and 8 Hz), three durations (300 ms, 1000 ms, and 2000 ms), and two amplitudes (0.5 and 1 in the AHAP format) (Figure 2a). We selected these parameters to cover most of the emotional space of sinusoidal vibrations [14] while ensuring compatibility with iPhone playback. We rendered the sinusoidal vibrations on iPhones using the following formula for the temporal envelope and temporal frequency:

| (1) |

Here, the amplitude is expressed in the Apple Haptic and Audio Pattern (AHAP) format, in which the two amplitude levels correspond to values of 0.5 and 1, respectively. The envelope frequency sets the modulation rate of the temporal envelope, with 0 Hz representing a constant envelope, and the carrier frequency sets the temporal frequency.
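The sketch below does not implement Eq. (1); it only enumerates the full-factorial parameter grid described above (3 carrier × 3 envelope × 3 duration × 2 amplitude levels = 54 Tactons). The V1–V54 numbering order and the use of the AHAP values 0.5 and 1.0 as amplitude labels are illustrative assumptions; the authors' actual mapping is shown in Figure 2a.

```python
from itertools import product

# Parameter levels taken from the text.
CARRIER_HZ = (80, 155, 230)
ENVELOPE_HZ = (0, 4, 8)        # 0 Hz = constant envelope
DURATION_MS = (300, 1000, 2000)
AHAP_AMPLITUDE = (0.5, 1.0)    # the two amplitude levels in AHAP units


def sinusoidal_design_grid():
    """Return one dict per Tacton; the V1..V54 ordering is hypothetical."""
    grid = []
    levels = product(CARRIER_HZ, ENVELOPE_HZ, DURATION_MS, AHAP_AMPLITUDE)
    for i, (fc, fe, dur, amp) in enumerate(levels, start=1):
        grid.append({"id": f"V{i}", "carrier_hz": fc, "envelope_hz": fe,
                     "duration_ms": dur, "amplitude": amp})
    return grid


tactons = sinusoidal_design_grid()
print(len(tactons))  # 54
```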

The 60 Tactons (V55–V114) varied across 10 rhythmic structures from [22] and two signal parameters: three carrier frequencies (80 Hz, 150 Hz, and 230 Hz) and two amplitudes (0.5 and 1 in the AHAP format) (Figure 2b). We selected these rhythmic structures to cover most of the perceptual space of rhythmic Tactons, considering primary parameters such as note length and evenness. All 60 Tactons lasted 2 seconds. We rendered the rhythmic Tactons using the following formula for the temporal envelope and temporal frequency:

| (2) |

Here, the amplitude is expressed in the AHAP format and the carrier frequency is held constant. The rhythm is encoded as a list of binary pulses, each 31.25 ms long, as in [34, 66].
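The 31.25 ms pulse length implies that each 2-second rhythmic Tacton is a sequence of 2 / 0.03125 = 64 binary pulses. As a rough illustration (not Eq. (2) itself, and not the AHAP rendering used on the iPhones), the following sketch turns such a pulse list into an on/off envelope and multiplies it by a constant-frequency sine for a desktop preview. The function names, the sampling rates, and the alternating example pattern are hypothetical.

```python
import numpy as np

PULSE_S = 0.03125  # 31.25 ms per binary pulse (from the text)


def rhythmic_envelope(pulses, amplitude, fs=1000):
    """On/off envelope: pulse value 1 -> `amplitude`, 0 -> silence, 31.25 ms each."""
    pulses = np.asarray(pulses, dtype=float)
    n_samples = int(round(len(pulses) * PULSE_S * fs))
    t = np.arange(n_samples) / fs
    # Index of the pulse that is active at each sample time.
    idx = np.minimum((t / PULSE_S).astype(int), len(pulses) - 1)
    return amplitude * pulses[idx]


def rhythmic_preview(pulses, amplitude, carrier_hz, fs=10_000):
    """Envelope times a constant-frequency sine (desktop preview only)."""
    env = rhythmic_envelope(pulses, amplitude, fs)
    t = np.arange(env.size) / fs
    return env * np.sin(2 * np.pi * carrier_hz * t)


# Hypothetical pattern: 64 pulses * 31.25 ms = 2 s, alternating on/off.
pattern = [1, 0] * 32
env = rhythmic_envelope(pattern, amplitude=1.0)
print(env.size)  # 2000 samples at 1 kHz
```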

Lastly, we designed 40 complex Tactons (V115–V154) using the VibViz library [16] (Figure 2c). We divided the original emotional space of the library's Tactons into nine segments and selected 40 Tactons based on their distribution in the original sensory and emotional spaces. We rendered these Tactons by transforming the original vibration waveforms into temporal envelopes and temporal frequencies suitable for iPhones. These Tactons featured more complex waveforms than the 54 sinusoidal and 60 rhythmic Tactons. In addition, their temporal frequency varied over time, whereas the 54 sinusoidal and 60 rhythmic Tactons used a constant frequency. The durations of the 40 Tactons ranged from 0.43 to 5.38 seconds.

III-B Participants

We recruited 36 participants (18 women and 18 men; aged 18–31 years, mean: 22.8, SD: 3.5), of whom three were left-handed and 33 right-handed. No participant had sensory impairments in either hand. Participants took 65 minutes on average to complete the study and received $30 USD as compensation.

III-C Experiment Setup

We used three iOS smartphones by Apple Inc. (iPhone 13 mini, iPhone 14, and iPhone 11 Pro Max) to collect acceleration data, as well as sensory and emotional ratings of Tactons, across different consumer phones. These phones varied in size and mass: iPhone 13 mini (141 g), iPhone 14 (172 g), and iPhone 11 Pro Max (226 g). We attached a 3-axis accelerometer (Analog Devices; ADXL354z) to the right of the smartphone camera (Figure 3a) and measured the accelerations of the vibrations using a DAQ board (National Instruments; USB-6353) at a sampling rate of 10 kHz. We recorded all three axes but, when training the neural network, used only the left-right axis, which is aligned with the vibration direction of the Taptic Engine in the iOS smartphones, because the other two axes contained noise unrelated to the Tactons. We collected participants' responses using a graphical user interface (GUI) on the smartphones (Figure 3b). Participants placed both hands on a table in front of them and held the phone in their left hand with the same posture and a natural grasp force while interacting with the GUI application using their right hand. Participants wore noise-canceling headphones playing white noise to block environmental sounds.

III-D Experiment Procedure

The study consisted of three sessions: introduction, training, and main session. Participants first completed the consent form and a demographic pre-questionnaire. Next, participants began the experiment using the GUI application on one of the three smartphones, with participants evenly distributed by biological sex across the devices. The training session displayed a set of buttons, each randomly mapped to a Tacton (Figure 3b left). Participants experienced all the Tactons before the main session, while we recorded acceleration data from their interactions using the accelerometer. If a participant played a Tacton multiple times, we stored the acceleration data from the last play. As a result, we measured accelerations from 12 participants (six women, six men) per smartphone, yielding acceleration data for all 154 Tactons from each of the 36 participants across the three smartphones (Figure 4a).

In the main session, participants rated roughness (smooth/rough), valence (unpleasant/pleasant), and arousal (calm/exciting) of 154 Tactons using sliders ranging from 0 to 100 (Figure 3b right). The application presented Tactons in a random order. Participants could play the Tactons multiple times but were unable to modify ratings for previously rated Tactons. Throughout the study, participants could take breaks as needed, and we maintained the room temperature between 20–23 degrees Celsius.

III-E Results

We collected 5,544 acceleration recordings (154 Tactons × 36 participants) and downsampled all of them from 10 kHz to 1 kHz, a sampling rate that captures the frequency range of human tactile receptors (its Nyquist frequency is 500 Hz). We downsampled before augmenting the vibration signals and feeding them into the neural network to improve the efficiency of model training.
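A minimal sketch of the 10 kHz to 1 kHz step, assuming SciPy's `scipy.signal.decimate` with an FIR anti-aliasing filter; the paper does not state which downsampling method was used. `downsample_acceleration` is a hypothetical helper name.

```python
import numpy as np
from scipy.signal import decimate

FS_RAW = 10_000    # DAQ sampling rate (Hz)
FS_TARGET = 1_000  # rate used for augmentation and training (Hz)


def downsample_acceleration(x, fs_in=FS_RAW, fs_out=FS_TARGET):
    """Anti-alias low-pass filter, then keep every (fs_in // fs_out)-th sample."""
    if fs_in % fs_out:
        raise ValueError("fs_in must be an integer multiple of fs_out")
    factor = fs_in // fs_out
    # ftype="fir" with zero_phase=True avoids shifting the signal in time.
    return decimate(np.asarray(x, dtype=float), factor, ftype="fir", zero_phase=True)


# Example: a 2 s, 125 Hz test tone recorded at 10 kHz becomes 2000 samples.
t = np.arange(20_000) / FS_RAW
y = downsample_acceleration(np.sin(2 * np.pi * 125 * t))
```

Components above 500 Hz are attenuated by the filter instead of aliasing into the tactile band.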

Comparing the ratings across the three devices (each averaged over its 12 participants), roughness and arousal ratings were very strongly correlated. For roughness, Pearson's r = 0.91 (iPhone 14 vs. iPhone 13 mini), 0.90 (iPhone 14 vs. iPhone 11 Pro Max), and 0.90 (iPhone 13 mini vs. iPhone 11 Pro Max). For arousal, r = 0.87 (iPhone 14 vs. iPhone 13 mini), 0.88 (iPhone 14 vs. iPhone 11 Pro Max), and 0.88 (iPhone 13 mini vs. iPhone 11 Pro Max). In contrast, valence ratings showed low to moderate correlations between the iPhone 14 and the other two devices (r = 0.38 with the iPhone 13 mini and r = 0.47 with the iPhone 11 Pro Max), while the correlation in valence ratings between the iPhone 13 mini and iPhone 11 Pro Max was strong (r = 0.72). Given these device-dependent differences, we used the averaged ratings for each device to train the neural network. Overall, consistent with [16], valence ratings were negatively related to arousal ratings, and arousal ratings were positively related to roughness ratings (averaged across the three devices). The standard deviations for roughness, valence, and arousal ratings of the 154 Tactons across 36 participants were 17.2, 19.1, and 17.3 (out of 100), respectively (Figure 4b).
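The per-device training targets and the pairwise correlations reported above can be computed along these lines. The sketch assumes a ratings matrix of shape (participants, Tactons) for one rating dimension and one device label per participant; `device_means` and `pairwise_device_correlations` are hypothetical helper names, and `scipy.stats.pearsonr` supplies r and its p-value.

```python
from itertools import combinations

import numpy as np
from scipy.stats import pearsonr


def device_means(ratings, device_of_participant):
    """Average one rating dimension over the participants of each device.

    ratings: array (n_participants, n_tactons); returns {device: (n_tactons,)}.
    """
    ratings = np.asarray(ratings, dtype=float)
    labels = np.asarray(device_of_participant)
    return {d: ratings[labels == d].mean(axis=0)
            for d in sorted(set(device_of_participant))}


def pairwise_device_correlations(means):
    """Pearson r and p-value for every pair of devices."""
    out = {}
    for a, b in combinations(sorted(means), 2):
        r, p = pearsonr(means[a], means[b])
        out[(a, b)] = (float(r), float(p))
    return out
```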

IV Haptic Data Augmentation and Mechanoreceptive Processing

In this section, we augment the 5,544 acceleration data entries, as increasing the scale of data can help improve the performance of neural networks, reduce overfitting, and enhance generalization of the model [67]. Next, we introduce a mechanoreceptive processing technique for converting 1D accelerations into two-channel 2D spectrograms to feed the processed data into our proposed neural network.

IV-A Haptic Data Augmentation

Unlike images or audio, for which extensive data augmentation techniques exist, haptic data has few established augmentation techniques. To address this gap, we propose three augmentation techniques for time-domain vibration waveforms, informed by human haptic perception and audio data augmentation methods, that keep the original and augmented vibrations indistinguishable to users (Figure 5). Five users confirmed through Three-Alternative Forced Choice (3AFC) testing that accelerations augmented by the following three techniques and their four combinations (7 = 3 + 4) were indistinguishable from the originals: in each trial, participants were presented with two identical stimuli (A, A; both the original vibration) and one different stimulus (B; the augmented vibration) and asked to identify the different one. Across the seven augmentation cases, the average proportion of correct identifications was around 0.33, the 3AFC chance level, suggesting that the augmented vibrations were perceptually equivalent to the originals.
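The seven augmentation cases are the three single techniques plus their four multi-technique combinations (three pairs and one triple), which `itertools.combinations` enumerates directly. The paper reports only the average proportion of correct 3AFC responses; as one possible way to check whether a proportion is consistent with the 1/3 chance level, the sketch adds a one-sided binomial test via `scipy.stats.binomtest`. That test, the helper names, and the example counts are our assumptions, not the authors' analysis.

```python
from itertools import combinations

from scipy.stats import binomtest

TECHNIQUES = ("noise", "speed", "amplitude")


def augmentation_cases(techniques=TECHNIQUES):
    """All non-empty combinations: 3 singles + 3 pairs + 1 triple = 7."""
    return [combo for k in range(1, len(techniques) + 1)
            for combo in combinations(techniques, k)]


def three_afc_check(n_correct, n_trials, alpha=0.05):
    """One-sided test of whether listeners beat the 3AFC chance rate of 1/3.

    Returns (proportion correct, p-value, distinguishable?).
    """
    result = binomtest(n_correct, n_trials, p=1 / 3, alternative="greater")
    return n_correct / n_trials, float(result.pvalue), bool(result.pvalue < alpha)


cases = augmentation_cases()
print(len(cases))  # 7
```

Note that failing to reject chance is weak evidence of indistinguishability with only a few trials, so the number of trials per case matters.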

IV-A1 Noise Injection

We injected white noise into the original haptic data while keeping the noise amplitude below the absolute detection threshold, or absolute limen (AL), for vibrations (Figure 5a). Since humans are most sensitive to vibrations at around 200 Hz [24], the AL is lowest there, so bounding the noise by the AL at 200 Hz (0.0006 G) keeps it below the AL at all frequencies. Accordingly, the injected uniform white noise took amplitudes randomly selected from the range [−0.0006 G, 0.0006 G].
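A minimal sketch of the noise-injection step, assuming that each noise sample is drawn independently from the uniform range [−0.0006 G, 0.0006 G] and that accelerations are expressed in G. `inject_noise` is a hypothetical name.

```python
import numpy as np

AL_200HZ_G = 0.0006  # absolute limen near 200 Hz, in G (from the text)


def inject_noise(acc_g, rng=None, bound=AL_200HZ_G):
    """Add i.i.d. uniform white noise in [-bound, bound) to an acceleration trace."""
    rng = np.random.default_rng() if rng is None else rng
    acc_g = np.asarray(acc_g, dtype=float)
    noise = rng.uniform(-bound, bound, size=acc_g.shape)
    return acc_g + noise
```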

IV-A2 Changing Speed

We increased or decreased the speed of the vibration, taking into account the discrimination thresholds, or just noticeable differences (JND), for vibration frequency and the effects of duration on vibration perception, particularly temporal summation and the amplitude JND (Figure 5b). Because changing the playback speed scales all frequencies by the same factor and human frequency JNDs generally cluster between 15% and 30% [24], we kept the change in the duration of the haptic data below 15%. In addition, since vibration durations longer than 10 ms affect both the amplitude JND and the perceived intensity of vibration, we ensured that the absolute change in duration between the original and augmented haptic data was less than 10 ms. Within these bounds, the speed-change rate was selected at random.
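The two duration bounds interact: the 10 ms limit is the tighter one for every Tacton in this dataset, since even the shortest (300 ms) allows only a 0.010 / 0.3 ≈ 3.3% change, and a 2-second Tacton allows 0.5%. The sketch below computes the tighter bound and time-stretches the signal by linear interpolation; the interpolation method, the uniform sampling of the change, and the function names are assumptions.

```python
import numpy as np


def max_relative_duration_change(duration_s, rel_cap=0.15, abs_cap_s=0.010):
    """Largest relative duration change allowed by both bounds in the text:
    under 15% of the duration and under 10 ms in absolute terms."""
    return min(rel_cap, abs_cap_s / duration_s)


def change_speed(acc, fs=1000, rng=None):
    """Time-stretch `acc` by a random factor within the bounds above,
    using linear interpolation as an assumed resampling method."""
    rng = np.random.default_rng() if rng is None else rng
    acc = np.asarray(acc, dtype=float)
    n = acc.size
    delta_max = max_relative_duration_change(n / fs)
    delta = rng.uniform(-delta_max, delta_max)  # relative duration change
    # Truncation keeps |n_new - n| at or below 10 ms worth of samples.
    n_new = n + int(np.trunc(n * delta))
    src = np.linspace(0.0, 1.0, n)
    dst = np.linspace(0.0, 1.0, n_new)
    return np.interp(dst, src, acc)
```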

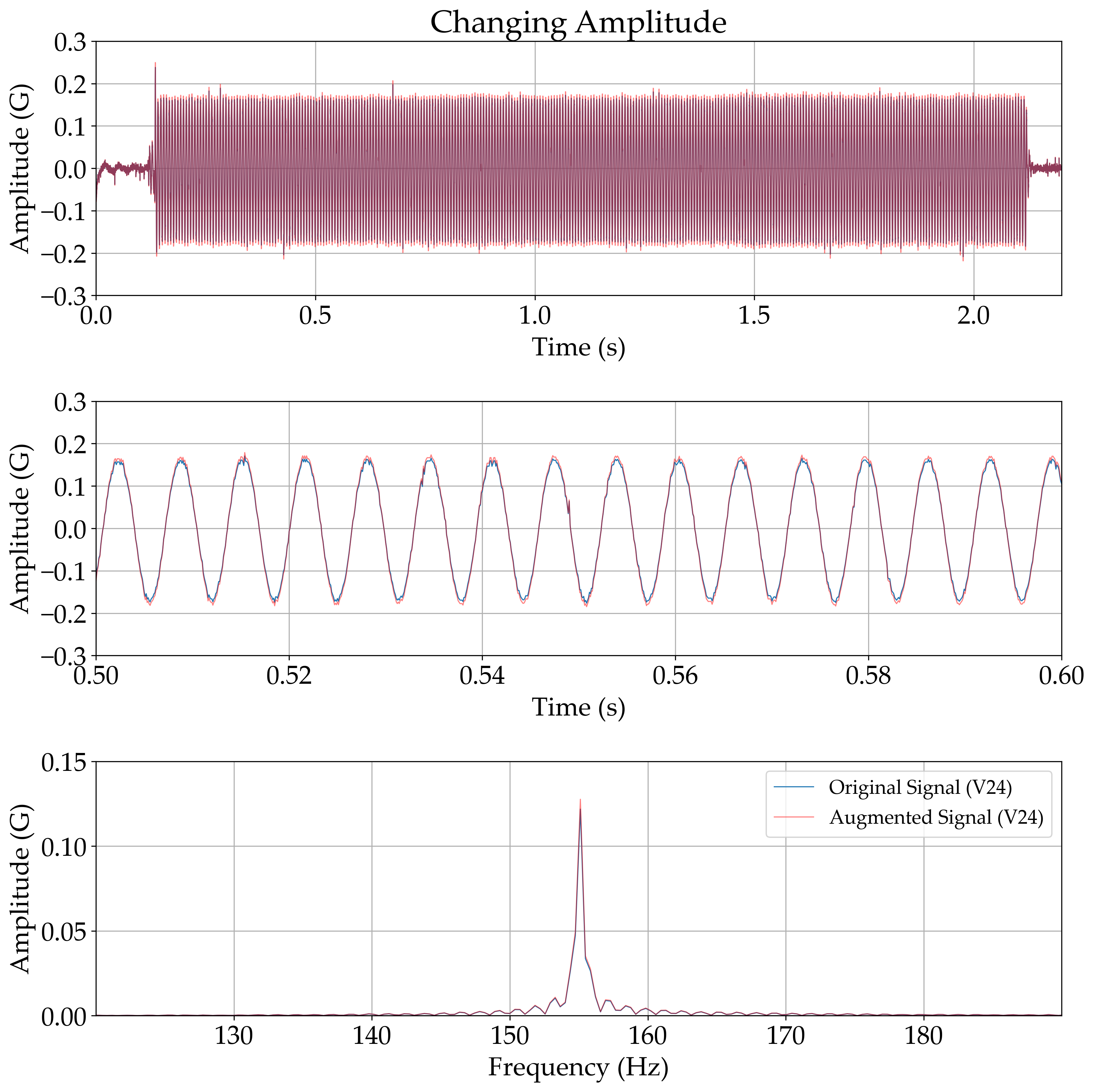

IV-A3 Changing Amplitude

We increased or decreased the amplitude of the vibration, considering the amplitude JND (Figure 5c). Since human amplitude JNDs mostly fall between 10% and 30% [24], we kept the change in the amplitude of the haptic data below 10%, scaling the amplitude by a rate randomly selected within this bound.
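A minimal sketch of amplitude scaling, assuming a single gain factor drawn uniformly within ±10% and applied to the whole signal; the paper only states that the change was below 10% and randomly selected. `change_amplitude` is a hypothetical name.

```python
import numpy as np


def change_amplitude(acc, rng=None, max_rel_change=0.10):
    """Scale the whole trace by one random gain in [1 - max_rel_change, 1 + max_rel_change)."""
    rng = np.random.default_rng() if rng is None else rng
    gain = 1.0 + rng.uniform(-max_rel_change, max_rel_change)
    return gain * np.asarray(acc, dtype=float)
```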

IV-A4 Implementation of Haptic Data Augmentation

Using the three augmentation techniques described above and their four combinations, we applied a total of seven augmentation methods to each of our 5,544 acceleration recordings and repeated the process twice. This generated an additional 77,616 acceleration data entries (5,544 × 7 × 2) from the original 5,544, for a total of 83,160 data entries, which we then fed into the mechanoreceptive processing and the neural network.
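The counts above (5,544 originals, seven methods, two repetitions) can be reproduced with a small driver that applies every non-empty combination of techniques to each recording. The sketch assumes that each augmented copy inherits the ratings of its source recording and that the techniques in a combination are applied in list order; `augment_dataset` is a hypothetical helper, and the techniques are passed in as callables so that noise, speed, and amplitude functions can be plugged in.

```python
from itertools import combinations


def augment_dataset(samples, techniques, repeats=2, rng=None):
    """samples: iterable of (signal, ratings) pairs.
    techniques: callables f(signal, rng) -> signal.

    Keeps each original and adds one output per non-empty combination of
    techniques per repeat; augmented copies reuse the original ratings.
    """
    combos = [c for k in range(1, len(techniques) + 1)
              for c in combinations(techniques, k)]
    out = []
    for signal, ratings in samples:
        out.append((signal, ratings))
        for _ in range(repeats):
            for combo in combos:
                y = signal
                for fn in combo:  # applied in the listed order (assumption)
                    y = fn(y, rng)
                out.append((y, ratings))
    return out


# With 3 techniques and 2 repeats, each recording yields 1 + 7 * 2 = 15 entries.
print(5544 * 7 * 2, 5544 * (1 + 7 * 2))  # 77616 83160
```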

IV-B Mechanoreceptive Processing

To better capture human vibrotactile processing, we supplemented the 1D waveform haptic data, which contains only amplitude values and lacks explicit frequency information, with additional data in a different form. Previous research on developing neural networks for audio data introduced a method to convert the audio classification/prediction problem into an image classification/prediction problem by transforming waveform audio data into a spectrogram [68, 69]. This conversion can complement the waveform data by segmenting its duration into smaller time intervals and representing the frequency content of these segments in a single plot through the STFT. In addition, since the converted spectrogram is an image, it is well-suited for input into CNN-based architectures designed for image processing.

During the conversion, we applied two bandpass filters based on the spectral sensitivities of the mechanoreceptors that code touch information for perception. In vision research, neural networks are typically developed using three-channel (RGB) images [67, 70], since the cone photoreceptors in the retina responsible for visual processing fall into three types, each with a different spectral sensitivity and therefore activated by photons of specific wavelengths [71]. Similarly, each of the four types of mechanoreceptors involved in tactile processing has distinct spectral sensitivities and activation patterns. However, we used only two bandpass filters, corresponding to the spectral sensitivities of the Meissner Corpuscle (RA1) [3 Hz, 100 Hz] and the Pacinian Corpuscle (RA2) [40 Hz, 500 Hz], as these two mechanoreceptors are most relevant to vibration perception [72]. We also tested neural networks using four mechanoreceptive filters but did not find significant performance improvements over those using two filters (see Section VI-B and Table I).
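A sketch of the two mechanoreceptive channels, assuming zero-phase 4th-order Butterworth filters from SciPy (the paper does not specify the filter type or order). One practical detail: at the 1 kHz sampling rate, the RA2 upper edge of 500 Hz coincides with the Nyquist frequency, which cannot serve as a digital band edge, so the sketch realizes that band as a 40 Hz high-pass.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS = 1000  # Hz, after downsampling
BANDS = {"RA1": (3.0, 100.0), "RA2": (40.0, 500.0)}  # Hz, from the text


def mechanoreceptive_channels(acc, fs=FS, order=4):
    """Return a (2, n_samples) array: RA1-band and RA2-band filtered signals."""
    nyq = fs / 2
    channels = []
    for lo, hi in BANDS.values():
        if hi >= nyq:
            # Upper edge at or above Nyquist: the band reduces to a high-pass.
            sos = butter(order, lo, btype="highpass", fs=fs, output="sos")
        else:
            sos = butter(order, [lo, hi], btype="bandpass", fs=fs, output="sos")
        # Forward-backward filtering gives zero phase distortion.
        channels.append(sosfiltfilt(sos, np.asarray(acc, dtype=float)))
    return np.stack(channels)
```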

We applied the mechanoreceptive processing technique to the 83,160 augmented 1D waveforms, creating two-channel 2D spectrograms of raw floating-point values, with time on the x-axis and frequency on the y-axis. Because the Tactons in our dataset varied in duration, we first zero-padded the end of each acceleration recording to a common length of 6,000 samples (i.e., 6 seconds at 1 kHz), which exceeds the longest Tacton (5.38 seconds). We then applied the STFT to the two signals output by the mechanoreceptive filters, using a 0.5-second window and a 0.05-second hop size, which yields a spectral resolution of 2 Hz. The resulting two-channel spectrograms have dimensions of (251, 121), i.e., 251 frequency bins by 121 time frames. Finally, we fed both the 83,160 1D acceleration recordings and their corresponding two-channel spectrograms into the neural network.
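The stated settings fix the spectrogram size: a 500-sample window at 1 kHz gives 1000 / 500 = 2 Hz bins, hence 500 / 2 + 1 = 251 frequency bins, and a 50-sample hop over 6,000 samples gives 6000 / 50 + 1 = 121 frames (with SciPy's default zero-extension of half a window at each end). The sketch assumes a Hann window and magnitude output from `scipy.signal.stft`, and pads the filtered channels rather than the raw recording; these are our choices, not the authors' stated ones.

```python
import numpy as np
from scipy.signal import stft

FS = 1000
TARGET_LEN = 6000     # 6 s at 1 kHz
WIN = int(0.5 * FS)   # 500 samples -> 2 Hz frequency resolution
HOP = int(0.05 * FS)  # 50 samples


def pad_to_length(x, n=TARGET_LEN):
    """Zero-pad the last axis at the end up to n samples."""
    x = np.asarray(x, dtype=float)
    if x.shape[-1] > n:
        raise ValueError("signal longer than target length")
    pad = [(0, 0)] * (x.ndim - 1) + [(0, n - x.shape[-1])]
    return np.pad(x, pad)


def two_channel_spectrogram(channels, fs=FS):
    """channels: (2, n) filtered signals -> magnitude spectrogram (2, 251, 121)."""
    x = pad_to_length(channels)
    _, _, Z = stft(x, fs=fs, window="hann", nperseg=WIN, noverlap=WIN - HOP)
    return np.abs(Z)
```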

V VibNet: A Neural Network for Predicting Sensory and Emotional Ratings

In this section, we propose a deep learning (DL) model, VibNet, to predict sensations and emotions conveyed through Tactons on a scale from 0 to 100. VibNet consists of two parallel streams of neural networks inspired by human vibrotactile perception and cognitive mechanisms [41, 20], each utilizing different input types: 1D waveform data and two-channel 2D spectrograms, respectively. By extracting both sequential and temporal-spectral features from these varied inputs, our proposed DL model aims to enhance the ability to predict the sensory and emotional impacts of haptic feedback.

V-A Network Architecture

VibNet consists of two parallel neural network streams, each designed to capture different aspects of the vibration data (Figure 6). The first stream employs Gated Recurrent Unit (GRU) layers [19]. GRU networks use a reset gate and an update gate in each unit to capture temporal dependencies, and they are known to train more efficiently than Long Short-Term Memory (LSTM) networks because they have fewer parameters. Given the significant impact of temporal and rhythmic structures on human vibrotactile perception [20, 21, 22], we selected GRU networks to capture the sequential features of Tactons. This stream consists of two layers, each with 1024 GRU units. The first layer takes the 1D waveform data as input and passes its output sequence to the second layer, which flattens its output before passing it to the concatenation layer.
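A minimal PyTorch sketch of the first stream follows. It assumes that each waveform is fed one acceleration sample per time step (`input_size=1`) and, because the text does not specify which GRU outputs are flattened, that the last time step's hidden state is used as the stream's feature vector. The class name `GRUStream` is hypothetical.

```python
import torch
from torch import nn


class GRUStream(nn.Module):
    """First-stream sketch: two stacked GRU layers with 1024 units each."""

    def __init__(self, hidden_size=1024, num_layers=2):
        super().__init__()
        self.gru = nn.GRU(input_size=1, hidden_size=hidden_size,
                          num_layers=num_layers, batch_first=True)

    def forward(self, waveform):
        # waveform: (batch, n_samples) -> (batch, n_samples, 1), one scalar per step
        out, _ = self.gru(waveform.unsqueeze(-1))
        # assumption: keep only the last step's hidden state; flattening every step
        # would give n_samples * hidden_size features (over 6 million for 6,000 samples)
        return out[:, -1, :]
```

Keeping only the final hidden state gives a fixed 1024-dimensional summary per Tacton, independent of the sequence length.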

The second stream employs a ResNet architecture [23], a type of Convolutional Neural Network (CNN), to process the two spectrograms derived from each acceleration signal. ResNet uses residual connections to enable the training of very deep networks by mitigating the vanishing gradient problem, and it has achieved state-of-the-art performance with fewer parameters than traditional plain CNNs such as VGGNet [73]. We used a 154-layer ResNet with bottleneck blocks to capture the temporal-spectral features of the two-channel 2D spectrograms, applying an architecture developed for image processing to haptic data. As in the first stream, the final layer of this stream flattens its output and passes it to the concatenation layer.

The concatenation layer receives the outputs from the final layers of both streams. This is followed by three fully connected layers with 1024, 128, and 16 neurons, respectively, applying the ReLU activation function and a dropout rate of 0.5 between layers. The final layer, consisting of 16 neurons, regresses the values to provide three outputs corresponding to predictions for roughness, valence, and arousal ratings.
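The fusion head can be sketched as a small multilayer perceptron. The sketch assumes a 1,024-dimensional GRU feature and a 2,048-dimensional ResNet feature (typical output sizes for the layers described, but not stated in the text). It also interprets "regresses the values to provide three outputs" as a final linear map from the 16 neurons to three values and applies dropout after the first two hidden layers. The class name is hypothetical.

```python
import torch
from torch import nn


class FusionHead(nn.Module):
    """Concatenate both stream features and regress three ratings."""

    def __init__(self, gru_dim=1024, cnn_dim=2048, n_outputs=3, p_drop=0.5):
        super().__init__()
        self.mlp = nn.Sequential(
            nn.Linear(gru_dim + cnn_dim, 1024), nn.ReLU(), nn.Dropout(p_drop),
            nn.Linear(1024, 128), nn.ReLU(), nn.Dropout(p_drop),
            nn.Linear(128, 16), nn.ReLU(),
            nn.Linear(16, n_outputs),  # roughness, valence, arousal
        )

    def forward(self, gru_features, cnn_features):
        # join the sequential and temporal-spectral features along the feature axis
        return self.mlp(torch.cat([gru_features, cnn_features], dim=1))
```

The outputs are unconstrained real values; they are trained against ratings on the 0 to 100 scale rather than being clipped to that range.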

V-B Implementation

We implemented VibNet in PyTorch and trained it on the haptic dataset described above using an NVIDIA GeForce RTX 3080 Ti GPU. We used the Adam optimizer with a batch size of 32 and Mean Squared Error (MSE) as the loss function. Following commonly used values in DL [67], we set the learning rate to 0.001 and trained the model for 100 epochs. To ensure robust performance, we applied 5-fold cross-validation during training, balancing training efficiency and model accuracy given the complexity of the dataset and architecture.
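The training setup can be sketched as a generic 5-fold cross-validation loop with the stated hyperparameters (Adam, learning rate 0.001, batch size 32, MSE loss, 100 epochs). The random index split and the helper names are assumptions of this sketch. The model factory is called once per fold so that each fold starts from fresh weights. The `*inputs` unpacking lets a two-input model, such as one taking a waveform and a spectrogram, be trained from a `TensorDataset` of (waveform, spectrogram, target).

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, Subset, TensorDataset


def kfold_indices(n_samples, k=5, seed=0):
    """Shuffle sample indices once and split them into k disjoint folds."""
    g = torch.Generator().manual_seed(seed)
    return list(torch.tensor_split(torch.randperm(n_samples, generator=g), k))


def cross_validate(make_model, dataset, k=5, epochs=100, batch_size=32, lr=1e-3):
    """Train a fresh model per fold and return each fold's validation MSE."""
    folds = kfold_indices(len(dataset), k)
    loss_fn = nn.MSELoss()
    val_losses = []
    for i, val_idx in enumerate(folds):
        train_idx = torch.cat([f for j, f in enumerate(folds) if j != i])
        train_loader = DataLoader(Subset(dataset, train_idx.tolist()),
                                  batch_size=batch_size, shuffle=True)
        val_loader = DataLoader(Subset(dataset, val_idx.tolist()), batch_size=batch_size)
        model = make_model()
        optimizer = torch.optim.Adam(model.parameters(), lr=lr)
        for _ in range(epochs):
            model.train()
            for *inputs, targets in train_loader:
                optimizer.zero_grad()
                loss = loss_fn(model(*inputs), targets)
                loss.backward()
                optimizer.step()
        model.eval()
        total, count = 0.0, 0
        with torch.no_grad():
            for *inputs, targets in val_loader:
                total += loss_fn(model(*inputs), targets).item() * len(targets)
                count += len(targets)
        val_losses.append(total / count)
    return val_losses
```

The text does not say how folds were formed. Because the 83,160 samples are augmented copies of fewer original recordings, grouping folds by Tacton would keep near-duplicate samples out of the validation fold.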

VI Evaluation

We evaluated the performance and generalizability of our framework on two new Tacton sets by comparing it against five baseline machine learning models. The baseline models were trained on the same haptic dataset as our model, with adjustments to meet the specific input requirements of each baseline.

VI-A Method

We compared VibNet against five baseline models, including conventional machine learning models and neural networks that are standard in the haptics field [59, 58]. Additionally, we conducted an ablation study [74] to assess the impact of the multi-channel spectrogram inputs derived from the proposed mechanoreceptive processing (Section IV-B) and of the corresponding CNN layers. We compared the proposed framework, which uses two-channel spectrograms processed by two mechanoreceptive filters (RA1 and RA2), with networks whose CNN-based second stream used (1) STFT without mechanoreceptive filters or (2) STFT with four mechanoreceptive filters (RA1, RA2, SA1, and SA2). We tested approach (2) to examine whether adding channels for the Merkel Disk (SA1) and Ruffini Ending (SA2), which are known to contribute less to vibration perception than the Meissner Corpuscle (RA1) and Pacinian Corpuscle (RA2) [72, 24], would improve performance. To include these two additional channels, we applied a 5 Hz lowpass filter and a [15 Hz, 400 Hz] bandpass filter, reflecting the spectral sensitivities of the Merkel Disk and Ruffini Ending, respectively.
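The three ablation inputs differ only in the filter bank applied before the STFT. The SciPy sketch below assumes a 1 kHz sampling rate, fourth-order Butterworth filters, and zero-phase filtering, none of which is specified in the text. As in the two-filter case, the RA2 band's 500 Hz edge coincides with the Nyquist frequency, so RA2 is approximated here by a 40 Hz highpass filter. The variant names and the function name are hypothetical.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS = 1000  # Hz, assumed

# (btype, cutoff in Hz) for each mechanoreceptive channel
RA1 = ("bandpass", (3, 100))
RA2 = ("highpass", 40)        # stands in for [40, 500] Hz at a 1 kHz sampling rate
SA1 = ("lowpass", 5)
SA2 = ("bandpass", (15, 400))

VARIANTS = {
    "single": [],                   # plain STFT input, no mechanoreceptive filtering
    "two": [RA1, RA2],              # VibNet
    "four": [RA1, RA2, SA1, SA2],   # four-channel ablation
}


def mechanoreceptive_channels(x, variant, fs=FS, order=4):
    """Return an array of shape (n_channels, len(x)) for the chosen ablation variant."""
    specs = VARIANTS[variant]
    if not specs:
        return x[np.newaxis, :]
    channels = []
    for btype, cutoff in specs:
        sos = butter(order, cutoff, btype=btype, fs=fs, output="sos")
        channels.append(sosfiltfilt(sos, x))
    return np.stack(channels)
```

The number of channels returned (1, 2, or 4) must match the input channel count of the CNN stream's first convolution.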

We used two new Tacton sets from a previous study [26], which included sensory and emotional ratings for 24 Tactons in each set. The first set of 24 Tactons was designed using sinusoidal parameters, while the second set consisted of 24 Tactons with complex waveforms. None of the 48 Tactons in the two sets overlapped with the 154 Tactons used for training. We collected acceleration data from 36 new participants for the 48 Tactons, following the same procedure described in Section III-C. For roughness, valence, and arousal ratings, we compared the predictions with the ground truths using the RMSE averaged across the 36 participants. We also report the proportion of our predictions that fall within the standard deviation of user ratings across the two test sets. To evaluate the model's robustness to variations in measurements across users, we used each of the 36 participants' individual acceleration data, with each data entry consisting of 48 vibration measurements. However, we averaged the roughness, valence, and arousal ratings across participants and used these averages as the ground truth, so that the results generalize to real-world haptic design by reflecting aggregate perceptions across diverse users.
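The two evaluation measures can be sketched in NumPy for a single attribute. The sketch assumes that predictions are arranged as one row per participant (each row computed from that participant's acceleration recordings) and that the ground truth and standard deviation are per-Tacton statistics across participants. The function names are hypothetical.

```python
import numpy as np


def mean_rmse_over_participants(preds, truth):
    """preds: (n_participants, n_tactons); truth: (n_tactons,) mean user ratings.
    Compute the RMSE for each participant's predictions, then average over participants."""
    per_participant = np.sqrt(np.mean((preds - truth[np.newaxis, :]) ** 2, axis=1))
    return per_participant.mean()


def within_sd_fraction(preds, truth, sd):
    """Fraction of predictions within one standard deviation of the mean user rating."""
    return np.mean(np.abs(preds - truth[np.newaxis, :]) <= sd[np.newaxis, :])
```

Multiplying the fraction by 24 gives the average number of a set's 24 Tactons whose prediction falls within the standard deviation, the form in which the per-attribute results are reported.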

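TABLE I: RMSE between predicted and ground-truth roughness (R), valence (V), and arousal (A) ratings, averaged across 36 participants, for test set 1 (sinusoidal Tactons) and test set 2 (complex-waveform Tactons). The final column averages the two test sets.

| Method | Network | Set 1 R | Set 1 V | Set 1 A | Set 1 Avg. | Set 2 R | Set 2 V | Set 2 A | Set 2 Avg. | Avg. |
|---|---|---|---|---|---|---|---|---|---|---|
| Baseline | Linear Regression | 17.86 | 15.38 | 19.49 | 17.58 | 16.55 | 14.41 | 20.11 | 17.02 | 17.30 |
| Baseline | 1D-CNN [58] | 17.94 | 11.14 | 17.37 | 15.48 | 23.00 | 11.54 | 22.60 | 20.05 | 17.77 |
| Baseline | LSTM [58] | 18.19 | 9.44 | 18.69 | 15.44 | 17.13 | 9.01 | 18.71 | 14.95 | 15.20 |
| Baseline | CNN-LSTM [59] | 17.71 | 8.81 | 17.90 | 14.81 | 16.61 | 8.16 | 16.94 | 13.90 | 14.36 |
| Baseline | Transformer [75] | 21.28 | 12.90 | 20.13 | 18.10 | 18.47 | 9.39 | 19.08 | 15.64 | 16.87 |
| Proposed (tested approach) | Single spectrogram | 16.12 | 8.31 | 16.45 | 13.63 | 14.14 | 6.78 | 14.52 | 11.81 | 12.72 |
| Proposed (VibNet) | Two mechanoreceptive filters (RA1, RA2) | 15.12 | 7.46 | 14.86 | 12.48 | 13.53 | 6.65 | 13.97 | 11.35 | 11.91 |
| Proposed (tested approach) | Four mechanoreceptive filters (RA1, RA2, SA1, SA2) | 14.60 | 7.21 | 14.56 | 12.12 | 13.60 | 7.61 | 14.22 | 11.81 | 11.97 |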

VI-B Results

The RMSE values averaged across 36 users showed that all three of our proposed methods, regardless of whether mechanoreceptive filters were used in the second stream, outperformed the five baselines on both test sets (Table I). Among the baselines, linear regression yielded a high RMSE for test set 1 (17.58), exceeded only by the transformer (18.10). The 1D-CNN model [58] reduced the RMSE for test set 1 (15.48) but had the highest RMSE for test set 2 (20.05) among all tested models. The LSTM model [58] performed similarly to the 1D-CNN model on set 1 (15.44) but improved on set 2, with an RMSE of 14.95. The CNN-LSTM network from [59], which used parallel streams of CNN and LSTM, performed better than a single stream of either CNN or LSTM [58]. However, the transformer regression model [75] did not yield accurate predictions for the roughness and emotions of unseen Tactons, despite being well trained. All three of our tested approaches outperformed the CNN-LSTM network. Among these approaches, using two mechanoreceptive filters led to a lower RMSE for test set 1 (RMSE = 12.48) than using a single spectrogram without a mechanoreceptive filter (RMSE = 13.63), although using four mechanoreceptive filters lowered it further (RMSE = 12.12). For test set 2, the RMSE was lowest with two mechanoreceptive filters (RMSE = 11.35), compared with the single spectrogram and the four-channel spectrograms (RMSE = 11.81 for both). Overall, VibNet with the two-channel mechanoreceptive filters performed best across both test sets (average RMSE = 11.91).

On average, 82% of VibNet's predictions fell within the standard deviation of the ground-truth user ratings across both test sets and all 36 participants. Specifically, in set 1, on average 18.5, 23.6, and 16.9 of the 24 predictions for roughness, valence, and arousal, respectively, were within the standard deviation. In set 2, on average 19.4, 23.6, and 16.2 of the 24 predictions for roughness, valence, and arousal, respectively, met this criterion. Overall, across the two test sets, the highest proportion of predictions within the ground truth's standard deviation was for valence (98% for both sets 1 and 2), followed by roughness (set 1: 77%, set 2: 81%) and arousal (set 1: 70%, set 2: 67%).
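The overall 82% figure follows from these per-attribute counts. Every attribute-by-set combination contains 24 Tactons, so the pooled proportion is the sum of the six average counts divided by the total number of predictions:

$$
\frac{18.5 + 23.6 + 16.9 + 19.4 + 23.6 + 16.2}{6 \times 24} = \frac{118.2}{144} \approx 0.82
$$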

VII Discussion

In this paper, we designed 154 Tactons and ran a user study to construct a large-scale haptic dataset. We augmented the acceleration data with reference to human perception thresholds and verified the perceptual invariance of the augmented 1D haptic signals. The vibration signals were processed into two-channel spectrograms inspired by the spectral sensitivities of mechanoreceptors and fed into VibNet, which consists of two streams: one of recurrent (GRU) layers and one of CNN layers. On two unseen Tacton sets, VibNet achieved lower prediction errors than five baseline machine learning models. Based on these findings, we discuss the efficacy of VibNet and outline implications for future work.

VII-A Performance Comparison in Vibrotactile Sensation and Emotion Prediction

We used dual streams in our neural network to capture sequential and temporal-spectral features, drawing inspiration from human vibrotactile perception and cognitive mechanisms [41, 20]. Our evaluation demonstrated that this architectural choice enabled VibNet to achieve the lowest average RMSE across the test sets. The 1D-CNN model [58] performed better than linear regression on test set 1, which consisted of Tactons designed with sinusoidal parameters that varied the spectral aspects of the vibration signals. However, this model showed the worst performance on test set 2, which consisted of Tactons with complex waveforms in the time domain. In contrast, the CNN-LSTM model [59], which combined 1D CNN and LSTM in dual streams to predict haptic attributes of real textures, showed lower RMSEs on the test sets than either single stream alone. Our findings further confirm that predicting how vibrations are perceived requires capturing both sequential and temporal-spectral features, in line with the results presented in [20]. Moreover, the results imply that using 2D spectrograms with 2D CNN layers, instead of 1D waveforms with 1D CNN layers, can enhance the network's ability to capture the spectral features of vibrations.

In the second stream, which captures the temporal-spectral features of Tactons, the two-channel mechanoreceptive filters based on the spectral sensitivities of the Meissner Corpuscle (RA1) and Pacinian Corpuscle (RA2) led to a lower average RMSE across the two test sets (11.91) than two alternative approaches: (1) using a single spectrogram (RMSE = 12.72) and (2) using four-channel mechanoreceptive filters (RMSE = 11.97). Specifically, while adding channels to the spectrogram improved performance on test set 1, the best performance on test set 2 was achieved by VibNet (RMSE = 11.35), with the single spectrogram and the four-channel mechanoreceptive filters tied behind it (RMSE = 11.81 for both). These results underscore the efficacy of mechanoreceptive filters while also highlighting the need to balance the two streams within the network to maintain generalizability for predicting vibrotactile sensations and emotions. Overall, VibNet's predictions achieved an average generalization accuracy of 82% across Tacton sets and participants, measured as the proportion of predictions falling within the standard deviations of the ground truths, regardless of which participant's acceleration data were used as input.

VII-B Limitations

While VibNet demonstrated promising prediction performance and generalizability across different Tacton sets and users, our biomimetic framework has several limitations. Although we trained VibNet on a wide range of Tactons designed with a variety of parameters, the model may be less accurate for Tactons outside the design space used for training. For instance, the training data included Tactons with durations ranging from 300 milliseconds to 6 seconds, and all inputs were zero-padded to a fixed length of 6 seconds. A Tacton lasting 20 seconds would therefore exceed the input length, and VibNet may require adjustments to maintain accuracy. Similarly, the frequency bandwidth of iPhones ranges from 80 Hz to 230 Hz, with a maximum intensity of around 0.3 G. Thus, reliable predictions for Tactons outside these frequency and amplitude ranges may require additional data collection and further enhancements to the neural network. Lastly, our study focused solely on haptic stimuli, without auditory or visual stimuli. Future research should expand the dataset to cover a broader design space and incorporate multimodal stimuli to further enhance VibNet's utility.

VII-C Implications for Future Work

We outline how our framework can inform future research and influence haptic design practices.

Designers can use VibNet to estimate users' sensations and emotions when prototyping Tactons. Exploring the sensations and emotions elicited by Tactons is often a time-consuming and resource-intensive process, as it requires conducting multiple user studies while navigating an extensive Tacton design space. However, VibNet requires only acceleration data produced by a haptic device, enabling designers to efficiently prototype and refine Tactons to achieve the desired sensations and emotions. By reducing reliance on trial-and-error prototyping and extensive user testing, VibNet streamlines the development of haptic applications.

Researchers can integrate our model into Tacton design tools. Designing effective Tactons for real-world applications is often a complex and challenging task. To address this, previous studies have proposed graphical user interfaces to assist designers in creating Tactons for diverse applications and scenarios [37, 76]. VibNet can further enhance these design tools by enabling designers to quickly evaluate the sensations and emotions elicited by the created Tactons, leading to a more efficient and streamlined design process.

Our framework can inform the development of future machine learning models that predict various attributes conveyed by haptic stimuli. First, we provide a unique haptic dataset consisting of acceleration data collected from 36 participants, along with roughness, valence, and arousal ratings for 154 Tactons designed using a wide range of design parameters. This dataset serves as a resource for training future prediction models in the haptics domain. Additionally, researchers can use this data to explore which components of neural network architectures contribute to capturing specific features of vibrations, providing deeper insights for Tacton design. Second, our framework offers a method for augmenting haptic data offline while ensuring the perceptual invariance of the signals. This method provides a foundation for developing deep learning or generative models that require large haptic datasets. Researchers could also explore the effects of haptic data augmentation on model robustness, performance, and training efficiency, as has been done in vision and auditory research [77, 78]. In addition, they could develop online augmentation techniques, such as those using Generative Adversarial Networks (GANs) [79], similar to those employed in vision and auditory research [78, 80]. Such advancements could accelerate the development of computational models for haptics by enabling real-time data generation and refinement. Finally, our demonstration of mechanoreceptive processing and dual-stream neural networks offers a foundation for future prediction models of Tactons. Future work can extend these models to predict specific metaphors conveyed through Tactons, such as a heartbeat or tapping, by collecting metaphor ratings [30]. Researchers could also extend VibNet to predict sensations and emotions elicited by vibrotactile grids [81, 82] or by multimodal feedback systems, including thermal feedback and force feedback.
Moreover, future studies could extend prediction models beyond mechanical vibrations to other stimuli in emerging haptic technologies, such as surface electrovibrations or mid-air ultrasound vibrations. Such models would be more versatile and applicable to a broader range of haptic interfaces and experiences.

VIII Conclusion

Models for predicting vibrotactile sensations and emotions offer new opportunities for designers and researchers. We proposed a framework grounded in the perception and cognition mechanisms of human haptic processing. We hope our framework will help designers and researchers quickly prototype rich and diverse haptic feedback that conveys target roughness and emotions, accelerating the integration of haptics into various user applications.

References

- [1] R. F. Friesen and Y. Vardar, “Perceived realism of virtual textures rendered by a vibrotactile wearable ring display,” IEEE Transactions on Haptics, 2023.

- [2] M. Schirmer, J. Hartmann, S. Bertel, and F. Echtler, “Shoe me the way: A shoe-based tactile interface for eyes-free urban navigation,” in Proceedings of the 17th International Conference on Human-Computer Interaction with Mobile Devices and Services, 2015, pp. 327–336.

- [3] M. Lee, G. Bruder, and G. F. Welch, “Exploring the effect of vibrotactile feedback through the floor on social presence in an immersive virtual environment,” in 2017 IEEE Virtual Reality (VR). IEEE, 2017, pp. 105–111.

- [4] J. Seo, S. Mun, J. Lee, and S. Choi, “Substituting motion effects with vibrotactile effects for 4d experiences,” in Proceedings of the 2018 CHI conference on human factors in computing systems, 2018, pp. 1–6.

- [5] E. Pezent, A. Israr, M. Samad, S. Robinson, P. Agarwal, H. Benko, and N. Colonnese, “Tasbi: Multisensory squeeze and vibrotactile wrist haptics for augmented and virtual reality,” in 2019 IEEE World Haptics Conference (WHC). IEEE, 2019, pp. 1–6.

- [6] J. Seo and S. Choi, “Perceptual analysis of vibrotactile flows on a mobile device,” IEEE transactions on haptics, vol. 6, no. 4, pp. 522–527, 2013.

- [7] Y. Gaffary and A. Lécuyer, “The use of haptic and tactile information in the car to improve driving safety: A review of current technologies,” Frontiers in ICT, vol. 5, p. 5, 2018.

- [8] J. Ryu, J. Chun, G. Park, S. Choi, and S. H. Han, “Vibrotactile feedback for information delivery in the vehicle,” IEEE Transactions on Haptics, vol. 3, no. 2, pp. 138–149, 2010.

- [9] L. M. Brown, Tactons: structured vibrotactile messages for non-visual information display. University of Glasgow (United Kingdom), 2007.

- [10] L. Turchet, P. Burelli, and S. Serafin, “Haptic feedback for enhancing realism of walking simulations,” IEEE transactions on haptics, vol. 6, no. 1, pp. 35–45, 2012.

- [11] Y. Ju, D. Zheng, D. Hynds, G. Chernyshov, K. Kunze, and K. Minamizawa, “Haptic empathy: Conveying emotional meaning through vibrotactile feedback,” in Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems, 2021, pp. 1–7.

- [12] G. Changeon, D. Graeff, M. Anastassova, and J. Lozada, “Tactile emotions: A vibrotactile tactile gamepad for transmitting emotional messages to children with autism,” in Haptics: Perception, Devices, Mobility, and Communication: International Conference, EuroHaptics 2012, Tampere, Finland, June 13-15, 2012. Proceedings, Part I. Springer, 2012, pp. 79–90.

- [13] Y.-W. Park, K.-M. Baek, and T.-J. Nam, “The roles of touch during phone conversations: long-distance couples’ use of poke in their homes,” in Proceedings of the SIGCHI conference on human factors in computing systems, 2013, pp. 1679–1688.

- [14] Y. Yoo, T. Yoo, J. Kong, and S. Choi, “Emotional responses of tactile icons: Effects of amplitude, frequency, duration, and envelope,” in 2015 IEEE World Haptics Conference (WHC). IEEE, 2015, pp. 235–240.

- [15] H. Seifi and K. E. Maclean, “A first look at individuals’ affective ratings of vibrations,” in 2013 World Haptics Conference (WHC). IEEE, 2013, pp. 605–610.

- [16] H. Seifi, K. Zhang, and K. E. MacLean, “Vibviz: Organizing, visualizing and navigating vibration libraries,” in 2015 IEEE World Haptics Conference (WHC). IEEE, 2015, pp. 254–259.

- [17] H. Seifi and K. E. MacLean, “Exploiting haptic facets: Users’ sensemaking schemas as a path to design and personalization of experience,” International Journal of Human-Computer Studies, vol. 107, pp. 38–61, 2017.

- [18] H. Z. Tan, N. I. Durlach, C. M. Reed, and W. M. Rabinowitz, “Information transmission with a multifinger tactual display,” Perception & psychophysics, vol. 61, no. 6, pp. 993–1008, 1999.

- [19] K. Cho, B. Van Merriënboer, C. Gulcehre, D. Bahdanau, F. Bougares, H. Schwenk, and Y. Bengio, “Learning phrase representations using rnn encoder-decoder for statistical machine translation,” arXiv preprint arXiv:1406.1078, 2014.

- [20] C. Lim and G. Park, “Can a computer tell differences between vibrations?: Physiology-based computational model for perceptual dissimilarity prediction,” in Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, 2023, pp. 1–15.

- [21] G. Park and S. Choi, “Perceptual space of amplitude-modulated vibrotactile stimuli,” in 2011 IEEE world haptics conference. IEEE, 2011, pp. 59–64.

- [22] D. Ternes and K. E. MacLean, “Designing large sets of haptic icons with rhythm,” in Haptics: Perception, Devices and Scenarios: 6th International Conference, EuroHaptics 2008 Madrid, Spain, June 10-13, 2008 Proceedings 6. Springer, 2008, pp. 199–208.

- [23] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 770–778.

- [24] S. Choi and K. J. Kuchenbecker, “Vibrotactile display: Perception, technology, and applications,” Proceedings of the IEEE, vol. 101, no. 9, pp. 2093–2104, 2012.

- [25] S. J. Lederman and R. L. Klatzky, “Haptic perception: A tutorial,” Attention, Perception, & Psychophysics, vol. 71, no. 7, pp. 1439–1459, 2009.

- [26] C. Lim, G. Kim, Y. Shetty, T. McDaniel, H. Seifi, and G. Park, “Emotional and sensory ratings of vibration tactons in the lab and crowdsourced settings,” Available at SSRN 4785071, 2024.

- [27] K. E. MacLean, “Foundations of transparency in tactile information design,” IEEE Transactions on Haptics, vol. 1, no. 2, pp. 84–95, 2008.

- [28] I. Hwang and S. Choi, “Perceptual space and adjective rating of sinusoidal vibrations perceived via mobile device,” in 2010 IEEE Haptics Symposium. IEEE, 2010, pp. 1–8.

- [29] A. Israr, H. Z. Tan, and C. M. Reed, “Frequency and amplitude discrimination along the kinesthetic-cutaneous continuum in the presence of masking stimuli,” The Journal of the Acoustical society of America, vol. 120, no. 5, pp. 2789–2800, 2006.

- [30] D. Kwon, R. Abou Chahine, C. Lim, H. Seifi, and G. Park, “Can we crowdsource tacton similarity perception and metaphor ratings?” in Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, 2023, pp. 1–13.

- [31] Y. Yoo, I. Hwang, and S. Choi, “Perceived intensity model of dual-frequency superimposed vibration: Pythagorean sum,” IEEE Transactions on Haptics, vol. 15, no. 2, pp. 405–415, 2022.

- [32] I. Hwang, J. Seo, and S. Choi, “Perceptual space of superimposed dual-frequency vibrations in the hands,” PloS one, vol. 12, no. 1, p. e0169570, 2017.

- [33] L. M. Brown, S. A. Brewster, and H. C. Purchase, “Multidimensional tactons for non-visual information presentation in mobile devices,” in Proceedings of the 8th conference on Human-computer interaction with mobile devices and services, 2006, pp. 231–238.

- [34] R. Abou Chahine, D. Kwon, C. Lim, G. Park, and H. Seifi, “Vibrotactile similarity perception in crowdsourced and lab studies,” in International Conference on Human Haptic Sensing and Touch Enabled Computer Applications. Springer, 2022, pp. 255–263.

- [35] J. Tan, Y. Ge, X. Sun, Y. Zhang, and Y. Liu, “User experience of tactile feedback on a smartphone: Effects of vibration intensity, times and interval,” in Cross-Cultural Design. Methods, Tools and User Experience: 11th International Conference, CCD 2019, Held as Part of the 21st HCI International Conference, HCII 2019, Orlando, FL, USA, July 26–31, 2019, Proceedings, Part I 21. Springer, 2019, pp. 397–406.

- [36] L. M. Brown, S. A. Brewster, and H. C. Purchase, “A first investigation into the effectiveness of tactons,” in First joint eurohaptics conference and symposium on haptic interfaces for virtual environment and teleoperator systems. world haptics conference. IEEE, 2005, pp. 167–176.

- [37] O. S. Schneider and K. E. MacLean, “Studying design process and example use with macaron, a web-based vibrotactile effect editor,” in 2016 IEEE Haptics Symposium (Haptics). IEEE, 2016, pp. 52–58.

- [38] J. B. van Erp, M. M. Spapé et al., “Distilling the underlying dimensions of tactile melodies,” in Proceedings of Eurohaptics, vol. 2003, 2003, pp. 111–120.

- [39] A. Israr, S. Zhao, K. Schwalje, R. Klatzky, and J. Lehman, “Feel effects: enriching storytelling with haptic feedback,” ACM Transactions on Applied Perception (TAP), vol. 11, no. 3, pp. 1–17, 2014.

- [40] E. B. Goldstein, Sensation and perception. Wadsworth/Thomson Learning, 1989.

- [41] E. Kandel, “Principles of neural science,” 2000.

- [42] G. A. Gescheider, Psychophysics: the fundamentals. Psychology Press, 2013.

- [43] J. Ryu, “Psychophysical model for vibrotactile rendering in mobile devices,” Presence, vol. 19, no. 4, pp. 364–387, 2010.

- [44] O. Franzén and J. Nordmark, “Vibrotactile frequency discrimination,” Perception & Psychophysics, vol. 17, pp. 480–484, 1975.

- [45] A. K. Goble and M. Hollins, “Vibrotactile adaptation enhances frequency discrimination,” The Journal of the Acoustical Society of America, vol. 96, no. 2, pp. 771–780, 1994.

- [46] G. D. Goff, “Differential discrimination of frequency of cutaneous mechanical vibration.” Journal of experimental psychology, vol. 74, no. 2p1, p. 294, 1967.

- [47] G. A. Gescheider, J. J. Zwislocki, and A. Rasmussen, “Effects of stimulus duration on the amplitude difference limen for vibrotaction,” The Journal of the Acoustical Society of America, vol. 100, no. 4, pp. 2312–2319, 1996.

- [48] L. A. Jones and S. J. Lederman, Human hand function. Oxford university press, 2006.

- [49] M. Hollins, R. Faldowski, S. Rao, and F. Young, "Perceptual dimensions of tactile surface texture: A multidimensional scaling analysis," Perception & psychophysics, vol. 54, pp. 697–705, 1993.

- [50] M. Hollins, S. Bensmaïa, K. Karlof, and F. Young, “Individual differences in perceptual space for tactile textures: Evidence from multidimensional scaling,” Perception & psychophysics, vol. 62, pp. 1534–1544, 2000.

- [51] I. Soufflet, M. Calonnier, and C. Dacremont, “A comparison between industrial experts’ and novices’ haptic perceptual organization: A tool to identify descriptors of the handle of fabrics,” Food quality and preference, vol. 15, no. 7-8, pp. 689–699, 2004.

- [52] W. M. B. Tiest and A. M. Kappers, “Analysis of haptic perception of materials by multidimensional scaling and physical measurements of roughness and compressibility,” Acta psychologica, vol. 121, no. 1, pp. 1–20, 2006.

- [53] J. A. Russell, “A circumplex model of affect.” Journal of personality and social psychology, vol. 39, no. 6, p. 1161, 1980.

- [54] S. Guest, J. M. Dessirier, A. Mehrabyan, F. McGlone, G. Essick, G. Gescheider, A. Fontana, R. Xiong, R. Ackerley, and K. Blot, “The development and validation of sensory and emotional scales of touch perception,” Attention, Perception, & Psychophysics, vol. 73, pp. 531–550, 2011.

- [55] O. S. Schneider, H. Seifi, S. Kashani, M. Chun, and K. E. MacLean, “Hapturk: Crowdsourcing affective ratings of vibrotactile icons,” in Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, 2016, pp. 3248–3260.

- [56] V. Chu, I. McMahon, L. Riano, C. G. McDonald, Q. He, J. M. Perez-Tejada, M. Arrigo, T. Darrell, and K. J. Kuchenbecker, “Robotic learning of haptic adjectives through physical interaction,” Robotics and Autonomous Systems, vol. 63, pp. 279–292, 2015.

- [57] B. A. Richardson and K. J. Kuchenbecker, “Learning to predict perceptual distributions of haptic adjectives,” Frontiers in Neurorobotics, vol. 13, p. 116, 2020.

- [58] Y. Gao, L. A. Hendricks, K. J. Kuchenbecker, and T. Darrell, “Deep learning for tactile understanding from visual and haptic data,” in 2016 IEEE international conference on robotics and automation (ICRA). IEEE, 2016, pp. 536–543.

- [59] M. I. Awan, W. Hassan, and S. Jeon, “Predicting perceptual haptic attributes of textured surface from tactile data based on deep cnn-lstm network,” in Proceedings of the 29th ACM Symposium on Virtual Reality Software and Technology, 2023, pp. 1–9.

- [60] F. Ito and K. Takemura, “A model for estimating tactile sensation by machine learning based on vibration information obtained while touching an object,” Sensors, vol. 21, no. 23, p. 7772, 2021.

- [61] B. A. Richardson, Y. Vardar, C. Wallraven, and K. J. Kuchenbecker, “Learning to feel textures: Predicting perceptual similarities from unconstrained finger-surface interactions,” IEEE Transactions on Haptics, vol. 15, no. 4, pp. 705–717, 2022.

- [62] G. Park and K. J. Kuchenbecker, “Objective and subjective assessment of algorithms for reducing three-axis vibrations to one-axis vibrations,” in 2019 IEEE World Haptics Conference (WHC). IEEE, 2019, pp. 467–472.

- [63] R. Hassen and E. Steinbach, “Subjective evaluation of the spectral temporal similarity (st-sim) measure for vibrotactile quality assessment,” IEEE Transactions on Haptics, vol. 13, no. 1, pp. 25–31, 2019.

- [64] E. Muschter, A. Noll, J. Zhao, R. Hassen, M. Strese, B. Gülecyüz, S.-C. Li, and E. Steinbach, “Perceptual quality assessment of compressed vibrotactile signals through comparative judgment,” IEEE Transactions on Haptics, vol. 14, no. 2, pp. 291–296, 2021.

- [65] A. Noll, M. Hofbauer, E. Muschter, S.-C. Li, and E. Steinbach, “Automated quality assessment for compressed vibrotactile signals using multi-method assessment fusion,” in 2022 IEEE Haptics Symposium (HAPTICS). IEEE, 2022, pp. 1–6.

- [66] C. Lim, G. Park, and H. Seifi, “Designing distinguishable mid-air ultrasound tactons with temporal parameters,” in Proceedings of the CHI Conference on Human Factors in Computing Systems, 2024, pp. 1–18.

- [67] I. Goodfellow, Deep Learning. MIT Press, 2016.

- [68] J. Shen, R. Pang, R. J. Weiss, M. Schuster, N. Jaitly, Z. Yang, Z. Chen, Y. Zhang, Y. Wang, R. Skerry-Ryan et al., "Natural tts synthesis by conditioning wavenet on mel spectrogram predictions," in 2018 IEEE international conference on acoustics, speech and signal processing (ICASSP). IEEE, 2018, pp. 4779–4783.

- [69] H. Meng, T. Yan, F. Yuan, and H. Wei, "Speech emotion recognition from 3d log-mel spectrograms with deep learning network," IEEE access, vol. 7, pp. 125868–125881, 2019.

- [70] A. Krizhevsky, I. Sutskever, and G. E. Hinton, “Imagenet classification with deep convolutional neural networks,” Advances in neural information processing systems, vol. 25, 2012.

- [71] J. K. Bowmaker and H. Dartnall, “Visual pigments of rods and cones in a human retina.” The Journal of physiology, vol. 298, no. 1, pp. 501–511, 1980.

- [72] K. O. Johnson, “The roles and functions of cutaneous mechanoreceptors,” Current opinion in neurobiology, vol. 11, no. 4, pp. 455–461, 2001.

- [73] K. Simonyan and A. Zisserman, “Very deep convolutional networks for large-scale image recognition,” arXiv preprint arXiv:1409.1556, 2014.

- [74] S. Sheikholeslami, “Ablation programming for machine learning,” 2019.

- [75] A. Vaswani et al., “Attention is all you need,” Advances in Neural Information Processing Systems, 2017.

- [76] S. Panëels, M. Anastassova, and L. Brunet, “TactiPEd: Easy prototyping of tactile patterns,” in Human-Computer Interaction–INTERACT 2013: 14th IFIP TC 13 International Conference, Cape Town, South Africa, September 2–6, 2013, Proceedings, Part II. Springer, 2013, pp. 228–245.

- [77] P. Y. Simard, D. Steinkraus, J. C. Platt et al., “Best practices for convolutional neural networks applied to visual document analysis,” in ICDAR, vol. 3, Edinburgh, 2003.

- [78] T. Ko, V. Peddinti, D. Povey, and S. Khudanpur, “Audio augmentation for speech recognition,” in Interspeech, 2015, p. 3586.

- [79] I. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. Courville, and Y. Bengio, “Generative adversarial networks,” Communications of the ACM, vol. 63, no. 11, pp. 139–144, 2020.

- [80] E. D. Cubuk, B. Zoph, D. Mane, V. Vasudevan, and Q. V. Le, “AutoAugment: Learning augmentation strategies from data,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 113–123.

- [81] O. S. Schneider, A. Israr, and K. E. MacLean, “Tactile animation by direct manipulation of grid displays,” in Proceedings of the 28th Annual ACM Symposium on User Interface Software & Technology, 2015, pp. 21–30.

- [82] A. Israr and I. Poupyrev, “Tactile Brush: Drawing on skin with a tactile grid display,” in Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, 2011, pp. 2019–2028.


Chungman Lim received the B.S. degree in statistics from the University of Seoul. He is currently a Ph.D. candidate in the Artificial Intelligence Graduate School at the Gwangju Institute of Science and Technology (GIST). His research interests lie at the intersection of human-computer interaction, computational interaction, and haptics.


Gyeongdeok Kim received B.S. degrees in both mechanical engineering and computer science and engineering from Korea University of Technology and Education, Cheonan, South Korea, in 2020. He is a Ph.D. student at the Gwangju Institute of Science and Technology, Gwangju, South Korea. His research interests include accessible systems for visually impaired people, haptics, and human-computer interaction.


Su-Yeon Kang received the B.S. degree in computer science and engineering from Gyeongsang National University, Jinju, South Korea, in 2024. She is a master’s student at the Gwangju Institute of Science and Technology, Gwangju, South Korea. Her research interests include human-computer interaction, haptics, and artificial intelligence.


Hasti Seifi is an assistant professor in the School of Computing and Augmented Intelligence at Arizona State University. Previously, she was an assistant professor at the University of Copenhagen (2020–2022) and a postdoctoral research fellow at the Max Planck Institute for Intelligent Systems (2017–2020). She received her Ph.D. in computer science from the University of British Columbia in 2017, her M.Sc. from Simon Fraser University in 2011, and her B.Sc. from the University of Tehran in 2008. Her research interests lie at the intersection of haptics, human-computer interaction, social robotics, and accessibility. Her work was recognized by an NSF CAREER award (2024), an NSERC postdoctoral fellowship (2018), and the EuroHaptics best Ph.D. thesis award (2017).


Gunhyuk Park received the B.S. degrees in computer science and engineering and in electronic and electrical engineering in 2007, and the Ph.D. degree in computer science and engineering in 2017, from the Pohang University of Science and Technology, Pohang, South Korea. He is currently an Assistant Professor at the AI Graduate School, Gwangju Institute of Science and Technology, Gwangju, South Korea. He was a Postdoctoral Researcher with the Max Planck Institute for Intelligent Systems from 2017 to 2019. His research interests include haptic rendering and perception, mainly of tactile systems. He has worked on haptics for vibrotactile feedback perception, illusory vibrotactile feedback, automobiles, and mobile phones.