Bures Joint Distribution Alignment with Dynamic Margin for Unsupervised Domain Adaptation

Abstract

Unsupervised domain adaptation (UDA) is one of the prominent tasks of transfer learning, and it provides an effective approach to mitigate the distribution shift between the labeled source domain and the unlabeled target domain. Prior works mainly focus on aligning the marginal distributions or the estimated class-conditional distributions. However, the joint dependency among the feature and the label is crucial for the adaptation task and is not fully exploited. To address this problem, we propose the Bures Joint Distribution Alignment (BJDA) algorithm which directly models the joint distribution shift based on the optimal transport theory in the infinite-dimensional kernel spaces. Specifically, we propose a novel alignment loss term that minimizes the kernel Bures-Wasserstein distance between the joint distributions. Technically, BJDA can effectively capture the nonlinear structures underlying the data. In addition, we introduce a dynamic margin in contrastive learning phase to flexibly characterize the class separability and improve the discriminative ability of representations. It also avoids the cross-validation procedure to determine the margin parameter in traditional triplet loss based methods. Extensive experiments show that BJDA is very effective for the UDA tasks, as it outperforms state-of-the-art algorithms in most experimental settings. In particular, BJDA improves the average accuracy of UDA tasks by 2.8% on Adaptiope, 1.4% on Office-Caltech10, and 1.1% on ImageCLEF-DA.

Index Terms:

Unsupervised domain adaptation, Joint distribution, Optimal transportation, Contrastive learning, Dynamic margin.I Introduction

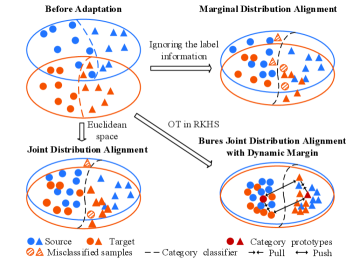

The generalization ability across datasets is a long-standing goal of machine learning models. While deep neural networks have demonstrated the powerful generalization ability on a number of tasks, these remarkable gains largely rely on the availability of sufficient labeled training data. Annotating large amounts of data is unfortunately difficult or expensive in real-world applications. One natural approach [1] is to transfer the learned knowledge from one dataset to another new dataset. However, various datasets are collected under different conditions. Thus, the distribution shift across datasets often leads to significant performance degradation, as shown in the top left of Fig. 1. Unsupervised domain adaptation (UDA) [2, 3, 4] specifically deals with this situation, and learns a model for the unlabeled test data (i.e., the target domain) by transferring knowledge from the labeled training data (i.e., the source domain).

UDA has been investigated for many fields including object recognition [5, 6, 7], medical image diagnosis [8, 9], and natural language processing [10, 11]. Let be the input space and the label space, denote the source and target samples, denote the corresponding labels, where is unavailable during training. As shown in the top right of Fig. 1, many previous works focus on reducing the difference between the marginal feature distributions of the two domains, i.e., and , as small as possible. In some cases [12, 13], statistical moments such as expectation and covariance matrix are used to model the difference between the feature distributions of the two domains. Then, this idea has been extended to use some statistical distance to measure the discrepancy of feature distributions from various domains, such as the maximum mean discrepancy (MMD) [14, 15, 16, 17], or the Wasserstein distance [18]. Some works propose the domain-adversarial strategy [19, 20, 21] to align the feature distributions with a domain discriminator. While the above approaches try to learn domain-invariant features between source and target domains, they rarely take the label information into consideration during the adaptation process. Another line of research [22, 23] involves the conditional distributions of labels, i.e., and , which attempts to minimize the difference in both the marginal feature distributions and the conditional distributions between the source and the target domains. Since and are very difficult to estimate, they usually use the class-conditional distributions and instead, which limits their effectiveness.

Motivated by the fact that the features and labels are jointly drawn from certain high-dimensional probability distributions, some methods have been designed to directly address the difference between the joint distributions of the two domains, as shown in the bottom left of Fig. 1. Courty et al. [10] assume that there exists an optimal transport transformation between the joint distributions across different domains, i.e., and , and propose joint distribution optimal transport (JDOT) to align the joint distributions. Damodaran et al. [24] incorporate JDOT into deep neural networks and propose deep joint distribution optimal transport (DeepJDOT) for UDA. Although they have shown promising performance in UDA, there are still some limitations. On the one hand, they rely on the alternate optimization strategy for the optimal transport plan and the classifier, which is unstable and hard to train. On the other hand, these works typically reduce the distribution discrepancy in the Euclidean space, which may ignore the nonlinear structures underlying the data.

To address these issues mentioned above, we introduce a novel Bures joint Distribution Alignment (BJDA) approach based on the optimal transport (OT) theory (see the bottom right of Fig. 1). The key idea is to model the joint distribution shift in the reproducing kernel Hilbert space (RKHS). In addition, we introduce a dynamic margin based contrastive learning phase to improve the category discriminative ability of representations. Importantly, we use an equivalent and computable formulation [25] of the kernel Bures-Wasserstein distance for the discrepancy between the joint distributions by generalizing the original optimal transport problem in Euclidean space to kernel space. Thus, our method can capture the nonlinear structures underlying the data, and it provides an efficient and end-to-end trainable approach for reducing the joint distribution shift between the source domain and the target domain.

The main contributions of this paper are summarized as follows:

-

1.

A Bures joint distribution alignment phase is proposed, which minimizes the discrepancy of joint distributions between the source and target domains in the infinite-dimensional kernel space.

-

2.

A dynamic margin based contrastive learning phase is proposed, which enhances the discriminative ability of the features and avoids cross-validation to determine the margin parameters.

-

3.

The proposed BJDA method is evaluated on several object classification tasks, and it achieves state-of-the-art classification performance on four publicly available datasets.

The remainder of the paper is organized as follows. In Section II, some closely related works are reviewed. Algorithmic details of our BJDA method are presented in Section III. Section IV provides extensive experimental results for validating the superiority of BJDA. Finally, we conclude the paper with a brief discussion in Section V.

II Related Works

In this section, we briefly review some recent works about UDA by considering the marginal distribution and the joint distribution, respectively. While many previous works exist, we focus primarily on deep learning-based methods. In light of our method is based on the optimal transport theory, we also review some progress about OT.

II-A Unsupervised Domain Adaptation with Marginal Distribution Shift

Most recent UDA methods are motivated by the seminal theoretical work of Ben-David et al. [26], which proposes the -distance to estimate the discrepancy of feature marginal distributions between source and target domains. Then Blitzer et al. [27] develop the -distance to deduce a uniform learning bound, which minimizes the convex combination of empirical risks among various domains. A thorough theoretical investigation for UDA is presented in [28] in terms of the dimension. In light of these foundation works, minimizing the domain distribution discrepancy has been used extensively for UDA. The covariance matrix is used in several works [12, 13] to model the difference between the feature distributions of various domains. Ren et al. [6] exploit a low-rank representation to reduce the marginal distribution shift and stress the group compactness of feature representations. Li et al. [29] design an adaptive batch normalization operation to align the feature distributions of different domains. Luo et al. [30] use a sequence of latent manifolds to describe the diverse domains and employ the manifold metrics to model the domain discrepancy. While methods discussed above are mainly based on distribution discrepancy, there have been several works to address the problem of UDA by applying adversarial learning. Ganin et al. [19] introduce domain adversarial neural network (DANN) to align the feature distributions with a domain discriminator via the adversarial mechanism. Tzeng et al. [31] use separate feature extraction networks for diverse domains and train the target domain adversarially until it is adapted to the source domain. Satio et al. [32] propose maximum classifier discrepancy (MCD), which utilizes two category classifiers to simulate a domain discriminator and train the feature generator in an adversarial fashion. These approaches focus on learning a domain-invariant feature representation among the source and target domains, the available label information from the source domain is not fully exploited during the adaptation process.

II-B Unsupervised Domain Adaptation with Joint Distribution Shift

Domain shift usually exists both marginal distributions and conditional distributions. Thus, it is more important to investigate the domain difference among the joint distributions. To account for the label information, Li et al. [33] propose a novel domain distance, Maximum Density Divergence (MDD), which measures both the inter-domain divergence and the intra-class density. Li et al. [34] also propose Adaptation by Adversarial Attacks (AAA), which addresses the UDA task from the view of the adversarial attack, and generalizes the UDA task to the scenario in which the source data or the target data is absent during training. Then, Faster Domain Adaptation (FDA) [35] is proposed, which adaptively selects the number of neural network layers for various samples and significantly speeds up the adaptation process. Li et al. [36] further propose Heterogeneous Domain Adaptation (HDA), which uses dictionary learning to handle heterogeneous feature spaces with different dimensions, and minimizes the marginal distribution gaps across different domains. Zhang et al. [37] use a domain discriminator to align the marginal distribution with adversarial learning, and develop a class-wise divergence to address the gap between conditional distributions. Du et al. [38] align the marginal distribution with the domain discriminator, it also utilizes the disagreement of two category classifiers to measure the discrepancy of conditional distributions. Yu et al. [39] use two domain discriminators, one for the marginal distributions, and the other for the conditional distributions. Wang et al. [40] propose Dynamic Distribution Adaptation (DDA) to address the different effects of marginal and conditional distributions in the adaptation process. Wang et al. [41] train a domain-invariant classifier in Grassmann manifold and minimizes the structural risk. Luo et al. [42] propose a category-level adversarial network to enforce local semantic consistency. Then, Luo et al. [43] introduce a novel Significance-aware Information Bottleneck (SIB) to improve the former-mentioned category-level adaptation. Luo et al. [44] further propose a novel Significance Aware Layer, which uses the channel-wise significance of each semantic feature to balance the information from different categories. Although these works take the label information into consideration, they align the marginal distributions and the conditional distributions separately.

Long et al. [22] propose Joint Distribution Adaptation (JDA) by aligning both the marginal feature distributions and the conditional distributions in a dimensionality reduction procedure. Chen et al. [23] exploit nonlinear projections to match the projected distributions. Long et al. [45] propose the Joint Maximum Mean Discrepancy (JMMD) to measure the discrepancy of the joint distributions of feature representations from different layers in the neural network between diverse domains. Li et al. [46] propose to match the marginal and conditional distributions via the maximum mean discrepancy (MMD) distance. Long et al. [47] develop a multilinear map to model the interactions across the feature and label predictions. Zhang et al. [20] propose a symmetric framework to perform domain confusion both on label-level and feature-level. Gu et al. [48] take the label information into consideration and propose to correct the pseudo-labels based on the feature distance. Ni et al. [49] propose to match both the marginal distributions and conditional distributions by training a domain-invariant kernel. Courty et al. [10] investigate an optimal transport transformation to align the joint distributions between different domains and propose joint distribution optimal transport (JDOT). Damodaran et al. [24] incorporate JDOT with deep neural networks and propose deep joint distribution optimal transport (DeepJDOT). Although these works have shown promising performance in UDA, some of them reduce the distribution discrepancy in the Euclidean space, which may ignore the nonlinear structures underlying the data, and the others usually train the deep neural networks and the optimal transport task alternately, which is unstable and hard to train.

II-C UDA with Optimal Transport

Optimal transportation (OT) [50] has been an important topic in both the computer vision and machine learning communities. OT investigates how to effectively transport a probability distribution to another one, and the total cost during the transportation is related to a distance between diverse probability distributions. Assume that we are given two probability measures and , defined respectively on some measure spaces and . The cost function defined on depicts how much it costs to transport a unit of probability mass from the point to the point , where and . The transport coupling is a joint probability measure which can be interpreted as the amount of probability mass transported from the point to the point . The optimal transport cost between the two measures and defines the Wasserstein distance [50], which is denoted as

| (1) |

Courty et al. [51] propose a regularized optimal transportation algorithm to perform the alignment of the feature representations among the source and target domains. Yang et al. [52] present a novel robust regression scheme by integrating OT with convex regularization terms. Li et al. [18] use an attention-aware transport distance to directly minimize the domain discrepancy. Usually, it is complicated to compute the Wasserstein distance as it requires for solving a linear programming task iteratively. Thus, it is not straightforward to incorporate the Wasserstein distance with deep neural networks. To address this issue, Bhatia et al. [53] extend the Wasserstein distance to the Bures-Wasserstein distance, which is easy and computable. Let be the set of all PSD matrices of size . The Bures-Wasserstein distance [53] between two probability measures and is defined as

| (2) |

where , are the covariance matrices of and , respectively. The original OT problem is discussed in the Euclidean space. Recently, several works [25, 4] generalize the original optimal transport method to infinite-dimensional feature spaces. An equivalent and computable closed-form formulation [25] of the Kantorovich optimal transport problem is provided via the kernel methods, which provides a probable solution for measuring the discrepancy among diverse distributions. The theorem in [25] states that if and are two Gaussian measures with the same expectation, the Wasserstein distance is just the corresponding Bures-Wasserstein distance between their covariance matrices on the manifold of Positive Semi-Definite (PSD) matrices.

III Bures joint Distribution Alignment

In this section, we describe the BJDA algorithm in details. We first present basic formulation for the UDA problem. Then we introduce the Bures joint distribution alignment phase (loss term) and the dynamic margin based contrastive learning phase.

III-A Problem Formulation

Without loss of generality, we consider a -class problem as an example. Let be the input space and the label space, where . denote the source and target samples, denote the label of source and target samples, where is not available during training. We define a domain as a pair consisting of a joint distribution on the input space and a labeling function . and denote the joint distributions of the source and target domains, respectively. Our goal is to learn effective features such that the model also makes accurate predictions on the target domain.

III-B Bures joint Distribution Alignment Phase

Inspired by recent works about the joint distribution alignment for UDA [10, 24] as well as new proceedings [25] on the optimal transport theory in RKHS, we propose to align the joint distributions of the two domains in the infinite-dimensional kernel spaces. The architecture is depicted by Fig. 2. Our model consists of a feature extractor with parameters , a category classifier with parameters . The feature extractor maps the samples to the representation space . and represent the subspace for and , respectively. The category classifier outputs the -dimensional probability prediction vector for the input representation. The optimal coupling between the joint distributions and is denoted as , and we can get two sub-couplings and . Since is unavailable during training, we assign soft pseudo-labels to the unlabeled , i.e., . We use the following theorems to model the discrepancy between the joint distributions of the two domains.

Theorem 1 [50]. Let () and () be two Polish probability spaces; let and be two upper semicontinuous functions such that , . Let be a lower semicontinuous cost function, such that for all , . Then there is a coupling of which minimizes the total cost among all possible couplings .

Theorem 2 [50]. Any sub-coupling is still optimal.

Theorem 1 states the existence of an optimal coupling. Theorem 2 declares that any induced sub-coupling is also optimal.

In light of the above theorems, we can compute the squared Wasserstein distance between joint distributions as follows:

| (3) |

where , , and .

The original OT problem is discussed in the Euclidean space. It is widely believed that the kernel methods are effective in exploring those complex data with nonlinear structures. Thus, the Bures-Wasserstein distance corresponding to the original OT problem is generalized to the infinite-dimensional settings, and the kernel Bures-Wasserstein distance [25] between the covariance operators in RKHS is induced.

Denote a nonempty set as , and let be a Hilbert space of -valued function defined on . Let be a positive definite kernel on , and be the reproducing kernel Hilbert space generated by . Define the implicit feature map as . Let and be two sample matrices from two probability measures and , respectively. Let and be the mapped data matrices. Let , and be the kernel matrices defined by ,, and . Let and be two centering matrices. The kernel Bures-Wasserstein distance [25] is expressed as:

| (4) |

where denotes the nuclear norm, i.e., , and is the singular values of matrix . This is an equivalent and computable formulation [25] of the kernel Bures-Wasserstein distance, the form of which is fully determined by the kernel function.

In order to exploit the capability of capturing nonlinear structures of kernel methods, we propose to match the joint distributions of source and target domains in RKHS. Specifically, we incorporate the formulation of the kernel Bures-Wasserstein distance and propose the following Bures joint distribution alignment loss

| (5) |

where the kernel matrices follow the definition of Eq. (4), such as ,. We use the Gauss kernel function in this paper, i.e., , where is set as the mean of all the squared Euclidean distance .

Our proposed Bures joint distribution alignment loss is differentiable by means of the closed form solutions, so we can directly model the joint distribution shift in the reproducing kernel Hilbert space. We also train the feature extractor and the category classifier with the cross-entropy loss on the source domain, i.e.,

| (6) |

III-C Contrastive Learning with Dynamic Margin

The goal of UDA is to train a model which makes accurate and robust predictions for the unlabeled target data. Therefore, enhancing the discriminative ability of the feature representations between categories may be of utmost importance. Recently, some works about contrastive learning are proposed. Wang et al. [54] use self-supervised learning method to reduce the discrepancy across domains. Park et al. [55] employ the mutual information between a feature and its label to train class-level discriminative features. Both [54] and [55] are based on the InfoNCE Loss [56], which minimizes the distance of all positive pairs relative to negative pairs, and they may probably encounter the potential risk of overfitting. Vikas et al. [57] propose a method for data augmentation, which mixes samples either at the input or hidden-state levels to create positive and negative samples. But those synthesis samples will introduce the Mixup-noise [57]. Intuitively, representations belonging to the same category should be located nearby in the representation space, and representations belonging to the different categories should be pushed far away. The triplet loss [58, 59, 60, 61, 62] is effective in achieving the desired goal. Let denote the feature representation of a sample , the triplet loss is defined as

| (7) |

where represents a fixed margin value, denotes the feature representation that has the same category label with respect to , denotes the feature representation with different category label. We try to incorporate the triplet loss into our method, and train the network using the Bures joint distribution alignment loss, the cross-entropy loss, and the triplet loss. The experimental settings are described in Section IV, and is termed as BJDA( )+. However, the experiment results show some limitations. As shown in Fig. 3, different margin values have a significant influence on the performance. It motivates us to find a dynamic margin to avoid the tedious task of determining the margin by cross-validation. On the other hand, the fixed margin may be an overly strict objective, as it is difficult to make all the clusters keep the same margin far away from each other in the high-dimensional representation space. In this work, we propose a dynamic margin based contrastive learning phase to address these issues and preserve the category discriminative information from the source domain.

Naturally, the prototypes, which play the role of each class center, contain much more robust category discriminative information than the sample points [63]. Therefore, we use the prototypes to guide the feature extractor to generate a stable and category discriminative feature space. Specifically, the representations that have the same label should get close to the corresponding category prototype and keep a reasonable distance far away from the category prototypes that have the different labels. Therefore, we propose to learn a dynamic margin among various clusters under the guidance of category prototypes and train a more robust and category-separable representation space.

Let denote the prototype of the -th category, is the prototype of the category , where is different from and . We propose the following dynamic margin contrastive (DMC) loss:

| (8) |

where , is the prediction probability of for the category , denotes the Euclidean distance between two feature vectors. The dynamic margin will not be a fixed value, but has relation to the output of the network. When the margin gets a small value, it means that the category classifier outputs a confident prediction for , and the feature representation is far away from the decision categorical surface. It seems that is a tractable sample for optimizing the representation space, thus we can assign a small margin between and . On the contrary, when gets an uncertain prediction, we should assign a larger margin between and . The comparison between the original triplet loss and the proposed DMC loss is shown in Fig. 4. The triplet loss pulls the positive pairs (i.e., samples that have the same category label) close to each other and pushes the negative pairs (i.e., samples that have the different category label) far away from each other, but the margin is a fixed value which is hard to determine. Our DMC loss encourages the feature representations scattering around the center of the corresponding category and seeks to keep a reasonable separation between different clusters.

The prototypes are computed from the source data which have ground truth labels. To generalize the discriminative information from the source domain to the target domain, we compute the DMC loss using both the source and target data. Since labels of the target data are unavailable during training, we assign hard pseudo-labels to the unlabeled , that is,

| (9) |

Although both our DMC and previous works [54, 55, 57] are based on the idea of contrastive learning, their models are different. Specifically, the works [54, 55, 57] search positive samples and negative samples for each sample and compute the distance for all positive pairs and negative pairs, and they assign a fixed margin between clusters. While our DMC only needs to compute the distance between a single sample and two category prototypes, and it introduces a dynamic margin to flexibly characterize the class separability of deep features. Overall, the proposed DMC loss encourages maintaining a reasonable separation across the centers of feature representations belonging to different categories in the feature space. Since the relationship of intra-category compactness and inter-category discrepancy are taken into account, the feature learned for the target data has the better discriminative ability.

III-D Model Training

The objective function of the BJDA method is formulated as

| (10) |

where and are non-negative hyper-parameters.

We use the mini-batch stochastic gradient descent (Mini-batch SGD) [64] to solve the objective function.

The detailed training procedure is shown in Algorithm 1.

Input: Labeled source data , unlabeled target data , maximum iteration , parameters and .

Output: Feature extractor , category classifier .

IV Experimental Results

In this section, we first evaluate our BJDA method on the image classification tasks under UDA settings. Next, we perform ablation study and convergence analysis on BJDA. Then we show the impact of different hyper-parameters.

IV-A Datasets

We use six benchmark datasets, i.e., ImageCLEF-DA, Office-Caltech, Adaptiope, and Refurbished Office-31.

ImageCLEF-DA: The ImageCLEF-DA dataset [45] is a commonly used dataset for UDA. It consists of images from three distinct domains, which are subsets of Caltech-256 (), ImageNet ILSVRC 2012 (), and Pascal VOC 2012 () datasets. It selects 12 classes common to the three public datasets. There are 50 images in each category, and each domain is comprised of the same amount of samples.

Office-Caltech10: The Office-Caltech10 dataset [66] is a dataset widely adopted by UDA methods. The whole dataset contains 2533 images, which consists of 4 domains: Amazon (images downloaded from online websites), DSLR (high-resolution images from digital SLR cameras), Webcam (low-resolution images taken by web cameras), and Caltech-10 (images from Caltech-256 dataset). We use characters , , , to represent these domains for short, respectively. There are 10 common categories of objects in each domain, including monitor, keyboard, headphones, calculator, etc.

Adaptiope: The Adaptiope dataset [67] is a new, large, diverse classification dataset for UDA. It has 3 domains, namely Product, Real life, and Synthetic. Images for the Product domain are real article images from the e-commerce website, and images in the Real life domain are customer review images. For the Synthetic domain, data is collected from artistic websites such as . It is noteworthy that every category has an equal number of images, and each domain contains 12300 images from 123 classes. Fig. 5 presents example images from the Adaptiope dataset. It can be seen that there are large domain gaps among various domains in this challenging dataset, and different backgrounds and colorspaces exist among images from different domains.

Refurbished Office-31: The Refurbished Office-31 dataset[67] is an upgrade version of the commonly used Office31 dataset [68]. In light of there are some systematic errors (i.e., ambiguous ground truth, domain leakage) in the Amazon domain of the original Office31 dataset [68], the author of [67] propose the Refurbished Office-31 dataset by manually cleaning a total of 834 of the 2,817 images in the Amazon domain. It is a collection of images from three different domains, i.e., The Refurbished Amazon, DSLR, and Webcam. We use , , to represent these domains for short, respectively.

Office-Home: It is one of the largest datasets for the UDA tasks in the community [69]. It has samples with 65 categories. It consists of four different domains, namely Art, Clipart, Product, Real-World. We use , , , to represent these domains for short, respectively.

DomainNet: It is a recently proposed benchmark [70] with 0.59 million images, it consists of six different domains, namely Clipart, Infograph, Painting, Quickdraw, Real, and Sketch[70]. Each domain contains 345 categories of objects. We use , , , , , to represent these domains for short, respectively.

We compare our BJDA method against a variety of state-of-the-art UDA methods, including CORAL [12], DANN [19], DAN [71], OT-GL [51], CDAN [47], SymNets [20], ATM [33], RSDA [48]. Results of these methods are quoted from the original papers, or re-implemented under the same UDA settings. In the test phase, test samples are forwarded through the feature extractor and the category classifier . The setting of Source-only is used as a baseline, where all images from source domains are used to train a classifier without matching with the target data.

IV-B Experimental Settings

To make it a fair comparison, we follow the experimental settings of UDA ([20, 71, 33] ). We perform each task for five runs and report the average accuracy and standard deviation. We use the deep features commonly used in existing works for a fair comparison with other UDA methods. Specifically, the Decaf6 [72] features (activations of the 6th fully connected layer of the convolutional neural network, the dimensionality is 4096) are used for the Office-Caltech10 dataset, the ResNet101 [73]features (activations of the penultimate fully connected layer of the convolutional neural network, the dimensionality is 2048) are used for the DomainNet dataset. In light of ResNet50 [73] has been commonly used to extract features or as the backbone of deep learning models in the literature, we use the ResNet50 features (the dimensionality is 2048) for the other three datasets in our experiments. For the feature extractor , we use two-layered fully-connected networks with 1024 neurons in the hidden layers, in which the LeakyReLU is used as the activation function. We set the number of output dimensions of the feature extractor as 512. For the category classifier , we use a fully-connected network. The maximum iteration is set to 300 in all experiments. All the networks are optimized by a Minibatch SGD optimizer with a weight decay of 0.0005 and momentum of 0.9, and the initial learning rate is 0.001. The values of and for experiments in current manuscript are set as (0.5,0.3). We follow the protocol in the community [45, 71, 33] and tune hyper-parameters using transfer cross-validation [74]. All the experiments are implemented using the PyTorch library and are trained with an NVIDIA GeForce TITAN Xp GPU.

IV-C Experimental Results on ImageCLEF-DA

| Method | IP | PI | IC | CI | CP | PC | Avg |

| Source-only | 74.80.3 | 83.90.1 | 91.50.3 | 78.00.2 | 65.50.3 | 91.20.3 | 80.7 |

| CORAL [12] | 76.90.2 | 88.50.3 | 93.60.3 | 86.80.6 | 74.00.3 | 91.60.3 | 85.2 |

| JAN [45] | 76.8 | 88.0 | 94.7 | 89.5 | 74.2 | 91.7 | 85.8 |

| DDC [14] | 74.60.3 | 85.70.8 | 91.10.3 | 82.30.7 | 68.30.4 | 88.80.2 | 81.8 |

| DANN [19] | 75.00.6 | 86.00.3 | 96.20.4 | 87.00.5 | 74.30.5 | 91.50.6 | 85.0 |

| DAN [71] | 75.00.4 | 86.20.2 | 93.30.2 | 84.10.4 | 69.80.4 | 91.30.4 | 83.3 |

| OT-GL [51] | 73.2 | 83.1 | 93.6 | 85.5 | 71.3 | 91.7 | 83.1 |

| DeepJDOT [24] | 79.4 | 86.7 | 93.2 | 82.0 | 69.5 | 91.3 | 83.7 |

| MADA [75] | 75.00.3 | 87.90.2 | 96.00.3 | 88.80.3 | 75.20.2 | 92.20.3 | 85.8 |

| CDAN [47] | 77.70.3 | 90.70.2 | 97.70.3 | 91.30.3 | 74.20.2 | 94.30.3 | 87.7 |

| SymNets [20] | 80.20.3 | 93.60.2 | 97.00.3 | 93.40.3 | 78.70.2 | 96.40.1 | 89.9 |

| RSDA [48] | 79.20.4 | 93.00.2 | 98.30.4 | 93.60.4 | 78.50.3 | 98.20.2 | 90.1 |

| ATM [33] | 80.30.3 | 92.90.4 | 98.60.4 | 93.50.1 | 77.80.3 | 96.70.2 | 90.0 |

| TCM [76] | 79.90.4 | 94.20.2 | 97.80.3 | 93.80.4 | 79.90.4 | 96.90.4 | 90.5 |

| BJDA (ours) | 81.90.4 | 93.80.1 | 97.50.4 | 95.80.2 | 81.90.1 | 96.30.1 | 91.2 |

The classification results for the ImageCLEF-DA dataset are reported in Table I. The results show that our BJDA significantly outperforms all methods on 3 out of 6 UDA tasks and achieves the best average accuracy. There are several interesting observations as shown in Table I. Firstly, BJDA achieves better performance than DeepJDOT [24] which matches the joint distributions using OT and deep neural networks in the Euclidean space. This confirms that performing domain alignment via OT in RKHS can improve the performance of UDA tasks. Secondly, several methods (i.e., MADA [75], CDAN [47], SymNets [20], RSDA [48], ATM [33], TCM [76]) that explicitly or implicitly consider the label information significantly outperform those methods that perform domain alignment with only feature marginal distribution (i.e., DDC [14],DANN [19], DAN [71], OT-GL [51]), which indicates that label information contributes to modeling the multi-modal structure of image data. Thirdly, our BJDA substantially improves the classification accuracy of difficult UDA tasks (e.g., IP, CI, CP), where there exist large domain gaps, which confirms that aligning the joint distributions among various domains is robust to large domain gaps. Finally, our BJDA achieves the best average performance, which demonstrates its effectiveness in modeling a more transferable target classifier.

IV-D Experimental Results on Office-Caltech10

| Method | AC | AD | AW | CA | CD | CW | DA | DC | DW | WA | WC | WD | Avg |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Source-only | 82.7 | 85.4 | 78.3 | 91.5 | 88.5 | 83.1 | 80.6 | 74.6 | 99.0 | 77.0 | 69.6.7 | 100.0 | 84.2 |

| CORAL [12] | 85.3 | 80.8 | 76.3 | 91.1 | 86.6 | 81.1 | 88.7 | 80.4 | 99.3 | 82.1 | 78.7 | 100.0 | 85.9 |

| JDA [22] | 81.3 | 86.3 | 80.3 | 88.0 | 84.1 | 79.6 | 91.3 | 81.1 | 97.5 | 90.2 | 82.0 | 98.9 | 86.7 |

| DDC [14] | 85.0 | 89.0 | 86.1 | 91.9 | 88.8 | 85.4 | 89.5 | 81.1 | 98.2 | 84.9 | 78.0 | 100.0 | 88.2 |

| DANN [19] | 87.8 | 82.5 | 77.8 | 93.3 | 91.2 | 89.5 | 84.7 | 82.1 | 98.9 | 82.9 | 81.3 | 100.0 | 87.7 |

| DAN [71] | 84.1 | 91.7 | 91.8 | 92.0 | 89.3 | 90.6 | 90.0 | 80.3 | 98.5 | 92.1 | 81.2 | 100.0 | 90.1 |

| OT-GL [51] | 85.5 | 85.0 | 83.1 | 92.1 | 87.3 | 84.2 | 92.3 | 84.1 | 96.3 | 90.6 | 81.5 | 96.3 | 88.2 |

| DeepJDOT [24] | 87.4 | 88.5 | 86.7 | 92.3 | 92.0 | 85.3 | 91.5 | 85.3 | 98.7 | 86.6 | 84.7 | 98.7 | 89.8 |

| DMP [30] | 86.6 | 90.4 | 91.3 | 92.8 | 93.0 | 88.5 | 91.4 | 85.3 | 97.7 | 91.9 | 85.6 | 100 | 91.2 |

| BJDA (ours) | 87.8 | 92.9 | 94.2 | 93.5 | 94.3 | 90.5 | 92.7 | 86.0 | 100.0 | 93.3 | 86.3 | 100.0 | 92.6 |

Table II summarizes the classification results for the Office-Caltech10 dataset. The BJDA model substantially outperforms the comparison methods on most UDA tasks. Specifically, BJDA obtains an absolute accuracy improvement of 1.4% over the latest competitor DMP [30]. Notably, BJDA and DAN [71] yield greater improvements for this benchmark, which highlights the advantage of performing distribution alignment by exploiting the nonlinear feature mapping of RKHS. For JDA [22] and DeepJDOT [24], both of which match the joint distribution cross the two domains, BJDA outperforms them by 5.9% and 2.8%, respectively. It validates the the superiority of BJDA which directly models the joint distribution shift in the infinite-dimensional spaces. UDA tasks with the domain A are more challenging as images from domain A exhibit more diversity within each category. In this case, aligning the joint distributions can effectively improve the performance of UDA tasks. As Table II shows, our BJDA method exhibits significant advantages over other UDA methods when domain acts as the source domain or the target domain. The results show that BJDA tends to more stable when there are large domain shift between the source and target domains.

IV-E Experimental Results on Adaptiope

| Method | PR | P S | RP | RS | SP | SR | Avg |

| Source-only | 63.60.4 | 26.71.7 | 85.30.3 | 27.60.3 | 43.10.3 | 29.60.4 | 45.9 |

| CORAL [12] | 67.5 | 38.2 | 86.7 | 29.1 | 45.3 | 35.2 | 50.3 |

| DDC [14] | 69.5 | 36.2 | 87.5 | 29.4 | 43.5 | 33.5 | 49.9 |

| DANN [19] | 68.5 | 39.3 | 89.2 | 37.4 | 53.7 | 35.1 | 53.8 |

| OT-GL [51] | 66.1 | 42.2 | 87.4 | 37.6 | 53.8 | 40.9 | 54.7 |

| DeepJDOT [24] | 68.8 | 43.9 | 86.5 | 40.1 | 55.3 | 43.5 | 56.4 |

| SymNets [20] | 81.40.3 | 53.10.6 | 92.30.2 | 49.21.0 | 69.60.5 | 44.91.5 | 65.1 |

| ATM [33] | 73.9 | 49.6 | 87.0 | 45.9 | 61.3 | 35.3 | 58.8 |

| RSDA [48] | 73.80.2 | 59.20.2 | 87.50.2 | 50.30.5 | 69.50.6 | 44.61.1 | 64.2 |

| BJDA (ours) | 74.30.1 | 60.10.4 | 88.40.2 | 52.00.2 | 76.40.2 | 56.30.1 | 67.9 |

To demonstrate the applicability of the proposed BJDA on more intricate real-world problems, we evaluate BJDA on the recently proposed Adaptiope benchmark. The results compared with several state-of-the-art methods are shown in Table III. Our method outperforms the other methods on 4 out of 6 UDA tasks and achieves the best average accuracy. Especially in the most challenging task (i.e, P S, RS, and SR), for which Source-only accuracies are below 30%, our method significantly outperforms all the previous methods. It shows the superiority of our BJDA when there are severe domain gaps between the source and the target domains. Furthermore, both BJDA and DeepJDOT [24] show significant improvements over the marginal-based methods (i.e., CORAL [12], DDC [14], DANN [19], OT-GL [51]), which demonstrates that it is very necessary to align the joint distributions rather than the marginal distributions. Our BJDA achieves 2.8% and 3.7% average accuracy improvements with respect to the recent SymNets [20] and RSDA [48], respectively. These experimental results indicate that BJDA can extract more transferable features than state-of-the-art approaches.

IV-F Experimental Results on Refurbished Office-31

| Method | ArefD | Aref W | DAref | DW | WAref | WD | Avg |

|---|---|---|---|---|---|---|---|

| Source-only | 79.20.6 | 76.81.0 | 73.51.2 | 96.30.2 | 74.10.5 | 99.10.3 | 83.2 |

| CORAL [12] | 84.7 | 81.5 | 78.8 | 97.2 | 79.5 | 99.6 | 86.8 |

| DDC [14] | 85.3 | 82.0 | 78.1 | 97.7 | 77.8 | 98.9 | 86.6 |

| DANN [19] | 86.4 | 87.4 | 75.9 | 97.9 | 76.1 | 99.1 | 87.1 |

| OT-GL [51] | 80.1 | 80.9 | 81.1 | 96.5 | 78.9 | 96.7 | 85.7 |

| DeepJDOT [24] | 82.2 | 80.1 | 82.2 | 96.2 | 82.2 | 99.2 | 86.0 |

| SymNets [20] | 92.40.4 | 91.00.2 | 90.60.4 | 98.00.1 | 89.20.4 | 99.80.0 | 93.5 |

| ATM [33] | 88.5 | 91.7 | 72.4 | 99.2 | 76.6 | 99.2 | 88.1 |

| RSDA [48] | 90.91.3 | 91.80.5 | 87.30.6 | 98.80.2 | 90.50.9 | 99.90.1 | 93.2 |

| BJDA (ours) | 92.80.1 | 91.30.1 | 92.50.2 | 99.30.1 | 87.90.1 | 99.80.2 | 93.9 |

We report the results of the proposed BJDA method on the recently proposed Refurbished Office-31 benchmark in Table IV. It can be seen that our BJDA method outperforms all comparison methods on 3 out of 6 UDA tasks and achieves the best average results. It is interesting to see that BJDA, SymNets [20], RSDA [48] achieve significantly better results over individual UDA tasks than the other comparison methods, the reason may be that these methods take into account the label information and lead to a more discriminative target feature space. By comparing the performance of our BJDA with the DeepJDOT [24] which also considers aligning the joint distribution based on OT, we find that BJDA exceeds DeepJDOT on all UDA tasks by large margins. The results verify that it is effectual to extend the OT theory in the Euclidean space to the reproducing kernel Hilbert space. It is worth noting that our BJDA achieves a significant improvement on the hard tasks between the domain Aref and the domain D, which demonstrates the effectiveness of our proposed approach.

IV-G Experimental Results on Large-scale Datasets

| Method | AC | AP | AR | CA | CP | CR | PA | PC | PR | RA | RC | RP | Avg |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Source-only | 34.9 | 50.0 | 58.0 | 37.4 | 41.9 | 46.2 | 38.5 | 31.2 | 60.4 | 53.9 | 41.2 | 59.9 | 46.1 |

| JAN [45] | 45.9 | 61.2 | 68.9 | 50.4 | 59.7 | 61.0 | 45.8 | 43.4 | 70.3 | 63.9 | 52.4 | 76.8 | 58.3 |

| DANN [19] | 45.6 | 59.3 | 70.1 | 47.0 | 58.5 | 60.9 | 46.1 | 43.7 | 68.5 | 63.2 | 51.8 | 76.8 | 57.6 |

| DAN [71] | 43.6 | 57.0 | 67.9 | 45.8 | 56.5 | 60.4 | 44.0 | 43.6 | 67.7 | 63.1 | 51.5 | 74.3 | 56.3 |

| KGOT [25] | 36.2 | 59.4 | 65.0 | 48.6 | 56.5 | 60.2 | 52.1 | 37.8 | 67.1 | 59.0 | 41.9 | 72.0 | 54.7 |

| DeepJDOT [24] | 48.2 | 69.2 | 74.5 | 58.5 | 69.2 | 71.1 | 56.3 | 46.0 | 76.5 | 68.0 | 52.7 | 80.9 | 64.3 |

| CDAN [47] | 50.7 | 70.6 | 76.0 | 57.6 | 70.0 | 70.0 | 57.4 | 50.9 | 77.3 | 70.9 | 56.7 | 81.6 | 65.8 |

| SymNets [20] | 47.7 | 72.9 | 78.5 | 64.2 | 71.3 | 74.2 | 64.2 | 48.8 | 79.5 | 74.5 | 52.6 | 82.7 | 67.6 |

| ATM [33] | 52.4 | 72.6 | 78.0 | 61.1 | 72.0 | 72.6 | 59.5 | 52.0 | 79.1 | 73.3 | 58.9 | 83.4 | 67.9 |

| DMP [30] | 52.3 | 73.0 | 77.3 | 64.3 | 72.0 | 71.8 | 63.6 | 52.7 | 78.5 | 72.0 | 57.7 | 81.6 | 68.1 |

| SCDA [77] | 57.5 | 76.9 | 80.3 | 65.7 | 74.9 | 74.5 | 65.5 | 53.6 | 79.8 | 74.5 | 59.6 | 83.7 | 70.5 |

| TCM [76] | 58.6 | 74.4 | 79.6 | 64.5 | 74.0 | 75.1 | 64.6 | 56.2 | 80.9 | 74.6 | 60.7 | 84.7 | 70.7 |

| BJDA (ours) | 52.9 | 72.1 | 78.2 | 59.9 | 72.8 | 74.2 | 61.1 | 50.8 | 78.8 | 69.6 | 52.4 | 84.1 | 67.2 |

| BJDA+PL (ours) | 53.0 | 74.8 | 78.4 | 60.1 | 73.6 | 75.0 | 62.2 | 51.9 | 79.9 | 69.2 | 55.4 | 84.3 | 68.1 |

| Source-only | clp | inf | pnt | qdr | rel | skt | Avg. | DANN [19] | clp | inf | pnt | qdr | rel | skt | Avg. | MCD [32] | clp | inf | pnt | qdr | rel | skt | Avg. | |||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| clp | - | 19.3 | 37.5 | 11.1 | 52.2 | 41.0 | 32.2 | clp | - | 15.5 | 34.8 | 9.5 | 50.8 | 41.4 | 30.4 | clp | - | 23.6 | 34.4 | 15.0 | 42.6 | 41.2 | 31.4 | |||||

| inf | 30.2 | - | 31.2 | 3.6 | 44.0 | 27.9 | 27.4 | inf | 31.8 | - | 30.2 | 3.8 | 44.8 | 25.7 | 27.3 | inf | 14.2 | - | 14.8 | 3.0 | 19.6 | 13.7 | 13.1 | |||||

| pnt | 39.6 | 18.7 | - | 4.9 | 54.5 | 36.3 | 30.8 | pnt | 39.6 | 15.1 | - | 5.5 | 54.6 | 35.1 | 30.0 | pnt | 26.1 | 21.2 | - | 7.0 | 42.6 | 27.6 | 24.9 | |||||

| qdr | 7.0 | 0.9 | 1.4 | - | 4.1 | 8.3 | 4.3 | qdr | 11.8 | 2.0 | 4.4 | - | 9.8 | 8.4 | 7.3 | qdr | 1.6 | 1.5 | 1.9 | - | 11.5 | 3.8 | 4.6 | |||||

| rel | 48.4 | 22.2 | 49.4 | 6.4 | - | 38.8 | 33.0 | rel | 47.5 | 17.9 | 47.0 | 6.3 | - | 37.3 | 31.2 | rel | 45.0 | 14.2 | 45.0 | 2.2 | - | 34.8 | 28.2 | |||||

| skt | 46.9 | 15.4 | 37.0 | 10.9 | 47.0 | - | 31.4 | skt | 47.9 | 13.9 | 34.5 | 10.4 | 46.8 | - | 30.7 | skt | 33.8 | 18.0 | 28.4 | 10.2 | 29.3 | - | 23.9 | |||||

| Avg. | 34.4 | 15.3 | 31.3 | 7.4 | 40.4 | 30.5 | 26.6 | Avg. | 35.7 | 12.9 | 30.2 | 7.1 | 41.4 | 29.6 | 26.1 | Avg. | 26.5 | 15.4 | 26.1 | 7.4 | 31.7 | 25.7 | 20.9 | |||||

| ADDA [31] | clp | inf | pnt | qdr | rel | skt | Avg. | CDAN [47] | clp | inf | pnt | qdr | rel | skt | Avg. | MarginDD [78] | clp | inf | pnt | qdr | rel | skt | Avg. | |||||

| clp | - | 11.2 | 24.1 | 3.2 | 41.9 | 30.7 | 22.2 | clp | - | 20.4 | 36.6 | 9.0 | 50.7 | 42.3 | 31.8 | clp | - | 20.5 | 40.7 | 6.2 | 52.5 | 42.1 | 32.4 | |||||

| inf | 19.1 | - | 16.4 | 3.2 | 26.9 | 14.6 | 16.0 | inf | 27.5 | - | 25.7 | 1.8 | 34.7 | 20.1 | 22.0 | inf | 33.0 | - | 33.8 | 2.6 | 46.2 | 24.5 | 28.0 | |||||

| pnt | 31.2 | 9.5 | - | 8.4 | 39.1 | 25.4 | 22.7 | pnt | 42.6 | 20.0 | - | 2.5 | 55.6 | 38.5 | 31.8 | pnt | 43.7 | 20.4 | - | 2.8 | 51.2 | 41.7 | 32.0 | |||||

| qdr | 15.7 | 2.6 | 5.4 | - | 9.9 | 11.9 | 9.1 | qdr | 21.0 | 4.5 | 8.1 | - | 14.3 | 15.7 | 12.7 | qdr | 18.4 | 3.0 | 8.1 | - | 12.9 | 11.8 | 10.8 | |||||

| rel | 39.5 | 14.5 | 29.1 | 12.1 | - | 25.7 | 24.2 | rel | 51.9 | 23.3 | 50.4 | 5.4 | - | 41.4 | 34.5 | rel | 52.8 | 21.6 | 47.8 | 4.2 | - | 41.2 | 33.5 | |||||

| skt | 35.3 | 8.9 | 25.2 | 14.9 | 37.6 | - | 25.4 | skt | 50.8 | 20.3 | 43.0 | 2.9 | 50.8 | - | 33.6 | skt | 54.3 | 17.5 | 43.1 | 5.7 | 54.2 | - | 35.0 | |||||

| Avg. | 28.2 | 9.3 | 20.1 | 8.4 | 31.1 | 21.7 | 19.8 | Avg. | 38.8 | 17.7 | 32.8 | 4.3 | 41.2 | 31.6 | 27.7 | Avg. | 40.4 | 16.6 | 34.7 | 4.3 | 43.4 | 32.3 | 28.6 | |||||

|

clp | inf | pnt | qdr | rel | skt | Avg. |

|

clp | inf | pnt | qdr | rel | skt | Avg. |

|

clp | inf | pnt | qdr | rel | skt | Avg. | |||||

| clp | - | 18.6 | 39.3 | 5.1 | 55.0 | 44.1 | 32.4 | clp | - | 16.4 | 43.2 | 10.6 | 54.9 | 46.0 | 34.2 | clp | - | 17.4 | 46.0 | 12.0 | 55.9 | 46.8 | 35.6 | |||||

| inf | 29.6 | - | 34.0 | 1.4 | 46.3 | 25.4 | 27.3 | inf | 19.6 | - | 31.8 | 4.9 | 45.1 | 24.4 | 25.2 | inf | 20.3 | - | 34.2 | 5.8 | 46.0 | 24.6 | 26.2 | |||||

| pnt | 44.1 | 19.0 | - | 2.6 | 56.2 | 42.0 | 32.8 | pnt | 34.2 | 15.9 | - | 6.2 | 53.9 | 27.0 | 27.4 | pnt | 38.6 | 16.7 | - | 6.1 | 52.5 | 27.2 | 28.2 | |||||

| qdr | 30.0 | 4.9 | 15.0 | - | 25.4 | 19.8 | 19.0 | qdr | 28.4 | 3.4 | 14.9 | - | 9.9 | 8.6 | 13.1 | qdr | 28.4 | 3.5 | 15.1 | - | 10.0 | 8.8 | 13.2 | |||||

| rel | 54.0 | 22.5 | 51.9 | 2.3 | - | 42.5 | 34.6 | rel | 55.5 | 14.7 | 52.7 | 5.2 | - | 24.7 | 30.5 | rel | 55.8 | 14.5 | 54.2 | 5.7 | - | 24.5 | 30.9 | |||||

| skt | 55.6 | 18.5 | 44.7 | 6.4 | 53.2 | - | 35.7 | skt | 53.4 | 11.6 | 47.7 | 10.3 | 39.6 | - | 32.5 | skt | 56.1 | 13.7 | 48.2 | 11.6 | 46.0 | - | 35.1 | |||||

| Avg. | 42.6 | 16.7 | 37.0 | 3.6 | 47.2 | 34.8 | 30.3 | Avg. | 38.2 | 12.4 | 38.1 | 7.4 | 40.7 | 26.1 | 27.2 | Avg. | 39.8 | 13.2 | 39.5 | 8.2 | 42.1 | 26.4 | 28.2 |

To evaluate the scalability of the proposed BJDA method, We compare BJDA with several recent UDA methods on the large-scale datasets Office-Home and DomainNet. During the training process, we assign pseudo-labels for the unlabeled target samples. Those pseudo-labels may be wrong, so these noises in pseudo-labels will degrade the performance of our BJDA method. To address this issue, we select the target samples which have prediction probability greater than a confidence threshold in the training stage. We add the experiments with this selection strategy on the Office-Home and DomainNet datasets. The results are shown in Table V and Table VI, and the corresponding setting is denoted as BJDA+PL. It can be seen that the selection strategy for pseudo-labels can improve the performance of the proposed BJDA method and alleviate the impact of noisy pseudo-labels. For the Office-Home dataset, our BJDA outperforms the recently proposed ATM method [33] by 0.2% and the average accuracy is close to the state-of-the-arts (i.e., SCDA [77] and TCM [76]). The DomainNet dataset is a large and very challenging benchmark, in which large domain gaps exist. Our BJDA obtains an average accuracy of 28.2%, which is very close to the best result 30.3% produced by SCDA [77]. The reason may be that SCDA uses multiple complicated pair-wise adversarial processes, in which the classifier first finds the region with a large discrepancy among samples with the same category, and then the feature extractor tries to suppress the dissimilar regions. TCM combines the disentangled causal mechanisms with deep neural networks and it can effectively identify the latent semantic feature and improve the discriminative ability of representations. In addition, our BJDA achieves the best accuracy on 12 out of the 30 UDA tasks on the DomainNet dataset. From these results, we find that BJDA achieves comparable accuracy compared with recent UDA methods on those large-scale benchmarks. These results verify the scalability and the effectiveness of the proposed BJDA method.

IV-H Feature visualization for BJDA

To show the effectiveness of BJDA intuitively, we visualize the deep features before and after adaptation using t-SNE [79] in Fig. 6. The experiment of tasks IP and PI in ImageCLEF-DA are selected for illustration. Features extracted from DANN [19] and DeepJDOT [24] are also shown in Fig. 6 for comparison. For clarity, we use different markers to denote different categories, and the source features are shown in blue and target in red. Compared with the features learned by the Source-only in Fig. 6(a) and 6(e), we observe that all the features from BJDA in Fig. 6(d) and 6(h) have shown good adaptation patterns, which implies that BJDA is helpful to reduce the domain shift and improve the transferability of deep features. We can see that the features of DeepJDOT [24] in the third column are more separable than those of DANN [19] in the second column, which demonstrates that it is more valuable to align the joint distributions compared with the marginal-based methods. As shown in Fig. 6, there are larger margins between different clusters corresponding to different categories in Fig. 6(d) and 6(h) compared with graphs in other columns, and the target features in the fourth column behave more clear to categorize, which indicates that BJDA can learn more discriminative features. It is shown that the features of BJDA are better aligned and more distinguishable than the features of DeepJDOT [24], which indicates the superiority of performing joint distribution alignment in RKHS.

IV-I Ablation Study

| Method | IP | PI | IC | CI | CP | PC | Avg |

| Source-only | 74.80.3 | 83.90.1 | 91.50.3 | 78.00.2 | 65.50.3 | 91.20.3 | 80.7 |

| DeepJDOT [24] | 79.4 | 86.7 | 93.2 | 82.0 | 69.5 | 91.3 | 83.7 |

| TripDA [60] | 78.10.3 | 89.20.1 | 96.80.2 | 91.30.2 | 78.20.2 | 94.00.3 | 87.9 |

| BJDA( ) | 80.80.5 | 91.50.2 | 95.10.3 | 92.30.3 | 77.40.2 | 95.30.2 | 88.7 |

| BJDA( ) | 81.70.2 | 93.30.5 | 97.00.4 | 93.00.4 | 81.50.3 | 95.50.5 | 90.3 |

| BJDA( )+ | 78.50.6 | 88.30.4 | 96.30.3 | 95.20.3 | 80.40.5 | 95.80.2 | 89.1 |

| BJDA( )+ | 75.70.1 | 84.60.0 | 92.90.0 | 91.60.0 | 77.50.1 | 92.50.0 | 85.8 |

| BJDA( )+ | 80.00.0 | 92.00.1 | 96.00.0 | 92.60.0 | 78.70.0 | 95.40.1 | 89.1 |

| BJDA( )+ | 80.30.1 | 91.00.1 | 95.60.0 | 92.30.0 | 78.10.2 | 93.90.1 | 88.5 |

| BJDA | 81.90.4 | 93.80.1 | 97.50.4 | 95.80.2 | 81.90.1 | 96.30.1 | 91.2 |

In order to exemplify the performance of BJDA and better investigate where the improvements are coming from, we perform an ablation study over various combinations for the UDA task on ImageCLEF-DA. The BJDA algorithm reduces the differences in the joint distributions and learns a category discriminative feature space simultaneously. It mainly consists of two components: the Bures joint distribution alignment loss and the dynamic margin contrastive loss. The BJDA( ) implements the idea of Bures joint distribution alignment but excludes the DMC loss. The BJDA( ) trains the model with the cross-entropy loss and the DMC loss. The BJDA implements the proposed BJDA algorithm as Eq. (10). DeepJDOT [24] incorporates OT and deep neural networks in the Euclidean space to matches the joint distributions, we select DeepJDOT as the baseline to demonstrate the superiority of our Bures joint distribution alignment loss. In light of the original triplet loss [58] is widely used to preserve the semantic relations, we train our network using the Bures joint distribution alignment loss, the cross-entropy loss, and the triplet loss, and is termed as BJDA( )+. We select BJDA( )+ as the baseline to show the effectiveness of our DMC loss. The recently proposed TripDA [60] uses the original triplet loss to model the semantic structure of the data and incorporates the Joint Maximum Mean Discrepancy (JMMD) [45] to reduce the domain shift. We compare BJDA( )+ with TripDA [60] to show the effectiveness of our Bures joint distribution alignment loss. Table VII shows the results of the ablation study.

Comparing BJDA( ) with DeepJDOT [24], it is clear that on average the BJDA( ) performs better, which demonstrates the superiority of our Bures joint distribution alignment loss that performs domain alignment via OT in RKHS. BJDA( )+ outperforms the TripDA [60] by a clear margin, which verifies the effectiveness of the proposed Bures joint distribution alignment loss compared with JMMD. Our BJDA achieves 2.1% improvement on the average accuracy against BJDA( )+. This result shows the superiority of the DMC loss compared with the original triplet loss [58] and indicates that our DMC loss can be of help to improve the category discriminative ability of feature space. Notice that BJDA( ) obtains an improvement of 1.6% over BJDA( ), which reveals that the Bures joint distribution alignment loss makes a greater contribution to the UDA task compared with the DMC loss. From the above results, we can confirm that each component in BJDA has a specific contribution. Combining the Bures joint distribution alignment loss and the DMC loss leads to further improvement, indicating their complementarity and superiority of the BJDA algorithm.

LMMD [80] is a recently proposed divergence for conditional MMD, which models the domain divergence by aligning the marginal distributions of subdomains. We compare our BJDA with conditional MMD by substituting the Eq. (5) loss with the LMMD loss. The setting is denoted as BJDA( )+. It can be seen that the Eq. (5) loss performs better than LMMD, which verifies the superiority of aligning joint distributions. MDD [33] is another domain divergence, which considers the marginal distributions by minimizing the inter-domain divergence and optimizes the conditional distributions by introducing class information. We empirically evaluate the proposed BJDA by substituting the Eq. (5) loss with the MDD loss. The setting is denoted as BJDA( )+. It can be seen that the Eq. (5) loss achieves better results compared with the MDD loss. We also add the ablation experiment with the variant which uses the Wasserstein distance on the ImageCLEF-DA dataset. We compute the Wasserstein distance follow the formula of discrete Optimal Transport from previous work [25]. The corresponding setting is denoted as BJDA( )+. The results suggest that the BJDA loss gets better performance than the Wasserstein distance. These results further verify the superiority of the proposed Bures joint distribution alignment loss in Eq. (5).

IV-J Convergence Analysis

To show the stability of training, we report the classification loss values and total loss values during the epochs in Fig. 7. To reveal the effectiveness of our contribution, we compare the loss values between BJDA, BJDA( ), and BJDA( ). We take the task IP in ImageCLEF-DA as an example. It can be seen that BJDA gradually converges after 300 epochs. Fig. 7(a) show that the classification loss values of BJDA (black line) are lower than that of BJDA( ) (blue line) and BJDA( ) (red line), which indicates that the features learned by BJDA are more discriminative. Fig. 7(b) presents the comparison of total loss values, it can be seen that the values of the total loss of BJDA are much smaller, which demonstrates the superiority of BJDA, and BJDA is better towards the convergence.

IV-K Hyper-parameters Analysis

The overall objective function, i.e., Eq. (10), of the proposed BJDA includes two hyper-parameters and to balance the Bures joint distribution alignment loss and the dynamic margin contrastive loss. To show the stability of our BJDA methods and the impact of different hyper-parameters and , we investigate the sensitivity of the hyper-parameters and implement grid search for and with respect to the tasks IP and PI in ImageCLEF-DA. and are searched in the range of [0.1, 0.3, 0.5, 0.7, 0.9], respectively. Results are shown in Fig. 8. The brighter the color, the higher the classification accuracy is. It can be seen that the classification accuracy varies slowly among the peak area. The results show that in most cases, the performance of BJDA is stable when the values of and increase from 0.1 to 0.9. This indicates the robustness of performing joint distribution alignment in the RKHS. The values of in this paper are set as (0.5,0.3). The fact that indicates that the Bures joint distribution alignment phase is more important than the metric learning phase during the adaptation process.

V Conclusion

In this paper, we propose a novel method called Bures joint Distribution Alignment (BJDA) for unsupervised domain adaptation. We directly align the joint distributions of the two domains based on the optimal transport theory. To empower the optimal transport theory with the capability of capturing nonlinear structures underlying the data, our work minimizes the kernel Bures-Wasserstein distance of the joint distributions in the reproducing kernel Hilbert space. In addition, we introduce a dynamic margin based contrastive learning phase to improve the category discriminative ability of representations. Extensive experiments on four benchmarks verify that BJDA exceeds previous state-of-the-art methods with significant advantages. While BJDA is designed for UDA task with a single source domain, there exist some works among multiple source domains. In the future, we plan to extend BJDA to the scenario with multiple source domains.

References

- [1] J. L. Hu, J. W. Lu, Y. P. Tan, and J. Zhou, “Deep transfer metric learning,” IEEE Transactions on Image Processing, vol. 25, no. 12, pp. 5576–5588, 2016.

- [2] B. Yang and P. C. Yuen, “Learning adaptive geometry for unsupervised domain adaptation,” Pattern Recognition, vol. 110, p. 107638, 2021.

- [3] S. Deng, W. Xia, Q. Gao, and X. Gao, “Cross-view classification by joint adversarial learning and class-specificity distribution,” Pattern Recognition, vol. 110, p. 107633, 2021.

- [4] Y.-W. Luo and C.-X. Ren, “Conditional bures metric for domain adaptation,” in Proceedings of the IEEE International Conference on Computer Vision, 2021, pp. 13 989–13 998.

- [5] J. Wang, J. Chen, J. Lin, L. Sigal, and C. W. de Silva, “Discriminative feature alignment: Improving transferability of unsupervised domain adaptation by Gaussian-guided latent alignment,” Pattern Recognition, vol. 116, p. 107943, 2021.

- [6] C.-X. Ren, X. L. Xu, and H. Yan, “Generalized conditional domain adaptation: A causal perspective with low-rank translators,” IEEE Transactions on Cybernetics, vol. 50, no. 2, pp. 821–834, 2020.

- [7] Y.-H. Liu and C.-X. Ren, “A two-way alignment approach for unsupervised multi-source domain adaptation,” Pattern Recognition, vol. 124, p. 108430, 2022.

- [8] Y. Zhang, Y. Wei, Q. Wu, P. Zhao, S. Niu, J. Huang, and M. Tan, “Collaborative unsupervised domain adaptation for medical image diagnosis,” IEEE Transactions on Image Processing, vol. 29, pp. 7834–7844, 2020.

- [9] G.-X. Xu, C. Liu, J. Liu, Z. Ding, F. Shi, M. Guo, W. Zhao, X. Li, Y. Wei, Y. Gao, C.-X. Ren, and D. Shen, “Cross-site severity assessment of covid-19 from ct images via domain adaptation,” IEEE Transactions on Medical Imaging, p. , 2021.

- [10] N. Courty, R. Flamary, A. Habrard, and A. Rakotomamonjy, “Joint distribution optimal transportation for domain adaptation,” in Proceedings of the Advances in Neural Information Processing Systems 30, 2017.

- [11] F. J. Castellanos, A.-J. Gallego, and J. Calvo-Zaragoza, “Unsupervised neural domain adaptation for document image binarization,” Pattern Recognition, vol. 119, p. 108099, 2021.

- [12] B. C. Sun, J. S. Feng, and K. Saenko, “Return of frustratingly easy domain adaptation,” in Proceedings of the Thirtieth AAAI Conference on Artificial Intelligence, 2016.

- [13] C.-X. Ren, J. S. Feng, D. Q. Dai, and S. C. Yan, “Heterogenous domain adaptation via covariance structured feature translators,” IEEE Transactions on Cybernetics, vol. 51, no. 4, pp. 2166–2177, 2021.

- [14] E. Tzeng, J. Hoffman, N. Zhang, K. Saenko, and T. Darrell, “Deep domain confusion: Maximizing for domain invariance,” arXiv preprint arXiv:1412.3474, 2014.

- [15] Y. F. Liu, W. P. Tu, B. Du, L. F. Zhang, and D. C. Tao, “Homologous component analysis for domain adaptation,” IEEE Transactions on Image Processing, vol. 29, pp. 1074–1089, 2020.

- [16] N. Xiao and L. Zhang, “Dynamic weighted learning for unsupervised domain adaptation,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2021, pp. 15 242–15 251.

- [17] W. Liu, J. Li, B. Liu, W. Guan, Y. Zhou, and C. Xu, “Unified cross-domain classification via geometric and statistical adaptations,” Pattern Recognition, vol. 110, p. 107658, 2021.

- [18] M. X. Li, Y. M. Zhai, Y. W. Luo, P. F. Ge, and C.-X. Ren, “Enhanced transport distance for unsupervised domain adaptation,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2020, pp. 13 933–13 941.

- [19] Y. Ganin, E. Ustinova, H. Ajakan, P. Germain, H. Larochelle, F. Laviolette, M. March, and V. Lempitsky, “Domain-adversarial training of neural networks,” Journal of Machine Learning Research, vol. 17, no. 59, pp. 1–35, 2016.

- [20] Y. B. Zhang, H. Tang, K. Jia, and M. K. Tan, “Domain-symmetric networks for adversarial domain adaptation,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2019, pp. 5026–5035.

- [21] L. C. Song, Y. H. Xu, L. F. Zhang, B. Du, and X. G. Wang, “Learning from synthetic images via active pseudo-labeling,” IEEE Transactions on Image Processing, vol. 29, pp. 6452–6465, 2020.

- [22] M. Long, J. Wang, G. Ding, J. Sun, and P. S. Yu, “Transfer feature learning with joint distribution adaptation,” in Proceedings of the IEEE International Conference on Computer Vision, 2013, pp. 2200–2207.

- [23] S. T. Chen, M. Harandi, X. N. Jin, and X. W. Yang, “Domain adaptation by joint distribution invariant projections,” IEEE Transactions on Image Processing, vol. 29, pp. 8264–8277, 2020.

- [24] B. B. Damodaran, B. Kellenberger, R. Flamary, D. Tuia, and N. Courty, “Deepjdot: Deep joint distribution optimal transport for unsupervised domain adaptation,” in Proceedings of European Conference on Computer Vision, 2018, pp. 467–483.

- [25] Z. Zhang, M. Wang, and A. Nehorai, “Optimal transport in reproducing kernel hilbert spaces: Theory and applications,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 42, no. 7, pp. 1741–1754, 2020.

- [26] S. Ben-David, J. Blitzer, K. Crammer, and F. Pereira, “Analysis of representations for domain adaptation,” in Proceedings of the Advances in Neural Information Processing Systems 19, 2007, pp. 137–144.

- [27] J. Blitzer, K. Crammer, A. Kulesza, F. Pereira, and J. Wortman, “Learning bounds for domain adaptation,” in Proceedings of the Advances in Neural Information Processing Systems 20, 2008, pp. 129–136.

- [28] S. Ben-David, J. Blitzer, K. Crammer, A. Kulesza, F. Pereira, and J. W. Vaughan, “A theory of learning from different domains,” Machine Learning, vol. 79, no. 1-2, pp. 151–175, 2010.

- [29] Y. H. Li, N. Y. Wang, J. P. Shi, X. D. Hou, and J. Y. Liu, “Adaptive batch normalization for practical domain adaptation,” Pattern Recognition, vol. 80, pp. 109–117, 2018.

- [30] Y. W. Luo, C.-X. Ren, D. Q. Dai, and H. Yan, “Unsupervised domain adaptation via discriminative manifold propagation,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 44, no. 3, pp. 1653–1669, 2022.

- [31] E. Tzeng, J. Hoffman, K. Saenko, and T. Darrell, “Adversarial discriminative domain adaptation,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017, pp. 7167–7176.

- [32] K. Saito, K. Watanabe, Y. Ushiku, and T. Harada, “Maximum classifier discrepancy for unsupervised domain adaptation,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 3723–3732.

- [33] J. Li, E. Chen, Z. Ding, L. Zhu, K. Lu, and H. T. Shen, “Maximum density divergence for domain adaptation,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 43, no. 11, pp. 3918–3930, 2021.

- [34] J. Li, Z. Du, L. Zhu, Z. Ding, K. Lu, and H. T. Shen, “Divergence-agnostic unsupervised domain adaptation by adversarial attacks,” IEEE Transactions on Pattern Analysis and Machine Intelligence, p. , 2021.

- [35] J. Li, M. Jing, H. Su, K. Lu, L. Zhu, and H. T. Shen, “Faster domain adaptation networks,” IEEE Transactions on Knowledge and Data Engineering, p. , 2021.

- [36] J. Li, K. Lu, Z. Huang, L. Zhu, and H. T. Shen, “Heterogeneous domain adaptation through progressive alignment,” IEEE Transactions on Neural Networks and Learning Systems, vol. 30, no. 5, pp. 1381–1391, 2019.

- [37] B. Zhang, T. Chen, B. Wang, and R. Li, “Joint distribution alignment via adversarial learning for domain adaptive object detection,” IEEE Transactions on Multimedia, p. , 2021.

- [38] Y. Du, Z. Tan, X. Zhang, Y. Yao et al., “Unsupervised domain adaptation with unified joint distribution alignment,” in Database Systems for Advanced Applications, 2021, pp. 449–464.

- [39] C. Yu, J. Wang, Y. Chen, and M. Huang, “Transfer learning with dynamic adversarial adaptation network,” in IEEE International Conference on Data Mining (ICDM), 2019, pp. 778–786.

- [40] J. Wang, Y. Chen, W. Feng, H. Yu, M. Huang, and Q. Yang, “Transfer learning with dynamic distribution adaptation,” ACM Trans. Intell. Syst. Technol., vol. 11, no. 1, p. 25, 2020.

- [41] J. D. Wang, W. J. Feng, Y. Q. Chen, H. Yu, M. Y. Huang, and P. S. Yu, “Visual domain adaptation with manifold embedded distribution alignment,” in Proceedings of the 26th ACM International Conference on Multimedia, 2018, pp. 402–410.

- [42] Y. Luo, L. Zheng, T. Guan, J. Yu, and Y. Yang, “Taking a closer look at domain shift: Category-level adversaries for semantics consistent domain adaptation,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2019, pp. 2502–2511.

- [43] Y. Luo, P. Liu, L. Zheng, T. Guan, J. Yu, and Y. Yang, “Category-level adversarial adaptation for semantic segmentation using purified features,” IEEE Transactions on Pattern Analysis and Machine Intelligence, p. , 2021.

- [44] Y. Luo, P. Liu, T. Guan, J. Yu, and Y. Yang, “Significance-aware information bottleneck for domain adaptive semantic segmentation,” in Proceedings of the IEEE International Conference on Computer Vision, 2019, pp. 6777–6786.

- [45] M. S. Long, H. Zhu, J. M. Wang, and M. Jordan, “Deep transfer learning with joint adaptation networks,” in Proceedings of the 34th International Conference on Machine Learning, 2017, pp. 2208–2217.

- [46] S. Li, S. Song, G. Huang, Z. Ding, and C. Wu, “Domain invariant and class discriminative feature learning for visual domain adaptation,” IEEE Transactions on Image Processing, vol. 27, no. 9, pp. 4260–4273, 2018.

- [47] M. S. Long, Z. J. Cao, J. M. Wang, and M. I. Jordan, “Conditional adversarial domain adaptation,” in Proceedings of the Advances in Neural Information Processing Systems 31, 2018, pp. 1640–1650.

- [48] X. Gu, J. Sun, and Z. Xu, “Spherical space domain adaptation with robust pseudo-label loss,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2020, pp. 9098–9107.

- [49] M. X. Ni, X. Y. Shen, and Z. Hai, “Transfer learning based on joint distribution kernel adaptation and its privacy protection (in chinese),” SCIENTIA SINICA Informationis, p. , 2021.

- [50] C. Villani, Optimal Transport: Old and New. Berlin, Heidelberg: The Springer Press, 2009.

- [51] N. Courty, R. Flamary, D. Tuia, and A. Rakotomamonjy, “Optimal transport for domain adaptation,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 39, no. 9, pp. 1853–1865, 2017.

- [52] J. J. Qian, W. K. Wong, H. M. Zhang, J. Xie, and J. Yang, “Joint optimal transport with convex regularization for robust image classification,” IEEE Transactions on Cybernetics, p. , 2020.

- [53] R. Bhatia, T. Jain, and Y. Lim, “On the Bures-Wasserstein distance between positive definite matrices,” Expositiones Mathematicae, vol. 37, no. 2, pp. 165–191, 2019.

- [54] R. Wang, Z. Wu, Z. Weng, J. Chen, G.-J. Qi, and Y.-G. Jiang, “Cross-domain contrastive learning for unsupervised domain adaptation,” arXiv preprint arXiv:2106.05528, 2021.

- [55] C. Park, J. Lee, J. Yoo, M. Hur, and S. Yoon, “Joint contrastive learning for unsupervised domain adaptation,” arXiv preprint arXiv:2006.10297, 2020.

- [56] O. V. Aaron van den Oord, Yazhe Li, “Representation learning with contrastive predictive coding,” arXiv preprint arXiv:1807.03748, 2018.

- [57] V. Verma, T. Luong, K. Kawaguchi, H. Pham, and Q. Le, “Towards domain-agnostic contrastive learning,” in Proceedings of the 38th International Conference on Machine Learning, 2021.

- [58] F. Schroff, D. Kalenichenko, and J. Philbin, “Facenet: A unified embedding for face recognition and clustering,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2015, pp. 815–823.

- [59] S. Wang and L. Zhang, “Self-adaptive re-weighted adversarial domain adaptation,” in Proceedings of the Twenty-Ninth International Joint Conference on Artificial Intelligence, 2020, pp. 3181–3187.

- [60] W. Deng, L. Zheng, Y. Sun, and J. Jiao, “Rethinking triplet loss for domain adaptation,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 31, no. 1, pp. 29–37, 2021.

- [61] C.-X. Ren, X.-L. Xu, and Z. Lei, “A deep and structured metric learning method for robust person re-identification,” Pattern Recognition, vol. 96, p. 106995, 2019.

- [62] K.-K. Huang, C.-X. Ren, H. Liu, Z.-R. Lai, Y.-F. Yu, and D.-Q. Dai, “Hyperspectral image classification via discriminative convolutional neural network with an improved triplet loss,” Pattern Recognition, vol. 112, p. 107744, 2021.

- [63] H.-M. Yang, X.-Y. Zhang, F. Yin, and C.-L. Liu, “Robust classification with convolutional prototype learning,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 3474–3482.

- [64] M. Li, T. Zhang, Y. Q. Chen, and A. J. Smola, “Efficient mini-batch training for stochastic optimization,” in Proceedings of the 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, 2014, pp. 661–670.

- [65] X. Glorot and Y. Bengio, “Understanding the difficulty of training deep feedforward neural networks,” in Proceedings of the 13th International Conference on Artificial Intelligence and Statistics, vol. 9, 2010, pp. 249–256.

- [66] B. Q. Gong, Y. Shi, F. Sha, and K. Grauman, “Geodesic flow kernel for unsupervised domain adaptation,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2012, pp. 2066–2073.

- [67] T. Ringwald and R. Stiefelhagen, “Adaptiope: A modern benchmark for unsupervised domain adaptation,” in Proceedings of the IEEE Winter Conference on Applications of Computer Vision, 2021, pp. 101–110.

- [68] K. Saenko, B. Kulis, M. Fritz, and T. Darrell, “Adapting visual category models to new domains,” in Proceedings of European Conference on Computer Vision, 2010, pp. 213–226.

- [69] H. Venkateswara, J. Eusebio, S. Chakraborty, and S. Panchanathan, “Deep hashing network for unsupervised domain adaptation,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017, pp. 5385–5394.

- [70] X. C. Peng, Q. X. Bai, X. D. Xia et al., “Moment matching for multi-source domain adaptation,” in Proceedings of the IEEE International Conference on Computer Vision, 2019, pp. 1406–1415.

- [71] M. S. Long, Y. Cao, Z. J. Cao, J. M. Wang, and M. Jordan, “Transferable representation learning with deep adaptation networks,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 41, no. 12, pp. 3071–3085, 2019.

- [72] J. Donahue, Y. Jia, O. Vinyals, J. Hoffman, N. Zhang, E. Tzeng, and T. Darrell, “Decaf: A deep convolutional activation feature for generic visual recognition,” in Proceedings of the 31th International Conference on Machine Learning, 2014.

- [73] K. M. He, X. Y. Zhang, S. Q. Ren, and J. Sun, “Deep residual learning for image recognition,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2016, pp. 770–778.

- [74] E. Zhong, W. Fan, Q. Yang, O. Verscheure, and J. Ren, “Cross validation framework to choose amongst models and datasets for transfer learning,” in Machine Learning and Knowledge Discovery in Databases, 2010, pp. 547–562.

- [75] Z. Pei, Z. Cao, M. Long, and J. Wang, “Multi-adversarial domain adaptation,” in Proceedings of the Thirty-two AAAI Conference on Artificial Intelligence, 2018.

- [76] Z. Yue, Q. Sun, X.-S. Hua, and H. Zhang, “Transporting causal mechanisms for unsupervised domain adaptation,” in Proceedings of the IEEE International Conference on Computer Vision, 2021, pp. 8599–8608.

- [77] S. Li, M. Xie, F. Lv, C. H. Liu, J. Liang, C. Qin, and W. Li, “Semantic concentration for domain adaptation,” in Proceedings of the IEEE International Conference on Computer Vision, 2021, pp. 9102–9111.

- [78] Y. Zhang, T. Liu, M. Long, and M. Jordan, “Bridging theory and algorithm for domain adaptation,” in Proceedings of the 36th International Conference on Machine Learning, 2019, pp. 7404–7413.

- [79] L. V. D. Maaten and G. E. Hinton, “Visualizing data using t-SNE,” Journal of Machine Learning Research, vol. 9, pp. 2579–2605, 2008.

- [80] Y. Zhu, F. Zhuang, J. Wang, G. Ke, J. Chen, J. Bian, H. Xiong, and Q. He, “Deep subdomain adaptation network for image classification,” IEEE transactions on neural networks and learning systems, vol. 32, no. 4, pp. 1713–1722, 2020.