∎

11email: [email protected]; [email protected]

Building a connected graph for action reasoning

Abstract

One of the challenges of task planning is to find out what causes the planning failure and how to handle the failure intelligently. This paper shows how to achieve this. The idea is to build a connected graph, where each verticle represents a set of compatible states, and each edge represents an action. So that given the initial states and goal states, there will always be solutions. This paper shows how to introduce extra actions to extend action models to make the graph to be connected: i) explicitly define types, states, conflicts, actions in the action model file; ii) add extra actions by simplifying the preconditions of the existing actions; iii) add extra actions for the state that will never be the effect of any action. The implementation was evaluated in five different experiments on object search and manipulation.

Keywords:

Action reasoning Connected graph Failure explanation Extra actions1 Introduction

Your text comes here. Separate text sections with

2 Domain formulation

The action domain description consists of (i) types, (ii) states, (iii) conflicts and (iv) actions. There are two points to focus on: 1) states are limited by types, which is mainly to generate extra actions for achiving any state transition; 2) states that cannot exist at the same time are defined by conflicts, which are to ensure non conflicts in extra actions.

2.1 Types

Types are templates for logical facts that can be classes (e.g. room, apple, water cup), features (e.g. red, with lid). All types are designed to be time-independent that means each of them will stay the same during planning. Types are defined as the following.

types {

room(R).

object(O).

apple(A).

}

The type apple(A) defines a template means A, which will be a real instance when this template is used, is an apple.

2.2 States

Each state is a template for the condition that a person or an object is in. States are divided into static states, which indicate they are time-independent, and dynamic states, which can be changed during the planning.

static {

connected(A,B,C) : door(A) & room(B) & room(C).

locInRoom(A,B) : location(A) & room(B).

}

dynamic {

isHeld(A,B) : object(A) & hand(B).

opened(A) : door(A).

closed(A) : door(A).

isNear(B) : human(B) | location(B).

isPlaced(A,B) : object(A) & location(B).

}

As shown above, each state consists of two parts: head (left part), which defines the name and parameters, and requirements (right part), which are types limit all parameters. The requirements can be in many cases, which are connected by symbol ; and in each case, the parameters must be limited by types.

2.3 Conflicts

Conflicts define which states cannot exist at the same time, and which states should be dealt with when conflicts are caused by new states.

conflicts {

closed(A) : opened(A) : opened(A).

opened(A) : closed(A) : closed(A).

}

Each conflict consists of three parts (divided by symbol :): left part is the emerging states, middle part is the existing states and right part is how to deal with them.

2.4 Actions

Actions are operator-schemas, which should be grounded/instantiated during execution. Each action has a name (including variables that should be instantiated with object), preconditions and effects. The effects of actions could be also conditional.

action {

name: moveTo(L,R).

precondition:

inRoom(R) & (isLocated(L,R) | locInRoom(L,R)) & isNear(L).

effect:

isNear(L) & (isNear(L1) isNear(L1)).

}

In the action above, symbol means not and means conditional (left part is effect and right part is conditions). Each parameter must be limited by states or types.

3 Extra actions

Even when all action models are constructed correctly, in some cases, planner cann’t find any plan for some goals. The reasons for these failures may be varied, but classified, we classify them into three categories:

-

1.

Absence: some goal can’t be achieved by any action, aka, it isn’t any effect of any action.

-

2.

Restriction: the preconditions of actions and environment prevent actions from working. It may be the range of objects that the action is applicable to, or the distribution of objects that makes the path impossible (for example, The key needed to open the room is locked in the room).

-

3.

Conflict: planner can’t find a plan for multi-goals, although each of them is the effect of some actions.

For the case of absence, a new action needs to be modeled based on state and conflict. According the specifications state and conflict, constructing new actions follows the following rules:

-

1.

The state is the effect of the action.

-

2.

The types limits the state and the negative of the state are designed to be the precondiitions of the action.

-

3.

If there is a conflict contains the state, conditional effecte are added (middle part of conflict is designed to be condtions, and right part effects).

The action of state opened(A) (its state is opened(A):door(A); conflict is opened(A):closed(A):closed(A)) is as shown below:

action {

name: action_opened(A).

precondition:

door(A) & opened(A).

effect:

opened(A) & (closed(A) closed(A)).

}

For the case of restriction, the solution is to simplify the preconditions of existing actions, only retain the types required by the preconditions, and remove the states. It should be noted that this method can’t deal with the problem of the scope of objects the action applicable to, and the related processing will be described in Section 4.

For the case of conflict, all dynamic states, except the case of absence, need to modeled as new actions. The modeled method follows the same rules as the case of absence.

4 Progressive reasoning framework and failure explanation

In this section, an extension of the classical planning method will be described. Based on this extended method, we will describe how to generate an explanation for the planning failure.

4.1 Progressive reasoning framework

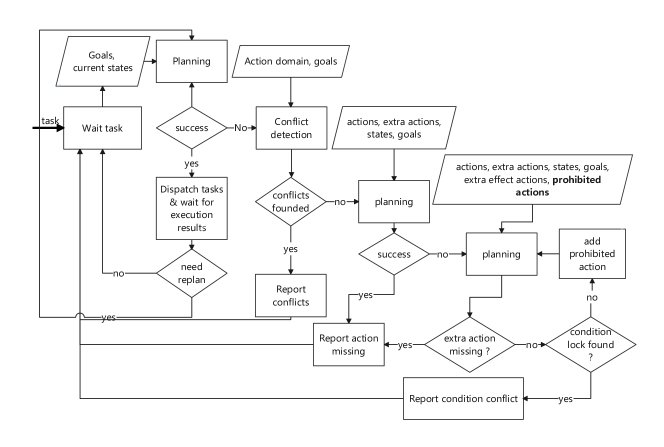

Two points have to be considered in the new planning method. First, it should have no or samll impact on the successful cases; then it should cover the widest possible range of situations. In Fig.1, the flowchart of the method has been shown. To reduce impact on the successful cases, the new method do none additional oprations before the planning fails. If the planner successfully get a plan for the goals based on current states, it will dispatch the plan and waits for the execution results. The planner decides whether to re-plan based on execution feedback and environmental changes. For details on handling these normal situations, please refer to our previous work CuiSC21.

Goal checking.

In Sec. 2.2, we define that each item of states contians two parts: head and requirements. They are used to do the first detection of the goals. Because the user may propose an goal that does not meet the pre-defined states, such as moving an object that cannot be moved. The workflow of the goal checking method is exhibited in Alg. 1. The algorithm first grounds the instaces involved by each goal (Line 3). Then the requirements of the goal is created by the operator (Line 4), and a set containing all states and types involving these instances is created (Line 5). If a requirement of the goal can not be met by , the items that conflict with it are filtered out and recored (Line 6-12).

Planning with virtual actions.

When a plan cannot be found using the defined actions, we consider that due to the lack of corresponding actions to complete the corresponding state transition. Then virtual actions are added to the action domain, which is used by the planner to make a new plan. If the planner can generate a new plan, the new plan will contain some virtual actions that describe the actions that are required to complete the task but are not provided by the action domain.

Planning with virtual-effect actions.

If planning with virtual action fails, it means that a solution cannot be found in the current environment. There is only one reason that can lead to this result: the preconditions of the actions are always not met, and the state transitions cannot be completed. This situation requires planning with virtual-effect actions. The workflow of the goal checking method is exhibited in Alg. 2. The algorithm first find a plan with all normal actions, virtual actions, and virtual-effect actions (Line 3). If the plan contains a virtual action, Alg. 2 stops subsequent calculations and returns this plan to the caller (Line 4-7). Otherwise, it will try to find a ring composed of virtual-effect actions. If an action in the plan is an virtual-effect action, the algorithm will check if the virtual-effect action and the already obtained virtual actions (Line 10). If a conflict is detected, the means contains or a precondition of is same to a effect of the normal action corresponding to an effect-virtual action in , the algorithm stops the follow-up and returns the result (Line 11-15). Otherwise, it will add to and (Line 16-17), set effect of as the new goal and then re-plan (Line 18-19).

4.2 Failure explanation

In traditional planning algorithms, failed planning often returns no results, and no effective information can be obtained at this time. Our extended, hierarchical planning algorithm, while maintaining the efficiency of the normal situation, can still return a result when the traditional planning fails. The virtual action or virtual-effect actions contained in the returned result are used to explain the failure. Each virtual action or virtual-effect action has only the fewest preconditions and one effect. When it appears in the result, that means that its effect cannot be normally satisfied. A virtual action means that the robot has no action to achieve its effect. For example, appears in the result, which means that the robot does not have the ability to open doors. A set of virtual-effect actions is used to locate the environmental factors. Their effects can be achieved through certain actions, but due to environmental constraints, the preconditions for the action to occur cannot be met. We use a simple example to illustrate this situation. The robot can open the door, but requires the room card, but the room card is locked in the room to be opened. In this way, a deadlock makes the robot unable to open the door. After our planning algorithm, we can get a ring to explain failure.

5 Case study

In this section, we will describe several type of case study. These cases cover instruction/knowledge conflicts, lack of capabilities, and environmental constraints.

5.1 Case study 1: small/big object

In a room, there are several objects, they are divided into two types by size: big or small. The robot can grab small objects, but can’t grab big objects. This rule is formalized as

| (1) |

As described in 2.2, is a state, is an attribute. Suppose there is an object, watermelon, it is big.

| (2) |

The user gives an command ”pickup the watermelon”. This command will be translated into the gaol of the task plan: . Before planning, conflict detection is performed to detect whether the task target conflicts with current knowledge:

| (3) | ||||

There is no item , a conflict will be derived by the program.

5.2 Case study 2: open door

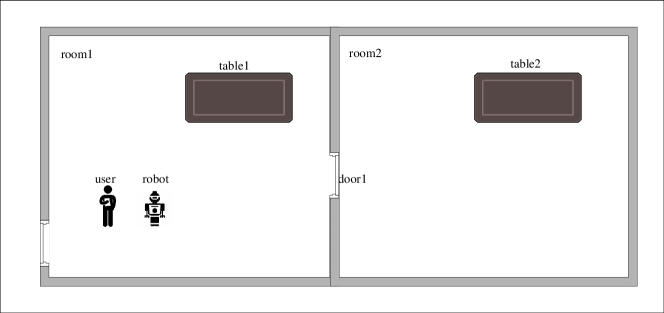

Consider a scenario where there are two rooms and , as shown in Fig.2, there is a door between them, and the robot is in and receives instructions to move to room B.

The robot has the ability to move between rooms, but can not open doors. The action models are described as in Tab. 1.

| action | preconditions | effects | |||||||

|

|

|

As shown in Tab. 2, the action models contain 3 types of states.

| action | preconditions | effects | |||||||

|

|

|

At this point, the robot is in room1() and the door is closed(). The planner cannot find a way to

According the method described in Sec. 3, an pure virtual action is defined as Tab. 3 shown. The traditional planner can’t find a solution, and can’t give the reason for the planning failure.

| action | preconditions | effects | |||

|

|