capbtabboxtable[][\FBwidth]

Bridging Mean-Field Games and Normalizing Flows with Trajectory Regularization††thanks: This work is supported in part by an NSF Career Award (DMS-1752934) and NSF DMS-2134168.

Abstract

Mean-field games (MFGs) are a modeling framework for systems with a large number of interacting agents. They have applications in economics, finance, and game theory. Normalizing flows (NFs) are a family of deep generative models that compute data likelihoods by using an invertible mapping, which is typically parameterized by using neural networks. They are useful for density modeling and data generation. While active research has been conducted on both models, few noted the relationship between the two. In this work, we unravel the connections between MFGs and NFs by contextualizing the training of an NF as solving the MFG. This is achieved by reformulating the MFG problem in terms of agent trajectories and parameterizing a discretization of the resulting MFG with flow architectures. With this connection, we explore two research directions. First, we employ expressive NF architectures to accurately solve high-dimensional MFGs, sidestepping the curse of dimensionality in traditional numerical methods. Compared with other deep learning approaches, our trajectory-based formulation encodes the continuity equation in the neural network, resulting in a better approximation of the population dynamics. Second, we regularize the training of NFs with transport costs and show the effectiveness on controlling the model’s Lipschitz bound, resulting in better generalization performance. We demonstrate numerical results through comprehensive experiments on a variety of synthetic and real-life datasets.

1 Introduction

Mean-field games (MFGs) are a powerful modeling framework for systems with a large number of interacting agents. They arise in the study of game theory [27, 28], economics [1, 3], finance [44, 12, 24], and industrial planning [48, 36, 64]. In general, the MFG formulation prescribes an identical objective for all agents and it seeks the optimal strategy over a time interval, which is analogous to solving for the Nash equilibrium in a finite -player game. When the strategies concern spatial movement with a particular transport cost, the MFG is reduced to the optimal transport (OT) problem, which finds rich applications in signal processing and machine learning [54, 42, 43, 5].

A key theoretical underpinning for MFGs is the characterization of its optimality condition in terms of the Hamilton-Jacobi-Bellman (HJB) equation. Obtaining the Nash equilibrium of an MFG is thus reduced to solving a system of nonlinear partial differential equations (PDEs), for which a plethora of numerical methods have been developed based on the Eulerian framework [2, 9, 10, 8, 34, 56, 63]. Nevertheless, the reliance on spatial discretization renders traditional approaches exponentially more expensive as the dimensionality grows. Hence, numerically solving MFGs in high dimensions remains a difficult problem, due to the curse of dimensionality. Meanwhile, machine learning-based approaches emerged lately. A recent method [59] successfully solves MFGs in quite high dimensions using deep neural networks. This method uses a Lagrangian-based approach to approximate the value function. However, besides penalizing the HJB equation, this method has to additionally solve the continuity equation that governs the evolution of the densities under the learned dynamics.

Rather than approximating the value function in MFGs by using neural networks as discussed in [59], we propose to approximate the trajectories instead. To this end, we establish a mathematical connection between the MFG framework and a popularly used family of generative models in deep learning—normalizing flows (NFs). Composed of a sequence of invertible mappings, NFs allow for the exact computation of the data likelihood. They can be used to model complex data distributions for density estimation and latent sampling [41]. Starting from the MFG problem, we reformulate it in terms of agent trajectories and canonically parameterize the discretization as an NF. As a result, the standard negative log-likelihood training of an NF is equivalent to a special case of the MFG without transport and interaction costs.

This connection between the MFGs and the NFs motivates us to explore two promising directions to improve the two. First, we approximate the trajectories of MFGs by employing expressive NF architectures, to accurately and efficiently solve high-dimensional MFG problems. The key novelty of our approach lies in its encoding of the continuity equation into the neural network, which mitigates the numerical errors incurred in the approximation and allows one to solve MFGs more accurately. We demonstrate the advantage of our approach in problems including OT, crowd motion, and multi-group path planning. Additionally, we conduct numerical analysis of a discretization scheme and explore the relationship between continuous and discretized MFGs. By characterizing the solution behaviors of discretized MFGs with the OT theory [58], we obtain an important insight, which sugggests that direct linear interpolation of the discretized problems in the temporal direction solves MFGs without the interaction term. Moreover, we adapt the universality theory from [60] to argue the effectiveness of using a particular kind of NFs—affine coupling flows—in parameterizing MFG trajectories. The resultant push-forward measure in the NF converges in distribution, corroborating accurate modeling of the population dynamics.

Second, we improve NFs by introducing the transport cost to their training, resulting in trajectory-regularized flows. Note that in high dimensions, there exists more than one flow that transforms one probability density to another. Therefore, the NF training problem is ill-posed and proper regularization is needed to incentivize the solution towards a more meaningful one. The OT theory suggests that this can be done via using the kinetic energy as the transport cost, which induces an OT plan that traverses the geodesic in the space of measures with the Wasserstein-2 metric [58]. We show by using a variety of synthetic datasets that in the absence of the transport cost, one cannot expect existing NF models to approximate the OT trajectory. Additionally, we find that the intermediate flows incur significantly less distortion when trained with transport costs. Regulating the kinetic energy, therefore, serves as an effective way of controlling the Lipschitz bound, which is intimately related to the model’s generalization performance as well as its ability to counter adversarial samples [7, 14]. Traditionally, the control over the Lipschitz bound for a neural network is implemented through explicit regularization such as the weight decay. To the best of our knowledge, this is the first work regularizing NFs by using transport costs and relating the regularization to the Lipschitz bound of the trained neural network, which proves to be more robust and effective than weight decay. We demonstrate the effectiveness of transport-based regularization on a variety of synthetic and real-life datasets and show improvements over popularly used NF models, such as RealNVP and the neural spline flow [17, 22].

Related Works

Variational MFGs are a generalization of the dynamic formulation of OT [58], where the final density matching is encouraged but not enforced and transport costs other than the kinetic energy can be considered. Traditional methods for solving OT and variational MFG problems are well-studied in low dimensions [2, 9, 10, 8, 34, 56, 63, 15]; and machine learning-based approaches emerged recently for solving high-dimensional MFGs. The work [59] is the first on solving high-dimensional deterministic MFGs by approximating the value function using deep neural networks. The work [47] solves high-dimensional MFGs in the stochastic setting using the primal-dual formulation of MFGs, where training is conducted similarly to that of generative adversarial networks [25, 5].

Our proposed trajectory-regularized flow can be built on any discrete NF. In general, existing NF models impose a certain structure on the flow mapping to enable fast evaluation of the log-determinant of the Jacobian. For a non-exhaustive list, RealNVP and NICE combine affine coupling flows to build simple yet flexible mappings [17, 16], while NSF and Flow use splines and a mixture of logistic CDFs as more sophisticated coupling functions [22, 30]. MAF and IAF are autoregressive flows with affine coupling functions [57, 40], while NAF and UMNN parameterize the coupling function with another neural network [31, 62].

Not the focus of this work, continuous NF models consider the ODE limit of the discrete version, with significant changes to the computation of the parameter gradient and the change of variables. In these models, neural ODE outlines the theoretical framework [13] and FFJORD uses the Hutchinson estimator to approximate the trace term incurred in the density evaluation [26]. OT-Flow introduces the OT cost to continuous NFs, suggesting a parameterization of the HJB potential that allows for exact trace computations [55]. In addition, recent works found that the transport cost can enhance and stabilize the training of continuous NFs [23]. For example, the OT theory suggests that the optimal agent trajectories are straight; this characterization allows the ODE solver in OT-Flow to integrate with larger time steps and reduce the computational cost [55].

Roadmap

The rest of the paper is organized as follows. We provide the mathematical background for MFGs and NFs in Section 2 and elaborate the connections between the two in Section 3, via a reformulation and discretization of the MFG objective. In Section 4, we perform theoretical analysis to relate the discretized and continuous MFG problems, as well as establishing universality theorems for using NFs to solve MFGs. In Section 5, we provide numerical results that demonstrate the effectiveness and accuracy of solving high-dimensional MFGs by using NFs. Meanwhile, we illustrate the effect of regularized NF training, stress the ability of acquiring a lower Lipschitz bound, and show its superiority to weight decay in real-life datasets.

2 Mathematical Background

2.1 Mean-Field Games

The study of MFGs considers classic -player games at the limit of [45, 33, 32]. Assuming homogeneity of the objectives and anonymity of the players, we set up an MFG with a continuum of non-cooperative rational agents distributed in a spatial domain and a time interval . For any fixed , we denote the population density of agents by , where is the space of all probability densities over . For an agent starting at , their position over time follows a trajectory governed by

| (1) |

where specifies an agent’s action at a given time. For simplicity, there are no stochastic terms in (1) in this work. Then, each agent’s trajectory is completely determined by . To play the game over an interval , each agent seeks to minimize their objective:

| (2) | ||||

Each term in the objective denotes a particular type of cost. The running cost is incurred due to each agent’s action. A commonly used example for is the kinetic energy , which accounts for the total amount of movement along the trajectory. The running cost is accumulated through each agent interacting with one another or with the environment. For example, this term can be an entropy that discourages the agents from grouping together, or a penalty for the agents to collide with an obstacle in the space [59]. The terminal cost is computed from the final state of the agents. Typically, measures a discrepancy between the final distribution and a desirable distribution .

To solve the MFG, we define the value function as

| (3) |

One can show that and are solutions to the following HJB equation and continuity equations [45, 33, 32]

| (4) |

where is the Hamiltonian ; is the initial population density at ; and denotes the optimal strategy for an agent at position and time . It can be shown that the optimal strategy satisfies . We note that the continuity equations in the last two lines of (4) are a special case of the Fokker-Planck equation with no diffusion terms.

The setup (1)–(2) concerns the strategy of an individual agent. Under suitable assumptions, the work [45] developed a macroscopic formulation of the MFG that models the collective strategy of all agents. Suppose there exist functionals such that

where is the variational derivative. Then, the functions and satisfying (4) coincide with the optimizers of the following variational problem:

| (5) |

This formulation is termed the variational MFG and it serves as the main optimization problem this work solves. It is known that traditional numerical PDE methods that solve (4) are computationally intractable in dimensions higher than three, as the number of grid points grows exponentially. This challenge motivates us to solve the MFG problem with parameterizations of the agent trajectory based on NFs.

2.2 Normalizing Flows

NFs are a family of invertible neural networks that find applications in density estimation, data generation, and variational inference [16, 17, 22, 30, 57, 40, 31]. In machine learning, NFs are considered deep generative models, which include variational autoencoders [38] and generative adversarial networks [25] as other well-known examples. The key advantage of NFs over these alternatives is the exact computation of the data likelihood, which is made possible by invertibility.

Mathematically, suppose we have a dataset generated by an underlying data distribution ; that is, . We define a flow to be an invertible function parameterized by , and a normalizing flow to be the composition of a sequence of flows: parameterized by . To model the complex data distribution , the idea is to use an NF to gradually transform a simple base distribution to . Formally, the transformation of the base distribution is conducted through the push-forward operation , where for all measurable sets . The aim is that the transformed distribution resembles the data distribution. Thus, a commonly used loss function for training the NF is the KL divergence:

| (6) |

If the measure admits density with respect to the Lebesgue measure, the density associated with the push-forward measure satisfies the change-of-variable formula [41]:

| (7) |

where is the Jacobian of . This allows one to tractably compute the KL term in (6), which is equivalent to the negative log-likelihood of the data up to a constant (independent of ):

| (8) |

where are the flow inverses, and .

We remark that there exists flexibility in choosing the base distribution , as long as it admits a known density to evaluate on. In practice, a typical choice is the standard normal . In addition, (8) requires the log-determinant of the Jacobians, which take time to compute in general. Existing NF architectures sidestep this issue by meticulously using functions that follow a (block) triangular structure [41], so that the log-determinants can be computed in time from the (block) diagonal elements.

One popular family of NF models is the coupling flow [41], which composes individual flows in the following form

| (9) |

where , is the called the conditioner, and is the coupling function. Suppose is invertible, then the inverse is:

| (10) |

In common scenarios, is defined element-wise, such that invertibility is ensured by strict monotonicity. For example, a simple yet expressive choice is the affine function . This gives rise to the RealNVP flow [17]. Other popular coupling functions include splines, mixture of CDFs, and functions parameterized by neural networks, which are shown to be more expressive than the affine coupling function [22, 50, 21, 30, 35, 31].

3 Bridging MFGs and NFs

In this section, we reformulate the MFG problem as a generalization of the NF training loss. This reformulation relates the two models and opens opportunities to improve both. The contribution is two-fold. On the one hand, we solve high-dimensional MFG problems by using an NF parameterization. The NF model encodes the discretized trajectory of the agents in its network architecture, which allows for efficient optimization through evaluation of the log-determinants in cost. We show that the expressitivity of the NF architecture allows one to tractably solve high-dimensional MFG problems with small error. On the other hand, under the MFG framework we introduce a model-agnostic regularization to improve the training of NFs. In the MFG language, existing NF models are trained by using only the terminal cost (i.e., the KL loss). With the introduction of the transport cost, the intermediate flows become better behaved and more robust, owing to a smaller Lipschitz bound. It turns out that the learned NF improves the matching of the densities, as evident in various synthetic and real-life examples.

3.1 Trajectory-Based Formulation of MFGs

Rather than solving for the density and the action , we reparameterize the problem (5) to directly work with agent trajectories, from which and can be derived. Let be the measure that admits as its density for all . Define the agent trajectory as , where is the position of the agent starting at and having traveled for time . It satisfies the following ordinary differential equation:

| (11) |

The evolution of the population density is determined by the movement of agents. Thus, is simply the push-forward of under ; namely, , whose associated density satisfies

| (12) |

where is a Radon-Nikodym derivative. Note that this definition of automatically satisfies the continuity equation as well as the initial condition for in (5). Unless specified otherwise, we assume and use the transport cost from now on. Here, is treated as a model (hyper-)parameter. Furthermore, we can apply a change of variables on (12) to rewrite the integral involving :

Similarly, we can rewrite the interaction cost and the terminal cost as:

| (13) | ||||

Therefore, (5) becomes:

| (14) | ||||

It is worth noting that (14) removes the continuity-equation constraint. The reformulated problem optimizes over agent trajectories , which allows one to parameterize with an NF. In addition, the remaining constraint will be automatically integrated into the NF, such that it can be trained in an unconstrained manner.

3.2 Discretization of MFG Trajectories with NFs

Starting from the base density , each flow in the NF advances the density one step forward. Hence, the NF model specifies a discretized evolution of the agent trajectories. Formally, we discretize the trajectory in time with regular grid points . Thus, the grid spacing is Denote . By approximating with the forward difference, the transport cost becomes:

| (15) |

Moreover, suppose that the terminal cost is computed as the KL divergence as in standard NFs:

| (16) |

For simplicity of exposition, for now we omit the interaction term (that is, ). We can discretize the interaction cost , similarly to (15), given its exact form. Subsequently, we will give numerical examples for the case (see Sections 5.1.2 and 5.1.3).

For any , the discretized objective value converges to the continuous version at . However, we show later that the optimal discretized and continous objective values in fact agree for any fixed number of grid points used. Hence, there introduce no additional errors by discretizing the original MFG problem, in the absence of interaction costs.

To solve the discretized MFG, we define the flow maps for as , where

| (17) |

Here, we leverage the semi-group property to decompose the agent trajectories into a sequence of flow maps. It follows that , and we parameterize each by a flow model . Denote , , and . The discretized MFG problem with flow-parameterized trajectories is:

| (18) |

where are weights.

By comparing (18) to the NF optimization problem (8), we see that training a standard NF is equivalent to solving a special MFG with no transport and interaction costs. In the past, many highly flexible flows were developed that are capable of solving this special MFG [31, 22, 17, 39, 30]. As a result, the trajectory-based formulation opens the door to employ expressive NFs to accurately solve MFGs. In addition, note that the density evolution can be directly obtained from the agent trajectories, which are the outputs of the individual flows in the NF model. Thus, this formulation sidesteps the additional effort required to solve the Fokker-Planck equation (4), resulting in a more efficient solution of the population dynamics.

We also note that the computation of (18) involves both a forward (normalizing) and an inverse (generating) pass of the NF: the forward pass computes via (8) and the inverse pass yields the transport cost. For NFs that have comparable run time for passes in both directions (e.g. coupling flows), adding the transport cost only doubles the overall computation time. For NFs where the inverse pass is much slower than the forward pass (or vice versa), such as autoregressive flows [41], the additional computational effort associated with the transport cost may be prohibitive. To remedy this, we propose an alternative, equivalent formulation that incurs less overhead.

Alternative Formulation

Recall that the forward agent trajectory is defined in (11), such that . Equivalently, we can consider the backward trajectory :

| (19) | ||||

Therefore, . As a result, the objective (18) becomes:

| (20) |

where are the flow inverses, and . Not that the evaluation of requires the inverse of , which is expressed in the ’s defined in (8). We defer the detailed derivation to Appendix B.

The transport cost in the equivalent formulation (20) can be computed via a single forward pass on the training data, which can be done together with the negative log-likelihood computation. As a result, the addition of the transport cost incurs minimal overhead compared to the standard NF training.

3.3 Regularizing NF Training with MFG Costs

The aforementioned method for solving high-dimensional MFGs using NFs leads to the view of NFs as a special type of MFG problems. Comparing the MFG formulation (18) with the standard NF training (8), one can interpret the MFG transport cost as a regularization term in the NF training with strength ; we call this regularization a trajectory regularization. It is straightforward to implement it on top of any existing discrete NF models (not necessarily restricted to coupling flows). Since the transport cost aggregates squared differences between the input and the output of each flow, the regularization encourages smoothness in the intermediate transforms.

From the perspective of inverse problems, there exist many flows (transport plans) that can transform the base to . Therefore, regularization helps ameliorate the inevitably ill-posed nature of NF training. Through OT theory, the regularized evolution of densities is characterized as the geodesic connecting and in the space of measures equipped with the Wasserstein-2 metric [4]. Since is always chosen to be absolutely continuous for NFs, the OT between the two densities will be a unique and bijective mapping known as the Monge map, . The same result holds for the discretized MFG problem, shown later in Theorem 4.4. Therefore, the NF training problem is no longer ill-posed. Furthermore, the optimal trajectory is then a linear interpolation between each point and its destination : .

From the perspective of machine learning, the transport cost provides an effective framework to control the flow’s Lipschitz bound, which closely correlates with the robustness and the generalization capability [53, 14]. Note that by enforcing smoothness in the time domain, the trajectory regularization also encourages smoothness in the spatial domain, owing to the triangle inequality:

| (21) |

where , and is the NF model. By regularizing with the transport cost as in (18), the right-hand side can be controlled.

As observed by the authors of [7], explicit regularization methods such as weight decay has little impact on the model’s generalization gap. In contrast, the trajectory regularization is implicit in that it imposes no direct penalty on the parameters. We find it to be much more effective than weight decay in reducing the NF’s Lipschitz bound. We will demonstrate in the numerical experiments that a regularized evolution can reduce overfitting and improve density estimation.

4 Theoretical Analysis

We first characterize the solutions of the MFG problem and its discretized analogue, when the interaction cost is absent and the transport cost is the kinetic energy. We prove that the discretized objective converges to the continuous version linearly, and that the minimum values agree between the two problems, for any number of discretized points. Through reduction to an OT problem, we show uniqueness of the optimizer for discretized MFG problems, thereby alleviating ill-posedness of the NF training objective.

In addition, we show that with mild assumptions on the MFG solution, certain classes of normalizing flows can be used to approximate the optimal trajectories of any MFG problem in locally. Our results build on the work [60], which establishes universality results for a broad class of coupling flows. In addition, we state that under the learned mapping, the evolution of the probability measure converges to the ground truth in distribution, at every discretized time step. We sketch the intuitions here and defer the proofs to the appendix.

4.1 Relating Continuous and Discretized MFGs

Our analysis focuses on the MFG problem without the interaction cost. We recall the discretized MFG problem with here for convenience:

| (22) | ||||

First, we note that in the absence of the interaction cost, the discretized objective converges to the continuous analogue in when evaluated on any .

Theorem 4.1.

Assume . The discretized MFG objective value converges to the continuous version at for any , when both objectives are finite:

The proof is based on a straightfoward Taylor expansion, similar to that used in finite difference error analysis. In general, one can use a higher order scheme to discretize (14) in time, yielding a higher order convergence rate. For example, the composite Simpson’s rule is used in our numerical experiments.

Next, we consider the optimization and establish two lemmas that characterize the optimal trajectories of both the discretized and the continuous MFG problems.

Lemma 4.2.

Assume is absolutely continuous with density . Let be an optimizer for (14) with . Define . Then, .

Lemma 4.2 can be shown by recasting the MFG as a suitable OT problem. Then, the straight trajectories follow from the OT theory. A similar result holds for the discretized MFG problem.

Lemma 4.3.

Assume is absolutely continuous with density . Let be an optimizer for (22) with . Then, .

Lemma 4.3 can be shown by recasting the problem in a similar fashion, followed by solving its KKT system. Combining Lemma 4.2 and Lemma 4.3, we can show that the optimal value of the discretized problem agrees with its continuous counterpart.

Theorem 4.4.

Theorem 4.4 has two important implications. First, when we use an NF to parameterize and solve the MFG problem with no interaction costs, the optimizer incurs no discretization error. Therefore, it is not necessary to use higher order discretization schemes for the problem. Second, the discretized MFG problem, which corresponds to the training objective (18) modulo parameterization, admits a unique solution. In other words, the regularized NF training is well-posed.

4.2 Universality of NFs for Solving MFGs

The universality results from [60] indicate that NFs built with affine coupling transforms and sufficiently expressive conditioners are universal approximators for locally in . Here, , where is diffeomorphic, and is open and diffeomorphic to . As a result, affine coupling flows are well-suited for approximating the optimal MFG trajectories. To be more precise, we consider the following sets introduced in [60].

Definition 4.5.

Define the set of single coordinate affine coupling flows with conditioner class as , where is an affine coupling flow. Further, define the set of invertible neural networks , which is a family of normalizing flows with flow transforms from the class of invertible functions .

Note that the affine coupling flows used in our numerical experiments belong to , where is the class of ReLU networks. It turns out that the universality of provides an ideal characterization for the approximate solutions of (14).

Theorem 4.6.

Let be sup-universal for and contain piecewise functions. For a fixed , let the optimizer of (14) be and its evaluation on the grid points be . Assume . Then, for all , , and compact sets , there exists such that

This result can be directly obtained from the conclusion in [60]. We remark that the conditions on can be satisfied by fully connected networks equipped with the ReLU activation. In addition, we show that the push-forward measures from the approximated optimal trajectories converge to the ground truth in distribution. This ensures the validity of the learned evolution of the agent population.

Theorem 4.7.

Let be absolutely continuous with a bounded density , and be the optimizer for (14) such that . Then, for each , there exists a sequence of normalizing flows such that

where denotes convergence in distribution.

The proof is based on the Lipschitz-bounded definition of convergence in distribution. See the proof in the appendix.

5 Numerical Experiments

This section contains numerical results into two parts. The first part showcases NFs being an effective parameterization for solving high-dimensional MFG problems. The second part showcases the use of MFG transport costs to improve the robustness of NF models for generative modeling.

5.1 Solving MFGs with NFs

In the first part, we follow the settings in [59] to construct two MFG problems. They are designed so that the behavior of the MFG solutions is invariant to dimensionality, when projected onto the first two components. This property allows for qualitative evaluation through visualization. As the dimensionality increases from 2 to 100, the problem becomes more challenging in terms of the neural network’s capacity and the computational complexity. We further extend our numerical experiments to multi-group path planning. In these experiments, we use spline flows as it is commonly believed that they are more expressive than coupling flows.

5.1.1 OT in High Dimensional Spaces

In this experiment, we devise a dynamically formulated OT problem that can be solved efficiently with classic numerical methods in [2, 9, 10, 8, 34, 56, 63, 15]. The optimization problem is the following:

| (23) |

Let denote the density function of a multivariate Gaussian with mean and covariance . We set the initial density to be and the terminal density to be , where , and are the first two standard basis vectors.

Contrary to MFGs, the OT problem imposes a strict constraint on the matching of the terminal density. To handle this, we cast the OT as a variational MFG problem by penalizing the mismatch of the terminal density using the KL divergence, and we parameterize the MFG with the coupling version of the neural spline flow (NSF-CL) [22], which employs flexible rational-quadratic coupling functions to learn expressive transformations. Overall, we find NSF-CL to be a robust architecture for solving different kinds of MFGs.

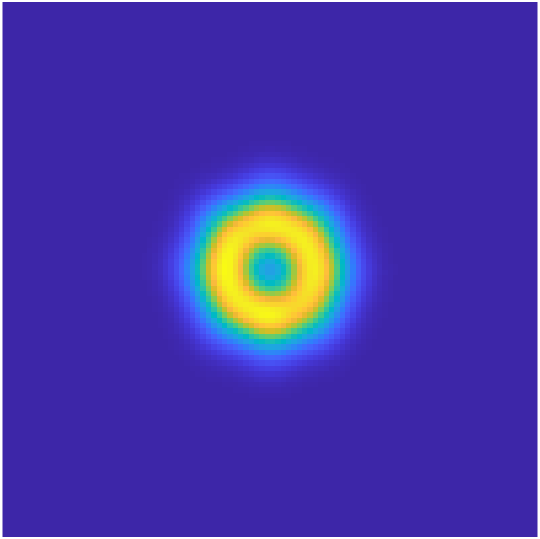

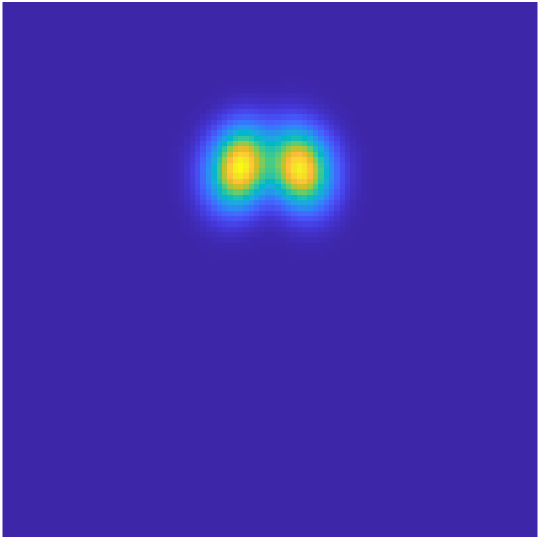

An illustration of the agent trajectories obtained from our method is given in Figure 1 and the evolution of the population density is displayed in Figure 2. We see that the NF learns to equally partition the initial Gaussian density and transport each partition to a separate Gaussian in an approximately straight trajectory. Moreover, it does so consistently as the problem dimension grows. More experimental results can be found in the appendix.

| Method | Dimension | Time/iter | Number of iters | ||

|---|---|---|---|---|---|

| Eulerian | 2 | 9.8 | 0.1725 | - | - |

| MFGNet | 2 | 9.9 | 0.1402 | 2.038 | 1e3∗ |

| Ours | 2 | 10.1 | 0.0663 | 0.549 | 1e5 |

| MFGNet | 10 | 10.1 | 0.1616 | 8.256 | 1e3∗ |

| Ours | 10 | 10.1 | 0.0641 | 0.561 | 1e5 |

| MFGNet | 50 | 10.1 | 0.1396 | 81.764 | 1e3∗ |

| Ours | 50 | 10.1 | 0.0641 | 0.584 | 1e5 |

| MFGNet | 100 | 10.1 | 0.1616 | 301.043 | 1e3∗ |

| Ours | 100 | 10.1 | 0.0622 | 0.625 | 1e5 |

We compute the MFG cost term and compare our results to a traditional Eulerian method [63] as well as the MFGNet [59], another deep learning approach based on continuous NFs. The Eulerian method is provably convergent, but its reliance on a discrete grid renders it infeasible for dimensions higher than three. For a fair comparison, we report the unweighted cost and treat the KL divergence as the terminal cost.

Table 1 demonstrates that the NF-based parameterization can solve MFG problems up to 100 dimensions while maintaining a consistent transport cost. Compared to the MFGNet, our approach yields a similar transport cost but a much lower terminal cost, entailing a more accurate density matching at the final time. We attribute the improvement in terminal matching to the trajectory-based parameterization of the MFG. By encoding the continuity equation (4) in the flow architecture, our approach sidesteps the errors incurred in the numerical schemes used in the MFGNet, when approximating the solution.

We remark that our model is optimized with ADAM [37] while the MFGNet is trained with BFGS. The superlinear convergence of BFGS allows the MFGNet to converge with fewer iterations, at the price of higher costs per update. In addition, our implementation is based on Pytorch, which effectively leverages GPU parallelism to achieve an optimal runtime scaling, up to 100 dimensions. While being slower than MFGNet in lower dimensions, our approach uses only one third of the time in , since its runtime is nearly constant in different dimensions.

5.1.2 Crowd Motion

In this experiment, we consider an MFG problem with nonzero interaction costs. Following the general setting in (14), we use a two-part interaction cost :

| (24) |

Here, acts as an obstacle that incurs a cost for an agent that passes through it. It is set to be the density of a bivariate Gaussian centered at the origin, with a magnitude of 50:

| (25) |

In dimensions higher than two, we evaluate the first two components of on to compute . The initial and the terminal densities are chosen as Gaussians: ; and the terminal cost is identical to that in the OT experiment (23). Intuitively, this problem aims at transporting an initial Gaussian density to a different location, while avoiding an obstacle placed at the origin. Moreover, the optimal trajectories are invariant to the dimensionality on the first two components, allowing us to visualize the dynamics in high dimensions.

Similar to the transport cost , we perform a discretization on (24), based on the NF parameterization. Note that is integrated in time in the MFG problem (14). Thus, discretizing the integral with the right-point rule yields

| (26) | ||||

| (27) |

where and . Similar to before, our discretization is consistent with the global error. In practice, we use fourth-order forward difference for the term in and approximate all integrals with Simpson’s rule. The overall numerical scheme converges to the continuous MFG with global error.

| Method | Dimension | Time/iter | Number of iters | |||

|---|---|---|---|---|---|---|

| Eulerian | 2 | 31.8 | 2.27 | 0.1190 | - | - |

| MFGNet | 2 | 33.0 | 2.29 | 0.1562 | 4.122 | 1e3∗ |

| Ours | 2 | 32.8 | 2.19 | 0.0417 | 0.559 | 1e5 |

| MFGNet | 10 | 33.0 | 2.29 | 0.1502 | 17.205 | 1e3∗ |

| Ours | 10 | 32.9 | 2.13 | 0.0436 | 0.568 | 1e5 |

| MFGNet | 50 | 33.0 | 1.91 | 0.1440 | 134.938 | 1e3∗ |

| Ours | 50 | 32.9 | 1.82 | 0.0381 | 0.581 | 1e5 |

| MFGNet | 100 | 33.0 | 1.49 | 0.2000 | 241.727 | 1e3∗ |

| Ours | 100 | 33.0 | 1.36 | 0.0464 | 0.625 | 1e5 |

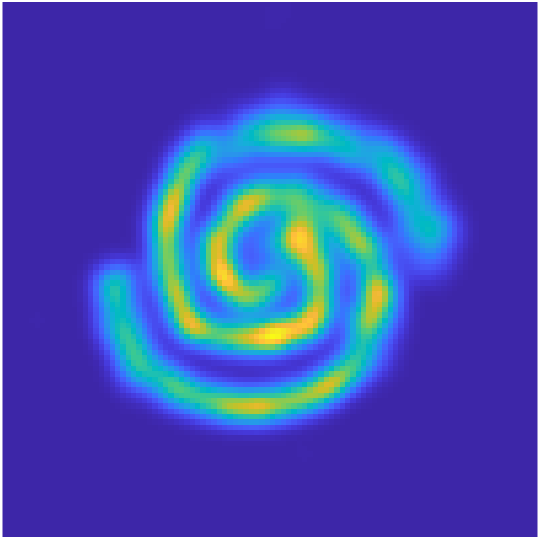

Similar to the OT experiment, we use the same NSF-CL flow [22] to solve the crowd motion problem from 2 to 100 dimensions and compare the computed cost terms to those of MFGNet in Table 2. Compared to the baseline, our method yields comparable transport cost but lower interaction and terminal costs, suggesting a more accurate characterization of the agents’ behavior in avoiding the conflicts with the obstacle, as well as a more authentic matching of the final population distribution. Similar to the MFGNet, our computed costs are approximately invariant with respect to the dimensionality, as further validated by the visualizations of the sampled trajectories shown in Figure 3. The agents plan their paths to circumvent the obstacle; moreover, trajectories of symmetric initial points are symmetric as well, which is expected from the problem setup. In Figure 4, we also show the optimal trajectories at different levels of aversion preference, further validating the robustness of the method. From left to right, the agents are farther away from the obstacle, while maintaining approximately symmetric trajectories and accurate terminal matching. The associated costs for each scenario are given in the appendix, together with more numerical results.

5.1.3 Multi-Group Path Planning

In the above crowd motion experiment, we demonstrated that the interaction cost can be used to devise the optimal trajectories for a single population, in the presence of obstacles. Here, we take one step further and investigate path planning when multiple populations are moving simultaneously. Therein, the interaction cost is used to discourage conflicts among different populations.

We consider a generalized MFG problem that accommodates multiple densities; this problem is also explored in [6, 61]. We consider populations and their associated trajectories , each of which is parameterized by an NF. Every population maintains an independent transport cost and an independent terminal cost , where are the initial and terminal distributions for the -th population, respectively.

To keep the setting simple yet illustrative, we consider an inter-group interaction cost that encourages different populations to avoid each other in their paths. In a real-life scenario, this can be thought of as path planning for multiple groups of drones, and the desired outcome is for them to arrive at their desired locations through short paths while minimizing collisions. Formally, the interaction cost is modeled by a Gaussian kernel , which decays exponentially as the radial distance between two populations increases.

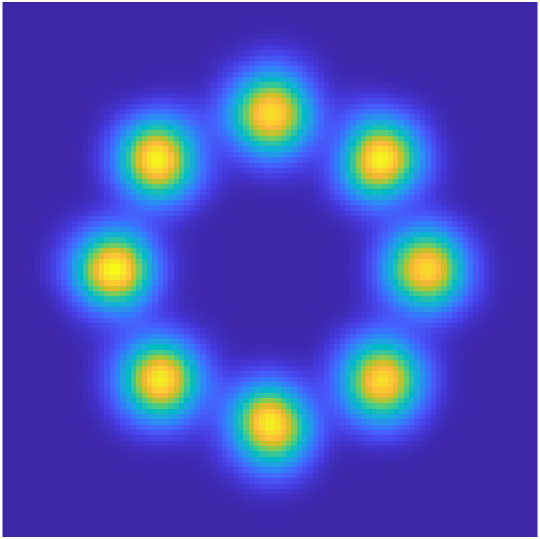

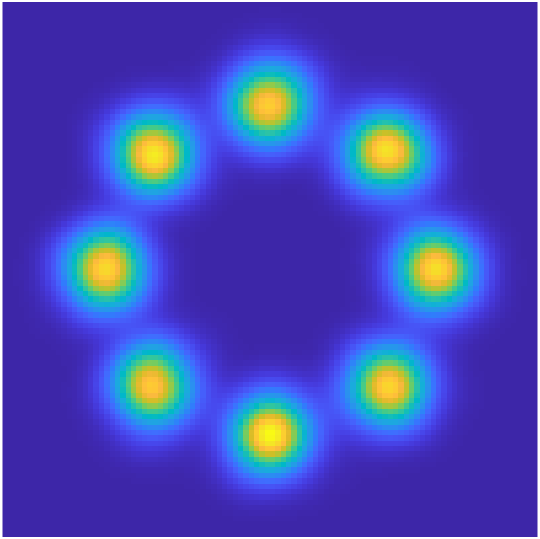

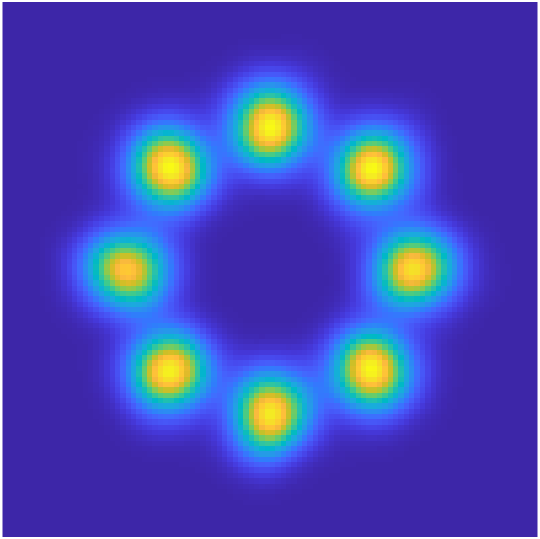

In Figure 5, we show results for problems in two and three dimensions. For the 2D experiment with two populations, we choose as the initial and terminal density for the first population, and for the second population. For the 3D experiment with two populations, we have . For the 2D experiment with eight populations, the densities are , where . Each population uses the density mirrored across the origin as its destination.

In these problems, the initial and the terminal densities are configured to create a full collision if the interaction term is absent. As the weights on the interaction cost increase, the populations coordinate a delicate dance to avert each other during travel. Note that the computed trajectories are approximately symmetric, which is expected according to the symmetric nature of the problem setup. We summarize the computed unweighted costs in Table 3. As higher weights are placed on the interaction cost, the populations spend more effort maneuvering and hence the transport cost increases.

| 2D, 2 populations | 3D, 2 populations | 2D, 8 populations | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 3.9755 | 0.8992 | 0.0038 | 0 | 5.9899 | 0.8897 | 0.0023 | 0 | 120.8807 | 12.5090 | 0.0067 |

| 3 | 5.5874 | 0.6556 | 0.0034 | 3 | 7.2835 | 0.6574 | 0.0017 | 1 | 125.7670 | 10.6740 | 0.0086 |

| 5 | 9.1048 | 0.4690 | 0.0099 | 5 | 10.8889 | 0.4677 | 0.0047 | 3 | 164.5073 | 6.0951 | 0.0089 |

5.2 Improving NF Training with MFGs

The standard training of NFs only considers minimizing the KL divergence (the terminal cost in the MFG), without transport and interaction costs. Therefore, intermediate distributions may be meaningless artifacts of the flow parameterization and they often come with enormous distortion. By viewing NFs as a parameterization of the discretized MFG, it is natural to introduce the transport cost into NF training. We showed in Theorem 4.4 that the training problem admits a unique mapping as its solution. In other words, the problem is no longer ill-posed.

More interestingly, the unique transport-efficient mapping tends to be well-behaved, in terms of the Lipschitz bound illustrated in (3.3). This behavior is closely related to the model’s complexity and the robustness of the neural network [7]. As a result, the weight on the trajectory regularization serves as an effective means of controlling the Lipschitz bound. With appropriate choices of the weight, we observe in a variety of tabular and image datasets that the trained model has a better generalization performance.

5.2.1 Synthetic Datasets

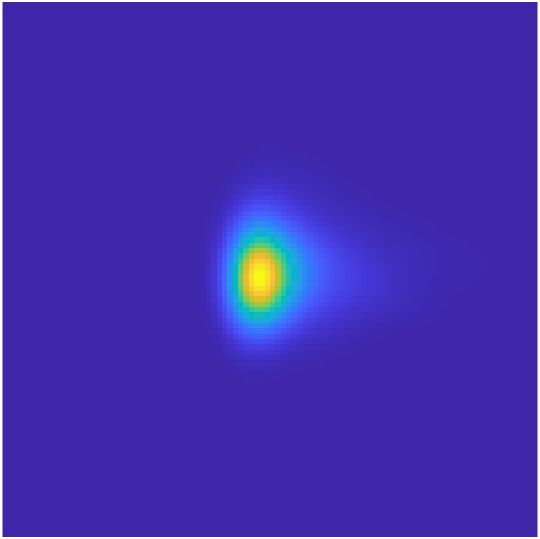

In this experiment, we compare the intermediate flows trained on 2D synthetic datasets, with and without the transport cost. The introduction of the transport cost encourages NFs to evolve the data to their desired destinations with the least amount of distortion. As a result, the intermediate flows are more sensible and visually appealing, as illustrated in Figure 6 (see more examples in the appendix; Figures 17, 18, 19). By comparing the NFs trained with and without the transport cost, we can see that in the absence of the regularization, existing NF models cannot be expected to learn trajectories that are approximately optimal under the cost. Instead, the learned trajectories are artifacts of the flow parameterization and they are sensitive to initialization.

Visually, NFs trained with the transport cost incur significantly less distortion, so it is not surprising that the Lipschitz bound for each flow and that for the whole model are much smaller. In Figure 6, we compare the Lipschitz bounds between the standard NF, the NF trained with weight decay, and the NF trained with trajectory regularization. It is evident that while weight decay has a small impact on the Lipschitz bound, the trajectory regularization yields more noticeable improvement.

5.2.2 Tabular Datasets

| Model | POWER | GAS | HEPMASS | MINIBOONE | BSDS300 |

|---|---|---|---|---|---|

| MAF (10) | 0.24 0.01 | 10.08 0.02 | -17.73 0.02 | -12.24 0.45 | 154.93 0.28 |

| MAF MoG | 0.30 0.01 | 9.59 0.02 | -17.39 0.02 | -11.68 0.44 | 156.36 0.28 |

| NAF | 0.60 0.02 | 11.96 0.33 | -15.32 0.23 | -9.01 0.01 | 157.43 0.30 |

| SOS | 0.60 0.01 | 11.99 0.41 | -15.15 0.10 | -8.90 0.11 | 157.48 0.41 |

| Quad. Spline | 0.64 0.01 | 12.80 0.02 | -15.35 0.02 | -9.35 0.44 | 157.65 0.28 |

| RNVP | 0.335 0.013 | 11.017 0.022 | -17.983 0.021 | -11.540 0.469 | 153.398 0.283 |

| NSF-AR | 0.650 0.013 | 13.001 0.018 | -14.145 0.025 | -9.662 0.501 | 157.546 0.282 |

| RNVP + OT | 0.374 0.013 | 11.063 0.022 | -17.879 0.0215 | -11.167 0.466 | 153.406 0.283 |

| NSF-AR + OT | 0.654 0.012 | 13.018 0.018 | -13.989 0.026 | -9.395 0.494 | 157.729 0.280 |

To demonstrate the benefit of training with transport costs, we replicate the density estimation experiments on popular benchmarks from the UCI repository and BSDS300 [18, 49], using the same setup as in [57]. Our approach requires no changes to the baseline architecture and hence any existing discrete NF is applicable. Here, we use two popular NF architectures, RealNVP and NSF-AR, which are representatives of coupling flows and autoregressive flows, respectively [17, 22]. The former uses simple (affine) coupling functions while the latter uses sophisticated (spline) coupling functions.

Table 4 indicates that NF models trained with OT (trajectory regularization) are able to achieve better performance on the test set across all tabular datasets. The Lipschitz bounds for RealNVP trained on the datasets Power, Gas, and BSDS300 are visualized in Figure 7 (for other dadtasets, see the appendix). Clearly, while regularization has a mixed impact on the model’s Lipschitz bound, the trajectory regularization reduces the bound effectively and improves the model’s generalization performance.

We can interpret the discretized points on the OT trajectories as landmarks that should be visited by each flow in the interim. Our training setup is thus analogous to DSN [46], which guides the learning of intermediate layers with intermediate classifiers. Consistent with their observation, the transport cost provides a natural mechanism for distributing losses throughout the network to improve convergence of the initial flows.

5.2.3 Image Datasets

To further demonstrate the usefulness of our transport-efficient model, we add transport costs to Glow [39], a prominent NF architecture well-suited for generating high-quality images. Similar to tabular data, we observe improvements in density estimation via training Glow with the transport cost, in a number of datasets.

| Model | MNIST | FMNIST | CIFAR-10 | SVHN | EUROSAT |

|---|---|---|---|---|---|

| Glow | 1.182 0.005 | 2.944 0.017 | 3.489 0.012 | 2.156 0.005 | 2.670 0.006 |

| Glow + OT | 1.142 0.005 | 2.901 0.017 | 3.465 0.012 | 2.146 0.005 | 2.658 0.006 |

In Table 5, we select five popular image datasets to compare the density estimation results. The selected datasets include both greyscale and color images, with sizes varying between and . The Glow implementation is down-scaled to fit on a single GPU; and the baseline results are similar to those reported by other works that follow this approach; e.g. [51]. In addition, we omit the 1x1 convolution transforms [39] in the transport cost, as they play a role similar to permutations. Overall, we observe improvements in the esitmated density across all five image datasets, with similar regularization weights.

6 Conclusion

In this work, we unravel connections between the training of an NF and the solving of an MFG. Based on this insight, we explore two research directions. First, we employ expressive flow transforms to accurately and efficiently solve high-dimensional MFG problems. We also approximation analysis for the proposed method. Compared to existing deep learning approaches, our approach encodes the continuity equation into the NF architecture and achieves a more accurate terminal matching. In addition, our implementation makes effective use of GPU parallelism to achieve fast computations in high dimensions. Second, we introduce transport costs into the standard NF training and demonstrate the effectiveness in controlling the network’s Lipschitz bound. As a result, NFs trained with appropriate trajectory regularization are shown to achieve better generalization performance in density modeling across tabular and image datasets.

References

- [1] Yves Achdou, Francisco J Buera, Jean-Michel Lasry, Pierre-Louis Lions, and Benjamin Moll. Partial differential equation models in macroeconomics. Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences, 372(2028):20130397, 2014.

- [2] Yves Achdou and Italo Capuzzo-Dolcetta. Mean field games: numerical methods. SIAM Journal on Numerical Analysis, 48(3):1136–1162, 2010.

- [3] Yves Achdou, Jiequn Han, Jean-Michel Lasry, Pierre-Louis Lions, and Benjamin Moll. Income and wealth distribution in macroeconomics: A continuous-time approach. The review of economic studies, 89(1):45–86, 2022.

- [4] Luigi Ambrosio, Nicola Gigli, and Giuseppe Savaré. Gradient flows: in metric spaces and in the space of probability measures. Springer Science & Business Media, 2008.

- [5] Martin Arjovsky, Soumith Chintala, and Léon Bottou. Wasserstein generative adversarial networks. In International conference on machine learning, pages 214–223. PMLR, 2017.

- [6] César Barilla, Guillaume Carlier, and Jean-Michel Lasry. A mean field game model for the evolution of cities. Journal of Dynamics & Games, 8(3):299, 2021.

- [7] Peter L Bartlett, Dylan J Foster, and Matus J Telgarsky. Spectrally-normalized margin bounds for neural networks. Advances in neural information processing systems, 30, 2017.

- [8] Jean-David Benamou and Yann Brenier. A computational fluid mechanics solution to the monge-kantorovich mass transfer problem. Numerische Mathematik, 84(3):375–393, 2000.

- [9] Jean-David Benamou and Guillaume Carlier. Augmented lagrangian methods for transport optimization, mean-field games and degenerate pdes. Journal of Optimization Theory and Applications, 167:1–26, 2015.

- [10] Jean-David Benamou, Guillaume Carlier, and Filippo Santambrogio. Variational mean field games. In Active Particles, Volume 1, pages 141–171. Springer, 2017.

- [11] Kamen Bliznashki. normalizing_flows. https://github.com/kamenbliznashki/normalizing_flows, 2020.

- [12] Pierre Cardaliaguet and Charles-Albert Lehalle. Mean field game of controls and an application to trade crowding. Mathematics and Financial Economics, 12(3):335–363, 2018.

- [13] Ricky TQ Chen, Yulia Rubanova, Jesse Bettencourt, and David Duvenaud. Neural ordinary differential equations. arXiv preprint arXiv:1806.07366, 2018.

- [14] Moustapha Cisse, Piotr Bojanowski, Edouard Grave, Yann Dauphin, and Nicolas Usunier. Parseval networks: Improving robustness to adversarial examples. In International Conference on Machine Learning, pages 854–863. PMLR, 2017.

- [15] Marco Cuturi. Sinkhorn distances: Lightspeed computation of optimal transport. Advances in neural information processing systems, 26:2292–2300, 2013.

- [16] Laurent Dinh, David Krueger, and Yoshua Bengio. Nice: Non-linear independent components estimation. arXiv preprint arXiv:1410.8516, 2014.

- [17] Laurent Dinh, Jascha Sohl-Dickstein, and Samy Bengio. Density estimation using real nvp. arXiv preprint arXiv:1605.08803, 2016.

- [18] Dheeru Dua and Casey Graff. UCI machine learning repository, 2017.

- [19] Tony Duan. normalizing-flows. https://github.com/tonyduan/normalizing-flows, 2019.

- [20] R. M. Dudley. Real Analysis and Probability. Cambridge Studies in Advanced Mathematics. Cambridge University Press, 2 edition, 2002.

- [21] Conor Durkan, Artur Bekasov, Iain Murray, and George Papamakarios. Cubic-spline flows. arXiv preprint arXiv:1906.02145, 2019.

- [22] Conor Durkan, Artur Bekasov, Iain Murray, and George Papamakarios. Neural spline flows. Advances in Neural Information Processing Systems, 32:7511–7522, 2019.

- [23] Chris Finlay, Jörn-Henrik Jacobsen, Levon Nurbekyan, and Adam Oberman. How to train your neural ode: the world of jacobian and kinetic regularization. In International conference on machine learning, pages 3154–3164. PMLR, 2020.

- [24] Dena Firoozi and Peter E Caines. An optimal execution problem in finance targeting the market trading speed: An mfg formulation. In 2017 IEEE 56th Annual Conference on Decision and Control (CDC), pages 7–14. IEEE, 2017.

- [25] Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. Generative adversarial nets. Advances in neural information processing systems, 27, 2014.

- [26] Will Grathwohl, Ricky TQ Chen, Jesse Bettencourt, Ilya Sutskever, and David Duvenaud. Ffjord: Free-form continuous dynamics for scalable reversible generative models. arXiv preprint arXiv:1810.01367, 2018.

- [27] Olivier Guéant, Jean-Michel Lasry, and Pierre-Louis Lions. Mean field games and applications. In Paris-Princeton lectures on mathematical finance 2010, pages 205–266. Springer, 2011.

- [28] Xin Guo, Anran Hu, Renyuan Xu, and Junzi Zhang. Learning mean-field games. Advances in Neural Information Processing Systems, 32, 2019.

- [29] Patrick Helber, Benjamin Bischke, Andreas Dengel, and Damian Borth. Eurosat: A novel dataset and deep learning benchmark for land use and land cover classification. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 12(7):2217–2226, 2019.

- [30] Jonathan Ho, Xi Chen, Aravind Srinivas, Yan Duan, and Pieter Abbeel. Flow++: Improving flow-based generative models with variational dequantization and architecture design. In International Conference on Machine Learning, pages 2722–2730. PMLR, 2019.

- [31] Chin-Wei Huang, David Krueger, Alexandre Lacoste, and Aaron Courville. Neural autoregressive flows. In International Conference on Machine Learning, pages 2078–2087. PMLR, 2018.

- [32] Minyi Huang, Peter E Caines, and Roland P Malhamé. Large-population cost-coupled lqg problems with nonuniform agents: individual-mass behavior and decentralized -nash equilibria. IEEE transactions on automatic control, 52(9):1560–1571, 2007.

- [33] Minyi Huang, Roland P Malhamé, Peter E Caines, et al. Large population stochastic dynamic games: closed-loop mckean-vlasov systems and the nash certainty equivalence principle. Communications in Information & Systems, 6(3):221–252, 2006.

- [34] Matt Jacobs, Flavien Léger, Wuchen Li, and Stanley Osher. Solving large-scale optimization problems with a convergence rate independent of grid size. SIAM Journal on Numerical Analysis, 57(3):1100–1123, 2019.

- [35] Priyank Jaini, Kira A Selby, and Yaoliang Yu. Sum-of-squares polynomial flow. In International Conference on Machine Learning, pages 3009–3018. PMLR, 2019.

- [36] Yanxiang Jiang, Yabai Hu, Mehdi Bennis, Fu-Chun Zheng, and Xiaohu You. A mean field game-based distributed edge caching in fog radio access networks. IEEE Transactions on Communications, 68(3):1567–1580, 2019.

- [37] Diederik P Kingma and Jimmy Ba. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980, 2014.

- [38] Diederik P Kingma and Max Welling. Auto-encoding variational bayes. arXiv preprint arXiv:1312.6114, 2013.

- [39] Durk P Kingma and Prafulla Dhariwal. Glow: Generative flow with invertible 1x1 convolutions. Advances in neural information processing systems, 31, 2018.

- [40] Durk P Kingma, Tim Salimans, Rafal Jozefowicz, Xi Chen, Ilya Sutskever, and Max Welling. Improved variational inference with inverse autoregressive flow. Advances in neural information processing systems, 29:4743–4751, 2016.

- [41] Ivan Kobyzev, Simon Prince, and Marcus Brubaker. Normalizing flows: An introduction and review of current methods. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2020.

- [42] Soheil Kolouri, Se Rim Park, Matthew Thorpe, Dejan Slepcev, and Gustavo K Rohde. Optimal mass transport: Signal processing and machine-learning applications. IEEE signal processing magazine, 34(4):43–59, 2017.

- [43] Matt Kusner, Yu Sun, Nicholas Kolkin, and Kilian Weinberger. From word embeddings to document distances. In International conference on machine learning, pages 957–966. PMLR, 2015.

- [44] Aimé Lachapelle, Jean-Michel Lasry, Charles-Albert Lehalle, and Pierre-Louis Lions. Efficiency of the price formation process in presence of high frequency participants: a mean field game analysis. Mathematics and Financial Economics, 10(3):223–262, 2016.

- [45] Jean-Michel Lasry and Pierre-Louis Lions. Mean field games. Japanese journal of mathematics, 2(1):229–260, 2007.

- [46] Chen-Yu Lee, Saining Xie, Patrick Gallagher, Zhengyou Zhang, and Zhuowen Tu. Deeply-supervised nets. In Artificial intelligence and statistics, pages 562–570. PMLR, 2015.

- [47] Alex Tong Lin, Samy Wu Fung, Wuchen Li, Levon Nurbekyan, and Stanley J. Osher. Alternating the population and control neural networks to solve high-dimensional stochastic mean-field games. Proceedings of the National Academy of Sciences, 118(31):e2024713118, 2021.

- [48] Zhiyu Liu, Bo Wu, and Hai Lin. A mean field game approach to swarming robots control. In 2018 Annual American Control Conference (ACC), pages 4293–4298. IEEE, 2018.

- [49] David Martin, Charless Fowlkes, Doron Tal, and Jitendra Malik. A database of human segmented natural images and its application to evaluating segmentation algorithms and measuring ecological statistics. In Proceedings Eighth IEEE International Conference on Computer Vision. ICCV 2001, volume 2, pages 416–423. IEEE, 2001.

- [50] Thomas Müller, Brian McWilliams, Fabrice Rousselle, Markus Gross, and Jan Novák. Neural importance sampling. ACM Transactions on Graphics (TOG), 38(5):1–19, 2019.

- [51] Eric Nalisnick, Akihiro Matsukawa, Yee Whye Teh, Dilan Gorur, and Balaji Lakshminarayanan. Do deep generative models know what they don’t know? arXiv preprint arXiv:1810.09136, 2018.

- [52] Yuval Netzer, Tao Wang, Adam Coates, Alessandro Bissacco, Bo Wu, and Andrew Y Ng. Reading digits in natural images with unsupervised feature learning. NIPS Workshop on Deep Learning and Unsupervised Feature Learning, 2011.

- [53] Behnam Neyshabur, Ryota Tomioka, and Nathan Srebro. Norm-based capacity control in neural networks. In Conference on Learning Theory, pages 1376–1401. PMLR, 2015.

- [54] Aude Oliva and Antonio Torralba. Modeling the shape of the scene: A holistic representation of the spatial envelope. International journal of computer vision, 42(3):145–175, 2001.

- [55] Derek Onken, S Wu Fung, Xingjian Li, and Lars Ruthotto. Ot-flow: Fast and accurate continuous normalizing flows via optimal transport. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 35, 2021.

- [56] Nicolas Papadakis, Gabriel Peyré, and Edouard Oudet. Optimal transport with proximal splitting. SIAM Journal on Imaging Sciences, 7(1):212–238, 2014.

- [57] George Papamakarios, Theo Pavlakou, and Iain Murray. Masked autoregressive flow for density estimation. arXiv preprint arXiv:1705.07057, 2017.

- [58] Gabriel Peyré and Marco Cuturi. Computational optimal transport: With applications to data science. Foundations and Trends in Machine Learning, 11(5-6):355–607, 2019.

- [59] Lars Ruthotto, Stanley J Osher, Wuchen Li, Levon Nurbekyan, and Samy Wu Fung. A machine learning framework for solving high-dimensional mean field game and mean field control problems. Proceedings of the National Academy of Sciences, 117(17):9183–9193, 2020.

- [60] Takeshi Teshima, Isao Ishikawa, Koichi Tojo, Kenta Oono, Masahiro Ikeda, and Masashi Sugiyama. Coupling-based invertible neural networks are universal diffeomorphism approximators. Advances in Neural Information Processing Systems, 33:3362–3373, 2020.

- [61] Guofang Wang, Wang Yao, Xiao Zhang, and Zijia Niu. Coupled alternating neural networks for solving multi-population high-dimensional mean-field games with stochasticity. 2022.

- [62] Antoine Wehenkel and Gilles Louppe. Unconstrained monotonic neural networks. Advances in Neural Information Processing Systems, 32:1545–1555, 2019.

- [63] Jiajia Yu, Rongjie Lai, Wuchen Li, and Stanley Osher. A fast proximal gradient method and convergence analysis for dynamic mean field planning. arXiv preprint arXiv:2102.13260, 2021.

- [64] Yaomin Zhang, Haijun Zhang, and Keping Long. Energy efficient resource allocation in cache based terahertz vehicular networks: a mean-field game approach. IEEE Transactions on Vehicular Technology, 70(6):5275–5285, 2021.

Appendix A Derivations Regarding and

In this section, we provide additional details for some statements made in (3), regarding the agent trajectories and . First, we will derive the ODE system defining that is central to our MFG reformulation. Second, we will show how can be decomposed into a series of flow maps that we subsequently parameterize with neural networks.

At the base level, we have in (1) the trajectory for a single agent starting at . From there, we can define the trajectories for all agents as , where

| (28) |

We see that , and . This gives the ODE system governing .

Next, we prove the additive property of .

Proposition A.1.

For any , we have .

Proof.

By directly integrating the ODE governing ,

∎

Now, recalling the definition of in (17), we see that , which by the additive property can be decomposed iteratively: .

Appendix B Derivation of the Alternative Formulation

Consider reverse agent trajectories satisfying:

| (29) |

Applying the change of measure, the transport cost becomes:

Defining a regular grid in time: , , we obtain the approximation:

Therefore, the discretized transport cost is:

Appendix C Proofs

In this section, we provide the proofs of the statements mentioned in Section 4.

C.1 Theorem 4.1

The proof essentially follows the error analysis used in finite difference. Define a regular grid in time with grid points and grid spacing . Let . We start with the continuous objective

| (30) |

To obtain , we use the forward difference:

where . Therefore, if we approximate , the error of the integral is:

| (31) |

where the last equality is a result of summing over grid points.

C.2 Lemma 4.2

First, note that the MFG problem (14) admits the same optimizer and objective value if we add as an additional constraint:

| (32) |

Note that is a constant with respect to . Therefore, the above problem has the same solution as:

| (33) |

which in turn is equivalent to the following OT problem:

| (34) |

where . By the OT theory, we know that there exists a unique Monge map such that [58]. Therefore, and the optimizer of (33) satisfies . As noted before, minimizes the continuous MFG problem (14) as well.

C.3 Lemma 4.3

Applying a similar logic to the proof of Lemma 4.2, we know that the optimizer of the discretized MFG problem (22) coincides with that of the following problem

where is an optimizer of the discretized MFG problem for , and . Applying the KKT conditions on the above problem, we obtain:

Along with , we see that the solution to this recurrence relation is .

C.4 Theorem 4.4

From Lemma 4.2, we know the optimizer of the continuous MFG problem (14) has the form

We can substitute this into the continuous MFG problem and optimize over instead of , yielding

Second, we know from (4.3) the optimizer of the discretized MFG problem with grid points has the form . As a result, we can rewrite the discretized MFG problem as

which is identical to the problem over for the continuous MFG. Therefore, their objective values agree; furthermore, the minimizers of the two transformed problems are also the same: . As a result, the mapping at the terminal time agrees between the continuous and the discretized problem: . It then follows that the linear interpolation between the initial and terminal mappings in the discretized problem, , is precisely the unique optimizer for the continuous problem.

C.5 Theorem 4.7

The proof can be obtained by combining Theorem 4.6 with the following lemma.

Lemma C.1.

Let be absolutely continuous with a bounded density . Suppose for any compact , there is a sequence of mappings such that . Then, there exists such that , where denotes convergence in distribution.

Proof.

Define

According to [20], to show , it suffices to prove that:

For each , pick a compact such that . By assumption, we can find a sequence satisfying . Construct the sequence , which satisfies .

For any , we have:

∎

Appendix D MFG Experiments

We use NSF-CL with identical hyperparameters to solve both the OT and the crowd motion problems across different dimensions. The NF model contains 10 flows, each consisting of an alternating block with rational-quadratic splines as the coupling function, followed by a linear transformation. The conditioner has 256 hidden features, 8 bins, 2 transform blocks, ReLU activation, and a dropout probability of 0.25.

In both the OT and the crowd motion experiments, we use Jeffery’s divergence for terminal matching, which is the symmetrized KL divergence: . This terminal cost is different from the typical negative log-likelihood loss in NF training, but it is still tractable because are known. The choice of the terminal cost is not unique. For example, one can use either forward or backward KL divergence. However, we find empirically that Jeffery’s divergence yields the best results.

In the Monte Carlo approximation of the expectation, we resample a batch of data from the initial and the terminal distributions to compute the various loss terms at each iteration. Although [59] remarked that this approach yields slightly worse performance than resampling every 20 iterations, we find it to be good enough for our method.

The computed dynamics for the OT and the crowd motion experiments are approximately identical with respect to the dimensionality, so we select only one to show in the main text. For completeness, we include the remaining plots here.

D.1 OT

We show the density evolution and the sample trajectories for here; for the case , see Figure 2 in the main text. Visually, the evolutions are approximately invariant with respect to the dimensionality.

D.2 Crowd Motion

D.2.1 Density Evolution in Different Dimensions

We show the density evolution for here, which further supports the robustness with respect to dimensionality.

D.2.2 Different Levels of Penalty on Conflicts with the Obstacle

In addition to the sampled trajectories provided in Figure 4, we show the density evolution as well as the computed cost values for different aversion preferences here. All experiments are conducted at with identical hyperparameter settings as the one used in Table 2, except for the weights on the cost terms. The level of aversion is manifested through the outward-curving behavior when the densities are transported near the origin.

| 0.2 | 32.8511 0.0060 | 2.1274 0.0114 | 0.0436 0.0059 |

|---|---|---|---|

| 0.5 | 35.5121 0.0134 | 1.3463 0.0096 | 0.0424 0.0058 |

| 1.0 | 39.9230 0.0223 | 0.7076 0.0053 | 0.0430 0.0058 |

Appendix E NF Experiments

E.1 More Synthetic Data

E.2 Tabular Datasets

For the RealNVP flows, we used a replementation provided by [19], which achieved better log-likelihoods than the results reported in the original paper [17]. All RealNVP models use 6 flows with hidden dimensions of 256 in the networks. For NSF, we used the original implementation [22] with the reported hyperparameters in their paper. The likelihood results for the other models are taken from Table 3 of [41]. Our Lipschitz bound for a flow is defined as , the estimated maximum gradient spectral norm over the training set . The Lipschitz bound for the entire NF is the product of the bounds of the individual flows.

For a controlled study, all hyperparameters for the standard RealNVP (resp. NSF) and the RealNVP (resp. NSF) trained with transport costs are identical. The normalized weights for the transport costs used to produce the results in Table 4 are summarized in Table 7.

The dimension-wise permutations have shown to improve the flexibility of flow transforms both theoretically [60] and empirically [39]. When transport costs are computed, we omit the costs of permutation transforms, since the underlying object stays the same.

| Power | Gas | Hepmass | Miniboone | BSDS300 | |

|---|---|---|---|---|---|

| RNVP OT Weight | 5e-6 | 1e-6 | 5e-5 | 5e-3 | 1e-5 |

| NSF OT Weight | 1e-5 | 5e-5 | 5e-5 | 5e-3 | 5e-2 |

In the main text, we showed the marginal distributions on the first two dimensions for RealNVP trained on Miniboone. The remaining dimensions follow a similar trend. We additionally show those for dimensions 3,4 and for dimensions 5,6 here.

E.3 Image Datasets

Here, we document the details of the image datasets as well as the Glow model. The SVHN dataset consists of color images of house numbers [52], and EuroSAT is composed of satellite images for various landscapes [29]. In Table 8, we provide the meta-data for the image datasets used.

| MNIST | FMNIST | CIFAR-10 | SVHN | EUROSAT | |

|---|---|---|---|---|---|

| Number of Images | 60000 | 60000 | 50000 | 73257 | 27000 |

| Image Dimensions | |||||

| Number of Channels | 1 | 1 | 3 | 3 | 3 |

The Glow implementation we used is adapted from [11], which uses a simple CNN with 512 channels interlaced with actnorm as the conditioner in its affine coupling transforms. Each flow in Glow consists of an actnorm layer, an affine coupling, and a 1x1 convolution [39]. The full model employs 32 flow transforms per level, and the number of levels depends on the image dataset specified in Table 9. At the end of each level, the images are squeezed from the shape to , where are the number of channels and the side dimension, respectively. Afterwards, half of the channels go through an additional affine transformation and are not transformed further in the remaining flows. We optimize the models with Adam [37] at the highest learning rate when training does not diverge. Further information about the Glow model is summarized in Table 9.

| MNIST | FMNIST | CIFAR-10 | SVHN | EUROSAT | |

| Glow OT Weight | 1e-6 | 1e-6 | 5e-7 | 3e-7 | 3e-7 |

| Number of levels | 2 | 2 | 4 | 4 | 4 |