BrainDecoder: Style-Based Visual Decoding

of EEG Signals††thanks: This work has been submitted to the IEEE for possible publication. Copyright may be transferred without notice, after which this version may no longer be accessible.

Abstract

Decoding neural representations of visual stimuli from electroencephalography (EEG) offers valuable insights into brain activity and cognition. Recent advancements in deep learning have significantly enhanced the field of visual decoding of EEG, primarily focusing on reconstructing the semantic content of visual stimuli. In this paper, we present a novel visual decoding pipeline that, in addition to recovering the content, emphasizes the reconstruction of the style, such as color and texture, of images viewed by the subject. Unlike previous methods, this “style-based” approach learns in the CLIP spaces of image and text separately, facilitating a more nuanced extraction of information from EEG signals. We also use captions for text alignment simpler than previously employed, which we find work better. Both quantitative and qualitative evaluations show that our method better preserves the style of visual stimuli and extracts more fine-grained semantic information from neural signals. Notably, it achieves significant improvements in quantitative results and sets a new state-of-the-art on the popular Brain2Image dataset.

Index Terms:

Deep Learning, Image Synthesis, EEG, MultimodalI Introduction

Understanding neural representations in the brain and the information they encode is crucial for enhancing our knowledge of cognitive processes and developing brain-computer interfaces (BCIs) [1]. In particular, decoding and simulating the human visual system has emerged as a significant challenge. Recent advancements have led to substantial progress in visual decoding, allowing for the reconstruction of visual stimuli perceived by a subject during brain activity measurement. [2] [3] [4] [5] [6] [7] [8] [9]

Electroencephalography (EEG) is a technique for recording brain signals, widely used due to its non-invasive nature, cost-effectiveness, and high temporal resolution. Although it has notable limitations [10] such as relatively lower spatial resolution as well as susceptibility to physiological artifacts and individual differences, conducting research based on EEG remains crucial for practical applications. The technique’s accessibility and ability to capture real-time brain activity make it invaluable.

Previous research [11] [12] [13] [14] on EEG-based visual decoding has primarily focused on capturing high-level semantic content by aligning with the text or image embedding space of CLIP (Contrastive Language–Image Pretraining) [15]. While these approaches have successfully represented broad semantic categories, they often fall short in accurately reproducing stylistic details such as color and texture, revealing a gap between semantic understanding and detailed visual representation.

In this paper, we present BrainDecoder, a novel method that aims to overcome this limitation by aligning EEG signals with both image and text embeddings as separate conditions in a pretrained latent diffusion model [16]. In the text-to-image generation literature, previous researches [17] [18] have demonstrated that incorporating image “prompts” along with the text ones enable image generation that preserves style and content. By aligning EEG signals with both image and text embedding spaces, we show it is possible to extract both style and semantic information. This dual approach enhances the model’s ability to more accurately reconstruct the stylistic features of the images viewed by the EEG subject. Our qualitative and quantitative evaluations demonstrate that BrainDecoder outperforms the state-of-the-art by a large margin in both reconstruction details and generation quality, setting a new benchmark for EEG-based visual decoding.

II Methodology

We introduce a novel framework for reconstructing images viewed by an EEG subject, as illustrated in Fig. 1. It consists of three main components: A) Aligning EEG signals with CLIP image space, B) Aligning EEG signals with CLIP text space, and C) Combining the CLIP-aligned EEG representations for visual stimuli reconstruction.

II-A EEG Alignment in Image Space

Prior work [17] [19] [20] has demonstrated the ability of CLIP image embeddings to facilitate both semantic and stylistic transfer when the generator model is conditioned accordingly. Building on these findings, our approach aims to extract image-related information from EEG signals by aligning them with CLIP image embeddings. To achieve this, we process the EEG signals and their corresponding ground truth images (i.e., the ones that the EEG subject was watching when the signal was taken) using an EEG encoder and a CLIP image encoder, respectively, and aim to correlate the outputs. Let be an encoder that processes the input EEG signal , and the CLIP image encoder applied to the input image . We call the EEG-image encoder because it is trained to align with the image as encoded by . We employ Mean Squared Error (MSE) as the loss function to measure the similarity between the EEG and image representations:

| (1) |

To effectively encode the EEG data, we extend upon previous approaches [2] [21] [6] by utilizing an LSTM-based encoder architecture followed by fully connected layers.

II-B EEG Alignment with Text Space

Recent approaches for visual brain signal decoding [11] [22] have sought to align brain signals with CLIP [15] text embeddings obtained from captions generated by pretrained image caption generators. However, since CLIP was trained on image-text pairs publicly available on the Internet with often short captions, those methods using longer generated captions, particularly with Stable Diffusion [16], have been less effective. Although Stable Diffusion allows up to 77 tokens as input, empirical evidence suggests that the effective token length of CLIP is considerably shorter [23]. Accordingly, we adopt a simpler labeling approach: we make the caption by appending the class label of the image to the text “an image of”. We show empirically that this method improves performance over previous approaches and that more fine-grained information can be captured by the EEG-image encoder instead. We use the CLIP text encoder that embeds the caption to train the EEG-text encoder that encodes the corresponding EEG signal . As in the image alignment step, we use MSE as the loss function to quantify the similarity between the EEG and the text representations:

| (2) |

Similar to the image processing pipeline, an LSTM-based encoder is used for EEG signal encoding.

II-C Visual Stimuli Reconstruction

After training the EEG-image and EEG-text encoders, we leverage the resulting EEG embeddings to generate images. Our method uses a pretrained latent diffusion model (e.g., Stable Diffusion [16]), with the EEG embeddings from both encoders serving as distinct conditioning inputs. This is achieved through a decoupled cross-attention mechanism [17]. We hypothesize that by aligning the EEG signals in CLIP image space, the EEG encoder can capture detailed semantics and style that may not be easily conveyed through text alone. This approach is analogous to the way latent diffusion models incorporate both text and image prompts as conditioning factors. The reconstructed visual stimuli are defined as:

| (3) |

Here, represents the reconstructed image, and denotes the pretrained Stable Diffusion, conditioned on the outputs of both the EEG-image encoder and the EEG-text encoder .

III Experiments and Results

This section is divided into two main parts. We begin by detailing our experimental setup for training the EEG encoders. Following this, we present our findings and discuss various ablation studies.

III-A Dataset

We utilize the Brain2Image [21] [2], an EEG-image pair dataset with 11,466 EEG recordings from six participants, for our experiments. These recordings were captured using a 128-channel EEG system as the participants were exposed to visual stimuli for 500 ms. The stimuli consisted of 2,000 images with labels spanning 40 categories, derived from the ImageNet dataset [24]. Each category included 50 easily recognizable images to ensure clarity in the participants’ neural responses.

III-B Implementation

For the EEG encoders, we extend from previous approaches [21] [2] [6] and use a 3-layered LSTM network with a hidden dimension of 512. The output of the network is then passed through a fully connected linear network with a BatchNorm [25] and LeakyReLU [26] activation function in between. Only the EEG encoders are trained in our framework, keeping the framework computationally efficient. We use the Adam [27] optimizer with a weight decay of 0.0001. The initial learning rate is set to 0.0003 and a lambda learning rate scheduler is used with a lambda factor of 0.999.

In order to align with CLIP image space, we follow the approach outlined in the IP-Adapter [17] framework, utilizing the CLIP-Huge model to process the images. For aligning EEG with CLIP text space, we process the captions using the CLIP-Large model which is used by Stable Diffusion 1.5. The captions are generated by concatenating “an image of” with the class label. For ablation studies, we employ the LLaVA-1.5-7b model [28] for layout-oriented caption generation and BLIP [29] for general caption generation. For visual reconstruction, we employ Stable Diffusion version 1.5, aligning our method with recent results for fair comparison and we employ a PNDM scheduler [30] with 25 inference steps.

III-C Evaluation Metrics

We employ the following metrics to objectively assess the performance of our framework. ACC: The -way Top- Classification Accuracy [8] [31] evaluates the semantic accuracy of the reconstructed images. We set and . IS: The Inception Score [32] assesses the diversity and quality of the generated images. FID: Fréchet inception distance [33] measures the distance from the ground truth images. SSIM: The Structural Similarity Index Measure [34] evaluates the quality of images. CS: CLIP Similarity [35] [11] reflects how well the generated images capture the semantic and stylistic content of the ground truth images.

III-D Results

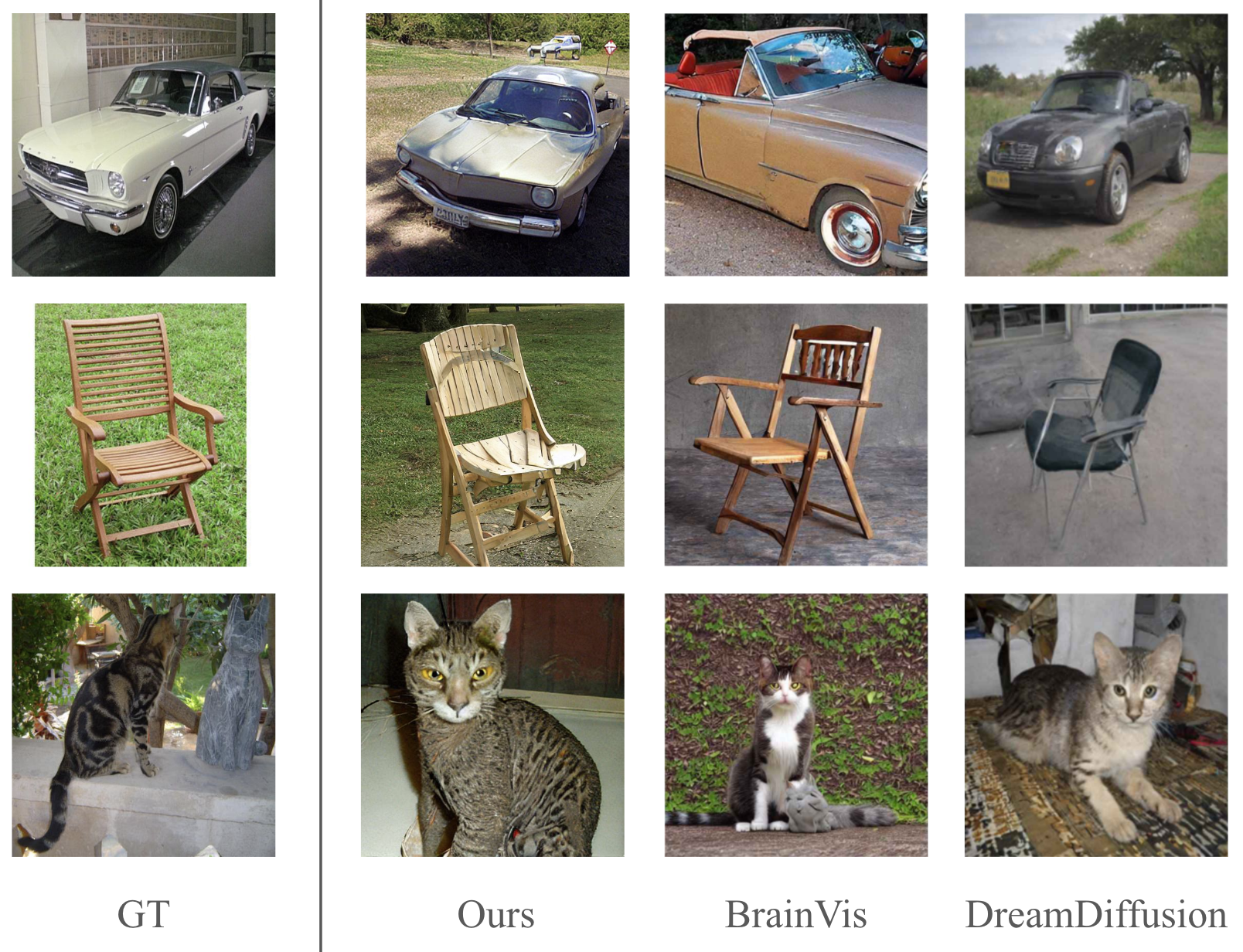

Fig. 2 presents sample outputs of BrainDecoder. Beyond capturing the high-level semantics, our method demonstrates the ability to retain fine-grained visual features, including color and texture. Notably, there is also a resemblance in the color composition of the background in addition to the main object’s color. This capability is further illustrated in the example of the electric locomotive class. The object’s color is depicted as light blue—matching the visual stimuli—despite the range of potential color variations. This demonstrates the model’s ability to recover nuanced visual attributes with a high fidelity.

This is further demonstrated in Fig. 3, where we compare our results with prior studies. Notably, in the second image, our method is able to reconstruct not only the wooden texture of the chair, but the grass in the background as well, which was absent in the results by other methods.

| Methods | ACC | IS | FID | SSIM | CS |

|---|---|---|---|---|---|

| Brain2Image | - | 5.07 | - | - | - |

| DreamDiffusion∗ | 45.8 | - | - | - | - |

| BrainVis | 45.5 | - | - | - | 0.602 |

| EEGStyleGAN-ADA | - | 10.82 | 174.13 | - | - |

| Ours | 95.2 | 28.11 | 69.97 | 0.2277 | 0.7575 |

∗Results from DreamDiffusion were computed using data from subject 4

Table. I shows the quantitative results of BrainDecoder compared to baselines [2] [12] [11] [7]. We evaluate our methodology on 5 evaluation metrics in §III-C. BrainDecoder outperforms the state-of-the-art in both reconstruction fidelity and generation quality. Notably, BrainDecoder achieves a surprising 95.2% on the 50-way top-1 classification accuracy metrics, showing that the trained EEG encoders are able to extract rich information from the brain signals very well.

| Methods | ACC | IS | FID | SSIM | CS |

|---|---|---|---|---|---|

| Only Text (LLaVA) | 60.43 | 20.22 | 151.98 | 0.1797 | 0.6188 |

| Only Text (BLIP) | 68.91 | 24.0 | 127.19 | 0.1832 | 0.6541 |

| Only Text (label) | 72.61 | 26.43 | 105.6 | 0.1845 | 0.6610 |

| Only Image | 79.7 | 26.1 | 75.88 | 0.2239 | 0.7177 |

| Original | 95.2 | 28.11 | 69.97 | 0.2277 | 0.7575 |

III-E Ablation

We conduct an ablation study to understand the contributions of each component. Rows 3-5 of Table II show visual decoding with the EEG-image encoder yields a higher SSIM (0.2239) than with only the EEG-text encoder (0.1845). This supports our premise that aligning in CLIP’s image space facilitates style transfer. Furthermore, the framework achieves the best performance when both encoders are used, indicating the complementary nature of the two encoders.

Additionally, we empirically show that captions generated by Vision Language Models (VLMs) are suboptimal for EEG-based visual decoding. We compare our label caption method with two VLMs: BLIP [29] and layout-oriented LLaVA [28]. A key challenge in image reconstruction from brain signals is preserving the visual layout. We hypothesize that associating EEG signals with detailed layout-oriented CLIP text embeddings might help. Using the LLaVA model, we generate layout-oriented captions following the instruction: “Write a description of the image layout. EXAMPLE OUTPUT: [object] is in the top left of the image, facing right.” Fig. 4 shows example layout-oriented captions. Notably, rows 1-3 of Table II indicate that the simple label caption (“an image of [class name]”) performs best, while layout-oriented captions (row 1) perform the worst. This further supports our premise that simple label captions are more effective for EEG encoders and complex prompts are harder for CLIP to fully interpret.

IV Conclusion

Our research introduces BrainDecoder, a novel approach to image reconstruction from EEG signals that preserves both stylistic and semantic features of visual stimuli. By aligning EEG signals with CLIP image and text embeddings separately, we bridge the gap between neural representations and visual content. Our analysis demonstrates significant improvements over existing models, offering a richer interpretation of neural signals through the dual-alignment strategy.

References

- [1] M. Orban, M. Elsamanty, K. Guo, S. Zhang, and H. Yang, “A review of brain activity and eeg-based brain-computer interfaces for rehabilitation application,” in Bioengineering, 2022.

- [2] I. Kavasidis, S. Palazzo, C. Spampinato, D. Giordano, and M. Shah, “Brain2image: Converting brain signals into images,” Proceedings of the 25th ACM international conference on Multimedia, 2017.

- [3] P. Tirupattur, Y. S. Rawat, C. Spampinato, and M. Shah, “Thoughtviz: Visualizing human thoughts using generative adversarial network,” in Proceedings of the 26th ACM International Conference on Multimedia, ser. MM ’18. New York, NY, USA: Association for Computing Machinery, 2018, p. 950–958.

- [4] S. Khare, R. Choubey, L. Amar, and V. Udutalapalli, “Neurovision: perceived image regeneration using cprogan,” Neural Computing and Applications, vol. 34, pp. 1–13, 04 2022.

- [5] T. Fang, Y. Qi, and G. Pan, “Reconstructing perceptive images from brain activity by shape-semantic gan,” Advances in Neural Information Processing Systems, vol. 33, pp. 13 038–13 048, 2020.

- [6] P. Singh, P. Pandey, K. Miyapuram, and S. Raman, “Eeg2image: image reconstruction from eeg brain signals,” in ICASSP 2023-2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2023, pp. 1–5.

- [7] P. Singh, D. Dalal, G. Vashishtha, K. Miyapuram, and S. Raman, “Learning robust deep visual representations from eeg brain recordings,” in Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, 2024, pp. 7553–7562.

- [8] Z. Chen, J. Qing, T. Xiang, W. L. Yue, and J. H. Zhou, “Seeing beyond the brain: Conditional diffusion model with sparse masked modeling for vision decoding,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2023, pp. 22 710–22 720.

- [9] Y. Takagi and S. Nishimoto, “High-resolution image reconstruction with latent diffusion models from human brain activity,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2023, pp. 14 453–14 463.

- [10] C. Q. Lai, H. Ibrahim, M. Z. Abdullah, J. M. Abdullah, S. A. Suandi, and A. Azman, “Artifacts and noise removal for electroencephalogram (eeg): A literature review,” 2018 IEEE Symposium on Computer Applications & Industrial Electronics (ISCAIE), pp. 326–332, 2018.

- [11] H. Fu, Z. Shen, J. J. Chin, and H. Wang, “Brainvis: Exploring the bridge between brain and visual signals via image reconstruction,” arXiv preprint arXiv:2312.14871, 2023.

- [12] Y. Bai, X. Wang, Y.-p. Cao, Y. Ge, C. Yuan, and Y. Shan, “Dreamdiffusion: Generating high-quality images from brain eeg signals,” arXiv preprint arXiv:2306.16934, 2023.

- [13] Y.-T. Lan, K. Ren, Y. Wang, W.-L. Zheng, D. Li, B.-L. Lu, and L. Qiu, “Seeing through the brain: image reconstruction of visual perception from human brain signals,” arXiv preprint arXiv:2308.02510, 2023.

- [14] D. Li, C. Wei, S. Li, J. Zou, and Q. Liu, “Visual decoding and reconstruction via eeg embeddings with guided diffusion,” arXiv preprint arXiv:2403.07721, 2024.

- [15] A. Radford, J. W. Kim, C. Hallacy, A. Ramesh, G. Goh, S. Agarwal, G. Sastry, A. Askell, P. Mishkin, J. Clark et al., “Learning transferable visual models from natural language supervision,” in International conference on machine learning. PMLR, 2021, pp. 8748–8763.

- [16] R. Rombach, A. Blattmann, D. Lorenz, P. Esser, and B. Ommer, “High-resolution image synthesis with latent diffusion models,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2022, pp. 10 684–10 695.

- [17] H. Ye, J. Zhang, S. Liu, X. Han, and W. Yang, “Ip-adapter: Text compatible image prompt adapter for text-to-image diffusion models,” arXiv preprint arXiv:2308.06721, 2023.

- [18] Q. Wang, X. Bai, H. Wang, Z. Qin, and A. Chen, “Instantid: Zero-shot identity-preserving generation in seconds,” arXiv preprint arXiv:2401.07519, 2024.

- [19] P. Wang and Y. Shi, “Imagedream: Image-prompt multi-view diffusion for 3d generation,” arXiv preprint arXiv:2312.02201, 2023.

- [20] A. Ramesh, P. Dhariwal, A. Nichol, C. Chu, and M. Chen, “Hierarchical text-conditional image generation with clip latents,” arXiv preprint arXiv:2204.06125, 2022.

- [21] C. Spampinato, S. Palazzo, I. Kavasidis, D. Giordano, N. Souly, and M. Shah, “Deep learning human mind for automated visual classification,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2017, pp. 6809–6817.

- [22] M. Ferrante, F. Ozcelik, T. Boccato, R. VanRullen, and N. Toschi, “Brain captioning: Decoding human brain activity into images and text,” arXiv preprint arXiv:2305.11560, 2023.

- [23] B. Zhang, P. Zhang, X. Dong, Y. Zang, and J. Wang, “Long-clip: Unlocking the long-text capability of clip,” arXiv preprint arXiv:2403.15378, 2024.

- [24] J. Deng, R. Socher, L. Fei-Fei, W. Dong, K. Li, and L.-J. Li, “Imagenet: A large-scale hierarchical image database,” in 2009 IEEE Conference on Computer Vision and Pattern Recognition(CVPR), vol. 00, 06 2009, pp. 248–255.

- [25] S. Ioffe and C. Szegedy, “Batch normalization: Accelerating deep network training by reducing internal covariate shift,” in Proceedings of the 32nd International Conference on Machine Learning, ser. Proceedings of Machine Learning Research, F. Bach and D. Blei, Eds., vol. 37. Lille, France: PMLR, 07–09 Jul 2015, pp. 448–456.

- [26] B. Xu, “Empirical evaluation of rectified activations in convolutional network,” arXiv preprint arXiv:1505.00853, 2015.

- [27] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” in 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, May 7-9, 2015, Conference Track Proceedings, Y. Bengio and Y. LeCun, Eds., 2015.

- [28] H. Liu, C. Li, Q. Wu, and Y. J. Lee, “Visual instruction tuning,” in NeurIPS, 2023.

- [29] J. Li, D. Li, C. Xiong, and S. Hoi, “Blip: Bootstrapping language-image pre-training for unified vision-language understanding and generation,” in International conference on machine learning. PMLR, 2022, pp. 12 888–12 900.

- [30] L. Liu, Y. Ren, Z. Lin, and Z. Zhao, “Pseudo numerical methods for diffusion models on manifolds,” in ICLR, 2022.

- [31] G. Gaziv, R. Beliy, N. Granot, A. Hoogi, F. Strappini, T. Golan, and M. Irani, “Self-supervised natural image reconstruction and large-scale semantic classification from brain activity,” NeuroImage, vol. 254, p. 119121, 2022.

- [32] T. Salimans, I. Goodfellow, W. Zaremba, V. Cheung, A. Radford, and X. Chen, “Improved techniques for training gans,” Advances in neural information processing systems, vol. 29, 2016.

- [33] M. Heusel, H. Ramsauer, T. Unterthiner, B. Nessler, and S. Hochreiter, “Gans trained by a two time-scale update rule converge to a local nash equilibrium,” Advances in neural information processing systems, vol. 30, 2017.

- [34] Z. Wang, A. C. Bovik, H. R. Sheikh, and E. P. Simoncelli, “Image quality assessment: from error visibility to structural similarity.” IEEE Transactions on Image Processing, vol. 13, no. 4, pp. 600–612, 2004.

- [35] Y. Lu, C. Du, Q. Zhou, D. Wang, and H. He, “Minddiffuser: Controlled image reconstruction from human brain activity with semantic and structural diffusion,” in Proceedings of the 31st ACM International Conference on Multimedia, ser. MM ’23. New York, NY, USA: Association for Computing Machinery, 2023, p. 5899–5908.