Brain-inspired bodily self-perception model for robot rubber hand illusion

At the core of bodily self-consciousness is the perception of ownership of one’s body. Recent efforts to understand more deeply how the brain encodes the bodily self have led to several attempts to develop a unified theoretical framework that explains the related behavioral and neurophysiological phenomena. A central question for such a framework is how body illusions such as the rubber hand illusion actually occur. Although existing theoretical models describe the mechanisms of bodily self-consciousness and the possibly relevant brain areas at a conceptual level, they still do not explain the computational mechanisms by which the brain encodes the perception of one’s body, or how the body illusions we subjectively perceive can be generated by neural networks. Here we integrate biological findings on bodily self-consciousness to propose a Brain-inspired bodily self-perception model, with which perceptions of the bodily self can be constructed autonomously without any supervision signals. We validated the computational model with six rubber hand illusion experiments and a disability experiment on platforms including an iCub humanoid robot and simulated environments. The experimental results show that the model not only replicates the behavioral and neural data of monkeys in biological experiments well, but also, owing to its biological interpretability, reasonably explains the causes and results of the rubber hand illusion at the neuronal level, thus helping to reveal the computational and neural mechanisms underlying the illusion.

1 Introduction

At the core of bodily self-consciousness is the perception of ownership of one’s own body (?, ?). In recent years, to understand more deeply how the brain encodes the bodily self, researchers have tried to establish a unified theoretical framework that explains the behavioral and neurophysiological phenomena of bodily self-consciousness from the perspectives of Predictive coding and Bayesian causal inference. For these theoretical models, a core problem to be explained is how body illusions such as the rubber hand illusion occur. The rubber hand illusion arises when a visible rubber hand and the participant’s hidden real hand are presented with synchronized tactile and visual stimuli: the participant comes to feel that the rubber hand is his or her own hand (?).

The Predictive coding approach performs body estimation by minimizing the error between perception and prediction (?). Hinz et al. (?) treat the rubber hand illusion as a body estimation problem. Using humans and a multisensory robot that perceives proprioceptive, visual and tactile information on its arm as participants, they verify their model with the classic rubber hand illusion experiment. The experiment shows that the proprioceptive drift is caused by prediction error fusion rather than by hypothesis selection.

Active inference models can be regarded as an extension of Predictive coding approaches. Rood et al. (?) propose a deep active inference model of the rubber hand illusion and model the rubber hand illusion experiment in a simulated environment. The results show that their model produces perceptual and active patterns similar to those of humans during the experiment. Maselli et al. (?) propose an active inference model for arm perception and control that integrates both intentional and conflict-resolution imperatives. The intentional imperative drives the achievement of external goals, and the conflict-resolution imperative avoids multisensory inconsistencies. The results reveal that the two imperatives are driven by different prediction errors.

The Bayesian causal inference model is widely used in theoretical modeling of multimodal integration and has been repeatedly verified at the behavioral and neuronal levels (?, ?). It can reproduce and explain a variety of rubber hand illusion experiments. Samad et al. (?) adopt the Bayesian causal inference model of multisensory perception and test it with the rubber hand illusion in humans. The results show that their model reproduces the rubber hand illusion, suggesting that Bayesian causal inference dominates the perception of body ownership. Fang et al. (?) present a nonhuman primate version of the rubber hand illusion experiment, conducted with both human and monkey participants. They adopt the Bayesian causal inference (BCI) model to build an objective and quantitative model of body ownership in macaques, and use it to investigate the computational and neural mechanisms of body ownership in the macaque brain from monkey behavioral and neural data, respectively. The results show that the BCI model fits the monkey behavioral and neuronal data well, helping to reveal a cortical representation of body ownership.

Although the above theoretical models describe the mechanisms of bodily self-consciousness and the possibly related brain areas from a conceptual perspective, they still do not explain the computational mechanism by which the brain encodes bodily self-consciousness, or how the body illusions we subjectively perceive are generated by neural networks.

Here we integrate the biological findings on bodily self-consciousness and construct a Brain-inspired bodily self-perception model, which learns associations between proprioceptive and visual information and constructs bodily self-perception autonomously without any supervision signals. Experimentally, the model reproduces six rubber hand illusion experiments at both the behavioral and neuronal scales, and its results fit the behavioral and neural data of monkeys in biological experiments well. In terms of biological explainability, the model reasonably explains the causes and results of the rubber hand illusion at the neuronal scale, which helps reveal the computational and neural mechanisms underlying the illusion. In addition, the results of the disability experiment indicate that the rubber hand illusion arises from the joint action of a primary multisensory integration area (e.g., TPJ) and a high-level multisensory integration area (e.g., AI), and that both are indispensable.

2 Results

In this section, we first introduce the Brain-inspired bodily self-perception model and the experimental design. We then present the result of the Proprioceptive drift experiment on the iCub humanoid robot, followed by the results of the Proprioceptive drift, Proprioception accuracy, Appearance replacement, Asynchronous, Proprioception only, Vision only and Disability experiments in the simulated environment.

2.1 Brain-inspired bodily self-perception model

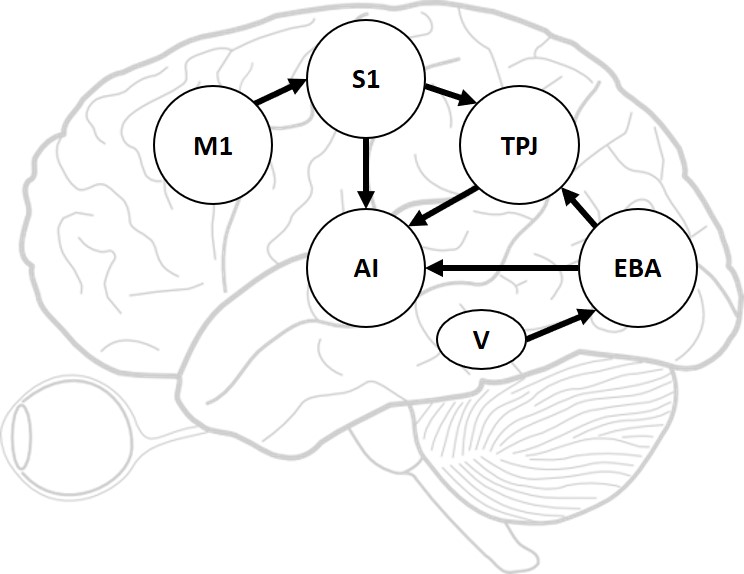

We integrate the biological findings on bodily self-perception to construct a brain-inspired model of robot bodily self-perception; the architecture of the model is shown in Figure 1A.

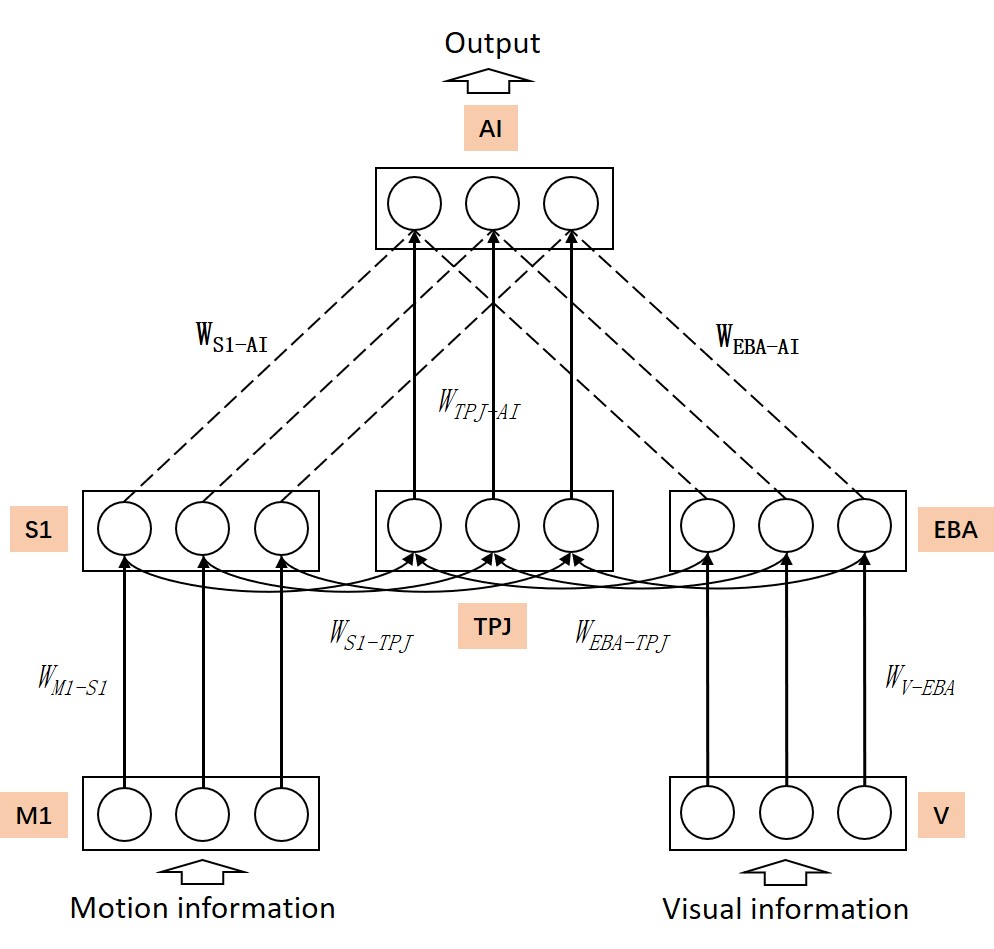

We designed our computational model to include multiple functional brain areas, based on the current biological understanding of the areas involved in bodily self-perception. Specifically, the primary motor cortex (M1) is thought to encode the strength and orientation of movement and to control motion execution (?, ?); in our model, M1 encodes the orientation of motion. The primary somatosensory cortex (S1) is thought to support proprioception, i.e., the perception of limb position (?, ?); in our model, S1 receives input from M1 to perceive the orientation of arm movement. The extrastriate body area (EBA) is thought to be involved in the visual perception of the human body and body parts (?), and rubber hand illusion experiments have shown it to be directly involved in processing limb ownership (?); in our model, the EBA receives information from Vision (V) and extracts finger orientation. The temporo-parietal junction (TPJ) integrates information from the visual, auditory and somatosensory systems, is thought to play an important role in multisensory bodily processing (?, ?), and has been reported to be activated in rubber hand illusion experiments (?, ?); in our model, the TPJ receives information from S1 and EBA and performs primary multisensory integration. The anterior insula (AI) is thought to be a primary region for processing interoceptive signals (?) and for multimodal sensory processing (?, ?, ?), and it has also been reported to be activated in rubber hand illusion experiments (?, ?); in our model, the AI receives information from S1, TPJ and EBA, performs high-level multisensory integration, and outputs the result.

Figure 1B shows the neural network architecture of the proposed model, a three-layer spiking neural network with cross-layer connections. The M1 area generates information about the robot’s motion direction, and the V area receives visual information about the robot. The AI area outputs the robot’s behavioral decision, read out from the neuron with the highest firing rate. The bodily self-perception model is learned without supervision from motor and visual information, and is tested with a rubber hand illusion task adapted from the macaque behavioral experiment (?). More details can be found in the Materials and Methods section.
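As a rough illustration of the readout described above (the decision is taken from the AI neuron with the highest firing rate), the sketch below decodes a population of orientation-tuned neurons with a winner-take-all rule. It is not the authors’ spiking implementation: the Gaussian tuning curve, the 10° spacing of preferred orientations, and the `sigma` and `peak_rate` values are assumptions chosen for illustration, and all function names are hypothetical.

```python
import math

# Hypothetical preferred orientations (degrees) of the output population;
# the real neuron count and tuning are given in Materials and Methods.
PREFERRED = list(range(-90, 91, 10))


def gaussian_tuning(stimulus, preferred, sigma=15.0, peak_rate=100.0):
    """Firing rate (Hz) of a neuron with an assumed Gaussian receptive field."""
    return peak_rate * math.exp(-((stimulus - preferred) ** 2) / (2.0 * sigma ** 2))


def population_response(stimulus, sigma=15.0):
    """Firing rates of the whole population for one stimulus orientation."""
    return [gaussian_tuning(stimulus, p, sigma) for p in PREFERRED]


def winner_take_all(rates, preferred=PREFERRED):
    """Decision = preferred orientation of the neuron with the highest firing rate."""
    best = max(range(len(rates)), key=lambda i: rates[i])
    return preferred[best]


rates = population_response(23.0)
print(winner_take_all(rates))  # 20: the neuron tuned to 20 deg is closest to 23 deg
```

A winner-take-all readout discards the graded information in the rest of the population; that matches the "highest firing rate" rule stated in the text, but a population-vector decoder would be the usual alternative if a continuous estimate were wanted.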

2.2 Experiments on the iCub robot

2.2.1 Experimental design

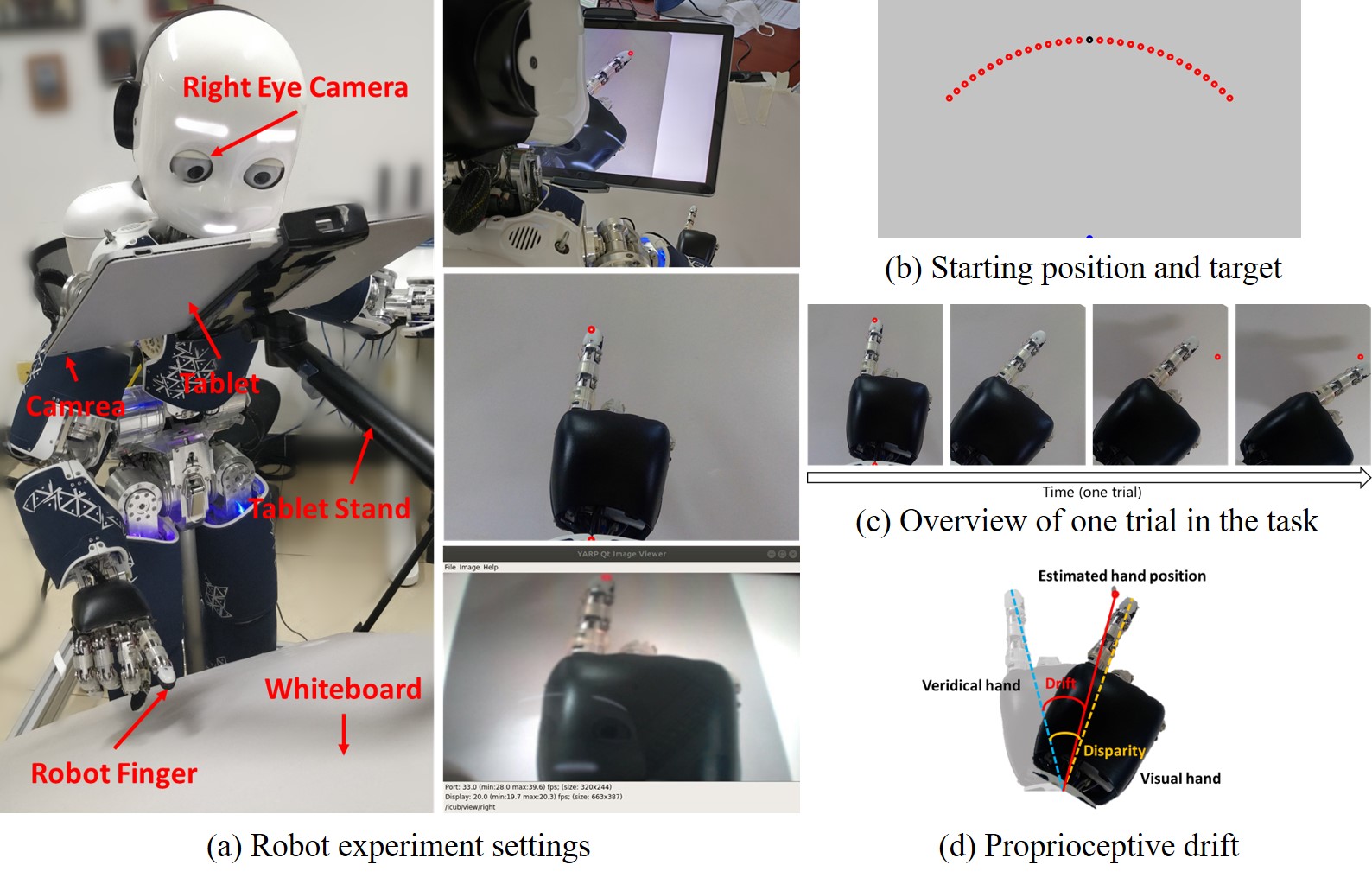

The overview of the robot experiment is shown in Figure 2.

Figure 2A shows the experimental setup. The left panel shows the equipment used in the robot experiment: an iCub humanoid robot, a Surface Book 2 tablet with a camera on its back, a tablet stand, and a white cardboard. The robot’s hand is placed between the tablet and the white cardboard. The tablet serves two functions: it blocks the robot’s direct view of its own hand, and it captures images of the robot’s hand through the back camera, rotates the images, and displays the processed images in front of the robot’s own eye-camera (the right eye-camera). The tablet stand holds the tablet in the desired position. The white cardboard hides surrounding objects to keep the background as clean as possible, which makes image recognition easier and reduces the chance of introducing other intrusive visual cues. This arrangement ensures that the robot can perceive its visual hand only through the tablet display, via its own eye-camera. The right panel shows, from top to bottom, the robot experiment seen from the right rear, the image displayed by the tablet, and the image perceived by the robot’s right eye-camera.

Figure 2B shows the starting position and the targets in the robot experiment. All points in the experiment are red; here the initial positions are marked as blue and black points for ease of illustration. The robot first places its wrist on the blue dot at the bottom of the image and its fingertip on the black dot. The red dots are the targets the robot must point to during the experiment. The targets lie on a circular arc centered on the blue dot (the position of the robot’s wrist), with a radius equal to the distance between the blue and black dots (the distance from the robot’s wrist to its fingertip). Taking the point directly in front of the center as , the targets range from  to  at intervals of , for a total of 9 target points.
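The target layout can be reproduced geometrically: each target sits on a circle centered on the wrist, with radius equal to the wrist-to-fingertip distance. The sketch below assumes a coordinate frame with +y pointing straight ahead of the wrist and positive angles rotating towards +x. Because the angular range and spacing are not given in this section, the example call uses hypothetical values (-40° to 40° in 10° steps) that merely happen to yield 9 targets; the function name is also hypothetical.

```python
import math


def target_positions(center, radius, start_deg, stop_deg, step_deg):
    """Targets on a circular arc around the wrist.

    0 degrees is the point directly in front of the wrist (+y); positive
    angles rotate towards +x. All numeric arguments are placeholders.
    """
    cx, cy = center
    n = int(round((stop_deg - start_deg) / step_deg)) + 1
    targets = []
    for k in range(n):
        theta = math.radians(start_deg + k * step_deg)
        targets.append((cx + radius * math.sin(theta), cy + radius * math.cos(theta)))
    return targets


# Hypothetical range and spacing: -40..40 deg in 10 deg steps -> 9 targets.
targets = target_positions(center=(0.0, 0.0), radius=1.0, start_deg=-40, stop_deg=40, step_deg=10)
print(len(targets))  # 9
```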

Figure 2C shows the time course of one trial. (1) The robot puts its hand in the starting position. (2) The point at the starting position disappears, and the visual hand is rotated by a random angle. Considering the motion range and precision of the robot, the rotation angle ranges from  to  at intervals of , including the two angles  and , for a total of 15 rotation angles. (3) The target is displayed. (4) Based on the multisensory integration of vision and proprioception, the robot makes a behavioral decision and points to the target.

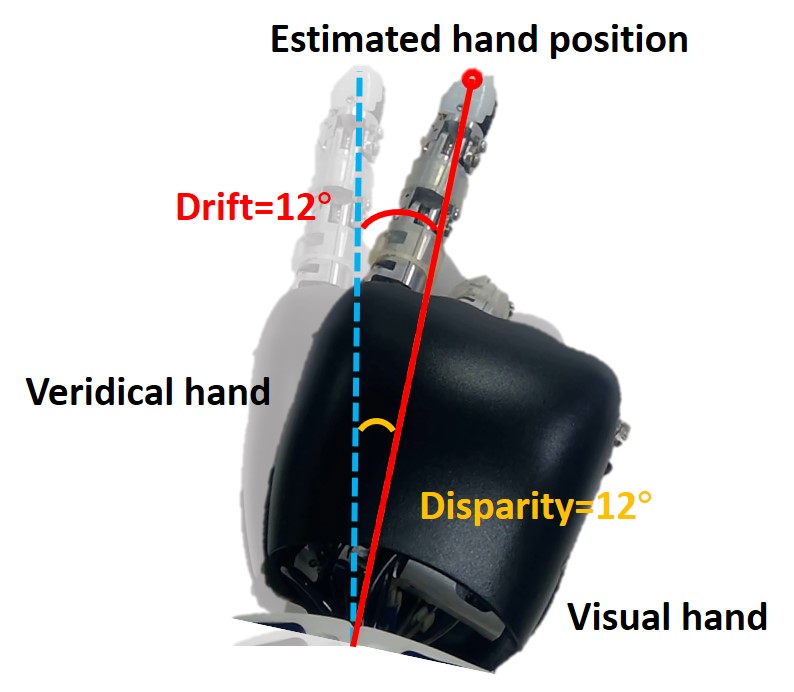

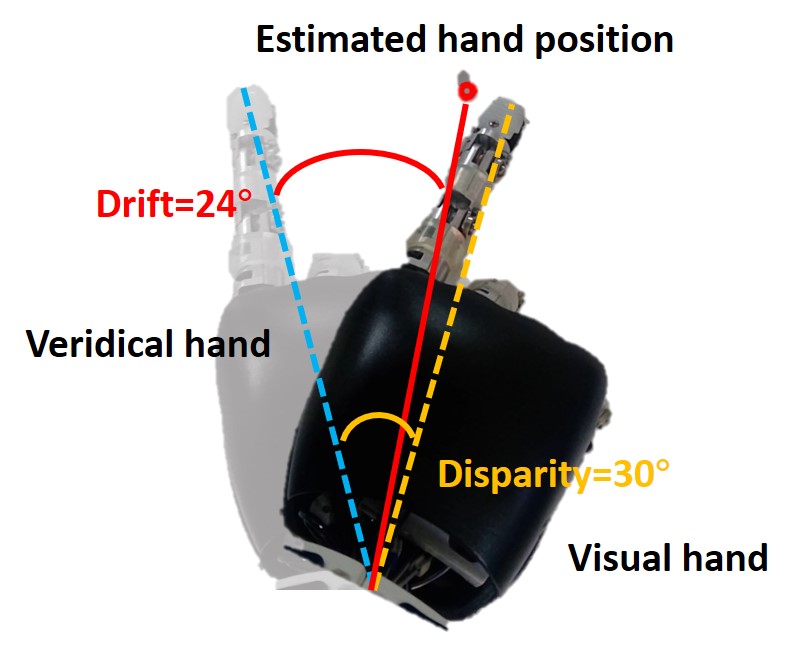

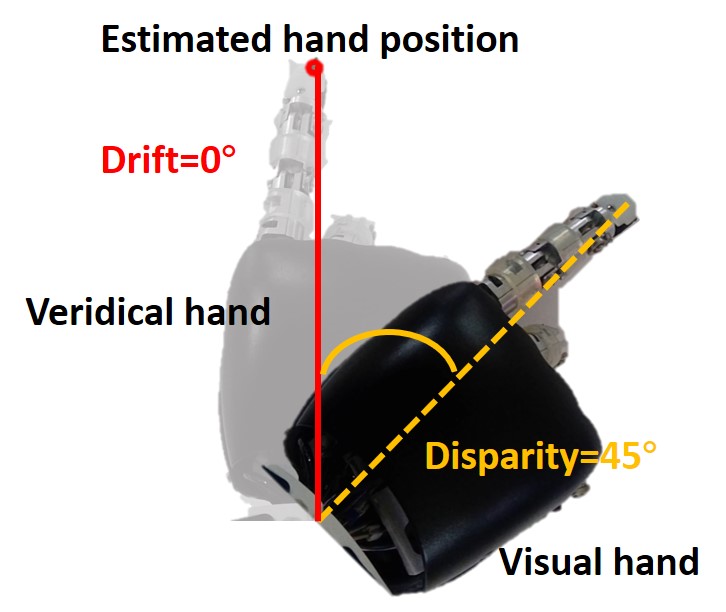

Figure 2D shows how the proprioceptive drift is measured. The dark hand is the visual hand, and the light hand is the veridical hand. The red target point is the hand position estimated by the robot, while the veridical hand indicates where the proprioceptive hand is located. Therefore, the angle between the robot’s veridical hand and the target point, i.e., the angle between the blue dotted line and the red solid line, is the proprioceptive drift in the rubber hand illusion. The angle between the blue dotted line and the orange dotted line is the disparity between the veridical hand and the visual hand.
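The two angles in Figure 2D can be computed from 2D positions measured around the wrist. The sketch below is an assumed formulation, not the authors’ analysis code: it measures each direction from straight ahead (+y), takes the proprioceptive drift as the signed angle from the veridical hand to the pointed target, and the disparity as the signed angle from the veridical hand to the visual hand. All function names and the example angles are hypothetical.

```python
import math


def direction_deg(origin, point):
    """Direction of origin -> point in degrees, measured from straight ahead (+y)."""
    dx, dy = point[0] - origin[0], point[1] - origin[1]
    return math.degrees(math.atan2(dx, dy))


def signed_angle(from_deg, to_deg):
    """Smallest signed rotation from from_deg to to_deg, wrapped to (-180, 180]."""
    d = (to_deg - from_deg) % 360.0
    return d - 360.0 if d > 180.0 else d


def proprioceptive_drift(wrist, veridical_tip, pointed_target):
    """Angle between the veridical hand and the target the robot points to."""
    return signed_angle(direction_deg(wrist, veridical_tip), direction_deg(wrist, pointed_target))


def disparity(wrist, veridical_tip, visual_tip):
    """Angle between the veridical hand and the (rotated) visual hand."""
    return signed_angle(direction_deg(wrist, veridical_tip), direction_deg(wrist, visual_tip))


def at(angle_deg, radius=1.0):
    """Point at the given angle on a circle around the origin (helper for the example)."""
    a = math.radians(angle_deg)
    return (radius * math.sin(a), radius * math.cos(a))


wrist = (0.0, 0.0)
print(round(disparity(wrist, at(0), at(30)), 6))             # 30.0
print(round(proprioceptive_drift(wrist, at(0), at(12)), 6))  # 12.0
```

Wrapping the difference to (-180, 180] keeps the sign meaningful (drift towards or away from the visual hand) even when angles straddle the ±180° seam.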

2.2.2 Experimental results

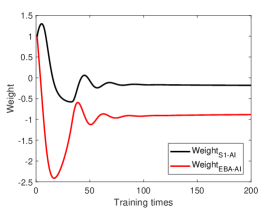

Before the rubber hand illusion experiment, the robot constructs its bodily self-perception model through training. During training, the robot observes its veridical hand directly and learns through random movements, without any supervision signal. Figure 3A shows the synaptic weights from S1 to AI and from EBA to AI after training: both have changed from excitatory to inhibitory connections, and the inhibitory connection from vision (EBA-AI) is stronger than that from proprioception (S1-AI).

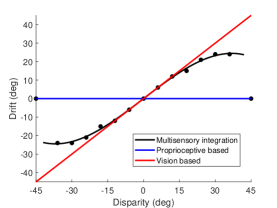

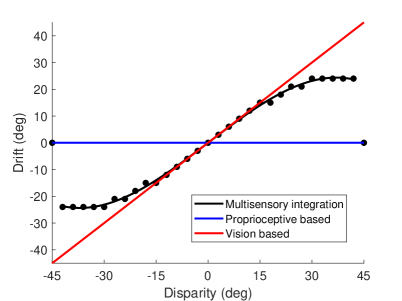

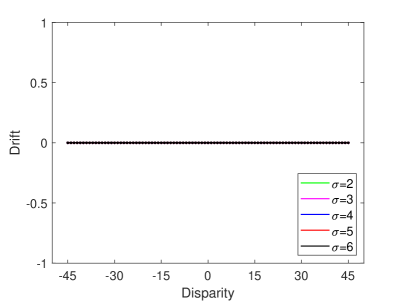

Figure 3B shows the behavioral results of the robot experiment. When the hand rotation angle (disparity angle) is small, the proprioceptive drift is small and increases with the disparity angle, indicating that the robot relies mainly on visual information for decision-making. When the disparity angle is medium, the proprioceptive drift is medium and increases slowly or remains unchanged as the disparity angle increases, indicating that the robot relies mainly on proprioceptive information. When the disparity angle is large, the proprioceptive drift is zero and does not change as the disparity angle increases, indicating that the robot relies entirely on proprioceptive information for decision-making.
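One convenient way to summarize the three regimes above is the ratio of proprioceptive drift to disparity: a value near 1 means the perceived hand sits on the visual hand, and 0 means it stays on the veridical hand. This index, its thresholds, and the example numbers are illustrative assumptions, not a measure or data reported for the model.

```python
def visual_reliance(drift_deg, disparity_deg):
    """Fraction of the visual disparity absorbed into the perceived hand position."""
    if disparity_deg == 0:
        raise ValueError("the index is undefined at zero disparity")
    return drift_deg / disparity_deg


def regime(index, visual_min=0.5, proprio_only_max=0.05):
    """Map the index onto the three regimes described for Figure 3B (thresholds assumed)."""
    if index >= visual_min:
        return "mainly visual"
    if index > proprio_only_max:
        return "mainly proprioceptive"
    return "proprioceptive only"


# Made-up (drift, disparity) pairs, one per regime.
for drift, disp in [(8.0, 10.0), (10.0, 40.0), (0.0, 80.0)]:
    print(disp, regime(visual_reliance(drift, disp)))
```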

Figure 3C shows the behavioral result at a small disparity angle: the disparity angle is  and the proprioceptive drift is . Figure 3D shows the result at a medium disparity angle: the disparity angle is  and the proprioceptive drift is . Figure 3E shows the result at a large disparity angle: the disparity angle is  and the proprioceptive drift is .

2.3 Experiments in simulated environment

2.3.1 Proprioceptive drift and Proprioception accuracy experiments

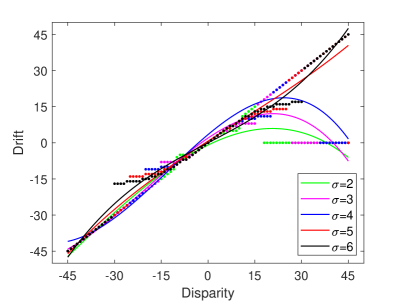

In the simulated environment, the rotation angle ranges from  to  at intervals of . The result of the proprioceptive drift experiment is shown in Figure 4A and is similar to the results of the rubber hand illusion behavioral experiment in macaques and humans (?). The behavioral results in (?) show that the proprioceptive drift increases for small disparities, while it plateaus or even decreases when the disparity exceeds . In our experiment, the proprioceptive drift increases rapidly when the disparity is less than  and becomes flatter when the disparity is greater than . These results show that when the disparity between the visual hand and the robot’s veridical hand is small, the hand position perceived by the robot is closer to the position of the visual hand, whereas when the disparity is large, the robot’s perception of hand position depends more on proprioception.

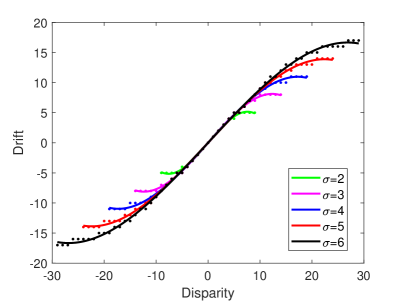

A recent study has shown that proprioceptive accuracy may influence susceptibility to the rubber hand illusion, but relevant studies remain few (?). In our model, proprioceptive accuracy is controlled by the standard deviation of the neurons’ receptive fields: the larger the standard deviation, the wider the receptive field and the lower the accuracy; conversely, the smaller the standard deviation, the narrower the receptive field and the higher the accuracy. We therefore test the effect of proprioceptive accuracy on the rubber hand illusion by varying this standard deviation; the result is shown in Figure 4B. When proprioceptive accuracy is high (small standard deviation), the robot experiences the illusion only within a small disparity range, and when proprioceptive accuracy is low (larger standard deviation), it experiences the illusion over a large disparity range. That is, the model’s results suggest that lower proprioceptive accuracy makes the rubber hand illusion easier to induce, while higher accuracy makes it harder to induce. This conclusion is consistent with recent studies (?, ?). Note that, to make the results easier to compare, we normalized the behavioral results for different proprioceptive accuracies to a common scale.
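The link between receptive-field width and the range of disparities that still produce the illusion can be seen with a single Gaussian tuning curve. Under a simplifying assumption (ours, not the model’s decision rule) that a visual stimulus can still be integrated while a proprioceptive neuron tuned to the veridical hand responds at no less than some fraction `threshold` of its peak, the largest integrable disparity solves exp(-d²/(2σ²)) = threshold, giving d_max = σ·sqrt(-2 ln threshold), which grows linearly with σ. The threshold value and function names are hypothetical.

```python
import math


def tuning(stimulus, preferred, sigma):
    """Normalized Gaussian receptive field (peak response 1.0)."""
    return math.exp(-((stimulus - preferred) ** 2) / (2.0 * sigma ** 2))


def max_integrable_disparity(sigma, threshold=0.5):
    """Largest disparity d with tuning(d, 0, sigma) >= threshold (assumed criterion)."""
    if not 0.0 < threshold < 1.0:
        raise ValueError("threshold must lie in (0, 1)")
    return sigma * math.sqrt(-2.0 * math.log(threshold))


# Lower proprioceptive accuracy (larger sigma) -> wider disparity range.
for sigma in (5.0, 10.0, 20.0):
    print(sigma, round(max_integrable_disparity(sigma), 2))
```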

In the proprioceptive drift experiment, the robot’s behavior is driven by the dynamic changes of the neurons’ firing rates. In our model, multisensory integration takes place in two areas, TPJ and AI. TPJ is the low-level multisensory integration area, which performs the initial integration of proprioceptive and visual information. The synaptic weights of the S1-TPJ and EBA-TPJ connections are the same, but since the perception of visual information is caused by movement, TPJ is more affected by visual information during multisensory integration. AI is the high-level multisensory integration area. It integrates the proprioceptive information from S1, the visual information from EBA, and the initial proprioceptive-visual integration from TPJ to produce the final multisensory integration result, which drives the robot’s behavior. After training, the S1-AI and EBA-AI connections change from their initial excitatory state to inhibitory connections. The inhibitory strength of EBA-AI is greater than that of S1-AI, which means that AI strongly inhibits visual information during multisensory integration (Figure 3A).

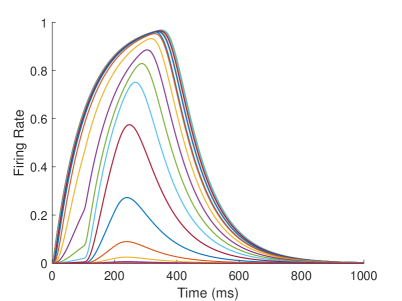

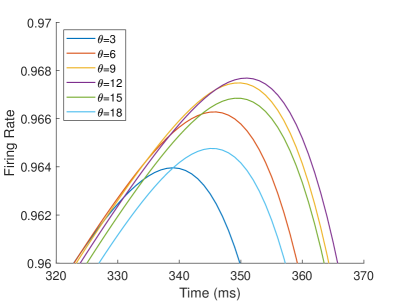

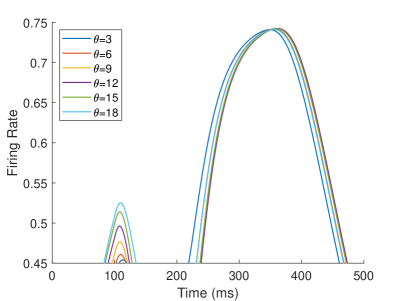

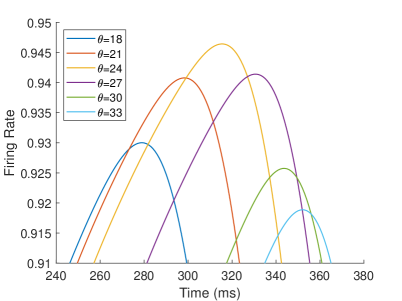

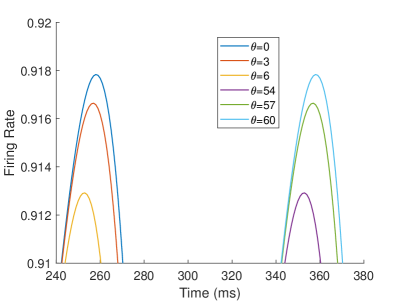

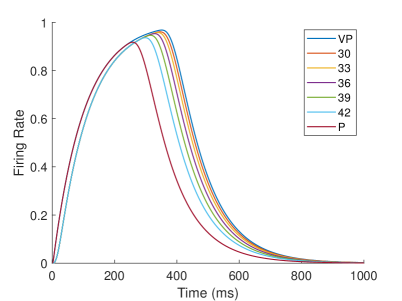

The firing rates of TPJ and AI in the proprioceptive drift experiment (Multisensory integration - Visual dominance) are shown in Figure 5. When the visual disparity is small, the receptive fields of proprioceptive and visual information overlap heavily, so TPJ produces a single-peaked multisensory integration result dominated by visual information. Figure 5A and Figure 5C show the results of multisensory integration when the proprioceptive perception is  and the visual disparity is . Figure 5C shows the dynamic changes of the six neurons with the highest firing rates in TPJ. Their firing rates from high to low are , and the initial integration result in TPJ is . AI produces a bimodal multisensory integration result (Figure 5B and Figure 5D). The anterior peak is mainly inhibited by neurons near the proprioceptive perception  and the visual perception . Its firing rates from high to low are , and the result of the anterior peak integration is . In the posterior peak, the inhibition weakens as the proprioceptive and visual stimulation disappears, and the firing rates from high to low are . The firing rates of neurons in the posterior peak are higher than those in the anterior peak, and the final integration result in AI is .

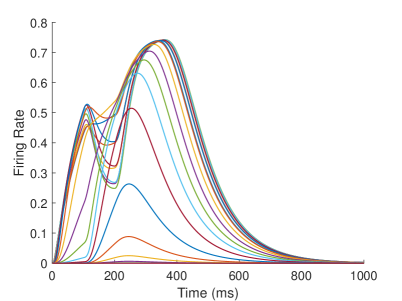

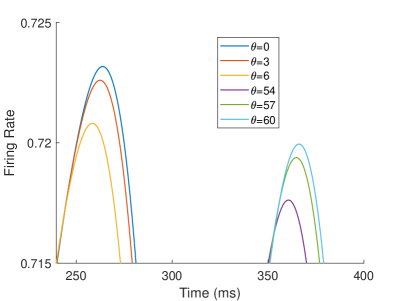

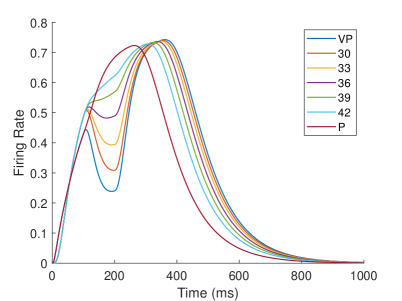

The firing rates of TPJ and AI in the proprioceptive drift experiment (Multisensory integration - Proprioception dominance) are shown in Figure 6. When the visual disparity is moderate, integration in TPJ occurs mainly where the receptive fields of proprioceptive and visual information overlap, and TPJ produces a multi-peaked multisensory integration result dominated by proprioceptive information. Figure 6A and Figure 6C show the results of multisensory integration when the proprioceptive perception is  and the visual disparity is . Figure 6C shows the dynamic changes of the six neurons with the highest firing rates in TPJ. Their firing rates from high to low are , and the initial integration result in TPJ is . AI also produces a multi-peaked multisensory integration result (Figure 6B and Figure 6D). Figure 6B shows the dynamic changes of all neurons, and Figure 6D shows the dynamic changes of the six neurons with the highest firing rates in AI. Their firing rates from high to low are . The final integration result in AI is .

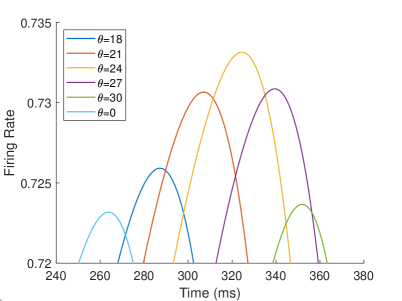

The firing rates of TPJ and AI in the proprioceptive drift experiment (Multisensory integration - Proprioception based) are shown in Figure 7. When the visual disparity is large, the receptive fields of proprioceptive and visual neurons overlap only slightly and integration in TPJ is weak. TPJ therefore produces a fully separated double peak, with the anterior peak dominated by proprioceptive information and the posterior peak dominated by visual information. Figure 7A and Figure 7C show the results of multisensory integration when the proprioceptive perception is  and the visual disparity is . Figure 7C shows the dynamic changes of the six neurons with the highest firing rates in TPJ. The anterior peak reflects an integration result that depends on proprioceptive information, with firing rates from high to low of ; the posterior peak reflects an integration result that depends on visual information, with firing rates from high to low of . Across all six neurons, the firing rates from high to low are , and the initial integration result in TPJ is . AI also produces a double-peaked multisensory integration result (Figure 7B and Figure 7D). Since the inhibitory weight of the visual connection (EBA-AI) in AI is greater than that of the proprioceptive connection (S1-AI), the anterior peak, which relies on proprioception, becomes the final output. Its firing rates from high to low are , and the final integration result in AI is .
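The switch from a single peak at small disparities to a double peak at large disparities has a simple geometric counterpart: for two Gaussian activity bumps of equal height and equal width σ, the sum is single-peaked when their separation is at most 2σ and double-peaked beyond that. The sketch below checks this on a grid of neurons. It is a toy stand-in for the input to TPJ, not the spiking dynamics of the model; equal amplitudes for the proprioceptive and visual bumps, the grid, and σ are assumptions.

```python
import math


def summed_activity(prop_deg, vis_deg, sigma, grid):
    """Toy TPJ-like input: sum of a proprioceptive and a visual Gaussian bump."""
    return [
        math.exp(-((x - prop_deg) ** 2) / (2.0 * sigma ** 2))
        + math.exp(-((x - vis_deg) ** 2) / (2.0 * sigma ** 2))
        for x in grid
    ]


def count_peaks(values):
    """Number of strict interior local maxima."""
    return sum(
        1
        for i in range(1, len(values) - 1)
        if values[i] > values[i - 1] and values[i] > values[i + 1]
    )


grid = [0.5 * k for k in range(-200, 201)]  # preferred orientations -100..100 deg
sigma = 10.0
for disparity in (10.0, 60.0):  # below and above the 2*sigma boundary
    print(disparity, count_peaks(summed_activity(0.0, disparity, sigma, grid)))
```

In the model, unequal effective weights (for example the stronger EBA-AI inhibition) shift which peak wins; this toy only shows why the number of peaks depends on disparity relative to receptive-field width.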

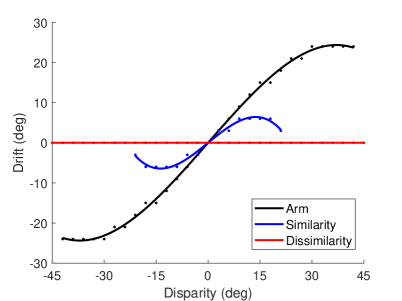

2.3.2 Appearance replacement and Asynchronous experiments

The result of the appearance replacement experiment is shown in Figure 8A. It is similar to the results of the behavioral experiment on prior knowledge of body representation in macaques and humans, in which the visual hand in the rubber hand illusion is replaced with a wooden block (?). The results in (?) show that the proprioceptive drift in the wood condition is significantly smaller than in the visual hand condition. In our experiment, in the high-similarity condition (the hand in the robot’s field of view resembles its own hand), the robot’s proprioceptive drift is significantly smaller than with its own visual hand, and the range of visual disparities that can induce the illusion is narrower. When the disparity exceeds , the robot’s proprioceptive drift is  and the rubber hand illusion disappears. In the dissimilar condition (the hand in the robot’s field of view is completely different from its own hand), the robot’s proprioceptive drift is , indicating that the robot does not regard the hand in its field of view as its own. At the neuronal scale, EBA neurons respond less to a similar hand than to the robot’s own hand, which weakens the contribution of visual information to multisensory integration, so TPJ and AI rely more on proprioceptive information; EBA neurons respond much less to dissimilar hands than to the robot’s own hand, which further weakens the contribution of visual information to multisensory integration in TPJ and AI.

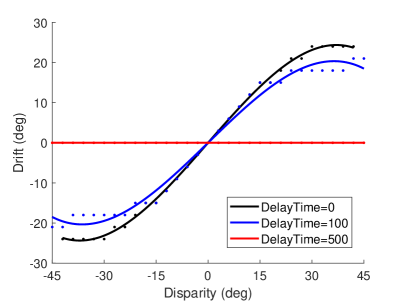

The studies in (?, ?) show that most participants do not experience the rubber hand illusion when the asynchrony is greater than 500 or 300 ms. Here we test the effect of synchrony on the rubber hand illusion; the result of the asynchronous experiment is shown in Figure 8B. With a delay of 100 ms, the robot’s proprioceptive drift is smaller than in the condition with its own synchronous visual hand. With a delay of 500 ms, the robot’s proprioceptive drift is , indicating that the robot does not regard the hand in its field of view as its own. At the neuronal scale, delaying the visual information weakens its contribution to multisensory integration, and a large delay prevents proprioceptive and visual information from being integrated within the effective firing time window of the neurons.
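The effect of delay can be pictured with a decaying trace: if the proprioceptive drive leaves an exponentially decaying trace with time constant τ, a visual input arriving Δ ms later meets only exp(-Δ/τ) of it. The time constant (150 ms) and integration threshold (0.2) below are hypothetical values picked so that a 100 ms delay still integrates while a 500 ms delay does not; they are not parameters reported for the model, and the function names are hypothetical.

```python
import math


def coincidence_strength(delay_ms, tau_ms=150.0):
    """Fraction of the proprioceptive trace left when the delayed visual input arrives."""
    if delay_ms < 0:
        raise ValueError("delay must be non-negative")
    return math.exp(-delay_ms / tau_ms)


def integrates(delay_ms, tau_ms=150.0, threshold=0.2):
    """True if the visual input still falls inside the effective integration window."""
    return coincidence_strength(delay_ms, tau_ms) >= threshold


for delay in (0, 100, 500):
    print(delay, round(coincidence_strength(delay), 3), integrates(delay))
```

With these placeholder values the window closes at -τ·ln(threshold), roughly 241 ms; changing either parameter moves that boundary.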

2.3.3 Proprioception only and Vision only experiments

When only proprioceptive information is available, that is, when the robot moves its own hand but no hand appears in its field of view, the AI neurons fire, indicating that the robot regards the moving hand as its own. When only visual information is available, that is, when the robot’s own hand does not move but a moving hand is visible in its field of view, the AI neurons do not fire, indicating that the robot does not regard the moving hand as its own. At the neuronal scale, this is mainly because visual information is inhibited more strongly than proprioceptive information in AI.

2.3.4 Disability experiment

We explore the effects of TPJ disability and AI disability on the rubber hand illusion by modifying the synaptic weights between brain areas, taking the Proprioception accuracy experiment as an example.

In the TPJ disability experiment, we set the S1-TPJ and EBA-TPJ weights to 0, so that multisensory information is integrated by AI only and TPJ no longer integrates any information. After training and testing, the result of TPJ disability at different proprioceptive accuracies is shown in Figure 9A. It indicates that when TPJ is completely disabled, multisensory integration by AI alone cannot induce the rubber hand illusion. We further simulate different extents of TPJ disability by setting different synaptic weights. When the extent of TPJ disability is low (i.e., the S1-TPJ and EBA-TPJ weights remain large), TPJ can still integrate multisensory information and the rubber hand illusion can still be induced, but the proprioceptive drift is smaller and the range of visual disparities that induces the illusion is narrower. As the extent of disability increases (i.e., the S1-TPJ and EBA-TPJ weights decrease), the rubber hand illusion becomes harder to induce. These results are consistent with behavioral experiments in which transcranial magnetic stimulation over TPJ reduces the strength of the rubber hand illusion (?).

In the AI disability experiment, we set the S1-AI, TPJ-AI and EBA-AI weights to 0, so that multisensory information is integrated by TPJ and AI no longer integrates any information. After training and testing, the result of AI disability at different proprioceptive accuracies is shown in Figure 9B. As Figure 9B shows, although the illusion is induced at some visual deflection angles, the robot with AI disability relies mainly on vision for decision-making at most visual deflection angles. That is, with AI disabled, the rubber hand illusion cannot be induced effectively.
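The disability manipulations amount to zeroing, or partially scaling down, selected inter-area weights. The sketch below assumes that TPJ disability targets the connections into TPJ (S1-TPJ, EBA-TPJ) and AI disability the connections into AI (S1-AI, TPJ-AI, EBA-AI). Connection names follow the source-target notation used for Figure 3A, the weight values are placeholders rather than trained values, and modeling partial disability by scaling weights by (1 - extent) is our assumption.

```python
# Placeholder weights keyed by "source-target"; real trained values differ.
BASE_WEIGHTS = {
    "M1-S1": 1.0,
    "V-EBA": 1.0,
    "S1-TPJ": 1.0,
    "EBA-TPJ": 1.0,
    "S1-AI": 1.0,
    "TPJ-AI": 1.0,
    "EBA-AI": 1.0,
}

TPJ_INPUTS = ("S1-TPJ", "EBA-TPJ")         # assumed target of TPJ disability
AI_INPUTS = ("S1-AI", "TPJ-AI", "EBA-AI")  # assumed target of AI disability


def lesion(weights, connections, extent=1.0):
    """Copy of `weights` with `connections` scaled by (1 - extent); extent=1 zeroes them."""
    if not 0.0 <= extent <= 1.0:
        raise ValueError("extent must lie in [0, 1]")
    lesioned = dict(weights)
    for name in connections:
        lesioned[name] = weights[name] * (1.0 - extent)
    return lesioned


tpj_off = lesion(BASE_WEIGHTS, TPJ_INPUTS)                # complete TPJ disability
tpj_mild = lesion(BASE_WEIGHTS, TPJ_INPUTS, extent=0.25)  # partial TPJ disability
ai_off = lesion(BASE_WEIGHTS, AI_INPUTS)                  # complete AI disability
print(tpj_off["S1-TPJ"], tpj_mild["EBA-TPJ"], ai_off["TPJ-AI"])
```

Returning a copy keeps the intact network available, so the same trained weights can be lesioned to several extents for the graded TPJ experiment.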

The results of the disability experiment indicate that the rubber hand illusion arises from the joint action of the primary multisensory integration area (e.g., TPJ) and the high-level multisensory integration area (e.g., AI), and that both are indispensable.

2.4 Comparison with other models

Computational models of bodily self-representation, especially models that can reproduce and explain the rubber hand illusion, remain scarce. Researchers have proposed theoretical models of body representation mainly from the perspectives of Predictive coding and Bayesian causal inference (?). Table 1 compares our model with these models.

The core idea of Predictive coding approaches (?, ?) is to refine body estimation by minimizing the error between perception and prediction. Active inference models (?, ?) can be regarded as an extension of Predictive coding approaches. These methods reproduce and explain the proprioceptive drift experiment well and have been verified with humans, robots and simulated environments. However, these studies do not cover the range of rubber hand illusion experiments, nor do they explain the specific computational mechanisms at the level of individual neurons and neuronal populations.

The Bayesian causal inference model is widely used in theoretical modeling of multimodal integration and has been repeatedly verified at the behavioral and neuronal levels. It reproduces and explains a variety of rubber hand illusion experiments well (?, ?). However, in rubber hand illusion experiments, most Bayesian causal inference models share a limitation with the Predictive coding approaches: their explanations remain at the behavioral scale and do not explain how the rubber hand illusion is generated at the neuronal scale. In addition, there are some neural network modeling studies, but they mainly focus on multimodal integration and do not involve rubber hand illusion experiments (?).

Compared with these models, we build a Brain-inspired bodily self-perception model from the perspective of brain-inspired computing. Our model not only reproduces as many as six rubber hand illusion experiments, but also reasonably explains the rubber hand illusion at the neuronal scale, which helps reveal its computational and neural mechanisms. Moreover, the Disability experiment with the computational model demonstrates that the rubber hand illusion cannot be induced without either TPJ, which performs primary multisensory integration, or AI, which performs high-level multisensory integration.

| Experiment | (?) | (?) | (?) | (?) | (?) | (?) | Our model |
|---|---|---|---|---|---|---|---|
| Proprioceptive drift | O | O | O | O | O | O | O |
| Proprioception accuracy | - | - | - | - | - | - | O |
| Appearance replacement | - | - | - | - | - | O | O |
| Asynchronous | - | - | O | - | O | - | O |
| Proprioception only | - | - | - | - | O | - | O |
| Vision only | - | - | - | - | O | - | O |
| Disability | - | - | - | - | - | - | O |
| Model | PC | PC | Deep AIF | AIF | BCI | BCI | Brain-Self |
| Participant | H, R | R | S | H | H | M, H | R, S |

Model: PC, Predictive coding; AIF, Active inference model; BCI, Bayesian causal inference model; Brain-Self, Brain-inspired Bodily Self-perception Model.

Participant: H, Human; R, Robot; S, Simulated environment; M, Monkey.

3 Discussion

In this study, we integrate biological findings on bodily self-consciousness to build a Brain-inspired bodily self-perception model that constructs bodily self-perception autonomously, without any supervision signals. The model reproduces six rubber hand illusion experiments and offers a neuron-level explanation of the causes and results of the illusion. Compared with other models, it explains the computational mechanism by which the brain encodes bodily self-consciousness and how the body illusions we subjectively perceive are generated by neural networks. In particular, the model's results fit the behavioral and neural data recorded from monkeys in biological experiments. The model therefore helps reveal the computational and neural mechanisms of the rubber hand illusion.

In a biological rubber hand experiment, Fang et al. (?) recorded the firing rates of premotor cortex neurons in two monkeys during a behavioral task. They defined two types of neurons. If a neuron's firing rate in the visual-proprioceptive congruent task is greater than in the proprioception-only task, the neuron prefers to integrate visual and proprioceptive information and is called an ‘integration neuron’. Conversely, if a neuron's firing rate in the proprioception-only task is greater than in the visual-proprioceptive congruent task, the neuron prefers proprioceptive information alone and is called a ‘segregation neuron’. By analyzing firing rates across tasks, they found that the firing rates of integration neurons decreased with increasing visual disparity during the target-holding period, whereas the firing rates of segregation neurons increased with increasing visual disparity during the preparation period.
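The neuron classification rule above can be written as a short function. This is a minimal sketch: it compares only mean firing rates across trials, the original study's exact statistical criteria are not reproduced here, and the example rates are made up.

```python
from statistics import mean
from typing import Sequence


def classify_neuron(rates_vp: Sequence[float], rates_p: Sequence[float]) -> str:
    """Label a neuron as 'integration' or 'segregation' from trial firing rates.

    rates_vp: firing rates in the visual-proprioceptive congruent task.
    rates_p:  firing rates in the proprioception-only task.
    Only mean rates are compared; no statistical test is applied.
    """
    mean_vp, mean_p = mean(rates_vp), mean(rates_p)
    if mean_vp > mean_p:
        return "integration"   # fires more when vision and proprioception agree
    if mean_p > mean_vp:
        return "segregation"   # fires more with proprioception alone
    return "unclassified"      # equal means: neither definition applies


print(classify_neuron([12.0, 14.0, 13.0], [8.0, 9.0, 10.0]))  # integration
```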

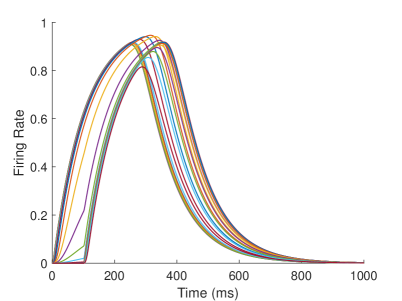

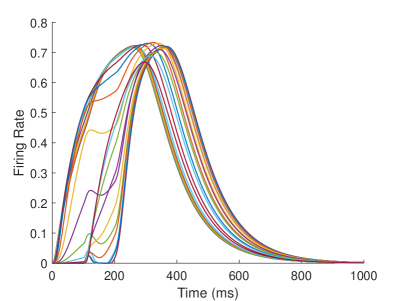

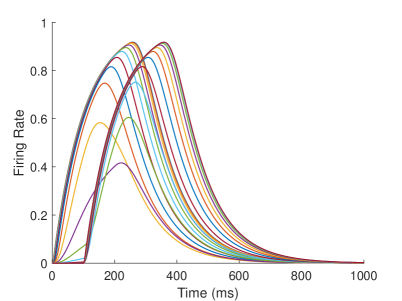

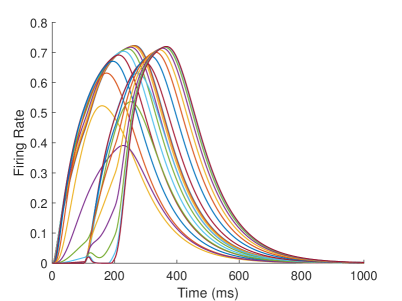

Neurons in the TPJ and AI areas of our computational model exhibit similar properties. Figure 10 shows the dynamic changes of neuronal firing rates in the TPJ and AI areas under different tasks. To make the comparison clearer, we display the results of the visual-proprioceptive congruent (VP) condition, the proprioception-only (P) condition, and a subset of the visual-proprioceptive conflict (VPC) conditions. The VPC conditions include the results when proprioception is and visual deflection is , respectively. Neurons in the TPJ area consistently exhibit integration properties: as visual disparity increases, their integration becomes weaker and their firing rate decreases. Neurons in the AI area exhibit segregation and integration properties at different stages. In the early stage (around 200 ms), AI neurons exhibit segregation properties, and their firing rate increases with visual disparity. In the later stage (around 400 ms), AI neurons exhibit integration properties, and their firing rate decreases with visual disparity.

The integration effect in the TPJ arises because, when the visual disparity is small, the proprioceptive and visual receptive fields overlap substantially, so integration is strong and the firing rate is high; when the visual disparity is large, the overlap is small, so integration is weak and the firing rate is low.
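The overlap argument can be checked numerically. The sketch below assumes Gaussian receptive fields with a hypothetical width `sigma` (the model's own receptive-field function is Equation 2 in Materials and Methods and may differ) and measures how much the proprioceptive and visual population responses overlap as the disparity grows.

```python
import math


def gaussian_rf(preferred: float, stimulus: float, sigma: float) -> float:
    """Response of a neuron with a Gaussian tuning curve centred on `preferred` (deg)."""
    return math.exp(-((stimulus - preferred) ** 2) / (2.0 * sigma ** 2))


def rf_overlap(disparity: float, sigma: float = 10.0) -> float:
    """Overlap of proprioceptive (at 0 deg) and visual (at `disparity`) population activity.

    Sums the product of the two population responses over neurons with preferred
    angles from -180 to 180 deg in 1-deg steps, normalised to 1 at zero disparity.
    """
    prefs = range(-180, 181)

    def overlap(d: float) -> float:
        return sum(gaussian_rf(p, 0.0, sigma) * gaussian_rf(p, d, sigma) for p in prefs)

    return overlap(disparity) / overlap(0.0)


for d in (0, 10, 20, 40):
    print(d, round(rf_overlap(d), 3))
```

For Gaussian tuning the normalised overlap falls off approximately as exp(-d²/(4σ²)), so in this sketch integration weakens smoothly and monotonically with disparity.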

The AI is a high-level multisensory integration area that receives excitatory input (integrated proprioceptive-visual information) from the TPJ and, simultaneously, inhibitory input from S1 (proprioceptive information) and the EBA (visual information). In the segregation stage, AI neurons fire most strongly in the proprioception-only task and most weakly in the visual-proprioceptive congruent task, and in the visual-proprioceptive conflict task their firing rate increases with visual disparity. The reason is that when the proprioceptive stimulus disappears, the firing rate of S1 neurons decreases, whereas when the visual stimulus appears, the firing rate of EBA neurons increases, strengthening the inhibition that EBA input exerts on TPJ input in the AI. In the proprioception-only task, AI firing is highest because there is no inhibition from the EBA. In the visual-proprioceptive congruent task, the proprioceptive and visual receptive fields overlap completely, so the inhibition of TPJ input by EBA input in the AI is strongest and AI firing is lowest. In the visual-proprioceptive conflict task, integration in the TPJ occurs mainly where the proprioceptive and visual receptive fields overlap; as visual disparity increases, the EBA inhibition of this integrated TPJ information decreases, and AI firing increases.

In the integration stage, the proprioceptive and visual stimuli have ended, so the firing rates of S1 and EBA neurons decrease. Owing to the cumulative effect, the firing rate of TPJ neurons continues to increase, while the inhibition that S1 and EBA exert on TPJ input in the AI decreases, which brings out the integration effect of the TPJ.

4 Materials and Methods

The brain-inspired bodily self-perception model is shown in Figure 1.

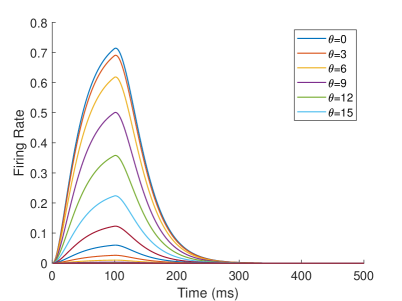

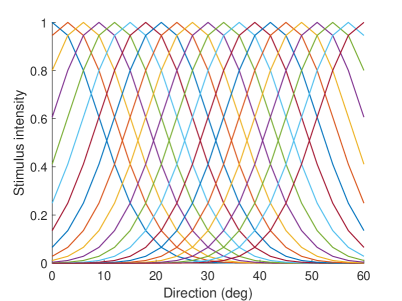

The neurons representing angles in M1 and V are described by Equation 1, where represents stimulus j at time , and Vj(t) represents the firing rate of the neuron. The intensity of the stimulus depends on the receptive field of the neuron. Equation 2 describes the receptive field of the . When the stimulation angle is , the neuron representing receives the strongest stimulation, and the stimulation of other neurons decreases progressively with their distance from that angle. The firing rate of a neuron increases while the stimulus is present and decays after the stimulus has ended; is the parameter that controls the rate of this increase and decrease. We set in the M1, V, S1 and EBA areas, in the TPJ area, and in the AI area. The firing rate of the neuron and the receptive fields of different neurons are shown in Figure 11.

| (1) |

| (2) |
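The rise-and-decay behaviour described above can be illustrated with a leaky integrator. This sketch assumes a first-order form, τ dV/dt = -V + I(t), with a Gaussian receptive field setting the input intensity; it illustrates the described behaviour and is not a reconstruction of Equations 1 and 2. The names `simulate_rate` and `stimulus_intensity` and all parameter values are hypothetical.

```python
import math
from typing import List, Tuple


def stimulus_intensity(preferred: float, stimulus: float, sigma: float) -> float:
    """Assumed Gaussian receptive field: strongest when stimulus == preferred angle."""
    return math.exp(-((stimulus - preferred) ** 2) / (2.0 * sigma ** 2))


def simulate_rate(intensity: float, stim_window: Tuple[int, int],
                  n_steps: int, tau: float, dt: float = 1.0) -> List[float]:
    """Euler integration of tau * dV/dt = -V + I(t), an assumed leaky-integrator form.

    I(t) equals `intensity` for steps in [start, stop) and 0 otherwise, so the rate
    rises towards `intensity` during the stimulus and decays back to 0 afterwards.
    Keep dt <= tau so the update never overshoots.
    """
    start, stop = stim_window
    v, trace = 0.0, []
    for k in range(n_steps):
        i_t = intensity if start <= k < stop else 0.0
        v += (dt / tau) * (-v + i_t)
        trace.append(v)
    return trace


# Neuron preferring 30 deg, stimulus at 30 deg, on for the first 100 steps.
fast = simulate_rate(stimulus_intensity(30.0, 30.0, 10.0), (0, 100), 200, tau=10.0)
slow = simulate_rate(stimulus_intensity(30.0, 30.0, 10.0), (0, 100), 200, tau=50.0)
print(round(fast[99], 3), round(slow[99], 3))
```

A larger `tau` makes the rate rise and decay more slowly, which is the role the text assigns to this parameter when it is set differently for M1/V/S1/EBA, TPJ, and AI.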

Neurons in other areas are described by Equation 3, in which is the firing rate of the postsynaptic neuron, is the firing rate of the presynaptic neuron, and is the synaptic weight between the presynaptic neuron and the postsynaptic neuron . Specifically, the neurons in each brain area of the computational model are described by Equation 4.

| (3) |

| (4) |
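As a rough illustration of how a postsynaptic rate can depend on weighted presynaptic rates, the sketch below assumes a rectified weighted sum; the actual nonlinearity and dynamics of Equations 3 and 4 may differ. The example wires a hypothetical AI neuron with the signs described in the Discussion (excitation from TPJ, inhibition from S1 and EBA); the weight magnitudes are made up.

```python
from typing import Sequence


def postsynaptic_rate(weights: Sequence[float], pre_rates: Sequence[float]) -> float:
    """Rectified weighted sum of presynaptic firing rates (assumed form of Equation 3).

    Positive weights are excitatory and negative weights inhibitory.
    """
    if len(weights) != len(pre_rates):
        raise ValueError("weights and pre_rates must have the same length")
    drive = sum(w * r for w, r in zip(weights, pre_rates))
    return max(0.0, drive)


# Hypothetical AI neuron: excitation from TPJ, inhibition from S1 and EBA.
w_ai = [1.0, -0.5, -0.5]  # order: [TPJ, S1, EBA]
print(postsynaptic_rate(w_ai, [0.8, 0.4, 0.0]))  # no visual input
print(postsynaptic_rate(w_ai, [0.8, 0.4, 0.8]))  # with visual input from EBA
```

Adding EBA input lowers the AI drive in this toy example, mirroring the inhibitory role of EBA described in the Discussion.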

Synaptic plasticity in this model is defined by Equation 5, in which represents the synaptic weight between the postsynaptic neuron and the presynaptic neuron population .

| (5) |

where

The is calculated using Equation 6.

| (6) |

where

is the STDP function reported in (?, ?, ?). Following our previous research (?), we set the parameters , , in this model.
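One widely used form of STDP function is the exponential window of Song, Miller and Abbott (2000). The sketch below implements that window for a single spike pair; whether it matches the exact function used in this model is an assumption, and the amplitudes and time constants are hypothetical placeholders, not the model's parameter values.

```python
import math


def stdp(delta_t: float, a_plus: float = 0.01, a_minus: float = 0.012,
         tau_plus: float = 20.0, tau_minus: float = 20.0) -> float:
    """Weight change for one pre/post spike pair, with delta_t = t_post - t_pre (ms).

    Pre-before-post (delta_t > 0) potentiates; post-before-pre (delta_t < 0)
    depresses; both effects decay exponentially with the spike-time difference.
    """
    if delta_t > 0:
        return a_plus * math.exp(-delta_t / tau_plus)
    if delta_t < 0:
        return -a_minus * math.exp(delta_t / tau_minus)
    return 0.0


for dt_ms in (-40, -10, 10, 40):
    print(dt_ms, round(stdp(dt_ms), 5))
```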

is the lateral inhibitory synaptic weight used to control where synaptic weights are updated, as described by Equation 7. is the firing state of the postsynaptic neuron, and is the number of firings of the postsynaptic neuron. If the firing rate of the postsynaptic neuron is greater than the threshold, its firing state is 1; otherwise it is 0. We set the threshold to 0.7 in this model.

| (7) |

where
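The firing-state definition above translates directly into code. This sketch covers only the thresholding (0.7, as stated) and the firing count; the gating rule of Equation 7 itself is not implemented, and treating the count as the number of time steps above threshold is our assumption.

```python
from typing import List, Tuple


def firing_states(rates: List[float], threshold: float = 0.7) -> Tuple[List[int], int]:
    """Binarise a postsynaptic firing-rate trace and count its firing steps.

    State is 1 where the rate exceeds `threshold` (0.7 in this model), else 0.
    The count is the number of time steps in state 1 (an assumption; counting
    upward threshold crossings would be another reading).
    """
    states = [1 if r > threshold else 0 for r in rates]
    return states, sum(states)


states, n_fire = firing_states([0.2, 0.75, 0.9, 0.7, 0.71])
print(states, n_fire)  # [0, 1, 1, 0, 1] 3
```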

Details of visual processing, such as motion perception and appearance learning, are given in our previous work (?, ?).

References

- 1. O. Blanke, M. Slater, and A. Serino. Behavioral, neural, and computational principles of bodily self-consciousness. Neuron, 88(1):145–66, 2015.

- 2. H Henrik Ehrsson, Charles Spence, and Richard E Passingham. That’s my hand! Activity in premotor cortex reflects feeling of ownership of a limb. Science, 305(5685):875–877, 2004.

- 3. Matthew Botvinick and Jonathan Cohen. Rubber hands ‘feel’ touch that eyes see. Nature, 391(6669):756, 1998.

- 4. Matthew AJ Apps and Manos Tsakiris. The free-energy self: a predictive coding account of self-recognition. Neuroscience and Biobehavioral Reviews, 41:85–97, 2014.

- 5. Nina-Alisa Hinz, Pablo Lanillos, Hermann Mueller, and Gordon Cheng. Drifting perceptual patterns suggest prediction errors fusion rather than hypothesis selection: replicating the rubber-hand illusion on a robot. In 2018 Joint IEEE 8th International Conference on Development and Learning and Epigenetic Robotics (ICDL-EpiRob), pages 125–132. IEEE.

- 6. Thomas Rood, Marcel van Gerven, and Pablo Lanillos. A deep active inference model of the rubber-hand illusion. In International Workshop on Active Inference, pages 84–91. Springer.

- 7. Antonella Maselli, Pablo Lanillos, and Giovanni Pezzulo. Active inference unifies intentional and conflict-resolution imperatives of motor control. PLoS computational biology, 18(6):e1010095, 2022.

- 8. C. R. Fetsch, G. C. DeAngelis, and D. E. Angelaki. Bridging the gap between theories of sensory cue integration and the physiology of multisensory neurons. Nat Rev Neurosci, 14(6):429–42, 2013.

- 9. L. Shams and U. Beierholm. Bayesian causal inference: A unifying neuroscience theory. Neurosci Biobehav Rev, 137:104619, 2022.

- 10. Majed Samad, Albert Jin Chung, and Ladan Shams. Perception of body ownership is driven by Bayesian sensory inference. PLoS One, 10(2):e0117178, 2015.

- 11. Wen Fang, Junru Li, Guangyao Qi, Shenghao Li, Mariano Sigman, and Liping Wang. Statistical inference of body representation in the macaque brain. Proceedings of the National Academy of Sciences, 2019.

- 12. A. Georgopoulos, A. Schwartz, and R. Kettner. Neuronal population coding of movement direction. Science, 233(4771):1416–1419, 1986.

- 13. T. P. Lillicrap and S. H. Scott. Preference distributions of primary motor cortex neurons reflect control solutions optimized for limb biomechanics. Neuron, 77(1):168–79, 2013.

- 14. MJ Prud’Homme and John F Kalaska. Proprioceptive activity in primate primary somatosensory cortex during active arm reaching movements. Journal of neurophysiology, 72(5):2280–2301, 1994.

- 15. VA Jennings, Yvon Lamour, Hugo Solis, and Christoph Fromm. Somatosensory cortex activity related to position and force. Journal of Neurophysiology, 49(5):1216–1229, 1983.

- 16. Paul E Downing, Yuhong Jiang, Miles Shuman, and Nancy Kanwisher. A cortical area selective for visual processing of the human body. Science, 293(5539):2470–2473, 2001.

- 17. J. Limanowski, A. Lutti, and F. Blankenburg. The extrastriate body area is involved in illusory limb ownership. Neuroimage, 86:514–24, 2014.

- 18. S. Ionta, L. Heydrich, B. Lenggenhager, M. Mouthon, E. Fornari, D. Chapuis, R. Gassert, and O. Blanke. Multisensory mechanisms in temporo-parietal cortex support self-location and first-person perspective. Neuron, 70(2):363–74, 2011.

- 19. A. Serino, A. Alsmith, M. Costantini, A. Mandrigin, A. Tajadura-Jimenez, and C. Lopez. Bodily ownership and self-location: components of bodily self-consciousness. Conscious Cogn, 22(4):1239–52, 2013.

- 20. M. Wawrzyniak, J. Klingbeil, D. Zeller, D. Saur, and J. Classen. The neuronal network involved in self-attribution of an artificial hand: A lesion network-symptom-mapping study. Neuroimage, 166:317–324, 2018.

- 21. M. Tsakiris, M. Costantini, and P. Haggard. The role of the right temporo-parietal junction in maintaining a coherent sense of one’s body. Neuropsychologia, 46(12):3014–8, 2008.

- 22. Jonathan Downar, Adrian P Crawley, David J Mikulis, and Karen D Davis. A multimodal cortical network for the detection of changes in the sensory environment. Nature neuroscience, 3(3):277–283, 2000.

- 23. Khalafalla O Bushara, Takashi Hanakawa, Ilka Immisch, Keiichiro Toma, Kenji Kansaku, and Mark Hallett. Neural correlates of cross-modal binding. Nature neuroscience, 6(2):190–195, 2003.

- 24. Khalafalla O Bushara, Jordan Grafman, and Mark Hallett. Neural correlates of auditory–visual stimulus onset asynchrony detection. Journal of Neuroscience, 21(1):300–304, 2001.

- 25. H Henrik Ehrsson, Katja Wiech, Nikolaus Weiskopf, Raymond J Dolan, and Richard E Passingham. Threatening a rubber hand that you feel is yours elicits a cortical anxiety response. Proceedings of the National Academy of Sciences, 104(23):9828–9833, 2007.

- 26. A. Horvath, E. Ferentzi, T. Bogdany, T. Szolcsanyi, M. Witthoft, and F. Koteles. Proprioception but not cardiac interoception is related to the rubber hand illusion. Cortex, 132:361–373, 2020.

- 27. M. Slater and H. H. Ehrsson. Multisensory integration dominates hypnotisability and expectations in the rubber hand illusion. Front Hum Neurosci, 16:834492, 2022.

- 28. Manos Tsakiris, Maike D Hesse, Christian Boy, Patrick Haggard, and Gereon R Fink. Neural signatures of body ownership: a sensory network for bodily self-consciousness. Cerebral cortex, 17(10):2235–2244, 2007.

- 29. Sotaro Shimada, Kensuke Fukuda, and Kazuo Hiraki. Rubber hand illusion under delayed visual feedback. PloS one, 4(7):e6185, 2009.

- 30. Manos Tsakiris. My body in the brain: a neurocognitive model of body-ownership. Neuropsychologia, 48(3):703–712, 2010.

- 31. K. Kilteni, A. Maselli, K. P. Kording, and M. Slater. Over my fake body: body ownership illusions for studying the multisensory basis of own-body perception. Front Hum Neurosci, 9:141, 2015.

- 32. Pablo Lanillos and Gordon Cheng. Adaptive robot body learning and estimation through predictive coding. In 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pages 4083–4090. IEEE.

- 33. Elisa Magosso. Integrating information from vision and touch: a neural network modeling study. IEEE Transactions on Information Technology in Biomedicine, 14(3):598–612, 2010.

- 34. G. Bi and M. Poo. Synaptic modification by correlated activity: Hebb’s postulate revisited. Annu Rev Neurosci, 24:139–66, 2001.

- 35. S. Song, K. D. Miller, and L. F. Abbott. Competitive Hebbian learning through spike-timing-dependent synaptic plasticity. Nat Neurosci, 3(9):919–26, 2000.

- 36. H. Z. Shouval, M. F. Bear, and L. N. Cooper. A unified model of NMDA receptor-dependent bidirectional synaptic plasticity. Proc Natl Acad Sci U S A, 99(16):10831–6, 2002.

- 37. Yuxuan Zhao, Yi Zeng, and Guang Qiao. Brain-inspired classical conditioning model. iScience, 24(1):101980, 2021.

- 38. Yi Zeng, Yuxuan Zhao, and Jun Bai. Towards robot self-consciousness (i): Brain-inspired robot mirror neuron system model and its application in mirror self-recognition. In International Conference on Brain Inspired Cognitive Systems, pages 11–21.

- 39. Yi Zeng, Yuxuan Zhao, Jun Bai, and Bo Xu. Toward robot self-consciousness (ii): Brain-inspired robot bodily self model for self-recognition. Cognitive Computation, 10(2):307–320, 2017.

Acknowledgments

Funding: This study was supported by the Key Research Program of Frontier Sciences, CAS (Grant No. ZDBS-LY-JSC013), the Strategic Priority Research Program of the Chinese Academy of Sciences (Grant No. XDB32070100), and the Beijing Municipal Commission of Science and Technology (Grant No. Z181100001518006).

Author contributions: Conceptualization, Y.Zh. and Y.Ze.; Methodology, Y.Zh.; Software, Y.Zh. and E.L.; Validation, Y.Zh. and E.L.; Formal Analysis, Y.Zh.; Investigation, Y.Zh.; Writing - Original Draft, Y.Zh., E.L. and Y.Ze.; Visualization, Y.Zh.; Supervision, Y.Ze.; Project Administration, Y.Ze.; Funding Acquisition, Y.Ze.

Competing interests: All authors declare that there is no conflict of interest.

Supplementary materials

Movie: Rubber hand illusion experiment on the iCub robot (Multisensory integration - Visual dominance, Proprioception dominance, Proprioception based)

Description:

Part I: Experiment on the iCub robot (Multisensory integration - Visual dominance): When the hand rotation angle is small (small disparity angle), the proprioceptive drift is small. The robot mainly relies on visual information for decision-making.

Part II: Experiment on the iCub robot (Multisensory integration - Proprioception dominance): When the hand rotation angle is medium (medium disparity angle), the proprioceptive drift is medium. The robot mainly relies on proprioceptive information for decision-making.

Part III: Experiment on the iCub robot (Multisensory integration - Proprioception based): When the hand rotation angle is large (large disparity angle), the proprioceptive drift is zero. The robot relies entirely on proprioceptive information for decision-making.

Movie Address: https://youtu.be/G941vAfaJ-Q