BPJDet: Extended Object Representation for Generic Body-Part Joint Detection

Abstract

Detection of human body and its parts has been intensively studied. However, most of CNNs-based detectors are trained independently, making it difficult to associate detected parts with body. In this paper, we focus on the joint detection of human body and its parts. Specifically, we propose a novel extended object representation integrating center-offsets of body parts, and construct an end-to-end generic Body-Part Joint Detector (BPJDet). In this way, body-part associations are neatly embedded in a unified representation containing both semantic and geometric contents. Therefore, we can optimize multi-loss to tackle multi-tasks synergistically. Moreover, this representation is suitable for anchor-based and anchor-free detectors. BPJDet does not suffer from error-prone post matching, and keeps a better trade-off between speed and accuracy. Furthermore, BPJDet can be generalized to detect body-part or body-parts of either human or quadruped animals. To verify the superiority of BPJDet, we conduct experiments on datasets of body-part (CityPersons, CrowdHuman and BodyHands) and body-parts (COCOHumanParts and Animals5C). While keeping high detection accuracy, BPJDet achieves state-of-the-art association performance on all datasets. Besides, we show benefits of advanced body-part association capability by improving performance of two representative downstream applications: accurate crowd head detection and hand contact estimation. Project is available in https://hnuzhy.github.io/projects/BPJDet.

Index Terms:

Body-part association, body-part joint detection, hand contact estimation, head detection, object representation1 Introduction

Human body detection [1, 2, 3, 4, 5] is a long-standing research hotspot in the computer vision field. Accurate and fast human detection in an arbitrary scene can support many down-stream vision tasks such as pedestrian person re-identification (ReID) [6, 7], person tracking [8, 9, 10] and human pose estimation [11, 12]. Also, the detection of body parts like face [13, 14], head [15, 16] and hands [17, 18] is equally important and has been extensively studied. They may serve as precursors to specific tasks like face recognition [19], crowd counting [20, 21] and hand pose estimation [22]. Although the independent detection of human body and related parts has been significantly improved, due to breakthroughs of deep CNN-based general object detectors (e.g., Faster R-CNN [23], FPN [24], RetinaNet [25] and YOLO [26]) and construction of large-scale high-quality datasets (e.g., COCO [27], CityPersons [28] and CrowdHuman [29]), the more challenging joint discovery of human body and its parts is less-addressed but meaningful. As proof, recently, we can find numerous human-related applications excavating the linkage effect of body-part, including robot teleoperation [30], person surveillance [31, 32], robust person ReID [33, 34], human parsing [35, 36, 37], hand-related object contact [38, 39, 40, 41, 42], human-object interaction (HOI) [43, 44, 45, 46], part-based 3D human body models [47, 48, 49] and human pose estimation [50, 51, 52, 53].

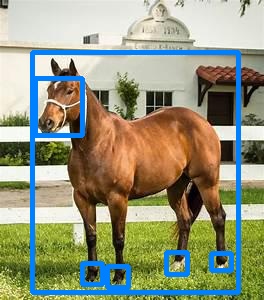

In this paper, we study the body-part joint detection task. A body part can be a face, head, hand or their arbitrary combinations as in Fig. 1. Our principal goal is to improve the accuracy of body-part association on the premise of ensuring the detection precision. Prior to ours, a few literatures have attempted to address the joint body and part detection task with two separate stages. DA-RCNN [54], PedHunter [4] and JointDet [55] focus on joint body-head detection by assuming that human head can provide complementary spatial information to the occluded body. BFJDet [56] concentrates on the similar body-face joint detection task which is much more difficult because body and face are not always one-to-one correspondence. BodyHands [18] and Zhou et al.[17] tackle the problem of detecting hands with finding the location of corresponding person. Instead of only one body part, DID-Net [57] takes the inherent correlation between the body and its two parts (hand and face) into account by building the dataset HumanParts. Hier R-CNN [58] extends HumanParts to COCOHumanParts based on COCO [27] and defines up to six body parts. However, as in Fig. 1, two-stage methods use explicit strategies to model body-part relationships by exploiting heuristic post-matching rules or learning branched association networks. Unlike them, we propose a novel single-stage Body-Part Joint Detector (BPJDet), which can jointly detect and associate body with one or more parts in a unified framework.

The association ability of proposed BPJDet mainly relies on our elaborately designed extended object representation (refer Fig. 4) that can be harmlessly applied to single-stage anchor-based or anchor-free detectors like YOLO series [26, 59, 60, 61, 62, 63, 64]. Besides bounding box, confidence and objectness contained in the classic object representation, our extension adds location offsets of body parts to it. Center-offset regression is popular in modern one-stage object detectors [65, 66] and human pose estimators [67, 68, 69, 70]. This simple yet efficient innovation has at least three advantages. (1) The relational learning of body-part can benefit from training of general detectors without needing extra association subnets. (2) The final prediction naturally implies the detected body bounding boxes and their associated parts, which avoids error-prone and tedious association post-processing. (3) This general representation enables us to jointly detect arbitrary body part or parts such as face and hands without major modifications. In experiments, we performed extensive tests on many public datasets including CityPersons [28], CrowdHuman [29], BodyHands [18] and COCOHumanParts [58] for human and the dataset Animals5C (built from AnimalPose [71] and AP-10K [72]) for quadruped animals to verify the superiority of our method. To further reflect the potential value of our advanced BPJDet, we have applied it to two closely related tasks: accurate crowd head detection and hand contact estimation.

To sum up, we mainly have following five contributions:

-

•

We propose a novel end-to-end trainable Body-Part Joint Detector (BPJDet) by extending the classic object representation with offsets of body parts.

-

•

We reveal the feasibility and expedience of joint training of bounding boxes and offsets with designing suitable multi-task loss functions by using either anchor-based or anchor-free detectors.

-

•

We verify that BPJDet is a unified and universal framework which can support joint detection of body with one or more body parts for both human and some quadruped animals.

-

•

We achieve state-of-the-art body-part association performance on four public benchmarks while maintaining high detection accuracy of body and parts.

-

•

We demonstrate the significant benefits of BPJDet’s superior body-part association ability for performance promotion in two downstream tasks.

In order to inspire the future research and application of generic body-part joint detection and association, we open source the implementation of BPJDet. In this work, we make several major extensions to our earlier conference paper [73]: (1) A more in-depth introduction to works related to the body-part joint detection task and its downstream applications in Sections 1 and 2. (2) A more detailed discussion on the inspiration and similar variants of the proposed extended object representation in Sections 3.1 and 3.2. (3) An overall procedure of the updated association decoding algorithm without limitation on the number and category of body-part as illustrated in Fig. 3 and Alg. 1. (4) A seamless modification of BPJDet by using anchor-free detectors in Section 4.5, as well as the new version BPJDetPlus with a further expansion of object representation for supporting many more multi-tasks in Section 4.6. (5) New experiments on CrowdHuman and COCOHumanParts for body-head and body-parts joint detection of human in Sections 5.3.3 and 5.3.4, respectively, and the newly built Animals5C for body-parts joint detection of five quadruped animals in Section 5.3.5. Experimental results of more ablation and anchor-free BPJDet are also reported. (6) Two downstream applications including accurate crowd head detection and hand contact estimation in Sections 5.4.1 and 5.4.2 for explaining benefits of using the advanced body-head and body-hand association ability, respectively.

2 Related Work

2.1 Human Body and Part Detection

We here only discuss emerging CNN-based detectors that achieve promising results over traditional approaches using hand-crafted features. For human body detection, it belongs to either general object detection containing the person category [23, 24, 25, 26, 65, 66, 61, 64] trained on common datasets like COCO [27], or pedestrian detection [2, 3, 5, 74, 75] trained on specific benchmarks CityPersons [28] and CrowdHuman [29]. A key challenge in human body detection is occlusion. Therefore, many researches propose customized loss functions [2, 3], improved NMS [74, 5] or tailored distribution model [75] for alleviating problems of crowded people detection. PedHunter [4] designs a mask-guided module to leverage the head information to enhance the pedestrian feature. Currently, with the proposed burdensome yet powerful Detection Transformer (DETR) [76], some query-based crowd pedestrian detection methods [77, 78, 79, 80] are dominant. On the other hand, detection of body part has also been intensively and extensively studied. Detection of face [13, 14], head [15, 16, 81] and hand [58, 18] are all vigorous fields. Face detectors are usually based on well-designed networks and trained on dedicated datasets. While, head and hand detectors are rarely studied alone, and often used to facilitate downstream tasks. Precise design of body or part detector is beyond the scope of this paper. We focus on the joint detection of them.

2.2 Body-Part Joint Detection

We mainly pay attention to the joint detection of human body and one or more body parts like face, head and hands. First of all, body-head joint detection is the most popular couple, because the human head is a salient structural body part and plays a vital role in recognizing people, especially when occlusion occurs. For example, DA-RCNN [54] proposes to handle the crowd occlusion problem in human detection by capturing and cross-optimizing body and head parts in pairs with double anchor RPN. JointDet [55] presents a head-body relationship discriminating module to perform relational learning between heads and human bodies. Recently, BFJDet [56] firstly investigates the performance of body-face joint detection, and proposes a bottom-up scheme that outputs body-face pairs for each pedestrian. It also adopts independent detection followed by pairwise association. For body-hand joint detection, [17] devises heuristic strategies to match hand-raising gestures with body skeletons [67] in classroom scenes. BodyHands [18] proposes a novel association network based on Mask R-CNN [11] to jointly detect hands and the body location, and introduces a new corresponding hand-body association dataset. Besides, body-parts joint detection is also important for instance-level human-parts analysis. DID-Net [57] adopts Faster R-CNN [23] with two carefully designed detectors for the human body and two body parts (hand and face) separately. Hier R-CNN [58] extends body parts up to six, and modifies Mask R-CNN [11] with a new designed hierarchy branch inspired by FCOS [66] for subordinate relationship learning of body and parts. Unlike all of them, our approach BPJDet is not restricted to one or more body parts, and can handle detection and association in an end-to-end way.

2.3 Applications of Body-Part Association

Superior body-part association is meaningful for boosting many downstream tasks. According to their dependence on body part associations, we can roughly divide them into two categories: (1) low-level application: such as person surveillance [31, 32], robust person ReID [33, 34], human parsing [35, 36, 37] and human pose estimation [50, 51, 52, 53]. (2) high-level understanding: such as robot teleoperation [30], hand-related object contact [38, 39, 40, 41, 42] and human-object interaction (HOI) [43, 44, 45, 46]. Actually, there are essential differences between the two categories. The low-level tasks usually apply body-part associations to their methods for performance enhancing like robust person search by adding head [32], occluded person ReID relying on body part-based features [34], keypoints regressors using body parts dependence [50] and part affinity fields for learning to associate body parts [67, 52, 53]. Body-part association is an alternative for them. While, the high-level tasks are often inseparable from body-part associations. For example, human-machine systems should recognize the physical contact state of a body-part like hand [38, 30]. Human contact estimation [39] and understanding [40] may also need to analyze physical states of hands including their visual appearance and surrounding local context. In the well-defined field of HOI, body-part association is even more essential for understanding human activities [43, 45] and interactions [44, 46]. To confirm the benefits of BPJDet, we choose to strengthen two basic representative tasks: low-level accurate crowd head detection and high-level hand contact estimation by establishing our proposed stronger body-part associations.

3 Preliminaries and Motivation

In this section, we present and discuss works that largely motivate us to detect human body with regressing its parts jointly by proposing an extended object representation.

3.1 Object Representation

Most modern object detectors include three categories: box-based [23, 26, 24, 25, 61] using predefined anchors, point-based [82, 65, 66, 83, 84, 63, 64] free of anchor boxes and query-based [76, 78, 79, 80, 85] representing objects with a set of queries. Those box-based detectors heavily rely on handcrafted anchors that include parameters like number, size and aspect ratio. The object representation of them is rare and difficult to expand. Those point-based detectors directly predict object using keypoint or center point regression without needing of anchor boxes. This mechanism can eliminate the hyperparameters finetuning of anchors, achieve similar performance to anchor-based detectors with less computational cost, and keep better generalization ability. The last query-based DETRs abandon the traditional object representation and streamline the detection training pipeline by viewing it as a set prediction problem. However, their implicit encoder-decoder design does not align with our concept of extending representation. Instead, we propose a novel extended object representation by exploring both box-based (anchor-based) and point-based (anchor-free) detectors.

Prior to us, some literatures have attempted to expand the point-based object representation, especially for human pose estimation that has an essential overlap with the object detection task. For example, CenterNet [65] models objects using heatmap-based center points and subtly represents human poses as a 2K-dimensional property of the center point. Similarly, FCPose [69] adapts single-stage anchor-free FCOS [66] with proposed dynamic filters to process person detections by predicting keypoint heatmaps and regressing offsets. Other single-stage pose estimators like SPM [68], AdaptivePose [70] and YOLO-Pose [86] also learn to regress keypoints as series of joint offsets. Recently, ED-Pose [87] presents an explicit box detection framework which unifies the contextual learning between global human-level and local keypoint-level information based on DN-DETR [85]. It re-considers human pose estimation task as two explicit box detection processes with a unified representation and regression supervision, which much resembles our joint body (global) and part (local) detection design.

3.2 Body-Part Association

Currently, most body-part joint detection methods [57, 58, 55, 56, 18] are based on two separate stages including detection and post-matching. The inherent drawbacks of post-matching for body-part association are often unavoidable. For example, once the true main body of one detected body-part is undetected or improperly boxed, it will be a high probability event by mistakenly allocating this body-part to one person bounding box it just falls within, even if the error is quite obvious or unreasonable. In Section 5.3, we give some ordinary failure cases of body-hand in Fig. 8 and body-parts in Fig. 9 to explicitly reflect their defects. To alleviate this problem, we now have at least three strategies originally designed for human pose estimation to complete a single-stage body-part association. They are (1) center-offset regression as in CenterNet [65] and FCOS [66], (2) part affinity fields as in OpenPose [67, 53] and OpenPifPaf [52], and (3) hierarchical offset regression as in SPM [68]. We illustrate their discrepancies in Fig. 2. These correlation methods have greatly inspired our work. We apply the first intuitive yet still sufficiently effective center-offset regression strategy to validate the desirability to extend this single-stage association to traditional object representation.

4 Our Method

4.1 Extended Object Representation

In our proposed BPJDet, we train a dense single-stage anchor-based detector to directly predict a set of objects , which contains human body set and body-part set concurrently. A typical extended object prediction is concatenated of the bounding box and corresponding center point displacement .

| (1) | ||||

It locates an object with a tight bounding box where coordinates are the center position, and are the width and height of , respectively. It also records the relative displacement of center point of body-part () or affiliated body () to each . Here, is the body-part number. Considering that body-part may be one or more components (e.g., two both hands with , and the COCOHumanParts with ), we allow to be compatible with dynamic 2D offsets. The and are predicted objectness and classification scores, respectively. For these regressed offsets, we will explain how to decode them for the body-part association with fusing detected body-part set in Section 4.4.

Intuitively, we can benefit a lot from this extended representation. On one hand, an appropriate large body bounding box possesses both strong local characteristics and weak global features (such as surrounding background and anatomical position) for its body part offset regression. This enables the network to learn their intrinsic relationships. On the other hand, compared to methods [58, 55, 56, 18] training multiple subnetworks or stages, mixing and up for synchronous learning can be leveraged directly in BPJDet without the need of complicated post-processing. By designing a one-stage network that uses shared heads to jointly predict and , our approach can achieve high accuracy with much less computational burden during training and inference.

4.2 Overall Network Architecture

Our network structure is shown in Fig. 3. We choose the recent cost-effective one-stage YOLOv5 [61] as the basic backbone . Specifically, following YOLOv5, we feed one RGB image as the input to , keep its beneficial data augmentation strategies (e.g., Mosaic and MixUp), and output four grids from four multi-scale heads. Each grid contains dense object outputs produced from anchor channels and output channels. Supposing that one target object is centered at in the feature map , the corresponding grid at cell should be highly confident. When having defined anchor boxes for the grid , we will generate anchor channels at each cell . Furthermore, to obtain robust capability, YOLOv5 allows detection redundancy of multiple objects and four surrounding cells matching for each cell. This redundancy makes sense to the detection of body or part position , but it is not completely facilitative to the regression of offsets . We interpret this in Section 4.3.

Then, we explain the arrangement of one prediction from output channels of which is related to -th anchor box at grid cell . As shown in the example of grid cells in Fig. 4, one typical predicted embedding consists of four parts: the objectness or probability that an object exists, the bounding box candidate , the object classification scores , and the body-part offsets candidate . Thus, . To transform the candidate into coordinates relative to the grid cell , we apply conversions as below:

| (2) | ||||

where is the sigmoid function that limits model predictions in the range of . Similarly, this detection strategy can be extended to the offset of body-part. A body-part’s intermediate offsets are predicted in the grid coordinates and relative to the grid cell origin using:

| (3) |

In the way, and are constrained to ranges and , respectively. To learn and , losses are applied in the grid space. Sample targets of and are shown in Fig. 4.

4.3 Multi-Loss Functions

For a set of predicted grids , we firstly build target grids set following formats introduced in Section 4.2. Then, we mainly compute following four loss components:

| (4) | |||

| (5) | |||

| (6) | |||

| (7) |

For the bounding box regression loss , we adopt the complete intersection over union (CIoU) across four grids . in both the objectness loss and classification loss is the binary cross-entropy. The multiplier in is used for penalizing candidates without hitting target grid cells (), and encouraging candidates around target anchor ground-truths (). The coefficient is a balance weight for different grid levels in YOLOv5. For the body-part displacement loss , we utilize the mean squared error (MSE) to measure offset outputs and normalized targets . Before that, we apply a filter with the visibility label of body-part to remove out those false-positive offset predictions from . Finally, we calculate the total training loss as follows:

| (8) |

where is the batch size. The , , and are weights of losses , , and , respectively.

4.4 Association Decoding

In inference, we process predicted objects set to get final results. First of all, we apply the conventional Non-Maximum Suppression (NMS) to filter out false-positive and redundant bounding boxes of body and part objects:

| (9) | |||

| (10) |

where and are thresholds for object confidence and IoU overlap, respectively. Considering different recognition difficulties and overlapping levels, we define distinct thresholds and for filtering body and part boxes. We fetch confidence of each predicted object by , where for body object and for part object. Then, we rescale and in and to obtain real and by the following transformations:

| (11) | |||

| (12) |

where and are offsets from grid cell centers. The scalar maps the down-sampled size of box and offset back to the original image shape.

Finally, we update associated body parts of each left body object in by fusing its regressed center point offsets () with the remaining candidate part objects . Specifically, we search each box in part objects with its nearest body-part offset belonging to body object. The part box that has a large inner IoU () with the body box will be selected. Inner IoU here represents the ratio of intersection area on smaller body-part bounding box instead of the larger union of body-part and human body.

| (13) | ||||

We here naturally assume that the smaller body-part box should be completely inside its associated larger human body box if using ground-truth labels. During the prediction stage, detected boxes do not always tightly surround the objects. It may be too large or too small. We cannot simply set . In contrast, a very small will obviously lead to many wrong body part pair candidates. Therefore, we should assign a reasonable to keep a balance between the quantity and quality of matching pairs. This experimental discussion is presented in Section 5.2.2. We summarize the overall decoding process in Alg. 1. We report all evaluation results on the final updated body and parts boxes set pairs .

4.5 BPJDet using Anchor-Free Detectors

To reveal the universality of our proposed extended representation, we here interpret how to integrate it with advanced anchor-free detectors YOLOv5u [61] and YOLOv8 [64]. YOLOv8 is the latest iteration of YOLO series, which employs state-of-the-art backbone [83, 84] and neck architectures. It adopts an objectness-free split head, which contributes to better accuracy and a more efficient detection process. YOLOv5u modernizes YOLOv5 by originating from its foundational architecture and adopting an anchor-free head as in YOLOv8. These similarities allow us to extensively reuse the detailed designs in the previous subsections, with a key focus on representation adjustment.

Specifically, in the anchor-free paradigm, detection is formulated as a dense inference of every pixel in feature maps. For each position in , the prediction head regresses a 4D vector , which represents the relative offsets from the four sides (left, top, right and bottom) of a bounding box anchored in . We reformulate the anchor-based extended representation in Eqn. 1 into the anchor-free one as below.

| (14) | ||||

where the objectness score is omitted. The meaning of and are totally the same as and , respectively. The bounding box is the distinctive design for point-based detectors. In both YOLOv5u and YOLOv8, they adopt the same task-aligned assigner as in TOOD [83] to learn the probabilities of values around the continuous locations of target bounding boxes , which includes a CIoU loss and a Distribution Focal Loss (DFL) [84] . Please refer [83] and [84] for the explicit definition of these two losses. The classification loss uses the binary cross-entropy. For the body-part displacement loss , we synchronously generate target GTs in one identical box assigner, and optimize corresponding outputs using MSE. The final total training loss is as follows:

| (15) |

which is an updating of in Eqn. 8. is the batch size. The , , and are weights of each loss. In inference, the association decoding part keeps unchanged.

4.6 BPJDetPlus: Further Extended Representation

For supporting the downstream application hand contact estimation, we build a new architecture by extending the proposed object representation with additional spaces to estimate the contact state of a detected hand. We rename this new framework as BPJDetPlus.

Specifically, we keep extending the object representation with adding that represents four contact states of two hands . The new typical prediction inherited from in Eqn. 1 is constituted as below:

| (16) | ||||

where contains hand contact states. The or is a specific contact state () of one hand part. Following [39], we define a new hand contact states loss to be the sum of four independent binary cross-entropy losses corresponding to four possible categories.

| (17) |

where is the predicted hand contact state ranging from 0 to 1, and is the ground-truth state being 0, 1 or 2 meaning No, Yes or Unsure, respectively. We do not penalize with Unsure state. The final loss of BPJDetPlus is updated as:

| (18) |

which is an extension of the multi-loss function in Eqn. 8. is the batch size. The loss weight of is empirically set as 0.01 by online searching similar to in Section 5.2.1. We train BPJDetPlus on the train-set of ContactHands [39] and evaluate its performance on the test-set. In inference, the final predicted hand contact state score is a weighted sum of two probabilities embedded in the hand and its associated body instance. More results are in Section 5.4.2.

5 Experimental Results

5.1 Experimental Settings

5.1.1 Datasets

Body-Part Joint Detection: We firstly expect to evaluate the association quality of proposed BPJDet, while maintaining high object detection accuracy. We choose four wildly used benchmarks including CityPersons [28], CrowdHuman [29], BodyHands [18] and COCOHumanParts [58]. The former two are for pedestrian detection tasks. In CityPersons, it has 2,975 and 500 images for training and validation, respectively. It only provides box labels of pedestrians. In CrowdHuman, there are 15,000 images for training and 4,375 images for validation. It provides box labels of both body and head. Following BFJDet [56], we use its re-annotated box labels of visible faces for conducting corresponding body-face joint detection experiments. Besides, we supply the body-head joint detection experiment on CrowdHuman as a strong baseline. The third dataset BodyHands is for hand-body association tasks. It has 18,861 and 1,629 images in train-set and test-set with annotations for hand and body locations and correspondences. We implement body-hand joint detection task in it for comparing. The last dataset COCOHumanParts contains 66,808 images with 64,115 in train-set and 2,693 in val-set. It has inherited bounding-box of person category from official COCO, and labeled the locations of six body-parts (face, head, right-hand/left-hand and right-foot/left-foot) in each instance if it is visible. We execute body-parts joint detection task in it for comparing with Hier R-CNN [58]. The statistics of these four datasets are summarized in Table I.

Besides, to verify the generality of BPJDet about body-part association, we reconstruct a dataset Animals5C with five kinds of quadruped animals (e.g., dog, cat, sheep, horse and cow) based on AnimalPose [71] and AP-10K [72]. We adopt their bounding box labels of the animal body, and generate boxes of five parts (e.g., head and four feet) using keypoints. Finally, we obtain 4,608 images and 6,117 body instances in AnimalPose as the train-set, and 2,000 images and 7,962 body instances in AP-10K as the val-set. Note, we no longer learn to distinguish animal categories.

Downstream Applications: (1) We select three datasets CrowdHuman val-set [29], SCUT Head Part_B [81] and Crowd of Heads Dataset (CroHD) train-set [9] for the accurate crowd head detection application. The last two datasets do not include body boxes, and were originally released for head detection and dense head tracking tasks, respectively. Thus, it is not feasible to train BPJDet directly on them. Instead, we decide to apply body-head joint detection models trained on CrowdHuman to them in a cross-domain generalization manner. SCUT Head Part_B mostly focuses on indoor scenes like classrooms and meeting rooms. CroHD is collected across 9 HD sequences captured from an elevated viewpoint. All sequences are open scenes like crossings and train stations with super high crowd densities. CroHD only provides annotations in 4 train-set sequences. Statistics and samples of them are in Table I and Fig. 11. (2) For the second application hand contact estimation, we select the dedicated dataset ContactHands [39] for improving performance by leveraging the advanced body-hand association ability of BPJDet. ContactHands is a large-scale dataset of in-the-wild images for hand detection and contact recognition. It has annotations for 20,506 images, of which 18,877 form the train-set and 1,629 form the test-set. There are 52,050 and 5,983 hand instances in train and test sets, respectively. Some samples are shown in Fig. 12.

| Datasets | Images | Instance | ||||

| person | head | face | hand | foot | ||

| CityPersons [28] | 3,475 | 18,201 | 17,954 | 7,922 | — | — |

| CrowdHuman [29] | 19,375 | 439,046 | 439,046 | 248,903 | — | — |

| BodyHands [18] | 20,490 | 63,095 | — | — | 57,898 | — |

| COCOHumanParts [58] | 66,808 | 268,030 | 232,392 | 160,102 | 204,827 | 162,099 |

| SCUT Head Part_B [81] | 2,405 | — | 43,930 | — | — | — |

| CroHD Train-set [9] | 5,741 | — | 1,188,793 | — | — | — |

| ContactHands [39] | 20,506 | — | — | — | 58,033 | — |

5.1.2 Evaluation Metric

For detection evaluation of body and parts, we report the standard VOC Average Precision (AP) metric with IoU=0.5. We also present the log-average miss rate on False Positive Per Image (FPPI) in the range of [10-2, 100] shortened as MR-2 [1] of body and its parts. To assess the association quality of BPJDet, we report the log-average miss matching rate (mMR-2) on FPPI of body-part pairs in [10-2, 100], which is originally proposed by BFJDet [56] for exhibiting the proportion of body-face pairs that are miss-matched:

| (19) |

where is the number of matched body-part pairs with box IoU threshold as 0.5, and represents the total number of pairs. The final value of mMR-2 can be naturally acquired by log-averaging all mMR values in the FPPI range. For BPJDet on the body-hand joint detection task, we present Conditional Accuracy and Joint AP defined by BodyHands [18] of body-hand pairs. Finally, for body-parts joint detection task on COCOHumanParts, we follow the evaluation protocols defined in Hier R-CNN [58]. We report detection performance with a series of APs (AP.5:.95, AP.5, AP.75, APM, APL) as in COCO metrics, as well as APsubs based on subordinate relationship which reflect the body-parts association state. Although Hier R-CNN further divides small objects into new small and tiny categories, it does not measure them coherently in the APsubs, we thus abandoned the comparison of APS and AP to show fairness. Evaluations on Animals5C are similar to COCOHumanParts.

For the accurate crowd head detection task, we report various metrics including Precision, Recall, F1 scores and COCO mean Average Precision (mAP) following CroHD [9]. For the hand contact estimation task, following settings in ContactHands [39], we report four Average Precision (AP) values corresponding to four physical hand-contact states (including No-Contact, Self-Contact, Person-Contact and Object-Contact), and the mAP value of them.

5.1.3 Implementation Details

We adopt the PyTorch 1.10 and 4 RTX-3090 GPUs for training. Depending on the complexity and scale of datasets, we train on the CityPersons, CrowdHuman, BodyHands, COCOHumanParts and Animals5C datasets for 100, 150, 100, 150 and 100 epochs using the SGD optimizer, respectively. Following the original YOLOv5 [61] architecture, we train three kinds of models including BFJDet-S/M/L by controlling the depth and width of bottlenecks in . All input images are resized and zero-padded to for being consistent with input shape settings in JointDet [55], BFJDet [56], BodyHands [18] and Hier R-CNN [58]. We adopt effective data training augmentations including mosaic, mix-up and random scaling but leave out test time augmentation (TTA). YOLOv5u [61] and YOLOv8 [64] are similar to the YOLOv5 except the prediction head and loss functions. We only train BPJDet based on them using the Large parameters BFJDet-L5u and BFJDet-L8u.

As for many hyperparameters, for YOLOv5, we keep most of them unchanged, such as adaptive anchors boxes , the grid balance weight , and the loss weights , and . We set based on ablation studies Section 5.2.1. For anchor-free detectors YOLOv5u and YOLOv8, the loss weights , , and . When testing, we use thresholds , , , and for applying on . The value of is a trade-off choice which is discussed in Section 5.2.2. Besides, due to the absence of left and right annotation of hands in BodyHands, we should have to set body-part number but reduce body-part categories from 2 to 1. For COCOHumanParts and Animals5C, we set body-part number (categories) to 6 and 5, respectively.

5.2 Ablation Studies

5.2.1 Loss Weight

We firstly investigate the hyperparameter . For simplicity, we conduct all ablation experiments on the CityPersons dataset using BPJDet-S and training about joint body-face detection task. The input shape is . Total epoch is expanded to 150 for searching the best result. We uniformly sample from 0.005 to 0.030 with step 0.005 for training, and report metrics including MR-2s and average mMR-2 of each best model. As in Fig. 5a, we can clearly find that the model performance is optimal when . A larger or smaller will lead to inferior results.

In addition to investigating the influence of on MR-2 and average mMR-2, we also report the metric AP for body and face to reflect its impact. As shown in Fig. 5b, we still obtain the optimal AP for face when . Although we do not get the best AP for body as shown in Fig. 5c by setting , we decide to give priority to ensuring the accuracy of the body-part matching after the trade-off between detection and association.

5.2.2 Inner IoU Threshold

In the Section 4.4, we introduce a new and vital threshold for better allocating part boxes to the body boxes they belong to. We conduct the threshold searching task on the BodyHands [18] val-set using the already trained body-hand joint detection model BPJDet-S. As shown in Fig. 6, we select from 0.4 to 0.8 with a step 0.05, and observe the change trend of indicators Cond. Accuracy and Joint AP. Finally, we set for a better trade-off between conditional accuracy and joint AP.

5.2.3 Influence of Association Task

To investigate the influence of extended representation for body-part association task on detection accuracy, we additionally trained all models including BPJDet-S/M/L/L5u/L8u on the CityPersons dataset while removing the association task (). All AP results of body and part (face) are shown in Fig. 7 top. We can observe that regardless of the type of detector used, there is no significant difference in the detection accuracy before and after the introduction of extended representation, i.e., the addition of body-part association in BPJDet. Even in some cases, especially for anchor-free detectors, the association task may lead to higher detection performance. These results indicate that our extended proposed representation is indeed harmless and not sensitive about backbones. Besides, we also evaluated the average mMR-2 of BPJDet when not using our extended representation for jointly learning the association task. As shown in Fig. 7 bottom, the trivial body-part matching results of them are very poor, which reveals the necessity of association design.

5.3 Quantitative and Visual Comparison

5.3.1 Body-Face Joint Detection

We compare joint body-face detection performance of our BPJDet with method BFJDet [56] on two benchmarks: CityPersons and CrowdHuman.

CityPersons: The Table II shows results on the val-set of CityPersons. Following strategies in [3, 56], results are reported on four subsets of Reasonable (occlusion 35%), Partial (10% occlusion 35%), Bare (occlusion 10%) and Heavy (occlusion 35%). We also report the average value (Average) of these four results. For BPJDet using YOLOv5, comparing with RetinaNet+BFJ which obtains similar body AP but far inferior face AP to ours, BPJDet-L exceeds it of mMR-2 by 13.1%, 13.8%, 13.0% and 16.9% in four subsets, respectively. Comparing with FPN+BFJ that has similar face AP but higher body AP to ours, BPJDet-L also surpasses it of mMR-2 by 6.3%, 2.9%, 7.5% and 7.3% in four subsets. Especially, in the Heavy subset, our association superiority is the most prominent, which reveals that our BPJDet has an advantage for addressing miss-matchings in crowded scenes. When using advanced anchor-free detectors which achieved higher AP results of body and face than BPJDet-L, BPJDet-L5u and BPJDet-L8u can further improve the association ability in four subsets. We also obtained two new lower Average mMR-2 results 29.3% and 30.6% than 31.5% of the anchor-based BPJDet-L. This proves the generalization of extended representation.

| Methods | AP body | AP face | body-face mMR | ||||

| Reasonable | Partial | Bare | Heavy | Average | |||

| RetinaNet+POS [25, 56] | 78.5 | 35.3 | 40.0 | 42.8 | 38.7 | 67.0 | 47.1 |

| RetinaNet+BFJ [25, 56] | 79.3 | 36.2 | 39.5 | 41.5 | 38.5 | 63.1 | 45.7 |

| FPN+POS [24, 56] | 80.6 | 65.5 | 33.5 | 32.7 | 34.1 | 56.6 | 39.2 |

| FPN+BFJ [24, 56] | 84.4 | 68.0 | 32.7 | 30.6 | 33.0 | 53.5 | 37.5 |

| BPJDet-S (Ours) | 75.1 | 58.2 | 29.3 | 28.9 | 29.3 | 57.2 | 36.2 |

| BPJDet-M (Ours) | 76.7 | 58.8 | 27.5 | 31.6 | 24.9 | 55.8 | 35.0 |

| BPJDet-L (Ours) | 75.5 | 61.0 | 26.4 | 27.7 | 25.5 | 46.2 | 31.5 |

| BPJDet-L5u (Ours) | 81.5 | 63.3 | 22.9 | 24.0 | 22.1 | 48.4 | 29.3 |

| BPJDet-L8u (Ours) | 80.2 | 64.2 | 24.3 | 21.1 | 26.1 | 50.9 | 30.6 |

CrowdHuman: The Table III shows results on the more challenging CrowdHuman. Our method BPJDet using either anchor-based YOLOv5 or anchor-free detectors (YOLOv5u and YOLOv8) achieves considerable gains in all metrics comparing with BFJDet based on one-stage RetinaNet [25] or two-stage FPN [24] and CrowdDet [5]. Remarkably, BPJDet-L8u has achieved the lowest mMR-2 value 49.9%, which is 2.4% lower than the previous best method CrowdDet+BFJ. Moreover, our BPJDet surpasses CrowdDet+BFJ on body-face association with keeping higher detection performance of body and face, which means dealing with more instance matching issues. These again demonstrate the universality and superiority of our method.

| Methods | Stage | MR-2 body | MR-2 face | AP body | AP face | body-face mMR |

| RetinaNet+POS [25, 56] | One | 52.3 | 60.1 | 79.6 | 58.0 | 73.7 |

| RetinaNet+BFJ [25, 56] | One | 52.7 | 59.7 | 80.0 | 58.7 | 63.7 |

| FPN+POS [24, 56] | Two | 43.5 | 54.3 | 87.8 | 70.3 | 66.0 |

| FPN+BFJ [24, 56] | Two | 43.4 | 53.2 | 88.8 | 70.0 | 52.5 |

| CrowdDet+POS [5, 56] | Two | 41.9 | 54.1 | 90.7 | 69.6 | 64.5 |

| CrowdDet+BFJ [5, 56] | Two | 41.9 | 53.1 | 90.3 | 70.5 | 52.3 |

| BPJDet-S (Ours) | One | 41.3 | 45.9 | 89.5 | 80.8 | 51.4 |

| BPJDet-M (Ours) | One | 39.7 | 45.0 | 90.7 | 82.2 | 50.6 |

| BPJDet-L (Ours) | One | 40.7 | 46.3 | 89.5 | 81.6 | 50.1 |

| BPJDet-L5u (Ours) | One | 37.0 | 45.1 | 91.8 | 81.9 | 50.4 |

| BPJDet-L8u (Ours) | One | 36.9 | 45.0 | 91.5 | 82.1 | 49.9 |

5.3.2 Body-Hand Joint Detection

We conduct joint body-hand detection experiments on BodyHands [18], and compare our BPJDet with several methods proposed in BodyHands. As shown in Table IV, our BPJDet using either anchor-based or anchor-free detectors outperforms all other methods by a significant margin. With achieving highest hand AP 87.6% and conditional accuracy 86.93% of body, BPJDet-L5u largely improves the previous best Joint AP of body and hands by 21.55%. We give more qualitative comparisons of joint body-hand detection trained on BodyHands in Fig. 8. The compared masked images are fetched from BodyHands paper. Our BPJDet can detect many unlabeled objects, and associate hands that method proposed in BodyHands failed to match. This illustrates why we have an overwhelming Joint AP advantage. All these results further validate the generalizability of our extended object representation and its impressive strength on body and part relationship discovery.

| Methods | Hand AP | Cond. Accuracy | Joint AP |

| OpenPose [67] | 39.7 | 74.03 | 27.81 |

| Keypoint Communities [88] | 33.6 | 71.48 | 20.71 |

| MaskRCNN+FD [11, 18] | 84.8 | 41.38 | 23.16 |

| MaskRCNN+FS [11, 18] | 84.8 | 39.12 | 23.30 |

| MaskRCNN+LD [11, 18] | 84.8 | 72.83 | 50.42 |

| MaskRCNN+IoU [11, 18] | 84.8 | 74.52 | 51.74 |

| BodyHands [18] | 84.8 | 83.44 | 63.48 |

| BodyHands* [18] | 84.8 | 84.12 | 63.87 |

| BPJDet-S (Ours) | 84.0 | 85.68 | 77.86 |

| BPJDet-M (Ours) | 85.3 | 86.80 | 78.13 |

| BPJDet-L (Ours) | 85.9 | 86.91 | 84.39 |

| BPJDet-L5u (Ours) | 87.6 | 86.93 | 85.42 |

| BPJDet-L8u (Ours) | 86.8 | 85.07 | 78.44 |

5.3.3 Body-Head Joint Detection

To further verify the strong adaptability of our proposed BPJDet, we implement the joint body-head detection test on the CrowdHuman [29] dataset, and compare our body AP and MR-2 results with popular pedestrian detection methods towards crowded scenes. All experiment settings are the same as training of body-face pairs. The results are shown in Tabel V. In order to compare its association difference with the joint body-face detection task, we replicate the body face pair detection results of BPJDet for reference.

We can observe that, comparing with the body-face joint detection task, both of the AP and MR-2 results become worse, although the mMR-2 shows a better body-head association effect (improving from 49.9 to 46.1). On one hand, this may reflect that head detection is harder than face detection due to showing less appearance feature when face area is invisible. On the other hand, training body detection jointly with a challenging body-part (e.g., full-view head) may be a burden on its AP (dropping from the best result 91.8 to 87.4) and MR-2 (dropping from the best result 36.9 to 41.1) performance. Nevertheless, compared with the previous best body-head joint detection methods, including DA-RCNN [54] and JointDet [55], we still present considerable advantages in MR-2 performance. Finally, we also reported SOTA methods focusing on independent detection of body objects in crowded people. The so far lowest MR-2 of body is maintained by PedHunter [4]. Our BPJDet-L8u model for body-face joint detection task achieves a lower result with it (36.9 vs. 39.5). The best AP of body is held by Iter-E2EDET [80], which is based on the cumbersome DETR. However, such query-based methods are slightly inferior to their box-based counterparts in performance of body MR-2. We consider alleviating this drawback by exploring the DETR for body-part joint detection in future.

| Methods | Joint Detect? | MR-2 body | MR-2 head | AP body | AP head | body-head mMR | ||||||

| CrowdHuman baseline [29] | ✗ | 50.4 | 52.1 | 85.0 | 78.0 | — | ||||||

| Adaptive-NMS [74] | ✗ | 49.7 | — | 84.7 | — | — | ||||||

| PBM [89] | ✗ | 43.3 | — | 89.3 | — | — | ||||||

| CrowdDet [5] | ✗ | 41.4 | — | 90.7 | — | — | ||||||

| AEVB [90] | ✗ | 40.7 | — | — | — | — | ||||||

| AutoPedestrian [91] | ✗ | 40.6 | — | — | — | — | ||||||

| Beta RCNN(KLth = 7) [75] | ✗ | 40.3 | — | 88.2 | — | — | ||||||

| PedHunter [4] | ✗ | 39.5 | — | — | — | — | ||||||

| Sparse-RCNN [79] | ✗ | 44.8 | — | 91.3 | — | — | ||||||

| Deformable-DETR [78] | ✗ | 43.7 | — | 91.5 | — | — | ||||||

| PED-DETR [77] | ✗ | 43.7 | — | 91.6 | — | — | ||||||

| Iter-E2EDET [80] | ✗ | 41.6 | — | 92.5 | — | — | ||||||

| DA-RCNN [54] | ✓ | 52.3 | 50.0 | — | — | — | ||||||

| DA-RCNN + J-NMS [54] | ✓ | 51.8 | 49.7 | — | — | — | ||||||

| JointDet w/o RDM [55] | ✓ | 47.0 | 48.7 | — | — | — | ||||||

| JointDet [55] | ✓ | 46.5 | 48.3 | — | — | — | ||||||

| BPJDet-S (Ours) | ✓ | 45.9 | 47.8 | 85.0 | 79.4 | 48.0 | ||||||

| BPJDet-M (Ours) | ✓ | 45.4 | 46.8 | 85.3 | 80.5 | 46.1 | ||||||

| BPJDet-L (Ours) | ✓ | 46.2 | 48.0 | 83.8 | 78.6 | 46.4 | ||||||

| BPJDet-L5u (Ours) | ✓ | 41.1 | 50.2 | 87.4 | 79.9 | 49.9 | ||||||

| BPJDet-L8u (Ours) | ✓ | 41.8 | 50.4 | 86.6 | 79.8 | 49.4 | ||||||

| Methods | Joint Detect? | MR-2 body | MR-2 face | AP body | AP face | body-face mMR | ||||||

| BPJDet-S (Ours) | ✓ | 41.3 | 45.9 | 89.5 | 80.8 | 51.4 | ||||||

| BPJDet-M (Ours) | ✓ | 39.7 | 45.0 | 90.7 | 82.2 | 50.6 | ||||||

| BPJDet-L (Ours) | ✓ | 40.7 | 46.3 | 89.5 | 81.6 | 50.1 | ||||||

| BPJDet-L5u (Ours) | ✓ | 37.0 | 45.1 | 91.8 | 81.9 | 50.4 | ||||||

| BPJDet-L8u (Ours) | ✓ | 36.9 | 45.0 | 91.5 | 82.1 | 49.9 | ||||||

5.3.4 Body-Parts Joint Detection

We conduct joint body-parts detection experiments on the COCOHumanParts [58] dataset to explain that our generic BPJDet is not limited to body part numbers and categories. All results are shown in Table VI. On the premise of similar parameters, our YOLOv5-S based model BPJDet-S is far better than a series of ResNet-50 based baselines (e.g., Faster R-CNN [23], Mask R-CNN [11] RetinaNet [25], FCOS [66] and Hier R-CNN [58]) in terms of all and per categories of AP metrics. Although Mask R-CNN [11] and Hier R-CNN [58] employ backbones with larger parameters like ResNet-101 and ResNeXt-101, our BPJDet-S still maintains a leading advantage over them. After incorporating various enhanced components, including high-capacity backbones, deformable convs [92], and training time augmentation, Hier R-CNN achieves its best results of all categories APs (Hier-X101) that remain comparable to BPJDet-M/L/L5u/L8u narrowly. And note that the cost is more complex structural design and greater computation. In terms of detection accuracy, we reveal the necessity and urgency of exploring advanced detectors like YOLOv5 and YOLOv8, rather than the ancient Faster R-CNN and its variants.

Besides, for the subordinate relationship of body and parts, it can be observed that our models have only a slight decline under all categories APsub metrics and have achieved discernible leadership in multiple sub indicators, reflecting advantages of our method in the body-parts association task. We also report body-head mMR-2 results. Similarly, as results in CrowdHuman dataset shown in Table V, BPJDet-L may not necessarily achieve a better association result than BPJDet-M due to its more detected challenging body and head instances. The difference is that the mMR-2 values here are much lower, confirming the fact that the dataset COCOHumanParts is simpler than CrowdHuman which has a relative high population density. Finally, in Fig. 9, we show some comparative examples where detection results of our BPJDet-L are better than Hier-X101. We can intuitively see that our method can easily avoid some obviously unreasonable association pairs, which are troublesome to methods like Hier R-CNN with separate designs of detection and association.

| Methods | Joint Detect? | All categories APs | Per categories APs | All categories APs (subordination) | body-head mMR | ||||||||||||||

| AP.5:.95 | AP.5 | AP.75 | APM | APL | person | head | face | r-hand | l-hand | r-foot | l-foot | AP | AP | AP | AP | AP | |||

| Faster-C4-R50 [23] | ✗ | 32.0 | 55.5 | 32.3 | 54.9 | 52.4 | 50.5 | 47.5 | 35.5 | 27.2 | 24.9 | 19.2 | 19.3 | — | — | — | — | — | — |

| Faster-FPN-R50 [11] | ✓ | 34.8 | 60.0 | 35.4 | 55.4 | 52.2 | 51.4 | 48.7 | 36.7 | 31.7 | 29.7 | 22.4 | 22.9 | 14.5 | 31.4 | 11.2 | 18.2 | 24.1 | — |

| Faster-FPN-R50 [11] | ✓ | 34.8 | 60.0 | 35.4 | 55.4 | 52.2 | 51.4 | 48.7 | 36.7 | 31.7 | 29.7 | 22.4 | 22.9 | 19.1 | 38.5 | 16.0 | 22.4 | 33.6 | — |

| RetinaNet-R50 [25] | ✗ | 32.2 | 54.7 | 33.3 | 54.5 | 53.8 | 49.7 | 47.1 | 33.7 | 28.7 | 26.7 | 19.7 | 20.2 | — | — | — | — | — | — |

| FCOS-R50 [66] | ✗ | 34.1 | 58.6 | 34.5 | 55.1 | 55.1 | 51.1 | 45.7 | 40.0 | 29.8 | 28.1 | 22.2 | 21.9 | — | — | — | — | — | — |

| Faster-FPN-R101 [11] | ✗ | 36.0 | 62.1 | 36.5 | 57.2 | 54.8 | 52.6 | 49.3 | 36.9 | 33.3 | 30.3 | 24.3 | 24.4 | — | — | — | — | — | — |

| Faster-FPN-X101 [11] | ✗ | 36.7 | 62.8 | 37.4 | 57.4 | 55.3 | 53.6 | 49.7 | 37.3 | 33.8 | 32.2 | 25.0 | 25.1 | — | — | — | — | — | — |

| Hier-R50 [58] | ✓ | 36.8 | 65.7 | 36.2 | 53.9 | 47.5 | 53.2 | 50.9 | 41.5 | 31.3 | 29.3 | 25.5 | 26.1 | 33.3 | 67.1 | 28.3 | 29.9 | 47.1 | — |

| Hier-R101 [58] | ✗ | 37.2 | 65.9 | 36.7 | 55.1 | 50.3 | 54.0 | 50.4 | 41.6 | 31.6 | 30.1 | 26.0 | 26.6 | — | — | — | — | — | — |

| Hier-X101 [58] | ✓ | 38.8 | 68.1 | 38.5 | 56.6 | 52.3 | 55.4 | 52.3 | 43.2 | 33.5 | 32.0 | 27.4 | 27.9 | 36.6 | 69.7 | 32.5 | 32.6 | 51.1 | — |

| Hier-R50 [58] | ✓ | 38.6 | 67.9 | 38.3 | 55.9 | 51.7 | — | — | — | — | — | — | — | 36.0 | 70.8 | 31.6 | 32.0 | 49.6 | — |

| Hier-R50 [58] | ✓ | 39.3 | 68.8 | 39.0 | 56.5 | 49.4 | — | — | — | — | — | — | — | 37.3 | 70.8 | 33.5 | 33.1 | 52.0 | — |

| Hier-R50 [58] | ✓ | 40.6 | 70.1 | 40.7 | 57.5 | 51.5 | — | — | — | — | — | — | — | 37.3 | 72.5 | 33.0 | 35.4 | 48.9 | — |

| Hier-X101 [58] | ✓ | 40.3 | 70.1 | 40.1 | 58.2 | 53.6 | — | — | — | — | — | — | — | 37.1 | 72.1 | 32.9 | 34.6 | 49.7 | — |

| Hier-X101 [58] | ✓ | 40.5 | 70.2 | 40.4 | 57.6 | 52.7 | — | — | — | — | — | — | — | 38.6 | 72.3 | 35.0 | 35.2 | 53.5 | — |

| Hier-X101 [58] | ✓ | 42.0 | 71.6 | 42.3 | 59.0 | 53.3 | — | — | — | — | — | — | — | 38.8 | 72.3 | 35.5 | 37.4 | 50.3 | — |

| BPJDet-S (Ours) | ✓ | 38.9 | 65.5 | 39.4 | 59.1 | 49.7 | 56.3 | 53.5 | 41.9 | 34.7 | 33.7 | 25.5 | 26.5 | 38.4 | 64.4 | 38.9 | 57.7 | 47.5 | 29.8 |

| BPJDet-M (Ours) | ✓ | 42.0 | 68.9 | 43.2 | 62.3 | 54.6 | 59.8 | 55.7 | 44.7 | 38.7 | 37.6 | 28.6 | 29.2 | 41.7 | 68.1 | 42.9 | 61.6 | 52.9 | 26.6 |

| BPJDet-L (Ours) | ✓ | 43.6 | 70.6 | 45.1 | 63.8 | 61.8 | 61.3 | 56.6 | 46.3 | 40.4 | 39.6 | 30.2 | 30.9 | 43.3 | 69.8 | 44.7 | 63.2 | 58.9 | 26.8 |

| BPJDet-L5u (Ours) | ✓ | 44.0 | 71.9 | 44.8 | 65.7 | 66.5 | 63.7 | 55.4 | 47.0 | 40.8 | 39.3 | 30.5 | 31.5 | 43.5 | 70.7 | 44.5 | 65.2 | 65.7 | 25.2 |

| BPJDet-L8u (Ours) | ✓ | 43.5 | 71.7 | 44.2 | 65.4 | 63.1 | 60.6 | 55.3 | 47.1 | 40.5 | 39.4 | 30.4 | 31.2 | 41.6 | 68.6 | 42.4 | 59.9 | 55.1 | 29.7 |

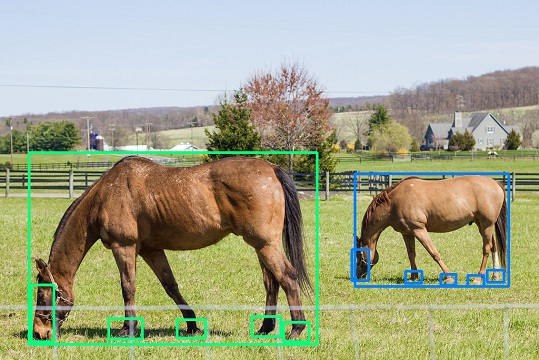

5.3.5 Body-Parts Joint Detection of Animals

For exploratory purposes, we choose the reconstructed dataset Animals5C to conduct the body-parts joint detection of animals. Due to the fact that the GT boxes of each part of an animal are automatically generated through 2D keypoints and body size, they do not tightly surround the object. Therefore, although each loss in BPJDet can converge in training, the final APs are not satisfactory. Nevertheless, we can quantitatively confirm that BPJDet does work with producing relative high results of precision and recall (about 80% in the val-set). In addition, as shown in Fig. 10, our qualitative results on images from the val-set also demonstrate that the body-parts joint detection of animals is successful. These results indicate that our tentative generalization testing of BPJDet on quadruped animals is persuasive and meaningful.

5.4 Benefits of Generic BPJDet

Associating each detected part (e.g., head and hand) to a human body is beneficial for many downstream tasks. This subsection demonstrates the benefits of this ability by our proposed generic BPJDet for two such tasks: accurate crowd head detection and hand contact estimation. Without losing generality, we only train BPJDet using anchor-based detectors in these applications.

5.4.1 Body-Head for Accurate Crowd Head Detection

As discussed in Section 5.1.1, the chosen two datasets SCUT Head dataset [81] and CroHD train-set [9] are not suitable for retraining or finetuning BPJDet. Although the larger in-the-wild CrowdHuman dataset has similar congested populations comparing to them, they mutually have scene-level inter domain differences. Thus, we attempt to conduct cross domain generalization experiments on these two target datasets with specific domains. All results are summarized in Table VII and Fig. 11. We also report non-cross-domain evaluation results on the CrowdHuman val-set.

| Datasets | Methods | Detection Metrics | |||

| Precision | Recall | F1 Score | mAP | ||

| Crowd- Human val-set [29] | BPJDet-S | 90.6+20.9 | 74.2-9.3 | 81.6-0.4 | 72.5-7.9 |

| BPJDet-S | 69.7 | 83.5 | 82.0 | 80.2 | |

| BPJDet-M | 72.1 | 83.7 | 82.5 | 80.7 | |

| BPJDet-L | 76.6 | 81.6 | 82.0 | 78.6 | |

| BPJDet-S | 81.1+10.4 | 81.7-1.8 | 82.6+0.6 | 79.0-1.2 | |

| BPJDet-M | 82.6+10.5 | 81.9-1.8 | 83.1+0.6 | 79.5-1.2 | |

| BPJDet-L | 85.5+8.9 | 80.3-1.3 | 83.1+1.1 | 77.9-0.7 | |

| SCUT Head Part_B [81] | BPJDet-S | 94.0+9.5 | 76.7-6.7 | 84.5-0.8 | 75.8-6.0 |

| BPJDet-S | 84.5 | 83.4 | 85.3 | 81.8 | |

| BPJDet-M | 84.2 | 82.8 | 84.8 | 81.2 | |

| BPJDet-L | 85.1 | 80.4 | 84.3 | 79.1 | |

| BPJDet-S | 90.4+5.9 | 81.7-1.7 | 85.9+0.6 | 80.5-1.3 | |

| BPJDet-M | 90.6+6.4 | 80.9-1.9 | 85.5+0.7 | 79.6-1.6 | |

| BPJDet-L | 91.7+6.6 | 79.3-1.1 | 85.1+0.8 | 78.2-0.9 | |

| CroHD train-set [9] | BPJDet-S | 67.8+17.9 | 35.2-14.3 | 46.3-4.8 | 27.1-8.8 |

| BPJDet-S | 49.9 | 49.5 | 51.1 | 35.9 | |

| BPJDet-M | 53.3 | 46.3 | 50.0 | 33.1 | |

| BPJDet-L | 58.2 | 42.2 | 49.0 | 31.3 | |

| BPJDet-S | 61.5+11.6 | 46.8-2.7 | 53.2+2.1 | 34.7-1.2 | |

| BPJDet-M | 62.4+9.2 | 44.2-2.1 | 51.7+1.7 | 32.1-1.0 | |

| BPJDet-L | 64.3+6.1 | 41.2-1.0 | 50.2+1.2 | 30.9-0.4 | |

Specifically, to evaluate BPJDet trained on Crowdhuman directly on two unknown target domains, we need to lower the NMS confidence threshold to better generalize, rather than using a larger threshold (e.g., 0.5 or 0.9) as in supervised learning tests. Without losing generality, we here set confidence thresholds as 0.2 for NMS filtering. However, simply relying on lower thresholds is not robust, which will lead to more candidates being obtained, while also introducing more false positive predictions. This means better recall rates, F1 scores and mAP, but worse precision. We can check related metric variations of BPJDet-S () and BPJDet-S () on three datasets in Table VII for more details. Despite improvements in recall, F1, and mAP values, the precision is rapidly declining. More qualitative visualization of false positives for cross domain testing is shown in the left column of Fig. 11.

Fortunately, our proposed BPJDet can predict body-head pairs rather than just apply head detection. To reduce false positives, we can utilize these pairs to perform a double check, retaining only candidates who detect body and head. Using this strategy, we can greatly improve precision and slightly increase F1 scores with minimal recall and mAP decreases in three test sets, as shown in Table VII (BPJDet-S/M/L vs. BPJDet-S/M/L). Qualitative comparison is shown in Fig. 11. All these impressive improvements benefit from the reliable body-head association ability of BPJDet. This experiment has explored our proposed BPJDet with body-head joint detection models for realizing stable, robust and accurate human head detection in crowded scenes. It also demonstrates the great potential of BPJDet for cross domain generalization in head detection, which is meaningful to following in-the-wild applications such as surveillance, crowd counting and general head tracking.

5.4.2 Body-Hand for Hand Contact Estimation

Following the test setting of physical contact analysis in BodyHands [18], we here explore the benefits of body-hand joint detection for recognizing or estimating the physical contact state of a hand on dataset ContactHands [39]. Hand contact estimation is a high-level task that has many further applications such as human understanding, activity recognition and AR/VR. Generic hand contact estimation is a complex problem. ContactHands has defined four contact states: No-Contact, Self-Contact, Person-Contact and Object-Contact. They are not mutually exclusive considering multiple states of one hand. And the Person-Contact category is the most challenging one due to the difficulty of distinguishing it with Self-Contact. It is nontrivial to determine if one hand is part of the same body (Self-Contact) or a different body (Person-Contact). By utilizing the visual appearance of a hand and its surrounding local context, BodyHands [18] proposed two approaches as listed in Table VIII to exclusively improve the baseline of Person-Contact estimation by leveraging the body-hand association. Methods in [39, 18] are all based on ResNet-101.

| Methods | APNC | APSC | APPC | APOC | mAP |

| Mask R-CNN [11, 39] | 60.52 | 51.62 | 33.79 | 67.43 | 53.31 |

| ContactHands [39] | 62.48 | 54.31 | 39.51 | 73.34 | 57.41 |

| Heuristic Method [18] | 62.48 | 54.31 | 40.89 | 73.34 | 57.56 |

| ContactHands [39] | 63.90 | 59.30 | 42.01 | 70.49 | 58.93 |

| End-to-end Method [18] | 64.74 | 56.12 | 47.09 | 74.32 | 60.56 |

| BPJDetPlus-S (Ours) | 58.87 | 54.69 | 45.51 | 66.62 | 56.42 |

| BPJDetPlus-M (Ours) | 62.67 | 56.16 | 46.33 | 67.48 | 58.16 |

| BPJDetPlus-L (Ours) | 62.98 | 57.39 | 50.32 | 69.55 | 60.06 |

| 0.0 | 0.1 | 0.2 | 0.3 | 0.4 | 0.5 | 0.6 | 0.7 | 0.8 | 0.9 | 1.0 | |

| 1.0 | 0.9 | 0.8 | 0.7 | 0.6 | 0.5 | 0.4 | 0.3 | 0.2 | 0.1 | 0.0 | |

| APNC | 55.51 | 56.39 | 56.99 | 57.58 | 58.02 | 58.39 | 58.87 | 58.75 | 58.85 | 58.89 | 58.76 |

| APSC | 52.18 | 52.85 | 53.38 | 53.91 | 54.32 | 54.51 | 54.69 | 54.85 | 54.83 | 54.76 | 54.91 |

| APPC | 38.60 | 41.14 | 42.66 | 43.29 | 43.87 | 44.90 | 45.51 | 45.00 | 44.27 | 44.22 | 43.39 |

| APOC | 63.30 | 65.02 | 65.60 | 65.94 | 66.23 | 66.48 | 66.62 | 66.66 | 66.65 | 66.50 | 66.38 |

| mAP | 52.40 | 53.85 | 54.66 | 55.18 | 55.61 | 56.07 | 56.42 | 56.32 | 56.15 | 56.09 | 55.86 |

Similarly, we implemented our BPJDetPlus on ContactHands as explained in Section 4.6. As in Table IX, we empirically assign weights and of the hand and body instances as 0.6 and 0.4 by offline searching based on the trained BPJDetPlus-S model. Relying solely on tight hand instances () or loose body instances () to estimate the hand contact state is insufficient and not optimal. Finally, we summarize our results in Table VIII. Without the need for meticulously designed attention mechanisms or many additional tuning steps like in [39, 18], our method BPJDetPlus-L improves the most challenging APPC from the best 47.09% to 50.32%, which profoundly benefits from the stronger body-hand association ability. The largest gap appears in APOC values (69.55% vs. 74.32%) due to the applying of a pre-trained universal object detector to detect all other common objects in the image in methods [39, 18]. Nonetheless, we achieve a comparable mAP 60.06% with the SOTA 60.56%. More qualitative results comparison of our method with the SOTA method in ContactHands [39] is shown in Fig. 12. These results certificate the strong adaptability of our proposed extended representation and effortless performance gains of using body-hand pairs for the hand contact estimation task.

6 Discussion and Conclusion

In this paper, we pointed out many problems with existing body-part joint detection methods, including inefficient separated branches of detection and association, reliance on heuristic rules to design association modules, and addiction to outdated detectors. Compared to some popular human-centered topics such as pedestrian detection, face detection and pose estimation, the joint detection of body-part is less-explored. We thus thoroughly investigated technical difficulties related to body-part joint detection and its potential to assist downstream tasks. To promote this community, we propose a novel generic single-stage body-part joint detector named BPJDet to address the challenging paired object detection and association problem. Inspired by center-offset regression in general object detection and human pose estimation, we extend the traditional object representation by appending body-part displacements, and design favorable multi-loss functions to enable joint training of detection and association tasks. Furthermore, BPJDet is not limited to a specific or single body part. And it is also not picky about using anchor-based or anchor-free detectors. Quantitative SOTA results and impressive qualitative performance on four public body-part or body-parts datasets of human as well as one body-parts dataset of quadruped animals suffice to demonstrate the robustness, adaptability and superiority of our proposed BPJDet. Besides, we have conducted tests on two closely related downstream applications, including accurate crowd head detection and hand contact estimation, to further verify obvious benefits of the advanced body-part association ability of BPJDet.

In summary, we envision that our BPJDet can serve as a strong baseline on body-part joint detection benchmarks and may benefit many other applications. Nonetheless, the performance of BPJDet in some difficult scenarios is not satisfactory, as shown of some missed and failure cases in Fig. 8, 9 and 12. We assume that these challenges may be alleviated by utilizing more powerful backbones such as transformer-based DETRs, applying unsupervised learning with large-scale unlabeled images, or exploring advanced strategies for more robust multi-task joint learning. In addition, body-part pairs can help with many trendy tasks, such as stable multi-person/head tracking, fine-grained human-object interaction (HOI) and generic human-object contact detection. We will study them in the future.

Acknowledgments

We acknowledge the effort from authors of human-related datasets including CityPersons, CrowdHuman, BodyHands, COCOHumanParts, SCUT Head Part_B, CroHD and ContactHands. These datasets make researches and downstream applications about generic body-part joint detection and association possible.

References

- [1] P. Dollar, C. Wojek, B. Schiele, and P. Perona, “Pedestrian detection: An evaluation of the state of the art,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 34, no. 4, pp. 743–761, 2011.

- [2] S. Zhang, L. Wen, X. Bian, Z. Lei, and S. Z. Li, “Occlusion-aware r-cnn: detecting pedestrians in a crowd,” in European Conference on Computer Vision, 2018, pp. 637–653.

- [3] X. Wang, T. Xiao, Y. Jiang, S. Shao, J. Sun, and C. Shen, “Repulsion loss: Detecting pedestrians in a crowd,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 7774–7783.

- [4] C. Chi, S. Zhang, J. Xing, Z. Lei, S. Z. Li, and X. Zou, “Pedhunter: Occlusion robust pedestrian detector in crowded scenes,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 34, no. 07, 2020, pp. 10 639–10 646.

- [5] X. Chu, A. Zheng, X. Zhang, and J. Sun, “Detection in crowded scenes: One proposal, multiple predictions,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2020, pp. 12 214–12 223.

- [6] D. Li, X. Chen, Z. Zhang, and K. Huang, “Learning deep context-aware features over body and latent parts for person re-identification,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017, pp. 384–393.

- [7] M. Ye, J. Shen, G. Lin, T. Xiang, L. Shao, and S. C. Hoi, “Deep learning for person re-identification: A survey and outlook,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 44, no. 6, pp. 2872–2893, 2021.

- [8] X. Zhou, V. Koltun, and P. Krähenbühl, “Tracking objects as points,” in European Conference on Computer Vision. Springer, 2020, pp. 474–490.

- [9] R. Sundararaman, C. De Almeida Braga, E. Marchand, and J. Pettre, “Tracking pedestrian heads in dense crowd,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2021, pp. 3865–3875.

- [10] W. Luo, J. Xing, A. Milan, X. Zhang, W. Liu, and T.-K. Kim, “Multiple object tracking: A literature review,” Artificial Intelligence, vol. 293, p. 103448, 2021.

- [11] K. He, G. Gkioxari, P. Dollár, and R. Girshick, “Mask r-cnn,” in Proceedings of the IEEE International Conference on Computer Vision, 2017, pp. 2961–2969.

- [12] H.-S. Fang, J. Li, H. Tang, C. Xu, H. Zhu, Y. Xiu, Y.-L. Li, and C. Lu, “Alphapose: Whole-body regional multi-person pose estimation and tracking in real-time,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022.

- [13] P. Hu and D. Ramanan, “Finding tiny faces,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017, pp. 951–959.

- [14] J. Deng, J. Guo, E. Ververas, I. Kotsia, and S. Zafeiriou, “Retinaface: Single-shot multi-level face localisation in the wild,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2020, pp. 5203–5212.

- [15] T.-H. Vu, A. Osokin, and I. Laptev, “Context-aware cnns for person head detection,” in Proceedings of the IEEE International Conference on Computer Vision, 2015, pp. 2893–2901.

- [16] C. Le, H. Ma, X. Wang, and X. Li, “Key parts context and scene geometry in human head detection,” in IEEE International Conference on Image Processing. IEEE, 2018, pp. 1897–1901.

- [17] H. Zhou, F. Jiang, and R. Shen, “Who are raising their hands? hand-raiser seeking based on object detection and pose estimation,” in Asian Conference on Machine Learning. PMLR, 2018, pp. 470–485.

- [18] S. Narasimhaswamy, T. Nguyen, M. Huang, and M. Hoai, “Whose hands are these? hand detection and hand-body association in the wild,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2022, pp. 4889–4899.

- [19] J. Deng, J. Guo, N. Xue, and S. Zafeiriou, “Arcface: Additive angular margin loss for deep face recognition,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2019, pp. 4690–4699.

- [20] H. Idrees, K. Soomro, and M. Shah, “Detecting humans in dense crowds using locally-consistent scale prior and global occlusion reasoning,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 37, no. 10, pp. 1986–1998, 2015.

- [21] D. B. Sam, S. V. Peri, M. N. Sundararaman, A. Kamath, and R. V. Babu, “Locate, size, and count: accurately resolving people in dense crowds via detection,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 43, no. 8, pp. 2739–2751, 2020.

- [22] L. Ge, H. Liang, J. Yuan, and D. Thalmann, “Real-time 3d hand pose estimation with 3d convolutional neural networks,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 41, no. 4, pp. 956–970, 2018.

- [23] S. Ren, K. He, R. Girshick, and J. Sun, “Faster r-cnn: Towards real-time object detection with region proposal networks,” Advances in Neural Information Processing Systems, vol. 28, 2015.

- [24] T.-Y. Lin, P. Dollár, R. Girshick, K. He, B. Hariharan, and S. Belongie, “Feature pyramid networks for object detection,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017, pp. 2117–2125.

- [25] T.-Y. Lin, P. Goyal, R. Girshick, K. He, and P. Dollár, “Focal loss for dense object detection,” in Proceedings of the IEEE International Conference on Computer Vision, 2017, pp. 2980–2988.

- [26] J. Redmon, S. Divvala, R. Girshick, and A. Farhadi, “You only look once: Unified, real-time object detection,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2016, pp. 779–788.

- [27] T.-Y. Lin, M. Maire, S. Belongie, J. Hays, P. Perona, D. Ramanan, P. Dollár, and C. L. Zitnick, “Microsoft coco: Common objects in context,” in European Conference on Computer Vision. Springer, 2014, pp. 740–755.

- [28] S. Zhang, R. Benenson, and B. Schiele, “Citypersons: A diverse dataset for pedestrian detection,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017, pp. 3213–3221.

- [29] S. Shao, Z. Zhao, B. Li, T. Xiao, G. Yu, X. Zhang, and J. Sun, “Crowdhuman: A benchmark for detecting human in a crowd,” arXiv preprint arXiv:1805.00123, 2018.

- [30] Q. Gao, Z. Ju, Y. Chen, Q. Wang, Y. Zhao, and S. Lai, “Parallel dual-hand detection by using hand and body features for robot teleoperation,” IEEE Transactions on Human-Machine Systems, 2023.

- [31] Z. Xiong, Z. Yao, Y. Ma, and X. Wu, “Vikingdet: A real-time person and face detector for surveillance cameras,” in IEEE International Conference on Advanced Video and Signal Based Surveillance. IEEE, 2019, pp. 1–7.

- [32] X. Shu, Y. Tao, R. Qiao, B. Ke, W. Wen, and B. Ren, “Head and body: Unified detector and graph network for person search in media,” arXiv preprint arXiv:2111.13888, 2021.

- [33] C. Ding, K. Wang, P. Wang, and D. Tao, “Multi-task learning with coarse priors for robust part-aware person re-identification,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 44, no. 3, pp. 1474–1488, 2020.

- [34] V. Somers, C. De Vleeschouwer, and A. Alahi, “Body part-based representation learning for occluded person re-identification,” in Proceedings of the IEEE Winter Conference on Applications of Computer Vision, 2023, pp. 1613–1623.

- [35] S. Zhang, X. Cao, G.-J. Qi, Z. Song, and J. Zhou, “Aiparsing: Anchor-free instance-level human parsing,” IEEE Transactions on Image Processing, vol. 31, pp. 5599–5612, 2022.

- [36] L. Yang, Q. Song, Z. Wang, Z. Liu, S. Xu, and Z. Li, “Quality-aware network for human parsing,” IEEE Transactions on Multimedia, 2022.

- [37] K. Liu, O. Choi, J. Wang, and W. Hwang, “Cdgnet: Class distribution guided network for human parsing,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2022, pp. 4473–4482.

- [38] S. Brahmbhatt, C. Tang, C. D. Twigg, C. C. Kemp, and J. Hays, “Contactpose: A dataset of grasps with object contact and hand pose,” in European Conference on Computer Vision. Springer, 2020, pp. 361–378.

- [39] S. Narasimhaswamy, T. Nguyen, and M. H. Nguyen, “Detecting hands and recognizing physical contact in the wild,” Advances in Neural Information Processing Systems, vol. 33, pp. 7841–7851, 2020.

- [40] D. Shan, J. Geng, M. Shu, and D. F. Fouhey, “Understanding human hands in contact at internet scale,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2020, pp. 9869–9878.

- [41] L. Muller, A. A. Osman, S. Tang, C.-H. P. Huang, and M. J. Black, “On self-contact and human pose,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2021, pp. 9990–9999.

- [42] Y. Chen, S. K. Dwivedi, M. J. Black, and D. Tzionas, “Detecting human-object contact in images,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2023.

- [43] Y.-L. Li, L. Xu, X. Liu, X. Huang, Y. Xu, S. Wang, H.-S. Fang, Z. Ma, M. Chen, and C. Lu, “Pastanet: Toward human activity knowledge engine,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2020, pp. 382–391.

- [44] X. Wu, Y.-L. Li, X. Liu, J. Zhang, Y. Wu, and C. Lu, “Mining cross-person cues for body-part interactiveness learning in hoi detection,” in European Conference on Computer Vision. Springer, 2022, pp. 121–136.

- [45] Y.-L. Li, X. Liu, X. Wu, Y. Li, Z. Qiu, L. Xu, Y. Xu, H.-S. Fang, and C. Lu, “Hake: a knowledge engine foundation for human activity understanding,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022.

- [46] J. Lim, V. M. Baskaran, J. M.-Y. Lim, K. Wong, J. See, and M. Tistarelli, “Ernet: An efficient and reliable human-object interaction detection network,” IEEE Transactions on Image Processing, vol. 32, pp. 964–979, 2023.

- [47] A. A. Osman, T. Bolkart, D. Tzionas, and M. J. Black, “Supr: A sparse unified part-based human representation,” in European Conference on Computer Vision. Springer, 2022, pp. 568–585.

- [48] S.-Y. Su, T. Bagautdinov, and H. Rhodin, “Danbo: Disentangled articulated neural body representations via graph neural networks,” in European Conference on Computer Vision. Springer, 2022, pp. 107–124.

- [49] M. Mihajlovic, S. Saito, A. Bansal, M. Zollhoefer, and S. Tang, “Coap: Compositional articulated occupancy of people,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2022, pp. 13 201–13 210.

- [50] M. Dantone, J. Gall, C. Leistner, and L. Van Gool, “Body parts dependent joint regressors for human pose estimation in still images,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 36, no. 11, pp. 2131–2143, 2014.

- [51] H. Zhang, J. Cao, G. Lu, W. Ouyang, and Z. Sun, “Learning 3d human shape and pose from dense body parts,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 44, no. 5, pp. 2610–2627, 2020.

- [52] S. Kreiss, L. Bertoni, and A. Alahi, “Openpifpaf: Composite fields for semantic keypoint detection and spatio-temporal association,” IEEE Transactions on Intelligent Transportation Systems, vol. 23, no. 8, pp. 13 498–13 511, 2021.

- [53] Z. Cao, G. Hidalgo, T. Simon, S.-E. Wei, and Y. Sheikh, “Openpose: Realtime multi-person 2d pose estimation using part affinity fields,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 43, no. 01, pp. 172–186, 2021.

- [54] K. Zhang, F. Xiong, P. Sun, L. Hu, B. Li, and G. Yu, “Double anchor r-cnn for human detection in a crowd,” arXiv preprint arXiv:1909.09998, 2019.

- [55] C. Chi, S. Zhang, J. Xing, Z. Lei, S. Z. Li, and X. Zou, “Relational learning for joint head and human detection,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 34, no. 07, 2020, pp. 10 647–10 654.

- [56] J. Wan, J. Deng, X. Qiu, and F. Zhou, “Body-face joint detection via embedding and head hook,” in Proceedings of the IEEE International Conference on Computer Vision, 2021, pp. 2959–2968.

- [57] X. Li, L. Yang, Q. Song, and F. Zhou, “Detector-in-detector: Multi-level analysis for human-parts,” in Asian Conference on Computer Vision. Springer, 2019, pp. 228–240.

- [58] L. Yang, Q. Song, Z. Wang, M. Hu, and C. Liu, “Hier r-cnn: Instance-level human parts detection and a new benchmark,” IEEE Transactions on Image Processing, vol. 30, pp. 39–54, 2020.

- [59] J. Redmon and A. Farhadi, “Yolo9000: better, faster, stronger,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017, pp. 7263–7271.

- [60] C.-Y. Wang, A. Bochkovskiy, and H.-Y. M. Liao, “Scaled-yolov4: Scaling cross stage partial network,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2021, pp. 13 029–13 038.

- [61] G. Jocher, “Ultralytics yolov5,” 2020. [Online]. Available: https://github.com/ultralytics/yolov5

- [62] C.-Y. Wang, A. Bochkovskiy, and H.-Y. M. Liao, “Yolov7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2023.

- [63] S. Xu, X. Wang, W. Lv, Q. Chang, C. Cui, K. Deng, G. Wang, Q. Dang, S. Wei, Y. Du et al., “Pp-yoloe: An evolved version of yolo,” arXiv preprint arXiv:2203.16250, 2022.

- [64] G. Jocher, A. Chaurasia, and J. Qiu, “Ultralytics yolov8,” 2023. [Online]. Available: https://github.com/ultralytics/ultralytics

- [65] X. Zhou, D. Wang, and P. Krähenbühl, “Objects as points,” arXiv preprint arXiv:1904.07850, 2019.

- [66] Z. Tian, C. Shen, H. Chen, and T. He, “Fcos: Fully convolutional one-stage object detection,” in Proceedings of the IEEE International Conference on Computer Vision, 2019, pp. 9627–9636.

- [67] Z. Cao, T. Simon, S.-E. Wei, and Y. Sheikh, “Realtime multi-person 2d pose estimation using part affinity fields,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017, pp. 7291–7299.

- [68] X. Nie, J. Feng, J. Zhang, and S. Yan, “Single-stage multi-person pose machines,” in Proceedings of the IEEE International Conference on Computer Vision, 2019, pp. 6951–6960.