22institutetext: Shenzhen SiBright Co. Ltd., Shenzhen, Guangdong 518052 China

22email: [email protected]

Bounding Box Tightness Prior for Weakly Supervised Image Segmentation

Abstract

This paper presents a weakly supervised image segmentation method that adopts tight bounding box annotations. It proposes generalized multiple instance learning (MIL) and smooth maximum approximation to integrate the bounding box tightness prior into the deep neural network in an end-to-end manner. In generalized MIL, positive bags are defined by parallel crossing lines with a set of different angles, and negative bags are defined as individual pixels outside of any bounding boxes. Two variants of smooth maximum approximation, i.e., -softmax function and -quasimax function, are exploited to conquer the numeral instability introduced by maximum function of bag prediction. The proposed approach was evaluated on two pubic medical datasets using Dice coefficient. The results demonstrate that it outperforms the state-of-the-art methods. The codes are available at https://github.com/wangjuan313/wsis-boundingbox.

Keywords:

Weakly supervised image segmentation Bounding box tightness prior Multiple instance learning Smooth maximum approximation Deep neural networks.1 Introduction

In recent years, image segmentation has been made great progress with the development of deep neural networks in a fully-supervised manner [16, 12, 3]. However, collecting large-scale training set with precise pixel-wise annotation is considerably labor-intensive and expensive. To tackle this issue, there have been great interests in the development of weakly supervised image segmentation. All kinds of supervisions have been considered, including image-level annotations [1, 19], scribbles [10], bounding boxes [15, 5], and points [2]. This work focuses on image segmentation by employing supervision of bounding boxes.

In the literature, some efforts have been made to develop the weakly supervised image segmentation methods adopting the bounding box annotations. For example, Rajchl et al. [15] developed an iterative optimization method for image segmentation, in which a neural network classifier was trained from bounding box annotations. Khoreva et al. [6] employed GrabCut [18] and MCG proposals [14] to obtain pseudo label for image segmentation. Hsu et al. [4] exploited multiple instance learning (MIL) strategy and mask R-CNN for image segmentation. Kervadec et al. [5] leveraged the tightness prior to a deep learning setting via imposing a set of constraints on the network outputs for image segmentation.

In this work, we present a generalized MIL formulation and smooth maximum approximation to integrate the bounding box tightness prior into the network in an end-to-end manner. Specially, we employ parallel crossing lines with a set of different angles to obtain positive bags and use individual pixels outside of any bounding boxes as negative bags. We consider two variants of smooth maximum approximation to conquer the numeral instability introduced by maximum function of bag prediction. The experiments on two public medical datasets demonstrate that the proposed approach outperforms the state-of-the-art methods.

2 Methods

2.1 Preliminaries

2.1.1 Problem description

Suppose denotes an input image, and is its corresponding pixel-level category label, in which is the number of categories of the objects. The image segmentation problem is to obtain the prediction of , denoted as , for the input image .

In the fully supervised image segmentation setting, for an image , its pixel-wise category label is available during training. Instead, in this study we are only provided its bounding box label . Suppose there are bounding boxes, then its bounding box label is , where the location label is a 4-dimensional vector representing the top left and bottom right points of the bounding box, and is its category label.

2.1.2 Deep neural network

This study considers deep neural networks which output the pixel-wise prediction of the input image, such as Unet [16], FCN [12], etc. Due to the possible overlaps of objects of different categories in images, especially in medical images, the image segmentation problem is formulated as a multi-label classification problem in this study. That is, for a location in the input image, it outputs a vector with elements, one element for a category; each element is converted to the range of by the sigmoid function.

2.2 MIL baseline

2.2.1 MIL definition and bounding box tightness prior

Multiple instance learning (MIL) is a type of supervised learning. Different from the traditional supervised learning which receives a set of training samples which are individually labeled, MIL receives a set of labeled bags, each containing many training samples. In MIL, a bag is labeled as negative if all of its samples are negative, a bag is positive if it has at least one sample which is positive.

Tightness prior of bounding box indicates that the location label of bounding box is the smallest rectangle enclosing the whole object, thus the object must touch the four sides of its bounding box, and does not overlap with the region outside its bounding box. The crossing line of a bounding box is defined as a line with its two endpoints located on the opposite sides of the box. In an image under consideration, for an object with category , any crossing line in the bounding box has at least one pixel belonging to the object in the box; any pixels outside of any bounding boxes of category do not belong to category . Hence pixels on a cross line compose a positive bag for category , while pixels outside of any bounding boxes of category are used for negative bags.

2.2.2 MIL baseline

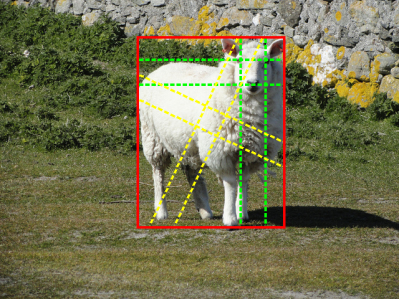

For category in an image, the baseline approach simply considers all of the horizontal and vertical crossing lines inside the boxes as positive bags, and all of the horizontal and vertical crossing lines that do not overlap any bounding boxes of category as negative bags. This definition is shown in Fig. 1(a) and has been widely employed in the literature [4, 5].

|

|

| (a) | (b) |

To optimize the network parameters, MIL loss with two terms are considered [4]. For category , suppose its positive and negative bags are denoted by and , respectively, then loss can be defined as follows:

| (1) |

where is the unary loss term, is the pairwise loss term, and is a constant value controlling the trade off between the unary loss and the pairwise loss.

The unary loss enforces the tightness constraint of bounding boxes on the network prediction by considering both positive bags and negative bags . A positive bag contains at least one pixel inside the object, hence the pixel with highest prediction tends to be inside the object, thus belonging to category . In contrast, no pixels in negative bags belong to any objects, hence even the pixel with highest prediction does not belong to category . Based on these observations, the unary loss can be expressed as:

| (2) |

where is the prediction of the bag being positive for category , is the network output of the pixel location k for category , and is the cardinality of .

The unary loss is binary cross entropy loss for bag prediction , it achieves minimum when for positive bags and for negative bags. More importantly, during training the unary loss adaptively selects a positive sample per positive bag and a negative sample per negative bag based on the network prediction for optimization, thus yielding an adaptive sampling effect.

However, using the unary loss alone is prone to segment merely the discriminative parts of an object. To address this issue, the pairwise loss as follows is introduced to pose the piece-wise smoothness on the network prediction.

| (3) |

where is the set containing all neighboring pixel pairs, is the network output of the pixel location k for category .

Finally, considering all categories, the MIL loss is:

| (4) |

2.3 Generalized MIL

2.3.1 Generalized positive bags

For an object of height pixels and width pixels, the positive bag definition in the MIL baseline yields only positive bags, a much smaller number when compared with the size of the object. Hence it limits the selected positive samples during training, resulting in a bottleneck for image segmentation.

To eliminate this issue, this study proposes to generalize positive bag definition by considering all parallel crossing lines with a set of different angles. An parallel crossing line is parameterized by an angle with respect to the edges of the box where its two endpoints located. For an angle , two sets of parallel crossing lines can be obtained, one crosses up and bottom edges of the box, and the other crosses left and right edges of the box. As examples, in Fig. 1(b), we show positive bags of two different angles, in which those marked by yellow dashed colors have , and those marked by green dashed lines are with . Note the positive bag definition in MIL baseline is a special case of the generalized positive bags with .

2.3.2 Generalized negative bags

The similar issue also exists for the negative bag definition in the MIL baseline. To tackle this issue, for a category , we propose to define each individual pixel outside of any bounding boxes of category as a negative bag. This definition greatly increases the number of negative bags, and forces the network to see every pixel outside of bounding boxes during training.

2.3.3 Improved unary loss

The generalized MIL definitions above will inevitably lead to imbalance between positive and negative bags. To deal with this issue, we borrow the concept of focal loss [17] and use the improved unary loss as follows:

| (5) |

where , is the weighting factor, and is the focusing parameter. The improved unary loss is focal loss for bag prediction, it achieves minimum when for positive bags and for negative bags.

2.4 Smooth maximum approximation

In the unary loss, the maximum prediction of pixels in a bag is used as bag prediction . However, the derivative is discontinuous, leading to numerical instability. To solve this problem, we replace the maximum function by its smooth maximum approximation [8]. Let the maximum function be , its two variants of smooth maximum approximation as follows are considered.

(1) -softmax function:

| (6) |

where is a constant. The higher the value is, the closer the approximation to . For , a soft approximation of the mean function is obtained.

(2) -quasimax function:

| (7) |

where is a constant. The higher the value is, the closer the approximation to . One can easily prove that always holds.

In real application, each bag usually has more than one pixel belonging to object segment. However, has value 0 for all but the maximum , thus the maximum function considers only the maximum during optimization. In contrast, the smooth maximum approximation has and for all , thus it considers every during optimization. More importantly, in the smooth maximum approximation, large has much greater derivative than small , thus eliminating the possible adverse effect of negative samples in the optimization. In the end, besides the advantage of conquering numerical instability, the smooth maximum approximation is also beneficial for performance improvement.

3 Experiments

3.1 Datasets

This study made use of two public medical datasets for performance evaluation. The first one is the prostate MR image segmentation 2012 (PROMISE12) dataset [11] for prostate segmentation and the second one is the anatomical tracings of lesions after stroke (ATLAS) dataset [9] for brain lesion segmentation.

Prostate segmentation: The PROMISE12 dataset was first developed for prostate segmentation in MICCAI 2012 grand challenge [11]. It consists of the transversal T2-weighted MR images from 50 patients, including both benign and prostate cancer cases. These images were acquired at different centers with multiple MRI vendors and different scanning protocols. Same as the study in [5], the dataset was divided into two non-overlapping subsets, one with 40 patients for training and the other with 10 patients for validation.

Brain lesion segmentation: The ATLAS dataset is a well-known open-source dataset for brain lesion segmentation. It consists of 229 T1-weighted MR images from 220 patients. These images were acquired from different cohorts and different scanners. The annotations were done by a group of 11 experts. Same as the study in [5], the dataset was divided into two non-overlapping subsets, one with 203 images from 195 patients for training and the other with 26 images from 25 patients for validation.

3.2 Implementation details

All experiments were implemented using PyTorch in this study. Image segmentation was conducted on the 2D slices of MR images. The parameters in the MIL loss (1) were set as based on experience, and those in the improved unary loss (5) were set as and according to the focal loss [17]. As indicated below, most experimental setups were set to be same as study in [5] for fairness of comparison.

For the PROMISE12 dataset, a residual version of UNet [16] was used for segmentation [5]. The models were trained with Adam optimizer [7] with the following parameter values: batch size = 16, initial learning rate = , , and . To enlarge the set of images for training, an off-line data augmentation procedure [5] was applied to the images in the training set as follows: 1) mirroring, 2) flipping, and 3) rotation.

3.3 Performance evaluation

To measure the performance of the proposed approach, the Dice coefficient was employed, which has been applied as a standard performance metric in medical image segmentation. The Dice coefficient was calculated based on the 3D MR images by stacking the 2D predictions of the networks together.

4 Results

4.1 Ablation study

4.1.1 Generalized MIL

To demonstrate the effectiveness of the proposed generalized MIL formulation, in Table 1 we show the performance of the generalized MIL for the two datasets. For comparison, we also show the results of the baseline method. It can be observed that the generalized MIL approach consistently outperforms the baseline method at different angle settings. In particular, the PROMISE12 dataset got best Dice coefficient of 0.878 at for the generalized MIL, compared with 0.859 for the baseline method. The ATLAS dataset achieved best Dice coefficient of 0.474 at for the generalized MIL, much higher than 0.408 for the baseline method.

| Method | PROMISE12 | ATLAS |

|---|---|---|

| MIL baseline | 0.859 (0.038) | 0.408 (0.249) |

| 0.868 (0.031) | 0.463 (0.278) | |

| 0.878 (0.027) | 0.466 (0.248) | |

| 0.868 (0.047) | 0.474 (0.262) |

4.1.2 Smooth maximum approximation

To demonstrate the benefits of the smooth maximum approximation, in Table 2 we show the performance of the MIL baseline method when the smooth maximum approximation was applied. As can be seen, for the PROMISE12 dataset, the better performance is obtained for -softmax function with and 6 and for -quasimax function with and 8. For the ATLAS dataset, the improved performance can also be observed for -softmax function with and 8 and for -quasimax function with .

| Method | PROMISE12 | ATLAS | |

|---|---|---|---|

| -softmax | 0.861 (0.031) | 0.401(0.246) | |

| 0.861 (0.036) | 0.424(0.255) | ||

| 0.859 (0.030) | 0.414(0.264) | ||

| -quasimax | 0.856 (0.026) | 0.405(0.246) | |

| 0.873 (0.018) | 0.371(0.240) | ||

| 0.869 (0.024) | 0.414(0.256) | ||

4.2 Main experimental results

In Table 3 the Dice coefficients of the proposed approach are given, in which , and 8 are considered in smooth maximum approximation functions and those with highest dice coefficient are reported. For comparison, the full supervision results are also shown in Table 3. As can be seen, the PROMISE12 dataset gets Dice coefficient 0.878 for -softmax function and 0.880 for -quasimax function, which are same as or higher than the results in the ablation study. More importantly, these values are close to 0.894 obtained by the full supervision. The similar trends are observed for the ATLAS dataset.

| Method | PROMISE12 | ATLAS |

|---|---|---|

| Full supervision | 0.894 (0.021) | 0.512 (0.292) |

| + -softmax | 0.878 (0.031) | 0.494 (0.236) |

| + -quasimax | 0.880 (0.024) | 0.488 (0.240) |

| Deep cut [15] | 0.827 (0.085) | 0.375 (0.246) |

| Global constraint [5] | 0.835 (0.032) | 0.474 (0.245) |

Furthermore, we also show the Dice coefficients of two state-of-the-art methods in Table 3. The PROMISE12 dataset gets Dice coefficient 0.827 for deep cut and 0.835 for global constraint, both of which are much lower than those of the proposed approach. Similarly, the proposed approach also achieves higher Dice coefficients compared with these two methods for the ATLAS dataset.

Finally, to visually demonstrate the performance of the proposed approach, qualitative segmentation results are depicted in Fig. 2. It can be seen that the proposed method achieves good segmentation results for both prostate segmentation task and brain lesion segmentation task.

|

|

|

|

|

|

|

|

|

|

|

|

5 Conclusion

This paper described a weakly supervised image segmentation method with tight bounding box supervision. It proposed generalized MIL and smooth maximum approximation to integrate the supervision into the deep neural network. The experiments demonstrate the proposed approach outperforms the state-of-the-art methods. However, there is still performance gap between the weakly supervised approach and the fully supervised method. In the future, it would be interesting to study whether using multi-scale outputs and adding auxiliary object detection task improve the segmentation performance.

References

- [1] Ahn, J., Cho, S., Kwak, S.: Weakly supervised learning of instance segmentation with inter-pixel relations. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 2209–2218 (2019)

- [2] Bearman, A., Russakovsky, O., Ferrari, V., Fei-Fei, L.: What’s the point: Semantic segmentation with point supervision. In: Proceedings of the European Conference on Computer Vision. pp. 549–565. Springer (2016)

- [3] Chen, L.C., Zhu, Y., Papandreou, G., Schroff, F., Adam, H.: Encoder-decoder with atrous separable convolution for semantic image segmentation. In: Proceedings of the European Conference on Computer Vision. pp. 801–818 (2018)

- [4] Hsu, C.C., Hsu, K.J., Tsai, C.C., Lin, Y.Y., Chuang, Y.Y.: Weakly supervised instance segmentation using the bounding box tightness prior. Advances in Neural Information Processing Systems 32, 6586–6597 (2019)

- [5] Kervadec, H., Dolz, J., Wang, S., Granger, E., Ayed, I.B.: Bounding boxes for weakly supervised segmentation: Global constraints get close to full supervision. In: Medical Imaging with Deep Learning. pp. 365–381. PMLR (2020)

- [6] Khoreva, A., Benenson, R., Hosang, J., Hein, M., Schiele, B.: Simple does it: Weakly supervised instance and semantic segmentation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. pp. 876–885 (2017)

- [7] Kingma, D.P., Ba, J.: Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014)

- [8] Lange, M., Zühlke, D., Holz, O., Villmann, T., Mittweida, S.G.: Applications of lp-norms and their smooth approximations for gradient based learning vector quantization. In: ESANN. pp. 271–276 (2014)

- [9] Liew, S.L., Anglin, J.M., Banks, N.W., Sondag, M., Ito, K.L., Kim, H., Chan, J., Ito, J., Jung, C., Khoshab, N., et al.: A large, open source dataset of stroke anatomical brain images and manual lesion segmentations. Scientific Data 5(1), 1–11 (2018)

- [10] Lin, D., Dai, J., Jia, J., He, K., Sun, J.: Scribblesup: Scribble-supervised convolutional networks for semantic segmentation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. pp. 3159–3167 (2016)

- [11] Litjens, G., Toth, R., van de Ven, W., Hoeks, C., Kerkstra, S., van Ginneken, B., Vincent, G., Guillard, G., Birbeck, N., Zhang, J., et al.: Evaluation of prostate segmentation algorithms for mri: the promise12 challenge. Medical Image Analysis 18(2), 359–373 (2014)

- [12] Long, J., Shelhamer, E., Darrell, T.: Fully convolutional networks for semantic segmentation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. pp. 3431–3440 (2015)

- [13] Paszke, A., Chaurasia, A., Kim, S., Culurciello, E.: Enet: A deep neural network architecture for real-time semantic segmentation. arXiv preprint arXiv:1606.02147 (2016)

- [14] Pont-Tuset, J., Arbelaez, P., Barron, J.T., Marques, F., Malik, J.: Multiscale combinatorial grouping for image segmentation and object proposal generation. IEEE Transactions on Pattern Analysis and Machine Intelligence 39(1), 128–140 (2016)

- [15] Rajchl, M., Lee, M.C., Oktay, O., Kamnitsas, K., Passerat-Palmbach, J., Bai, W., Damodaram, M., Rutherford, M.A., Hajnal, J.V., Kainz, B., et al.: Deepcut: Object segmentation from bounding box annotations using convolutional neural networks. IEEE Transactions on Medical Imaging 36(2), 674–683 (2016)

- [16] Ronneberger, O., Fischer, P., Brox, T.: U-net: Convolutional networks for biomedical image segmentation. In: International Conference on Medical Image Computing and Computer-Assisted Intervention. pp. 234–241. Springer (2015)

- [17] Ross, T.Y., Dollár, G.: Focal loss for dense object detection. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. pp. 2980–2988 (2017)

- [18] Rother, C., Kolmogorov, V., Blake, A.: “GrabCut” interactive foreground extraction using iterated graph cuts. ACM Transactions on Graphics 23(3), 309–314 (2004)

- [19] Wang, Y., Zhang, J., Kan, M., Shan, S., Chen, X.: Self-supervised equivariant attention mechanism for weakly supervised semantic segmentation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 12275–12284 (2020)