BOTT: Box Only Transformer Tracker for 3D Object Tracking

Abstract

Tracking 3D objects is an important task in autonomous driving. Classical Kalman Filtering based methods are still the most popular solutions. However, these methods require handcrafted designs in motion modeling and can not benefit from the growing data amounts. In this paper, Box Only Transformer Tracker (BOTT) is proposed to learn to link 3D boxes of the same object from the different frames, by taking all the 3D boxes in a time window as input. Specifically, transformer self-attention is applied to exchange information between all the boxes to learn global-informative box embeddings. The similarity between these learned embeddings can be used to link the boxes of the same object. BOTT can be used for both online and offline tracking modes seamlessly. Its simplicity enables us to significantly reduce engineering efforts required by traditional Kalman Filtering based methods. Experiments show BOTT achieves competitive performance on two largest 3D MOT benchmarks: 69.9 and 66.7 AMOTA on nuScenes validation and test splits, respectively, 56.45 and 59.57 MOTA L2 on Waymo Open Dataset validation and test splits, respectively. This work suggests that tracking 3D objects by learning features directly from 3D boxes using transformers is a simple yet effective way.

1 Introduction

Autonomous driving is an open challenge attracting tremendous attention in the past decade. One of the most essential tasks for autonomous vehicles is to perceive 3D objects accurately, which includes the detection and tracking of the objects. Encouraging progress has been made in 3D object detection, owing to the emergence of large public multi-modality datasets [4, 18] and advanced 3D object detection methods [2, 9, 10, 23]. On the other hand, tracking-by-detection methods for 3D Multi-Object Tracking (MOT) [7, 13, 20, 24] remain competitive and popular due to their ability to benefit from powerful 3D object detectors. Among them, Kalman Filtering (KF) based trackers [13, 20] are dominant, as the kinematics models are naturally designed for tracking 3D motion.

Despite their competitiveness, KF-based trackers have two main disadvantages. Firstly, a series of Kalman filters must be defined to cover various types of motion kinematics, including static, constant velocity, constant acceleration, constant angular velocity, and more sophisticated non-constant ones. Meanwhile, Kalman filters require specific parameters for each object category, such as the mean and variance of measurements and noises. Hence, KF-based trackers need much engineering efforts to tune these parameters to have a decent performance. Second, KF-based trackers could not make use of modern large datasets [4, 18] to boost the performance.

One approach for data-driven 3D MOT is to perform joint detection and tracking in a single stage, such as SimTrack [11] and CenterPoint [23]. While these methods can simultaneously detect and track 3D objects in point clouds with a single model, the tracking is normally limited to consecutive frames to fit the architecture of the 3D object detector based on lidar. However, there is a fundamental conflict between the two tasks: 3D detection focuses on the instantaneous spatial localization of objects with only a few point clouds, while 3D tracking requires a much longer spatial-temporal memory. In practice, 4D spatial and temporal learning with significantly more point clouds is still an open challenge due to computational complexity and hardware limitation.

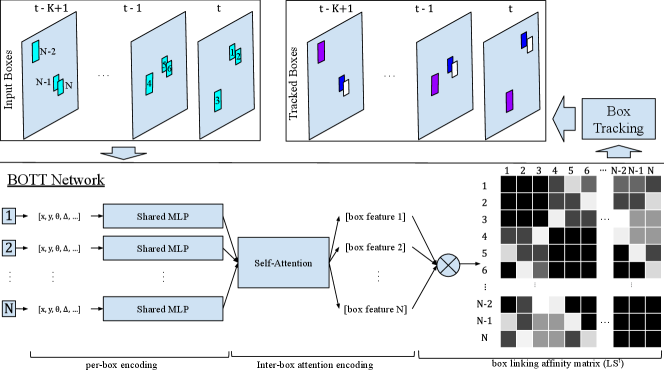

An alternative research direction is to learn to track the bounding boxes of the 3D objects directly [6, 7, 24]. This approach offers a straightforward replacement of KF-based trackers in the existing tracking-by-detection paradigm. Machine learning methods, consuming only the geometric properties of bounding boxes, inherit the merits of KF-based trackers and could benefit from growing data amounts. However, 3D box-based learning methods face two key challenges. Firstly, each input frame contains a varying number of unordered boxes, making it difficult to establish a consistent identity for each object. Secondly, unlike image appearance features, 3D box geometric features lack spatial-temporal consistency for each object identity. Nevertheless, humans can easily connect boxes from the same object when viewing the bird’s-eye view boxes sequentially by interpreting the global box distributions and the spatial-temporal context of each individual box. In other words, the box features, i.e. position, size, orientation, object types, and their temporal-spatial distributions should be sufficient for tracking. The key is to find a suitable tool to learn such information for each box. PolarMOT [7] is an inspiring work in this regard, which uses a graph neural network (GNN) to iteratively learn box features from spatial-temporal local boxes. Differently, we propose a novel approach, called the Box Only Transformer Tracker (BOTT), that uses attention [19] to globally learn per-box embeddings from all multi-class boxes with a single model, as shown in Figure. 1. BOTT is well-suited for 3D box tracking, as attention mechanisms have repeatedly demonstrated its effectiveness in communicating information temporally and spatially between inputs of varying-length [2, 12, 25].

In summary, the proposed BOTT is a simple multi-class multi-object 3D box-only tracker. Attentive box features are globally learned to encode box information and its spatial-temporal distributions among other boxes. The linking scores or similarity scores between box features are used to link boxes. Our main contributions are as follows:

-

•

We propose BOTT, a simple self-attention based tracker, which consumes only 3D bounding boxes. The simplicity and effectiveness pave the path for more potential works to track 3D boxes using transformers. Meanwhile, it could be extended to other applications such as multi-modal 3D box tracking.

-

•

We provide complete online and offline tracking algorithms for multi-class 3D tracking with a single model under the BOTT framework.

- •

-

•

We conduct extensive ablation studies to examine the key designs that enable strong performance and validate the BOTT’s generalization ability across datasets and input frequencies.

2 Related Work

This section first reviews the 3D MOT algorithms based on the tracking-by-detection paradigm, then reviews the transformer-based trackers, and lastly reviews online and offline MOT.

2.1 3D MOT

Under the tracking-by-detection framework, AB3DMOT [20] serves as a baseline, using a simple KF tracking framework. Many methods have been proposed to improve the tracking performance based on the same KF-based tracking framework, such as ProbTrack [5] and SimpleTrack [13]. Their major difference lies in the association metrics: AB3DMOT used 3D Intersection of Union (IoU); ProbTrack used Mahalabnobis distance; and SimpleTrack used 3D generalized 3D (GIoU) while a second association was applied for better handling lower-score objects.

Lately, more learning-based tracking algorithms have been reported. Some of them proposed to use GNNs [3, 7, 21, 24] as graphs are a natural representation of the MOT problem, where the detected objects are encoded as nodes and their spatial-temporal relations are represented by edges. GNN3DMOT [21] used GNNs to estimate an affinity matrix and solved the association using the Hungarian algorithm. OGR3MOT [24] solved 3D MOT with GNNs in an end-to-end manner, focusing on the data association and the classification of active tracklets. Batch3dmot [3] presented a novel multi-modal GNN framework for offline 3D MOT on multi-class tracking graphs that introduces nearest neighborhood attention across graph components to allow information exchange across disconnected graph components. Closest to our approach, PolarMOT [7] explored the impact of geometric relationships between objects based on GNNs with solely 3D boxes as well. The difference is that in the PolarMOT, mutual object interactions within local regions are learned implicitly through iterative message-passing steps, while we used self-attention to get the global context information in one shot.

2.2 Transformer Tracker

Over the past few years, transformer networks have gained great momentum for their ability to effectively process sequence data. Encouraging tracking performance on 2D MOT has been demonstrated from transformer trackers with image appearance features [6, 12, 15, 17, 25, 26]. This is mainly because transformers could handle long-term dependencies and is robust to occlusions and complex scenarios. Among these trackers, SODA [6] and GTR [25] are more related to our work. SODA proposed an attention measurement encoding to compute track embeddings from objects’ appearance features to reason about the spatial-temporal dependencies between all objects. GTR fed the objects’ appearance features into the encoder of the transformer, additionally took trajectory queries as the decoder input, and produced association scores between each query and object. Different from their work, our work learns the spatial-temporal context information from 3D bounding boxes only without appearance using simple self-attention encoders.

2.3 Offline and Online Tracking

Recently, offline auto-labeling methods [22, 14] have drawn great attention in the autonomous driving industry, as they could scale up the data annotation drastically. In the auto-labeling pipeline, future information could be introduced to improve tracking performance. It is not straightforward for KF-based trackers [5, 13, 20] to leverage on the future information, as they are designed to operate recursively and rely solely on the current state and observations. In contrast, BOTT provides a convenient solution for both online and offline multi-class tracking.

3 Box Only Transformer Tracker

In this section, the proposed BOTT framework will be presented, including the BOTT network and box tracking algorithms.

3.1 Overview

In a scene with frames , there are detected 3D boxes for frame : . Each box contains raw features including (: center, : size, : yaw, : time, : classification scores for categories). A sliding window is defined as a set of all the boxes from consecutive frames: . For simplicity, denotes a sliding window with latest frame without explicit statement of , and denotes the total box number within .

As shown in Figure. 1, BOTT accepts sliding windows as input and estimates pairwise box linking scores using a BOTT network. Then a box tracking module connects the boxes to produce tracks using the linking scores. The BOTT network contains three components: 1) single-box feature encoding; 2) inter-box encoding with self-attention; and 3) linking scores estimation.

3.2 Single-Box Feature Encoding

This module aims to learn high-level per-box features from raw geometric features. The raw center values may vary greatly in different sliding windows because they are in the shared global coordinates. To reduce variance, center positions of all boxes in are normalized by subtracting the minimal center values of all boxes. Take for instance, . The time feature of box is encoded as the delta between the box frame and center frame in . Box heading angle is encoded as . In summary, the input feature of includes . Each box feature vector is fed into a shared Multi-Layer Perception (MLP), which has latent layers with dimensions and a final output layer with dimensions. feature embeddings are learned for all boxes .

3.3 Inter-Box Encoding with Self-Attention

After per-box feature encoding, the output embeddings are fed to a self-attention module to encode inter-box relationship. Specifically, identical transformer encoder blocks [19] are applied sequentially to exchange information between all input box embeddings. Each encoder block consists of a multi-head attention network with heads and a following feed-forward network with hidden nodes. After self-attention, the size of output box embeddings remains for .

Notably, the self-attention in BOTT is class-agnostic, i.e. each box learns to get information from all the other boxes in the sliding window. As shown in Figure. 5.1, the moving car in sub-figure (a) and (b) also gets attention from nearby pedestrians, and the pedestrian in sub-figure (c) and (d) also gets attention from nearby cars. Self-attention is expected to learn robust box representation by encoding global spatial-temporal box distributions into each box. Another advantage of class-agnostic self-attention is that it enables BOTT to handle multi-class objects tracking with a single model. It greatly reduces the deployment complexity in practice.

3.4 Linking Score Estimation

Tracked boxes from the same object share a similar spatial-temporal context within a sliding window. With the learned box embeddings, normalization is performed to normalize each box embedding to unit length. Then, simple dot-product is used to compute inter-box linking scores, resulting in a linking score matrix (illustrated in Figure. 1). For convention, the linking score is normalized to range by . A linking score of indicates that two boxes belong to the same object, and means the opposite. In this way, the tracking is converted to a binary classification problem of box links.

3.5 Loss

Assume denote the corresponding ground truth linking score matrix for , binary cross entropy between them is used as the loss. During training, to prevent the easy cases from overwhelming the loss, a binary mask is constructed to ignore losses from: 1) inter-class box links; 2) box links from the same frame; 3) box links between two false positive boxes (detected boxes without associated ground truth boxes); and 4) box links whose center distances exceed the expected maximum displacement for the object over the given time duration.

Given a batch data of sliding windows , the linking loss is computed as:

| (1) |

where is the positive sample weight.

3.6 Box Tracking with BOTT

Linking scores are utilized to create tracks in box tracking module. Depending on whether future data can be used, BOTT can perform both online and offline tracking.

3.6.1 Online Tracking

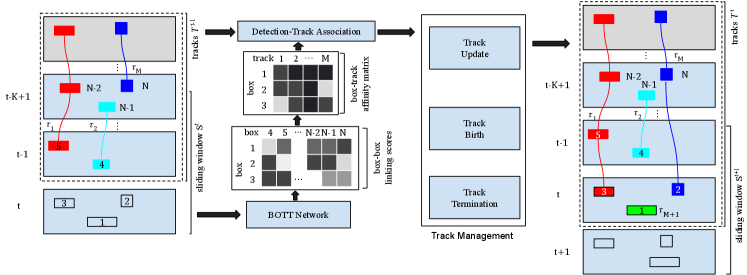

Figure. 2 illustrates online box tracking under BOTT framework. At time , the set of history tracks are denoted as , where a track is defined as a list of time-ordered 3D boxes. In the example of Figure. 2, , where denotes the box as marked. At frame , the latest sliding window is fed into BOTT network to generate linking scores between all the boxes in , i.e. }. Since the goal of online tracking is to connect new detections to existing tracks , we only use linking scores between boxes (i.e., ) and boxes (i.e., ). Moreover, linking scores are set to zero for box links under conditions: 1) the two boxes are from different object categories; 2) the center distance violates the physical constraints as discussed in section 3.5. Next, the affinity score between each detection and each track is calculated as maximum of all box pairs:

| (2) |

Instead of track propagation by motion model and association in latest frame, BOTT directly conducts Hungarian association between all detections and tracks using cost matrix . This enables BOTT to handle multi-class tracking with single model.

BOTT online tracking utilizes a simple track management strategy to control birth, update and termination of tracks. Each associated detection is appended as the new tail box in associated track, sharing the same track identity. Unmatched detections will birth a track with unconfirmed status. An unconfirmed track will be changed to confirmed status after it has accumulated minimal boxes, and a confirmed track will be terminated after seconds without new detections. This work uses and . After track management, tail boxes of confirmed tracks in frame will be published as the latest tracking result.

3.6.2 Offline Tracking

BOTT can also be used to perform offline tracking. Thanks to the effectiveness of BOTT, a simple greedy approach is enough to achieve promising performance. In offline setting, all sliding windows are first constructed with a stride of one, and fed to the BOTT network to generate linking scores. Let denote the estimated linking score between box and in sliding window . The linking score between and across the scene is computed as:

| (3) |

And an optimal threshold is applied to remove links with low . To remove redundant links, non-maximum suppression is performed according to . For example, if a link between and from two frames has been selected, all the other links to or between the same two frames will be pruned. To further prevent false links, boxes that violate physical constrains as in online tracking or boxes from different classes are pruned. Finally, box interpolations are also performed to fill any gaps between the linked boxes.

4 Experimental Setup

4.1 Datasets and Metrics

We evaluate BOTT on the two largest benchmarks for 3D MOT: nuScenes [4] and Waymo Open Dataset (WOD) [18].

nuScenes

consists of 1000 driving scenes of approximately 20 seconds long. scenes are used for training, validation, and test, respectively. Lidar scans and ground truth (GT) 3D box annotations are provided at 20Hz and 2Hz, respectively. We report the overall Average Multiple Object Tracking Accuracy (AMOTA) [20], recall, and identity switches(IDS) across all the 7 tracking categories, i.e. car, pedestrian, bicycle, bus, motorcycle, trailer, and truck, and also the AMOTA for each individual category. Overall AMOTA is the primary metric for nuScenes benchmark.

Waymo Open Dataset

contains 1000 driving sequences of 20 seconds, with sequences for training, validation and test, respectively. Both point clouds and GT 3D boxes of objects are provided at 10Hz. We report MOTA for both the L1 and L2 difficulty levels, mismatch ratio [18] for objects in the L2 difficulty, and MOTA for all the 3 tracking categories (vehicle, pedestrian and cyclist) in the L2 difficulty level. MOTA in the L2 difficulty level is the primary metric for WOD 3D MOT benchmark.

4.2 Track Database Generation

CenterPoint [23] is deployed on the training, validation and test sets on both nuScenes and WOD to get the detections to generate a track database. With the detections, a Non-Maximum Suppression (NMS) is applied to remove overlapped boxes, and boxes with detection scores below a threshold are also filtered. To generate the database, an association between detections and GT boxes provided by nuScenes or WOD is performed. The track IDs in the GT boxes are assigned to the associated detection boxes, and unmatched detection boxes are considered as false positives. For both nuScenes and WOD, the track database are generated at 10Hz. The 2Hz GT provided by nuScenes is interpolated to get 10Hz GT. Each scene in the track database is divided into overlapping frame sliding windows with a stride of one. This work uses .

4.3 Implementation Details

4.3.1 Network Details

The MLP in single box encoding consists of four LinearReLU blocks, with output dimensions . Three stacked identical encoder blocks are used for inter-box encoding, with each block including 1) a head attention following a LayerNorm [1]; and 2) a feature feed-forward net with two LinearReLU blocks (output dimensions ), following a LayerNorm. The output box embeddings remains the size of .

4.3.2 Training Procedure

The link distribution is very imbalanced in sliding windows, with over negative links. Hard negative sample mining is applied to pick all positives (assume links) and maximally negative links with largest linking score errors ( is used). The positive weight in Eq.(1) is set to . BOTT is trained with Adam optimizer [8] for 50 epochs with batch size of 4 sliding windows. We use the 1cycle learning rate policy [16] with intial learning rate of . As each sliding window has varying number of boxes, we apply zero padding to each sliding window to have a largest box number of all sliding windows in a batch, and a binary masking is used to prohibit attention from padded boxes in attention layers.

4.3.3 Data Augmentation

First, we drop some tracks randomly in the sliding windows to reduce the number of boxes to a maximum number 3000, to mimic occlusions and false negatives. Next, all boxes in a sliding window will be shifted from global coordinates to local coordinates centered at the middle of all box centers, i.e. . Finally, we perform two set of global augmentations to all the boxes in a sliding window: we first apply a random flip along x axis andor y axis, then a global rotation (yaw uniformly drawn from ).

4.4 Public Benchmark

Tracking performance heavily relies on the detection quality. For fair comparison, we compare BOTT with these published trackers that are also based on the commonly used CenterPoint detections [23]. Among them, AB3DMOT [20] and SimpleTrack [13] trackers belongs to classic motion trackers, while PolarMOT [7], OGR3MOT [24], CenterPoint [23] and Batch3DMOT [3] are learning based trackers. Table. 1 shows the 3D MOT results on nuScenes validation and test sets. In the validation set, we compare the performance for both online and offline tracking. Our online BOTT achieves a 2.53 AMOTA improvement over learning based tracker PolarMOT, and achieves slightly better performance that the advanced classic motion trackers, i.e. SimpleTrack [13]. Our offline BOTT achieves state-of-the-art performance of the Lidar box-based offline tracking algorithms, with 71.38 AMOTA. One should note that the tracking performance for Batch3DMOT [3] is presented with box feature only. For the test set, only online tracking results are compared between different trackers. Similarly, our online BOTT achieves better performance than learning based box tracker, and comparable results with classic motion trackers.

Table. 2 shows the 3D MOT results on WOD. Our online BOTT could achieve better results than learning based trackers, i.e. CenterPoint [23], and achieves comparable performance to advanced classic motion trackers, i.e. SimpleTrack [13].

| Method | Modality | AMOTA | IDS | Recall | class-specific AMOTA | |||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| car | ped | bicycle | bus | motor | trailer | truck | ||||||

| val set | AB3DMOT* [20] | Box3D | 57.8 | 1275 | - | - | - | - | - | - | - | - |

| ProbTrack*[5] | Box3D | 62.4 | 1098 | - | 73.5 | 75.5 | 27.2 | 74.1 | 50.6 | 33.7 | 58.0 | |

| SimpleTrack* [13] | Box3D | 69.57 | 403 | 73.61 | 83.9 | 80.67 | 50.3 | 79.52 | 74.19 | 52.7 | 65.69 | |

| CenterPoint [23] | Lidar | 66.75 | 616 | 70.5 | 83.83 | 77.02 | 47.56 | 84.83 | 60.22 | 45.73 | 68.02 | |

| Online PolarMOT [7] | Box3D | 67.27 | 439 | 72.46 | 81.26 | 78.79 | 49.38 | 82.76 | 67.19 | 45.8 | 65.7 | |

| Online BOTT | Box3D | 69.91 | 438 | 72.08 | 83.67 | 80.02 | 50.34 | 83.66 | 71.53 | 51.34 | 68.85 | |

| Offline Batch3DMOT [3] | Box3D | 70.6 | 758 | 72.0 | 83.4 | 81.1 | 54.8 | 83.5 | 73.3 | 49.6 | 68.5 | |

| Offline PolarMOT [7] | Box3D | 71.14 | 213 | 75.14 | 85.83 | 81.7 | 54.1 | 87.36 | 72.32 | 48.67 | 68.03 | |

| Offline BOTT | Box3D | 71.38 | 310 | 73.3 | 84.3 | 82.1 | 53.8 | 85.4 | 74.2 | 51.2 | 68.7 | |

| test set | AB3DMOT* [20] | Box3D | 15.1 | 9027 | 27.6 | 27.8 | 14.1 | 0 | 40.8 | 8.1 | 13.6 | 1.3 |

| ProbTrack* [5] | Box3D | 55.0 | 950 | 76.8 | 71.9 | 74.5 | 25.5 | 64.1 | 48.1 | 49.5 | 51.3 | |

| SimpleTrack* [13] | Box3D | 66.8 | 575 | 70.3 | 82.3 | 79.6 | 40.7 | 71.5 | 67.4 | 67.3 | 58.7 | |

| CenterPoint [23] | Lidar | 63.8 | 760 | 67.5 | 82.9 | 76.7 | 32.1 | 71.1 | 59.1 | 65.1 | 59.9 | |

| PolarMOT [7] | Box3D | 66.4 | 242 | 70.2 | 85.3 | 80.6 | 34.9 | 70.8 | 65.6 | 67.3 | 60.2 | |

| OGR3MOT [24] | Box3D | 65.6 | 288 | 69.2 | 81.6 | 78.7 | 38.0 | 71.1 | 64.0 | 67.1 | 59.0 | |

| Online BOTT | Box3D | 66.7 | 743 | 67.7 | 83.1 | 75.9 | 32.6 | 74.8 | 65.8 | 70.1 | 64.3 | |

-

•

*Non-ML methods

5 Results

| Method | Modality | MOTA(L1) | MOTA(L2) | Mismatch | class-specific MOTA(L2) | |||

|---|---|---|---|---|---|---|---|---|

| vehicle | pedestrian | cyclist | ||||||

| val set | AB3DMOT* [20] | Box3D | - | - | - | 40.1 | 37.7 | - |

| ProbTrack* [5] | Box3D | - | - | - | 54.06 | 48.10 | - | |

| SimpleTrack* [13] | Box3D | 59.44 | 56.92 | 0.36 | 56.12 | 57.76 | 56.88 | |

| CenterPoint [23] | Lidar | 58.35 | 55.81 | 0.74 | 55.05 | 54.94 | 57.44 | |

| Online BOTT | Box3D | 58.98 | 56.50 | 0.35 | 55.11 | 56.48 | 57.78 | |

| Offline BOTT | Box3D | 59.67 | 57.14 | 0.12 | 55.17 | 57.05 | 59.21 | |

| test set | AB3DMOT* [20] | Box3D | - | - | - | 57.73 | 53.80 | - |

| ProbTrack* [5] | Box3D | 49.16 | 47.65 | 1.01 | 49.32 | 44.38 | 25.29 | |

| SimpleTrack* [13] | Box3D | 61.82 | 60.18 | 0.38 | 60.3 | 60.13 | 60.12 | |

| CenterPoint [23] | Lidar | 60.31 | 58.67 | 0.72 | 59.38 | 56.64 | 60.0 | |

| Online BOTT | Box3D | 61.20 | 59.57 | 0.31 | 59.49 | 58.82 | 60.41 | |

-

•

*Non-ML methods

5.1 Qualitative Analysis

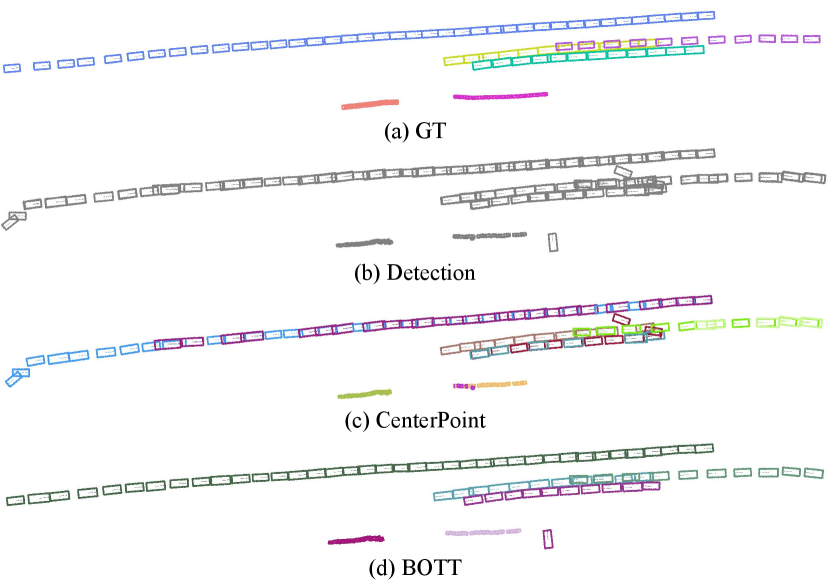

Figure. 3 illustrates the qualitative results of BOTT which accumulate over 40 frames in a scene on the nuScenes validation set. Figure. 3 (a) and (b) shows GT and raw detections from CenterPoint [23], respectively, and (c) and (d) shows the tracking results of CenterPoint [23] and our BOTT.

Figure. 5.1 showcases a few examples of the attentive boxes from the same frame that relate to the circled reference boxes. We could see that the boxes which are closer to the reference boxes normally have stronger attention impact than the boxes far away. Nevertheless, faraway boxes also contributes to the reference box due to the global self-attention. Notably, the figure only shows attentive boxes from the same frame for illustration convenience, in fact all boxes in the sliding window contribute to the reference box for a robust box embedding.

| (a) frame | (b) frame | |

| Moving Car |

|

|

| (c) frame | (d) frame | |

| Static Pedestrian |

|

|

6 Ablation Studies

We conduct ablation studies on nuScenes val set to analyse the impact of attention and physical constraints, and study the generalization capability of BOTT.

6.1 Attention mechanism

We ablate the necessity of using the attention mechanism to learn the context information. Initially, we remove the attention layers and relied solely on raw box features to establish the links between all the boxes. We also conducted experiments with different encoder layers. The ablation results for the attention mechanism on the nuScenes validation set are presented in Table. 3. The results revealed that without attention, the performance is worse than our best setting in Table. 1, with a AMOTA drop. It is observed that we can achieve best performance with 3 encoder layers, and further adding attention layers in the encoder does not improve the performance.

| # encoders layers | AMOTA | IDS | Recall |

|---|---|---|---|

| 0 | 68.0 | 734 | 69.9 |

| 1 | 68.76 | 520 | 70.4 |

| 3 | 69.91 | 438 | 72.1 |

| 6 | 69.76 | 455 | 70.4 |

6.2 Physical Constraints

During deployment, physical constraints are added to restrain some faraway boxes from linking together, including 1) maximal velocity constraint: center distance of two boxes could not exceed the product of time difference and object category maximal velocity; and 2) static position constraint, static boxes (with absolute speed less than from CenterPoint[23] detections) are prohibited to link boxes above 2 meters. Table. 4 compares the tracking results on nuScenes val set with different constraints. The results shows it could benefit tracking performance by reducing the interference from the impossible links. This agrees with the observation in PolarMOT [7] that constructing sparse graph using boxes within certain range improves tracking performance.

| Constraints | AMOTA | IDS | Recall |

|---|---|---|---|

| none | 64.84 | 1485 | 68.3 |

| velocity | 67.4 | 927 | 71.3 |

| position | 67.1 | 1245 | 71.7 |

| pos. + vel. | 69.91 | 438 | 72.1 |

6.3 Generalization Studies

| Evaluation | ||||||||

| Training | nuScenes | WOD | ||||||

| class | AMOTA | IDS | Recall | class | MOTA(L1) | MOTA(L2) | Mismatch (L2) | |

| nuScenes | car | 83.1 | 181 | 82.2 | vehicle | 58.2 | 54.68 | 0.53 |

| WOD | 83.2 | 222 | 84.5 | 58.62 | 55.08 | 0.14 | ||

| nuScenes | ped | 78.2 | 360 | 82.6 | ped | 59.83 | 55.92 | 1.23 |

| WOD | 77.2 | 308 | 76.3 | 60.35 | 56.42 | 0.73 | ||

| nuScenes | bicycle | 49.8 | 1 | 47.7 | cyclist | 57.02 | 56.95 | 0.67 |

| WOD | 48.1 | 1 | 48.3 | 57.55 | 57.49 | 0.38 | ||

Cross-dataset Generalization: This study shows how the BOTT online tracker generalizes across datasets. The nuScenes and WOD benchmarks have different input frequencies and number of classes, and slightly distinct annotation instructions. For example, vehicle in WOD includes any object that can be recognized as a vehicle, including motorcyles; Also parked bicycles are not labelled in WOD but they are labelled in nuScenes. To mitigate it, we conducted following processing steps: 1) nuScenes model was trained with 3 classes by merging vehicle categories: (car, bus, trailer, truck, motorcycle) cyclist; 2) both nuScenes and WOD use 10Hz for training and validation; and 3) only evaluate car, pedestrian and bicycle in 7-class nuScenes validation set as it is not able to evaluate the merged vehicle category in nuScenes benchmark. Table. 5 reports the class-specific generalization results of BOTT online tracker between nuScenes and WOD. BOTT shows very promising generalization capability between nuScenes and WOD for all categories: the model trained with source dataset has slightly inferior performance than the model trained with target dataset.

| Frequency | K | AMOTA | IDS | Recall |

|---|---|---|---|---|

| 2Hz | 4 | 68.7 | 992 | 73.2 |

| 5Hz | 8 | 68.6 | 617 | 71.5 |

| 10Hz* | 16 | 69.9 | 438 | 72.1 |

| 20Hz | 32 | 68.8 | 529 | 71.3 |

-

•

*Model training frequency

Input Frequency Generalization: This ablation is to assess whether BOTT is sensitive to input frequencies. CenterPoint [23] is deployed to generate 20Hz detections for nuScenes validation set, and then generate corresponding 20Hz track database following Section 4.2. Afterwards, validation track databases at 10Hz, 5Hz and 2Hz are generated by keep every 2, 4 and 10 frames from the 20Hz database. To test model generalization against input frequency, a BOTT model was trained with 10Hz track database with sliding window size , and was tested with validation track databases at 20Hz, 10Hz, 5Hz and 2Hz, with accordingly being adjusted to 32, 16, 8 and 4. The results reported in Table. 6 is encouraging that the shared BOTT model achieves fairly good performance at various input frequencies and window sizes. In practice, this enables that BOTT tracker has higher deployment flexibility on input data.

7 Conclusion

We propose BOTT, a novel machine learning based 3D MOT method that relies only on 3D bounding boxes. BOTT leverages a transformer encoder with global self-attention to learn box features enriched with spatial-temporal context information from all the boxes across a time window, leading to a significant performance boost. Notably, BOTT does not rely on appearance information from raw data, which leads to better generalization ability. BOTT can be easily configured as an online or offline tracker, and our experiments demonstrate achieves state-of-the-art performance among the learning-based trackers on nuScenes and WOD.

References

- [1] Jimmy Lei Ba, Jamie Ryan Kiros, and Geoffrey E. Hinton. Layer normalization. arXiv preprint arXiv:1607.06450, 2016.

- [2] Xuyang Bai, Zeyu Hu, Xinge Zhu, Qingqiu Huang, Yilun Chen, Hongbo Fu, and Chiew-Lan Tai. Transfusion: Robust lidar-camera fusion for 3d object detection with transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 1090–1099, 2022.

- [3] Martin Büchner and Abhinav Valada. 3d multi-object tracking using graph neural networks with cross-edge modality attention. IEEE Robotics and Automation Letters, 7(4):9707–9714, 2022.

- [4] Holger Caesar, Varun Bankiti, Alex H. Lang, Sourabh Vora, Venice Erin Liong, Qiang Xu, Anush Krishnan, Yu Pan, Giancarlo Baldan, and Oscar Beijbom. nuscenes: A multimodal dataset for autonomous driving. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 11621–11631, 2020.

- [5] Hsu-kuang Chiu, Antonio Prioletti, Jie Li, and Jeannette Bohg. Probabilistic 3d multi-object tracking for autonomous driving. arXiv preprint arXiv:2001.05673, 2020.

- [6] Wei-Chih Hung, Henrik Kretzschmar, Tsung-Yi Lin, Yuning Chai, Ruichi Yu, Ming-Hsuan Yang, and Dragomir Anguelov. Soda: Multi-object tracking with soft data association. arXiv preprint arXiv:2008.07725, 2020.

- [7] Aleksandr Kim, Guillem Brasó, Aljoša Ošep, and Laura Leal-Taixé. Polarmot: How far can geometric relations take us in 3d multi-object tracking? In Computer Vision–ECCV 2022: 17th European Conference, Tel Aviv, Israel, October 23–27, 2022, Proceedings, Part XXII, pages 41–58. Springer, 2022.

- [8] Diederik P. Kingma and Jimmy Ba. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980, 2014.

- [9] Alex H. Lang, Sourabh Vora, Holger Caesar, Lubing Zhou, Jiong Yang, and Oscar Beijbom. Pointpillars: Fast encoders for object detection from point clouds. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 12697–12705, 2019.

- [10] Zhijian Liu, Haotian Tang, Alexander Amini, Xinyu Yang, Huizi Mao, Daniela Rus, and Song Han. Bevfusion: Multi-task multi-sensor fusion with unified bird’s-eye view representation. arXiv preprint arXiv:2205.13542, 2022.

- [11] Chenxu Luo, Xiaodong Yang, and Alan Yuille. Exploring simple 3d multi-object tracking for autonomous driving. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 10488–10497, 2021.

- [12] Tim Meinhardt, Alexander Kirillov, Laura Leal-Taixe, and Christoph Feichtenhofer. Trackformer: Multi-object tracking with transformers. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 8844–8854, 2022.

- [13] Ziqi Pang, Zhichao Li, and Naiyan Wang. Simpletrack: Understanding and rethinking 3d multi-object tracking. In Computer Vision–ECCV 2022 Workshops: Tel Aviv, Israel, October 23–27, 2022, Proceedings, Part I, pages 680–696. Springer, 2023.

- [14] Charles R. Qi, Yin Zhou, Mahyar Najibi, Pei Sun, Khoa Vo, Boyang Deng, and Dragomir Anguelov. Offboard 3d object detection from point cloud sequences. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 6134–6144, 2021.

- [15] Felicia Ruppel, Florian Faion, Claudius Gläser, and Klaus Dietmayer. Transformers for multi-object tracking on point clouds. In 2022 IEEE Intelligent Vehicles Symposium (IV), pages 852–859, 2022.

- [16] Leslie N. Smith and Nicholay Topin. Super-convergence: Very fast training of neural networks using large learning rates. arXiv preprint arXiv:1708.07120, 2017.

- [17] Peize Sun, Jinkun Cao, Yi Jiang, Rufeng Zhang, Enze Xie, Zehuan Yuan, Changhu Wang, and Ping Luo. Transtrack: Multiple object tracking with transformer. arXiv preprint arXiv:2012.15460, 2020.

- [18] Pei Sun, Henrik Kretzschmar, Xerxes Dotiwalla, Aurelien Chouard, Vijaysai Patnaik, Paul Tsui, James Guo, Yin Zhou, Yuning Chai, Benjamin Caine, and et al. Scalability in perception for autonomous driving: An open dataset benchmark. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 2446–2454, 2020.

- [19] Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Łukasz Kaiser, and Illia Polosukhin. Attention is all you need. Advances in neural information processing systems, 30, 2017.

- [20] Xinshuo Weng, Jianren Wang, David Held, and Kris Kitani. Ab3dmot: A baseline for 3d multi-object tracking and new evaluation metrics. arXiv preprint arXiv:2008.08063, 2020.

- [21] Xinshuo Weng, Yongxin Wang, Yunze Man, and Kris Kitani. Gnn3dmot: Graph neural network for 3d multiobject tracking with 2d-3d multi-feature learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, page 6498–6507, 2020.

- [22] Bin Yang, Min Bai, Ming Liang, Wenyuan Zeng, and Raquel Urtasun. Auto4d: Learning to label 4d objects from sequential point clouds. arXiv preprint arXiv:2101.06586, 2021.

- [23] Tianwei Yin, Xingyi Zhou, and Philipp Krahenbuhl. Center-based 3d object detection and tracking. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 11784–11793, 2021.

- [24] Jan-Nico Zaech, Alexander Liniger, Dengxin Dai, Martin Danelljan, and Luc Van Gool. Learnable online graph representations for 3d multi-object tracking. IEEE Robotics and Automation Letters, 7(2):5103–5110, 2022.

- [25] Xingyi Zhou, Tianwei Yin, Vladlen Koltun, and Philipp Krähenbühl. Global tracking transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 8771–8780, 2022.

- [26] Tianyu Zhu, Markus Hiller, Mahsa Ehsanpour, Rongkai Ma, Tom Drummond, Ian Reid, and Hamid Rezatofighi. Looking beyond two frames: End-to-end multi-object tracking using spatial and temporal transformers. arXiv preprint arXiv:2103.14829, 2021.

BOTT: Box Only Transformer Tracker for 3D Object Tracking

- Supplementary Material -

In this appendix, we (i) provide more details for track dataset generation; (ii) study impact of various data augmentations; (iii) analyse runtime breakdown of online tracker; and (iv) provide more details for online tracking.

A.1 Track database generation details

This section presents further details about track database generation. The track database is generated by matching detection boxes and ground truth provided by nuscenes and WOD. We follow 4 steps to generate the track database. Firstly, we deploy CenterPoint [23] on the training, validation and test sets on nuScenes and WOD following the official instruction at https://github.com/tianweiy/CenterPoint. The pretrained model VoxelNet with flip augmentation is adopted to deploy on the nuScenes. As we generated the track database at 10Hz, we downsample the input point clouds, but keep all the key frames. Due to nuScenes only provides 2Hz GT, we interpolate the GT to 10Hz. As for WOD, we use the two-stage VoxelNet model with velocity. Secondly, as the raw detection boxes have many overlapped boxes and low-score objects, we perform both NMS and filtering of low-score objects to the raw detection boxes. The detailed parameters used for nuScenes and WOD are listed in Table. A.1. Then, we perform a class-aware Hungarian association between the filtered detection boxes and GT boxes. A box pair is only determined to be matched if interaction over union (IoU) between them is higher than the minimal threshold (0.0001). For the matched pair, the detection box will be assigned the track ID of the matched GT. Finally, all the detection boxes and GT boxes in a scene, the matched information between them, and other meta information are stored in an individual file, indexed by each time frame.

| Param | nuScenes | WOD | ||||

|---|---|---|---|---|---|---|

| car | ped | other | vehicle | pedestrian | cyclist | |

| NMS | 0.1 | 0.25 | 0.1 | 0.1 | 0.25 | 0.1 |

| Score | 0.2 | 0.2 | 0.1 | 0.2 | 0.2 | 0.1 |

A.2 Ablation Study on Data Augmentation

This section presents ablation study on data augmentation. We conducted ablation study on three different augmentation methods including dropping tracks, global flipping, and global yaw augmentation. From the results in Table. A.2, it boosts the performance by dropping some tracks randomly. Global flipping along both x-axis and y-axis could improve the performance. To have larger diversity, global flipping on x-axis and y-axis are enabled. Global rotation is applied with yaw angle uniformly drawn between 0 and a maximal value. As seen from the results, all experiments, i.e. rotation with a maximum of 30, 60, 90, and 180 degrees, could improve the performance. Since the results are close, we just choose 90 degrees for larger diversity. We do not enable global jittering, due to jittering on all boxes will not change the box raw feature encoding with as described in Section. 3.2.

| Augmentation | AMOTA | IDS | Recall |

|---|---|---|---|

| none | 68.96 | 509 | 70.9 |

| drop track | 69.38 | 434 | 71.8 |

| xflip | 69.79 | 537 | 72.7 |

| yflip | 69.65 | 479 | 73.3 |

| xyflip | 69.40 | 572 | 72.0 |

| yaw30 | 69.67 | 522 | 71.5 |

| yaw60 | 69.07 | 507 | 71.5 |

| yaw90 | 69.56 | 502 | 72.2 |

| yaw180 | 69.85 | 505 | 71.6 |

| drop+xyflip+yaw90 | 69.91 | 438 | 72.1 |

A.3 Runtime of BOTT Online Tracking

Realtime tracking is critical for autonomous driving. Table. A.3 reports detailed runtime for BOTT online tracker on a desktop equipped with a 3.6GHz CPU and a GTX 1080-Ti GPU. Overall, online tracking runtime mainly consists of data loading time, network inference time, and box tracking time. It shows the BOTT tracker can run fast. For instance, BOTT network with 3 encoder layers can track at a fps of 47.8Hz on nuScenes val set with an average of 802 boxes per frame. In comparison, when running the official AB3DMOT [21] and SimpleTrack [14] code on nuScenes validation set, they require 322.1ms and 1128.4ms per frame, respectively. The slower runtime is due to (1) class-wise tracking for 7 classes, resulting in the summation of processing time (PolarMOT also trains different networks for different classes); (2) The Generalized IoU between boxes is computationally expensive. Additionally, SimpleTrack employs a two-stage association approach to boost performance with runtime sacrifice. In contrast, BOTT uses a single network for class-agnostic affinity matrix computation.

| encoder | Data (ms) | Network (ms) | Tracking (ms) | fps |

|---|---|---|---|---|

| 0 | 1.8 | 2.6 | 9.5 | 71.9 |

| 1 | 4.7 | 9.4 | 62.9 | |

| 3 | 8.6 | 10.5 | 47.8 | |

| 6 | 13.6 | 10.0 | 39.4 |

Table A.4 shows BOTT inference time with different box numbers. In real-world driving scenarios, it is uncommon to encounter more than 3000 boxes. Various optimizations, such as half-precision and TensorRT inference, can be applied to further reduce the time cost.

| box number | 500 | 1000 | 2000 | 3000 | 4000 |

|---|---|---|---|---|---|

| model time (ms) | 3.32 | 7.84 | 23.43 | 53.94 | 85.44 |

A.4 Additional Details for Online Tracking

This section provides more details for the BOTT online tracking described in 3.6.1. As mentioned, velocity physical constraints are applied to forbid faraway box links. Specifically, box center distance cannot exceed the product of class-specific maximal speed and the time difference. The maximal speed for each class are: bicycle=20, pedestrian=10, car=bus=motorcycle=trailer=truck=35. Meanwhile, all links with center distance below following thresholds will be used for data association: bicycle=2m, pedestrian=1.5m, car=bus=motorcycle=trailer=truck=3m. During detection-track association, associated pairs with cost below thresholds are used as matched pairs. Correspondingly, the minimal linking scores for each class are: bicycle=0.6, car=0.4, pedestrian=car=bus=motorcycle=trailer=truck=0.5.

A.5 Sliding Window Size

Table A.5 shows how different window sizes affect results. Two models are evaluated. The left side is trained with the same window size as the deployment (). The right side was trained with but deployed with different . Smaller window sizes result in less temporal context accessibility, while larger window sizes require the model to have a larger capacity to learn affinity between input boxes that grow quadratically, leading to increasing computations.

| AMOTA | IDS | AMOTA | IDS | ||||

|---|---|---|---|---|---|---|---|

| 4 | 4 | 66.4 | 1369 | 16 | 4 | 65.8 | 1586 |

| 8 | 8 | 68.8 | 818 | 16 | 8 | 67.9 | 889 |

| 16 | 16 | 69.9 | 438 | 16 | 16 | 69.9 | 438 |

| 24 | 24 | 69.8 | 483 | 16 | 24 | 68.1 | 663 |