Boost Decentralized Federated Learning in Vehicular Networks by Diversifying Data Sources

Abstract

Recently, federated learning (FL) has received intensive research because of its ability in preserving data privacy for scattered clients to collaboratively train machine learning models. Commonly, a parameter server (PS) is deployed for aggregating model parameters contributed by different clients. Decentralized federated learning (DFL) is upgraded from FL which allows clients to aggregate model parameters with their neighbours directly. DFL is particularly feasible for vehicular networks as vehicles communicate with each other in a vehicle-to-vehicle (V2V) manner. However, due to the restrictions of vehicle routes and communication distances, it is hard for individual vehicles to sufficiently exchange models with others. Data sources contributing to models on individual vehicles may not diversified enough resulting in poor model accuracy. To address this problem, we propose the DFL-DDS (DFL with diversified Data Sources) algorithm to diversify data sources in DFL. Specifically, each vehicle maintains a state vector to record the contribution weight of each data source to its model. The Kullback–Leibler (KL) divergence is adopted to measure the diversity of a state vector. To boost the convergence of DFL, a vehicle tunes the aggregation weight of each data source by minimizing the KL divergence of its state vector, and its effectiveness in diversifying data sources can be theoretically proved. Finally, the superiority of DFL-DDS is evaluated by extensive experiments (with MNIST and CIFAR-10 datasets) which demonstrate that DFL-DDS can accelerate the convergence of DFL and improve the model accuracy significantly compared with state-of-the-art baselines.

Index Terms:

Decentralized Federated Learning, Privacy Protection, Vehicular Networks, KL Divergence.I Introduction

With the proliferation of intelligent vehicles, vehicles have accumulated abundant datasets via equipped LiDAR, RIDAR, camera and sensors [1]. Advanced machine learning models can be obtained by exploiting datasets on vehicles to improve road safety, object recognition accuracy, etc [2]. However, exploiting datasets on vehicles for model training confronts at least two challenges: First, accessing raw datasets residing on vehicles inevitably invades data privacy because these datasets probably contain sensitive and confidential information; Second, the communication cost may be very heavy by extensively collecting raw datasets from vehicles. Thanks to the recent advances of federated learning (FL), these challenges can be potentially solved.

To mitigate the rising concerns on data privacy leakage, FL conducts model training without touching original data samples stored on scattered clients [3]. In each round of FL global iteration (a.k.a epoch), a parameter server (PS) is responsible for aggregating model parameters collected from participating clients who conduct local iterations with local datasets and then disclose their model parameters to the PS. Global iterations will be conducted for multiple times by involving different clients until the trained model finally converges [3].

To avoid the single point failure and alleviate the communication bottleneck of the PS in FL [4], decentralized FL (DFL) is devised which allows each client to aggregate model parameters gathered from its neighbours directly. According to [5, 6], clients weigh models from neighbours based on their connection degrees or sample sizes for model aggregation. The convergence of DFL has been proved in [7, 8] assuming that the network topology formed by clients is connected. DFL is particularly feasible for vehicular networks provided that vehicles as learning clients communicate with each other in a vehicle-to-vehicle (V2V) manner [9].

Despite the feasibility of DFL, it is highly possible that individual vehicles 111We use vehicles exchangeably with clients in our paper. fail to attain satisfactory model accuracy due to restrictions of their routes and communication distances [10]. To illustrate this point, we show a concrete example in Fig. 1 by using real traces extracted from DiDi [11]. In Fig. 1, there are four vehicles, namely A, B, C and D. The route of vehicle A has no overlap with the route of vehicle B though their information can be exchanged via intermediate vehicle C. In other words, data sources contributing to model aggregation on vehicle A are not sufficiently diversified given that vehicle A cannot directly communicate with vehicle B. The problem is even worse in a real large-scale vehicular network when vehicles need to exchange model parameters via multiple hops through intermediate vehicles. As a consequence, the convergence of DFL in vehicular networks could be very slow.

Diversifying data sources in FL has been explored by existing works. It has been regarded as an important data related factor affecting model performance in [12], which proposed a diversity-driven client selection strategy. However, a central server is needed to collect the noisy data sketch of each client to compute the data similarity between two clients. In [13], a joint sampling and data offloading algorithm is proposed to improve model performance, where the sampling method is based on the utility of device data distributions. A D2D (device-to-device) offloading scheme is used to diversify sampled devices, though D2D offloading between neighboring devices is unsuitable in many privacy-preserving tasks. In [14], training workers are assumed to be connected with all data sources, which can ensure that the dataset collected by each training worker is of large diversity. Unfortunately, these methods cannot be leveraged to support DFL in vehicular networks in that they failed to consider the fully decentralized V2V communication manner in vehicular networks.

To boost DFL by diversifying data sources in vehicular networks, we propose the DFL with diversified data sources (DFL-DDS) algorithm. First of all, we implement the distributed learning algorithm designed in [5] without considering data source diversity to conduct a simulation study. Our study unveils that unlucky vehicles will encounter difficulties to diversify their data sources for model aggregation. Consequently, their model accuracy is much lower than that of other vehicles. Inspired by our simulation study, we design DFL-DDS as below. Each vehicle in DFL-DDS maintains a state vector to record the contribution weight of each vehicle in the system to its model, which will be exchanged between vehicles along with the exchange of model parameters. Formally, we employ the KL divergence to measure the diversity of a state vector, which not only quantifies data source diversity but also accommodates heterogeneous dataset sizes from different vehicles. Prior to aggregating models, each vehicle generates the aggregation weight for each data source by minimizing the KL divergence of its aggregated state vector, and its effectiveness in diversifying data sources can be theoretically illustrated. To validate that higher model accuracy can be attained with more diversified data sources, we conduct extensive experiments to evaluate our algorithm by using standard MNIST and CIFAR-10 datasets. Experimental results demonstrate superb performance achieved by DFL-DDS in comparison with competitive baselines.

The rest of the paper is organized as follows. Relevant works and our contribution compared to these works are discussed in Sec. II. Preliminary knowledge of the FedAvg and Decentralized FedAvg algorithms are introduced in Sec. III. The simulation study showing the deficiency of existing algorithms is presented in Sec. IV. The DFL-DDS algorithm and its analysis are elaborated in Sec. V. Experiments are conducted and results are reported in Sec. VI. Lastly, we conclude our work and envision our future work in Sec. VII.

II Related Work

In this section, we briefly discuss related works from two aspects: federated learning and learning in vehicular networks, and state our contribution in light of existing works.

II-A Federated Learning

Federated learning was originally proposed by [3] to preserve the privacy of data distributed on decentralized clients during model training. FedAvg and FedSGD [3, 15] were proposed as two most fundamental model averaging algorithms in FL. They can complete model training by exchanging model parameters between the PS and clients without accessing original data samples.

Since its inception, FL has received intensive research efforts, and has been applied in various systems. To fit in different applications, variants of FedAvg/FedSGD were devised to accelerate FL. Khalil et. al. extended FedAvg by introducing a new sampling and aggregation strategy to improve the convergence speed when training a federated recommendation model [16]. In [17], Chen et. al. proposed a federated transfer learning framework for wearable healthcare to achieve personalized model learning. In [18], Yu et. al. proposed a neural-structure-aware resource management approach with module-based FL framework to improve the resource efficiency. In [19], Nishio et. al. proposed algorithms to actively manage clients based on their resource conditions to accelerate FL procedure.

Regardless of application scenarios, the data diversity is always an important factor influencing the accuracy of FL. In FL, data samples are collected and privately maintained by distributed clients, which makes data sample selection and the estimation of data sample quality difficult. In view of this difficulty, research efforts have been dedicated to diversifying data sources to improve model accuracy. In [14], Pu et. al. proposed an efficient online data scheduling policy to alleviate the data skew issue caused by the capacity heterogeneity of training workers from the long-term perspective. In [13], Wang et. al. proposed a joint sampling and data offloading optimization problem subject to constraints on the network topology and device capacities to improve FL training accuracy. Considering the similarity of datasets collected across devices, a similarity-aware data offloading strategy was proposed in a distributed manner to optimize the data dissimilarity of each device. In [12], Li et. al. reported that a low content diversity can lead to severe model accuracy deterioration based on trace driven analysis. A client sampling method jointly considering the statistical homogeneity and content diversity was proposed to improve model accuracy.

However, all these mentioned works trying to improve the diversity of data sources failed to consider the decentralized FL scenario. Due to the lack of a centralized PS in DFL, it is rather difficult to collect necessary information to diversify data sources from a global perspective. In [6], FedAvg was extended to a DFL algorithm to enable learning in arbitrarily large scale networks. Same as FedAvg, the aggregation weight is proportional to the sample population failing to diversify data sources. In [20], Hu et. al. proposed a segmented gossip aggregation approach allowing each worker to pull different model segments from chosen peers. The objective is to optimize the utilization of the bandwidth resources between workers requiring high connectivity among workers. In [5], a broadcast-based algorithm was developed to minimize the sum of convex functions scatted throughout a collection of distributed nodes. The aggregation weight of each model is merely determined by the in-degree and out-degree of each worker, which cannot effectively diversify data sources.

II-B Learning in Vehicular Networks

To accommodate intelligent applications on vehicles, privacy preserved and efficient learning framework applicable for vehicular networks becomes extremely needed. It was reported in [21, 22] that FL can benefit many important applications in autonomous driving. The works [23, 24, 25] investigated how to support data- and computation-intensive applications with low communication overhead with a strong privacy guarantee in vehicular networks.

Nevertheless, it is costly to deploy a PS to conduct centralized model aggregation for FL in large-scale vehicular networks. In [26], an RSU (Road Side Unit) based model aggregation method was proposed to conduct FL. However, pervasively deploying RSUs can be very costly. Thereby, pure V2V solutions are extensively explored by [27, 28, 29, 30]. These works indicate the feasibility to implement DFL in vehicular networks by allowing each vehicle to aggregate models collected from neighbours directly. In [31, 32], blockchain-based FL was proposed to enable decentralized FL. Whereas, the FL efficiency of these works cannot be guaranteed.

Based on the above discussion, existing works on DFL in vehicular networks largely overlooked the influence of data source diversity when aggregating models. Consequently, it is hard for them to achieve high model accuracy on every individual vehicles.

III Preliminary

In this section, we briefly explain the FedAvg and Decentralized FedAvg algorithms to facilitate the discussion of our DFL-DDS algorithm presented in the next section.

III-A FedAvg

Without loss of generality, we suppose that data samples are distributed among clients in the FL system. The process to train a machine learning model can be regarded as the process to minimize a loss function. The loss function of a particular sample can be defined as , where represents model parameters to be learned. The objective of FL is to minimize the overall loss function defined as

| (1) |

Let denote the set of data samples owned by client and . The objective in Eq. (1) can be rewritten as

| (2) |

FederatedAveraging (FedAvg) minimizes the loss function by conducting multiple global iterations (a.k.a epochs) with the assistance of the PS. The learning process consists of the following steps.

-

•

The PS randomly and uniformly selects clients, denoted by , to participate local model update in the -th global iteration. This client selection scheme can ensure the diversity of data sources for model aggregation.

-

•

Each participating client downloads the latest global model222The global model in the first global iteration can be randomly initialized by the PS. from the PS to perform local updates according to the formula of each local update as below with local data samples.

(3) where is the learning rate and is a batch of samples selected from . Then, the updated model parameters are uploaded to the PS.

-

•

The PS aggregates all updated models from participating clients as

(4) Here represents the weight of participating client for model aggregation in the -th global iteration. It is usual to set proportional to .

-

•

Repeat the above three steps until the model converges.

III-B Decentralized FedAvg

Decentralized FedAvg can be easily extended from FedAvg by enabling each client to collect model parameters from its neighbour clients directly. It is equivalent to implementing the function of the PS on each client. Many existing works can be perceived as variants of decentralized FedAvg such as the sub-gradient push algorithm proposed in [5].

Nevertheless, it is tricky to properly set when deploying DFL in vehicular networks. Considering the constraints of vehicle routes and communication distances, an individual vehicle cannot uniformly and randomly select other vehicles as its neighbours to diversify data sources contributed to its model training. Consequently, it becomes a challenging problem to properly set for DFL in vehicular networks. In [5, 8], it was proposed to set according to in-degrees and out-degrees of client , which has ignored the influence of data source diversity on the model accuracy. We will further explore the deficiency to implement such algorithms in vehicular networks with a simulation study in the next section.

IV Simulation Study

To visualize the impact of data source diversity on the final model accuracy in DFL, we conduct a simulation study by implementing the subgradient push (SP) algorithm [5] with two public datasets: CIFAR-10 and MNIST.

IV-A System Model

We study a vehicular network with vehicles. Each vehicle owns a dataset . Each vehicle is equipped with certain computation and communication capacity. It can either park or move along roads. We consider a synchronized DFL system. Each vehicle conducts local iterations according to Eq. (3) with its own dataset and its model in the -th global iteration. When local iterations are completed, all vehicles make efforts to contact other vehicles in their proximity. Then, two vehicles can exchange model parameters if their distance is within the communication range. Let denote the set of neighbor vehicles, which can exchange models with vehicle for the -th round of model aggregation. Let denote the set of all vehicles involved in the -th round of model aggregation on vehicle . After collecting model parameters from vehicles in , vehicle conducts aggregation according to Eq. (4). With refined parameters, vehicles proceed to the next round of global iteration.

To facilitate the understanding of our work, frequently used notations are summarized with brief explanations in Table I.

| Notation | Short Explanation |

|---|---|

| The number of data samples owned by vehicle | |

| The local dataset owned by vehicle | |

| The total number of vehicles | |

| The number of local updates | |

| The learning rate | |

| The weight of the model from vehicle for model | |

| aggregation on vehicle in the -th global iteration | |

| The model of vehicle in the -th global iteration | |

| The set of vehicles which can communicate with vehicle | |

| in the -th global iteration | |

| The set of vehicles involved for model aggregation on | |

| vehicle in the -th global iteration | |

| The state vector on vehicle in the -th global iteration | |

| The contribution weight from vehicle to vehicle | |

| The target state vector |

IV-B Simulation Implementation

We implement a simulator to conduct DFL experiments in vehicular networks for our study. The SP algorithm introduced in [5] is implemented on each vehicle. Specifically, in the -th global iteration, each vehicle conducts a round of local iteration with its local samples to update the model. Meanwhile, it maintains two intermediate quantities: a vector variable , and a scalar variable . In SP, represents model parameters contributed from client . However, must be adjusted by the scalar for model aggregation. Each vehicle evenly broadcasts two quantities and to all neighbour vehicles , where , after conducting a local iteration. Then, and are utilized to update model parameters. For detailed derivation of and , please refer to [5].

We leverage a microscopic traffic simulator SUMO [33] to generate grid topology and random topology as the road networks as well as vehicle trajectories. We generate 100 vehicles moving along roads in road networks based on the Manhattan mobility model [34]. Each vehicle can only communicate to other vehicles within the communication range of 100 meters. Two public datasets, CIFAR-10 with 50k/10k training/test images and MNIST with 60k/10k training/test images, are adopted to conduct our experiments. In each experiment, the training samples are distributed to 100 vehicles in a balanced and non-IID manner. In other words, each vehicle is assigned with 500 training images of CIFAR-10 or 600 training images of MNIST, selected from 2 to 4 out of 10 labels. Two CNN models are adopted for the image classification tasks. The CNN models for CIFAR-10 and MNIST have 33,834 and 21,840 parameters, respectively.333These models are from the public code repository at https://github.com/AshwinRJ/Federated-Learning-PyTorch We train the CNN models for 3,000 iterations on CIFAR-10 and 300 iterations on MNIST.

IV-C Measuring Accuracy

We collect the final model accuracy of each vehicle (evaluated on test datasets) in our experiments. Then, we plot the cumulative distribution functions (CDF) of the final model accuracy of individual vehicles in Fig. 2. From the CDF curves of the final model accuracy, we can observe that: 1) Along vehicles’ routes (randomly generated), a vehicle can only exchange model parameters with neighbour vehicles. As a result, there exist giant discrepancies of the final model accuracy on different vehicles. The accuracy can reach 70% on CIFAR-10 or 95% on MNIST for some lucky vehicles. In contrast, the model accuracy can be lower than 30% on CIFAR-10 or 85% on MNIST for some unlucky vehicles. 2) The network topology can severely affect the final model accuracy. The model accuracy obtained on the random topology is much worse than that on the grid topology because there exist vehicles with a low connectivity with other vehicles in random topology impeding the exchange of model parameters between vehicles.

This measurement result indicates that it is insufficient to merely consider the in-degrees and out-degrees of vehicles to determine weights, i.e., ’s, for decentralized model aggregation in DFL. It calls for a more sophisticated aggregation method considering data source diversity to achieve consistent high model accuracy across all vehicles in the system.

IV-D Measuring Accuracy versus Diversity

To explore the underlying reason resulting in poorer model accuracy for unlucky vehicles, we further investigate the relationship between model accuracy and data source diversity. The challenge is how to measure the data source diversity since only model parameters are maintained as vehicles exchange and aggregate models with their neighbours. To tackle this problem, we propose to maintain state vectors on individual vehicles to track the contribution weight from each data source. The metric to measure the diversity of data sources is based on the state vector maintained by each vehicle.

Specifically, let denote the state vector of vehicle in the -th global epoch where represents the contribution weight from vehicle to vehicle ’s model. For example, if there are vehicles in the system and the state vector of vehicle is . It implies that the model of vehicle is equally contributed from all vehicles. Otherwise, if , it means that the model on vehicle is mainly contributed from vehicle and , which probably is not diversified enough.

Next, we describe how to update state vectors with the progress of DFL. Initially, all values in a state vector are assigned with . State vectors are updated when vehicles conduct local iterations or model aggregations. For a particular vehicle , we have

| (5) |

where is the learning rate. In other words, by conducting a round of local iteration, vehicle increases its own contribution weight. Similarly, Eq. 5 is executed for times given that local updates are conducted for rounds. Then, the state vector is normalized as

| (6) |

By conducting a round of model aggregation, the state vector is further revised as

| (7) |

which is subject to . Here represents the set of vehicles involved in model aggregation conducted on vehicle in the -th global iteration and represents the aggregation weight assigned for the model contributed from vehicle .

Based on Eqs. (5) and (7), the functionality of state vectors can be interpreted as below. For conducting local iterations via Eq. (5), a state vector incorporates the contribution of the local dataset weighted by the learning rate for rounds. As a vehicle aggregates its local model with other models from neighbour vehicles, its state vector is also mixed with state vectors from neighbours according to aggregation weights in Eq. (7). In this way, a state vector can track the contribution weight from each data source.

We embark on introducing how to measure data diversity based on state vectors. We first consider a homogeneous case to ease our explanation in which every vehicle has the same number of samples. It is well-known that entropy is effective in measuring diversity. Given the state vector , we define the entropy of the state vector as

| (8) |

Apparently, achieves its maximum value if and minimum value if for some .

With the diversity measure, we can examine the importance to diversify data sources in DFL. We measure the Pearson correlation between vehicles’ model accuracy and the diversity metrics of their state vectors in the experiment presented in Fig. 3. The results are plotted in Fig. 3, in which the x-axis represents the index of global iteration while the y-axis represents the Pearson correlation coefficient after each global iteration. In Fig. 3, we can observe that there is a strong positive correlation between model accuracy and the diversity metric under both network topologies, indicating that unlucky vehicles with poorer model accuracy fail to diversify their data sources. This experiment also manifests the potential to improve model accuracy by diversifying data sources in DFL.

V DFL-DDS Algorithm Design

This section presents the design essence of the DFL-DDS algorithm.

V-A Algorithm Design

In view of the importance of data source diversity on the final model accuracy, we design the DFL-DDS algorithm that maximizes the data source diversity when aggregating models collected from neighbour vehicles.

The entropy value of a state vector is based on a homogeneous system. Yet, vehicles possibly own different sizes of datasets in practice. To tackle the heterogeneity of dataset sizes across vehicles and make our algorithm more flexible for aggregation, we propose to employ KL (Kullback–Leibler) divergence to measure data source diversity for state vectors in our algorithm.

According to [3], if the sample distribution is heterogeneous, it is common to assign the contribution weight of a vehicle proportional to its dataset size, i.e., . To take heterogeneous distribution into account, we define the target state vector as and , where . The KL divergence of a state vector is defined as its distance to the target state vector, which is

| (9) |

The KL divergence achieves its minimum value when . Intuitively speaking, the KL divergence takes both the diversity and the importance of each vehicle into account.

Based on the defined KL divergence for each vehicle, we propose to generate weights when aggregating models by minimizing the local KL divergence between the current state and the target state in order to diversify the contribution of data sources and factor in the importance of each data source simultaneously. To facilitate the understanding of our algorithm, we exemplify the aggregation process in Fig. 4. In the example, it takes four steps for vehicle to update its state vector: 1) Exchange state vectors with neighbour vehicles along with the exchange of model parameters; 2) Generate weights ’s by minimizing the KL divergence of the state vector after aggregation; 3) Aggregate models based on aggregation weight ; 4) Update local state vector according to Eqs. (5) and (6) as local iterations are conducted. Similar to the example, the general update of state vectors can be embedded into the execution of local iterations. In particular, model parameters are aggregated according to the following rules in step 3).

| (10) |

Note that it is not reasonable to straightly set for a general road network without scrutinizing the state vector of because it overlooks the weights of data sources contributing to . To make it clearer, we reuse the example in Fig. 1 for illustration. In Fig. 1, there are two undirected communication paths: A-C-B and A-D. Assuming that the sample sizes in vehicles {A, B, C, D} are {100, 100, 10, 100}, respectively. Then, . However, by only considering neighbour vehicles, vehicle A will straightly set the aggregation weight of C as because it can only contact vehicles C and D without taking vehicle B into account. This example illustrates the necessity for optimizing diversity via state vectors. The holistic overview of our DFL-DDS algorithm is sketched in Alg. 1.

V-B Analysis

To justify the feasibility of step 2) deducing based on state vectors, we move on to prove that minimizing KL divergence can be solved efficiently by vehicles.

According to Eq. (7), the problem to minimize KL divergence is formulated as

| (11) | ||||

It is known that the KL divergence as the objective of is a convex function. Meanwhile, all constraints in are linear functions. Hence, this is a convex optimization problem, which can be solved efficiently to optimally determine weights ’s for model aggregation.

It is worth noting that the problem minimizing the KL divergence of the local state vector is equivalent to maximizing the entropy value of the state vector when the sample distribution is balanced, i.e., . For this special case, we have

V-C Implementation

DFL-DDS is friendly for implementation in practice. Firstly, the computation and communication overhead incurred by DFL-DDS is negligible. Given vehicles in the system, exchanging state vectors only brings communication overhead, which could be much less than the communication load for exchanging complicated CNN models between vehicles. The computation overhead lies in solving problem , a small-scale convex optimization problem. It is still insignificant compared with the computation load to train CNN models. Secondly, the communication overhead can be further reduced if the system scale is large by maintaining dynamic state vectors on vehicles. In other words, each vehicle can adaptively adjust the size of its state vector to only record weights from vehicles which have already contributed to its model training. Obtaining and distributing the target vector may consume extra communication traffic as well. Yet, the target state vector is static and limited with . It implies that a central coordinator can easily complete this task with low overhead.

Besides, our algorithm can be easily extended if RSUs are available for supporting DFL. An RSU can be regarded as a special static vehicle, which can also maintain a state vector to record the contribution weight of each vehicle. Thus, a vehicle can exchange information with an RSU to accelerate DFL as long as they are within effective communication distance.

VI Experimental Results and Analysis

In this section, we report the results of experiments conducted with MNIST and CIFAR-10 datasets to evaluate our DFL-DDS algorithm.

VI-A Experimental Settings

VI-A1 Datasets

We employ the well-known image datasets CIFAR-10 and MNIST for our experiments, since they are widely used in many areas including vehicular networks [35, 19]. The CIFAR-10 dataset consists of 50,000 training samples and 10,000 testing samples. Each sample in the CIFAR-10 dataset is a 3*32*32 color image with one of ten labels such as ships, cats, and dogs. The MNIST dataset consists of 60,000 training samples, and 10,000 testing samples. Each sample is a 28*28 handwritten digit with a label of digits from 0 to 9.

VI-A2 Learning Tasks

The learning tasks on each vehicle are to train two CNN (Convolutional Neural Network) models for classifing CIFAR-10 and MNIST images, respectively. The structure of two CNN models are described as below:444These models are from the public code repository at https://github.com/AshwinRJ/Federated-Learning-PyTorch

-

•

The CNN to classify CIFAR-10 images is with three 33 convolution layers (the first layer is with 16 channels, the second one is with 32, the third one is with 64 and each channel is followed by a 22 max pooling), a dropout layer with the probability of 0.25 and a fully connected layer with a log softmax output layer. There are 33,834 parameters in total.

-

•

The model to classify MNIST images is with two 55 convolution layers (the first layer is with 10 channels, the second one is with 20 channels and each channel is followed with 22 max pooling), a fully connected layer with 50 units, a dropout layer with the probability of 0.5, a final fully connected layer with a log softmax output layer. There are 21,840 parameters in total.

VI-A3 Road Network and Trajectory Generation

We generate three road networks for our experiments. Road network examples are shown in Fig. 5.

-

•

Grid net: We set the number of nodes as 10 for both horizontal and vertical directions, and the length between two neighboring nodes is 100 meters. As a result, 100 nodes are placed evenly in the grid network, and their degrees range from 2 to 4 with frequencies {4, 32, 64}.

-

•

Random net: The distance between two neighboring nodes is randomly generated in the range from 100 to 200 meters and other parameters are the same as the default values in the SUMO simulator [33]. In the random network, 100 nodes are randomly generated with 100 iterations. For these nodes, their degrees range from 1 to 5 with frequencies {25, 7, 36, 27, 5}.

-

•

Spider net: The number of nodes and the distance between nodes are determined by three parameters: arms, circles, and radius increment of neighboring circles, which are set as 10, 10, and 100 meters, respectively. We locate 100 nodes evenly in 10 circles.

We adopt the same settings as the simulation study for the generation and communication of vehicles. There are 100 vehicles moving in each road network. Their trajectories are generated based on the Manhattan mobility model [34]. The default velocity of each vehicle is 13.89 m/s, while the real velocity is influenced by several factors, such as road types and the congestion condition. We assume that all vehicles are equipped with DSRC and mmWave communication components with a communication range of 100 meters[23, 36, 37].

| Experiment Parameter | Value |

| learning rate | 0.1 |

| local minibatch size | 80 |

| local epoch | 8 |

| vehicle number | 100 |

| communication range | 100m |

For convenience, we summarize critical parameters in our experiments in Table II.

VI-A4 Sample Distribution

In FL, the typical data sample distribution is non-IID (not independent and identically distributed) and unbalanced. Accordingly, we adopt two different ways to place images on vehicles for our experiments.

-

•

Balanced & non-IID: The datasets are firstly grouped based on their labels, which are then evenly partitioned into shards. Each vehicle is assigned with 4 shards so that each vehicle receives the same number of training samples. The number of labels owned by each vehicle is from 2 to 4.

-

•

Unbalanced & IID: All samples assigned to a vehicle are uniformly and randomly selected from CIFAR-10 and MNIST in an IID manner. But, the number of samples on each vehicle is restricted to one of values in {125, 375, 1125} for CIFAR-10 or {150, 450, 1350} for MNIST.

VI-A5 Baselines and Evaluation Metrics

We implement two baselines: the subgraident push (SP) algorithm proposed in [5] and the decentralized federated learning (DFL) algorithm proposed in [6]. In the SP algorithm, each vehicle only conducts local iteration with all local samples once before it evenly partitions its parameters and broadcasts them to all neighbor vehicles. The DFL algorithm is a distributed version of the FedAvg algorithm, which regards each vehicle as a PS to execute FedAvg. Its parameters are set as the same values as our DFL-DDS except the model aggregation weights. For DFL, it assigns weights to models proportional to the sample population on each involved vehicle.

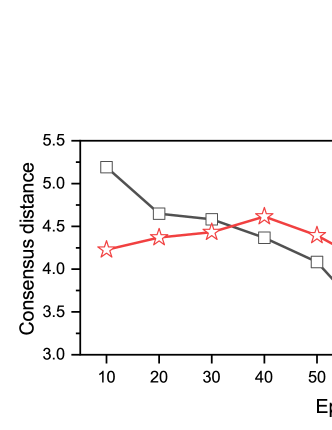

We evaluate DFL-DDS and baselines with three metrics: 1) The overall average model accuracy; 2) The number of consumed global epochs to reach target accuracy; 3) Consensus distance which is defined as . Here , and thus represents the average distance between the virtual global model and local models.

If all samples are centrally located on a server, the accuracy of our CNN models on CIFAR-10 and MNIST datasets can reach about 72% and 98%, respectively. For our experiments, the final average model accuracy is comparable with that obtained with centralized training.

VI-B Experimental Results

VI-B1 Evaluating Accuracy with CIFAR-10

In Figs. 6 and 7, we conduct experiments using the CIFAR-10 dataset to compare the model accuracy of DFL-DDS, DFL and SP under two different dataset distribution scenarios and the grid road network. Due to the complication of the CIFAR-10 dataset, we respectively conduct 4,000 and 3,000 global epochs in total under two dataset distributions. The x-axis represents the number of conducted global epochs, while the y-axis represents the average model accuracy of 100 vehicles after each epoch. From the results in Figs. 6 and 7, we can observe that:

-

•

The DFL-DDS algorithm outperforms DFL and SP in terms of average model accuracy under both sample distribution scenarios. For instance, after 2,000 epochs, the average accuracy of DFL-DDS reaches 63%, while the DFL and SP algorithms only reach 61% and 56%, respectively, under the non-IID sample distribution scenario.

-

•

The data sample distribution substantially influences the final model accuracy. For the non-IID sample distribution scenario, the final model accuracy is less than 70%. Whereas, for the IID scenario, the model accuracy of DFL-DDS exceeds 71% after about 1,500 global epochs, which is significantly higher than that of DFL and SP. This accuracy is already about the same as that achieved with centralized training, and thereby the execution of DFL-DDS is terminated then.

-

•

For the Unbalanced & IID distribution, vehicles may possess different numbers of samples. The results in Fig. 7 confirm that it is reasonable to adopt KL divergence to measure the diversity of data sources, which can well handle the heterogeneity of sample sizes.

VI-B2 Evaluating Accuracy with MNIST

We further evaluate the performance of DFL-DDS and two baselines, i.e., DFL and SP, using the MNIST dataset under three kinds of road networks. Due to the simplicity of the MNIST dataset, we totally execute 500 global epochs on each vehicle for all three algorithms. The experiment results are presented in Fig. 8. The x-axis represents the number of conducted global epochs while the y-axis represents the average model accuracy of 100 vehicles on the test dataset. From Fig. 8, we can observe that:

-

•

DFL-DDS is the best one that achieves the highest model accuracy under all evaluation cases. The model accuracy of DFL-DDS can finally exceed 95% which is very close to the accuracy achieved by centralized training. The results of this experiment validate the superiority of DFL-DDS by diversifying data sources for model aggregation.

-

•

DFL is better than SP (which only conducts local iteration once per global epoch with the full set of local samples) because DFL conducts multiple local iterations with local sample batches per global epoch which can make the trained model converge with a faster rate.

-

•

The network topology can substantially affect the model accuracy. The model accuracy in the grid network is the best one while the accuracy in the spider network is the worst one. The reason is that both the degrees of junctions and the lengths of each road segments are uniform in grid network implying a high connectivity among vehicles. In the random network, some junctions have a low degree, which leads to a low connectivity for some unlucky vehicles. In the spider network, the perimeters of different circles are highly different, and vehicles in outer circles have sparser connectivity with other vehicles. Overall speaking, if the road network is more irregular and sparser, it is possible that more vehicles will have difficulties to diversify their data sources resulting in lower model accuracy.

VI-B3 Evaluating Iteration Numbers

From another perspective, we compare DFL-DDS with baselines in terms of the number of global iterations consumed to reach the target average model accuracy. From this comparison, we can investigate how much time cost can be reduced by diversifying data sources in DFL. We employ the MNIST dataset and enumerate the target average model accuracy as 90%, 92% and 95%. The required numbers of global epochs for each experiment case are displayed in Fig. 9. Our experiment results reveal that:

-

•

DFL-DDS is the best one always taking the fewest number of global epochs to reach the target model accuracy in all experiment cases. Note that there are two experiment cases in which some algorithms cannot reach the target average model accuracy. These two cases are marked by red arrows in Fig. 9.

-

•

Compared with SP, the DFL-DDS algorithm can reduce the number of epochs to reach the target by more than 53%. In contrast, the reduction of epochs compared with the DFL algorithm varies from about 11% to 45%. These results shed light on the merit of DFL-DDS which can considerably improve the training efficiency of DFL.

VI-B4 Evaluating Consensus Distance

The consensus among participating clients is pivotal in evaluating the convergence speed of decentralized machine learning. Previous works have proposed several similar metrics such as consensus distance [38] and Laplacian potential [39] to measure the degree of disagreement among all local models. Inspired by these works, we employ consensus distance defined as to evaluate DFL progress in our experiments. A lower consensus distance implies a better DFL performance.

Similar to [38], we conduct the experiment to evaluate the consensus distances of DFL-DDS and DFL for the first 100 global epochs. We set up two experiment cases: IID dataset distribution with CIFAR-10 and non-IID dataset distribution with MNIST. The road network is generated as a grid net. Fig. 10 displays the comparison of the consensus distance for the first 100 epochs. From results in Fig. 10, we can observe that the consensus distance of DFL-DDS is always much lower than that of DFL, indicating that DFL-DDS can significantly outperform DFL by diversifying data sources.

VII Conclusion and Future Work

DFL is naturally feasible for vehicular networks. However, the low training efficiency is the major obstacle impeding the wide application of DFL. Through a simulation study, we unveil that the lack of data source diversity is a main reason resulting in poor model accuracy. To address this problem, we propose a novel DFL-DDS algorithm by maximizing the data source diversity contributed to the model trained on individual vehicles. Meanwhile, we elaborate implementation issues of DFL-DDS by considering practical issues. Experimental results conducted with public datasets confirm the superiority of DFL-DDS.

This work as our initial attempt to improve DFL is insightful for future works in this area. Our future work includes two possible aspects. Firstly, communication unreliability has not been considered in our algorithm. In other words, vehicle communications can be interrupted due to various reasons such that models cannot be completely exchanged between vehicles. Robust strategies should be developed that can tolerate information loss. Secondly, we assume a static target state vector in our work. In practice, the sample population on vehicles can be a dynamic variable. A dynamic lightweight algorithm is needed to timely adjust the target state vector.

References

- [1] L. Liang, H. Ye, and G. Y. Li, “Toward intelligent vehicular networks: A machine learning framework,” IEEE Internet of Things Journal, vol. 6, no. 1, pp. 124–135, 2019.

- [2] A. Albaseer, B. S. Ciftler, M. Abdallah, and A. Al-Fuqaha, “Exploiting unlabeled data in smart cities using federated edge learning,” in 2020 International Wireless Communications and Mobile Computing, ser. IWCMC ’20, 2020, pp. 1666–1671.

- [3] B. McMahan, E. Moore, D. Ramage, S. Hampson, and B. A. y Arcas, “Communication-efficient learning of deep networks from decentralized data,” in International Conference on Artificial Intelligence and Statistics, ser. AISTATS ’17, 2017, pp. 1273–1282.

- [4] X. Lian, C. Zhang, H. Zhang, C.-J. Hsieh, W. Zhang, and J. Liu, “Can decentralized algorithms outperform centralized algorithms? A case study for decentralized parallel stochastic gradient descent,” in Proceedings of the 31st International Conference on Neural Information Processing Systems, ser. NIPS’ 17, 2017, p. 5336–5346.

- [5] A. Nedić and A. Olshevsky, “Distributed optimization over time-varying directed graphs,” IEEE Transactions on Automatic Control, vol. 60, no. 3, pp. 601–615, 2015.

- [6] S. Savazzi, M. Nicoli, V. Rampa, and S. Kianoush, “Federated learning with mutually cooperating devices: A consensus approach towards server-less model optimization,” in 2020 IEEE International Conference on Acoustics, Speech and Signal Processing, ser. ICASSP ’20, 2020, pp. 3937–3941.

- [7] A. Koloskova, N. Loizou, S. Boreiri, M. Jaggi, and S. Stich, “A unified theory of decentralized sgd with changing topology and local updates,” in International Conference on Machine Learning. PMLR, 2020, pp. 5381–5393.

- [8] Z. Zhong, Y. Zhou, D. Wu, X. Chen, M. Chen, C. Li, and Q. Z. Sheng, “P-FedAvg: parallelizing federated learning with theoretical guarantees,” in IEEE Conference on Computer Communications, ser. INFOCOM ’21, 2021, pp. 1–10.

- [9] Y. Lu, X. Huang, Y. Dai, S. Maharjan, and Y. Zhang, “Differentially private asynchronous federated learning for mobile edge computing in urban informatics,” IEEE Transactions on Industrial Informatics, vol. 16, no. 3, pp. 2134–2143, 2020.

- [10] D. Deveaux, T. Higuchi, S. Uçar, C.-H. Wang, J. Härri, and O. Altintas, “On the orchestration of federated learning through vehicular knowledge networking,” in 2020 IEEE Vehicular Networking Conference, ser. VNC ’20, 2020, pp. 1–8.

- [11] Z. Che, G. Li, T. Li, B. Jiang, X. Shi, X. Zhang, Y. Lu, G. Wu, Y. Liu, and J. Ye, “-city: A large-scale dashcam video dataset of diverse traffic scenarios,” arXiv preprint arXiv:1904.01975, 2019.

- [12] A. Li, L. Zhang, J. Tan, Y. Qin, J. Wang, and X.-Y. Li, “Sample-level data selection for federated learning,” in IEEE Conference on Computer Communications, ser. INFOCOM ’21, 2021, pp. 1–10.

- [13] S. Wang, M. Lee, S. Hosseinalipour, R. Morabito, M. Chiang, and C. G. Brinton, “Device sampling for heterogeneous federated learning: Theory, algorithms, and implementation,” in IEEE Conference on Computer Communications, ser. INFOCOM ’21, 2021, pp. 1–10.

- [14] L. Pu, X. Yuan, X. Xu, X. Chen, P. Zhou, and J. Xu, “Cost-efficient and skew-aware data scheduling for incremental learning in 5G networks,” IEEE Journal on Selected Areas in Communications, vol. 40, no. 2, pp. 578–595, 2022.

- [15] C. Jianmin, P. Xinghao, M. Rajat, and J. Rafal, “Revisiting distributed synchronous SGD,” in International Conference on Learning Representations Workshop Track, 2016.

- [16] K. Muhammad, Q. Wang, D. O’Reilly-Morgan, E. Tragos, B. Smyth, N. Hurley, J. Geraci, and A. Lawlor, “FedFast: Going beyond average for faster training of federated recommender systems,” in Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, ser. KDD ’20, 2020, p. 1234–1242.

- [17] Y. Chen, X. Qin, J. Wang, C. Yu, and W. Gao, “Fedhealth: A federated transfer learning framework for wearable healthcare,” IEEE Intelligent Systems, vol. 35, no. 4, pp. 83–93, 2020.

- [18] R. Yu and P. Li, “Toward resource-efficient federated learning in mobile edge computing,” IEEE Network, vol. 35, no. 1, pp. 148–155, 2021.

- [19] T. Nishio and R. Yonetani, “Client selection for federated learning with heterogeneous resources in mobile edge,” in 2019 IEEE International Conference on Communications, ser. ICC ’19, 2019, pp. 1–7.

- [20] C. Hu, J. Jiang, and Z. Wang, “Decentralized federated learning: A segmented gossip approach,” arXiv preprint arXiv:1908.07782, 2019.

- [21] A. M. Elbir, B. Soner, and S. Coleri, “Federated learning in vehicular networks,” arXiv preprint arXiv:2006.01412, 2020.

- [22] Z. Du, C. Wu, T. Yoshinaga, K.-L. A. Yau, Y. Ji, and J. Li, “Federated learning for vehicular internet of things: Recent advances and open issues,” IEEE Open Journal of the Computer Society, vol. 1, pp. 45–61, 2020.

- [23] J. Posner, L. Tseng, M. Aloqaily, and Y. Jararweh, “Federated learning in vehicular networks: Opportunities and solutions,” IEEE Network, vol. 35, no. 2, pp. 152–159, 2021.

- [24] J. Kang, Z. Xiong, D. Niyato, Y. Zou, Y. Zhang, and M. Guizani, “Reliable federated learning for mobile networks,” IEEE Wireless Communications, vol. 27, no. 2, pp. 72–80, 2020.

- [25] X. Zhou, W. Liang, J. She, Z. Yan, and K. I.-K. Wang, “Two-layer federated learning with heterogeneous model aggregation for 6G supported internet of vehicles,” IEEE Transactions on Vehicular Technology, vol. 70, no. 6, pp. 5308–5317, 2021.

- [26] S. Samarakoon, M. Bennis, W. Saad, and M. Debbah, “Federated learning for ultra-reliable low-latency V2V communications,” in 2018 IEEE Global Communications Conference, ser. GLOBECOM ’18, 2018, pp. 1–7.

- [27] Q. Luo, C. Li, Q. Ye, T. H. Luan, L. Zhu, and X. Han, “CFT: A cluster-based file transfer scheme for highway vanets,” in 2017 IEEE International Conference on Communications, ser. ICC ’17, 2017, pp. 1–6.

- [28] A. Bradai, T. Ahmed, and A. Benslimane, “ViCoV: Efficient video streaming for cognitive radio VANET,” Vehicular Communications, vol. 1, no. 3, pp. 105–122, 2014.

- [29] D. Lin, J. Kang, A. Squicciarini, Y. Wu, S. Gurung, and O. Tonguz, “MoZo: A moving zone based routing protocol using pure v2v communication in vanets,” IEEE Transactions on Mobile Computing, vol. 16, no. 5, pp. 1357–1370, 2017.

- [30] X. Fan, Y. Lu, B. Liu, D. Liu, S. Wen, and B. Fu, “High-integrity based cooperative file transmission at urban intersections using pure v2v communication,” Ad Hoc Networks, vol. 122, p. 102612, 2021.

- [31] L. Feng, Y. Zhao, S. Guo, X. Qiu, W. Li, and P. Yu, “Blockchain-based asynchronous federated learning for internet of things,” IEEE Transactions on Computers, vol. 71, no. 5, pp. 1092–1103, 2022.

- [32] S. R. Pokhrel and J. Choi, “Federated learning with blockchain for autonomous vehicles: Analysis and design challenges,” IEEE Transactions on Communications, vol. 68, no. 8, pp. 4734–4746, 2020.

- [33] P. A. Lopez, M. Behrisch, L. Bieker-Walz, J. Erdmann, Y.-P. Flötteröd, R. Hilbrich, L. Lücken, J. Rummel, P. Wagner, and E. Wießner, “Microscopic traffic simulation using sumo,” in The 21st IEEE International Conference on Intelligent Transportation Systems, 2018.

- [34] F. Bai, N. Sadagopan, and A. Helmy, “Important: A framework to systematically analyze the impact of mobility on performance of routing protocols for Ad-hoc networks,” in IEEE Conference on Computer Communications, ser. INFOCOM ’03, vol. 2, 2003, pp. 825–835.

- [35] H. Chai, S. Leng, Y. Chen, and K. Zhang, “A hierarchical blockchain-enabled federated learning algorithm for knowledge sharing in internet of vehicles,” IEEE Transactions on Intelligent Transportation Systems, vol. 22, no. 7, pp. 3975–3986, 2021.

- [36] Y. Cao, T. Jiang, O. Kaiwartya, H. Sun, H. Zhou, and R. Wang, “Toward pre-empted ev charging recommendation through v2v-based reservation system,” IEEE Transactions on Systems, Man, and Cybernetics: Systems, vol. 51, no. 5, pp. 3026–3039, 2021.

- [37] D. Zhao, H. Qin, B. Song, Y. Zhang, X. Du, and M. Guizani, “A reinforcement learning method for joint mode selection and power adaptation in the v2v communication network in 5g,” IEEE Transactions on Cognitive Communications and Networking, vol. 6, no. 2, pp. 452–463, 2020.

- [38] L. Kong, T. Lin, A. Koloskova, M. Jaggi, and S. Stich, “Consensus control for decentralized deep learning,” in International Conference on Machine Learning. PMLR, 2021, pp. 5686–5696.

- [39] H. Xu, M. Chen, Z. Meng, Y. Xu, L. Wang, and C. Qiao, “Decentralized machine learning through experience-driven method in edge networks,” IEEE Journal on Selected Areas in Communications, vol. 40, no. 2, pp. 515–531, 2022.