MainReferences \newcitesSuppReferences

1]\orgdivResearch School of Finance, Actuarial Studies and Statistics, \orgnameThe Australian National University, \orgaddress\cityCanberra, \stateACT, \countryAustralia

2]\orgdivDepartment of Statistical Sciences and Operations Research, \orgnameVirginia Commonwealth University, \orgaddress\cityRichmond, \stateVA, \countryUSA

BOB: Bayesian Optimized Bootstrap for Uncertainty Quantification in Gaussian Mixture Models

Abstract

A natural way to quantify uncertainties in Gaussian mixture models (GMMs) is through Bayesian methods. That said, sampling from the joint posterior distribution of GMMs via standard Markov chain Monte Carlo (MCMC) imposes several computational challenges, which have prevented a broader full Bayesian implementation of these models. A growing body of literature has introduced the Weighted Likelihood Bootstrap and the Weighted Bayesian Bootstrap as alternatives to MCMC sampling. The core idea of these methods is to repeatedly compute maximum a posteriori (MAP) estimates on many randomly weighted posterior densities. These MAP estimates then can be treated as approximate posterior draws. Nonetheless, a central question remains unanswered: How to select the random weights under arbitrary sample sizes. We, therefore, introduce the Bayesian Optimized Bootstrap (BOB), a computational method to automatically select these random weights by minimizing, through Bayesian Optimization, a black-box and noisy version of the reverse Kullback–Leibler (KL) divergence between the Bayesian posterior and an approximate posterior obtained via random weighting. Our proposed method outperforms competing approaches in recovering the Bayesian posterior, it provides a better uncertainty quantification, and it retains key asymptotic properties from existing methods. BOB’s performance is demonstrated through extensive simulations, along with real-world data analyses.

keywords:

Bayesian Optimization, Finite Mixture Models, Multimodal Posterior Sampling, Weighted Bayesian Bootstrap1 Introduction

Gaussian Mixture Models (GMMs) are powerful and flexible tools, which turn up naturally when the population of sampling units consists of homogeneous clusters or subgroups [52], and can be applied in a wide range of scientific problems including model-based clustering [13], density estimation [20], or as flexible semi-parametric model-based approaches for analyzing complex data [39]. It is no surprise, then, that uncertainty quantification to infer properties or make predictions about a population of interest based on mixture models is now a crucial and important area of statistical research [53], and a natural way to quantify uncertainty in mixture models is through Bayesian methods by specifying a sampling model and a prior distribution [35].

Motivation. Despite the flexibility and wide applicability of GMMs, the large computational costs of Bayesian analysis have prevented a broader full Bayesian implementation of these models. For instance, one could sample from the posterior distribution of GMMs via standard Markov chain Monte Carlo (MCMC). However, since the log-likelihood function is non-concave, the resulting posterior might be multimodal. The multimodality of the posterior, combined with the serial nature of MCMC algorithms, results in a sampler that might get trapped in local regions of high posterior density, failing to explore the entire parameter space and leading to mixing limitations between posterior modes [8, 12]. Moreover, in the context of mixture models, MCMC algorithms scale poorly to large datasets [35] and their serial nature has prevented the adoption of recent developments in parallel computing [32, 40], exacerbating even further the computational limitations of these methods. Consequently, new and refined posterior samplers are still required.

Related Work. This research joins a growing body of literature, dating back to 1994 when Newton and Raftery proposed the Weighted Likelihood Bootstrap (WLB) as an alternative to MCMC. The main idea behind WLB is to generate approximate posterior draws by independently maximizing a series of randomly weighted log-likelihood functions. WLB, however, does not naturally incorporate any prior information in the sampling procedure. With this in mind, researchers have been recently extending the WLB framework to accommodate prior information by weighting not only the likelihood but also the prior. A few examples include the Weighted Bayesian Bootstrap, or WBB [32, 34] and the Bayesian Bootstrap spike-and-slab Lasso [36]. In the context of generalized Bayesian analysis and model misspecification, we can find the loss-likelihood Bootstrap [28], the Posterior Bootstrap [12, 40] and the Deep Bayesian Bootstrap [37].

Our Contribution. In this paper, we first illustrate how to carry out a Bayesian implementation of GMMs within a WBB framework by developing an algorithm to optimize a randomly weighted and non-concave posterior distribution associated with a GMM. Secondly, we address the problem of selecting adequate random weights in order to obtain better posterior approximations. Up until now, one key question has not been addressed: how to select the random weights under arbitrary—especially under fixed—sample sizes. Few analyses have been proposed from the lenses of asymptotic theory but, to the best of our knowledge, the core of this question remains unanswered. In fact, as discussed by [33] and [37], “no general recipe (to select the random weights) yet exists.” Thus, in this work we develop the Bayesian Optimized Bootstrap (BOB), a computational approach to automatically select the random weights by minimizing a black-box and noisy version of the reverse Kullback–Leibler (KL) divergence between the Bayesian posterior and an approximate posterior obtained via random weighting. Since this divergence is expensive to evaluate and we do not have access to a closed-form expression for the divergence or its derivatives, the minimization is carried out via Bayesian Optimization, or BO (and thus the name BOB), as BO is one of the most efficient methods for optimizing a black-box objective function, requiring only a few evaluations of the objective itself [22, 21]. We show that BOB leads to a better approximation of the Bayesian posterior, it allows for uncertainty quantification, and, unlike standard MCMC, it is able to adopt recent developments in parallel computing.

Article Outline. The rest of this article is organized as follows. Section 2 revisits WBB and describes how a Bayesian implementation of GMMs can be carried out within a WBB framework. In Section 3 we formally introduce BOB and draw connections with existing Bootstrap-based posterior samplers. Simulation exercises are carried out in Section 4. In section 5, we demonstrate the applicability of BOB on real-world data. We conclude with a discussion in Section 6.

2 Revisiting Weighted Bayesian Bootstrap

2.1 Problem Setup

For our sampling model, let be a random sample from a Gaussian Mixture with components. More precisely, let the density of , for , be

| (1) |

where is the weight of component so that , is a known integer, denotes the Gaussian density with mean vector and covariance matrix , and denotes the set of symmetric positive semidefinite matrices of size . Additionally, let us define the latent indicator variables so that , where denotes the multinomial distribution. Then, the joint distribution of and would be given by

| (2) |

For our prior specification, let , , and , which corresponds to a normal-inverse-Wishart prior for and with scale matrix , and a Dirichlet prior for the mixture proportions . Additionally, let be the collection of all model parameters so that, under the sampling distribution and priors introduced above, the joint Bayesian posterior would be, up to a proportionality constant, given by

| (3) |

2.2 Posterior Sampling via Random Weighting

We now illustrate how one can approximately sample from the joint posterior in (3) via WBB. Following [32], WBB can be summarized in three simple steps:

Step 1: Start by sampling non-negative random weights from some weight distribution and construct the re-weighted log-density of and as

| (4) |

Step 2: Sample from some weight distribution and construct the re-weighted log-prior density as

| (5) |

Step 3: By marginalizing out of equation (4), one would have that and the re-weighted log-posterior of would be, up to an additive constant, given by . WBB proceeds to maximize this posterior density seeking

| (6) |

Under a traditional Bayesian framework, the randomness in arises from treating it as a random variable with a prior distribution. On the other hand, as discussed in [37], can be seen as an estimator, where, given the data, the only source of randomness comes from the random weights. Thus, by fixing the data and repeating steps 1 - 3 many times, one would obtain approximate posterior draws . This idea is attractive as optimizing can be easier and less computationally intensive than sampling from an intractable posterior. Note, additionally, that the random weights are independent across iterations. Consequently, and , for , are also independent and could be sampled in parallel. Moreover, Bootstrap-based posterior samplers do not require costly tuning runs, burn-in periods, or convergence diagnostics [12, 40], making random weighting an attractive alternative to MCMC sampling.

2.3 Randomly Weighted Expectation-Maximization

2.3.1 Expectation Step

We start by computing the expected value of , conditional on , and , where is the current value of within the EM algorithm. By Bayes’ rule, we have that

| (7) |

2.3.2 Maximization Step

In the maximization step, we then maximize the following surrogate objective function with respect to .

| (8) |

where . As we will illustrate shortly, the objective in (8) leads to well-known maximization problems, which can be solved relatively cheaply.

Proposition 1.

Let us define , , , , and , for all . Additionally, let , , , and . Then, the update of and would be given by

| (9) |

where

Details on the derivation of are presented in the supplementary materials. To optimize , we make use of a two-stage procedure in which we first update and then update .

Update : Start by noting that is a monotone transformation of [46, Ch. 9]. Thus, optimizing (9) with respect to yields

| (10) |

One can identify the solution to (10) as the mode of an distribution. This is,

| (11) |

Update : Again, note that is a monotone transformation of , so optimizing (9) with respect to reduces to

| (12) |

which corresponds to the mode of a distribution, where denotes the t-distribution with degrees of freedom, location vector and scale matrix [4, Ch. 2]. More precisely, the update of is given by .

Update : Lastly, note from (8) that the update of corresponds to the mode of a distribution, where . Namely, for ,

| (13) |

Our EM algorithm, then, iterates between the E and the M steps until convergence.

2.3.3 Dealing with Suboptimal Modes: Tempered EM

Despite the simplicity of our EM algorithm, a well-known drawback of non-convex optimization methods is that they might converge to a local optima (i.e., a suboptimal mode). Random restarts (RRs) have been widely used to increase the parameter space exploration and escape suboptimal modes. However, this approach is too computationally intensive and yet, does not guarantee that one would reach the global mode.

Tempering and annealing [24, 45], on the other hand, are optimization techniques which also increase the parameter space exploration at a lower computational cost. More precisely, let be a tempering profile, i.e., a sequence of positive numbers such that , where is the -th iteration of the EM algorithm. Then, a tempered EM algorithm modifies the E step in equation (7) as , where larger values of allow the algorithm to explore more of the target posterior by flattening the distribution of , and as one would recover the original objective function, progressively attracting the solution towards the global mode [2, 26]. Thus, we let

| (14) |

with , , and , which corresponds to the oscillatory tempering profile with gradually decreasing amplitudes from [2]. To select a suitable combination of and , we use a grid-search over a range of possible values, choose the combination that yields the largest objective using an unweighted EM algorithm, and fix such a solution throughout the entire sampler. As discussed in [26], the tempering hyper-parameters can remain fixed across different optimization problems with similar characteristics, as in the case of randomly weighted EM.

3 Introducing BOB

So far, we have developed and presented a randomly weighted EM algorithm to approximately sample from the posterior distribution of GMMs within a WBB framework, but we have not yet answered a key and important question: How to select the random weights. [33] showed that under low-dimensional settings and assuming the square of Jeffreys prior, uniform Dirichlet weights for the likelihood yield approximate posterior draws that are first order correct, i.e., consistent (they tend to concentrate around a small neighbourhood of the maximum likelihood estimator—MLE) and asymptotically normal. More recently, [34] established first order correctness and model selection consistency for a wide range of weight distributions in linear models with Lasso priors, and [36] showed that, for a number of weight distributions, the approximate posterior from Bayesian Bootstrap spike-and-slab Lasso concentrates at the same rate as the actual Bayesian posterior. Note, however, that these results are all based on the assumption that . Considering the case of a fixed is important because, under a small to medium sample size, the prior, , would have more influence on the posterior, and as the effect of gets bigger, the relationship between and the random weights becomes less clear [37], making the choice of adequate weights a much more important and difficult task. Thus, we propose BOB as an alternative methodology for automatically selecting the random weights under arbitrary sample sizes.

Before illustrating our proposed method, though, let us present a brief summary of Variational Bayes (VB) methods, as we draw inspiration from them. VB aims to obtain an approximation, , with variational parameters such that , i.e., VB aims to minimize the reverse KL divergence between the Bayesian posterior and a variational approximation [5].

With this in mind, we propose the following weighting scheme: Draw the likelihood weights as , with and , where denotes the Dirac measure so that , , and denotes the exponential distribution with mean 1. If , the distribution for the likelihood weights would be the uniform Dirichlet, while would yield an overdispersed distribution [33, 16]. For the weights associated with the prior, we set, for , , , and , where . Hence, our problem is reduced to finding appropriate values for , where is a compact search space. Inspired by VB, we propose to select the optimal as

| (15) |

with

where the latter line is known as the negative evidence lower bound (ELBO) [5]. In other words, we propose to select the optimal so that we minimize the reverse KL divergence between the Bayesian posterior (up to a proportionality constant) and the approximate posterior induced by random weighting, denoted as . As discussed in section 2, given the data , the only source of variation in comes from the random weights, which, in our weighting scheme, depend on .

Ideally, we would like to compute as in (15), but we cannot optimize directly because: (a) we do not know the form of the joint density , and (b) the expectation is analytically intractable. By Sklar’s theorem [47], the approximate joint posterior density has the form

where is the copula density of (i.e., represents the dependence structure in the approximate posterior), denotes the marginal density of the -th entry of with cumulative distribution function , and . Given posterior draws , one could think about estimating such a copula density (see e.g. [41] for a review on copula density estimation in the bivariate case); however, obtaining accurate copula density estimates in high dimensional settings is remarkably difficult. Note that the number of unique parameters in our GMM is given by , which grows quadratically with . For instance, letting and would feature 543 unique parameters, leading to inaccurate and unstable copula density estimates. Thus, let us assume that the approximate posterior parameters are mutually independent. More precisely, let , for all , so that

That being so, to overcome (a), we propose to obtain a batch of approximate posterior draws, denoted by , and compute univariate kernel density estimates (KDEs) of each marginal density , denoted by , which can be evaluated efficiently as in [18, 19]. To overcome (b), we propose to use the sample mean as an unbiased estimator of the expected value. More formally, for any , we obtain a batch of approximate posterior draws and estimate with

| (16) |

Hence, we can think about as a noisy and black-box approximation of in the sense that, given an input , we can evaluate the objective, but we do not have access to a closed-form expression for neither the objective nor its derivatives. One could use grid-search methods to minimize ; however, evaluating is tremendously expensive because, for each , we need to sample a batch of posterior draws, compute univariate KDEs of the approximate marginals, and compare those to the Bayesian posterior. As a consequence, exhaustive grid-search approaches would be infeasible to implement. To overcome this, we use Bayesian Optimization (BO) to minimize , as it is one of the most efficient approaches to optimize a noisy black-box objective with little evaluations [22, 21].

Comments on the independence assumption between approximate posterior parameters: To construct , we assume that the approximate posterior parameters are independent, as this is a widely implemented technique in the Bayesian literature. For instance, mean-field variational Bayes (MFVB) assumes that the variational distribution factorizes as . In such cases, the MFVB objective function is given by

which closely reassembles our noisy black-box objective in equation (16). In a GMM context, however, one could argue that the independence assumption between model parameters might be unreasonable, as the latent indicator variables depend on each other (i.e., if an observation belongs to a cluster, it automatically cannot belongs to any other cluster), as well as on the mean vectors and covariance matrices. In such cases, the MFVB distribution, , might not contain the true posterior, as it is not able to capture such a complicated dependence structure, negatively affecting the entire analysis. Fortunately, this is not our case. Unlike MFVB, our goal is not to obtain a variational distribution to approximate the true posterior, but to obtain adequate random weights within a WBB framework. Thus, the negative effects would be less severe if the joint density used in equation (16) does not contain the true posterior, as we do not use to construct an entire variational distribution to approximate . We obtain our approximate posterior draws through a randomly weighted EM algorithm, where the latent variables are integrated out in the E-step, incorporating those dependencies in the posterior draws. Moreover, recall from the M-step that the updates of , , and do not depend on each other, giving room to assume that .

3.1 Minimizing via Bayesian Optimization

We now illustrate our BO approach to minimize . First up, though, note that maximizing is equivalent to minimizing . Thus, we consider the problem

Namely, given the black-box function , we would like to find its global maximum using repeated evaluations of . However, since each evaluation is expensive, our goal is to maximize using as few evaluations as possible.

By combining the current evidence, i.e., the evaluations , with some prior information over , i.e., , BO efficiently determines future evaluations. More precisely, BO uses the posterior to construct an acquisition function and choose its maximum, , as our next point for evaluation [23].

For our prior on , we assume a Gaussian Process (). This is,

where and are a mean and a kernel (covariance) function, respectively, so that , for all . Given the evidence , with , , and , the resulting posterior would also be a with mean and covariance given by

| (17) | |||

| (18) |

where , , , , , , and [42].

As suggested by [48], we use a Matèrn 2.5 kernel, given by

where , is a covariance amplitude, and , for are length scales. For our acquisition function, we use the Expected Improvement (EI) over the best current value [22], as it has been shown to be efficient in the number of evaluations required to find the global maximum of a wide range of black-box functions [6, 48]. More precisely, given the best current value—denoted by , we set

That being said, note that the BO procedure is carried out just once. Then, we use the learned random weights throughout the entire sampling process, as described in section 2. Additionally, obtaining the batch of approximate posterior draws, computing the univariate KDEs, and evaluating the Bayesian posterior, can all be trivially implemented in parallel, reducing the cost of evaluating and minimizing . Thus BOB, as WLB and WBB, also embraces recent developments in parallel computing. BOB, however, let us select the optimal , and hence, adequate random weights. We summarize our proposed method in algorithm 1.

Input:

Data:

Total number of posterior draws:

Prior hyper-parameters:

Batch size:

Compact search space:

Tempering hyper-parameters:

Output:

Posterior draws:

To construct our Bayesian optimizer, we make use of the well-established “DiceOptim” R package [44, 27]. Our implementation of BOB, as well as of WBB, are all available in the “BOBgmms” R package, which can be found in the supplementary materials or online at github.com/marinsantiago/BOBgmms. Source code to reproduce the results from this article can also be found in the supplementary materials or online at github.com/marinsantiago/BOBgmms-examples.

3.2 Asymptotic Properties

Throughout this subsection, we want to show that BOB retains key asymptotic properties from WLB and WBB. To that end, let be the MLE for and let be a draw from BOB. It is assumed that the ordering of and is the same. Let also, for , , , , and , such that, as , , , , and . Then, note that BOB can be seen as a generalization of WLB and WBB. In fact, by setting or , one would recover WLB or WBB (with fixed prior weights [34]), respectively. Thus, if are random samples from a sufficiently regular model , as described in section 2.1, and if the BOB solution converges to the WLB or WBB solutions, as , then would retain key asymptotic properties such as consistency and asymptotic normality. More precisely, following theorems 1 and 2 from [33], along with the results from [32], we have that if or , as , then:

-

1.

For any scalar , as ,

for almost every infinite sequence of data .

-

2.

For all measurable , as ,

for almost every infinite sequence of data . In this case, , is the Fisher information, and denotes the Borel field on .

Remark 1.

The probabilities, , from above, refer to the distribution of induced by random weights given the sequence of data .

Remark 2.

The Bernstein–von Mises theorem states that, under regularity conditions, the actual Bayesian posterior converges to a normal distribution. More formally, as , . Comparing this result with our previous results, one have that it is possible to approximate posterior credible sets with BOB as , for all , and the approximation would get better with a growing .

On the whole, we have that BOB provides an automatic and much more informed approach to select the random weights, while retaining the asymptotic first order correctness from existing Bootstrap-based posterior samplers.

4 Simulations

We now evaluate the performance of different approximate posterior samplers trough various simulation experiments.

4.1 Simulations Setup

To generate the simulated data, we start by sampling , for , from , where . For the dimension , we are going to consider low , medium , and high dimensional problems. To generate each , we set, for ,

where only 60% of the entries of are important parameters in separating the clusters, while the remaining entries are set to zero. Lastly, we set each to be an identity matrix of size , i.e., . In total, we consider nine different simulations settings, which are summarized in table 1. In all cases, we standardize the data so that each feature has mean 0 and variance 1.

| Setting | |||||||||

|---|---|---|---|---|---|---|---|---|---|

| Simulation parameters | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |

| 50 | 50 | 50 | 100 | 100 | 100 | 150 | 150 | 150 | |

| 5 | 10 | 15 | 5 | 10 | 15 | 5 | 10 | 15 | |

| 2 | 2 | 2 | 3 | 3 | 3 | 4 | 4 | 4 | |

| \botrule | |||||||||

The prior hyper-parameters are set as , , , , and , for . To select the regularization parameters, and , we use a likelihood-based cross-validation approach, as in [14]. The idea is to consider a grid of values for and , run an unweighted EM algorithm using each pair of and on a training subset of the data, and choose the pair of and that maximizes the log-likelihood over the validation set. We then use the same values of and across all the posterior samplers. That being said, the goal of these experiments is not to choose the “best” regularization parameters. Instead, as pointed out by [37], the goal is to compare different posterior approximations for given values of and .

For our approximate posterior samplers we consider BOB as well as two versions of WBB, namely WBB1 (with random prior weights) and WBB2 (with fixed prior weights). To implement WBB1 we set and , and to implement WBB2 we set and . We also consider a mean-field Automatic Differentiation Variational Inference (ADVI) algorithm [25] and for our MCMC algorithm we use a No-U-Turn sampler, or NUTS [17]. Both NUTS and ADVI are executed in Stan [7] as it is a highly optimized and off-the-shelf probabilistic programming language, widely used by many practitioners.

We set the lower and upper bounds of the BO search space, , to be and , respectively, except in settings 4 and 7, where we set as we have more available data. Note that in any case, the search space contains WBB and WLB as potential solutions. To set the initial parameter values, we consider a pool of candidate values and choose the initialization that yields the largest posterior density. Further details on our initialization strategy can be found in the supplementary materials. It is worth noting that we initialize all the algorithms (i.e., BOB, WBB, NUTS, and ADVI) at the same point. Thus, if we see any difference in performance, it would be due to the sampling algorithm and not due to the initialization, as they all share the same initial values.

We obtain approximate posterior draws using BOB, WBB, and ADVI, while with NUTS, we run the algorithm for iterations and discard the first half as burn-in. In the case of BOB, we use batches of size to construct . We run all our simulations and data analyses on an Apple Silicon-based machine with 48 GB of memory and 16 CPU cores.

4.2 Comparison Metrics

To assess the accuracy of posterior approximations, the Kolmogorov–Smirnov (KS) and the Total Variation (TV) distances between the Bayesian posterior and its approximation have been traditionally used. To ease computations, this assessment has been usually based on comparing the Bayesian marginal posteriors with the approximate marginal posteriors [51, 34]. However, in the context of mixture models, these comparisons are no longer viable because of the so-called label switching problem [11]. One could use relabeling algorithms, as in [50], but the problem would remain as the resulting ordering of the approximate marginals might be different to the ordering of the Bayesian marginals, even after employing a relabeling algorithm, making comparisons between the marginals virtually meaningless.

We, therefore, base our comparisons on the posterior predictive distribution, as the posterior predictive is invariant to any permutation of (i.e., it circumvents the label switching problem). More formally, let be the Bayesian posterior and let be its approximation. Then, the Bayesian posterior predictive distribution would be given by , while the approximate posterior predictive would be . Note, additionally, that the posterior predictive distribution reflects two kinds of uncertainty: (a) Sampling uncertainty about conditional on and (b) parametric uncertainty about [29]. Following [15], under a correctly specified model and a proper prior, the Bayes risk

is minimized when , where denotes the KL divergence between the true data generating mechanism and the approximate posterior predictive distribution , making the Bayesian posterior predictive distribution a natural benchmark when one is interested in quantifying uncertainty.

Thus, we consider the TV and KS distances between and as comparison metrics, where smaller distances would suggest a better approximation to the Bayesian posterior and a better uncertainty quantification. To ease computations, we approximate the TV and KS distances between and as

| (19) | |||

| (20) |

where denotes the empirical CDF of the actual Bayesian posterior predictive distribution of (i.e., the -th element of ) and denotes the empirical CDF of the posterior predictive of obtained by one of the approximations (i.e., BOB, WBB, NUTS, or ADVI). Details on how one can sample from the posterior predictive distributions and the actual Bayesian posterior (when the true latent variables, , are known), can be found in the supplementary materials.

4.3 Simulation Results

Figure 1 and Table 2 present the simulation results. Figure 1 displays boxplots of the and distances between the Bayesian posterior predictive distribution and its approximations obtain via BOB, WBB1, and WBB2 based on ten independent runs per setting. Table 2 shows the median (and the interquartile range) of the and distances, as well as of the elapsed (wall-clock) times, for all considered methods, based on the same ten independent runs. Figure 1 clearly shows that, across the nine settings, BOB constantly outperforms both versions of WBB in recovering the Bayesian posterior predictive distribution. Interestingly, we can also observe that the performance of both versions of WBB is very similar, with no major differences between the two methods.

| Setting | ||||||||||

| Metric | Method | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |

| BOB | 0.033 | 0.049 | 0.061 | 0.026 | 0.036 | 0.045 | 0.023 | 0.032 | 0.039 | |

| (0.003) | (0.001) | (0.007) | (0.002) | (0.000) | (0.001) | (0.001) | (0.001) | (0.001) | ||

| WBB1 | 0.047 | 0.068 | 0.084 | 0.034 | 0.050 | 0.062 | 0.030 | 0.043 | 0.054 | |

| (0.001) | (0.001) | (0.002) | (0.002) | (0.000) | (0.002) | (0.001) | (0.001) | (0.001) | ||

| WBB2 | 0.047 | 0.070 | 0.087 | 0.033 | 0.050 | 0.063 | 0.028 | 0.043 | 0.055 | |

| (0.002) | (0.001) | (0.002) | (0.002) | (0.000) | (0.002) | (0.000) | (0.001) | (0.001) | ||

| NUTS | 0.006 | 0.006 | 0.056 | 0.039 | 0.070 | 0.057 | 0.072 | 0.058 | – | |

| (0.004) | (0.049) | (0.027) | (0.061) | (0.012) | (0.027) | (0.010) | (0.018) | – | ||

| ADVI | 0.083 | 0.107 | 0.284 | 0.074 | 0.094 | 0.159 | 0.071 | 0.095 | 0.113 | |

| (0.018) | (0.040) | (0.077) | (0.016) | (0.058) | (0.032) | (0.015) | (0.042) | (0.076) | ||

| BOB | 0.028 | 0.040 | 0.051 | 0.023 | 0.031 | 0.037 | 0.021 | 0.027 | 0.033 | |

| (0.002) | (0.001) | (0.005) | (0.004) | (0.001) | (0.001) | (0.001) | (0.002) | (0.004) | ||

| WBB1 | 0.039 | 0.055 | 0.067 | 0.028 | 0.041 | 0.051 | 0.026 | 0.035 | 0.043 | |

| (0.003) | (0.001) | (0.001) | (0.002) | (0.001) | (0.001) | (0.001) | (0.003) | (0.001) | ||

| WBB2 | 0.036 | 0.055 | 0.070 | 0.027 | 0.039 | 0.050 | 0.023 | 0.033 | 0.044 | |

| (0.003) | (0.001) | (0.001) | (0.002) | (0.002) | (0.001) | (0.001) | (0.001) | (0.001) | ||

| NUTS | 0.008 | 0.009 | 0.058 | 0.048 | 0.063 | 0.065 | 0.049 | 0.047 | – | |

| (0.004) | (0.050) | (0.031) | (0.043) | (0.008) | (0.017) | (0.002) | (0.008) | – | ||

| ADVI | 0.078 | 0.081 | 0.151 | 0.062 | 0.075 | 0.102 | 0.052 | 0.066 | 0.081 | |

| (0.013) | (0.010) | (0.034) | (0.012) | (0.015) | (0.019) | (0.002) | (0.019) | (0.040) | ||

| Elapsed | BOB | 1.891 | 2.271 | 2.731 | 2.525 | 2.988 | 3.553 | 3.281 | 3.829 | 4.692 |

| (0.033) | (0.086) | (0.040) | (0.140) | (0.035) | (0.050) | (0.075) | (0.053) | (0.197) | ||

| WBB1 | 0.002 | 0.003 | 0.004 | 0.003 | 0.005 | 0.008 | 0.004 | 0.007 | 0.011 | |

| (0.000) | (0.001) | (0.001) | (0.000) | (0.002) | (0.001) | (0.001) | (0.001) | (0.001) | ||

| WBB2 | 0.002 | 0.003 | 0.004 | 0.003 | 0.004 | 0.008 | 0.004 | 0.007 | 0.011 | |

| (0.000) | (0.001) | (0.001) | (0.000) | (0.000) | (0.000) | (0.000) | (0.001) | (0.001) | ||

| NUTS | 0.681 | 4.400 | 22.027 | 8.591 | 36.547 | 80.995 | 22.318 | 85.547 | – | |

| (0.073) | (6.519) | (8.028) | (5.367) | (3.141) | (2.646) | (5.641) | (21.072) | – | ||

| ADVI | 0.033 | 0.081 | 0.157 | 0.078 | 0.180 | 0.369 | 0.131 | 0.323 | 0.686 | |

| (0.004) | (0.008) | (0.010) | (0.004) | (0.031) | (0.020) | (0.008) | (0.068) | (0.052) | ||

| \botrule | ||||||||||

In Table 2, we can observe that both versions of WBB have the lowest running times. We can also observe that in simpler settings, the running times and accuracy of NUTS are comparable to those of BOB. However, as the simulation settings get more complicated, the median running times of BOB become significantly lower than those of NUTS. For instance, in setting 5, BOB takes a median running time of 2.99 minutes, while NUTS takes 36.55 minutes. This issue is more clear in settings 6 and 8, where BOB’s median running times are 3.55 and 3.83 minutes, respectively, while NUTS takes median running times of 81 and 85.55 minutes, respectively. In other words, BOB can be 22.8 times faster than NUTS. Nonetheless, it is worth mentioning that in setting 9, NUTS was not able to run within a computing budget of 8 hours (480 minutes), while BOB took a median running time of 4.69 minutes, illustrating the poor scalability of MCMC algorithms to large problems.

More interestingly, though, we can observe that in more complicated settings, BOB is not only faster than NUTS, but also more accurate. For instance, in setting 7, the median distance obtained via NUTS is 3 times larger than the one of BOB, illustrating that BOB is, indeed, a reliable posterior sampler. It is also clear that, across all settings, ADVI produces the least accurate approximations of the Bayesian posterior predictive distribution. On the whole, even if WBB has the lowest running times, BOB constantly produces reliable approximations of the Bayesian posterior predictive distribution in a timely fashion, nicely balancing the trade-off between computational cost and accuracy.

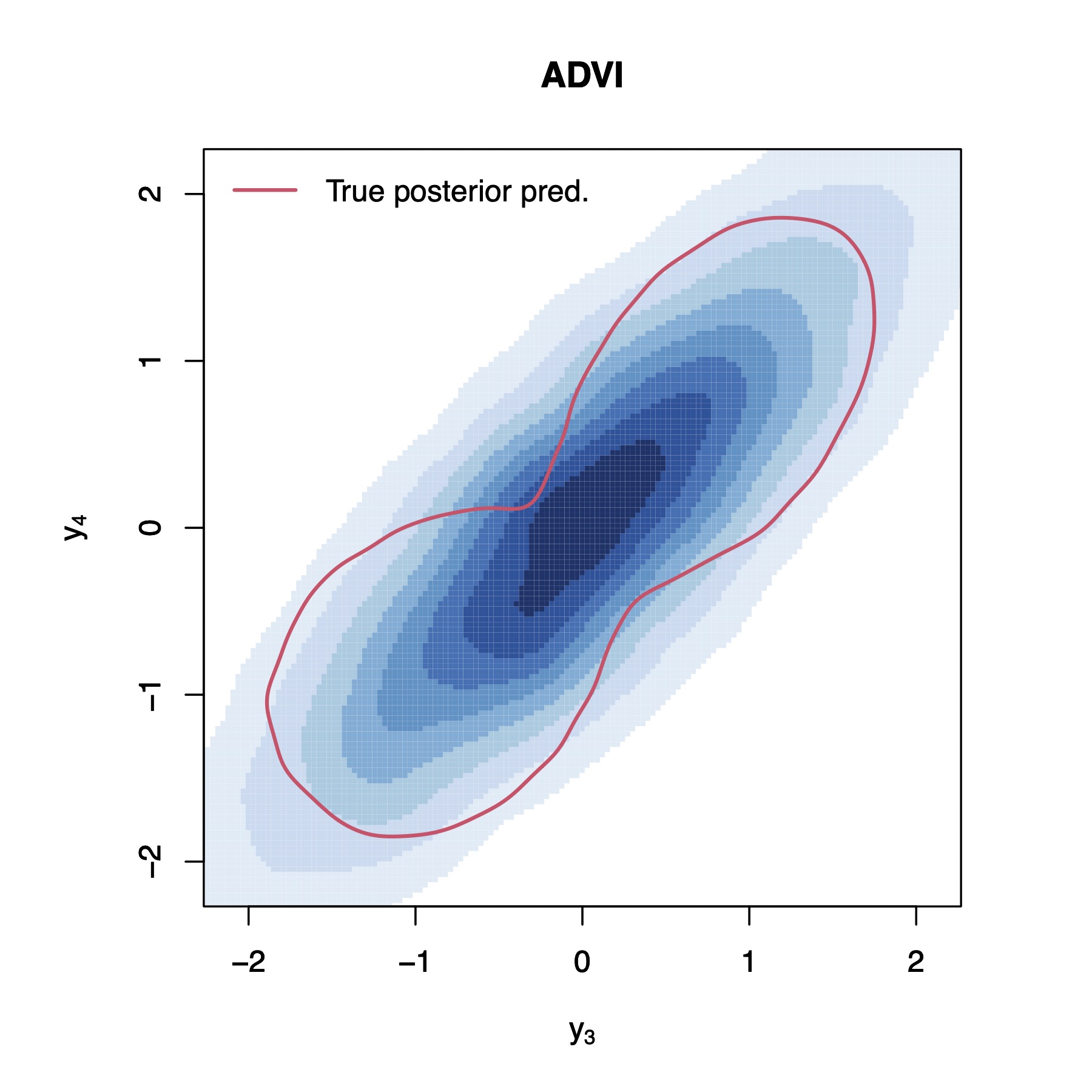

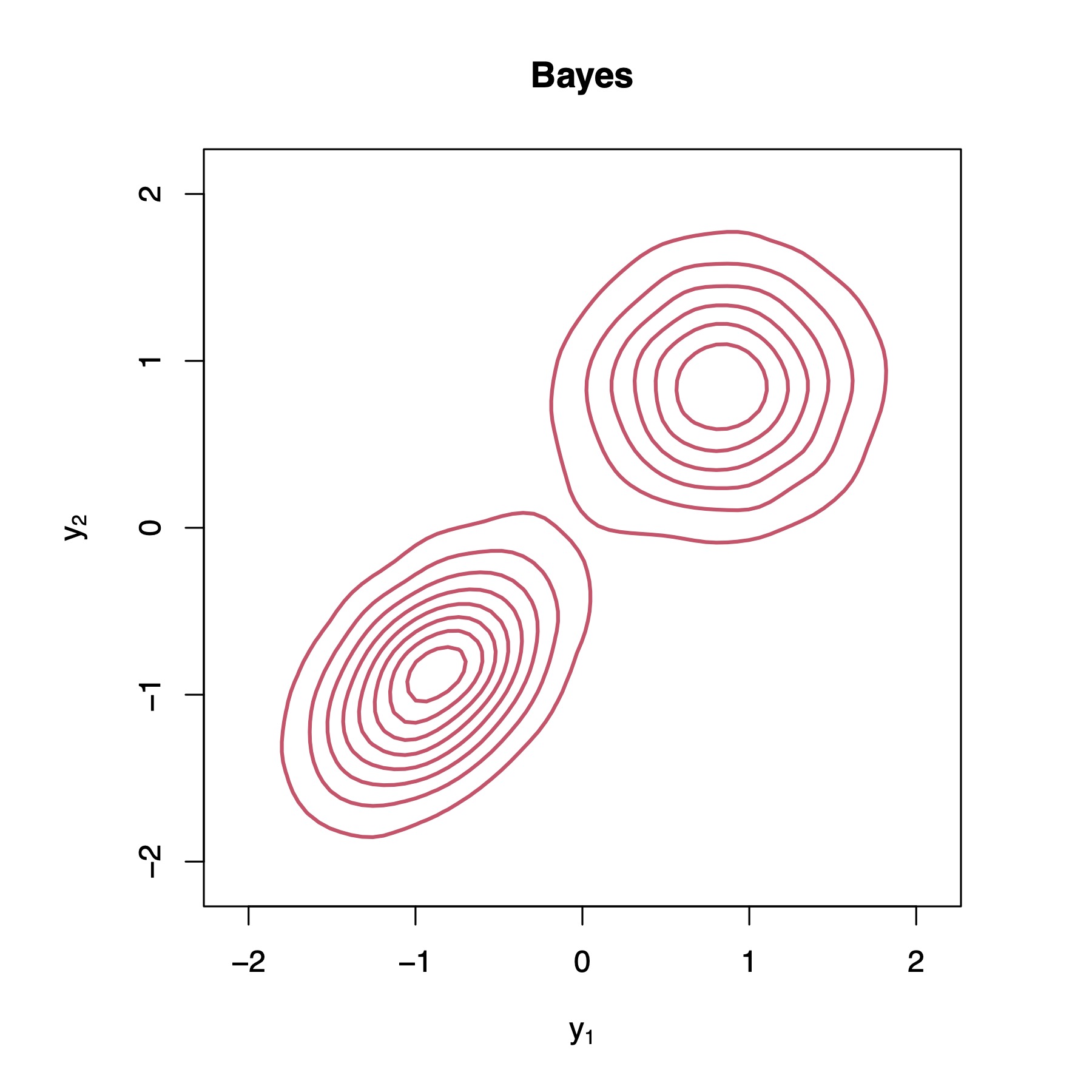

To provide a clearer demonstration of what is happening, let us bring up an illustrative example with , , and . Figure 2 present KDEs of the true Bayesian posterior predictive distribution, as well as of the different approximations (details on how one can sample from the Bayesian posterior predictive distribution can be found in the supplementary materials). We can observe that the Bayesian posterior predictive density (i.e., the red contour plots) clearly indicates the existence of two clusters. We can also observe that WBB1 and WBB2 correctly capture the locations of such clusters, but do not correctly capture the dispersion of the Bayesian posterior. In fact, both versions of WBB produce overconfident posterior predictive distributions, which is not optimal for uncertainty quantification. Moreover, NUTS and ADVI fail to identify the two clusters in the data. Instead, they fit the data as one big cluster, which is not desirable either. These results are not surprising as the reparameterization used by ADVI forces the posterior to be unimodal [31]. As a matter of fact, similar results for NUTS and ADVI have also been reported in [12], where the authors show that the samplers produce unimodal posteriors. Overall, it is clear that BOB produces the closest approximation to the Bayesian posterior predictive distribution and the best uncertainty quantification. Additional posterior predictive density plots are provided in the supplementary materials, where we can observe similar results.

Table 3 presents the and distances between the true Bayesian posterior predictive distribution and its approximations, as well as the elapsed (wall-clock) times, for our illustrative example with , , and . We can observe that BOB not only yields the smallest distances, but it is also 2.4 times faster than NUTS.

| Method | |||||

|---|---|---|---|---|---|

| Metric | BOB | WBB1 | WBB2 | NUTS | ADVI |

| 0.047 | 0.070 | 0.071 | 0.054 | 0.099 | |

| 0.040 | 0.055 | 0.055 | 0.055 | 0.059 | |

| Elapsed (min) | 2.136 | 0.005 | 0.005 | 5.144 | 0.083 |

| \botrule | |||||

Lastly, we would like to investigate how the sample size affects both BOB and WBB. As discussed in section 3.2, both BOB and WBB are first order correct for the Bayesian posterior. In other words, their approximations should get better with a growing sample size. Thus, we consider an additional experiment where we fix and , and consider various sample sizes spanning from to . Figure 3 displays the and distances between the Bayesian posterior predictive distribution and its approximations obtained via BOB, WBB1, and WBB2, as a function of . We can observe that the and distances decrease as increases, which is expected. However, we can see that BOB constantly outperforms WBB, for all values of . Asymptotically, though, we can observe that WBB and BOB tend to converge to the same limiting distribution, which is desirable as WBB possesses appealing asymptotic properties. Altogether, we have that BOB is a reliable method for approximate posterior sampling, which can be applied in a wide range of problems with different sample sizes.

5 Analysis of Real-world Data

To further demonstrate the performance and practical utility of our proposed methods, we apply them to the widely analyzed Wine [1] and Seeds [9] datasets. Both datasets are publicly available from the UC Irvine Machine Learning Repository.

5.1 Wine Data

The data consists of chemical properties for Italian wines, belonging to different types, namely Barbera, Barolo, and Grignolino. From the Barbera type we have 48 specimens, from Barolo we have 59 specimens, and from Grignolino we have 71 specimens. A detailed description of all the chemical properties from each wine is presented in the supplementary materials. The idea is to cluster types of wine based on these chemical features. To assess the validity of our model specification and model fitting, we randomly split our data into training and held-out sets, with observations in the training set. In all our analyses, we standardize the data so that each feature has mean 0 and variance 1. To set the prior hyperparameters, we follow the same approach as in section 4.1. We obtain approximate posterior draws using BOB, WBB1, WBB2, and ADVI. In the case of NUTS, we run the algorithm for 40000 iterations and discard the first half as burn-in. For BOB, we use batches of size to construct . As before, we set the lower and upper bounds of the BO search space to be and , respectively.

5.2 Seeds Data

Our second dataset is made up of observed measurements of geometrical properties for kernels belonging to different varieties of wheat, namely, Kama, Rosa and Canadian. There are 70 kernels of each variety and the idea is to cluster varieties of wheat based on these observed measurements. Further details on these observed measurements are presented in the supplementary materials. Again, we randomly split our data into training and held-out sets, with observations in the training set. All other configurations are set as in subsection 5.1.

5.3 Results

Figures 4 and 5 present posterior predictive density plots for selected variables from the wine and seeds datasets, respectively. As before, the red contours represent the true Bayesian posterior predictive density, while the blue KDEs represent the different approximations. These contours and KDEs were obtained using only the training data. On the other hand, the scatter plots represent the held-out data, which can help us evaluate how well the different methods generalize to unseen data and how well the different approximations recover the true underlying data generating mechanism. Different symbols and colors in the scatter plots represent the actual labels of the held-out data. For instance, in Figure 4, orange squares, pink triangles and dark circles represent Barolo, Grignolino, and Barbera wines, respectively. In Figure 5, orange squares, pink triangles and dark circles represent Kama, Rosa, and Canadian wheat kernels, respectively.

In Figures 4 and 5, the Bayesian posterior predictive density clearly indicates the existence of three clusters, which align with the held-out data, suggesting that the model assumptions are reasonable. We can also observe that both versions of WBB correctly capture the location of these clusters. However, both versions of WBB produce overconfident posterior predictive distributions and do not capture the dispersion of the Bayesian posterior. NUTS and ADVI are unable to identify the three clusters in the data. Having said that, it is clear that in the wine and seeds datasets, BOB produces the closest approximation to the Bayesian posterior predictive distribution and the best uncertainty quantification. Note that these results are consistent with the results from section 4. Additional posterior density plots are provided in the supplementary materials, where we can observe similar patterns across different variables from the wine and seeds datasets.

Moreover, Table 4 presents elapsed (wall-clock) times in minutes, as well as the and distances between the Bayesian posterior predictive distribution and its approximations obtained via BOB, WBB1, WBB2, NUTS, and ADVI, for the wine and seeds datasets. We can observe that BOB returns the smallest and distances in both datasets. Additionally, note that in the wine dataset, NUTS takes 69.4 minutes to run. BOB, on the other hand, takes only 3.16 minutes. This is a 22-fold reduction in the running time, suggesting that BOB not only outperforms competing approaches in recovering the Bayesian posterior predictive distribution, but it can also be substantially faster than MCMC samplers. On the whole, this illustrates BOB’s practical utility in real-world problems.

| Method | ||||||

| Dataset | Metric | BOB | WBB1 | WBB2 | NUTS | ADVI |

| Wine | 0.039 | 0.056 | 0.058 | 0.065 | 0.117 | |

| 0.032 | 0.048 | 0.049 | 0.047 | 0.071 | ||

| Elapsed (min) | 3.158 | 0.010 | 0.007 | 69.399 | 0.256 | |

| Seeds | 0.019 | 0.025 | 0.023 | 0.072 | 0.071 | |

| 0.020 | 0.027 | 0.023 | 0.079 | 0.079 | ||

| Elapsed (min) | 2.869 | 0.005 | 0.005 | 13.657 | 0.131 | |

| \botrule | ||||||

6 Discussion

In this article, we have investigated alternatives to MCMC algorithms in order to sample from the posterior distribution of GMMs. More precisely, we developed a randomly weighted EM algorithm and introduced BOB, a novel computational methodology for approximate posterior sampling. We build on WLB and WBB ideas, which are based on the premise that optimizing randomly weighted posterior densities can be faster and less computationally intensive than sampling from an intractable posterior. BOB, however, tackles the problem of automatically selecting the random weights under arbitrary sample sizes, which has not been addressed before. This is done by minimizing, through Bayesian Optimization, a black-box and noisy version of the reverse KL divergence between the Bayesian posterior and an approximate posterior induced by random weighting. We have demonstrated that BOB constantly outperforms competing approaches in recovering the Bayesian posterior, it provides a better uncertainty quantification, and it retains key asymptotic properties from existing methods. Moreover, we showed that BOB is not only more accurate than all the other existing methods, but it can also be substantially faster than MCMC algorithms, making BOB a competitive approximate posterior sampler.

Limitations: As with many other methodological developments, BOB presents both strengths and limitations, which can make it either highly suitable, or impractical, depending on the specific context of its application. That said, we can identify two main limitations: (1) Somehow ironic, the first limitation involves the use of Bayesian Optimization. Despite being one of the most efficient approaches to optimize a black-box objective, BO does not scale well to high-dimensional search spaces [30]. Recall that our search space, , is -dimensional, and thus, we should expect that BOB’s applicability and performance would be significantly better under small-medium values of (i.e., the number of components in the mixture). Fortunately, high-dimensional black-box optimization is an active and vibrant area of research within the scientific community. Incorporating such developments and comparing different black-box optimizers could be an avenue for future research. (2) The second limitation is with respect to the sensitivity of our algorithms to the initial parameter values. Despite the incorporation of a tempering profile, both WBB and BOB are still sensitive to the initial parameter values. Poorly chosen initial values might lead the algorithm to saddle at a sub-optimal solution, and no amount of tempering would help us escape such a local optima. Fortunately, recent developments in clustering methods can help us obtain adequate starting points. Lastly, throughout this article, we have assumed that the number of clusters, , is fixed and known. The problem of selecting within a Bayesian paradigm has been widely discussed in the literature (see e.g. [43], [49], [38], or [3]), and it is outside the scope of this article.

On the whole, BOB joins a growing body of literature, which aims to draw practitioner’s attention toward posterior samplers beyond traditional MCMC algorithms [37, 32]. Thus, extending BOB (and BOB-like methodologies) from GMMs with conjugate priors to arbitrary posterior distributions presents an exciting research opportunity, which we intend to pursue in the future.

Acknowledgements

We would like to thank Michael Martin for many constructive comments and helpful suggestions. Any remaining errors are, of course, our own.

References

- \bibcommenthead

- Aeberhard et al. [1994] Aeberhard, S., D. Coomans, and O. de Vel. 1994. Comparative analysis of statistical pattern recognition methods in high dimensional settings. Pattern Recognition 27(8): 1065–1077 .

- Allassonnière and Chevallier [2021] Allassonnière, S. and J. Chevallier. 2021. A new class of stochastic em algorithms. escaping local maxima and handling intractable sampling. Computational Statistics & Data Analysis 159: 107159 .

- Baudry et al. [2010] Baudry, J.P., A.E. Raftery, G. Celeux, K. Lo, and R. Gottardo. 2010. Combining mixture components for clustering. Journal of Computational and Graphical Statistics 19(2): 332–353 .

- Bishop [2006] Bishop, C.M. 2006. Pattern recognition and machine learning. Springer New York, NY.

- Blei et al. [2017] Blei, D.M., A. Kucukelbir, and J.D. McAuliffe. 2017. Variational inference: A review for statisticians. Journal of the American Statistical Association 112(518): 859–877 .

- Bull [2011] Bull, A.D. 2011. Convergence rates of efficient global optimization algorithms. Journal of Machine Learning Research 12(88): 2879–2904 .

- Carpenter et al. [2017] Carpenter, B., A. Gelman, M.D. Hoffman, D. Lee, B. Goodrich, M. Betancourt, M. Brubaker, J. Guo, P. Li, and A. Riddell. 2017. Stan: A probabilistic programming language. Journal of Statistical Software 76(1): 1–32 .

- Celeux et al. [2000] Celeux, G., M. Hurn, and C.P. Robert. 2000. Computational and inferential difficulties with mixture posterior distributions. Journal of the American Statistical Association 95(451): 957–970 .

- Charytanowicz et al. [2010] Charytanowicz, M., J. Niewczas, P. Kulczycki, P.A. Kowalski, S. Łukasik, and S. Żak. 2010. Complete gradient clustering algorithm for features analysis of x-ray images. Information Technologies in Biomedicine 2: 15–24 .

- Dempster et al. [1977] Dempster, A.P., N.M. Laird, and D.B. Rubin. 1977. Maximum likelihood from incomplete data via the em algorithm. Journal of the Royal Statistical Society Series B: Statistical Methodology 39(1): 1–22 .

- Diebolt and Robert [1994] Diebolt, J. and C.P. Robert. 1994. Estimation of finite mixture distributions through bayesian sampling. Journal of the Royal Statistical Society Series B: Statistical Methodology 56(2): 363–375 .

- Fong et al. [2019] Fong, E., S. Lyddon, and C. Holmes. 2019. Scalable nonparametric sampling from multimodal posteriors with the posterior bootstrap. Proceedings of the 36th International Conference on Machine Learning 97: 1952–1962 .

- Fraley and Raftery [2002] Fraley, C. and A.E. Raftery. 2002. Model-based clustering, discriminant analysis, and density estimation. Journal of the American Statistical Association 97(458): 611–631 .

- Friedman et al. [2008] Friedman, J., T. Hastie, and R. Tibshirani. 2008, 12. Sparse inverse covariance estimation with the graphical lasso. Biostatistics 9(3): 432–441 .

- Geisser [2017] Geisser, S. 2017. Predictive Inference. Chapman and Hall/CRC.

- Gelman and Rubin [1992] Gelman, A. and D.B. Rubin. 1992. Inference from iterative simulation using multiple sequences. Statistical Science 7(4): 457–472 .

- Hoffman and Gelman [2014] Hoffman, M.D. and A. Gelman. 2014. The no-u-turn sampler: Adaptively setting path lengths in hamiltonian monte carlo. Journal of Machine Learning Research 15(47): 1593–1623 .

- Hofmeyr [2021] Hofmeyr, D.P. 2021. Fast exact evaluation of univariate kernel sums. IEEE Transactions on Pattern Analysis and Machine Intelligence 43(2): 447–458 .

- Hofmeyr [2022] Hofmeyr, D.P. 2022. Fast kernel smoothing in r with applications to projection pursuit. Journal of Statistical Software 101(3): 1–33 .

- Izenman and Sommer [1988] Izenman, A.J. and C.J. Sommer. 1988. Philatelic mixtures and multimodal densities. Journal of the American Statistical Association 83(404): 941–953 .

- Jones [2001] Jones, D.R. 2001. A taxonomy of global optimization methods based on response surfaces. Journal of Global Optimization 21: 345–383 .

- Jones et al. [1998] Jones, D.R., M. Schonlau, and W.J. Welch. 1998. Efficient global optimization of expensive black-box functions. Journal of Global Optimization 13: 455–492 .

- Kandasamy et al. [2020] Kandasamy, K., K.R. Vysyaraju, W. Neiswanger, B. Paria, C.R. Collins, J. Schneider, B. Poczos, and E.P. Xing. 2020. Tuning hyperparameters without grad students: Scalable and robust bayesian optimisation with dragonfly. Journal of Machine Learning Research 21(81): 1–27 .

- Kirkpatrick et al. [1983] Kirkpatrick, S., C.D. Gelatt Jr, and M.P. Vecchi. 1983. Optimization by simulated annealing. Science 220(4598): 671–680 .

- Kucukelbir et al. [2017] Kucukelbir, A., D. Tran, R. Ranganath, A. Gelman, and D.M. Blei. 2017. Automatic differentiation variational inference. Journal of Machine Learning Research 18(14): 1–45 .

- Lartigue et al. [2022] Lartigue, T., S. Durrleman, and S. Allassonnière. 2022. Deterministic approximate em algorithm; application to the riemann approximation em and the tempered em. Algorithms 15(3): 78 .

- Le Riche and Picheny [2021] Le Riche, R. and V. Picheny. 2021. Revisiting bayesian optimization in the light of the coco benchmark. Structural and Multidisciplinary Optimization 64(5): 3063–3087 .

- Lyddon et al. [2019] Lyddon, S.P., C. Holmes, and S. Walker. 2019. General bayesian updating and the loss-likelihood bootstrap. Biometrika 106(2): 465–478 .

- Lynch and Western [2004] Lynch, S.M. and B. Western. 2004. Bayesian posterior predictive checks for complex models. Sociological Methods & Research 32(3): 301–335 .

- Moriconi et al. [2020] Moriconi, R., M.P. Deisenroth, and K. Sesh Kumar. 2020. High-dimensional bayesian optimization using low-dimensional feature spaces. Machine Learning 109: 1925–1943 .

- Morningstar et al. [2021] Morningstar, W., S. Vikram, C. Ham, A. Gallagher, and J. Dillon. 2021. Automatic differentiation variational inference with mixtures. International Conference on Artificial Intelligence and Statistics 130: 3250–3258 .

- Newton et al. [2021] Newton, M.A., N.G. Polson, and J. Xu. 2021. Weighted bayesian bootstrap for scalable posterior distributions. Canadian Journal of Statistics 49(2): 421–437 .

- Newton and Raftery [1994] Newton, M.A. and A.E. Raftery. 1994. Approximate bayesian inference with the weighted likelihood bootstrap. Journal of the Royal Statistical Society Series B: Statistical Methodology 56(1): 3–48 .

- Ng and Newton [2022] Ng, T.L. and M.A. Newton. 2022. Random weighting in lasso regression. Electronic Journal of Statistics 16(1): 3430–3481 .

- Ni et al. [2020] Ni, Y., P. Müller, M. Diesendruck, S. Williamson, Y. Zhu, and Y. Ji. 2020. Scalable bayesian nonparametric clustering and classification. Journal of Computational and Graphical Statistics 29(1): 53–65 .

- Nie and Ročková [2023a] Nie, L. and V. Ročková. 2023a. Bayesian bootstrap spike-and-slab lasso. Journal of the American Statistical Association 118(543): 2013–2028 .

- Nie and Ročková [2023b] Nie, L. and V. Ročková. 2023b. Deep bootstrap for bayesian inference. Philosophical Transactions of the Royal Society A 381(2247): 20220154 .

- Nobile and Fearnside [2007] Nobile, A. and A.T. Fearnside. 2007. Bayesian finite mixtures with an unknown number of components: The allocation sampler. Statistics and Computing 17: 147–162 .

- Omori et al. [2007] Omori, Y., S. Chib, N. Shephard, and J. Nakajima. 2007. Stochastic volatility with leverage: Fast and efficient likelihood inference. Journal of Econometrics 140(2): 425–449 .

- Pompe [2021] Pompe, E. 2021. Introducing prior information in weighted likelihood bootstrap with applications to model misspecification. arXiv preprint arXiv:2103.14445 .

- Provost and Zang [2024] Provost, S.B. and Y. Zang. 2024. Nonparametric copula density estimation methodologies. Mathematics 12(3): 398 .

- Rasmussen and Williams [2005] Rasmussen, C.E. and C.K.I. Williams. 2005, 11. Gaussian Processes for Machine Learning. The MIT Press.

- Richardson and Green [1997] Richardson, S. and P.J. Green. 1997. On bayesian analysis of mixtures with an unknown number of components (with discussion). Journal of the Royal Statistical Society Series B: Statistical Methodology 59(4): 731–792 .

- Roustant et al. [2012] Roustant, O., D. Ginsbourger, and Y. Deville. 2012. Dicekriging, diceoptim: Two r packages for the analysis of computer experiments by kriging-based metamodeling and optimization. Journal of Statistical Software 51(1): 1–55 .

- Sambridge [2014] Sambridge, M. 2014. A Parallel Tempering algorithm for probabilistic sampling and multimodal optimization. Geophysical Journal International 196(1): 357–374 .

- Schilling [2017] Schilling, R.L. 2017. Measures, Integrals and Martingales. Cambridge University Press.

- Sklar [1959] Sklar, M. 1959. Fonctions de répartition à N dimensions et leurs marges. Annales de l’ISUP 8(3): 229–231 .

- Snoek et al. [2012] Snoek, J., H. Larochelle, and R.P. Adams. 2012. Practical bayesian optimization of machine learning algorithms. Advances in Neural Information Processing Systems 25: 2951–2959 .

- Stephens [2000a] Stephens, M. 2000a. Bayesian analysis of mixture models with an unknown number of components—an alternative to reversible jump methods. The Annals of Statistics 28(1): 40 – 74 .

- Stephens [2000b] Stephens, M. 2000b. Dealing with label switching in mixture models. Journal of the Royal Statistical Society Series B: Statistical Methodology 62(4): 795–809 .

- Stringer et al. [2023] Stringer, A., P. Brown, and J. Stafford. 2023. Fast, scalable approximations to posterior distributions in extended latent gaussian models. Journal of Computational and Graphical Statistics 32(1): 84–98 .

- Van Havre et al. [2015] Van Havre, Z., N. White, J. Rousseau, and K. Mengersen. 2015. Overfitting bayesian mixture models with an unknown number of components. PloS one 10(7): e0131739 .

- Wade and Ghahramani [2018] Wade, S. and Z. Ghahramani. 2018. Bayesian Cluster Analysis: Point Estimation and Credible Balls (with Discussion). Bayesian Analysis 13(2): 559 – 626 .

Supplementary Materials for “BOB: Bayesian Optimized Bootstrap for Uncertainty Quantification in Gaussian Mixture Models”

\AuthorfontSantiago Marin, Bronwyn Loong, Anton H. Westveld

S.1 Proof of Proposition 1

From (8), we have that the update of and is given by

with

where follows from the fact that , where denotes the matrix whose -th row is , and by writing as . ∎

S.2 Sampling from the Posterior Predictive Distribution

Given (approximate or actual) posterior draws , one can easily obtain posterior predictive draws as described in algorithm S.1.

Input:

Posterior draws:

Model:

Output:

Posterior predictive draws:

S.3 Sampling from the Actual Bayesian Posterior

Following \citeSuppgelman2013bayesian, under the likelihood and priors specified in section 2.1, the Bayesian posteriors for , , and are given by

where , , , , and , with , , and . When the true latent indicator variables, , are known (e.g., under simulated data or when we know the true cluster labels), one can easily compute, evaluate or obtain draws from the joint Bayesian posterior distribution. More precisely, algorithm S.2 generates draws from the joint Bayesian posterior, namely . Then, with the posterior draws , we can proceed to obtain posterior predictive draws as in algorithm S.1.

Input:

Data:

True latent indicator variables:

Total number of posterior draws:

Posterior hyper-parameters:

Output:

Bayesian posterior draws:

S.4 Warm Initialization Strategy

To initialize our algorithms, we consider a pool of candidate initial values. These candidate values are obtained via (1) Hard-thresholded -means \citepSuppHT_k_means_raymaekers2022, where the HTK-means penalty term is selected using AIC, BIC and a regularization path plot; (2) Sparse -means \citepSuppwitten2010framework, where the sparse -means shrinkage parameter is selected using a permutation approach; (3) Model-based clustering using the “mclust” R package \citepSuppmclustScrucca, which itself is initialized by hierarchical model-based agglomerative clustering; and (4) -means clustering \citepSuppmacqueen1967some. Then, we choose as a starting point the values that yield the largest posterior density. To facilitate comparisons, we initialize all algorithms at this starting point.

S.5 Features in Benchmark Data

Tables S.1 and S.2 present details and descriptions of the variables in the Wine and Seeds datasets, respectively.

| Wine Feature | Wine Feature | ||

|---|---|---|---|

| Abbreviation | Description | Abbreviation | Description |

| alco | Alcohol percentage | nflav | Nonflavanoid phenols |

| mal | Malic acid | proa | Proanthocyanins |

| ash | Ash | col | Colour intensity |

| akash | Alkalinity of ash | hue | Hue |

| mag | Magnesium | ODdw | of diluted wines |

| phen | Total phenols | prol | Proline |

| flav | Flavanoids | ||

| \botrule | |||

| Seed Feature | Seed Feature | ||

|---|---|---|---|

| Abbreviation | Description | Abbreviation | Description |

| area | Area of kernel | k.width | Width of kernel |

| perimeter | Perimeter of kernel | asymmetry | Asymmetry coefficient |

| compactness | Compactness of kernel | k.gr.len | Length of kernel groove |

| k.len | Length of kernel | ||

| \botrule | |||

S.6 Additional Posterior Density Plots

Figure 6 presents additional posterior predictive density plots for our illustrative example, with , , and . We can observe similar patterns as in the main article. More precisely, both versions of WBB fail to capture the variance from the Bayesian posterior, while NUTS and ADVI produce unimodal posterior predictive distributions, failing to identify the clusters in the data. It is clear that BOB offers the best approximation to the Bayesian posterior predictive distribution and the best uncertainty quantification.

Figures 7 and 8 present posterior density plots for additional variables from the wine and seeds datasets, respectively. Similar as in the main document, we can observe that both versions of WBB correctly capture the location of the three clusters. However, both versions of WBB produce overconfident posterior predictive distributions and do not capture the dispersion of the Bayesian posterior. NUTS and ADVI are unable to identify the three clusters in the data. Clearly, BOB produces the closest approximation to the Bayesian posterior predictive distribution and the best uncertainty quantification.

sn-chicago.bst \bibliographySuppreferences-supp.bib