BITS: Bi-level Imitation for Traffic Simulation

Abstract

Simulation is the key to scaling up validation and verification for robotic systems such as autonomous vehicles. Despite advances in high-fidelity physics and sensor simulation, a critical gap remains in simulating realistic behaviors of road users. This is because, unlike physics and graphics, human-like behavior is generally infeasible to model from first principles. In this work, we take a data-driven approach and propose a method that learns to generate traffic behaviors from real-world driving logs. The method achieves high sample efficiency and behavior diversity by exploiting the bi-level hierarchy of driving behaviors, decoupling the traffic simulation problem into high-level intent inference and low-level driving behavior imitation. The method also incorporates a planning module to obtain stable long-horizon behaviors. We empirically validate our method, named Bi-level Imitation for Traffic Simulation (BITS), with scenarios from two large-scale driving datasets and show that BITS achieves balanced traffic simulation performance in realism, diversity, and long-horizon stability. We also explore ways to evaluate behavior realism and introduce a suite of evaluation metrics for traffic simulation. Finally, as part of our core contributions, we develop and open source a software tool that unifies data formats across different driving datasets and converts scenes from existing datasets into interactive simulation environments. We include additional results at https://sites.google.com/view/bits2022/home.

1 Introduction

Simulation is an integral part of developing effective robotic systems. Simulators allow developers to rapidly verify changes and triage erroneous behaviors before deploying to physical systems. Realistic simulators are especially crucial for autonomous vehicles (AVs), because it is costly and potentially dangerous to test new features and changes directly on the road. Yet, despite advances in physics simulation and high-fidelity sensor simulation, AV developers still primarily rely on large-scale, real-world road testing for validation and verification [1, 2, 3, 4, 5, 6, 7]. One critical reason is that existing simulation platforms do not generate realistic behaviors for simulated road users such as cars and pedestrians. Generating such behaviors is difficult because, unlike physics and graphics, human-like behavior is hard to model from first principles.

Today’s mainstream driving simulators synthesize agent behaviors by either replaying recorded driving logs or implementing heuristics-based controllers. While log replay allows for scenario-specific triaging, it is difficult to validate new features with it, as replayed agents do not react to counterfactual ego motions. Heuristics-based controllers, in contrast, are often equipped with simple driving logic and can thus respond to new ego behaviors in a closed-loop manner [8, 9, 10]. While these methods can produce plausible traffic flows, synthesizing diverse and complex driving behaviors such as yielding and cutting in for a large number of real-world scenarios remains challenging.

Learning-based approaches, by contrast, can ground reactive behavior generation in real-world driving logs. For example, recent works show that trajectory forecasting models trained on large-scale driving logs can accurately infer distributions of future agent trajectories in many challenging scenarios [11, 12, 13, 14]. While these methods excel at predicting realistic trajectories, they are brittle under domain shifts such as new scenes with unseen driving behavior, and the multi-agent nature of traffic simulation can cause a combinatorial explosion in the number of joint agent states. This challenge is exacerbated when applying prediction approaches to closed-loop behavior simulation over long time horizons, as prediction errors at each step compound over time [15], leading to divergence and irreversible failures such as collisions and driving off-road.

In this work, we aim to develop a learning-based traffic simulation model that can generate diverse, stable, and realistic traffic behaviors. Our key insight is two-fold. First, while learning stable long-horizon driving behaviors requires large amounts of data, the problem has a natural bi-level hierarchy that can be exploited to improve learning efficiency. Specifically, we decouple the learning problem into high-level intent inference and low-level goal-conditioned control. Our model leverages the 2D bird's-eye-view structure of urban driving and learns to generate a spatial distribution of intended goal locations (Fig. 1). A low-level controller policy then generates short segments of goal-conditioned behaviors that move agents towards their inferred goals. In addition, the spatial goal distribution helps disentangle multi-modal behaviors and enables diverse traffic simulations. Second, while such a hierarchical policy greatly improves learning efficiency, agents may still encounter unseen situations that the model alone struggles to handle. To stabilize the long-term behaviors of the model, we augment the policy with a prediction-and-planning module. The module samples likely trajectory segments from the hierarchical policy and selects actions using rule-following cost functions as regularization. This way, the overall framework balances generating human-like behaviors at in-distribution states against preventing divergence at out-of-distribution states.

We name our method BITS (Bi-level Imitation for Traffic Simulation). We evaluate BITS on two popular driving log datasets, Lyft Level 5 [16] and nuScenes [17]. The Lyft dataset contains 1000 hours of driving data collected along a 6.8-mile route in Palo Alto. While the route is densely covered, the trajectory annotations are auto-labeled using a perception stack, resulting in abundant labeling errors [18]. In contrast, the nuScenes dataset contains 5.5 hours of manually annotated trajectories spanning two cities (Boston and Singapore) with more diverse scenarios. Through these two datasets, we demonstrate the capability of our method under different types of learning challenges. Beyond generating realistic traffic behaviors, we also explore ways to evaluate the generated behaviors through a suite of analytical and learned metrics, since conventional trajectory generation metrics such as ADE and FDE are ill-suited for evaluating closed-loop traffic simulation. Finally, as part of our core contributions, we develop and open source a software tool that unifies data formats across different AV datasets and allows users to transform scenarios from existing datasets into interactive simulation environments. We hope that our novel traffic behavior simulation method, along with the evaluation protocol and data interface software, can serve as a foundation for further research on this topic.

2 Related work

Traffic simulation. Approaches for traffic simulation can broadly be categorized into two groups: macroscopic, studying large-scale traffic flows without instantiating individual agent states, and microscopic, focusing on individual agents in traffic and simulating their behaviors on the road [19, 20]. Since our ultimate goal is to validate autonomous vehicles and their interaction with surrounding agents, we focus our attention on microscopic simulation. Existing systems for microscopic simulation such as SUMO [8], Aimsun [9], and VISSIM [10] use analytical models to control agents in a scene, including cellular automata, the intelligent driver model (IDM), and the optimal velocity model (see [21] for a comprehensive review). These analytical models typically have fixed routes for vehicles to follow and separate the longitudinal and lateral motions of agents, with some models omitting lateral motion entirely. Accordingly, these analytical microscopic traffic simulation tools lack sufficient complexity and expressiveness for developing or evaluating autonomous driving features.

As a result, recent works have started to develop more expressive models for traffic simulation, generally based on neural networks [22, 23, 24], that learn from real-world trajectory datasets [25, 16, 17]. Notably, STRIVE [24] proposes to generate near-collision scenarios by searching in the latent space of a trajectory prediction model. TrafficSim [23] adapts a graph-based trajectory prediction model to perform scene-level traffic simulation. Compared to STRIVE, which focuses on generating worst-case scenarios and short open-loop trajectories, we aim to synthesize a broad range of long-horizon closed-loop traffic behaviors. Compared to TrafficSim, which relies on scene-level control to ensure consistent interaction among agents (e.g., coordinated collision avoidance), our method enables each agent to act without coordination with others at the model level. Such an agent-centric setup allows our method to be deployed in more practical simulation use cases, where different types of agents (analytical or learned) are mixed together to interact in a scene. Moreover, we show that our method outperforms TrafficSim in simulation diversity and stability.

Trajectory prediction. A separate set of research aims to predict the trajectories of agents in traffic, with initial works applying analytical models such as Social Forces [26, 27], Hidden Markov Models [28] and IDM [29]. However, limited by their expressiveness, they fail to scale to the level of complexity required by autonomous driving. As a result, various data-driven models have been proposed, which directly learn to predict agent behaviors from a wealth of demonstrations. Several early examples include Social GAN [30], GAIL [31], MFP [32], and DESIRE [33]. More recent trajectory prediction models can be further categorized into agent-centric models, which generate independent predictions for each agent in a scene [34, 33, 35], and scene-centric models, which generate joint predictions for all (or a subset of) agents in a scene [36, 37]. For a comprehensive review of trajectory prediction methods, see [38]. While these methods perform well over short time-horizons (up to 5s), their performance generally degrades over longer time-horizons. To combat this, many recent state-of-the-art methods adopt a multi-stage approach which first predicts agents’ goal locations and subsequently links agents’ current positions to their inferred goals [39, 40, 41, 42, 43, 44, 45, 46, 47]. While these methods solely focus on open-loop prediction, we will show in this work that inferring and conditioning on an agent’s goal also improves the stability of long-horizon closed-loop simulation.

Imitation learning. Our bi-level imitation learning method is heavily inspired by literature in hierarchical decision making and multimodal imitation learning. A hierarchical policy consists of a high-level planner that sets abstract goals and a low-level policy that learns to achieve the goals [48, 49, 50, 51, 52, 53, 54]. Methods along this vein have favorable properties such as compositionality [53] and interpretability [55], and they have achieved superior performance, especially in long-horizon tasks [51, 52, 53, 54]. At the same time, an equally important desideratum of traffic simulation is behavior diversity, which most prior works neglect. Multimodal imitation learning was recently studied in the manipulation domain [56, 57, 58, 59]. Notably, GTI [58] trains a CVAE-based planner to set multi-modal subgoals in observation space for a low-level goal-conditioned controller to achieve. Our method adopts a similar hierarchical structure but instead exploits the domain structure of driving to efficiently represent goal distributions as 2D bird's-eye-view spatial maps (shown in Fig. 1). We empirically show that our method generates realistic, diverse, and stable long-horizon traffic simulations.

3 Bi-level imitation for traffic simulation

In this section, we dive into the details of the traffic simulation problem and our primary technical contributions. We propose (1) a hierarchical imitation learning framework that generates diverse and realistic traffic behaviors, (2) a prediction-and-planning module that stabilizes long-horizon simulation, and (3) a suite of analytical and learned evaluation metrics for traffic simulation. Specific implementation details (network architectures, shapes) will be described in Sec. 4.1 and the Appendix.

3.1 Traffic simulation as imitation learning

We take an agent-centric approach to traffic simulation, i.e., each agent makes decisions in a decentralized manner without explicit coordination. As mentioned previously, this allows for flexible integration with existing simulation frameworks containing other types of simulated agents and encourages the emergence of new interactive behaviors. We focus on simulating vehicle traffic in this work, but an agent can be any type of road user captured in driving logs (e.g., cyclists, pedestrians). We use $s_t$ and $c_t$ to denote the dynamic state and the decision-relevant context of an agent at time $t$, respectively. Specifically, the state $s_t$ includes the position, heading, and velocity of an agent. The context $c_t$ includes a local semantic map and the previous states of the agent and its neighboring agents. Given the decision context $c_t$ and the current state $s_t$, the goal of a traffic simulation model is to generate the next state $s_{t+1}$ of the agent subject to a dynamics transition function $s_{t+1} = f(s_t, a_t)$, where $a_t$ is the agent's action. We use a simple unicycle model with dynamics constraints as $f$ and defer more realistic vehicle dynamics models to future work.
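The dynamics transition function described above can be illustrated with a single constrained unicycle step. This is a minimal sketch, not the authors' implementation: it assumes the state is (x, y, heading, speed) and the action is (acceleration, yaw rate), and the bounds `MAX_ACCEL`, `MAX_YAW_RATE`, and `MAX_SPEED` are hypothetical placeholders for the unspecified dynamics constraints. The default step of 0.1 s matches the 10 Hz simulation rate reported in Sec. 4.1.

```python
import math
from dataclasses import dataclass


@dataclass
class State:
    x: float        # position (m)
    y: float
    heading: float  # yaw (rad)
    speed: float    # longitudinal velocity (m/s)


# Hypothetical bounds standing in for the "dynamics constraints".
MAX_ACCEL = 3.0      # m/s^2
MAX_YAW_RATE = 0.5   # rad/s
MAX_SPEED = 30.0     # m/s


def clamp(v: float, lo: float, hi: float) -> float:
    return max(lo, min(hi, v))


def unicycle_step(s: State, accel: float, yaw_rate: float, dt: float = 0.1) -> State:
    """One transition of a constrained unicycle model (sketch)."""
    accel = clamp(accel, -MAX_ACCEL, MAX_ACCEL)
    yaw_rate = clamp(yaw_rate, -MAX_YAW_RATE, MAX_YAW_RATE)
    speed = clamp(s.speed + accel * dt, 0.0, MAX_SPEED)  # assumed: no reversing
    heading = s.heading + yaw_rate * dt
    # Semi-implicit Euler: integrate position with the updated speed and heading.
    x = s.x + speed * math.cos(heading) * dt
    y = s.y + speed * math.sin(heading) * dt
    return State(x, y, heading, speed)
```

Clamping the action before integration keeps every generated state dynamically feasible regardless of what the learned policy outputs.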

We leverage driving logs captured in the real world [16, 17] to train our traffic model. Since log data readily includes semantic maps and the trajectories of all observed agents, we can treat driving logs as a set of multi-agent expert demonstration sequences and formulate traffic simulation as a supervised imitation learning problem. However, the nature of urban driving poses significant technical challenges. First, the decision process is partially observed, as the model does not have access to the underlying intent of the demonstrator or to other decision-relevant cues such as the turn signals of other vehicles. Accordingly, action supervision is inherently ambiguous and is usually modeled with probability distributions [34, 33, 35]. Although this ambiguity complicates training, effectively modeling action distributions also enables generating diverse counterfactual traffic simulations. Second, since each agent acts without explicit coordination, their joint behaviors create a combinatorial space of possible future states. Such uncertainty makes generating stable traffic simulations extremely challenging. Below, we describe how our approach generates multimodal simulations with a stochastic hierarchical policy and mitigates uncertainty in state evolution with a prediction-and-planning module.

3.2 Bi-level imitation learning for multi-modal behavior generation

The goal of our traffic simulation model is to produce diverse and plausible behaviors by learning from real-world driving logs as demonstrations. Most existing methods in trajectory prediction use deep latent variable models (e.g., VAEs) to capture the behavior distributions. However, findings in the imitation learning literature [60] suggest that learning to generate stable long-horizon behaviors requires a large amount of training data. Our method instead decomposes the learning problem into (1) training a high-level goal network that captures the spatial distribution of possible short-term goals, and (2) training a deterministic goal-conditional policy that learns to reach the predicted goal. The spatial goal network exploits the 2D birds-eye-view structure of driving motion and represents the spatial goal distribution efficiently with a 2D grid. This decomposition additionally moves the burden of modeling multi-modal trajectories to the high-level goal predictor, enabling the low-level goal-conditioned policy to reuse goal-reaching skills to improve sample efficiency.

Spatial goal network. The spatial goal network is trained to predict the distribution of the short-term goal pose (2D position and heading) of an agent given its decision context. Following prior works [22, 61], we first encode the decision context into a rasterized semantic map, which includes the semantic map and past agent trajectories rasterized as 2D bounding boxes in additional channels. The model takes the rasterized semantic map as input and outputs a 2D grid of goal likelihoods as well as residual components that refine the predicted goal location. The output is a 4-channel tensor with the same spatial size as the input rasterized map. The first channel is the likelihood of the coarse goal location, i.e., a 2D probability map. Each pixel in the second and third channels holds a scalar residual (one per spatial axis) relative to its grid location. The fourth channel is the heading prediction at each grid location. Once a location is selected based on the probability map in the first channel, it is corrected by the residual and transformed into a goal pose in the agent's local coordinate frame. We treat the 2D location map as a joint distribution over all grid locations and train it with a cross-entropy loss. The other channels are trained with masked regression losses (e.g., squared error).
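The goal decoding and the location loss described above can be sketched as follows. This is an illustration under stated assumptions, not the paper's code: the output is a nested list indexed `[row][col][channel]`, channel 0 holds location logits, channels 1 and 2 hold (x, y) residuals in pixels, channel 3 holds heading in radians, and `origin` and `resolution` (hypothetical parameters) give the agent's pixel location and the metres-per-pixel scale, with x taken along columns and y along rows.

```python
import math
import random


def softmax_flat(logits):
    """Numerically stable softmax over a flat list of logits."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    z = sum(exps)
    return [e / z for e in exps]


def decode_goal(output, resolution=0.5, origin=(0, 0), sample=False, rng=None):
    """Turn an H x W x 4 goal map into a goal pose (x, y, heading) in the agent frame."""
    H, W = len(output), len(output[0])
    # The whole grid is treated as a single categorical distribution.
    probs = softmax_flat([output[r][c][0] for r in range(H) for c in range(W)])
    if sample:
        idx = (rng or random).choices(range(H * W), weights=probs, k=1)[0]
    else:
        idx = max(range(H * W), key=probs.__getitem__)
    r, c = divmod(idx, W)
    _, dx, dy, heading = output[r][c]
    # Refine the coarse cell with its residual, then convert pixels to metres.
    x = (c - origin[1] + dx) * resolution
    y = (r - origin[0] + dy) * resolution
    return x, y, heading


def location_ce_loss(output, target_rc):
    """Cross-entropy of the ground-truth goal cell under the flattened location map."""
    H, W = len(output), len(output[0])
    probs = softmax_flat([output[r][c][0] for r in range(H) for c in range(W)])
    r, c = target_rc
    return -math.log(probs[r * W + c])
```

Taking the maximum corresponds to a most-likely goal, while `sample=True` draws from the map, which is what produces diverse goals across rollouts.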

Goal-conditional policy. The goal-conditional policy takes the form of a deterministic trajectory generator conditioned on the decision context and the predicted goal. Although we could further augment the policy with stochastic components, we empirically found this unnecessary, as the short-term goal largely reduces the uncertainty in prediction. Inspired by prior works [34, 23], instead of directly regressing each state in an agent's trajectory, the model predicts controls (velocity, change of heading) at each future time step and forward integrates them through the agent's dynamics model (e.g., extended unicycle dynamics [62] for vehicles). The errors between the predicted and reference trajectories are then backpropagated directly through the dynamics model. Overall, this strategy provides a strong, dynamically grounded learning signal that corrects predictions at earlier steps to reduce errors at later steps.
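The forward integration of predicted controls can be sketched in plain Python. This shows only the forward pass under assumed conventions: controls are (velocity, yaw rate) pairs, interpreting "change of heading" as a per-second rate, and the loss is a mean squared position error. In training, the same computation would be written in an autodiff framework so that gradients of the loss flow back through every integration step to the predicted controls; the function names are hypothetical.

```python
import math


def integrate_controls(x0, y0, heading0, controls, dt=0.1):
    """Roll predicted (velocity, yaw_rate) controls through unicycle kinematics."""
    traj = []
    x, y, h = x0, y0, heading0
    for v, yaw_rate in controls:
        h += yaw_rate * dt
        x += v * math.cos(h) * dt
        y += v * math.sin(h) * dt
        traj.append((x, y, h))
    return traj


def trajectory_l2_loss(pred, ref):
    """Mean squared position error between predicted and reference trajectories."""
    total = sum((px - rx) ** 2 + (py - ry) ** 2
                for (px, py, _), (rx, ry, _) in zip(pred, ref))
    return total / len(ref)
```

Because each position depends on all earlier controls, an error at step t contributes gradient to the controls at every step before it, which is the mechanism by which earlier predictions get corrected.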

3.3 Prediction and planning for long-horizon stability

So far, we have described a bi-level imitation learning method that can generate plausible traffic simulations from limited data. The policy can synthesize diverse behaviors by sampling from the multi-modal spatial goal predictor. However, the performance of the policy remains bounded by the size and coverage of training data. Driving logs are biased towards nominal behaviors and contain almost no safety-critical situations such as collisions or driving off-road. The objective of generating diverse behaviors further amplifies this challenge, as agents are encouraged to enter previously-unseen regions of the map and create new interactions. As a result, to achieve stable long-horizon simulations, agents must generate reasonable behaviors even at states where guidance from training data is lacking.

To this end, we propose to augment our policy with a prediction-and-planning module to stabilize long-horizon rollouts. The module draws action samples from the stochastic bi-level policy described above and selects the action that minimizes a rule-based cost function given the predicted future states of the environment, that is, $a^{*} = \arg\min_{a \in \{a^{(1)}, \dots, a^{(K)}\}} J(a, \hat{s}^{\text{env}})$, where $a^{(1)}, \dots, a^{(K)}$ are the $K$ sampled actions, $J$ is the rule-based cost, and $\hat{s}^{\text{env}}$ denotes the predicted environment states. This approach is similar to the motion planning pipeline in a typical modular AV stack, with the important difference that we use our learned policy to generate human-like motion trajectory candidates. The key idea is that the policy can directly follow the data likelihood at in-distribution states, where most action samples are rule-following, and receive corrective guidance at states where the most likely actions may lead to undesirable outcomes such as collisions. In addition, the sampling module allows for flexible adjustment of the simulator (e.g., level of diversity, emphasis on multiple objectives) without retraining. Below we describe the model for future state prediction and the cost function for action selection.
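The sample-and-select step can be sketched as below. This is a minimal illustration, not the paper's cost: it swaps in a simplified disc-overlap collision penalty (the paper uses bounding-box corner distances) and a per-step off-road count in place of the distance-map penalty, and `radius`, `w_coll`, `w_road`, and `is_drivable` are hypothetical parameters. It also assumes candidates arrive sorted by policy likelihood, so that ties among zero-cost samples resolve to the most likely one (Python's `min` returns the first minimal element).

```python
import math
from typing import Callable, List, Sequence, Tuple

Traj = List[Tuple[float, float]]  # (x, y) at each future step


def collision_cost(traj: Traj, neighbors: Sequence[Traj], radius: float = 2.0) -> float:
    """Simplified disc-based stand-in: penalise overlap with any predicted neighbor."""
    cost = 0.0
    for other in neighbors:
        for (x, y), (ox, oy) in zip(traj, other):
            cost += max(0.0, 2 * radius - math.hypot(x - ox, y - oy))
    return cost


def offroad_cost(traj: Traj, is_drivable: Callable[[float, float], bool]) -> float:
    """Number of future steps spent outside the drivable area."""
    return float(sum(not is_drivable(x, y) for x, y in traj))


def select_action(candidates: Sequence[Traj], neighbors: Sequence[Traj],
                  is_drivable: Callable[[float, float], bool],
                  w_coll: float = 1.0, w_road: float = 1.0) -> int:
    """Index of the lowest-cost candidate; ties go to the earliest (most likely) one."""
    costs = [w_coll * collision_cost(c, neighbors) + w_road * offroad_cost(c, is_drivable)
             for c in candidates]
    return min(range(len(candidates)), key=costs.__getitem__)
```

Both penalties are zero for nominal trajectories, so in ordinary traffic the selection simply returns the policy's preferred sample.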

Future state prediction. Since we assume an analytical vehicle dynamics model and a known static map, the main task of the model is to predict the future motion trajectories of nearby agents. We follow a typical trajectory prediction pipeline and featurize each agent by its local and global scene context. Specifically, we use RoIAlign [63] to crop the features extracted by an intermediate layer of a deep CNN. The per-agent features are then concatenated with a global scene context feature (extracted by the final layer of the same CNN) to make the final trajectory prediction for each neighboring agent. The model is illustrated in Fig. 1. We use deterministic prediction in this work and defer more sophisticated probabilistic prediction and planning to future work.
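The core of the RoIAlign cropping step can be sketched on a single-channel feature map. This is a simplified stand-in for the operator in [63], under assumptions not stated in the text: the box is axis-aligned and given in feature-map pixel coordinates as (y_min, x_min, y_max, x_max), feature values sit at integer coordinates, and each output bin uses one bilinear sample at its center. Production implementations average several samples per bin and handle many channels and boxes at once.

```python
def bilinear(feat, y, x):
    """Bilinearly interpolate a 2D feature map at continuous (y, x); zero far outside."""
    H, W = len(feat), len(feat[0])
    if y < -1.0 or y > H or x < -1.0 or x > W:
        return 0.0  # sample falls outside the map
    y = min(max(y, 0.0), H - 1.0)
    x = min(max(x, 0.0), W - 1.0)
    y0, x0 = int(y), int(x)
    y1, x1 = min(y0 + 1, H - 1), min(x0 + 1, W - 1)
    ly, lx = y - y0, x - x0
    return (feat[y0][x0] * (1 - ly) * (1 - lx) + feat[y0][x1] * (1 - ly) * lx
            + feat[y1][x0] * ly * (1 - lx) + feat[y1][x1] * ly * lx)


def roi_align(feat, box, out_size=2):
    """Crop an axis-aligned box into an out_size x out_size grid, one sample per bin."""
    y_min, x_min, y_max, x_max = box
    bin_h = (y_max - y_min) / out_size
    bin_w = (x_max - x_min) / out_size
    return [[bilinear(feat, y_min + (i + 0.5) * bin_h, x_min + (j + 0.5) * bin_w)
             for j in range(out_size)]
            for i in range(out_size)]
```

Unlike quantized RoI pooling, bilinear sampling keeps the crop aligned with sub-pixel agent positions, which matters when agents occupy only a few feature-map cells.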

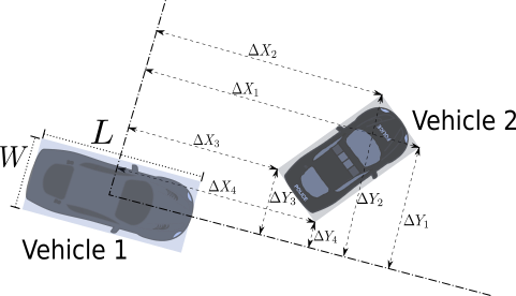

Cost-based trajectory selection. We consider two rule-based costs: collision and road departure. The collision cost is computed from the distances between the four corners of the two agents' bounding-box rectangles. To calculate the road departure cost, we first generate a distance map that records the Manhattan distance to the drivable area in pixel space (obtained efficiently via convolution steps). The resulting distance map assigns zero to points within the drivable area, with values increasing outside the drivable area until saturating at a fixed maximum value. We directly use this Manhattan distance value as a penalty. Note that both cost terms are zero for nominal trajectories, i.e., trajectories that result in neither a collision nor a road departure, which minimizes their effect on the choice among rule-following action samples.
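One way to realize the "convolution steps" for the road departure distance map is repeated 4-neighbour min-filtering, sketched below. This is an assumption about how the steps could work, not the paper's code: each pass lets every pixel take the minimum of its own value and its 4-neighbours' values plus one, so after `d_max` passes every distance below `d_max` is exact and everything farther saturates at `d_max`. The helper `road_departure_cost` (hypothetical) sums the map at the pixels a trajectory visits.

```python
def manhattan_distance_map(drivable, d_max):
    """Per-pixel Manhattan distance to the drivable area, saturating at d_max.

    drivable: 2D list of bools (True = drivable pixel).
    """
    H, W = len(drivable), len(drivable[0])
    dist = [[0 if drivable[r][c] else d_max for c in range(W)] for r in range(H)]
    for _ in range(d_max):
        new = [row[:] for row in dist]
        for r in range(H):
            for c in range(W):
                # Plus-shaped min filter: one step of distance propagation.
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < H and 0 <= cc < W:
                        new[r][c] = min(new[r][c], dist[rr][cc] + 1)
        dist = new
    return dist


def road_departure_cost(dist, pixels):
    """Sum of distance-map values at the (row, col) pixels a trajectory visits."""
    return sum(dist[r][c] for r, c in pixels)
```

Since the map depends only on the static drivable area, it can be computed once per scene and then queried cheaply for every candidate trajectory.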

3.4 Evaluation metrics for traffic simulation

Designing metrics for simulation is particularly difficult because of the lack of ground truth. As a result, metrics such as average displacement error (ADE) and final displacement error (FDE), commonly used to evaluate trajectory prediction, do not suit the evaluation of simulation models. To address this evaluation gap, we propose three types of simulation metrics: (i) metrics measuring how often simulated agents violate common traffic rules, such as driving off-road or colliding with other agents; (ii) metrics measuring the statistics of simulation rollouts, including resemblance to collected driving logs in terms of driving characteristics such as speed profile, control effort, and coverage of the driving area, as well as behavior diversity between different simulation trials; and (iii) data-driven metrics learned from real-world driving logs, such as the likelihood of simulation rollouts under data-driven trajectory forecasting models. Here we describe (ii) and (iii) in detail.

Coverage and diversity. To calculate how much of a scene is covered by the agents, we first estimate a simulator's trajectory distribution via kernel density estimation (KDE) with a Gaussian kernel over all time steps of the simulator's rollouts, focusing on the 2D spatial distribution of the trajectories. To measure the coverage of the map, we count the number of grid points where the KDE estimate is above a threshold, separating the count between drivable and non-drivable areas. To measure the diversity of stochastic policies, we run multiple trials with the same initial condition and collect the density estimate of each trial. Given two different trials, we compute the Wasserstein distance between their density profiles. In particular, all grid points with non-zero density are flattened, and a distance matrix containing the Euclidean distances between every pair of grid points is computed. Each density profile is then normalized to sum to 1, and the Wasserstein distance can be computed efficiently as in [64]. For $N$ trials of the same scene, we calculate the Wasserstein distances between all pairs of density profiles and take their mean as the diversity metric:

$$\text{diversity} = \frac{2}{N(N-1)} \sum_{i=1}^{N-1} \sum_{j=i+1}^{N} W(\rho_i, \rho_j),$$

where $W(\cdot, \cdot)$ is the Wasserstein distance and $\rho_i$ is the density profile of the $i$-th trial.
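The coverage count and the pairwise-mean diversity can be sketched as below. This is an illustration under assumptions: positions are already in grid (row, col) units, `bandwidth` and `threshold` are hypothetical tuning values, and the 2D Wasserstein solver itself (the paper cites [64] for it) is passed in as a function rather than implemented here. The toy check uses a scalar placeholder distance only to exercise the averaging.

```python
import math
from itertools import combinations


def kde_grid(points, grid_h, grid_w, bandwidth=1.0):
    """Gaussian KDE of 2D (row, col) points evaluated at every integer grid point."""
    norm = 1.0 / (len(points) * 2 * math.pi * bandwidth ** 2)
    return [[norm * sum(math.exp(-((r - pr) ** 2 + (c - pc) ** 2) / (2 * bandwidth ** 2))
                        for pr, pc in points)
             for c in range(grid_w)]
            for r in range(grid_h)]


def coverage(density, drivable, threshold):
    """Count grid points with density above threshold, split as (on-road, off-road)."""
    on = off = 0
    for d_row, m_row in zip(density, drivable):
        for d, m in zip(d_row, m_row):
            if d > threshold:
                if m:
                    on += 1
                else:
                    off += 1
    return on, off


def diversity(profiles, wasserstein):
    """Mean Wasserstein distance over all unordered pairs of trial density profiles."""
    pairs = list(combinations(profiles, 2))
    return sum(wasserstein(p, q) for p, q in pairs) / len(pairs)
```

Averaging over unordered pairs gives the same value as averaging over ordered pairs, because the Wasserstein distance is symmetric.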

Learned metrics. There are many potential ways to learn a metric that evaluates whether simulated behavior is human-like. One such way is to evaluate simulated agent trajectory likelihoods using a prediction model trained from real-world driving logs. To evaluate this possibility, we develop an occupancy-based prediction model that predicts where an agent will be in future time steps. The model uses a similar structure to our spatial goal network and discretizes the position space into bins. The model is trained to minimize a cross-entropy loss function and its predictions are then used to compute the trajectory likelihoods of simulated trajectories. We include more details in the Appendix.
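Scoring a simulated trajectory under an occupancy-based predictor can be sketched as a sum of per-step log-probabilities. This assumes, hypothetically, that the model emits one flat vector of logits per future step over an `n_bins` x `n_bins` grid covering the agent-frame square [-extent, extent)^2, and that positions outside the grid are clipped to the border bin; the paper's actual bin layout is described in its Appendix.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax via log-sum-exp."""
    m = max(logits)
    lse = m + math.log(sum(math.exp(v - m) for v in logits))
    return [v - lse for v in logits]


def to_bin(x, y, extent, n_bins):
    """Flat bin index of an agent-frame position (assumed: clipped to the grid)."""
    size = 2 * extent / n_bins
    col = min(n_bins - 1, max(0, int((x + extent) // size)))
    row = min(n_bins - 1, max(0, int((y + extent) // size)))
    return row * n_bins + col


def trajectory_log_likelihood(step_logits, traj, extent, n_bins):
    """Sum over future steps of log p(bin containing the simulated position)."""
    return sum(log_softmax(logits)[to_bin(x, y, extent, n_bins)]
               for logits, (x, y) in zip(step_logits, traj))
```

Working in log space avoids underflow when many small per-step probabilities are multiplied over a long horizon.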

4 Evaluation

Our experiments seek to validate the primary claims that (1) BITS can generate plausible behaviors by learning from real-world driving logs, (2) compared to flat (non-hierarchical) policies, our hierarchical policy learning framework achieves better sample efficiency and behavior diversity, and (3) our proposed prediction-and-planning module is effective at providing corrective guidance at out-of-distribution states. We conduct evaluations with two large-scale real-world driving datasets, Lyft Level 5 [16] and nuScenes [17]. Since learning-based traffic simulation is a new topic and lacks a standardized benchmark, in this work we also develop and open source a software framework that unifies data formats across different AV datasets (starting with the two used in this work) and can transform scenes from datasets into interactive simulation environments. We use this framework to run closed-loop simulations and report performance based on the metrics described in Sec. 3.4.

4.1 Evaluation setup

Datasets. The Lyft dataset [16] contains 1000 hours of driving data collected along a 6.8-mile route in Palo Alto. The dataset contains many repeated trips along each road segment. Since the annotations are auto-generated using a perception stack, there are many labeling errors, including inaccurate agent positions, headings, and semantic types [18]. In contrast, nuScenes [17] contains 5.5 hours of accurate, manually labeled trajectories spanning two cities (Boston and Singapore) with more diverse scenarios and denser traffic. Through these datasets, we compare our method to baselines under different learning challenges such as noisy labels and small training sets. For both datasets, we train all models on trajectories from the train split and conduct evaluation on 100 scenes randomly sampled from the validation split. We consider only vehicle simulation in this paper and defer simulating other types of agents (e.g., pedestrians, cyclists) to future work.

Simulation environments. As stated above, we initialize our simulation environments from real driving data, which yields realistic agent placements and dynamic states. All scenes are drawn from the validation split and were therefore not seen by the models during training. Each agent in the scene is independently controlled by a replica of the same model. The simulation runs at 10 Hz, and results are reported on 20-second simulation episodes.
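The decentralized closed-loop rollout can be sketched as a simple loop. This is a hypothetical harness, not the open-source tool described above: `policy(agent_id, states)` stands in for one replica of the learned model, `step_fn(state, action, dt)` stands in for the dynamics, and all agents act on the same snapshot of the scene before their states are updated together. With the defaults, an episode has 10 Hz x 20 s = 200 steps.

```python
from typing import Callable, Dict, List, Tuple

State = Tuple[float, float, float, float]  # (x, y, heading, speed)


def simulate(initial: Dict[int, State],
             policy: Callable[[int, Dict[int, State]], tuple],
             step_fn: Callable[[State, tuple, float], State],
             hz: int = 10, seconds: float = 20.0) -> List[Dict[int, State]]:
    """Closed-loop rollout where every agent is driven by its own policy replica."""
    dt = 1.0 / hz
    states = dict(initial)
    history = [dict(states)]
    for _ in range(int(hz * seconds)):
        # Decentralized: each agent decides from the same snapshot, no coordination.
        actions = {aid: policy(aid, states) for aid in states}
        states = {aid: step_fn(states[aid], actions[aid], dt) for aid in states}
        history.append(dict(states))
    return history
```

Because each agent only queries the shared scene state, learned agents could in principle be mixed with analytical ones by passing a different `policy` per agent id.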

Table 1: Closed-loop simulation results on Lyft.

| Method | FR | coll | offroad | coverage | diversity | speed | jerk | sADE |
|---|---|---|---|---|---|---|---|---|
| SimNet [22] | 38.35 | 35.57 | 1.38 | 460.44 | 0.00 | 1.65 | 3.85 | 3.19 |
| SocialGAN [30] | 64.96 | 42.86 | 19.45 | 189.98 | 9.02 | 1.48 | 5.17 | 13.22 |
| SocialGAN+p | 69.41 | 42.96 | 25.12 | 131.47 | 7.64 | 1.51 | 4.78 | 13.90 |
| TPP [34] | 15.62 | 14.65 | 0.59 | 495.69 | 3.23 | 1.14 | 2.60 | 5.50 |
| TPP+p | 16.03 | 15.12 | 0.65 | 508.16 | 2.75 | 1.23 | 2.32 | 5.44 |
| TrafficSim [23] | 26.98 | 15.98 | 5.76 | 566.35 | 7.68 | 1.50 | 3.82 | 5.89 |
| TrafficSim+p | 22.97 | 13.58 | 4.70 | 617.50 | 7.96 | 1.73 | 3.09 | 6.04 |
| BITS (max) | 20.71 | 18.75 | 1.18 | 443.93 | 0.00 | 0.76 | 4.44 | 7.77 |
| BITS (sample) | 25.37 | 22.37 | 1.27 | 780.52 | 16.84 | 1.86 | 4.29 | 7.78 |
| BITS | 9.97 | 8.66 | 0.46 | 1014.43 | 22.94 | 1.96 | 3.75 | 11.21 |
| Dataset | 18.36 | 17.49 | 0.84 | 327.25 | 0.00 | 0.00 | 0.00 | 0.00 |

Table 2: Closed-loop simulation results on nuScenes.

| Method | FR | coll | offroad | coverage | diversity | speed | jerk | sADE |
|---|---|---|---|---|---|---|---|---|
| SimNet [22] | 24.58 | 15.80 | 3.05 | 395.21 | 0.00 | 6.81 | 18.61 | 7.01 |
| SocialGAN [30] | 71.33 | 33.81 | 19.71 | 154.21 | 2.29 | 38.44 | 42.51 | 19.89 |
| SocialGAN+p | 71.86 | 35.84 | 18.26 | 151.61 | 2.32 | 37.07 | 42.48 | 19.76 |
| TPP [34] | 49.76 | 33.23 | 8.27 | 489.36 | 7.06 | 8.37 | 4.61 | 12.13 |
| TPP+p | 9.84 | 9.52 | 0.04 | 661.07 | 7.73 | 8.17 | 5.96 | 13.09 |
| TrafficSim [23] | 27.08 | 20.39 | 3.42 | 861.00 | 4.28 | 10.03 | 10.01 | 10.51 |
| TrafficSim+p | 25.97 | 18.45 | 3.61 | 933.53 | 4.43 | 10.44 | 11.93 | 11.06 |
| BITS (max) | 13.24 | 11.51 | 0.30 | 559.58 | 0.00 | 6.35 | 13.87 | 6.41 |
| BITS (sample) | 14.72 | 13.79 | 0.17 | 888.12 | 6.36 | 6.54 | 13.44 | 6.70 |
| BITS | 6.63 | 5.67 | 0.14 | 1122.94 | 8.40 | 6.91 | 13.20 | 8.39 |
| Dataset | 11.99 | 11.39 | 0.42 | 397.92 | 0.00 | 0.00 | 0.00 | 0.00 |

Baselines. We consider methods from both the traffic simulation and trajectory prediction literature. SimNet [22] is a deterministic behavior-cloning model for traffic simulation. TrafficSim is an agent-centric adaptation of the original traffic simulation method in [23] that features an isotropic Gaussian CVAE. We remove the scene consistency loss in training since we do not assume control over all agents. SocialGAN [30] learns to generate trajectories through adversarial imitation. TPP is adapted from Trajectron++ [34] and consists of a discrete CVAE with a Gaussian trajectory decoder for each discrete mode. We also consider variants of these methods augmented with our prediction-and-planning module (marked with “+p”), i.e., selecting future action samples with a cost function. We also evaluate two ablations of our method: BITS (max) takes the maximum-likelihood action instead of sampling, and BITS (sample) samples actions for rollouts without the prediction-and-planning module described in Sec. 3.3. All methods share the same rasterized input format, ResNet-18 encoder backbone, and MLP-based trajectory decoder networks.

Metrics. As mentioned in Sec. 3.4, designing evaluation metrics for traffic simulation is challenging: no single quantity summarizes the performance of a method, and we cannot simply compare against ground-truth dataset trajectories because our goal is to generate new and diverse simulation rollouts. To address this problem, we consider three types of evaluation metrics.

Environment metrics measure rule violations, environment coverage, and trajectory diversity. Both coverage and diversity as described in Sec. 3.4 are calculated from 5 simulation trials with different seeds per scene. We define a critical failure as an agent colliding with other agents or driving off-road for more than 1s. Failure Rate (FR) is the average fraction of agents experiencing a critical failure in a scene. We also report raw collision rate (coll) and road departure rate (offroad).
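The failure-rate bookkeeping can be sketched from per-step event flags. The text leaves some details open, so this sketch makes explicit assumptions: the 1 s threshold applies to both collision and road departure, it is measured as the longest run of consecutive flagged steps (strictly more than 10 steps at 10 Hz), and the raw coll and offroad rates count an agent if the event occurs at any step. The function names are hypothetical.

```python
def longest_run(flags):
    """Length of the longest run of consecutive True values."""
    best = cur = 0
    for f in flags:
        cur = cur + 1 if f else 0
        best = max(best, cur)
    return best


def scene_failure_metrics(collided, offroad, hz=10, min_seconds=1.0):
    """Return (FR, coll, offroad) for one scene as fractions of agents.

    collided, offroad: per-agent lists of per-step booleans.
    """
    min_steps = round(hz * min_seconds)
    n = len(collided)
    failed = sum(1 for c, o in zip(collided, offroad)
                 if longest_run(c) > min_steps or longest_run(o) > min_steps)
    raw_coll = sum(1 for c in collided if any(c))
    raw_off = sum(1 for o in offroad if any(o))
    return failed / n, raw_coll / n, raw_off / n
```

Averaging these per-scene fractions over all evaluation scenes would then give the reported rates.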

Dataset metrics compare simulation and ground-truth data statistics. They are computed as a normalized Wasserstein distance between histograms of the driving profiles of the simulated and recorded trajectories. In the main paper we focus on speed and jerk (the latter a common driver-comfort metric) and include others in the Appendix. We also report the scene Average Displacement Error (sADE), which measures the average position difference between simulated and recorded trajectories. Note that sADE is not suitable for measuring simulation realism and is included only as a reference, since it heavily penalizes plausible alternative behaviors (e.g., turning left vs. going straight).
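The histogram comparison and sADE can be sketched as follows. This assumes both profiles are binned on identical, equal-width bins and normalized to unit mass; under that assumption the 1D Wasserstein-1 distance equals the bin width times the summed absolute difference of the two CDFs. Any further normalization behind the reported "normalized" distance is not specified in the text and is not reproduced here; `histogram` and `scene_ade` are hypothetical helpers.

```python
import math


def histogram(values, lo, hi, n_bins):
    """Unit-mass histogram on equal-width bins over [lo, hi]; returns (probs, width)."""
    counts = [0] * n_bins
    width = (hi - lo) / n_bins
    for v in values:
        idx = min(n_bins - 1, max(0, int((v - lo) // width)))
        counts[idx] += 1
    total = sum(counts)
    return [c / total for c in counts], width


def wasserstein_1d(p, q, width):
    """W1 between two unit-mass histograms on identical bins: width * sum |CDF_p - CDF_q|."""
    cp = cq = dist = 0.0
    for a, b in zip(p, q):
        cp += a
        cq += b
        dist += abs(cp - cq)
    return dist * width


def scene_ade(sim, rec):
    """sADE: mean Euclidean distance over all agents and time steps."""
    errs = [math.hypot(sx - rx, sy - ry)
            for s_agent, r_agent in zip(sim, rec)
            for (sx, sy), (rx, ry) in zip(s_agent, r_agent)]
    return sum(errs) / len(errs)
```

The CDF form makes the cost of moving probability mass proportional to how many bins it travels, so a speed profile shifted by a small amount scores better than one shifted by a large amount, which a bin-wise difference would not capture.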

Finally, learned metrics, as described in Sec. 3.4, measure simulation realism based on a likelihood model trained on real-world driving logs.

4.2 Main results

Table 1 and Table 2 show quantitative results of closed-loop simulation on the Lyft and nuScenes datasets, respectively. Fig. 2 qualitatively visualizes trajectories generated by selected methods, and Fig. 3 shows a more detailed analysis of time-to-failure caused by road departure. We make the following core observations from these results.

Recorded driving data is noisy. As stated above, both datasets contain some labeling noise, indicated by non-zero failure rates for the ground-truth data (labeled “Dataset”), with higher noise in Lyft than in nuScenes and the majority of errors stemming from vehicle-vehicle collisions due to imprecise bounding box labels. Our method BITS achieves lower failure rates than even the recorded trajectories in both datasets.

Sample efficiency. nuScenes contains far fewer training samples than Lyft, necessitating high sample efficiency in order for a policy to manage compounding errors over long simulations. As shown in Table 2, even without the prediction-and-planning module, both variants of BITS achieve low failure rates compared to other baselines. For a more direct analysis, we also report the mean time-to-failure in Fig. 3, observing that failure rates in nuScenes increase significantly over the course of simulation for the non-hierarchical policy baselines and remain low for BITS and its ablations.

BITS generates diverse and stable simulations. We observe that the baseline methods exhibit a trade-off between generating diverse rollouts and overfitting to a single behavior mode. For example, in Lyft, TPP suffers from mode collapse, which yields low failure rates at the cost of low diversity. TrafficSim achieves relatively high diversity and coverage, but also high failure rates. This observation is corroborated by the visualizations in Fig. 2: all simulation trials by TPP are visually identical and resemble the ground truth (right, titled “Dataset”), while the trajectories generated by TrafficSim are diverse but some suffer from collisions and road departures. In contrast, BITS simultaneously attains high diversity and coverage with a low failure rate. This contrast is more pronounced in nuScenes, where the training set is small. BITS achieves a balanced performance even without the prediction-and-planning module thanks to its high sample efficiency.

Prediction-and-planning is not always effective. The prediction-and-planning module is generally effective in reducing failure rates, with two important exceptions: (1) when action samples are not diverse, and (2) when all action samples lead to failure. Case (1) is exemplified by TPP in the Lyft environment (Fig. 2), where the model's predictions overfit to a single behavior mode. Case (2) is exemplified by SocialGAN in both datasets: while its simulations are relatively diverse, they have high failure rates, indicating poor action sample quality. In both cases, the prediction-and-planning module has a negligible effect on the simulation.

Quantifying behavioral realism. As discussed above, evaluating simulation realism remains a challenging open problem because there is no single correct answer for traffic simulation. Here we consider both dataset statistics and learned metrics as a proxy for quantifying behavioral realism. For Lyft, we see that all methods achieve comparable speed and jerk statistical distances relative to the recorded trajectories. As expected, SimNet has the lowest sADE due to its behavior cloning objective. In nuScenes, BITS achieves comparable performance to SimNet in dataset metrics, showing that our method does not have to sacrifice behavioral realism for diversity and stability.

Finally, we consider the learned metric described in Sec. 3.4. To verify that this occupancy-likelihood-based metric captures meaningful data likelihoods, we roll out ground-truth trajectories with different levels of Ornstein-Uhlenbeck noise [65] and measure the predicted likelihood score. As shown in Fig. 3, the likelihood score decreases smoothly as the noise intensity grows, indicating that the learned metric captures the effect of disturbances well. We report the learned likelihood scores for representative baselines in Table 3. Sensibly, in both datasets the ground-truth trajectories have the highest likelihood scores. BITS yields comparable or higher likelihood scores than the other baselines, with scores on par with the ground-truth trajectories on nuScenes.
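One way to produce such perturbed rollouts is sketched below. It assumes, as our own simplification, that the noise is added directly to the x/y positions of a recorded trajectory; the perturbation could equally be injected into the actions during rollout. The Ornstein-Uhlenbeck process $dx = \theta(\mu - x)\,dt + \sigma\,dW$ is simulated with an Euler-Maruyama step, and `theta`, `sigma`, and the function names are illustrative.

```python
import math
import random
from typing import List, Tuple


def ou_noise(n_steps: int, dt: float, theta: float, sigma: float,
             mu: float = 0.0, seed: int = 0) -> List[float]:
    """Euler-Maruyama samples of dx = theta * (mu - x) dt + sigma dW, starting at x0 = mu."""
    rng = random.Random(seed)
    x = mu
    out = []
    for _ in range(n_steps):
        # Mean-reverting drift plus Gaussian increment with variance sigma^2 * dt
        x += theta * (mu - x) * dt + sigma * math.sqrt(dt) * rng.gauss(0.0, 1.0)
        out.append(x)
    return out


def perturb_trajectory(traj: List[Tuple[float, float]], dt: float, theta: float,
                       sigma: float, seed: int = 0) -> List[Tuple[float, float]]:
    """Add independent, temporally correlated OU noise to the x and y coordinates."""
    nx = ou_noise(len(traj), dt, theta, sigma, seed=seed)
    ny = ou_noise(len(traj), dt, theta, sigma, seed=seed + 1)
    return [(x + ex, y + ey) for (x, y), ex, ey in zip(traj, nx, ny)]
```

Unlike i.i.d. Gaussian jitter, OU noise is temporally correlated, so increasing `sigma` produces smooth drifts away from the recorded path, which is closer to how a poor driving policy deviates.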

5 Discussion and conclusions

Limitations and broader impact. Our work has a few important limitations. First, despite our efforts, devising evaluation metrics for traffic simulation remains an open research problem. The proposed metrics can only serve as proxies for measuring behavior realism. In particular, the learned metric is likely biased by the model choice and the training data. Second, we do not consider traffic rules (e.g., driving on the correct side of the road, obeying traffic lights) in evaluation and will work on enriching our simulation software framework with additional environment constraints. Finally, a limitation that might have broader impact is that data-driven simulation models are inherently biased by their training data, which is often curated from a small number of geographic regions. As a result, verification pipelines built on top of such models may be limited by the scenarios that they can generate. This may cause potential safety concerns for deploying tested vehicles in regions that are less represented in the training data.

Conclusions. In this work, we present Bi-level Imitation for Traffic Simulation (BITS), a novel data-driven traffic simulation model. BITS achieves high sample efficiency and behavioral diversity through a bi-level imitation learning formulation, generating stable long-horizon rollouts aided by a prediction-and-planning module. To facilitate evaluation and future studies in the field, we develop and open source a software tool that unifies data formats from different AV datasets and transforms scenes from existing datasets into interactive simulation environments. We compare BITS against a number of competitive baselines on two large-scale real-world AV datasets and find that BITS can generate diverse, realistic, and stable traffic simulations.

References

- [1] Waymo, “Safety report,” 2021. Available at https://waymo.com/safety/safety-report. Retrieved on July 4, 2021.

- [2] Uber Advanced Technologies Group, “A principled approach to safety,” 2020. Available at https://uber.app.box.com/v/UberATGSafetyReport.

- [3] NVIDIA, “Self-driving safety report,” 2021. Available at https://images.nvidia.com/content/self-driving-cars/safety-report/auto-print-self-driving-safety-report-2021-update.pdf.

- [4] Argo AI, “Developing a self-driving system you can trust,” Apr. 2021. Available at https://www.argo.ai/wp-content/uploads/2021/04/ArgoSafetyReport.pdf.

- [5] Zoox, “Safety report volume 2.0,” 2021. Available at https://zoox.com/safety/.

- [6] Motional, “Voluntary safety self-assessment,” 2021. Available at https://drive.google.com/file/d/1JjfQByU_hWvSfkWzQ8PK2ZOZfVCqQGDB/view.

- [7] General Motors, “Self-driving safety report,” 2018. Available at https://www.gm.com/content/dam/company/docs/us/en/gmcom/gmsafetyreport.pdf.

- [8] P. A. Lopez, M. Behrisch, L. Bieker-Walz, J. Erdmann, Y.-P. Flötteröd, R. Hilbrich, L. Lücken, J. Rummel, P. Wagner, and E. Wießner, “Microscopic traffic simulation using sumo,” in International Conference on Intelligent Transportation Systems (ITSC), pp. 2575–2582, 2018.

- [9] J. Casas, J. L. Ferrer, D. Garcia, J. Perarnau, and A. Torday, “Traffic simulation with aimsun,” in Fundamentals of traffic simulation, pp. 173–232, Springer, 2010.

- [10] M. Fellendorf and P. Vortisch, “Microscopic traffic flow simulator vissim,” in Fundamentals of traffic simulation, pp. 63–93, Springer, 2010.

- [11] Waymo, “Motion prediction leaderboard,” 2021. Available at https://waymo.com/open/challenges/2021/motion-prediction/.

- [12] Argo AI, “Argoverse 2: Motion forecasting competition,” 2022. Available at https://eval.ai/challenge/1719/leaderboard/4098.

- [13] Lyft, “Motion prediction for autonomous vehicles,” 2021. Available at https://www.kaggle.com/competitions/lyft-motion-prediction-autonomous-vehicles/leaderboard.

- [14] Motional, “nuScenes prediction task leaderboard,” 2021. Available at https://www.nuscenes.org/prediction?externalData=all&mapData=all&modalities=Any.

- [15] S. Ross and D. Bagnell, “Efficient reductions for imitation learning,” in Proceedings of the thirteenth international conference on artificial intelligence and statistics, pp. 661–668, JMLR Workshop and Conference Proceedings, 2010.

- [16] J. Houston, G. Zuidhof, L. Bergamini, Y. Ye, A. Jain, S. Omari, V. Iglovikov, and P. Ondruska, “One thousand and one hours: Self-driving motion prediction dataset,” in Conf. on Robot Learning, 2020.

- [17] H. Caesar, V. Bankiti, A. H. Lang, S. Vora, V. E. Liong, Q. Xu, A. Krishnan, Y. Pan, G. Baldan, and O. Beijbom, “nuScenes: A multimodal dataset for autonomous driving,” in IEEE Conf. on Computer Vision and Pattern Recognition, 2020.

- [18] B. Ivanovic, K.-H. Lee, P. Tokmakov, B. Wulfe, R. McAllister, A. Gaidon, and M. Pavone, “Heterogeneous-agent trajectory forecasting incorporating class uncertainty,” arXiv preprint arXiv:2104.12446, 2021.

- [19] G. Kotusevski and K. Hawick, “A review of traffic simulation software,” Research Letters in the Information and Mathematical Sciences, vol. 13, pp. 35–54, 2009.

- [20] P. M. Ejercito, K. G. E. Nebrija, R. P. Feria, and L. L. Lara-Figueroa, “Traffic simulation software review,” in 2017 8th International Conference on Information, Intelligence, Systems & Applications (IISA), pp. 1–4, IEEE, 2017.

- [21] E. Brockfeld, R. D. Kühne, A. Skabardonis, and P. Wagner, “Toward benchmarking of microscopic traffic flow models,” Transportation research record, vol. 1852, no. 1, pp. 124–129, 2003.

- [22] L. Bergamini, Y. Ye, O. Scheel, L. Chen, C. Hu, L. Del Pero, B. Osiński, H. Grimmett, and P. Ondruska, “Simnet: Learning reactive self-driving simulations from real-world observations,” in 2021 IEEE International Conference on Robotics and Automation (ICRA), pp. 5119–5125, IEEE, 2021.

- [23] S. Suo, S. Regalado, S. Casas, and R. Urtasun, “Trafficsim: Learning to simulate realistic multi-agent behaviors,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 10400–10409, 2021.

- [24] D. Rempe, J. Philion, L. J. Guibas, S. Fidler, and O. Litany, “Generating useful accident-prone driving scenarios via a learned traffic prior,” in IEEE Conf. on Computer Vision and Pattern Recognition, 2022.

- [25] Waymo, “Waymo Open Dataset: An autonomous driving dataset.” https://waymo.com/open/, 2019.

- [26] D. Helbing and P. Molnár, “Social force model for pedestrian dynamics,” Physical Review E, vol. 51, no. 5, pp. 4282–4286, 1995.

- [27] R. Mehran, A. Oyama, and M. Shah, “Abnormal crowd behavior detection using social force model,” in IEEE Conf. on Computer Vision and Pattern Recognition, pp. 935–942, 2009.

- [28] K. M. Kitani, B. D. Ziebart, J. A. Bagnell, and M. Hebert, “Activity forecasting,” in European Conf. on Computer Vision, 2012.

- [29] M. Treiber, A. Hennecke, and D. Helbing, “Congested traffic states in empirical observations and microscopic simulations,” Physical Review E, vol. 62, no. 2, pp. 1805–1824, 2000.

- [30] A. Gupta, J. Johnson, F. Li, S. Savarese, and A. Alahi, “Social GAN: Socially acceptable trajectories with generative adversarial networks,” in IEEE Conf. on Computer Vision and Pattern Recognition, 2018.

- [31] A. Kuefler, J. Morton, T. A. Wheeler, and M. Kochenderfer, “Imitating driver behavior with generative adversarial networks,” in IEEE Intelligent Vehicles Symposium, 2017.

- [32] Y. C. Tang and R. Salakhutdinov, “Multiple futures prediction,” in Conf. on Neural Information Processing Systems, 2019.

- [33] N. Lee, W. Choi, P. Vernaza, C. B. Choy, P. H. S. Torr, and M. Chandraker, “DESIRE: distant future prediction in dynamic scenes with interacting agents,” in IEEE Conf. on Computer Vision and Pattern Recognition, 2017.

- [34] T. Salzmann, B. Ivanovic, P. Chakravarty, and M. Pavone, “Trajectron++: Dynamically-feasible trajectory forecasting with heterogeneous data,” in European Conf. on Computer Vision, 2020.

- [35] T. Zhao, Y. Xu, M. Monfort, W. Choi, C. Baker, Y. Zhao, Y. Wang, and Y. N. Wu, “Multi-agent tensor fusion for contextual trajectory prediction,” in IEEE Conf. on Computer Vision and Pattern Recognition, 2019.

- [36] S. Casas, C. Gulino, R. Liao, and R. Urtasun, “SpAGNN: Spatially-aware graph neural networks for relational behavior forecasting from sensor data,” in Proc. IEEE Conf. on Robotics and Automation, 2020.

- [37] S. Casas, C. Gulino, S. Suo, K. Luo, R. Liao, and R. Urtasun, “Implicit latent variable model for scene-consistent motion forecasting,” in European Conf. on Computer Vision, 2020.

- [38] A. Rudenko, L. Palmieri, M. Herman, K. M. Kitani, D. M. Gavrila, and K. O. Arras, “Human motion trajectory prediction: A survey,” Int. Journal of Robotics Research, vol. 39, no. 8, pp. 895–935, 2020.

- [39] H. Zhao and R. P. Wildes, “Where are you heading? dynamic trajectory prediction with expert goal examples,” in IEEE Int. Conf. on Computer Vision, 2019.

- [40] K. Mangalam, H. Girase, S. Agarwal, K.-H. Lee, E. Adeli, J. Malik, and A. Gaidon, “It is not the journey but the destination: Endpoint conditioned trajectory prediction,” in European Conf. on Computer Vision, 2020.

- [41] N. Rhinehart, R. McAllister, K. Kitani, and S. Levine, “PRECOG: Prediction conditioned on goals in visual multi-agent settings,” in IEEE Int. Conf. on Computer Vision, 2019.

- [42] C. Choi, S. Malla, A. Patil, and J. H. Choi, “DROGON: A trajectory prediction model based on intention-conditioned behavior reasoning,” in Conf. on Robot Learning, 2020.

- [43] H. Zhao, J. Gao, T. Lan, C. Sun, B. Sapp, B. Varadarajan, Y. Shen, Y. Shen, Y. Chai, C. Schmid, C. Li, and D. Anguelov, “TNT: Target-driveN Trajectory Prediction,” in Conf. on Robot Learning, 2020.

- [44] J. Gu, C. Sun, and H. Zhao, “DenseTNT: End-to-end trajectory prediction from dense goal sets,” in IEEE Int. Conf. on Computer Vision, 2021.

- [45] T. Gilles, S. Sabatini, D. Tsishkou, B. Stanciulescu, and F. Moutarde, “HOME: Heatmap output for future motion estimation,” in Proc. IEEE Int. Conf. on Intelligent Transportation Systems, 2021.

- [46] T. Gilles, S. Sabatini, D. Tsishkou, B. Stanciulescu, and F. Moutarde, “GOHOME: Graph-oriented heatmap output for future motion estimation,” in Proc. IEEE Conf. on Robotics and Automation, 2022.

- [47] T. Gilles, S. Sabatini, D. Tsishkou, B. Stanciulescu, and F. Moutarde, “THOMAS: Trajectory heatmap output with learned multi-agent sampling,” in Int. Conf. on Learning Representations, 2022.

- [48] R. S. Sutton, D. Precup, and S. Singh, “Between mdps and semi-mdps: A framework for temporal abstraction in reinforcement learning,” Artificial intelligence, vol. 112, no. 1-2, pp. 181–211, 1999.

- [49] P. Dayan and G. E. Hinton, “Feudal reinforcement learning,” Advances in neural information processing systems, vol. 5, 1992.

- [50] P.-L. Bacon, J. Harb, and D. Precup, “The option-critic architecture,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 31, 2017.

- [51] A. S. Vezhnevets, S. Osindero, T. Schaul, N. Heess, M. Jaderberg, D. Silver, and K. Kavukcuoglu, “Feudal networks for hierarchical reinforcement learning,” in International Conference on Machine Learning, pp. 3540–3549, PMLR, 2017.

- [52] H. Le, N. Jiang, A. Agarwal, M. Dudik, Y. Yue, and H. Daumé III, “Hierarchical imitation and reinforcement learning,” in International conference on machine learning, pp. 2917–2926, PMLR, 2018.

- [53] K. Shiarlis, M. Wulfmeier, S. Salter, S. Whiteson, and I. Posner, “Taco: Learning task decomposition via temporal alignment for control,” in International Conference on Machine Learning, pp. 4654–4663, PMLR, 2018.

- [54] K. Pertsch, O. Rybkin, F. Ebert, S. Zhou, D. Jayaraman, C. Finn, and S. Levine, “Long-horizon visual planning with goal-conditioned hierarchical predictors,” Advances in Neural Information Processing Systems, vol. 33, pp. 17321–17333, 2020.

- [55] T. Shu, C. Xiong, and R. Socher, “Hierarchical and interpretable skill acquisition in multi-task reinforcement learning,” arXiv preprint arXiv:1712.07294, 2017.

- [56] C. Lynch, M. Khansari, T. Xiao, V. Kumar, J. Tompson, S. Levine, and P. Sermanet, “Learning latent plans from play,” in Conference on robot learning, pp. 1113–1132, PMLR, 2020.

- [57] A. Mandlekar, F. Ramos, B. Boots, S. Savarese, L. Fei-Fei, A. Garg, and D. Fox, “Iris: Implicit reinforcement without interaction at scale for learning control from offline robot manipulation data,” in 2020 IEEE International Conference on Robotics and Automation (ICRA), pp. 4414–4420, IEEE, 2020.

- [58] A. Mandlekar, D. Xu, R. Martín-Martín, S. Savarese, and L. Fei-Fei, “Learning to generalize across long-horizon tasks from human demonstrations,” arXiv preprint arXiv:2003.06085, 2020.

- [59] P. Florence, C. Lynch, A. Zeng, O. A. Ramirez, A. Wahid, L. Downs, A. Wong, J. Lee, I. Mordatch, and J. Tompson, “Implicit behavioral cloning,” in Conference on Robot Learning, pp. 158–168, PMLR, 2022.

- [60] S. Ross, G. J. Gordon, and J. A. Bagnell, “A reduction of imitation learning and structured prediction to no-regret online learning,” in AI & Statistics, 2011.

- [61] S. Konev, K. Brodt, and A. Sanakoyeu, “Motioncnn: A strong baseline for motion prediction in autonomous driving,” 2021.

- [62] S. M. LaValle, “Better unicycle models,” in Planning Algorithms, pp. 743–743, Cambridge Univ. Press, 2006.

- [63] K. He, G. Gkioxari, P. Dollár, and R. Girshick, “Mask r-cnn,” in Proceedings of the IEEE international conference on computer vision, pp. 2961–2969, 2017.

- [64] O. Pele and M. Werman, “Fast and robust earth mover’s distances,” in 2009 IEEE 12th International Conference on Computer Vision, pp. 460–467, IEEE, September 2009.

- [65] G. E. Uhlenbeck and L. S. Ornstein, “On the theory of the brownian motion,” Physical review, vol. 36, no. 5, p. 823, 1930.

- [66] A. Zeng, S. Song, S. Welker, J. Lee, A. Rodriguez, and T. Funkhouser, “Learning synergies between pushing and grasping with self-supervised deep reinforcement learning,” in 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 4238–4245, IEEE, 2018.

6 Appendix

6.1 Implementation details

As shown in Fig. 4, our work includes three learned components: a spatial goal network, a goal-conditional policy, and a future state predictor. We describe their shared input, network backbones, and output heads below. Table 4 lists key hyperparameter selections.

Input. All three networks take a rasterized decision context as input. A decision context includes (1) a semantic map with information such as lane markings, crosswalks, and lane boundaries, and (2) the kinematic history states of all agents (vehicles) in view. For the semantic map, we follow the original rasterization schemes of the Lyft [16] and nuScenes [17] development kits, respectively. Specifically, the Lyft semantic map is a 3-channel tensor that encodes lane boundaries, lane areas, traffic light faces, and crosswalks. The nuScenes semantic map is a 7-channel tensor encoding lanes, road segments, drivable area, road dividers, lane dividers, pedestrian crossings, and walkways. Following prior works [22, 61], we use a binary occupancy map of the same size as the semantic map to represent the kinematic states (i.e., position, heading, and extent) of agents at a given timestep. Each agent in view is rasterized as a filled bounding box on the occupancy map, and state histories are represented as multi-channel occupancy maps. We concatenate the semantic map and the rasterized agent history map channel-wise and use the resulting tensor as input for all models.
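A minimal sketch of the agent rasterization and channel-wise concatenation follows. It assumes each agent is given as an oriented box `(x, y, heading, length, width)` in meters in an ego-centered frame whose origin is the raster center, and marks a pixel as occupied when its center falls inside the box; it also assumes one occupancy channel per history step. The function names are hypothetical, and the dev-kit rasterizers for the semantic channels are not reproduced.

```python
import math
from typing import List, Tuple

Grid = List[List[float]]
Box = Tuple[float, float, float, float, float]  # (x, y, heading, length, width) in meters


def rasterize_boxes(boxes: List[Box], size: int = 224, pixel_m: float = 0.5) -> Grid:
    """Binary occupancy map with every agent drawn as a filled oriented rectangle."""
    grid = [[0.0] * size for _ in range(size)]
    half = size / 2.0
    for x, y, yaw, length, width in boxes:
        c, s = math.cos(yaw), math.sin(yaw)
        for row in range(size):
            for col in range(size):
                # Pixel center in meters, origin at the raster center
                px = (col + 0.5 - half) * pixel_m
                py = (row + 0.5 - half) * pixel_m
                dx, dy = px - x, py - y
                # Rotate the offset into the box frame (longitudinal, lateral)
                lon = c * dx + s * dy
                lat = -s * dx + c * dy
                if abs(lon) <= length / 2 and abs(lat) <= width / 2:
                    grid[row][col] = 1.0
    return grid


def build_input(semantic: List[Grid], history: List[List[Box]],
                size: int = 224, pixel_m: float = 0.5) -> List[Grid]:
    """Concatenate semantic channels with one occupancy channel per history step."""
    return semantic + [rasterize_boxes(boxes, size, pixel_m) for boxes in history]
```

With the Table 4 settings, a Lyft input would have 3 semantic channels plus one occupancy channel per history step under this assumption. The brute-force loop over all pixels keeps the geometry explicit; a practical implementation would restrict it to each box's bounding window or use a polygon-fill routine.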

Architecture details. All three networks use an identical ResNet-18 ConvNet backbone to encode an input decision context tensor into a compact feature vector. Here we describe the output heads of each network.

| Key params | Values |
|---|---|
| Step time (s) | 0.1 |
| History length (steps) | 10 |
| Prediction length (steps) | 20 |

| Input | Values |
|---|---|
| Semantic map size - Lyft | (3, 224, 224) |
| Semantic map size - nuScenes | (7, 224, 224) |
| Pixel size (m/pixel) | 0.5 |

| Training params | Values |
|---|---|
| Learning rate | 0.0001 |
| Batch size | 100 |
| Optimizer | Adam |
| Loss - trajectory prediction | L2 regression |
| Loss - spatial occupancy | CrossEntropy |

| Simulation params | Values |
|---|---|
| Num simulation steps | 200 |
| Num action samples | 50 |
| -step action | 5 |
| Planning cost weight - collision | 10.0 |
| Planning cost weight - offroad | 1.0 |
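The step-based settings in Table 4 translate into physical horizons with simple arithmetic. The hypothetical config object below (the name `BitsConfig` is illustrative only) makes these conversions explicit:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BitsConfig:
    """Hypothetical container for a subset of the Table 4 values."""
    step_time_s: float = 0.1
    history_steps: int = 10
    prediction_steps: int = 20
    raster_px: int = 224
    pixel_m: float = 0.5
    num_sim_steps: int = 200

    @property
    def history_s(self) -> float:
        """Observed history duration in seconds."""
        return self.history_steps * self.step_time_s

    @property
    def prediction_s(self) -> float:
        """Predicted trajectory duration in seconds."""
        return self.prediction_steps * self.step_time_s

    @property
    def raster_extent_m(self) -> float:
        """Side length of the square raster in meters."""
        return self.raster_px * self.pixel_m

    @property
    def episode_s(self) -> float:
        """Length of one closed-loop simulation episode in seconds."""
        return self.num_sim_steps * self.step_time_s
```

With these values the model sees 1 s of history, predicts 2 s ahead, observes a 112 m by 112 m raster, and each simulation episode lasts 20 s.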

Goal-conditional policy. Given the feature vector and a goal pose, i.e., a target position and heading in the agent coordinate frame, the goal-conditional policy uses an MLP-based trajectory decoder to generate a fixed-length trajectory as actions. As mentioned in the main text and inspired by prior works [34, 23], instead of directly regressing each state of an agent's trajectory, the decoder predicts control inputs (velocity and change of heading) at each future time step and forward-integrates them through the agent's dynamics model (e.g., extended unicycle dynamics [62] for vehicles) to obtain the trajectory predictions. The model is trained end-to-end with an L2 regression loss against the recorded trajectories in the dataset, similar to a standard behavior cloning objective.
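The forward integration of predicted controls can be sketched as below. This is a simplified kinematic unicycle, not the extended unicycle model of [62]: it assumes the decoder outputs a speed (m/s) and a per-step heading change (rad) for each step, integrated with explicit Euler at the 0.1 s step time. Function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Pose = Tuple[float, float, float]  # (x, y, heading)


def rollout_unicycle(x: float, y: float, yaw: float,
                     controls: Sequence[Tuple[float, float]],
                     dt: float = 0.1) -> List[Pose]:
    """Integrate (speed, heading change) controls into a pose sequence.

    Assumption: heading is updated first, then the position advances along the
    new heading at the commanded speed (explicit Euler).
    """
    traj = []
    for speed, dyaw in controls:
        yaw += dyaw
        x += speed * math.cos(yaw) * dt
        y += speed * math.sin(yaw) * dt
        traj.append((x, y, yaw))
    return traj


def l2_trajectory_loss(pred: Sequence[Pose], target: Sequence[Tuple[float, float]]) -> float:
    """Mean squared position error between integrated poses and recorded (x, y) positions."""
    return sum((px - tx) ** 2 + (py - ty) ** 2
               for (px, py, _), (tx, ty) in zip(pred, target)) / len(target)
```

Because the loss is applied after integration, gradients flow through the dynamics into the predicted controls, and every decoded trajectory is kinematically consistent by construction.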

Future state predictor. This network is part of our prediction-and-planning module, which selects actions based on the predicted future states of the environment. Since we assume an analytical vehicle dynamics model and a known static map, the main task of the network is to predict the future motion trajectories of nearby agents. As described in the main text, we follow a typical trajectory prediction pipeline and featurize each agent by its local and global scene context. Specifically, we use RoIAlign [63] to crop per-agent features from an intermediate layer of the ResNet-18 encoder, taking the crop at the end of the second block of the network. The per-agent features are then concatenated with a global scene context feature (extracted by the final fully-connected layer of the ResNet-18) to make the final trajectory prediction for each neighboring agent. We only make predictions for agents that are visible in the rasterized map. The model is trained with an L2 regression loss against the recorded trajectories of neighboring agents in the dataset. The goal-conditional policy and the future state predictor share encoder weights and are trained jointly.
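The per-agent featurization can be illustrated with a pure-Python, single-channel RoIAlign. In practice one would call a library operator such as `torchvision.ops.roi_align`, which averages several bilinear samples per output bin; this sketch takes one sample at each bin center and assumes `fmap[i][j]` sits at coordinate `(x=j, y=i)`. The box is given in feature-map coordinates, and all names are illustrative.

```python
import math
from typing import List, Sequence, Tuple


def bilinear(fmap: List[List[float]], x: float, y: float) -> float:
    """Bilinearly sample a 2-D map at continuous (x, y), clamping to the borders."""
    h, w = len(fmap), len(fmap[0])
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    ax, ay = x - x0, y - y0
    top = fmap[y0][x0] * (1 - ax) + fmap[y0][x1] * ax
    bot = fmap[y1][x0] * (1 - ax) + fmap[y1][x1] * ax
    return top * (1 - ay) + bot * ay


def roi_align(fmap: List[List[float]], box: Tuple[float, float, float, float],
              out_h: int, out_w: int) -> List[List[float]]:
    """RoIAlign with one sample per output bin; box edges are not quantized."""
    x1, y1, x2, y2 = box
    bw, bh = (x2 - x1) / out_w, (y2 - y1) / out_h
    return [[bilinear(fmap, x1 + (j + 0.5) * bw, y1 + (i + 0.5) * bh)
             for j in range(out_w)] for i in range(out_h)]


def agent_feature(crop: List[List[float]], global_feat: Sequence[float]) -> List[float]:
    """Flatten the local crop and append the global scene feature."""
    return [v for row in crop for v in row] + list(global_feat)
```

Avoiding quantization of the box edges is what distinguishes RoIAlign from RoIPool: small sub-pixel shifts of an agent's box change the crop smoothly instead of snapping to the feature grid.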

Spatial goal network. The spatial goal predictor extends the ConvNet encoder backbone with a U-Net-style decoder (mirroring the encoder size) to generate the spatial goal prediction. Specifically, the model outputs a 2D grid of goal likelihoods as well as residual components that refine the predicted goal location. The output is a 4-channel tensor with the same spatial size as the input rasterized map. One channel holds the likelihood of the coarse goal location as a 2D probability map, with a grid size of 0.5 m per pixel. Two channels hold, at each pixel, the scalar residuals (in meters) relative to that grid location, and the remaining channel holds the heading prediction (in radians) at each grid location. Once a location is selected based on the probability map, it is corrected by the residuals and transformed into a goal pose in the agent's local coordinate frame. Note that it is possible to further discretize the heading prediction into bins [66], but we empirically found that the heading prediction has negligible impact on the goal-conditional policy. We treat the 2D location map as a joint distribution over locations and train it with a cross-entropy loss; the other channels are trained with regression losses (e.g., squared error).
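Goal decoding from the 4-channel output can be sketched as follows: a softmax over all cells (treating the location map as one joint distribution), either an argmax or a sampled cell, then residual refinement. The sketch assumes the agent sits at the raster center with x along columns and y along rows; channel roles follow the text, but the function name and argument layout are hypothetical.

```python
import math
import random
from typing import List, Optional, Tuple

Grid = List[List[float]]


def decode_goal(logits: Grid, res_x: Grid, res_y: Grid, heading: Grid,
                pixel_m: float = 0.5, sample: bool = False,
                rng: Optional[random.Random] = None) -> Tuple[float, float, float]:
    """Select a goal cell, refine it with the residuals, and return (x, y, yaw)."""
    h, w = len(logits), len(logits[0])
    flat = [v for row in logits for v in row]
    # Numerically stable softmax over every cell of the grid
    m = max(flat)
    probs = [math.exp(v - m) for v in flat]
    z = sum(probs)
    probs = [p / z for p in probs]
    if sample:
        idx = (rng or random.Random()).choices(range(len(probs)), weights=probs, k=1)[0]
    else:
        idx = max(range(len(probs)), key=probs.__getitem__)
    row, col = divmod(idx, w)
    # Cell center in meters relative to the raster center, plus the metric residual
    x = (col + 0.5 - w / 2) * pixel_m + res_x[row][col]
    y = (row + 0.5 - h / 2) * pixel_m + res_y[row][col]
    return x, y, heading[row][col]
```

The coarse grid keeps the distribution multimodal (e.g., one peak per reachable lane), while the residual channels recover sub-pixel precision without enlarging the grid.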

Training setup. We train all models on shared cloud computing nodes equipped with NVIDIA Tesla V100 GPUs (32 GB memory). We train all models for 100k iterations (gradient steps) and select checkpoints based on their respective offline validation metrics (e.g., prediction error). On average, training takes 20 to 30 hours as a single-GPU job.

Cost functions for prediction and planning. As mentioned in the main text, we consider two rule-based costs: collision and road departure. Following prior work [34], we compute the collision cost from the distances between the four corners of the two bounding-box rectangles. The minimum distance is approximated as:

which is further illustrated in Fig. 5. We then add the following collision loss

where and are parameters used to shape the sigmoid loss. In our experiments, .
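A minimal sketch of a corner-based collision cost follows. It assumes, as our own reading rather than the exact formulation above, that the minimum distance is approximated by the closest pair among the 4 × 4 corner pairs of the two boxes and that the penalty is a logistic function of that distance; `margin` and `scale` are hypothetical shaping parameters, and all names are illustrative.

```python
import math
from typing import List, Tuple

Box = Tuple[float, float, float, float, float]  # (x, y, yaw, length, width)


def corners(box: Box) -> List[Tuple[float, float]]:
    """The four corners of an oriented rectangle in world coordinates."""
    x, y, yaw, length, width = box
    c, s = math.cos(yaw), math.sin(yaw)
    out = []
    for lon, lat in ((0.5, 0.5), (0.5, -0.5), (-0.5, -0.5), (-0.5, 0.5)):
        dx, dy = lon * length, lat * width
        out.append((x + c * dx - s * dy, y + s * dx + c * dy))
    return out


def approx_min_distance(a: Box, b: Box) -> float:
    """Approximate box-to-box distance by the closest pair of corners."""
    return min(math.dist(p, q) for p in corners(a) for q in corners(b))


def collision_cost(a: Box, b: Box, margin: float = 1.0, scale: float = 0.2) -> float:
    """Logistic penalty: close to 1 below `margin`, decaying toward (never exactly) 0 beyond it."""
    d = approx_min_distance(a, b)
    return 1.0 / (1.0 + math.exp((d - margin) / scale))
```

The logistic shape keeps the cost smooth and bounded, and it explains why the collision term is small but nonzero for well-separated agents. A corner-only distance can miss some overlaps (e.g., two long boxes crossing at their midpoints), which is one reason it is only an approximation.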

To calculate the road departure cost, we first generate a distance map that records the Manhattan distance to the drivable area in pixel space. Specifically, we pick a maximum distance constant, set all pixels inside the drivable area to 0 and all pixels outside the drivable area to this constant, and then repeat the following convolution step a fixed number of times:

Here each entry denotes the value of the distance map at the corresponding pixel coordinate. The resulting distance map assigns zero to points within the drivable area, with values increasing outside the drivable area until saturating at the maximum distance constant. We use this Manhattan distance value directly as a penalty. To account for vehicle size, we apply RoIAlign to the distance map with vehicle patches that take each vehicle's size and orientation into account. Note that both cost terms are close to zero for nominal trajectories, i.e., trajectories that result in neither collision nor road departure (the collision loss remains slightly nonzero because of the sigmoid function), which minimizes their effect when selecting among rule-following action samples.
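The distance-map construction can be sketched as an iterative 4-neighbor minimum update, which we assume is the intended convolution-like step: each pass lets distances propagate one pixel further, so after `d_max` passes every pixel within `d_max` steps of the drivable area holds its exact Manhattan distance and all others stay at `d_max`. The function name and `d_max` are illustrative.

```python
from typing import List


def manhattan_distance_map(drivable: List[List[bool]], d_max: int) -> List[List[int]]:
    """Manhattan (L1) distance to the drivable area in pixels, saturating at d_max."""
    h, w = len(drivable), len(drivable[0])
    # Initialization: 0 inside the drivable area, d_max everywhere else
    dist = [[0 if drivable[r][c] else d_max for c in range(w)] for r in range(h)]
    for _ in range(d_max):
        new = [row[:] for row in dist]
        for r in range(h):
            for c in range(w):
                # A pixel can be at most one step farther than any 4-neighbor
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < h and 0 <= cc < w:
                        new[r][c] = min(new[r][c], dist[rr][cc] + 1)
        dist = new
    return dist
```

Because values only ever decrease from their initialization, pixels farther than `d_max` from the road keep exactly `d_max`, which bounds the penalty for vehicles far off-road.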

Learned metrics. To compute the likelihood of a simulated trajectory, we use an occupancy-based prediction model that predicts where an agent will be at future time steps. The model has a structure similar to our spatial goal network and discretizes the position space into bins. It is trained with a cross-entropy loss, and its predictions are then used to compute the likelihoods of simulated trajectories. During evaluation, the likelihood of an agent's trajectory is calculated in a receding-horizon manner: at each time step, we compare the agent's rollout over the subsequent prediction horizon to the predicted occupancy, compute the likelihood, and aggregate over the whole rollout by taking the mean. The likelihood values of multiple agents in a scene are also aggregated by taking the mean.
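The receding-horizon aggregation can be sketched as below. It assumes, as our own choices, that per-step scores are mean log-probabilities with a small floor `eps`, and that the occupancy prediction made at step `t` is a dictionary from grid cell to probability for each of the next `horizon` steps. Names are illustrative, and the occupancy model itself is not reproduced.

```python
import math
from typing import Dict, List, Tuple

Cell = Tuple[int, int]


def to_cell(x: float, y: float, bin_m: float) -> Cell:
    """Discretize a position into an occupancy-grid cell."""
    return (math.floor(x / bin_m), math.floor(y / bin_m))


def agent_log_likelihood(rollout: List[Cell], preds: List[List[Dict[Cell, float]]],
                         horizon: int, eps: float = 1e-6) -> float:
    """preds[t][k] is the occupancy distribution for step t + k + 1, predicted at step t."""
    scores = []
    for t in range(len(rollout) - horizon):
        # Score the next `horizon` rollout cells under the prediction made at t
        step = [math.log(max(preds[t][k].get(rollout[t + k + 1], 0.0), eps))
                for k in range(horizon)]
        scores.append(sum(step) / horizon)
    return sum(scores) / len(scores)  # mean over the rollout duration


def scene_log_likelihood(per_agent: List[float]) -> float:
    """Mean over agents in the scene."""
    return sum(per_agent) / len(per_agent)
```

Averaging in log space keeps a single improbable step from collapsing the score to zero, and the `eps` floor bounds the penalty when a rollout enters a cell the model considers impossible.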

6.2 Baselines

SimNet. SimNet [22] is a deterministic behavior-cloning model for traffic simulation. Prior work also shows that its rasterized encoding scheme yields strong performance in trajectory prediction tasks [61]. We use the same rasterized input and CNN-based encoding backbone for all baselines and our BITS model.

SocialGAN. SocialGAN is adapted from a trajectory prediction model [30]. The model is trained using conditional generative-adversarial learning, where the condition is the decision context and the generation target comes from the trajectory dataset. To facilitate a fair comparison, the model uses the same encoding backbone and MLP-based trajectory decoder as all other methods.

TPP (Trajectron++). TPP is adapted from the Trajectron++ model [34], a CVAE model with a discrete latent space that generates a Gaussian Mixture Model (GMM) as its trajectory prediction. We modified the encoder to take rasterized features as input, sharing the same map encoder as the proposed BITS model. The TPP model predicts control inputs, which are propagated through a unicycle model to obtain the trajectory prediction. For simplicity, we let the decoder directly predict the variance of the predicted trajectory instead of propagating the variance through the dynamics as in [34]. The latent dimension is set to 10 in our experiments.

TrafficSim. TrafficSim is an agent-centric adaptation of the original TrafficSim work [23], meaning that agents in a scene are controlled by independent replicas of the same model instead of by a single model that explicitly coordinates their actions. The TrafficSim baseline uses an isotropic Gaussian CVAE to model action distributions and facilitate diverse simulation. We adopt a differentiable-dynamics trajectory decoder similar to the original paper's but remove the scene consistency loss in training, since we do not assume control over all agents.

7 Additional results

7.1 Complete evaluation metrics

Here we provide the exhaustive list of (non-learned) evaluation metrics we considered in this work and report performances in Table 5 for Lyft and Table 6 for nuScenes.

- FR: The average fraction of failed agents in each scene. A failure can be caused by either a collision failure or an offroad failure (defined below).

- collFR: The average fraction of agents in each scene that fail by colliding with any other agent in the scene at any timestep of an episode.

- offroadFR: The average fraction of agents in each scene that fail by departing the drivable region for more than 1 second (10 timesteps at a simulation step of 0.1 s).

- coll: The average fraction of agents colliding with another agent. We additionally list the type of collision that occurred (rear, front, side). Each collision type is computed independently, i.e., an agent can experience multiple types of collision per episode.

- offroad: The fraction of timesteps that an agent spends outside the drivable area, averaged across agents in each scene.

- coverage and diversity: As described in detail in the main text, both metrics measure the diversity of rollouts across multiple simulation trials with the same initial condition. The coverage metric calculates the (discretized) map area covered by these simulation trials. The diversity metric additionally computes the Wasserstein distances among the density profiles of the map coverage attained by different simulation trials. In practice, we accumulate the metrics across 5 trials and compute the coverage density by discretizing the map at a 2 m by 2 m resolution.

- Dataset metrics: Dataset metrics compare the driving profiles of simulated trajectories with those of trajectories recorded in the dataset. We consider speed, lon acc (longitudinal acceleration magnitude), lat acc (lateral acceleration magnitude), and jerk. To quantify the difference between the driving profiles of the simulation and the dataset, we first collect histograms of these measurements for both the simulated and the dataset trajectories across all evaluation scenes. We then compute the distance between the simulation and dataset histograms using a Wasserstein distance normalized by a constant. We use 20 bins for all histograms, each covering a fixed range of speed, acceleration magnitude, or jerk magnitude.
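The coverage computation can be sketched as the union of 2 m by 2 m cells visited across the simulation trials of one scene. This sketch assumes agent positions are pooled over all agents and timesteps of each trial; whether the reported numbers count cells or square meters is not stated, so both are returned by separate helpers. The diversity metric is not reproduced, and the names are illustrative.

```python
import math
from typing import Iterable, List, Set, Tuple


def coverage_cells(trials: Iterable[List[Tuple[float, float]]],
                   res_m: float = 2.0) -> Set[Tuple[int, int]]:
    """Union of grid cells visited by any agent position in any trial."""
    cells = set()
    for positions in trials:
        for x, y in positions:
            cells.add((math.floor(x / res_m), math.floor(y / res_m)))
    return cells


def coverage_area(trials: Iterable[List[Tuple[float, float]]], res_m: float = 2.0) -> float:
    """Covered map area in square meters."""
    return len(coverage_cells(trials, res_m)) * res_m * res_m
```

Since the cells are pooled across trials, a model that repeats the same rollout in every trial gains no coverage from the extra trials, which is why mode-collapsed policies score low on this metric.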

We also report the scene Average Distance Error (sADE) and scene Final Distance Error (sFDE), which measure the average and the final position differences between simulated and recorded trajectories, respectively. Note that sADE and sFDE are not suitable for measuring simulation realism and are included only as a reference, since they heavily penalize alternative simulations (e.g., turning left vs. going straight).
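A minimal sketch of the scene-level displacement errors, assuming simulated and recorded trajectories are time-aligned, have equal length, and are averaged first over time and then over agents. The function name is illustrative.

```python
import math
from typing import List, Tuple

Traj = List[Tuple[float, float]]


def scene_ade_fde(sim: List[Traj], rec: List[Traj]) -> Tuple[float, float]:
    """sADE: mean position error over timesteps, then agents; sFDE: final-step error, mean over agents."""
    ade = [sum(math.dist(p, q) for p, q in zip(s, r)) / len(r) for s, r in zip(sim, rec)]
    fde = [math.dist(s[-1], r[-1]) for s, r in zip(sim, rec)]
    return sum(ade) / len(ade), sum(fde) / len(fde)
```

A rollout that turns left where the log goes straight accumulates a large error even if the turn is perfectly plausible, which is why these numbers are reported only as a reference.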

| Method | FR | collFR | offroadFR | coll (any) | coll (rear) | coll (front) | coll (side) | offroad |
|---|---|---|---|---|---|---|---|---|
| SimNet [22] | 38.35 | 35.57 | 3.61 | 35.57 | 20.58 | 20.46 | 30.59 | 1.38 |
| SocialGAN [30] | 64.96 | 42.86 | 41.00 | 42.86 | 16.26 | 15.78 | 39.60 | 19.45 |
| SocialGAN+p | 69.41 | 42.96 | 48.02 | 42.96 | 15.56 | 15.26 | 40.39 | 25.12 |
| TPP [34] | 15.62 | 14.65 | 0.98 | 14.65 | 7.23 | 6.94 | 8.00 | 0.59 |
| TPP+p | 16.03 | 15.12 | 1.02 | 15.12 | 7.41 | 7.14 | 10.85 | 0.65 |
| TrafficSim [23] | 26.98 | 15.98 | 13.53 | 15.98 | 6.43 | 6.58 | 8.59 | 5.76 |
| TrafficSim+p | 22.97 | 13.58 | 11.39 | 13.58 | 5.90 | 5.82 | 7.23 | 4.70 |
| BITS (max) | 20.71 | 18.75 | 2.36 | 18.75 | 7.44 | 7.08 | 12.72 | 1.18 |
| BITS (sample) | 25.37 | 22.37 | 3.94 | 22.37 | 9.62 | 9.93 | 15.60 | 1.27 |
| BITS | 9.97 | 8.66 | 1.48 | 8.66 | 3.64 | 3.61 | 6.79 | 0.46 |
| Dataset | 18.36 | 17.49 | 1.22 | 17.49 | 6.14 | 7.31 | 14.59 | 0.84 |

| Method | coverage | diversity | speed | lon acc | lat acc | jerk | sADE | sFDE |
|---|---|---|---|---|---|---|---|---|
| SimNet[22] | 460.44 | 0.00 | 1.65 | 19.16 | 21.26 | 3.85 | 3.19 | 8.43 |
| SocialGAN[30] | 189.98 | 9.02 | 1.48 | 10.10 | 16.21 | 5.17 | 13.22 | 32.74 |
| SocialGAN+p | 131.47 | 7.64 | 1.51 | 9.66 | 15.53 | 4.78 | 13.90 | 34.09 |
| TPP[34] | 495.69 | 3.23 | 1.14 | 17.42 | 19.09 | 2.60 | 5.50 | 11.85 |
| TPP+p | 508.16 | 2.75 | 1.23 | 17.93 | 19.72 | 2.32 | 5.44 | 11.75 |
| TrafficSim[23] | 566.35 | 7.68 | 1.50 | 17.20 | 21.42 | 3.82 | 5.89 | 14.02 |
| TrafficSim+p | 617.50 | 7.96 | 1.73 | 17.84 | 21.74 | 3.09 | 6.04 | 13.94 |
| BITS (max) | 443.93 | 0.00 | 0.76 | 15.10 | 19.18 | 4.44 | 7.77 | 16.74 |
| BITS (sample) | 780.52 | 16.84 | 1.86 | 18.20 | 20.38 | 4.29 | 7.78 | 17.25 |
| BITS | 1014.43 | 22.94 | 1.96 | 17.05 | 20.76 | 3.75 | 11.21 | 23.05 |
| Dataset | 327.25 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |

| Method | FR | coll FR | offroad FR | coll (any) | coll (rear) | coll (front) | coll (side) | offroad |
|---|---|---|---|---|---|---|---|---|
| SimNet[22] | 24.58 | 15.80 | 11.95 | 15.80 | 5.91 | 7.02 | 13.17 | 3.05 |
| SocialGAN[30] | 71.33 | 33.81 | 51.24 | 33.81 | 22.27 | 23.86 | 30.41 | 19.71 |
| SocialGAN+p | 71.86 | 35.84 | 51.36 | 35.84 | 23.54 | 25.26 | 30.51 | 18.26 |
| TPP[34] | 49.76 | 33.23 | 24.93 | 33.23 | 13.48 | 13.85 | 28.88 | 8.27 |
| TPP+p | 9.84 | 9.52 | 0.32 | 9.52 | 2.38 | 2.38 | 7.97 | 0.04 |
| TrafficSim[23] | 27.08 | 20.39 | 11.16 | 20.39 | 7.60 | 8.29 | 17.48 | 3.42 |
| TrafficSim+p | 25.97 | 18.45 | 12.01 | 18.45 | 6.68 | 6.91 | 16.60 | 3.61 |
| BITS (max) | 13.24 | 11.51 | 1.83 | 11.51 | 4.11 | 4.24 | 8.31 | 0.30 |
| BITS (sample) | 14.72 | 13.79 | 1.20 | 13.79 | 4.98 | 5.28 | 10.47 | 0.17 |
| BITS | 6.63 | 5.67 | 1.01 | 5.67 | 1.85 | 1.91 | 3.87 | 0.14 |
| Dataset | 11.99 | 11.39 | 0.64 | 11.39 | 4.72 | 6.44 | 9.26 | 0.42 |

| Method | coverage | diversity | speed | lon acc | lat acc | jerk | sADE | sFDE |
|---|---|---|---|---|---|---|---|---|
| SimNet[22] | 395.21 | 0.00 | 6.81 | 144.06 | 125.14 | 18.61 | 7.01 | 17.87 |
| SocialGAN[30] | 154.21 | 2.29 | 38.44 | 319.37 | 342.78 | 42.51 | 19.89 | 36.79 |
| SocialGAN+p | 151.61 | 2.32 | 37.07 | 316.63 | 339.96 | 42.48 | 19.76 | 36.68 |
| TPP[34] | 489.36 | 7.06 | 8.37 | 137.54 | 117.77 | 4.61 | 12.13 | 27.26 |
| TPP+p | 661.07 | 7.73 | 8.17 | 138.76 | 114.82 | 5.96 | 13.09 | 28.65 |
| TrafficSim[23] | 861.00 | 4.28 | 10.03 | 176.83 | 156.61 | 10.01 | 10.51 | 25.77 |
| TrafficSim+p | 933.53 | 4.43 | 10.44 | 179.63 | 159.45 | 11.93 | 11.06 | 27.39 |
| BITS (max) | 559.58 | 0.00 | 6.35 | 150.67 | 124.78 | 13.87 | 6.41 | 15.71 |
| BITS (sample) | 888.12 | 6.36 | 6.54 | 152.42 | 125.97 | 13.44 | 6.70 | 16.22 |
| BITS | 1122.94 | 8.40 | 6.91 | 158.61 | 133.00 | 13.20 | 8.39 | 20.19 |
| Dataset | 397.92 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |

7.2 Planning cost weight ablation

As described above and in the main text, our prediction-and-planning module uses two cost terms: collision and road departure. We conduct an ablation study over different planning cost weight settings on the Lyft dataset and report the results in Fig. 6. Because there are only two cost terms, we mainly consider the ratio of the cost weights. We set the offroad cost weight to either and and continuously vary the collision cost weight on a log scale. We observe that (1) both terms are effective at reducing the rate of their corresponding failure cause, (2) the reduction saturates once the weight exceeds a certain value, and (3) the overall failure reduction is not sensitive to the cost weight ratio.
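Why only the ratio of the two weights matters can be seen in a toy example. Assuming the two costs are combined as a weighted sum and the planner picks the lowest-cost candidate (the exact scoring in BITS is described in the main text), multiplying both weights by the same positive factor cannot change the selected plan. The helper `select_plan` and the cost values below are hypothetical and chosen only for illustration.

```python
import numpy as np


def select_plan(coll_cost, offroad_cost, w_coll, w_offroad):
    """Return the index of the candidate plan with the lowest weighted cost.

    coll_cost, offroad_cost: sequences of shape (num_candidates,) holding
    hypothetical precomputed collision and road-departure penalties.
    """
    total = w_coll * np.asarray(coll_cost) + w_offroad * np.asarray(offroad_cost)
    return int(np.argmin(total))


if __name__ == "__main__":
    coll = [0.0, 0.2, 1.0]     # candidate 0 never collides
    offroad = [1.0, 0.1, 0.0]  # candidate 2 never leaves the road
    print(select_plan(coll, offroad, w_coll=1.0, w_offroad=1.0))    # 1
    print(select_plan(coll, offroad, w_coll=10.0, w_offroad=10.0))  # 1: same ratio, same plan
    print(select_plan(coll, offroad, w_coll=100.0, w_offroad=1.0))  # 0: collision-free plan wins
    print(select_plan(coll, offroad, w_coll=1.0, w_offroad=100.0))  # 2: on-road plan wins
```

Fixing one weight and sweeping the other on a log scale, as in the ablation, therefore explores every distinct trade-off between the two terms.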

7.3 Prediction and planning horizon ablation

Here we investigate the impact of the planning horizon in BITS's planning and control module. We train all three components (i.e., goal-conditional policy, future state predictor, and spatial goal network) with the same set of prediction horizons, 10, 20, 50, and 80, on the Lyft dataset. We report performance on the essential metrics in Table 7. We observe that horizons of 10 and 20 yield similar performance, but the failure rates increase significantly as the model extends its prediction horizon to 50 and 80. This is because predicting the long-term future is extremely challenging, especially when all agents are controlled by stochastic policies, so the model's decisions can easily be misled by wrong future predictions, leading to critical mistakes. At the same time, we observe that while short-term predictions result in more successful simulations, they lead to less smooth driving caused by more frequent braking and acceleration, as indicated by the dataset metrics (e.g., larger acceleration and jerk distances to the dataset). An important future direction is therefore to adjust the prediction and planning horizon dynamically by taking prediction uncertainty into account.

| horizon | FR | coll | offroad | coverage | diversity | speed | lon acc | lat acc | jerk |
|---|---|---|---|---|---|---|---|---|---|
| 10 | 8.89 | 6.94 | 0.75 | 948.41 | 23.31 | 1.58 | 17.50 | 20.93 | 4.03 |
| 20 | 9.97 | 8.66 | 0.46 | 1014.43 | 22.94 | 1.96 | 17.05 | 20.76 | 3.75 |
| 50 | 23.86 | 21.30 | 0.75 | 654.25 | 15.14 | 0.69 | 12.67 | 17.91 | 3.50 |
| 80 | 32.39 | 28.62 | 1.48 | 355.69 | 11.43 | 1.83 | 9.31 | 15.46 | 3.50 |

7.4 Additional qualitative results

We include additional qualitative results for BITS. Rollouts on the nuScenes dataset are shown in Fig. 7, and rollouts on the Lyft dataset are shown in Fig. 8. Each row shows different rollouts from the same initial scene configuration, with the recorded trajectories shown in the rightmost column for reference.