Bit-mask Robust Contrastive Knowledge Distillation for Unsupervised Semantic Hashing

Abstract.

Unsupervised semantic hashing has emerged as an indispensable technique for fast image search, which aims to convert images into binary hash codes without relying on labels. Recent advancements in the field demonstrate that employing large-scale backbones (e.g., ViT) in unsupervised semantic hashing models can yield substantial improvements. However, the inference delay has become increasingly difficult to overlook. Knowledge distillation provides a means for practical model compression to alleviate this delay. Nevertheless, the prevailing knowledge distillation approaches are not explicitly designed for semantic hashing. They ignore the unique search paradigm of semantic hashing, the inherent necessities of the distillation process, and the property of hash codes. In this paper, we propose an innovative Bit-mask Robust Contrastive knowledge Distillation (BRCD) method, specifically devised for the distillation of semantic hashing models. To ensure the effectiveness of two kinds of search paradigms in the context of semantic hashing, BRCD first aligns the semantic spaces between the teacher and student models through a contrastive knowledge distillation objective. Additionally, to eliminate noisy augmentations and ensure robust optimization, a cluster-based method within the knowledge distillation process is introduced. Furthermore, through a bit-level analysis, we uncover the presence of redundancy bits resulting from the bit independence property. To mitigate these effects, we introduce a bit mask mechanism in our knowledge distillation objective. Finally, extensive experiments not only showcase the noteworthy performance of our BRCD method in comparison to other knowledge distillation methods but also substantiate the generality of our methods across diverse semantic hashing models and backbones. The code for BRCD is available at https://github.com/hly1998/BRCD.

1. Introduction

Content-based image retrieval is a crucial image search problem in which similar images are retrieved from a database given a query image (Lew et al., 2006). It has been widely applied in many web applications (e.g., iStock 111https://www.istockphoto.com/). In recent years, we have witnessed a significant surge in visual data on the Internet. To address the exponential growth of data volume and expensive annotating requirements in large-scale databases, unsupervised semantic hashing methods have played a pivotal role (Luo et al., 2023). These methods aim to maintain the semantic similarity of images by deep convolutional neural networks (e.g., VGG (Simonyan and Zisserman, 2015)) without relying on labels, where the original high-dimensional embedding of the image is converted into a compact hash code for presentation. Taking advantage of using hash codes for efficient search, semantic hashing has achieved impressive results in large-scale image retrieval (Wang et al., 2017).

With the emergence of more advanced architectures like Vision Transformer (ViT) (Dosovitskiy et al., 2020), some pioneering studies (Gong et al., 2022; Jang et al., 2022) have demonstrated their superiority in semantic hashing. For example, a recent publication (Gong et al., 2022) reports that by replacing the original backbone with ViT, the semantic hashing model CIBHash (Qiu et al., 2021) gains around 46% improvement. This suggests a promising trend where semantic hashing approaches increasingly adopt such powerful backbones to extract meaningful representations. Unfortunately, a drawback arises when employing large-scale backbones. For example, we find that if we employ the large-scale model ViT_L_16 as the hashing model’s backbone, it takes approximately 657 ms to process a batch of 64 images and generate hash codes. In contrast, the subsequent search process requires less than one-tenth of that time 222See Appendix E for details. Consequently, the inference time becomes a bottleneck, adversely affecting the user experience in practical scenarios.

To promote the inference process, Knowledge Distillation (KD) is a frequently employed method (Gou et al., 2021), which compresses the large backbone of the teacher into a small student model for inference. The teacher tries to transform the necessary knowledge to the student for performance guarantee. Using such a technology, we have summarized two search paradigms for semantic hashing, including the symmetric and asymmetric search paradigms. First, as shown in Figure 1 (a), a conventional solution follows a symmetric search paradigm, and we name such a paradigm the Symmetric Semantic Hashing search Paradigm (SSHP). In SSHP, a single student model serves as the hash code extractor for semantically learning both candidates and queries in the offline and online stages, respectively. Additionally, another large teacher model takes charge of distilling the required knowledge to the student. Second, as shown in Figure 1 (b), we synthesize a novel paradigm named Asymmetric Semantic Hashing search Paradigm (ASHP) for semantic hashing. The ASHP retains a powerful large teacher model offline to generate better hash codes of candidates for accuracy but leverages a lightweight student model online to facilitate quick search. This paradigm draws inspiration from some works (Lin et al., 2013; Chen et al., 2019; Yuan et al., 2020; Tu et al., 2021) that employ the methodology of firstly generating hash codes, followed by the learning of a hash function, which means we can deploy different models for the same hash code. The ASHP improves accuracy by sacrificing offline inference time while ensuring online inference efficiency for good user experiments.

At this point, we hope to grasp a suitable distillation method that can be used for both paradigms. However, the current knowledge distillation methods lack explicit design considerations for semantic hashing, thereby overlooking crucial factors inherent to semantic hashing. We identify three critical factors for designing an effective knowledge distillation strategy that facilitates the semantic hashing problem. (1) Semantic space alignment. In the symmetric paradigm (SSHP), the hash codes outputted from different stages are in the same space naturally because one single model is applied in both stages. However, in the asymmetric paradigm (ASHP), since we implement different models offline and online, the Hamming semantic space of both is extremely misaligned, which argues a necessary distillation way to ensure the space is consistent for effective search. (2) Robust optimization. Some current works (Luo et al., 2018; Song et al., 2021; He et al., 2023; Hansen et al., 2021) target to ensure robustness in the hash model because noise significantly impacts the performance of hash codes. When employing a powerful teacher model, we cannot guarantee the accuracy for all samples, making some supervision signals noise and leading to incorrect optimization directions. Hence, we argue that a robust knowledge distillation process should also be achieved to avoid such situations. (3) Hash code property. Desirable hash codes have unique properties in distribution. For example, some semantic hashing models (Zheng et al., 2020; Doan and Reddy, 2020; Li and van Gemert, 2021; Qiu et al., 2021) tend to generate “bit independence” hash codes, resulting in each bit being independent of the others. This property can better preserve the original locality structure of the data but may render it unsuitable to calculate the distance between two hash codes as done in prior knowledge distillation methods (Tian et al., 2019; Xu et al., 2020; Zhu et al., 2021), which are generally designed for real-value representations.

To realize the above factors while addressing the related challenges, we propose a novel Bit-mask Robust Contrastive knowledge Distillation (BRCD) method. First, we achieve the semantic space alignment for unsupervised semantic hashing by identifying two learning targets, including individual-space knowledge distillation and structural-semantic knowledge distillation. Specifically, the former forces the student model to learn embedding position knowledge from the teacher model for the same image. The latter ensures the student model acquires structure relation knowledge between different images from the teacher model. We introduce a contrastive knowledge distillation method to achieve both targets and demonstrate its effectiveness through formal analysis. Second, in our contrastive knowledge distillation, we try to generate positive samples through data augmentation. However, the teacher model may assign some augmented images to a distant location from the anchor images as illustrated in Figure 2 (a), where image and its augmentation may not be close in Hamming space. We name such samples as “offset positive samples”. This issue arises because augmented images may represent out-of-distribution data for the teacher model. Served as noisy data, these offset positive samples provide wrong optimization directions. Thus, a cluster-based method is employed in the contrastive knowledge distillation process to detect and remove offset positive samples explicitly. The new contrastive method protects the student model from being influenced by the wrong optimization direction and improves the robustness of the knowledge distillation. Third, we conduct a quantitative analysis of bit independence at the bit level and reveal an interesting problem of “redundancy bit” presence in hash codes. This issue indicates that some bits in hash codes are redundant for a specific relevance set under the assumption of bit independence, leading to decreased learning effectiveness. Therefore, we present a bit mask mechanism to revise similarity calculations to mitigate the effects of redundancy bit. Finally, extensive experiments demonstrate our BRCD can achieve significant improvements over several knowledge distillation baselines and also show the generality of BRCD across diverse semantic hashing models and backbones.

2. Related Work

2.1. Semantic Hashing

Semantic hashing is an extensively researched and applied technique across various domains (Luo et al., 2021b; TONG et al., 2024), particularly in the realm of large-scale image retrieval. They can be broadly classified into two categories: supervised (Chen and Cheung, 2019; Sun et al., 2022; Zhan et al., 2020; Liu et al., 2019; Tu et al., 2021) and unsupervised (Hu et al., 2017; Zhang et al., 2017; Yang et al., 2018; Luo et al., 2021a, b; Li and van Gemert, 2021; Jang and Cho, 2021). The primary distinction between these two approaches is the availability of supervised information. In this paper, we focus on unsupervised hashing methods, as they effectively leverage unlabeled data and enable practical applications.

Unsupervised hashing methods try to preserve similarities of original data in the Hamming space. With the development of deep learning, deep semantic hashing has attracted growing interest in efficient image search. These methods can be roughly classified into two categories. Firstly, weak supervised learning-based approaches aim to reconstruct similar structures using pre-trained models (Hu et al., 2017; Zhang et al., 2017; Shen et al., 2019; Zhang et al., 2020) or cluster methods (Yang et al., 2018; Luo et al., 2021a, b). These reconstructed structures or labels guide hash code learning via deep supervised hashing methods. Some of them (Yang et al., 2018, 2019; Jang et al., 2022) incorporate the idea of knowledge distillation, but their objective is to construct pseudo labels or signals rather than focusing on reducing the inference time. Secondly, self-supervised learning-based techniques incorporate popular self-supervised methods such as auto-encoders (Dai et al., 2017; Shen et al., 2020) and generative adversarial networks (GANs) (Dizaji et al., 2018; Song et al., 2018) into deep unsupervised hashing. Recently, several methods have adopted contrastive learning in unsupervised hashing (Luo et al., 2021b; Jang and Cho, 2021; Qiu et al., 2021; Wang et al., 2022). For example, CIBHash (Qiu et al., 2021) applies contrastive learning to unsupervised hashing from an information bottleneck perspective.

With the development of large backbones like ViT (Dosovitskiy et al., 2020), some advanced works (Gong et al., 2022; Jang et al., 2022) report a great improvement in semantic hashing when using such a powerful backbones. Unfortunately, the computational overhead of large-scale backbones conflicts with the efficiency requirements of semantic hashing, making it arduous to employ them in edge devices or frameworks (Cui et al., 2024). Therefore, there is a need to discover ways to reduce inference time in practice.

2.2. Knowledge Distillation

Knowledge distillation aims to transfer knowledge from one model (teacher) to another (student). It has gained widespread attention in recent years (Gou et al., 2021; Wang et al., 2021; Lu et al., 2024; Zhao et al., 2023; Liu et al., 2023). The primary challenge is to determine what knowledge the teacher has learned and how to distill it to the student (Hinton et al., 2015). This paper focuses on exploring knowledge distillation techniques suitable for image retrieval tasks.

Analyzing and exploiting the relation between data samples is a popular approach in image retrieval. In knowledge distillation, similarity-preserving knowledge (SP) (Tung and Mori, 2019) and relational knowledge distillation (RKD) (Park et al., 2019) aim to transfer knowledge by preserving the sample relations between input pairs. However, they use shallow relation modeling between features, which may be suboptimal for capturing complex inter-sample relations. To address this issue, some studies have integrated contrastive learning into knowledge distillation (Tian et al., 2019; Xu et al., 2020; Zhu et al., 2021; Yu et al., 2022). For example, CRD (Tian et al., 2019) combines knowledge distillation with contrastive learning to maximize the mutual information between teacher and student representations. Additionally, SSKD (Xu et al., 2020) employs contrastive tasks as self-supervised pretext tasks to enable richer knowledge extraction from the teacher to the student. CRCD (Zhu et al., 2021) also utilizes contrastive loss but focuses on the mutual relations of deep representations instead of the representations themselves. PACKD (Yu et al., 2022) further considers the correlation among intra-class samples. These approaches enable more effective modeling of complex relations for improved knowledge distillation in image retrieval.

However, existing knowledge distillation methods are designed for real-value representation, which does not consider some special problems in semantic hashing or property in hash codes. We modify our knowledge distillation method to cope with these special challenges in the semantic hashing domain.

3. PRELIMINARIES

3.1. Semantic Hashing

Consider a database comprising images. Semantic hashing targets to learn a hash function that maps each image to a low-dimensional binary vector , referred to as a hash code, where denotes the dimensionality of . This mapping aims to preserve the pairwise similarities between the images and in the Hamming space, characterized by the Hamming distance .

3.2. Semantic Hashing Search Paradigm with Knowledge Distillation

When applying knowledge distillation methods to semantic hashing, we identify two search paradigms, including the Symmetric Semantic Hashing search Paradigm (SSHP) and the Asymmetric Semantic Hashing search Paradigm (ASHP).

The search paradigm typically involves online and offline stages. When using the KD technology, we have a pre-trained large teacher model and a lightweight student model guided by the teacher model . In the SSHP, the student model is used to precompute hash codes for candidate images, which can be used to construct the semantic hashing index (Norouzi et al., 2012). In the online stage, the student model is also used to extract the hash codes of query images, and the index is utilized to locate relevant images quickly. ASHP introduces a modification to the SSHP. ASHP still employs a lightweight student model during the online stage but uses the large teacher model during the offline stage. Due to this setting, ASHP can rapidly return results in the online stage while obtaining more accurate hash codes in the offline stage.

4. The proposed BRCD method

In this section, we present a description of our proposed Bit-mask Robust Contrastive knowledge Distillation (BRCD).

4.1. Contrastive Knowledge Distillation

To achieve valid results in both SSHP and ASHP, the basic objective of knowledge distillation is semantic space alignment, which consists of two targets. Firstly, the student model should learn the individual-space knowledge from the teacher model . It targets to force hash codes of the same image output in student model and teacher model close to each other. This can be formulated as follows:

| (1) |

where represents a Hamming distance measurement, and the lower the value, the more similar the compared images. Secondly, the student model should learn the structural-semantic knowledge from the teacher model. The goal is to ensure that image pairs with similar semantics are mapped closely in the Hamming space while pairs with dissimilar semantics are far apart when they are inputted into student model and teacher model . This objective can be expressed as follows:

| (2) | ||||

where denotes the negative set for , represents the positive set for . Considering either of the two objects separately is not sufficient. If we only consider individual-space knowledge, it is hard to optimize all to achieve the Eq. (1) because of the capacity gap between student and teacher models (Mirzadeh et al., 2020). Conversely, concentrating solely on structural-semantic knowledge, the original space position is ignored and may lead to suboptimal results.

To design a knowledge distillation objective to satisfy both Eq. (1) and Eq. (2), we still lack information on and in the unsupervised scenario. Inspired by SimCLR (Chen et al., 2020), we utilize the augmented images as the positive images of the anchor while including other images from a given batch as irrelevant images. As illustrated in Figure 2 (a), given randomly sampled images from the training set , our contrastive training mini-batch consists of images obtained by applying data augmentation on the sampled image. Then we get original sample set and augmented sample set . The only relevant (positive) image for is its augmentation, denoted as , while the other images jointly form the set of negative samples . Using the teacher model, we obtain sample hash code set and augmented sample hash code set . By inputting training batch into the student model , we get . Subsequently, we propose a contrastive loss function as follows:

| (3) |

Here, is the cosine similarity, and is a hyper-parameter that controls whether the hash code should be closer to or . To explore its behaviors, we derive the gradients with respect to the hash code as:

| (4) |

where are the weighted coefficients. Based on their gradients in Eq. (4), we can find the object of Eq. (3) is the generalization of the combination between the knowledge distillation targets described in Eq. (1) and Eq. (2) with coefficients (detailed proof is presented in Appendix A). Thus, it can facilitate the student model’s learning of individual-space knowledge and structural-semantic knowledge from the teacher model in Hamming space.

4.2. Cluster-based Robust Optimization

Although we have proposed the contrastive knowledge distillation Eq. (3) to support the SSHP and ASHP, relying solely on the proposed contrastive loss can not ensure a robust knowledge distillation process. For example, the hash code of anchor image and its augmentation should ideally be close in Hamming space. However, as depicted in the upper-right part of Figure 2 (a), due to the teacher model may produce inaccurate hash codes for augmentations, the hash codes of image and its augmentation may not be close in Hamming space. Consequently, these augmentations serve as noisy data, and the optimization may force the student model in the wrong direction according to Eq. (3). We term these augmentations as “offset positive samples” and evaluate their occurrence probability in Appendix D.

To ensure a robust optimization process, we propose a cluster-based method that explicitly detects and removes offset positive samples to learn the semantic output space of the teacher model effectively. As illustrated in Figure 2 (b), we first perform k-means clustering on the set of hash codes of all training images and group them into clusters. In the unsupervised scenario, we can determine the value of by using the elbow method or silhouette analysis (Rousseeuw, 1987). Subsequently, we assign a pseudo label to image based on the closest centroid. In each training batch, augmentations are also assigned their respective pseudo label based on the closest centroid. If the anchor image and its augmentation have different pseudo labels, we consider as an offset positive sample. Thus, we define a dynamic to replace the original in Eq. (3) as follows:

| (5) |

Additionally, this method can incidentally solve the problem of false negative samples (Saunshi et al., 2019). The negative sample set is random and includes semantic content similar to the anchor image . This inclusion can cause a significant distance between one image and its relevant image, thereby adversely affecting the optimization process. If two different images and belong to the same centroid in one training batch, we consider a false negative sample of . Thus, we modify the set in Eq. (3) as follows:

| (6) |

Finally, the contrastive loss becomes:

| (7) |

The new contrastive loss is effective in detecting and removing the offset positive sample and false negative sample issues depicted in Figure 2 (c), where image eliminates the impact of offset positive sample and does not consider its relation with false negative samples and . Thus Eq. (7) can alleviate the influence of noisy samples and achieve a robust knowledge distillation process.

4.3. Bit mask mechanism

To achieve semantic space alignment, we need to measure the similarity between two hash codes, where we apply cosine similarity in the learning objective Eq. (7). However, current approaches may overlook the special distribution property of hash codes referred to as “bit independence.” Specifically, bit independence means each bit is independent of the others in the output distribution for all hash codes (He et al., 2011). It can better preserve the original locality structure of the data (Doan and Reddy, 2020) but may render it unsuitable to calculate the similarity as prior knowledge distillation methods directly. To address this property, we first explore the distribution of bits within one relevance set.

Specifically, we obtain by using the candidate set and the trained teacher model . We then generate relevance sets , , based on their ground truth (e.g., class), where is the number of relevance sets, is the number of elements in and is the j-th bit of hash code . We randomly choose a relevance set and compute the frequency of 1 and -1 in each dimension for all hash codes in to plot the frequency histograms. Figure 3 shows the frequency histogram of eight randomly selected dimensions. We can observe two types of bits: (1) in some dimensions, the frequency of 1 and -1 is disparate (e.g., ), and (2) in other dimensions, the frequency of 1 and -1 is slightly disparate (e.g., ). Under the bit independence assumption, if 1 and -1 have an equal probability of occurring in a specific dimension within a relevance set, this dimension does not provide any useful semantic information because it tends to express random semantics. Worse still, these dimensions lead to the wrong optimization direction for structural knowledge in the learning process. As shown in the left part of Figure 2 (e), to stay away from images and , image tends to move away from its relevant images . We refer to such bit as “redundancy bit”.

To mitigate the bad effect of redundancy bits, we propose a bit mask mechanism that excludes redundancy bits in similarity calculation, as shown in Figure 2 (d). In the unsupervised scenario, we use the cluster results of all training hash codes obtained in Section 4.2 to get cluster sets as relevance sets. Here, and is the number of elements in . Next, we calculate the expectation of each bit for each relevance set as follows:

| (8) |

where is the r-th dimension of . Then, we can define the i-th cluster’s bit mask as , where the r-th bit mask for relevance set is defined as:

| (9) |

Here, is a threshold. Then, we propose the Bit-mask Robust Contrastive knowledge Distillation (BRCD) as follows:

| (10) |

where and represents the revised similarity function, which is defined as:

| (11) |

Here, is the bit mask of the relevance set corresponding to image . The motivation behind it is that if the expectation of one dimension in a relevance set is lower than the threshold , it should be the redundancy bit. In Eq. (10), when measuring structural-semantic knowledge, the dimensions of redundancy bits are ignored. This setting prevents the optimization process from the suboptimal results. For instance, in the right part of Figure 2 (e), image avoids choosing the wrong optimization direction after eliminating the redundancy bit. Certainly, the assumption of “each bit is independent of the other” is a relative concept. Many semantic hashing models (Zheng et al., 2020; Li and van Gemert, 2021; Qiu et al., 2021) approach this target, but the complete bit independence is hard to grasp. Therefore, the hyper-parameter in Eq. (9) can be considered a reflection of bit independence. If a semantic hashing model can achieve bit independence well, a high value should be given to ; otherwise, a small value is appropriate.

5. Experiments

To assess the performance of our proposed method, we conducted experiments on three datasets: CIFAR-10 (Krizhevsky et al., 2009), MSCOCO (Lin et al., 2014), and ImageNet100 (Qiu et al., 2021). Besides, we employed the mean Average Precision (mAP) at the top K as our evaluation metric. All experiments were conducted on two Intel Xeon Gold 5218 CPUs and one NVIDIA Tesla V100. For specific details regarding the dataset, evaluation metrics, and training procedures, please refer to Appendix B.

| KD Methods | CIFAR-10 (mAP@1000) | MSCOCO (mAP@5000) | IMAGENET100 (mAP@1000) | |||||||||

| 32 bit | 64 bit | 32 bit | 64 bit | 32 bit | 64 bit | |||||||

| SSHP | ASHP | SSHP | ASHP | SSHP | ASHP | SSHP | ASHP | SSHP | ASHP | SSHP | ASHP | |

| NO KD | 0.4935 | - | 0.5111 | - | 0.7459 | - | 0.7609 | - | 0.7097 | - | 0.7613 | - |

| KL (Hinton et al., 2015) | 0.6300 | 0.6612 | 0.6460 | 0.6767 | 0.7956 | 0.8013 | 0.8087 | 0.8120 | 0.8463 | 0.8568 | 0.8576 | 0.8671 |

| SP (Tung and Mori, 2019) | 0.6208 | 0.4728 | 0.6273 | 0.6765 | 0.7984 | 0.8123 | 0.8129 | 0.7320 | 0.8524 | 0.7473 | 0.8641 | 0.4031 |

| PKT (Passalis and Tefas, 2018) | 0.5939 | 0.1075 | 0.6035 | 0.1101 | 0.7532 | 0.3499 | 0.7602 | 0.3376 | 0.8241 | 0.0134 | 0.8365 | 0.0129 |

| RKD (Park et al., 2019) | 0.4059 | 0.1089 | 0.6171 | 0.0969 | 0.7813 | 0.3785 | 0.7951 | 0.3843 | 0.8319 | 0.0164 | 0.8496 | 0.0155 |

| CRD (Tian et al., 2019) | 0.6366 | 0.0982 | 0.6549 | 0.1117 | 0.7934 | 0.3395 | 0.8013 | 0.3356 | 0.8346 | 0.0126 | 0.8479 | 0.0145 |

| SSDK (Xu et al., 2020) | 0.6086 | 0.0986 | 0.6268 | 0.1457 | 0.7846 | 0.3596 | 0.7967 | 0.3583 | 0.8158 | 0.0156 | 0.8328 | 0.0164 |

| CRCD (Zhu et al., 2021) | 0.6382 | 0.0922 | 0.6576 | 0.0927 | 0.7986 | 0.3256 | 0.8059 | 0.3285 | 0.8394 | 0.0124 | 0.8589 | 0.0126 |

| PACKD (Yu et al., 2022) | 0.6453 | 0.1009 | 0.6699 | 0.0657 | 0.8089 | 0.0343 | 0.8204 | 0.0341 | 0.8574 | 0.0138 | 0.8693 | 0.0129 |

| BRCD | 0.6787 | 0.7285 | 0.6900 | 0.7378 | 0.8208 | 0.8336 | 0.8349 | 0.8407 | 0.8866 | 0.8955 | 0.8966 | 0.9020 |

| BRCD w/o BM | 0.6612 | 0.7100 | 0.6721 | 0.7216 | 0.8087 | 0.8233 | 0.8251 | 0.8306 | 0.8761 | 0.8843 | 0.8860 | 0.8919 |

| BRCD w/o NP | 0.6564 | 0.7068 | 0.6659 | 0.7178 | 0.8074 | 0.8219 | 0.8239 | 0.8287 | 0.8715 | 0.8804 | 0.8818 | 0.8821 |

| BRCD w/o P | 0.6650 | 0.7144 | 0.6756 | 0.7259 | 0.8156 | 0.8253 | 0.8274 | 0.8329 | 0.8789 | 0.8872 | 0.8885 | 0.8945 |

| BRCD w/o SSK | 0.6498 | 0.6891 | 0.6560 | 0.6979 | 0.7949 | 0.8076 | 0.8014 | 0.8157 | 0.8609 | 0.8764 | 0.8680 | 0.8808 |

| Hashing Model | Paradigm | NO KD | KL | SP | PKT | RKD | CRD | SSDK | CRCD | PACKD | BRCD |

| GreedyHash (Su et al., 2018) | SSHP | 0.3084 | 0.6128 | 0.6284 | 0.5784 | 0.6013 | 0.6295 | 0.6005 | 0.6453 | 0.6481 | 0.6687 |

| ASHP | - | 0.6651 | 0.6525 | 0.1204 | 0.1123 | 0.1142 | 0.1347 | 0.1023 | 0.0924 | 0.7023 | |

| Bi-half Net (Li and van Gemert, 2021) | SSHP | 0.3254 | 0.5680 | 0.6414 | 0.5481 | 0.6171 | 0.6601 | 0.6125 | 0.6669 | 0.6721 | 0.6703 |

| ASHP | - | 0.6189 | 0.4199 | 0.1252 | 0.0978 | 0.0940 | 0.1274 | 0.0936 | 0.1162 | 0.7085 | |

| TBH (Shen et al., 2020) | SSHP | 0.4383 | 0.6215 | 0.6337 | 0.5794 | 0.6326 | 0.6403 | 0.6052 | 0.6475 | 0.6518 | 0.6755 |

| ASHP | - | 0.6536 | 0.6635 | 0.1217 | 0.1126 | 0.1278 | 0.1336 | 0.0974 | 0.0837 | 0.7153 | |

| CIBHash (Qiu et al., 2021) | SSHP | 0.5111 | 0.6460 | 0.6273 | 0.6035 | 0.6171 | 0.6549 | 0.6268 | 0.6576 | 0.6699 | 0.6900 |

| ASHP | - | 0.6767 | 0.6765 | 0.1101 | 0.0969 | 0.1117 | 0.1457 | 0.0927 | 0.0657 | 0.7378 | |

| MeCoQ (Wang et al., 2022) | SSHP | 0.5483 | 0.6693 | 0.6536 | 0.6398 | 0.6534 | 0.6873 | 0.6431 | 0.6882 | 0.7045 | 0.7228 |

| ASHP | - | 0.6912 | 0.6935 | 0.1085 | 0.1027 | 0.1075 | 0.1367 | 0.0988 | 0.0894 | 0.7504 |

5.1. Knowledge Distillation Methods Comparison

In this experiment, we compare the mAP@K of different knowledge distillation methods on three datasets.

5.1.1. Setting

We consider the following knowledge distillation methods for comparison: KL (Hinton et al., 2015), SP (Tung and Mori, 2019), RKD (Park et al., 2019), PKT (Passalis and Tefas, 2018), CRD (Tian et al., 2019), SSKD (Xu et al., 2020), CRCD (Zhu et al., 2021) and PACKD 333PACKD requires the availability of supervised signals to construct positive data, and we have applied this setting in our experiments. (Yu et al., 2022). These methods, including BRCD, are applied to the final output of the semantic hashing model, i.e., the hash code layer for practicability. We choose CIBHash (Qiu et al., 2021) as the semantic hashing model to train the teacher and student models. We use ViT_B_16 (Dosovitskiy et al., 2020) as the teacher model’s backbone and use EfficientNetB0 (Tan and Le, 2019) as the student model’s backbone. The code length is set to 32 and 64. To explore the effect of different components in the BRCD method, we design variants of BRCD, including BRCD w/o NP (BRCD without removing false negative and offset positive samples), BRCD w/o BM (BRCD without bit mask mechanism) and BRCD w/o P (BRCD without removing offset positive samples). Moreover, we also design BRCD w/o SSK (BRCD without considering structural-semantic knowledge), which only uses Eq.(1) as the objective.

5.1.2. Results

Table 1 summarizes the results, where we make the following observations:

1) Our proposed BRCD method outperforms other baselines. In the SSHP, BRCD performs competitively with other baselines. These results demonstrate that our BRCD method effectively captures and transfers valuable knowledge to enhance search performance. Furthermore, in the ASHP, BRCD shows significant performance, surpassing the second-best result by 9.6%, 4.3%, and 3.1% on the CIFAR-10, ImageNet100, and MSCOCO datasets, respectively, averaged across two code length. We attribute this success to the specific considerations made by BRCD, such as semantic space alignment between the student and teacher models, a robust optimization procedure, and the incorporation of hash code properties.

2) Certain baselines exhibit better performance in the ASHP compared to the SSHP, while others fail to reach the same level of effectiveness. For instance, KL is effective in the ASHP because it promotes semantic space alignment by reducing the distributional discrepancies between binary representations. Conversely, methods like RKD, PKT, CRD, SSKD, CRCD, and PACKD do not achieve semantic space alignment as they primarily focus on transferring relations between images, resulting in their inability to yield valid results in the ASHP. Interestingly, in ASHP, SP performs well in some situations but fails in others. Through formal analysis presented in Appendix C, we have discovered that the optimization of the SP method exhibits two directions in the Hamming space: one direction aims to achieve individual-space alignment, while the other does not. This observation explains the divergent outcomes achieved by the SP method in the ASHP.

3) Compared to BRCD, BRCD w/o NP, BRCD w/o P, BRCD w/o BM and BRCD w/o SSK have poor performance, indicating that the consideration of false negative and offset positive samples, as well as the bit mask mechanism, is necessary to improve the performance for our knowledge distillation methods. Besides, compared to BRCD w/o NP, BRCD w/o P achieves significant improvement. These results confirm the importance of considering offset positive samples for effective knowledge distillation. It is worth noting that the issue of offset positive samples has been largely overlooked in previous studies. In Appendix D, we conducted further analysis on offset positive samples to validate the prevalence of this phenomenon.

5.2. Performance on Different Hashing Models

In this experiment, we further validate BRCD on different hashing models by using mAP@1000 on the CIFAR-10 dataset.

5.2.1. Setting

We still use the previous knowledge distillation methods for comparison and consider the following representative semantic hashing models: GreedyHash (Su et al., 2018), TBH (Shen et al., 2020), Bi-half Net (Li and van Gemert, 2021), CIBHash (Qiu et al., 2021), and MeCoQ (Wang et al., 2022). These methods utilize distinct binarization techniques. We still use ViT_B_16 as the teacher model’s backbone and EfficientNetB0 as the student model’s backbone. The code length is set to be 64.

5.2.2. Results

Table 2 summarizes the results. We can find that in most cases, our proposed BRCD method is better than other baselines when using different hashing models. In SSHP, the BRCD is superior to all KD methods except for PACKD when using the Bi-harf Net model. However, PACKD applies supervised signals to create positive data, whereas our proposed BRCD does not require any supervised signal at all. In ASHP, BRCD is better than the other methods in these five hash models. This result demonstrates the generality of our method across different semantic hash models.

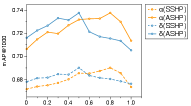

5.3. Performance on Different Backbones

In this experiment, we validate our methods when using different backbones on the CIFAR-10 dataset. Specifically, we adopt several representative knowledge distillation methods that have been used earlier for comparison. The teacher model uses ViT_B_16 (Dosovitskiy et al., 2020) or ViT_L_16 as backbone, and the student model uses EfficientNetB0 (Tan and Le, 2019), ResNet18 (He et al., 2016), or MobileNetV2 (Sandler et al., 2018) as backbone. In prior experiments, our proposed BRCD achieves a significant advantage within the ASHP and most KD methods can’t get valid performance in this paradigm. Consequently, we select some representative KD methods for comparison under the SSHP in this experiment. We perform this setting in the CIFAR-10 dataset, use CIBHash as the hashing model, and set the code length to 64. Figure 4 shows the performance, from which we can find that BRCD is still better than other KD methods when using different backbones in the teacher model or student model. This outcome validates the generality of our method across diverse backbone scenarios.

5.4. Model Analysis

5.4.1. Analysis on semantic space alignment

Semantic space alignment is a prerequisite in the ASHP and a crucial factor for SSHP. To verify the ability of the BRCD from the perspective of semantic space alignment, we analyze the distribution of hash codes from the metric calculation. We propose two metrics to evaluate semantic space alignment from two perspectives.

First, to assess the transfer of individual-space knowledge, we introduce the Individual Sample Distance (ISD) metric, which measures whether the same image is assigned to a nearby space from different models. The formula for ISD is as follows:

| (12) |

where the lower the value of , the closer the student’s hash code and the teacher’s hash code for the same image. Second, to evaluate structural knowledge transfer, we define the Neighbor Relevance Accuracy (NRA@K) metric as follows:

| (13) |

where means choosing the nearest neighbor of hash code among the set in Hamming space. This metric measures the percentage of relevant images’ codes from the teacher model around the hash codes produced by the student model, with a higher value indicating better structural-semantic knowledge transfer. We set to 100, perform this experiment on the CIFAR-10 dataset, and use ViT_B_16 as the teacher model’s backbone and EfficientNetB0 as the student’s backbone.

Figure 5(a) shows the results. We can find that KL, SP, and BRCD methods achieve lower than other distillation methods, with the BRCD achieving the lowest value. These findings suggest that our distillation method effectively achieves individual space knowledge transfer. Besides, our proposed BRCD also achieves the highest . Thus, we have confidence that our proposed distillation method can effectively transfer structural-semantic knowledge. Moreover, the KL method achieves high without a structural-semantic knowledge distillation objective. The phenomenon shows that individual space knowledge distillation can also partially achieve the goal of structural-semantic knowledge distillation. However, as discussed in Section 4.1, due to the capacity gap between student and teacher models, it is challenging to optimize all samples to achieve the ideal results of individual-space knowledge distillation. Therefore, we think the goal of structural-semantic knowledge distillation can also be regarded as a regularization term that prevents suboptimal cases during the optimization process of individual-space knowledge distillation.

5.4.2. Parameter analysis

To examine the impact of the key hyper-parameters and on performance, we evaluate the model under different and values. The parameter in the Eq. (10) (specifically, the Eq. (10) is influenced by through Eq. (5)) can adjust the objective function to prioritize either individual-space knowledge or structural-semantic knowledge. As shown in Figure 5 (b), plays an important role in obtaining optimal performance. Setting to values that are too small or too large fails to yield the best performance in either case. This observation underscores the importance of simultaneously considering both individual-space knowledge transfer and structural-semantic knowledge transfer. Besides, the parameter in Eq. (9) serves as the threshold for the bit mask. As parameter increases, more dimensions of the binary vector are masked. Similar to parameter , the parameter should not be set excessively large or small. When is set to a smaller value, it becomes insufficient to eliminate most of the redundancy bits. Conversely, if parameter is too large, it risks removing non-redundancy bits, resulting in the loss of valuable information.

6. Conclusion

In this paper, we investigated the practical problem of inference delay in semantic hashing and proposed a novel distillation method Bit-mask Robust Contrastive knowledge Distillation (BRCD). Our method introduces a contrastive knowledge distillation to ensure the effectiveness of the symmetric paradigm (SSHP) and the asymmetric paradigm (ASHP) in semantic hashing. Our method also provides a robust knowledge distillation process and eliminates the effect of redundancy bits. Extensive experiments on three datasets demonstrated the effectiveness of the BRCD methods. More importantly, we analyzed and discovered the “redundancy bit” property that exists in hash codes. Further studies on this property can be explored in the future.

Acknowledgements.

This research was partially supported by grants from the National Key Research and Development Program of China (2021YFF0901005) and the National Natural Science Foundation of China (62106244, U20A20229), and the University Synergy Innovation Program of Anhui Province (GXXT-2022-042).References

- (1)

- Cao et al. (2017) Zhangjie Cao, Mingsheng Long, Jianmin Wang, and Philip S Yu. 2017. Hashnet: Deep learning to hash by continuation. In Proceedings of the IEEE international conference on computer vision. 5608–5617.

- Chen and Cheung (2019) Junjie Chen and William K Cheung. 2019. Similarity preserving deep asymmetric quantization for image retrieval. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 33. 8183–8190.

- Chen et al. (2020) Ting Chen, Simon Kornblith, Mohammad Norouzi, and Geoffrey E. Hinton. 2020. A Simple Framework for Contrastive Learning of Visual Representations. In Proceedings of the 37th International Conference on Machine Learning, ICML 2020, 13-18 July 2020, Virtual Event (Proceedings of Machine Learning Research, Vol. 119). PMLR, 1597–1607.

- Chen et al. (2019) Zhen-Duo Chen, Yongxin Wang, Hui-Qiong Li, Xin Luo, Liqiang Nie, and Xin-Shun Xu. 2019. A two-step cross-modal hashing by exploiting label correlations and preserving similarity in both steps. In Proceedings of the 27th ACM International Conference on Multimedia. 1694–1702.

- Cui et al. (2024) Yuya Cui, Degan Zhang, Jie Zhang, Ting Zhang, Lixiang Cao, and Lu Chen. 2024. Multi-user reinforcement learning based task migration in mobile edge computing. Frontiers of Computer Science 18, 4 (2024), 184504.

- Dai et al. (2017) Bo Dai, Ruiqi Guo, Sanjiv Kumar, Niao He, and Le Song. 2017. Stochastic generative hashing. In International Conference on Machine Learning. PMLR, 913–922.

- Dizaji et al. (2018) Kamran Ghasedi Dizaji, Feng Zheng, Najmeh Sadoughi, Yanhua Yang, Cheng Deng, and Heng Huang. 2018. Unsupervised deep generative adversarial hashing network. In Proceedings of the IEEE conference on computer vision and pattern recognition. 3664–3673.

- Doan and Reddy (2020) Khoa D Doan and Chandan K Reddy. 2020. Efficient implicit unsupervised text hashing using adversarial autoencoder. In Proceedings of The Web Conference 2020. 684–694.

- Dosovitskiy et al. (2020) Alexey Dosovitskiy, Lucas Beyer, Alexander Kolesnikov, Dirk Weissenborn, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer, Georg Heigold, Sylvain Gelly, et al. 2020. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. In International Conference on Learning Representations.

- Fan et al. (2020) Lixin Fan, Kam Woh Ng, Ce Ju, Tianyu Zhang, and Chee Seng Chan. 2020. Deep Polarized Network for Supervised Learning of Accurate Binary Hashing Codes.. In IJCAI. 825–831.

- Gong et al. (2022) Qinkang Gong, Liangdao Wang, Hanjiang Lai, Yan Pan, and Jian Yin. 2022. Vit2hash: unsupervised information-preserving hashing. arXiv preprint arXiv:2201.05541 (2022).

- Gou et al. (2021) Jianping Gou, Baosheng Yu, Stephen J Maybank, and Dacheng Tao. 2021. Knowledge distillation: A survey. International Journal of Computer Vision 129 (2021), 1789–1819.

- Hansen et al. (2021) Christian Hansen, Casper Hansen, Jakob Grue Simonsen, Stephen Alstrup, and Christina Lioma. 2021. Unsupervised multi-index semantic hashing. In Proceedings of the Web Conference 2021. 2879–2889.

- He et al. (2011) Junfeng He, Shih-Fu Chang, Regunathan Radhakrishnan, and Claus Bauer. 2011. Compact hashing with joint optimization of search accuracy and time. In CVPR 2011. IEEE, 753–760.

- He et al. (2016) Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. 2016. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition. 770–778.

- He et al. (2023) Liyang He, Zhenya Huang, Enhong Chen, Qi Liu, Shiwei Tong, Hao Wang, Defu Lian, and Shijin Wang. 2023. An Efficient and Robust Semantic Hashing Framework for Similar Text Search. ACM Transactions on Information Systems 41, 4 (2023), 1–31.

- Hinton et al. (2015) Geoffrey Hinton, Oriol Vinyals, and Jeff Dean. 2015. Distilling the knowledge in a neural network. arXiv preprint arXiv:1503.02531 (2015).

- Hu et al. (2017) Qinghao Hu, Jiaxiang Wu, Jian Cheng, Lifang Wu, and Hanqing Lu. 2017. Pseudo label based unsupervised deep discriminative hashing for image retrieval. In Proceedings of the 25th ACM international conference on Multimedia. 1584–1590.

- Jang and Cho (2021) Young Kyun Jang and Nam Ik Cho. 2021. Self-supervised product quantization for deep unsupervised image retrieval. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 12085–12094.

- Jang et al. (2022) Young Kyun Jang, Geonmo Gu, Byungsoo Ko, Isaac Kang, and Nam Ik Cho. 2022. Deep hash distillation for image retrieval. In European Conference on Computer Vision. Springer, 354–371.

- Krizhevsky et al. (2009) Alex Krizhevsky, Geoffrey Hinton, et al. 2009. Learning multiple layers of features from tiny images. (2009).

- Lew et al. (2006) Michael S Lew, Nicu Sebe, Chabane Djeraba, and Ramesh Jain. 2006. Content-based multimedia information retrieval: State of the art and challenges. ACM Transactions on Multimedia Computing, Communications, and Applications (TOMM) 2, 1 (2006), 1–19.

- Li and van Gemert (2021) Yunqiang Li and Jan van Gemert. 2021. Deep unsupervised image hashing by maximizing bit entropy. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 35. 2002–2010.

- Lin et al. (2013) Guosheng Lin, Chunhua Shen, David Suter, and Anton Van Den Hengel. 2013. A general two-step approach to learning-based hashing. In Proceedings of the IEEE international conference on computer vision. 2552–2559.

- Lin et al. (2014) Tsung-Yi Lin, Michael Maire, Serge Belongie, James Hays, Pietro Perona, Deva Ramanan, Piotr Dollár, and C Lawrence Zitnick. 2014. Microsoft coco: Common objects in context. In Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, September 6-12, 2014, Proceedings, Part V 13. Springer, 740–755.

- Liu et al. (2023) Jiayu Liu, Zhenya Huang, Zhiyuan Ma, Qi Liu, Enhong Chen, Tianhuang Su, and Haifeng Liu. 2023. Guiding Mathematical Reasoning via Mastering Commonsense Formula Knowledge. In Proceedings of the 29th ACM SIGKDD Conference on Knowledge Discovery and Data Mining. 1477–1488.

- Liu et al. (2019) Xingbo Liu, Xiushan Nie, Quan Zhou, and Yilong Yin. 2019. Supervised discrete hashing with mutual linear regression. In Proceedings of the 27th ACM International Conference on Multimedia. 1561–1568.

- Lu et al. (2024) Zepu Lu, Jin Chen, Defu Lian, Zaixi Zhang, Yong Ge, and Enhong Chen. 2024. Knowledge Distillation for High Dimensional Search Index. Advances in Neural Information Processing Systems 36 (2024).

- Luo et al. (2023) Xiao Luo, Haixin Wang, Daqing Wu, Chong Chen, Minghua Deng, Jianqiang Huang, and Xian-Sheng Hua. 2023. A survey on deep hashing methods. ACM Transactions on Knowledge Discovery from Data 17, 1 (2023), 1–50.

- Luo et al. (2021a) Xiao Luo, Daqing Wu, Chong Chen, Jinwen Ma, and Minghua Deng. 2021a. Deep unsupervised hashing by global and local consistency. In 2021 IEEE International Conference on Multimedia and Expo (ICME). IEEE, 1–6.

- Luo et al. (2021b) Xiao Luo, Daqing Wu, Zeyu Ma, Chong Chen, Minghua Deng, Jinwen Ma, Zhongming Jin, Jianqiang Huang, and Xian-Sheng Hua. 2021b. Cimon: Towards high-quality hash codes. In Proceedings of the Thirtieth International Joint Conference on Artificial Intelligence, IJCAI 2021, Virtual Event / Montreal, Canada, 19-27 August 2021. 902–908.

- Luo et al. (2018) Yadan Luo, Yang Yang, Fumin Shen, Zi Huang, Pan Zhou, and Heng Tao Shen. 2018. Robust discrete code modeling for supervised hashing. Pattern Recognition 75 (2018), 128–135.

- Mirzadeh et al. (2020) Seyed Iman Mirzadeh, Mehrdad Farajtabar, Ang Li, Nir Levine, Akihiro Matsukawa, and Hassan Ghasemzadeh. 2020. Improved knowledge distillation via teacher assistant. In Proceedings of the AAAI conference on artificial intelligence, Vol. 34. 5191–5198.

- Norouzi et al. (2012) Mohammad Norouzi, Ali Punjani, and David J Fleet. 2012. Fast search in hamming space with multi-index hashing. In 2012 IEEE conference on computer vision and pattern recognition. IEEE, 3108–3115.

- Park et al. (2019) Wonpyo Park, Dongju Kim, Yan Lu, and Minsu Cho. 2019. Relational knowledge distillation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 3967–3976.

- Passalis and Tefas (2018) Nikolaos Passalis and Anastasios Tefas. 2018. Learning deep representations with probabilistic knowledge transfer. In Proceedings of the European Conference on Computer Vision (ECCV). 268–284.

- Qiu et al. (2021) Zexuan Qiu, Qinliang Su, Zijing Ou, Jianxing Yu, and Changyou Chen. 2021. Unsupervised hashing with contrastive information bottleneck. In Proceedings of the Thirtieth International Joint Conference on Artificial Intelligence, IJCAI 2021, Virtual Event / Montreal, Canada, 19-27 August 2021. 959–965.

- Rousseeuw (1987) Peter J Rousseeuw. 1987. Silhouettes: a graphical aid to the interpretation and validation of cluster analysis. Journal of computational and applied mathematics 20 (1987), 53–65.

- Sandler et al. (2018) Mark Sandler, Andrew Howard, Menglong Zhu, Andrey Zhmoginov, and Liang-Chieh Chen. 2018. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE conference on computer vision and pattern recognition. 4510–4520.

- Saunshi et al. (2019) Nikunj Saunshi, Orestis Plevrakis, Sanjeev Arora, Mikhail Khodak, and Hrishikesh Khandeparkar. 2019. A Theoretical Analysis of Contrastive Unsupervised Representation Learning. In Proceedings of the 36th International Conference on Machine Learning, ICML 2019, 9-15 June 2019, Long Beach, California, USA (Proceedings of Machine Learning Research, Vol. 97), Kamalika Chaudhuri and Ruslan Salakhutdinov (Eds.). PMLR, 5628–5637.

- Shen et al. (2019) Yuming Shen, Li Liu, and Ling Shao. 2019. Unsupervised binary representation learning with deep variational networks. International Journal of Computer Vision 127, 11-12 (2019), 1614–1628.

- Shen et al. (2020) Yuming Shen, Jie Qin, Jiaxin Chen, Mengyang Yu, Li Liu, Fan Zhu, Fumin Shen, and Ling Shao. 2020. Auto-encoding twin-bottleneck hashing. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 2818–2827.

- Simonyan and Zisserman (2015) Karen Simonyan and Andrew Zisserman. 2015. Very deep convolutional networks for large-scale image recognition. In 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, May 7-9, 2015, Conference Track Proceedings.

- Song et al. (2021) Ge Song, Xiaoyang Tan, Jun Zhao, and Ming Yang. 2021. Deep robust multilevel semantic hashing for multi-label cross-modal retrieval. Pattern Recognition 120 (2021), 108084.

- Song et al. (2018) Jingkuan Song, Tao He, Lianli Gao, Xing Xu, Alan Hanjalic, and Heng Tao Shen. 2018. Binary generative adversarial networks for image retrieval. In Proceedings of the AAAI conference on artificial intelligence, Vol. 32.

- Su et al. (2018) Shupeng Su, Chao Zhang, Kai Han, and Yonghong Tian. 2018. Greedy hash: Towards fast optimization for accurate hash coding in cnn. Advances in neural information processing systems 31 (2018).

- Sun et al. (2022) Jinan Sun, Haixin Wang, Xiao Luo, Shikun Zhang, Wei Xiang, Chong Chen, and Xian-Sheng Hua. 2022. HEART: Towards Effective Hash Codes under Label Noise. In Proceedings of the 30th ACM International Conference on Multimedia. 366–375.

- Tan and Le (2019) Mingxing Tan and Quoc Le. 2019. Efficientnet: Rethinking model scaling for convolutional neural networks. In International conference on machine learning. PMLR, 6105–6114.

- Tian et al. (2019) Yonglong Tian, Dilip Krishnan, and Phillip Isola. 2019. Contrastive representation distillation. arXiv preprint arXiv:1910.10699 (2019).

- TONG et al. (2024) Wei TONG, Liyang HE, Rui LI, Wei HUANG, Zhenya HUANG, and Qi LIU. 2024. Efficient similar exercise retrieval model based on unsupervised semantic hashing. Journal of Computer Applications 44, 1 (2024), 206.

- Tu et al. (2021) Rong-Cheng Tu, Xian-Ling Mao, Jia-Nan Guo, Wei Wei, and Heyan Huang. 2021. Partial-softmax loss based deep hashing. In Proceedings of the Web Conference 2021. 2869–2878.

- Tung and Mori (2019) Frederick Tung and Greg Mori. 2019. Similarity-preserving knowledge distillation. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 1365–1374.

- Wang et al. (2022) Jinpeng Wang, Ziyun Zeng, Bin Chen, Tao Dai, and Shu-Tao Xia. 2022. Contrastive quantization with code memory for unsupervised image retrieval. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 36. 2468–2476.

- Wang et al. (2017) Jingdong Wang, Ting Zhang, Nicu Sebe, Heng Tao Shen, et al. 2017. A survey on learning to hash. IEEE transactions on pattern analysis and machine intelligence 40, 4 (2017), 769–790.

- Wang et al. (2021) Shuai Wang, Kun Zhang, Le Wu, Haiping Ma, Richang Hong, and Meng Wang. 2021. Privileged graph distillation for cold start recommendation. In Proceedings of the 44th International ACM SIGIR Conference on Research and Development in Information Retrieval. 1187–1196.

- Xu et al. (2020) Guodong Xu, Ziwei Liu, Xiaoxiao Li, and Chen Change Loy. 2020. Knowledge distillation meets self-supervision. In Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part IX. Springer, 588–604.

- Yang et al. (2018) Erkun Yang, Cheng Deng, Tongliang Liu, Wei Liu, and Dacheng Tao. 2018. Semantic structure-based unsupervised deep hashing. In Proceedings of the 27th international joint conference on artificial intelligence. 1064–1070.

- Yang et al. (2019) Erkun Yang, Tongliang Liu, Cheng Deng, Wei Liu, and Dacheng Tao. 2019. Distillhash: Unsupervised deep hashing by distilling data pairs. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 2946–2955.

- Yu et al. (2022) Zhipeng Yu, Qianqian Xu, Yangbangyan Jiang, Haoyu Qin, and Qingming Huang. 2022. Pay Attention to Your Positive Pairs: Positive Pair Aware Contrastive Knowledge Distillation. In Proceedings of the 30th ACM International Conference on Multimedia. 5862–5870.

- Yuan et al. (2020) Li Yuan, Tao Wang, Xiaopeng Zhang, Francis EH Tay, Zequn Jie, Wei Liu, and Jiashi Feng. 2020. Central similarity quantization for efficient image and video retrieval. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 3083–3092.

- Zhan et al. (2020) Yu-Wei Zhan, Xin Luo, Yongxin Wang, and Xin-Shun Xu. 2020. Supervised hierarchical deep hashing for cross-modal retrieval. In Proceedings of the 28th ACM International Conference on Multimedia. 3386–3394.

- Zhang et al. (2017) Haofeng Zhang, Li Liu, Yang Long, and Ling Shao. 2017. Unsupervised deep hashing with pseudo labels for scalable image retrieval. IEEE Transactions on Image Processing 27, 4 (2017), 1626–1638.

- Zhang et al. (2020) Wanqian Zhang, Dayan Wu, Yu Zhou, Bo Li, Weiping Wang, and Dan Meng. 2020. Deep unsupervised hybrid-similarity hadamard hashing. In Proceedings of the 28th ACM International Conference on Multimedia. 3274–3282.

- Zhao et al. (2023) Guanhao Zhao, Zhenya Huang, Yan Zhuang, Jiayu Liu, Qi Liu, Zhiding Liu, Jinze Wu, and Enhong Chen. 2023. Simulating Student Interactions with Two-stage Imitation Learning for Intelligent Educational Systems. In Proceedings of the 32nd ACM International Conference on Information and Knowledge Management. 3423–3432.

- Zheng et al. (2020) Xiangtao Zheng, Yichao Zhang, and Xiaoqiang Lu. 2020. Deep balanced discrete hashing for image retrieval. Neurocomputing 403 (2020), 224–236.

- Zhu et al. (2021) Jinguo Zhu, Shixiang Tang, Dapeng Chen, Shijie Yu, Yakun Liu, Mingzhe Rong, Aijun Yang, and Xiaohua Wang. 2021. Complementary relation contrastive distillation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 9260–9269.

Appendix A THEORETICAL ANALYSIS FOR CONTRASTIVE KNOWLEDGE DISTILLATION LOSS

In Section 4.1, we have proposed a contrastive knowledge distillation objective in Eq.(3). In this section, we show the derivation of the gradients of this contrastive knowledge distillation objective and demonstrate it is the generalization of our two knowledge distillation targets. Without loss of generality, we use to represent an arbitrary anchor image and rewrite the loss function Eq.(3) as:

| (14) |

Next, we derive the gradient of with respect to the student hash codes of the anchor image :

| (15) | ||||

where , , and represent the coefficient terms defined as:

| (16) |

| (17) |

| (18) |

Please notice that the Hamming distance between two hash codes and can be described as:

| (19) |

We then apply and () weights to individual-space knowledge and structural-semantic knowledge in Eqs. (1) and (2) of the manuscript, respectively. Consequently, we obtain the following equation:

| (20) | ||||

Similarly, the gradient of with respect to is given by:

| (21) |

It is worth noting that in our data augmentation setting. Thus, we can rewrite Eq.(21) as:

| (22) |

Appendix B Dataset, Metric, and Training details

B.1. Dataset

We conduct experiments on three datasets to evaluate the performance of our proposed hashing method. CIFAR-10 (Krizhevsky et al., 2009) consists of 60,000 images from 10 classes. We randomly select 1,000 images per class as the query set, 500 images per class as the training set, and use all remaining images except queries as the database. MSCOCO (Lin et al., 2014) is a large-scale dataset for object detection, segmentation, and captioning. We consider a subset of 122,218 images from 80 categories, as in previous works (Qiu et al., 2021). We randomly select 5,000 images from the subset as the query set and use the remaining images as the database. For training, we randomly select 10,000 images from the database. ImageNet100 is a subset of ImageNet with 100 classes. We follow the settings from (Cao et al., 2017; Fan et al., 2020) and randomly select 100 categories. Then, we use all the images of these categories in the training set as the database and the images in the validation set as the queries. Furthermore, we randomly select 13,000 as the training images from the database.

B.2. Evaluation Metric

B.3. Training Details

We implement models using Pytorch and conduct experiments on two Intel Xeon Gold 5218 CPUs and one NVIDIA Tesla V100. Model training consists of two parts: the training loss in the semantic hashing model itself and the knowledge distillation loss. In our knowledge distillation part, we implement the optimizer Adam for optimization, in which the default parameters are used, and the learning rate is set to 0.001. The temperature is set to 0.3, and we consider the hyper-parameters in and in . We perform the grid search method on different cases for the best combination.

Appendix C THEORETICAL ANALYSIS FOR SIMILARITY PRESERVE KNOWLEDGE DISTILLATION

| 4 | 8 | 16 | 32 | 64 | 128 | 256 | 512 | Params | GFlops | |

| ViT_B_16 | 14.32 | 27.21 | 52.35 | 104.16 | 205.53 | 415.55 | 814.24 | 1796.03 | 86.6M | 17.56 |

| ViT_L_16 | 46.34 | 88.61 | 169.04 | 344.96 | 657.67 | 1354.89 | 2673.72 | 5454.58 | 304.3M | 61.55 |

| ResNet18 | 6.58 | 10.48 | 19.58 | 35.83 | 68.22 | 164.84 | 333.79 | 648.53 | 11.7M | 1.81 |

| MobileNetV2 | 9.35 | 12.98 | 20.85 | 36.91 | 69.67 | 162.54 | 323.27 | 642.99 | 3.5M | 0.3 |

| EfficientNetB0 | 12.75 | 15.81 | 24.36 | 40.98 | 89.20 | 175.33 | 313.07 | 637.89 | 5.3M | 0.39 |

| n=10,000,000; k=100 | 2.47 | 2.61 | 2.75 | 2.85 | 4.70 | 6.67 | 12.16 | 24.13 | - | - |

| n=10,000,000; k=1000 | 5.25 | 5.87 | 7.97 | 8.46 | 13.38 | 17.36 | 28.47 | 65.26 | - | - |

| n=30,000,000; k=100 | 5.52 | 7.59 | 10.11 | 13.39 | 14.54 | 22.28 | 36.68 | 65.61 | - | - |

| n=30,000,000; k=1000 | 12.38 | 18.54 | 26.64 | 37.84 | 45.36 | 68.16 | 106.63 | 174.84 | - | - |

| n=50,000,000; k=100 | 8.96 | 11.7 | 14.02 | 16.17 | 20.7 | 32.91 | 61.51 | 109.77 | - | - |

| n=50,000,000; k=1000 | 20.15 | 27.34 | 37.63 | 47.73 | 61.36 | 99.62 | 196.35 | 347.72 | - | - |

In Section 5.1, we have found that in the asymmetric paradigm (ASHP), the results of the Similarity-Preserving knowledge distillation (SP) (Tung and Mori, 2019) method on different cases are vastly different. In this section, we present a formal analysis to explore why this phenomenon occurs. We represent the output of the student model and teacher model as binary hash codes and , respectively, where denotes the length of the hash code. The objective of the SP method is defined as:

| (23) |

where denotes the Frobenius norm. To simplify the analysis, we consider the relationship between hash codes of arbitrary two images’ hash codes and and express the corresponding loss function as:

| (24) |

We assume that , where represents the k-th bit of . Expanding Eq. (24), we obtain:

| (25) | ||||

Notice that for , we have , then we get:

| (26) | ||||

To minimize , we need to maximize the second term and minimize the third term. We observe that if any satisfies the following condition:

| (27) |

then can be minimized to because the second term is and the third term is . Based on Eq. (27), we identify two optimization directions: (1) for any and , , which make the student model learn the individual-space knowledge from teacher model to achieve semantic space alignment; (2) there exist and such that , which can not ensure semantic space alignment. That is why the SP method on the ASHP works in some cases but not in others. The SP method works well in some cases where the model tends to achieve direction (1) more, enabling the student model to achieve semantic space alignment with the teacher model. Conversely, if the optimization tends to achieve direction (2) more, it cannot achieve semantic space alignment and cannot to ensure that the hash codes from different models for one image are mapped to a close position in Hamming space.

Appendix D Analysis of offset positive sample

In this experiment, we explored the occurrence probability of offset positive samples in CIFAR-10 when using EfficientNetB0 or ViT_B_16 as the CIBHash model’s backbone. We inputted the training images into the trained teacher model to obtain hash codes and conducted k-means clustering on the to assign pseudo label for each image. We then created augmented images using the same augmentation techniques as in CIBHash (Qiu et al., 2021). By inputting into the same model, we assigned the centroid as the pseudo label for each augmentation based on the Hamming distance. We defined the offset positive rate () as:

| (28) |

where is the indicator function. A higher indicates a greater occurrence of offset positive samples. We set various cluster numbers and conducted repeated experiments to increase the credibility of the results. The box plots in Figure 6 show that the is significant, and with an increasing number of clusters, the also increases. This outcome demonstrates that regardless of whether a lightweight backbone EfficientNetB0 or a more powerful model ViT_B_16 is employed, the “offset positive sample” is present. Besides, these findings support our consideration that removing offset positive samples is necessary for effective knowledge distillation. Intuitively, reducing the degree of image augmentation can decrease , but this may also reduce the number of hard positive samples in the augmentations, leading to performance degradation. Therefore, there could be a trade-off in this process, which requires further research in the future.

Appendix E Inference and Search Time Analysis

This section presents an analysis of the time required for model inference and hash code search. We provide a detailed examination of key factors that significantly impact these processes. We explore different backbones and batch sizes to assess their influence on inference time. Additionally, we investigate the impact of varying candidate sizes, the number of relevant images to retrieve, and batch sizes on search time. It is worth noting that the inference and search time can also be affected by various factors, including code implementation, used libraries, software versions, and other hardware-related factors in practice. We implement our experiments on our server as described in Section B.3. To construct the index, we utilized the faiss library 444https://github.com/facebookresearch/faiss.

Table 3 provides an overview of the inference time overhead and search time overhead. The upper part of the table shows the inference time of the CIBHash (Qiu et al., 2021) with different backbones and batch sizes. Although large-scale backbones provide better performance for semantic hashing models, it is noteworthy that the inference time of ViT_L_16 is approximately seven times higher than that of smaller models such as ResNet18. The lower part of the table shows the search time required to locate relevant images after obtaining the hash codes from the CIBHash model, where denotes the size of the candidate set, and represents the retrieval of the top most relevant images. As expected, a larger candidate set size and a larger value of will take more time to find relevant images. Moreover, we can find that the search time overhead is higher than the inference time when considering the same batch size. When using a large batch size, the inference time becomes the dominant factor in the overall time consumption of the retrieval procedure.