Binary Neural Network in Robotic Manipulation: Flexible Object Manipulation for Humanoid Robot Using Partially Binarized Auto-Encoder on FPGA

Abstract

A neural network based flexible object manipulation system for a humanoid robot on FPGA is proposed. Although the manipulations of flexible objects using robots attract ever increasing attention since these tasks are the basic and essential activities in our daily life, it has been put into practice only recently with the help of deep neural networks. However such systems have relied on GPU accelerators, which cannot be implemented into the space limited robotic body. Although field programmable gate arrays (FPGAs) are known to be energy efficient and suitable for embedded systems, the model size should be drastically reduced since FPGAs have limited on-chip memory. To this end, we propose “partially” binarized deep convolutional auto-encoder technique, where only an encoder part is binarized to compress model size without degrading the inference accuracy. The model implemented on Xilinx ZCU102 achieves 41.1 frames per second with a power consumption of 3.1 W, which corresponds to 10 and 3.7 improvements from the systems implemented on Core i7 6700K and RTX 2080 Ti, respectively.

I Introduction

Robotic manipulation of deformable objects such as clothes attracts ever increasing attention since these tasks are one of the basic and essential activities in our daily life. This type of manipulation is one of the most challenging tasks since the shape of flexible objects changes largely for different end-effector trajectories. To solve this problem, applications of end-to-end learning, where robots learn directly from human demonstrations, have been reported [1, 2, 3]. One of the state-of-the-art method proposed by Pin-Chu Yang et al. introduced a two-stage deep learning model where a deep convolutional autoencoder (DCAE) extracts low-dimensional image features from raw camera inputs, followed by a fully connected deep time delay neural network for predicting the temporal sequences of the image features and the joint angles of the robot. During the inference phase, the raw image captured by the camera mounted on robot’s head and current joint angles of the robot are provided to the trained model which, in turn, outputs the joint angles at the next time step [4]. Although they succeeded in folding a towel, their approach is implemented on a GPU-based platform consuming hundreds of watts, thus cannot be embedded into the space-limited robotic body. To alleviate this problem, we propose an FPGA-based system for robotic manipulation of flexible objects. Contrary to instruction-based processors like CPU or GPU, FPGAs are configured by hardware circuit specifications, which drastically improves the energy efficiency. However, FPGA platforms usually has less memory space compared to GPU platforms, and hence an aggressive model size compression is definitely required. One of the well-known model compression method is “binarized” neural networks (BNNs), where the synaptic weights and network activations are approximately represented by 1-bit values [5]. Surprisingly, even when the networks are binarized, the BNNs have acceptable performance on several tasks such as MNIST or CIFAR10 image classifications.

In spite of the successful applications of BNNs in the image classification tasks, we find that the naive binarization of DCAE results in the corruption of the extracted image features since the binarization blocks gradients to well propagate through the network. To alleviate this, we propose “partial” binarization method named partially-binarized DCAE (PB-DCAE), where only synaptic weights and activations in the encoder part are binarized whereas those in the decoder part are left unchanged. The full precision decoder part help gradient to propagate through the deep layers, resulting in the well self-organized image features. Note here that the decoder part is only required during training and is removed for inference. Hence, our method does not incur any additional memory space compared to the fully binarized DCAE. This paper makes the following contributions:

-

•

To the best of our knowledge, this is the first study reporting the successful application of BNN into robotic domain.

-

•

A lightweight neural network model named PB-DCAE for end-to-end learning of flexible object manipulation by a humanoid robot is proposed.

-

•

The proposed model implemented on Xilix ZCU102 board demonstrates that 10 and 3.7 more improvement in energy efficiency compared to that implemented on Core i7 6700K CPU and RTX2080Ti GPU, respectively.

II PRELIMINARIES

II-A End-to-End Learning of Robotic Object Manipulation

End-to-end learning of robotic manipulation is composed of three phases: (1) data collection, (2) model training, and (3) model deployment on a robot.

II-A1 Data collection

A robot teleoperating environment is constructed, where a human instructor can control robot end-effectors via 3D spatial input devices. During the teleoperation, a robot is operated by a human instructor while recording the states and the corresponding actions from its own sensors, e.g., a camera mounted on the robot’s head to capture changes in the physical environment and angle sensors to monitor the movements of the robot arms. Note here that since the state and actions are recorded by robot’s own sensors, no coordinate transformations are required to train the model.

II-A2 Model training

The typical network architecture to enable end-to-end robotic object manipulation is to combine two different models: a DCAE to extract low-dimensional features from raw images and a recurrent neural network (RNN) to model temporal sequences of image features and joint angles [4, 6]. DCAE is a symmetrical hourglass-shaped convolutional neural network composed of an “encoder” and a “decoder,” which is used for dimensionality reduction [7]. The training objective of the DCAE is to reconstruct the original images provided to the encoder. Since its middle layers have fewer neurons compared to the input/output layers, the trained DCAE can create a “compressed” representation of the original image in its middle layer. After the training, the decoder is removed and the output of the encoder is directed to the RNN for joint angle prediction.

RNN is a class of neural networks where the outputs are fed as input recursively, which enable the RNN to have internal memory and to behave temporally. RNN can process sequential information and maintain robustness against instantaneous noise. Therefore, RNN are well suited for robot manipulation and have been used for various tasks such as drawing[8], tool usage[9], and ”put-in-box” tasks[2]. Given the image features extracted by the encoder and the current joint angles of the robot, the RNN is trained to successfully predict the joint angles at the next time step.

II-A3 Model deployment

Firstly, the camera image is fed into the trained encoder to extract the low-dimensional feature. Secondly, the feature vector is concatenated with the current joint angles, which is provided to the RNN for predicting the joint angles at the next time step. Finally, the predicted joint angles are transmitted to the robot controller to actually rotate joints. The above steps are repeated until the robot complete object manipulation.

II-B Binarized Neural Networks

To reduce the model size, [5] has proposed binarization of synaptic parameters and activations, i.e., a real-valued weight or activation is approximated by either or . The real-valued input tensor is binarized using the following activation function:

| (1) |

However, since the derivative of the sign function is almost zero everywhere, naive binarization leads to poor network performance. To alleviate this, [5] proposed a technique called “straight-through estimator,” where the derivative of the sign function is substituted by that of hard hyperbolic tangent, i.e.,

| (2) |

By constraining the weights and activations to be binary, we can (1) dramatically reduce model size, e.g., considering float32 as baseline, binarization achieves 1/32 model size reduction, and (2) multiply-and-accumulate (MAC) operations can be realized by using only XNOR gates and 1’s counters.

II-C Batch Normalization

Even with “straight-through estimator,” training of BNN is unstable and gradients tend to explode frequently. Hence, BNN usually comes with batch normalization (BN) layer which basically standardizes activations, i.e., it re-scales and re-centers input activations so that their means and variances become zero and one, respectively[10]. Let is the input to the BN layer, it computes:

| (3) |

where is the output of the BN layer, and are trainable parameters, and are the empirical mean and variances of mini-batch, and is a small constant for numerical stabilization.

One may argue that adding BN requires additional hardware resource since Eq. (3) includes a division and multiplication. However, remembering that BNN requires only the sign of activations, the BN layer at inference time can be realized simply by shifting bias term:

| (4) |

where is the floor function.

Owing to the straight-through estimator and BN layers, BNNs can achieve comparable performance with the full-precision counterpart. However, we find that naive replacement of DCAE with BNNs results in corruption of extracted image features, which motivates us to develop “partiall” binarization technique.

III Proposed system

III-A Overview of our system

Fig. 1 shows the overall system, which is composed of two neural networks: a PB-DCAE to convert raw images into low-dimensional feature vectors and a long-short term memory (LSTM) to generate joint angles of robot arms. Since the LSTM accounts for only 1.2% of whole model size, we leave the LSTM unquantized to maintain its performance. Zynq SoC platform is selected to cope with heterogeneous arithmetic precisions, i.e., the PB-DCAE requires binary operations whereas the LSTM requires floating point operations, and the PB-DCAE and the LSTM are implemented onto a programmable-logic (PL) and processing-system (PS) having an ARM processor, respectively. Our model is firstly trained by using GPU. Then, the parameters of PB-DCAE are converted to C++ header file and converted to “.bit” file via Vivado toolchain to configure the PL. The LSTM is re-implemented by using Numpy from scratch so that it can be efficiently executed on the resource limited ARM processor.

III-B Model architecture

III-B1 PB-DCAE for image feature extraction

To implement the model onto FPGA, the model size should be drastically reduced so that it can fit onto on-chip memory. BNN is a promising technique to compress the model. However, as we demonstrate in Sec. IV, the naive binarization leads to corruption of extracted image features. To alleviate this problem, we propose “partially” binarized DCAE (PB-DCAE), where only the weights and activations in the encoder part are binarized. Since the decoder part is left unchanged, the errors at the output can well backpropagate through the network resulting in the better generalization capability. Note again that the decoder is required only during the training phase and that it is removed for the deployment phase. Hence, PB-DCAE does not incur any additional memory cost compared to fully binarized DCAE.

III-B2 LSTM-based Motion generation module

For an RNN part, we employ LSTM which is an extention of naive RNN so that it can take long-term dependencies in sequential data. LSTMs are widely used for their expressive power. For example, R. Rahmatizadeh et al. employed a low-cost robotic arm to generate multiple tasks using LSTM[11], and S. Funabashi et al. achieved in-hand object manipulations via CNN-LSTMs[12]. The input of LSTM is the robot’s joint angles including the gripper commands and image features extracted by PB-DCAE, and outputs the joint angles at the next time step. By recursively feeding the predicted outputs into the input of LSTM, it can make multi-step prediction, which is used to evaluate the performance of the trained LSTM.

III-C Training

For the ease of stabilization of training, we employed a two-stage training, where the PB-DCAE is trained firstly to yield well self-organized image features, followed by training of LSTM.

PB-DCAE training: PB-DCAE is trained to reconstruct input images provided to the input of the encoder. Since PB-DCAE has a hourglass-shaped structure, i.e., middle layers have fewer neurons than input or output layers, the network is forced to “reconstruct” images by using only degenerated information. Hence, the encoder part tries to find better compressive expressions of input images for the decoder to successfully recover original images. After the training, the decoder part is removed and the parameters of the encoder are frozen.

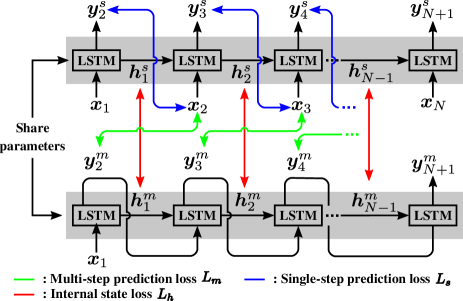

LSTM training: The image features extracted by the trained PB-DCAE is concatenated with the joint angles and the gripper command to form a visuo-motor sequence, . Here, is a vector containing the extracted image feature and the joint angles and the gripper command at time step . is the sequence length. At each time step, the LSTM takes the input and the current state , and outputs and the updated state . Let be the mathematical function representing the LSTM’s behaviour, the relationship between inputs and outputs can be written as: . By recursively feeding to the LSTM, it can generate the sequence: . In our application, we want to predict visuo-motor sequence and hence the training objective is to minimize mean-square-loss between and . Unfortunately, however, this “multi-step” prediction task is difficult to train and loss tend to diverge frequently especially for the longer sequences. Hence, to stabilize the training, we propose to exploit single-step prediction loss in combinaiton with multi-step prediction loss. Our training setup is shown in Fig. 2.

1) Multi-step prediction loss: We firstly compute “multi-step” prediction loss by recursively feeding LSTM’s outputs to its inputs as shown in the lower part of Fig. 2. Let be the generated sequence. Then, the multi-step prediction loss is given by:

| (5) |

2) Single-step prediction loss: Unlike the multi-step prediction setup, the LSTM takes “teacher” visuo-motor sequence at each time step, i.e., given an element of visuo-motor sequence and the internal state , it computes . Then, by recursively feeding only the internal state , the next output is computed as shown in the upper part of Fig. 2: . Repeating this procedure, we have a sequence of single-step ahead prediction of visuo-motor sequence: . Then, the single-step prediction loss is given by:

| (6) |

3) Internal state loss: Since internal states have no teacher signal, the small error may accumulate and cause gradient explosion when making multi-step prediction. Hence, we further introduce an additional loss to make close the internal states for single-step prediction and those for multi-step prediction :

| (7) |

The final loss function is given by linear combination of the three losses as follows:

| (8) |

where , , and are hyper-parameters.

III-D Implementation

The proposed model is implemented on Zynq UltraScale+ MPSoC ZCU102 board. The on-chip RAM and LUT resources of this FPGA board are very limited, so it is necessary to implement a reduced hardware resource usage while maintaining the inference throughput. The overall design flow is shown in Fig. 3.

First, PB-DCAE is trained using PyTorch framework and the network parameters are extracted. Next, a C++ model of the proposed PB-DCAE is created and synthesized using two Xilinx Vivado software to yield an executable “.bit” file containing the network topology to properly configure the FPGA board. Finally, download all the generated files to a Xilinx ZCU102 FPGA board and test/measure the performance. PYNQ (PYthon Productivity of zynQ) environment is installed onto ZCU102 board so that we can write programs and view the results via Jupyter Notebook. Note again that The image feature extraction module is implemented on PL whereas the LSTM-based motion generation module is implemented on PS. Since the DCAE-based image feature extraction module accounts for 99.94% of total multiplications required for single step inference the performance of the entire system can be greatly improved even when the LSTM remains on the PS-side.

FPGA implementation: Fig. 4 shows the block diagram of PB-DCAE implemented on FPGA. A dedicated computation unit is assigned for each layer to minimize data movements, and each layer is connected via AXI-Stream interface. To process the data stream efficiently with a small hardware resources, shift registers are prepared to hold only the data necessary for the computation and perform convolution. Binarization allows floating-point multiply-and-accumulate operations to be replaced by XNOR and bit counting, which can be implemented in small circuits. In addition, although BN requires multipliers and adders, it can be converted to integer comparisons by transforming the expression together with the Sign function that follows, thus reducing the circuit size and implementation. The model size can be reduced by binarization. Since the model size is compressed by binarization, all the parameters can be expanded to the on-chip BRAM. Therefore, feature maps and binarization weights can be stored in on-chip memory for PB-DCAE computation. Since no external I/O is involved, latency due to communication can be minimized and the system can be executed with low power consumption.

IV Experiment

IV-A Experimental setup

The dataset for the experiments was collected by using Nextage Open developed by Kawada Robotics. It has two arms with grippers and a camera mounted on robot’s head. Each arm has six degrees of freedom (DoF). To collect the training data, a robot teleoperating environment is deveoped, where a human operator can control the gripper positions by using 3D mouse. Using this environment, the operator is requested to complete a cloth-folding task, during which camera images (1421423=60,492 dimensions, RGB), joint angles of both arms (62=12 dimensions), and a gripper command (1 dimension) are recorded. Note here that since we only used right gripper to pick up the cloth, we only recorded the gripper command attached at the right arm. A total of 75 task sequences were collected, 50 of which were used for training and 25 for evaluation. As a result, approximately 22k and 11k steps of data are used to train and to validate the model, respectively. , , and in Eq. (8) are set to be 0.1, 1.0, and 0.1, respectively. The model architecture is summarized in Tab. I.

IV-B Experimental result

To validate the impact of partial binarization, we firstly compare the quality of reconstructed image using three different DCAEs, i.e., a full-precision DCAE, a fully-binarized DCAE, and a PB-DCAE, as shown in Fig. 5. The left most image is the raw input image which is captured when the robot moves its grippers to pick the towel placed at the center. The remaining three images are those reconstructed by three different DCAEs.

Observing Fig. 5, we see no noticeable difference between images reconstructed by the full-precision DCAE and the PB-DCAE. However, the fully-binarized DCAE outputs the corrupted image. To quantitatively compare the quality of reconstructed images, peak signal-to-noise ratios (PSNRs), one of the common method to measure the image quality of lossy compression codecs, are calculated. PSNR is expressed by

| (9) |

Higher PSNR means higher image quality and, according to [13], PSNR of over 20 dB is sufficient quality for practical applications. As shown in Fig. 5, the average PSNR for full-precision DCAE was 21.85 dB while that for fully-binarized DCAE was 7.164 dB, which again demonstrates that naive binarization leads to corruption of reconstructed images. We also confirmed that PSNR for PB-DCAE was 20.78 dB which is comparable to that of the full-precision DCAE.

We then examine the impact of binarization on the prediction accuracy of the joint angles and the gripper command. For this purpose, we compare the multi-step prediction accuracy of the LSTM, i.e., given only image features, joint angles, a gripper command at time step , the LSTM is requested to predict the whole remaining sequence for by recursively feeding the predicted output into the LSTM. The reconstruction errors calculated by the mean square error between the original and the predicted sequences are 2.98∘, 6.32∘, and 3.43∘ each corresponds to the image features extracted by full-precision DCAE, fully-binarized DCAE, and PB-DCAE, respectively. We again confirmed that the PB-DCAE achieved comparable performance with the full-precision DCAE although the model size was dramatically reduced from 51.3MB to 2.20MB, as shown Tab. I.

We finally compare the energy efficiency of the proposed method with the conventional method relying on a GPU-based platform. The power consumptions of Zynq ZCU102 board, CPU, and GPU are measured by using Maxim powertool, “s-tui” command, and “nvidia-smi” command, respectively. Tab. II summarizes the processing time, power consumption, and energy efficiency for CPU, GPU, and Zynq platforms. Studying Tab. II, we can confirm that the Zynq platform achieved the highest energy efficiency, which corresponds to 10 and 3.7 improvements compared to CPU and GPU platform, respectively, while satisfying the processing speed above 30 FPS which is the upper bound determined by the frame rate of a commercial RGB camera.

| Layer | Out. | In. | Float-besed | Proposed |

| F size | F maps | model | model | |

| Conv1 | 142142 | 3 | 3.38 KB | 0.105 KB |

| Max Pool | 7070 | 32 | – | – |

| Conv2 | 7070 | 32 | 72.0 KB | 2.25 KB |

| Max Pool | 3434 | 64 | – | – |

| Conv3 | 3434 | 64 | 288 KB | 9.00 KB |

| Max Pool | 1616 | 128 | – | – |

| Conv4 | 1616 | 128 | 1.15 MB | 36.0 KB |

| Max Pool | 77 | 256 | – | – |

| FC1 | 1024 | 12544 | 50.2 MB | 1.57 MB |

| FC2 | 64 | 1024 | 256 KB | 8.00 KB |

| LSTM1 | 100 | 77 | 280 KB | 280 KB |

| LSTM2 | 100 | 100 | 316 KB | 316 KB |

| FC3 | 77 | 100 | 30.4 KB | 30.4 KB |

| Total | – | – | 51.3 MB | 2.20 MB |

| Core i7 6700K | RTX 2080Ti | ZCU102 | |

|---|---|---|---|

| Preprocessing [msec] | 0.759 | 1.47 | 4.45 |

| Conv1-FC2 [msec] | 12.4 | 2.24 | 15.4 |

| LSTM1-FC3 [msec] | 0.420 | 0.315 | 4.48 |

| Total [msec] | 13.7 | 4.03 | 24.3 |

| FPS [sec-1] | 73.0 | 248 | 41.1 |

| Power [W] | 55.4 | 69 | 3.1 |

| Efficiency [FPS/W] | 1.32 | 3.59 | 13.3 |

V Conclusion

We proposed a lightweight neural network named PB-DCAE for robotic flexible object manipulation. By binarizing only the encoder part of DCAE, we successfully reduced the model size while preserving the performance of the full-precision DCAE. PB-DCAE and other peripheral modules are implemented on Zynq ZCU102 board, which demonstrated the model size can be reduced by 95.7% compared to the full-precision counterpart, while the degradation of the predictive accuracy of joint angles is kept to 0.45∘. Further, we examined the energy efficiency of our system by comparing the same model implemented on Core i7 6700K and RTX 2080 Ti, which demonstrated that 10 and 3.7 more efficiency compared the CPU and GPU-based platform, respectively.

References

- [1] K. Kawaharazuka, T. Ogawa, J. Tamura, and C. Nabeshima, “Dynamic manipulation of flexible objects with torque sequence using a deep neural network,” in Int. Conf. on Robotics and Automat. (ICRA), pp. 2139–2145, 2019.

- [2] K. Kase, K. Suzuki, P.-C. Yang, H. Mori, and T. Ogata, “Put-in-Box Task Generated from Multiple Discrete Tasks by aHumanoid Robot Using Deep Learning,” in Int. Conf. on Robotics and Automat. (ICRA), pp. 6447–6452, 2018.

- [3] N. Saito, N. B. Dai, T. Ogata, H. Mori, and S. Sugano, “Real-time Liquid Pouring Motion Generation: End-to-End Sensorimotor Coordination for Unknown Liquid Dynamics Trained with Deep Neural Networks,” in Int. Conf. on Robotics and Biomimetics (ROBIO), pp. 1077–1082, 2019.

- [4] P.-C. Yang, K. Sasaki, K. Suzuki, K. Kase, S. Sugano, and T. Ogata, “Repeatable folding task by humanoid robot worker using deep learning,” IEEE Robot. Autom. Lett., vol. 2, no. 2, pp. 397–403, 2016.

- [5] I. Hubara, M. Courbariaux, D. Soudry, R. El-Yaniv, and Y. Bengio, “Binarized Neural Networks,” in Int. Conf. on Neural Inf. Process. Syst. (NIPS), p. 4114–4122, 2016.

- [6] K. Kase, R. Nakajo, H. Mori, and T. Ogata, “Learning Multiple Sensorimotor Units to Complete Compound Tasks using an RNN with Multiple Attractors,” in Int. Conf. on Intell. Robots and Syst. (IROS), pp. 4244–4249, 2019.

- [7] J. Masci, U. Meier, D. Cireşan, and J. Schmidhuber, “Stacked convolutional auto-encoders for hierarchical feature extraction,” in Int. Conf. on Artif. Neural Networks, pp. 52–59, Springer, 2011.

- [8] K. Sasaki, H. Tjandra, K. Noda, K. Takahashi, and T. Ogata, “Neural network based model for visual-motor integration learning of robot’s drawing behavior: Association of a drawing motion from a drawn image,” in Int. Conf. on Intell. Robots and Syst. (IROS), pp. 2736–2741, 2015.

- [9] K. Takahashi, T. Ogata, H. Tjandra, Y. Yamaguchi, and S. Sugano, “Tool-body assimilation model based on body babbling and neurodynamical system,” Math. Problems in Eng., vol. 2015, 2015.

- [10] S. Ioffe and C. Szegedy, “Batch normalization: Accelerating deep network training by reducing internal covariate shift,” in Int. Conf. on Mach. Learn. (ICML), pp. 448–456, 2015.

- [11] R. Rahmatizadeh, P. Abolghasemi, L. Bölöni, and S. Levine, “Vision-based multi-task manipulation for inexpensive robots using end-to-end learning from demonstration,” in Int. Conf. on Robot. and Automat. (ICRA), pp. 3758–3765, 2018.

- [12] S. Funabashi, S. Ogasa, T. Isobe, T. Ogata, A. Schmitz, T. P. Tomo, and S. Sugano, “Variable In-Hand Manipulations for Tactile-Driven Robot Hand via CNN-LSTM,” in Int. Conf. on Intell. Robots and Syst. (IROS), 2020.

- [13] N. Thomos, N. V. Boulgouris, and M. G. Strintzis, “Optimized transmission of JPEG2000 streams over wireless channels,” IEEE Trans. Image Process., vol. 15, no. 1, pp. 54–67, 2005.