Bi-Level Graph Structure Learning for

Next POI Recommendation

Abstract

Next point-of-interest (POI) recommendation aims to predict a user’s next destination based on sequential check-in history and a set of POI candidates. Graph neural networks (GNNs) have demonstrated a remarkable capability in this endeavor by exploiting the extensive global collaborative signals present among POIs. However, most of the existing graph-based approaches construct graph structures based on pre-defined heuristics, failing to consider inherent hierarchical structures of POI features such as geographical locations and visiting peaks, or suffering from noisy and incomplete structures in graphs. To address the aforementioned issues, this paper presents a novel Bi-level Graph Structure Learning (BiGSL) for next POI recommendation. BiGSL first learns a hierarchical graph structure to capture the fine-to-coarse connectivity between POIs and prototypes, and then uses a pairwise learning module to dynamically infer relationships between POI pairs and prototype pairs. Based on the learned bi-level graphs, our model then employs a multi-relational graph network that considers both POI- and prototype-level neighbors, resulting in improved POI representations. Our bi-level structure learning scheme is more robust to data noise and incompleteness, and improves the exploration ability for recommendation by alleviating sparsity issues. Experimental results on three real-world datasets demonstrate the superiority of our model over existing state-of-the-art methods, with a significant improvement in recommendation accuracy and exploration performance.

Index Terms:

Next POI Recommendation, Graph Structure Learning, Hierarchical Structure, Contrastive Multiview Fusion.1 Introduction

The emergence of location-based social networks has brought to light a subject of great interest to both researchers and service providers alike: next point-of-interest (POI) recommendation. This task seeks to comprehend the temporal nature of a user’s preferences by analyzing their historical check-in sequences and then make predictions about the next POIs that they are most likely to visit. Such insights can be used to improve both the user experience as well as the service provider’s services.

Graph-based methods have been widely used in POI recommendation due to their capability of modeling global collaborative relationships of POIs across users. These methods typically involve two stages: (1) the construction of a topology graph based on POI features and (2) the learning of POI representations based on the constructed graph. Depending on the type of information to be used, such a graph may be built by taking into consideration the spatial information of POIs, such as distance intervals [1, 2, 3] or grid regions [4], as well as temporal features from users’ sequential check-in data, such as the average time intervals between consecutive visits [1] or the Jaccard similarity of time slot sets [4]. Additionally, it is also common to model transitions between POIs based on the number or frequency of consecutive visits between each POI [5, 6, 3]. After the graph is built, graph neural networks (GNNs) are used to learn the POI representations by aggregating information from the neighborhood of the nodes. These POI representations are then used to further learn users’ preferences from the sequences of visited POIs and rank candidate POIs for producing recommendations.

Despite their success, existing graph-based methods for POI recommendation suffer from various limitations.

Firstly, previous methods construct graphs based solely on local neighborhoods, disregarding the valuable hierarchical structures of POIs. Hierarchical structure means that fine-grained POIs can be divided into coarse-grained groups, and POIs within the same group have similar group characteristics in some aspects. As shown in Fig. 1, POIs could be grouped into the same group due to similar spatial locations, transition sequence patterns, temporal visiting peaks, or category descriptors. These hierarchical structures have been proven to improve recommendations by mitigating the sparsity issues [7, 8, 9] and improving the exploration ability [4, 10]. Some previous methods handle hierarchical information by employing multi-task learning [4] or designing hierarchical encoders [11], however, the ingenious combination of the advantages of GNNs and hierarchical structures remains unexplored.

Secondly, the graph structure of these existing methods is usually fixed during training, determined by pre-defined rules or empirical laws [6, 3, 12, 13]. This lack of flexibility could lead to performance degradation due to the presence of noise or incompleteness in the graph structure, since GNNs heavily rely on the quality of graph structure [14]. For example, there could be noisy and missing edges in the graph structure, due to erroneous check-ins and missed check-ins by users, or even malicious attacks.

Lastly, multiple POI features (e.g., spatial, transition, and temporal features) are usually leveraged to construct multiple graphs. In different graphs, the same POI may have common neighbors and graph-specific neighbors, so there is shared information and specific information contained in different POI features. However, existing approaches often simply linearly combine or concatenate the POI representations in each graph to fuse them [1, 4], which inadequately models the alignment and complementary relationships of POI representations in different graphs, and results in sub-optimal POI representations.

To overcome the aforementioned limitations, we introduce a novel Bi-level Graph Structure Learning method for next POI recommendation, BiGSL for brevity. Our proposed BiGSL model consists of two graph structure learning modules that adaptively capture coarse- and fine-grained connectivity structures of POIs. Specifically, we first map each POI to a node in the graph space and then resort to clustering on the POI features to discover the hierarchical structure. The resulting prototypes represent the coarse-grained clusters, which are then added to the graph to augment the neighborhood of POI nodes. Subsequently, we introduce a pairwise structure learning method to infer the connectivity between POI pairs and prototype pairs in an adaptive manner, the result of which represents fine-grained connections that supplement the coarse-grained information. This bi-level approach produces coarse-to-fine connectivities of POIs that can be learned in a data-driven manner, thereby alleviating the first two limitations. Based on the bi-level graphs, we propose a multi-relational graph attention network that considers two facets of local structures including POI- and prototype-level neighbors, which produces better POI representations as a result. Finally, to further boost the performance, we construct multiple views based on the original POIs and users’ sequential data. To encourage the fusion of POI information from distinct views, we design a contrastive multiview fusion approach by mining view-shared and view-specific information, which better aligns complementary features in different views.

The main contributions of our work are outlined as follows:

-

•

We propose a novel BiGSL model for next POI recommendation, which employs a bi-level graph structure learning method that adaptively infers hierarchical graph structures in a data-driven manner.

-

•

Based on the learned bi-level graphs, we design a multi-relational graph network to generate more informative POI representations, considering both POI- and prototype-level neighbors.

-

•

We further introduce a multiview contrastive learning strategy to integrate information from multiple views to improve the recommendation accuracy.

-

•

We conduct extensive experiments on three real-world datasets including two widely adopted benchmarks (Gowalla and Foursquare) and a new commercial dataset. The results show that BiGSL significantly outperforms state-of-the-art methods in recommendation accuracy and exploration performance.

2 Related Work

In this section, we succinctly review existing studies for next POI recommendation and graph structure learning.

2.1 Next POI Recommendation

Next POI recommendation aims to infer the users’ dynamic preferences and predict where the user will go next, given the historical check-ins and a set of POI candidates.

Recurrent neural networks (RNNs) and self-attention have shown promising performance in handling sequential data, hence they have been widely used as the backbone of the next POI recommenders [15, 16, 17, 18]. Some studies are dedicated to capturing sequential dependencies in sequences to model the dynamic user preferences [19, 20, 21, 22]. On the other hand, POI features and historical check-ins contain rich collaborative signals, such as spatial location, visited time, and frequency of consecutive visits, and are therefore leveraged to make more effective recommendations from sparse data. Early works introduce these collaborative signals directly into the backbone, such as computing transition matrices or gates in RNNs [23, 24, 25, 26] and attention maps in self-attention [27].

Recently, it has been found that these collaborative signals can be represented by graphs and the GNNs can be employed to effectively capture the correlations among POIs [12, 3, 13]. For example, STP-UDGAT [1] constructs three types of POI graphs based on the spatial distance, time interval, and consecutive visiting, so as to learn user preferences in different views. GETNext [6] introduces a global trajectory graph to better leverage the extensive collaborative signals from different users. HMT-GRN [4] constructs global spatio-temporal graphs to model collaborative signals among POIs and utilizes auxiliary tasks to alleviate the data sparsity issue.

Although great success has been achieved, these existing graph-based methods rely on pre-defined rules to construct graphs, which leads to noise and incompleteness in the graph and degenerates the performance of GNNs [28]. Although GraphFlashback [2] attempts to automatically learn latent POI graphs based on a holistic knowledge graph, the topology of such latent graphs lacks interpretability and still neglects the underlying hierarchical structure in POI features. SNPM [29] is another attempt to infer latent graph structures. However, it only considers relationships within the same region and remains constrained by a pre-defined graph structure. Our proposed BiGSL addresses the above issues by hierarchical and pairwise structure learning.

2.2 Graph Structure Learning

Although GNNs have achieved superior performance in analyzing graph-structured data, most GNNs are highly sensitive to the quality of graph structures and usually require a credible graph structure that is hard to construct in real-world applications [30]. Given that GNNs recursively aggregate information from neighborhoods to update node embeddings, the iterative nature of this process has consequential cascading effects. Small noise in a graph will be propagated to the neighboring nodes, subsequently affecting the embeddings of numerous other nodes [14, 31]. Recently, considerable literature has arisen around the theme of graph structure learning (GSL), which targets at jointly learning an optimized graph structure and corresponding representations. Existing GSL methods can be roughly grouped into three categories: metric learning that models or refines the edge weights by measuring the similarity between node representations [32, 33, 34], probabilistic modeling that models the probability distribution of edges and then samples a graph from this distribution [30, 35, 36], and direct optimization that treats the graph adjacency matrix as parameters and optimizes it directly along with GNN parameters [37, 38, 39].

Although these studies leverage graph structure learning to refine the graph structures, they are not tailored for next POI recommendation. They only learn pairwise relationships between nodes and lack consideration on meaningful hierarchical structure. NCL [40] extracts coarse-grained prototypes for cross-granularity contrastive learning in graph collaborative filtering, inspiring us to construct hierarchical graph structures using prototypes. Distinct from previous graph structure learning methods, we not only adaptively learn pairwise relationships between POIs to suppress the potential noise, but also construct hierarchical structures to further enrich the neighborhoods of POIs by extracting POI prototypes, so as to capture the global relationships between POIs effectively and provide accurate recommendations.

3 Preliminaries and Problem Statement

Let and denote the set of users and POIs, respectively.

Definition 1. Check-in. A check-in record is represented in a tuple , in which is the POI visited on time step with its location coordinates , and is the timestamp.

Definition 2. Check-in Sequence. Each user has a chronologically ordered historical check-in sequence , where is the last POI visited.

Definition 3. POI Feature. Apart from the POI IDs, POIs typically possess several primitive features, including spatial features (latitude and longitude), temporal features (the time distribution of check-ins), transition features (the distribution of consecutive check-ins between POIs), and category features, among others. These features reveal the similarity or collaborative relationships among POIs, making them useful in constructing graphs for existing graph-based next POI recommendation methods, following pre-defined rules. For instance, a spatial graph can be constructed by dividing grids according to latitude and longitude or calculating distances, while a temporal graph can be constructed based on temporal feature similarity.

Definition 4. Feature View. To effectively capture collaborative signals embedded in different primitive features, we adopt a multi-view framework. In each feature view, we model the information provided by only one type of primitive features and then fuse the information from all views. We denote the set of feature views, which also represents the set of POI primitive feature types, as . In feature view , the primitive features of POIs are denoted by , where represents the dimension of the primitive feature. The -th row, , denotes the primitive feature of POI . For instance, in the spatial feature view, , and .

Problem 1. Next POI Recommendation. Next POI recommendation takes a user’s historical check-in sequence and the POI candidate set as input to generate a ranked POI list for the next time step , where the next visited POI should be highly ranked.

4 The proposed BiGSL Model

This section elaborates on our proposed BiGSL. We first introduce the base recommender backbone. Then, we introduce four main components in our model: (1) hierarchical structure learning that infers the hierarchical structure by grouping POIs into different clusters and extracting prototypes, (2) pairwise structure learning that adaptively infers relationships between POIs or prototypes, (3) a novel multi-relational graph attention network that can fully exploit learned hierarchical graphs, and (4) contrastive multiview fusion that computes view-shared and view-specific representations to facilitate information fusion. Finally, we explain how to optimize our model and provide a complexity analysis. The overall framework of BiGSL is shown in Fig. 2.

4.1 Backbone Recommender

The key components of our proposed BiGSL are model-agnostic and can be plugged into any sequential recommendation model. To show the effectiveness of our approach, we choose a simple yet effective recommender as the backbone, which contains only an embedding layer, an LSTM layer and a dense layer with softmax normalization.

The embedding layer offers dense POI ID embeddings and user ID embeddings. We describe a POI (a user ) with an embedding vector (), where denotes the embedding size.

We first use the LSTM layer to learn the user’s dynamic preference from the historical check-in sequence :

| (1) |

where represents the LSTM layer, and is the hidden representation of user ’s historical check-ins.

Next, we compute the conditional probability of next POI distribution and rank all POIs to make personalized recommendation:

| (2) |

where is the predicted conditional probability distribution regarding , and is the probability that the next POI is given the historical sequence . is the trainable user ID embedding to introduce personalization, is the vector concatenation, and is the learnable weight matrix. The softmax function is performed to compute the conditional probability of the next POI distribution. Finally, we can sort POIs in descending order of conditional probability and get a ranked POI list.

4.2 Hierarchical Structure Learning

Based on the aforementioned backbone, we point out that existing methods, which employ conventional GNNs on manually constructed graphs to encode the interaction relationship between POIs, cannot model the hierarchical information well. To address this concern, we propose a hierarchical structure learning method to embed the hierarchical information in the graph structures explicitly.

To model the hierarchical nature in POI features, we propose a hierarchical structure learning method. We design a hierarchical structure learning objective to group POIs into different clusters and extract prototypes. Roughly speaking, prototypes can be regarded as the center of clusters that represent a group of semantically similar POIs.

Formally, the goal of graph structure learning is to maximize the following log-likelihood function:

| (3) | ||||

where is learnable parameters of model, is primitive features of POIs, is the learned structure embedding of POI that will be used to construct the graph structure. is the set of cluster centroids, and we use to denote the number of clusters. The objective in Eq. 3 is hard to optimize directly because are both free variables. Therefore, we introduce its tractable lower bound by Jensen’s inequality:

| (4) | ||||

where denotes the distribution of latent variable when is observed. The goal of graph structure learning can be reformulated to maximize the function over when is estimated. Since the cluster centroids are latent, we introduce the Expectation–Maximization (EM) algorithm to formulate the optimization process.

In the E-step, is fixed and can be estimated by K-Means algorithm over all . The distribution is estimated by a hard indicator if POI belongs to -th cluster, and for other centroids .

In the M-step, we fix and optimize . By introducing hard indicator , maximizing the lower bound in Eq. 4 yields a loss function:

| (5) |

Taking inspiration from NCL [40], we assume that the distribution of POIs is isotropic Gaussian over their corresponding clusters and each Gaussian distribution has the same variance. Therefore, the loss function can be written as:

| (6) | ||||

where is the centroid of the cluster to which POI belongs, and is represented by a temperature coefficient . Since and have been -normalized in advance, we can leverage to simplify the loss function.

This objective suggests that in the M-step, the structure embedding of each POI and its corresponding cluster centroid should be as close as possible. We achieve this by iteratively conducting K-Means in the E-step and minimizing in the M-step. With the above objective, the hierarchical nature in POI features can be captured in the structure embedding and the prototypes can be obtained by averaging POI representations in each cluster. The prototype will be used to represent the coarse-grained semantics of clusters and to extend the neighborhoods of POI nodes.

Compared to heuristic grouping approaches, such as grouping temporal features by date, our clustering-based method enables the adaptive uncovering of intricate hierarchical structures in POI features, which may be irregular and not aligned with pre-defined heuristic rules.

After hierarchical structure learning, we can define an adjacency matrix between POIs and prototypes based on the hard indicator:

| (7) |

4.3 Pairwise Structure Learning

Although hierarchical structure information has been explicitly captured, the GNNs are still susceptible to the presence of data noise and incompleteness in the graph structures. Traditional rule-based graph construction relies on pre-defined rules or assumptions, inevitably leading to noisy and missing edges. In contrast, graph structure learning methods can alleviate these issues by automatically identifying patterns and inferring the topological structure.

To address the issue of noisy and incomplete graphs, we adopt a deep graph structure learning method to learn pairwise relationships between POIs adaptively:

| (8) |

| (9) |

where is the primitive feature (e.g., latitude and longitude in the spatial view) of POI , which is used to construct graphs, is the structure embedding transformed by learnable parameters . is the adjacency matrix of POI graph. To refine into a sparse and normalized adjacency matrix, we also conduct -neighborhood sparsification and normalization post-processing, which are widely used in graph structure learning [41, 42, 43].

Distinct from the previous methods that only consider the resemblance between POIs, we also capture the relationships between coarse-grained prototypes to further explore the hierarchical structure. Following the pairwise structure learning defined in Eq. (8) and (9), we take the cluster centroids as the primitive features to construct the connections between the prototypes:

| (10) |

| (11) |

Following bi-level graph structure learning, we obtained bi-level graphs that encompass both fine-grained POI-level information and coarse-grained prototype-level information. The prototype-level information unveils the collective characteristics of similar POIs. A conventional graph can be symbolized as , where represents the set of nodes, and denotes the adjacency matrix. We present the formalized notation for a bi-level graph as , where and respectively signify the sets of POI nodes and prototype nodes, and are the three adjacency matrices learned through bi-level graph structure learning. These matrices portray the interconnections between POI-POI, prototype-prototype, and POI-prototype, respectively.

4.4 Multi-Relational Graph Attention Network

” for graph representation learning. We define three types of neighbor nodes: “

” for graph representation learning. We define three types of neighbor nodes: “ ” denotes the POI-level neighbor nodes, “

” denotes the POI-level neighbor nodes, “ ” and “

” and “ ” denote the 1-hop and 2-hop prototype-level neighbor nodes, respectively.

” denote the 1-hop and 2-hop prototype-level neighbor nodes, respectively.The hierarchical and pairwise structure learning methods produce high-quality hierarchical graphs, which not only are confronted with less noise and missing information, but also manifest the hierarchical information in POI features through the graph topology.

To fully exploit the learned hierarchical graphs, we design a multi-relational graph attention network.

First, we define the neighborhood of each POI node based on the hierarchical graph. As shown in Fig. 3, we define three types of neighbor nodes. As “![]() ” is the target node to be updated, “

” is the target node to be updated, “![]() ” represents the connected POI nodes that are most semantically similar to the target node, “

” represents the connected POI nodes that are most semantically similar to the target node, “![]() ” is the prototype of cluster that the target node belongs to, which can provide coarse-grained information about this group of similar nodes, and “

” is the prototype of cluster that the target node belongs to, which can provide coarse-grained information about this group of similar nodes, and “![]() ” represents 2-hop prototype neighbors that provide information about more potential similar groups.

Thus, there are three types of relations between target node and its neighbor nodes: .

Then, the graph representation learning is defined as:

” represents 2-hop prototype neighbors that provide information about more potential similar groups.

Thus, there are three types of relations between target node and its neighbor nodes: .

Then, the graph representation learning is defined as:

| (12) |

| (13) |

where denotes the ID embedding of POI , is the hidden representation learned from the bi-level graph, and denotes the neighborhood of under relation . and are learnable weights, and denotes LeakyReLU activation function. is subscripted with , indicating that aggregation patterns at different levels are modeled separately. The information propagation from to is controlled by attention weight and topology score , which is related to learned graph structure:

| (14) |

where belongs to the -th cluster when is -hop prototype neighbor.

4.5 Contrastive Multiview Fusion

In POI recommendation, multiple features are widely used to construct graphs, such as spatial graph, temporal graph, and transition graph. We claim that each feature provides a feature view. In each view, a set of POI representations can be obtained by conducting graph representation learning. POI representations derived from multiple views convey both shared and complementary information. However, existing methods for fusing representations tend to rely on simple techniques such as summation or concatenation. As a result, important informative features may be overlooked.

In deep learning, linear disentanglement has been used to obtain distinguishable and generalizable representations [44, 45]. To fully exploit the information embedded in multiple views, we propose a contrastive multiview fusion method that captures both view-shared and view-specific information. Each POI representation is decomposed into view-shared and view-specific parts. View-shared information denotes the common characteristics of POIs across all views. Extracting view-shared information aids in constructing robust and generalizable POI representations. In contrast, view-specific information reflects the unique attributes of POIs within a specific view. For instance, a pair of POIs might exhibit strong geographical connections but not exhibit the same peak visitation times. By distinguishing view-specific information, our model can more flexibly adapt to the information presented by different views, offering potential for explaining the decision-making process.

To achieve the aforementioned semantic decoupling, we introduce two optimization objectives to guide the semantics of the learnable representations.

First, we employ contrastive learning to extract view-shared representations. Henceforth, we employ the symbol to represent the latent representation of the -th POI, obtained through the multi-relational graph attention network within the feature view . Contrastive learning aims to enhance the agreement among diverse views of the same data and has proven effective in multiview and multimodal tasks [46, 47]. In this work, we propose an adaptive contrastive learning auxiliary task to distill the shared information from multiple views by maximizing the agreement between POI representations under different views and the fused representations. The resulting view-shared loss can be mathematically noted as:

| (15) |

where represents the mutual information computed using InfoNCE [48]. Specifically, we define as positive samples, while all other POI embeddings within the same view and other fused multiview embeddings are regarded as negative samples:

| (16) | ||||

where is the temperature parameter and is the critic function implemented as a cosine similarity function.

Capturing the unique features held by each view is also crucial for a comprehensive understanding of the semantics of POIs. To this end, we extract view-specific representations to complement the view-shared representations. Specifically, the view-specific representations of each view are obtained by subtracting the view-shared representations from the view representation :

| (17) |

To ensure that view-specific representations do not encode shared information, we employ an orthogonality constraint:

| (18) |

Then, we integrate the view-shared and view-specific representations with an attention module. The importance of each representation for POI is formulated as:

where is the learnable vector. Then, the final fused multiview representation of POI is formulated as:

| (19) |

To allow the recommender to perceive the collaborative signals among POIs in diverse feature views, we introduce fused representations obtained in Eq. 19 into the backbone by combining POIs’ original ID embeddings and fused representations. The details can be found in Appendix A.

4.6 Optimization

We adopt the cross entropy (CE) loss to compute the ranking of ground-truth next POI, which encourages the prediction of ground truth next POI to be ranked more highly:

| (20) |

where is the predicted probability of the ground-truth next POI for the -th training sample, and is the total number of samples in training set.

The overall objective function can be formulated as:

| (21) |

where are hyper-parameters to control the hierarchical structure learning and contrastive multiview fusion.

Since hierarchical structure learning needs to be performed iteratively, the model is optimized with the EM algorithm. To help better understand the optimization process, we provide the detailed workflow for EM-based optimization in Algorithm 1.

4.7 Complexity Analysis

Our design encompasses hierarchical structure learning based on the EM algorithm, pairwise structure learning based on metric learning, multi-relational GNN, and contrastive multiview fusion. For our proposed bi-level graph, we use and to represent the number of POIs and prototypes, respectively. , , and are used to denote the number of edges in the adjacency matrices , , and , respectively. We analyze the complexity of each component individually:

-

1.

Hierarchical Structure Learning: We employ the EM algorithm to learn the hierarchical structure within POI features. In the E-step, we execute K-Means to assign each POI to its corresponding group, and in the M-step, we update the structural representation of the POIs by optimizing . In both the E-step and M-step, we need to compute the pairwise distances between POIs and cluster centroids, resulting in a complexity of .

-

2.

Pairwise Structure Learning: We learn the POI-level pairwise structure by computing the pairwise similarity between POI nodes. The same operation is also performed on prototype nodes. Therefore, the complexity of this part is .

-

3.

Multi-Relational GNN: We modify the vanilla GNN to accommodate our bi-level graph, which includes nodes and edges. Since the complexity of a vanilla GNN layer is , where and are the number of nodes and edges respectively, we can conclude that the complexity of our modified graph model is . Since we only update the representations of POI nodes, the complexity is independent of .

-

4.

Contrastive Multiview Fusion: To implement the representation decomposition and fusion we proposed, we need to calculate and . The complexity of computing these two losses is and , respectively. Since the number of views is a very small constant, the complexity of this part is .

Based on the above analysis, we can conclude that the total complexity of our design is . In our model, the following inequalities hold:

-

•

(the number of prototypes is much less than the number of POIs)

-

•

(the degree of each POI node is greater than 1)

-

•

(POIs are not fully connected)

-

•

(each POI belongs to only one cluster)

-

•

(the number of prototype-prototype edges is less than the number of POI-POI edges)

Thus, the total complexity of our design can be simplified as . The efficiency bottlenecks lie in the POI-level pairwise structure learning and the contrastive fusion, which involves the scalability issues of graph structure learning and contrastive learning. In fact, several methods have been proposed to enhance the efficiency of these two techniques [49, 50]. These methods provide valuable support for further enhancing efficiency.

5 Experiments

In this section, we first introduce the evaluation setups and then present the empirical results to enable a fair comparison. Subsequently, we provide detailed analyses of ablation, sensitivity, actual runtime, exploration ability, and visualizations to validate the effectiveness of our BiGSL.

5.1 Experimental Setups

5.1.1 Datasets

We evaluate our proposed BiGSL model on three real-world datasets: Gowalla, Foursquare, and BJ. Gowalla111http://snap.stanford.edu/data/loc-gowalla.html [51] contains check-ins of users over the period of February 2009 - October 2010 from all over the world. Foursquare222https://sites.google.com/site/yangdingqi/home/foursquare-dataset [52] contains check-ins in 415 cities collected from April 2012 to September 2013. BJ dataset is provided by Meituan, which includes real-world transaction records in Beijing. The dataset is sampled from the logs of a mobile application for the period spanning from 1 October 2021 to 25 October 2021. We regard the user’s order payment behavior as the check-in in traditional location-based social networks.

We follow the protocol of HMT-GRN [4] to keep users with check-in counts between 20 and 50, and remove POIs that have been visited by fewer than 10 users. The numbers of users, POIs, and check-ins in each dataset and data density after preprocessing are shown in Table I. After sorting the timestamps in chronological order, we use the first 80% visits and the last 20% visits of each user’s sequence for training and testing respectively.

| Dataset | #Users | #POIs | #Check-ins | Density |

| Gowalla | 11,864 | 3,359 | 86,670 | 0.168% |

| Foursquare | 16,636 | 4,455 | 170,573 | 0.155% |

| BJ | 6,096 | 6,032 | 148,736 | 0.275% |

| Gowalla | Foursquare | BJ | ||||||||||||

| Acc@5 | Acc@10 | Acc@20 | MRR | Acc@5 | Acc@10 | Acc@20 | MRR | Acc@5 | Acc@10 | Acc@20 | MRR | |||

| RNN | 0.1873 | 0.2440 | 0.3050 | 0.1381 | 0.2246 | 0.2973 | 0.3752 | 0.1700 | 0.1553 | 0.2182 | 0.2839 | 0.1076 | ||

| GRU | 0.1869 | 0.2489 | 0.3161 | 0.1406 | 0.2300 | 0.3027 | 0.3852 | 0.1740 | 0.1570 | 0.2224 | 0.2929 | 0.1098 | ||

| LSTM | 0.1968 | 0.2575 | 0.3276 | 0.1510 | 0.2437 | 0.3174 | 0.4032 | 0.1854 | 0.1658 | 0.2316 | 0.3050 | 0.1175 | ||

| HST-LSTM | 0.0366 | 0.0636 | 0.1004 | 0.0279 | 0.0307 | 0.0500 | 0.0806 | 0.0244 | 0.0250 | 0.0447 | 0.0741 | 0.0181 | ||

| STGCN | 0.0909 | 0.1351 | 0.1955 | 0.0684 | 0.0948 | 0.1531 | 0.2323 | 0.0703 | 0.0701 | 0.1165 | 0.1789 | 0.0486 | ||

| LSTPM | 0.2282 | 0.2720 | 0.3200 | 0.1803 | 0.2671 | 0.3214 | 0.3778 | 0.2078 | 0.1870 | 0.2396 | 0.2919 | 0.1360 | ||

| STAN | 0.1928 | 0.2440 | 0.3039 | 0.1460 | 0.2382 | 0.3136 | 0.3987 | 0.1759 | 0.1623 | 0.2240 | 0.2918 | 0.1126 | ||

| STP-UDGAT | 0.2374 | 0.2783 | 0.3202 | 0.1770 | 0.2926 | 0.3556 | 0.4187 | 0.2136 | 0.1996 | 0.2548 | 0.0538 | 0.1366 | ||

| Flashback | 0.2342 | 0.2770 | 0.3285 | 0.1821 | 0.2768 | 0.3347 | 0.4012 | 0.2118 | 0.1928 | 0.2467 | 0.3047 | 0.1380 | ||

| GETNext | 0.2546 | 0.3008 | 0.3683 | 0.1950 | 0.3141 | 0.3806 | 0.4566 | 0.2352 | 0.2142 | 0.2778 | 0.3390 | 0.1505 | ||

| Graph-Flashback | 0.2593 | 0.3040 | 0.3730 | 0.1979 | 0.3272 | 0.3994 | 0.4607 | 0.2399 | 0.2205 | 0.2820 | 0.3479 | 0.1530 | ||

| SNPM | 0.2658 | 0.3234 | 0.3952 | 0.2091 | 0.3304 | 0.4135 | 0.4978 | 0.2493 | 0.2231 | 0.2889 | 0.3507 | 0.1542 | ||

| HMT-GRN | 0.2783 | 0.3394 | 0.4033 | 0.2120 | 0.3357 | 0.4148 | 0.4983 | 0.2510 | 0.2285 | 0.3010 | 0.3606 | 0.1564 | ||

| BiGSL | 0.2923 | 0.3685 | 0.4471 | 0.2162 | 0.3500 | 0.4423 | 0.5323 | 0.2552 | 0.2422 | 0.3271 | 0.4095 | 0.1650 | ||

| Improvement | 5.04% | 8.57% | 10.85% | 1.96% | 4.25% | 6.62% | 6.81% | 1.67% | 5.94% | 8.68% | 13.56% | 5.48% | ||

5.1.2 Baselines

To show the effectiveness of our proposed model, we compare it with the following baseline models:

-

•

RNN is a recurrent network that captures sequential dependencies in check-in sequences but suffers from the vanishing gradient issue. The variants of GRU and LSTM introduce gate mechanism to control information flow and alleviate the above issue.

- •

-

•

LSTPM [19] is an LSTM-based model that effectively captures both short-term and long-term user preferences by employing a geo-dilated operation for the former and a geo-nonlocal operation for the latter.

-

•

STAN [27] introduces dependencies of non-adjacent POIs and non-consecutive visits into the self-attention model, to model the dependencies more effectively.

-

•

STP-UDGAT [1] is a graph-based model that captures global correlations among POIs and personalized user preferences through multiple graphs.

-

•

Flashback [26] accesses historical hidden states with similar contexts, thus utilizing rich spatio-temporal information from sparse user mobility traces.

-

•

GETNext [6] introduces a global trajectory graph to leverage the extensive collaborative signals globally.

-

•

Graph-Flashback [2] incorporates the POI graph learned from spatial-temporal knowledge graph into RNNs for capturing the transition patterns.

-

•

SNPM [29] adopts the Eigenmap method to construct a latent POI similarity graph to tackle the sparsity issue.

-

•

HMT-GRN [4] is a graph-based model that constructs global spatio-temporal POI graphs to model collaborative signals. It also utilizes auxiliary next-region prediction tasks to alleviate the data sparsity issue.

5.1.3 Metrics

The performance is evaluated by how well the target POIs in the candidate set are ranked. We adopt two widely-used metrics of ranking evaluation: , which counts the fraction of times that the target POI is among the top probability samples, and Mean Reciprocal Rank (MRR). The details of metrics can be found in Appendix B.

5.1.4 Settings

Adam is employed as the optimizer, where the learning rate is set to 1e-4. The training process would be stopped after 60 epochs. The batch size is set to 96. The dimension of POI/user ID embedding and hidden dimension are both set to . The number of clusters for Gowalla and for Foursquare and BJ. The temperature coefficient is and is . The weight of loss function are set to 1e-4, 1e-1, 1e-4 respectively. We use geolocation as the primitive spatial feature and time slot frequency distribution as the primitive temporal feature (a week is partitioned equally into 56 time slots).

5.2 Performance Comparison

We compare BiGSL with the baselines and the results are summarized in Table II. Under all the metrics, BiGSL can significantly outperform all the baselines on each of the datasets, which demonstrates its effectiveness in improving next POI recommendation.

Among baseline models, graph-based models, such as GETNext, Graph-Flashback, SNPM, and HMT-GRN, perform better than other models. It is not a surprise since graph-based models are better at capturing global POI-POI relationships and transition patterns across users.

GETNext, Graph-Flashback, SNPM, and HMT-GRM are the best-performing baseline models, they still have some deficiencies compared to our proposed BiGSL. HMT-GRN takes into account the hierarchical structure in spatial features by introducing objectives of auxiliary tasks at the regional levels. However, these objectives in multi-task learning paradigm are independent, therefore fall short in modeling the interaction between different levels. Additionally, it utilizes spatial and temporal graphs constructed according to pre-defined rules, resulting in performance affected by the noise in the graphs. The advantage of our method over GETNext lies in our consideration of graph-based encoding for multiple features, which is more capable of capturing the intricate relationships between POIs than the direct embedding layer used in GETNext. Graph-Flashback considers learning a latent POI graph with the knowledge graph containing spatio-temporal information, but it does not explicitly consider hierarchical structure when learning latent graphs. Although SNPM adopts the Eigenmap method to infer the latent graph structure, it remains constrained by pre-defined rules, which results in inferior performance compared to ours. Moreover, for fusing multiple features, they either simply perform a linear combination of multiple features or directly embed them into a unified latent graph, leading to suboptimal results and lacking interpretability. For modeling hierarchical structures, even though some baseline models employ stacked hierarchical encoders to extract subsequence-level information from sequences, they are limited to capturing hierarchical patterns in sequence features and are incapable of modeling more comprehensive topological structure between POIs. Due to these limitations in their modeling capabilities, the performance of these methods is not as effective as our proposed method.

Our BiGSL achieves the best performance, which unequivocally outperforms all baseline models and verifies the effectiveness of our proposed methods. Compared with existing graph-based models, the BiGSL model automatically learns hierarchical structures and pairwise structures embedded in the POI features, and fuses the information mined from them in a more effective manner.

| Acc@5 | Acc@10 | Acc@20 | MRR | ||

| Gowalla | BiGSL | 0.2923 | 0.3685 | 0.4471 | 0.2162 |

| w/o HSL | 0.2898 | 0.3587 | 0.4293 | 0.2152 | |

| w/o PSL | 0.2803 | 0.3435 | 0.4135 | 0.2133 | |

| w/o Shar | 0.2904 | 0.3608 | 0.4327 | 0.2158 | |

| w/o Spec | 0.2856 | 0.3536 | 0.4274 | 0.2147 | |

| w/o Shar&Spec | 0.2837 | 0.3523 | 0.4265 | 0.2143 | |

| Foursquare | BiGSL | 0.3500 | 0.4423 | 0.5323 | 0.2552 |

| w/o HSL | 0.3482 | 0.4345 | 0.5179 | 0.2548 | |

| w/o PSL | 0.3405 | 0.4220 | 0.5049 | 0.2537 | |

| w/o Shar | 0.3488 | 0.4363 | 0.5206 | 0.2550 | |

| w/o Spec | 0.3450 | 0.4307 | 0.5164 | 0.2545 | |

| w/o Shar&Spec | 0.3434 | 0.4289 | 0.5160 | 0.2543 | |

| BJ | BiGSL | 0.2422 | 0.3271 | 0.4095 | 0.1650 |

| w/o HSL | 0.2403 | 0.3173 | 0.3908 | 0.1645 | |

| w/o PSL | 0.2310 | 0.3021 | 0.3744 | 0.1607 | |

| w/o Shar | 0.2407 | 0.3196 | 0.3945 | 0.1647 | |

| w/o Spec | 0.2361 | 0.3120 | 0.3888 | 0.1625 | |

| w/o Shar&Spec | 0.2343 | 0.3108 | 0.3878 | 0.1616 |

5.3 Ablation Study

To analyze the effectiveness of the different components, we conduct an ablation study. We denote the base model as BiGSL and drop different components to form variants. The main components in our model are listed as:

-

•

HSL: The hierarchical structure learning module, which is responsible for capturing clustering and hierarchical information in POI features and extracting prototypes. Removing this component will result in no more prototype neighbors in graph representation learning.

-

•

PSL: The pairwise structure learning module, which learns relative relationships between POIs or prototypes adaptively. Removing this module means that we use pre-defined rules for graph construction and the hierarchical structure learning based on the learned structure embedding will not be employed.

-

•

Shared (Shar): The view-shared loss in the contrastive multiview fusion, which aims to extract information shared by representations in multiple views.

-

•

Specific (Spec): The view-specific loss in the contrastive multiview fusion, which ensures the view-specific representations of different feature views do not encode view-shared information.

The results are summarized in Table III. We have the following observations from this table.

We observe that the hierarchical structure learning helps to improve the model performance, verifying the effectiveness of this component. Since this component mainly leverages the loss function to control the optimization process in the EM algorithm, to further understand the influence of this component, we visualize the effect of on graph structure learning in Fig. 4.

From Fig. 4, we can intuitively find that the structure embedding learned based on either spatial or temporal features has more obvious clustering under the guidance of . This indicates that the introduction of can effectively capture the hierarchical properties in POI features and guide the learning of structure embedding. In addition, the spatial structure embedding has more pronounced clustering than temporal structure embedding, indicating that spatial features naturally exhibit more significant hierarchical structures. Consequently, in graphs constructed with these embeddings, connected POIs are more semantically similar. The prototypes extracted from clusters present more coarse-grained group features, thus constructing effective hierarchical structures.

The results in Table III also demonstrate the effectiveness of pairwise structure learning and contrastive multiview fusion. Note that view-specific information has a more significant impact on contrastive fusion than view-shared information, which indicates that the difference in semantics of different features is more pronounced than similarity, and it is meaningful to fully consider multiple features in POI recommendation.

5.4 Sensitivity Analysis

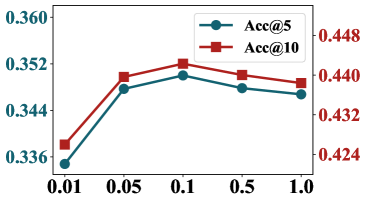

We conduct sensitivity analysis with four hyper-parameters on the hierarchical structure learning and the contrastive multiview fusion, which are the most pivotal parts of BiGSL. The investigated hyper-parameters include the number of clusters , the weights of loss function , , , and the temperature coefficients . Fig. 5 and Fig. 6 shows the effect of varied hyper-parameter values, from which we have the following observations.

5.4.1 Effect of cluster number .

When excavating hierarchical structure and extracting prototypes, we employ K-Means to conduct node clustering. We vary the cluster number from 40 to 200 with step 40. Table III (a,b) shows that and are the best cluster numbers on Gowalla and Foursquare datasets respectively. We speculate that the best number of clusters is related to the number of POIs in the dataset. When the number of POIs increases, there may also be more feature patterns in the dataset and more clusters are needed to distinguish them. Since the number of POIs in Foursquare is more than that in Gowalla, Foursquare needs more clusters and prototypes to represent the coarse-grained groups.

5.4.2 Effect of weights of loss functions .

We analyze the effect of , , and since they determine the effectiveness of hierarchical structure learning and contrastive multiview fusion. As shown in Table III, we tune them to analyze their effect. On both datasets, the best performance is achieved when =1e-4, =1e-1, and =1e-4. Note that, consistent with the observations of the ablation study, view-specific information has a greater impact on performance than view-shared information.

5.4.3 Effect of temperature coefficients .

Our method incorporates two temperature coefficients: in Eq. 6, which controls hierarchical clustering, and in Eq. 16, used for computing the mutual information in the view-shared loss. We test the performance of the model with each of them set to different values within [0.01, 0.05, 0.1, 0.5, 1]. The experimental results, as shown in Fig. 6, indicate that and are the optimal choices. From Fig. 6, small values of and lead to a significant decrease in performance. This is due to and controlling the smoothness of the similarity computation. Lower temperature coefficients result in reduced smoothness, which enlarges the differences in similarity between different sample pairs. This makes the similarity more sensitive to changes in representations, easily leading to overfitting, thus impairing the model’s generalization ability.

| Feature Grouping Scheme | Group Method | Gowalla | Foursquare | |||||||

| Acc@5 | Acc@10 | Acc@20 | MRR | Acc@5 | Acc@10 | Acc@20 | MRR | |||

| Without Feature Grouping | None | 0.2898 | 0.3587 | 0.4293 | 0.2152 | 0.3482 | 0.4345 | 0.5179 | 0.2548 | |

| Pre-defined Rule Based | Group Spatial Features by Grid | 0.2826 | 0.3563 | 0.4276 | 0.2072 | 0.3359 | 0.4274 | 0.5138 | 0.2477 | |

| Group Temporal Features by Date | 0.2901 | 0.3611 | 0.4308 | 0.2154 | 0.3476 | 0.4294 | 0.5157 | 0.2502 | ||

| Ours (Clustering Based) | Hierarchical Structure Learning | 0.2923 | 0.3685 | 0.4471 | 0.2162 | 0.3500 | 0.4423 | 0.5323 | 0.2552 | |

| Representation Fusion Scheme | Fusion Method | Gowalla | Foursquare | |||||||

| Acc@5 | Acc@10 | Acc@20 | MRR | Acc@5 | Acc@10 | Acc@20 | MRR | |||

| Early Fusion | Concatenate | 0.2794 | 0.3550 | 0.4345 | 0.2024 | 0.3344 | 0.4173 | 0.5051 | 0.2470 | |

| Linear Sum () | 0.2841 | 0.3620 | 0.4370 | 0.2096 | 0.3388 | 0.4279 | 0.5194 | 0.2479 | ||

| Linear Sum () | 0.2856 | 0.3598 | 0.4380 | 0.2096 | 0.3417 | 0.4323 | 0.5248 | 0.2491 | ||

| Linear Sum () | 0.2890 | 0.3642 | 0.4423 | 0.2111 | 0.3400 | 0.4311 | 0.5216 | 0.2489 | ||

| Intermediate Fusion | Concatenate | 0.2702 | 0.3416 | 0.4242 | 0.2015 | 0.3236 | 0.4109 | 0.4998 | 0.2339 | |

| Linear Sum () | 0.2845 | 0.3620 | 0.4400 | 0.2105 | 0.3383 | 0.4299 | 0.5225 | 0.2452 | ||

| Linear Sum () | 0.2852 | 0.3640 | 0.4426 | 0.2106 | 0.3409 | 0.4319 | 0.5234 | 0.2494 | ||

| Linear Sum () | 0.2870 | 0.3645 | 0.4464 | 0.2108 | 0.3399 | 0.4307 | 0.5235 | 0.2463 | ||

| Late Fusion | Average | 0.2807 | 0.3529 | 0.4276 | 0.2072 | 0.3343 | 0.4221 | 0.5140 | 0.2450 | |

| Ours | Contrastive Decomposition + Attentive Fusion | 0.2923 | 0.3685 | 0.4471 | 0.2162 | 0.3500 | 0.4423 | 0.5323 | 0.2552 | |

5.5 Comparison of Feature Grouping Schemes

We adopt a clustering-based grouping method in Section 4.2 to construct hierarchical relationships. Regarding the feature grouping scheme, we compare our clustering-based grouping with the approach of not conducting feature grouping (i.e., not modeling the hierarchical structure). We also compare changing the grouping of spatial features from clustering-based to grid-based grouping, and the grouping of temporal features from clustering-based to date-based grouping. Experiments are conducted on Gowalla and Foursquare, with the results presented in Table IV.

From Table IV, we observe that compared to not conducting feature grouping, traditional grouping schemes do not always improve performance. An improvement is only noted on Gowalla when temporal features are grouped by date, but this still falls short of our clustering-based grouping method. This indicates that the hierarchical structure within POI features is complex and nonlinear, making it challenging to be directly modeled by heuristic rules. Conversely, our method is capable of adaptively modeling the complex hierarchical structure within POI features through the K-Means clustering and EM algorithm framework.

5.6 Comparison of Representation Fusion Schemes

For the representation fusion scheme [55, 56], we compared our contrastive fusion with other strategies such as early, intermediate, and late fusion for integrating spatial and temporal representations. In early fusion, we perform a concatenation or a linear sum on the node representations obtained in each view. In the linear sum, the weights for spatial and temporal representations are and , respectively. Similarly, in intermediate fusion, we perform a concatenation or a linear sum on the intermediate results after further encoding with the LSTM layer. In the linear sum, the weights for spatial and temporal representation are set as and . In late fusion, we average the predicted logits from different views at the decision layer. Comparative experiments are conducted on Gowalla and Foursquare, with the results presented in Table V.

From Table V, we observe that among the compared fusion schemes, early fusion performs the best, while late fusion yields the worst results. This may be due to the high correlation between POI representations from different views, where early fusion facilitates better interaction between representations. Additionally, we notice that linear sum outperforms concatenation, possibly because concatenation increases the hidden layer dimension, leading to over-parameterization due to the introduction of more model parameters. Lastly, when evaluating the performance of linear sum, the optimal value of or varies across different datasets. This indicates that the optimal weights for linear sum relies on empirical manual selection, whereas our method could adaptively learn the optimal weights.

5.7 Actual Runtime Analysis

We compare the actual runtime of our method with the best baseline, HMT-GRN, by recording the total training time required for convergence. All experiments are conducted on a NVIDIA RTX 3090 GPU to ensure fairness in comparison. The runtime required to train HMT-GRN and our method is around 5.2 hours and 6.5 hours, respectively. We observe that due to the difference in the complexity of the training process, our method requires more time per batch compared to HMT-GRN. However, our design does not lead to a significant increase in actual runtime due to the efficient implementation and the PyTorch library’s automatic acceleration of matrix multiplication operations. Therefore, in terms of total training time, our method is comparable to existing methods.

5.8 Exploration Ability Analysis

| -Acc@5 | -Acc@10 | -Acc@20 | -MRR | |

| HMT-GRN | 0.0809 | 0.1190 | 0.1739 | 0.0617 |

| BiGSL | 0.1002 | 0.1380 | 0.1881 | 0.0721 |

| Improvement | 23.88% | 15.87% | 8.11% | 16.67% |

In recommendation, balancing personalization and exploration is a critical challenge. Personalization focuses on learning preferences from POIs which users have already visited. In contrast, exploration evaluates the ability to accurately recommend POIs that users have not visited. Both abilities ensure accuracy and diversity in recommendation.

To evaluate the exploration performance of POI recommendation, we adopt the Next New () extension of metrics (i.e., -Acc@K, -MRR) proposed in HMT-GRN [4]. This set of metrics only considers Next New POIs that have never been visited by the user, and thus can be used to evaluate the exploratory ability of the recommenders.

The higher-density dataset may provide more global collaborative signals for exploration, so we conduct experiments on the BJ dataset. The results are shown in the Table VI. HMT-GRN is currently the most state-of-the-art model for next new POI recommendation, thanks to its selectivity layer designed to identify whether a POI has been visited by a user. Compared to HMT-GRN, our model significantly enhances the exploration ability without hurting the personalization performance. Note that we do not employ the selectivity layer proposed by HMT-GRN, but only rely on the graph structure learning and the multi-relational graph network to achieve it. The improvement of the metric score illustrates that our method can effectively establish connections for POIs with similar feature semantics and explore more potential POIs in recommendation.

5.9 Visualization of Graph Structure Learning

In Fig. 7, we visualize an example of the spatial graphs obtained with pre-defined rules (e.g. grid mapping) and our structure learning method, respectively. The POIs with the same color in the figure are connected by edges. For brevity, we only show the colors of the POI nodes here instead of plotting the edges. Intuitively, the graph constructed by pre-defined rules is prone to noise (in the red circles) and incompleteness (in the black circle), i.e., geographically non-aggregated POIs may be connected by edges, while geographically aggregated POIs are grouped into different grids. In contrast, our method adaptively learns a better spatial graph with less uncertainty. The higher graph quality is helpful for the learning of POI representations and downstream POI recommendations.

6 Conclusion

In this study, we propose a novel bi-level graph structure learning method for next POI recommendation, named BiGSL. We employ hierarchical and pairwise structure learning to automatically learn hierarchical graphs, addressing the lack of hierarchical information and the challenge posed by noisy connections. Based on the learned hierarchical graphs, we devise a novel multi-relational graph attention network to capture collaborative relationships among POIs, considering both POI-level and prototype-level neighbors. Furthermore, we propose a contrastive multiview fusion strategy to facilitate information fusion. Comprehensive comparison with baseline models unequivocally shows the superior performance of BiGSL.

Acknowledgments

This work was supported by National Natural Science Foundation of China (62206291, 62141608).

References

- [1] N. Lim, B. Hooi, S. Ng, X. Wang, Y. L. Goh, R. Weng, and J. Varadarajan, “STP-UDGAT: spatial-temporal-preference user dimensional graph attention network for next POI recommendation,” in CIKM, 2020, pp. 845–854.

- [2] X. Rao, L. Chen, Y. Liu, S. Shang, B. Yao, and P. Han, “Graph-flashback network for next location recommendation,” in KDD, 2022, pp. 1463–1471.

- [3] Z. Wang, Y. Zhu, H. Liu, and C. Wang, “Learning graph-based disentangled representations for next POI recommendation,” in SIGIR, 2022, pp. 1154–1163.

- [4] N. Lim, B. Hooi, S. Ng, Y. L. Goh, R. Weng, and R. Tan, “Hierarchical multi-task graph recurrent network for next POI recommendation,” in SIGIR, 2022, pp. 1133–1143.

- [5] Y. Li, T. Chen, Y. Luo, H. Yin, and Z. Huang, “Discovering collaborative signals for next POI recommendation with iterative seq2graph augmentation,” in IJCAI, 2021, pp. 1491–1497.

- [6] S. Yang, J. Liu, and K. Zhao, “Getnext: Trajectory flow map enhanced transformer for next POI recommendation,” in SIGIR, 2022, pp. 1144–1153.

- [7] K. Zhao, Y. Zhang, H. Yin, J. Wang, K. Zheng, X. Zhou, and C. Xing, “Discovering subsequence patterns for next POI recommendation,” in IJCAI, 2020, pp. 3216–3222.

- [8] D. Lian, Y. Wu, Y. Ge, X. Xie, and E. Chen, “Geography-aware sequential location recommendation,” in KDD, 2020, pp. 2009–2019.

- [9] P. Zhao, X. Xu, Y. Liu, Z. Zhou, K. Zheng, V. S. Sheng, and H. Xiong, “Exploiting hierarchical structures for POI recommendation,” in ICDM, 2017, pp. 655–664.

- [10] S. Feng, L. V. Tran, G. Cong, L. Chen, J. Li, and F. Li, “HME: A hyperbolic metric embedding approach for next-poi recommendation,” in SIGIR, 2020, pp. 1429–1438.

- [11] J. Xie and Z. Chen, “Hierarchical transformer with spatio-temporal context aggregation for next point-of-interest recommendation,” arXiv, vol. abs/2209.01559, 2022.

- [12] Q. Guo, Z. Sun, J. Zhang, and Y. Theng, “An attentional recurrent neural network for personalized next location recommendation,” in AAAI, 2020, pp. 83–90.

- [13] X. Wang, G. Sun, X. Fang, J. Yang, and S. Wang, “Modeling spatio-temporal neighbourhood for personalized point-of-interest recommendation,” in IJCAI, 2022, pp. 3530–3536.

- [14] Y. Zhu, W. Xu, J. Zhang, Q. Liu, S. Wu, and L. Wang, “Deep graph structure learning for robust representations: A survey,” arXiv, vol. abs/2103.03036, 2021.

- [15] M. Zhang, Y. Yang, R. Abbas, K. Deng, J. Li, and B. Zhang, “SNPR: A serendipity-oriented next POI recommendation model,” in CIKM, 2021, pp. 2568–2577.

- [16] S. Halder, K. H. Lim, J. Chan, and X. Zhang, “Transformer-based multi-task learning for queuing time aware next POI recommendation,” in PAKDD, vol. 12713, 2021, pp. 510–523.

- [17] H. Sun, J. Xu, K. Zheng, P. Zhao, P. Chao, and X. Zhou, “MFNP: A meta-optimized model for few-shot next POI recommendation,” in IJCAI, 2021, pp. 3017–3023.

- [18] Y. Chen, X. Wang, M. Fan, J. Huang, S. Yang, and W. Zhu, “Curriculum meta-learning for next POI recommendation,” in KDD. ACM, 2021, pp. 2692–2702.

- [19] K. Sun, T. Qian, T. Chen, Y. Liang, Q. V. H. Nguyen, and H. Yin, “Where to go next: Modeling long- and short-term user preferences for point-of-interest recommendation,” in AAAI, 2020, pp. 214–221.

- [20] Y. Wu, K. Li, G. Zhao, and X. Qian, “Personalized long- and short-term preference learning for next POI recommendation,” IEEE Transactions on Knowledge and Data Engineering, vol. 34, no. 4, pp. 1944–1957, 2022.

- [21] L. Zhang, Z. Sun, Z. Wu, J. Zhang, Y. S. Ong, and X. Qu, “Next point-of-interest recommendation with inferring multi-step future preferences,” in IJCAI, 2022, pp. 3751–3757.

- [22] Q. Liu, S. Wu, and L. Wang, “Multi-behavioral sequential prediction with recurrent log-bilinear model,” IEEE Transactions on Knowledge and Data Engineering, vol. 29, no. 6, pp. 1254–1267, 2017.

- [23] Q. Liu, S. Wu, L. Wang, and T. Tan, “Predicting the next location: A recurrent model with spatial and temporal contexts,” in AAAI, 2016, pp. 194–200.

- [24] P. Zhao, H. Zhu, Y. Liu, J. Xu, Z. Li, F. Zhuang, V. S. Sheng, and X. Zhou, “Where to go next: A spatio-temporal gated network for next POI recommendation,” in AAAI, 2019, pp. 5877–5884.

- [25] P. Zhao, A. Luo, Y. Liu, J. Xu, Z. Li, F. Zhuang, V. S. Sheng, and X. Zhou, “Where to go next: A spatio-temporal gated network for next POI recommendation,” IEEE Transactions on Knowledge and Data Engineering, vol. 34, no. 5, pp. 2512–2524, 2022.

- [26] D. Yang, B. Fankhauser, P. Rosso, and P. Cudré-Mauroux, “Location prediction over sparse user mobility traces using rnns: Flashback in hidden states!” in IJCAI, 2020, pp. 2184–2190.

- [27] Y. Luo, Q. Liu, and Z. Liu, “STAN: spatio-temporal attention network for next location recommendation,” in WWW, 2021, pp. 2177–2185.

- [28] L. Zhang, Z. Sun, J. Zhang, Y. Lei, C. Li, Z. Wu, H. Kloeden, and F. Klanner, “An interactive multi-task learning framework for next POI recommendation with uncertain check-ins,” in IJCAI, 2020, pp. 3551–3557.

- [29] F. Yin, Y. Liu, Z. Shen, L. Chen, S. Shang, and P. Han, “Next POI recommendation with dynamic graph and explicit dependency,” in AAAI, 2023, pp. 4827–4834.

- [30] L. Franceschi, M. Niepert, M. Pontil, and X. He, “Learning discrete structures for graph neural networks,” in ICML, 2019, pp. 1972–1982.

- [31] Z. Li, L. Wang, X. Sun, Y. Luo, Y. Zhu, D. Chen, Y. Luo, X. Zhou, Q. Liu, S. Wu, L. Wang, and J. X. Yu, “GSLB: the graph structure learning benchmark,” in NeurIPS, 2023.

- [32] Y. Chen, L. Wu, and M. J. Zaki, “Iterative deep graph learning for graph neural networks: Better and robust node embeddings,” in NeurIPS, 2020.

- [33] R. Li, S. Wang, F. Zhu, and J. Huang, “Adaptive graph convolutional neural networks,” in AAAI, 2018, pp. 3546–3553.

- [34] X. Wang, M. Zhu, D. Bo, P. Cui, C. Shi, and J. Pei, “AM-GCN: adaptive multi-channel graph convolutional networks,” in KDD, 2020, pp. 1243–1253.

- [35] D. Luo, W. Cheng, W. Yu, B. Zong, J. Ni, H. Chen, and X. Zhang, “Learning to drop: Robust graph neural network via topological denoising,” in WSDM, 2021, pp. 779–787.

- [36] C. Zheng, B. Zong, W. Cheng, D. Song, J. Ni, W. Yu, H. Chen, and W. Wang, “Robust graph representation learning via neural sparsification,” in ICML, 2020, pp. 11 458–11 468.

- [37] X. Gao, W. Hu, and Z. Guo, “Exploring structure-adaptive graph learning for robust semi-supervised classification,” in ICME, 2020.

- [38] W. Jin, Y. Ma, X. Liu, X. Tang, S. Wang, and J. Tang, “Graph structure learning for robust graph neural networks,” in KDD, 2020, pp. 66–74.

- [39] L. Yang, Z. Kang, X. Cao, D. Jin, B. Yang, and Y. Guo, “Topology optimization based graph convolutional network,” in IJCAI, 2019, pp. 4054–4061.

- [40] Z. Lin, C. Tian, Y. Hou, and W. X. Zhao, “Improving graph collaborative filtering with neighborhood-enriched contrastive learning,” in WWW, 2022, pp. 2320–2329.

- [41] Y. Chen, L. Wu, and M. J. Zaki, “Iterative deep graph learning for graph neural networks: Better and robust node embeddings,” in NeurIPS, 2020.

- [42] J. Zhao, X. Wang, C. Shi, B. Hu, G. Song, and Y. Ye, “Heterogeneous graph structure learning for graph neural networks,” in AAAI, 2021, pp. 4697–4705.

- [43] Y. Liu, Y. Zheng, D. Zhang, H. Chen, H. Peng, and S. Pan, “Towards unsupervised deep graph structure learning,” in WWW, 2022, pp. 1392–1403.

- [44] J. Wang, C. Lan, C. Liu, Y. Ouyang, T. Qin, W. Lu, Y. Chen, W. Zeng, and P. S. Yu, “Generalizing to unseen domains: A survey on domain generalization,” IEEE Transactions on Knowledge and Data Engineering, vol. 35, no. 8, pp. 8052–8072, 2023.

- [45] T. Han, P. Wang, S. Niu, and C. Li, “Modality matches modality: Pretraining modality-disentangled item representations for recommendation,” in WWW, 2022, pp. 2058–2066.

- [46] Y. Tian, D. Krishnan, and P. Isola, “Contrastive multiview coding,” in ECCV, 2020, pp. 776–794.

- [47] Y. Liu, Q. Fan, S. Zhang, H. Dong, T. A. Funkhouser, and L. Yi, “Contrastive multimodal fusion with tupleinfonce,” in ICCV, 2021, pp. 734–743.

- [48] A. van den Oord, Y. Li, and O. Vinyals, “Representation learning with contrastive predictive coding,” arXiv, 2018.

- [49] Q. Wu, W. Zhao, Z. Li, D. P. Wipf, and J. Yan, “Nodeformer: A scalable graph structure learning transformer for node classification,” in NeurIPS, 2022.

- [50] Y. Zheng, S. Pan, V. C. S. Lee, Y. Zheng, and P. S. Yu, “Rethinking and scaling up graph contrastive learning: An extremely efficient approach with group discrimination,” in NeurIPS, 2022.

- [51] E. Cho, S. A. Myers, and J. Leskovec, “Friendship and mobility: user movement in location-based social networks,” in KDD, 2011, pp. 1082–1090.

- [52] D. Yang, D. Zhang, L. Chen, and B. Qu, “Nationtelescope: Monitoring and visualizing large-scale collective behavior in lbsns,” Journal of Network and Computer Applications, vol. 55, 2015.

- [53] D. Kong and F. Wu, “HST-LSTM: A hierarchical spatial-temporal long-short term memory network for location prediction,” in IJCAI, 2018, pp. 2341–2347.

- [54] H. Han, M. Zhang, M. Hou, F. Zhang, Z. Wang, E. Chen, H. Wang, J. Ma, and Q. Liu, “STGCN: A spatial-temporal aware graph learning method for POI recommendation,” in ICDM, 2020, pp. 1052–1057.

- [55] T. Baltrusaitis, C. Ahuja, and L. Morency, “Multimodal machine learning: A survey and taxonomy,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 41, no. 2, pp. 423–443, 2019.

- [56] C. Zhang, Z. Yang, X. He, and L. Deng, “Multimodal intelligence: Representation learning, information fusion, and applications,” IEEE Journal of Selected Topics in Signal Processing, vol. 14, no. 3, pp. 478–493, 2020.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/d0c497d5-7137-4f3a-9ea1-817cecb6a164/LiangWang.jpg) |

Liang Wang is currently pursuing his Ph.D. degree of Computer Science at the Center for Research on Intelligent Perception and Computing (CRIPAC) at State Key Laboratory of Multimodal Artificial Intelligence Systems (MAIS), Institute of Automation, Chinese Academy of Sciences (CASIA). His current research interests mainly include graph representation learning, AI for science, and spatio-temporal data mining. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/d0c497d5-7137-4f3a-9ea1-817cecb6a164/ShuWu.jpg) |

Shu Wu received his B.S. degree from Hunan University, China, in 2004, M.S. degree from Xiamen University, China, in 2007, and Ph.D. degree from Department of Computer Science, University of Sherbrooke, Quebec, Canada, all in computer science. He is an Associate Professor with the Center for Research on Intelligent Perception and Computing (CRIPAC), State Key Laboratory of Multimodal Artificial Intelligence Systems (MAIS), Institute of Automation, Chinese Academy of Sciences (CASIA). He has published more than 50 papers in the areas of data mining and information retrieval in international journals and conferences, such as IEEE TKDE, IEEE THMS, AAAI, ICDM, SIGIR, and CIKM. His research interests include data mining, information retrieval, and recommendation. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/d0c497d5-7137-4f3a-9ea1-817cecb6a164/QiangLiu.jpg) |

Qiang Liu is an Associate Professor with the Center for Research on Intelligent Perception and Computing (CRIPAC), State Key Laboratory of Multimodal Artificial Intelligence Systems (MAIS), Institute of Automation, Chinese Academy of Sciences (CASIA). He received his PhD degree from CASIA. Currently, his research interests include data mining, misinformation detection, LLM safety and AI for science. He has published papers in top-tier journals and conferences, such as IEEE TKDE, AAAI, NeurIPS, KDD, WWW, SIGIR, CIKM, ICDM, ACL and EMNLP. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/d0c497d5-7137-4f3a-9ea1-817cecb6a164/YanqiaoZhu.jpg) |

Yanqiao Zhu is currently pursuing his Ph.D. degree at the Department of Computer Science, University of California, Los Angeles. His current research interests involve the exploration of graph and geometric representation learning and particularly their applications in computational chemistry. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/d0c497d5-7137-4f3a-9ea1-817cecb6a164/XiangTao.jpeg) |

Xiang Tao is currently pursuing his master’s degree of Computer Science at the Center for Research on Intelligent Perception and Computing (CRIPAC) at State Key Laboratory of Multimodal Artificial Intelligence Systems (MAIS), Institute of Automation, Chinese Academy of Sciences (CASIA). His current research interests mainly include data mining, graph representation learning and recommender systems. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/d0c497d5-7137-4f3a-9ea1-817cecb6a164/MengdiZhang.jpeg) |

Mengdi Zhang is a senior algorithm expert and technical manager at Meituan. She co-founded the OpenKG community and serves as a committee member of CIPS SIGKG. She received the BEng degree from Shandong University in 2014. Her research interests lie in industry large-scale knowledge representation and reasoning systems. She has published papers in top journals and conferences, such as IEEE TKED, AAAI, IJCAI, ACL, SIGIR, KDD, and WWW. She was a winner of the 12th Wu Wenjun AI Science and Technology Progress Award. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/d0c497d5-7137-4f3a-9ea1-817cecb6a164/LarryWang.jpg) |

Liang Wang received both the BEng and MEng degrees from Anhui University in 1997 and 2000, respectively, and the PhD degree from the Institute of Automation, Chinese Academy of Sci- ences (CASIA) in 2004. From 2004 to 2010, he was a research assistant at Imperial College London, United Kingdom, and Monash University, Australia, a research fellow at the University of Melbourne, Australia, and a lecturer at the University of Bath, United Kingdom, respectively. Currently, he is a full professor of the Hundred Talents Program at the State Key Laboratory of Multimodal Artificial Intelligence Systems, CASIA. His major research interests include machine learning, pattern recognition, and computer vision. He has widely published in highly ranked international journals such as IEEE TPAMI and IEEE TIP, and leading international conferences such as CVPR, ICCV, and ECCV. He has served as an Associate Editor of IEEE TPAMI, IEEE TIP, and PR. He is an IEEE Fellow and an IAPR Fellow. |