Bi-CL: A Reinforcement Learning Framework for Robots Coordination Through Bi-level Optimization

Abstract

In multi-robot systems, achieving coordinated missions remains a significant challenge due to the coupled nature of coordination behaviors and the lack of global information for individual robots. To mitigate these challenges, this paper introduces a novel approach, Bi-level Coordination Learning (Bi-CL), that leverages a bi-level optimization structure within a centralized training and decentralized execution paradigm. Our bi-level reformulation decomposes the original problem into a reinforcement learning level with reduced action space, and an imitation learning level that gains demonstrations from a global optimizer. Both levels contribute to improved learning efficiency and scalability. We note that robots’ incomplete information leads to mismatches between the two levels of learning models. To address this, Bi-CL further integrates an alignment penalty mechanism, aiming to minimize the discrepancy between the two levels without degrading their training efficiency. We introduce a running example to conceptualize the problem formulation and apply Bi-CL to two variations of this example: route-based and graph-based scenarios. Simulation results demonstrate that Bi-CL can learn more efficiently and achieve comparable performance with traditional multi-agent reinforcement learning baselines for multi-robot coordination.

I Introduction

Multi-robot systems have extensive applications in various engineering fields, but their deployment relies on scalable algorithms that enable robots to make coordinated and sequential decisions using local observation [1, 2]. To this end, Centralized Training with Decentralized Execution (CTDE) [3] emerges as a promising approach, offering a balanced framework for coordinating multiple robots through centralized learning processes that guide decentralized operational decisions. However, the effectiveness of CTDE faces significant challenges as the dimensionality of action spaces expands and each robot only has local observation of the system. These challenges complicate the training process and potentially impedes practical deployment.

In this context, we note that multi-robot cooperative missions often exhibit inherent hierarchical structures, allowing them to be decomposed into high-level and low-level tasks [4]. For instance, rescue missions utilize teams of robots to search large or hazardous areas [5, 6], where a high-level planning task may focus on area coverage, and a low-level task may address navigation and obstacle avoidance. A similar scenario arises from warehouse automation [7], where high-level tasks involve managing robot fleets for picking, packing, and sorting, and low-level tasks ensure precise and safe navigation in densely populated environments. Nevertheless, in most cases, the decomposed problems are internally coupled by state dependence, which can not be solved independently. Bi-level optimization [8] presents a solution to this issue, with the capability of enhancing learning efficiency and stability while maintaining the explicit connections between the two levels of problems. However, despite the abundance of research on addressing such coupling in static optimization problems for both single-agent [9] or multi-agent [10] cases, there remains a scarcity of studies tailored for multi-agent reinforcement learning (MARL) under CTDE with robots’ local observation. This gap motivates our research to develop new approaches that leverage the benefits of bi-level optimization for reinforcement learning applications in multi-robot systems, thereby enhancing their deployment efficiency across a variety of complex and dynamic environments.

Statement of contribution: The contributions of this paper include (i) the formulation of a bi-level Decentralized Markov Decision Process (Dec-MDP) for multi-robot coordination; (ii) a Bi-level Coordination Learning (Bi-CL) framework with a novel alignment mechanism to integrate multi-agent reinforcement learning and imitation learning under the CTDE scheme; (iii) simulated experiments that verify the effectiveness of the proposed Bi-CL algorithm and a comparison with traditional MARL algorithms to solve multi-robot coordination tasks.

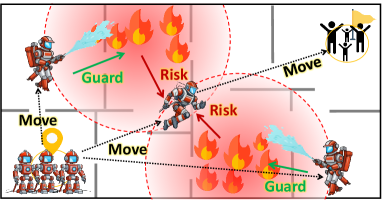

Running Example: As visualized in Fig. 1, we introduce an example throughout this paper to conceptualize and verify the proposed bi-level approach for learning multi-robot coordination. Consider multiple robots traversing an area with multiple adversaries. During their traversal, each robot suffers risk or damage from adversaries accumulated over time, if it enters their impact range. We use robots’ states to represent their physical locations. Each robot has two decomposable actions: the action of move and the action of guard, which are performed simultaneously. The move action changes the robot’s locations in the environment. In each step, the relative positions of robots to adversaries determine a base risk the robot accumulates. The guard is an additional action each robot can choose to perform against an adversary, which increases its own risk but reduces the risks that this adversary poses to other robots. Our goal is to minimize the accumulated risk and traveling time for all robots to arrive at a target position, given each robot only has local (partial) observation of the system.

Depending on the weights in performance evaluation, the team may face trade-offs between strategies. For example, each robot may move along the shortest path to reach the target as soon as possible v.s. spending more time to form guard behaviors to reduce team risk. These strategies boil down to robots’ interdependent decision-making regarding move (to where) and guard (which adversary) at each time step, making the problem non-trivial. On the other hand, as a key idea this paper highlights, the move and guard actions of robots in this scenario exhibit a hierarchical structure. This structure can be leveraged through a bi-level reformulation, which simplifies the problem’s complexity while still characterizing the interdependencies between the robots’ two types of actions.

The same scenario discussed above applies to a handful of applications for multi-robot coordination, such as firefighting considering fire as adversaries; battlefields considering enemy robots as adversaries; and team car racing or football games considering non-cooperative players as adversaries.

II Literature Review

II-A MARL for Multi-Robot Coordination

Reinforcement Learning (RL) has gained significant popularity for controlling robotic systems for complex tasks [11]. Using RL for multi-robot systems faces the challenge of increased system dimension and the need for robots to make decisions based on local observations. Addressing these challenges has led to the development of the CTDE [3] learning scheme, which enables robots to cooperatively learn coordination strategies through centralized training, while the learned policies are local and, thus, can be deployed for decentralized execution. Examples include MADDPG [3], which extends the centralized Deep Deterministic Policy Gradient (DDPG) framework to multi-robot systems. A similar case applies to Proximal Policy Optimization (PPO) algorithm and its generalization MAPPO [12]. While these approaches might be concerned with state dimensionality during centralized training, recent advances, such as Value-Decomposition Networks (VDN), can be leveraged to decompose the joint value function into individual value functions for each robot [13]. Building on this, QMIX further extends the framework, allowing for a more complex, state-dependent mixing of individual robot’s value functions to learn coordinated behaviors [14].

These CTDE approaches often rely on end-to-end learning, which cannot leverage the hierarchical structures of robot actions considered in this paper. In addition, their performance and training efficiency may significantly degrade [15] if each robot’s local observation covers only parts of the state space. Such local observation leads to a Decentralized Markov Decision Process (Dec-MDP), whose complexity is NEXP-complete [16]. In this case, even a slight reduction in the state/action space can have a huge impact on the overall computational complexity.

II-B Bi-level Optimization

Regarding state/action space reduction, bi-level optimization is a hierarchical mathematical formulation where the solution of one optimization task is restricted by the solution set mapping of another task [8]. In recent years, this technique has been incorporated with various machine learning methods for nested decision-making, including multi-task meta-learning [17], neural architecture search [18], adversarial learning [19], and deep reinforcement learning [20]. The applications of these bi-level learning approaches span economics, management, optimal control, systems engineering, and resource allocation problems [21, 22, 23, 24, 25], with a comprehensive review by Liu et al. [8]. Corresponding to the scope of this paper, when solving multi-robot coordination problems, bi-level decomposition has been introduced [10, 26] for actor-critic MARL to reduce the training burden of each level, thus improving the training efficiency and convergence. Le et al. [27] uses latent variables to introduce a mechanism similar to bi-level optimization that can significantly improve the performance of multi-agent imitation learning.

Nevertheless, a commonality among the mentioned works is the assumption that robots (agents) can access complete state information of the system. In cases where each robot has only partial information, the incomplete information can lead to a mismatch between the optimal solutions on the two levels. This challenge has motivated our work to incorporate an alignment penalty in order to generalize the bi-level optimization concept under CTDE, taking into account robots’ local observations.

III Preliminaries and Problem Formulation

III-A Formulation of a Bi-level Optimization

A regular centralized Markov Decision Process (MDP) can be defined by a tuple , including state, action, state transition, discount factor, and reward. The goal is to learn a policy to maximize the expected cumulative reward over the task horizon , i.e.,

| (1) |

where and are the action and state of the system at each step.

Let’s assume the actions of the system can be decomposed as , and the system’s state transition depends only on action such that :

| (2) |

However, the reward function depends on both actions:

| (3) |

If the structure of problem (1) satisfies (2) and (3), it can be formulated into a bi-level problem:

| (4a) | ||||

| (4b) | ||||

In this paper, we make the following assumption:

Assumption 1.

The assumption can be justified by the fact that (4a) is a planning problem involving state transitions and rewards for future steps, while (4b) only involves a one-step reward.

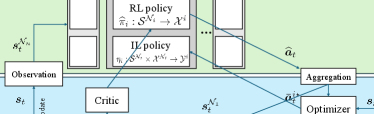

Under Assumption 1, for the lower level problem (4b), the optimal is solvable from , based on the current and the choice of . Bringing this solution back to the upper level (4a), we only need to solve a reduced-dimension MDP defined by , to obtain a policy that generates action . During implementation, the policy in problem (1) can be a composition of . We learn the policy to generate from , then use to generate from . Fig. 2 provides a visualization of this bi-level mechanism builds on actor-critic reinforcement leaning.

III-B Bi-level Formulation for Multi-robot Coordination Learning with Local Robot Observation

In this paper, we generalize the bi-level formulation into a multi-robot system with local observation. Consider a multi-robot system with a set of robots. Suppose the centralized actions and states in (1) are decomposed into each robot’s state and action as and . Assume each robot’s action can be further written as , where the action determines the robot’s local state update . The multi-robot system shares a global reward determined by all robots’ states, and their two actions: .

For many real-world applications, the deployment of a multi-robot system faces communication and sensing constraints, where each agent may only have access to the states of its neighbors through a network, denoted by set . The neighbors’ states are a subset of the full system state, denoted by . Such local observation turns the problem into a Dec-MDP. Under this constraint, the problem of interest in this paper is to learn a local policy for each agent such that:

| (5) |

While solving Dec-MDP problems is known to be difficult [16], in this paper, we will generalize the idea of bi-level optimization under the scheme of CTDE and use action space reduction to alleviate the challenges in high-dimensional parameter tuning for MARL. Note that such a generalization is non-trivial and the main challenge lies in the local observation and local decision-making of each robot using incomplete information. As a consequence, compared with the centralized case, the robots cannot solve an optimization problem like (4b) to directly determine their secondary action .

Running Example. We demonstrate how formulation (5) aligns with the running example. Let state represent robots’ physical locations. The move action is associated with , which impacts the robots’ state update. The guard action is associated with . The risk function (negative reward) in each time step depends on the robots’ current locations , their move , and how robots are guarding against adversaries, i.e., . Thus, the scenario satisfies (2)-(3) and can be reformulated into a multi-robot bi-level optimization problem. All robots only receive states from their neighbors , through an underlying network.

IV Main Approach

As described in Sec. III-A, the centralized bi-level formulation leverages the optimizer (4b) to reduce the dimensionality of the action space, thereby enhancing training efficiency. However, such optimization requires global information, which is not accessible to robots during decentralized execution. Thus, robots cannot locally generate . To address this issue, in this section, we introduce a new reformulation to generalize the concept of bi-level optimization to a CTDE setup.

To present our approach, we reformulate (5) into the following Bi-level Coordination Learning (Bi-CL) problem, ,

| (6a) | ||||

| (6b) | ||||

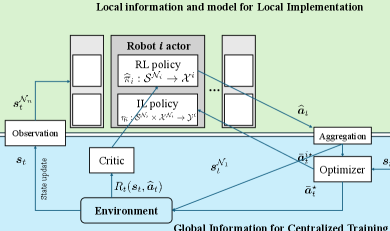

| (6c) | ||||

In equations (6a) and (6b), we aim to train two local policies for each robot: one policy, , generates action ; another policy, , generates action . Note that the two policies are coupled since their inputs rely on the outputs of each other. If training two policies simultaneously, such coupling can pose a stability issue and reduce training efficiency. To address this, our idea is to introduce a global optimizer (6c) under the CTDE scheme to decouple and guide the training of both policies, as we explain next and visualized in Fig. 3.

Bi-level optimization: First, we introduce optimization problem (6c) to solve . If temporarily ignoring the alignment penalty term, the equations (6a) and (6c) together represent a CTDE version of the bi-level problem (4). During the centralized training process, we can utilize global information for all robots’ states and actions to solve . Based on , we train the local policy in (6a) for each agent’s using a reinforcement learning scheme. has a reduced-dimension action space because is not learned.

On the other hand, while optimizer (6c) can be used in centralized training to obtain action for all robots, it cannot be used by robots during decentralized execution due to the local observations. Thus, we introduce (6b) to train a local policy to generate agent’s . The policy can be trained conveniently using an imitation learning (IL) scheme that uses optimizer (6c) as the expert demonstration. Here, the imitation loss in (6b) measures the difference between the policy output using local information and the optimal action solved from optimizer (6c) using global information.

Alignment of the two policies: We explain the alignment term in (6c). Based on the above explanation, the policy is trained assuming the actions are optimal for all robots, and the policy seeks to generate such optimal actions using only local information. Clearly, during decentralized execution, the optimality of can hardly be guaranteed, thus creating a mismatch between the policies and . To address this, we introduce an alignment penalty term in (6c), where and

Here, is the output of the model , then evaluates how well an action aligns with the policy . Minimizing this penalty helps to reduce the mismatch between the two local policies. Mathematically, the value of for each robot equals the loss in (6b). Hence, is an important coefficient and we shall remark on its choice during the training. If , the reformulation (6) disregards the policy mismatch, always opting for the solution that maximizes to train . Conversely, when , the penalty forces the training of using the action chosen by , making the two policies fully coupled, and the IL (6b) no longer updates. To strike a balance, our idea is to make sure the IL model is sufficiently trained before the penalty is applied. For this purpose, we define a modified logistic function:

| (7) |

where is the training episode. We let start with zero to ‘jump start’ the training of and using the optimal for maximizing . Afterwards, is gradually increased to a sufficiently large value to fine-tune , taking into account the output of and ensuring alignment between the two policies, and . The described training scheme is summarized in Algorithm 1.

Remark 1.

In the presentation of Algorithm (1), we assume the use of actor-critic-based methods for (6a) and a quadratic mismatch function for the imitation loss (6b). However, the proposed algorithm is also applicable to general CTDE setups when using other reinforcement learning and imitation learning methods. Furthermore, in handling the local observations of robots, there exist more advanced methods [28, Sec. 2.2.2] in the literature on Dec-MDPs and POMDPs that leverage the memory (historic states) of robots to learn more powerful policies. In this paper, we only use simple memory-less policies, but these more advanced methods are also compatible with Algorithm (1).

V Numerical Results

In this section, we employ two variants of the running example to verify the proposed algorithm. We use simulated experiments to demonstrate: (i) the effectiveness of the proposed bi-level learning schemes, in particular, the alignment penalty term; (ii) the advantage of the proposed approach in terms of training efficiency compared with alternative MARL algorithms.

Through this section, we use the accumulated reward (5) to evaluate the proposed Bi-CL algorithm. To avoid ambiguity, we distinguish the following metrics:

RL-Reward: refers to the reward of the reinforcement learning policy when solving (6a) during centralized training.

T-Reward: refers to an average reward obtained by executing both the learned reinforcement learning policy and imitation learning policy in the environment for thirty times.

R-Gap: refers to the subtraction between the RL-Reward and the T-Reward.

Remark 2.

Note that RL-Reward and T-Reward are different because they use different ways to choose robots’ action . RL-Reward uses an ‘optimal’ solution of (6c); T-Reward uses output of the learned policy . Only the T-Reward reflects the true performance of the policies because it is achievable during decentralized execution, and higher means better. Since R-Gap evaluates the difference, smaller means better.

V-A Coordinated Multi-robot Route Traversal

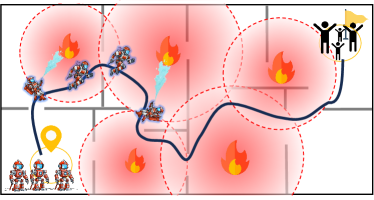

Running Example (a): We introduce a variant of the running example, by assuming all robots travel continuously through a route. Each robot can only observe a subset of full system states through an underlying communication network. Fig. 4 provides a visualization of the environment. The testing environment we use is a mathematical abstraction that may not exactly follow its physical layout and the number of adversaries may change.

Each robot has a continuous move action (velocity) , and a discrete guard action where is the set of all adversaries. The robot ’s state transition (position) follows dynamics . These align with definition (2).

Suppose adversary has an impacted area . Each time-step, if , robot accumulates a cost . Besides, for any robot , if , it can perform guard against adversary , i.e., . The guarding effect is characterized by a discount factor on the costs created by adversary :

Such a discount is more effective when the robot admits at a lower moving velocity . The total team cost in each step is defined as:

where is a constant time penalty. A one-time positive reward is added when all robots arrive at the target. The definition of aligns with definition (3).

The goal is to minimize the team accumulated cost in the form of (5) before all robots arrive at the target position.

The alignment of the problem with (2) and (3) allows it to be reformulated into (6) and then solved by our Bi-CL.

Effectiveness of Alignment Penalty: We first implement the proposed Bi-CL in an environment with 4 robots and 4 adversaries (fire). In Bi-CL, the reinforcement learning of uses an independent actor in each robot with centralized critic [29, Sec. 2.4], following the structure of MADDPG [3] but over reduced action space. Each robot only observes part of the global information, i.e., its own state and the states of a subset of other robots in the system The cost function is an affine function that depends on the closeness between the robot and the center of the adversary.

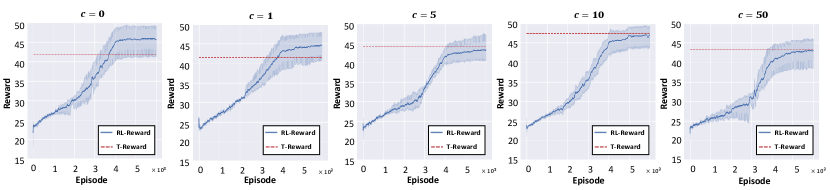

Fig. 5 illustrates the RL-Reward curves and T-Reward for different by setting , all with and . This, together with (7), makes gradually increase in an ‘S’ shape from to . Upon examining the results, it is evident that the R-Gap is large when is small, and the gap diminishes as increases. This verifies the effectiveness of the proposed alignment penalty term in (6c), as it motivates the policy to be tuned using a that is closer to the output of . It is also important to note that although the MARL training reward appears high when is small, this is misleading and unattainable in decentralized execution due to the mismatch between and . The attainable reward (T-Reward) is much lower with smaller .

| Environments | Metric | |||||

|---|---|---|---|---|---|---|

| N3_M3 | T-Reward | 51.83 | 53.01 | 53.44 | 53.17 | 52.13 |

| R-Gap | 1.34 | 0.73 | 0.26 | -0.45 | -0.23 | |

| N4_M4 | T-Reward | 42.10 | 42.03 | 44.05 | 47.38 | 43.56 |

| R-Gap | 3.98 | 1.55 | -0.87 | -0.39 | -0.11 | |

| N5_M4 | T-Reward | 56.31 | 58.27 | 59.68 | 60.08 | 59.46 |

| R-Gap | 4.79 | 4.45 | 1.17 | -0.04 | -0.15 | |

| N5_M4* | T-Reward | 50.67 | 53.39 | 54.09 | 54.60 | 54.20 |

| R-Gap | 6.54 | 3.84 | 1.82 | -0.59 | 0.27 | |

Finally, we observe in the last plot of Fig. 5 that a very large may negatively impact the training performance. This is due to two reasons. First, as we discussed in the algorithm development, larger reduces the training efficiency of the imitation learning part of the algorithm. This is reflected by the lower values on both RL-Reward and T-Reward. Second, since impacts the computation of during the training process, changing it too aggressively will lead to instability in training. This is evidenced by the middle part of the curve, where increased osculation is observed.

The same test is performed under various environment setups and the results are shown in Table I. Here, Na_Mb means the environment has robots and adversaries. Especially, in N5_M4*, each robot further reduces the number of other robots’s states it can observe. The results in the table align with our above analysis. In all cases where , i.e., without alignment penalty, the performance is the worst. The R-Gap generally reduces when grows large. Since T-Reward is the core metric for performance, the best result mostly occurs at with only one exception and the difference is small. Furthermore, to read the table column-wise, environments with the same number of adversaries, i.e., M4 are comparable. From N4_M4 to N5_M4, the T-Rewards generally increase because more coordination behaviors can be generated. When comparing N5_M4* and N5_M4, the T-Rewards generally decrease due to the reduced sensing distances of the robots. This also leads to a larger R-Gap when , necessitating the introduced alignment penalty.

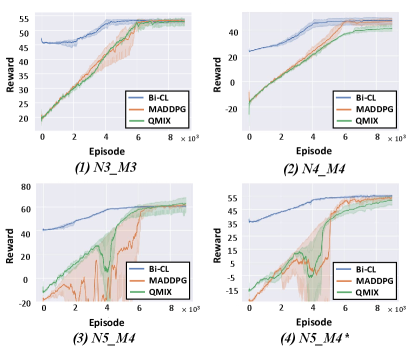

Comparing Training Efficiency with Baselines: We also compare the proposed Bi-CL algorithm with two well-established MARL algorithms, MADDPG [3] and QMIX [14], respectively. MADDPG and QMIX are implemented in complete action space of each robot. The same learning rates with Bi-CL are used. The comparison is visualized in Fig. 6 for the four cases in the above discussion. Here, we choose as the R-Gaps are small. Thus, the RL-Reward curve can represent the convergence of our algorithm and can approximate the true reward our algorithm can achieve. It can be observed that in all cases, Bi-CL can achieve similar final rewards compared with baselines, which justifies the effectiveness of the proposed algorithm. The efficiency of Bi-CL is evidenced by its faster convergence speed compared with baselines. Furthermore, the starting reward of our algorithm is significantly better because it uses the optimization (6c) to boost and guide the policy training, while other methods are purely based on exploration. For a similar reason, we observe that both MADDPG and QMIX suffer from training stability issues for complex scenarios, while the proposed Bi-CL does not.

V-B Coordinated Multi-robot Graph Traversal

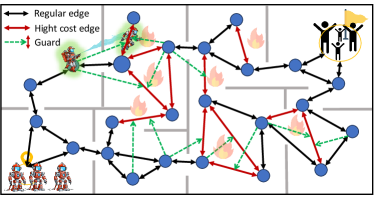

Running Example (b): In the second scenario, we assume all robots travel through a graph environment, which highlights the coordination of robots from a spatial aspect. Fig. 7 provides a visualization of the environment; however, the testing environment we use includes randomly generated graphs that may not exactly follow the physical layout. Each robot can only observe a subset of full system states. The environment is characterized by a environment graph describing robot traversability from one node to another. It also features a coordination mechanism (green arrows) describing some robots, when located on certain nodes, can guard other robots’ edge traversal to reduce their cost, similar to the work by Limbu et al. [30, 31].

Each robot has a discrete move action that moves the robot to a new node in the graph based on its current position . Here, is the set of adjacent neighboring nodes of robot position in the environment graph. The robot ’s state transition (position) follows subject to . The robot also has a discrete guard action , where is the set of edges that can be guarded by a robot at its new position (visualized by green arrows in Fig. 7). The robots’ actions and state transitions align with definition (2).

When robot travels an edge , i.e., from to , there is an associated edge cost . Besides, based on robot coordination, if another robot can and chooses to guard this edge, i.e., , then this edge cost will be discounted by a factor

Based on this, the total team cost in each step is defined as:

where is a constant time penalty. A one-time positive reward is added when all robots arrive at the target. The definition of aligns with definition (3).

The goal is to minimize the team accumulated cost in the form of (5) before all robots arrive at the target position.

The alignment of the problem with (2) and (3) allows it to be reformulated into (6) and then solved by our Bi-CL.

| Graph | Robots/ Nodes | T-Reward | Converge () | ||||

|---|---|---|---|---|---|---|---|

| Bi-CL | MAPPO | QMIX | Bi-CL | MAPPO | QMIX | ||

| Sparse | 3/5 | 83.16 | 82.08 | 78.82 | 3.58 | 4.89 | 5.85 |

| 3/10 | 64.86 | 65.39 | 64.97 | 3.69 | 4.87 | 5.95 | |

| 5/10 | 54.91 | 50.33 | 55.21 | 3.75 | 4.96 | 6.14 | |

| 5/15 | 51.37 | 33.14 | 49.67 | 3.71 | 5.24 | 6.03 | |

| Dense | 3/5 | 93.21 | 96.90 | 94.23 | 3.63 | 4.95 | 6.19 |

| 3/10 | 73.41 | 60.89 | 71.08 | 3.79 | 5.09 | 6.38 | |

| 5/10 | 66.32 | 54.14 | 68.08 | 3.80 | 4.94 | 6.29 | |

| 5/15 | 60.08 | 47.82 | 58.16 | 3.85 | 4.94 | 6.44 | |

Comparing Training efficiency with Baselines: In this scenario, we compare the proposed Bi-CL algorithm with two MARL algorithms MAPPO [3] and QMIX [14], respectively. Here, we use MAPPO instead of MADDPG because centralized PPO’s suitability for this task has been validated by the study in Limbu et al. [31]. In Bi-CL, the reinforcement learning part is implemented by QMIX in action space for each robot. While the MAPPO and original QMIX are implemented in complete action space of each robot. The simulations are configured with the following setups. We experiment with 5, 10, 15 nodes in the environment graph with 3 or 5 robots in two types of graph connectivity, i.e., sparse and dense, for edges and the number of edges that can be guarded. We randomly create 15 environment graphs as our test set. For all approaches, training is terminated when the cumulative reward no longer changes more than for 10 steps.

The comparison results are presented in Table II, which verifies the effectiveness of the Bi-CL algorithm for learning coordinated actions among robots within graph-based coordination tasks. Despite variations in the number of robots, graph nodes, or densities of the graph edges, the proposed Bi-CL algorithm delivers comparable results to the baseline algorithms while requiring fewer training episodes to converge. By observing the column of MAPPO, the results tend to fall below those of other methods under conditions of increased environmental complexity. This suggests that policy gradient approaches may only learn a sub-optimal policy solution in these cases. The QMIX method is more robust in terms of optimality, however, it generally requires more training episodes to converge. In comparison, the proposed Bi-CL method enjoys much more efficient convergence properties. This improvement is mainly due to (i) the reduction of the action space for reinforcement learning, and (ii) the use of optimization (6c) to jump-start the training.

VI Conclusion

We presented a bi-level formulation for multi-robot coordination learning with local observation, wherein robots’ state transitions and their cooperative behaviors are abstracted and optimized on different levels, significantly enhancing learning efficiency. A key enabler of our Bi-CL algorithm was an alignment penalty that enables upper-level learning to account for potential discrepancies arising from local observations in lower-level optimization. We validated our algorithm through a running example, designing two distinct environments: route-based and graph-based. Experimental results demonstrated that our algorithm can effectively learn in both environments, underscoring its versatility and applicability across a diverse set of coordination tasks. We evaluated the performance enhancement of the Bi-CL using different alignment penalty parameters. Comparative analysis with baselines verified the efficiency of our algorithm.

For future work, we aim to explore the scalability of our Bi-CL to accommodate larger multi-robot systems and more complex environments, further refining the alignment penalty mechanism to enhance its adaptability and efficiency. Moreover, we intend to extend our way of handling robots’ information loss to effectively manage dynamic, stochastic, and noisy scenarios, thereby enhancing its resilience and performance in unpredictably evolving multi-robot coordination environments.

References

- [1] Y. Rizk, M. Awad, and E. W. Tunstel, “Cooperative heterogeneous multi-robot systems: A survey,” ACM Computing Surveys (CSUR), vol. 52, no. 2, pp. 1–31, 2019.

- [2] X. Wang, J. Hudack, and S. Mou, “Distributed algorithm with resilience for multi-agent task allocation,” in 2021 4th IEEE International Conference on Industrial Cyber-Physical Systems (ICPS), pp. 112–117, IEEE, 2021.

- [3] R. Lowe, Y. I. Wu, A. Tamar, J. Harb, O. Pieter Abbeel, and I. Mordatch, “Multi-agent actor-critic for mixed cooperative-competitive environments,” Advances in neural information processing systems, vol. 30, 2017.

- [4] Z. Yan, N. Jouandeau, and A. A. Cherif, “A survey and analysis of multi-robot coordination,” International Journal of Advanced Robotic Systems, vol. 10, no. 12, p. 399, 2013.

- [5] X. Xiao, J. Dufek, and R. R. Murphy, “Autonomous visual assistance for robot operations using a tethered uav,” in Field and Service Robotics: Results of the 12th International Conference, pp. 15–29, Springer, 2021.

- [6] B. Liu, X. Xiao, and P. Stone, “Team orienteering coverage planning with uncertain reward,” in 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 9728–9733, IEEE, 2021.

- [7] Y. Lian, Q. Yang, Y. Liu, and W. Xie, “A spatio-temporal constrained hierarchical scheduling strategy for multiple warehouse mobile robots under industrial cyber–physical system,” Advanced Engineering Informatics, vol. 52, p. 101572, 2022.

- [8] R. Liu, J. Gao, J. Zhang, D. Meng, and Z. Lin, “Investigating bi-level optimization for learning and vision from a unified perspective: A survey and beyond,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 44, no. 12, pp. 10045–10067, 2021.

- [9] A. Biswas and C. Hoyle, “A literature review: solving constrained non-linear bi-level optimization problems with classical methods,” in International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, vol. 59193, p. V02BT03A025, American Society of Mechanical Engineers, 2019.

- [10] H. Zhang, W. Chen, Z. Huang, M. Li, Y. Yang, W. Zhang, and J. Wang, “Bi-level actor-critic for multi-agent coordination,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 34, pp. 7325–7332, 2020.

- [11] L. P. Kaelbling, M. L. Littman, and A. W. Moore, “Reinforcement learning: A survey,” Journal of artificial intelligence research, vol. 4, pp. 237–285, 1996.

- [12] C. Yu, A. Velu, E. Vinitsky, J. Gao, Y. Wang, A. Bayen, and Y. Wu, “The surprising effectiveness of ppo in cooperative multi-agent games,” Advances in Neural Information Processing Systems, vol. 35, pp. 24611–24624, 2022.

- [13] P. Sunehag, G. Lever, A. Gruslys, W. M. Czarnecki, V. Zambaldi, M. Jaderberg, M. Lanctot, N. Sonnerat, J. Z. Leibo, K. Tuyls, et al., “Value-decomposition networks for cooperative multi-agent learning,” arXiv preprint arXiv:1706.05296, 2017.

- [14] T. Rashid, M. Samvelyan, C. S. De Witt, G. Farquhar, J. Foerster, and S. Whiteson, “Monotonic value function factorisation for deep multi-agent reinforcement learning,” The Journal of Machine Learning Research, vol. 21, no. 1, pp. 7234–7284, 2020.

- [15] P. J. Gmytrasiewicz and P. Doshi, “A framework for sequential planning in multi-agent settings,” Journal of Artificial Intelligence Research, vol. 24, pp. 49–79, 2005.

- [16] D. S. Bernstein, R. Givan, N. Immerman, and S. Zilberstein, “The complexity of decentralized control of markov decision processes,” Mathematics of operations research, vol. 27, no. 4, pp. 819–840, 2002.

- [17] A. Antoniou, H. Edwards, and A. Storkey, “How to train your maml,” in International Conference on Learning Representations, 2018.

- [18] H. Liu, K. Simonyan, and Y. Yang, “Darts: Differentiable architecture search,” in International Conference on Learning Representations, 2018.

- [19] D. Pfau and O. Vinyals, “Connecting generative adversarial networks and actor-critic methods,” arXiv preprint arXiv:1610.01945, 2016.

- [20] M. Hong, H. Wai, Z. Wang, and Z. Yang, “A two-timescale framework for bilevel optimization: Complexity analysis and application to actor-critic. arxiv e-prints, art,” arXiv preprint arXiv:2007.05170, 2020.

- [21] X. Wang, S. Mou, and B. D. Anderson, “Consensus-based distributed optimization enhanced by integral feedback,” IEEE Transactions on Automatic Control, vol. 68, no. 3, pp. 1894–1901, 2022.

- [22] A. Sinha, P. Malo, and K. Deb, “A review on bilevel optimization: From classical to evolutionary approaches and applications,” IEEE Transactions on Evolutionary Computation, vol. 22, no. 2, pp. 276–295, 2017.

- [23] Y. Mintz, J. A. Cabrera, J. R. Pedrasa, and A. Aswani, “Control synthesis for bilevel linear model predictive control,” in 2018 Annual American Control Conference (ACC), pp. 2338–2343, IEEE, 2018.

- [24] T. Nisha, D. T. Nguyen, and V. K. Bhargava, “A bilevel programming framework for joint edge resource management and pricing,” IEEE Internet of Things Journal, vol. 9, no. 18, pp. 17280–17291, 2022.

- [25] X. Wang, S. Mou, and B. D. Anderson, “Scalable, distributed algorithms for solving linear equations via double-layered networks,” IEEE Transactions on Automatic Control, vol. 65, no. 3, pp. 1132–1143, 2019.

- [26] L. Zheng, T. Fiez, Z. Alumbaugh, B. Chasnov, and L. J. Ratliff, “Stackelberg actor-critic: Game-theoretic reinforcement learning algorithms,” in Proceedings of the AAAI conference on artificial intelligence, vol. 36, pp. 9217–9224, 2022.

- [27] H. M. Le, Y. Yue, P. Carr, and P. Lucey, “Coordinated multi-agent imitation learning,” in International Conference on Machine Learning, pp. 1995–2003, PMLR, 2017.

- [28] K. Zhang, Z. Yang, and T. Başar, “Multi-agent reinforcement learning: A selective overview of theories and algorithms,” Handbook of reinforcement learning and control, pp. 321–384, 2021.

- [29] Y. Xiao, W. Tan, and C. Amato, “Asynchronous actor-critic for multi-agent reinforcement learning,” Advances in Neural Information Processing Systems, vol. 35, pp. 4385–4400, 2022.

- [30] M. Limbu, Z. Hu, S. Oughourli, X. Wang, X. Xiao, and D. Shishika, “Team coordination on graphs with state-dependent edge costs,” in 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 679–684, IEEE, 2023.

- [31] M. Limbu, Z. Hu, X. Wang, D. Shishika, and X. Xiao, “Team coordination on graphs with reinforcement learning,” in 2024 IEEE International Conference on Robotics and Automation (ICRA), IEEE, 2024.