Beyond Unimodal: Generalising Neural Processes for Multimodal Uncertainty Estimation

Abstract

Uncertainty estimation is an important research area to make deep neural networks (DNNs) more trustworthy. While extensive research on uncertainty estimation has been conducted with unimodal data, uncertainty estimation for multimodal data remains a challenge. Neural processes (NPs) have been demonstrated to be an effective uncertainty estimation method for unimodal data by providing the reliability of Gaussian processes with efficient and powerful DNNs. While NPs hold significant potential for multimodal uncertainty estimation, the adaptation of NPs for multimodal data has not been carefully studied. To bridge this gap, we propose Multimodal Neural Processes (MNPs) by generalising NPs for multimodal uncertainty estimation. Based on the framework of NPs, MNPs consist of several novel and principled mechanisms tailored to the characteristics of multimodal data. In extensive empirical evaluation, our method achieves state-of-the-art multimodal uncertainty estimation performance, showing its appealing robustness against noisy samples and reliability in out-of-distribution detection with faster computation time compared to the current state-of-the-art multimodal uncertainty estimation method.

1 Introduction

Uncertainty estimation of deep neural networks (DNNs) is an essential research area in the development of reliable and well-calibrated models for safety-critical domains [49, 45]. Despite the remarkable success achieved by DNNs, a common issue with these models is their tendency to make overconfident predictions for both in-distribution (ID) and out-of-distribution (OOD) samples [16, 43, 35]. Extensive research has been conducted to mitigate this problem, but most of these efforts are limited to unimodal data, neglecting the consideration of multimodal data [57, 11, 50, 63, 38, 34].

In many practical scenarios, safety-critical domains typically involve the processing of multimodal data. For example, medical diagnosis classification that uses X-ray, radiology text reports and the patient’s medical record history data can be considered as a form of multimodal learning that requires trustworthy predictions [8]. Despite the prevalence of such multimodal data, the problem of uncertainty estimation for multimodal data has not been comprehensively studied. Furthermore, it has been demonstrated that using existing unimodal uncertainty estimation techniques directly for multimodal data is ineffective, emphasising the need for a meticulous investigation of multimodal uncertainty estimation techniques [24, 17].

Several studies investigated the problem of multimodal uncertainty estimation with common tasks including 1) assessing calibration performance, 2) evaluating robustness to noisy inputs, and 3) detecting OOD samples. Trusted Multi-view Classification (TMC) [17] is a single-forward deterministic classifier that uses Dempster’s combination rule [7] to combine predictions obtained from different modalities. The current state-of-the-art (SOTA) in this field is Multi-view Gaussian Process (MGP) [24], which is a non-parametric Gaussian process (GP) classifier that utilises the product-of-experts to combine predictive distributions derived from multiple modalities. Although MGP has shown the reliability of GPs in this context, the computational cost of a GP increases cubically with the number of samples [19, 65].

Neural processes (NPs) offer an alternative approach that utilises the representation power of DNNs to imitate the non-parametric behaviour of GPs while maintaining a lower computational cost [13, 14]. It has been shown that NPs can provide promising uncertainty estimation for both regression [28, 33, 29] and classification tasks [62, 25] involving unimodal data. Despite the promising potential of NPs for multimodal data, to the best of our knowledge, no research has yet investigated the feasibility of using NPs for multimodal uncertainty estimation.

In this work, we propose a new multimodal uncertainty estimation framework called Multimodal Neural Processes (MNPs) by generalising NPs for multimodal uncertainty estimation. MNPs have three key components: the dynamic context memory (DCM) that efficiently stores and updates informative training samples, the multimodal Bayesian aggregation (MBA) method which enables a principled combination of multimodal latent representations, and the adaptive radial basis function (RBF) attention mechanism that facilitates well-calibrated predictions. Our contributions are:

1. We introduce a novel multimodal uncertainty estimation method by generalising NPs, comprising the DCM, the MBA, and the adaptive RBF attention.

2. We conduct rigorous experiments on seven real-world datasets and achieve new SOTA performance in classification accuracy, calibration, robustness to noise, and OOD detection.

3. We show that MNPs achieve faster computation time (up to 5 times) compared to the current SOTA multimodal uncertainty estimation method.

2 Background

Multimodal Classification

The aim of this research is to investigate uncertainty estimation for multimodal classification. Specifically, we consider a multimodal training dataset $D_{train} = \{\{x_i^m\}_{m=1}^{M}, y_i\}_{i=1}^{N_{train}}$, where $M$ is the number of input modalities and $N_{train}$ is the number of training samples. We assume that each $i$-th sample has $M$ modalities of input $x_i^m \in \mathbb{R}^{d^m}$ with the input dimension of $d^m$ and a single one-hot encoded label $y_i$ with the number of classes $K$. In this study, we consider the input space to be a feature space. The objective is to estimate the labels of test samples $\{y_i\}_{i=1}^{N_{test}}$ given $D_{test} = \{\{x_i^m\}_{m=1}^{M}\}_{i=1}^{N_{test}}$, where $N_{test}$ is the number of test samples.

Neural Processes

NPs are stochastic processes that use DNNs to capture the ground-truth stochastic processes that generate the given data [13]. NPs learn a distribution over functions and provide uncertainty for target samples, preserving this property of GPs. At the same time, NPs exploit the function approximation of DNNs in a more efficient manner than GPs. For NPs, both training and test datasets have a context set $C = \{C_X, C_Y\} = \{x_i^C, y_i^C\}_{i=1}^{N_C}$ and a target set $T = \{T_X, T_Y\} = \{x_i^T, y_i^T\}_{i=1}^{N_T}$, with $N_C$ being the number of context samples and $N_T$ the number of target samples (the modality notation is intentionally omitted here, as the original NPs are designed for unimodal inputs [13, 14]), and the learning objective of NPs is to maximise the likelihood $p(T_Y \mid C, T_X)$.

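Conditional Neural Processes (CNPs), the original work of NPs [13], maximise $p(T_Y \mid r(C), T_X)$ with $r(C) = \frac{1}{N_C}\sum_{i=1}^{N_C} \mathrm{enc}_\rho(\mathrm{cat}[x_i^C; y_i^C]) \in \mathbb{R}^{d_e}$, where $\mathrm{enc}_\rho$ is an encoder parameterised by $\rho$, $\mathrm{cat}[\cdot;\cdot]$ is the concatenation of two vectors along the feature dimension, and $d_e$ is the feature dimension. The mean vector $r(C)$ is the permutation-invariant representation that summarises the context set, which is passed to a decoder together with the target inputs $T_X$ to estimate the target labels $T_Y$.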

The original CNPs obtain the context representation $r(C)$ with the unweighted mean operation, treating all the context points equally, which has been shown to underfit [28]. To improve on this, Attentive Neural Processes (ANPs) [28] leveraged the scaled dot-product cross-attention [60] to create target-specific context representations that allocate higher weight to the closer context points:

$$r_*(C, T_X) = \underbrace{\mathrm{Softmax}\left(T_X C_X^{\top} / \sqrt{d}\right)}_{A(T_X, C_X) \in \mathbb{R}^{N_T \times N_C}} \, R_C \tag{1}$$

where $A(T_X, C_X)$ is the attention weight with the target inputs $T_X$ as the query, the context inputs $C_X$ are the key, the encoded context set $R_C \in \mathbb{R}^{N_C \times d_e}$ (one row per context point) is the value, and $d$ is the input dimension of $T_X$ and $C_X$. The resulting target-specific context representation $r_*(C, T_X)$ has been shown to enhance the expressiveness of the task representation [28, 53, 29, 9, 47, 67].
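The contrast between the unweighted mean of CNPs and the target-specific representation of ANPs can be illustrated with a minimal NumPy sketch. The function names (`mean_context`, `cross_attention`) and the array names are hypothetical, and the encoded context `enc_c` is random data standing in for the output of a learned encoder.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def mean_context(enc_c):
    """CNP-style summary: unweighted mean over the encoded context points."""
    return enc_c.mean(axis=0)

def cross_attention(t_x, c_x, enc_c):
    """ANP-style scaled dot-product cross-attention.

    t_x: (N_T, d) queries, c_x: (N_C, d) keys, enc_c: (N_C, d_e) values.
    Returns the attention weights (N_T, N_C) and the
    target-specific context representations (N_T, d_e).
    """
    d = t_x.shape[-1]
    weights = softmax(t_x @ c_x.T / np.sqrt(d), axis=-1)
    return weights, weights @ enc_c

rng = np.random.default_rng(0)
c_x = rng.normal(size=(5, 3))      # 5 context inputs of dimension 3
enc_c = rng.normal(size=(5, 4))    # stand-in for encoder outputs, d_e = 4
t_x = rng.normal(size=(2, 3))      # 2 target inputs

weights, r_star = cross_attention(t_x, c_x, enc_c)
r_mean = mean_context(enc_c)       # identical for every target
```

Every target shares `r_mean`, whereas each row of `r_star` is a different convex combination of the encoded context points.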

The training procedure of NPs often randomly splits a training dataset to the context and the target sets (i.e., ). In the inference stage, a context set is provided for the test/target samples (e.g., a support set in few-shot learning or unmasked patches in image completion). While the context set for the test dataset is given for these tasks, NPs also have been applied to other tasks where the context set is not available (e.g., semi-supervised learning [62] and uncertainty estimation for image classification [25]). In those tasks, existing studies have used a context memory during inference, which is composed of training samples that are updated while training [25, 62]. It is assumed that the context memory effectively represents the training dataset with a smaller number of samples. We build upon these previous studies by leveraging the concept of context memory in a more effective manner, which is suitable for our task.

Note that the existing NPs such as ETP [25] can be applied to multimodal classification by concatenating multimodal features into a single unimodal feature. However, in Section 5.1, we show that this approach results in limited robustness to noisy samples.

3 Multimodal Neural Processes

The aim of this study is to generalise NPs to enable multimodal uncertainty estimation. In order to achieve this goal, there are significant challenges that need to be addressed first. Firstly, it is essential to have an efficient and effective context memory for a classification task as described in Section 2. Secondly, a systematic approach of aggregating multimodal information is required to provide unified predictions. Finally, the model should provide well-calibrated predictions without producing overconfident estimates as described in Section 1.

Our MNPs, shown in Figure 1, address these challenges with three key components respectively: 1) the dynamic context memory (DCM) (Section 3.1), 2) the multimodal Bayesian aggregation (MBA) (Section 3.2), and 3) the adaptive radial basis function (RBF) attention (Section 3.3). Sections 3.1 to 3.3 elaborate on the motivation and the proposed solution for each challenge, and Section 3.4 outlines the procedures for making predictions. Throughout this section, the multimodal target set refers to the samples from the training and test datasets, and the multimodal context memory plays the role of the context set.

3.1 Dynamic Context Memory

Motivation

In NPs, a context set must be provided for a target set, which however is not always possible for non-meta-learning tasks during inference. One simple approach to adapt NPs to these tasks is to randomly select context points from the training dataset, but its performance is suboptimal as randomly sampling a few samples may not adequately represent the entire training distribution. Wang et al. [62] proposed an alternative solution by introducing a first-in-first-out (FIFO) memory that stores the context points with the predefined memory size. Although the FIFO performs slightly better than the random sampling in practice, the performance is still limited because updating the context memory is independent of the model’s predictions. Refer to Appendix C.1 for comparisons.

Proposed Solution

To overcome this limitation of the existing context memory, we propose a simple and effective updating mechanism for the context memory in the training process, which we call Dynamic Context Memory (DCM). We partition the context memory $\{C_X^m, C_Y^m\}$ of the $m$-th modality into $K$ subsets ($K$ as the number of classes) as $\{C_X^m, C_Y^m\} = \{C_{X,k}^m, C_{Y,k}^m\}_{k=1}^{K}$ to introduce a class-balanced context memory, with each subset devoted to one class. Accordingly, $N^m = N_K^m \times K$, where $N^m$ is the number of context elements per modality (i.e., the size of $\{C_X^m, C_Y^m\}$) and $N_K^m$ is the number of class-specific context samples per modality. This setting resembles the classification setting in [13], where class-specific context representations are obtained for each class.

DCM is initialised by taking $N_K^m$ random training samples from each class of each modality and is updated every mini-batch during training by replacing the least “informative” element in the memory with a possibly more “informative” sample. We regard the element in DCM that receives the smallest attention weight (i.e., $A(T_X, C_X)$ in Equation (1)) during training as the least informative one and the target point that is most difficult to classify (i.e., high classification error) as a more informative sample that should be added to DCM to help the model learn the decision boundary. With target inputs $T_X^m$ and one-hot target labels $T_Y$ of the $N_T$ target samples in a mini-batch, this can be written as:

$$C_{X,k}^m[i^*, :] = T_X^m[j^*, :], \quad C_{Y,k}^m[i^*, :] = T_Y[j^*, :], \quad k \in \{1, \dots, K\}, \tag{2}$$

$$i^* = \arg\min_{i \in \{1, \dots, N_K^m\}} \frac{1}{N_T}\sum_{t=1}^{N_T} A(T_X^m, C_{X,k}^m)[t, i], \quad j^* = \arg\max_{j \in \{1, \dots, N_T\}} \frac{1}{K}\sum_{c=1}^{K}\left(T_Y[j, c] - \tilde{T}_Y^m[j, c]\right)^2, \tag{3}$$

where $[i, :]$ indicates the $i$-th row vector of a matrix, $[i, j]$ indicates the $i$-th row and the $j$-th column element of a matrix, and $\tilde{T}_Y^m$ is the predicted probability of the $m$-th modality input. $i^*$ selects the context memory element that receives the least average attention weight in a mini-batch during training, and $j^*$ selects the target sample with the highest classification error. To measure the error between the predictive probability and the ground truth of a target sample, one can use mean squared error (MSE) (Equation (3)) or cross-entropy loss (CE). We empirically found that the former gives better performance in our experiments. Refer to Appendix C.1 for ablation studies comparing the two and the impact of the memory size $N^m$ on its performance.

The proposed updating mechanism is very efficient as the predictive probability and the attention weights in Equation (3) are available with no additional computational cost. Also, in comparison to random sampling which requires iterative sampling during inference, the proposed approach is faster in terms of inference wall-clock time since the updated DCM can be used without any additional computation (refer to Appendix C.1 for the comparison).
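The update rule described above can be sketched for one class-specific subset of one modality as follows. This is an illustration under stated assumptions rather than the authors' implementation: the function name `dcm_update` is hypothetical, and the targets passed in are assumed to have already been filtered to the class that the memory subset is devoted to.

```python
import numpy as np

def dcm_update(mem_x, mem_y, attn, t_x, t_y, t_prob):
    """One illustrative DCM update for a single class subset of one modality.

    mem_x:  (N_K, d) stored context inputs, mem_y: (N_K, K) one-hot labels.
    attn:   (N_T, N_K) attention weights from targets to the memory slots.
    t_x:    (N_T, d) target inputs, t_y: (N_T, K) one-hot target labels.
    t_prob: (N_T, K) predicted class probabilities for this modality.
    """
    # Least informative slot: smallest attention averaged over the mini-batch.
    i_star = int(np.argmin(attn.mean(axis=0)))
    # Most informative target: highest mean squared error against the label.
    mse = ((t_y - t_prob) ** 2).mean(axis=1)
    j_star = int(np.argmax(mse))
    mem_x, mem_y = mem_x.copy(), mem_y.copy()
    mem_x[i_star], mem_y[i_star] = t_x[j_star], t_y[j_star]
    return mem_x, mem_y, i_star, j_star

mem_x = np.zeros((3, 2))
mem_y = np.tile(np.array([1.0, 0.0]), (3, 1))
attn = np.array([[0.5, 0.1, 0.4],
                 [0.6, 0.2, 0.2]])          # slot 1 is attended to the least
t_x = np.array([[1.0, 1.0], [2.0, 2.0]])
t_y = np.array([[1.0, 0.0], [1.0, 0.0]])
t_prob = np.array([[0.9, 0.1], [0.4, 0.6]])  # target 1 is misclassified

new_x, new_y, i_star, j_star = dcm_update(mem_x, mem_y, attn, t_x, t_y, t_prob)
```

Both quantities used for the selection (`attn` and `t_prob`) are by-products of the forward pass, which is why the update adds no extra model evaluation.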

3.2 Multimodal Bayesian Aggregation

Motivation

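With DCM, we obtain the encoded context representations for the $m$-th modality input as follows:

$$r^m = \mathrm{enc}_\phi^m(\mathrm{cat}[C_X^m; C_Y^m]) \in \mathbb{R}^{N^m \times d_e}, \quad s^m = \mathrm{enc}_\psi^m(\mathrm{cat}[C_X^m; C_Y^m]) \in \mathbb{R}^{N^m \times d_e} \tag{4}$$

where $\phi$ and $\psi$ are the encoders’ parameters for the $m$-th modality. Next, given the target set $T_X^m$, we compute the target-specific context representations with the attention mechanism:

$$r_*^m = A(T_X^m, C_X^m)\, r^m, \quad s_*^m = A(T_X^m, C_X^m)\, s^m \tag{5}$$

where the attention weight $A(T_X^m, C_X^m) \in \mathbb{R}^{N_T \times N^m}$ can be computed by any scoring function without loss of generality. Note that a single $i$-th target sample consists of multiple representations from $M$ modalities $\{r_{*,i}^m = r_*^m[i, :]\}_{m=1}^{M}$. It is important to aggregate these multiple modalities into one latent variable/representation $z_i$ for making a unified prediction of the label.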

Proposed Solution

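Instead of using a deterministic aggregation scheme, we propose Multimodal Bayesian Aggregation (MBA), inspired by [61]. Specifically, we view the target-specific representation $r_{*,i}^m$ as a sample from a Gaussian distribution with mean $z_i$: $p(r_{*,i}^m \mid z_i) = \mathcal{N}(r_{*,i}^m \mid z_i, \mathrm{diag}(s_{*,i}^m))$ where $s_{*,i}^m = s_*^m[i, :]$ is the $i$-th sample in $s_*^m$. Additionally, we impose an informative prior on $z_i$: $p(z_i) = \prod_{m=1}^{M} \mathcal{N}(z_i \mid u^m, \mathrm{diag}(q^m))$ with the mean context representations of $u^m$ and $q^m$, which assign uniform weight across the context set as follows:

$$u^m = \frac{1}{N^m}\sum_{i=1}^{N^m} \mathrm{enc}_\theta^m(\mathrm{cat}[x_i^{C,m}; y_i^{C,m}]) \in \mathbb{R}^{d_e}, \quad q^m = \frac{1}{N^m}\sum_{i=1}^{N^m} \mathrm{enc}_\omega^m(\mathrm{cat}[x_i^{C,m}; y_i^{C,m}]) \in \mathbb{R}^{d_e} \tag{6}$$

where $\theta$ and $\omega$ are the encoders’ parameters for the $m$-th modality. Note that the encoders in Equations (4) and (6) are different because they approximate different distribution parameters.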

Lemma 3.1 (Gaussian posterior distribution with factorised prior distribution).

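If we have $p(x_i \mid \mu) = \mathcal{N}(x_i \mid \mu, \Sigma_i)$ and $p(\mu) = \prod_{i=1}^{n} \mathcal{N}(\mu \mid \mu_{0,i}, \Sigma_{0,i})$ for $n$ i.i.d. observations of $D$ dimensional vectors, then the mean and covariance of the posterior distribution $p(\mu \mid x) = \mathcal{N}(\mu \mid \mu_n, \Sigma_n)$ are:

$$\Sigma_n = \left[\sum_{i=1}^{n}\left(\Sigma_i^{-1} + \Sigma_{0,i}^{-1}\right)\right]^{-1}, \quad \mu_n = \Sigma_n \left[\sum_{i=1}^{n}\left(\Sigma_i^{-1} x_i + \Sigma_{0,i}^{-1}\mu_{0,i}\right)\right] \tag{7}$$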

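As both the prior $p(z_i)$ and the likelihood $p(r_{*,i}^m \mid z_i)$ are Gaussian distributions, the posterior of $z_i$ is also a Gaussian distribution: $q(z_i \mid \{r_{*,i}^m\}_{m=1}^{M}) = \mathcal{N}(z_i \mid \mu_{z_i}, \mathrm{diag}(\sigma_{z_i}^2))$, whose mean and variance are obtained by using Lemma 3.1 as follows:

$$\sigma_{z_i}^2 = \left[\sum_{m=1}^{M}\left((s_{*,i}^m)^{\oslash} + (q^m)^{\oslash}\right)\right]^{\oslash}, \quad \mu_{z_i} = \sigma_{z_i}^2 \otimes \left[\sum_{m=1}^{M}\left(r_{*,i}^m \otimes (s_{*,i}^m)^{\oslash} + u^m \otimes (q^m)^{\oslash}\right)\right] \tag{8}$$

where $r_{*,i}^m$ and $s_{*,i}^m$ are the target-specific mean and variance representations of the $i$-th target sample, $u^m$ and $q^m$ are the prior mean and variance formed by the mean context representations, and $\oslash$ and $\otimes$ are element-wise inverse and element-wise product respectively (see Appendix A for proof).

We highlight that if the variance $s_{*,i}^m$ of a modality formed by the target-specific context representation is high in $\sigma_{z_i}^2$, $q^m$ formed by the mean context representations dominates the summation of the two terms. Also, if both $s_{*,i}^m$ and $q^m$ are high for a modality, the modality’s contribution to the summation over modalities is low. By doing so, we minimise performance degradation caused by uncertain modalities (see Section 5.1 for its robustness to noisy samples). Refer to Appendix C.2 for ablation studies on different multimodal aggregation methods.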
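The precision-weighted behaviour described above can be checked numerically with a short sketch of the element-wise Gaussian posterior. The function name `bayesian_aggregate` and all input values are hypothetical; the means and variances stand in for the outputs of the learned encoders.

```python
import numpy as np

def bayesian_aggregate(r_star, s_star, u, q):
    """Element-wise Gaussian posterior over the latent z of one target sample.

    r_star, s_star: (M, d) per-modality observation mean and variance
                    (target-specific context representations).
    u, q:           (M, d) per-modality prior mean and variance
                    (mean context representations).
    Returns the posterior mean and variance, each of shape (d,).
    """
    precision = (1.0 / s_star + 1.0 / q).sum(axis=0)   # sum of precisions
    var_z = 1.0 / precision
    mu_z = var_z * (r_star / s_star + u / q).sum(axis=0)
    return mu_z, var_z

# Modality 0 is confident around 1.0; modality 1 is highly uncertain around -5.0.
r_star = np.array([[1.0], [-5.0]])
s_star = np.array([[0.1], [100.0]])
u = np.array([[1.0], [-5.0]])
q = np.array([[0.1], [100.0]])

mu_z, var_z = bayesian_aggregate(r_star, s_star, u, q)
```

In this toy setting the posterior mean stays close to the confident modality, because the uncertain modality contributes almost no precision to the sums.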

3.3 Adaptive RBF Attention

[Figure 2(d): Pseudocode of MNPs.]

Motivation

The attention weight $A(T_X^m, C_X^m)$ in Equations (3) and (5) can be obtained by any attention mechanism. Dot-product attention is a simple and efficient attention mechanism that has been used in various NPs such as [28, 53, 9, 47]. However, we have observed that it is not suitable for our multimodal uncertainty estimation problem, as it assigns excessive attention to context points even when the target distribution is far from the context distribution. The right panel of Figure 2(a) shows the attention weight in Equation (1), $A(x_{OOD}, C_X)$, where $x_{OOD}$ is a single OOD target sample (grey sample) far from the context set (red and blue samples). This excessive attention weight results in an overconfident predictive probability for OOD samples (see the left panel of Figure 2(a)), which makes it hard to distinguish ID and OOD samples using the predictive uncertainty of the classifier.

Proposed Solution

To address the overconfidence issue of the dot-product attention, we propose an attention mechanism based on RBF. RBF is a stationary kernel function that depends on the relative distance between two points (i.e., $x_i - x_j$) rather than the absolute locations [65], which we define as $\kappa^m(x_i, x_j) = \exp\left(-\frac{1}{2}\left\|\frac{x_i - x_j}{l^m}\right\|_2^2\right)$ where $\|\cdot\|_2^2$ is the squared Euclidean norm, and $l^m$ is the lengthscale parameter that controls the smoothness of the distance in the $m$-th modality input space. The RBF kernel is one of the widely used kernels in GPs [64, 65], and its adaptation with DNNs has shown well-calibrated and promising OOD detection [40, 41, 58, 24]. Formally, we define the attention weight using the RBF kernel as:

$$A(T_X^m, C_X^m) = \mathrm{Sparsemax}\left(G(T_X^m, C_X^m)\right) \tag{9}$$

where the elements of $G(T_X^m, C_X^m) \in \mathbb{R}^{N_T \times N^m}$ are $[G]_{ij} = \kappa^m(x_i^{T,m}, x_j^{C,m})$, and Sparsemax [39] is an alternative activation function to Softmax. It is defined as $\mathrm{Sparsemax}(h) := \arg\min_{p \in \Delta^{n-1}} \|p - h\|^2$ where $\Delta^{n-1}$ is the $(n-1)$-dimensional simplex $\{p \in \mathbb{R}^{n} \mid \mathbf{1}^{\top} p = 1, p \geq 0\}$. Here we use Sparsemax instead of the standard Softmax because Sparsemax allows zero-probability outputs. This property is desirable because the Softmax’s output is always positive even when $\kappa^m(x_i, x_j) \to 0$ (i.e., $\|x_i - x_j\|_2^2 \to \infty$), leading to higher classification and calibration errors (see Appendix C.3 for ablation studies).
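A minimal NumPy sketch of RBF attention with Sparsemax is given below. It assumes the common RBF form with a factor of one half in the exponent and uses the sort-based projection from the Sparsemax paper [39]; the function names and the toy inputs are hypothetical.

```python
import numpy as np

def rbf_kernel(t_x, c_x, lengthscale):
    """kappa(x_i, x_j) = exp(-0.5 * ||(x_i - x_j) / lengthscale||^2) (assumed form)."""
    diff = (t_x[:, None, :] - c_x[None, :, :]) / lengthscale
    return np.exp(-0.5 * (diff ** 2).sum(axis=-1))

def sparsemax(z):
    """Row-wise Euclidean projection of z onto the probability simplex."""
    z_sorted = -np.sort(-z, axis=-1)                  # sort each row in descending order
    cumsum = np.cumsum(z_sorted, axis=-1)
    k = np.arange(1, z.shape[-1] + 1)
    support = 1.0 + k * z_sorted > cumsum             # True for the entries kept non-zero
    k_z = support.sum(axis=-1, keepdims=True)         # size of the support per row
    tau = (np.take_along_axis(cumsum, k_z - 1, axis=-1) - 1.0) / k_z
    return np.maximum(z - tau, 0.0)

def rbf_attention(t_x, c_x, lengthscale):
    return sparsemax(rbf_kernel(t_x, c_x, lengthscale))

c_x = np.array([[0.0, 0.0], [0.1, 0.0], [5.0, 5.0]])  # two nearby context points, one distant
t_x = np.array([[0.0, 0.0]])
attn = rbf_attention(t_x, c_x, lengthscale=1.0)
```

In this example the distant context point receives exactly zero weight, whereas Softmax applied to the same kernel values would still assign it a strictly positive weight.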

The lengthscale $l^m$ is an important parameter that determines whether two points are far away from each other (i.e., $\kappa^m(x_i, x_j) \to 0$) or close to each other (i.e., $\kappa^m(x_i, x_j) \to 1$). However, in practice, $l^m$ has been either considered as a non-optimisable hyperparameter or an optimisable parameter that requires a complex initialisation [24, 59, 58, 42, 65, 56]. To address this issue, we propose an adaptive learning approach of $l^m$ to form a tight bound to the context distribution by leveraging the supervised contrastive learning [27]. Specifically, we let anchor index $i \in T_{ind} \equiv \{1, \dots, N_T\}$ with negative indices $N(i) = T_{ind} \setminus \{i\}$ and positive indices $P(i) = \{p \in N(i) : y_p = y_i\}$. Given an anchor sample of the target set, the negative samples refer to all samples except for the anchor sample, while the positive samples refer to other samples that share the same label as the anchor sample. We define the multimodal supervised contrastive loss as:

$$\mathcal{L}_{CL}^{M} = \frac{1}{M}\sum_{m=1}^{M}\sum_{i=1}^{N_T} -\frac{1}{|P(i)|}\sum_{p \in P(i)} \log \frac{\exp\left(\kappa^m(x_i^m, x_p^m)/\tau\right)}{\sum_{n \in N(i)} \exp\left(\kappa^m(x_i^m, x_n^m)/\tau\right)} \tag{10}$$

where $|P(i)|$ is the cardinality of $P(i)$, and $\tau$ is the temperature scale. This loss encourages higher RBF output of two target samples from the same class and lower RBF output of two target samples from different classes by adjusting the lengthscale. In addition to $\mathcal{L}_{CL}^{M}$, an $l_2$-loss is added to form the tighter bound by penalising large lengthscale. Overall, the loss term for our adaptive RBF attention is $\mathcal{L}_{RBF} = \mathcal{L}_{CL}^{M} + \alpha \cdot \frac{1}{M}\sum_{m=1}^{M}\|l^m\|_2$ with the balancing coefficient $\alpha$.
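To make the role of the lengthscale in this loss concrete, the following sketch evaluates a supervised contrastive loss with RBF similarities for a single modality. It follows the general form of supervised contrastive learning [27]; the function name `rbf_supcon_loss`, the temperature value, the factor of one half in the kernel, and the toy data are assumptions for illustration.

```python
import numpy as np

def rbf_supcon_loss(x, labels, lengthscale, tau=0.25):
    """Supervised contrastive loss with RBF similarities for one modality."""
    diff = (x[:, None, :] - x[None, :, :]) / lengthscale
    sim = np.exp(-0.5 * (diff ** 2).sum(axis=-1)) / tau        # kappa / tau
    n = x.shape[0]
    not_self = ~np.eye(n, dtype=bool)                          # N(i): all but the anchor
    positives = (labels[:, None] == labels[None, :]) & not_self  # P(i): same label
    # Log of the denominator: sum of exp(sim) over the negatives N(i).
    log_denom = np.log((np.exp(sim) * not_self).sum(axis=1, keepdims=True))
    log_prob = sim - log_denom
    n_pos = positives.sum(axis=1)
    valid = n_pos > 0                                          # skip anchors without positives
    per_anchor = -(log_prob * positives).sum(axis=1)[valid] / n_pos[valid]
    return per_anchor.sum()

# Two well-separated classes of two samples each.
x = np.array([[0.0, 0.0], [0.1, 0.0], [3.0, 3.0], [3.1, 3.0]])
labels = np.array([0, 0, 1, 1])

loss_tight = rbf_supcon_loss(x, labels, lengthscale=1.0)
loss_loose = rbf_supcon_loss(x, labels, lengthscale=100.0)
```

With a very large lengthscale every pair looks equally similar, so the loss is high; a lengthscale matched to the class spread separates the classes and lowers the loss, which is the pressure that the adaptive scheme exploits.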

We show the difference between the RBF attention without the RBF loss term $\mathcal{L}_{RBF}$ (see Figure 2(b)) and the adaptive RBF attention with $\mathcal{L}_{RBF}$ (see Figure 2(c)). It can be seen that the decision boundary modelled by the predictive probability of the adaptive RBF attention is aligned with the data distribution significantly better than the non-adaptive one. For ablation studies with real-world datasets, see Appendix C.4.

3.4 Conditional Predictions

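We follow the standard procedures of Gaussian process classification to obtain predictions [65, 24, 42, 19] where we first compute the predictive latent distribution $p(f(T_X^M) \mid C^M, T_X^M)$ as a Gaussian distribution by marginalising $Z = \{z_i\}_{i=1}^{N_T}$:

$$p(f(T_X^M) \mid C^M, T_X^M) = \int p(f(T_X^M) \mid Z)\, q(Z \mid C^M, T_X^M)\, dZ \tag{11}$$

where $T_X^M = \{T_X^m\}_{m=1}^{M}$, $C^M = \{C_X^m, C_Y^m\}_{m=1}^{M}$, and $p(f(T_X^M) \mid Z)$ is parameterised by a decoder. Then, we obtain the predictive probability $\hat{T}_Y$ by:

$$\hat{T}_Y = \int \mathrm{Softmax}\left(f(T_X^M)\right) p(f(T_X^M) \mid C^M, T_X^M)\, df(T_X^M) \tag{12}$$

Similarly, we can obtain the unimodal predictive latent distribution $p(f(T_X^m) \mid \{C_X^m, C_Y^m\}, T_X^m)$ and the unimodal predictive probability $\tilde{T}_Y^m$ for the $m$-th modality as:

$$\tilde{T}_Y^m = \int \mathrm{Softmax}\left(f(T_X^m)\right) p(f(T_X^m) \mid \{C_X^m, C_Y^m\}, T_X^m)\, df(T_X^m) \tag{13}$$

We minimise the negative log likelihood of the aggregated prediction and the unimodal predictions by:

$$\mathcal{L}_{T_Y} = -\mathbb{E}_{T_Y}\left[\log \hat{T}_Y\right] - \frac{1}{M}\sum_{m=1}^{M}\mathbb{E}_{T_Y}\left[\log \tilde{T}_Y^m\right] \tag{14}$$

Since Equations (11)-(13) are analytically intractable, we approximate the integrals by the Monte Carlo method [44]. The overall loss for MNPs is $\mathcal{L} = \mathcal{L}_{T_Y} + \beta \cdot \mathcal{L}_{RBF}$, where $\mathcal{L}_{RBF}$ is the loss term of the adaptive RBF attention and $\beta$ is the balancing term. Refer to Figure 2(d) for the summarised steps of training MNPs.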
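The Monte Carlo approximation mentioned above can be sketched as follows for a Gaussian latent with diagonal covariance. The function name `mc_predictive`, the number of samples, and the toy means and variances are hypothetical; in the model these moments would come from the decoder.

```python
import numpy as np

def mc_predictive(mu_f, var_f, n_samples=2000, seed=0):
    """Monte Carlo estimate of E[Softmax(f)] for f ~ N(mu_f, diag(var_f)).

    mu_f, var_f: (N_T, K) mean and variance of the latent function values.
    Returns predictive probabilities of shape (N_T, K).
    """
    rng = np.random.default_rng(seed)
    eps = rng.standard_normal((n_samples,) + mu_f.shape)
    f = mu_f + np.sqrt(var_f) * eps                 # reparameterised samples
    f = f - f.max(axis=-1, keepdims=True)           # stabilise the softmax
    p = np.exp(f)
    p /= p.sum(axis=-1, keepdims=True)
    return p.mean(axis=0)                           # average over the samples

mu_f = np.array([[2.0, 0.0, 0.0],
                 [2.0, 0.0, 0.0]])
var_f = np.array([[0.01, 0.01, 0.01],               # low latent variance
                  [25.0, 25.0, 25.0]])              # high latent variance
probs = mc_predictive(mu_f, var_f)
```

Both rows share the same latent mean, but the row with the larger latent variance yields a less confident prediction for the first class, which is how latent uncertainty propagates to the predictive probability.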

4 Related Work

Neural Processes

CNP and the latent variant of CNP that incorporates a latent variable capturing global uncertainty were the first NPs introduced in the literature [13, 14]. To address the under-fitting issue caused by the mean context representation in these NPs, Kim et al. [28] proposed to leverage the attention mechanism for target-specific context representations. This approach has been shown to be effective in subsequent works [53, 29, 9, 47, 67, 22]. Many other variants, such as SNP [51, 67], CNAP [48], and MPNPs [5] were proposed for common downstream tasks like 1D regression, 2D image completion [28, 14, 15, 10, 61, 26, 20], and image classification [13, 62, 25]. However, none of these studies have investigated the generalisation of NPs to multimodal data. This study is the first to consider NPs for multimodal classification and its uncertainty estimation.

Multimodal Learning

The history of multimodal learning that aims to leverage multiple sources of input can be traced back to the early work of Canonical Correlation Analysis (CCA) [21]. CCA learns the correlation between two variables which was further improved by using feed-forward networks by Deep CCA (DCCA) [2]. With advances in various architectures of DNNs, many studies on multimodal fusion and alignment were proposed [4, 12, 55, 6]. In particular, transformer-based models for vision-language tasks [36, 52, 1] have obtained great attention. Nonetheless, most of these methods were not originally intended for uncertainty estimation, and it has been demonstrated that many of them exhibit inadequate calibration [16, 43].

Multimodal Uncertainty Estimation

Multimodal uncertainty estimation is an emerging research area. Its objective is to design robust and calibrated multimodal models. Ma et al. [37] proposed the Mixture of Normal-Inverse Gamma (MoNIG) algorithm that quantifies predictive uncertainty for multimodal regression. However, this work is limited to regression, whereas our work applies to multimodal classification. Han et al. [17] developed TMC based on the Dempster’s combination rule to combine multi-view logits. In spite of its simplicity, empirical experiments showed its limited calibration and capability in OOD detection [24]. Jung et al. [24] proposed MGP that combines predictive posterior distributions of multiple GPs by the product of experts. While MGP achieved the current SOTA performance, its non-parametric framework makes it computationally expensive. Our proposed method overcomes this limitation by generalising efficient NPs to imitate GPs.

5 Experiments

Apart from measuring the test accuracy, we assessed our method’s performance in uncertainty estimation by evaluating its calibration error, robustness to noisy samples, and capability to detect OOD samples. These are crucial aspects that a classifier not equipped to estimate uncertainty may struggle with. We evaluated MNPs on seven real-world datasets to compare the performance of MNPs against four unimodal baselines and three multimodal baselines.

To compare our method against unimodal baselines, we leveraged the early fusion (EF) method [3] that concatenates multimodal input features into a single one. The unimodal baselines are (1) MC Dropout (MCD) [11] with a dropout rate of 0.2, (2) Deep Ensemble (DE) [32] with five ensemble models, (3) SNGP’s GP layer [35], and (4) ETP [25] with a memory size of 200 and a linear projector. ETP was chosen as an NP baseline because of its original context memory, which requires minimal change to the model to be used for multimodal classification.

The multimodal baselines consist of (1) Deep Ensemble (DE) with the late fusion (LF) [3] where a classifier is trained for each modality input, and the final prediction is obtained by averaging predictions from all the modalities, (2) TMC [17], and (3) MGP [24]. For TMC and MGP, we followed the settings proposed by the original authors. In each experiment, the same feature extractors were used for all baselines. We report mean and standard deviation of results from five random seeds. The bold values are the best results for each dataset, and the underlined values are the second-best ones. See Appendix B for more detailed settings.

5.1 Robustness to Noisy Samples

Table 1: Test accuracy (%, mean ± standard deviation over five seeds; higher is better).

| Method | Handwritten | CUB | PIE | Caltech101 | Scene15 | HMDB |
|---|---|---|---|---|---|---|
| MCD | 99.25±0.00 | 92.33±1.09 | 91.32±0.62 | 92.95±0.29 | 71.75±0.25 | 71.68±0.36 |
| DE (EF) | 99.20±0.11 | 93.16±0.70 | 91.76±0.33 | 92.99±0.09 | 72.70±0.39 | 71.67±0.23 |
| SNGP | 98.85±0.22 | 89.50±0.75 | 87.06±1.23 | 91.24±0.46 | 64.68±4.03 | 67.65±1.03 |
| ETP | 98.75±0.25 | 92.33±1.99 | 91.76±0.62 | 92.08±0.33 | 72.58±1.35 | 67.43±0.95 |
| DE (LF) | 99.25±0.00 | 92.33±0.70 | 87.21±0.66 | 92.97±0.13 | 67.05±0.38 | 69.98±0.36 |
| TMC | 98.10±0.14 | 91.17±0.46 | 91.18±1.72 | 91.63±0.28 | 67.68±0.27 | 65.17±0.87 |
| MGP | 98.60±0.14 | 92.33±0.70 | 92.06±0.96 | 93.00±0.33 | 70.00±0.53 | 72.30±0.19 |
| MNPs (Ours) | 99.50±0.00 | 93.50±1.71 | 95.00±0.62 | 93.46±0.32 | 77.90±0.71 | 71.97±0.43 |

Table 2: Test ECE (mean ± standard deviation over five seeds; lower is better).

| Method | Handwritten | CUB | PIE | Caltech101 | Scene15 | HMDB |
|---|---|---|---|---|---|---|
| MCD | 0.009±0.000 | 0.069±0.017 | 0.299±0.005 | 0.017±0.003 | 0.181±0.003 | 0.388±0.004 |
| DE (EF) | 0.007±0.000 | 0.054±0.010 | 0.269±0.004 | 0.036±0.001 | 0.089±0.003 | 0.095±0.003 |
| SNGP | 0.023±0.004 | 0.200±0.010 | 0.852±0.012 | 0.442±0.004 | 0.111±0.063 | 0.227±0.010 |
| ETP | 0.020±0.002 | 0.051±0.009 | 0.287±0.007 | 0.096±0.002 | 0.045±0.008 | 0.100±0.010 |
| DE (LF) | 0.292±0.001 | 0.270±0.009 | 0.567±0.006 | 0.023±0.002 | 0.319±0.005 | 0.270±0.003 |
| TMC | 0.013±0.002 | 0.141±0.002 | 0.072±0.011 | 0.068±0.002 | 0.180±0.004 | 0.594±0.008 |
| MGP | 0.006±0.004 | 0.038±0.007 | 0.079±0.007 | 0.009±0.003 | 0.062±0.006 | 0.036±0.003 |
| MNPs (Ours) | 0.005±0.001 | 0.049±0.008 | 0.040±0.005 | 0.017±0.003 | 0.038±0.009 | 0.028±0.006 |

Table 3: Test accuracy with noisy samples (%, mean ± standard deviation over five seeds; higher is better).

| Method | Handwritten | CUB | PIE | Caltech101 | Scene15 | HMDB |
|---|---|---|---|---|---|---|
| MCD | 82.15±0.17 | 76.08±0.61 | 64.65±0.77 | 73.45±0.11 | 48.97±0.33 | 42.63±0.08 |
| DE (EF) | 82.16±0.18 | 76.94±0.82 | 65.53±0.20 | 73.99±0.19 | 49.45±0.35 | 41.92±0.06 |
| SNGP | 72.46±0.41 | 61.27±1.24 | 56.52±0.69 | 56.57±0.17 | 38.19±1.86 | 37.49±0.42 |
| ETP | 75.85±0.31 | 73.99±1.12 | 62.64±0.35 | 66.62±0.08 | 46.17±0.58 | 38.28±0.23 |
| DE (LF) | 95.63±0.08 | 76.16±0.28 | 67.69±0.35 | 81.85±0.14 | 50.13±0.27 | 43.01±0.19 |
| TMC | 82.44±0.15 | 74.19±0.69 | 62.18±0.80 | 71.77±0.22 | 42.52±0.29 | 36.61±0.30 |
| MGP | 97.66±0.12 | 85.48±0.25 | 90.97±0.19 | 92.68±0.23 | 65.74±0.56 | 67.02±0.21 |
| MNPs (Ours) | 98.58±0.10 | 88.96±1.98 | 93.80±0.49 | 92.83±0.18 | 74.14±0.35 | 64.11±0.15 |

Table 4: Test accuracy (%), ECE, and OOD detection AUC with CIFAR10-C (mean ± standard deviation over five seeds).

| Method | Test accuracy | ECE | OOD AUC (SVHN) | OOD AUC (CIFAR100) |
|---|---|---|---|---|
| MCD | 74.76±0.27 | 0.013±0.002 | 0.788±0.022 | 0.725±0.014 |
| DE (EF) | 72.95±0.13 | 0.154±0.048 | 0.769±0.008 | 0.721±0.014 |
| SNGP | 61.51±0.30 | 0.020±0.003 | 0.753±0.026 | 0.705±0.024 |
| ETP | 74.42±0.20 | 0.032±0.003 | 0.780±0.011 | 0.695±0.007 |
| DE (LF) | 75.40±0.06 | 0.095±0.001 | 0.722±0.016 | 0.693±0.006 |
| TMC | 72.42±0.05 | 0.108±0.001 | 0.681±0.004 | 0.675±0.006 |
| MGP | 73.30±0.05 | 0.018±0.001 | 0.803±0.007 | 0.748±0.007 |
| MNPs (Ours) | 74.92±0.07 | 0.011±0.001 | 0.872±0.002 | 0.786±0.005 |

Table 5: Speed-up (×) of MNPs over MGP in wall-clock time per epoch.

| Dataset | Training | Testing |
|---|---|---|
| Handwritten | 1.62 | 2.66 |
| CUB | 1.41 | 2.12 |
| PIE | 2.88 | 5.37 |
| Caltech101 | 1.56 | 3.30 |
| Scene15 | 1.89 | 2.63 |
| HMDB | 2.03 | 3.27 |
| CIFAR10-C | 1.17 | 2.16 |

Experimental Settings

In this experiment, we evaluated the classification performance of MNPs and the robustness to noisy samples with six multimodal datasets [24, 17]. Following [24] and [17], we normalised the datasets and used a train-test split of 0.8:0.2. To test the robustness to noise, we added zero-mean Gaussian noise with different magnitudes of standard deviation during inference (10 evenly spaced values on a log-scale) to half of the modalities. For each noise level, all possible combinations of selecting half of the modalities (i.e., $\binom{M}{M/2}$) were evaluated and averaged. We report accuracy and expected calibration error (ECE) for each experiment. Please refer to Appendix B for details of the datasets and metrics.
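The noise protocol and the calibration metric can be sketched as below. This is a hedged illustration: the function names are hypothetical, the bounds of the log-scale noise range are left as arguments because they are not given in this section, and the ECE uses a common equal-width binning with 10 bins, which may differ from the exact definition in Appendix B.

```python
from itertools import combinations
import numpy as np

def half_modality_subsets(n_modalities):
    """All ways of choosing half of the modalities to corrupt."""
    return list(combinations(range(n_modalities), n_modalities // 2))

def noise_levels(log10_min, log10_max, n_levels=10):
    """Evenly spaced standard deviations on a log scale (bounds are assumptions)."""
    return np.logspace(log10_min, log10_max, n_levels)

def add_noise(features, subset, std, rng):
    """Add zero-mean Gaussian noise to the modalities listed in `subset`."""
    return [x + rng.normal(0.0, std, size=x.shape) if m in subset else x
            for m, x in enumerate(features)]

def expected_calibration_error(probs, labels, n_bins=10):
    """ECE with equal-width confidence bins."""
    conf = probs.max(axis=1)
    correct = (probs.argmax(axis=1) == labels).astype(float)
    edges = np.linspace(0.0, 1.0, n_bins + 1)
    ece = 0.0
    for lo, hi in zip(edges[:-1], edges[1:]):
        in_bin = (conf > lo) & (conf <= hi)
        if in_bin.any():
            # Bin weight times the gap between accuracy and mean confidence.
            ece += in_bin.mean() * abs(correct[in_bin].mean() - conf[in_bin].mean())
    return ece

rng = np.random.default_rng(0)
features = [np.zeros((4, 3)) for _ in range(6)]       # six toy modalities
subsets = half_modality_subsets(len(features))
noisy = add_noise(features, subsets[0], std=1.0, rng=rng)

probs = np.array([[0.9, 0.1], [0.9, 0.1], [0.6, 0.4], [0.6, 0.4]])
labels = np.array([0, 0, 0, 1])
ece = expected_calibration_error(probs, labels)
```

For a dataset with six modalities there are 20 such subsets per noise level, and the reported robustness score averages over all of them.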

Results

We provide the test results without noisy samples in Tables 1 and 2 and the results with noisy samples in Table 3. In terms of accuracy, MNPs outperform all the baselines in 5 out of 6 datasets. At the same time, MNPs provide the most calibrated predictions in 4 out of 6 datasets, preserving the non-parametric GPs’ reliability that a parametric model struggles to achieve. This shows that MNPs bring together the best of non-parametric models and parametric models. Also, MNPs provide the most robust predictions to noisy samples in 5 out of 6 datasets, which is achieved by the MBA mechanism. Both unimodal and multimodal baselines except MGP show limited robustness with a large performance degradation.

In addition to their superior performance, MNPs, as shown in Table 5, are also faster than the SOTA multimodal uncertainty estimator MGP in terms of wall-clock time per epoch (up to 5.37× faster) measured in an identical environment including batch size, GPU, and code libraries (see Appendix B for computational complexity of the two models). This highly efficient framework was made possible by DCM, which stores a small number of informative context points. It also highlights the advantage of using efficient DNNs to imitate GPs, which a non-parametric model like MGP struggles to achieve.

5.2 OOD Detection

Experimental Settings

Following the experimental settings of [24], we trained the models with three different corruption types of CIFAR10-C [18] as a multimodal dataset and evaluated the OOD detection performance using two different test datasets. The first test dataset comprised half CIFAR10-C and half SVHN [46] samples, while the second test dataset comprised half CIFAR10-C and half CIFAR100 [31] samples. We used the Inception v3 [54] pretrained with ImageNet as the backbone of all the baselines. The area under the receiver operating characteristic (AUC) is used as a metric to classify the predictive uncertainty into ID (class 0) and OOD (class 1).
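The AUC-based evaluation can be sketched as follows. The uncertainty score used here (predictive entropy) is an assumption, since this section does not state which score is thresholded, and the pairwise AUC computation is a simple stand-in for a library routine; all names and values are hypothetical.

```python
import numpy as np

def predictive_entropy(probs):
    """Entropy of the predictive distribution, used here as the uncertainty score."""
    return -(probs * np.log(probs + 1e-12)).sum(axis=1)

def auroc(scores, is_ood):
    """AUC as the probability that an OOD sample scores higher than an ID sample.

    Ties count as one half. This compares all OOD/ID pairs, which is fine for a sketch.
    """
    pos = scores[is_ood == 1][:, None]
    neg = scores[is_ood == 0][None, :]
    return float((pos > neg).mean() + 0.5 * (pos == neg).mean())

# Toy predictions: confident on ID samples, near-uniform on OOD samples.
probs_id = np.array([[0.95, 0.05], [0.90, 0.10]])
probs_ood = np.array([[0.50, 0.50], [0.60, 0.40]])

scores = predictive_entropy(np.vstack([probs_id, probs_ood]))
is_ood = np.array([0, 0, 1, 1])          # ID is class 0, OOD is class 1
auc = auroc(scores, is_ood)
```

An AUC of 1.0 means every OOD sample is more uncertain than every ID sample, while 0.5 corresponds to an uninformative uncertainty score.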

Results

Table 4 shows test accuracy and ECE with CIFAR10-C and OOD AUC against SVHN and CIFAR100. MNPs outperform all the baselines in terms of ECE and OOD AUC. A large difference in OOD AUC is observed, which shows that the proposed adaptive RBF attention identifies OOD samples well. Also, we highlight that MNPs outperform the current SOTA MGP in every metric. A marginal difference in test accuracy between DE (LF) and MNPs is observed, but MNPs achieve much lower ECE (approximately 8.6 times lower) with higher OOD AUC than DE (LF).

6 Conclusion

In this study, we introduced a new multimodal uncertainty estimation method by generalising NPs for multimodal uncertainty estimation, namely Multimodal Neural Processes. Our approach leverages a simple and effective dynamic context memory, a Bayesian method of aggregating multimodal representations, and an adaptive RBF attention mechanism in a holistic and principled manner. We evaluated the proposed method on seven real-world datasets and compared its performance against seven unimodal and multimodal baselines. The results demonstrate that our method outperforms all the baselines and achieves SOTA performance in multimodal uncertainty estimation. A limitation of this work is that, despite the effectiveness of the updating mechanism of DCM in practice, it is not theoretically guaranteed to obtain the optimal context memory. Nonetheless, our method effectively achieves both accuracy and reliability in an efficient manner. We leave developing a better updating mechanism for our future work. The broader impacts of this work are discussed in Appendix D.

References

- Akbari et al. [2021] H. Akbari, L. Yuan, R. Qian, W.-H. Chuang, S.-F. Chang, Y. Cui, and B. Gong. Vatt: Transformers for multimodal self-supervised learning from raw video, audio and text. In M. Ranzato, A. Beygelzimer, Y. Dauphin, P. Liang, and J. W. Vaughan, editors, Advances in Neural Information Processing Systems, volume 34, pages 24206–24221. Curran Associates, Inc., 2021.

- Andrew et al. [2013] G. Andrew, R. Arora, J. Bilmes, and K. Livescu. Deep canonical correlation analysis. In S. Dasgupta and D. McAllester, editors, Proceedings of the 30th International Conference on Machine Learning, volume 28 of Proceedings of Machine Learning Research, pages 1247–1255, Atlanta, Georgia, USA, 17–19 Jun 2013. PMLR.

- Baltrušaitis et al. [2019] T. Baltrušaitis, C. Ahuja, and L.-P. Morency. Multimodal machine learning: A survey and taxonomy. IEEE Transactions on Pattern Analysis and Machine Intelligence, 41(2):423–443, 2019. doi: 10.1109/TPAMI.2018.2798607.

- Bhatt et al. [2019] G. Bhatt, P. Jha, and B. Raman. Representation learning using step-based deep multi-modal autoencoders. Pattern Recognition, 95:12–23, 2019. ISSN 0031-3203. doi: https://doi.org/10.1016/j.patcog.2019.05.032.

- Cangea et al. [2022] C. Cangea, B. Day, A. R. Jamasb, and P. Lio. Message passing neural processes. In ICLR 2022 Workshop on Geometrical and Topological Representation Learning, 2022.

- Dao et al. [2023] S. D. Dao, D. Huynh, H. Zhao, D. Phung, and J. Cai. Open-vocabulary multi-label image classification with pretrained vision-language model. In 2023 IEEE International Conference on Multimedia and Expo (ICME), pages 2135–2140. IEEE, 2023.

- Dempster [1967] A. Dempster. Upper and lower probabilities induced by a multivalued mapping. Annals of Mathematical Statistics, 38:325–339, 1967.

- Dipnall et al. [2021] J. F. Dipnall, R. Page, L. Du, M. Costa, R. A. Lyons, P. Cameron, R. de Steiger, R. Hau, A. Bucknill, A. Oppy, E. Edwards, D. Varma, M. C. Jung, and B. J. Gabbe. Predicting fracture outcomes from clinical registry data using artificial intelligence supplemented models for evidence-informed treatment (praise) study protocol. PLOS ONE, 16(9):1–12, 09 2021. doi: 10.1371/journal.pone.0257361.

- Feng et al. [2023] L. Feng, H. Hajimirsadeghi, Y. Bengio, and M. O. Ahmed. Latent bottlenecked attentive neural processes. In The Eleventh International Conference on Learning Representations, 2023.

- Foong et al. [2020] A. Foong, W. Bruinsma, J. Gordon, Y. Dubois, J. Requeima, and R. Turner. Meta-learning stationary stochastic process prediction with convolutional neural processes. In H. Larochelle, M. Ranzato, R. Hadsell, M. Balcan, and H. Lin, editors, Advances in Neural Information Processing Systems, volume 33, pages 8284–8295. Curran Associates, Inc., 2020.

- Gal and Ghahramani [2015] Y. Gal and Z. Ghahramani. Bayesian convolutional neural networks with bernoulli approximate variational inference. arXiv preprint arXiv:1506.02158, 2015.

- Gao et al. [2019] M. Gao, J. Jiang, G. Zou, V. John, and Z. Liu. Rgb-d-based object recognition using multimodal convolutional neural networks: A survey. IEEE Access, 7:43110–43136, 2019. doi: 10.1109/ACCESS.2019.2907071.

- Garnelo et al. [2018a] M. Garnelo, D. Rosenbaum, C. Maddison, T. Ramalho, D. Saxton, M. Shanahan, Y. W. Teh, D. Rezende, and S. M. A. Eslami. Conditional neural processes. In J. Dy and A. Krause, editors, Proceedings of the 35th International Conference on Machine Learning, volume 80 of Proceedings of Machine Learning Research, pages 1704–1713. PMLR, 10–15 Jul 2018a.

- Garnelo et al. [2018b] M. Garnelo, J. Schwarz, D. Rosenbaum, F. Viola, D. J. Rezende, S. Eslami, and Y. W. Teh. Neural processes. arXiv preprint arXiv:1807.01622, 2018b.

- Gordon et al. [2020] J. Gordon, W. P. Bruinsma, A. Y. K. Foong, J. Requeima, Y. Dubois, and R. E. Turner. Convolutional conditional neural processes. In International Conference on Learning Representations, 2020.

- Guo et al. [2017] C. Guo, G. Pleiss, Y. Sun, and K. Q. Weinberger. On calibration of modern neural networks. In D. Precup and Y. W. Teh, editors, Proceedings of the 34th International Conference on Machine Learning, volume 70 of Proceedings of Machine Learning Research, pages 1321–1330. PMLR, 06–11 Aug 2017.

- Han et al. [2021] Z. Han, C. Zhang, H. Fu, and J. T. Zhou. Trusted multi-view classification. In International Conference on Learning Representations, 2021.

- Hendrycks and Dietterich [2019] D. Hendrycks and T. Dietterich. Benchmarking neural network robustness to common corruptions and perturbations. Proceedings of the International Conference on Learning Representations, 2019.

- Hensman et al. [2015] J. Hensman, A. Matthews, and Z. Ghahramani. Scalable Variational Gaussian Process Classification. In G. Lebanon and S. V. N. Vishwanathan, editors, Proceedings of the Eighteenth International Conference on Artificial Intelligence and Statistics, volume 38 of Proceedings of Machine Learning Research, pages 351–360, San Diego, California, USA, 09–12 May 2015. PMLR.

- Holderrieth et al. [2021] P. Holderrieth, M. J. Hutchinson, and Y. W. Teh. Equivariant learning of stochastic fields: Gaussian processes and steerable conditional neural processes. In M. Meila and T. Zhang, editors, Proceedings of the 38th International Conference on Machine Learning, volume 139 of Proceedings of Machine Learning Research, pages 4297–4307. PMLR, 18–24 Jul 2021.

- Hotelling [1936] H. Hotelling. Relations between two sets of variates. Biometrika, 28(3/4):321–377, 1936. ISSN 00063444.

- Jha et al. [2023] S. Jha, D. Gong, H. Zhao, and L. Yao. NPCL: Neural processes for uncertainty-aware continual learning. In Advances in Neural Information Processing Systems, 2023.

- Jøsang [2016] A. Jøsang. Belief fusion. In Subjective Logic, volume 3, chapter 12, pages 207–236. Springer, 2016.

- Jung et al. [2022] M. C. Jung, H. Zhao, J. Dipnall, B. Gabbe, and L. Du. Uncertainty estimation for multi-view data: The power of seeing the whole picture. In A. H. Oh, A. Agarwal, D. Belgrave, and K. Cho, editors, Advances in Neural Information Processing Systems, 2022.

- Kandemir et al. [2022] M. Kandemir, A. Akgül, M. Haussmann, and G. Unal. Evidential turing processes. In International Conference on Learning Representations, 2022.

- Kawano et al. [2021] M. Kawano, W. Kumagai, A. Sannai, Y. Iwasawa, and Y. Matsuo. Group equivariant conditional neural processes. In International Conference on Learning Representations, 2021.

- Khosla et al. [2020] P. Khosla, P. Teterwak, C. Wang, A. Sarna, Y. Tian, P. Isola, A. Maschinot, C. Liu, and D. Krishnan. Supervised contrastive learning. In H. Larochelle, M. Ranzato, R. Hadsell, M. Balcan, and H. Lin, editors, Advances in Neural Information Processing Systems, volume 33, pages 18661–18673. Curran Associates, Inc., 2020.

- Kim et al. [2019] H. Kim, A. Mnih, J. Schwarz, M. Garnelo, A. Eslami, D. Rosenbaum, O. Vinyals, and Y. W. Teh. Attentive neural processes. In International Conference on Learning Representations, 2019.

- Kim et al. [2022] M. Kim, K. R. Go, and S.-Y. Yun. Neural processes with stochastic attention: Paying more attention to the context dataset. In International Conference on Learning Representations, 2022.

- Kingma and Ba [2015] D. P. Kingma and J. Ba. Adam: A method for stochastic optimization. In Y. Bengio and Y. LeCun, editors, 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, May 7-9, 2015, Conference Track Proceedings, 2015.

- Krizhevsky and Hinton [2009] A. Krizhevsky and G. Hinton. Learning multiple layers of features from tiny images. Master’s thesis, Department of Computer Science, University of Toronto, 2009.

- Lakshminarayanan et al. [2017] B. Lakshminarayanan, A. Pritzel, and C. Blundell. Simple and scalable predictive uncertainty estimation using deep ensembles. In Proceedings of the 31st International Conference on Neural Information Processing Systems, NIPS’17, pages 6405–6416, Red Hook, NY, USA, 2017. Curran Associates Inc. ISBN 9781510860964.

- Lee et al. [2020a] B.-J. Lee, S. Hong, and K.-E. Kim. Residual neural processes. Proceedings of the AAAI Conference on Artificial Intelligence, 34(04):4545–4552, Apr. 2020a. doi: 10.1609/aaai.v34i04.5883.

- Lee et al. [2020b] J. Lee, M. Humt, J. Feng, and R. Triebel. Estimating model uncertainty of neural networks in sparse information form. In H. Daumé III and A. Singh, editors, Proceedings of the 37th International Conference on Machine Learning, volume 119 of Proceedings of Machine Learning Research, pages 5702–5713. PMLR, 13–18 Jul 2020b.

- Liu et al. [2020] J. Liu, Z. Lin, S. Padhy, D. Tran, T. Bedrax Weiss, and B. Lakshminarayanan. Simple and principled uncertainty estimation with deterministic deep learning via distance awareness. In H. Larochelle, M. Ranzato, R. Hadsell, M. Balcan, and H. Lin, editors, Advances in Neural Information Processing Systems, volume 33, pages 7498–7512. Curran Associates, Inc., 2020.

- Lu et al. [2019] J. Lu, D. Batra, D. Parikh, and S. Lee. Vilbert: Pretraining task-agnostic visiolinguistic representations for vision-and-language tasks. In H. Wallach, H. Larochelle, A. Beygelzimer, F. d'Alché-Buc, E. Fox, and R. Garnett, editors, Advances in Neural Information Processing Systems, volume 32. Curran Associates, Inc., 2019.

- Ma et al. [2021] H. Ma, Z. Han, C. Zhang, H. Fu, J. T. Zhou, and Q. Hu. Trustworthy multimodal regression with mixture of normal-inverse gamma distributions. In M. Ranzato, A. Beygelzimer, Y. Dauphin, P. Liang, and J. W. Vaughan, editors, Advances in Neural Information Processing Systems, volume 34, pages 6881–6893. Curran Associates, Inc., 2021.

- Malinin and Gales [2018] A. Malinin and M. Gales. Predictive uncertainty estimation via prior networks. In Proceedings of the 32nd International Conference on Neural Information Processing Systems, NIPS’18, pages 7047–7058, Red Hook, NY, USA, 2018. Curran Associates Inc.

- Martins and Astudillo [2016] A. Martins and R. Astudillo. From softmax to sparsemax: A sparse model of attention and multi-label classification. In M. F. Balcan and K. Q. Weinberger, editors, Proceedings of The 33rd International Conference on Machine Learning, volume 48 of Proceedings of Machine Learning Research, pages 1614–1623, New York, New York, USA, 20–22 Jun 2016. PMLR.

- Meronen et al. [2020] L. Meronen, C. Irwanto, and A. Solin. Stationary activations for uncertainty calibration in deep learning. In H. Larochelle, M. Ranzato, R. Hadsell, M. Balcan, and H. Lin, editors, Advances in Neural Information Processing Systems, volume 33, pages 2338–2350. Curran Associates, Inc., 2020.

- Meronen et al. [2021] L. Meronen, M. Trapp, and A. Solin. Periodic activation functions induce stationarity. In M. Ranzato, A. Beygelzimer, Y. Dauphin, P. Liang, and J. W. Vaughan, editors, Advances in Neural Information Processing Systems, volume 34, pages 1673–1685. Curran Associates, Inc., 2021.

- Milios et al. [2018] D. Milios, R. Camoriano, P. Michiardi, L. Rosasco, and M. Filippone. Dirichlet-based gaussian processes for large-scale calibrated classification. In S. Bengio, H. Wallach, H. Larochelle, K. Grauman, N. Cesa-Bianchi, and R. Garnett, editors, Advances in Neural Information Processing Systems, volume 31. Curran Associates, Inc., 2018.

- Minderer et al. [2021] M. Minderer, J. Djolonga, R. Romijnders, F. A. Hubis, X. Zhai, N. Houlsby, D. Tran, and M. Lucic. Revisiting the calibration of modern neural networks. In A. Beygelzimer, Y. Dauphin, P. Liang, and J. W. Vaughan, editors, Advances in Neural Information Processing Systems, 2021.

- Murphy [2022] K. P. Murphy. Probabilistic machine learning: an introduction. MIT press, 2022.

- Nair et al. [2020] T. Nair, D. Precup, D. L. Arnold, and T. Arbel. Exploring uncertainty measures in deep networks for multiple sclerosis lesion detection and segmentation. Medical Image Analysis, 59:101557, 2020. ISSN 1361-8415. doi: https://doi.org/10.1016/j.media.2019.101557.

- Netzer et al. [2011] Y. Netzer, T. Wang, A. Coates, A. Bissacco, B. Wu, and A. Y. Ng. Reading digits in natural images with unsupervised feature learning. In NIPS Workshop on Deep Learning and Unsupervised Feature Learning, 2011.

- Nguyen and Grover [2022] T. Nguyen and A. Grover. Transformer neural processes: Uncertainty-aware meta learning via sequence modeling. In K. Chaudhuri, S. Jegelka, L. Song, C. Szepesvari, G. Niu, and S. Sabato, editors, Proceedings of the 39th International Conference on Machine Learning, volume 162 of Proceedings of Machine Learning Research, pages 16569–16594. PMLR, 17–23 Jul 2022.

- Requeima et al. [2019] J. Requeima, J. Gordon, J. Bronskill, S. Nowozin, and R. E. Turner. Fast and flexible multi-task classification using conditional neural adaptive processes. In H. Wallach, H. Larochelle, A. Beygelzimer, F. d'Alché-Buc, E. Fox, and R. Garnett, editors, Advances in Neural Information Processing Systems, volume 32. Curran Associates, Inc., 2019.

- Roy et al. [2019] A. G. Roy, S. Conjeti, N. Navab, and C. Wachinger. Bayesian quicknat: Model uncertainty in deep whole-brain segmentation for structure-wise quality control. NeuroImage, 195:11–22, 2019. ISSN 1053-8119. doi: https://doi.org/10.1016/j.neuroimage.2019.03.042.

- Sensoy et al. [2018] M. Sensoy, L. Kaplan, and M. Kandemir. Evidential deep learning to quantify classification uncertainty. In S. Bengio, H. Wallach, H. Larochelle, K. Grauman, N. Cesa-Bianchi, and R. Garnett, editors, Advances in Neural Information Processing Systems, volume 31. Curran Associates, Inc., 2018.

- Singh et al. [2019] G. Singh, J. Yoon, Y. Son, and S. Ahn. Sequential neural processes. In H. Wallach, H. Larochelle, A. Beygelzimer, F. d'Alché-Buc, E. Fox, and R. Garnett, editors, Advances in Neural Information Processing Systems, volume 32. Curran Associates, Inc., 2019.

- Sun et al. [2019] C. Sun, A. Myers, C. Vondrick, K. Murphy, and C. Schmid. Videobert: A joint model for video and language representation learning. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), October 2019.

- Suresh and Srinivasan [2019] A. Suresh and S. Srinivasan. Improved attentive neural processes. In Fourth Workshop on Bayesian Deep Learning at the Conference on Neural Information Processing Systems, volume 14, page 17, 2019.

- Szegedy et al. [2016] C. Szegedy, V. Vanhoucke, S. Ioffe, J. Shlens, and Z. Wojna. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2016.

- Toriya et al. [2019] H. Toriya, A. Dewan, and I. Kitahara. Sar2opt: Image alignment between multi-modal images using generative adversarial networks. In IGARSS 2019 - 2019 IEEE International Geoscience and Remote Sensing Symposium, pages 923–926, 2019. doi: 10.1109/IGARSS.2019.8898605.

- Ulapane et al. [2020] N. Ulapane, K. Thiyagarajan, and S. Kodagoda. Hyper-parameter initialization for squared exponential kernel-based gaussian process regression. In 2020 15th IEEE Conference on Industrial Electronics and Applications (ICIEA), pages 1154–1159, 2020. doi: 10.1109/ICIEA48937.2020.9248120.

- Valdenegro-Toro [2019] M. Valdenegro-Toro. Deep sub-ensembles for fast uncertainty estimation in image classification. arXiv preprint arXiv:1910.08168, 2019.

- Van Amersfoort et al. [2020] J. Van Amersfoort, L. Smith, Y. W. Teh, and Y. Gal. Uncertainty estimation using a single deep deterministic neural network. In H. Daumé III and A. Singh, editors, Proceedings of the 37th International Conference on Machine Learning, volume 119 of Proceedings of Machine Learning Research, pages 9690–9700. PMLR, 13–18 Jul 2020.

- van Amersfoort et al. [2021] J. van Amersfoort, L. Smith, A. Jesson, O. Key, and Y. Gal. On feature collapse and deep kernel learning for single forward pass uncertainty. arXiv preprint arXiv:2102.11409, 2021.

- Vaswani et al. [2017] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, Ł. Kaiser, and I. Polosukhin. Attention is all you need. In I. Guyon, U. V. Luxburg, S. Bengio, H. Wallach, R. Fergus, S. Vishwanathan, and R. Garnett, editors, Advances in Neural Information Processing Systems, volume 30. Curran Associates, Inc., 2017.

- Volpp et al. [2021] M. Volpp, F. Flürenbrock, L. Grossberger, C. Daniel, and G. Neumann. Bayesian context aggregation for neural processes. In International Conference on Learning Representations, 2021.

- Wang et al. [2022] J. Wang, T. Lukasiewicz, D. Massiceti, X. Hu, V. Pavlovic, and A. Neophytou. NP-match: When neural processes meet semi-supervised learning. In K. Chaudhuri, S. Jegelka, L. Song, C. Szepesvari, G. Niu, and S. Sabato, editors, Proceedings of the 39th International Conference on Machine Learning, volume 162 of Proceedings of Machine Learning Research, pages 22919–22934. PMLR, 17–23 Jul 2022.

- Wen et al. [2020] Y. Wen, D. Tran, and J. Ba. Batchensemble: an alternative approach to efficient ensemble and lifelong learning. In International Conference on Learning Representations, 2020.

- Williams [1996] C. Williams. Computing with infinite networks. In M. Mozer, M. Jordan, and T. Petsche, editors, Advances in Neural Information Processing Systems, volume 9. MIT Press, 1996.

- Williams and Rasmussen [2006] C. K. Williams and C. E. Rasmussen. Gaussian Processes for Machine Learning. The MIT Press, 2006.

- Xu et al. [2015] B. Xu, N. Wang, T. Chen, and M. Li. Empirical evaluation of rectified activations in convolutional network. arXiv preprint arXiv:1505.00853, 2015.

- Yoon et al. [2020] J. Yoon, G. Singh, and S. Ahn. Robustifying sequential neural processes. In H. Daumé III and A. Singh, editors, Proceedings of the 37th International Conference on Machine Learning, volume 119 of Proceedings of Machine Learning Research, pages 10861–10870. PMLR, 13–18 Jul 2020.

Appendix A Lemma and Proof

For completeness of the proof, we restate Lemma 3.1 here.

Lemma A.1 (Gaussian posterior distribution with factorised prior distribution).

If we have and for i.i.d. observations of dimensional vectors, then the mean and covariance of posterior distribution are:

| (15) |
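For orientation, the standard conjugate result for a Gaussian likelihood with a Gaussian prior on the mean can be written as below. The notation ($\mu_0$, $\Sigma_0$, $\Sigma$, $N$, $D$, $\bar{x}$) is assumed here for illustration and may differ from the symbols used in Lemma 3.1 and Equation (15).

```latex
% Assumed notation: N i.i.d. observations x_1, ..., x_N of D-dimensional vectors
p(x_i \mid \mu) = \mathcal{N}(x_i \mid \mu, \Sigma), \qquad
p(\mu) = \mathcal{N}(\mu \mid \mu_0, \Sigma_0)

% Posterior p(\mu \mid x_1, ..., x_N) = \mathcal{N}(\mu \mid \mu_N, \Sigma_N)
\Sigma_N = \left( \Sigma_0^{-1} + N \Sigma^{-1} \right)^{-1}, \qquad
\mu_N = \Sigma_N \left( \Sigma_0^{-1} \mu_0 + N \Sigma^{-1} \bar{x} \right), \qquad
\bar{x} = \frac{1}{N} \sum_{i=1}^{N} x_i
```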

Proof.

If we use Lemma A.1 with diagonal covariance matrices for and , we can obtain the posterior distribution of as follows:

| (22) |

where is the element-wise inversion, and is the element-wise product.
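With diagonal covariances, the Gaussian posterior reduces to element-wise inversions and products, so it can be computed one dimension at a time. The following is a minimal sketch of that special case, assuming a single observation variance shared by all observations; the function and argument names are hypothetical and are not taken from the paper.

```python
def diagonal_gaussian_posterior(prior_mean, prior_var, observations, obs_var):
    """Posterior over a Gaussian mean when all covariances are diagonal.

    prior_mean, prior_var, obs_var: lists of length D (diagonal entries).
    observations: list of N observations, each a list of length D.
    Assumption: every observation shares the same diagonal variance obs_var.
    """
    n = len(observations)
    post_mean, post_var = [], []
    for d in range(len(prior_mean)):
        x_bar = sum(x[d] for x in observations) / n
        # Precisions add: element-wise inversion of the diagonal entries.
        precision = 1.0 / prior_var[d] + n / obs_var[d]
        var = 1.0 / precision
        # Precision-weighted combination of prior mean and sample mean.
        mean = var * (prior_mean[d] / prior_var[d] + n * x_bar / obs_var[d])
        post_mean.append(mean)
        post_var.append(var)
    return post_mean, post_var


mean, var = diagonal_gaussian_posterior(
    prior_mean=[0.0], prior_var=[1.0], observations=[[2.0], [4.0]], obs_var=[1.0]
)
```

In this toy call the posterior precision is 1 + 2 = 3, so the posterior variance is 1/3 and the posterior mean is pulled from the sample mean 3 towards the prior mean 0.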

Appendix B Experimental Details

In this section, we outline additional details of the experimental settings including the datasets (Appendix B.1), hyperparameters of the models used (Appendix B.2), metrics (Appendix B.3), and a brief analysis of the computational complexity of MGP and MNPs (Appendix B.4). For all the experiments, we used the Adam optimiser [30] with a batch size of 200 and the TensorFlow framework. All the experiments were conducted on a single NVIDIA GeForce RTX 3090 GPU.

B.1 Details of Datasets

Synthetic Dataset

Figure 2 shows the predictive probability and the attention weight of different attention mechanisms. Here, we describe the dataset and the settings used for the demonstrations.

We generated 1,000 synthetic training samples (i.e., ) for binary classification by using Scikit-learn’s moons dataset (https://scikit-learn.org/stable/modules/generated/sklearn.datasets.make_moons.html) with zero-mean Gaussian noise () added. The test samples were generated as a mesh-grid of 10,000 points (i.e., grid with ). The number of points in the context memory was set to 100. In this demonstration, we simplified the problem by setting which is equivalent to the unimodal setting and illustrated the difference in attention mechanisms.
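The data layout described above can be sketched with the standard library alone. This is a hand-rolled stand-in for a two-moons generator rather than a call to Scikit-learn, and the noise standard deviation, the grid bounds, the 100 by 100 grid shape and the random seeds are all placeholder assumptions, since the corresponding values did not survive in the text.

```python
import math
import random


def two_moons(n_samples, noise_std, seed=0):
    """Two interleaving half circles with zero-mean Gaussian noise (stand-in generator)."""
    rng = random.Random(seed)
    n_outer = n_samples // 2
    n_inner = n_samples - n_outer
    points, labels = [], []
    for i in range(n_outer):
        t = math.pi * i / max(n_outer - 1, 1)
        points.append((math.cos(t), math.sin(t)))
        labels.append(0)
    for i in range(n_inner):
        t = math.pi * i / max(n_inner - 1, 1)
        points.append((1.0 - math.cos(t), 0.5 - math.sin(t)))
        labels.append(1)
    noisy = [(x + rng.gauss(0.0, noise_std), y + rng.gauss(0.0, noise_std))
             for x, y in points]
    return noisy, labels


def mesh_grid(low, high, steps):
    """All (x, y) pairs on a regular steps-by-steps grid over [low, high]^2."""
    axis = [low + (high - low) * i / (steps - 1) for i in range(steps)]
    return [(x, y) for y in axis for x in axis]


train_x, train_y = two_moons(1000, noise_std=0.15)   # noise level: placeholder
test_x = mesh_grid(-2.0, 3.0, 100)                   # bounds and shape: placeholders
# Context memory of 100 points, initialised here by random selection (assumption).
context_idx = random.Random(1).sample(range(len(train_x)), 100)
```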

Robustness to Noisy Samples Dataset

In Section 5.1, we evaluated the models’ robustness to noisy samples with the six multimodal datasets. The details of each dataset are outlined in Table 6. These datasets are provided as pre-extracted features, and the feature extraction method for each can be found in [17].

Table 6: Details of the six multimodal datasets.

| Dataset | Handwritten | CUB | PIE | Caltech101 | Scene15 | HMDB |
|---|---|---|---|---|---|---|
| # of modalities | 6 | 2 | 3 | 2 | 3 | 2 |
| Types of modalities | Images | Image & Text | Images | Images | Images | Images |
| # of samples | 2,000 | 11,788 | 680 | 8,677 | 4,485 | 6,718 |
| # of classes | 10 | 10 | 68 | 101 | 15 | 51 |

OOD Detection Dataset

B.2 Details of Models

In our main experiments, four unimodal baselines with the early fusion (EF) method [3] (MC Dropout, Deep Ensemble (EF), SNGP, and ETP) and three multimodal baselines with the late fusion (LF) method [3] (Deep Ensemble (LF), TMC, and MGP) were used. In this section, we describe the details of the feature extractors and each baseline.

Feature Extractors

We used the same feature extractor for all the methods to ensure fair comparisons of the models. For the synthetic dataset, the 2D input points were projected to a high-dimensional space () with a feature extractor that has 6 residual fully connected (FC) layers with the ReLU activation. For the OOD detection experiment, the Inception v3 [54] pretrained with ImageNet was used as the feature extractor. Note that the robustness to noisy samples experiment does not require a separate feature extractor as the dataset is already in a feature space.

MC Dropout

Monte Carlo (MC) Dropout [11] is a well-known uncertainty estimation method that leverages existing dropout layers of DNNs to approximate Bayesian inference. In our experiments, the dropout rate was set to 0.2 with 100 dropout samples used to make predictions in the inference stage. The predictive uncertainty was quantified based on the original paper [11].
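As an illustration of the inference procedure, the sketch below averages 100 stochastic forward passes with a dropout rate of 0.2. The toy linear classifier, the placement of dropout on the input features, and the use of predictive entropy as the uncertainty score are assumptions made for this example and are not necessarily the exact configuration used in the experiments.

```python
import math
import random


def softmax(logits):
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def dropout_forward(features, weights, rate, rng):
    """One stochastic pass: zero each feature with probability `rate` (inverted dropout)."""
    keep = 1.0 - rate
    masked = [f / keep if rng.random() < keep else 0.0 for f in features]
    logits = [sum(w * f for w, f in zip(row, masked)) for row in weights]
    return softmax(logits)


def mc_dropout_predict(features, weights, rate=0.2, n_samples=100, seed=0):
    """Average class probabilities over n_samples dropout passes."""
    rng = random.Random(seed)
    passes = [dropout_forward(features, weights, rate, rng) for _ in range(n_samples)]
    n_classes = len(weights)
    mean_probs = [sum(p[k] for p in passes) / n_samples for k in range(n_classes)]
    # Predictive entropy of the averaged distribution (assumed uncertainty score).
    entropy = -sum(p * math.log(p) for p in mean_probs if p > 0.0)
    return mean_probs, entropy


toy_weights = [[1.0, -1.0, 0.5], [-0.5, 1.0, 0.0]]   # 2 classes, 3 features
probs, uncertainty = mc_dropout_predict([1.0, 2.0, 3.0], toy_weights)
```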

Deep Ensemble

Deep ensemble [32] is a powerful uncertainty estimation method that trains multiple independent ensemble members. In the case of the unimodal baseline, we employed five ensemble members, whereas for the multimodal baseline, a single classifier was trained independently for each modality input. In both scenarios, the unified predictions were obtained by averaging the predictions from the ensemble members, while the predictive uncertainty was determined by calculating the variance of those predictions.
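The aggregation step can be sketched as follows, where each ensemble member (or each per-modality classifier in the late-fusion case) contributes one vector of class probabilities. How the per-class variance is reduced to a single uncertainty score is not specified above, so this sketch simply returns the per-class values; the function name is hypothetical.

```python
def ensemble_predict(member_probs):
    """Mean and variance across members for each class.

    member_probs: list of class-probability lists, one per ensemble member.
    """
    n_members = len(member_probs)
    n_classes = len(member_probs[0])
    mean = [sum(p[k] for p in member_probs) / n_members for k in range(n_classes)]
    # Population variance of the members' predictions, per class.
    var = [sum((p[k] - mean[k]) ** 2 for p in member_probs) / n_members
           for k in range(n_classes)]
    return mean, var


mean_probs, var_probs = ensemble_predict([[0.8, 0.2], [0.6, 0.4]])
```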

SNGP

Spectral-normalized Neural Gaussian Process (SNGP) [35] is an effective and scalable uncertainty estimation method that utilises Gaussian process (GP). It consists of a feature extractor with spectral normalisation and a GP output layer. Since we used the identical feature extractor for all the baselines, we only used the GP layer in this work. Following [24], the model’s covariance matrix was updated without momentum with for the mean-field approximation. As the original authors proposed, we quantified the predictive uncertainty based on the Dempster-Shafer theory [7] defined as where is the class of output logit with the number of classes .
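A minimal sketch of this uncertainty score is given below, assuming the form commonly used with SNGP, in which the number of classes is divided by the number of classes plus the sum of the exponentiated logits. The function name is hypothetical, and very large logits would need a numerically safer implementation than this direct one.

```python
import math


def dempster_shafer_uncertainty(logits):
    """K / (K + sum_k exp(h_k)) for a list of K output logits h_k."""
    n_classes = len(logits)
    return n_classes / (n_classes + sum(math.exp(h) for h in logits))


flat = dempster_shafer_uncertainty([0.0, 0.0])        # 2 / (2 + 2) = 0.5
confident = dempster_shafer_uncertainty([10.0, 0.0])  # close to 0
```

Larger logits correspond to more evidence and therefore lower uncertainty, while uniformly small logits push the score towards 1.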

ETP

Evidential Turing Processes (ETP) [25] is a recent variant of NPs for uncertainty estimation in image classification. Since none of the existing NPs can be directly applied to multimodal data, there are two requirements for utilising them in multimodal classification: 1) a context set in the inference stage (e.g., context memory) and 2) a method of processing multimodal data. ETP was selected because it already includes a context memory and therefore requires minimal modifications to be applicable to our task. We used a memory size of 200 and quantified the predictive uncertainty with entropy as proposed by the original paper [25].

TMC

Trusted Multi-view Classification (TMC) [17] is a simple multimodal uncertainty estimation method based on subjective logic [23]. We used the original settings of the paper with ten annealing epochs for the balancing term. TMC explicitly quantifies its predictive uncertainty based on the Dempster-Shafer theory [7].

MGP

Multi-view Gaussian Process (MGP) [24] is the current SOTA multimodal uncertainty estimation method that combines predictive posterior distributions of multiple GPs. We used the identical settings of the original paper with the number of inducing points set to 200 and ten warm-up epochs. Its predictive uncertainty was quantified by the predictive variance as proposed by the original paper [24].

MNPs (Ours)

The encoders and decoder in Multimodal Neural Processes (MNPs) consist of two FC layers with the Leaky ReLU activation [66] after the first FC layer. A normalisation layer is stacked on top of the second FC layer for the encoders. For and that approximate the variance of distributions, we ensure positivity by transforming the outputs as where is the raw output from the encoders. of the adaptive RBF attention was initialised as , and DCM was initialised by randomly selecting training samples. We used five samples for the Monte Carlo method to approximate the integrals in Equations (11)-(13), which we found enough in practice. Refer to Table 7 for the hyperparameters of MNPs. We provide the impact of on the model performance in Appendix C.1.

Table 7: Hyperparameters of MNPs.

| Parameter | Handwritten | CUB | PIE | Caltech101 | Scene15 | HMDB | CIFAR10-C |
|---|---|---|---|---|---|---|---|
| | 100 | 200 | 300 | 700 | 300 | 400 | 200 |
| | 1 | 0.03 | 1 | 1 | 0.0001 | 1 | 1 |
| | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| | 0.25 | 0.01 | 0.1 | 0.01 | 0.5 | 0.01 | 0.01 |

B.3 Details of Metrics

Apart from test accuracy, we report the expected calibration error (ECE) [16] and the area under the receiver operating characteristic curve (AUC). ECE is defined as:

where is the number of testing samples, is a bin with partitioned predictions with the number of bins , is the number of elements in , is the accuracy of predictions in , and is the average predictive confidence in . Following [35] and [24], we set . AUC was used for the OOD detection experiment with ground truth labels of class 0 being the ID samples and class 1 being the OOD samples. Each model’s predictive uncertainty was used as the score for predicting whether a test sample is an ID or OOD sample.
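Both metrics can be sketched in a few lines. The ECE function below follows the verbal definition above (a bin-size-weighted average of the gap between accuracy and confidence) with equal-width bins, and the AUC function uses the pairwise-ranking formulation with uncertainty as the score and OOD as the positive class. The function names, the half-open bin boundaries and the toy inputs are assumptions for illustration.

```python
def expected_calibration_error(confidences, correct, n_bins):
    """Weighted average of |accuracy - confidence| over equal-width confidence bins."""
    n = len(confidences)
    bins = [[] for _ in range(n_bins)]
    for conf, hit in zip(confidences, correct):
        idx = min(int(conf * n_bins), n_bins - 1)   # confidence 1.0 goes to the last bin
        bins[idx].append((conf, hit))
    ece = 0.0
    for members in bins:
        if not members:
            continue
        accuracy = sum(hit for _, hit in members) / len(members)
        avg_conf = sum(conf for conf, _ in members) / len(members)
        ece += len(members) / n * abs(accuracy - avg_conf)
    return ece


def roc_auc(scores, labels):
    """Probability that a positive (label 1) scores higher than a negative (label 0)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


ece = expected_calibration_error([0.9, 0.9, 0.6, 0.6], [1, 1, 1, 0], n_bins=10)
# Uncertainty scores for two ID samples (label 0) and two OOD samples (label 1).
auc = roc_auc([0.1, 0.2, 0.8, 0.9], [0, 0, 1, 1])
```

The pairwise AUC is quadratic in the number of samples, which is acceptable for a sketch but would normally be replaced by a sort-based computation on large test sets.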

B.4 Computational Complexity of MGP and MNPs

In addition to the empirical difference of wall-clock time per epoch in Table 5, we provide the computational complexity of the two models in Table 8. We assume that the number of inducing points in MGP equals the number of context points in MNPs. During training of MNPs, each modality requires a cross-attention () and a contrastive learning () that sum to with being the number of modalities, whereas during inference, each modality only requires the cross-attention which results in .

Table 8: Computational complexity of MGP and MNPs.

| | Training | Inference |
|---|---|---|
| MGP | | |
| MNPs (Ours) | | |

Appendix C Ablation Studies

In this section, we analyse MNPs’ performance with different settings and show the effectiveness of the proposed framework.

C.1 Context Memory Updating Mechanisms

We compare the updating mechanism of DCM based on MSE in Equations (2)-(3) with three other baselines: random sampling, first-in-first-out (FIFO) [62], and cross-entropy based (CE). Random sampling bypasses DCM and randomly selects training samples during inference. For FIFO, we follow the original procedure proposed by [62] that updates the context memory during training and only uses it during inference. The CE-based mechanism replaces in Equation (3) with .

We provide experimental results for all the experiments outlined in Section 5. We highlight that random sampling and FIFO achieve high accuracy both without noise and with noise, as shown in Tables 9 and 11. However, MSE and CE outperform the others in terms of ECE in Table 10 and OOD AUC in Table 12. As MSE and CE select the new context points based on classification error, the selected context points tend to be close to the decision boundary, which is the most difficult region to classify. We believe this may contribute to the lower calibration error by suppressing overconfident predictions. The MSE and CE mechanisms show comparable overall results, but we selected MSE for its lower ECE. In terms of time efficiency, Table 13 shows that random sampling is slower than the other three methods.
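To make the idea of error-based selection concrete, the sketch below scores each sample in a batch by either MSE or cross-entropy against its label and returns the indices of the hardest samples as candidates for the context memory. It only illustrates the ranking step under assumed function names; it does not reproduce Equations (2)-(3), and in particular it does not decide which memory entries are replaced.

```python
import math


def mse_score(probs, label):
    """Mean squared error between predicted probabilities and the one-hot label."""
    return sum((p - (1.0 if k == label else 0.0)) ** 2
               for k, p in enumerate(probs)) / len(probs)


def ce_score(probs, label, eps=1e-12):
    """Cross-entropy of the predicted probabilities with respect to the label."""
    return -math.log(max(probs[label], eps))


def hardest_indices(batch_probs, batch_labels, n_select, score_fn):
    """Indices of the n_select samples with the largest classification error."""
    scores = [score_fn(p, y) for p, y in zip(batch_probs, batch_labels)]
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return order[:n_select]


batch_probs = [[0.9, 0.1], [0.4, 0.6], [0.55, 0.45]]
batch_labels = [0, 0, 0]
by_mse = hardest_indices(batch_probs, batch_labels, 2, mse_score)
by_ce = hardest_indices(batch_probs, batch_labels, 2, ce_score)
```

In this toy batch both scores rank the misclassified sample first and the borderline sample second, which matches the intuition that error-based selection favours points near the decision boundary.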

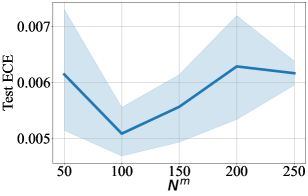

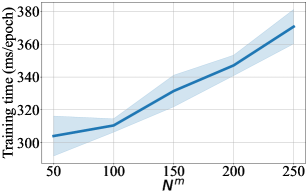

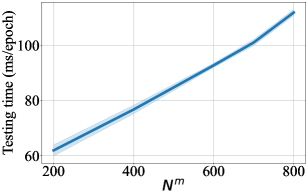

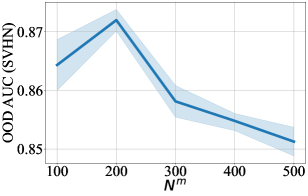

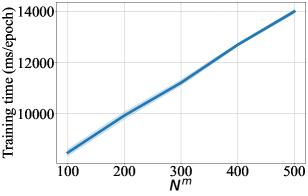

For DCM updated by MSE, we also report how the performance varies with the number of context points in Figures 3-9. For every figure, the bold line indicates the mean value, and the shaded area indicates the 95% confidence interval. Unsurprisingly, the training time and the testing time increase with respect to . The general trend in test accuracy across the datasets shows the benefit of increasing the number of context points. However, the effect on ECE and OOD AUC is mixed, as different patterns are observed for different datasets. We leave an in-depth analysis of this behaviour for future study.

Table 9: Test accuracy without noisy samples for different context memory updating mechanisms.

| Updating Mechanism | Handwritten | CUB | PIE | Caltech101 | Scene15 | HMDB |
|---|---|---|---|---|---|---|
| Random | 99.40±0.14 | 88.50±5.12 | 94.85±0.90 | 90.38±1.38 | 76.03±2.96 | 68.42±0.53 |
| FIFO | 99.30±0.11 | 90.33±3.26 | 95.29±1.85 | 91.09±0.97 | 76.08±1.92 | 69.65±0.66 |
| CE | 99.40±0.14 | 93.67±2.25 | 95.00±1.43 | 93.59±0.27 | 77.40±0.73 | 70.77±1.11 |
| MSE | 99.50±0.00 | 93.50±1.71 | 95.00±0.62 | 93.46±0.32 | 77.90±0.71 | 71.97±0.43 |

Table 10: ECE for different context memory updating mechanisms.

| Updating Mechanism | Handwritten | CUB | PIE | Caltech101 | Scene15 | HMDB |
|---|---|---|---|---|---|---|
| Random | 0.007±0.001 | 0.069±0.029 | 0.050±0.009 | 0.043±0.005 | 0.059±0.061 | 0.052±0.006 |
| FIFO | 0.007±0.001 | 0.067±0.021 | 0.057±0.016 | 0.027±0.004 | 0.056±0.048 | 0.032±0.007 |
| CE | 0.006±0.001 | 0.050±0.016 | 0.041±0.009 | 0.017±0.003 | 0.038±0.010 | 0.034±0.008 |
| MSE | 0.005±0.001 | 0.049±0.008 | 0.040±0.005 | 0.017±0.003 | 0.038±0.010 | 0.028±0.006 |

Table 11: Test accuracy with noisy samples for different context memory updating mechanisms.

| Updating Mechanism | Handwritten | CUB | PIE | Caltech101 | Scene15 | HMDB |
|---|---|---|---|---|---|---|
| Random | 98.39±0.21 | 83.11±4.08 | 92.55±0.55 | 89.36±1.18 | 72.85±2.30 | 62.10±0.44 |
| FIFO | 98.51±0.11 | 85.86±2.87 | 93.81±0.67 | 89.59±1.02 | 72.59±1.82 | 63.00±0.89 |
| CE | 98.49±0.13 | 88.80±1.57 | 93.75±0.72 | 92.87±0.21 | 73.98±0.41 | 63.97±0.71 |
| MSE | 98.58±0.10 | 88.96±1.98 | 93.80±0.49 | 92.83±0.18 | 74.14±0.35 | 64.11±0.15 |

Table 12: Test accuracy, ECE, and OOD AUC in the OOD detection experiment for different context memory updating mechanisms.

| Updating Mechanism | Test accuracy | ECE | OOD AUC (SVHN) | OOD AUC (CIFAR100) |
|---|---|---|---|---|
| Random | 74.61±0.22 | 0.073±0.005 | 0.860±0.003 | 0.777±0.002 |
| FIFO | 74.82±0.11 | 0.073±0.006 | 0.862±0.007 | 0.778±0.005 |
| CE | 74.70±0.19 | 0.013±0.002 | 0.871±0.004 | 0.789±0.004 |
| MSE | 74.92±0.07 | 0.011±0.001 | 0.872±0.002 | 0.786±0.005 |

Table 13: Computation time for different context memory updating mechanisms.

| Updating Mechanism | Handwritten | CUB | PIE | Caltech101 | Scene15 | HMDB | CIFAR10-C |
|---|---|---|---|---|---|---|---|
| Random | 31.80±3.68 | 8.15±2.80 | 12.14±3.09 | 255.37±13.25 | 33.73±3.56 | 79.19±5.95 | 710.48±8.58 |
| FIFO | 24.91±0.68 | 5.87±3.06 | 7.20±2.77 | 101.02±2.90 | 25.04±3.35 | 41.50±2.74 | 496.23±10.85 |
| CE | 25.00±0.28 | 5.61±1.56 | 6.85±1.04 | 101.10±2.59 | 25.47±3.77 | 43.45±3.85 | 500.79±7.04 |
| MSE | 22.53±1.88 | 5.57±1.58 | 6.70±0.92 | 101.01±2.38 | 26.60±10.37 | 41.87±2.10 | 493.18±9.91 |

C.2 Multimodal Aggregation Methods

We demonstrate the performance of MBA compared with two other methods, namely “Concat” and “Mean”. “Concat” bypasses MBA and directly provides of multiple modalities to the decoder (see Figure 1) by simple concatenation followed by an MLP, which lets in Equation (12) be parameterised by a decoder where . represents concatenating multiple vectors along their feature dimension. Similarly, “Mean” also bypasses MBA and simply averages the multiple modalities into a single representation. Formally, parameterised by a decoder where .
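The two baseline aggregations differ only in how the per-modality representations are combined before the decoder, which the sketch below makes explicit. The function names are hypothetical, the representations are plain lists of floats, and the MLP that follows the concatenation is omitted.

```python
def concat_aggregate(modal_reps):
    """Join the per-modality vectors along the feature dimension."""
    return [value for rep in modal_reps for value in rep]


def mean_aggregate(modal_reps):
    """Average the per-modality vectors element-wise (all must share one length)."""
    n_modalities = len(modal_reps)
    dim = len(modal_reps[0])
    return [sum(rep[d] for rep in modal_reps) / n_modalities for d in range(dim)]


reps = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]   # three modalities, two features each
concatenated = concat_aggregate(reps)          # length grows with the number of modalities
averaged = mean_aggregate(reps)                # length stays at the feature dimension
```

Neither baseline weights a modality by how reliable it is, which is the role that the Bayesian aggregation in MBA is meant to play.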

The results are shown in Tables 14-17. In every case, MBA outperforms both baselines. While similar performance can be observed for Handwritten, Scene15, and Caltech101, large differences are observed in CUB, PIE, and HMDB across different metrics. The test accuracy on CIFAR10 is nearly the same across all methods, but large gaps in ECE and OOD performance are observed. This highlights the importance of MBA, especially for robustness and calibration performance.

Table 14: Test accuracy without noisy samples for different multimodal aggregation methods.

| Aggregation Methods | Handwritten | CUB | PIE | Caltech101 | Scene15 | HMDB |
|---|---|---|---|---|---|---|
| Concat | 99.35±0.22 | 89.00±1.24 | 89.71±2.49 | 92.63±0.18 | 77.18±0.64 | 56.06±2.13 |
| Mean | 99.45±0.11 | 92.50±2.43 | 90.88±2.24 | 93.14±0.25 | 77.60±0.56 | 57.80±1.97 |
| MBA | 99.50±0.00 | 93.50±1.71 | 95.00±0.62 | 93.46±0.32 | 77.90±0.71 | 71.97±0.43 |

| Aggregation Methods | Handwritten | CUB | PIE | Caltech101 | Scene15 | HMDB |
|---|---|---|---|---|---|---|
| Concat | 0.007±0.001 | 0.109±0.008 | 0.092±0.020 | 0.038±0.005 | 0.061±0.005 | 0.060±0.017 |
| Mean | 0.006±0.001 | 0.057±0.012 | 0.059±0.008 | 0.030±0.004 | 0.038±0.005 | 0.117±0.014 |
| MBA | 0.005±0.001 | 0.049±0.008 | 0.040±0.005 | 0.017±0.003 | 0.038±0.009 | 0.028±0.006 |

| Aggregation Methods | Handwritten | CUB | PIE | Caltech101 | Scene15 | HMDB |
|---|---|---|---|---|---|---|
| Concat | 97.71±0.46 | 85.51±1.42 | 85.94±2.48 | 89.84±0.17 | 72.23±0.52 | 45.22±2.86 |
| Mean | 98.42±0.09 | 88.27±1.83 | 88.74±2.33 | 92.07±0.16 | 74.06±0.28 | 49.58±2.24 |
| MBA | 98.58±0.10 | 88.96±1.98 | 93.80±0.49 | 92.83±0.18 | 74.14±0.35 | 64.11±0.15 |

| Aggregation Methods | Test accuracy | ECE | OOD AUC (SVHN) | OOD AUC (CIFAR100) |
|---|---|---|---|---|
| Concat | 74.24±0.27 | 0.125±0.005 | 0.781±0.016 | 0.728±0.004 |
| Mean | 74.72±0.24 | 0.109±0.003 | 0.803±0.007 | 0.742±0.003 |
| MBA | 74.92±0.07 | 0.011±0.001 | 0.872±0.002 | 0.786±0.005 |

C.3 Attention Types

We decompose the attention weight $A(Q, K)$ in Equation (9) as follows:

$$A(Q, K) = \mathrm{norm}\big(\mathrm{sim}(Q, K)\big) \tag{23}$$

where $\mathrm{norm}(\cdot)$ is the normalisation function, such as Softmax or Sparsemax, and $\mathrm{sim}(\cdot, \cdot)$ is the similarity function, such as the dot-product or the RBF kernel. We provide experimental results for the four combinations of normalisation and similarity functions in Tables 18-21.
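The decomposition into a similarity function followed by a normalisation function can be sketched in a few lines of standard-library Python. This is an illustrative sketch, not the authors' implementation: the function names, the single-query interface, and the exact form of the RBF kernel (with a hypothetical `length_scale` parameter) are assumptions.

```python
import math
from typing import Callable, List, Sequence

Vector = Sequence[float]


def dot_similarity(q: Vector, k: Vector) -> float:
    """Dot-product similarity between a query and a key."""
    return sum(a * b for a, b in zip(q, k))


def rbf_similarity(q: Vector, k: Vector, length_scale: float = 1.0) -> float:
    """RBF kernel exp(-||q - k||^2 / (2 * l^2)); this form is an assumption."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(q, k))
    return math.exp(-0.5 * sq_dist / length_scale ** 2)


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax; every output is strictly positive."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def sparsemax(scores: Sequence[float]) -> List[float]:
    """Euclidean projection onto the probability simplex; can output exact zeros."""
    z = sorted(scores, reverse=True)
    cumsum, tau = 0.0, 0.0
    for k, zk in enumerate(z, start=1):
        cumsum += zk
        # The support condition holds for a prefix of the sorted scores,
        # so the last k that satisfies it determines the threshold tau.
        if 1.0 + k * zk > cumsum:
            tau = (cumsum - 1.0) / k
    return [max(s - tau, 0.0) for s in scores]


def attention_weights(
    query: Vector,
    keys: Sequence[Vector],
    sim: Callable[[Vector, Vector], float],
    norm: Callable[[Sequence[float]], List[float]],
) -> List[float]:
    """Attention weights of one query over all keys: norm(sim(query, keys))."""
    return norm([sim(query, k) for k in keys])


query = [0.0, 0.0]
keys = [[0.0, 0.0], [3.0, 0.0]]
w_rbf = attention_weights(query, keys, rbf_similarity, sparsemax)
w_dot = attention_weights(query, keys, dot_similarity, softmax)
```

Swapping the `sim` and `norm` arguments gives the four combinations compared in this section. Note that the dot-product assigns the same score to every key when the query is the zero vector, whereas the RBF kernel still ranks keys by distance.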

Among the four combinations, the RBF function with Sparsemax outperforms the others in most cases. More importantly, Table 20 shows a large difference in robustness to noisy samples between the RBF function with Sparsemax and the dot-product with Sparsemax, even where Table 18 shows only a marginal difference in accuracy. For instance, on the PIE dataset, the accuracy gap without noisy samples is 0.74 (95.00 vs. 94.26), but it widens to 6.36 (93.80 vs. 87.44) in the presence of noisy samples. The same pattern is observed for OOD AUC in Table 21. This illustrates the strength of RBF attention, which is more sensitive to distribution shift, as shown in Figure 2. Lastly, for both similarity functions, Sparsemax yields superior overall performance.

| Similarity Function | Normalisation Function | Handwritten | CUB | PIE | Caltech101 | Scene15 | HMDB |
|---|---|---|---|---|---|---|---|
| RBF | Softmax | 98.80±0.45 | 87.00±6.42 | 75.15±3.00 | 82.95±0.47 | 69.83±1.41 | 56.28±1.18 |
| RBF | Sparsemax | 99.50±0.00 | 93.50±1.71 | 95.00±0.62 | 93.46±0.32 | 77.90±0.71 | 71.97±0.43 |
| Dot | Softmax | 99.00±0.18 | 79.67±3.94 | 86.32±2.88 | 88.90±0.36 | 74.95±0.33 | 64.68±0.78 |
| Dot | Sparsemax | 98.95±0.11 | 82.17±2.67 | 94.26±1.90 | 92.46±0.26 | 78.30±1.06 | 63.23±1.89 |

| Similarity Function | Normalisation Function | Handwritten | CUB | PIE | Caltech101 | Scene15 | HMDB |
|---|---|---|---|---|---|---|---|
| RBF | Softmax | 0.019±0.005 | 0.084±0.020 | 0.100±0.017 | 0.025±0.004 | 0.152±0.007 | 0.202±0.019 |
| RBF | Sparsemax | 0.005±0.001 | 0.049±0.008 | 0.040±0.005 | 0.017±0.003 | 0.038±0.009 | 0.028±0.006 |
| Dot | Softmax | 0.008±0.003 | 0.166±0.015 | 0.373±0.037 | 0.033±0.007 | 0.061±0.010 | 0.175±0.006 |
| Dot | Sparsemax | 0.010±0.001 | 0.131±0.028 | 0.053±0.010 | 0.025±0.002 | 0.032±0.008 | 0.084±0.015 |

| Similarity Function | Normalisation Function | Handwritten | CUB | PIE | Caltech101 | Scene15 | HMDB |
|---|---|---|---|---|---|---|---|
| RBF | Softmax | 94.56±0.66 | 82.58±5.98 | 65.88±2.98 | 81.23±0.29 | 67.77±1.05 | 38.63±0.63 |
| RBF | Sparsemax | 98.58±0.10 | 88.96±1.98 | 93.80±0.49 | 92.83±0.18 | 74.14±0.35 | 64.11±0.15 |
| Dot | Softmax | 77.99±0.32 | 73.89±1.77 | 70.80±1.71 | 63.80±0.12 | 58.74±0.24 | 34.28±0.45 |
| Dot | Sparsemax | 96.00±0.24 | 70.30±2.61 | 87.44±1.44 | 81.95±1.92 | 67.84±1.00 | 40.26±0.56 |

| Similarity Function | Normalisation Function | Test accuracy | ECE | OOD AUC (SVHN) | OOD AUC (CIFAR100) |
|---|---|---|---|---|---|
| RBF | Softmax | 67.65±0.16 | 0.080±0.001 | 0.864±0.006 | 0.771±0.006 |
| RBF | Sparsemax | 74.92±0.07 | 0.011±0.001 | 0.872±0.002 | 0.786±0.005 |
| Dot | Softmax | 68.81±0.62 | 0.130±0.019 | 0.849±0.009 | 0.775±0.005 |
| Dot | Sparsemax | 75.07±0.09 | 0.055±0.001 | 0.837±0.004 | 0.765±0.004 |

C.4 Adaptive Learning of RBF Attention

We have shown the effectiveness of learning the RBF attention’s parameters on the synthetic dataset in Figure 2. We further provide ablation studies on real-world datasets in Tables 22-25.

| Method | Handwritten | CUB | PIE | Caltech101 | Scene15 | HMDB |
|---|---|---|---|---|---|---|
| Without | 96.85±0.29 | 91.17±2.40 | 93.38±1.27 | 92.64±0.38 | 74.45±0.45 | 48.95±1.70 |
| With | 99.50±0.00 | 93.50±1.71 | 95.00±0.62 | 93.46±0.32 | 77.90±0.71 | 71.97±0.43 |

| Method | Handwritten | CUB | PIE | Caltech101 | Scene15 | HMDB |
|---|---|---|---|---|---|---|
| Without | 0.007±0.001 | 0.078±0.011 | 0.043±0.007 | 0.036±0.004 | 0.054±0.011 | 0.043±0.008 |
| With | 0.005±0.001 | 0.049±0.008 | 0.040±0.005 | 0.017±0.003 | 0.038±0.010 | 0.028±0.006 |

| Method | Handwritten | CUB | PIE | Caltech101 | Scene15 | HMDB |
|---|---|---|---|---|---|---|
| Without | 89.44±0.54 | 86.69±1.65 | 91.50±0.94 | 92.32±0.27 | 71.18±0.38 | 37.33±0.92 |
| With | 98.58±0.10 | 88.96±1.98 | 93.80±0.49 | 92.83±0.18 | 74.14±0.35 | 64.11±0.15 |

| Method | Test accuracy | ECE | OOD AUC (SVHN) | OOD AUC (CIFAR100) |
|---|---|---|---|---|
| Without | 74.96±0.16 | 0.019±0.002 | 0.822±0.004 | 0.746±0.004 |
| With | 74.92±0.07 | 0.011±0.001 | 0.872±0.002 | 0.786±0.005 |

Appendix D Broader Impacts

As the long-term goal of this work is to make multimodal classification with DNNs more trustworthy by using NPs, it has many potential positive impacts on society. Firstly, with transparent and calibrated predictions, more DNNs can be deployed in safety-critical domains such as medical diagnosis. Secondly, this work raises awareness in the machine learning community of the need to evaluate and review the reliability of DNN models. Lastly, our study shows the potential of NPs in more diverse applications. Nevertheless, a potential negative impact may exist if the causes of uncertain predictions are not fully understood. To take a further step towards reliable DNNs, the source of uncertainty should be made transparent to non-experts.