.pdfpng.pngconvert #1 \OutputFile

Best Subset Selection: Statistical Computing Meets Quantum Computing

Abstract

With the rapid development of quantum computers, quantum algorithms have been studied extensively. However, quantum algorithms tackling statistical problems are still lacking. In this paper, we propose a novel non-oracular quantum adaptive search (QAS) method for the best subset selection problems. QAS performs almost identically to the naive best subset selection method but reduces its computational complexity from to , where is the total number of subsets over covariates. Unlike existing quantum search algorithms, QAS does not require the oracle information of the true solution state and hence is applicable to various statistical learning problems with random observations. Theoretically, we prove QAS attains any arbitrary success probability within iterations. When the underlying regression model is linear, we propose a quantum linear prediction method that is faster than its classical counterpart. We further introduce a hybrid quantum-classical strategy to avoid the capacity bottleneck of existing quantum computing systems and boost the success probability of QAS by majority voting. The effectiveness of this strategy is justified by both theoretical analysis and extensive empirical experiments on quantum and classical computers.

Keywords: hybrid quantum-classical strategy, majority voting, non-oracular quantum search, quantum experiments, quantum linear prediction.

1 Introduction

The rapid advance in science and technology in the past decade makes the quantum computer a reality, offering researchers an unprecedented opportunity to tackle more complex research challenges. Unlike classical computers depend on a classical bit having a state of either 0 or 1, a quantum computer operates on quantum processing units, quantum bits (or qubits), which can be in a state 0, 1, or both simultaneously due to the superposition property. The qubits create different states for the system that are superposed on each other. The superposition enables researchers to perform computations using all of those states simultaneously. In contrast, in classical computing, one conducts computations with one state at-a-time among these states. Consequently, one has to repeat the computations times in classical computing to get the quantum computation results. (Nielsen and Chuang,, 2010). Such a paradigm change has motivated significant developments of quantum algorithms in many areas, e.g., algebraic number theory (Shor,, 1999; Hallgren,, 2002; Van Dam and Shparlinski,, 2008), scientific simulations (Abrams and Lloyd,, 1997; Byrnes and Yamamoto,, 2006; Kassal et al.,, 2008; Wang,, 2011; Hu and Wang,, 2020), optimization (Jordan,, 2005; Jansen et al.,, 2007; Harrow et al.,, 2009), and many more.

The opportunity, however, has not yet been fully utilized by statisticians, because there are significant challenges to developing quantum search algorithms to solve statistical problems. First, a quantum computer does not provide deterministic results but instead gives a different result each time we run an algorithm according to some probability distribution. For example, if we search the smallest number in a set of numbers using a classical computer, the result is determined if the algorithm is deterministic. However, a quantum computer output the smallest number with a certain probability. That is, a quantum computer may output another number other than the correct result. Second, existing quantum search algorithms, in general, depend on an oracle function to decide if an outcome is a solution or not. For example, the celebrated Grover’s algorithm (Grover,, 1997) requires an oracle function that can map all solutions to state 1 and all non-solutions to state 0. However, such an oracle function is usually not available in practice. When the oracle is inaccurate, Grover’s algorithm can perform as bad as a random guess which will be demonstrated in our numerical experiments. Third, the current public available general-purpose quantum computer, such as the quantum computing system developed by IBM, only has a few dozens of qubits, and thus allows very small piloting projects.

In this paper, we investigate the possibility of solving the best subset selection problem on a quantum computing system. The best subset selection problem can be described as follows: Given a response and multiple predictors , we aim to find the best subset among to fit the response . There are candidate subsets. For , we have more than one billion candidate subsets. Currently, there is no quantum algorithm specifically designed for the best subset selection problem. We propose a novel non-oracular quantum method named quantum adaptive search (QAS). To overcome the aforementioned limitations, we randomly choose a model as our initial guess of the best subset. QAS starts with an equally weighted superposition of the rest candidate models and iteratively updates the superposition towards the direction that minimizes the loss. Within each iteration, the new superposition is compared with the old one through a local evaluation function which does not require any oracle information of the true solution. After the number of iterations, the result will replace our initial guess. We then repeat the above procedures until no more replacement can be made. Since the output of the quantum computer is random, we further introduce a hybrid quantum-classical strategy and boost the success probability of outputting the best subset by a majority voting.

Our theoretical and methodological contributions are as follows. First, we design an iterative algorithm to eliminate the requirement of an oracle function in the quantum algorithm. Second, we design a majority voting so that we have a high probability to get the correct solution from the algorithm. Moreover, for the best subset selection problem over candidate models, within iterations, QAS converges to a superposition that heavily weighs on the solution state and hence outputs the exact solution with a high probability. In contrast, a classic computing algorithm requires quires to search for the best model. Last but not least, the computational complexity of QAS is upper bounded by the order which is only a factor larger than the theoretical lower bound for any oracular quantum search algorithms (Bennett et al.,, 1997).

Our empirical contribution is that we explore the simulation and real data analysis in IBM Quantum Experience111www.ibm.com/quantum-computing, which is a publicly available cloud-based quantum computing system. The code and tutorial are publicly available. The exploration will facilitate the subsequent development in quantum algorithm development for solving statistical problems.

The rest of the paper is organized as follows. We review the state-of-the-art quantum search algorithm and its limitation in Section 2. In Section 3, we propose a novel quantum adaptive search (QAS) algorithm and discuss its intuition and theoretical properties. We then introduce a quantum linear prediction algorithm for computing the criterion. In Section 4 , we introduce a quantum-classical hybrid strategy to improve the stability of quantum best subset selection. In Sections 5 and 6, we evaluate the empirical performance of the proposed method via extensive experiments on quantum and classical computers. Section 7 concludes the paper with a few remarks. Due to the limitation of space, the proofs of main theoretical results, additional simulation results, and a real data application are relegated to a supplemental material.

2 Review of quantum search algorithm

2.1 Notations and definitions

To facilitate the discussion in the paper, we review some essential notations and definitions used in quantum computing that may be alien to the audience of statistics. We refer to Nielsen and Chuang, (2010) and Schuld and Petruccione, (2018) for more detailed tutorials of quantum computing. We start by introducing the linear algebra notations used in quantum mechanics, which were invented by Paul Dirac. In Dirac’s notation, a column vector is denoted by which reads as in a ‘ket’. Typical vector space of interest in quantum computing is a Hilbert space of dimension with a positive integer . For a vector , we denote its dual vector as (reads as in a ‘bra’), which is an element of . Besides, and together naturally induce an inner product , which is also known as a ‘bra-ket’. We say is a unit vector if .

The framework of quantum computing resides in a state-space postulate which describes the state of a system by a unit vector in a Hilbert space. A state of a qubit can be described by a unit vector in a two-dimensional Hilbert space. A superposition, as the linear combination of the states in one qubit, can be defined as

where coefficients and satisfy . Now suppose that we have two qubits and the system has four orthonormal states . Then, a superposition can be represented as

where coefficients , , , satisfiy . Analogously, a quantum computer of qubits can represent a state of a system by a unit vector in a dimensional Hilbert space . Let be an orthonormal basis of . Every superposition can be represented as

| (1) |

where is a set of coefficients with and .

Another salient feature of quantum computing is the measurement of a quantum system yields a probabilistic outcome rather than a deterministic one. Suppose the superposition in (1) is measured, it collapses to a random state in . In addition, the probability we observe is for .

Different from a classical computer with logic gates, e.g., AND and NOT gates, a quantum computer is built with quantum gates. These quantum gates not only have the logic gates mentioned above but also include the new gates that are not available in classical computers. For example, the Hadamard gate enables the rotations and reflections of the input states. Some quantum algorithms that make use of these new gates have shown some remarkable results that significantly outperform the algorithms in classical computers.

2.2 Grover’s algorithm

The best subset selection problem can be decomposed into two subproblems: (1) What is the criterion for the best? (2) Given the criterion, which subset is the best? For the first subproblem, there are many criteria, e.g, AIC, BIC, cross-validation, and mean squared error. For the second subproblem, searching for the best is challenging if the number of candidate subsets is super-large. We shall review Grover’s algorithm, which is the most popular quantum searching algorithm for the second subproblem.

Recall that given predictors, there are candidate subsets, from which we aim to find the best subset. Once the best criterion is chosen and applied to each subset, we have criterion values computed for all subsets. We aim to find the solution (e.g. the smallest or the largest value) from elements, which are indexed by . To ease the presentation, we assume there are no tied solutions although the discussions in this paper can be easily generalized to such cases. In the language of quantum computing, each index is regarded as a state, which can be modeled by a unit vector in an orthonormal basis of a dimensional Hilbert space .

2.2.1 Geometric view of Grover’s algorithm

We now provide an intuitive geometric presentation of Grover’s algorithm which is summarized in Algorithm 1 below. Denote the solution state by and the average of all non-solution states by . Notice that is orthogonal to the solution state . We plot them as -axis and -axis in Figure 1. Grover’s algorithm is initialized with a superposition as the equally weighted average of all states , . To be specific, the initial superposition is defined as

Denote the angel between the initial superposition and by . It is easy to see that .

The first iteration of Grover’s algorithm contains two operations. The first operation reflects with respect to to get , The second operation reflects with respect to to get , which is our new superposition, denoted by . As a result, the angle between and is . Figure 1 provides a visualization of the two operations in the first Grover’s operation. Then, Grover’s algorithm iteratively repeats the above two operations, i.e, a reflection w.r.t. and then a reflection w.r.t. . After the th iteration, the the angle between and is . The iteration terminates if the th state coincides with the solution state , i.e., , which implies that

| (2) |

The typical motivation to use quantum search algorithm is that the number of candidate set is large. When is large, i.e., is small, we can approximate the angle by

| (3) |

Substituting (3) into (2), we have , which yields is approximately . This is a remarkable result since the number of iteration is in the order of . Grover’s algorithm can be easily extended to a search problem with solutions, where the number of iteration is approximately . See Boyer et al., (1998) for more detailed discussions.

2.2.2 Technical details of Grover’s algorithm

Now we present Grover’s algorithm with more mathematical rigor. Grover’s algorithm assumes that we are provided with an quantum oracle evaluation function , which can recognize the solution to the search problem. That is, suppose that index corresponds to the best subset, we have and for . Recall that, Grover’s algorithm is initialized with the following superposition

where . Then, Grover’s algorithm updates and in an iterative manner. In the th iteration, Grover’s algorithm applies the following two operations to the current superposition ,

-

(a)

A flip operation to , where . In other words, and for .

-

(b)

A Grover’s diffusion operation to , where and is a identity matrix.

In the rest of this paper, we call the two operations together, i.e. , as Grover’s operation

After the th iteration, the superposition is updated to

where the coefficients and satisfy

Let be the angle that satisfies . The coefficients and admit a closed form (Nielsen and Chuang,, 2010),

2.2.3 Significance and challenges of Grover’s algorithm

Grover, (1997) proposed a quantum search algorithm with a success probability of at least 50%. The computational complexity of Grover’s algorithm is of the order . Bennett et al., (1997) showed that this rate is optimal, up to a constant, among all possible quantum search algorithms. In contrast, any classical search algorithm needs to query the system for at least times to solve the searching problem with a 50% or higher success probability. As a result, most classical search algorithms have the computational complexity of the order .

Grover’s algorithm is a quantum algorithm that depends on an oracle evaluation function that maps the solution state to 1 and all other states to 0. However, such a piece of oracle information is usually not available in statistical problems including the best subset selection. When we have no oracle information of the solution state at all, the oracle evaluation function can only be constructed by a randomly selected solution state. Then, Grover’s algorithm is highly likely to rotate the initial superposition in a wrong direction and the output of the algorithm can be as bad as a random guess. We empirically demonstrate this phenomenon in Section 5.2.

3 Best subset selection with quantum adaptive search

In this section, we first review the best subset selection problem. We then propose a novel quantum search algorithm named quantum adaptive search (QAS) which aims to overcome the aforementioned limitation of Grover’s algorithm. We also provide a quantum linear prediction (QLP) method to compute the loss function.

3.1 Review of best subset selection

Best subset selection has been a classical and fundamental method for linear regressions (e.g. Hocking and Leslie,, 1967; Beale et al.,, 1967; Shen et al.,, 2012; Fan et al.,, 2020). When the covariates contain redundant information for the response, selecting a parsimonious best subset of covariates may improve the model interpretability as well as the prediction performance. The best subset selection for linear regression can be formulated as

| (4) |

where is a response vector, is a design matrix of covariates and is a vector of unknown regression coefficients. Throughout this paper, we assume and are centered for the simplicity of presentation. Further, is the norm of and is a subset size. In general, solving (4) is a non-convex optimization problem. Without a convex relaxation or a stage-wise approximation, the optimization involves a combinatorial search over all subsets of sizes and hence is an NP-hard problem (Welch,, 1982; Natarajan,, 1995). Therefore, seeking the exact solution of (4) is usually computationally intractable when is moderate or large.

To overcome the computational bottleneck of the best subset selection, many alternative and approximate approaches have been developed and carefully studied. Stepwise regression methods (e.g. Efroymson,, 1960; Draper and Smith,, 1966) sequentially add or eliminate covariates according to their marginal contributions to the response condition on the existing model. Stepwise regression methods speed up the best subset selection as they only optimize (4) over a subset of all candidates, and hence their solutions may not coincide with the solution of (4). However, stepwise regression methods suffer from the instability issue in high dimensional regime (Breiman,, 1996). Regularized regression methods suggest to relax the nonconvex norm in (4) by various convex or nonconcave alternatives. A celebrated member in the house is LASSO (Tibshirani,, 1996) which solves an regularized problem instead. Elegant statistical properties and promising numerical performance soon made regularized regression a popular research topic in statistics, machine learning and other data science related areas. We refer to basis pursuit (Chen and Donoho,, 1994), SCAD (Fan and Li,, 2001), elastic net (Zou and Hastie,, 2005), adaptive LASSO (Zou,, 2006), Dantzig selector (Candes and Tao,, 2007), nonnegative garrotte (Yuan and Lin,, 2007), and MCP (Zhang,, 2010), among many others. Though regularized regression methods can perform equivalent to or even better than the solution of (4) in certain scenarios (e.g. Zhao and Yu,, 2006; Fan et al.,, 2014; Hastie et al.,, 2020), they are not universal replacements for the best subset selection method. Nevertheless, solving (4) efficiently remains a statistically attractive and computationally challenging problem.

Recently, Bertsimas et al., (2016) has reviewed the best subset selection problem from a modern optimization point of view. Utilizing the algorithmic advances in mixed integer optimization, Bertsimas et al., (2016) proposed a highly optimized solver of (4) which is scalable to relatively large and . Later, Hazimeh and Mazumder, (2018) considered a new hierarchy of necessary optimality conditions and propose to solve (4) by adding extra convex regularizations. Then, Hazimeh and Mazumder, (2018) developed an efficient algorithm based on coordinate descent and local combinatorial optimization. Such recent optimization developments push the frontier of computation for the best subset selection problems by a big margin.

3.2 Quantum adaptive search algorithm

Let us consider the best subset selection problem (4) and assume such that all subsets of covariates are attainable. When , we only need to search over subsets which is a less challenging combinatorial search problem.

3.2.1 Overview of quantum adaptive search algorithm

Recall that each subset of can be encoded as a state in an orthonormal basis , which consists of elements of a Hilbert space . We define a state loss function by the mean squared error (MSE),

| (5) |

where the state is a vector in that corresponds to the subset , and are the regression coefficient estimates obtained by minimizing (4) with fixed and . Intuitively, the best subset corresponds to the state that minimizes the state loss function.

Now, we present the proposed non-oracular quantum adaptive search method, i.e. QAS, by three key steps as follows.

(1) Initialization: We choose an initial benchmark state randomly from the set . Also, we pre-specify a learning rate .

(2) Updating: We run Algorithm 1 on set by inputting the benchmark state and the number of iterations , where is a positive integer. Denote the output of Algorithm 1 as which is a state in . Then, we compare with in terms of the state loss function . If , we update the current benchmark state with , otherwise we do not update .

(3) Iteration and output: Start with and repeat the updating step. After each updating step, set . The QAS stops when , where is a positive constant that depends on the learning rate . Then, we measure the quantum register with the latest superposition. The output is the observed state in and its corresponding subset.

Unlike Grover’s algorithm, QAS randomly selects a state in as the benchmark state which does not require any oracle information of the solution state. Then, QAS iteratively updates the benchmark state towards the direction that reduces the state loss function. Besides, QAS is data-adaptive in the sense that it starts with a conservative learning step size (e.g. ) and gradually increases the learning step size as the benchmark state has been updated towards the truth. We summarize QAS in Algorithm 2 below.

Algorithm 2 provides a general non-oracular quantum computing framework for best subset selection problems as the state loss function can be tailored for various statistical models, such as nonlinear regression, classification, clustering, and low-rank matrix recovery.

3.2.2 Intuition of quantum adaptive search

In this subsection, we demonstrate the intuition of QAS. Suppose that we implement QAS on an orthonormal basis with a pre-specified state loss function and a learning rate . We assume there exists a sole solution state in that minimizes . Without loss of generality, we number the states in as the ascending rank of their state loss function values, i.e.

| (6) |

where is the sole solution state.

If the initialization step in Algorithm 2 luckily selects the sole solution state as the initial benchmark state, QAS will never update the benchmark state and hence reduces to Grover’s algorithm with a known oracle state and . Therefore, QAS can recover the true state with a high success probability.

A more interesting discussion would be considering the initial benchmark state does not coincide with the truth, i.e. . Given the rank in (6), the local evaluation function can be simplified as

In the th iteration, QAS calls Algorithm 1 by inputting , (suppose that ) and . Algorithm 1 initializes a equally weighted superposition as

Then, Algorithm 1 applies Grover’s operations to which updates to with coefficients satisfy

where the angle satisfies . After the th iteration, Algorithm 1 outputs a random state with the following probability mass function

Since the learning rate , we have for some positive . After the th iteration, QAS amplifies the probability of drawing the states whose state loss function values are smaller or equal to . Meanwhile, QAS suppresses the probability of drawing the states whose state loss function values are greater than . Geometrically, QAS rotates the initial superposition towards , which is an average over and the states that can reduce the state loss function from . If the output of the th iteration is for some , QAS will not update . On the other hand, if , QAS will update with which is equivalent to descend to a state with a smaller state loss function value. In Figure 2, we visually illustrate the mechanism of QAS when .

3.3 Theoretical analysis of quantum adaptive search

Intuitively, one can think of QAS as a “quantum elevator” that starts at a random floor of a high tower and aims to descent to the ground floor. In each operation, a quantum machine will randomly decide if this elevator stays at the current floor or goes down to a random lower-level floor. Besides, the probability of going down will gradually increase as the number of operations increases. Thus, it is not hard to imagine that the “quantum elevator” can descent to the ground floor with a high success probability after a large enough amount of operations. Our intuition of QAS is justified by the following theorem. Theorem 3.1 states that within iterations, QAS finds the sole solution state for any pre-specified success probability greater than 50%.

Theorem 3.1.

Let be a constant. With probability at least , the quantum adaptive search (e.g. Algorithm 2) finds the sole solution state within iterations, where is a positive constant that depends on .

Suppose that, in the th iteration of Algorithm 2, the current benchmark state is with being the th smallest among . Theorem 3.2 below shows the expected number of Grover’s operations that Algorithm 2 needs to update is on the order . This together with Theorem 3.1 imply the computational complexity upper bound of QAS is of order which is only a factor larger than the theoretical lower bound of any oracular quantum search algorithm (Bennett et al.,, 1997). Notice that, QAS achieves a near optimal computational efficiency as oracular quantum search algorithms without using any oracle information.

3.4 Computation of the loss function via quantum computing

The success of QAS relies on the efficient comparison of two states through the state loss function defined in (5), which is the MSE of linear regression. Recently, a line of studies (Wiebe et al.,, 2012; Rebentrost et al.,, 2014; Wang,, 2017; Zhao et al.,, 2019) focuses on formulating linear regression as a matrix inversion problem, which can be tackled by the quantum algorithm to solve linear systems of equations (Harrow et al.,, 2009). Their goal is to find a series of quantum states whose amplitudes represent the ordinary least squares estimator of linear regression coefficients. Although their algorithms can encode such quantum states efficiently, “it may be exponentially expensive to learn via tomography” (Harrow et al.,, 2009). As a result, most existing quantum linear regression algorithms require the input design matrix to be sparse. Otherwise, the computation is slow and the estimation accuracy may suffer from noise accumulation.

In this paper, we investigate a novel quantum linear prediction approach that is based on the singular-value decomposition. The proposed method avoids the matrix inversion problem. Instead, we estimate the inverse singular values by utilizing a recently developed quantum tomography technique (Lloyd et al.,, 2014). Let be a subset of with . Denote the sub-matrix of corresponding to the subset . Let be a new observation of the selected covariates. Then, the corresponding response value can be predicted by

| (7) |

Suppose that the rank of is , a compact singular value decomposition of yields

where is a diagonal matrix of positive singular values, and and are matrices of left and right singular vectors, respectively. Further, we have . Then, the linear predictor in (7) can be represented by

| (8) |

Motivated by the quantum principle component analysis (QPCA) (Lloyd et al.,, 2014) and the inverted singular value problem (Schuld et al.,, 2016), we propose to calculate (8) by a quantum linear prediction (QLP) procedure, which can be summarized as the following three steps.

Step 1: Quantum states preparation.

We encode , and , , into a quantum system as

| (9) |

where , and and are orthonormal quantum bases representing the linear space spanned by the left and right singular vectors of , respectively.

Then, we encode and the compact singular value decomposition of into a quantum system as

| (10) |

Similarly, we can encode and into a quantum system as

| (11) |

with , and and are orthonormal quantum bases.

Step 2: Extracting the inverted singular values.

To calculate (8) with a quantum computer, we first need to calculate the inverse of singular values of . According to the ideas of QPCA (Lloyd et al.,, 2014), we apply to and follow the quantum phase estimation algorithm (e.g. Shor,, 1994; Szegedy,, 2004; Wocjan et al.,, 2009) which yields

| (12) |

where , , are the eigenvalues of which is encoded in a quantum register . Then, by adding an extra qubit and rotating (12) condition on the eigenvalue register , we have

| (13) |

where is a constant to ensure the inverse of eigenvalues are no larger than 1.

We can repeatedly perform a conditional measurement of (13) until it is in state . After that, we can uncompute and discard the eigenvalue register which results in

| (14) |

where is the probability of the qubit in (13) collapsing to state .

Step 3: Calculating the inner products.

Follow the notations in (9) –(11), we can rewrite (8) as

| (15) |

Motivated by the strategy in Schuld et al., (2016), we write (15) into some entries of a qubit so that it can be assessed by a single measurement. To this end, we construct two states and that are entangled with a qubit, i.e.

where is defined in (14) and . Then, the off-diagonal elements of this qubit’s density matrix read

which contain the desired results (8) up to a known normalization factor.

Given a testing set of observations, i.e. , the prediction error can be calculated as

| (16) |

where is a quantum state represents and is the predictor of which can be calculated follow the QLP procedure introduced above. In addition, the computational complexity of running QLP over a training set of size and a testing set of is approximately of the order which is faster than the classical computing algorithm that is usually of order .

4 Hybrid quantum adaptive search

In the high dimensional regime, implementing the best subset selection procedure completely on a quantum computing system is desirable since both QAS and QLP are substantially faster than their classical counterparts. However, such an objective may not be readily accomplished due to the prototypical development of quantum computers. On one hand, the capacity of the state-of-the-art quantum processor (about 70 qubits) is still far from large enough to carry out big data applications. For example, the quantum state preparation step of QLP requires at least qubits which may exceed the capacity of most public available quantum computers when and are moderate or large. On the other hand, existing quantum computing systems are usually highly sensitive to the environment and can be influenced by both internal and external noises (Sung et al.,, 2019). For example, a superconducting quantum computing system can be affected by the internal noises due to material impurities as well as the external noises that come from control electronics or stray magnetic fields (Kandala et al.,, 2019). Further, such noises can accumulate through the computing process and create a non-negligible bias.

To address the aforementioned practical challenges, we propose a hybrid quantum-classical strategy to balance computational efficiency, scalability, and reliability of quantum best subset selection. The intuition of the hybrid quantum-classical strategy is to advance complementary advantages of quantum and classical computing by implementing computational demanding steps on quantum nodes and running capacity demanding steps on classical nodes. The accuracy of quantum nodes can be boosted by some aggregation approaches, like majority voting. To be specific, we first input the data into a classical node and parallelly compute the linear prediction errors (16) over candidate models. The linear prediction errors, stored in a -dimensional vector, will be passed to quantum nodes. Then, we independently implement QAS on quantum nodes to select the minimum element in the -dimensional prediction error vector. The selection results, stored in a list of items, will be passed to a classical node for a majority voting. The best subset with the most votes is selected as the final estimator. We present the flow chart and details of this procedure in Figure 3 and Algorithm 3, respectively.

The Theorem 4.1 below states a majority voting over independent quantum nodes can boost the success probability of finding the best subset. The lower bound of success probability in Theorem 4.1 can be boosted by increasing the number of quantum nodes or improving the success probability on each quantum node.

Theorem 4.1.

Let’s consider a majority voting system that consists of nodes, where is a positive integer. Suppose that each node works independently with a probability to vote the correct model and a probability to vote an incorrect model. Let denote the event that the majority voting system selects the correct model. Then, the probability of is lower bounded by

where is the cumulative distribution function of a standard normal random variable and

is the Kullback-Leibler divergence between two Bernoulli distributions with parameters and , respectively.

In Figure 4, we plot the lower bound of versus the values of . We let increase from 0.75 to 1 and choose the number of nodes . According to Figure 4, all three solid lines are concave curves which means a majority voting system with a moderate number of nodes can effectively boost the success probability of finding the correct model. For instance, a majority voting over independent nodes with can boost the probability of finding the correct model to 0.9 or higher.

5 Experiments on the quantum computer

In this section, we implement the proposed hybrid quantum-classical strategy on IBM Quantum Experience. This platform has developed a Qiskit Python development kit222https://qiskit.org/, which allows users to perform both quantum computing and classical computing in a single project. In Section 5.1, we analyze the performance of Algorithm 3 regarding the selections of tuning parameters. In Section 5.2, we assess the best subset section performance of Algorithm 3 under various linear regression settings and compare QAS with Grover’s algorithm in stage 2.

5.1 Selection of tuning parameters

The proposed hybrid quantum-classical strategy involves two tuning parameters: the number of quantum nodes ; and a learning rate which will be passed to each quantum node to implement Algorithm 2. As suggested by Theorem 4.1, the success probability of Algorithm 3 converges to 1 as increases when the success probability of each quantum node surpasses 0.5. Therefore, one should choose as large as possible subject to the availability of quantum computing resources. On the other hand, the learning rate controls the “step size" of each iteration in Algorithm 2. In this subsection, we use an experiment to assess the performance and the sensitivity of Algorithm 3 with respect to the choices of and . To focus on the selection of tuning parameters, we skip the stage 1 in Algorithm 3. Instead, we generate as an i.i.d. sample of size from the uniform distribution . Then we use the stages 2 and 3 in Algorithm 3 to find the minimum element in . We set , or , and let be a sequence of grid points between 0.40 and 0.60 with a step size of 0.01. For each pair of and , we simulate 200 replications. The performance is measured by the accuracy rate which is defined as

| (17) |

The results are presented in Figure 5. The accuracy rates for or are plotted as blue, orange, and green dots with different symbols. The solid lines are corresponding smoothed curves. According to Figure 5, we find that increasing the number of quantum nodes can improve the accuracy rate though the improvement is marginal when is large enough, e.g. . Besides, we observe that the highest accuracy rates are attained with some between 0.5 and 0.55 for both choices of . One can notice that the accuracy rates are close to 1 when and which can be considered as a rule-of-thumb recommendation. Further, the smoothed curves in Figure 5 indicate that Algorithm 3 is not very sensitive for the selection of tuning parameters.

5.2 Best subset selection with hybrid quantum-classical strategy

In this subsection, we assess the best subset selection performance of the proposed hybrid quantum-classical strategy. We consider a linear regression model

| (18) |

where , , , and . First, we draw as an i.i.d. sample from a multivariate normal distribution , where the th entry of equals for some . Then, we draw as an i.i.d. sample from a normal distribution . Notice that, controls the autocorrelation level among covariates, and , and together control the signal to noise ratio (SNR), i.e. . Let be a positive integer indicating the model sparsity. We set the first elements of to be 1 and the rest elements to be 0.

Subject to the capacity of the quantum computing system, we set , , and . We choose the autocorrelation level and the signal to noise ratio , respectively. For each scenario, we simulate 200 replications. We implement the hybrid quantum-classical strategy as proposed in Algorithm 3 with and to select the best subset of the linear regression model. We denote this method as QAS since it implements the quantum adaptive search in stage 2. Besides, we consider two variants of Algorithm 3 as two competitors. The first competitor, denoted as Grover Oracle, replaces QAS in stage 2 by Grover’s algorithm with a known oracle state. The second competitor, denoted as Grover Random, replaces QAS in stage 2 by Grover’s algorithm with a randomly selected oracle state. Notice that Grover Oracle is an oracular method and not applicable in practice since the oracle state is not observable.

For each replication, we record the false positives (FP) and false negatives (FN) of each method. FP is the number of inactive covariates that are selected into the model and FN is the number of active covariates that are ignored in the selected model. The box-plots of FP and FN over 200 replications are reported in Figure 6 below. According to Figure 6, Grover Oracle performs the best among the three competitors which is not surprising as it utilizes the oracle information of the true best subset. The proposed QAS method, as a non-oracular approach, performs almost identical to Grover Oracle in nearly all scenarios. QAS is slightly outperformed by Grover Oracle when the correlation level is high () and the signal to noise ratio is small (), which is the most challenging scenario. In contrast, Grover Random performs as bad as random guesses in all scenarios. This observation, which is inline with the discussions in Section 2.2.3, shows oracular quantum search algorithms are not suitable to statistical learning problems.

6 Comparison with classical methods

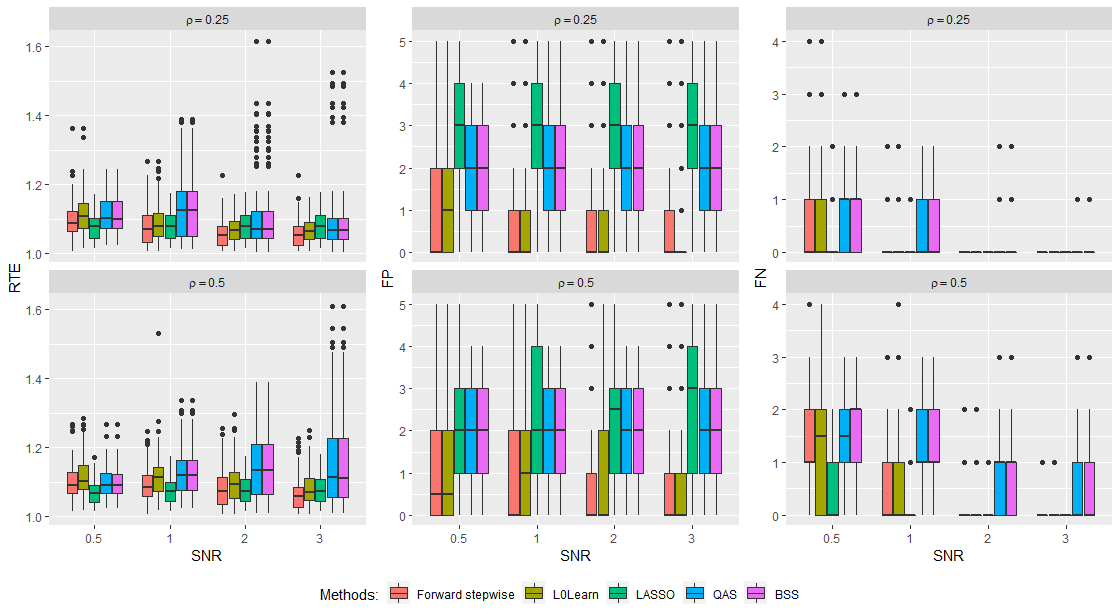

In this section, we compare the empirical performance of quantum adaptive search (QAS) with the naive best subset selection method (BSS) implemented on a classical computer. We also include some popular approximate best subset selection methods designed for classical computers: forward stepwise selection (e.g. Draper and Smith,, 1966); LASSO (Tibshirani,, 1996); and the fast best subset selection method (L0Learn) (Hazimeh and Mazumder,, 2018). The implementation details of the 5 comparison methods are summarized in Table 1.

| Method name | Computing method | Tuning parameter selection | ||

|---|---|---|---|---|

| QAS | Algorithm 3 | and | ||

| BSS | Naive best subset selection | NA | ||

| Forward stepwise | R package bestsubset333Avaliable at https://github.com/ryantibs/best-subset | NA | ||

| LASSO | R package glmnet444Avaliable at https://cran.r-project.org/web/packages/glmnet | 10-folds cross-validation | ||

| L0Learn | R package L0Learn555Avaliable at https://cran.r-project.org/web/packages/L0Learn | 10-folds cross-validation |

We consider the same linear regression model in (18). The coefficient vector is generated from one of the following two settings.

-

(1)

Strong sparsity: the first elements of equal to 1 while the rest elements equal to 0.

-

(2)

Weak sparsity: the first elements of equal to while the rest elements equal to 0.

In this experiment, we set , and . We choose the autocorrelation level and the signal to noise ratio , respectively. For each scenario, we simulate 100 replications. Additional results for and a real data analysis are relegated to the supplemental material. Besides the false positives (FP) and false negatives (FN) defined in Section 5.2, we measure the prediction performance of each method by the relative test error (RTE):

where is a testing observation which is i.i.d. with the training sample . It is easy to see that RTE is lower bounded by 1 and the smaller the better.

Figures 7 and 8 present box-plots of FP, FN and RTE over 100 replications under strong sparsity and weak sparsity settings, respectively. According to the box-plots, none of the 5 competing methods dominate the others in terms of all three measurements, which is inline with the discussions in Hastie et al., (2020). Generally speaking, LASSO tends to select generous models which may have small FNs but large FPs. Forward stepwise and L0Learn prefer to select parsimonious models which may lead to small FPs but large FNs. However, none of the three methods can be considered as a good approximation of BSS since their performance are distinct in most scenarios. In contrast, QAS performs almost identical to BSS in terms of all three measurements in every scenario.

Next, we take a closer look at best subset selection behaviors of the 5 comparison methods. We simulate 1,000 replications of (18) with , , , , , and the strong sparsity . In the top panel of Figure 9, we present the histogram of selected model sizes for 5 methods. In the bottom panel of Figure 9, we report the box-plots of RTSs for 5 methods. According to Figure 9, the selected model sizes of QAS and BSS sharply concentrates around the truth, i.e. . The histograms of Forward stepwise and L0Learn are severely left skewed which indicates they tend to underestimate the size of the active set. In contrast, LASSO has a right skewed histogram and hence often select oversized models. Again, the selection as well as prediction performances of QAS and BSS are almost identical. The results in Figure 9 further justified our argument that QAS is an efficient quantum alternative of BSS while Forward stepwise, LASSO and L0Learn are not ideal approximates of BSS.

7 Conclusion

In this paper, we investigated the solution of the best subset selection problem in a quantum computing system. We proposed an adaptive quantum search algorithm that does not require the oracle information of the solution state and can significantly reduce the computational complexity of classical best subset selection methods. An efficient quantum linear prediction method was also discussed. Further, we introduced a novel hybrid quantum-classical strategy to avoid the hardware bottleneck of existing quantum computing systems and boost the success probability of quantum adaptive search. The quantum adaptive search is applicable to various best subset selection problems. Moreover, our study opens the door to a new research area: reinvigorating statistical learning with powerful quantum computers.

References

- Abrams and Lloyd, (1997) Abrams, D. S. and Lloyd, S. (1997). Simulation of many-body fermi systems on a universal quantum computer. Physical Review Letters, 79(13):2586.

- Beale et al., (1967) Beale, E., Kendall, M., and Mann, D. (1967). The discarding of variables in multivariate analysis. Biometrika, 54(3-4):357–366.

- Bennett et al., (1997) Bennett, C. H., Bernstein, E., Brassard, G., and Vazirani, U. (1997). Strengths and weaknesses of quantum computing. SIAM journal on Computing, 26(5):1510–1523.

- Bertsimas et al., (2016) Bertsimas, D., King, A., and Mazumder, R. (2016). Best subset selection via a modern optimization lens. The Annals of Statistics, 44(2):813–852.

- Boyer et al., (1998) Boyer, M., Brassard, G., Høyer, P., and Tapp, A. (1998). Tight bounds on quantum searching. Fortschritte der Physik: Progress of Physics, 46(4-5):493–505.

- Breiman, (1996) Breiman, L. (1996). Heuristics of instability and stabilization in model selection. The Annals of Statistics, 24(6):2350–2383.

- Byrnes and Yamamoto, (2006) Byrnes, T. and Yamamoto, Y. (2006). Simulating lattice gauge theories on a quantum computer. Physical Review A, 73(2):022328.

- Candes and Tao, (2007) Candes, E. and Tao, T. (2007). The dantzig selector: Statistical estimation when p is much larger than n. The Annals of Statistics, 35(6):2313–2351.

- Chen and Donoho, (1994) Chen, S. and Donoho, D. (1994). Basis pursuit. In Proceedings of 1994 28th Asilomar Conference on Signals, Systems and Computers, volume 1, pages 41–44. IEEE.

- Draper and Smith, (1966) Draper, N. and Smith, H. (1966). Applied regression analysis. j. wiley & sons inc., ny.

- Efroymson, (1960) Efroymson, M. (1960). Multiple regression analysis. Mathematical methods for digital computers, pages 191–203.

- Fan et al., (2020) Fan, J., Guo, Y., and Zhu, Z. (2020). When is best subset selection the "best"?

- Fan and Li, (2001) Fan, J. and Li, R. (2001). Variable selection via nonconcave penalized likelihood and its oracle properties. Journal of the American Statistical Association, 96(456):1348–1360.

- Fan et al., (2014) Fan, J., Xue, L., and Zou, H. (2014). Strong oracle optimality of folded concave penalized estimation. The Annals of Statistics, 42(3):819.

- Grover, (1997) Grover, L. K. (1997). Quantum mechanics helps in searching for a needle in a haystack. Physical Review Letters, 79(2):325.

- Hallgren, (2002) Hallgren, S. (2002). Polynomial-time quantum algorithms for pell’s equation and the principal ideal problem. In Proceedings of the thiry-fourth annual ACM symposium on Theory of computing, pages 653–658.

- Harrow et al., (2009) Harrow, A. W., Hassidim, A., and Lloyd, S. (2009). Quantum algorithm for linear systems of equations. Physical Review Letters, 103(15):150502.

- Hastie et al., (2020) Hastie, T., Tibshirani, R., and Tibshirani, R. (2020). Best subset, forward stepwise or lasso? analysis and recommendations based on extensive comparisons. Statistical Science, 35:579–592.

- Hazimeh and Mazumder, (2018) Hazimeh, H. and Mazumder, R. (2018). Fast best subset selection: Coordinate descent and local combinatorial optimization algorithms. Operations Research, 68.

- Hocking and Leslie, (1967) Hocking, R. and Leslie, R. (1967). Selection of the best subset in regression analysis. Technometrics, 9(4):531–540.

- Hu and Wang, (2020) Hu, J. and Wang, Y. (2020). Quantum annealing via path-integral monte carlo with data augmentation. Journal of Computational and Graphical Statistics, pages 1–13.

- Jansen et al., (2007) Jansen, S., Ruskai, M.-B., and Seiler, R. (2007). Bounds for the adiabatic approximation with applications to quantum computation. Journal of Mathematical Physics, 48(10):102111.

- Jordan, (2005) Jordan, S. P. (2005). Fast quantum algorithm for numerical gradient estimation. Physical Review Letters, 95(5):050501.

- Kandala et al., (2019) Kandala, A., Temme, K., Córcoles, A. D., Mezzacapo, A., Chow, J. M., and Gambetta, J. M. (2019). Error mitigation extends the computational reach of a noisy quantum processor. Nature, 567(7749):491–495.

- Kassal et al., (2008) Kassal, I., Jordan, S. P., Love, P. J., Mohseni, M., and Aspuru-Guzik, A. (2008). Polynomial-time quantum algorithm for the simulation of chemical dynamics. Proceedings of the National Academy of Sciences, 105(48):18681–18686.

- Lloyd et al., (2014) Lloyd, S., Mohseni, M., and Rebentrost, P. (2014). Quantum principal component analysis. Nature Physics, 10(9):631–633.

- Natarajan, (1995) Natarajan, B. K. (1995). Sparse approximate solutions to linear systems. SIAM journal on computing, 24(2):227–234.

- Nielsen and Chuang, (2010) Nielsen, M. A. and Chuang, I. L. (2010). Quantum Computation and Quantum Information. Cambridge University Press.

- Rebentrost et al., (2014) Rebentrost, P., Mohseni, M., and Lloyd, S. (2014). Quantum support vector machine for big data classification. Physical Review Letters, 113(13):130503.

- Schuld and Petruccione, (2018) Schuld, M. and Petruccione, F. (2018). Supervised Learning with Quantum Computers. Springer.

- Schuld et al., (2016) Schuld, M., Sinayskiy, I., and Petruccione, F. (2016). Prediction by linear regression on a quantum computer. Physical Review A, 94(2):022342.

- Shen et al., (2012) Shen, X., Pan, W., and Zhu, Y. (2012). Likelihood-based selection and sharp parameter estimation. Journal of the American Statistical Association, 107(497):223–232.

- Shor, (1994) Shor, P. W. (1994). Algorithms for quantum computation: discrete logarithms and factoring. In Proceedings 35th annual symposium on foundations of computer science, pages 124–134. IEEE.

- Shor, (1999) Shor, P. W. (1999). Polynomial-time algorithms for prime factorization and discrete logarithms on a quantum computer. SIAM review, 41(2):303–332.

- Sung et al., (2019) Sung, Y., Beaudoin, F., Norris, L. M., Yan, F., Kim, D. K., Qiu, J. Y., von Lüpke, U., Yoder, J. L., Orlando, T. P., Gustavsson, S., et al. (2019). Non-gaussian noise spectroscopy with a superconducting qubit sensor. Nature Communications, 10(1):1–8.

- Szegedy, (2004) Szegedy, M. (2004). Quantum speed-up of markov chain based algorithms. In 45th Annual IEEE symposium on foundations of computer science, pages 32–41. IEEE.

- Tibshirani, (1996) Tibshirani, R. (1996). Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society: Series B (Methodological), 58(1):267–288.

- Van Dam and Shparlinski, (2008) Van Dam, W. and Shparlinski, I. E. (2008). Classical and quantum algorithms for exponential congruences. In Workshop on Quantum Computation, Communication, and Cryptography, pages 1–10. Springer.

- Wang, (2017) Wang, G. (2017). Quantum algorithm for linear regression. Physical review A, 96(1):012335.

- Wang, (2011) Wang, Y. (2011). Quantum monte carlo simulation. The Annals of Applied Statistics, 5(2A):669–683.

- Welch, (1982) Welch, W. J. (1982). Algorithmic complexity: three np-hard problems in computational statistics. Journal of Statistical Computation and Simulation, 15(1):17–25.

- Wiebe et al., (2012) Wiebe, N., Braun, D., and Lloyd, S. (2012). Quantum algorithm for data fitting. Physical Review Letters, 109(5):050505.

- Wocjan et al., (2009) Wocjan, P., Chiang, C.-F., Nagaj, D., and Abeyesinghe, A. (2009). Quantum algorithm for approximating partition functions. Physical Review A, 80(2):022340.

- Yuan and Lin, (2007) Yuan, M. and Lin, Y. (2007). On the non-negative garrotte estimator. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 69(2):143–161.

- Zhang, (2010) Zhang, C.-H. (2010). Nearly unbiased variable selection under minimax concave penalty. The Annals of Statistics, 38(2):894–942.

- Zhao and Yu, (2006) Zhao, P. and Yu, B. (2006). On model selection consistency of lasso. Journal of Machine learning research, 7(Nov):2541–2563.

- Zhao et al., (2019) Zhao, Z., Fitzsimons, J. K., and Fitzsimons, J. F. (2019). Quantum-assisted gaussian process regression. Physical Review A, 99(5):052331.

- Zou, (2006) Zou, H. (2006). The adaptive lasso and its oracle properties. Journal of the American Statistical Association, 101(476):1418–1429.

- Zou and Hastie, (2005) Zou, H. and Hastie, T. (2005). Regularization and variable selection via the elastic net. Journal of the Royal Statistical Society: Series B (statistical methodology), 67(2):301–320.