This is the author’s version of the article that has been accepted in CSCW 2023 and will be published in PACMHCI

Belief Miner: A Methodology for Discovering Causal Beliefs and Causal Illusions from General Populations

Abstract.

Causal belief is a cognitive practice that humans apply everyday to reason about cause and effect relations between factors, phenomena, or events. Like optical illusions, humans are prone to drawing causal relations between events that are only coincidental (i.e., causal illusions). Researchers in domains such as cognitive psychology and healthcare often use logistically expensive experiments to understand causal beliefs and illusions. In this paper, we propose Belief Miner, a crowdsourcing method for evaluating people’s causal beliefs and illusions. Our method uses the (dis)similarities between the causal relations collected from the crowds and experts to surface the causal beliefs and illusions. Through an iterative design process, we developed a web-based interface for collecting causal relations from a target population. We then conducted a crowdsourced experiment with 101 workers on Amazon Mechanical Turk and Prolific using this interface and analyzed the collected data with Belief Miner. We discovered a variety of causal beliefs and potential illusions, and we report the design implications for future research.

1. Introduction

In the view of psychology, a belief is “the mental acceptance or conviction in the truth or actuality of some idea” (Schwitzgebel, 2011). Accordingly, a causal belief is a belief about one or more factors that are thought to cause or contribute to the development of a certain phenomenon, such as an illness or the outcome of an intervention. A causal belief is not restricted to a single relation; it can embrace entire causal mechanisms. As Blanzieri (Blanzieri, 2012) writes, causal beliefs are halfway between actual knowledge about a physically objective reality and a socially-constructed reality. They are different from causality and causal inference which are strictly derived from hard data via well-defined statistical principles (Blanzieri, 2012).

An interesting phenomenon is that of causal illusion which occurs when people develop the belief that there is a causal connection between two events that are in fact just coincidental (Matute et al., 2015). Causal illusions are the underpinnings of pseudoscience and superstitious thinking and they can have disastrous consequences in many critical areas such as health, finances, and well-being (Freckelton, 2012). A mild form of a causal illusion is the good-luck charm that people carry to special events or in general, but more serious are bogus medicines that can inhibit people from taking up scientifically more credible treatments to restore or preserve their health.

The first step towards combating a causal illusion is to detect it. Researchers typically use contingency judgement tasks for this purpose (Matute et al., 2015). In these experiments, participants are exposed to a number of trials (around 50) in which a given cause is present or absent, followed by the presence or absence of a potential outcome. At the end of the experiment, participants are asked to judge the causal relations between the cause and the effect (or outcome). While effective, these experiments have several limitations: 1) they lack a mechanism to expose complex causal structures (with many links and chains) beyond a single cause and effect; 2) they are unable to elicit complex cognitive conflicts such as how people fare with competing causes to the same effect; and 3) they are highly controlled laboratory studies and logistically expensive to conduct. We believe that crowd-sourcing these activities can provide a powerful alternative tool to address these challenges.

We propose Belief Miner, a crowdsourcing methodology for discovering causal beliefs and illusions from the general population. While prior works have showcased the capability of crowdsourcing in identifying causal relations (Berenberg and Bagrow, 2018; Yen et al., 2021, 2023), they often approach the topic from a predominantly data-driven perspective, focusing either on creating large networks or training datasets for Causal ML. Prior works were not designed to model contingency judgment tasks and identify causal illusions using crowdsourcing. “Belief Miner” aims to fill this gap.

Our methodology draws on the rich literature in psychology, causal illusion, and crowdsourcing (Section 2, 3, and 4). Informed by the literature, our method uses crowdsourcing to collect a dataset of causal relations from a general population on a topic of interest and contrasts these with causal relations obtained from domain experts to understand causal beliefs and detect illusions via several metrics and mechanisms we propose. Thus, Belief Miner also offers a nuanced and formalized post-hoc analysis (Berenberg and Bagrow, 2018) in causal crowdsourcing, which current studies and systems lack (Berenberg and Bagrow, 2018; Yen et al., 2021, 2023).

To validate our method, we designed an interactive web-based tool that would allow crowd workers to interactively create small causal networks (and alter their created network if needed) from a set of variables. We then conducted a formative study with 94 crowd workers on Amazon Mechanical Turk (AMT). We asked participants to create causal relations between randomly chosen variables relevant to climate change. We selected the theme of climate change due to its propensity for controversial views and potential to expose causal illusions. For instance, in a study conducted in 2019 (Leiserowitz et al., 2019), a slight majority (59%) of Americans believed climate change is human-caused, and nearly a third (30%) believed that natural variability is the primary cause. This belief and confusion can be harmful to necessary policy-making to counter climate change (Fleming et al., 2021).

As mentioned, in addition to the crowdsourced data, we also collected causal relations from a group of domain experts (e.g., climate scientists). Then, with both datasets in place, we employed our method on them to discover causal illusions. While the results were generally positive, we identified two issues: 1) there was a moderate possibility of selection bias due to the order in which the variables were presented to the participants; and 2) the completion time was longer than expected which pointed to possible usability issues.

Based on the findings of this formative study, we revised the design of the interface and the experimental protocol. Using the revised design, we conducted another study with 101 crowd workers from AMT and Prolific. We observed a stronger alignment between the causal beliefs of the crowd and the experts and a reduced completion time of the crowdworkers compared to the formative study. Our findings also reveal various discrepancies between the causal relations created by crowd workers and experts. We observe 1) that a significant number of workers overestimated the impact of certain attributes, and 2) that participants with flawed causal beliefs (i.e., illusions) assigned lower confidence scores to their networks in both studies, suggesting that our mechanism effectively counters illusion and has the potential to increase awareness among individuals.

In summary, our contributions are as follows: 1) Belief Miner, a methodology that includes a web-based interactive system and evaluation method for discovering causal beliefs and illusions; and 2) Two crowdsourcing studies on Amazon Mechanical Turk and Prolific with 94 and 101 crowd workers. The collected data from the experiment shows an application of our methodology in the domain of climate change.

2. Background: Causal Belief and Causal Illusion

In this section, we will provide the background that has guided our research and development. We will build on a principal metric, the index (Allan, 1980), which researchers use to design the level of contingency in an experiment.

In the simplest case, there is one potential cause and one observed outcome . Given these two variables there are then four possible configurations: 1) both and occur, 2) occurs but may not, 3) may not occur but still does, and 4) neither nor occur. We can capture these four configurations into the contingency table shown in Table 1. is defined as follows (Allan, 1980; Matute et al., 2015):

| Outcome present | Outcome not present | |

|---|---|---|

| Cause present | ||

| Cause not present |

| Trial Matrix 1 | Trial Matrix 2 | |||||

| Outcome present | Outcome not present | Probabilities | Outcome present | Outcome not present | Probabilities | |

| Cause present | 80 | 20 | 20 | 80 | ||

| Cause not present | 20 | 80 | 80 | 20 | ||

| Trial Matrix 3 | Trial Matrix 4 | |||||

| Outcome present | Outcome not present | Probabilities | Outcome present | Outcome not present | Probabilities | |

| Cause present | 80 | 20 | 20 | 80 | ||

| Cause not present | 80 | 20 | 20 | 80 | ||

A true causal relationship exists when is non-zero. When is positive then is said to promote , while when is negative is said to inhibit .

The contingency table gives rise to four distinct cases of trial matrices shown in Table 2. In the first case, , might be an evidence-based medicine to treat a cold and is the disappearance of the cold. This is shown in trial matrix 1 where row 1 is the treatment that divides the response of a cohort of patients who took the medicine, while row 2 is the control that divides the response of a cohort of patients who did not take the medicine and instead took a placebo.

In the second case, , might be an effective medicine to control a person’s cholesterol level, and is the cholesterol level that remains in check and will not rise. This case is illustrated in trial matrix 2 which is trial matrix 1 transposed.

Trial matrix 3 is one for which , which occurs when –- the null contingency. In this case, the treatment has no effect on the outcome. A patient may take the cold medicine or not, but the cold will always disappear on its own. A classic example of this scenario is alternative (homeopathic) medicine which typically lacks strong scientific evidence for its effectiveness (Health and Council, 2015; Singh and Ernst, 2008).

The symmetric case of trial matrix 4 is analogous. A person with no risk of high cholesterol might take, inspired by effective product marketing, a scientifically unproven medicine and feel confirmed in that choice when the level stays normal.

2.1. When a Causal Illusion Weakens Trust in a Proven Cause

Having formed a null contingency about a certain phenomenon can lead people to discount a true causal relationship of a scientifically acknowledged cause for the same outcome. As Matute et al. (Matute et al., 2015) write: “The availability of more than one potential cause can result in a competition between both causes so that if one is considered to be a strong candidate, the other will be seen as a weak one.” This was verified via several experiments where it was found that participants who had established a prior belief about the effectiveness of an unproven bogus medicine – a causal illusion – weakened the belief in the effectiveness of a proven medicine. In contrast, participants who did not have a chance to develop the illusion had sustained trust in the proven medicine (Vadillo et al., 2013).

Experiments have shown that the strength of a causal illusion can be effectively controlled by the frequency at which the cause is present, even when its effectiveness to drive the outcome remains the same. This has important implications on a person’s belief in a proven medicine. The more frequently the competing (alternative) medicine has been administered and a confirmatory (yet specious) outcome has been experienced, either now or in the past, the smaller the belief in the proven medicine (Yarritu et al., 2015).

An effective way to convince people that they have fallen victim to the null contingency is to ask them not to take the treatment when they hope for an outcome to occur (Blanco et al., 2012). But this proves difficult when the cost of the treatment is low and the outcome is ubiquitous and persuasive. In fact, the ubiquity of both treatment and outcome are perfect conditions for a causal illusion to emerge (Vadillo et al., 2011; Yarritu et al., 2014). It fosters trust in the belief that there must be a causal relationship between the two since there are many opportunities for coincidences (Blanco et al., 2013).

While the examples given so far are relatively benign, there are more serious scenarios where these cognitive mechanisms can be harmful (Freckelton, 2012). A person with a natural medicine mindset, when receiving, say, a cancer diagnosis might resort to acupuncture, herbal treatments, fruit juice therapy, and spiritual consultations instead of seeking more conventional evidence-based interventions, such as surgery or radiation, and chemotherapy. This is what has been reported to have happened to Steve Jobs, the founder of Apple Computer and an exceptionally tech-savvy and forward-thinking individual (Cartwright, 2011). It vividly shows that gaining immunity from null contingencies is hard.

2.2. Gauging Causal Illusions: From the Lab into the Wild

Causal illusion has been of interest to researchers for a long time. It can reveal many cognitive and behavioral practices, as described in Section 2.1. To study different research questions, researchers typically tweak the trial matrices (Table 2) to create desired scenarios and then conduct control experiments based on that.

These experiments typically involve hypothetical scenarios set in the medical domain. Participants are asked to impersonate doctors who are assigned a set of fictitious computer-modeled patients (i.e., the total number of trials). In some scenarios, the participants take on an active role in prescribing medical treatments for some illnesses, while in others they simply observe the patients follow a certain treatment regime. As the experiment proceeds, participants see records of patients who either have or have not taken a certain medicine and then have or have not recovered from the disease (based on the trial matrices). At the end of the experiment, the participants are asked whether the treatment was effective or not (for example see (Matute et al., 2015)).

The experiments are meticulously designed to expose the triggers and impacts of causal illusions. They are highly controlled laboratory studies and focus on very simple and elementary causal relationships. Our crowd-based belief miner takes these studies out of the research laboratory into the wild where an abundance of data awaits, reflecting complex causal chains with many links and paths. There are also many unexpected cause and outcome variables that might be discovered when the crowd-sourced data are studied in depth. It is a tool by which complex cognitive conflicts can be efficiently extracted and exposed for real-life phenomena of possibly high complexity. While this approach cannot directly replicate the usage of trial matrices shown in Table 2, it can produce the conclusions that are typically derived from the experiments that use trial matrices. Furthermore, it is easy to set up for practitioners.

Another interesting aspect of our methodology is that it asks participants to engage in a critical assessment of their beliefs, by ways of reviewing the small causal network they construct. As we have already hinted at in the introduction, we found evidence of the implications of a theory proposed by Walsh and Sloman (Walsh and Sloman, 2004), who experimented with the concept of contradiction as a way to get people to revise their beliefs in a causal illusion. They suggest that a person might reduce their belief in the effectiveness of a certain treatment upon discovering that some elements of it have an outcome that is opposite of what they expected.

3. Related Work

Our goal in this paper is to discover causal beliefs and illusions using crowdsourcing. In this section, we discuss crowdsourcing, HCI, and CSCW concepts relevant to achieve that.

3.1. Crowdsourcing and Quality Control in Crowdsourcing

Bigham et al. (Bigham et al., 2015) identified three broad areas for collective intelligence and HCI. They are 1) directed crowdsourcing, where a single person or a group guides a large set of people to accomplish a task (e.g., labeling a large dataset (Kairam and Heer, 2016; Chang et al., 2017), CommunityCrit (Mahyar et al., 2018)); 2) collaborative crowdsourcing, where a group of people works together to accomplish a task (e.g., Wikipedia, Project Sidewalk (Saha et al., 2019), ConceptScape (Liu et al., 2018)); and 3) finally, passive crowdsourcing, where people do not coordinate and are not consciously aware of participating in a crowdsourced system, however, one can still mine their behavior to infer collective intelligence (e.g., mining search history).

In this paper, we mainly focus on directed crowdsourcing (referred to as crowdsourcing from here on) since we provided explicit direction to our crowd workers. In a crowdsourcing experiment, a crowd worker typically completes a small part of the overall task (i.e., micro-tasks) (Chung et al., 2019; Kittur et al., 2008, 2011). These micro-tasks are eventually aggregated for inferring collective intelligence. In our case, a micro-task refers to creating a causal network between a small number of attributes.

Over the years, several methods and tools have been proposed for measuring and increasing the efficacy of the design of the micro-tasks based crowdsourcing (Kittur et al., 2013, 2012; Rzeszotarski and Kittur, 2012; Bernstein et al., 2010; Kittur et al., 2011; Noronha et al., 2011). Of particular interest is Quality Control in crowdsourcing (Kittur et al., 2013), which ensures the validity of the collected response from crowd workers. This is important since crowd workers tend to spend minimum effort and sometimes try to game the systems (Kittur et al., 2013). The currently established methods for quality controls typically fall into two broad categories: task design and post-hoc analysis (Kittur et al., 2013). Examples for task design include fault-tolerant subtasks (Bernstein et al., 2010; Kittur et al., 2011; Noronha et al., 2011; Krishna et al., 2016), attention check (Agley et al., 2022), peer review filters (Dow et al., 2012; Kittur et al., 2011; Bernstein et al., 2010; Horton and Chilton, 2010), intelligent task assignment (Kim et al., 2018) and optimizing instructions (Kittur et al., 2008; Downs et al., 2010; Lasecki et al., 2015; Qiu et al., 2020). Examples for post-hoc analysis include comparison with gold standards (Downs et al., 2010; Callison-Burch, 2009; Berenberg and Bagrow, 2018), validation study (Saha et al., 2019), agreement between crowd workers (Callison-Burch, 2009; Ipeirotis et al., 2010), and behavior analysis (Rzeszotarski and Kittur, 2012; Robert and Romero, 2015; Gadiraju et al., 2015; Chiang et al., 2021; Yin et al., 2018).

Our work can be seen as a post-hoc analysis model for causal crowdsourcing. We use causal relations collected from experts (i.e., gold standards), a popular post-hoc method, to find illusions in the crowd-generated causal networks. Although relevant work in causal crowdsourcing (Berenberg and Bagrow, 2018) employed comparison with gold standards on a smaller scale, our work both scales up the approach and adds a nuanced dimension to the post-hoc analysis model tailored for causal crowdsourcing. This makes our contribution one of the first to delve into such depth and granularity in this domain. Finally, we believe our findings will guide future task designs for causal crowdsourcing. Appropriate task designs can make people self-aware and help them avoid falling victim to causal illusions. We lay down this future direction in our discussion (Section 11).

3.2. Causality and Crowdsourcing

While causality is a core concept across several scientific domains, designing crowdsourced experiments for collecting causal relations is a relatively new research area. Caselli et al. contributed to this area by focusing on annotating causal relations in narrative texts, specifically news data, through crowdsourcing experiments (Caselli and Inel, 2018). Their work analyzed parameters affecting annotation quality and compared crowdsourced and expert annotations, emphasizing the generation of structured data based on narrative strategies. The most relevant work in this space, however, is Iterative Pathway Refinement (Berenberg and Bagrow, 2018), a network search strategy where workers modify a short linear pathway between attributes. The authors showed that their method is more efficient than a single line-based micro task, provides better contexts to crowd workers, and the union of the pathways can create a large network. Yen et al. (Yen et al., 2021) extended this line of work by proposing CausalIDEA, an interactive interface where users can create small networks as well as provide textual explanations for creating specific causal relations. The causal diagrams and textual narratives add a new dimension to understanding causal beliefs. The authors investigated how a user’s causal perception is affected by seeing the causal networks created by others. Furthermore, Yen et al. introduced CrowdIDEA (Yen et al., 2023), a tool that integrates crowd intelligence and data analytics to support causal reasoning, featuring a three-panel setup: enabling access to crowd’s causal beliefs, data analytics, and the ability to draw causal diagrams. Their study also demonstrated that seeing the crowd’s causal beliefs significantly improved the accuracy of causal relationships in the final diagrams of the participants and reduced reliance on data analytics, showcasing the tool’s potential to enhance causal reasoning processes.

These prior works provide evidence that people can identify causal relations between pairs of events and collaboratively create causal networks that are information-rich. While inspiring, they consider causal crowdsourcing from an algorithmic or data science perspective, focusing either on creating large networks or training datasets for Causal ML (Kusner et al., 2017). In contrast, we consider causal crowdsourcing as a tool to surface how causal illusions persist in a domain, which prior works have largely overlooked (Berenberg and Bagrow, 2018; Yen et al., 2021).

While we do not focus on data science or machine learning, our work has implications for Causal ML. For example, failing to detect causal illusions and erroneous causal relations can introduce biases into datasets, leading to skewed decision-making based on inferred causal relations (Wang and Mueller, 2015; Hoque and Mueller, 2022; Ghai and Mueller, 2023). Broader ML literature has also demonstrated that ML models trained with datasets containing spurious relations or errors may perform poorly and yield incorrect inferences (Yapo and Weiss, 2018; Rastogi et al., 2022; Panch et al., 2019; Kusner et al., 2017).

In summary, the absence of mechanisms to detect causal illusions is a significant obstacle to the practical application of causal crowdsourcing in different domains, including social science and policy-making (domain of this work), causal ML, finances, and health. We aim to bridge this gap.

4. Method

In this section, we describe the evaluation method we have devised to help expose the potential causal illusions and the complex cognitive conflicts mentioned in Section 2.

4.1. Data

4.1.1. Causal Belief Data

Causal beliefs are conceptualized through the presence of a cause and an effect and their relationship in a certain phenomenon. Following the Structural Causal Model (SCM) (Pearl and Mackenzie, 2018), we define a causal relationship through a directed link/edge between the cause and effect (cause effect). Therefore, gathering causal relations from a domain of interest is synonymous with identifying the causal edges among a collection of relevant attributes that form an interconnected directed causal network (Berenberg and Bagrow, 2018).

Let us define a causal network with nodes and links . Here, is a set of causal attributes and , where is a unidirectional causal relationship between attributes and .

4.1.2. Ground Truth

Comparison with gold standards or ground truth is common in crowdsourcing (Downs et al., 2010; Callison-Burch, 2009; Berenberg and Bagrow, 2018). In our case, ground truth refers to scientifically verified causal relations. However, establishing ground truth in a complex domain such as climate change where many interconnected factors may exist, can be a demanding task. We propose the following collaborative method with two phases to establish ground truth for all possible causal links.

Phase1: Independent Ground Truth Generation. First, a fixed number of experts are instructed to create their version of the causal networks independently using all the attributes. We propose a minimum of three experts. We require experts to provide scientifically verified references to the created relations.

Phase2: Collaborative Meeting. After the network creation phase, the union of their networks is considered for the discussion phase. Here, the experts work collaboratively to assign credibility scores for all possible causal links in the unified network. Credibility scores denote the level of validity for a specific causal link. We chose this notion since there can be “levels” of correctness for a specific causal relation instead of them being just right or wrong. This can also be described as the strength of a causal relationship. The notion is motivated by mediation analysis in causality (MacKinnon et al., 2007). According to mediation analysis (MacKinnon et al., 2007), in a causal network, an attribute may have a direct effect on another attribute as well as indirect effects through other attributes (mediating attributes). Thus, the number of mediating attributes between two attributes indicates the strength of the causal relationship.

Based on this observation, we propose four levels for the credibility scores: all links that did not appear in any experts’ causal network can be assigned the lowest possible credibility score (0), and the links that appear in all of them can be assigned the highest credibility score (3). Finally, the rest of the links are assigned a score of 1 or 2 after expert deliberation, depending on the number of mediating variables present in between.

4.2. Metrics

We offer two angles of evaluation or analysis of the collected causal belief data: 1) an aggregated quantitative and qualitative overview of the causal beliefs; and 2) causal illusion detection. The metrics required are defined below:

4.2.1. Aggregated Evaluation:

Aggregated statistics such as the distribution of total votes are common in crowdsourcing (Kittur et al., 2013), including causal crowdsourcing (Berenberg and Bagrow, 2018; Yen et al., 2021). They are useful for obtaining an overview of the data and determining outliers. In addition to the total votes, we also examine the distribution for the Average Network Credibility Score (ANC). We calculate the average network credibility (ANC) score using the following equation:

Here, is a small causal network created by an individual, and is the credibility score (from Section 4.1.2) of link present in network . Thus, can be calculated for each network created by separate individuals, and their distribution will indicate the credibility for the networks created by people.

4.2.2. Causal Illusion Detection

In Section 2, we define Causal Illusion as the incorrect assumption of cause and effect in a certain phenomenon. Thus, causal illusion inherently denotes a discrepancy between people’s causal beliefs and the ground truth.

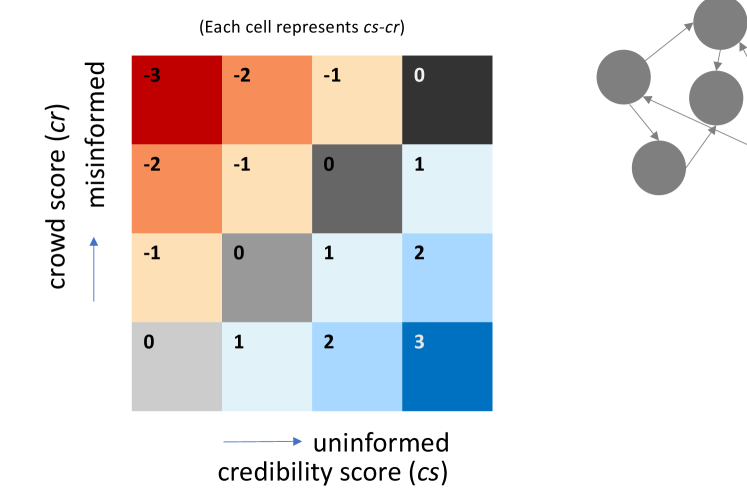

We define two types of causal illusions that utilize ground truth data and the causal belief data collected from the people/crowd. We represent the crowd data using the crowd score (cr) (a score representing the crowd’s inclination toward a specific causal link) and the ground truth data using the credibility score (cs). There are several ways the crowd score (cr) can be calculated, such as using the normalized total votes assigned to a causal link by the crowd.

Let us suppose we have a causal link with a crowd score of and a credibility score (from ground truth) of . There can be two potential cases of causal illusion;

-

•

Where the crowd had a stronger inclination toward a causal link with a comparatively lower credibility score, i.e., . We define this state as being potentially misinformed. While we do not investigate the reasons behind this, they can indicate the consumption of less credible sources, shallow reading practices, and denial of climate change (Today, 2022).

-

•

Where the crowd had a weaker inclination toward a causal link with a comparatively higher credibility score, i.e., . We define this state as the state of being potentially uninformed/oblivious. The cause behind obliviousness can be a lack of knowledge regarding that specific topic, i.e., people genuinely do not know about it enough to vote for it.

5. Initial Interface

Our overarching goal in designing the interface was to foster critical thinking about a topic of interest (e.g., climate change) while ensuring ease of use in creating causal networks. We felt the necessity to develop our own data collection tool since there is no open-source tool available for causal belief collection. In pursuit of this objective, we employed an iterative design process with specific design goals to continually refine and improve the interface based on evolving needs.

Figure 1 shows a screenshot of the collection interface. It shows a case where a crowd worker is in the process of making a causal network.

5.1. Design Goals

In this section, we provide the initial design goals for the interface. Some part of the interface loosely follows the methodology proposed in prior works (Berenberg and Bagrow, 2018; Yen et al., 2021): people create small causal networks to demonstrate their beliefs, and then the small networks are aggregated into a large causal network. However, we non-trivially enhanced the methodology to meet the following design goals:

DG1. Interactively Create and Modify Causal Networks.

Interactive visual interfaces can enhance people’s understanding and decision-making of complex systems (Dietvorst et al., 2018). We decided to utilize an interactive visual interface based on a Directed Acyclic Graph (DAG) to represent causal networks, enabling users to freely choose attributes and their causal relations. The interface should provide modification controls, such as changing link directions, to allow users to refine networks.

DG2. Use of Natural Language to Narrate Causality

Natural language texts are used in conjunction with visual representations to provide explanations and enhance comprehension of causal networks (Yen et al., 2021). This inspired us to use natural language as an explanation along with the interactive visual representations (Choudhry et al., 2021). The narrative component should clarify potential confusion arising from graphical representations.

DG3. Quick Completion Time

One important design goal in crowdsourcing studies is to ensure quick completion time to enhance the efficiency of data collection (Kittur et al., 2013). By minimizing the time required for participants to create, modify, and evaluate causal networks, we want to harness the crowd’s collective intelligence more effectively and gather a larger volume of diverse causal networks for analysis and insights.

5.2. Processing Raw Data and Generating Causal Attributes

Before collecting causal beliefs, the set of single/multi-word attributes relevant to the domain must be identified. Experiments with techniques purposed to automatically extract attributes specific to “climate change” (our demonstration domain) from relevant text documents were only mildly successful. To identify the relevant attributes for our domain (climate change), we manually extracted them from reputable climate-related sources 111 https://www.climaterealityproject.org/blog/key-terms-you-need-understand-climate-change https://www.climaterealityproject.org/blog/10-indicators-that-show-climate-change https://opr.ca.gov/facts/common-denier-arguments.html. We also determined specific words to represent upward and downward trends, ensuring natural comprehension, such as “fewer (human activities)”, “less (methane)”, and “decreasing (solar radiation)”. This process yielded a table of 17 attributes with trend terms (34 attributes in total, combining trends with attributes). We then validated these attributes via an informal discussion session with three climate science experts from our university. All experts hold PhDs in relevant fields and have been conducting climate science research for at least ten years. All agreed that the attributes are of importance to any climate science expert, and understanding people’s perceptions about them is crucial.

In addition to extracting the attributes, we also use Word2Vec (Mikolov et al., 2013), a neural word embedding model trained on the English Wikipedia corpus containing many climate-related documents, to compute attribute coordinates based on semantic distances. We used these coordinates to lay out the variables in the 2D space of the initial interface (Section 5.3). The Word Mover Distance (WMD) was employed for multi-word attributes, calculating the minimum distance words need to travel between documents. We also explored other text embedding models, including BERT (Devlin et al., 2018), RoBERTa (Liu et al., 2019), and GloVe (Pennington et al., 2014). After a discussion with the research team, we found Word2Vec’s embeddings to be the most suitable option.

We note that we did not utilize the Word2Vec coordinates in our final and redesigned interface because we adopted a different layout approach (Section 8.1).

5.3. Collection Interface Modules

The collection interface is a web-based interface implemented using Python as the back-end language and D3 for visualization (Bostock et al., 2011). We used MongoDB as a database for our collected results. The input to the interface is a dataset containing nodes of a causal network () where the edge list () is unknown. Thus, this interface is our tool to infer from the crowd. The detail of the input dataset is provided in Section 5.2. We describe the visual component of the several interface modules in the following sections. The modules are independent and can be implemented according to the intended sequential workflow. Snapshots of each module are provided as supplemental material. We further describe our workflow specific to the experimental setup later in Section 6.

5.3.1. Instructions and Overview Module

This module provides an overview of the interface and study tasks as a step-by-step guide, with necessary explanations and instructions for each step. It also presents necessary pictures of each page that people will encounter in their workflow.

5.3.2. Demographics Survey Module

This module collects participants’ demographics and their perception of domain-specific (e.g., climate change) knowledge and awareness. We currently support multiple-choice and Likert scale questions in this module.

5.3.3. Causal Network Creation Module

The main module in the tool allows participants to create causal relations between pairs of attributes to build a small causal network (DG1). The module follows DG1 and DG2 principles, visualizing causal links as node connections and describing them in natural language. The attributes are presented as circular nodes in the interface, positioned based on their word-vector space. The order of the attributes’ appearances is pre-determined. Therefore, all individuals will see the attributes in the same order. We did this to observe the difference in people’s perceptions given the same set of choices. This makes people’s perception a random variable in our experiment instead of the order of the attribute’s appearance.

Participants perform two micro-tasks per causal link: choosing trends for attributes (e.g., increasing and decreasing for the attribute CO2) and selecting the causal relationship between them. This process is repeated in multiple rounds to create the network (Figure 1). Following (Berenberg and Bagrow, 2018), we decided to keep a narrative flow in the causal network. Therefore, after the first round, a participant needs to create a causal link between any previously chosen attribute and one new attribute. Participants need to make three different causal networks. Except for the first network, participants are presented with a mix of previously used and new attributes when creating a causal network. This ensures reduced learning requirements, which contributes to shorter completion times (DG3).

5.3.4. Alteration Module

Following DG1, we developed this module to allow people to alter the network they have created by modifying each network link. The available options are (1) changing the originally selected link direction or (2) deleting the link entirely. This module always appears after the Creation Module.

5.3.5. Interpretation and Evaluation Module

In this final step, each individual is asked to evaluate their created (and possibly altered) network. Following DG2, we provided a narration of the network. To generate this narration, we combine a graph traversal algorithm with a reasonably simple text template to translate the network into a textual narration. The module then provides people with the opportunity to evaluate their created network. The evaluation is collected as a confidence level on a 5-point Likert scale. This module always appears after the Alteration Module.

5.3.6. Usability Rating Module

The purpose of this module is to provide participants with the opportunity to evaluate the complete data collection interface. Following the System Usability Scale (SUS) (usability.gov, [n. d.]), we present each individual with five usability-related statements. We also provide them with two knowledge-related statements to measure their evaluation of the interface from the perspective of gaining knowledge. A primary goal of the knowledge/learning-related statements was to gauge active thinking’s effects by creating a causal network on people’s original perceptions. The positive and negative usability statements are presented in alternating order. We mention the seven statements as supplemental materials.

6. Formative Study

We recruited crowd workers from Amazon Mechanical Turk (AMT) to collect causal perceptions on climate change. They used the initial collection interface to create small causal networks. We include all study materials in the supplement.

Crowd Workers’ Demographics and Expertise Level Regarding Climate Change

We needed to discard the work of 4 workers due to incompleteness, which led us to have 94 valid workers. We present various aspects of the crowd workers’ demographics in Figure 2. The majority of the crowd workers happened to be male, white, and within the age group of 20-40. More than half of the crowd workers finished their bachelor’s degrees, and more than two-thirds are employed for wages. Geographically, we only collected results from the United States. A significant portion of the crowd workers is from the Southern region of the United States.

Figure 2 shows a collection of bar charts, each containing statistics of various demographic variables. The variables in order from the top-left are: Gender, Age Group, Employment Status, Marital Status, Region, Ethnicity, and Education Status.

We also examine the self-reported knowledge and agreement levels of crowd workers regarding climate change-related attributes and statements. Around 49% the of participants consider themselves knowledgeable about climate change attributes. Additionally, there is a strong agreement among participants with climate change-related statements (around 90%), indicating a belief in climate change. Further details can be found in Appendix A.1.

Protocol

We used the “external HIT” function on AMT, where the interface hosted on our server was accessible to the workers. Therefore, our interface did not require the crowd workers to log in and provide personal information beforehand. We only accepted the results when the crowd workers completed every task in the workflow. The successful crowd workers were paid $2.75 each upon completion. We initially estimated the work would take around 15 minutes. However, the average time taken by the workers was around 30 minutes, according to the AMT website. We provide the step-wise protocol below:

-

(1)

Read the instructions and pass the test: In the “Instructions and Overview Module” (Section 5.3.1), the crowd workers received a step-by-step guide and explanations of each interface module. They had the option to revisit previous pages and restart if needed. A test required them to demonstrate their understanding, and they could retry it to improve their comprehension.

-

(2)

Complete the demographics survey: In the “Demographics Survey Module” (Section 5.3.2), the crowd workers answered 11 questions about their ethnicity, gender, marital status, geographical location (state), education, employment status, age group, knowledge, concern, and agreement towards climate change. They had the option not to provide their information for each demographic question.

-

(3)

Create a causal network: The crowd workers used the “Causal Network Creation Module” (Section 5.3.3) to build a small causal network. They created five causal links by (i) selecting two attributes along with their trends (e.g., “CO2” with an “increasing” or “decreasing” trend) and (ii) choosing the causal relationship between the selected attributes (e.g., increasing emissions leads to increasing CO2). This process involved two micro-tasks for each link creation. After the first two links, the workers are asked to select a new node that has not been selected before and an already selected node in order to create a new causal relation, adding to the emerging small network.

-

(4)

Alter causal network: In the “Alteration Module” (Section 5.3.4), the crowd workers could modify their created causal network. They selected a link, left-clicked on it, and chose from available options for alteration, including deletion, changing the direction, or no modification.

-

(5)

Evaluate causal network: Crowd workers used the “Interpretation and Evaluation Module” (Section 5.3.5) to review and evaluate their created causal network. They could view it in a node-link diagram or Directed Acyclic Graph (DAG) format and read it in natural language text. Additionally, they provided a confidence level for each network on a scale of 1 to 5.

-

(6)

Repeat Task 3-5 two more times: Crowd workers repeated the process of creating small networks, altering them, and evaluating them two more times. The interface provided necessary prompts and repetition for this task.

-

(7)

Evaluate the interface: After creating three causal networks, the crowd workers used the “Usability Rating Module” (Section 5.3.6) to rate the interface. They rated seven usability and learning statements on a 5-point Likert scale to provide feedback.

-

(8)

Verification and compensation: At the end of their participation, each crowd worker received a unique code. This code was used for result validation, filtering incomplete data, and providing compensation for their contribution.

7. Findings from the Formative Study

We collected three types of data in this experiment: demographic information (94 users), small causal networks (282 networks), and subjective feedback (94 users). The causal networks were saved as vectors containing causal links or (source node, target node) pairs, along with confidence scores tied to the worker identifier.

7.1. Establishing Credibility Scores

Figure 3 illustrates the examples of ground truth networks created by experts.

We assigned credibility scores to the causal links based on the methodology proposed in Section 4. Five experts, including two authors of this paper, participated in this stage. The other three experts are climate science experts from our university. They hold PhDs in relevant fields and have been conducting climate science research for at least ten years. Experts independently created their version of the causal networks using the 34 attributes. We provided them with climate change-related literature that is publicly accessible and is more likely to be used by the general population 222

https://climate.nasa.gov/

https://www.epa.gov/

https://www.noaa.gov/

https://www.climatecentral.org/

. Figure 3 shows the causal networks created by the experts. The ground truth establishment procedure yielded a dataset of all possible causal links and their credibility scores.Based on our proposed evaluation metrics in Section 4, we conduct an extensive analysis of the causal belief data collected during the experiment. The results are presented next.

Figure 4 demonstrates an adjacency matrix heatmap representation, where both rows and columns denote causal attributes and each cell shows the total number of votes of specific causal relations with numbers and color intensity.

7.2. Aggregated Evaluation

7.2.1. Combined Network and Total Votes Per Link

We combined all 282 small causal networks by counting the votes for each causal link. Following (Yen et al., 2021), we present the results as an adjacency matrix heat-map in Figure 4. The top-3 most voted links were, “more fossil fuel burning increasing CO2”, “increasing solar radiation more intense heatwaves”, and “increasing solar radiation increasing temperature” with 50, 49, and 46 votes, respectively. The combined network is sparse, and most relations have zero votes. The Pearson Correlation Coefficient between the total votes and the credibility scores for the causal links is 0.4 with , indicating a moderate consensus between experts and crowd.

7.2.2. Average Network Credibility Scores (ANC) and Average Confidence Score (AC)

Figure 5a shows the distribution of ANC scores. The majority of created networks were less credible , suggesting a shallow understanding of climate change topics and occasional worker reluctance. Additionally, Figure 5b displays the distribution of Average Confidence Scores. Less than 15% of cases showed low confidence, while nearly 57% indicated high confidence. These scores reflect the level of certainty associated with the crowd workers’ network creations.

The Aggregated Evaluation of the data we collected highlights the sparsity of the combined network, the tendency of less credible networks, and the presence of low confidence of the crowd workers in their own created network. These observations motivated us to dive deeper into the data and find the rationales behind them.

Figure 5b contains two histograms of Average Network Credibility Scores and Confidence scores on the created causal networks.

A closer look at the most popular causal relations revealed that widely acknowledged scientific facts were reflected in the highly voted relations. However, there were also spurious relations, such as “increasing solar radiation → more intense heatwaves” and “increasing solar radiation → increasing temperature”. The identified causal relations frequently lacked essential aspects of the climate change issue and resembled arguments used by climate change deniers who attribute it solely to natural factors like the sun (Global Climate Change, 2021). These findings also align with the results of (Leiserowitz et al., 2019), which indicate that people tend to view climate change as an effect of natural variability. It is essential to note that these unfocused relations received lower confidence scores than the more focused ones mentioned above (about 10% lower).

| Bogus Cause |

|

|

True Cause |

|

|

||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

|

(True) | (False) |

|

True | False | ||||||||

|

True | False |

|

False | True | ||||||||

| Bogus Cause |

|

|

True Cause |

|

|

||||||||

|

(True) | (False) |

|

True | False | ||||||||

|

True | False |

|

False | True |

7.3. Formulating the Trial Matrices

For the outcome variable “increasing temperature” (synonymous with “more intense heat waves” in climate science (Organization, [n. d.])), we identified two competing causes: the simple bogus cause “increasing solar radiation” and the true cause “more fossil fuel burning.” The trial matrices for both outcome variables, as shown in Table 3, can be constructed following a similar approach as in Table 2.

The left trial matrix represents the classic bogus cause of “increasing solar radiation.” We have added the parentheses to the former since this is a truly imaginary cause as the solar radiation is not really increasing. It indicates that regardless of whether solar radiation increases or not, there will be more intense heat waves. The second column for the opposite outcome, “less intense heat waves,” is set to false for both conditions since this outcome is not observed in real life or simulations. The trial matrix on the right represents the true cause of “more fossil fuel burning.” It is scientifically established that “more fossil fuel burning” causes “more intense heat waves,” while “less fossil fuel burning” eliminates the outcome. The second column, “less intense heat waves,” reflects the inverse relationship, as expected in a genuine causal relationship.

It is important to note that our crowd-sourced tool does not provide values for each cell in these trial matrices. Our focus is not on conducting formal experiments with complete matrices, but rather on designing experiments to assess the degree of causal illusion in a general population. We compare the magnitudes of the upper left cells in the trial matrices for the bogus and true causes to gauge the level of causal illusion. Most principled work on causal illusions also largely focuses on these types of results. Additionally, we identify critical links in the causal chain and assess potential knowledge gaps, which are discussed in Section 7.4.2.

7.4. Causal Illusion Detection

The low ANC scores in Section 7.2 indicate a lack of credibility among the crowd-created networks. We explore this further by analyzing the concept of Causal Illusion.

7.4.1. Causal Illusions

Figure 6 shows three different discrepancy networks and one legend table describing the link colors.

In Figure 6, we present the Discrepancy Network that reveals various levels of discrepancies between the crowd and ground truth networks. The discrepancy/illusion score is determined by subtracting the credibility score from the crowd score for each link. A non-zero discrepancy score indicates the presence of a causal illusion. The crowd score (cr) is calculated by normalizing the link’s total votes, considering the long-tailed distribution of causal links. To assign crowd scores (cs) to each link, we utilize a modified equal depth/frequency binning technique. Both crowd scores (cs) and credibility scores (cs) range from 0 to 3.

Each link color represents either the state of having a causal illusion (being misinformed or oblivious) or correct (no causal illusion). The colors for these links are coded by the legend on the right. The upper left colors label the state of being misinformed, the lower right colors label the state of being oblivious, and the grey diagonal labels scores where there was agreement. For the latter, we use shading to indicate credibility.

The dominance of misinformed links is evident, outnumbering oblivious and correct links. However, analyzing different discrepancy/illusion scores (Table 4) reveals that correct links constitute almost half of all links (139 out of 281). Notably, when considering significant/visible links, correct links still account for over a quarter (18 out of 61). The lowest grey level shading represents links with minimal votes and is not displayed in the discrepancy network.

| Type | Link Color | Discrepancy Score (cs-cr) | Count (All) | Count (Visible) |

| Misinformed | Red | -3 | 16 | 16 |

| Orange | -2 | 28 | 28 | |

| Yellow | -1 | 77 | 21 | |

| Correct | Grey(Darkest) | 0 (cs = 3) | 14 | 14 |

| Grey(Darker) | 0 (cs = 2) | 4 | 4 | |

| Grey | 0 (cs = 1) | 11 | 0 | |

| Grey(Light) | 0 (cs = 0) | 110 | 0 | |

| Oblivious | Blue(Very Light) | 1 | 16 | 3 |

| Blue(Light) | 2 | 4 | 0 | |

| Blue | 3 | 1 | 0 | |

| Total | 281 | 86 |

Table 4 contains the statistics of various discrepancy scores.

Consistently higher ratios of misinformed links emphasize the crowd’s vulnerability to misinformation compared to being oblivious/uninformed. This vulnerability is particularly evident in the prevalence of misconceptions related to increasing solar radiation among climate-change deniers (Figure 6). Furthermore, other interesting and noteworthy examples, along with more details and analyses, are provided in Appendix A.2. The occurrence of uninformed crowd judgments is negligible for significant causal links, underscoring the importance of debunking misinformation and promoting accurate information on complex topics like climate change.

7.4.2. Causal Illusion Quantification.

Using the votes obtained for each causal link, we quantified the presence of causal illusion by comparing the bogus cause to the true cause. In Figure 7, we presented the causal links associated with two trial matrices in Table 3, demonstrating the contrasting results. Panel A depicted the simple bogus relation increased solar radiation increased temperature/more intense heat waves which received a combined vote of 95. In contrast, Panel B showed the more complex true relation starting with increased fossil fuel burning and ends with increased temperature/more intense heat waves. Various traversals were observed in which the wisdom of the crowd navigated this chain. The most accurate 4-hop path (increased fossil fuel burning increasing emissions increasing CO2 more trapped heat increased temperature/more intense heat waves) received a support of 16 votes, assuming the weakest link as the defining one, or 23.75 votes on average. Additionally, 3-hop and 2-hop paths also emerged, each offering varying degrees of accuracy. Evaluating the degree of illusion, we found that the ratio of votes for the bogus cause to the true cause ranged from 95/23.75=4 to 95/14=5.94, indicating a stronger belief in the causal illusion. On the other hand, if we compare the dominant true causal knowledge with the bogus cause we get a ratio of about 2.

7.5. Design Issues

7.5.1. Selection Bias

One important issue in the initial interface was the potential for selection bias due to the pre-determined order and the selective and gradual appearance of attributes in the interface. The order in which attributes are presented can influence participants’ perceptions and choices. Further, it is possible that the positioning of certain attributes based on Word2Vec coordinates influenced participants to perceive and associate them more favorably compared to others. This may have contributed to the sparse nature of the combined network (Figure 4).

7.5.2. Longer Completion Time than Anticipated

The average completion time was 30 minutes, exceeding the estimated duration of 15 minutes, which directly contradicted our design goal, DG3. One reason behind that could be the three rounds required to complete the task. Participants had to perform a total of seven clicks in various parts of the interface to create a single link. The visual representation of node-link diagrams could also contribute to the longer completion times.

8. Re-designed and Final interface

The formative study produced encouraging results, as the crowd demonstrated the ability to create meaningful networks while exhibiting evidence of various types of causal illusions. However, we also identified two major design issues. Based on these findings, we revised the design of the protocol and interface for collecting causal networks from crowd workers. We had three specific goals in mind. Firstly, we aimed to eliminate any potential selection bias that may have influenced participant responses. Secondly, we sought to streamline the data collection process by reducing the number of micro-tasks required and the amount of completion time. Lastly, we decided to enforce a structured process for recruiting participants and performing post-hoc quality control.

Figure 8 shows a screenshot of the collection interface. It shows a case where a crowd worker is in the process of making a causal network.

8.1. Collection Interface Modules

The redesigned interface utilized a similar web-based technology as our initial interface. We excluded the attribute “renewable energy” from our input dataset, as it had a low number of votes in the formative study. The interface also has modules similar to the initial interface. We describe major changes to the modules below.

8.1.1. Demographics Survey Module

We decided to replace the previous questions gauging participants’ self-assessed knowledge and awareness with a more standardized question set since crowdworkers may over or underestimate their knowledge about climate change (Kittur et al., 2013). We decided to use the Six Americas Super Short Survey (SASSY) (Chryst et al., [n. d.]). These questions classify individuals into six categories representing different levels of climate change awareness: Alarmed, Concerned, Cautious, Disengaged, Doubtful, and Dismissive.

Note that SASSY captures people’s attitudes towards climate change, not their knowledge level. Our target audience is the general population, thus neither knowledge nor attitude is an influential factor in the study. Nevertheless, we wanted to recruit people with diverse opinions and beliefs about climate change to validate our method and find a wide range of causal beliefs and illusions. With the lack of a standardized method for measuring knowledge about climate change, we believe SASSY is a reasonable proxy to determine the diversity of our participants’ pool.

8.1.2. Causal Network Creation Module

We made four major changes to the Causal Network Creation Module (see Figure 8). First, instead of presenting the attributes using a node-link diagram, we provide two drop downs for users to select causes and effects (Figure 8A and B). This design reduces the possibilities of biases induced by the position of the nodes in a node-link diagram and scales the interface to any number of attributes. Secondly, instead of randomly selecting a subset of attributes, we presented all attributes to the users in the drop-downs. This design choice again reduces any possibilities of selection bias. Thirdly, since a user can see the whole set of attributes from the beginning, we only asked participants to complete a single session (creating five relations), eliminating the need for the additional two sessions. This design choice significantly reduces the completion time. Finally, even though users do not create networks using node-link diagrams, we show the created diagrams to the users using GraphViz (Ellson et al., 2002), a well-known graph visualization library that has built-in rendering mechanisms (Figure 8D). We also reduced the number of micro-tasks for optimizing the completion time. For example, trend selection was a separate micro-task in the initial interface, but now the trends are embedded with the attribute names. Users do not need to select trends separately, reducing two micro-tasks in total (one for selecting cause and one for effect). We attached a video to demonstrate the overall workflow. Appendix B.1 describes the workflow in more detail.

9. Final Study

We recruited crowd workers from Amazon Mechanical Turk (AMT) and workers on Prolific (132 workers in total) to collect causal perceptions on climate change using our final interface. We excluded the work of 31 workers due to incomplete and fraudulent results, totaling to 101 valid responses. Successful crowd workers were compensated $3.75 on AMT and $3 on Prolific upon completion. The compensation amount on Prolific was determined automatically based on the estimated completion time, while on AMT we estimated it ourselves. The average completion time was around 12 minutes. The study design and protocol remained similar to our formative study, except for the following changes. We mention the detailed protocol in Appendix B.1.

9.1. Crowd Workers’ Validation Process and Post-hoc Quality Control

In the final study, in contrast to the formative study, we implemented a rigorous quality control process to ensure data integrity. First, we noticed from the formative study that while we designed the crediblilty score to measure causal beliefs and illusions, it can also be used to filter out potential fraudulent or random causal relations. For example, an excessive number (3 or more out of 5) of non-credible links (credibility score = 0) could indicate randomly created relations or fraudulent behaviors. We flagged such relations and crowd workers in the final study. Then, the research team manually reviewed each case. During the review, we examined patterns of inconsistencies, repeated responses, and indications of random or careless selection. Based on the manual review, we identified and excluded workers who produced unreliable or fraudulent data. We provide two examples of such data in Figure 9-A and B. Note that the lack of credibility (a score of 0) does not necessarily mean fraudulent behavior. We accepted submission as long as the overall network exhibited a pattern of understanding and relevance, even if some links had a credibility score of 0 (Figure 9-C). We interpreted these results as beliefs stemming from potentially flawed understanding, which are of potential interest to us, and accepted the results. Thus, the combined networks had links with credibility scores spanning from 0 to 3.

We conducted the study in phases, 10 participants at a time, allowing us to calculate node exploration in the aggregated network and perform quality control at each phase. All 31 workers whose results were rejected due to incomplete or fraudulent data were from AMT. Thus, we decided to conduct the rest of the study on Prolific, as it is known for its more stringent participant vetting process and higher-quality data. We ensured all participants finished the study exactly once. Therefore, every participant from AMT and Prolific constructed their own network from the ground up without building upon one another’s work. This led us to have 101 valid workers. We present various aspects of the crowd workers’ demographics in Figure 10. Geographically, we only collected results from the United States.

Figure LABEL:fig:demographics_2 shows a collection of bar charts, each containing statistics of various demographic variables. The variables in order from the top-left are: Gender, Age Group, Employment Status, Marital Status, Region, Ethnicity, and Education Status.

9.2. Crowd Workers’ View on Climate Change

We employed the “Six Americas Super Short SurveY (SASSY)” Group Scoring Tool to segment our participants into different groups based on their responses to climate change (Chryst et al., [n. d.]). We present the results in Figure 11. The majority of workers (36) were “Alarmed” and expressed high concern about climate change. There were also significant numbers of workers in the “Dismissive” group (25) who held dismissive or denying attitudes. Other categories included “Concerned” (11), “Cautious” (10), “Doubtful” (16), and “Disengaged” (3). The group scoring tool also allowed us to compare the groups to national averages (Figure 11). Compared to national averages from December 2022, we observed some variations in the distribution of attitudes toward climate change. Nevertheless, all groups were present in our participant pool, reflecting a range of perspectives and attitudes toward climate change.

9.3. Stopping Criteria

We conducted the study in phases (10 participants at a time). After each phase, we analyzed the combined network created by the crowd and compared it with the previous phases. We also checked how many nodes/attributes have been explored in the combined network. We stopped the study once the network was saturated, displaying minimal changes from previous phases. We present the final node exploration status in Appendix B.2. Additionally, we considered the representation of the participants in terms of the SASSY groups. We wanted representatives from each group.

10. Findings from the Final Study

The analysis pipeline in the final study remained consistent with that of the formative study. We present the findings below.

10.1. Aggregated Evaluation

Figure 12 demonstrates an adjacency matrix heatmap representation, where both rows and columns denote causal attributes and each cell shows the total number of votes of specific causal relations with numbers and color intensity.

10.1.1. Combined Network and Total Votes Per Link

We present the adjacency matrix of the 101 combined networks in Figure 12. The top-3 most voted links were, “increasing temperature melting sea ice”, “increasing CO2 more trapped heat,” and “more trapped heat increasing temperature” with 24, 21, and 18 votes, respectively. The combined network is sparse, and most relations have zero votes, similar to the formative study. The Pearson Correlation Coefficient between the total votes and the credibility scores for the causal links is 0.63 with . This indicates a stronger alignment between the crowd and expert consensus compared to the formative study. Although the level of consensus remains moderate, the stronger correlation suggests that the crowd was able to create networks that contain more scientifically accurate causal links in general.

10.1.2. Average Network Credibility Scores (ANC) and Average Confidence Scores (AC)

Figure 13a shows the distribution of ANC scores, which reflects that the majority of the crowd workers created less credible networks in general. We present the confidence scores in Figure 13b, which reflects that in less than 18% of the cases, the workers did not feel very confident (Confidence Score 1-2) in their own created network and nearly 54% of the time, they felt positively confident (Confidence Score 4-5).

Figure 13b contains two histograms of Average Network Credibility Scores and Confidence scores on the created causal networks.

10.2. A Closer Look into the Most Popular Causal Relations

Similar to the formative study, we mention the most noteworthy aspect of the most popular causal links below.

-

•

Similar to the formative study, the most voted causal relations in the final study reflected widely acknowledged scientific facts. The top relation (24 votes, average confidence (AC) = 3.5, credibility score (CS) = 3) was “increasing temperature melting sea ice”. Other top relations attributed the “more trapped heat” to “increasing CO2” (21 votes, 3.5 AC, 3 CS) and “increasing temperature” to “more trapped heat (18 votes, 3.3 AC, 3 CS).

-

•

There was evidence of recognizing underlying factors of climate change, such as linking “more human activities” to “decreasing forests” (6 votes, 3.7 AC, 2 CS) and “increasing CO2” (4 votes, 3.5 AC, 2 CS) and considering “increasing CO2” as an effect of “decreasing forests” (10 votes, 3.7 AC, 3 CS) and “increasing emissions” (8 votes, 3.5 AC, 3.5 CS) (Forests, 2022; Climate.gov, [n. d.]).

-

•

There was also evidence of physical understanding; many marked that “increasing wildfire” leads to “decreasing forests” (16 votes, 3.5 AC, 3 CS) and “increasing emissions” (6 votes, 3.7 AC, 3 CS) and “increasing CO2” (6 votes, 3.5 AC, 2 CS), and, in turn, “increasing wildfires” is caused by “increasing temperature” (12 votes, 3.2 AC, 2 CS) and “more intense heatwaves” (7 votes, 4.14, 2 CS).

-

•

Among the causal links that received at least 10 votes, all were highly credible links (CS=3) except for 2 links: “increasing temperature” “increasing wildfires” and “more trapped heat” “melting sea ice” (CS=2). Interestingly, these links also received the lowest AC scores (3.2 and 2.8, respectively) compared to others, exhibiting a parallel trend to the formative study findings. While these links are partially correct, they lack crucial mediators. It is important to acknowledge that wildfires are primarily caused by dry weather resulting from drought, which is a consequence of rising temperatures. Similarly, the consistent trapping of heat contributes to increasing temperatures, which leads to the melting of sea ice (Drought.gov, 2022; Life, [n. d.]).

10.3. Causal Illusion Detection

Figure 14 shows three different discrepancy networks and one legend table describing the link colors.

In Figure 14 we present the Discrepancy Network along with various levels of discrepancies. Similar to the formative study, the ratio of misinformed links consistently stays higher (47 out of 92 visible links). In contrast to the formative study, the results of the current study reveal a multitude of cases where the crowd demonstrated a significant degree of obliviousness, with 26 out of 92 visible links exhibiting this phenomenon. We present the statistics of various values of different discrepancy/illusion scores in Table 5.

| Type | Link Color | Discrepancy Score (cs-cr) | Count (All) | Count (Visible) |

| Misinformed | Red | -3 | 0 | 0 |

| Orange | -2 | 7 | 7 | |

| Yellow | -1 | 40 | 40 | |

| Correct | Grey(Darkest) | 0 (cs = 3) | 4 | 4 |

| Grey(Darker) | 0 (cs = 2) | 9 | 9 | |

| Grey | 0 (cs = 1) | 6 | 6 | |

| Grey(Light) | 0 (cs = 0) | 75 | 0 | |

| Oblivious | Blue(Very Light) | 1 | 27 | 21 |

| Blue(Light) | 2 | 9 | 5 | |

| Blue | 3 | 4 | 0 | |

| Total | 181 | 92 |

Table 4 contains the statistics of various discrepancy scores.

In Figure 15, we present some noticeable cases within the discrepancy network. The crowd selected increasing solar radiation as a cause for more intense heatwaves, this misconception is consistent among the participants of both studies (Figure 15-A). The crowd also seemed to think that expanding forest causes increasing wildfires (Figure 15-B), whereas, the relationship between expanding forests and increased wildfires is more nuanced. Factors such as climate conditions, human activities, and forest management practices play significant roles in determining wildfire risk (Nps.gov, 2022; Drought.gov, 2022).

Several intriguing instances of obliviousness were observed within the crowd as depicted in Figure 15-X, Y, Z. Notably, the crowd exhibited a preference for attributing emissions to increasing wildfires, which is indeed a valid relationship. However, there was a comparative disregard for the influence of fossil fuel burning and coal burning on emissions. Additionally, a certain level of unawareness was evident regarding the interplay between variables such as drought, precipitation, and temperature-related factors like trapped heat and heatwaves. This highlights the need to prioritize the dissemination of accurate information concerning the impact of rising temperatures on drought conditions.

Figure 15 shows seven screenshots of various interesting Misconception and Obliviousness cases.

10.3.1. Causal Illusion Quantification.

Figure 16 illustrates the causal links associated with the two trial matrices described in Section 7.3 (Table 3). The strongest 4-hop path (more fossil fuel burning increasing emissions increasing CO2 more trapped heat increased temperature/more intense heat waves) received 4 votes (based on the weakest link criterion) or 13.75 votes (based on the average link criterion). The optimal 3-hop path (more fossil fuel burning increasing CO2 more trapped heat increased temperature/more intense heat waves) garnered 4 votes (weakest link criterion) or 15.67 votes (average link criterion). The best 2-hop path (more fossil fuel burning increasing CO2 increased temperature/more intense heat waves) obtained 4 votes (weakest link criterion) or 4.5 votes (average link criterion). Lastly, the simplest 1-hop path (more fossil fuel burning increasing temperature/more intense heat waves) received 2 votes. To assess the degree of illusion between the bogus cause and the true cause, we can examine the vote ratios. For the most accurate 4-hop path, this ratio is 5/4 = 1.25 for the weakest link criterion and 5/13.75 = 0.36 for the average criterion. Although the weakest link criterion suggests a mild degree of illusion, the number of votes is too low. Another encouraging finding is that a significant number of workers correctly identified the direct cause of increased temperature/more intense heat waves, which is more trapped heat (21 votes).

10.4. Interface Usability and Knowledge

Figure 17 contains a horizontal bar chart along with error bars expressing the average ratings of the crowd workers on the usability and knowledge-related statements.

Figure 17 presents crowd workers’ agreement on the usability and learning statements. The results reflect an overall positive outlook toward the usability of our system. For the knowledge-related statements, the majority of workers felt neutral to positive towards the statement “I became more aware of the relationships that govern climate change” and disagreed with the statement “My views on climate change have been altered”. We think this happened because they might not have felt comfortable agreeing to such a strong statement.

11. Discussion, Limitations, and Future work

We briefly summarize the study findings, design implications, and limitations of our work below.

11.1. Reflecting on the User Studies

The two studies show that Belief Miner is data-driven and agnostic to the method used to collect the data. Regardless of the collection mechanism, Belief Miner can extract causal illusions given a set of causal networks collected from the crowd and ground truth collected from experts. Thus, we were able to apply the same method in both studies, even though the underlying protocol and interfaces were different.

We found a wide range of causal beliefs and illusions in the studies. Some findings from the studies align while others do not. For example, in both studies, participants with causal illusions assigned lower confidence scores to their networks. However, we could not replicate some results from the formative study in the final study. For example, we did not find the causal illusion reported in Figure 7 from the formative study in the final study. Several factors could contribute to this phenomenon: the redesigned interface, the participants’ pool, or the changes in the general knowledge about climate change within the timeframe of the studies (Fall 2021 to Spring 2023).

11.2. Design Implications

11.2.1. Belief Miner as an Intervention against Causal Illusion.

In our current system, we collect the causal beliefs from crowd workers through the interactive visual interface and detect the causal illusions later. Both of our studies show the phenomenon of people rating their own causal networks containing spurious causal links with low confidence scores once they see the complete picture. We see this as an indication of people correcting or educating themselves while they see the externalized version of their mental causal model. Motivated by this, we envision using Belief Miner as an intervention tool against causal illusion. Several crowdsourcing methods could be useful here. For example, seeing other people’s beliefs often positively affect a crowdsourcing task (Yen et al., 2021; Kittur et al., 2011). In our case, we can expose the controversy or disagreement about a causal relation at the time of the experiment by looking at the networks already created by others. Another potential solution is enabling peer review (Whiting et al., 2017), allowing crowdworkers to provide feedback to each other. Other potential solutions include automatically extracting digestible scientific documents related to the relevant causal attributes. We believe these additions will facilitate informed decision-making and will promote scientific thinking which is identified as the best defense against causal illusions (Matute et al., 2015).

11.2.2. From Causal Illusion to Misconception

Belief Miner investigates the concept of causal illusion, which is related to people’s inherent bias to draw connections between coincidental events. A closely related concept is misconception, which is the inaccurate or wrong interpretation of concepts (Shi et al., 2021). The terms misconception and misinformation are often used interchangeably. While misconception generally comes from a lack of knowledge, misinformation is often deliberately created for deception and spread intentionally or unintentionally (Wu et al., 2019). Existing misconception and misinformation discovery methods mainly rely on natural language processing (NLP) and machine learning (ML). These methods fall under the broader category of content-based detection (Mazid and Zarnaz, 2022; Benamira et al., 2019), context-based detection (Kwon and Cha, 2014), propagation-based detection (Kim et al., 2019), etc. We believe our method could provide a realistic tool to measure misconceptions where content-based analysis is not feasible.

11.2.3. What Kinds of Causal Illusions Appear Together and Who Falls Victim to Them?