Behavioral event detection and rate estimation for autonomous vehicle evaluation

Abstract.

Autonomous vehicles are continually increasing their presence on public roads. However, before any new autonomous driving software can be approved, it must first undergo a rigorous assessment of driving quality. These quality evaluations typically focus on estimating the frequency of (undesirable) behavioral events. While rate estimation would be straightforward with complete data, in the autonomous driving setting it is greatly complicated by the fact that detecting these events within large driving logs is a non-trivial task that often involves human reviewers. In this paper we outline a streaming partial tiered event review configuration that ensures both high recall and high precision on the events of interest. In addition, the framework allows for valid streaming estimates at any phase of the data collection process, even when labels are incomplete; for this setting we derive the maximum likelihood estimate and show that it is unbiased. Constructing honest and effective confidence intervals (CIs) for these rate estimates, particularly for rare safety-critical events, is a novel and challenging statistical problem due to the complexity of the data likelihood. We develop and compare several CI approximations, including a novel Gamma CI method that approximates the exact but intractable distribution with a weighted sum of independent Poisson random variables. There is a clear trade-off between statistical coverage and interval width across the different CI methods, and the extent of this trade-off varies depending on the specific application setting (e.g., rare vs. common events). In particular, we argue that our proposed CI method is best suited for estimating the rate of safety-critical events, where guaranteed coverage of the true parameter value is a prerequisite to safely launching a new autonomous driving system (ADS) on public roads.

1. Introduction

1.1. Autonomous Driving Systems

An “autonomous driving system” (ADS) refers to a level 3, 4, or 5 system [12] that is capable of performing a dynamic driving task on a sustained basis. It comprises both software and hardware components, and any vehicle equipped with this system may be referred to as an “Autonomous Vehicle” (AV).

Development of safe and high quality ADSs is a very active area of research within both academia and industry [15, 22]. Waymo is a prominent player in this space, with its origins in 2009 as the Google Self-Driving Car Project. Today Waymo produces Level 4 ADSs that currently provide fully autonomous rides, i.e., with no human in the driver's seat, to members of the public in Arizona and in California. Waymo also has parallel work in the autonomous trucking and delivery space.

At Waymo, rigorous validation and evaluation of the ADS performance is a core tenet of the deployment philosophy. Prior to releasing AVs equipped with a new ADS onto public roads, we must have confidence that the safety and other performance metrics meet our stringent quality bar. Previous Waymo publications have already outlined the safety and readiness determinations [20, 13]. Complementing those publications, here we articulate some of the statistical and data science challenges that arise during the evaluation process.

1.2. ADS Performance Evaluation

ADS performance evaluation typically involves three primary components:

(1) Definition of specific undesirable¹ behavioral events, along with associated severity levels or other relevant annotations.

(2) Rate estimation for the behavioral events’ relative frequencies on-road, typically in terms of the number of incidents per driving mile (IPM).

(3) Accommodation of product requirements from the stakeholders who use these data for decision-making.

¹ There are many desirable behavioral events that are also of interest to track. For simplicity, in this paper we assume that these events can all be negated to produce a corresponding undesirable event. E.g., a desirable event may be “pull over sufficiently out of the travel lane to pick up a passenger,” and the associated negative metrics would be things like “pullover had X percent overlap with travel lane” or “failed to pull over.”

More details on each of these components are provided below.

The behavioral events of interest referenced in component (1) span a spectrum from critical safety situations such as collisions (very rare) to behavioral quirks such as unnecessary slowing (more common). On its surface this aspect of performance evaluation appears straightforward, but in practice rigorous definitions and the delineation of edge cases can be challenging. These challenges are out of scope for the current paper. Rather, we focus on the data and statistical questions associated with components (2) and (3), and on how we are addressing these challenges at Waymo.

There are several data and statistical questions that arise when producing the rate estimates referenced in component (2):

a) What data is best suited to produce these rate estimates? Waymo has autonomously driven tens of millions of miles on public roads as well as tens of billions of miles in simulation. The former have minimal observation error but may have sparse coverage of rare events; the latter may be subject to simulation artifacts but can more easily ensure coverage of rare events.

b) Given the driving data, how can we reliably count these behavioral events? The ease of measurement for an individual event class depends not only on the event’s frequency (denser events are easier to measure empirically on smaller datasets), but also on our ability to algorithmically identify relevant events in the driving data. Identifying behavioral events in a purely algorithmic fashion is difficult and usually requires human review, often by reviewers with varying levels of expertise ("tiered event review").

c) How can we quantify statistical uncertainty via reliable confidence intervals for the rate estimates? IPM is not a typical metric of interest within the technology industry outside of AVs, nor is it a quantity that receives broad attention in the academic literature. Moreover, the uncertainty introduced by imperfect event detection in (b) further complicates the statistical theory. Finally, these confidence intervals must accommodate a broad range of events, including rare event scenarios, for which traditional asymptotic methods can easily fail.

These statistical challenges are further constrained by the product requirements referred to in component (3):

• In order to drive efficient decision-making at Waymo, these rate estimates must be available as soon as possible after mileage collection. As such, we need the ability to compute rate estimates even when not all candidate events have been reviewed. Early rate estimates may have greater uncertainty than the final estimates, but they should still be unbiased and provide robust confidence intervals.

• The most practically important event classes are very sparse (< 5-10 events), which means the most challenging statistical scenarios are also the most important.

The rest of this paper outlines how we address 2(b) and 2(c) while accommodating these product constraints. In Section 2 we introduce a streaming partial tiered event review framework. Sections 3, 4, and 5 then outline the data generation model, estimation, and confidence interval construction implied by this framework. Section 6 provides numerical studies to assess the performance of the proposed methods for both rare and common event cases. Finally, Section 7 concludes the paper with some discussion. Technical details are provided in the appendix, including a relation between the Poisson, multivariate hypergeometric, and multinomial distributions, which may be of independent interest.

2. The Role of Human Review in Event Detection

2.1. Behavioral Event Detection Overview

As described above, computing the rate at which a behavioral event occurs requires us to first identify and count those events within our driving data. To facilitate this detection at scale, we rely on a set of sophisticated algorithms that can automatically identify events of interest. These classification problems are conceptually similar to many other data science applications in industry. However, unlike most other application settings, edge cases are incredibly important within the autonomous vehicle industry and cannot be swept aside or averaged out. These edge cases, for us, are frequently the most interesting and most important events.

Take, for example, safety-relevant behavioral events, which occur very rarely. Errors in their classification barely register in the algorithm’s aggregate performance metrics, yet failing to detect these events can leave a serious information gap when deciding whether to put an ADS on public roads. This risk is compounded by the fact that estimating the recall loss of these algorithms can be very challenging and time-consuming, since it requires finding sparse False Negatives in a very large collection of True Negatives.

To ensure confidence in our safety estimates at Waymo, we supplement these algorithmic solutions with a final stage of human review. When tuning any algorithmic classifier, one can adjust the operating point to favor either precision or recall; very few algorithms achieve high performance in both simultaneously. Our solution allows us to tune the algorithms for very high recall and then rely on a final layer of human reviewers to fix any gaps in precision. Combined, the algorithms and human reviewers form a multi-stage strategy that is both scalable and produces trustworthy rate estimates.

2.2. Review of Candidate Events

Let $\mathcal{C}$ refer to the corpus of candidate events identified by the algorithms. For simplicity, we assume the algorithm feeding events to the human reviewers has perfect recall, so the human reviewers only need to address precision. Then, for any candidate event in $\mathcal{C}$, we ultimately want to confirm whether the event was a True Positive or False Positive detection of the behavioral event of interest.²

² Note that detailed instructions and rigorous training are often needed to ensure that human reviewers provide high quality labels, particularly for the most nuanced behavioral events. For the purposes of this paper, we assume the human labels are perfect and, as such, recall from this system is perfect.

A well-optimized event review configuration should maintain both high precision and high recall for True Positive events. If the precision is low, then the rate estimates will be too high; if the recall is low, then the rate estimates will be too low. With this as our motivation, we have developed an event review configuration at Waymo referred to as streaming partial tiered event review. The core concepts motivating this configuration are outlined below.

Under a complete event review configuration, all candidate events would be reviewed. However, for many applications this can be inefficient and labor intensive. Instead, reviewing a subset of the candidate events, i.e., partial event review, is often sufficient to estimate event rates with acceptable levels of uncertainty. Beyond efficiency, supporting estimates based on partial event review also ensures that we can fulfill the product requirements by providing trustworthy estimates at any stage of the event review process (e.g., checking results after only 10% of events have been reviewed), and that the uncertainty in those estimates will continually be reduced as more events are reviewed.³

³ In addition to ongoing event review, the on-road ADS logs and simulated autonomous driving provide a continuous stream of candidate events that are fed into the event review pipeline. As a result, the corpus of candidate events is constantly changing. For simplicity, this is not addressed here; we instead assume the full corpus of candidate events is available and unchanging prior to the start of event review.

In its simplest form, partial event review uses uniform random sampling to select a subset of candidate events for labeling, but this is unlikely to be the most efficient strategy for most use cases. To reduce sampling error, stratified random sampling can be used instead; this involves partitioning candidate events into mutually exclusive, collectively exhaustive strata based on pertinent event metadata (e.g., road characteristics, algorithmic model scores). The sampling probability may be identical across strata (i.e., proportional allocation), or may differ across strata (e.g., Neyman allocation or alternative allocation schemes). In some sections of this paper we assume a single stratum for simplicity, without loss of generality, while in other sections we outline the statistical implications of this stratification in the data.
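As a rough illustration of the two allocation schemes named above, the sketch below splits a fixed review budget across strata using the textbook formulas: proportional allocation gives each stratum a share proportional to its size, and Neyman allocation gives a share proportional to size times within-stratum standard deviation. The function name `allocate_reviews` and its inputs are hypothetical, and in practice the standard deviations would have to come from pilot data or earlier review rounds; this is not a description of Waymo's allocation procedure.

```python
def allocate_reviews(budget, sizes, sds=None):
    """Split a review budget across strata (hypothetical helper).

    budget: total number of candidate events that can be reviewed.
    sizes:  number of candidate events in each stratum.
    sds:    assumed within-stratum standard deviations of the quantity being
            estimated (e.g. a True Positive indicator); None means
            proportional allocation.
    Returns unrounded allocations that sum to `budget`.
    """
    if sds is None:
        weights = list(sizes)                           # proportional allocation
    else:
        weights = [n * s for n, s in zip(sizes, sds)]   # Neyman allocation
    total = sum(weights)
    return [budget * w / total for w in weights]
```

For example, `allocate_reviews(100, [600, 400])` assigns 60 and 40 reviews; supplying `sds=[0.1, 0.3]` shifts most of the budget to the more variable second stratum. Rounding the allocations to integers is left to the caller.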

For operational efficiency, an event review configuration may use several tiers of human reviewers, with increasing levels of expertise in the higher tiers. This tiered configuration is arranged such that each tier improves the precision of the candidate event corpus while maintaining recall. For example, in a three-tiered process, the first tier would exclude clear false positive candidate events while escalating all other events to the second tier. The second tier would exclude additional false positive candidate events, while escalating the remainder to the third tier. The third tier would then make the final determination of false positive vs. true positive status. Each tier may itself consist of evaluation by a single reviewer or by multiple reviewers who reach consensus.

Operationally, partial tiered event review can be implemented as either a batched or a streaming process. Under a batched process, any candidate event that is reviewed in Tier 1 completes its journey through the system: it is either confirmed as a False Positive at some tier, or it reaches the final tier and is confirmed as a True Positive. In this setting, the rate estimates do not need to account for the details of how candidate events are escalated between tiers; the rate estimates are ultimately identical between a partial tiered setting and a partial untiered setting. However, at Waymo we often need to produce unbiased rate estimates while many events are still only partway through the event review pipeline; e.g., a candidate event may have already been reviewed by Tier 1 and escalated, but has not yet received further review. This is considered a streaming process, where rate estimates are made based on the data available at any snapshot in time.

3. Statistical Formulation for Partial Tiered Event Review

Given a collection of driving data representing $M$ autonomous driving miles, we are interested in providing an estimate and confidence interval for the rate of occurrence ($\lambda$) of the behavioral event of interest. Let $K$ be the number of tiers in the event review configuration and $N$ be the total number of True Positive behavioral events in the driving data. We assume that $N$ follows a Poisson distribution with mean $\lambda M$. Note that $N$ is not directly observed; instead, a corpus of candidate events, $\mathcal{C}$, is sent through the streaming partial tiered event review process described in Section 2.2, resulting in a collection of partial event review results for each tier.⁴

⁴ For simplicity, assume that the algorithmic approach used to identify the corpus of candidate events has perfect recall. With this assumption, we do not need to consider its role in the following sections.

We first discuss the statistical framing for a single stratum, and then extend this to accommodate multiple strata in a straightforward manner.

3.1. One stratum

Let $n$ be the number of candidate events in the full corpus.

3.1.1. Partial Event Review

Due to the partial event review configuration, only a subset of the candidate events will be randomly sampled and receive review in Tier 1; we denote the size of this subset by $n_1$. The reviewers will improve the precision of the event pool by clearly labeling the most obvious False Positives (removing them from further review) and escalating any ambiguous events to Tier 2. Let $e_1$ be the number of events that Tier 1 escalates for review by Tier 2. This process proceeds at each tier, with $n_k$ denoting the number of events reviewed by Tier $k$, and $e_k$ denoting the number of events escalated by Tier $k$ up to Tier $k+1$. Finally, $e_K$ will be the number of candidate events formally confirmed as True Positives by Tier $K$, the final tier.

Figure 3.1 provides an illustration of the sampling and escalation process in a single stratum under a partial event review configuration with 3 Tiers, and the detailed data generation process is described next.

3.1.2. Data Generation Model

Recall that our goal is to estimate the True Positive rate, $\lambda$, for the behavioral event of interest. Under complete event review, the total confirmed True Positive count $e_K$ would be equal to the total True Positive count $N$ in the candidate corpus, and the estimate of $\lambda$ would be a simple function of $e_K$ (namely $e_K / M$). However, because our event review is partial, we only know that $e_K \le N$; i.e., there may be additional True Positives within the event review pipeline that have not yet been reviewed by the top tier. We have developed an estimation scheme that includes the contribution from these as-yet unreviewed True Positives. Towards this end, we propose a data generation model for the full set of candidate events in the event review pipeline, including both True Positives and False Positives.

To introduce the data generation model, let us consider the potential outcome of each candidate event in the corpus under a complete event review configuration. Under this configuration, a candidate event would first be reviewed by Tier-1, and then it would be either rejected as a False Positive by Tier-1 or escalated by Tier-1; if escalated, it would be reviewed by Tier-2, and then it would be either rejected by Tier-2 as a False Positive or escalated by Tier-2, and so on. That is, the event review process continues until the candidate event is either rejected as a False Positive by Tier-$k$ (labeled as FP-$k$) for some $k \in \{1, \dots, K\}$, or is not rejected by any tier (labeled as TP). Whenever a candidate event is rejected as a False Positive, it is removed from the event review pipeline and receives no further consideration.

Let $X_0$ be the number of candidate events in the corpus which would be labeled as TP, and let $X_k$ be the number of candidate events in the corpus which would be labeled as FP-$k$ for $k = 1, \dots, K$, under the complete event review configuration. It is natural to assume that the $X_k$ are independent counts and that they each follow a Poisson process, i.e., for $M$ autonomous miles,

$$ X_k \sim \mathrm{Poisson}(\mu_k M), \quad k = 0, 1, \dots, K, \ \text{independently, with } \mu_0 = \lambda. \qquad (3.1) $$

Note that $X_0 + \sum_{j=k+1}^{K} X_j$ has a simple interpretation: it is the total number of candidate events in the corpus which, if fully reviewed, would not be rejected by any of Tiers 1 through $k$. Again, in the complete event review case the $X_k$ would be observed, but in the partial event review configuration they are instead treated as latent variables; only their total is observed, i.e., $n = \sum_{k=0}^{K} X_k$.

Now, given the set $\mathcal{C}$ of all $n$ candidate events, we draw a random sample of size $n_1$ without replacement for Tier-1 review; among these, the subset of candidate events with labels other than FP-1 (that is, those not rejected by Tier-1) will be denoted as $\mathcal{C}_1$ and will be escalated for Tier-2 review. The process continues as follows: for $k = 2, \dots, K$, we draw a random sample of size $n_k$ from $\mathcal{C}_{k-1}$ without replacement for Tier-$k$ review; among these, the subset of candidate events with labels other than FP-$k$ (that is, those not rejected by Tier-$k$) will be denoted as $\mathcal{C}_k$ and will be escalated for Tier-$(k+1)$ review (for $k = K$, these are the confirmed True Positives). Let $e_k$ be the number of candidate events escalated by Tier-$k$:

$$ e_k = |\mathcal{C}_k|, \quad k = 1, \dots, K. $$

Obviously, $n_k - e_k$ is the number of candidate events which are reviewed but rejected as False Positives by Tier-$k$. It is also important to note that any candidate event in $\mathcal{C}_k$ will be either TP or FP-$j$ for some $j > k$.

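Algorithm 1: Data generation model for a single stratum.

Configuration: $M$ miles and $K$ tiers.

Parameters: $\boldsymbol{\mu} = (\mu_0, \mu_1, \dots, \mu_K)$ and $\mathbf{p} = (p_1, \dots, p_K)$.

(1) Draw $X_k \sim \mathrm{Poisson}(\mu_k M)$ independently for $k = 0, 1, \dots, K$, where $X_0$ is the number of (latent) True Positive events (labeled as TP), and for $k = 1, \dots, K$,

    • $X_k$ is the number of (latent) candidate events which are labeled as FP-$k$; that is, they would pass Tiers 1 through $k-1$ but be rejected as False Positive by Tier-$k$.

    Then the total number of candidate events in the event review pipeline is $n = \sum_{k=0}^{K} X_k$. Let $\mathcal{C}_0 = \mathcal{C}$ consist of all $e_0 = n$ candidate events.

(2) For tier $k = 1, \dots, K$:

    • If $e_{k-1} > 0$:
        – Let $n_k$ be the number of candidate events which are drawn randomly from $\mathcal{C}_{k-1}$ without replacement and are reviewed by Tier-$k$: $n_k \sim \max\{1, \mathrm{Bin}(e_{k-1}, p_k)\}$, as in (3.2).
        – Let $\mathcal{C}_k$ be the subset of the $n_k$ reviewed candidate events with labels other than FP-$k$; that is, they are escalated by Tier-$k$.
        – Set $e_k = |\mathcal{C}_k|$.

    • Else:
        – Set $n_j = 0$ and $e_j = 0$ for $j = k, \dots, K$.
        – Break (i.e., early termination).

(3) Output: $(n_1, \dots, n_K)$ and $(e_0, e_1, \dots, e_K)$ as the observed data.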

Of course, if $\mathcal{C}_k$ is empty for some $k$, i.e., $e_k = 0$ (no candidate events are escalated by Tier-$k$), then the partial event review process terminates at Tier-$k$.

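It is convenient to model the sample size $n_k$ by a Binomial process. Note that if $e_{k-1} > 0$ but $n_k = 0$, i.e., there are candidate events escalated for Tier-$k$ review but none of them is reviewed by Tier-$k$, then $\mu_k, \dots, \mu_K$ (and hence $\lambda$) are not identifiable. To make the estimation problem well defined, whenever $e_{k-1} > 0$ we need $n_k > 0$; to be precise, we use

$$ n_k \mid e_{k-1} \sim \max\{1, \mathrm{Bin}(e_{k-1}, p_k)\} \quad \text{whenever } e_{k-1} > 0, \qquad (3.2) $$

where $\mathrm{Bin}(m, p)$ stands for a random variable from a Binomial distribution with parameters $m$ and $p$.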

This data generation model is summarized as Algorithm 1, which takes $\boldsymbol{\mu} = (\mu_0, \dots, \mu_K)$ (associated with the latent variables $X_k$) and $\mathbf{p} = (p_1, \dots, p_K)$ (associated with tier level sampling) as the input parameters, and outputs the tier level sample sizes ($n_k$) and the numbers of tier level escalations ($e_k$) as the observed data.
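To make the data generation model concrete, here is a minimal simulation sketch of Algorithm 1 for one stratum using NumPy. It assumes the sampling model $n_k = \max\{1, \mathrm{Bin}(e_{k-1}, p_k)\}$ for the tier sample sizes; the function name `simulate_stratum` and its argument layout are our own illustrative choices, not code from the paper. Each candidate carries its latent label (0 for TP, $k$ for FP-$k$), so Tier $k$ simply drops the reviewed candidates whose label equals $k$.

```python
import numpy as np


def simulate_stratum(mu, p, miles, rng):
    """One draw from Algorithm 1 for a single stratum (illustrative sketch).

    mu:    (mu_0, ..., mu_K); mu_0 is the True Positive rate and mu_k (k >= 1)
           is the rate of candidates that Tier k would reject (label FP-k).
    p:     (p_1, ..., p_K), the tier-level sampling rates.
    miles: autonomous miles M.
    Returns n = [n_1, ..., n_K] and e = [e_0, e_1, ..., e_K], with e_0 = n.
    """
    K = len(p)
    # Step (1): latent counts X_k ~ Poisson(mu_k * M), independently.
    counts = rng.poisson(np.asarray(mu, dtype=float) * miles)
    # Store each candidate's latent label: 0 = TP, k = FP-k.
    pool = np.repeat(np.arange(K + 1), counts)   # this is C_0
    n = [0] * K
    e = [int(pool.size)] + [0] * K
    # Step (2): review tier by tier.
    for k in range(1, K + 1):
        if pool.size == 0:
            break  # early termination: remaining n_j, e_j stay 0
        # Assumed sampling model: n_k = max(1, Bin(e_{k-1}, p_k)).
        size = max(1, int(rng.binomial(pool.size, p[k - 1])))
        reviewed = rng.choice(pool, size=size, replace=False)
        # Tier k rejects exactly the reviewed candidates labeled FP-k.
        pool = reviewed[reviewed != k]           # this is C_k
        n[k - 1] = size
        e[k] = int(pool.size)
    return n, e
```

For example, `simulate_stratum([2.0, 30.0, 10.0, 5.0], [0.2, 0.5, 1.0], 10.0, np.random.default_rng(0))` simulates one stratum with three tiers under made-up rates; the final entry of `e` is the number of confirmed True Positives.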

3.2. Multi-strata

Now suppose we sample from the candidate events via a stratified sampling scheme with $S$ collectively exhaustive and mutually exclusive strata. We can generalize all the notation in Section 3.1 in a straightforward way by adding a subscript $s$ indicating the stratum. The detailed data generation model is provided as Algorithm 2, which calls Algorithm 1 for each stratum $s$, taking $\boldsymbol{\mu}_s = (\mu_{s,0}, \dots, \mu_{s,K})$ (associated with the latent variables) and $\mathbf{p}_s = (p_{s,1}, \dots, p_{s,K})$ (associated with tier level sampling) as the input parameters, and outputting the tier level sample sizes ($n_{s,k}$) and the numbers of tier level escalations ($e_{s,k}$) as the observed data. The parameter of interest can be written as

$$ \lambda = \sum_{s=1}^{S} \mu_{s,0}. \qquad (3.3) $$

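Algorithm 2: Data generation model for multiple strata.

Configuration: $M$ miles, $S$ strata, and $K$ tiers.

Parameters: $\boldsymbol{\mu}_s = (\mu_{s,0}, \dots, \mu_{s,K})$ and $\mathbf{p}_s = (p_{s,1}, \dots, p_{s,K})$ for $s = 1, \dots, S$.

• For each $s = 1, \dots, S$,
    – use $\boldsymbol{\mu}_s$ and $\mathbf{p}_s$ as the input parameters and apply Algorithm 1 to generate the output for stratum-$s$: $(n_{s,1}, \dots, n_{s,K})$ and $(e_{s,0}, e_{s,1}, \dots, e_{s,K})$.

• Output: $\{n_{s,k}\}$ and $\{e_{s,k}\}$ as the observed data for all strata.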

4. Point estimation

To estimate $\lambda$, it is desirable to derive the maximum likelihood estimate (MLE) due to its various optimality properties ([1, 21, 9]), with the hope that it is also unbiased. Because the likelihood function under the partial event review configuration is complex (see the Appendix for details), instead of deriving the MLE directly we first derive the point estimate based on a heuristic argument and then show that it is indeed the MLE. The estimate is also unbiased.

Since the data across strata are independent, the rates associated with different strata can be estimated independently. Without loss of generality, we may consider a single stratum, using the same notation as in Section 3.1.

A natural estimate of $\lambda$ is $X_0 / M$, where $X_0$ is the total number of True Positives within $\mathcal{C}$ (all candidate events in the stratum). This quantity is unfortunately not observable under partial event review and must be estimated instead. We start the argument by assuming $e_K > 0$.

First, consider the total number of True Positive events present in the final tier, Tier $K$. Recall that $e_{K-1}$ candidate events will be escalated to Tier $K$, a random sample of size $n_K$ will be reviewed, and $e_K$ of those reviewed events will be confirmed as True Positives. Then, one can estimate the total expected number of True Positives that were present in Tier $K$ by up-weighting the observed True Positives by the inverse of the sampling fraction $n_K / e_{K-1}$:

$$ e_K \cdot \frac{e_{K-1}}{n_K}. $$

By the same logic, one can estimate the expected number of True Positives that were present in Tier $K-1$ to be

$$ e_K \cdot \frac{e_{K-1}}{n_K} \cdot \frac{e_{K-2}}{n_{K-1}}, $$

with the convention $e_0 = n$. Iterating through each of the tiers leads to the estimate for the total number of True Positives in all candidate events in the stratum:

$$ \hat{X}_0 = e_K \prod_{k=1}^{K} \frac{e_{k-1}}{n_k}. $$

Normalizing the above by $M$ and simplifying the product leads to the estimate of $\lambda$:

$$ \hat\lambda = \frac{n}{M} \prod_{k=1}^{K} \frac{e_k}{n_k}. \qquad (4.1) $$

Note that an edge case occurs when $e_K = 0$, or even $e_k = 0$ for some $k < K$; that is, when no events are escalated by Tier $k$ for some $k$. In these cases the partial event review process may terminate early, and the natural estimate is $\hat\lambda = 0$.

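For $k = 0, 1, \dots, K$, let

$$ \lambda_k = \mu_0 + \sum_{j=k+1}^{K} \mu_j. $$

That is, $\lambda_k$ is the overall Poisson rate for candidate events in $\mathcal{C}$ which, if fully reviewed, would not be rejected by any of Tiers 1 through $k$, for $k \ge 1$, while $\lambda_0 = \sum_{j=0}^{K} \mu_j$ is the overall rate of candidate events. Note that $\lambda_K = \mu_0 = \lambda$.

Similar to the heuristic derivation of (4.1), one can estimate $\lambda_0$ as follows:

$$ \hat\lambda_0 = \frac{n}{M}, $$

and for $k = 1, \dots, K$,

$$ \hat\lambda_k = \frac{n}{M} \prod_{j=1}^{k} \frac{e_j}{n_j}. \qquad (4.2) $$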

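Note that $\hat\lambda_k$ is well defined whenever $n_1, \dots, n_k > 0$, which is guaranteed by the sampling model (3.2) as long as $e_{k-1} > 0$. If $n_k = 0$, which may occur when $e_{k-1} = 0$ (i.e., early termination; see Algorithm 1), then we set $\hat\lambda_j = 0$ for $j \ge k$, due to the monotonicity $\lambda_0 \ge \lambda_1 \ge \cdots \ge \lambda_K$. Therefore, the estimates are always well defined. Note that $\hat\lambda_K = \hat\lambda$. In fact, the estimates are not only MLEs but also unbiased.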
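The estimators (4.1) and (4.2), including the early-termination convention, are short enough to write out directly. The sketch below is a plain-Python illustration under the notation above; the function names `rate_estimates` and `total_rate` are hypothetical. With $M = 1$, for instance, $n = [200, 30, 10]$ and $e = [1000, 60, 10, 4]$ give $\hat\lambda_0, \dots, \hat\lambda_3 = 1000, 300, 100, 40$.

```python
def rate_estimates(n, e, miles):
    """Per-tier rate estimates for one stratum, following (4.2).

    n: [n_1, ..., n_K], number of events reviewed by each tier.
    e: [e_0, e_1, ..., e_K], with e_0 the corpus size and e_k the number
       of events escalated by Tier k (e_K = confirmed True Positives).
    Returns [lambda_hat_0, ..., lambda_hat_K]; the last entry is (4.1).
    """
    K = len(n)
    estimates = [e[0] / miles]                    # lambda_hat_0 = n / M
    for k in range(1, K + 1):
        if n[k - 1] == 0:
            # Early termination (e_{k-1} = 0): this and all later estimates are 0.
            estimates.extend([0.0] * (K + 1 - k))
            break
        # Multiply by the Tier-k escalation fraction e_k / n_k.
        estimates.append(estimates[-1] * e[k] / n[k - 1])
    return estimates


def total_rate(strata, miles):
    """lambda_hat summed over strata; `strata` is a list of (n, e) pairs."""
    return sum(rate_estimates(n, e, miles)[-1] for n, e in strata)
```

Because the data in different strata are independent, `total_rate` simply sums the per-stratum final-tier estimates, matching (3.3).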

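Theorem 1.

For each stratum, the estimates $\hat\lambda_0, \dots, \hat\lambda_K$ above are the MLEs of $\lambda_0, \dots, \lambda_K$ and are unbiased. Consequently, with the estimates computed for each stratum separately, $\hat\lambda = \sum_{s=1}^{S} \hat\lambda_{s,K}$ is the MLE of $\lambda$ in (3.3) and is unbiased.

The derivation of the MLE for $\lambda_k$ (and thus $\lambda$) relies on a novel application of the Expectation-Maximization algorithm [5]. The complete proof is provided in the Appendix.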

5. Confidence Interval

As described in Algorithm 2, our model involves a single parameter of interest, $\lambda$, but a large set of nuisance parameters, $\{\mu_{s,k}\}$ and $\{p_{s,k}\}$. Due to the complexity of the model, it is hard to derive an “exact” confidence interval for $\lambda$. As such, we consider several possible strategies for computing confidence intervals (CIs), then assess the relative merits of each strategy.

We first describe two standard approaches, based on a parametric bootstrap and on a normal approximation (Wald CI), respectively. These methods are expected to work well asymptotically as the expected True Positive event count increases, i.e., as the mileage goes to infinity. However, the CIs may be less reliable when True Positive events are rare. Due to the product importance of these rare event settings, we propose a novel approach that approximates the exact model with a simpler model, a weighted sum of independent Poissons (WSIP), and then applies the Gamma method ([7]), one of the most established methods for WSIP. The Gamma method is expected to work well not only for scenarios with high event counts (common events), but also for scenarios with relatively few events (rare events).

5.1. Parametric Bootstrap CI

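With the mileage $M$ fixed, the model for the data generation can conceptually be written as $\mathcal{D} \sim F_{\theta}$, where $\mathcal{D} = \{(n_{s,k}, e_{s,k})\}$ denotes the observed data and $\theta$ consists of $\{\mu_{s,k}\}$ and $\{p_{s,k}\}$. The detailed data generation procedure is provided by Algorithm 2. This is a parametric model, and the parametric bootstrap CI [6] can be constructed as follows.

Let $\hat\mu_{s,k}$ and $\hat p_{s,k}$ be the point estimates of $\mu_{s,k}$ and $p_{s,k}$, respectively, where the $\hat\mu_{s,k}$ are obtained by applying the MLE estimator described in Theorem 1 to each stratum separately (since $\mu_{s,0} = \lambda_{s,K}$ and $\mu_{s,k} = \lambda_{s,k-1} - \lambda_{s,k}$ for $k \ge 1$, this gives $\hat\mu_{s,0} = \hat\lambda_{s,K}$ and $\hat\mu_{s,k} = \hat\lambda_{s,k-1} - \hat\lambda_{s,k}$), and the following empirical estimate is used for $p_{s,k}$ according to (3.2):

$$ \hat p_{s,k} = \frac{n_{s,k}}{e_{s,k-1}}, \qquad (5.1) $$

which is also the MLE when $n_{s,k} > 1$. It may be worth noting that when $e_{s,k-1} > 1$ and $n_{s,k} = 1$, the MLE for $p_{s,k}$ under the sampling model (3.2) is simply 0, which is close to (5.1) for large $e_{s,k-1}$ but would be less desirable in general.

Then draw $B$ i.i.d. samples of $\mathcal{D}$ according to the generative process outlined in Algorithm 2, plugging in the parameter estimates from the observed data. For $b = 1, \dots, B$,

$$ \mathcal{D}^{(b)} \sim F_{\hat\theta}. $$

For each draw, calculate the parameter estimates by replacing $\mathcal{D}$ in (4.3) and (5.1) with the corresponding quantities in $\mathcal{D}^{(b)}$, and let $\hat\lambda^{(b)}$ denote the resulting estimate of $\lambda$.

The confidence interval for $\lambda$ at level $1 - \alpha$ is given by the $\alpha/2$ and $1 - \alpha/2$ quantiles of $\{\hat\lambda^{(b)}\}_{b=1}^{B}$.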
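A compact NumPy sketch of the parametric bootstrap described above follows. It is self-contained, so it re-implements a minimal estimator and simulator; it assumes the tier sample sizes follow $n_k = \max\{1, \mathrm{Bin}(e_{k-1}, p_k)\}$, converts the rate estimates into $\hat\mu_{s,k}$ via $\hat\mu_{s,0} = \hat\lambda_{s,K}$ and $\hat\mu_{s,k} = \hat\lambda_{s,k-1} - \hat\lambda_{s,k}$, and uses $\hat p_{s,k} = n_{s,k}/e_{s,k-1}$. All function names and defaults (`bootstrap_ci`, `B=2000`, `alpha=0.1`) are illustrative assumptions.

```python
import numpy as np


def _estimates(n, e, miles):
    """lambda_hat_0..lambda_hat_K for one stratum, per (4.2); 0 after early termination."""
    est = [e[0] / miles]
    for k in range(1, len(n) + 1):
        est.append(est[-1] * e[k] / n[k - 1] if n[k - 1] > 0 else 0.0)
    return est


def _simulate(mu, p, miles, rng):
    """One draw of Algorithm 1 with n_k = max(1, Bin(e_{k-1}, p_k))."""
    K = len(p)
    pool = np.repeat(np.arange(K + 1), rng.poisson(np.asarray(mu, dtype=float) * miles))
    n, e = [0] * K, [int(pool.size)] + [0] * K
    for k in range(1, K + 1):
        if pool.size == 0:
            break
        size = max(1, int(rng.binomial(pool.size, p[k - 1])))
        reviewed = rng.choice(pool, size=size, replace=False)
        pool = reviewed[reviewed != k]
        n[k - 1], e[k] = size, int(pool.size)
    return n, e


def bootstrap_ci(strata, miles, alpha=0.1, B=2000, seed=0):
    """Parametric bootstrap CI for lambda; `strata` is a list of observed (n, e) pairs."""
    rng = np.random.default_rng(seed)
    fitted = []
    for n, e in strata:
        lam = _estimates(n, e, miles)
        # mu_hat_0 = lambda_hat_K and mu_hat_k = lambda_hat_{k-1} - lambda_hat_k (k >= 1);
        # max(0, .) only guards against floating-point round-off.
        mu_hat = [lam[-1]] + [max(0.0, lam[k - 1] - lam[k]) for k in range(1, len(lam))]
        # p_hat_k = n_k / e_{k-1} as in (5.1); unused (set to 1) if e_{k-1} = 0.
        p_hat = [n[k - 1] / e[k - 1] if e[k - 1] > 0 else 1.0 for k in range(1, len(e))]
        fitted.append((mu_hat, p_hat))
    draws = np.empty(B)
    for b in range(B):
        # Simulate every stratum from the fitted model and re-estimate lambda.
        draws[b] = sum(_estimates(*_simulate(mu, p, miles, rng), miles)[-1] for mu, p in fitted)
    return float(np.quantile(draws, alpha / 2)), float(np.quantile(draws, 1 - alpha / 2))
```

The cost grows with $B$ times the number of candidate events, since every bootstrap draw re-simulates the full review pipeline for every stratum.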

5.2. Wald CI

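In order to construct the Wald CI, we need to estimate $\mathrm{Var}(\hat\lambda)$. Unfortunately, the exact formula for $\mathrm{Var}(\hat\lambda)$ is extremely complicated. Instead, we derive a first-order approximation by assuming $M$ is large.

It may be interesting to note that $\hat\lambda_s$, as defined by (4.1) with index $s$ added to indicate the stratum, can be rewritten as

$$ \hat\lambda_s = \frac{e_{s,K}}{M \hat\pi_s}, $$

where

$$ \hat\pi_s = \prod_{k=1}^{K} \hat p_{s,k}, $$

and the $\hat p_{s,k}$ are defined by (5.1). Thus $\hat\lambda$ in (4.4) can be rewritten as

$$ \hat\lambda = \sum_{s=1}^{S} \frac{e_{s,K}}{M \hat\pi_s}. \qquad (5.2) $$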

Lemma 2.

If a random variable $X \sim \mathrm{Poisson}(\mu)$ and $Y \mid X \sim \mathrm{Bin}(X, p)$, then $Y \sim \mathrm{Poisson}(\mu p)$.
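Lemma 2 is the classical Poisson thinning property. A quick Monte Carlo check can make it concrete: if the thinned counts are $\mathrm{Poisson}(\mu p)$, their sample mean and sample variance should both be close to $\mu p$. The function name and the values $\mu = 3$, $p = 0.4$ are arbitrary choices for illustration.

```python
import numpy as np


def thinning_check(mu=3.0, p=0.4, draws=200_000, seed=1):
    """Monte Carlo check of Lemma 2: thin Poisson(mu) counts with Bin(X, p)."""
    rng = np.random.default_rng(seed)
    x = rng.poisson(mu, size=draws)   # X ~ Poisson(mu)
    y = rng.binomial(x, p)            # Y | X ~ Bin(X, p), elementwise
    # For Y ~ Poisson(mu * p), mean and variance both equal mu * p.
    return float(y.mean()), float(y.var())
```

With the defaults, both returned values should be near $\mu p = 1.2$; a thinned count that was not Poisson would typically show a variance noticeably different from its mean.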

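For large $M$, we may approximate the model (3.2), with subscript $s$ added to indicate the stratum, as

$$ n_{s,k} \mid e_{s,k-1} \sim \mathrm{Bin}(e_{s,k-1}, p_{s,k}), $$

so that each event escalated to Tier $k$ is reviewed independently with probability $p_{s,k}$. Since True Positives are never rejected, iteratively applying Lemma 2 to the True Positives, tier by tier, gives

$$ e_{s,K} \sim \mathrm{Poisson}(M \mu_{s,0} \pi_s), \qquad (5.3) $$

where $\pi_s = \prod_{k=1}^{K} p_{s,k}$. By combining this with (5.2), we can obtain the following result.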

Theorem 3.

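Assume $\mu_{s,0} > 0$ and $p_{s,k} > 0$ for all $s$ and $k$. As $M \to \infty$, we have

$$ \sqrt{M}\,(\hat\lambda - \lambda) \xrightarrow{d} \mathcal{N}\Big(0, \sum_{s=1}^{S} \frac{\mu_{s,0}}{\pi_s}\Big), $$

where $\mathcal{N}(m, \sigma^2)$ denotes the normal distribution with mean $m$ and variance $\sigma^2$.

As $M \to \infty$, $e_{s,k-1} \to \infty$ and thus $\hat p_{s,k} \to p_{s,k}$ (and $\hat\pi_s \to \pi_s$) in probability; the proof then follows from Slutsky's theorem.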
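To see where the asymptotic variance comes from, treat $\pi_s = \prod_{k} p_{s,k}$ as known and use the Poisson approximation $e_{s,K} \sim \mathrm{Poisson}(M \mu_{s,0} \pi_s)$ from (5.3). The moment calculation below is our own illustration of that step, not a reproduction of the Appendix proof.

```latex
\mathbb{E}\!\left[\frac{e_{s,K}}{M\pi_s}\right] = \frac{M\mu_{s,0}\pi_s}{M\pi_s} = \mu_{s,0},
\qquad
\operatorname{Var}\!\left[\frac{e_{s,K}}{M\pi_s}\right] = \frac{M\mu_{s,0}\pi_s}{M^2\pi_s^2} = \frac{\mu_{s,0}}{M\pi_s},
\qquad
\operatorname{Var}\!\left[\sqrt{M}\sum_{s=1}^{S}\frac{e_{s,K}}{M\pi_s}\right] = \sum_{s=1}^{S}\frac{\mu_{s,0}}{\pi_s}.
```

Summing over independent strata and applying the Poisson central limit theorem gives the normal limit; replacing $\pi_s$ by $\hat\pi_s$ is the step handled by Slutsky's theorem.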

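From Theorem 3, the Wald CI can then be constructed based on the plug-in principle as

$$ \hat\lambda \pm z_{1-\alpha/2} \sqrt{\sum_{s=1}^{S} \frac{e_{s,K}}{M^2 \hat\pi_s^2}}, $$

where $z_{1-\alpha/2}$ is the $(1-\alpha/2)$ quantile of $\mathcal{N}(0, 1)$ and $\hat\pi_s = \prod_{k=1}^{K} \hat p_{s,k}$.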
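The plug-in Wald interval needs only the final confirmed counts and the estimated end-to-end sampling fractions $\hat\pi_s$. The following is a minimal standard-library sketch; `wald_ci` and its input layout (a list of `(n, e)` pairs with `e[0]` equal to the stratum's corpus size) are illustrative assumptions. Strata with no confirmed True Positives contribute zero to both the estimate and the variance, which is exactly why the interval can collapse for rare events.

```python
from math import prod, sqrt
from statistics import NormalDist


def wald_ci(strata, miles, alpha=0.1):
    """Wald CI for lambda from Theorem 3 with the plug-in variance.

    strata: list of (n, e) pairs, n = [n_1..n_K], e = [e_0..e_K].
    """
    lam_hat, var_hat = 0.0, 0.0
    for n, e in strata:
        if e[-1] == 0:
            continue  # no confirmed True Positives: contributes 0 to both sums
        # pi_hat_s = prod_k p_hat_k with p_hat_k = n_k / e_{k-1}, as in (5.1).
        pi_hat = prod(n[k] / e[k] for k in range(len(n)))
        lam_hat += e[-1] / (miles * pi_hat)
        var_hat += e[-1] / (miles * pi_hat) ** 2
    half_width = NormalDist().inv_cdf(1 - alpha / 2) * sqrt(var_hat)
    return lam_hat - half_width, lam_hat + half_width
```

The sketch does not truncate a negative lower bound at zero, so it exhibits the weakness noted in the next paragraph.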

As is well known, proper coverage from the Wald CI requires the normal approximation to be accurate. As such, the Wald CI may not be desirable when the expected event counts are low (i.e., for rare events or for low mileage); for example, its lower bound may become negative.

5.3. Gamma CI based on an approximate weighted sum of independent Poissons

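Given the limitations of the normal approximation outlined above, we propose an alternative approach to constructing CIs for $\lambda$. Consider the estimate $\hat\lambda$ in (5.2), which can be approximated by the quantity below:

$$ \hat\lambda \approx \sum_{s=1}^{S} \frac{e_{s,K}}{M \pi_s}, $$

where, by (5.3), the right-hand side is a weighted sum of independent Poissons (WSIP). The WSIP structure becomes clear by rewriting the approximation as

$$ \hat\lambda \approx \sum_{s=1}^{S} w_s Y_s, \qquad w_s = \frac{1}{M \pi_s}, \qquad Y_s = e_{s,K} \sim \mathrm{Poisson}(M \mu_{s,0} \pi_s) \ \text{independently}. $$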

The distribution of WSIP random variables has been studied extensively in the statistics literature [10, 16, 17, 8]. A WSIP is close to normally distributed when there are high event counts, but can be far from normal for rare events. This again motivates the consideration of a CI methodology that does not rely on normality. In particular, the original Gamma method proposed by [7] has been conjectured, and shown numerically in all simulated scenarios, to provide good coverage properties for the distribution mean [10, 8].

Our third CI methodology leverages these WSIP properties from the literature and can be described as follows:

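• The lower bound is the $\alpha/2$ quantile of the Gamma distribution with mean $\hat\lambda$ and variance $\hat v = \sum_{s=1}^{S} \hat w_s^2 e_{s,K}$, where $\hat w_s = 1/(M \hat\pi_s)$ (the lower bound is taken as 0 when $\hat\lambda = 0$).

• The upper bound is the $1-\alpha/2$ quantile of the Gamma distribution with mean $\hat\lambda + \hat w_{\max}$ and variance $\hat v + \hat w_{\max}^2$, where $\hat w_{\max} = \max_s \hat w_s$.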

Note that the above is based on the original Gamma method, but one may also apply other Gamma methods for WSIP such as the mid-p Gamma interval [8].
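The two bullet points translate directly into code once a Gamma distribution is parameterized by its mean $m$ and variance $v$ (shape $m^2/v$, scale $v/m$). The sketch below uses `scipy.stats.gamma.ppf`; the function names are illustrative, and skipping strata whose review terminated early (where $\hat w_s$ is undefined) is our own assumption rather than something the text specifies.

```python
from math import prod

from scipy.stats import gamma


def _gamma_quantile(q, mean, var):
    """Quantile of the Gamma distribution with the given mean and variance."""
    # shape = mean^2 / var, scale = var / mean
    return float(gamma.ppf(q, mean ** 2 / var, scale=var / mean))


def gamma_ci(strata, miles, alpha=0.1):
    """Gamma CI for lambda treated as a weighted sum of independent Poissons.

    strata: list of (n, e) pairs, n = [n_1..n_K], e = [e_0..e_K].
    """
    lam_hat, v_hat, weights = 0.0, 0.0, []
    for n, e in strata:
        if any(count == 0 for count in e[:-1]):
            continue  # assumption: skip strata whose review terminated early
        pi_hat = prod(n[k] / e[k] for k in range(len(n)))   # prod_k n_k / e_{k-1}
        w_s = 1.0 / (miles * pi_hat)                         # WSIP weight w_hat_s
        weights.append(w_s)
        lam_hat += w_s * e[-1]
        v_hat += w_s ** 2 * e[-1]
    if not weights:
        return 0.0, float("inf")  # no usable stratum: no finite upper bound
    w_max = max(weights)
    lower = 0.0 if lam_hat == 0 else _gamma_quantile(alpha / 2, lam_hat, v_hat)
    upper = _gamma_quantile(1 - alpha / 2, lam_hat + w_max, v_hat + w_max ** 2)
    return lower, upper
```

Note that $\hat w_{\max}$ includes strata with zero confirmed events, so the upper bound stays strictly positive even when nothing has been confirmed yet; this is the behavior that makes the Gamma CI attractive for rare events.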

6. Numerical Studies

6.1. Simulation Setup

We provide several simulation studies to assess and compare the performance of the three confidence interval methods defined in Section 5. The first two simulation studies compare the performance when the behavioral event is rare vs. when the behavioral event is common, using fixed values for most parameters. The third study illustrates that these coverage properties hold for a wide range of parameter values, beyond the specific values chosen for the first two examples. Throughout the simulation section we set $M = 1$ for convenience (the unit could be 1 mile, 10K miles, or one million miles).

In each study, we simulate the data in a consistent manner. Following Algorithm 2, we generate the latent counts $X_{s,k}$ and the observed data $(n_{s,k}, e_{s,k})$. We set strata and assume tiers. Then $n_s = \sum_{k=0}^{K} X_{s,k}$ will be the total number of candidate events entering the tiered event review pipeline in stratum $s$.

Note that when this event review methodology is put into practice, one can imagine a fixed set of events to review, with the Tier 1 sampling rate steadily increasing over time as reviewers complete more events. We simulate this event review progression by varying the Tier 1 sampling rate, $p_{s,1}$. In this way, we can verify whether the confidence intervals have good coverage properties throughout the entire event review process.

For the third simulation study in Section 6.4, we consider a wide range of scenarios. For each scenario, we generate each Tier 1 sampling rate $p_{s,1}$, and generate $p_{s,k}$ for $k \ge 2$ so that the sampling rate for each stratum does not decrease as the tier goes up. $\boldsymbol{\mu}$ is specified later.

Given each set of parameter values ($\boldsymbol{\mu}_s$ and $\mathbf{p}_s$), we simulate the tiered partial event review data $\{n_{s,k}\}$ and $\{e_{s,k}\}$ following Algorithm 2 and replicate this 1000 times. This allows us to obtain the empirical coverage and average CI width under each set of parameters.
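The replication loop can be sketched as follows for a single stratum. The sketch is self-contained, assumes $n_k = \max\{1, \mathrm{Bin}(e_{k-1}, p_k)\}$ for the tier sample sizes, and uses only the Wald interval for brevity; any other CI function with the same inputs could be swapped in. The names `coverage_study` and `wald_ci`, and any parameter values passed to them, are hypothetical and are not the settings used in this paper's studies.

```python
import numpy as np
from statistics import NormalDist


def simulate_stratum(mu, p, miles, rng):
    """One draw of Algorithm 1 (single stratum) with n_k = max(1, Bin(e_{k-1}, p_k))."""
    K = len(p)
    pool = np.repeat(np.arange(K + 1), rng.poisson(np.asarray(mu, dtype=float) * miles))
    n, e = [0] * K, [int(pool.size)] + [0] * K
    for k in range(1, K + 1):
        if pool.size == 0:
            break
        size = max(1, int(rng.binomial(pool.size, p[k - 1])))
        reviewed = rng.choice(pool, size=size, replace=False)
        pool = reviewed[reviewed != k]
        n[k - 1], e[k] = size, int(pool.size)
    return n, e


def wald_ci(n, e, miles, alpha):
    """Single-stratum Wald CI; collapses to (0, 0) when nothing is confirmed."""
    if e[-1] == 0:
        return 0.0, 0.0
    pi_hat = float(np.prod([n[k] / e[k] for k in range(len(n))]))
    lam_hat = e[-1] / (miles * pi_hat)
    half = NormalDist().inv_cdf(1 - alpha / 2) * np.sqrt(e[-1]) / (miles * pi_hat)
    return lam_hat - half, lam_hat + half


def coverage_study(mu, p, miles=1.0, alpha=0.1, reps=1000, seed=0):
    """Empirical coverage of the true rate mu[0] and mean CI width over `reps` replicates."""
    rng = np.random.default_rng(seed)
    hits, widths = 0, []
    for _ in range(reps):
        lo, hi = wald_ci(*simulate_stratum(mu, p, miles, rng), miles, alpha)
        hits += int(lo <= mu[0] <= hi)
        widths.append(hi - lo)
    return hits / reps, float(np.mean(widths))
```

Sweeping the Tier 1 rate `p[0]` while holding the other inputs fixed reproduces the kind of coverage-versus-sampling-rate curve described above.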

6.2. Simulation for Common Events

Some behavioral events are relatively common, and the observed event counts will be relatively high. As described earlier, this can result in better behaved quantities that more closely follow a normal distribution. Here we assess the performance of our proposed CI methodologies in this setting.

To represent this common event scenario, the values below are used for :

With this parameter setting, .

Figure 6.1 illustrates the coverage properties of the three CI methods when the behavioral event is common. The x-axis represents the sampling rate, allowing us to assess how well these CIs meet the product requirement of adequate coverage regardless of the sampling rate. For all three methods, coverage deviates more from the nominal level (over- or under-covering) when sampling rates are low, and it improves as event review progresses. As sampling rates increase, the Parametric Bootstrap rapidly converges to near the nominal coverage rate and also produces the narrowest intervals. However, its under-coverage at low sampling rates is non-negligible (82% coverage vs. the desired 90%). In contrast, the Gamma CI consistently over-covers (98% coverage at low sampling rates) and produces the widest intervals. It does, however, provide the most symmetric errors, with similar error rates for the upper and lower bounds; this indicates that its intervals do not systematically under- or over-estimate the rates. Finally, the Wald CI falls between the other two methods, with intervals that somewhat over-cover.

6.3. Simulation for Rare Events

Next, we consider rarer events, where the observed event counts will be quite low. In this setting, the estimates will not closely follow a normal distribution, making the CI calculation potentially more challenging. Here we assess the performance of our proposed CI methodologies in this setting, again tracking how the coverage properties evolve as more events are reviewed.

To represent this scenario, we use the same as before for but reduce for all :

With this parameter setting, .

Figure 6.2 reports the coverage properties of the three confidence interval methods when the behavioral event is rare. The patterns are largely similar to those in Figure 6.1; the primary difference between the rare and common event cases is the performance at low sampling rates. The under-coverage of the Wald and Parametric Bootstrap CIs is severe at the lowest sampling rates, falling short by as much as 30 percentage points (60% coverage vs. the desired 90%). In this case, the over-coverage of the Gamma CI (97% vs. the desired 90%) is clearly preferable. That said, the Gamma CI is much wider than the Parametric Bootstrap CI, up to three times as wide at low sampling rates.

6.4. Comprehensive simulation

In each of the simulations above, the majority of parameters were fixed, while the first tier sampling rate was allowed to vary. In our final simulation we continue to vary the sampling rate, as described earlier, but consider different scenarios covering a wide range of parameter values . In each scenario, we simulate the underlying Poisson rates as follows:

where independently. This simulation study is inspired by the simulation setup in [8].

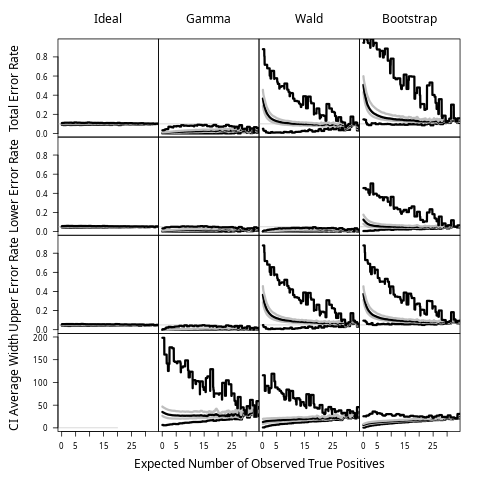

Figure 6.3 reports the performance comparison. Unlike Figures 6.1 and 6.2, where the x-axis represented a range of sampling rates, the x-axis here corresponds to the expected number of observed true positive events per mile. In the earlier examples, the underlying event rates were fixed and only the sampling rates varied; in this study, both the sampling rates and the event rates vary simultaneously. This x-axis therefore shows how the coverage properties evolve asymptotically, regardless of whether the growth in observed events comes from increased review or from increased event occurrence.

The overall pattern is similar to the previous examples. For the lower bound, both the Gamma and Wald methods maintain the nominal coverage. However, the Wald method can badly under-cover at its upper bound, while the Parametric Bootstrap can badly under-cover at both the lower and upper bounds. The Gamma method is the only method that produces reliable confidence intervals in all scenarios. Not surprisingly, it also produces the widest intervals on average.

7. Summary and Discussion

Before launching any new autonomous driving software onto public roads, it is critical to first evaluate the driving quality of the software. To this end, estimation of behavioral event rates has become a statistical focus area within the autonomous vehicles industry. This estimation process often requires more sophisticated statistical consideration than one might expect at first glance.

As described earlier, accurate estimation of these event rates relies on accurate detection of the behavioral events within the driving logs. For many such events, we rely on algorithmic detectors combined with a partial tiered event review process in which human reviewers reject or escalate the candidate events at each tier. Importantly, this event review process is a streaming process that rarely provides complete review of all events in the system. As a result, many candidate events have missing outcome labels, complicating estimation of the total event count. In addition, these events may have progressed to different stages of the event review pipeline, and this partial information can be used to better estimate each candidate event’s likelihood of being a True Positive.

While the point estimate for the event rate is fairly intuitive, constructing a reliable confidence interval for the rate is less straightforward. As defined, the data generation model has a very large number of latent variables and nuisance parameters. In addition, the practical application of this work within the autonomous vehicles industry requires strong performance across behavioral event types, from benign common events to rarer, more safety-relevant events. As shown through the simulation studies, the different CI methods have different strengths and weaknesses, and these vary with the sampling rate and/or event frequency. Thus, the choice of confidence interval may depend on the characteristics of the behavioral event of interest. For a blanket approach that ensures consistent conservative coverage, we propose the Gamma method based on the weighted sum of independent Poissons.

The Parametric Bootstrap CI is consistently the narrowest CI, but it also frequently under-covers. For common behavioral events with a reasonable rate of review, this under-coverage is typically not severe. However, when the sampling rate is low (e.g., under 25%), this method can drastically under-cover.

The Wald CI is largely similar to the Parametric Bootstrap, except that the Wald CIs are somewhat wider and provide greater coverage. For applications where it is practical to require minimum rates of review, this method may be attractive to some users. With sufficient guardrails (that is, using it only for common events and requiring minimum rates of review before reporting), this method provides close to the intended coverage, with narrower intervals that can inform downstream product decision-making by stakeholders. Of course, we do not recommend this method unless the practitioner can guarantee that the application is one where the method achieves the desired coverage.

In many cases the above methods are not appropriate, for example when the user has no a priori information about the relative frequency of the behavioral event, when the event is known to be rare, or when there is a product requirement to report rates at all stages of the event review process. In each of these settings, we recommend the Gamma method based on the weighted sum of independent Poissons, which maintained consistent coverage across all simulations.

The Gamma method is a conservative strategy: it consistently over-covers relative to the target coverage rate, and its intervals are consistently wider than those of the other methods. While this over-coverage is not strictly desirable (we’d prefer to achieve the target coverage rate precisely!), we believe that a conservative approach is the best strategy when estimating the rate of high-priority, safety-relevant behavioral events. In addition, the upper and lower error rates are generally quite similar, giving confidence that these intervals will not systematically over- or under-estimate the rate of occurrence of undesirable behavioral events. These properties are particularly important given the very real implications of launching an ADS on public roads.

In summary, we have outlined the benefits of the streaming partial tiered event review, but we have also shown that estimating confidence intervals for behavioral event rates under this scheme is quite challenging. While the Gamma method had the most consistent coverage, the utility of the resulting confidence intervals is limited by their excessive width and over-coverage in many settings. These findings mirror the properties observed for the original Gamma method [7]. Future work should further refine and improve these strategies while maintaining the strong coverage properties of the existing approach.
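For reference, the original Gamma interval of Fay and Feuer [7] for a weighted sum of independent Poisson counts can be sketched in a few lines. This is the published 1997 construction, not the modified Gamma CI developed in Section 5. With y = Σ w_i x_i, v = Σ w_i² x_i and w_M = max w_i, the lower bound is the α/2 quantile of a gamma distribution with shape y²/v and scale v/y, and the upper bound is the 1 − α/2 quantile with shape (y + w_M)²/(v + w_M²) and scale (v + w_M²)/(y + w_M). The function names are ours, weights are assumed positive, and to keep the sketch dependency-free the gamma quantile is found by bisection on a hand-written regularized incomplete gamma function (`scipy.stats.gamma.ppf` would be the usual library choice).

```python
import math


def _gamma_cdf(x: float, shape: float) -> float:
    """Regularized lower incomplete gamma function P(shape, x), unit scale."""
    if x <= 0.0:
        return 0.0
    log_prefactor = -x + shape * math.log(x) - math.lgamma(shape)
    if x < shape + 1.0:
        # Power series; converges quickly in this region.
        term = total = 1.0 / shape
        n = 0
        while abs(term) > abs(total) * 1e-16:
            n += 1
            term *= x / (shape + n)
            total += term
        return total * math.exp(log_prefactor)
    # Continued fraction for the upper tail Q(shape, x) (modified Lentz method).
    tiny = 1e-300
    b = x + 1.0 - shape
    c, d = 1.0 / tiny, 1.0 / b
    h = d
    for i in range(1, 10_000):
        an = -i * (i - shape)
        b += 2.0
        d = an * d + b
        d = tiny if abs(d) < tiny else d
        c = b + an / c
        c = tiny if abs(c) < tiny else c
        d = 1.0 / d
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < 1e-15:
            break
    return 1.0 - h * math.exp(log_prefactor)


def gamma_ppf(q: float, shape: float, scale: float = 1.0) -> float:
    """Quantile of a Gamma(shape, scale) distribution, found by bisection."""
    lo, hi = 0.0, max(1.0, shape)
    while _gamma_cdf(hi, shape) < q:  # expand the bracket until it contains q
        hi *= 2.0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if _gamma_cdf(mid, shape) < q:
            lo = mid
        else:
            hi = mid
    return scale * 0.5 * (lo + hi)


def fay_feuer_interval(counts, weights, alpha=0.10):
    """Fay-Feuer (1997) gamma interval for sum(w_i * X_i), X_i ~ Poisson, w_i > 0."""
    y = sum(w * x for w, x in zip(weights, counts))      # point estimate
    v = sum(w * w * x for w, x in zip(weights, counts))  # variance estimate
    w_max = max(weights)
    lower = gamma_ppf(alpha / 2, y * y / v, v / y) if y > 0 else 0.0
    upper = gamma_ppf(
        1 - alpha / 2,
        (y + w_max) ** 2 / (v + w_max ** 2),
        (v + w_max ** 2) / (y + w_max),
    )
    return lower, upper
```

With a single unit-weight count the interval reduces to the exact (Garwood) Poisson interval; for example, `fay_feuer_interval([5], [1.0])` gives roughly (1.97, 10.51) at the 90% level. The extra w_M terms in the upper bound are what make the construction conservative when a few large weights dominate the sum.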

A vast literature exists on the subject of sampling [4], but the complex mixed sampling technique presented here is new to the area of autonomous vehicles. How to construct a valid confidence interval in the presence of many nuisance parameters is still very much an open problem for rare events, and standard approaches such as the bootstrap and Wald CI may fail easily for small event counts (e.g., [19]). The work most relevant to ours includes the profile likelihood-based method [18], the Buehler method [2], and the hybrid sampling method [3]; however, these cannot easily be applied to our problem. See [14, 11] for recent reviews on the subject.

8. Appendix: proof of Theorem 1

We first present a theorem about the relationship between the Poisson, multivariate Hypergeometric and multinomial distributions, which may be of independent interest and is used to prove Theorem 1. We then derive the MLE using the EM algorithm. Results 1) and 3) of Theorem 1 follow directly from Proposition 9, and result 2) follows from Lemma 5. Finally, we provide an illustrative example of how to solve the EM equations.

8.1. Relation between Poisson, multivariate Hypergeometric and multinomial distributions

Consider an urn of balls, where each ball has a color with . Let be the number of balls with color , and let and be the column vector of .

Let be a random number whose distribution only depends on .

Randomly draw balls from the urn without replacement, and let be the number of balls with color for . Let and be the column vector of .

Then, conditional on , follows a multivariate Hypergeometric distribution:

Theorem 4.

If , , then the following results hold.

1) Conditional on , the distribution of is Multinomial with shifted mean:

where .

2) Conditional on , is independent of , and follows a conditional Poisson distribution:

where is the normalization factor and only depends on . In other words,

where and are independent.

Proof.

Note that the joint probability can be written as

So given , we have

where . This completes the proof of 1).

The proof of 2) follows from Bayes' theorem, i.e.

∎
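Part 1) of Theorem 4 lends itself to a quick numerical sanity check. The sketch below is a Monte Carlo check of our reading of that result: if the colour counts are independent Poisson and the number of draws depends only on the total number of balls, then, given the number of undrawn balls, the undrawn colour counts behave like a Multinomial sample with probabilities proportional to the Poisson rates. The rates, the 30% draw rule, and the function name `check_theorem4` are hypothetical choices for illustration.

```python
import numpy as np


def check_theorem4(rates, draw_fraction=0.3, n_rep=20_000, seed=0):
    """Monte Carlo check: undrawn colour counts vs. Multinomial(remaining, p).

    rates: Poisson mean for each colour (hypothetical values).
    draw_fraction: hypothetical rule; the draw size depends only on the urn total.
    """
    rng = np.random.default_rng(seed)
    rates = np.asarray(rates, dtype=float)
    p = rates / rates.sum()
    undrawn_sum = np.zeros_like(rates)
    remaining_sum = 0.0
    sq_dev = 0.0   # squared deviations of colour 0 from its conditional mean
    var_sum = 0.0  # Binomial(remaining, p[0]) variances implied by the theorem
    for _ in range(n_rep):
        urn = rng.poisson(rates)  # independent Poisson colour counts
        total = int(urn.sum())
        if total == 0:
            continue
        n_draw = int(np.ceil(draw_fraction * total))
        drawn = rng.multivariate_hypergeometric(urn, n_draw)  # without replacement
        undrawn = urn - drawn
        remaining = total - n_draw
        undrawn_sum += undrawn
        remaining_sum += remaining
        sq_dev += (undrawn[0] - remaining * p[0]) ** 2
        var_sum += remaining * p[0] * (1.0 - p[0])
    return undrawn_sum / remaining_sum, p, sq_dev / var_sum


if __name__ == "__main__":
    shares, p, var_ratio = check_theorem4([6.0, 3.0, 1.0])
    print("undrawn shares:", shares, "target p:", p, "variance ratio:", var_ratio)
```

The pooled undrawn shares should be close to p and the variance ratio close to 1. A draw rule that looked at the ball colours would in general invalidate this check, which is why the theorem requires the draw size to depend only on the total.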

8.2. Likelihood inference for a single stratum

The data for a single stratum can be described as . Note that in case the partial event review process is terminated earlier due to no escalation, say for some tier , then there will be no data from higher tiers (i.e., and for according to Algorithm 1), so we may simply reset to for the data likelihood calculation. Let and . The observed data consists of and . Without loss of generality, we will take the mileage .

To follow Algorithm 1, let consist of all candidate events. Let be the set of events which are escalated by Tier- for , so consists of the events which are True Positive. Furthermore, each candidate event is associated with a label, which is either FP- (if the event would be escalated by Tier- but rejected as False Positive by Tier-) for some , or TP (if the event would not be rejected by any Tier), under the complete event review configuration.

For , let be the number of FP- events in , , and let be the number of TP events in , then

For convenience, we also introduce . With some abuse of notation, is identical to as defined by (3.1).

Let , for . Table 1 summarizes the notation of and relation with .

| Tier-0 | Tier-1 | Tier- | Tier- | ||

| FP-1 | 0 | 0 | 0 | 0 | |

| FP-2 | 0 | 0 | 0 | ||

| 0 | 0 | ||||

| FP- | 0 | ||||

| TP | |||||

| Total |

According to the sampling procedure described in Algorithm 1, the following conditional independence holds:

(8.1)

Also note that only depends on . Therefore the full likelihood function of and can be written as

(8.2)

According to the Poisson assumption described in Algorithm 1,

According to the sampling procedure,

(8.3)

Lemma 5.

Let be defined by (4.2), then

Proof.

It is sufficient to prove the result for , since the proof is the same for other . By (8.3), conditional on and , (which is ) follows a hypergeometric distribution. Using (8.1), for , we have

Note that

where indicates whether for all . Then

By iteratively applying (8.1) for ,

for , we get

which is by (3.1) since . ∎

In order to derive the MLE, we apply the EM algorithm.

8.3. The Expectation-Maximization algorithm

Lemma 6.

The M-step simplifies to

(8.4)

which implies , since .

Proof.

Note that in the full likelihood function (8.2), only the term involves the parameters of interest. So the M-step, which maximizes w.r.t. , is equivalent to maximization of

where does not depend on .

The conclusion follows from

∎

For let

In order to derive the E-step, we need the following result, which follows from Theorem 4. The detailed proof is omitted for conciseness.

Lemma 7.

Conditional on , is independent of , and follows a multinomial distribution with mean shift as follows:

Furthermore, for each , conditional on , is independent of , and follows a multinomial distribution with mean shift:

The result below follows trivially from Lemma 7.

Lemma 8.

The E-step can be calculated as below: for ,

(8.5)

(8.6)

The convergence point satisfies the equations from both the M-step and E-step, i.e. (8.4), (8.5) and (8.6), which are summarized below.

Proposition 9.

The EM algorithm converges to a unique solution as below:

for . Thus the MLE of is given by for and .

The details of solving these equations are omitted for conciseness. Instead, we provide the detailed proof for to illustrate the idea.

8.4. Illustration with

For illustration, we show below the complete E-step and M-step for , for which the notation of is listed in Table 2 for convenience.

| | Tier-0 | Tier-1 | Tier-2 |
|---|---|---|---|
| FP-1 | | 0 | 0 |
| FP-2 | | | 0 |
| TP | | | |
| Total | | | |

According to the description of the EM algorithm in the previous section, we have, with ,

- M-step:

- E-step:

The convergence point of the EM algorithm, i.e. satisfies the equations in both E-step and M-step, which simplifies to:

(8.7)

(8.8)

(8.9)
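To make the fixed-point equations concrete, the sketch below runs the EM iteration for a general number of review tiers and compares the result with a closed-form product of per-tier escalation fractions. It rests on our own simplifying assumptions, which may differ in detail from Algorithm 1: the mileage is 1; Tier j reviews a simple random sample of n_j events drawn from the events escalated by Tier j−1 (all N_0 candidates for Tier 1); e_j of them are escalated and the rest are labelled FP-j; the events escalated by the last tier are TP. Following Theorem 4 as we read it, the E-step splits each unreviewed pool among its possible labels in proportion to the current rates, and the M-step sets each rate equal to its expected count. The symbols N_0, n_j, e_j and both function names are hypothetical.

```python
def em_tiered_rates(n_candidates, reviewed, escalated, tol=1e-12, max_iter=100_000):
    """EM for the rates of FP-1, ..., FP-K and TP in one stratum (mileage = 1).

    reviewed[j], escalated[j]: events reviewed / escalated at Tier j+1.
    """
    K = len(reviewed)
    # Observed labels: rejects at Tier j are FP-j; escalations at Tier K are TP.
    observed = [n - e for n, e in zip(reviewed, escalated)] + [escalated[-1]]
    # Pool j: escalated by the previous tier but not reviewed at Tier j+1.
    # Its events can only carry labels j..K (FP-(j+1), ..., FP-K, TP).
    sources = [n_candidates] + list(escalated[:-1])
    pools = [s - n for s, n in zip(sources, reviewed)]
    lam = [n_candidates / (K + 1)] * (K + 1)  # positive starting values
    for _ in range(max_iter):
        # E-step: expected label counts, splitting each pool proportionally to lam.
        expected = [float(x) for x in observed]
        for j, pool in enumerate(pools):
            denom = sum(lam[j:])
            for c in range(j, K + 1):
                expected[c] += pool * lam[c] / denom
        # M-step: with unit mileage, each Poisson rate equals its expected count.
        change = max(abs(a - b) for a, b in zip(expected, lam))
        lam = expected
        if change < tol:
            break
    return lam


def product_rates(n_candidates, reviewed, escalated):
    """Closed-form fixed point: products of per-tier escalation fractions."""
    rates, mass = [], float(n_candidates)
    for n, e in zip(reviewed, escalated):
        rates.append(mass * (n - e) / n)  # FP-j: mass reaching Tier j times reject share
        mass *= e / n                     # mass escalated past Tier j
    rates.append(mass)                    # TP
    return rates
```

For a two-tier example with N_0 = 1000 candidates, 200 reviewed at Tier 1 (50 escalated) and 20 reviewed at Tier 2 (8 escalated), both functions give rates of 750, 150 and 100 for FP-1, FP-2 and TP; in particular, the TP estimate is 1000 × (50/200) × (8/20) = 100. EM converges slowly here because most events are unreviewed, which is one reason to solve the fixed-point equations directly when a closed form is available.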

Acknowledgment

We would like to thank Henning Hohnhold and Kelvin Wu for many insightful discussions.

The event review processes described here are operationalized in partnership with multiple cross-functional teams. We would like to especially thank Vinit Arondekar, Jesse Breazeale, Han Hui, Jeffy Johns, Sidlak Malaki, Kentaro Takada, Ally Yang and Frank Zong for their collaboration.

References

- [1] Peter J Bickel and Kjell A Doksum. Mathematical statistics: basic ideas and selected topics, Volume I, 2nd edition. Chapman and Hall/CRC, 2015.

- [2] Robert J Buehler. Confidence intervals for the product of two binomial parameters. Journal of the American Statistical Association, 52(280):482–493, 1957.

- [3] Chin-Shan Chuang and Tze Leung Lai. Hybrid resampling methods for confidence intervals. Statistica Sinica, pages 1–33, 2000.

- [4] William G Cochran. Sampling Techniques, 3rd edition. John Wiley & Sons, 1977.

- [5] Arthur P Dempster, Nan M Laird, and Donald B Rubin. Maximum likelihood from incomplete data via the EM algorithm. Journal of the Royal Statistical Society: Series B (Methodological), 39(1):1–22, 1977.

- [6] Bradley Efron and Raoul LePage. Introduction to bootstrap. Wiley & Sons, New York, 1992.

- [7] Michael P Fay and Eric J Feuer. Confidence intervals for directly standardized rates: a method based on the gamma distribution. Statistics in Medicine, 16(7):791–801, 1997.

- [8] Michael P Fay and Sungwook Kim. Confidence intervals for directly standardized rates using mid-p gamma intervals. Biometrical Journal, 59(2):377–387, 2017.

- [9] Erich L Lehmann and George Casella. Theory of point estimation. Springer Science & Business Media, 2006.

- [10] Hon Keung Tony Ng, Giovanni Filardo, and Gang Zheng. Confidence interval estimating procedures for standardized incidence rates. Computational Statistics & Data Analysis, 52(7):3501–3516, 2008.

- [11] Wolfgang A Rolke, Angel M Lopez, and Jan Conrad. Limits and confidence intervals in the presence of nuisance parameters. Nuclear Instruments and Methods in Physics Research Section A: Accelerators, Spectrometers, Detectors and Associated Equipment, 551(2-3):493–503, 2005.

- [12] SAE. Taxonomy and Definitions for Terms Related to Driving Automation Systems for On-Road Motor Vehicles - J3016 (April 2021). https://www.sae.org/standards/content/j3016_202104.

- [13] Matthew Schwall, Tom Daniel, Trent Victor, Francesca Favaro, and Henning Hohnhold. Waymo public road safety performance data. Technical report, Waymo LLC, 2020. https://www.waymo.com/safety.

- [14] Bodhisattva Sen, Matthew Walker, and Michael Woodroofe. On the unified method with nuisance parameters. Statistica Sinica, pages 301–314, 2009.

- [15] Pei Sun, Henrik Kretzschmar, Xerxes Dotiwalla, Aurelien Chouard, Vijaysai Patnaik, Paul Tsui, James Guo, Yin Zhou, Yuning Chai, Benjamin Caine, Vijay Vasudevan, Wei Han, Jiquan Ngiam, Hang Zhao, Aleksei Timofeev, Scott Ettinger, Maxim Krivokon, Amy Gao, Aditya Joshi, Yu Zhang, Jonathon Shlens, Zhifeng Chen, and Dragomir Anguelov. Scalability in perception for autonomous driving: Waymo open dataset. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2020.

- [16] Michael Bruce Swift. A simulation study comparing methods for calculating confidence intervals for directly standardized rates. Computational Statistics & Data Analysis, 54(4):1103–1108, 2010.

- [17] Ram C Tiwari, Limin X Clegg, and Zhaohui Zou. Efficient interval estimation for age-adjusted cancer rates. Statistical Methods in Medical Research, 15(6):547–569, 2006.

- [18] DJ Venzon and SH Moolgavkar. A method for computing profile-likelihood-based confidence intervals. Journal of the Royal Statistical Society: Series C (Applied Statistics), 37(1):87–94, 1988.

- [19] Weizhen Wang. A note on bootstrap confidence intervals for proportions. Statistics & Probability Letters, 83(12):2699–2702, 2013.

- [20] N. Webb, D. Smith, C. Ludwick, T.W. Victor, Q. Hommes, F. Favaro, G. Ivanov, and T. Daniel. Waymo’s safety methodologies and safety readiness determinations. Technical report, Waymo LLC, 2020. https://www.waymo.com/safety.

- [21] Yannis G Yatracos. A small sample optimality property of the MLE. Sankhyā: The Indian Journal of Statistics, Series A, pages 90–101, 1998.

- [22] Ekim Yurtsever, Jacob Lambert, Alexander Carballo, and Kazuya Takeda. A survey of autonomous driving: Common practices and emerging technologies. IEEE Access, 8:58443–58469, 2020.