Bayesian segmented Gaussian copula factor model for single-cell sequencing data

Abstract

Single-cell sequencing technologies have significantly advanced molecular and cellular biology, offering unprecedented insights into cellular heterogeneity by allowing for the measurement of gene expression at an individual cell level. However, the analysis of such data is challenged by the prevalence of low counts due to dropout events and the skewed nature of the data distribution, which conventional Gaussian factor models struggle to handle effectively. To address these challenges, we propose a novel Bayesian segmented Gaussian copula model to explicitly account for inflation of zero and near-zero counts, and to address the high skewness in the data. By employing a Dirichlet-Laplace prior for each column of the factor loadings matrix, we shrink the loadings of unnecessary factors towards zero, which leads to a simple approach to automatically determine the number of latent factors, and resolve the identifiability issue inherent in factor models due to the rotational invariance of the factor loadings matrix. Through simulation studies, we demonstrate the superior performance of our method over existing approaches in conducting factor analysis on data exhibiting the characteristics of single-cell data, such as excessive low counts and high skewness. Furthermore, we apply the proposed method to a real single-cell RNA-sequencing dataset from a lymphoblastoid cell line, successfully identifying biologically meaningful latent factors and detecting previously uncharacterized cell subtypes.

Keyword: Dirichlet-Laplace prior; Inflation of low counts; Non-Gaussianity; Skewness.

1 Introduction

Factor analysis or latent factor model is a powerful tool for multivariate data exploration, routinely applied in fields such as social science, economics, and genomics. It reduces dimension by representing observed variables in terms of a linear combination of a smaller number of latent factors, simplifying the interpretation of complex datasets. In this paper, we focus on single-cell sequencing data, with a motivating dataset from the 10x Genomics single-cell RNA-sequencing (scRNA-seq). Our goal is to develop a novel factor analysis approach for single-cell sequencing data, aimed at uncovering the underlying dependencies among sequenced genes that reflect coordinated biological processes, while simultaneously reducing the dimensionality of the data to facilitate downstream analyses and data visualization.

Single-cell sequencing measures the nucleic acid sequence information in individual cells, revealing a high resolution of cellular differences to facilitate our understanding of individual cell functions. Single-cell data analysis is revolutionizing the landscape of whole-organism science, enabling unbiased identification of previously undiscovered molecular heterogeneity at the cellular level (Haque et al., 2017; Hwang et al., 2018). Despite this promise, a prominent challenge in analyzing single-cell data is the prevalence of dropouts, where sequencing measurements of cells with limited sequencing information are reported as lower than their true levels due to the low sequencing depth. These dropouts result in an abundance of zero and near-zero measurements in the data, leading to difficulties in the analysis of single-cell data (Lin et al., 2017; Ran et al., 2020). This is the case for our motivating dataset that has a high frequency of low counts (0, 1, and possibly 2) as illustrated in Figure 1 for a few genes. In addition to the prevalence of low counts, the single-cell sequencing data are highly skewed. Both low counts and skewness lead to misleading inference when traditional Gaussian factor models are applied (Pierson and Yau, 2015).

Several extensions of factor models, which go beyond the traditional Gaussianity assumption, have been proposed. In the realm of parametric models, Wedel et al. (2003) developed Poisson factor models that can provide a parsimonious representation of multivariate dependencies in count data, later extended to dynamic count data by Acharya et al. (2015). Sammel et al. (2002); Dunson (2000) proposed a latent variable model for the factor analysis of mixed data by incorporating shared latent factors in separate generalized linear models for each observed variable, assuming the marginal distributions are in the exponential family. Parametric models, however, might struggle to capture complex multivariate dependencies in real data due to their strict distributional assumptions, which are often proven inadequate in real-world scenarios (Murray et al., 2013). This limitation has prompted the development of semiparametric latent factor models. Song et al. (2010) assumed flexible nonparametric error distributions instead of Gaussian error distributions, while Yang and Dunson (2010) proposed a wide class of semiparametric structural equation models that accommodate an unknown distribution for factor scores. Murray et al. (2013) proposed a semiparametric Bayesian Gaussian copula factor model for the analysis of mixed data. However, these parametric and semiparametric models were not designed for single-cell data, and thus cannot fully handle the data skewness and heavy low-count inflation.

Factor models for single-cell sequencing data have also been proposed, with a focus on incorporating zero inflation. Lee et al. (2013) developed a Poisson factor model with offsets that explicitly takes into account the abundance of zero reads in single-cell data and automatically incorporates sample normalization. Cao et al. (2017) proposed a Poisson-multinomial model to address the high variation that arises due to excessive zeros. To explicitly account for the presence of dropouts in single-cell data, zero-inflated models have been utilized to extend factor models to address sequencing read data with zero-inflation (Pierson and Yau, 2015; Sohn and Li, 2018; Xu et al., 2021). While these methods have successfully tackled the zero-inflation characteristic of single-cell sequencing data, their limitation lies in the inability to account for the inflation of near-zero values, which should also be regarded as dropout events. Moreover, these methods heavily depend on parametric assumptions, potentially limiting their ability to address the high skewness inherent in single-cell data. Therefore, a notable gap remains in the availability of a dimension reduction approach that not only addresses the inflation of low counts in single-cell sequencing data but also demonstrates the flexibility necessary to deal with the high skewness prevalent in such datasets.

In this paper, we propose a segmented Gaussian copula factor model (scFM) to learn low-dimensional latent representations that capture dependencies of single-cell sequencing data with excessive low counts. Unlike existing factor models for single-cell data, the proposed approach leverages the flexibility of copulas, enabling the accommodation of heavy-tailed distributions while effectively addressing the issue of inflated low counts via segmentation. We also introduce a Bayesian framework that utilizes a column-wise Dirichlet-Laplace prior (Bhattacharya et al., 2015) for factor loadings to accommodate the uncertainty in the number of latent factors and allow for automatic selection of the number of significant latent factors. Notably, our Bayesian approach effectively resolves the identifiability issue inherent in factor models due to the invariance to rotations of the factor loadings matrix (Anderson and Rubin, 1955). Unlike conventional approaches that impose the lower triangular constraint on the factor loadings matrix (Geweke and Zhou, 1995; Aguilar and West, 2000), which makes the factor scores dependent on the ordering of variables, our method circumvents this need for subjective decisions on variable ordering. By resolving the identifiability issue without resorting to such triangular constraints, our approach ensures that inference results and their interpretation remain independent of variable ordering and facilitates consistent results in downstream analyses. Lastly, an efficient data-augmented Gibbs sampler is developed for posterior inference on the proposed model, and the utility of the proposed method is empirically demonstrated through both simulation studies and a real data application. The major contributions of this paper are four-fold:

-

•

We introduce scFM, a novel approach for factor analysis on single-cell sequencing data characterized by excessively low counts. The proposed copula framework is adept at addressing the inflation of low counts while accommodating heavy-tailed distributions.

-

•

We propose a Bayesian framework that incorporates the uncertainty in the true number of latent factors and automatically estimates the number of factors.

-

•

The proposed approach effectively resolves the identifiability issue in factor models, deviating from conventional methods that impose triangular constraints on factor loadings, thereby eliminating the dependency on variable ordering and enhancing the interpretability of latent factors.

-

•

We develop an efficient data-augmented Gibbs sampler for posterior inference.

2 Bayesian segmented Gaussian copula factor model

In this section, we introduce a Bayesian semiparametric approach, scFM, for modeling dependencies in skewed count data with an excessive number of low counts.

2.1 Segmented Gaussian copula factor model

Let denote random variables of interest, i.e., read counts of an individual cell in single-cell sequencing data. In order to account for an excessive number of low counts, including zeros, we propose the segmented Gaussian copula model. We begin by reviewing the standard Gaussian copula model developed by Liu et al. (2009).

Definition 1 (Gaussian copula model).

A random vector satisfies the Gaussian copula model (also known as the nonparanormal model), if there exist strictly increasing transformations such that , where is a correlation matrix. We denote this by .

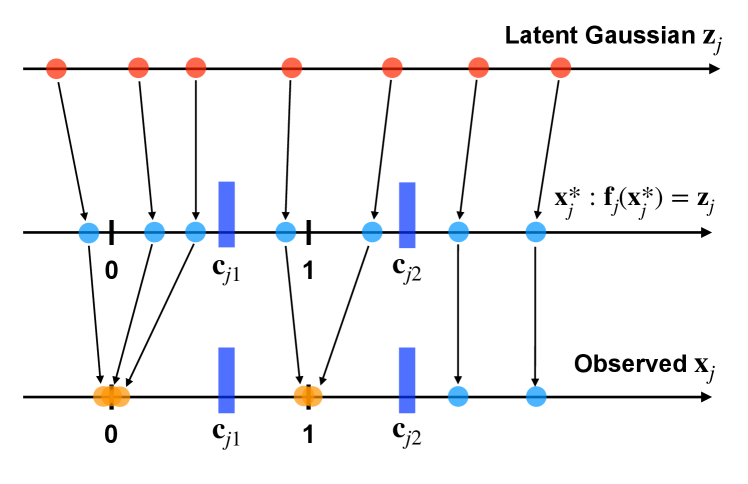

Our proposed segmented Gaussian copula model accounts for dropouts (low counts) by associating them with segments of underlying latent continuous values. Let be a hyperparameter determining the maximum low count being inflated in the observed data. Figure 2 illustrates a schematic of the proposed segmented Gaussian copula model when , and the formal definition for any follows below.

Definition 2 (Segmented Gaussian copula model).

A random vector satisfies the segmented Gaussian copula model, if there exists a latent random vector and strictly increasing constants such that

| (1) |

As illustrated in Figure 2 and formally introduced in Definiton 2, the observed variables are generated through a two-stage process. Initially, a latent random vector is generated from the Gaussian copula model, serving as an underlying source for the observed data. Subsequently, the observed data are derived from the latent random vector via a segmentation process. Specifically, the latent random variable is segmented into multiple intervals , , and . If exceeds a specific threshold , corresponding to the interval , the latent source is directly observed (i.e., . Otherwise, if where , it results in the observation of a low count value . Hence, represents unknown thresholds that truncate the latent random variable to near-zero counts for the observed variable . Additionally, given that the transformations are strictly increasing, (1) can be equivalently expressed using the latent Gaussian vector ,

| (2) |

where is the latent Gaussian level threshold corresponding to .

When , the proposed segmented Gaussian copula model is reduced to the truncated Gaussian copula model of Yoon et al. (2020), which focuses on addressing zero inflation. When , the segmented Gaussian copula model is capable of accommodating the inflation of low counts besides zero. For example, if , the latent variable is segmented into three intervals , and . If falls into either one of the first two intervals and , our observation will be either or . This allows for a flexible representation of zero and one counts in the observed data.

Next, we extend the proposed segmented Gaussian copula to scFM to capture underlying dependencies among observed genes in single-cell sequencing data while simultaneously addressing the issue of inflated near-zero counts. We adopt a low-rank decomposition of the copula correlation matrix so that the dependence among the latent variables can be explained by low-dimensional latent factors.

Definition 3 (Segmented Gaussian copula factor model).

We say that a random vector follows the segmented Gaussian factor model (scFM), if satisfies the segmented Gaussian copula model in Definition 2 and the latent Gaussian vector is defined by a probabilistic factor model,

| (3) |

where is a diagonal matrix that adjusts the scale of to ensure that its covariance matrix is a correlation matrix, is a matrix of factor loadings, is a latent factor scores, and is an idiosyncratic Gaussian noise independent of with .

Note that the covariance matrix of the latent Gaussian is constrained to be a correlation matrix for identifiability (Liu et al., 2009), and thus implies , where is the -th row of the factor loading matrix . In practice, it is typically assumed that the number of latent factors is much smaller than the number of observed variables , leading to a low-rank characterization of . Therefore, once the factor scores are estimated, the dimensionality of the dataset can be effectively reduced from to a lower dimension , which facilitates downstream analyses. A fundamental challenge here is that the number of latent factors is typically unknown a priori. Hence, the selection of an appropriate is of great significance. In the next subsection, we introduce a meticulously selected prior distribution for the factor loadings that enables the automatic selection of the number of latent factors as well as facilitates the identifiability of latent factors.

2.2 Prior specification

We propose to use a column-wise Dirichlet-Laplace (DL, Bhattacharya et al. 2015) prior for the factor loading matrix . The column-wise DL prior enables simultaneous shrinkage of all the entries of a column, thereby effectively zeroing out unnecessary latent factors. Specifically, we assume, for and ,

| (4) |

where , denotes the Laplace or double-exponential distribution with scale parameter , denotes the Dirichlet distribution with concentration parameter which is considered as a hyperparameter.

The global scale controls the overall shrinkage towards zero, while the column-specific local scale allows each column of the factor loading matrix to deviate away from zero. Here represents the maximum allowable number of latent factors. Since DL prior allows for aggressive shrinkage of all entries of a column towards zero, the effective number of factors can be much smaller than , and is determined automatically; see Section 3.3.

Intuitively, the class of column-wise DL priors approximates a spike-and-slab prior (George and McCulloch, 1997) assigned to the factor loadings at the column level by a continuous density concentrated near zero with a heavy tail. A distinguishing characteristic of the DL prior, in contrast to commonly used shrinkage priors like Laplace priors (Park and Casella, 2008), is the presence of a singularity at zero in its distribution while still maintaining exponential tails. Under the proposed column-wise DL priors, the singularity at zero facilitates the concentration of most of the columns of around zero, while the exponential tails ensure little shrinkage of a small number of significant factors. See Bhattacharya et al. (2015) for the theoretical properties of the DL prior.

In addition to the adaptive shrinkage, imposing DL priors for the factor loadings also resolves the identifiability issue of factor models. In the Bayesian framework, a typical approach for factor analysis is assigning Gaussian priors to the factor loadings (Lopes and West, 2004). Although this approach allows for a conditionally conjugate model, facilitating posterior inference through standard Gibbs sampling, it fails to address the invariance of factor models to orthogonal transformations as for any orthogonal matrix (Anderson and Rubin, 1955). A popular technique to deal with this invariance property is imposing conditions that remove invariance to orthogonal transformation, e.g., the lower-triangular constraint on with an assumption of strictly positive diagonal elements (Geweke and Zhou, 1995; Aguilar and West, 2000). While this approach is effective for estimating the covariance matrix, the constraint introduces order-dependence in estimating factor loadings, making the interpretation of latent factors sensitive to the ordering of observed variables. Consequently, changes in variable ordering can influence downstream analyses, such as clustering, thereby compromising the reproducibility of their results. In contrast, our approach, which assigns the column-wise DL priors to the factor loadings, breaks this invariance in the posterior distribution without necessitating the lower-triangular constraint on the factor loadings matrix. Our factor model (3) with non-Gaussian DL prior can be viewed as a noisy independent component analysis (ICA) model, where the columns of are the hidden sources, is the mixing matrix, and is the noise. Since the sources are non-Gaussian, the theory of noisy ICA model ensures identifiability of our model parameters and (up to column permutations and sign changes) (Comon, 1994; Hyvärinen et al., 2004). In summary, our approach alleviates the burden of subjective decisions regarding the ordering of observed variables, which often introduces ambiguity in conventional factor models. As a result, our approach achieves greater consistency in the interpretation of latent factors, and contributes to the robustness and reliability of downstream analyses.

For the Gaussian level thresholds in (2), we assume an improper uniform prior with the following monotonicity constraint,

For the error variances , we assign a conditionally conjugate inverse-gamma prior .

3 Posterior inference

In this section, we detail the posterior inference procedure for the proposed scFM model, focusing on the case where , which involves inflation of zero and one values. Extending the procedure to accommodate other values of is straightforward. Let , , be samples from the proposed scFM, and and , , be the corresponding latent Gaussian vectors and latent factor scores, respectively. We denote the sub-vectors of containing zero and one values by and , respectively. The observed sub-vectors of (containing values greater than one) are denoted as . Likewise, we denote by , and the corresponding latent Gaussian sub-vectors.

3.1 Copula transformations

The latent Gaussian variables corresponding to the observed data are defined by . We will first compute “observed pseudodata” by estimating via the empirical cumulative distribution function (cdf).

Let be the (monotonically increasing) marginal cdf of the -th latent random variable and be the cdf of standard Gaussian. Then, we observe

which implies that . Since is unknown, we replace it with its empirical estimate (Klaassen and Wellner, 1997),

| (5) |

Here, the constant term is necessary to restrict to make finite. Given , we compute the observed pseudodata .

3.2 Data-augmented Gibbs sampling

Given the observed pseudodata, we employ an MCMC algorithm to draw posterior samples of the model parameters. Let , and . Given , we use MCMC to draw posterior samples of , and . In particular, we utilize an efficient data-augmented Gibbs sampler, which is derived by a data-augmented representation of the proposed column-wise DL priors. Introducing auxiliary variables , the DL prior (2.2) can be equivalently represented as

which facilitates Gibbs sampling by enabling the derivation of full conditionals for all parameters. Our implementation of the Gibbs sampler leverages marginalization and blocking to reduce auto-correlation in the posterior samples. It is given that, upon conditioning on the latent Gaussian vectors , the thresholds become independent of the other parameters, and similarly, conditioning on the factor loadings , the hyperparameters are independent from the remaining parameters. Utilizing these conditional independences, our Gibbs sampler cycles through the following draws: (i) , (ii) , (iii) , (iv) , (v) , and (vi) .

Sample . We draw from a truncated multivariate Gaussian distribution,

| (6) |

where and are the conditional mean and conditional covariance matrix of given and . Since for , and are the sub-vector and sub-matrix of and corresponding to the variables , respectively. In (6), the vector consists of the thresholds for the -th observation, specifically corresponding to those variables that equal . The subscript signifies whether the threshold represents a lower bound or an upper bound , respectively.

Sample . The conditional posterior of is proportional to the -product of indicator functions in (6), and thus Step (ii) can be done by sampling , independently from the uniform full conditional distribution

Sample . We draw from .

Sample . We draw where and .

Sample . We draw each row of from , where and with .

Sample . Since

we sequentially sample from , , and . For sampling , we follow the strategy in Bhattacharya et al. (2015). Specifically, let denote a three-parameter generalized inverse Gaussian distribution with the density for . We draw independently, , and set with . Given , we then sample from . Lastly, we sample by drawing independently from . An outline of our data-augmented Gibbs sampler is provided in Algorithm 1.

3.3 Estimation of the number of significant latent factors

In the proposed scFM, is the maximum allowable number of latent factors, and the number of significant factors, denoted by , is determined by the proposed column-wise DL prior. The column-wise DL prior induces a shrinkage effect on factor loadings associated with irrelevant latent factors that lack support from the data, thereby aiding in the identification of . More specifically, our DL prior promotes the concentration of the majority of columns in the factor loadings matrix around zero, except for a few columns corresponding to a limited number of significant factors. This implies the existence of potentially two clusters of columns in : one cluster closely centered around zero, indicating insignificant factor, and another cluster distant from zero, indicating significant factors.

Consequently, we suggest a simple automated approach for selecting . At each MCMC iteration, we cluster the columns of the factor loadings matrix into two clusters using k-means, and estimate the number of significant factors by the size of the cluster that exhibits greater deviance from zero. The number of significant factors is then estimated by taking the mode over all the MCMC iterations. Upon obtaing the estimated , we identify the significant factors by selecting the latent factors associated with the largest columns (based on -norm) of the posterior mean of .

4 Simulation study

We empirically evaluate the performance of the proposed scFM with synthetic data. We compare the proposed method against other factor models for single-cell sequencing data: the zero-inflated factor analysis (ZIFA, Pierson and Yau, 2015) and the zero-inflated Poisson factor analysis (ZIPFA, Xu et al., 2021). We also compare scFM with the Bayesian Gaussian copula factor model (CopulaFM, Murray et al., 2013), which is a flexible semiparametric factor model for mixed data.

To generate synthetic data with marginal distributions matching the empirical marginal distributions of our motivating scRNA-seq data, we follow the ideas in Chung et al. (2022). Specifically, we generate the latent Gaussian vectors from (3) with the true factor loadings sampled from the Laplace distribution and the true error variances sampled from the uniform distribution . To obtain the observed data from the latent Gaussian , we use the empirical cdfs of the motivating scRNA-seq dataset in Section 5. First, we select genes that exhibit the highest cell-to-cell variations and use the empirical cdf of each selected gene as true . The observed data are then obtained by , where is the pseudo inverse of . This data generating process allows us to preserve the marginal distributions of the motivating scRNA-seq dataset in our simulated data while introducing the joint dependence structure through the pre-specified and . We consider different sample sizes and different numbers of genes while fixing the number of true latent factors . Each scenario is repeated 50 times. The simulated datasets show a significant abundance of zeros () and ones ().

For the proposed scFM, we set the hyperparameters at and . The maximum count value that we consider to be inflated is set to . To assess the efficacy of the DL prior in the selection of significant latent factors and its robustness to varying specifications of , we examine two distinct values of . The first value () corresponds to the true number of factors, while the second value is intentionally overspecified, allowing us to test how well the proposed approach accommodates the uncertainty in the selection of significant latent factors. For each , we run the data-augmented Gibbs sampler proposed in Section 3 for 10,000 iterations, of which the first 5,000 iterations are discarded as burn-in. Based on the MCMC samples, we calculate the posterior mean of the factor scores and the factor loadings (for factors) to obtain their estimates. For the competitors, ZIFA, ZIPFA, and CopulaFM, we set the number of latent factors to the simulation ground truth (i.e., ). Additionally, we apply the transformation to the simulated data before applying ZIFA in concordance with the data processing pipeline in the corresponding paper.

To evaluate the performance, we follow the approach in Pierson and Yau (2015), which accounts for the rotational invariance of factor model specification in terms of scores and loadings. While the proposed scFM addresses this rotational invariance, we use the approach in Pierson and Yau (2015) to be objective in comparison with other methods. The main idea is that while rotation changes the values of scores and loading, it preserves relative distances. Thus instead of comparing the true factor scores with estimated factor scores directly, we will evaluate concordance between corresponding score-induced pairwise distances. Let represent a pairwise distance matrix with each element being the Euclidean distance between true factor scores and , and let be the corresponding pairwise distance matrix based on estimated factor scores . We will compute the Spearman correlation between and its estimated counterpart , with larger correlation values indicating more accurate estimation performance. We will use a similar approach to evaluate the accuracy of estimated factor loadings.

| Method | 500 | 1000 | 2000 | 25 | 50 | 100 |

|---|---|---|---|---|---|---|

| Spearman correlation in factor score estimation | ||||||

| scFM | 0.973 (0.000) | 0.976 (0.000) | 0.978 (0.000) | 0.966 (0.000) | 0.976 (0.000) | 0.989 (0.000) |

| scFM | 0.962 (0.001) | 0.964 (0.000) | 0.964 (0.000) | 0.952 (0.000) | 0.964 (0.000) | 0.983 (0.001) |

| ZIFA | 0.933 (0.001) | 0.934 (0.001) | 0.935 (0.000) | 0.893 (0.004) | 0.934 (0.001) | 0.975 (0.000) |

| ZIPFA | 0.532 (0.005) | 0.530 (0.005) | 0.525 (0.003) | 0.401 (0.006) | 0.530 (0.005) | 0.639 (0.003) |

| CopulaFM | 0.916 (0.001) | 0.889 (0.001) | 0.851 (0.001) | 0.929 (0.000) | 0.889 (0.001) | N/A |

| Spearman correlation in factor loading estimation | ||||||

| scFM | 0.991 (0.000) | 0.994 (0.000) | 0.995 (0.000) | 0.995 (0.000) | 0.994 (0.000) | 0.995 (0.000) |

| scFM | 0.992 (0.000) | 0.995 (0.000) | 0.997 (0.000) | 0.996 (0.000) | 0.995 (0.000) | 0.996 (0.000) |

| ZIFA | 0.591 (0.001) | 0.673 (0.001) | 0.595 (0.001) | 0.664 (0.003) | 0.673 (0.001) | 0.665 (0.001) |

| ZIPFA | 0.156 (0.006) | 0.153 (0.007) | 0.143 (0.005) | 0.139 (0.006) | 0.153 (0.007) | 0.440 (0.008) |

| CopulaFM | 0.916 (0.001) | 0.891 (0.001) | 0.882 (0.001) | 0.960 (0.000) | 0.891 (0.001) | N/A |

The resulting correlation values for the factor scores and loadings are summarized in Tables 1. Results for CopulaFM with are omitted due to numerical issues encountered with its R package implementation. The proposed scFM consistently outperforms its competitors in estimating both factor scores and factor loadings, demonstrating its advantages on skewed count data with excessive zero and near-zero values. Notably, even when using a value of larger than the ground truth (i.e., ), the estimation of factor scores by scFM did not deteriorate much and is still the second best across all scenarios. Similarly, in the estimation of factor loadings, our scFM outperforms the competing approaches across every scenario for both . This highlight the efficacy of our column-wise DL prior in adapting to the unknown number of latent factors and the robustness of our method to different selections. Remarkably, the approaches that utilize the copula model (i.e., scFM and CopulaFM) exhibit superior performance in estimating factor loadings compared to their parametric alternatives. This demonstrates the advantage of employing the copula model framework for factor analysis on datasets characterized by the inflation of low counts and high skewness. However, despite the flexibility of CopulaFM as a copula-based factor model, its performance falls short of ours, with its effectiveness diminishing as data and feature sizes grow. CopulaFM also encounters numerical issues, such as the failure of the Cholesky decomposition, in the scenario with the largest number of genes (). We conjecture that larger data and feature sizes exacerbates the multi-modality issue in its posterior distribution, deteriorating the mixing of the MCMC implementation for CopulaFM and resulting in inferior results. This multi-modality issue might also navigate the MCMC algorithm into regions prone to numerical issues. To summarize, scFM demonstrates highly effective performance in estimating both factor scores and loadings for datasets that exhibit the characteristics of scRNA-seq data – an abundance of low counts and high skewness.

5 Application to scRNA-seq data

5.1 Data and aim

We apply the proposed scFM to our motivating scRNA-seq dataset, which consists of 7,247 cells from the lymphoblastoid cell line (LCL) GM12878, for which 18,170 genes were sequenced. The data can be obtained from the 10x Genomics Datasets website (https://www.10xgenomics.com). The goal of this analysis is to characterize underlying relationships among the sequenced genes and to obtain low-dimensional factor scores summarizing the variability in the observed scRNA-seq data to aid detection of previously uncharacterized cell subtypes in LCL. We filter out low-quality cells such as empty droplets, cell doublets and multiplets using the R package Seurat (Hao et al., 2023), resulting in cells. The detailed data preprocessing procedure is provided in Appendix A. We focus on genes that show the largest variability across cells, which contain zeros and ones across those genes.

5.2 Factor analysis

For the proposed scFM, we use to explicitly account for the notable inflation of zero and one counts observed in the motivating scRNA-seq dataset (refer to Figure 1). We set the maximum number of latent factors at to achieve a balance between model interpretability and avoiding model misspecification, as fewer latent factors tend to provide clearer insights for model interpretation while too few risks excluding significant factors. Our analysis supports this decision, demonstrating that the number of significant latent factors is indeed fewer than . We use the same hyperparameter values as in Section 4 and run the proposed MCMC in Section 3 for 10,000 iterations with 5,000 burn-in. To assess the goodness-of-fit of the proposed scFM on this dataset, we perform posterior predictive checks, which compare the posterior predictive distribution with the observed data. Our proposed model shows no significant lack of fit; see Appendix A for details.

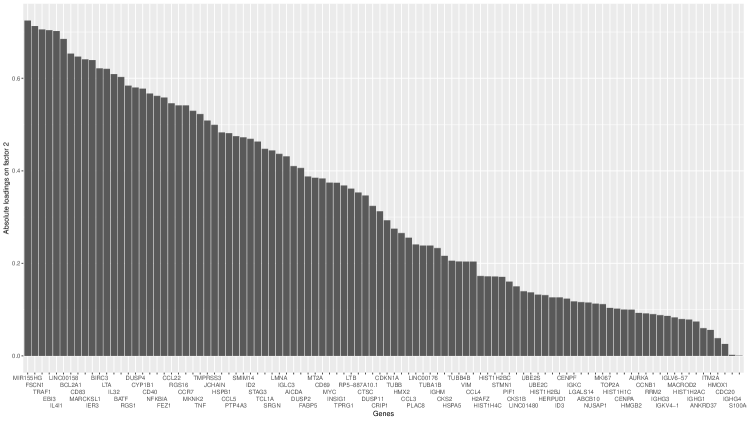

We utilize the approach outlined in Section 3.3 to determine the number of significant factors and to discern their identity. For this dataset, scFM determines that there are two significant factors (i.e. ), and factors corresponding to the two largest columns (in -norm) of the posterior mean of the factor loadings matrix are identified as the significant factors (termed factors 1 and 2).

Figure 3 illustrates the absolute loadings of all genes on these two significant factors, providing insight into a potential biological interpretation for each estimated factor. For factor 1, genes with the 10 largest absolute loadings are MKI67, CENPF, HMGB2, NUSAP1, TOP2A, CDC20, CENPA, CKS1B, UBE2C, and STMN1. Notably, several of these genes are closely linked to the cell cycle, a fundamental process where cells go through a series of events leading to cell division. Specifically, MKI67, CENPF, HMGB2, and TOP2A have been known as cell cycle markers in single-cell studies of human lymphoblastoid cell lines (Sawada et al., 2020; SoRelle et al., 2022). Therefore, we call factor 1 the cell cycle factor.

For factor 2, genes with the ten most substantial loadings are MIR155HG, FSCN1, TRAF1, EBI3, IL4I1, LINC00158, BCL2A1, CD83, MARCKSL1, and IER3, some of which are known to be related to immune functions. FSCN1 and CD83 are markers for dendritic cells, specialized immune cells crucial for presenting antigens to other immune cells and initiating immune responses (Zimmer et al., 2012; Lechmann et al., 2002). Moreover, MIR155HG and IL4I1 are frequently over-expressed in immune cells including B-cells, and play important roles in immune infiltration (Peng et al., 2019; Bod et al., 2018). Therefore, we call factor 2 the immune cell factor.

5.3 Cell clustering

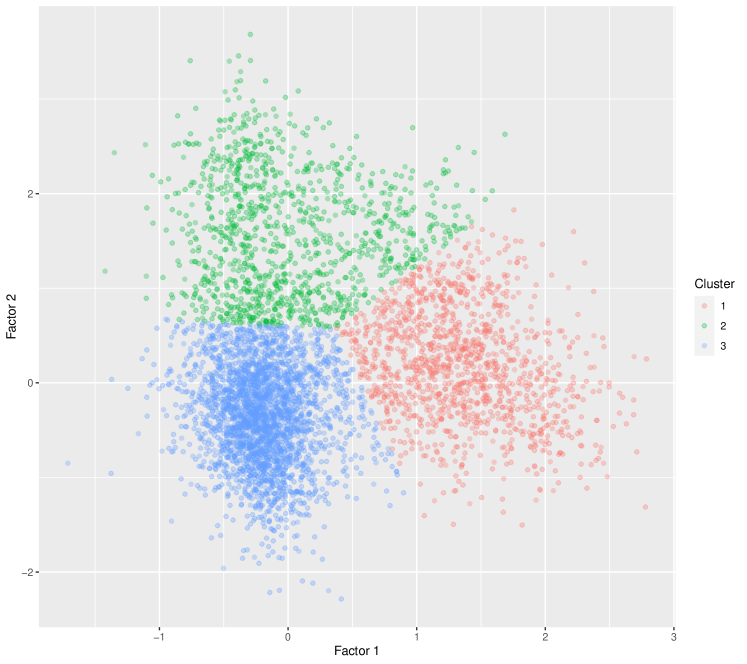

To further interpret the two major latent factors identified by the proposed scFM, we use them to identify distinct cellular subtypes or states in LCL. The scores of the two selected factors serve as a low-dimensional representation of the high-dimensional gene expression measurements in scRNA-seq data. Therefore, by clustering the data in the low-dimensional factor space, we can identify groups of cells exhibiting similar expression behavior. For simplicity, we use the k-means algorithm to cluster the latent factor scores, where the number of clusters is determined by the elbow method.

Our analysis reveals three clusters, which are depicted in Figure 4. For cluster 1, we observe a notable elevation in scores for factor 1, while scores for factor 2 are close to zero. Cluster 2, in contrast, exhibits elevated scores for factor 2 but with diminished scores for factor 1. Cluster 3 displays factor scores around zero for both factors. Combining this observation with the biological interpretation of the estimated factors in Section 5.2 suggests that cluster 1 likely comprises cycling cells, cluster 2 likely consists of immune cells, and the remaining cells are grouped into cluster 3. We further investigate gene expression measurements for relevant genes discussed in Section 5.2 to support this interpretation. Table 2 reports average gene expression measurements of MKI67, CENPF, HMGB2, TOP2A, FSCN1, CD83, MIR155HG, and IL4I1 for the entire dataset and for each cluster. Recall that the first four of these genes are associated with factor 1, while the latter four are associated with factor 2. For cluster 1, MKI67, CENPF, HMGB2, and TOP2A are over-expressed. Given that MKI67, CENPF, HMGB2, and TOP2A serve as markers for cycling cells, it can be inferred that cells in cluster 1 are currently undergoing cell cycling. On the other hand, cluster 2 exhibits high expression of the latter four genes FSCN1, CD83, MIR155HG, and IL4I1, which is known to be strongly correlated with immune cells and immune cell function. Consequently, it is plausible to conclude that cluster 2 represents immune cells.

| MKI67 | CENPF | HMGB2 | TOP2A | FSCN1 | CD83 | MIR155HG | IL4I1 | |

|---|---|---|---|---|---|---|---|---|

| Entire dataset | 1.938 | 0.942 | 7.622 | 0.777 | 1.536 | 0.789 | 3.238 | 1.038 |

| Cluster 1 | 6.493 | 3.241 | 24.911 | 2.716 | 1.022 | 0.422 | 1.864 | 0.628 |

| Cluster 2 | 1.147 | 0.469 | 4.390 | 0.366 | 5.585 | 2.786 | 11.276 | 3.653 |

| Cluster 3 | 0.140 | 0.065 | 0.892 | 0.042 | 0.127 | 0.147 | 0.603 | 0.164 |

6 Discussion

In this work, we have developed a segmented Gaussian copula factor model with application to single-cell sequencing data, which have an excessive amount of low counts due to limited sequencing depth. Our approach adopts a Bayesian framework that utilizes a column-wise Dirichlet-Laplace prior for the factor loadings. This framework accommodates the uncertainty related to the number of latent factors, enabling automatic selection of the most significant ones. Additionally, our formulation resolves the identifiability issues inherent in factor models, thereby enhancing model interpretability. Simulation studies on synthetic datasets that mimic real scRNA-seq data show that the proposed approach significantly outperforms existing methods. In an application to scRNA-seq data, our method finds two biologically meaningful latent factors and identifies distinct cellular subpopulations with differing expression behaviors.

While our primary focus lies in the analysis of low-count inflated data, specifically single-cell sequencing data, our proposed model can be directly extended to mixed type data. An example of such data can be found in projects like the Human Cell Atlas Project, which contains a combination of binary (e.g., mutation) and low-count inflated data (e.g., scRNA-seq). We expect that extending our model to address such mixed data would be feasible by leveraging the Gaussian copula model for mixed data as proposed by Fan et al. (2016) along with the proposed segmented Gaussian copula model.

Acknowledgements

Ni’s research is partially supported by CPRIT RP230204, NIH 1R01GM148974-01, and NSF DMS-2112943. Gaynanova’s research is partially supported by NSF DMS-2044823.

References

- Acharya et al. (2015) Acharya, A., J. Ghosh, and M. Zhou (2015). Nonparametric Bayesian Factor Analysis for Dynamic Count Matrices. In G. Lebanon and S. V. N. Vishwanathan (Eds.), Proceedings of the Eighteenth International Conference on Artificial Intelligence and Statistics, Volume 38 of Proceedings of Machine Learning Research, San Diego, California, USA, pp. 1–9. PMLR.

- Aguilar and West (2000) Aguilar, O. and M. West (2000). Bayesian dynamic factor models and variance matrix dis-counting for portfolio allocation.

- Anderson and Rubin (1955) Anderson, T. W. and H. Rubin (1955). Statistical Inference in Factor Analysis. Cowles Foundation for Research in Economics at Yale University.

- Bhattacharya et al. (2015) Bhattacharya, A., D. Pati, N. S. Pillai, and D. B. Dunson (2015, October). Dirichlet–Laplace priors for optimal shrinkage. J. Am. Stat. Assoc. 110(512), 1479–1490.

- Bod et al. (2018) Bod, L., L. Douguet, C. Auffray, R. Lengagne, F. Bekkat, E. Rondeau, V. Molinier-Frenkel, F. Castellano, Y. Richard, and A. Prévost-Blondel (2018, February). IL-4-induced gene 1: A negative immune checkpoint controlling B cell differentiation and activation. J. Immunol. 200(3), 1027–1038.

- Cao et al. (2017) Cao, Y., A. Zhang, and H. Li (2017). Microbial composition estimation from sparse count data. Preprint. Available at.

- Chung et al. (2022) Chung, H. C., I. Gaynanova, and Y. Ni (2022, December). Phylogenetically informed bayesian truncated copula graphical models for microbial association networks. aoas 16(4), 2437–2457.

- Comon (1994) Comon, P. (1994, April). Independent component analysis, a new concept? Signal Processing 36(3), 287–314.

- Dunson (2000) Dunson, D. B. (2000, July). Bayesian latent variable models for clustered mixed outcomes. J. R. Stat. Soc. Series B Stat. Methodol. 62(2), 355–366.

- Fan et al. (2016) Fan, J., H. Liu, Y. Ning, and H. Zou (2016, April). High dimensional semiparametric latent graphical model for mixed data. J. R. Stat. Soc. Series B Stat. Methodol. 79(2), 405–421.

- George and McCulloch (1997) George, E. I. and R. E. McCulloch (1997). APPROACHES FOR BAYESIAN VARIABLE SELECTION. Stat. Sin. 7(2), 339–373.

- Geweke and Zhou (1995) Geweke, J. and G. Zhou (1995). Measuring the Pricing Error of the Arbitrage Pricing Theory. Federal Reserve Bank of Minneapolis, Research Department.

- Hao et al. (2023) Hao, Y., T. Stuart, M. H. Kowalski, S. Choudhary, P. Hoffman, A. Hartman, A. Srivastava, G. Molla, S. Madad, C. Fernandez-Granda, and R. Satija (2023, May). Dictionary learning for integrative, multimodal and scalable single-cell analysis. Nat. Biotechnol..

- Haque et al. (2017) Haque, A., J. Engel, S. A. Teichmann, and T. Lönnberg (2017, August). A practical guide to single-cell RNA-sequencing for biomedical research and clinical applications. Genome Med. 9(1), 75.

- Hwang et al. (2018) Hwang, B., J. H. Lee, and D. Bang (2018, August). Single-cell RNA sequencing technologies and bioinformatics pipelines. Exp. Mol. Med. 50(8), 1–14.

- Hyvärinen et al. (2004) Hyvärinen, A., J. Karhunen, and E. Oja (2004, April). Independent Component Analysis. John Wiley & Sons.

- Klaassen and Wellner (1997) Klaassen, C. A. J. and J. A. Wellner (1997). Efficient estimation in the bivariate normal copula model: Normal margins are least favourable. Bernoulli 3(1), 55–77.

- Lechmann et al. (2002) Lechmann, M., S. Berchtold, J. Hauber, and A. Steinkasserer (2002, June). CD83 on dendritic cells: more than just a marker for maturation. Trends Immunol. 23(6), 273–275.

- Lee et al. (2013) Lee, S., P. E. Chugh, H. Shen, R. Eberle, and D. P. Dittmer (2013, May). Poisson factor models with applications to non-normalized microRNA profiling. Bioinformatics 29(9), 1105–1111.

- Lin et al. (2017) Lin, P., M. Troup, and J. W. K. Ho (2017, March). CIDR: Ultrafast and accurate clustering through imputation for single-cell RNA-seq data. Genome Biol. 18(1), 59.

- Liu et al. (2009) Liu, H., J. Lafferty, and L. Wasserman (2009, October). The nonparanormal: Semiparametric estimation of high dimensional undirected graphs. J. Mach. Learn. Res., 2295–2328.

- Lopes and West (2004) Lopes, H. F. and M. West (2004). BAYESIAN MODEL ASSESSMENT IN FACTOR ANALYSIS. Stat. Sin. 14(1), 41–67.

- Murray et al. (2013) Murray, J. S., D. B. Dunson, L. Carin, and J. E. Lucas (2013, June). Bayesian gaussian copula factor models for mixed data. J. Am. Stat. Assoc. 108(502), 656–665.

- Park and Casella (2008) Park, T. and G. Casella (2008, June). The bayesian lasso. J. Am. Stat. Assoc. 103(482), 681–686.

- Peng et al. (2019) Peng, L., Z. Chen, Y. Chen, X. Wang, and N. Tang (2019, December). MIR155HG is a prognostic biomarker and associated with immune infiltration and immune checkpoint molecules expression in multiple cancers. Cancer Med. 8(17), 7161–7173.

- Pierson and Yau (2015) Pierson, E. and C. Yau (2015, November). ZIFA: Dimensionality reduction for zero-inflated single-cell gene expression analysis. Genome Biol. 16, 241.

- Ran et al. (2020) Ran, D., S. Zhang, N. Lytal, and L. An (2020, August). scdoc: correcting drop-out events in single-cell RNA-seq data. Bioinformatics 36(15), 4233–4239.

- Rubin (1984) Rubin, D. B. (1984). Bayesianly justifiable and relevant frequency calculations for the applied statistician. Ann. Stat. 12(4), 1151–1172.

- Sammel et al. (2002) Sammel, M. D., L. M. Ryan, and J. M. Legler (2002, January). Latent variable models for mixed discrete and continuous outcomes. J. R. Stat. Soc. Series B Stat. Methodol. 59(3), 667–678.

- Sawada et al. (2020) Sawada, T., T. E. Chater, Y. Sasagawa, M. Yoshimura, N. Fujimori-Tonou, K. Tanaka, K. J. M. Benjamin, A. C. M. Paquola, J. A. Erwin, Y. Goda, I. Nikaido, and T. Kato (2020, November). Developmental excitation-inhibition imbalance underlying psychoses revealed by single-cell analyses of discordant twins-derived cerebral organoids. Mol. Psychiatry 25(11), 2695–2711.

- Sohn and Li (2018) Sohn, M. B. and H. Li (2018, June). A GLM-based latent variable ordination method for microbiome samples. Biometrics 74(2), 448–457.

- Song et al. (2010) Song, X.-Y., J.-H. Pan, T. Kwok, L. Vandenput, C. Ohlsson, and P.-C. Leung (2010, June). A semiparametric bayesian approach for structural equation models. Biom. J. 52(3), 314–332.

- SoRelle et al. (2022) SoRelle, E. D., J. Dai, N. M. Reinoso-Vizcaino, A. P. Barry, C. Chan, and M. A. Luftig (2022, August). Time-resolved transcriptomes reveal diverse B cell fate trajectories in the early response to Epstein-Barr virus infection. Cell Rep. 40(9), 111286.

- Wedel et al. (2003) Wedel, M., U. Böckenholt, and W. A. Kamakura (2003, November). Factor models for multivariate count data. J. Multivar. Anal. 87(2), 356–369.

- Xu et al. (2021) Xu, T., R. T. Demmer, and G. Li (2021, March). Zero-inflated poisson factor model with application to microbiome read counts. Biometrics 77(1), 91–101.

- Yang and Dunson (2010) Yang, M. and D. B. Dunson (2010, December). Bayesian semiparametric structural equation models with latent variables. Psychometrika 75(4), 675–693.

- Yoon et al. (2020) Yoon, G., R. J. Carroll, and I. Gaynanova (2020, September). Sparse semiparametric canonical correlation analysis for data of mixed types. Biometrika 107(3), 609–625.

- Zimmer et al. (2012) Zimmer, A., J. Bouley, M. Le Mignon, E. Pliquet, S. Horiot, M. Turfkruyer, V. Baron-Bodo, F. Horak, E. Nony, A. Louise, H. Moussu, L. Mascarell, and P. Moingeon (2012, April). A regulatory dendritic cell signature correlates with the clinical efficacy of allergen-specific sublingual immunotherapy. J. Allergy Clin. Immunol. 129(4), 1020–1030.

Appendix A Additional details for application to scRNA-seq data

A.1 Data pre-processing

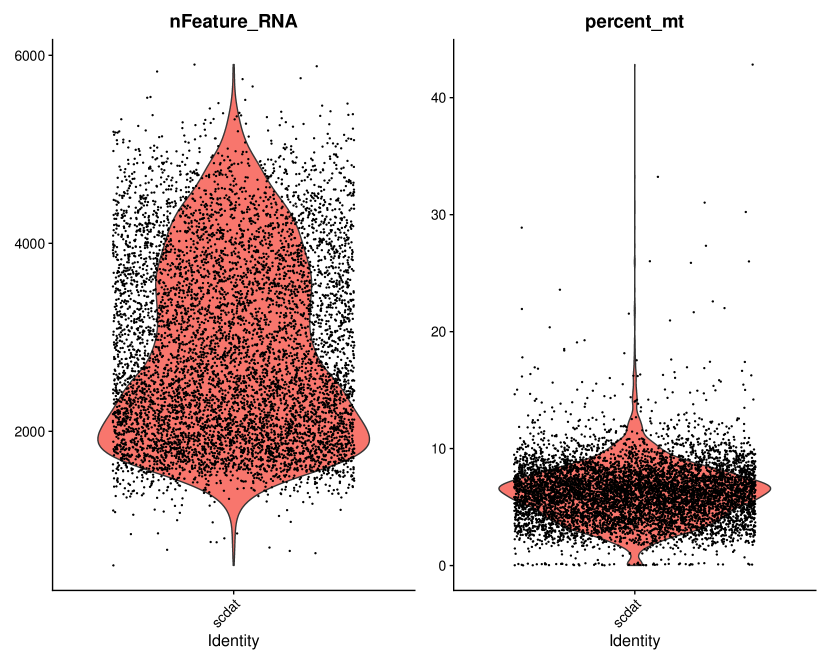

The scRNA-seq dataset in Section 5 is pre-processed using the R package Seurat (Hao et al., 2023) for our factor analysis. After eliminating genes with over zeros, we further refine the dataset by filtering out low-quality cells such as empty droplets, cell doublets, and multiplets based on quality control (QC) metrics. We then select genes showing the largest cell-to-cell variation. Among the commonly used QC metrics for scRNA-seq data, we specifically consider the number of unique genes detected in each cell and the percentage of reads mapping to the mitochondrial genome, which are illustrated in Figure A.1. Cells of poor quality or empty droplets typically exhibit a very low gene count, while cell doublets or multiplets often display an unusually high gene count. Additionally, low-quality cells frequently demonstrate significant mitochondrial contamination. Consequently, we filter out cells with unique feature counts over or less than , as well as cells with mitochondrial counts exceeding . Based on the retained cells, we identify genes exhibiting the most substantial cell-to-cell variation in the dataset:

IGHG1, IGHG3, IGKC, CCL22, IGHM, CCL3, IGHG4, CCL4, IGLC3, HIST1H1C, HIST1H2BJ, LTB, LTA, HSPB1, CYP1B1, RGS1, HIST1H4C, LINC00176, CD69, MIR155HG, IGLV6-57, UBE2C, CTSC, FSCN1, MARCKSL1, NFKBIA, CCR7, MT2A, DUSP2, CD83, ABCB10, IL4I1, IER3, JCHAIN, UBE2S, LMNA, DUSP4, IL32, HMGB2, RGS16, HMOX1, BIRC3, TMPRSS3, S100A4, MYC, TNF, CDKN1A, TUBA1B, TCL1A, CRIP1, BCL2A1, TOP2A, EBI3, CDC20, ANKRD37, HMX2, AICDA, ID3, MKI67, LGALS14, TPRG1, STAG3, CCL5, HIST1H2BC, CKS2, RRM2, HIST1H2AC, CCNB1, IGKV4-1, FABP5, CENPF, MACROD2, CENPA, H2AFZ, INSIG1, MKNK2, RP5-887A10.1, SRGN, LINC01480, LINC00158, HSPA5, TUBB4B, PIF1, ITM2A, CD40, TUBB, VIM, PLAC8, DUSP11, STMN1, CKS1B, HERPUD1, TRAF1, PTP4A3, NUSAP1, BATF, ID2, FEZ1, AURKA, SMIM14.

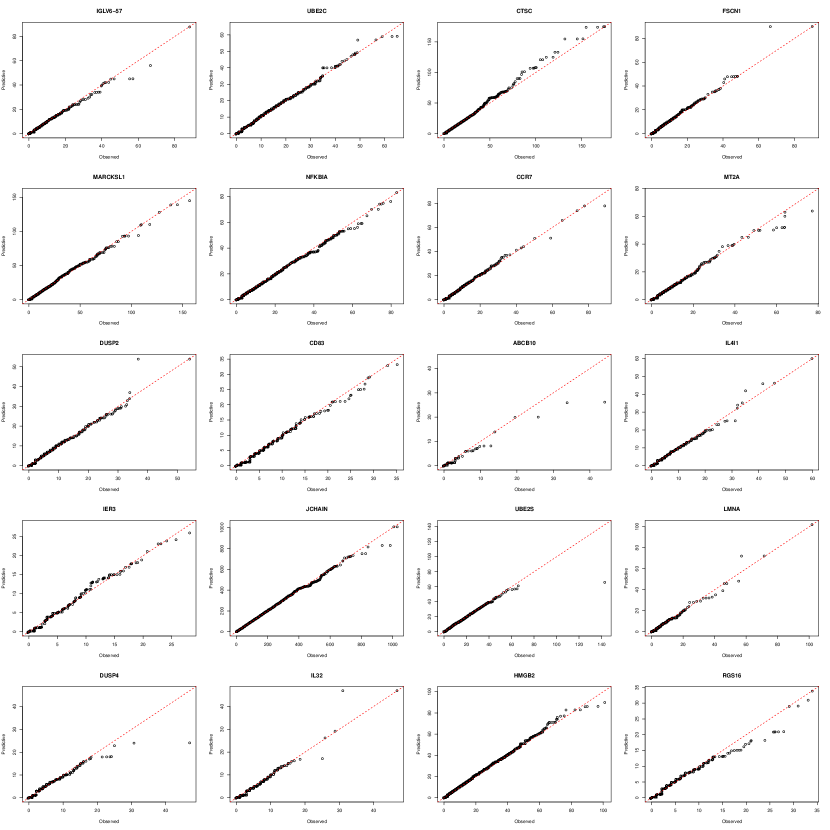

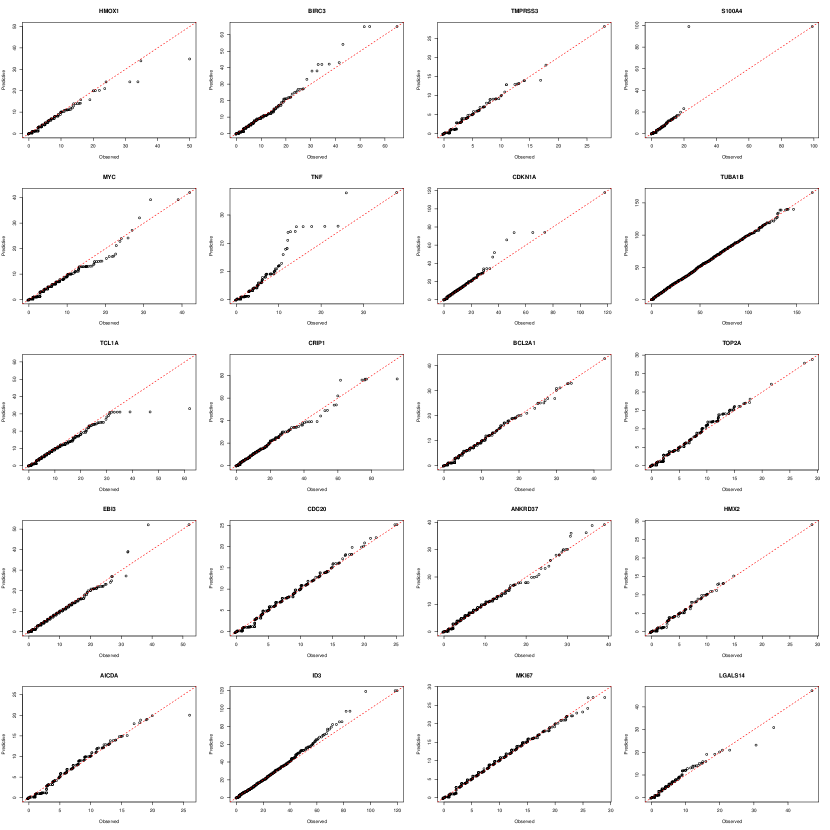

A.2 Goodness-of-fit

We perform posterior predictive checks (Rubin, 1984) to assess the goodness-of-fit of the proposed scFM on the scRNA-seq dataset in Section 5. To assess model fit, these checks compare observed data with the posterior predictive distribution of hypothetical replicated data that reflects what we expect under our posterior distribution. A good fit is indicated when the observed data aligns with the posterior predictive distribution based on the model.

Specifically, we generate posterior predictive samples as follow:

where is the correlation matrix obtained from the covariance matrix , which is constructed using posterior samples of . Moreover, denotes the pseudo inverse of the empirical cdf and are posterior samples of the Gaussian level thresholds . We compare these posterior predictive samples with the observed data for each gene. In general, the posterior predictive samples show very good fits to the observed data, as illustrated in the Q-Q plots in Figures A.2-A.6. For each gene, the majority of data points closely align along the 45-degree line, with only a few deviations observed at the tail, suggesting excellent fits to the observed data.