Bayesian Parameter Identification for

Jump Markov Linear Systems

Abstract

This paper presents a Bayesian method for identification of jump Markov linear system parameters. A primary motivation is to provide accurate quantification of parameter uncertainty without relying on asymptotic in data-length arguments. To achieve this, the paper details a particle-Gibbs sampling approach that provides samples from the desired posterior distribution. These samples are produced by utilising a modified discrete particle filter and carefully chosen conjugate priors.

Faculty of Engineering and Built Environment, The University of Newcastle, Callaghan, NSW 2308 Australia

1 Introduction

In many important applications, the underlying system is known to abruptly switch behaviour in a manner that is temporally random. This type of system behaviour has been observed across a broad range of applications including econometrics [39, 33, 50, 12, 26, 34, 40, 20], telecommunications [43], fault detection and isolation [35], and mobile robotics [44], to name just a few.

For the purposes of decision making and control, it is vital to obtain a model of this switched system behaviour that accurately captures both the dynamics and switching events. At the same time, it is well recognised that obtaining such a model based on first principles considerations is often prohibitive.

This latter difficulty, together with the importance of the problem of switched system modelling has been recognised by many other authors who have taken an approach of deriving algorithms to estimate both the dynamics and the switching times on the basis of observed system behaviour. The vast majority of methods are directed towards providing maximum-likelihood or related estimates of the system [13, 30, 3, 55, 51, 29], including our own work [9]. While maximum-likelihood (and related) estimates have proven to be very useful, their employment within decision making and control should be accompanied by a quantification of uncertainty. Otherwise trust or belief may be apportioned in error, which can lead to devastating outcomes.

Classical results for providing such error quantifications have relied on central limit theorems, that are asymptotic in the limit as the available data length [41, 42, 5, 4]. These results are then used in situations where only finite data lengths are available, which can provide inaccurate error bounds. This raises a question around providing error quantification that is accurate for finite length data sets. A further question concerns the quantification of performance metrics that rely on these system models, such as the performance of a model-based control strategy.

Fortunately, issues relating to model uncertainty can be addressed using the Bayesian framework [48]. The rationale of the Bayesian approach is that is captures the full probabilistic information of the system conditioned on the available data, even for short data lengths. However, the Bayesian approach is not without its challenges. Except for some very special cases, the Bayesian posterior distribution is not straightforward to compute due to the typically large multi-dimensional integrals that are required in forming the solution.

A remarkable alternative approach aims instead to construct a random number generator whose samples are distributed according to the desired Bayesian posterior distribution. The utility of this random number generator is that its samples can be used to approximate, with arbitrary accuracy, user defined expectation integrals via Monte-Carlo integration (see e.g. [47]). For example, this can provide estimates of the conditional mean, which is known to be the minimum mean-squared estimate.

Constructing such a random number generator has received significant research attention and among the possible solutions is the so-called Markov chain approach. As the name suggests, this approach constructs a Markov chain whose stationary distribution coincides with desired target distribution, and therefore iterates of the Markov chain are in fact samples from the desired target.

Ensuring that this Markov chain does indeed converge to the desired stationary distribution can be achieved using the Metropolis–Hastings (MH) algorithm (see e.g. [47]). Despite achieving this remarkable outcome, the MH algorithm approach has a significant drawback in that it requires a certain user supplied “proposal density”. This proposal must be carefully selected so as to deliver realisations that have a reasonable probability of originating from the target distribution.

Fortunately, this latter problem has received significant research attention and there are by now many variants of the MH method. One alternative, the Gibbs sampler, proceeds by sampling from conditional distributions in a given sequence with the primary benefit that each sample is guaranteed to be accepted. Alternatively, as we will consider in this paper, particle filters may be used to construct samples from the required conditional distribution, this resulting algorithm is aptly referred to as the particle-Gibbs method.

This particle-Gibbs approach has been previously employed for identifying linear state-space models [54, 15], autoregressive (AR) systems [21, 26, 28, 1], change-point models [19, 16, 31], stochastic volatility models [50], and stochastically switched systems operating according to drift processes [33]. Employing the particle-Gibbs sampler for a switched system encounters additional challenges. In essence, these stem from the exponential (in data-length) growth in complexity caused by possibility that the system switches among a number of possible dynamic models at each time-step [8, 3, 13, 30, 7, 29, 14, 11, 10, 37, 39].

In this work, we focus on applying a particle-Gibbs sampler to an important subclass of switched systems known as jump Markov linear systems (JMLS). This class is attractive due to its relative simplicity and versatility in modelling switched systems. Previous research effort has considered particle-Gibbs sampling for the JMLS class, each with various limitations, including univariate models in [40, 25, 26], limited dynamics modes in [40, 17, 1], and constrained system or noise structure in [16, 33, 31, 28, 23]. We focus on removing these limitations.

To perform particle-Gibbs sampling on this system class two methods need to be provided:

-

1.

A method for generating smoothed hybrid state trajectories conditioned on the available data.

-

2.

A method of updating the prior chosen for the system parameters using the sampled hybrid state trajectory.

The latter can employ the MH algorithm, but is attended to by use of conjugate prior solutions in this paper as this approach is computationally advantageous. The relevant details for this approach are discussed later.

Previous solutions to the former problem have involved sampling the continuous and discrete latent variables in separate stages of the particle-Gibbs algorithm [49, 40, 25, 26, 16, 28, 50, 23]. These approaches rely on [24, 27] for sampling the continuous state trajectory, and [21, 34, 18, 27] for the sampling the discrete state sequence. Methods used to sample the discrete sequence include ‘single move’ sampling, where the model used at each time step is sampled separately by increasing the number of steps within the particle-Gibbs iteration, and ‘multi-move sampling’ where the entire discrete trajectory is sampled as a block [27, 40]. However, these approaches have been criticised for poor mixing [53], slowing down the convergence rate of the particle-Gibbs algorithm.

Rao-Blackwellization may be used to improve the efficiency of particle filters. Additional improvements often used with Rao-Blackwellization include using a discrete particle filter (DPF) [22], which offers more efficient sampling from the discrete state space. The algorithm does this by using a metric to determine which components/particles to be kept deterministically, and which particles should undergo a resampling procedure. As the particles which are kept deterministically are those with the greatest probability mass, and therefore are not duplicated during resampling, the algorithm presents with fewer duplicated particles, and is therefore more efficient. The DPF algorithm also promises to be exact as the number of particles reaches the impracticable limit of the total number of components required to represent the probability distribution exactly, as all components will be kept deterministically in this case.

In this paper, we apply the particle-Gibbs sampler to the general JMLS class using a modified version of the DPF. As detailed later, this modified version is specifically tailored to the JMLS class and attends to some of the shortcomings in the papers which conceived the idea [22, 53]. Unlike previous particle-Gibbs approaches to JMLS identification, we do not assume the system to be univariate [40, 25, 26], support only a small number of models [40, 17, 1], or constrain the system or noise structure [23, 16, 33, 31, 28]. This in part, is made possible by use of the inverse-Wishart conjugate prior such as used within [54, 23], opposed to commonly used inverse-Gamma distribution (e.g. see [1, 18, 40, 26, 21]) that only describes scalar probability distributions.

The contributions of this paper are therefore:

-

1.

A self contained particle-Gibbs sampler which targets the parameter distribution for JMLS systems, without restriction on state dimension, model or noise structure, or the number of models.

-

2.

The provided solution uses a modified discrete particle filter for application specifically toward JMLS, which is detailed thoroughly. A discussion is also provided, clarifying a point of confusion in the original papers [53, 22]. Additionally, an alternative sampling approach is used when compared to [53], which is simpler and unbiased.

2 Problem Formulation

In this paper, we address the modelling of systems which can jump between a finite number of linear dynamic system modes using a discrete-time jump Markov linear system description, which can be expressed as

| (1a) | ||||

| where is the system state, is the system output, is the system input, is a discrete random variable called the model index that indicates the active model, and the noise terms and originate from the Gaussian white noise process | ||||

| (1b) | ||||

where

| (2) |

denotes a multivariate Normal distribution.

The system matrices and noise covariances are allowed to randomly jump or switch values as a function of the model index . A switch event is captured by allowing to transition to stochastically with the probability of transitioning from the model at time-index to the model at time-index given by

| (3) |

The set of model parameters that fully describe the above JMLS class can be conveniently collected into a parameter object , defined as

| (4) |

Problem: Presented with known state dimension and known number of system modes , a data record of outputs and inputs

respectively, the primary focus of this paper is provide a random number generator that produces samples from the target distribution

| (5) |

The following section details how this distribution can be targeted using a Markov chain Monte Carlo technique called particle-Gibbs sampling.

3 Markov Chain Monte-Carlo Approach

The essential idea underpinning so-called Markov chain Monte-Carlo (MCMC) methods is that they are focussed on computing expectation integrals of the form

| (6) |

where the function may be quite general. For example, could be as simple as an indicator function for belonging to a given set, or more complex functions such as the gain or phase margins of a dependent control design.

Solving (6) is generally intractable in closed-form, and classical quadrature methods are limited to small dimensions only. An alternative computational approach relies on a law of large numbers (LLN) to provide estimates of (6) via so-called Monte-Carlo integration where samples from are used to compute a sample average of according to

| (7) |

Under rather general assumptions on and , it can be shown that

| (8) |

where a.s. denotes almost sure convergence. Importantly, the rate of convergence of to is maximised when the samples are uncorrelated [46]. This raises the question of how to generate samples from that have minimal correlation.

A remarkably effective approach aimed at solving this problem is to construct a Markov chain whose stationary distribution coincides with the target . Equally remarkable is that such a Markov chain can be constructed in a straightforward manner using the Metropolis–Hastings (MH) algorithm [45, 36].

In this work, we will use a variant of the MH algorithm known as the particle-Gibbs sampler. In essence, the main idea of the particle-Gibbs sampler is to reduce a high-dimensional sampling problem into a sequence of low-dimensional sub-problems. The primary aim is to design these sub-problems so that sampling is relatively straightforward.

In detailing this approach for JMLS identification, it is important to extend our target distribution to the joint parameter and state posterior

| (9) |

where is a hybrid continuous-discrete trajectory, i.e.,

| (10a) | ||||

| (10b) | ||||

The particle-Gibbs algorithm will produce a Markov chain, whose iterates

| (11) |

are samples from the target . Furthermore, samples from the desired marginal posterior are obtained by simply ignoring the component of the joint samples.

There are many different ways to design a particle-Gibbs sampler to provide the desired Markov chain in (11), but possibly the most direct choice is to firstly sample the state trajectory based on the assumption of an available value. Then in a second step, this is reversed and a new sample of is generated based on the sampled state trajectory . This process is then repeated in order to provide the desired Markov chain. This procedure can be summarised by the following steps, which are indexed by the integer to clarify the iterative nature of this approach:

-

1.

Given , sample a hybrid trajectory according to

(12) -

2.

Then, given sample a new

(13)

The key to success for the above procedure is tied to the relative ease of generating samples in each step. Unfortunately, for the JMLS class it is not tractable to construct exactly, as this distribution requires an exponentially increasing number of terms as the data length increases.

It is tempting to consider the impact of simply replacing the exact distribution with a tractable approximation instead. While this may seem appealing from a practical perspective, it in general forfeits guarantees that samples are from the distribution , and therefore this implies that the resulting particle-Gibbs sampler does not produce samples from the desired target.

A remarkable result from [2] shows that such a particle-Gibbs sampler can be constructed by using sequential Monte Carlo (SMC) approximations of with guaranteed converge properties if the approximated distribution includes the previously sampled discrete model sequence . Using this particle approach, a particle filter can sample possible model sequence hypothesis (), indexed by and to calculate the approximate distribution

| (14) |

As the continuous space has a closed-form solution when conditioned on a model sequence, the particle filter employed for this problem is used to explore the discrete space only. As such, it is immensely computationally wasteful to directly employ traditional resampling schemes based on the component weights with the indices and collapsed. Doing so will likely result in duplicate components and repeated calculations, leaving other model hypothesis that could have been considered to remain unexplored.

This motivates the use of the discrete particle filter, which is employed in the proposed scheme to prevent this phenomenon. As specific details are required to implement a working DPF, it is introduced thoroughly in the following section. This section begins by relating the approximate distribution (14) produced by the DPF, to the required samples .

3.1 Sampling the latent variables

In the proposed solution, the latent variables are sampled from an approximated distribution of , with the requirement that this distribution considers the model sequence [2]. Complying with this requirement is very straight-forward, as any JMLS forward filter with a pruning/resampling scheme can be modified to always produce hybrid mixtures with the component related to . Using the produced approximated forward distributions (14), the samples can be generated by first sampling from the prediction distribution , followed by conditioning the filtered distribution on the latest sample, and sampling from the resulting distribution. These latter steps can be completed for , and can be shown to generate samples from the correct target distribution using the equations

| (15a) | ||||

Care must be taken when choosing the component reduction scheme required to compute with a practical computational cost. A merging-based scheme is strictly prohibited as this voids the convergence guarantees of the particle-Gibbs algorithm. Additionally, traditional resampling schemes for particles exploring discrete spaces are highly inefficient, as often multiple particles remaining after resampling are clones of each other. This not only is computationally wasteful, as the same calculations are performed multiple times, but also doesn’t explore the discrete space effectively.

This motivates the use of the DPF, a particle filter designed to explore a discrete space. In describing the main ideas behind the DPF it is convenient to define a new set of weights for each time instant, denoted as , that are simply the collection of all the weights from (14) for all values of and . That is

| (16) |

We can then index this array of weights using the notation to indicate the entry.

To reduce the growing number of hypothesises encountered, the DPF uses a sampling scheme which is partially deterministic, where the number of components are reduced but few (if any) clones will exist in the reduced mixture. Essentially, when tasked to reduce a mixture of components with respective weights , which satisfies , to a mixture with components, this scheme operates by considering the corresponding to the unique solution of

| (17) |

The component reduction algorithm then dictates that components with a weight satisfying

| (18) |

will be kept deterministically, leaving the remainder of the mixture to be undergo resampling.

Interestingly enough, the exact value of doesn’t need to be computed, and instead, the inclusion of each of the components can be tested, as detailed in Appendix C of [22]. In providing this solution, a second optimisation problem was conceived which only generates values of at the boundaries of the inclusion of each component. Unfortunately these values of are in general not common, and confusion mounts as resampled components are allocated a weight of , which provably should refer to the value generated from the second optimisation problem. The ’s have been used interchangeably in the paper and has led to dependant literature (e.g. [53]) skipping over the second optimisation problem entirely and mistakenly using the from the first optimisation problem.

To make matters worse, the following issues exist in the provided solution [22] to the second optimisation problem:

-

1.

The solution proposed does not handle the case when all components should be resampled, e.g., if reduction needs to be completed on components with equal weight.

-

2.

A strict inequality has been used mistakenly to form the sets which are used to compute the terms , and in the paper, misclassifying an equality that will be encountered at every boundary test.

Furthermore, the literature of [22, 53] mistakes systematic sampling for stratified sampling, and the sampling algorithm presented in [22] also contains an error, preventing duplicated components. These components statistically should either be allowed or instead, the component should be issued a modified weight when sampled multiple times.

The proposed Algorithm builds on the work of [22, 53] to provide a simpler DPF algorithm that is free of confusion about a term, which is capable of handling the case when all components should undergo resampling. Like [53], these sequences are produced using a particle-Gibbs algorithm, and the previous discrete state sequence is evaluated in the filter to ensure a guarantee of convergence. In the proposed scheme, this component is kept deterministically, which avoids the overly complex conditional sampling scheme from [53] that provably places biases upon component number and results in some components never being chosen if the algorithm was rerun an infinite number of times. To add further distinction to [53], a two-filter smoothing approach is not used in the proposed solution, and instead, the direct conditioning on the partially sampled hybrid trajectory described by (15) is used for sampling the remainder of the trajectory.

As the produced algorithms for sampling a hybrid trajectory are quite long and detailed, they have been placed in Appendix A along with practical notes for implementation.

With the approach taken for sampling the latent hybrid state trajectory covered, the choice of conjugate priors that govern the parameter distributions are now discussed. These priors are then updated with the freshly sampled hybrid trajectory to form new parameter distributions , which are subsequently sampled from to produce .

3.2 Conditioned parameter distributions

To enable efficient sampling of the JMLS parameters , the parameters were assumed to be distributed according to conjugate priors which allow for to be calculated using closed-form solutions. These conjugate priors were assumed to be as follows.

Like [21, 27, 18, 26, 33, 31], the columns of the model transition matrix were assumed to be distributed according to independent Dirichlet distributions,

| (19) |

where is a matrix of concentration parameters with elements , and is the gamma function.

The covariance matrices were assumed to be distributed according to an Inverse-Wishart distribution

| (20) |

where is the positive definite symmetric matrix argument, is the positive definite symmetric scale matrix, is the degrees of freedom, which must satisfy , and is the multivariate gamma function with dimension . A higher degree of freedom indicates greater confidence about the mean value of if .

Finally, the deterministic parameters for a model are assumed to be distributed according to a Matrix-Normal distribution with the form

| (21) |

with mean , positive definite column covariance matrix , and denoting the trace of matrix . The Matrix Normal is related to the Normal distribution by

| (22) |

Lemma 3.1 provides instructions on how these conjugate prior distributions can be conditioned on the sample , thus calculating the parameters for the distribution .

Lemma 3.1.

The distribution which parameters are sampled from can be expressed as

| (23) |

where the parameters define the prior for the -th model, and the corrected parameters for each can be calculated using

| (24a) | ||||

| (24b) | ||||

| (24c) | ||||

| (24d) | ||||

which use the quantities,

| (24ya) | ||||

| (24yb) | ||||

| (24yc) | ||||

| (24yd) | ||||

| (24ye) | ||||

| (24yf) | ||||

| (24yg) | ||||

| (24yh) | ||||

where is the set of time steps for which , and is the set of time steps for which and . Note that denotes the element in the -th row and -th column of matrix . Additionally, and denote the transpose and inverse of matrix respectively.

3.3 Sampling new parameters

As the distribution the distribution can now be calculated from Lemma 3.1, we now provide instruction on how may be sampled from such distribution.

We begin by sampling from a Inverse-Wishart distribution for each model , i.e.

| (24z) |

With sampled for , it is then possible to sample for each model using Lemma 3.2.

Lemma 3.2.

If we let be determined by

| (24aa) |

where denotes an upper Cholesky factor of , i.e. , and each element of is distributed i.i.d. according to

| (24ab) |

then

| (24ac) |

Proof.

See [32]. Finally sampling the transition matrix can be completed by sampling from Dirichlet distributions, all parametrised by . This is completed by sampling each element of from a Gamma distribution with shape parameter , and scale parameter of , i.e.,

| (24ad) |

before normalising over each column,

| (24ae) |

The complete procedure for sampling the parameter set is further outlined in Algorithm 1.

3.4 Algorithm Overview

For clarity, the proposed method is summarised in full by Algorithm 2.

4 Simulations

In this section we provide two simulations demonstrating the effectiveness of the proposed method.

4.1 Univariate system

In this example we use the proposed method on a univariate JMLS single-input single-output (SISO) system, comprising of two models . This ensures that parameters are scalar and distributions can appear on 2D plots. The choice to estimate a univariate system also ensures that certain system matrices () are free from a similarity transformation [6, 52, 51, 9], as in general, there is not a unique solution for these parameters.

The true system used in this example was parameterised by

| (24af) |

Input-output data was generated using these parameters and the input for time steps before the proposed method was applied to the dataset. The proposed method used a resampling step allowing hybrid components per time step, and used uninformative priors on the parameters to ensure the PDFs were highly data driven. The priors chosen were parameterised by

| (24aga) | |||

| (24agb) | |||

| (24agc) | |||

| (24agd) | |||

| (24age) | |||

Using these priors, the PDFs produced by the proposed method should have good support of the true parameters, and grow certainty about them with increasing size of dataset. Other alternative algorithms [40] can potentially operate on this system, but due to their use of inverse-Gamma distributions, cannot operate on the example within subsection 4.2. Additionally, as a univariate inverse-Wishart distribution is an inverse-Gamma distribution, these algorithms are equivalent for the univariate case.

The initial parameter set used in the particle-Gibbs sampler is allowed to be chosen arbitrarily. To avoid a lengthy burn-in procedure, the particle-Gibbs algorithm was initialised with values close to those which correspond the maximum likelihood solution. For a real-world problem this could be provided using the EM algorithm [9].

After iterations of the particle-Gibbs algorithm, the parameter samples were used to construct Figure 1 and Figure 2. Figure 1 shows the distribution of diagonal elements of the transition matrix, and represents the probability of models being used for consecutive time steps. As the off-diagonals are constrained by the total law of probability, there is no need to plot them. Whereas Figure 2 shows the distribution of components within which are free from a similarity transformation.

The proposed method has produced distributions with a large amount of support for the true parameters, which suggests that the algorithm is correctly performing Bayesian identification on the system. As expected, subsequent testing has shown that an increase in data length will tighten the confidence intervals of the parameters.

Generation of the both figures for this example, required the models to be sorted. Models were sorted or ‘relabelled’ by comparison of the magnitude of the models Bode response. This is not a core part of the proposed algorithm, as reordering is only required for plotting purposes.

4.2 Multivariate system

In this example, we consider identification of a multivariate three-state SISO JMLS system comprising of three models . To the best of the authors knowledge there are no alternative algorithms suitable for this problem, or for generating a ground truth.

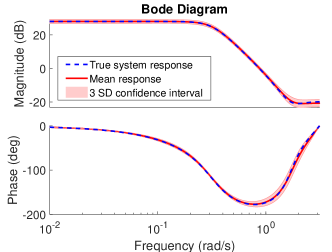

As this example considers a multivariate system, there are an infinite number of state space modes which has equivalent system response. Because of this, plotting the distribution of the model parameters themselves would be somewhat arbitrary. Instead, for the analysis, we provide a variation likely frequency responses of the models. The distribution of the transition matrix however, is free from similarity transformations, but due to the increase in available models can no longer appear on a 2D plot.

The system analysed in this example, described by the discrete transfer functions and transition matrix

| (24aha) | ||||

| (24ahb) | ||||

| (24ahc) | ||||

| and | ||||

| (24ahd) | ||||

respectively was then simulated for time steps using an input . This system was then used to initialise the proposed procedure to avoid a lengthy burn-in time. For a real-world example, this initial estimate could be obtained using the EM algorithm [9].

The proposed method was then used to identify the system based on the generated input-output data with the filter being allowed to store hybrid Gaussian mixture components. The uninformative priors chosen for identification were parameterised by

| (24aia) | |||

| (24aib) | |||

| (24aic) | |||

| (24aid) | |||

| (24aie) | |||

After particle-Gibbs iterations, the samples were used to construct Figure 3 and Figure 4. Figure 3 shows the estimated distribution of model transition probabilities, and Figure 4 shows the variation of expected model responses. As with Example 1, models were sorted using their frequency response before producing these figures. Both of these figures show good support for the model used to generate the data, demonstrating validity of the proposed algorithm.

5 Conclusion

We have developed and demonstrated an effective algorithm for Bayesian parameter identification of JMLS systems. Unlike alternative methods, we have not forced assumptions such as a univariate state or operation according to drift models. The proposed method scales easily to an increase in models and state dimension. This solution was based on a modified version of the discrete particle filter. In developing this method, points of confusion surrounding the documentation for the DPF were discovered, and have been addressed within this paper.

The proposed method was deployed for Bayesian estimation of a multivariate JMLS system in subsection 4.2, yielding distributions with good support of the transition matrix and models used to generate the data. It should be noted that due to a finite data length the true values used are unlikely to align with the maximum a posteriori (MAP) estimate.

It is unfortunate that competing approaches for either of the examples don’t exist. Regarding the first example, competing approaches use an inverse-Gamma distribution, which is identical to the inverse-Wishart distribution for the univariate case, and yield an identical algorithm. Regarding the second example, to the best of our knowledge, no alternative algorithms are available for the unconstrained multivariate JMLS identification problem.

References

- [1] James H Albert and Siddhartha Chib. Bayes inference via gibbs sampling of autoregressive time series subject to Markov mean and variance shifts. Journal of Business & Economic Statistics, 11(1):1–15, 1993.

- [2] Christophe Andrieu, Arnaud Doucet, and Roman Holenstein. Particle markov chain monte carlo methods. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 72(3):269–342, 2010.

- [3] Trevor T Ashley and Sean B Andersson. A sequential monte carlo framework for the system identification of jump Markov state space models. In 2014 American Control Conference, pages 1144–1149. IEEE, 2014.

- [4] Karl Johan Åström and Peter Eykhoff. System identification—a survey. Automatica, 7(2):123–162, 1971.

- [5] KJ Astrom. Maximum likelihood and prediction error methods. IFAC Proceedings Volumes, 12(8):551–574, 1979.

- [6] Laurent Bako, Guillaume Mercère, René Vidal, and Stéphane Lecoeuche. Identification of switched linear state space models without minimum dwell time. IFAC Proceedings Volumes, 42(10):569–574, 2009.

- [7] Hamsa Balakrishnan, Inseok Hwang, Jung Soon Jang, and Claire J Tomlin. Inference methods for autonomous stochastic linear hybrid systems. In International Workshop on Hybrid Systems: Computation and Control, pages 64–79. Springer, 2004.

- [8] Mark P. Balenzuela, Adrian G. Wills, Christopher Renton, and Brett Ninness. A new smoothing algorithm for jump Markov linear systems. Submitted to Automatica.

- [9] Mark P. Balenzuela, Adrian G. Wills, Christopher Renton, and Brett Ninness. A variational expectation-maximisation algorithm for learning jump Markov linear systems. Submitted to Automatica.

- [10] David Barber. Expectation correction for smoothed inference in switching linear dynamical systems. Journal of Machine Learning Research, 7(Nov):2515–2540, 2006.

- [11] Niclas Bergman and Arnaud Doucet. Markov chain monte carlo data association for target tracking. In Acoustics, Speech, and Signal Processing, 2000. ICASSP’00. Proceedings. 2000 IEEE International Conference on, volume 2, pages II705–II708. IEEE, 2000.

- [12] Francesco Bianchi. Regime switches, agents’ beliefs, and post-world war ii us macroeconomic dynamics. Review of Economic studies, 80(2):463–490, 2012.

- [13] Lars Blackmore, Stephanie Gil, Seung Chung, and Brian Williams. Model learning for switching linear systems with autonomous mode transitions. In 2007 46th IEEE Conference on Decision and Control, pages 4648–4655. IEEE, 2007.

- [14] Henk AP Blom and Yaakov Bar-Shalom. The interacting multiple model algorithm for systems with Markovian switching coefficients. IEEE transactions on Automatic Control, 33(8):780–783, 1988.

- [15] Chris K Carter and Robert Kohn. On gibbs sampling for state space models. Biometrika, 81(3):541–553, 1994.

- [16] Christopher K Carter and Robert Kohn. Markov chain monte carlo in conditionally gaussian state space models. Biometrika, 83(3):589–601, 1996.

- [17] Yoosoon Chang, Junior Maih, and Fei Tan. State space models with endogenous regime switching. 2018.

- [18] Siddhartha Chib. Calculating posterior distributions and modal estimates in Markov mixture models. Journal of Econometrics, 75(1):79–97, 1996.

- [19] Siddhartha Chib. Estimation and comparison of multiple change-point models. Journal of econometrics, 86(2):221–241, 1998.

- [20] Luca Di Persio and Vukasin Jovic. Gibbs sampling approach to Markov switching models in finance. International Journal of Mathematical and Computational Methods, 1, 2016.

- [21] Jean Diebolt and Christian P Robert. Estimation of finite mixture distributions through bayesian sampling. Journal of the Royal Statistical Society: Series B (Methodological), 56(2):363–375, 1994.

- [22] Paul Fearnhead and Peter Clifford. On-line inference for hidden Markov models via particle filters. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 65(4):887–899, 2003.

- [23] Emily Fox, Erik B Sudderth, Michael I Jordan, and Alan S Willsky. Bayesian nonparametric inference of switching dynamic linear models. IEEE Transactions on Signal Processing, 59(4):1569–1585, 2011.

- [24] Sylvia Frühwirth-Schnatter. Data augmentation and dynamic linear models. Journal of time series analysis, 15(2):183–202, 1994.

- [25] Sylvia Frühwirth-Schnatter. Fully bayesian analysis of switching gaussian state space models. Annals of the Institute of Statistical Mathematics, 53(1):31–49, 2001.

- [26] Sylvia Frühwirth-Schnatter. Markov chain monte carlo estimation of classical and dynamic switching and mixture models. Journal of the American Statistical Association, 96(453):194–209, 2001.

- [27] Sylvia Frühwirth-Schnatter. Finite mixture and Markov switching models. Springer Science & Business Media, 2006.

- [28] Richard Gerlach, Chris Carter, and Robert Kohn. Efficient bayesian inference for dynamic mixture models. Journal of the American Statistical Association, 95(451):819–828, 2000.

- [29] Zoubin Ghahramani and Geoffrey E Hinton. Variational learning for switching state-space models. Neural computation, 12(4):831–864, 2000.

- [30] Stephanie Gil and Brian Williams. Beyond local optimality: An improved approach to hybrid model learning. In Proceedings of the 48h IEEE Conference on Decision and Control (CDC) held jointly with 2009 28th Chinese Control Conference, pages 3938–3945. IEEE, 2009.

- [31] Paolo Giordani and Robert Kohn. Efficient bayesian inference for multiple change-point and mixture innovation models. Journal of Business & Economic Statistics, 26(1):66–77, 2008.

- [32] Arjun K Gupta and Daya K Nagar. Matrix variate distributions. Chapman and Hall/CRC, 2018.

- [33] Markus Hahn, Sylvia Frühwirth-Schnatter, and Jörn Sass. Markov chain monte carlo methods for parameter estimation in multidimensional continuous time Markov switching models. Journal of Financial Econometrics, 8(1):88–121, 2010.

- [34] James D Hamilton. A new approach to the economic analysis of nonstationary time series and the business cycle. Econometrica: Journal of the Econometric Society, pages 357–384, 1989.

- [35] Masafumi Hashimoto, Hiroyuki Kawashima, Takashi Nakagami, and Fuminori Oba. Sensor fault detection and identification in dead-reckoning system of mobile robot: interacting multiple model approach. In Intelligent Robots and Systems, 2001. Proceedings. 2001 IEEE/RSJ International Conference on, volume 3, pages 1321–1326. IEEE, 2001.

- [36] W Keith Hastings. Monte carlo sampling methods using markov chains and their applications. 1970.

- [37] Ronald E Helmick, W Dale Blair, and Scott A Hoffman. Fixed-interval smoothing for Markovian switching systems. IEEE Transactions on Information Theory, 41(6):1845–1855, 1995.

- [38] Jeroen D Hol. Resampling in particle filters, 2004.

- [39] Chang-Jin Kim. Dynamic linear models with Markov-switching. Journal of Econometrics, 60(1-2):1–22, 1994.

- [40] Chang-Jin Kim, Charles R Nelson, et al. State-space models with regime switching: classical and gibbs-sampling approaches with applications. MIT Press Books, 1, 1999.

- [41] EL Lehmann. Theory of point estimation, john willey & sons. Inc., New York, 1983.

- [42] Ljung Lennart. System identification: theory for the user. PTR Prentice Hall, Upper Saddle River, NJ, pages 1–14, 1999.

- [43] Andrew Logothetis and Vikram Krishnamurthy. Expectation maximization algorithms for MAP estimation of jump Markov linear systems. IEEE Transactions on Signal Processing, 47(8):2139–2156, 1999.

- [44] Efim Mazor, Amir Averbuch, Yakov Bar-Shalom, and Joshua Dayan. Interacting multiple model methods in target tracking: a survey. IEEE Transactions on aerospace and electronic systems, 34(1):103–123, 1998.

- [45] Nicholas Metropolis, Arianna W Rosenbluth, Marshall N Rosenbluth, Augusta H Teller, and Edward Teller. Equation of state calculations by fast computing machines. The journal of chemical physics, 21(6):1087–1092, 1953.

- [46] Brett Ninness. Strong laws of large numbers under weak assumptions with application. IEEE Transactions on Automatic Control, 45(11):2117–2122, 2000.

- [47] C. P. Robert and G. Casella. Monte Carlo Statistical Methods. Springer texts in statistics. Springer, New York, USA, second edition, 2004.

- [48] Christian Robert. The Bayesian choice: from decision-theoretic foundations to computational implementation. Springer Science & Business Media, 2007.

- [49] Neil Shephard. Partial non-gaussian state space. Biometrika, 81(1):115–131, 1994.

- [50] Mike EC P So, Kin Lam, and Wai Keung Li. A stochastic volatility model with Markov switching. Journal of Business & Economic Statistics, 16(2):244–253, 1998.

- [51] Andreas Svensson, Thomas B Schön, and Fredrik Lindsten. Identification of jump Markov linear models using particle filters. In 53rd IEEE Conference on Decision and Control, pages 6504–6509. IEEE, 2014.

- [52] René Vidal, Alessandro Chiuso, and Stefano Soatto. Observability and identifiability of jump linear systems. In Proceedings of the 41st IEEE Conference on Decision and Control, 2002., volume 4, pages 3614–3619. IEEE, 2002.

- [53] Nick Whiteley, Christophe Andrieu, and Arnaud Doucet. Efficient Bayesian inference for switching state-space models using discrete particle Markov chain Monte Carlo methods. arXiv preprint arXiv:1011.2437, 2010.

- [54] Adrian Wills, Thomas B Schön, Fredrik Lindsten, and Brett Ninness. Estimation of linear systems using a gibbs sampler. IFAC Proceedings Volumes, 45(16):203–208, 2012.

- [55] Sinan Yildirim, Sumeetpal S Singh, and Arnaud Doucet. An online expectation–maximization algorithm for changepoint models. Journal of Computational and Graphical Statistics, 22(4):906–926, 2013.

Appendix A Hybrid state sampling algorithms

This appendix details a self-contained complete set of algorithms required for sampling hybrid state trajectories.

Here, the forward filter is described by Algorithm 3, that makes use of Algorithm 4 to control the computational complexity. Algorithm 4 then makes subsequent use of the DPF threshold calculated by Algorithm 5 and systematic resampling scheme detailed in Algorithm 6. Following the forward-filtering pass, Algorithm 7 may be used to sample the required trajectories.

The following notes are intended to assist the practitioner:

-

•

The forwards filter and sampling of a latent trajectory is intended to be completed completed using the parameter set, this is not explicitly written for readability purposes.

-

•

If the ancestor component has a weight of numerically zero in Algorithm 3, it no longer needs to be tracked, as it cannot be selected by the backwards simulator, improving the produced distribution.

-

•

Algorithm 5 should be implemented using the LSE trick.

-

•

Duplicated components are allowed to be returned from Algorithm 6.

- •

Before filtering and backwards simulating each time step using Algorithms 3 and 7, the following transformation made to efficiently handle the cross-covariance term ,

| (24aj) |

Notice that the new system uses a different input .

| (24ak) |

| (24ala) | ||||

| (24alb) | ||||

| (24alc) | ||||

| (24ald) | ||||

| (24ale) | ||||

| (24alf) | ||||

| (24am) |

| (24ana) | ||||

| (24anb) | ||||

| (24anc) | ||||

| (24ao) |

| (24ap) |

| (24aq) |

| (24ar) | ||||

| (24as) | ||||

| (24at) |

| (24aua) | ||||

| (24aub) | ||||

| (24auc) | ||||

| (24aud) | ||||

| (24aue) | ||||

| (24auf) | ||||

| (24av) |

| (24aw) | ||||

| (24ax) | ||||

| (24ay) |

Appendix B Proofs

In this appendix we provide proofs for the Algorithms and Lemmata within the paper.

B.1 Proof of Algorithm 7

For readability, in this proof we utilise the shorthand . We begin the derivation with

| (24az) |

We will now outline computations the distribution . Since the terms in the numerator have a known form,

| (24ba) |

and by removing conditionally independent terms we yield

| (24bb) |

we can rewrite and manipulate the numerator as follows,

| (24bc) |

where , and , and can be computed straight-forwardly as this pattern of Normal distribution terms has a well known solution which is identical the correction step of the weighted Kalman filter used for forward-filtering. With the numerator of now having a closed form, it can be marginalised to yield the denominator

| (24bd) |

Therefore

| (24be) |

where

| (24bf) |

Sampling may be computed by introducing a auxiliary variable of some hybrid Gaussian mixture, which represents a possible model sequence for this application,

| (24bg) |

Normally this variable is not of interest and is marginalised out. Using conditional probability, we outline sampling from , and as sampling from the distributions

| (24bh) |

where the component of the sample may be discarded to obtain a sample from . Next we consider marginalisation of to yield

| (24bi) |

Considering the and distributions,

| (24bj) | ||||

| (24bk) |

where these are Categorical distributions, which and can easily be sampled from. Next it follows from (24bg) and (24bi) that

| (24bl) |

and therefore sampling can be completed straight-forwardly using

| (24bm) |

∎

Proof of Lemma 3.1

For readability, in this proof we utilise the shorthand . We begin with

| (24bn) |

as is a constant for each iteration. By expanding and exercising conditional independence, we yield

| (24bo) |

Next we consider the each of the columns in the matrix, written as , to be conditionally independent, and therefore . Therefore

where is the set of time steps in sample which has model being active, i.e. . Substituting the assumed distributions for these terms yields

| (24bq) |

where denotes the -th column of . We can now use well known results for updating the conjugate prior, see e.g. [54]. This yields a solution of the form

| (24br) |

where the parameters can be calculated using the instructions provided in Lemma 3.1. A sample from this distribution can be taken by sampling from the Dirichlet, Matrix-Normal and Inverse-Wishart distribution for each model.

∎