Bayesian Optimization under Stochastic Delayed Feedback

Abstract

Bayesian optimization (BO) is a widely-used sequential method for zeroth-order optimization of complex and expensive-to-compute black-box functions. The existing BO methods assume that the function evaluation (feedback) is available to the learner immediately or after a fixed delay. Such assumptions may not be practical in many real-life problems like online recommendations, clinical trials, and hyperparameter tuning where feedback is available after a random delay. To benefit from the experimental parallelization in these problems, the learner needs to start new function evaluations without waiting for delayed feedback. In this paper, we consider the BO under stochastic delayed feedback problem. We propose algorithms with sub-linear regret guarantees that efficiently address the dilemma of selecting new function queries while waiting for randomly delayed feedback. Building on our results, we also make novel contributions to batch BO and contextual Gaussian process bandits. Experiments on synthetic and real-life datasets verify the performance of our algorithms.

1 Introduction

Bayesian optimization (BO) (Shahriari et al., 2015; Frazier, 2018; Garnett, 2022) is a popular and widely-used sequential method for zeroth-order optimization of unknown black-box functions that are complex and expensive to compute. The existing BO methods assume that the function evaluation (feedback) is available to the learner immediately or after a fixed delay.

However, these assumptions are impractical in many real-life problems where feedback is available after a random delay. To take advantage of the experimental parallelization in these problems, the learner needs to start new function evaluations without waiting for the randomly delayed feedback.

We refer to this new BO problem as ‘Bayesian Optimization under Stochastic Delayed Feedback’ (BO-SDF). In this paper, we propose algorithms that efficiently address the problem of selecting new function queries while waiting for randomly delayed feedback. Specifically, we answer the following question:

How to start a new function query when the observations of the past function queries are randomly delayed?

Many real-life applications can be cast as BO-SDF problems. For example, when performing clinical trials to discover new medicines, we need to optimize the amount of different raw materials in order to find their most effective composition using a small number of clinical trials (Chow & Chang, 2006), which is a natural application for BO due to its sample efficiency. However, the evaluation of the effectiveness of a medicine needs to take into account the side effects which are usually not observed immediately but are instead revealed after a random period due to the varying physiology of different patients. Therefore, a natural challenge here is how to use BO to choose the next composition for testing, while accounting for the stochastically delayed observations from some previous clinical trials. A similar challenge also arises when we aim to find the most effective dose of a new medicine through clinical trials (Takahashi & Suzuki, 2021).

Another motivating example arises from online product recommendation (Chapelle, 2014; Diemert et al., 2017). Most online platforms make recommendations in milliseconds, but the user’s response (i.e., watching a movie or buying a product) generally happens after a random time period which may range from hours to days or even weeks. Furthermore, the issue of randomly delayed feedback also plagues other common applications of BO such as hyperparameter tuning of machine learning (ML) models (Snoek et al., 2012) (e.g., the training time of a neural network depends on the number of layers, network width, among others) and material discovery (Ueno et al., 2016).

The closest BO setting to our BO-SDF is batch BO (Desautels et al., 2014; Daxberger & Low, 2017; Chowdhury & Gopalan, 2019) which also allows multiple queries to be performed on the black-box function in parallel. Both problems require choosing an input query to evaluate the black-box function while the observations of some previously selected inputs are not available (i.e., delayed). However, there are important differences because the delays are fixed in a certain way in batch BO but can be random in BO-SDF. Interestingly, batch BO can be considered as a special case of our BO-SDF problem and hence, our algorithms proposed for BO-SDF can be applied to batch BO while achieving important improvements (Section 4).

Following the practice of BO, in order to choose the input queries to quickly approach the global optima, we employ a Gaussian process (GP) (Rasmussen & Williams, 2006) to model the black-box function, which builds a posterior belief of the function using the history of function queries and their feedback. However, when constructing this posterior belief in BO-SDF problems, we are faced with the important question of how to handle the randomly delayed feedback whose observations are not available. To this end, we replace the unavailable delayed feedback by the minimum function value,111In many problems, the minimum value of a function is known, e.g., a user’s minimum response in online recommendation systems is ‘no click’ (i.e., ) and the minimum accuracy for hyperparameter tuning of machine learning models is ‘’. which we refer to as censored feedback. The use of censored feedback, interestingly, improves the exploration in BO-SDF problems (Section 3.1) and hence leads to better theoretical and empirical performances. With the posterior belief built using censored feedback, we propose algorithms using upper confidence bound (UCB) and Thompson sampling (TS), both of which enjoy sub-linear regret guarantees. Specifically, our contributions are as follows:

-

•

We introduce and formalize the notion of censored feedback in the BO-SDF problem, and propose UCB- and TS-based algorithms with sub-linear regret guarantees (Section 3).

-

•

Since batch BO is a special case of BO-SDF, we apply our proposed algorithms (Section 3) to batch BO and show that our algorithms enjoy tighter regret upper bounds than classic batch BO algorithms (Section 4). This gain is mainly attributed to the censored feedback, which leads to a better exploration policy.

- •

-

•

Our experimental results in Section 6 validate the different performance aspects of our proposed algorithms on synthetic and real-life datasets.

2 Problem Setting

This paper considers the Bayesian optimization (BO) problem where the function evaluations (feedback) are available to the learner after a random delay. Let be a finite domain of all function queries where .222We assume to be finite, but it is straightforward to extend our theoretical results to a compact domain using a suitable discretization scheme (Li & Scarlett, 2022, Lemma 2). The learner selects a new function query as soon as enough resources are available (or when experiment parallelization is possible). We denote the -th query by . After the learner selects the query to evaluate the unknown black-box function , the environment generates a noisy function evaluation, denoted by feedback . We assume that is an -sub-Gaussian noise. The learner observes only after a stochastic delay , which is generated from an unknown distribution .

We refer to this new BO problem as ‘Bayesian Optimization under Stochastic Delayed Feedback’ (BO-SDF). The unknown function , query space , and unknown delay distribution identify an instance of the BO-SDF problem. The optimal query () has the maximum function value (global maximizer), i.e., . After selecting a query , the learner incurs a penalty (or instantaneous regret) .

Since the optimal function query is unknown, we sequentially estimate this using the available information of the selected queries and observed feedback. Our goal is to learn a sequential policy for selecting queries that finds the optimal query (or global maximizer) as quickly as possible. There are two common performance measures for evaluating a sequential policy. The first performance measure is simple regret. Let be the -th function query selected by the policy. Then, after observing function evaluations, the simple regret is Any good policy must have no regret, i.e., . The second performance measure of the policy is cumulative regret which is the sum of total penalties incurred by the learner. After observing function evaluations, the cumulative regret of a policy is given by Any good policy should have sub-linear regret, i.e., Even though simple regret and cumulative regret are different performance measures, having a policy with no regret or sub-linear regret implies that the policy will eventually converge to the optimal query (or global optimizer).

3 BO under Stochastic Delayed Feedback

A function estimator is the main component of any BO problem for achieving good performance. We use a Gaussian process (GP) as a surrogate for the posterior belief of the unknown function (Rasmussen & Williams, 2006; Srinivas et al., 2010). To deal with the delayed feedback, we will introduce the notion of censored feedback in GPs, in which the delayed feedback is replaced by the minimum function value. Finally, we will propose UCB- and TS-based algorithms with sub-linear regret guarantees.

3.1 Estimating Function using Gaussian Processes

We assume that the unknown function belongs to the reproducing kernel Hilbert space (RKHS) associated with a kernel , i.e., where . By following standard practice in the literature, we assume without loss of generality that for all . We also assume that feedback is bounded, i.e., for all . This assumption is not restrictive since any bounded function can be re-scaled to satisfy this boundedness requirement. For example, the validation accuracy of machine learning models in our hyperparameter tuning experiment in Section 6 is bounded in .

Censored Feedback.

The learner has to efficiently exploit available information about queries and feedback to achieve a better performance. The estimated GP posterior belief has two parts, i.e., mean function and covariance function. The posterior covariance function only needs the function queries for building tighter confidence intervals, whereas the posterior mean function needs both queries and their feedback. Incorporating the queries with delayed feedback in updating the posterior mean function leads to a better estimate, but the question is how to do it. One possible solution is to replace the delayed feedback with some value, but then the question is what should that value be.

To pick the suitable value for replacing delayed feedback, we motivate ourselves from real-life applications like the online movies recommendations problem. When an online platform recommends a movie, it expects the following responses from the users – not watching the movie, watching some part of the movie, and watching the entire movie. The platform can map these responses to where is assigned for ‘not watching the movie’, for ‘watching the entire movie’, and an appropriate value in for ‘watching some part of the movie.’ There are two reasons for the delay in the user’s response: The user does not like the recommended movie or does not have time to watch the movie. Before the user starts watching the movie, the platform can replace the user’s delayed response with ‘not watching the movie’ (i.e., ‘’) and update it later when more information is available. Therefore, we can replace the delayed feedback with the minimum function value.333 It is possible to replace the delayed feedback with other values. When it is replaced by the current GP posterior mean, it has the same effect as the hallucination in batch BO (more details in Section 4). Another possible value for replacing the delayed feedback is the -nearest posterior means where . We refer to this replaced feedback as censored feedback. The idea of censored feedback is also used in other problems such as censoring delayed binary feedback (Vernade et al., 2020) and censoring losses (Verma et al., 2019, 2021) or rewards (Verma & Hanawal, 2020) in online resource allocation problems.

When the minimum function value replaces the delayed feedback, the posterior mean around that query becomes small, consequently ensuring that the learner will not select the queries around the queries with delayed feedback before exploring the other parts of the query’s domain. Therefore, the censored feedback leads to better exploration than replacing delayed feedback with a larger value (e.g., current posterior mean). However, the queries with delayed feedback need to be stored so that the posterior mean can be updated when feedback is revealed. Due to several reasons (e.g., limited storage, making resources available to new queries), the learner cannot keep all queries with delayed feedback and replace the old queries with the latest ones.444Our motivation for removing older queries comes from online recommendation problems where the chance of observing feedback diminishes with time. The learner can also use a more complicated scheme for query removal. It can degrade the algorithm’s performance, as discussed in Section 3.3 and demonstrated by experiments in Section 6.

Let an incomplete query represent the function query with unobserved feedback and be the number of incomplete queries the learner can store. We assume that the random delay for a query is the number of new queries that need to be started (i.e., number of iterations elapsed) before observing its feedback. Therefore, the delay only takes values of non-negative integers.555We have introduced the delay based on iterations here to bring out the main ideas of censored feedback. Any suitable discrete distribution (e.g., Poisson) can model such delays. We have also introduced the censored feedback with time-based delay, which is more practical and can be modeled using a suitable continuous distribution (see Section A.4 for more details). The censored feedback of the -th query before selecting the -th query is denoted by . That is, the learner censors the incomplete queries by assigning to them.666We can make the minimum value of any bounded function by adding to it the negative of the minimum function value. It does not change the optimizer since the optimizer of a function is invariant to the constant function shift. By doing this, we can represent censored feedback by an indicator function, making censored feedback easier to handle in theoretical analysis. Now, the GP posterior mean and covariance functions can be expressed using available function queries and their censored feedback, as follows:

| (1) | ||||

where , , , , and is a regularization parameter that ensures is a positive definite matrix. We denote .

3.2 Algorithms for the BO-SDF problem

After having a posterior belief of the unknown function, the learner can decide which query needs to be selected for the subsequent function evaluation. Since the feedback is only observed for the selected query, the learner needs to deal with the exploration-exploitation trade-off. We use UCB- and TS-based algorithms for BO-SDF problems that handle the exploration-exploitation trade-off efficiently.

UCB-based Algorithm for BO-SDF problems. UCB is an effective and widely-used technique for dealing with the exploration-exploitation trade-off in various sequential decision-making problems (Auer et al., 2002; Garivier & Cappé, 2011). We propose a UCB-based algorithm named GP-UCB-SDF for the BO-SDF problems. It works as follows: When the learner is ready to start the -th function query, he firstly updates the GP posterior mean and standard deviation defined in Eq. 1 using available censored noisy feedback. Then, he selects the input for the next function evaluation by maximizing the following UCB value:

| (2) |

where , , , and is the maximum information gain about the function from any set of function queries (Srinivas et al., 2010).

After selecting the input , the learner queries the unknown function and the environment generates a noisy feedback with an associated delay . The learner observes only after a delay of iff . Before starting a new function evaluation, the learner keeps observing the noisy feedback of past queries. The same process is repeated for the subsequent function evaluations.

TS-based Algorithm for BO-SDF problems. In contrast to the deterministic UCB, TS (Thompson, 1933; Agrawal & Goyal, 2012, 2013a; Kaufmann et al., 2012) is based on Bayesian updates that select inputs according to their probability of being the best input. Many works have shown that TS is empirically superior to UCB-based algorithms (Chapelle & Li, 2011; Chowdhury & Gopalan, 2019). We name our TS-based algorithm GP-TS-SDF which works similarly to GP-UCB-SDF, except that the -th function query is selected as follows:

| (3) |

where .

3.3 Regret Analysis

Let for be the probability of observing delayed feedback within the next iterations. The following result gives the confidence bounds of GP posterior mean function and is important to our subsequent regret analysis:

Theorem 1 (Confidence Ellipsoid).

Let , , and . Then, with probability at least ,

The detailed proofs of 1 and other results below are given in Appendix A. Now, we state the regret upper bounds of our proposed GP-UCB-SDF and GP-TS-SDF:

Theorem 2 (GP-UCB-SDF).

Let . Then, with probability at least ,

As expected, the regret inversely depends on , i.e., the probability of observing delayed feedback within the next iterations. If we ignore the logarithmic factors and constants in 2, then the regret of GP-UCB-SDF is upper bounded by .

Theorem 3 (GP-TS-SDF).

With probability at least ,

The regret bounds in both Theorems 2 and 3 are sub-linear for the commonly used squared exponential (SE) kernel, for which (Srinivas et al., 2010).

By following the common practice in the BO literature (Contal et al., 2013; Kandasamy et al., 2018), we can upper-bound the simple regret by the average cumulative regret, i.e., .

Discussion on the trade-off regarding . A large value of ensures that fewer queries are discarded and favors the regret bound (i.e., via the term ) by making large. However, a large also means that our algorithm allows larger delays, which consequently forces us to enlarge the width of the confidence interval (1) to account for them. It makes the regret bound worse, which is also reflected by the linear dependence on in the second term of the regret upper bounds (Theorems 2 and 3). As is directly related to the storage requirement of input queries whose feedback are yet to be observed, a large value of is constrained by the resources available to the learner.

Input-dependent random delays. In some applications, the random delay can depend on the function query: for example, a larger number of hidden layers increases the training time of a neural network. Our regret bounds still hold with input-dependent random delays by redefining for queries as .

Limitations of censored feedback. Censoring, i.e., replacing values of incomplete queries with the minimum function values, leads to an aggressive exploration strategy. However, censoring is required to ensure that our BO method does not unnecessarily explore the incomplete queries or queries near them. Moreover, we can reduce the effect of this aggressive exploration by increasing the value of , since a larger leads to less aggressive censoring. Another limitation of the censoring method is that it needs to know the minimum function value. However, when the minimum value is unknown, we can use a suitable lower bound as a proxy for the minimum function value. However, regret analysis of such a method can be challenging and left for future research.

4 Improved Algorithms for Batch BO

Notably, our BO-SDF problem subsumes batch BO as a special case. Specifically, the function queries in batch BO can be viewed as being sequentially selected, in which some queries need to be selected while some other queries of the same batch are still incomplete (Desautels et al., 2014). Therefore, in batch BO with a batch size of , the incomplete queries can be viewed as delayed feedback with fixed delays s.t. . In this case, by choosing , we can ensure that . As a result, our method of censoring (Section 3.1) gives a better treatment to the incomplete queries than the classic technique from batch BO, which we will demonstrate next via both intuitive justification and regret comparison.

The classic technique to handle the incomplete queries in batch BO is hallucination which was proposed by the GP-BUCB algorithm from (Desautels et al., 2014). It has also been adopted by a number of its extensions (Daxberger & Low, 2017; Chowdhury & Gopalan, 2019). In particular, the feedback of incomplete queries is hallucinated to be (i.e., censored with) the GP posterior mean computed using only the completed queries (excluding the incomplete queries). It is equivalent to keeping the GP posterior mean unchanged (i.e., unaffected by the incomplete queries) while updating the GP posterior variance using all selected queries (including the incomplete queries). Note that in contrast to the hallucination, our censoring technique sets the incomplete observations to the minimum function value (i.e., ) (Section 3.1). As a result, compared with the hallucination where the GP posterior mean is unaffected by the incomplete queries, our censoring can reduce the value of the GP posterior mean at the incomplete queries (because we have treated their observations as if they are ). As a result, our censoring can discourage these incomplete queries from being unnecessarily queried again. It encourages the algorithm to explore other unexplored regions, hence leading to better exploration.

Interestingly, the better exploration resulting from our censoring technique also translates to a tighter regret upper bound. Specifically, in batch BO with a batch size of , after plugging in and , our next result gives the regret upper bound of GP-UCB-SDF.

Proposition 1.

With probability at least ,

As long as which holds for most commonly used kernels such as the squared exponential (SE) and Matérn kernels, both terms in 1 grow slower than which is the regret upper bound of the GP-BUCB algorithm based on hallucination (Desautels et al., 2014). Importantly, to achieve a sub-linear regret upper bound (i.e., to ensure that can be upper-bounded by a constant), GP-BUCB (Desautels et al., 2014) and its extensions (Daxberger & Low, 2017; Kandasamy et al., 2018; Chowdhury & Gopalan, 2019) all require a special initialization scheme (i.e., uncertainty sampling). It is often found unnecessary in practice (Kandasamy et al., 2018) and hence represents a gap between theory and practice. Interestingly, our tighter regret upper bound from 1 has removed the dependence on and hence eliminated the need for uncertainty sampling, thereby closing this gap between theory and practice.

Note that our discussions above regarding the advantage of censoring over hallucination also apply to TS-based algorithms. In particular, in batch BO with a batch size of , the regret upper bound of GP-TS-SDF is given by the following result.

Proposition 2.

With probability at least ,

All three terms in 2 grow slower than which is the regret upper bound of the GP-BTS algorithm based on hallucination (Chowdhury & Gopalan, 2019) as long as .

To summarize, though batch BO is only a special case of our BO-SDF problem, we have made non-trivial contributions to the algorithm and theory of batch BO. Our GP-UCB-SDF and GP-TS-SDF algorithms and our theoretical analyses may serve as inspiration for future works on batch BO.

5 Contextual Gaussian Process Bandits under Stochastic Delayed Feedback

Before making a decision, sometimes additional information is available to the learner in many real-life scenarios (e.g., users’ profile information for an online platform and patients’ medical history before clinical trials). This additional information is referred to as context in the literature, and the value of a function depends on the context (Agrawal & Goyal, 2013b; Chu et al., 2011; Krause & Ong, 2011; Li et al., 2010). The learner can design the algorithms to exploit the contextual information to make a better decision. We can extend our results for the BO-SDF problem to the contextual Gaussian process bandits (Krause & Ong, 2011) with stochastic delayed feedback where a non-linear function maps a context to the feedback.

Let be a finite set of available actions (or queries) and be a set of all contexts where . In round , the environment generates a context . Then, the learner selects an action and observes noisy feedback denoted by . We assume that and is an -sub-Gaussian noise. The is only observed after a random delay , which is generated from an unknown distribution .

We refer to this new problem as ‘Contextual Gaussian process Bandits under Stochastic Delayed Feedback’ (CGB-SDF). The unknown function , context space , action space , and unknown delay distribution identify an instance of the CGB-SDF problem. The optimal action for a given context is the action where the function has its maximum value, i.e., . The learner incurs a penalty of for a context and action . We aim to learn a policy that achieves the minimum cumulative penalties or regret which is given by .

Our goal is to learn a policy that has a small sub-linear regret, i.e., . The sub-linear regret here implies that the policy will eventually select the optimal action for a given context. We have adapted our algorithms for the BO-SDF problem to the CGB-SDF problem and shown that they also enjoy similar sub-linear regret guarantees; see Appendix B for more details.

6 Experiments

We compare with previous baseline methods that can be applied to BO-SDF problems after modifications. Firstly, we compare with standard GP-UCB (Srinivas et al., 2010) which ignores the delayed observations when selecting the next query. Note that GP-UCB is likely to repeat previously selected queries in some iterations when the GP posterior remains unchanged (i.e., if no observation is newly collected between two iterations). Next, we also compare with the batch BO method of asy-TS (Kandasamy et al., 2018) which, similarly to GP-UCB, ignores the delayed observations when using TS to choose the next query. Lastly, we compare with the batch BO methods of GP-BUCB (Desautels et al., 2014) and GP-BTS (Chowdhury & Gopalan, 2019) which handle the delayed observations via hallucination (Section 4). Following the suggestion from (Vernade et al., 2020), if not specified otherwise, we set to be where is the mean of the delay distribution. However, this is for convenience only since we will demonstrate (Section 6.1) that our algorithms consistently achieve competitive performances as long as is large enough. Therefore, does not need to be known in practice. We defer some experimental details to Appendix C due to space constraint. Our code is released at https://github.com/daizhongxiang/BO-SDF.

|

|

|

|

| (a) | (b) | (c) | (d) |

|

|

|

|

| (a) | (b) | (c) | (d) |

|

|

|

|

| (e) | (f) | (g) | (h) |

6.1 Synthetic Experiments

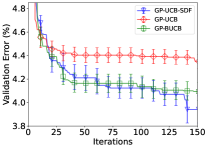

For this experiment, we use a Poisson distribution (with a mean parameter ) as the delay distribution and sample the objective function from a GP with an SE kernel defined on a discrete -dimensional domain. Fig. 1a plots the performances of our GP-UCB-SDF for different delay distributions and shows that larger delays (i.e., larger ’s) result in worse performances. Fig. 1b shows that for a fixed delay distribution (i.e., ), an overly small value of leads to large regrets since it causes our algorithm to ignore a large number of delayed observations (i.e., overly aggressive censoring). On the other hand, a large is desirable since and produce comparable performances.

However, an overly large value of may exert an excessive resource requirement since we may need to cater to many pending function evaluations. Therefore, when the delay distribution (hence ) may be unknown, we recommend using a large value of that is allowed by the resource constraints. We now compare the performances of our GP-UCB-SDF with the baselines of GP-UCB and GP-BUCB for both stochastic (, Fig. 1c) and deterministic (delays fixed at , Fig. 1d) delay distributions. The figures show that our GP-UCB-SDF achieves smaller simple regret than the baselines in both settings.

6.2 Real-world Experiments

In this section, we perform real-world experiments on hyperparameter tuning for ML models under two realistic settings. In the first setting, we consider stochastic delay distributions where the delays are sampled from a Poisson distribution with a mean parameter . This setting is used to simulate real-world scenarios where the evaluations of different hyperparameter configurations may be stochastically delayed because they may require a different amount of computations (e.g., training a neural network with more layers is more time-consuming), or the machines deployed in the experiment may have different computational capabilities. The second setting considers fixed delay distributions where every delay is fixed at , which is used to emulate real-world asynchronous batch BO problems with a batch size of .

|

|

|

|

| (a) | (b) | (c) | (d) |

|

|

|

|

| (a) | (b) | (c) | (d) |

We now tune the hyperparameters of three different ML models to demonstrate the consistency of our algorithms. Specifically, we tune two hyperparameters of SVM (i.e., the penalty parameter and RBF kernel parameter) used for diabetes diagnosis, three hyperparameters of convolution neural networks (CNNs) (i.e., the batch size, learning rate, and learning rate decay) using the MNIST dataset, and the same three hyperparameters of logistic regression (LR) used for breast cancer detection. The experimental results on hyperparameter tuning for SVM and CNN are plotted in Fig. 2, including the results for UCB- and TS-based algorithms for both settings. The results for hyperparameter tuning of LR are shown in Fig. 3. The figures show that our GP-UCB-SDF and GP-TS-SDF consistently outperform the corresponding baselines in both settings with stochastic and deterministic delay distributions. The results here verify the practical effectiveness of our proposed algorithms in real-world problems.

6.3 Real-world Experiments on Contextual Gaussian Process Bandits

In this section, we apply our algorithms to two real-world contextual GP bandit problems (Section 5). Our first experiment performs multi-task BO in the contextual GP bandit setting following the work of Krause & Ong (2011). We use the tabular benchmark dataset for SVM hyperparameter tuning from the work of Wistuba et al. (2015), which consists of the validation accuracy evaluated on a discrete domain of six SVM hyperparameters for different classification tasks. Each of the tasks is associated with a set of meta-features which are used as the contexts . We sequentially tackle the tasks and perform hyperparameter tuning for each task for iterations, i.e., the context for every task is repeated consecutively for times and the contexts for all tasks arrive sequentially.

Our second experiment performs non-stationary BO in the contextual GP bandit setting. In some real-world BO problems, the objective function is non-stationary (i.e., changing over time). For example, when we tune the hyperparameters of an ML model for diabetes diagnosis (Section 6.2), we may need to perform the hyperparameter tuning task periodically because the size of the dataset is progressively growing due to sequentially arriving patient data. We divide the entire dataset into progressively growing datasets and assign an index to each dataset such that a smaller dataset has a smaller index. Then, we use the indices as the contexts because two datasets whose indices are close share many common data points and are hence expected to lead to similar performances. We let the contexts arrive in the order of their indices to simulate sequentially arriving patients and again repeat each context for iterations.

For both experiments, we use a stochastic delay distribution (i.e., Poisson distribution with ) and set . The cumulative regrets of different algorithms are shown in Fig. 4, in which both GP-UCB-SDF and GP-TS-SDF incur smaller regrets than the other baselines.

7 Related Work

We have developed algorithms for the BO problems with stochastic delayed feedback. In the following, we briefly review the papers relevant to our problem.

Batch BO. Batch BO is the closest BO setting to our BO-SDF problem. Many algorithms have been proposed for different variants of batch BO problems in the literature (Desautels et al., 2014; González et al., 2016; Daxberger & Low, 2017; Kandasamy et al., 2018; Gong et al., 2019; Chowdhury & Gopalan, 2019; Balandat et al., 2020). However, the delay in observing feedback observations in all these batch BO problems is fixed. Some batch BO algorithms are based on the UCB index (Desautels et al., 2014; Daxberger & Low, 2017), while some others are based on TS (Kandasamy et al., 2018; Chowdhury & Gopalan, 2019; Gong et al., 2019). The batch BO methods like ‘local penalization’ (González et al., 2016) and ‘fantasize’ (Balandat et al., 2020) can not work with stochastically delayed feedback and have no theoretical guarantees. All variants of batch BO problems can be treated as special cases of the BO-SDF problem by fixing the delays appropriately. Therefore, our UCB- and TS-based algorithms can be used for any batch BO problem.

Contextual Gaussian Process (GP) Bandits. Several prior works consider the availability of additional information to the learner before making the decision. This line of work is popularly known as contextual bandits (Li et al., 2010). It has many applications in advertising, web search, and e-commerce. In contextual bandits, the mean reward is a function of context and often parameterized (e.g., linear (Li et al., 2010; Chu et al., 2011; Agrawal & Goyal, 2013b), GLM (Li et al., 2017), or non-linear (Valko et al., 2013)). Contextual GP bandits model the posterior belief of a non-linear function using GPs (Krause & Ong, 2011). To the best of our knowledge, our work is the first to introduce stochastic delayed feedback into contextual GP bandits.

Stochastic Delayed Feedback. Due to several practical applications, some works have explored fixed or stochastic delayed feedback in the stochastic multi-armed bandits (MAB) (Slivkins, 2019; Lattimore & Szepesvári, 2020) which is a simpler sequential decision-making framework than BO. In the MAB literature, the problems ranging from known fixed delay (Joulani et al., 2013), unknown delay (Zhong et al., 2017), unknown stochastic delay (Vernade et al., 2017; Grover et al., 2018), and unknown delays in the adversarial setting (Li et al., 2019) are well-studied. The setting of the unknown stochastic delay is further extended to the linear bandits (Zhou et al., 2019; Vernade et al., 2020) which is closest to our BO-SDF problem. However, these works on linear bandits assume that the black-box function is linear and has binary feedback, which may not hold in real-life applications.

8 Conclusion

This paper has studied the Bayesian optimization (BO) problem with stochastic delayed feedback (BO-SDF). We have used Gaussian processes as a surrogate model for the posterior belief of the unknown black-box function. To exploit the information of queries with delayed feedback (i.e., incomplete queries) for updating the posterior mean function, we have used censored feedback that assigns the minimum function value (or ‘0’) to the delayed feedback. It discourages the learner from selecting the incomplete queries (or queries near them) when choosing the next query and hence leads to more efficient exploration.

We have proposed UCB- and TS-based algorithms for the BO-SDF problem and given their sub-linear regret guarantees. Interestingly, when delays are fixed in a certain way, batch BO becomes a special case of the BO-SDF problem. Hence, we can adapt our algorithms for the batch BO problem. We have shown that our algorithms are theoretically and empirically better and do not need the special initialization scheme required for the existing batch BO algorithms. We also extended our algorithms to contextual Gaussian process bandits with stochastic delayed feedback, which is in itself a non-trivial contribution.

As the learning environment keeps changing in real life, an interesting future direction will be considering the BO-SDF problem with a non-stationary function. We also plan to generalize our algorithms to nonmyopic BO (Kharkovskii et al., 2020b; Ling et al., 2016), high-dimensional BO (Hoang et al., 2018), private outsourced BO (Kharkovskii et al., 2020a), preferential BO (Nguyen et al., 2021d), federated/collaborative BO (Dai et al., 2020b, 2021; Sim et al., 2021), meta-BO (Dai et al., 2022), and multi-fidelity BO (Zhang et al., 2017, 2019) settings, handle risks (Nguyen et al., 2021b, a; Tay et al., 2022) and information-theoretic acquisition functions (Nguyen et al., 2021c, e), incorporate early stopping (Dai et al., 2019), and/or recursive reasoning (Dai et al., 2020a), and consider its application to neural architecture search (Shu et al., 2022a, b) and inverse reinforcement learning (Balakrishnan et al., 2020). For applications with a huge budget of function evaluations, we like to couple our algorithms with the use of distributed/decentralized (Chen et al., 2012, 2013a, 2013b, 2015; Hoang et al., 2016, 2019; Low et al., 2015; Ouyang & Low, 2018), online/stochastic (Hoang et al., 2015, 2017; Low et al., 2014; Xu et al., 2014; Yu et al., 2019b), or deep sparse GP models (Yu et al., 2019a, 2021) to represent the belief of the unknown objective function efficiently.

Acknowledgements

This research/project is supported by A*STAR under its RIE Advanced Manufacturing and Engineering (AME) Industry Alignment Fund – Pre Positioning (IAF-PP) (Award AEa) and by the Singapore Ministry of Education Academic Research Fund Tier .

References

- Agrawal & Goyal (2012) Agrawal, S. and Goyal, N. Analysis of Thompson sampling for the multi-armed bandit problem. In Proc. COLT, pp. 39.1–39.26, 2012.

- Agrawal & Goyal (2013a) Agrawal, S. and Goyal, N. Further optimal regret bounds for Thompson sampling. In Proc. AISTATS, pp. 99–107, 2013a.

- Agrawal & Goyal (2013b) Agrawal, S. and Goyal, N. Thompson sampling for contextual bandits with linear payoffs. In Proc. ICML, pp. 127–135, 2013b.

- Auer et al. (2002) Auer, P., Cesa-Bianchi, N., and Fischer, P. Finite-time analysis of the multiarmed bandit problem. Machine Learning, pp. 235–256, 2002.

- Balakrishnan et al. (2020) Balakrishnan, S., Nguyen, Q. P., Low, B. K. H., and Soh, H. Efficient exploration of reward functions in inverse reinforcement learning via Bayesian optimization. In Proc. NeurIPS, pp. 4187–4198, 2020.

- Balandat et al. (2020) Balandat, M., Karrer, B., Jiang, D., Daulton, S., Letham, B., Wilson, A. G., and Bakshy, E. BoTorch: a framework for efficient Monte-Carlo Bayesian optimization. In Proc. NeurIPS, pp. 21524–21538, 2020.

- Besson & Kaufmann (2018) Besson, L. and Kaufmann, E. What doubling tricks can and can’t do for multi-armed bandits. arXiv preprint arXiv:1803.06971, 2018.

- Chapelle (2014) Chapelle, O. Modeling delayed feedback in display advertising. In Proc. KDD, pp. 1097–1105, 2014.

- Chapelle & Li (2011) Chapelle, O. and Li, L. An empirical evaluation of Thompson sampling. In Proc. NeurIPS, pp. 2249–2257, 2011.

- Chen et al. (2012) Chen, J., Low, K. H., Tan, C. K.-Y., Oran, A., Jaillet, P., Dolan, J. M., and Sukhatme, G. S. Decentralized data fusion and active sensing with mobile sensors for modeling and predicting spatiotemporal traffic phenomena. In Proc. UAI, pp. 163–173, 2012.

- Chen et al. (2013a) Chen, J., Cao, N., Low, K. H., Ouyang, R., Tan, C. K.-Y., and Jaillet, P. Parallel Gaussian process regression with low-rank covariance matrix approximations. In Proc. UAI, pp. 152–161, 2013a.

- Chen et al. (2013b) Chen, J., Low, K. H., and Tan, C. K.-Y. Gaussian process-based decentralized data fusion and active sensing for mobility-on-demand system. In Proc. RSS, 2013b.

- Chen et al. (2015) Chen, J., Low, K. H., Jaillet, P., and Yao, Y. Gaussian process decentralized data fusion and active sensing for spatiotemporal traffic modeling and prediction in mobility-on-demand systems. IEEE Trans. Autom. Sci. Eng., 12:901–921, 2015.

- Chow & Chang (2006) Chow, S.-C. and Chang, M. Adaptive Design Methods in Clinical Trials. CRC Press, 2006.

- Chowdhury & Gopalan (2017) Chowdhury, S. R. and Gopalan, A. On kernelized multi-armed bandits. In Proc. ICML, pp. 844–853, 2017.

- Chowdhury & Gopalan (2019) Chowdhury, S. R. and Gopalan, A. On batch Bayesian optimization. arXiv preprint arXiv:1911.01032, 2019.

- Chu et al. (2011) Chu, W., Li, L., Reyzin, L., and Schapire, R. E. Contextual bandits with linear payoff functions. In Proc. AISTATS, pp. 208–214, 2011.

- Contal et al. (2013) Contal, E., Buffoni, D., Robicquet, A., and Vayatis, N. Parallel Gaussian process optimization with upper confidence bound and pure exploration. In Proc. ECML/PKDD, pp. 225–240, 2013.

- Dai et al. (2019) Dai, Z., Yu, H., Low, B. K. H., and Jaillet, P. Bayesian optimization meets Bayesian optimal stopping. In Proc. ICML, pp. 1496–1506, 2019.

- Dai et al. (2020a) Dai, Z., Chen, Y., Low, B. K. H., Jaillet, P., and Ho, T.-H. R2-B2: Recursive reasoning-based Bayesian optimization for no-regret learning in games. In Proc. ICML, pp. 2291–2301, 2020a.

- Dai et al. (2020b) Dai, Z., Low, B. K. H., and Jaillet, P. Federated Bayesian optimization via Thompson sampling. In Proc. NeurIPS, pp. 9687–9699, 2020b.

- Dai et al. (2021) Dai, Z., Low, B. K. H., and Jaillet, P. Differentially private federated Bayesian optimization with distributed exploration. In Proc. NeurIPS, pp. 9125–9139, 2021.

- Dai et al. (2022) Dai, Z., Chen, Y., Yu, H., Low, B. K. H., and Jaillet, P. On provably robust meta-Bayesian optimization. In Proc. UAI, 2022.

- Daxberger & Low (2017) Daxberger, E. A. and Low, B. K. H. Distributed batch Gaussian process optimization. In Proc. ICML, pp. 951–960, 2017.

- Desautels et al. (2014) Desautels, T., Krause, A., and Burdick, J. W. Parallelizing exploration-exploitation tradeoffs in Gaussian process bandit optimization. JMLR, 15:3873–3923, 2014.

- Diemert et al. (2017) Diemert, E., Meynet, J., Galland, P., and Lefortier, D. Attribution modeling increases efficiency of bidding in display advertising. In Proc. KDD Workshop on AdKDD and TargetAd, 2017.

- Frazier (2018) Frazier, P. I. A tutorial on Bayesian optimization. arXiv preprint arXiv:1807.02811, 2018.

- Garivier & Cappé (2011) Garivier, A. and Cappé, O. The KL-UCB algorithm for bounded stochastic bandits and beyond. In Proc. COLT, pp. 359–376, 2011.

- Garnett (2022) Garnett, R. Bayesian Optimization. Cambridge Univ. Press, 2022.

- Gong et al. (2019) Gong, C., Peng, J., and Liu, Q. Quantile Stein variational gradient descent for batch Bayesian optimization. In Proc. ICML, pp. 2347–2356, 2019.

- González et al. (2016) González, J., Dai, Z., Hennig, P., and Lawrence, N. Batch Bayesian optimization via local penalization. In Proc. AISTATS, pp. 648–657, 2016.

- Grover et al. (2018) Grover, A., Markov, T., Attia, P., Jin, N., Perkins, N., Cheong, B., Chen, M., Yang, Z., Harris, S., Chueh, W., and Ermon, S. Best arm identification in multi-armed bandits with delayed feedback. In Proc. AISTATS, pp. 833–842, 2018.

- Hoang et al. (2017) Hoang, Q. M., Hoang, T. N., and Low, K. H. A generalized stochastic variational Bayesian hyperparameter learning framework for sparse spectrum Gaussian process regression. In Proc. AAAI, pp. 2007–2014, 2017.

- Hoang et al. (2015) Hoang, T. N., Hoang, Q. M., and Low, K. H. A unifying framework of anytime sparse Gaussian process regression models with stochastic variational inference for big data. In Proc. ICML, pp. 569–578, 2015.

- Hoang et al. (2016) Hoang, T. N., Hoang, Q. M., and Low, K. H. A distributed variational inference framework for unifying parallel sparse Gaussian process regression models. In Proc. ICML, pp. 382–391, 2016.

- Hoang et al. (2018) Hoang, T. N., Hoang, Q. M., and Low, B. K. H. Decentralized high-dimensional Bayesian optimization with factor graphs. In Proc. AAAI, pp. 3231–3238, 2018.

- Hoang et al. (2019) Hoang, T. N., Hoang, Q. M., Low, K. H., and How, J. P. Collective online learning of Gaussian processes in massive multi-agent systems. In Proc. AAAI, 2019.

- Joulani et al. (2013) Joulani, P., Gyorgy, A., and Szepesvári, C. Online learning under delayed feedback. In Proc. ICML, pp. 1453–1461, 2013.

- Kandasamy et al. (2018) Kandasamy, K., Krishnamurthy, A., Schneider, J., and Póczos, B. Parallelised Bayesian optimisation via Thompson sampling. In Proc. AISTATS, pp. 133–142, 2018.

- Kaufmann et al. (2012) Kaufmann, E., Korda, N., and Munos, R. Thompson sampling: An asymptotically optimal finite-time analysis. In Proc. ALT, pp. 199–213, 2012.

- Kharkovskii et al. (2020a) Kharkovskii, D., Dai, Z., and Low, B. K. H. Private outsourced Bayesian optimization. In Proc. ICML, pp. 5231–5242, 2020a.

- Kharkovskii et al. (2020b) Kharkovskii, D., Ling, C. K., and Low, B. K. H. Nonmyopic Gaussian process optimization with macro-actions. In Proc. AISTATS, pp. 4593–4604, 2020b.

- Krause & Ong (2011) Krause, A. and Ong, C. S. Contextual Gaussian process bandit optimization. In Proc. NeurIPS, pp. 2447–2455, 2011.

- Lattimore & Szepesvári (2020) Lattimore, T. and Szepesvári, C. Bandit Algorithms. Cambridge Univ. Press, 2020.

- Li et al. (2019) Li, B., Chen, T., and Giannakis, G. B. Bandit online learning with unknown delays. In Proc. AISTATS, pp. 993–1002, 2019.

- Li et al. (2010) Li, L., Chu, W., Langford, J., and Schapire, R. E. A contextual-bandit approach to personalized news article recommendation. In Proc. WWW, pp. 661–670, 2010.

- Li et al. (2017) Li, L., Lu, Y., and Zhou, D. Provably optimal algorithms for generalized linear contextual bandits. In Proc. ICML, pp. 2071–2080, 2017.

- Li & Scarlett (2022) Li, Z. and Scarlett, J. Gaussian process bandit optimization with few batches. In Proc. AISTATS, pp. 92–107, 2022.

- Ling et al. (2016) Ling, C. K., Low, B. K. H., and Jaillet, P. Gaussian process planning with Lipschitz continuous reward functions: Towards unifying Bayesian optimization, active learning, and beyond. In Proc. AAAI, pp. 1860–1866, 2016.

- Low et al. (2014) Low, K. H., Xu, N., Chen, J., Lim, K. K., and Özgül, E. B. Generalized online sparse Gaussian processes with application to persistent mobile robot localization. In Proc. ECML/PKDD Nectar Track, pp. 499–503, 2014.

- Low et al. (2015) Low, K. H., Yu, J., Chen, J., and Jaillet, P. Parallel Gaussian process regression for big data: Low-rank representation meets Markov approximation. In Proc. AAAI, pp. 2821–2827, 2015.

- Nguyen et al. (2021a) Nguyen, Q. P., Dai, Z., Low, B. K. H., and Jaillet, P. Optimizing conditional value-at-risk of black-box functions. In Proc. NeurIPS, pp. 4170–4180, 2021a.

- Nguyen et al. (2021b) Nguyen, Q. P., Dai, Z., Low, B. K. H., and Jaillet, P. Value-at-risk optimization with Gaussian processes. In Proc. ICML, pp. 8063–8072, 2021b.

- Nguyen et al. (2021c) Nguyen, Q. P., Low, B. K. H., and Jaillet, P. An information-theoretic framework for unifying active learning problems. In Proc. AAAI, pp. 9126–9134, 2021c.

- Nguyen et al. (2021d) Nguyen, Q. P., Tay, S., Low, B. K. H., and Jaillet, P. Top- ranking Bayesian optimization. In Proc. AAAI, pp. 9135–9143, 2021d.

- Nguyen et al. (2021e) Nguyen, Q. P., Wu, Z., Low, B. K. H., and Jaillet, P. Trusted-maximizers entropy search for efficient Bayesian optimization. In Proc. UAI, pp. 1486–1495, 2021e.

- Ouyang & Low (2018) Ouyang, R. and Low, K. H. Gaussian process decentralized data fusion meets transfer learning in large-scale distributed cooperative perception. In Proc. AAAI, pp. 3876–3883, 2018.

- Rasmussen & Williams (2006) Rasmussen, C. E. and Williams, C. K. I. Gaussian Processes for Machine Learning. MIT Press, 2006.

- Shahriari et al. (2015) Shahriari, B., Swersky, K., Wang, Z., Adams, R. P., and De Freitas, N. Taking the human out of the loop: A review of Bayesian optimization. Proceedings of the IEEE, 104(1):148–175, 2015.

- Shu et al. (2022a) Shu, Y., Cai, S., Dai, Z., Ooi, B. C., and Low, B. K. H. NASI: Label- and data-agnostic neural architecture search at initialization. In Proc. ICLR, 2022a.

- Shu et al. (2022b) Shu, Y., Chen, Y., Dai, Z., and Low, B. K. H. Neural ensemble search via Bayesian sampling. In Proc. UAI, 2022b.

- Sim et al. (2021) Sim, R. H. L., Zhang, Y., Low, B. K. H., and Jaillet, P. Collaborative Bayesian optimization with fair regret. In Proc. ICML, pp. 9691–9701, 2021.

- Slivkins (2019) Slivkins, A. Introduction to multi-armed bandits. Foundations and Trends® in Machine Learning, 12(1–2):1–286, 2019.

- Snoek et al. (2012) Snoek, J., Larochelle, H., and Adams, R. P. Practical Bayesian optimization of machine learning algorithms. In Proc. NeurIPS, 2012.

- Srinivas et al. (2010) Srinivas, N., Krause, A., Kakade, S., and Seeger, M. Gaussian process optimization in the bandit setting: No regret and experimental design. In Proc. ICML, pp. 1015–1022, 2010.

- Takahashi & Suzuki (2021) Takahashi, A. and Suzuki, T. Bayesian optimization design for dose-finding based on toxicity and efficacy outcomes in phase I/II clinical trials. Pharmaceutical Statistics, 20(3):422–439, 2021.

- Tay et al. (2022) Tay, S. S., Foo, C. S., Urano, D., Leong, R. C. X., and Low, B. K. H. Efficient distributionally robust Bayesian optimization with worst-case sensitivity. In Proc. ICML, 2022.

- Thompson (1933) Thompson, W. R. On the likelihood that one unknown probability exceeds another in view of the evidence of two samples. Biometrika, 25(3–4):285–294, 1933.

- Ueno et al. (2016) Ueno, T., Rhone, T. D., Hou, Z., Mizoguchi, T., and Tsuda, K. Combo: An efficient Bayesian optimization library for materials science. Materials Discovery, 4:18–21, 2016.

- Valko et al. (2013) Valko, M., Korda, N., Munos, R., Flaounas, I., and Cristianini, N. Finite-time analysis of kernelised contextual bandits. In Proc. UAI, pp. 654–663, 2013.

- Verma & Hanawal (2020) Verma, A. and Hanawal, M. K. Stochastic network utility maximization with unknown utilities: Multi-armed bandits approach. In Proc. IEEE INFOCOM, pp. 189–198, 2020.

- Verma et al. (2019) Verma, A., Hanawal, M., Rajkumar, A., and Sankaran, R. Censored semi-bandits: A framework for resource allocation with censored feedback. In Proc. NeurIPS, pp. 14499–14509, 2019.

- Verma et al. (2021) Verma, A., Hanawal, M. K., Rajkumar, A., and Sankaran, R. Censored semi-bandits for resource allocation. arXiv preprint arXiv:2104.05781, 2021.

- Vernade et al. (2017) Vernade, C., Cappé, O., and Perchet, V. Stochastic bandit models for delayed conversions. In Proc. UAI, 2017.

- Vernade et al. (2020) Vernade, C., Carpentier, A., Lattimore, T., Zappella, G., Ermis, B., and Brueckner, M. Linear bandits with stochastic delayed feedback. In Proc. ICML, pp. 9712–9721, 2020.

- Wistuba et al. (2015) Wistuba, M., Schilling, N., and Schmidt-Thieme, L. Learning hyperparameter optimization initializations. In Proc. IEEE International Conference on Data Science and Advanced Analytics, 2015.

- Xu et al. (2014) Xu, N., Low, K. H., Chen, J., Lim, K. K., and Özgül, E. B. GP-Localize: Persistent mobile robot localization using online sparse Gaussian process observation model. In Proc. AAAI, pp. 2585–2592, 2014.

- Yu et al. (2019a) Yu, H., Chen, Y., Dai, Z., Low, B. K. H., and Jaillet, P. Implicit posterior variational inference for deep Gaussian processes. In Proc. NeurIPS, pp. 14475–14486, 2019a.

- Yu et al. (2019b) Yu, H., Hoang, T. N., Low, K. H., and Jaillet, P. Stochastic variational inference for Bayesian sparse Gaussian process regression. In Proc. IJCNN, 2019b.

- Yu et al. (2021) Yu, H., Liu, D., Low, K. H., and Jaillet, P. Convolutional normalizing flows for deep Gaussian processes. In Proc. IJCNN, 2021.

- Zhang et al. (2017) Zhang, Y., Hoang, T. N., Low, B. K. H., and Kankanhalli, M. Information-based multi-fidelity Bayesian optimization. In Proc. NIPS Workshop on Bayesian Optimization, 2017.

- Zhang et al. (2019) Zhang, Y., Dai, Z., and Low, B. K. H. Bayesian optimization with binary auxiliary information. In Proc. UAI, pp. 1222–1232, 2019.

- Zhong et al. (2017) Zhong, J., Huang, Y., and Liu, J. Asynchronous parallel empirical variance guided algorithms for the thresholding bandit problem. arXiv preprint arXiv:1704.04567, 2017.

- Zhou et al. (2019) Zhou, Z., Xu, R., and Blanchet, J. Learning in generalized linear contextual bandits with stochastic delays. In Proc. NeurIPS, pp. 5197–5208, 2019.

Appendix

Appendix A Proofs of Theoretical Results

A.1 Proof of 1

We define as the feature map associated with the kernel , i.e., maps any input into a feature in the (potentially infinite-dimensional) feature space of the RKHS associated with . The reproducing property tells us that . Next, we define , , and . Of note, the GP posterior mean (1) can be equivalently expressed as , and the GP posterior variance (1) can be equivalently written as . In this section, we prove 1, which is restated below.

See 1

Proof.

Firstly, we can decompose as follows:

Next, we can plug in this decomposition of to derive:

| (4) |

in which the equality makes use of the expression for the GP posterior mean: , and the reproducing property: . Next, we will separately provide upper bounds on the two terms in Eq. 4.

Firstly, We upper-bound the second term in Eq. 4 as follows:

| (5) |

The second inequality and the equality have made use of the expression for the GP posterior variance: , the third and last inequalities has made use of the fact that because (note that if , then there will be an additional multiplier factor of ), the fourth inequality follows since we have assumed that all observations are bounded: .

Next, define as the standard GP posterior mean without censoring the observations (i.e., replace every by in Eq. 1). Now the first term in Eq. 4 can be upper-bounded as:

| (6) |

In the first equality, we have defined . In the second equality, we have again used the expression for the GP posterior mean and the reproducing property for . The last inequality follows from Theorem 2 of Chowdhury & Gopalan (2017), which holds with the probability of . We have also used the fact that in the last inequality.

To bound the first term in Eq. 6, we firstly define , which is by definition a -sub-Gaussian noise. Also define which is a dimensional vector and define which is a -dimensional matrix. These definitions imply that and . Also define 777The value of is set as (Chowdhury & Gopalan, 2017). However, one can use a Doubling Trick (Besson & Kaufmann, 2018) for unknown . with . Based on these definitions, we can upper-bound by

| (7) |

In the equality in the third line, we have made use of the following matrix equality: , and in the second last equality, we have used the matrix equality of . Next, making use of Theorem 1 of Chowdhury & Gopalan (2017) and following similar steps as those in the proof there, we have that with probability of ,

| (8) |

Next, combining Eq. 7 and Eq. 8 above allows us to upper-bound the first term in Eq. 6:

| (9) |

in which the second inequality has made use of the expression for the GP posterior variance: , and the last inequality follows from Eq. 7 and Eq. 8 (and hence holds with probability of ), as well as the fact that . Now, we can plug in Eq. 9 as an upper bound on the first term in Eq. 6, and then use the resulting Eq. 6 as an upper bound on the first term in Eq. 4. Combining this with the upper bound on the second term of Eq. 4 given by Eq. 5, we have that

in which we have plugged in the definition of in the last equality. This completes the proof. ∎

A.2 Proof of 2

See 2

Proof.

To begin with, we can upper-bound the instantaneous regret as

| (10) |

in which the first and last inequalities have made use of 1, and the second inequality follows because is selected using the UCB policy: (2). Now the cumulative regret can be upper-bounded as

| (11) |

where we have plugged in the expression of in the equality.

Next, we use to denote the information gain from the noisy observations in the first iterations about the objective function . The first term in Eq. 11 can be upper-bounded as:

| (12) |

The first inequality follows since is monotonically increasing in , the inequality in the second line follows because for (substitute ), and the inequality in the fourth line follows from plugging in the expression for the information gain: (Lemma 5.3 of Srinivas et al. (2010)).

Next, we can derive an upper bound on the second term in Eq. 11:

| (13) |

The first inequality has made use of the inequality of , the second inequality follows since which is because is calculated by conditioning on more input locations than , and the last inequality follows easily from some of the intermediate steps in the derivations of Eq. 12.

A.3 Proof of 3

First we define , and . Note that we have replaced the in the definition of (1) by . We use to denote the filtration containing the history of selected inputs and observed outputs up to iteration . To begin with, we define two events and through the following two lemmas.

Lemma 1.

Let . Define as the event that for all . We have that for all .

Lemma 2.

Define as the event that . We have that for any possible filtration .

2 makes use of the concentration of a random variable sampled from a Gaussian distribution, and its proof follows from Lemma 5 of Chowdhury & Gopalan (2017). Next, we define a set of saturated points in every iteration, which intuitively represents those inputs that lead to large regrets in an iteration.

Definition 1.

In iteration , define the set of saturated points as

where and .

Based on this definition, we will later prove that we can get a lower bound on the probability that the selected input in iteration is unsaturated (4). To do that, we first need the following auxiliary lemma.

Lemma 3.

For any filtration , conditioned on the event , we have that ,

| (14) |

where .

Proof.

Adding and subtracting both sides of , we get

| (15) |

in which the second inequality makes use of 1 (note that we have conditioned on the event here), and the last inequality follows from the Gaussian anti-concentration inequality: where . ∎

The next lemma proves a lower bound on the probability that the selected input is unsaturated.

Lemma 4.

For any filtration , conditioned on the event , we have that,

Proof.

To begin with, we have that

| (16) |

This inequality can be justified because the event on the right hand side implies the event on the left hand side. Specifically, according to 1, is always unsaturated because . Therefore, because is selected by , we have that if , then the selected is guaranteed to be unsaturated. Now conditioning on both events and , for all , we have that

| (17) |

in which the first inequality follows from 1 and 2 and the second inequality makes use of 1. Next, separately considering the cases where the event holds or not and making use of Eq. 16 and Eq. 17, we have that

| (18) |

Next, we use the following lemma to derive an upper bound on the expected instantaneous regret.

Lemma 5.

For any filtration , conditioned on the event , we have that,

Proof.

To begin with, define as the unsaturated input with the smallest GP posterior standard deviation:

| (19) |

This definition gives us:

| (20) |

in which the second inequality makes use of 4, as well as the definition of .

Next, conditioned on both events and , we can upper-bound the instantaneous regret as

in which the first inequality follows from 1 and 2 as well as the definition of (1), the second inequality follows because is unsaturated, and the last inequality follows because since . Next, by separately considering the cases where the event holds and otherwise, we are ready to upper-bound the expected instantaneous regret:

| (21) |

in which the second inequality results from Eq. 20, and the last inequality follows because and . ∎

Next, we define the following stochastic process , which we prove is a super-martingale in the subsequent lemma by making use of 5.

Definition 2.

Define , and for all ,

Lemma 6.

is a super-martingale with respect to the filtration .

Proof.

As , we have

When the event holds, the last inequality follows from 5; when is false, and hence the inequality trivially holds. ∎

Lastly, we are ready to prove the upper bound of GP-TS-SDF on the cumulative regret by applying the Azuma-Hoeffding Inequality to the stochastic process defined above.

See 3

Proof.

To begin with, we derive an upper bound on :

| (22) |

where the second inequality follows because , and the last inequality follows since . Now we are ready to apply the Azuma-Hoeffding Inequality to with an error probability of :

| (23) |

in which the second inequality makes use of the fact that is monotonically increasing and that , and the last inequality follows from the proof of 2 (Section A.2) which gives an upper bound on . Note that Eq. 23 holds with probability . Also note that with probability of because the event holds with probability of (1), therefore, the upper bound from Eq. 23 is an upper bound on with probability of .

Lastly, we can simplify the regret upper bound from Eq. 23 into asymptotic notation. Note that , and . To simplify notations, we analyze the asymptotic dependence while ignoring all log factors, and ignore the dependence on constants including , and . This gives us and . As a result, the regret upper bound can be simplified as

It completes the proof. ∎

A.4 Time based Censored Feedback

Let be the amount of time the learner waits for the delayed feedback, be the time of initiating the selection process for the -th query, and be the time of starting the -th query. The censored feedback of -th query before selecting -th query is denoted by , where . Now, the GP posterior mean and covariance functions can be expressed using available information of function queries and their censored feedback as follows:

| (24) |

where , , , and is a regularization parameter to ensures is an invertible matrix. Our current regret analysis will work for this setting as well after appropriately changing the used variables.

Appendix B Theoretical Analysis of Contextual Gaussian Process Bandit

Our regret upper bounds in 2 and 3 are also applicable in the contextual GP bandit setting after some slight modifications to their proofs. Note that in the contextual GP bandit setting (Section 5), the only major difference with the BO setting is that now the objective function depends on both a context vector and an input vector , in which every is generated by the environment and is selected by our GP-UCB-SDF or GP-TS-SDF algorithms. Therefore, the most important modifications to the proofs of 2 and 3 involve replacing the original input space of by the new input space of and accounting for the modified definition of regret in Section 5.

To begin with, with the same definition of , it is easy to verify that 1 can be extended to the contextual GP bandit setting:

Theorem 4 (Confidence Ellipsoid for Contextual GP Bandit).

With probability at least ,

B.1 GP-UCB-SDF for Contextual Gaussian Process Bandit

For the GP-UCB-SDF algorithm in the contextual GP bandit setting, the instantaneous regret can be analyzed in a similar way to Eq. 10 in the proof of 2 (Section A.2):

in which the first and last inequalities both make use of 4, and the second inequality follows from the way in which is selected: . Following this, the subsequent steps in the proof in Section A.2 immediately follow, hence producing the same regret upper bound as 2.

B.2 GP-TS-SDF for Contextual Gaussian Process Bandit

Lemma 7.

Let . Define as the event that for all . We have that for all .

Lemma 8.

Define as the event that . We have that for any possible filtration .

The validity of 7 follows immediately from 4 (after replacing the error probability of by in the definition of , which we have omitted here for simplicity), and the proof of 8 is the same as that of 2. Next, we modify the definition of saturated points from 1:

Definition 3.

In iteration , given a context vector , define the set of saturated points as

where and .

Note that according to this definition, is always unsaturated. The next lemma is a counterpart of 3, and the proofs are the same.

Lemma 9.

For any filtration , conditioned on the event , we have that ,

where .

The next lemma, similar to 4, shows that in contextual GP bandit problems, the probability that the selected is unsaturated can also be lower-bounded.

Lemma 10.

For any filtration , given a context vector , conditioned on the event , we have that,

Proof.

To begin with, we have that

| (25) |

The validity of this equation can be seen following the same analysis of Eq. 16, i.e., the event on the right hand side implies the event on the left hand side. Now conditioning on both events and , for all , we have that

| (26) |

in which the first inequality follows from 7 and 8 and the second inequality makes use of 3.

Lemma 11.

For any filtration , given a context vector , conditioned on the event , we have that,

Proof.

To begin with, given a context vector , define as the unsaturated input with the smallest GP posterior standard deviation:

| (27) |

This definition gives us:

| (28) |

in which the second inequality makes use of 4, as well as the definition of . Next, conditioned on both events and , we can upper-bound the instantaneous regret as

where the first inequality follows from 7 and 8 as well as the definition of (3), the second inequality follows because is unsaturated, and the last inequality follows because since . Next, the proof in Eq. 21 can be immediately applied, hence producing the upper bound on the instantaneous regret in this lemma. ∎

Now the remaining steps in the proof of 3 in Section A.3 immediately follow. Specifically, we can first define a stochastic process in the same way as 2, and then use 6 to show that is super-martingale. Lastly, we can apply the Azuma Hoeffding Inequality to in the same way as 3, which completes the proof.

Appendix C More Experimental Details

In our experiments, since it has been repeatedly observed that the theoretical value of is overly conservative (Srinivas et al., 2010), we set to be a constant () for all methods. In all experiments and for all methods, we use the SE kernel for the GP and optimize the GP hyperparameters by maximizing the marginal likelihood after every 10 iterations. In all experiments where we report the simple regret (e.g., Fig. 1, Fig. 2, etc.), we calculate the simple regret in an iteration using only those function evaluations which have converted (i.e., we ignore all pending observations).

C.1 Synthetic Experiment

In the synthetic experiment, the objective function is sampled from a GP using the SE kernel with a lengthscale of defined on a 1-dimensional domain. The domain is an equally spaced grid within the range with a size . The sampled function is normalized into the range of .

|

|

| (a) | (b) |

C.2 Real-world Experiments

In the SVM hyperparameter tuning experiment, the diabetes diagnosis dataset can be found at https://www.kaggle.com/uciml/pima-indians-diabetes-database. We use of the dataset as the training set and the remaining as the validation set. For every evaluated hyperparameter configuration, we train the SVM using the training set with the particular hyperparameter configuration and then evaluate the learned SVM on the validation set, whose validation accuracy is reported as the observations. We tune the penalty parameter within the range of and the RBF kernel parameter within .

In the experiment on hyperparameter tuning for CNN, we use the MNIST dataset and follow the default training-testing sets partitions given by the PyTorch package. The CNN consists of one convolutional layer (with 8 channels and convolutional kernels of size 3), followed by a max-pooling layer (with a pooling size of 3), and then followed by a fully connected layer (with 8 nodes). We use the ReLU activation function and the Adam optimizer. We tune the batch size (within ), the learning rate (within ) and the learning rate decay (within ).

In the hyperparameter tuning experiment for LR, the breast cancer dataset we have adopted can be found at https://archive.ics.uci.edu/ml/datasets/Breast+Cancer+Wisconsin+(Diagnostic). Similar to the SVM experiment, we use of the dataset as the training set and the remaining as the testing set. Here we tune three hyperparameters of the LR model: the batch size (within ), the learning rate (within ) and learning rate decay (within ).

C.3 Real-world Experiments on Contextual Gaussian Process Bandits

For the multi-task BO experiment, the tabular benchmark on hyperparameter tuning of SVM is introduced by the work of (Wistuba et al., 2015) and can be found at https://github.com/wistuba/TST. The dataset consists of 50 tasks, and each task corresponds to a different classification problem with a different dataset. The domain of hyperparameter configurations in this benchmark (which is shared by all 50 tasks) consists of discrete values for six hyperparameters: 3 binary parameters indicating (via one-hot encoding) whether the linear, polynomial, or RBF kernel is used, and the penalty parameter, the degree for the polynomial kernel, and the RBF kernel parameter. The size of the domain is 288. As a result, for each task, the validation accuracy for each one of the 288 hyperparameter configurations is recorded as the observation. As we have mentioned in the main text (Section 6.2), each of the 50 tasks is associated with some meta-features, and here we use the first six meta-features as the contexts. Each of the 50 tasks is associated with a 6-dimensional context vector , which is used to characterize this particular task. For the non-stationary BO task, the dataset and the other experimental settings (e.g., the tuned hyperparameters, the range of the hyperparameters, etc.) are the same as the SVM hyperparameter tuning experiment in Section 6.2. Refer to Section C.2 for more details.