Bayesian Elastic Net based on Empirical Likelihood

Abstract

We propose a Bayesian elastic net that uses empirical likelihood and develop an efficient tuning of Hamiltonian Monte Carlo for posterior sampling. The proposed model relaxes the assumptions on the identity of the error distribution, performs well when the variables are highly correlated, and enables more straightforward inference by providing posterior distributions of the regression coefficients. The Hamiltonian Monte Carlo method implemented in Bayesian empirical likelihood overcomes the challenges that the posterior distribution lacks a closed analytic form and its domain is nonconvex. We develop the leapfrog parameter tuning algorithm for Bayesian empirical likelihood. We also show that the posterior distributions of the regression coefficients are asymptotically normal. Simulation studies and real data analysis demonstrate the advantages of the proposed method in prediction accuracy.

keywords:

Bayesian empirical likelihood; Hamiltonian Monte Carlo; Variable selection1 Introduction

Regularization methods have been introduced to a linear model to improve the prediction accuracy and interpretability by introducing a penalty term to the least squares criterion; lasso that implements penalty [1], ridge that adds penalty [2], elastic net (EN) that introduces a combination of and penalties [3], and non-convex penalties [4, 5]. It is known that EN shows better performances compared to the lasso while preserving a sparse variable selection property [3, 6]. EN also identifies influential variables better than the lasso and has lower false positives than ridge regression [7].

The penalized regression approaches have an intuitive Bayesian counterpart stemming from introducing penalty terms in the form of priors. For instance, Bayesian lasso (BL) [8] uses a double-exponential prior, and Bayesian elastic net (BEN) [9] uses an informative prior that is a compromise between normal and Laplace priors. The non-convex penalties also have been implemented including spike-and-slab prior [10], horseshoe prior [11], and hyperlasso [12]. Motivated by Li and Lin [9], various BEN studies have been conducted including applications to gene-expression data [13], empirical BEN [14], and regressions [15, 16, 17].

The Bayesian approaches on regularization regression have several advantages. First, the Bayesian methods offer a natural interpretation of parameter uncertainty. For example, the standard errors of our parameter of interest can be estimated as the posterior standard deviations because the Bayesian method provides the entire posterior distribution. Second, the Bayesian methods provide valid standard errors compared to the variances of the non-Bayesian penalized regressions estimated by the sandwich or bootstrap [18]. Third, the penalty parameters in the Bayesian methods can be estimated simultaneously with model parameters. When the non-Bayesian models have multiple penalty parameters, such as EN, multi-stage cross-validations are used to estimate the penalty terms. However, the sequential estimation of penalties may incur the over-shrinkage of parameters that leads to larger bias [9]. Lastly, the Bayesian penalized regression approaches show similar or better performances compared to the corresponding non-Bayesian approaches [19, 18, 9].

Owen [20, 21] introduces empirical likelihood (EL), a nonparametric likelihood method to make inference for statistical functionals. EL does not require an assumption that data follow a certain distribution while maintaining similar properties of a conventional likelihood method such as Wilk’s theorem and Bartlett correctability [21, 22, 23]. The EL approach has been applied to complex inferences, including general estimating equation [24], density estimation [25], area under the receiver operating characteristic curve [26], and data imputation [27]. We refer to Chen and Keilegom [28] for a review of EL methods on regression.

EL has extended to high dimensional data. Hjort et al. [29] and Chen et al. [30] show that the asymptotic normality of the EL ratio holds when the dimension of data grows. Tang and Leng [31] and Leng and Tang [32] propose the penalized EL and show that it has the oracle property [4, 33]. Lahiri and Mukhopadhyay [34] propose the penalized EL for estimating population means when the dimension of data is larger than the number of observations. Chang et al. [35] also suggest implementing two penalty functions to penalized models and Lagrange multipliers.

Also, the EL-based Bayesian methods have been developed. Lazar [36] replaces the likelihood function in Bayesian settings by EL and shows the validity of the resulting posterior distribution. In addition, Grendar and Judge [37] show that Bayesian empirical likelihood (BEL) is consistent under the misspecification of the model. BEL has been studied in various areas; the higher-order asymptotic and coverage properties of the posterior distribution for the population mean [38, 39], the survey sampling [40], small area estimation [41], quantile regression [42], Bayesian computation [43], sampling method [44], and lasso and ridge regressions [45].

In this paper, we suggest a Bayesian approach for EN where the likelihood function is replaced by the profile EL of the linear model. We place a special prior distribution on the parameter of interest, which combines the and penalties leading to the EN approach. The proposed approach takes advantage of the interpretability and robustness of the results achieved by the Bayesian perspective and EL method, respectively. We implement the HMC sampling for BEL suggested by Chaudhuri et al. [44] and propose the leapfrog step size tuning algorithm based on the bisection method. The proposed algorithm enables more efficient sampling than hand-tuning or grid-searching HMC parameters. In addition, our method extends the BEL method for penalized regressions of Bedoui and Lazar [45] by proposing efficient HMC sampling rather than utilizing the tailored Metropolis-Hasting of Chib and Greenberg [46].

The outline of the remaining sections is as follows. In Section 2, we briefly describe the Bayesian linear model based on EL. In Section 3, we propose BEN based on EL and discuss the HMC sampling implementations, along with variable selection procedures. In Section 4, we compare the proposed method with other penalized regression methods using various simulation studies. The air pollution data application is presented in Section 5. Section 6 contains conclusion and future work.

2 Linear model based on empirical likelihood

We first outline how EL is implemented in a linear model. Let be a collection of independent covariate vectors of size . We consider the linear model

where the error is independent and identically distributed and follows an unknown distribution with mean zero and variance . The empirical likelihood function for is defined as

| (1) |

and is the weights on the data points. The coefficients can be estimated by maximizing equation (1) using the Lagrange multipliers. The profile empirical log-likelihood becomes

| (2) |

where solves the equation

Here, numerical approaches such as the Newton-Raphson method can be used to find the Lagrange multipliers [47].

The regularization method can be implemented to EL for linear models in different ways. First, the penalty terms can be introduced in the model through the priors , where is a penalty function. Second, Tang and Leng [31] introduce the penalty terms in EL . In our study, the and penalties are introduced in the form of priors.

3 Bayesian elastic net based on empirical likelihood

EN uses both the and penalties for [3]. The EN estimator is defined as the minimizer of

| (3) |

where

Here, and are and penalty parameters that control the amount of shrinkage. Without loss of generality, we assume that

Penalized linear regression models have their Bayesian counterpart models. The Bayesian penalized models introduce priors for where the hyperparameters are functions of the penalty terms. For example, from the form of the EN penalty term in (3), the EN regression parameters can be presented by the following prior

Li and Lin [9] propose the Bayesian counterpart of EN by placing normal and Laplace priors to and penalties, respectively. The shrinkage parameters, and , are introduced into the model in the form of hyperparameters.

The BEL approach for linear models replaces the likelihood with EL and places parametric priors. Then, the posterior density of EN under the BEL approach becomes

The hierarchical representation of the full model becomes

| (4) |

where is the profile empirical likelihood for the linear model in (2), IG is an inverse gamma whose probability density function is for , and and are omitted in for simplicity. We condition on to guarantee the unimodality of the posterior distributions [8, 9]. One can also choose a noninformative prior on of the form of .

The prior of given defined in (4) is a multiplication of normal and Laplace densities. Andrews and Mallows [48] show that the Laplace distribution can be represented as a scale mixture of normals with an exponential mixing density

Li and Lin [9] also showed that the prior defined in (4) can be presented as a scale mixture of normals with a truncated gamma mixing density. The hierarchical scheme in (4) becomes as follows

| (5) |

where follows the truncated gamma distribution from 1 to with the shape parameter and the rate parameter . The full joint posterior density is as follows

| (6) |

To sample from the posterior , we draw from the following conditional distributions

-

1.

Sample from

-

2.

Sampling is equivalent to sampling from

-

3.

Sample from

Here, is the generalized inverse Gaussian distribution with probability density function for , where is the modified Bessel function of the third kind with order . We use the rejection algorithm proposed by Hörmann and Leydold [49] to generate samples from the GIG distribution.

The posterior estimates of BEN based on EL estimates are consistent. As the sample size increases, the estimates converge to the true value of the parameter being estimated. The consistency of the estimators under the BEL framework has been proved for the quantile regression [42] and the penalized regression [45], and it can be easily extended to the proposed estimates.

3.1 Hamiltonian Monte Carlo sampling for Bayesian empirical likelihood

Implementing traditional sampling methods like Gibbs sampler and Metropolis-Hastings (MH) under the Bayesian empirical likelihood framework is a daunting task. First, the conditional distribution of has a non-closed form, which makes the implementation of the Gibbs sampler impossible. Second, implementing MH is suitable to sample from a distribution that lacks a closed form, but it may fail to achieve convergence. In addition, the nature of the posterior density support complicates the process of finding an efficient proposal density. The support of the posterior EL is nonconvex with many local optima where its surface is rigid. In these cases, the chain can be trapped in a region and not reach the global optimum. Therefore, it is challenging to find the proper proposal density that provides a high acceptance right with the appropriate location and dispersion parameters.

We use HMC [50] to sample , inspired by Chaudhuri et al. [44]. Let be the current position vector and be the momentum vector where is the dispersion matrix. The Hamiltonian is defined by the joint distribution of and that can be represented as the the sum of potential energy and kinetic energy ,

| (7) | |||||

The partial derivatives of Hamiltonian determine how the transits to a new state,

The Hamiltonian dynamics has reversible, invariant, and volume-preserving properties that enable MCMC updates [50]. The Hamiltonian equations are computed by discretizing the small time interval . The leapfrog integrator is the most widely-used method to implement HMC. First, given the current time and the position , the momentum is independently drawn from . Then the position and the momentum at time is updated as follows:

The proposed state is obtained after repeating the above updates times. Here, and are also called the step size and leapfrog steps, respectively. The proposed state is accepted with the probability

HMC is known to be more efficient than random-walk-based MH in sampling from the posterior for Bayesian EL. First, Chaudhuri et al. [44] show that once the parameters are inside the support, the HMC chain does not go outside and return to the interior of the support if they reach its boundary under certain assumptions. This is due to the property of , whose gradient diverges at the boundary. Second, HMC converges quickly towards the target distribution and enables faster convergence. In HMC, distances between successively generated points are large. Thus, fewer iterations are required to obtain a representative sample.

The performance of an HMC depends on its parameters, and it is known that tuning them is important [50, 51]. However, the optimal HMC parameter tuning procedure for BEL is not discussed in Chaudhuri et al. [44]. It is generally suggested to set a sufficiently small leapfrog step size to ensure that the discretization is good and use sufficiently large leapfrog steps so that the overall trajectory length is not too short. However, setting a large could be inefficient for HMC used in the BEL framework. This is because each leapfrog step requires computationally expensive EL computation. Therefore, we fix the leapfrog steps to and find the optimal step size in our study.

We develop the leapfrog step size tuning algorithm for BEL based on the bisection method [52]. The optimal step size will achieve the known theoretical optimal acceptance rate of 65.1% [53]. For a fixed , a larger step size tends to lower an acceptance rate and vice versa. For given lower and upper tolerance levels for acceptance rates, and , respectively, the proposed algorithm searches for that results in a high acceptance rate, greater than , while increasing it by . If the acceptance rate is within the target range , then is selected. On the other hand, if the step size makes the acceptance rate , the method bisects the interval . The bisection procedure is repeated until the acceptance rate reaches the target range. Compared to the grid search algorithm, the proposed algorithm converges to the target acceptance rate range linearly and enables finer and computationally efficient step size tuning. The detailed bisection algorithm is given in Algorithm 1. One can also consider implementing the No-U-Turn sampler (NUTS) [51] that automatically selects the leapfrog steps and step size. We discuss this in Section 6.

We run a single chain of length 2,000 with 1,000 burn-ins for quicker step size tuning. For the estimation of , we simulate four chains of length 2,000 with 1,000 burn-ins, respectively.

3.2 Choosing Bayesian elastic net penalty parameters

The proposed EL based approach needs to specify the penalty parameters . We consider two approaches: empirical Bayes (EB) and full Bayes (FB).

First, the EB method estimates the penalty parameter from data and plugs the estimated parameters into the model. Park and Casella [8] use the EB method to estimate the shrinkage parameter in BL. It treats the parameters as missing data and uses the Monte Carlo expectation-maximization (EM) algorithm approach proposed by Casella [55]. The EM algorithm is iteratively used to approximate the parameters of interest by substituting Monte Carlo estimates for any expected values that cannot be computed explicitly. For our proposed model, and are treated as missing data whereas and are treated as fixed parameters.

We use a building block of HMC and the Gibbs sampler to sample , and . The negative log empirical posterior density of in (7) is defined as

where and its gradient is defined as

The hierarchical model presented in (6) yields the complete-data log-likelihood

At the th step of the Monte Carlo EM algorithm, the conditional log-likelihood on and is

Then we find and that maximize . The maximization procedure can be obtained by using the partial derivatives of

Here, the expectations in and are evaluated by using the means of sampled , , and .

Second, the FB approach treats as unknown model parameters and specify a prior for them. Park and Casella [8] suggest to use the gamma prior for the squared value of penalty for BL. Similarly, we assume the prior on . Also, we place a prior on , which is a conjugate prior [9]. The full joint posterior density becomes

The full conditional distributions for the penalty parameters are

An alternative prior for to consider is . Then, the full conditional distribution becomes

The gamma distribution is a special case of the GIG distribution family with . Thus, placing a gamma prior on results on a posterior distribution that follows a GIG distribution.

3.3 Variable selection methods

The Bayesian regularization approaches require additional variable selection methods. In most non-Bayesian penalized methods, the estimated coefficients shrink to zero. On the other hand, the outputs of Bayesian models are the posterior distributions, which are not necessarily equal to zero. We present two variable selection methods: Bayesian credible interval and scaled neighborhood criteria.

First, the Bayesian credible interval can be used to select variables whose credible regions do not include zero for a given probability level [56]. Lazar [36] proves that under standard regularity conditions and as , the posterior EL distribution converges to the normal distribution. Bedoui and Lazar [45] show that the asymptotic posterior distribution of the BEL version of ridge and lasso regressions follows the normal distribution. Lemma 3.1 shows that the posterior EL distribution of for the elastic net model converges to a normal distribution as . However, we want to note that the Bayesian credible interval may include zero in the presence of strong correlation among explanatory variables because of bimodality of the posterior of the regression parameters [57].

Lemma 3.1.

Assume standard regularity conditions, and . The posterior EL distribution of for the Bayesian elastic net converges to the normal distribution with mean and covariance as , where

is the prior mode, is the profile maximum likelihood estimate of and

Also, as .

Proof.

Under the standard regularity conditions, the prior term is dominated by the likelihood function, and the log posterior distribution of can be expressed using up to quadratic order terms [58],

Lazar [36] shows that the posterior distribution of converges to normal distribution with mean and variance as . Then, showing is symmetric and positive semi-definite completes the proof.

First, the first term of , the diagonal matrix , is the minus second derivative of the log prior distribution of in (5) evaluated at . Also, the second term of , , can be approximated as Fisher information matrix [58], which is a positive semi-definite matrix by definition. Therefore, is positive semi-definite because both and positive semi-definite. Thus, as . ∎

Second, the scaled neighborhood criterion uses the posterior probability to select variables [9]. The variable is excluded when the posterior probability is greater than for a given threshold .

4 Simulation studies

We conduct Monte Carlo simulations to compare the performance of the proposed Bayesian elastic net based on the empirical likelihood (BEN-EL) model with four different models: BEN, BL, EN, and lasso applied to the least absolute deviation regression (LADL). LADL is known to be robust to heavy tail errors and outliers [59]. We generate data from the linear model

Note that BEN-EL and LADL assume that the mean and the median of errors are zero, respectively. On the other hand, BEN, BL, and EN assume normal errors.

4.1 Prediction performance

4.1.1 Simulation 1

In Simulation 1, we set . The explanatory variables ’s are generated from the standard normal distribution with pairwise correlations for all . We generate training data sets with three different sizes (). For each setting, we generate test data sets of size . The error is generated from three different distributions: 1) normal distribution, , 2) non-normal distribution, or with probability of 0.5, respectively, and 3) skew Student distribution proposed by Fernández and Steel [60], with mean 0 and standard deviation 3. We use the R package fGarch [61] to sample from the skew Student distribution. The sampling procedures are repeated 100 times for each simulation setting.

The shrinkage parameters and are estimated using the EB method for BEN-EL, BEN, and BL methods. For BEN-EL, the hyperparameters and are used. For the bisection step size tuning, the initial step size and tolerances are used. For BEN and BL, we use MCMC with 2,000 burn-ins and 12,000 iterations. The optimal value for in EN is selected from .

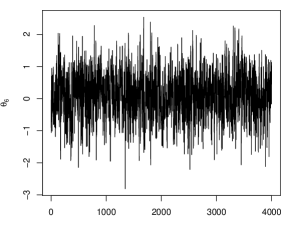

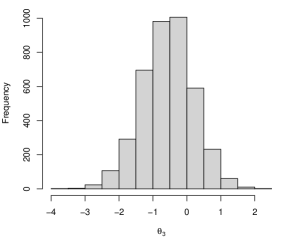

In all cases, the simulated HMC chains meet the convergence criteria . The trace plots and histograms of estimated by BEN-EL for one dataset of skew Student errors and are given in Figures 3 and 4 in Appendix A.

The median of mean squared prediction errors (MMSPE) along with the standard errors (SE) of BEN-EL, BEN, BL and EN methods for Simulation 1. The smallest MMSPEs are marked in bold. Error MMSPE (SE) BEN-EL BEN BL EN LADL 10.24 (0.18) 11.33 (0.25) 10.00 (0.16) 10.43 (0.15) 11.07 (0.18) 9.61 (0.10) 11.65 (0.19) 9.55 (0.10) 9.73 (0.10) 9.96 (0.15) 9.13 (0.07) 11.65 (0.10) 9.10 (0.08) 9.17 (0.07) 9.36 (0.12) 10.80 (0.12) 12.33 (0.25) 10.91 (0.12) 11.20 (0.17) 14.37 (0.29) or 10.49 (0.11) 12.36 (0.21) 10.49 (0.11) 10.68 (0.09) 13.09 (0.18) 10.29 (0.05) 12.66 (0.13) 10.27 (0.06) 10.34 (0.07) 12.52 (0.10) Skew 90.20 (0.99) 91.38 (1.30) 91.83 (1.22) 93.93 (1.30) 92.29 (0.76) Student 86.31 (1.26) 86.77 (1.07) 86.09 (1.12) 88.50 (1.48) 88.15 (1.08) 83.56 (0.80) 83.95 (0.68) 83.66 (0.76) 84.51 (0.89) 84.81 (1.05)

BEN-EL outperforms the other methods when the number of observations is small () and the normality assumption in errors is violated. Table 4.1.1 presents the median of mean squared prediction error (MMSPE) and the standard error (SE) results based on Simulation 1. For Bayesian methods, the explanatory variables are selected based on the scaled neighborhood criterion with in computing MMSPE. The SE is computed as a standard deviation of 1,000 bootstrap samples of mean squared prediction error values.

The empirical frequency (%) that the regression coefficient is dropped for Simulation 1 with errors from or . For BEN-EL, BEN, and BL, the scaled neighborhood criterion with is used. Empirical frequency of exclusion BEN-EL 0 0 58 69 0 65 75 64 BEN 0 0 34 39 0 53 80 76 BL 0 3 69 76 0 77 82 75 LADL 0 18 70 76 9 86 90 82 BEN-EL 0 0 70 61 0 59 72 69 BEN 0 0 13 15 0 34 73 74 BL 0 0 80 73 0 75 82 77 LADL 0 12 76 73 5 81 88 84 BEN-EL 0 0 56 66 0 68 74 62 BEN 0 0 2 3 0 17 68 82 BL 0 0 69 69 0 75 82 75 LADL 0 6 77 71 0 81 88 84

Table 4.1.1 reports the number of exclusion of each variable for 100 simulated data sets for BEN-EL, BEN, BL, and LADL. The variable selection results for EN are not included because all the estimated coefficients are nonzero. LADL performs the best in dropping variables with zero coefficients (, , , , and ) but some nonzero coefficients (, , and ) are also excluded. Among the Bayesian methods, BL best identifies the zero coefficients. However, the MMSPEs for BL are larger than BEN-EL for . This implies that BEN-EL makes more accurate estimates even though their zero variables exclusion rate is worse than BL.

4.1.2 Simulation 2

Simulation 2 dataset has a more complex correlation structure than Simulation 1. First, we generate , , and independently from . Then, we add normal errors so that for , for , for , and for , where for . As a result, the variables in the first three groups are highly correlated within each group. We set the true parameters of the linear model as

We generate error using three different distributions: 1) normal distribution, , 2) Student distribution with degrees of freedom 3, , and 3) skew Student distribution, . The normal and distributions are symmetric and have similar variances, but the distribution has a thicker tail than the normal distribution. On the other hand, the skew Student distribution is right-skewed. We use training data sets with two different sizes () and fix the size of testing data sets to . We generate 100 data sets for each simulation setting. For the other simulation parameters, we use the same setting used in Simulation 1.

For BEN-EL, the simulated HMC chains converge with in all simulation settings. We also present the trace plots and histograms of four nonzero coefficients and four zero elements estimated by BEN-EL for one dataset of the skew Student errors and in Figures 5 and 6 in Appendix A.

The median of mean squared prediction errors (MMSPE) along with the standard errors (SE) of BEN-EL, BEN, BL, EN, and LADL methods for Simulation 2. The smallest MMSPEs are marked in bold. MMSPE (SE) BEN-EL BEN BL EN LADL Normal 281.98 (3.70) 291.44 (4.50) 295.28 (4.16) 309.52 (5.36) 332.72 (3.42) 255.06 (2.58) 254.12 (3.02) 258.17 (3.35) 263.62 (3.42) 323.32 (3.73) Student 334.10 (10.75) 344.72 (7.61) 345.03 (7.79) 380.51 (12.33) 374.70 (5.78) 313.37 (6.77) 302.26 (6.46) 307.12 (6.62) 311.59 (6.13) 351.81 (7.51) Skew 289.95 (4.03) 297.96 (4.43) 301.23 (4.64) 318.13 (8.88) 344.05 (2.83) Student 253.97 (3.06) 255.24 (2.74) 256.02 (2.97) 262.62 (3.52) 310.68 (4.33)

The simulation results suggest that BEN-EL outperforms when the sample size is small or the error follows the asymmetric distribution. Table 4.1.2 reports the MMSPE and SE results of Simulation 2. In all cases, the Bayesian methods (BEN-EL, BEN, and BL) perform better than the non-Bayesian methods (EN and LADL), and the Bayesian EN methods (BEN-EL and BEN) perform better than the BL. This corresponds to the findings that the EN-based methods perform better than the lasso-based methods under the complex correlation structure [3]. For the symmetric error distributions (normal and Student ), BEN-EL provides the smallest MMSPE and SE values compared to BEN when the sample size is small. However, BEN-EL performs the best under the skewed error distribution regardless of the sample size.

Table 4.1.2 presents the variable selection results. For the Bayesian methods, variables are selected using the scaled neighborhood criterion with . The variable selection results for EN are not reported because its estimated coefficients are nonzero. BEN-EL tends to keep more variables than BEN and BL for both nonzero and zero coefficients. BEN and BL perform poorly in keeping nonzero variables when the sample size is small, but they improve as the sample size increases. LADL shows the highest exclusion rate for zero coefficients but removes many nonzero coefficients.

The empirical frequency (%) that the 15 nonzero and 15 zero regression coefficients are dropped for Simulation 2. For Bayesian methods, the scaled neighborhood criterion with is used. Empirical frequency of exclusion BEN-EL BEN BL LADL Nonzero Zero Nonzero Zero Nonzero Zero Nonzero Zero Normal 22.26 63.33 34.60 74.07 42.33 87.53 71.20 92.93 5.47 68.47 5.60 69.27 9.87 82.20 48.87 94.40 Student 23.73 59.13 41.73 78.40 48.93 88.47 64.67 94.40 10.53 58.80 12.13 70.07 16.60 83.20 35.93 93.87 Skew 21.53 61.07 32.53 76.00 38.67 87.53 72.07 94.00 Student 5.40 65.47 5.67 66.53 8.40 81.07 45.67 92.73

4.2 Sensitivity of Hyperparameters

We examine the sensitivity of the hyperparameters of the penalty parameters and in BEN-EL for the FB approach. Li and Lin [9] suggest that the BEN posterior samples may be heavily dependent on the hyperparameters on the penalty parameters based on their experience. Still, it has not been investigated for BEN-EL. Therefore, we investigate how the priors affect posterior inferences by changing hyperparameters: 1) of the gamma prior for and 2) of the GIG prior for . In both simulations, we use one data set from Simulation 1 with and normal errors . For the gamma prior, 1,600 combinations of the shape and rate parameters and are used. For the GIG prior, we fix and use 1,640 combinations of and . The shape and rate parameters and for the gamma prior and and for the GIG prior affect in the opposite directions for nonzero and zero coefficients. For example, as and increase and and decrease, the estimated nonzero coefficients will decrease, whereas the estimated zero coefficients will increase.

The step size is estimated by the proposed bisection algorithm using the same input parameters used in Simulation 1. We run four HMC chains of 2,000 iterations with 1,000 burn-ins. In all cases, the split-’s are less than 1.01 and the HMC chains converge.

The estimated coefficients are affected by choice of the hyperparameters, but the variability is not large enough to interrupt overall inference. Figure 1 shows heatmaps of estimated coefficients of and according to different combinations of the hyperparameters. The estimated coefficients are the median of the posteriors of and whose true values are 3 and 0, respectively. We see the estimated coefficients vary according to the hyperparameters, but they are close to the true coefficients. For example, the estimated differs only up to 0.15 and 0.4 for the various combinations of the hyperparameters of and , respectively.

5 Air pollution data analysis

We use the air pollution to mortality data [62]. The data set includes the mortality rate () and 15 explanatory variables of weather, socioeconomics, and air pollution in 60 metropolitan areas of the United States from 1959 to 1961. See Table A in Appendix A for a description of the variables.

The air pollution set has a complicated correlation structure. The correlation plot is presented in Figure 2. For example, the nitroc oxide level is highly correlated with other air pollution variable hydrocarbon pollution , but also correlated with the weather variable and population variable .

We randomly split the data into a training set with 30 observations and a test set of 30 observations. The five models, BEN-EL, BEN, BL, EN, and LADL, are conducted using the training set, and the prediction errors are obtained using the test set. For Bayesian methods, the EB approach is used to select the penalty parameters, and the Bayesian credible interval with the probability level is used to select the variables. We repeat the procedure 100 times.

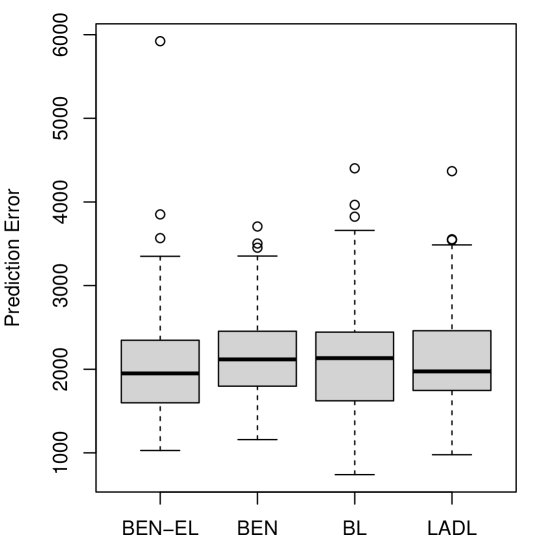

Table 5 reports the mean prediction error and standard deviation of the five models. BEN-EL yields the smallest mean prediction error, but its standard deviation is the second largest. The relatively large standard deviation of BEN-EL is due to one large prediction error. BEN-EL has the smallest median, mean, the first quartile (Q1), and the third quartile (Q3) than the other methods. Figure 7 in Appendix A shows boxplots of prediction errors of BEN-EL, BEN, BL, LADL. The results imply that the proposed method outperforms the other methods when data have a complex correlation structure and a small number of observations.

The mean prediction errors along with the standard errors (SE) of BEN-EL, BEN, BL and EN methods for air pollution data analysis. The smallest mean prediction error is marked in bold. Mean Prediction Error (SE) BEN-EL BEN BL EN LADL 1950 (679) 2118 (518) 2133 (665) 3044 (2903) 1974 (620)

6 Conclusion

We propose a new Bayesian approach for EN based on EL. Accordingly, the profile EL for the linear model replaces the ordinary likelihood function in the Bayesian setting. We use the HMC algorithm because the resulting posterior EL distribution lacks a closed form, and the implementation of even standard MCMC methods is challenging. A new HMC parameter tuning algorithm based on the bisection method is proposed for BEL. Simulation studies and the air pollution data application show that our approach outperforms the other methods when the sample size is small, data are correlated, and error distributions are misspecified.

BEL methods share the same limitations of EL in the sense that the number of variables cannot increase as the sample size decreases. The reason is that the estimating equations are included in the convex hull to maximize the profile EL ratio. However, is not of full rank when and hence it is non-invertible. To address this shortcoming, several approaches have been proposed, such as penalized EL [34] and double penalization [35]. Thus, it might be worth a formal investigation whether the proposed method could be extended for case for future work.

Another interesting topic is implementing the NUTS algorithm of [51] to the BEL framework. NUTS automatically tunes leapfrog steps and step sizes and has been used as a default sampler in popular MCMC software such as Stan [63] and PyMC3 [64]. However, the proper introduction of NUTS into BEL has not been studied. We observe that HMC chains often diverge when the NUTS algorithm is used for BEN-EL. Also, BEL is not supported by most MCMC libraries because EL cannot be used as a likelihood function. Developing a BEL computational pipeline compatible with Stan or PyMC3 for practitioners will be of further interest.

Disclosure statement

No potential conflict of interest was reported by the authors.

Data availability statement

All data used in simulation studies are generated randomly and the air pollution data are obtained from McDonald et al. [62]. The R code used to generate, import, and analyze the data used in this paper is publicly available at the URL: https://github.com/chulmoon/BEN-EL.

References

- [1] Tibshirani R. Regression shrinkage and selection via the lasso. J R Stat Soc B. 1996;58(1):267–288.

- [2] Tikhonov AN, Nikolayevich N. On the stability of inverse problems. Dokl Akad Nauk SSSR. 1943;39(5):195–198.

- [3] Zou H, Hastie T. Regularization and variable selection via the elastic net. J R Stat Soc B. 2005;67(2):301–320.

- [4] Fan J, Li R. Variable selection via nonconcave penalized likelihood and its oracle properties. J Am Stat Assoc. 2001;96(456):1348–1360.

- [5] Zhang CH. Nearly unbiased variable selection under minimax concave penalty. Ann Stat. 2010;38(2):894–942.

- [6] Bühlmann P, Van De Geer S. Statistics for high-dimensional data: methods, theory and applications. Springer Science & Business Media; 2011.

- [7] Tutz G, Ulbricht J. Penalized regression with correlation-based penalty. Stat Comput. 2009;19(3):239–253.

- [8] Park T, Casella G. The bayesian lasso. J Am Stat Assoc. 2008;103(482):681–686.

- [9] Li Q, Lin N. The bayesian elastic net. Bayesian Anal. 2010;5(1):151–170.

- [10] Ishwaran H, Rao JS. Spike and slab variable selection: Frequentist and bayesian strategies. Ann Stat. 2005;33(2):730–773.

- [11] Carvalho CM, Polson NG, Scott JG. The horseshoe estimator for sparse signals. Biometrika. 2010;97(2):465–480.

- [12] Griffin JE, Brown PJ. Bayesian hyper-lassos with non-convex penalization. Aust N Z J Stat. 2011;53(4):423–442.

- [13] Chen M, Carlson D, Zaas A, et al. Detection of viruses via statistical gene expression analysis. IEEE Trans Biomed Eng. 2010;58(3):468–479.

- [14] Huang A, Xu S, Cai X. Empirical bayesian elastic net for multiple quantitative trait locus mapping. Heredity. 2015;114(1):107–115.

- [15] Alhamzawi R. Bayesian elastic net tobit quantile regression. Commun Stat Simul Comput. 2016;45(7):2409–2427.

- [16] Alshaybawee T, Midi H, Alhamzawi R. Bayesian elastic net single index quantile regression. J Appl Stat. 2017;44(5):853–871.

- [17] Alhamzawi R, Ali HTM. The bayesian elastic net regression. Commun Stat Simul Comput. 2018;47(4):1168–1178.

- [18] Kyung M, Gill J, Ghosh M, et al. Penalized regression, standard errors, and bayesian lassos. Bayesian Anal. 2010;5(2):369–411.

- [19] Hans C. Bayesian lasso regression. Biometrika. 2009;96(4):835–845.

- [20] Owen AB. Empirical likelihood ratio confidence intervals for a single functional. Biometrika. 1988;75(2):237–249.

- [21] Owen AB. Empirical likelihood ratio confidence regions. Ann Stat. 1990;18(1):90–120.

- [22] DiCiccio T, Hall P, Romano J. Empirical likelihood is bartlett-correctable. Ann Stat. 1991;19(2):1053–1061.

- [23] Chen SX, Cui H. On bartlett correction of empirical likelihood in the presence of nuisance parameters. Biometrika. 2006;93(1):215–220.

- [24] Qin J, Lawless J. Empirical likelihood and general estimating equations. Ann Stat. 1994;22(1):300–325.

- [25] Hall P, Owen AB. Empirical likelihood confidence bands in density estimation. J Comput Graph Stat. 1993;2(3):273–289.

- [26] Qin G, Zhou XH. Empirical likelihood inference for the area under the roc curve. Biometrics. 2006;62:613–622.

- [27] Wang D, Chen SX. Empirical likelihood for estimating equations with missing values. Ann Stat. 2009;37(1):490–517.

- [28] Chen SX, Keilegom IV. A review on empirical likelihood methods for regression. TEST. 2009;18:415–447.

- [29] Hjort NL, McKeague IW, Van Keilegom I. Extending the scope of empirical likelihood. Ann Stat. 2009;37(3):1079–1111.

- [30] Chen SX, Peng L, Qin YL. Effects of data dimension on empirical likelihood. Biometrika. 2009;96(3):711–722.

- [31] Tang CY, Leng C. Penalized high-dimensional empirical likelihood. Biometrika. 2010;97(4):905–920.

- [32] Leng C, Tang CY. Penalized empirical likelihood and growing dimensional general estimating equations. Biometrika. 2012;99(3):703–716.

- [33] Fan J, Peng H. Nonconcave penalized likelihood with a diverging number of parameters. Ann Stat. 2004;32(3):928–961.

- [34] Lahiri SN, Mukhopadhyay S. A penalized empirical likelihood method in high dimensions. Ann Stat. 2012;40(5):2511–2540.

- [35] Chang J, Tang CY, Wu TT. A new scope of penalized empirical likelihood with high-dimensional estimating equations. Ann Stat. 2018;46(6B):3185–3216.

- [36] Lazar NA. Bayesian empirical likelihood. Biometrika. 2003;90(2):319–326.

- [37] Grendar M, Judge G. Asymptotic equivalence of empirical likelihood and bayesian map. Ann Stat. 2009;37(5a):2445–2457.

- [38] Fang KT, Mukerjee R. Empirical-type likelihoods allowing posterior credible sets with frequentist validity: higher-order asymptotics. Biometrika. 2006;93(3):723–733.

- [39] Chang IH, Mukerjee R. Bayesian and frequentist confidence intervals arising from empirical-type likelihoods. Biometrika. 2008;95(1):139–147.

- [40] Rao JNK, Wu C. Bayesian pseudo-empirical-likelihood intervals for complex surveys. J R Stat Soc B. 2010;72(4):533–544.

- [41] Chaudhuri S, Ghosh M. Empirical likelihood for small area estimation. Biometrika. 2011;98(2):473–480.

- [42] Yang Y, He X. Bayesian empirical likelihood for quantile regression. Ann Stat. 2012;40(2):1102–1131.

- [43] Mengersen KL, Pudlo P, Robert CP. Bayesian computation via empirical likelihood. Proc Natl Acad Sci U S A. 2013;110(4):1321–1326.

- [44] Chaudhuri S, Mondal D, Yin T. Hamiltonian monte carlo sampling in bayesian empirical likelihood computation. J R Stat Soc B. 2017;79(1):293–320.

- [45] Bedoui A, Lazar AN. Bayesian empirical likelihood for ridge and lasso regressions. Comput Stat Data Anal. 2020;145:106917.

- [46] Chib S, Greenberg E. Understanding the metropolis-hastings algorithm. Am Stat. 1995;49(4):327–335.

- [47] Owen AB. Empirical likelihood. Chapman & Hall/CRC, Boca Raton; 2001.

- [48] Andrews DF, Mallows CI. Scale mixtures of normal distributions. J R Stat Soc B. 1974;36(1):99–102.

- [49] Hörmann W, Leydold J. Generating generalized inverse gaussian random variates. Stat Comput. 2014;24(4):547–557.

- [50] Neal RM. Mcmc using hamiltonian dynamics. Handbook of Markov Chain Monte Carlo. 2011;2(11):2.

- [51] Hoffman MD, Gelman A, et al. The no-u-turn sampler: adaptively setting path lengths in hamiltonian monte carlo. J Mach Learn Res. 2014;15(1):1593–1623.

- [52] Burden R, Faires J, Burden A. Numerical analysis. Cengage Learning; 2015.

- [53] Beskos A, Pillai N, Roberts G, et al. Optimal tuning of the hybrid monte carlo algorithm. Bernoulli. 2013;19(5A):1501–1534.

- [54] Vehtari A, Gelman A, Simpson D, et al. Rank-normalization, folding, and localization: An improved for assessing convergence of mcmc. Bayesian Anal. 2021;1(1):1–28.

- [55] Casella G. Empirical bayes gibbs sampling. Biostatistics. 2001;2(4):485–500.

- [56] Li J, Das K, Fu G, et al. The bayesian lasso for genome-wide association studies. Bioinformatics. 2011;27(4):516–523.

- [57] Pasanen L, Holmström L, Sillanpää MJ. Bayesian lasso, scale space and decision making in association genetics. PLoS One. 2015;10(4):e0120017.

- [58] Bernardo JM, Smith AFM. Bayesian theory. John Wiley & Sons, Chichester, New York, USA; 1994.

- [59] Wang H, Li G, Jiang G. Robust regression shrinkage and consistent variable selection through the lad-lasso. J Bus Econ Stat. 2007;25(3):347–355.

- [60] Fernández C, Steel MF. On bayesian modeling of fat tails and skewness. J Am Stat Assoc. 1998;93(441):359–371.

- [61] Wuertz D, Setz T, Chalabi Y, et al. fgarch: Rmetrics - autoregressive conditional heteroskedastic modelling; 2020. R package version 3042.83.2.

- [62] McDonald GC, Schwing RC. Instabilities of regression estimates relating air pollution to mortality. Technometrics. 1973;15(3):463–481.

- [63] Stan Development Team. Stan modeling language users guide and reference manual, version 2.28 ; 2022.

- [64] Salvatier J, Wiecki TV, Fonnesbeck C. Probabilistic programming in python using pymc3. PeerJ Comput Sci. 2016;2:e55.

Appendix A

Summary of variables of the air pollution data set. Variable type Variable name Description Weather prec Mean annual precipitation (in) jant Mean January temperature (F) jult Mean July temperature (F) humid Annual average relative humidity at 1 pm (%) Socioeconomic ovr95 Percentage of population aged 65 or older in 1960 popn Population per household in 1960 educ Median school years completed hous Percentage of housing units dens Population per square mile in 1960 nonw Percentage non-white population in 1960 wwdrk Percentage employed in white collar occupations in 1960 poor Percent of families with income under 3,000 dollars in 1960 Pollution hc Relative hydrocarbon pollution potential nox Relative oxides of nitrogen pollution potential so Relative sulfur dioxide pollution potential mort Age-adjusted mortality rate per 100,000