Bayesian approach for validation of runaway electron simulations

Abstract

Plasma-terminating disruptions in future fusion reactors may result in conversion of the initial current to a relativistic runaway electron beam.

Validated predictive tools are required to optimize the scenarios and mitigation actuators to avoid the excessive damage that can be caused by such events.

Many of the simulation tools applied in fusion energy research require the user to specify several input parameters that are not constrained by the available experimental information.

Hence, a typical validation exercise requires multiparameter optimization to calibrate the uncertain input parameters for the best possible representation of the investigated physical system.

The conventional approach, where an expert modeler conducts the parameter calibration based on domain knowledge, is prone to lead to an intractable validation challenge.

For a typical simulation, conducting exhaustive multiparameter investigations manually to ensure a globally optimal solution and to rigorously quantify the uncertainties is an unattainable task, typically covered only partially and unsystematically.

Bayesian inference algorithms offer a promising alternative approach that naturally includes uncertainty quantification and is less subjective to user bias in choosing the input parameters.

The main challenge in using these methods is the computational cost of simulating enough samples to construct the posterior distributions for the uncertain input parameters.

This challenge can be overcome by combining probabilistic surrogate modelling, such as Gaussian Process regression, with Bayesian optimization, which can reduce the number of required simulations by several orders of magnitude.

Here, we implement this type of Bayesian optimization framework for a model for analysis of disruption runaway electrons, and explore for simulations of current quench in a JET plasma discharge with an argon induced disruption.

We use this proof-of-principle framework to explore the optimum input parameters with uncertainties in optimization tasks ranging from one to seven dimensions.

The relevant Python codes that are used in the analysis are available via https://github.com/aejarvin/BO_FOR_RE_SIMULATIONS/

Keywords: Bayesian inference, Bayesian optimization, DREAM, Runaway electrons, Fusion energy, Uncertainty quantification

1 Introduction

Runaway electrons (RE) pose one of the leading concerns regarding the integrity and duty cycle of future fusion reactors (Boozer, 2018). As RE generation in disruptions is exponentially sensitive to the plasma current, Rosenbluth & Putvinski (1997), and is projected to increase from the level of a few MA in large present-day tokamaks to the range of 10 – 20 MA in power plant relevant tokamaks, an unmitigated RE beam at reactor-scale would be expected to cause severe damage, extended downtime, and repair costs Boozer (2018); Breizman et al. (2019). Therefore, there is a strong need for validated tools to predict and avoid disruptions and RE beam generations when entering the era of reactor-scale devices.

To address this need, several numerical tools have been developed for disruption and RE analysis, such as the nonlinear magnetohydrodynamic (MHD) codes JOREK Huysmans & Czarny (2007), and NIMROD Sovinec et al. (2004), the kinetic code CQL3D Harvey et al. (2000), and the fluid-kinetic framework DREAM Hoppe et al. (2021). However, validating these simulation tools with experimental data is complicated, as typically some of the input parameters of the simulations are not well constrained by the available experimental information. In such a situation, the user must first specify values for these uncertain input parameters to calibrate the model to appropriately represent the investigated system. This challenge is common to other numerical tools applied in magnetic confinement fusion research as well, such as scrape-off layer plasma simulations conducted with SOLPS-ITER Wiesen et al. (2015). A conventional approach to calibrate the model is to use previous experience and domain knowledge to conduct the necessary parameter fitting manually, aiming to find a set of input parameters that minimizes the discrepancy between the synthetic and measured diagnostic data. The remaining discrepancy is then evaluated and documented as a degree of validity of the model.

However, this type of expert modeller approach becomes intractable as the number of uncertain parameters increases. With multiple uncertain input parameters, manual optimization is prone to lead to subjective reasoning for the trajectory selection through the space of optimized parameters. As a result, the degree of confidence on the obtained solution and its uncertainty is likely to remain ambiguous. Furthermore, the ad hoc nature of subjective reasoning is prone to lack of scientific rigour, which is problematic from the point of view of aiming to establish objective and reproducible scientific results. These challenges can be alleviated by applying a regimented approach, such as grid search, instead of subjective reasoning, but such an approach is intractable to conduct manually with multiple uncertain input parameters and is computationally inefficient when operating with costly simulations. Due to these inefficiencies of the expert modeller approach, an optimization algorithm that would take the human out-of-the-loop, provide a rigorous systematic approach with natural uncertainty quantification, and select the samples from the search space in a computationally efficient manner would be a very attractive alternative approach Brochu et al. (2010); Shahriari et al. (2016); Frazier (2018).

In this study, approximate Bayesian computation (ABC) and Bayesian optimization (BO) are applied to find the optimal values and provide inverse uncertainty quantification of uncertain input parameters Brochu et al. (2010); Shahriari et al. (2016); Frazier (2018); Marin et al. (2012) in DREAM runaway electron simulations. Inverse uncertainty quantification here refers to quantifying the uncertainty of the uncertain input parameters of the model given observed experimental data Wu et al. (2018); Oberkampf & Trucano (2002). The aim of this study is to provide a proof-of-principle approach to using these methods in calibrating the uncertain input parameters in RE simulations, while the methodology could be used broadly in validating other predictive tools within magnetic confinement fusion research. The implementation is based on the Bayesian Optimization for Likelihood-Free Inference (BOLFI) method of the Engine for Likelihood-Free Inference (ELFI) Python software package Gutmann & Corander (2016); Lintusaari et al. (2018).

2 Bayesian approach

This Section describes the methodology used in this study. Subsection 2.1 provides an overview of Approximate Bayesian Computation (ABC), Subsection 2.2 an introduction to Bayesian Optimization (BO), Subsection 2.3 describes the usage of Gaussian Process Regression as a probabilistic surrogate model in BO, and Subsection 2.4 describes the functionality of the acquisition functions in BO.

2.1 Approximate Bayesian computation

Bayesian inference aims to establish the conditional probability distribution, , called the posterior, of the uncertain input parameters, , given observed experimental measurements, . represents the best estimate and uncertainty of the input parameters for the investigated system. Bayesian inference applies the Bayes’ theorem:

| (1) |

which states that the posterior is proportional to the likelihood of given , , multiplied by the prior probability distribution for , . The marginal probability of the experimental measurements, , represents an integral over all possible data generating input values, , which would be computationally challenging to evaluate. However, it is typically sufficient to establish the relative posterior probabilities of various values of . Therefore, does not need to be directly evaluated, and it is sufficient to apply the proportionality:

| (2) |

When the likelihood function, , is either not available analytically or cannot be evaluated within the available computational or time resources, the standard alternative is to use approximate Bayesian computation (ABC) Marin et al. (2012); Järvenpää et al. (2018). ABC aims to establish the approximate Bayesian posterior:

| (3) |

where is data generated with the simulation model with input parameters , and is a discrepancy function between the simulated and measured data. represents the threshold parameter controlling the trade-off between posterior estimation accuracy and efficiency, and is the Heaviside step function which takes a value 1 whenever is greater than the discrepancy. Small values of lead to more accurate approximate posteriors, but also increase the computational challenge.

One of the simplest ABC algorithm that could be applied to numerically estimate the integral (Eq. 3) is rejection sampling Marin et al. (2012); Järvenpää et al. (2018); Lintusaari et al. (2017):

-

1.

Draw a random sample from .

-

2.

Generate .

-

3.

Accept if .

-

4.

Go back to step (i) until sufficient number of accepted samples are collected.

The accepted values represent the approximate posterior distribution. The drawback of the standard rejection sampling is the number of required function evaluations. For a typical simulation tool in magnetic confinement fusion, a function evaluation takes at least several minutes and more typically hours or days. Therefore, it is not computationally feasible to collect sufficiently many samples to get an accurate ABC posterior distribution using a rejection sampler. Furthermore, a rejection sampler with a small threshold parameter, , is computationally very inefficient as a large fraction of the sampled function evaluations are rejected. While it is possible to improve the efficiency by applying approaches such as Markov Chain Monte Carlo (MCMC) Marjoram et al. (2003), the direct sampling based ABC algorithms are still expected to be computationally too costly for the type of applications targeted in this work.

The inefficiency of the direct sampling based ABC approach can be circumvented by applying Bayesian optimization to traverse the space of optimized input parameters Gutmann & Corander (2016); Järvenpää et al. (2019). This leads to a probabilistic surrogate model based ABC approach that is observed to be several orders of magnitude more efficient in terms of full function evaluations than the direct sampling based ABC algorithms. At each step of the algorithm, the ABC posterior is estimated using the surrogate model as , where the probability is computed using the probabilistic surrogate model.

2.2 Bayesian optimization

Bayesian optimization (BO) offers a powerful approach for global optimization of costly-to-evaluate, non-convex functions, without access to first- or second-order derivatives Brochu et al. (2010); Shahriari et al. (2016); Frazier (2018). The problem of finding optimal values, , for the uncertain input parameters, , can be represented as a task of finding the optimum of a non-linear function of a compact set , called search space in this report. If represents the discrepancy between the synthetic and measured diagnostic data, then the problem can be formulated as:

| (4) |

The target for a BO algorithm is to be able to traverse the search space efficiently in terms of function evaluations and to find the globally optimum solution by applying prior belief about the optimized function and by balancing exploration and exploitation of the search space Brochu et al. (2010); Shahriari et al. (2016); Frazier (2018). In exploitation, samples are collected in regions of the search space that are known to lead to near optimal function values based on prior belief, and in exploration, samples are collected in regions that encompass a large uncertainty.

A standard BO algorithm consists of two main components Brochu et al. (2010); Shahriari et al. (2016); Frazier (2018):

-

1.

A probabilistic model for the objective function.

-

2.

An acquisition function for recommending the next sampling point.

The probabilistic model represents essentially a low evaluation cost surrogate model for the objective function, and the uncertainties retained in the probabilistic model represent the degree of confidence on the surrogate model predictions. The acquisition function applies the mean and variance of the probabilistic model to balance exploitation and exploration. The collected sample values are then used to update the probabilistic surrogate model.

2.3 Gaussian process regression

The usual choice for the probabilistic model is to use Gaussian Process Regression (GPR), also known as Kriging (Stein, 1999; Rasmussen & Williams, 2006, and references therein). Kriging surrogate-based optimization was previously used for parameter optimization of plasma transport codes by Rodriguez-Fernandez et al. (2018). Other examples of GPR applications in plasma physics can be found in (Ho et al., 2019; Chilenski et al., 2017, 2015; von Nessi et al., 2012; Li et al., 2013; Romero & Svensson, 2013; von Nessi & Hole, 2013, and references therein).

GPR is a Bayesian regression technique and is very powerful for interpolating small sets of data as well as retaining information about the uncertainty of the regression (Stein, 1999; Rasmussen & Williams, 2006, and references therein). Gaussian process (GP) is a stochastic process, for which any finite collection of random values has a multivariate normal distribution. GP is specified by the mean function, , and the covariance function, Rasmussen & Williams (2006):

| (5) |

The GPR represents a family of functions, for which the point to point variance is described by the covariance function. Usually, the mean function is assumed as zero, although other assumptions are possible. This means that the mean of the prior assumption on variation of the objective function value when propagating from a collected data point is zero. The covariance function or kernel describes the smoothness assumption on the possible functions . GP essentially describes a normal distribution over possible functions, , conditioned with observations {, }. Assuming a collection of possibly noisy observations with a Gaussian noise variance , the posterior probability distribution function of at point is Gaussian with posterior mean and posterior variance :

| (6) |

Assuming a zero mean function, , the mean and variance can be obtained as

| (7) |

| (8) |

where represents the vector of covariances between the test point, , and the observations, is a vector of the observations, and is the covariance matrix Gutmann & Corander (2016); Rasmussen & Williams (2006). Since the function evaluations in this work are deterministic, the term is constrained to a low value that does not impact the predictions. An estimate of the likelihood at is given by

| (9) |

where is the cumulative distribution function of . In this work, we will set equal to the current optimal value provided by the probabilistic surrogate model, which is also the default in BOLFI Gutmann & Corander (2016).

A key step in building a GP regression is to select the covariance function or kernel Rasmussen & Williams (2006). Usually the default choice is the radial basis function (RBF), also known as squared exponential or Gaussian kernel:

| (10) |

where is a vector of covariance lengthscales for each dimension, , and is the variance. In the applications in this work, the single constant was observed to be often too restrictive and a rational quadratic kernel (RQ) was used instead:

| (11) |

which is equivalent to summing many RBF kernels with varying . The hyperparameter represents the relative weighting between large and small values. Before applying the model, the hyperparameters (, , , ) must be optimized first. These can be estimated by maximizing the marginal log-likelihood Rasmussen & Williams (2006). The GPR library used in this work as well as in BOLFI is the Python GP framework GPy GPy (since 2012), which encompasses the applied optimization routines.

2.4 Acquisition function

The acquisition function applies the mean and variance of the probabilistic surrogate model to recommend the next sampling point for the objective function Brochu et al. (2010); Shahriari et al. (2016); Frazier (2018). The acquisition functions are typically constructed to recommend sampling points that either encompass optimal predicted mean for the objective function, exploitation, or large uncertainty, exploration. The sampling point is selected by optimizing the acquisition function. Several acquisition functions have been developed and can be found in the reviews in Brochu et al. (2010); Shahriari et al. (2016); Frazier (2018). The acquisition function used in sequential sampling in this work is the lower confidence bound selection criterion (LCBSC), which is also the default acquisition function in BOLFI (Brochu et al., 2010; Gutmann & Corander, 2016; Srinivas et al., 2010, and references therein). This function can be written as

| (12) |

| (13) |

where the coefficient is the trade-off parameter between exploration and exploitation, is a constant chosen by the user, and is the dimensionality of the search space. Optimizing the acquisition function provides a deterministic answer for the next sampling point. An example of the application of this acquisition function is shown in Section 3.2.

Since the next sampling point is obtained deterministically for a given state of the surrogate model, the approach is naturally sequential: (1) the objective function is evaluated for the sampling location provided by the optimum of the acquisition function, (2) the GPR surrogate model is updated, and (3) the acquisition function is optimized again, using the updated GPR, to recommend the next sampling point. However, with complicated, multi-dimensional optimization tasks with computationally time consuming function evaluations, it would be more attractive to conduct several objective function evaluations in parallel to each other to reduce the overall time consumption of the optimization task, especially when suitable high-performance computing (HPC) resources are available.

To conduct parallel BO, stochastic acquisition rules can be used. Various approaches for parallel BO and batch acquisition have been developed Järvenpää et al. (2018, 2019); Thompson (1933); Kandasamy et al. (2018); Chapelle & Li (2011); Palma et al. (2019). In this work, the randmaxvar approach developed by Järvenpää et al. (2019) is used as the stochastic acquisition method. The approach is based on the maxvar acquisition rule also presented in Järvenpää et al. (2019). The maxvar acquisition method recommends a sample in a location that encompasses the maximum variance of the unnormalized ABC posterior. Basically, due to the limited information, represented by the collected samples, there is uncertainty in the GPR representation of the objective function and this uncertainty is propagated as uncertainty of the unnormalized ABC posterior. The maxvar method aims to collect samples that lead to maximum reduction of this uncertainty. In the stochastic version of this method, samples are collected from the distribution that represents the variance of the unnormalized ABC posterior. Since samples are collected stochastically, several samples can be collected without updating the GPR surrogate in between the samples, enabling parallelization. Furthermore, sampling can be done asynchronously by simply updating the GPR surrogate whenever new results are added to the dictionary of collected samples and by sampling new values whenever an idle processor becomes available.

3 Application to a JET runaway electron experiment

This Section describes proof-of-principle applications of the methodology discussed in Section 2 for a RE experiment at JET. Subsection 3.1 describes the investigated JET plasma discharge and the DREAM setup, Subsection 3.2 documents a 1D proof-of-principle optimization, Subsections 3.3, 3.4, and 3.5 extend the search to 4 to 7 dimensional search spaces, and Subsection 3.6 evaluates the number of samples required for convergence as a function of dimensionality of the search.

3.1 Simulated experiment and DREAM setup

The BO and ABC approach discussed in the previous section is applied for DREAM runaway electron simulations of current quench (CQ) in the disruption of a JET discharge #95135. This was a deuterium limiter plasma with an argon massive gas injection induced disruption, described in detail by Reux et al. (2021) and Brandström (2021). DREAM is a numerical tool for self-consistently simulating the evolution of temperature, poloidal flux, and impurity densities, along with the generation and transport of REs in tokamak disruptions (Hoppe et al., 2021). The DREAM simulations in this manuscript are similar to those presented by Brandström (2021) with the exception that only a fluid model for RE electrons is used here, as kinetic simulations were not necessary for the proof-of-principle of the Bayesian approach. For a full description of the physics model in DREAM, we refer the reader to Ref. (Hoppe et al., 2021).

The simulations are started at the peak of the total plasma current, , obtained during the disruption. An instantaneous thermal quench (TQ) is assumed, after which all background plasma quantities, except the electron temperature, , are evolved self-consistently. The post-disruption is instead given as an uncertain input parameter. The background plasma density is obtained from pre-disruption measurement with the high resolution Thomson scattering (HRTS) (Pasqualotto et al., 2004; Frassinetti et al., 2012). Even though the uncertainty of the electron density measurement could be taken into account in the Bayesian approach, for simplicity we neglect it here.

The argon density in the plasma is obtained from the estimated amount of injected argon, volume of the vessel, and fraction of argon that is assimilated. Argon is assumed to be uniformly distributed in the plasma. While the injected amount can be obtained from the experiment, atoms Brandström (2021), the assimilated fraction is given as an uncertain input parameter, .

Hot-tail and Dreicer RE generation mechanisms were not self-consistently included in the simulations. Instead, a RE seed profile is given as an uncertain input to generate a RE beam through the avalanche mechanism. The initial total plasma current (combination of ohmic and prescribed runaway seed current) is constrained to match the experimentally measured peak value, MA, by adjusting the initial electric field in order for the avalanche multiplication factor to to be constrained. The electric field is evolved self-consistently, during the CQ and RE plateau simulations. The conductivity of the wall is controlled by a characteristic wall time, , that is provided as an uncertain input parameter. Here, is the external inductance and the resistance of the wall.

The full list of uncertain input parameters are , , RE seed distribution, and . In addition, the RE seed distribution is scaled with a multiplier such that the RE plateau current matches the experimentally measured value. This is done iteratively with a binary search algorithm. The algorithm is initialized by multiplying or dividing the multiplier by at each iteration until the predicted plateau current passes the experimentally measured value, after which binary search is applied to converge to the optimum. If the multiplier is reduced below a small value, , the search algorithm stops without finding a solution that matches the RE plateau current and the objective function value at the point of reaching that threshold is propagated through the algorithm. This is simply due to the fact that it cannot be assumed that for all input values within the search space it is possible to find a multiplier that would enable matching the RE plateau current.

In the proof-of-principle examples that follow, the objective function is chosen as the L1-norm of the discrepancy between the measured and predicted during the CQ (Fig. 1):

| (14) |

Effectively this discrepancy function calculates the area between the two curves in Figure 1. To avoid accumulating excessive integration within the RE plateau, the discrepancy is only calculated between 0 and 25 ms from the current peak.

3.2 1D search space

The first proof-of-principle test is to apply the Bayesian approach to search for the post TQ that minimises the discrepancy function (Eq. 14). The other uncertain input parameters are fixed as %, uniform RE seed profile, and ms. A rational quadratic kernel is used and the lengthscale is constrained to be below 1.0. This is done to avoid the model becoming overconfident in regions that have not been sampled yet. If the lengthscale converges to a large value, it can suppress exploration prematurely and prevent the algorithm from finding the optimal solution. More generally it seems that in BO algorithms it is probably better to have a model that has capability to overfit rather than underfit the data. The BO algorithm is interpolating solutions within the search space and an overfitting model will just encourage exploration while an underfitting model might not have the generalization capability to fit the solution near the optimum. The acquisition function is the LCBSC (Eq. 12) with exploration constant . The search space for is bounded between 1.0 and 20 eV. A uniform prior uncertainty distribution is assumed.

Within less than 10 iterations, the algorithm starts to converge to the optimum value (Fig. 2). The first 3 samples are collected randomly from the uniform prior distribution. After these are collected, the GPR surrogate model is fitted to the data (Fig. 2). The mean and variance of the GPR are applied by the acquisition function to recommend the next sampling location. The algorithm proceeds by choosing the location of the minimum value of the acquisition function. By proceeding like this, the algorithm converges near the optimum value with a narrow confidence interval around the sample number 7 to 8 (Fig. 3a). Since the prior distribution is uniform, the posterior probability distribution can be obtained from the GPR by applying Eq. 9 (Fig. 3b). Finally, the simulated current value with the temperature value providing the highest posterior probability, eV, can be compared to the experimental measurements (Fig. 3c). The results indicate that by only adjusting the constant post thermal quench , the model is not able to reproduce the experimentally measured CQ rate. Alternatively, these results indicate that the background plasma resistivity is changing during the CQ.

3.3 5D search space

After the 1D proof-of-principle, the next step is to extend the search space to include the other uncertain parameters as well. Since the RE seed is a 1D profile, it is parameterized as the probability distribution function of the gamma distribution:

| (15) |

where and are free parameters and is the gamma function (Fig. 4). The intention is to provide a general parameterization that constrains as little as possible the possible RE seed profile shapes. The search spaces for both and are set as uniform distributions between 0.001 and 10. 10 randomly sampled RE seed profile shapes are shown in Figure 4. It can be clearly seen that this parameterization allows flexible representations of profiles peaking at the center, middle, or edge of the plasma. The search space for the argon assimilation fraction is set as uniform between 0.001% and 100%. The search space for the characteristic wall time is set such that is sampled uniformly between 0 and 7. As a result, the values range between about 1 ms and 1100 ms. This allows sampling for very large parameters exceeding 1.0 s, while encouraging collection of samples at low values.

Due to the expanded volume of the search space, more samples are expected to be needed than in the 1D example. Therefore, the randmaxvar acquisition function was used with a batch size of 10 samples conducted in parallel. The first 50 samples were collected by sampling the search space randomly, after which the acquisition function was used to recommend sampling locations.

Similar to the 1D search, a rational quadratic kernel is used. The kernel parameters are restricted such that the power parameter in the rational quadratic kernel was constrained to be between and 0.03. The lengthscale constraints are altered for every batch of 10 samples. For even round batches, the lengthscales are constrained to be positive and manually initialized as the distance of the search domain for the dimension divided by the number of collected samples. After this preconditioning step, GP optimization is conducted. There is no maximum lengthscale setup during the even rounds. During the odd round batches, the lengthscales for variations are constrained to be below 1 for the and dimensions, below 0.5 for the and dimensions, and below 0.1 for the dimension. The lower limits for the lengthscales were set to . The even rounds perform essentially automatic relevance determination obtaining very long lengthscales for dimensions that do not show a significant impact on the objective function value, guiding the search to prioritize optimization of input parameters that have more significant impact on the objective function value. However, this approach alone would risk the surrogate model becoming overly confident early in the search and stop exploration for input parameters deemed unimportant. To counteract this risk, the odd rounds apply restrictions of lengthscales, such that the algorithm understands to explore input parameter values, which would simply be extrapolated and interpolated over by the long-lengthscale surrogate model.

The sampling algorithm finds clear optima in the search space for the background plasma temperature and characteristic wall time (Fig. 5). It can be observed that there is a global optimum at around 5 – 7 eV and less than about 2.7 ms (), and a local optimum at about 15 – 20 eV and larger than about 50 ms () (Fig. 5 red and black ellipses). The two solution branches can also be observed in the plot as a function of the argon assimilation fraction, such that the low temperature solution branch reaches the optimum at values above 20 %, while the higher temperature solution branch at lower values between 5 – 30 % (Fig. 5c). The shape of the RE seed distribution does not seem to impact the discrepancy significantly (Figs. 5d, e).

After 290 samples, the algorithm estimates the global optimum to be eV, %, ms, , and , where the 95 % confidence intervals for , , and span most of the search space (Fig. 6). The local uncertainties for the global optimum can be obtained by evaluating the local properties of the approximate posterior. The approximate posterior can be extracted from the probabilistic surrogate model by applying Eq. 9. While a global ABC posterior can be obtained through, for example, MCMC sampling, the local inverse uncertainty near the global optimum is likely of more practical interest in fusion energy research and can be obtained directly from the GPR representation of the posterior. This type of analysis was done by evaluating the one dimensional posterior distribution for each search dimension from the global optimum, which can be integrated to obtain confidence intervals (Fig. 6). It should be noted that this analysis does not take into account the secondary optimum at higher temperatures as that would require non-linear analysis of the approximate posterior, while MCMC sampling of the posterior would collect some distribution in that area also. However, since multimodality of the optimized function can be observed from the discrepancy plot already (Fig. 5), it is considered more important to obtain local uncertainty estimations near the global optimum than sample the full approximate posterior. For completeness, MCMC sampling was conducted for the approximate posterior and the resulting distributions for and show the global optimum, also visible in (Fig. 6), as well as the secondary local optimum at higher temperatures (Fig. 7).

Analysing the convergence of the search it can be observed that after about 80 – 110 samples, the posterior distributions for and have converged (Figs. 6a, b). For the other parameters, the 95 % confidence intervals remain large, indicating that the discrepancy value is not very sensitive to these input parameters, as was observed in the discrepancy plot already (Fig. 5). The step observed at 200 samples highlights the stochastic nature of the GPR surrogate model fitting. Depending on the initial conditions, the optimization algorithm might find somewhat different hyperparameters for the GPR, leading to a different shape of the approximate posterior and shift of the optimum and boundaries of the confidence interval as well. However, the large steps of the optimum for , as well as the large steps at other sample numbers for , and , are a result of the relatively flat posterior distribution shapes, while posterior shape for and are not changed significantly.

Finally, the predicted and measured total plasma current are compared for the global optimum extracted by the algorithm (Fig. 6f). Comparing the result to the optimum case in the 1D search space example, it can be clearly seen that, with a 5D search space, the algorithm is able to obtain a significantly better fit to the experimentally measured current (Figs. 3c, 6f). In the 1D example, the current was reducing significantly faster than experimentally measured during the early part of the CQ and the end of the CQ happened several ms later than experimentally measured, such that the average L1-norm discrepancy was minimized, while the rate of change of plasma current was poorly matched (Fig. 3c). On the other hand, in the 5D example, the initial drop is also faster than measured experimentally, but soon the rate of change of the plasma current is matched to the experimentally measured rate of change, such that the end of CQ happens near the experimentally measured end of the CQ when the L1-norm between the two currents is minimized (Fig. 6f). The fact that the current is reducing faster than experimentally measured during the early parts of the CQ indicates that the plasma resistance that on average matches the plasma current evolution probably overestimates the resistance during early parts of the CQ. To address this, the final proof-of-principle test conducted in this manuscript is to allow linear variation of during the simulation by extending the search space to seven dimension.

3.4 7D search space

As a next extension of the search, a parameterized variation of the background plasma during the simulation is allowed. Linear variation of is assumed, such that the search space is extended to seven dimensions, by adding final temperature, , and the time at which is reached, . After is reached, is assumed to stay constant. Same search spaces are used for the initial and final , and the search space for is set as uniform between 1 ms and 44 ms.

The rational quadratic kernel is used in the GPR. The power of the kernel was restricted similarly to the setup in the 5D search. Similar to the 5D search, the lengthscale restrictions were altered between even and odd round batches. Batch size was set to 50. For the odd round batches, the lenghscale constraints are similar to those in the 5D search with the same lengthscale contraint applied for the initial and final . For the the minimum lenghtscale is set as 10-3 ms and maximum as 1 ms.

After 950 samples, the local 95 % confidence intervals around the optimum point, recommended by the algorithm, are eV, eV, ms, ms, %, , and (Fig. 8). The optimum point is eV, eV, ms, ms, %, , and . With these input parameters, the predicted current quench rate is very close to the measured values (Fig. 8f). Initially, the rate is faster than measured and also the transition to runaway plateau in the simulation occurs about 1 ms earlier than measured (Fig. 8f). However, between 3 and 12 ms, the predicted current is nearly exactly on top of the measured current.

3.5 Constraining to 5 ms

In both the 5D and 7D searches, the algorithm found optimum around 1.1 - 1.4 ms. This result was somewhat surprising when the conventional prior expectation would have suggested higher values in the range of 5 to 10 ms. Since the optimization algorithm finds the set of parameters that minimize the discrepancy, it is possible that the algorithm compensates for missing physics in the model by reducing below values that would actually be realistic. As a final test, further 4D and 6D optimizations were conducted, where was fixed to 5 ms.

In the 4D search, the optimum point recommended by the algorithm is eV, %, , and (Fig. 9a). The local 95 % confidence intervals are eV and %, , and . In the 6D search, the optimum point recommended by the algorithm is eV, eV, ms, %, , and (Fig. 9b). The local 95 % confidence intervals are eV, eV, ms, %, and , .

As the , , and do not impact the discrepancy significantly, the best match obtained by the 4D search seems very similar to the best match obtained by the 1D search (Figs. 3c, 9a). When allowing linearly varying background plasma , the algorithm is able to find a solution that matches the experimentally measured plasma current nearly as well as in the 7D search (Figs. 8f, 9b). However, when fixing ms, the recommended initial is increased from 9.5 to 16.2 eV, and reduced from 14 ms to 10 ms, highlighting the non-linear dependencies between the optimal input parameters.

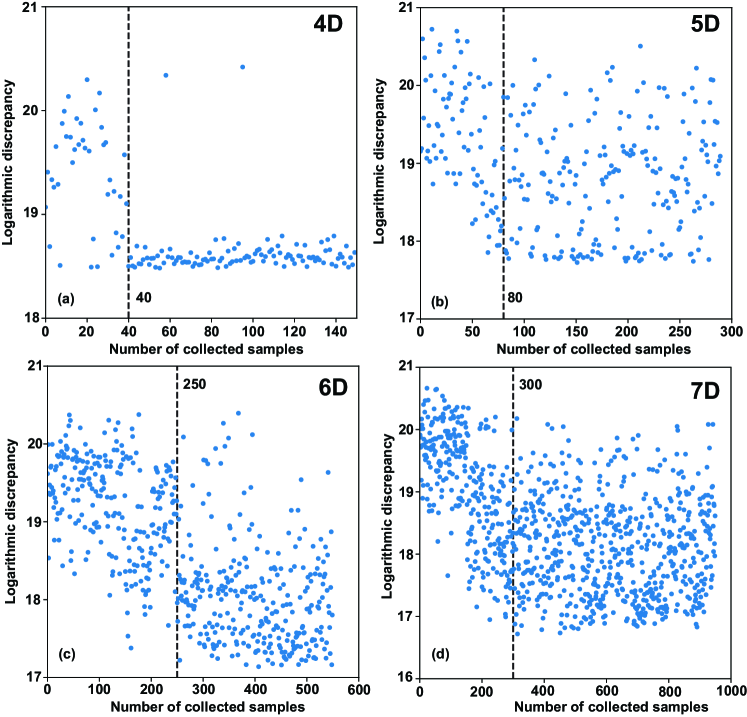

3.6 Convergence as a function of dimensions

The computational challenge of the optimization task increases with the number of dimensions of the search space. Figure 10 illustrates the discrepancy as a function of sample numbers for the 4D, 5D, 6D, and 7D search tasks in this work. Evaluating the convergence based on the sample number after which the minimum discrepancy saturates, the 4D search converges after about 40 samples, the 5D search after about 80 samples, the 6D search after about 250 samples, and the 7D after about 300 samples. Beyond this point, increasing sample numbers will reduce the uncertainty of the posterior distribution while the minimum discrepancy is not reduced anymore.

Comparing to a grid search of eight samples for each dimension, the Bayesian optimization algorithm is very efficient (Fig. 11). Beyond four dimensions, the grid search algorithm would be calling for over 10000 samples and soon become intractable. The Bayesian approach, on the other hand, obtains samples near the minimum discrepancy after a few hundred samples even in the case of the seven dimensional search space.

4 Summary

Bayesian approach has been explored for validation of runaway electron simulations. Many of the simulation tools applied in fusion energy research require the user to specify several input parameters that are not constrained by the available experimental information. Bayesian inference algorithms offer a promising approach to determine these free parameters with uncertainty quantification and is less subjective to user bias than approaches based on manual parameter calibration. The main challenge in using an algorithmic approach to parameter calibration is the computational cost of simulating enough samples to construct the posterior distributions for the uncertain input parameters. By using probabilistic surrogate modelling, through Gaussian Process regression, with Bayesian optimization, it is possible to reduce the number of required simulations by several orders of magnitude. This type of Bayesian optimization framework was implemented in this work for a disruption runaway electron analysis model, and explored for current quench simulations for a JET plasma discharge with an argon induced disruption. The algorithm is able to find optimal input parameters with uncertainties in one to seven dimensional proof-of-principle cases, and is several orders of magnitude more sample efficient than a regimented grid search algorithm would have been.

Surrogate model specification is central to the performance of the search algorithm. Using the Gaussian process approach, the kernel parameters need to be appropriately constrained for the surrogate model to provide meaningful guidance for the search through the acquisition function. An overly smooth kernel with long maximum correlation lengthscales can make the surrogate model overly confident and not find the actual global optimum. On the other hand, limiting the lengthscales to small values will encourage exploration but also require more iterations for convergence. Finding the appropriate surrogate model specifications is an area that requires attention from the user of these algorithms. The most appropriate constraints are likely to be specific to each search task. Furthermore, both specifying appropriate kernel constraints and diagnosing potential issues with kernel constraints become more challenging with increasing number of search dimensions. Therefore, it would be desired to find default kernel constraints that are likely to work acceptably well in most circumstances. The approach chosen here was to alternate the kernel constraints at specific sample intervals between unconstrained but positive lengthscales and lengthscales constrained to be below a certain threshold that is a fraction of the width of the search dimension. By alternating the kernel constraints, the risk of the algorithm either oversmoothing a region or getting into a mode of infinite exploration is reduced. However, more generally applicable methods for constraining the surrogate model are likely to exist, and could improve the performance of the algorithm further.

Acknowledgements

This work has been carried out within the framework of the EUROfusion Consortium, funded by the European Union via the Euratom Research and Training Programme (Grant Agreement No 101052200 — EUROfusion). Views and opinions expressed are however those of the authors only and do not necessarily reflect those of the European Union or the European Commission. Neither the European Union nor the European Commission can be held responsible for them. The work of Eero Hirvijoki was supported by the Academy of Finland grant no. 315278. The work was supported in part by the Swedish Research Council (Dnr. 2018-03911). The authors wish to acknowledge CSC – IT Center for Science, Finland, for computational resources.

References

- Boozer (2018) Boozer, A. 2018 Pivotal issues on relativistic electrons in ITER. Nucl. Fusion 58, 036006.

- Brandström (2021) Brandström, B. 2021 Spatio-temporal analysis of runaway electrons in a JET disruption with material injection. Master’s thesis, Chalmers University of Technology.

- Breizman et al. (2019) Breizman, B., Aleynikov, P., Hollmann, E. & Lehnen, M. 2019 Physics of runaway electrons in tokamaks. Nucl. Fusion 59, 083001.

- Brochu et al. (2010) Brochu, E., Cora, V. M. & de Freitas, N. 2010 A Tutorial on Bayesian Optimization of Expensive Cost Functions, with Application to Active User Modeling and Hierarchical Reinforcement Learning, arXiv: 1012.2599.

- Chapelle & Li (2011) Chapelle, O. & Li, L. 2011 An Empirical Evaluation of Thompson Sampling. In Proceedings of the 24th International Conference on Neural Information Processing Systems, p. 2249–2257. Red Hook, NY, USA: Curran Associates Inc.

- Chilenski et al. (2017) Chilenski, M., Greenwald, M., Hubbard, A., Hughes, J., Lee, J., Marzouk, Y., Rice, J. & White, A. 2017 Experimentally testing the dependence of momentum transport on second derivatives using Gaussian process regression. Nucl. Fusion 57, 126013.

- Chilenski et al. (2015) Chilenski, M. A., Greenwald, M., Marzouk, Y. M., Howard, N. T., White, A. E., Rice, J. E. & Walk, J. R. 2015 Improved profile fitting and quantification of uncertainty in experimental measurements of impurity transport coefficients using Gaussian process regression. Nucl. Fusion 55, 023012.

- Frassinetti et al. (2012) Frassinetti, L., Beurskens, M., Scannell, R., Osborne, T., Flanagan, J., Kempenaars, M., Maslov, M., Pasqualotto, R., Walsh, M., & Contributors, J.-E. 2012 Spatial resolution of the JET Thomson scattering system. Rev. Sci. Instrum. 83, 013506.

- Frazier (2018) Frazier, P. I. 2018 A Tutorial on Bayesian Optimization, arXiv: 1807.02811.

- GPy (since 2012) GPy since 2012 GPy: A Gaussian process framework in python.

- Gutmann & Corander (2016) Gutmann, M. & Corander, J. 2016 Bayesian Optimization for Likelihood-Free Inference of Simulator-Based Statistical Models. Journal of Machine Learning Research 17, 1–47.

- Harvey et al. (2000) Harvey, R., Chan, V., Chiu, S., Evans, T., Rosenbluth, M. & Whyte, D. 2000 Runaway electron production in DIII-D killer pellet experiments, calculated with the CQL3D/KPRAD model. Phys. Plasmas 7, 4590.

- Ho et al. (2019) Ho, A., Citrin, J., Auriemma, F., Bourdelle, C., Casson, F., Kim, H.-T., Manas, P., Szepesi, G., Weisen, H. & Contributors, J. 2019 Application of Gaussian process regression to plasma turbulent transport model validation via integrated modelling. Nucl. Fusion 59, 056007.

- Hoppe et al. (2021) Hoppe, M., Embreus, O. & Fülöp, T. 2021 DREAM: A fluid-kinetic framework for tokamak disruption runaway electron simulations. Comput. Phys. Commun. 268, 108098.

- Huysmans & Czarny (2007) Huysmans, G. & Czarny, O. 2007 MHD stability in X-point geometry: simulation of ELMs. Nucl. Fusion 47, 659–666.

- Järvenpää et al. (2019) Järvenpää, M., Gutmann, M. & A. Pleska, A. Vehtari, P. M. 2019 Efficient Acquisition Rules for Model-Based Approximate Bayesian Computation. Bayesian Analysis 14 (2), 595–622.

- Järvenpää et al. (2018) Järvenpää, M., Gutmann, M., Vehtari, A. & Marttinen, P. 2018 Gaussian process modelling in approximate Bayesian computation to estimate horizontal gene transfer in bacteria. The Annals of Applied Statistics 12 (4), 2228 – 2251.

- Kandasamy et al. (2018) Kandasamy, K., Krishnamurthy, A., Schneider, J. & Poczos, B. 2018 Parallelised Bayesian Optimisation via Thompson Sampling. In Proceedings of the Twenty-First International Conference on Artificial Intelligence and Statistics (ed. Amos Storkey & Fernando Perez-Cruz), Proceedings of Machine Learning Research, vol. 84, pp. 133–142. PMLR.

- Li et al. (2013) Li, D., Svensson, J., Thomsen, H., Medina, F., Werner, A. & Wolf, R. 2013 Bayesian soft X-ray tomography using non-stationary Gaussian Processes. Rev. Sci. Instrum. 84, 083506.

- Lintusaari et al. (2017) Lintusaari, J., Gutmann, M., Dutta, R., Kaski, S. & Corander, J. 2017 Fundamentals and Recent Developments in Approximate Bayesian Computation. Syst. Biol. 66(1), e66–e82.

- Lintusaari et al. (2018) Lintusaari, J., Vuollekoski, H., Kangasrääsiö, A., Skytén, K., Järvenpää, M., Marttinen, P., Gutmann, M., Vehtari, A., Corander, J. & Kaski, S. 2018 ELFI: Engine for Likelihood-Free Inference. Journal of Machine Learning Research 19 (16), 1–7.

- Marin et al. (2012) Marin, J., Pudlo, P., Robert, C. & Ryder, R. 2012 Approximate Bayesian computational methods. Stat. Comput. 22, 1167–1180.

- Marjoram et al. (2003) Marjoram, P., Molitor, J., Plagnol, V. & Tavaré, S. 2003 Markov chain Monte Carlo without likelihoods. Proceedings of the National Academy of Sciences 100 (26), 15324–15328.

- von Nessi & Hole (2013) von Nessi, G. & Hole, M. 2013 Using Bayesian analysis and Gaussian processes to infer electron temperature and density profiles on the Mega-Ampere Spherical Tokamak experiment. Rev. Sci. Instrum. 84, 063505.

- von Nessi et al. (2012) von Nessi, G., Hole, M., Svensson, J. & Appel, L. 2012 Evidence cross-validation and Bayesian inference of MAST plasma equilibria. Phys. Plasmas 19, 012506.

- Oberkampf & Trucano (2002) Oberkampf, W. & Trucano, T. 2002 Verification and validation in computational fluid dynamics. Prog. Aerosp. Sci. 38 (3), 209–272.

- Palma et al. (2019) Palma, A. D., Mendler-Dünner, C., Parnell, T. P., Anghel, A. & Pozidis, H. 2019 Sampling Acquisition Functions for Batch Bayesian Optimization. CoRR abs/1903.09434.

- Pasqualotto et al. (2004) Pasqualotto, R., Nielsen, P., Gowers, C., Beurskens, M., Kempenaars, M., Carlstrom, T. & Johnson, D. 2004 High resolution Thomson Scattering for Joint European Torus (JET). Rev. Sci. Instrum, 75, 3891.

- Rasmussen & Williams (2006) Rasmussen, C. & Williams, C. 2006 Gaussian Processes for Machine Learning. the MIT Press.

- Reux et al. (2021) Reux, C., Paz-Soldan, C., Aleynikov, P., Bandaru, V., Ficker, O., Silburn, S., Hoelzl, M., Jachmich, S., Eidietis, N., Lehnen, M., Sridhar, S. & contributors, J. 2021 Demonstration of Safe Termination of Megaampere Relativistic Electron Beams in Tokamaks. Phys. Rev. Lett. 126, 175001.

- Rodriguez-Fernandez et al. (2018) Rodriguez-Fernandez, P., White, A. E., Creely, A. J., Greenwald, M. J., Howard, N. T., Sciortino, F. & Wright, J. C. 2018 VITALS: A Surrogate-Based Optimization Framework for the Accelerated Validation of Plasma Transport Codes. Fusion Science and Technology 74 (1-2), 65–76.

- Romero & Svensson (2013) Romero, J. & Svensson, J. 2013 Optimization of out-vessel magnetic diagnostics for plasma boundary reconstruction in tokamaks. Nucl. Fusion 53, 033009.

- Rosenbluth & Putvinski (1997) Rosenbluth, M. & Putvinski, S. 1997 Theory for avalanche of runaway electrons in tokamaks. Nuclear Fusion 37 (10), 1355–1362.

- Shahriari et al. (2016) Shahriari, B., Swersky, K., Wang, Z., Adams, R. & Freitas, N. 2016 Taking the Human Out of the Loop: A Review of Bayesian Optimization. Proc. IEEE 104, 148–175.

- Sovinec et al. (2004) Sovinec, C., Glasser, A., Gianakon, T., Barnes, D., Nebel, R., Kruger, S., Schnack, D., Plimpton, S., Tarditi, A., Chu, M. & the NIMROD Team 2004 Nonlinear magnetohydrodynamics simulation using high-order finite elements. J. Comput. Phys. 195, 355–386.

- Srinivas et al. (2010) Srinivas, N., Krause, A., Kakade, S. & Seeger, M. 2010 Gaussian Process Optimization in the Bandit Setting: No Regret and Experimental Design. In Proceedings of the 27 International Conference on Machine Learning. Haifa, Israel.

- Stein (1999) Stein, M. 1999 Interpolation of Spatial Data: Some Theory for Kriging. New York: Springer Series in Statistics.

- Thompson (1933) Thompson, W. 1933 On the likelihood that one unknown probability exceeds another in views of the evidence of two samples. Biometrika 25 (3-4), 285–294.

- Wiesen et al. (2015) Wiesen, S., Reiter, D., Kotov, V., Baelmans, M., Dekeyser, W., Kukushkin, A., Lisgo, S., Pitts, R., Rozhansky, V., Saibene, G., Veselova, I. & Voskoboynikov, S. 2015 The new SOLPS-ITER code package. J. Nucl. Mat. 463, 480 – 484.

- Wu et al. (2018) Wu, X., Kozlowski, T., Meidani, H. & Shirvan, K. 2018 Inverse uncertainty quantification using the modular Bayesian approach based on Gaussian process, Part 1: Theory. Nucl. Eng. Des. 335, 339–355.