Batch Belief Trees for Motion Planning Under Uncertainty

Abstract

In this work, we develop the Batch Belief Trees (BBT) algorithm for motion planning under motion and sensing uncertainties. The algorithm interleaves between batch sampling, building a graph of nominal trajectories in the state space, and searching over the graph to find belief space motion plans. By searching over the graph, BBT finds sophisticated plans that will visit (and revisit) information-rich regions to reduce uncertainty. One of the key benefits of this algorithm is the modified interplay between exploration and exploitation. Instead of an exhaustive search (exploitation) after one exploration step, the proposed algorithm uses batch samples to explore the state space and, in addition, does not require exhaustive search before the next iteration of batch sampling, which adds flexibility. The algorithm finds motion plans that converge to the optimal one as more samples are added to the graph. We test BBT in different planning environments. Our numerical investigation confirms that BBT finds non-trivial motion plans and is faster compared with previous similar methods.

I Introduction

For safe and reliable autonomous robot operation in a real-world environment, consideration of various uncertainties becomes necessary. These uncertainties may arise from an inaccurate motion model, actuation or sensor noise, partial sensing, and the presence of other agents moving in the same environment. In this paper, we study the safe motion planning problem of robot systems with nontrivial dynamics, motion uncertainty, and state-dependent measurement uncertainty, in an environment with non-convex obstacles.

Planning under uncertainties is referred to as belief space planning (BSP), where the state of the robot is characterized by a probability distribution function (pdf) over all possible states. This pdf is commonly referred to as the belief or information state [1, 2]. A BSP problem can be formulated as a partially observable Markov decision process (POMDP) problem [3]. Solving POMDPs for continuous state, control, and observation spaces, is, however, intractable. Existing methods based on discretization are resolution-limited [4, 5]. Optimization over the entire discretized belief space to find a path is computationally expensive and does not scale well to large-scale problems. Online POMDP algorithms are often limited to short-horizon planning, have challenges in dealing with local minimums, and are not suitable for global planning in large environments [6, 7].

Planning in infinite-dimensional distributional (e.g., belief) spaces can become more tractable by using sampling-based methods [8]. For example, belief roadmap methods [9] build a belief roadmap to reduce estimation uncertainty; the rapidly-exploring random belief trees (RRBT) algorithm [10] has been proposed to grow a tree in the belief space. Owing to their advantages in avoiding local minima, dealing with nonconvex obstacles and high-dimensional state spaces, along with their anytime property, sampling-based methods have gained increased attention in the robotics community recently [11, 12, 13, 14, 15, 16].

Robot safety under uncertainty can be also formulated as a chance-constrained optimization problem [17, 18, 19, 10]. In addition to minimizing the cost function, one also wants the robot not to collide with obstacles, with high probability. By approximating the chance constraints as deterministic constraints, references [17, 18, 19] solve the problem using an optimization-based framework. However, those approaches lack scalability with respect to problem complexity [20], and the explicit representation of the obstacles is usually required.

In this paper, we focus on sampling-based approaches similar to [10, 11, 15]. One challenge of sampling-based algorithms for planning under uncertainty is the lack of the optimal substructure property, which has been discussed in [10, 16]. The lack of optimal substructure property is further explained by the lack of total ordering on paths based on cost. Specifically, it is not enough to only minimize the usual cost function – explicitly finding paths that reduce the uncertainty of the robot is also important (see Figure 1(a)).

The RRBT algorithm proposed in [10] overcomes the lack of optimal substructure property by introducing a partial-ordering of belief nodes and by keeping all non-dominated nodes in the belief tree. Note that without this partial-ordering, the methods in [11, 12, 13, 15] may not be able to find a solution, even if one exists. Minimizing the cost and checking the chance constraints can only guarantee that the existing paths in the tree satisfy the chance constraints. Without searching for paths that explicitly reduce the state uncertainty, it will be difficult for future paths to satisfy the chance constraints.

In this paper, we propose the Batch Belief Tree (BBT) algorithm, which improves over the RRBT algorithm with the introduction of a batch sampling extension and by introducing a modified exploration and exploitation interplay. BBT uses the partial ordering of belief nodes as in [10] and searches over the graph of nominal trajectories to find non-dominated belief nodes. Compared to [11, 12, 13, 15], BBT is able to find sophisticated plans that visit and revisit the information-rich region to gain information. Compared to RRBT, instead of an exhaustive graph search (exploitation) after every exploration (adding a sample), BBT uses batch sampling for faster state-space exploration, and does not require an exhaustive graph search before adding another batch of samples. Thus, BBT is able to find the initial solution in a shorter time and has better cost-time performance compared to RRBT, as will be shown in Section VI.

II Related Works

In [9], the problem of finding the minimum estimation uncertainty path for a robot from a starting position to a goal is studied by building a roadmap. In [10, 21], it was noted that the true a priori probability distribution of the state should be used for motion planning instead of assuming maximum likelihood observations [9, 22]. A linear-quadratic Gaussian (LQG) controller along with the RRT algorithm [23] were used for motion planning in [21]. To achieve asymptotic optimality, the authors in [10] incrementally construct a graph and search over the graph to find all non-dominated belief nodes. Given the current graph, the Pareto frontier of belief nodes at each vertex is saved, where the Pareto frontier is defined by considering both the path cost and the node uncertainty.

In [12] high-frequency replanning is shown to be able to better react to uncertainty during plan execution. Monte Carlo simulation and importance sampling are used in [13] to compute the collision probability. Moving obstacles are considered in [20]. In [24], state dependence of the collision probability is considered and incorporated with chance-constraint RRT* [11, 25]. In [26], a roadmap search method is proposed to deal with localization uncertainty; however, solutions for which the robot need to revisit a position to gain information are ruled out. Distributionally robust RRT is proposed in [15, 27], where moment-based ambiguity sets of distributions are used to enforce chance constraints instead of assuming Gaussian distributions. Similarly, a moment-based approach considering non-Gaussian state distributions is studied in [28].

Other works that are not based on sampling-based methods formulate the chance-constrained motion planning problem as an optimization problem [17, 18, 19]. In those methods, the explicit representation of the obstacles is usually required. The obstacles may be represented by convex constraints or polynomial constraints. The chance constraints are then approximated as deterministic constraints and the optimization problem is solved by convex [18] or nonlinear programming [19]. Differential dynamic programming has also been used to solve motion planning under uncertainty [2, 29, 30]. These algorithms find a locally optimal trajectory in the neighborhood of a given reference trajectory. The algorithms iteratively linearize the system dynamics along the reference trajectory and solve an LQG problem to find the next reference trajectory.

III Problem formulation

We consider the problem of planning for a robot with nontrivial dynamics, model uncertainty, measurement uncertainty from sensor noise, and obstacle constraints. The motion model and sensing model are given by the following discrete-time equations,

| (1) | ||||

| (2) |

where are the discrete time-steps, is the state, is the control input, and is the measurement at time step . The steps of the noise processes and are i.i.d standard Gaussian random vectors, respectively. We assume that and are independent.

The state-space is decomposed into free space and obstacle space . The motion planning problem is given by

| (3) | ||||

| (4) | ||||

| (5) | ||||

| (6) |

where (4) is the boundary condition for the motion planning problem. The goal is to steer the system from some initial distribution to a goal state. Since the robot state is uncertain, the mean of the final state is constrained to be equal to the goal state . Condition (5) is a chance constraint that enforces safety of the robot.

Similar to [10], the motion plan considered in this paper is formed by a nominal trajectory and a feedback controller that stabilizes the system around the nominal trajectory. Specifically, we will use a Connect function that returns a nominal trajectory and a stabilizing controller between two states and ,

| (7) |

and are the sequence of states and controls from the nominal trajectory, and is a sequence of feedback control gains. The nominal trajectory can be obtained by solving a deterministic optimal control problem with boundary conditions and , and system dynamics . The stabilizing controller can be computed using, for example, finite-time LQR design [16].

A Kalman filter is used for online state estimation, which gives the state estimate111Note non-standard notation. of . Thus, the control at time is given by

| (8) |

With the introduction of the Connect function, the optimal motion planning problem (3)-(6) is reformulated as finding the sequence of intermediate states . The final control is given by

| (9) |

The remaining problem is to find the optimal sequence of intermediate states and enforce the chance constraints (5).

IV Covariance Propagation

We assume that the system given by (1) and (2) is locally well approximated by its linearization along the nominal trajectory. This is a common assumption as the system will stay close to the nominal trajectory using the feedback controller [16, 31]. Define

| (10) |

By linearizing along , the error dynamics is

| (11) |

We will consider this linear time-varying system hereafter. A Kalman filter is used for estimating and is given by

| (12) | ||||

| (13) |

where,

| (14) |

and is the Kalman gain.

The covariances of , and are denoted as , and , respectively. Note that the covariance of is also given by and the estimation error covariance is computed from (14). From (11)-(13), it can be verified that . Since , by choosing , we have for . Using (12) and (13) we also have that

| (15) |

Using the fact that , it can be verified that . Thus, given the feedback gains and the Kalman filter gain , we can predict the covariances of the state estimation error and the state along the trajectory, which also provides the state distributions in the case of a Gaussian distribution.

V Batch Belief Tree Algorithm

The Batch Belief Tree algorithm performs two main operations: It first builds a graph of nominal trajectories to explore the state space of the robot, and then it searches over this graph to grow a belief tree in the belief space. For graph construction, batches of samples are added to the graph intermittently. The Rapidly-exploring Random Graph (RRG) [8] algorithm is adopted to add a batch of samples and maintain a graph of nominal trajectories.

The operation of graph construction is referred to as exploration, as it will incrementally build a graph to cover the state space. Analogously, the operation of searching over the current graph is referred to as exploitation, as it exploits the current graph to search a tree in belief space. Previous work [10] performed an exhaustive search whenever one sample is added to the graph, which results in poor performance in terms of exploration. Note that the operation of an exhaustive search is more complicated than adding a single sample to the RRG graph. The proposed Batch Belief Tree (BBT) algorithm adds a batch of samples at a time and it does not require a complete graph search before adding another batch of samples. As it will be shown in Section VI, the resulting advantage of BBT is that it finds a better path given the same amount of time compared to [10].

The motivation of BBT is shown in Figure 1. Two paths reach point in Figure 1(a). The red path reaches with a large cost but with low uncertainty. The blue path reaches with a small cost but with high uncertainty. In this case, the blue path cannot dominate the red path, as it will incur a high probability of chance constraint violation for future segments of the path. Thus, both paths are preserved in the belief tree as discussed in [10]. However, in Figure 1(b), if the blue path (starting from the blue ellipse) satisfies the chance constraint, the blue path will be the solution of the problem since it satisfies the chance constraints and has a lower cost than . The operation of searching the current graph to find more paths reaching with less uncertainty (but a higher cost), such as the red path, becomes redundant. Here, we assume that the cost in (3) is mainly the cost from the mean trajectory. That is, for path , starting from the red ellipse and blue ellipse will incur a similar cost. Reducing the uncertainty at node is mainly for satisfying the chance constraint of the future trajectory. Such assumption can also be found, for example, in [14].

RRBT performs an exhaustive search to find all non-dominated nodes whenever a vertex is added to the graph. Specifically, it will spend a lot of effort finding nodes with low uncertainty but a high cost-to-come. Such nodes are only necessary if they are indeed part of the optimal path. If the blue path in Figure 1(b) is the solution, we do not need to search for other non-dominated nodes (red node). However, since we do not know if the future blue path will satisfy the chance constraint or not, the red node may still be needed. Thus, we propose to delay the search procedure and only search (exploitation) when is necessary and when prioritizing exploration becomes beneficial. The refined exploration versus exploitation in BBT can be interpreted as delayed graph exploitation (which will promote exploration) and will allow us to find the solution faster.

The complete BBT algorithm is given by Algorithm 1 and Algorithm 2. The RRG-D algorithm given by Algorithm 2 follows the RRG algorithm developed in [8] with the additional consideration of system dynamics. RRG-D uses the Connect function introduced in Section III to build a graph of nominal trajectories. The edge is added to the graph only if the nominal trajectory is obstacle-free, which is indicated by the ObstacleFree checking in Algorithm 2. RRG-D adds samples to the current graph whenever it is called by the BBT algorithm. The samples constitute one batch. One batch of samples is added to the graph without any graph exploitation in between, which is different from RRBT, which performs a search whenever a single sample is added. RRG-D also updates the belief queue , which is defined later.

The sampled states along with the edges connecting them generate a graph in the search space. Additional variables are needed to define a belief tree. A belief node is defined by a state covariance , an estimation error covariance , a cost , and a parent node index . A vertex is defined by a state , and a set of belief nodes . Each belief node traces back a unique path from the initial belief node. Two queues and are defined to store two sets of belief nodes.

We use the partial ordering of belief nodes as in [10]. Let and be two belief nodes of the same vertex . We use to denote that belief node is dominated by . is true if

| (16) |

In this case, is better than since it traces back a path that reaches with less cost and less uncertainty compared with .

Next, we summarize some primitive procedures used in the BBT algorithm.

Pop: selects a belief node from and removes it from . Here we select the belief node with the minimum cost .

Propagate: The Propagate procedure implements three operations: covariance propagation, chance constraint evaluation, and cost calculation.

performs the covariance propagation using (14) and (15).

It takes an edge and an initial belief node at the starting vertex of the edge as inputs.

Chance constraints are evaluated using the state covariance along the edge.

If there are no chance constraint violations, a new belief is returned, which is the final belief at the end vertex of the edge.

Otherwise, the procedure returns no belief.

The cost of is the sum of and the cost of edge by applying the controller (8) associated with .

Append Belief: The function AppendBelief decides if the new belief should be added to vertex or not.

If is not dominated by any existing belief nodes in , is added to .

Note that adding means extending the current belief tree such that becomes a leaf node of the current belief tree.

Next, we also check if any existing belief node is dominated by .

If an existing belief is dominated, its descendant and the node itself are pruned.

New Batch: The NewBatch condition calls the RRG-D algorithm to add a new batch of samples.

The NewBatch condition is satisfied, for example, if the inner while loop Line 7-17 is executed with a maximum number of times or the queue is empty (or close to empty).

Terminate: The Terminate condition allows the algorithm to terminate without conducting an exhaustive graph search.

This condition may be satisfied when the current solution is close to the optimal one or the maximum planning time is reached.

The initial condition of the motion planning problem is given by the initial state , state covariance , and estimation error covariance . Lines 1-3 of Algorithm 1 initialize the belief tree. In Line 4, the RRG-D is called to add samples and maintain a graph of nominal trajectories. After Line 4, only contains one belief node which is the initial belief node.

The BBT algorithm explicitly uses two lists, and , to store belief nodes that will be propagated later. In Lines 7-12, all belief nodes in are propagated once, and will be empty. All the newly added belief nodes are added to , which will be propagated in the next iteration. refers to the vertex associated with . In Lines 9-12, the belief is propagated outwards to all the neighbor vertices of to grow the belief tree. The new belief is added to the if the connection is successful. The batch sampling in Lines 15-16, along with and allow adding another batch of samples without an exhaustive graph search, which results in delayed graph exploitation and promoting exploration.

V-A Convergence Analysis

In this section, we briefly discuss the asymptotic optimality of the BBT algorithm, which states that the solution returned by BBT converges to the optimal solution as the number of the samples goes to infinite.

Note that the RRBT algorithm is shown to be asymptotically optimal [10]. Here, we argue the asymptotic optimality of the BBT by drawing to its connection with the RRBT algorithm.

Proposition 1

Given the same sequence of samples of the RRBT algorithm with any fixed length, the RRG graphs constructed by BBT and RRBT are the same. Provided that the terminate condition in Line 13 of Algorithm 1 is not satisfied, the BBT algorithm finds all the non-dominated belief nodes over the RRG graph and the belief trees built by BBT and RRBT are the same.

Proof:

The proof of Proposition 1 is straightforward. After running the BBT algorithm, both and will be empty. The AppendBelief function ensures that every non-dominated belief is added to the belief tree, and only the dominated beliefs are pruned. Thus, the RRG graph is exhaustively searched and all the non-dominated belief nodes are in the belief tree. Therefore, the asymptotic optimality of the RRBT algorithm implies the asymptotic optimality of the BBT algorithm. ∎

VI Experimental Results

In this section, we test the BBT algorithm for different motion planning problems and compared the results with the RRBT algorithm [10].

VI-A Double Integrator

The first planning environment is shown in Figure 2. The gray areas are obstacle and the blue region is the information-rich region, that is, the measurement noise is small when the robot is in this region. We use the 2D double integrator dynamics with motion and sensing uncertainties as an example. The system model is linear and is given by

| (17) |

where the system state includes position and velocity, the control input is the acceleration. The system matrices are given by

| (18) |

, and when the robot is in a information-rich region, otherwise .

To compute the nominal trajectory, we consider a quadratic cost of the control input where the cost matrix is . We use the analytical solution for this mean steering problem [31]. An LQG controller is used to compute the feedback gain in the Connect function. The collision probability in the chance constraint is approximated using Monte-Carlo simulations. We sample from the state distribution and count the number of samples that collide with the obstacles. The ratio of collided samples to the total samples is the approximate collision probability. All simulations were done on a laptop computer with a 1.6 GHz Intel i5-8255u processor and 8 GB RAM using MATLAB.

We compared the performance of RRBT and BBT to find the first solution. The belief tree from RRBT is shown in Figure 2(a), and the belief tree from BBT is shown in Figure 2(b). Both algorithms find the same solution, which is given in Figure 3. The robot first goes down to the information-rich region to reduce its uncertainty, then revisits the starting position and go up towards the goal. Directly moving toward to goal will violate the chance constraint. Other methods [12, 13] that do not utilize partial-ordering of the belief nodes cannot find this solution [10].

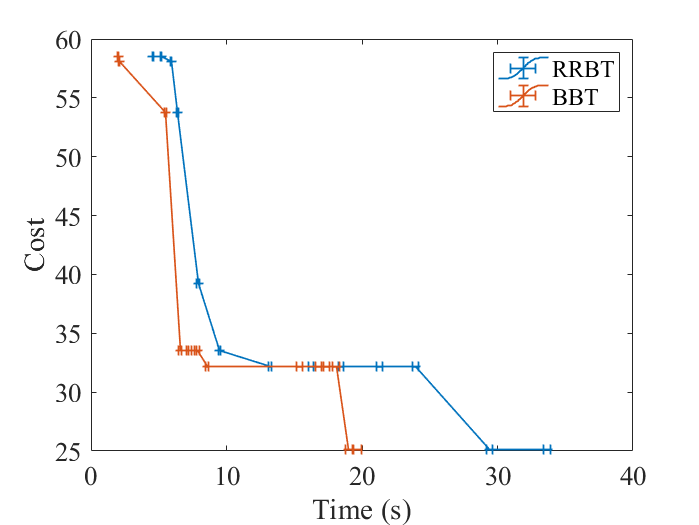

Fewer belief nodes are searched and added to the tree in Figure 2(b) compared with Figure 2(a), even though they return the same solution. This is due to the refined exploration and exploitation interplay of the BBT algorithm. RRBT tries to find all non-dominated belief nodes whenever a vertex is added to the graph. Thus, it will find belief nodes that have low uncertainty but high cost-to-come (shown as small ellipses in Figure 2(a)). However, if such a node is not part of the solution path, this computation is not necessary. BBT delays such exploitation and prioritizes exploration. Note that BBT will eventually find all the non-dominated belief nodes and return the same belief tree as RRBT when the belief queues are empty. The comparison of the results is shown in Figure 4. BBT is faster than RRBT to find the solution. Note that belief nodes being pruned are not shown in Figure 2.

The second planning environment is shown in Figure 5. The problem setting is similar to the first environment except that more obstacles and information-rich regions are added. The belief tree built by the BBT algorithm, when the first solution is found, is shown in Figure 5(a). The first solution and the improved solution are shown in Figure 5(b) and (c), respectively. The green lines are the mean trajectories. The gray lines around the green lines are the Monte-Carlo simulation results. The comparison with the RRBT algorithm is given in Figure 6. The same sequence of samples is used in both algorithms. After finding the initial solution, both algorithms are able to improve their current solution when more samples are added to the graph but BBT is able to find the same paths as the RRBT algorithm at a much shorter time.

VI-B Dubins Vehicle

Finally, we tested our algorithm using the Dubins vehicle model. The deterministic discrete-time model is given by

| (19) |

The nominal trajectory for the Dubins vehicle is chosen as the minimum length path connecting two configurations of the vehicle. The analytical solution for the nominal trajectory is available in [32].

After linearization, the error dynamics around the nominal path is given by (17), where the system matrices are

| (20) |

, when the robot is in a information-rich region, otherwise . An LQG controller is used to compute the feedback gain , the weighting matrices of the LQG cost are and .

The belief tree built by the BBT algorithm is shown in Figure 7(a). The planned trajectory is shown in Figure 7(b). The green line is the mean trajectory. The gray lines around the green lines are the Monte-Carlo simulation results. The comparison with the RRBT algorithm is given in Figure 8. After finding the initial solution, both algorithms are able to improve their current solution when more samples are added to the graph. BBT is able to find the same paths as the RRBT algorithm at a much shorter time.

VII Conclusion

In this paper, we propose the Batch Belief Tree (BBT) algorithm for motion planning under uncertainties. The algorithm considers a robot that is partially observable, has motion uncertainty, and operates in a continuous domain. By searching over the graph, the algorithm finds sophisticated plans that will visit (and revisit) information-rich regions to reduce uncertainty. With intermittent batch sampling and delayed graph exploitation, BBT has good performance in terms of exploring the state space. BBT finds all non-dominated belief nodes within the graph and is asymptotic optimal. We have tested the BBT algorithm in different planning environments. Compare to with previous methods, BBT finds non-trivial solutions that have lower costs at the same amount of time.

Extensions of the BBT algorithm include, for example, adding informed state-space sampling. Also, heuristics could be used for ordering the belief nodes in , which will expand the promising beliefs first, thus helping with the convergence of the algorithm.

References

- [1] S. Thrun, W. Burgard, and D. Fox, Probabilistic Robotics. MIT Press, 2005.

- [2] J. Van Den Berg, S. Patil, and R. Alterovitz, “Motion planning under uncertainty using iterative local optimization in belief space,” The International Journal of Robotics Research, vol. 31, no. 11, pp. 1263–1278, 2012.

- [3] L. P. Kaelbling, M. L. Littman, and A. R. Cassandra, “Planning and acting in partially observable stochastic domains,” Artificial Intelligence, vol. 101, pp. 99–134, 1998.

- [4] J. M. Porta, N. Vlassis, M. T. Spaan, and P. Poupart, “Point-based value iteration for continuous POMDPs,” Journal of Machine Learning Research, pp. 2329–2367, November 2006.

- [5] G. Shani, J. Pineau, and R. Kaplow, “A survey of point-based POMDP solvers,” Autonomous Agents and Multi-Agent Systems, vol. 27, no. 1, pp. 1–51, 2013.

- [6] S. Ross, J. Pineau, S. Paquet, and B. Chaib-Draa, “Online planning algorithms for POMDP,” Journal of Artificial Intelligence Research, vol. 32, pp. 663–704, 2008.

- [7] A. Somani, N. Ye, D. Hsu, and W. S. Lee, “DESPOT: online POMDP planning with regularization,” Advances in neural information processing systems, vol. 26, pp. 1772–1780, 2013.

- [8] S. Karaman and E. Frazzoli, “Sampling-based algorithms for optimal motion planning,” The International Journal of Robotics Research, vol. 30, pp. 846–894, June 2011.

- [9] S. Prentice and N. Roy, “The belief roadmap: Efficient planning in belief space by factoring the covariance,” The International Journal of Robotics Research, vol. 28, pp. 1448–1465, 2009.

- [10] A. Bry and N. Roy, “Rapidly-exploring random belief trees for motion planning under uncertainty,” in IEEE International Conference on Robotics and Automation, pp. 723–730, May 2011.

- [11] B. D. Luders, S. Karaman, and J. P. How, “Robust sampling-based motion planning with asymptotic optimality guarantees,” in AIAA Guidance, Navigation, and Control, p. 5097, 2013.

- [12] W. Sun, S. Patil, and R. Alterovitz, “High-frequency replanning under uncertainty using parallel sampling-based motion planning,” IEEE Transactions on Robotics, vol. 31, no. 1, pp. 104–116, 2015.

- [13] L. Janson, E. Schmerling, and M. Pavone, “Monte Carlo motion planning for robot trajectory optimization under uncertainty,” in Robotics Research, pp. 343–361, Springer, Cham., 2018.

- [14] B. Ichter, A. A. Schmerling, E.and Agha-mohammadi, and M. Pavone, “Real-time stochastic kinodynamic motion planning via multiobjective search on GPUs,” in IEEE International Conference on Robotics and Automation, pp. 5019–5026, May 2017.

- [15] T. Summers, “Distributionally robust sampling-based motion planning under uncertainty,” in IEEE/RSJ International Conference on Intelligent Robots and Systems, pp. 6518–6523, October 2018.

- [16] A. A. Agha-Mohammadi, S. Chakravorty, and N. M. Amato, “FIRM: sampling-based feedback motion-planning under motion uncertainty and imperfect measurements,” The International Journal of Robotics Research, vol. 33, no. 2, pp. 268–304, 2014.

- [17] L. Blackmore, M. Ono, and B. C. Williams, “Chance-constrained optimal path planning with obstacles,” IEEE Transactions on Robotics, vol. 27, no. 6, pp. 1080–1094, 2011.

- [18] M. P. Vitus and C. J. Tomlin, “Closed-loop belief space planning for linear, gaussian systems,” in IEEE International Conference on Robotics and Automation, pp. 2152–2159, May 2011.

- [19] A. Wang, A. Jasour, and B. C. Williams, “Non-Gaussian chance-constrained trajectory planning for autonomous vehicles under agent uncertainty,” IEEE Robotics and Automation Letters, vol. 5, no. 4, pp. 6041–6048, 2020.

- [20] G. S. Aoude, B. D. Luders, J. M. Joseph, N. Roy, and J. P. How, “Probabilistically safe motion planning to avoid dynamic obstacles with uncertain motion patterns,” Autonomous Robots, vol. 35, no. 1, pp. 51–76, 2013.

- [21] J. Van Den Berg, P. Abbeel, and K. Goldberg, “LQG-MP: optimized path planning for robots with motion uncertainty and imperfect state information,” The International Journal of Robotics Research, vol. 30, no. 7, pp. 895–913, 2011.

- [22] R. Platt, R. Tedrake, L. Kaelbling, and T. Lozano-Perez, “Belief space planning assuming maximum likelihood observations,” in Robotics: Science and Systems, 2010.

- [23] S. LaValle and J. J. Kuffner Jr, “Randomized kinodynamic planning,” The International Journal of Robotics Research, vol. 20, no. 5, pp. 378–400, 2001.

- [24] W. Liu and M. H. Ang, “Incremental sampling-based algorithm for risk-aware planning under motion uncertainty,” in IEEE International Conference on Robotics and Automation, pp. 2051–2058, May 2014.

- [25] B. Luders, M. Kothari, and J. How, “Chance constrained RRT for probabilistic robustness to environmental uncertainty,” in AIAA Guidance, Navigation, and Control, p. 8160, 2010.

- [26] T. Shan and B. Englot, “Belief roadmap search: Advances in optimal and efficient planning under uncertainty,” in IEEE/RSJ International Conference on Intelligent Robots and Systems, pp. 5318–5325, September 2017.

- [27] S. Safaoui, B. J. Gravell, V. Renganathan, and T. H. Summers, “Risk-averse RRT* planning with nonlinear steering and tracking controllers for nonlinear robotic systems under uncertainty,” arXiv preprint arXiv:2103.05572, 2021.

- [28] A. Wang, A. Jasour, and B. Williams, “Moment state dynamical systems for nonlinear chance-constrained motion planning,” arXiv preprint arXiv:2003.10379, 2020.

- [29] K. Sun and V. Kumar, “Belief space planning for mobile robots with range sensors using iLQG,” IEEE Robotics and Automation Letters, vol. 6, no. 2, pp. 1902–1909, 2021.

- [30] S. Rahman and S. L. Waslander, “Uncertainty-constrained differential dynamic programming in belief space for vision based robots,” IEEE Robotics and Automation Letters, vol. 6, no. 2, pp. 3112–3119, 2021.

- [31] D. Zheng, J. Ridderhof, P. Tsiotras, and A. A. Agha-mohammadi, “Belief space planning: A covariance steering approach,” in International Conference on Robotics and Automation, (Philadelphia, PA), 2022.

- [32] S. M. LaValle, Planning Algorithms. Cambridge university press, 2006.