Bandwidth-Optimal Random Shuffling for GPUs

Abstract.

Linear-time algorithms that are traditionally used to shuffle data on CPUs, such as the method of Fisher-Yates, are not well suited to implementation on GPUs due to inherent sequential dependencies, and existing parallel shuffling algorithms are unsuitable for GPU architectures because they incur a large number of read/write operations to high latency global memory. To address this, we provide a method of generating pseudo-random permutations in parallel by fusing suitable pseudo-random bijective functions with stream compaction operations. Our algorithm, termed ‘bijective shuffle’ trades increased per-thread arithmetic operations for reduced global memory transactions. It is work-efficient, deterministic, and only requires a single global memory read and write per shuffle input, thus maximising use of global memory bandwidth. To empirically demonstrate the correctness of the algorithm, we develop a statistical test for the quality of pseudo-random permutations based on kernel space embeddings. Experimental results show that the bijective shuffle algorithm outperforms competing algorithms on GPUs, showing improvements of between one and two orders of magnitude and approaching peak device bandwidth.

1. Introduction

Shuffling is a fundamental computer science problem, the objective of which is to rearrange a set of input elements into some pseudo-random order. The classical method of Fisher-Yates (Fisher and Yates, 1943) (popularised by Knuth in (Knuth, 1997)) randomly removes elements from an input buffer, one at a time, appending them to the output buffer. This algorithm runs optimally in time, outputs uniformly distributed permutations, has a simple in-place variant, and is straightforward to implement. As such, sequential shuffling has long been considered a solved problem. However, modern computing hardware such as GPUs offer massively parallel computation that cannot be effectively exploited by the standard sequential shuffling approach due to dependencies inherent in Fisher-Yates type algorithms. The work presented in this paper was motivated by a gap in GPU-oriented parallel primitive libraries, such as Thrust (Bell and Hoberock, 2012), Cub (Merrill, 2015), and Boost.Compute (Szuppe, 2016), which aim to implement the C++ standard library in equivalent form for GPUs, that prior to the method presented in this paper all lacked shuffling algorithms. The primary contributions of this paper are:

-

•

‘Bijective shuffle’: A new shuffling algorithm, carefully optimised for GPUs.

-

•

A novel statistical test for shuffling algorithms, based on kernel space embeddings.

Some applications motivating this work are: permutation Monte Carlo tests (Good, 2006), bootstrap sampling (Mitchell and Frank, 2017), approximation of Shapley values for feature attribution (Mitchell et al., 2021), differentially private variational inference (Prediger et al., 2021), and shuffle operators in GPU accelerated dataframe libraries (Team, 2018). More generally, whenever shuffling forms part of a high-performance GPU-based algorithm, performance is lost when data is copied back to the host system, shuffled using the CPU, and copied back to the GPU. Host-to-device copies are limited by PCIE bandwidth, which is typically an order of magnitude or more smaller than available device bandwidth (Farber, 2011).

Informally, an ideal parallel algorithm would be capable of assigning elements of the input sequence randomly to unique output locations without contention, communicate minimally between processors, evenly distribute work between processors, use minimal working space, and have deterministic run-time. To achieve this goal, we utilise pseudo-random bijective functions, defining mappings between permutations. These bijective functions allow independent processors to write permuted elements to an output buffer, in parallel, without collision. Critically, we allow bijective functions defining permutations of larger sets to be applied to smaller shuffling inputs via a parallel compaction operation that preserves the pseudo-random property. The result is an work algorithm for generating uniformly random permutations. We perform thorough experiments to validate the empirical performance and correctness of our algorithm. To this end, we also develop a novel test on distributions of permutations via unique kernel space embeddings.

We begin in Section 2 by introducing notation, summarising existing parallel shuffling algorithms, and describing GPUs. Section 3 introduces the idea of bijective functions, explaining their connection to shuffling and proposing two candidate bijective functions. Section 4 explains how compaction operations can be combined with bijective functions to generate bijections for arbitrary length sequences, forming the ‘bijective shuffle’ algorithm. Section 5 describes a statistical test comparing the kernel space embedding of a shuffling algorithm’s output with its expected value. This test is used to evaluate the quality of the proposed shuffling algorithms and to select parameters. Finally, in Section 6, we evaluate the runtime and throughput of the proposed GPU shuffling algorithm.

2. Background

Before discussing existing work, we briefly describe some notation. The order of elements in the shuffled version of an input sequence can be defined as a permutation. We refer to the symmetric group of permutations of elements as . The permutation assigns rank to element by . For example, given the permutation written in one-line notation:

and the list of items

the items are reordered such that occupies the coordinate

Note that we are using non-traditional zero-based indexing of permutations for notational convenience: this simplifies description of the functions used for our shuffling approach later in this paper.

The product of permutations refers to the composition operator, where the element is assigned rank .

Shuffling is a reordering of the elements of an array of length by a random permutation on the finite symmetric group, , such that each possible reordering is equally likely, i.e., .

2.1. Existing Work on Parallel Shuffling

We analyse the computational complexity of shuffling algorithms in terms of the work-time framework (JéJé, 1992), where an algorithm is described in terms of a number of rounds, where processors perform operations in parallel. GPUs are assumed to be data parallel processors such that there is an independent processor for each data element. Algorithms are evaluated based on the notion of work complexity, or the total number of operations performed on all processors. As the Fisher-Yates shuffle runs in optimal work on a single processor, a parallel algorithm is said to be work efficient if it achieves work complexity.

A parallel divide-and-conquer shuffling algorithm is independently proposed by Rao (Rao, 1961) and Sandelius (Sandelius, 1962) (we refer to this algorithm as RS). The input is recursively divided into subarrays by selecting a random number until each subarray contains one element. The final permutation is obtained via an in-order traversal of all subarrays. Bacher et al. (Bacher et al., 2015) provide a variant of this algorithm named MergeShuffle, taking a bottom-up approach where the input is partitioned into subarrays that are randomly merged with their neighbours until the entire array is shuffled. The parallel work complexity of these recursive divide-and-conquer approaches is . The RS and MergeShuffle algorithms have strong parallels to integer sorting algorithms. In particular, RS is equivalent to a most significant digit (MSD) radix sort (Bacher et al., 2017) on a sequence, where each element in the sequence is an infinite length random bit stream. Likewise, MergeShuffle has strong parallels to merge sort, differing in the definition of the merge operator for each pass. We provide experiments in Section 6 for divide-and-conquer style shuffling algorithms using GPU sorting.

Anderson (Anderson, 1990) proves that each of the swaps in the Fisher-Yates algorithm can be reordered without biasing the generated permutation, assuming parallel processors implement an atomic swap operation and that atomic swaps are serialised fairly. Note that current GPU architectures do not serialise atomic swaps fairly, as no assumptions can be made as to the ordering of operations (NVIDIA Corporation, 2020), so such an approach would be unsuitable for GPUs. Shun et al. (Shun et al., 2015) take a similar approach, proving that the Fisher-Yates algorithm has an execution dependence graph with a depth of with high probability, allowing parallel execution of the swaps, while generating the same output as the sequential approach.

The literature also has divide-and-conquer shuffling algorithms that focus on distributed environments. Sanders (Sanders, 1998) describes an algorithm for external memory and distributed environments where each item is allocated to a separate processor and permuted locally. A prefix sum is then used to place each element in the final output buffer. Langr et al. (Langr et al., 2014) provide a concrete extension of Sander’s algorithm for use with the MPI library. Gustedt (Gustedt, 2003) also expands on this approach, describing a method of constructing a random communication matrix that ensures work is distributed fairly between all processors.

Reif (Reif, 1985) describes an algorithm that assigns each element a random integer key between 1 and , where represents the number of processors. Elements are then assigned to processors by a sorting operation, where they are shuffled by the sequential Fisher-Yates method. The work complexity of the integer sort can be achieved in time using processors using the sort algorithm described by Reif (Reif, 1985). A limitation of this approach is non-deterministic load balancing. One processor may receive significantly more work than others, limiting the algorithm to the speed of the slowest processor.

Alonso and Schott (Alonso and Schott, 1996) define a custom representation with an associated total ordering that can be ‘sorted’ using a variant of merge-sort to produce a random permutation, falling into the class of divide-and-conquer algorithms. Their method forms a bijection between a so-called ‘lower-exceeding sequence’ of length n and a permutation of n elements. It can be shown that there are exactly n! such sequences of length n. Given a uniformly selected random lower-exceeding sequence, the algorithm outputs the corresponding random permutation.

Czumaj et al. (Czumaj et al., 1998) make use of network simulation to define a shuffling algorithm. Their work describes two methods for randomly generating a network mapping input elements to a random output location. The first constructs a network representing a random Fisher-Yates shuffle. The network is processed to produce distinct keys. After sorting these keys, elements are efficiently mapped to output locations. All pre/post-processing steps require time using processors in linear space. The second method proposed by Czumaj et al. (Czumaj et al., 1998), composes n-way ‘splitters’ to construct a network randomly permuting the input array. Each splitter randomly divides the input into two equal sized groups. For each splitter, the first group is ordered before the second in the final output. Each group is recursively split until the size of each group is one. At this stage, the network defines a random permutation, and is traversed to find the output position of each element. The algorithm runs in time using processors, for an arbitrary positive constant . Granboulan and Pornin (Granboulan and Pornin, 2007) use this method to generate a bijective function over an arbitrary input domain using space and time, but find the cost of the hyper-geometric random number generator used to construct splitters prohibitive.

‘Dart-throwing’ algorithms place input items randomly in an array of size until each item is placed in a unique location. A compaction operation is applied to place items in a dense output array. When a placement collides with an occupied space, the process is retried until the element is placed successfully. Reif and Miller (Miller and Reif, 1985) and later Reif and Rajasekaran (Rajasekaran and Reif, 1989) describe a simple method in which a parallel prefix sum is used to perform the compaction. Matias and Vishkin (Matias and Vishkin, 1991) describe a method using the canonical cycle representation to perform the compaction step, and Hagerup (Hagerup, 1991) provides an alternative method for computing the min prefixes used when generating the cycle representation. Dart-throwing approaches achieve parallel work complexity of in expectation, however, non-determinism makes them unsuitable for many practical applications. In our work, we similarly utilise prefix sum to perform compaction, but show how to obtain permutations in a completely deterministic way, using pseudo-random bijective functions in place of dart-throwing.

Cong and Bader (Cong and Bader, 2005) compare four divide-and-conquer, integer sorting, and dart-throwing algorithms and evaluate their performance relative to a sequential Fisher-Yates shuffle on up to 12 processors. They find that the sorting based approach is substantially worse than the other approaches, with Anderson’s (Anderson, 1990) approach tending to perform the best.

Closest to our work is the linear congruential generator (LCG) based approach of Andrés and Pérez (Andrés and Pérez, 2011), where permutations are generated by an LCG of full period. This approach is limited by the need to find viable LCG parameterisations for arbitrary length inputs, where the subset of available parameters is dramatically smaller than the space of permutations. In this paper we describe how any bijective function can be applied to the process of generating random permutations; in particular, we look at a more flexible use of LCGs and the use of n-bit block ciphers with much stronger pseudo-random properties.

The above algorithms all suffer from one or more drawbacks with respect to an ideal GPU implementation. The divide-and-conquer approaches have sub-optimal work complexity. The Rao-Sandelius (Rao, 1961) additionally suffers from load balancing issues, where the partitions generated by the divide-and-conquer process can be unevenly sized. The parallel Fisher-Yates algorithm of (Anderson, 1990) is non-deterministic and relies on fairly serialised atomics for correctness (GPU atomics are not fairly serialised). The network simulation algorithm of (Czumaj et al., 1998) has a complicated implementation difficult to adapt to GPUs. Dart-throwing algorithms are non-deterministic both in terms of shuffle output and runtime. The simple LCG approach of (Andrés and Pérez, 2011) offers no solution for arbitrary length sequences and suffers from poor quality pseudorandom outputs. In subsequent sections, we develop a new approach addressing these issues.

2.2. Graphics Processing Units

GPUs are massively parallel processors optimised for throughput, in contrast to conventional CPUs, which optimise for latency. GPUs in use today consist of processing units with single-instruction, multiple-thread (SIMT) lanes that efficiently execute instructions for groups of threads operating in lockstep. In the CUDA programming model, execution units called “streaming multiprocessors” (SMs), have 32 SIMT lanes. The corresponding group of 32 threads is called a “warp”. Warps are generally executed on SMs without order guarantees, enabling latency of global memory loads to be hidden by switching between warps (NVIDIA Corporation, 2020).

Large speed-ups in the domain of GPU computing commonly occur when problems are expressed as a balanced set of vector operations with minimal control flow, and coalesced memory access patterns. Notable examples are matrix multiplication (Fatahalian et al., 2004; Hall et al., 2003; Changhao Jiang and Snir, 2005), image processing (Bo Fang et al., 2005; Moreland and Angel, 2003), deep neural networks (Perry et al., 2014; Coates et al., 2013; Chetlur et al., 2014), and sorting (Green et al., 2012; Satish et al., 2010).

Implementation of shuffling algorithms poses a particular challenge for GPUs. The SIMD architecture favours a data-parallel approach, where work is evenly distributed among threads belonging to an execution unit — if any one thread is slow to complete, the entire execution unit is stalled. This rules out approaches from Section 2.1 with uneven work distribution per processor. Dart-throwing algorithms may be implemented for GPUs, using atomic compare and swap operators available in CUDA, although collisions for atomic compare and swap operations imply global synchronisation and are extremely costly. Of the existing approaches, sort-based approaches appear the most promising, given the availability of state-of-the-art GPU sorting. Highly optimised algorithms exist for both merge-sort and LSB radix sort via the Thrust (Bell and Hoberock, 2012) library. As mentioned in Section 2.1, the problem of shuffling can be expressed as a sort over a list whose elements are infinite length random bit strings. In fact, the keys do not need to be infinite, only long enough to break any ties in comparisons between elements. One baseline GPU algorithm we consider is to generate random integer sort keys of machine word length (assumed to be 64 bits) and perform a key-value sort. GPU sorting algorithms such as merge-sort and LSB radix sort perform several passes ( for merge-sort and for radix sort, with proportional to the sort key size in bits) scattering elements in memory. These scatter passes are particularly expensive for random keys, as memory writes cannot be coalesced together efficiently, and represent the largest performance bottleneck for this shuffling algorithm.

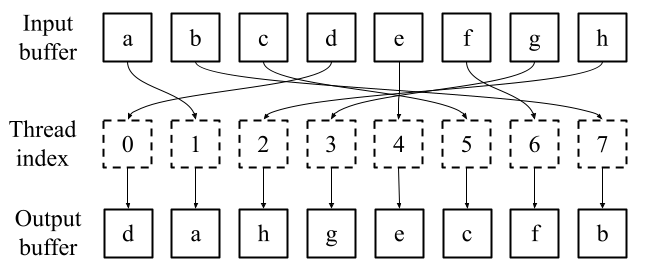

While the above algorithms are candidates for implementation on GPUs, we may improve shuffle throughput and device utilisation significantly by devising a new algorithm tailored to the architecture. As the shuffle operation must at minimum reorder elements using a gather or scatter operation, we posit that the maximum bandwidth of any random shuffle algorithm implemented for GPUs is that of a random gather or scatter. Figure 1 demonstrates a parallel random gather operation, where threads read from noncontiguous memory locations in the input buffer and write to contiguous memory locations in the output buffer. A scatter operation is the inverse, where threads read from contiguous memory locations and write to noncontiguous memory locations. Scatter/gather operations for GPUs are discussed in detail in (He et al., 2007). As our experiments show that gather operations have a higher bandwidth than scatter operations, we focus on the former.

Having established an upper bound on GPU bandwidth, we develop an optimised shuffling algorithm operating in a single gather pass, to approach the theoretical peak performance of the device.

3. Shuffling With Bijective Functions

Our proposed approach is based on applying bijective functions. The symmetric group , defined over any set of distinct items, contains all bijections of the set onto itself, or all distinct permutations of elements. A bijection is a one-to-one map between the elements of two sets, where each element from the first set is uniquely paired with an element from the second set. A bijection between two sets with is characterised by some function . The bijective function admits a corresponding inverse , which is also a bijection. For any two such functions , their composition is also a bijection. As we are dealing with bijections from the symmetric group , we define functions of the form . Without loss of generality, consider the set of nonnegative integers . An example of a bijection is

If each of the functions can be executed on a processor , independently of any other processor, without communication and in reasonable time, then this defines a simple parallel algorithm for reordering elements. If furthermore exhibit suitable pseudo-random properties such that (or close enough for practical application), then we have an effective parallel shuffling algorithm. We now discuss potential candidate bijective functions.

3.1. Linear Congruential

A first candidate is based on the common linear congruential random number generator (LCG). Given constants and , the LCG outputs

| (1) |

If and are co-prime, it is well-known that the input maps to a unique output location in the integer ring . Therefore, Equation 1 defines a bijective function and may be used to create permutations over inputs of length . For now, assume is fixed at the length of the input sequence. To find a bijection for a fixed , the task reduces to finding some co-prime to ( is unrestricted). Finding is trivial if has certain properties, for example, any where is prime, or odd where is a power of two. We will later show how to modify the length of the input sequence such that co-prime is always easily available.

The method described above is simple to implement and computationally inexpensive; however, linear congruential generators are known to have weaknesses as random number generators (Press et al., 2007). For our use case in particular, assuming some fixed relative to input length and varying and from the above, we can achieve at most unique permutations — significantly less than the possible permutations. Hence, permutations generated by this method may be appropriate for basic applications, but a more robust permutation generator is desirable.

3.2. Feistel Network

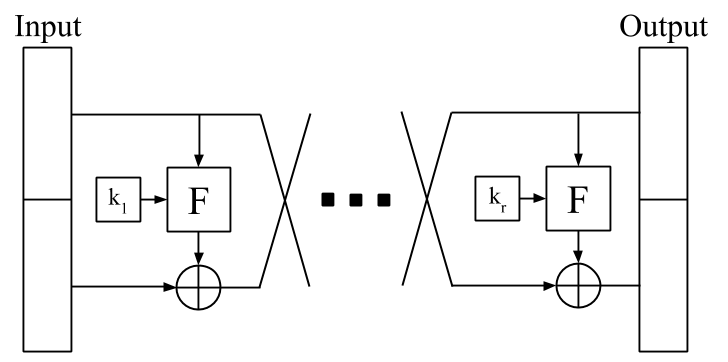

A second candidate bijective function is a block cipher construction known as a Feistel network (Feistel, 1973). A cryptographic block cipher, accepting an encryption key and a -bit plain-text block, provides a bijective mapping to a -bit cipher-text block. A perfect block cipher outputs cipher-text computationally indistinguishable from a random bit string, when the key is unknown. Thus, for a random choice of key, the output should be computationally indistinguishable from a random permutation. The Feistel network construction is a core component of many modern encryption algorithms, such as the Data Encryption Standard (DES) and so represents a significant improvement over the LCG in terms of the quality of its pseudo-random outputs (Biryukov and De Cannière, 2005).

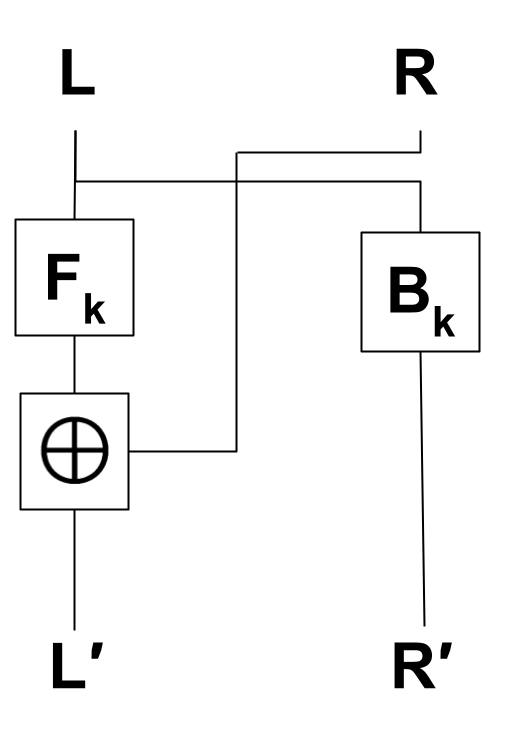

The construction for a -bit Feistel network consists of multiple applications of a round function as shown in Figure 2. In round , the input is split into a -bit binary string , representing the left half of the input block and a -bit binary string representing the right half of the input block. A round function is applied to and a round key . does not need to be bijective. The first -bit output from is combined with using the exclusive-or operation to give the right half for round . The right half from round becomes the left half for round . Thus, the th round of the Feistel Network is defined as

| (2) |

with the bitwise exclusive-or operation. Consequently, a block cipher constructed using a Feistel Network construction with rounds can be defined as

| (3) |

Luby and Rackoff (Luby and Rackoff, 1988) proved that a three-round Feistel network is a pseudo-random permutation if the round function is a pseudo-random function. Therefore, a Feistel Network with can be used to generate a pseudo-random permutation for any set of size .

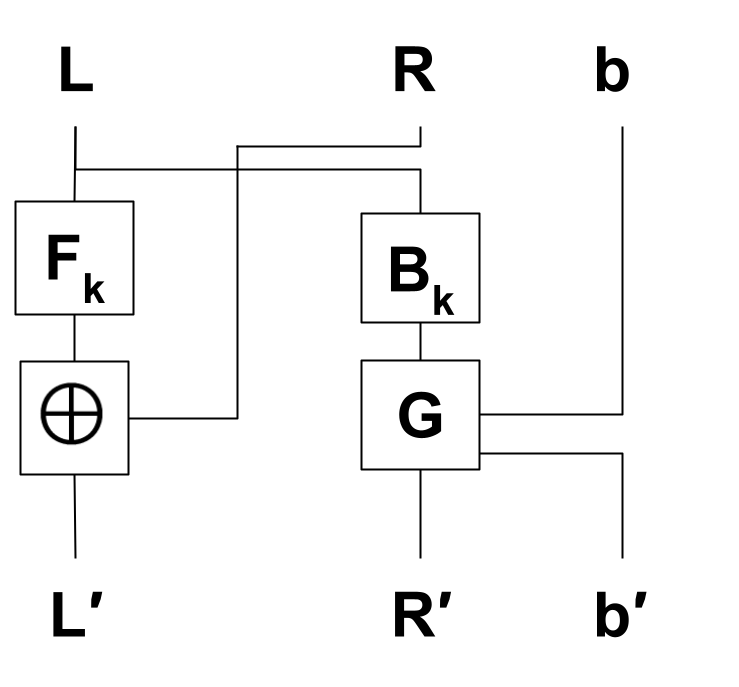

Feistel networks have been shown to be effective for parallel random number generation. Salmon et al. (Salmon et al., 2011) describe two hardware-efficient pseudo-random number generators (PRNGs), named Philox and Threefry, based on simplifications of cryptographic block ciphers. These PRNGs are sometimes called counter-based, as generation of the ’th random number is stateless, requiring only the index and a random seed. Advancing the series does not require , and so may be performed trivially by parallel threads. This is in contrast to the canonical Mersenne twister (Matsumoto and Nishimura, 1998), which requires a state of 2.5kB and cannot advance the series arbitrarily from to in constant time with respect to . The Philox and Threefry generators are shown to produce at least independent streams of random numbers, with a period of or more, and pass BigCrush (L’Ecuyer and Simard, 2007) statistical tests. Figure 4 shows one step of the Philox PRNG, which differs from the standard Feistel network in the addition of the function , which is strictly a bijection. Philox makes use of fast integer multiplication instructions, where multiplication by a carefully chosen, odd constant, yields upper bits forming and lower bits modulo form the bijection . is guaranteed to be a bijection because the odd multiplicand is always coprime to the integer ring modulo .

Although these PRNGs happen to have the bijective property, the connection between bijections and the symmetric group is not exploited in (Salmon et al., 2011). In this paper, we adapt the Philox cipher to generate bijections with lengths of arbitrary powers of two instead of , while preserving the invertibility of the process, terming our modification VariablePhilox. For example, shuffling a sequence of size results in halves with sizes and the bijective property is lost for the standard Philox cipher. Figure 4 shows a modified construction, where is the odd bit and . is a pseudo-random key-dependent function, and is a key-dependent bijective function. is a function mixing bit into , where for , is inserted in the least significant position of , yielding . Then the most significant bit of is removed and returned as . is clearly invertible such that . Defining as bitwise exclusive-or, VariablePhilox encodes inputs as

This process is inverted to retrieve by computing (in order):

Invertibility guarantees the bijective property, so VariablePhilox is a bijection for arbitrary power-of-two length sequences. C++ reference code is given in Listing 5.

We have now described several methods of generating bijections with pseudo-random properties, but these bijections do not apply to arbitrary input lengths. In particular, the Feistel bijective functions are available for sequences of power of two length. We now show how to efficiently extend these to arbitrarily sequences.

4. Arbitrary Length Bijections

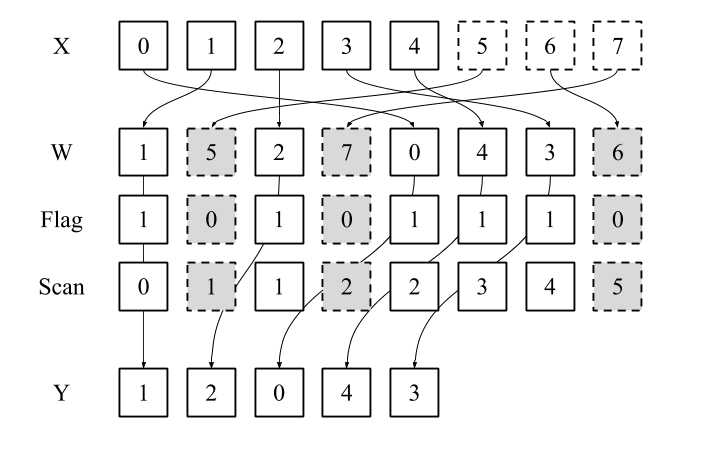

The key observation for generating bijections of arbitrary length is this: given an input vector of length , there may not be a readily available bijective function of the same length, however, we can find the nearest applicable such that and apply the bijective function to the padded vector . The output is a vector of length , containing the randomly permuted input indices. By ‘deleting’ all from , we obtain a permutation of length . Using this process, we form an algorithm generating longer permutations of length , then compacting them to form permutations of length . This compaction of a longer random permutation is still an unbiased random permutation as per the following proposition.

Proposition 0.

Define the function removing elements of where , returning the permutation of length . If , then .

Proof.

The function is a surjective map from . For each , there exists a non-overlapping subset such that . Clearly and so, if , then . ∎

To achieve the compaction in parallel, we use the work-efficient exclusive scan of Blelloch (Blelloch, 1990). Flagging each element of the extended permutation with if or if , the output of the exclusive scan over these flags gives the scatter indices into the shuffled output vector . This process is shown in Figure 6 for , and pseudocode is given in Algorithm 1. Given independent threads, the exclusive scan operation has work complexity . Our bijective function, executed in parallel for each element, also has work complexity , and so the final algorithm has optimal work complexity . Note that, using the bijective functions described in Section 3, the padded array of length will have at most elements (the nearest power of two length), so the work complexity holds regardless of input length.

Algorithm 1 can be implemented easily in several steps using a GPU parallel primitives library, for example, evaluating the bijective function, gathering elements to an output buffer, then applying a stream compaction algorithm. Achieving “bandwidth optimality”, i.e., a single global memory read and write per element, is more challenging, requiring the fusion of all operations into a single GPU kernel. The standard GPU parallel prefix sum of (Harris et al., 2007) uses two passes through global memory, the first to perform block-level scans and the second to propagate partial block-level results globally. This can be improved upon by using the specialised single-pass scan implementation of (Merrill and Garland, 2016), using a technique termed ‘decoupled look-back’ to achieve non-blocking communication between thread blocks, achieving optimal global memory reads/writes. In Section 6, we evaluate three versions of our GPU shuffling algorithm, incorporating varying levels of kernel fusion and demonstrating the effectiveness of optimising global memory read/writes for the shuffling problem.

5. Statistical Tests

We now consider statistical tests for pseudo-random permutations to assess the suitability of the methods discussed in Section 3 and 4 for practical applications, and to select the number of rounds for the VariablePhilox cipher. Testing the distribution of permutations is challenging as the sampling space grows super-exponentially with the length of the permutation. In particular, , so any computer algorithm for shuffling that uses a 64 bit integer seed cannot generate all permutations for .

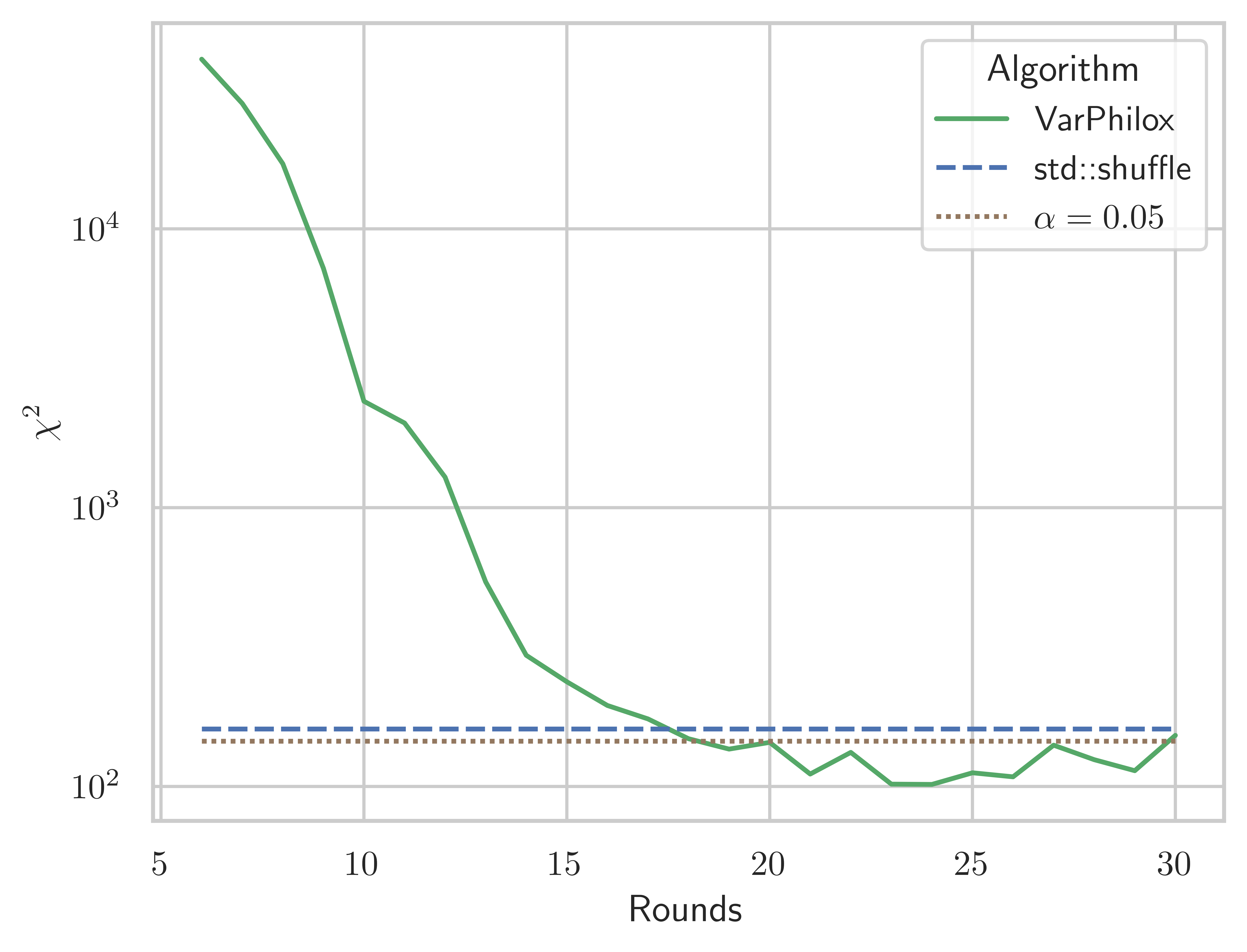

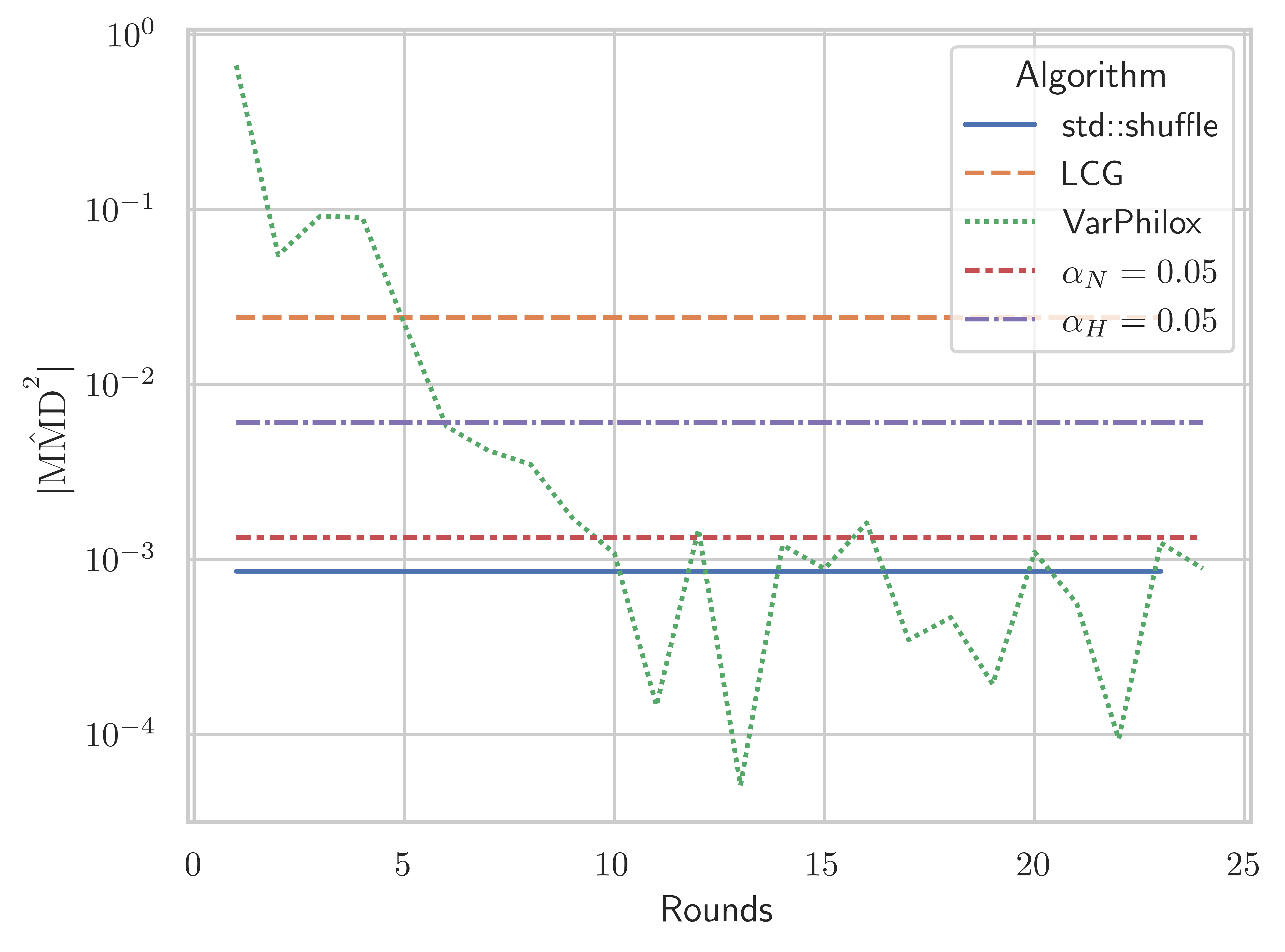

Our first test considers the distribution of random permutations at , where , using a standard test with 119 degrees of freedom, under the null hypothesis that permutations are uniformly distributed, i.e., each permutation occurs with probability . Figure 10 shows the change in the statistic as the number of rounds in the VariablePhilox cipher is increased. Each data point is computed from 100,000 random permutations. We also plot the acceptance thresholds for , corresponding to the probability of observing a value of the statistic above the line indicated on the figure, if the null hypothesis is true. Considering the results shown in the figure, it is unlikely that samples from VariablePhilox with less than 20 rounds are drawn from a uniform distribution. This is somewhat surprising given that only 10 rounds are recommended for the original Philox cipher in the PRNG setting (Salmon et al., 2011). The LCG bijective function generates a statistic in the region of 500,000, so it clearly fails the test and is not included in this figure.

The test is useful for small , but is intractable otherwise. We develop another test statistic suited to larger , based on the maximum mean discrepancy (MMD) in a reproducing kernel Hilbert space (RKHS). Two-sample hypothesis tests using MMD are developed in (Gretton et al., 2012). In a similar spirit, we derive a one sample test comparing the uniform distribution of permutations against a finite sample generated by a shuffling algorithm. The between two distributions and in a RKHS , equipped with positive definite kernel , is defined as

| (4) |

If the kernel is a characteristic kernel, the mean embedding of a distribution induced by the kernel is injective (Fukumizu et al., 2004). In other words, the mean embedding of a distribution is unique to that distribution. As a consequence, if and only if . Thus, a strategy for statistical testing is to form the null hypothesis that , compute the sample estimate as the test statistic, and evaluate the probability of obtaining a sample estimate greater than some threshold, assuming , using a concentration inequality. If the observed test statistic is sufficiently unlikely, this provides evidence that .

To implement this idea, a characteristic kernel measuring the similarity of two permutations is needed. The Mallows kernel, for , is defined for permutations as

where

We (somewhat arbitrarily) use the parameter throughout this paper. The Mallows kernel is introduced in (Jiao and Vert, 2015) and shown to be characteristic in (Mania et al., 2018). It may be implemented in time using the procedure presented in (Knight, 1966). In the following, we make use of the fact (Mitchell et al., 2021) that the expected value of the Mallows kernel under a uniform distribution of permutations is

The Mallows kernel is right-invariant in the sense that for any (Diaconis, 1988). Also note that the uniform distribution of permutations is invariant to composition. Using right invariance in conjunction with , and assuming denotes the uniform distribution over permutations, then

with the identity permutation. Using the above, and assuming that is a uniform distribution of permutations, the from (4) is simplified as

Replacing with a sample of random permutations , of size and with , we obtain an unbiased sample estimate via

| (5) |

where if and only if . We derive two tests based on the statistic. The first is a distribution-free bound. Applying Hoeffding’s inequality (Hoeffding, 1963), with the fact that , we have

Let be the level of significance under the Hoeffding bound. With null hypothesis , the acceptance region of the test is

| (6) |

The second test uses the central limit theorem, implying that approaches a normal distribution as . The variance of the Mallows kernel may be computed directly from its expectation as

So we have

The factor of two arises because the sum in (5) is of size . According to a normal distribution with mean 0 and variance as above,

where is the canonical error function. The acceptance region for is

| (7) |

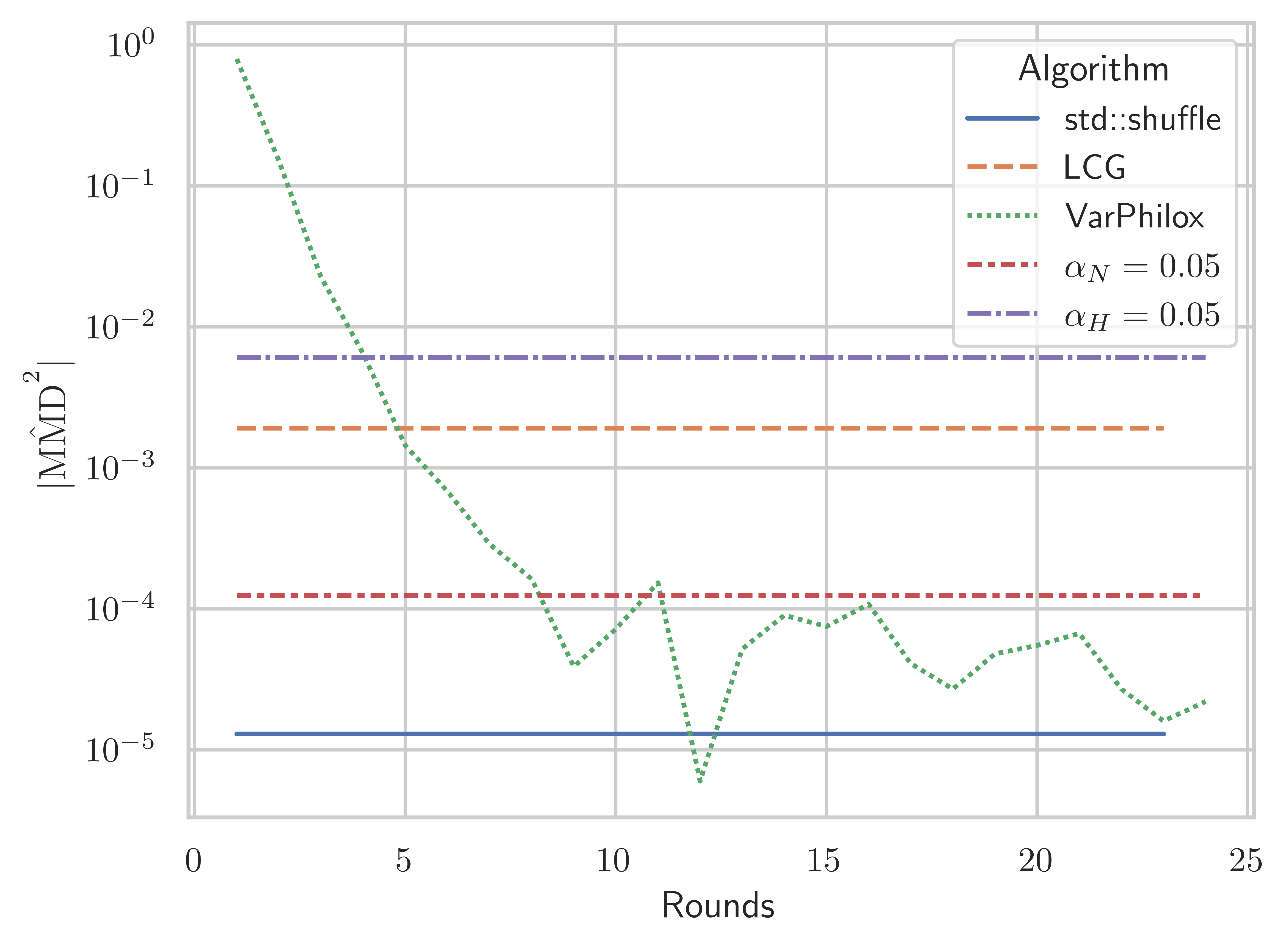

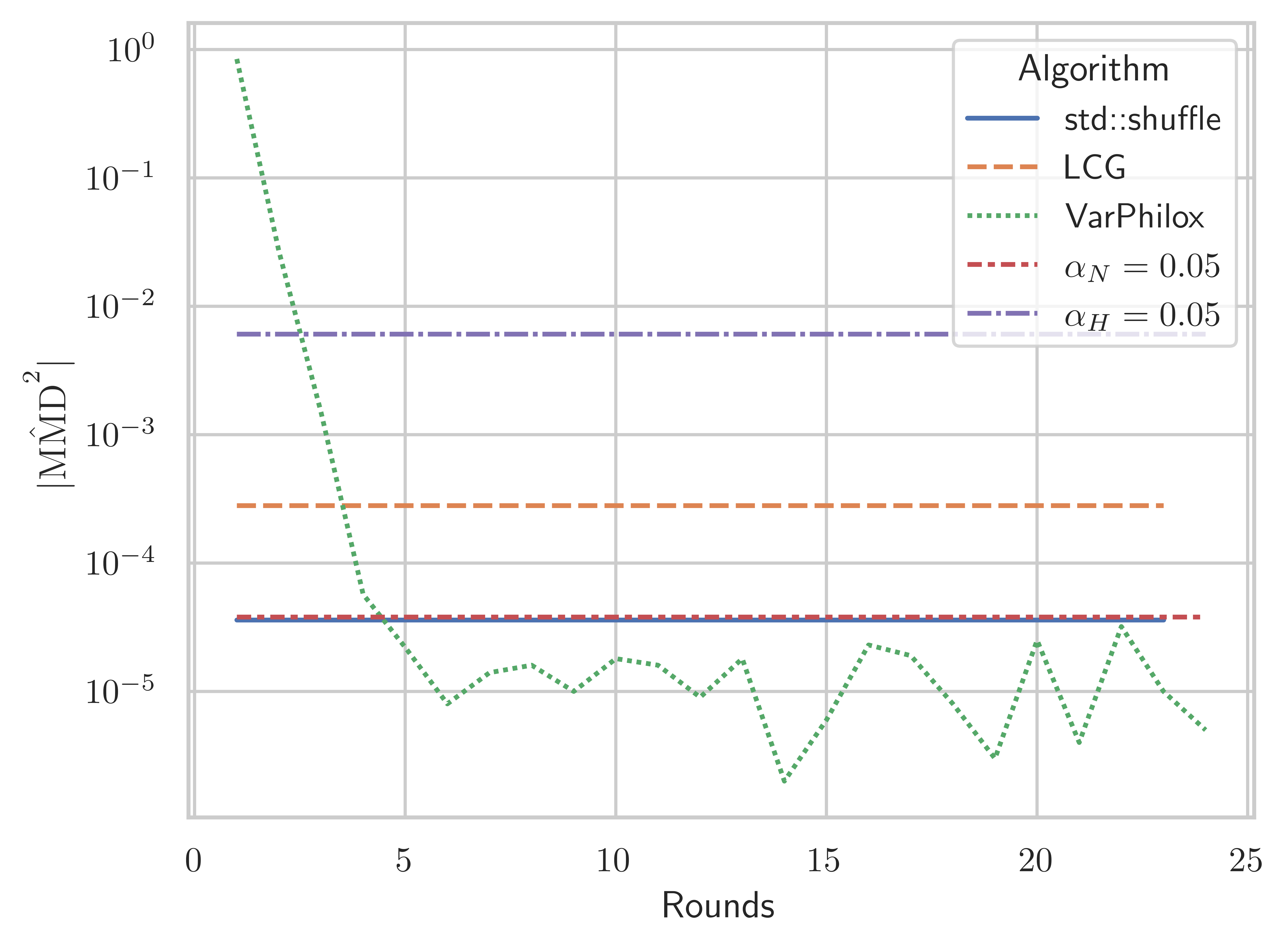

The threshold for should be used for small (i.e. ), as it makes no assumptions on the distribution of . For larger sample sizes, the asymptotic threshold is significantly tighter and should be preferred. Comparing Figures 10 and 10, we see the MMD tests with the asymptotic acceptance threshold roughly coincide with the test at n = 5, where some tests fail for VariablePhilox with fewer than 20 rounds, but VariablePhilox with 24 rounds or more passes all tests. Figures 10, 10, and 10 plot the statistic for VariablePhilox, LCG, and std::shuffle using 100,000, for varying permutation lengths. These experiments lead us to recommend a 24 round VariablePhilox cipher for random permutation generation, and we use this configuration in all subsequent experiments.

6. Evaluation of throughput

We now evaluate the throughput of our bijective shuffling method, where throughput refers to (millions of keys)/time(s). Unless otherwise stated, experiments are performed on an array of 64-bit keys of length , where ranges from 8 to 29. This represents the worst-case scenario, where our bijective shuffle algorithm must redundantly evaluate elements. For all throughput results, we report the average of five trials.

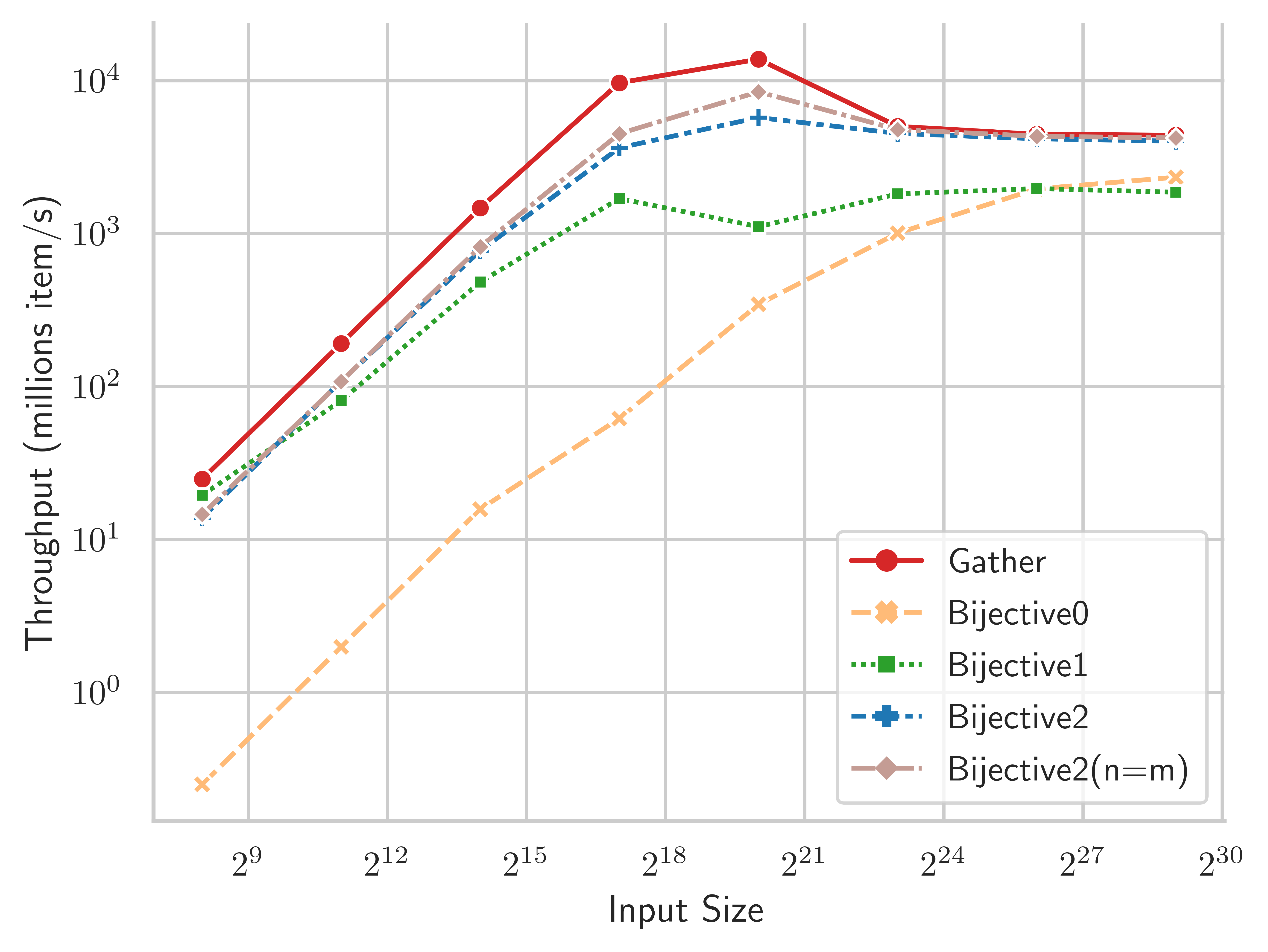

To consider the effect of code optimisations, we evaluate three CUDA implementations of Algorithm 1 with varying levels of GPU kernel fusion:

-

•

Bijective0: The transformation (), stream compaction, and gather are implemented in separate passes.

-

•

Bijective1: The transformation, stream compaction, and gather are fused into a single scan operation. The two-pass scan algorithm of (Harris et al., 2007) is used.

-

•

Bijective2: The transformation, stream compaction, and gather are fused into a single scan operation. The single pass scan algorithm of (Merrill and Garland, 2016) is used, for total global memory reads/writes.

Figure 11 plots the throughput of these variants, on a Tesla V100-32GB GPU, using VariablePhilox as the bijective function. For reference, the line labeled “gather” shows an upper bound on throughput for random gather operations in global memory. The optimised algorithm Bijective2 closely matches the optimal throughput of random gather, and is equivalent in performance for sizes . Bijective2(n=m) indicates the best-case performance of the algorithm, where the sequence is exactly a power of two length. The best-case and worst-case performance do not differ greatly because redundant elements do not incur global memory transactions, and are therefore relatively inexpensive to evaluate. The performance of random gather peaks around , where there is sufficient L2 cache to mitigate the effects of uncoalesced reads/writes. At this size, there is a gap between Bijective2 and random gather due to arithmetic operations required in evaluating multiple rounds of the VariablePhilox function, and because the prefix-sum must be redundantly evaluated up to the nearest power of two (although no global memory transactions occur for these redundant elements). This gap disappears at larger sizes when the runtime of the kernel becomes dominated by memory operations.

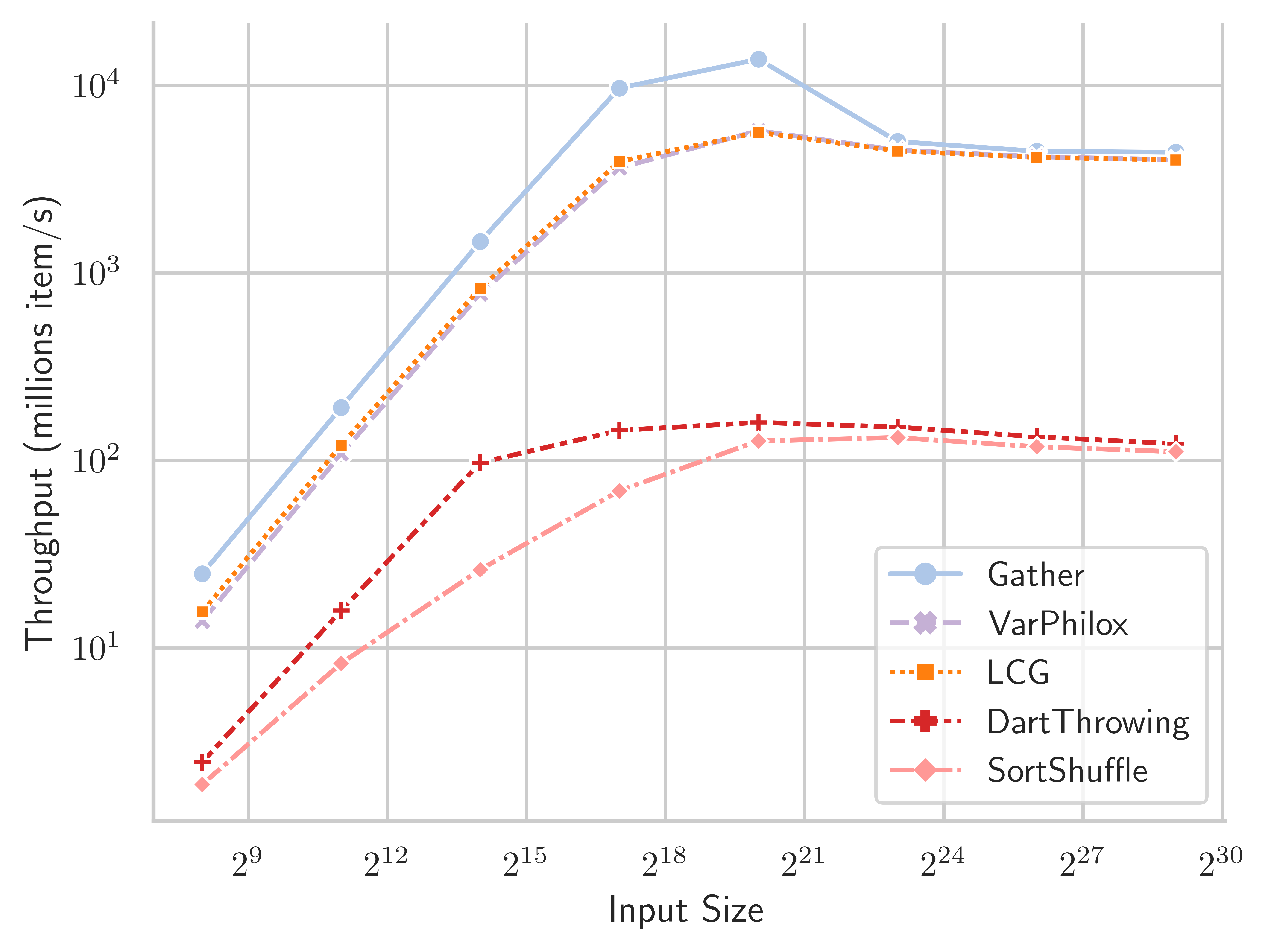

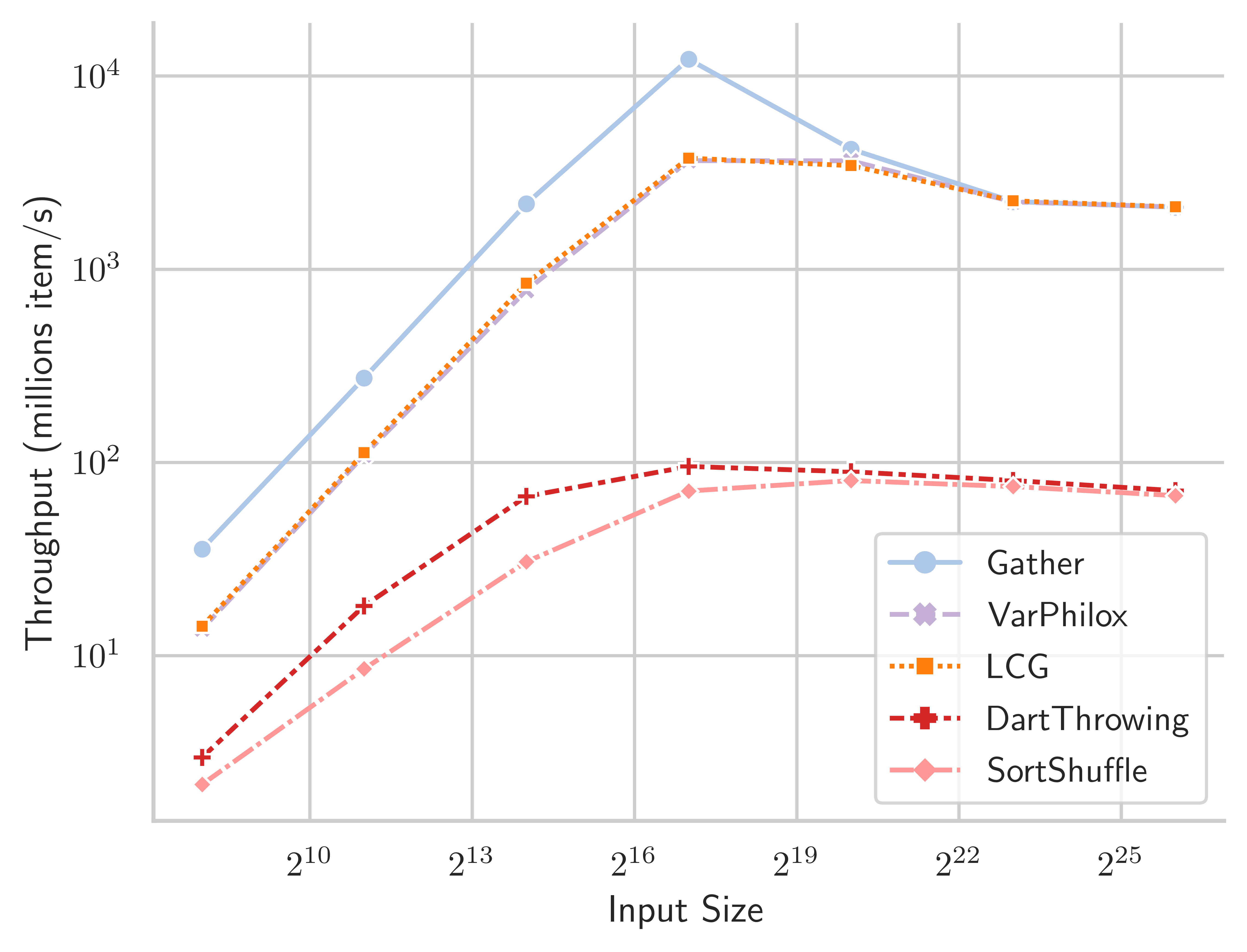

We now proceed to a comparison of different shuffling algorithms. Table 1 and Figure 13 show the throughput in millions of items per second, for the Tesla V100-32GB GPU, and Table 2 and Figure 13 show data for the GeForce RTX 2080 GPU. LCG and VariablePhilox implement optimised bijective shuffling using the methods described in Section 3. The dart-throwing algorithm uses CUDA atomic exchange instructions to attempt to place items randomly in a buffer of size , a stream compaction operation is then applied to the buffer to provide the final shuffled output. SortShuffle applies the state-of-the-art radix sort algorithm of (Merrill, 2015) to randomly generated 64-bit keys. As discussed in Section 2.1, many existing work on shuffling reduce to sorting algorithms on infinite length keys. Thus, SortShuffle is representative of a wide class of divide-and-conquer algorithms.

The bijective shuffle algorithms with the VariablePhilox and LCG functions achieve near-optimal throughput at large sizes, where performance is dominated by global memory operations. Throughput for these methods is more than an order of magnitude higher than the DartThrowing or SortShuffle methods. The lesser throughput of DartThrowing can be explained by the overhead of generalised atomic instructions in the CUDA architecture, as well as contention among threads when multiple threads attempt to write to the same memory location. SortShuffle relies on radix sort, where prefix sum is applied to 4 bits in each pass, requiring 16 passes over the data to fully sort 64-bit keys. In comparison, bijective shuffle is designed to require only a single prefix sum operation. Bijective shuffle is also fully deterministic, unlike DartThrowing, and requires no global memory for working space, whereas DartThrowing and SortShuffle use memory proportional to .

| Gather | VarPhilox | LCG | DartThrowing | SortShuffle | |

| Input size | |||||

| 24.64 | 14.61 | 16.21 | 2.459 | 1.845 | |

| 188.8 | 115.4 | 124.8 | 15.83 | 8.191 | |

| 1471 | 810.6 | 851.4 | 97.3 | 26.17 | |

| 9696 | 3836 | 3968 | 145. | 69.26 | |

| 13830 | 5800 | 5642 | 159.8 | 127.3 | |

| 5036 | 4527 | 4476 | 150.9 | 132.6 | |

| 4465 | 4172 | 4143 | 133.8 | 118.4 | |

| 4409 | 4018 | 4011 | 123.2 | 111.8 |

| Gather | VarPhilox | LCG | DartThrowing | SortShuffle | |

|---|---|---|---|---|---|

| Input size | |||||

| 35.63 | 13.73 | 14.19 | 2.98 | 2.16 | |

| 273.70 | 109.07 | 112.40 | 18.10 | 8.54 | |

| 2183.41 | 776.85 | 846.42 | 66.74 | 30.62 | |

| 12225.35 | 3649.39 | 3759.00 | 95.38 | 71.17 | |

| 4187.73 | 3641.61 | 3437.88 | 89.55 | 80.63 | |

| 2238.91 | 2232.61 | 2260.85 | 80.67 | 75.03 | |

| 2097.25 | 2096.47 | 2105.51 | 71.44 | 67.35 |

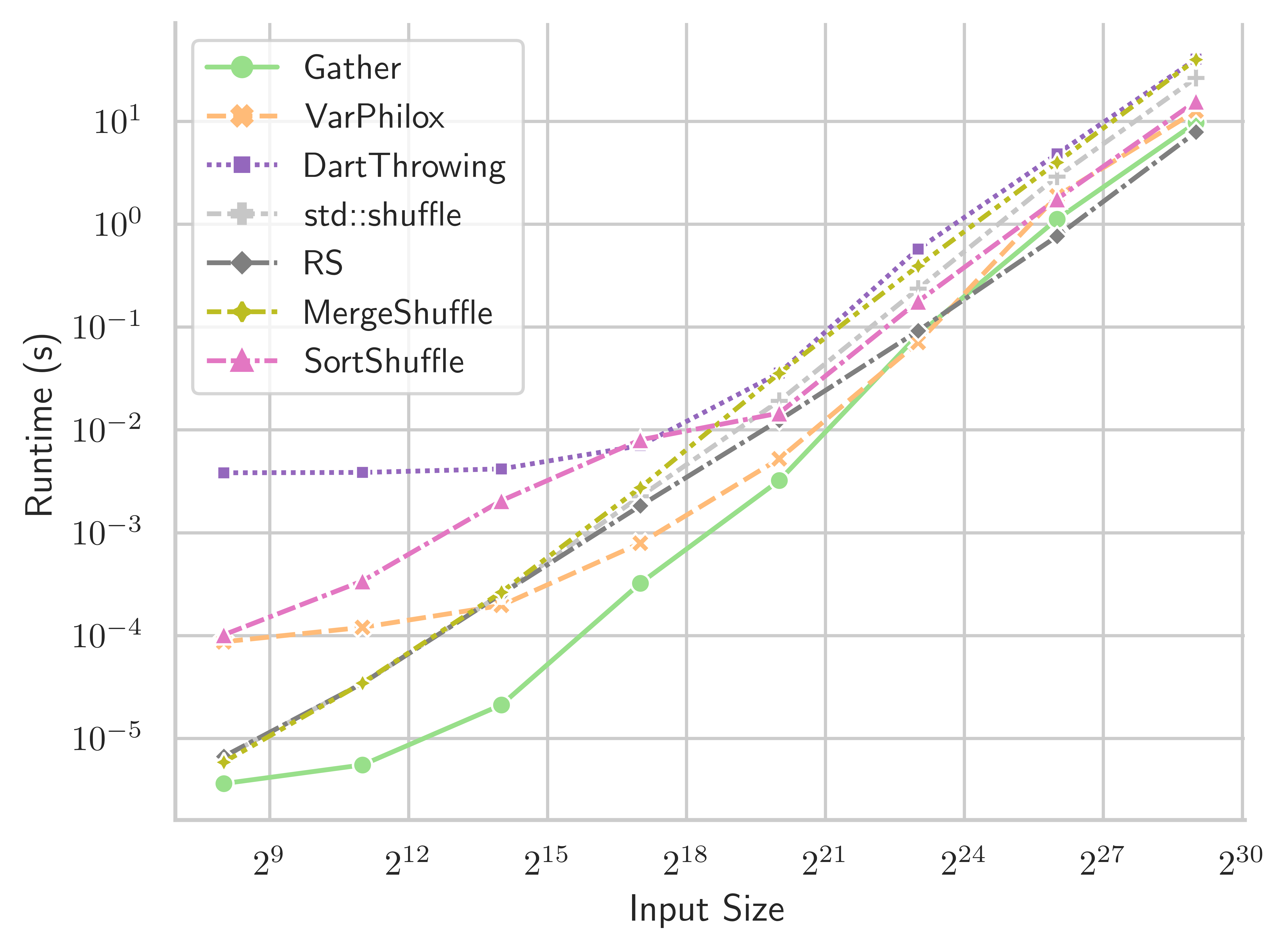

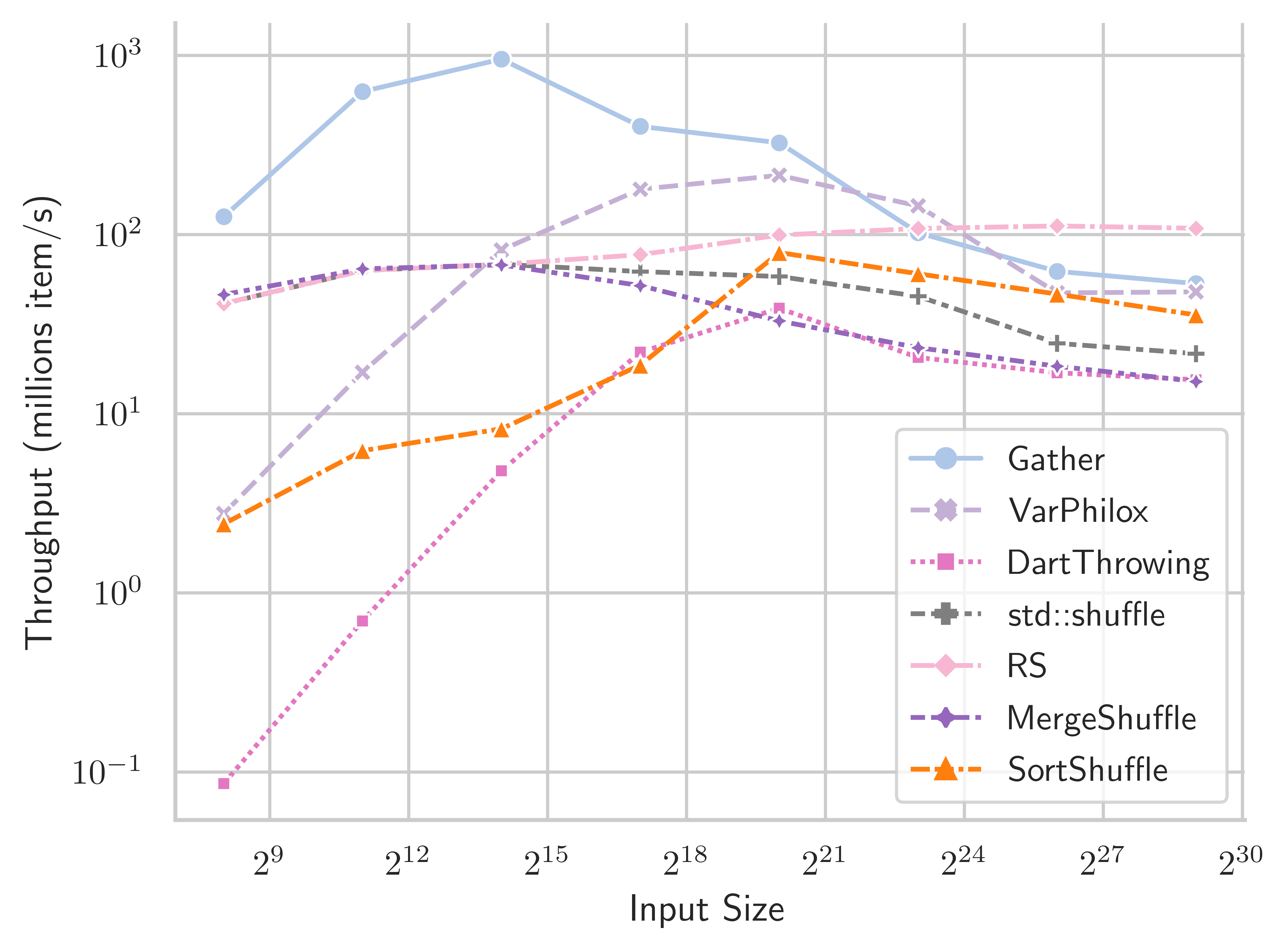

We also evaluate the performance of our shuffling method using 2x Intel Xeon E5-2698 CPUs, with a total of 40 physical cores. Results are presented in Table 3 and Figure 14. The bijective shuffle method is not designed for CPU architectures, but the results are informative nonetheless. The Gather, VariablePhilox (bijective shuffle) and SortShuffle algorithms are implemented using the Intel Thread Building Blocks (TBB) library (Reinders, 2007). The Rao-Sandelius (RS) (Rao, 1961) and MergeShuffle (Bacher et al., 2015) algorithms use the implementation of (Bacher et al., 2015), and std::shuffle is the single-threaded C++ standard library implementation. VariablePhilox has the highest throughput for medium-sized inputs, although it is outperformed by RS when . Curiously, RS has higher throughput at large sizes than the TBB gather implementation. This could indicate that RS has a more cache-friendly implementation, despite having a worse computational complexity of , or simply a suboptimal implementation in TBB. Further work is needed to properly investigate these effects for CPU architectures.

| Gather | VarPhilox | DartThrowing | std::shuffle | RS | MergeShuffle | SortShuffle | |

|---|---|---|---|---|---|---|---|

| Input size | |||||||

| 128.03 | 2.81 | 0.09 | 40.77 | 41.18 | 46.24 | 2.45 | |

| 635.04 | 17.65 | 0.69 | 63.83 | 63.82 | 64.95 | 6.26 | |

| 935.55 | 89.88 | 4.76 | 68.05 | 68.00 | 67.85 | 8.30 | |

| 434.46 | 207.94 | 21.69 | 63.05 | 78.71 | 52.03 | 18.60 | |

| 353.72 | 246.79 | 39.53 | 59.38 | 99.76 | 32.94 | 79.30 | |

| 108.23 | 157.88 | 21.37 | 45.67 | 108.78 | 23.44 | 60.11 | |

| 64.32 | 49.02 | 16.04 | 24.93 | 111.15 | 18.38 | 47.45 | |

| 54.12 | 44.06 | 15.63 | 21.66 | 104.99 | 15.03 | 39.23 |

7. Limitations and Future Research

While the presented algorithm for GPUs is highly effective in terms of throughput and the quality of its pseudorandom outputs, its parameterisation differs from that of typical standard library implementations of shuffle. For example, the C++ standard library implementation accepts any ‘uniform random bit generator’ (Stroustrup, 2013), such as the common Mersenne-twister generator (Matsumoto and Nishimura, 1998). Our implementation is instead parameterised by the selection of a bijective function, and a key of length bits, where is the length of the sequence to be shuffled, and is the number of rounds in the cipher.

Future work may explore other useful constructions of bijective functions, beyond the LCG and Feistel variants discussed in this paper.

It also should be noted that the Fisher-Yates and Rao-Sandelius algorithms may operate in-place, and our proposed GPU algorithm does not, requiring the allocation of an output buffer. This is not unexpected, as there are few truly in-place GPU algorithms that reorder inputs ((Peters et al., 2009) is a notable exception). For future work, it would be interesting to consider if an in-place parallel shuffling algorithm is possible for GPU architectures.

8. Conclusion

We provide an algorithm for random shuffling specifically optimised for GPU architectures, using modified bijective functions from cryptography. Our algorithm is highly practical, achieving performance approaching the maximum possible device throughput, while being fully deterministic, using no extra working space, and providing high-quality distributions of random permutations. An outcome of this work is also a statistical test for uniform distributions of permutations based on the Mallows kernel that we expect to be useful beyond shuffling algorithms.

References

- (1)

- Alonso and Schott (1996) Laurent Alonso and René Schott. 1996. A parallel algorithm for the generation of a permutation and applications. Theoretical Computer Science 159, 1 (1996), 15 – 28.

- Anderson (1990) R. Anderson. 1990. Parallel Algorithms for Generating Random Permutations on a Shared Memory Machine. In Proceedings of the Second Annual ACM Symposium on Parallel Algorithms and Architectures (Island of Crete, Greece) (SPAA ’90). Association for Computing Machinery, New York, NY, USA, 95–102.

- Andrés and Pérez (2011) David Miraut Andrés and Luis Pastor Pérez. 2011. Efficient Parallel Random Rearrange. In International Symposium on Distributed Computing and Artificial Intelligence, Ajith Abraham, Juan M. Corchado, Sara Rodríguez González, and Juan F. De Paz Santana (Eds.). Springer Berlin Heidelberg, Berlin, Heidelberg, 183–190.

- Bacher et al. (2015) Axel Bacher, Olivier Bodini, Alexandros Hollender, and Jérémie O. Lumbroso. 2015. MergeShuffle: A Very Fast, Parallel Random Permutation Algorithm. CoRR abs/1508.03167 (2015). arXiv:1508.03167 http://arxiv.org/abs/1508.03167

- Bacher et al. (2017) Axel Bacher, Olivier Bodini, Hsien-Kuei Hwang, and Tsung-Hsi Tsai. 2017. Generating Random Permutations by Coin Tossing: Classical Algorithms, New Analysis, and Modern Implementation. ACM Trans. Algorithms 13, 2, Article 24 (Feb. 2017), 43 pages.

- Bell and Hoberock (2012) Nathan Bell and Jared Hoberock. 2012. Thrust: A productivity-oriented library for CUDA. In GPU computing gems Jade edition. Elsevier, 359–371.

- Biryukov and De Cannière (2005) Alex Biryukov and Christophe De Cannière. 2005. Data encryption standard (DES). Springer US, Boston, MA, 129–135.

- Blelloch (1990) Guy E. Blelloch. 1990. Prefix Sums and Their Applications. Technical Report CMU-CS-90-190. School of Computer Science, Carnegie Mellon University.

- Bo Fang et al. (2005) Bo Fang, Guobin Shen, Shipeng Li, and Huifang Chen. 2005. Techniques for efficient DCT/IDCT implementation on generic GPU. In 2005 IEEE International Symposium on Circuits and Systems. 1126–1129 Vol. 2.

- Changhao Jiang and Snir (2005) Changhao Jiang and M. Snir. 2005. Automatic tuning matrix multiplication performance on graphics hardware. In 14th International Conference on Parallel Architectures and Compilation Techniques (PACT’05). 185–194.

- Chetlur et al. (2014) Sharan Chetlur, Cliff Woolley, Philippe Vandermersch, Jonathan Cohen, John Tran, Bryan Catanzaro, and Evan Shelhamer. 2014. cuDNN: Efficient Primitives for Deep Learning. arXiv:1410.0759 [cs.NE]

- Coates et al. (2013) Adam Coates, Brody Huval, Tao Wang, David J. Wu, Andrew Y. Ng, and Bryan Catanzaro. 2013. Deep Learning with COTS HPC Systems. In Proceedings of the 30th International Conference on International Conference on Machine Learning - Volume 28 (Atlanta, GA, USA) (ICML’13). JMLR.org, III–1337–III–1345.

- Cong and Bader (2005) Guojing Cong and David A. Bader. 2005. An Empirical Analysis of Parallel Random Permutation Algorithms ON SMPs. In Proceedings of the ISCA 18th International Conference on Parallel and Distributed Computing Systems, September 12-14, 2005 Imperial Palace Hotel, Las Vegas, Nevada, USA, Michael J. Oudshoorn and Sanguthevar Rajasekaran (Eds.). ISCA, 27–34.

- Czumaj et al. (1998) A. Czumaj, P. Kanarek, M. Kutylowski, and K. Lorys. 1998. Fast Generation of Random Permutations Via Networks Simulation. Algorithmica 21, 1 (1998), 2–20.

- Diaconis (1988) Persi Diaconis. 1988. Group representations in probability and statistics. Institute of Mathematical Statistics, Hayward, CA. vi+198 pages.

- Farber (2011) Rob Farber. 2011. CUDA application design and development. Elsevier.

- Fatahalian et al. (2004) K. Fatahalian, J. Sugerman, and P. Hanrahan. 2004. Understanding the Efficiency of GPU Algorithms for Matrix-Matrix Multiplication. In Proceedings of the ACM SIGGRAPH/EUROGRAPHICS Conference on Graphics Hardware (Grenoble, France) (HWWS ’04). Association for Computing Machinery, New York, NY, USA, 133–137.

- Feistel (1973) Horst Feistel. 1973. Cryptography and Computer Privacy. Scientific American 228, 5 (1973), 15–23.

- Fisher and Yates (1943) Ronald A Fisher and Frank Yates. 1943. Statistical tables for biological, agricultural and medical research. Oliver and Boyd Ltd, London.

- Fukumizu et al. (2004) Kenji Fukumizu, Francis R. Bach, and Michael I. Jordan. 2004. Dimensionality Reduction for Supervised Learning with Reproducing Kernel Hilbert Spaces. J. Mach. Learn. Res. 5 (Dec. 2004), 73–99.

- Good (2006) Phillip I Good. 2006. Permutation, parametric, and bootstrap tests of hypotheses. Springer Science & Business Media.

- Granboulan and Pornin (2007) Louis Granboulan and Thomas Pornin. 2007. Perfect Block Ciphers with Small Blocks. In Fast Software Encryption, Alex Biryukov (Ed.). Springer Berlin Heidelberg, Berlin, Heidelberg, 452–465.

- Green et al. (2012) Oded Green, Robert McColl, and David A. Bader. 2012. GPU Merge Path: A GPU Merging Algorithm. In Proceedings of the 26th ACM International Conference on Supercomputing (San Servolo Island, Venice, Italy) (ICS ’12). Association for Computing Machinery, New York, NY, USA, 331–340.

- Gretton et al. (2012) Arthur Gretton, Karsten M. Borgwardt, Malte J. Rasch, Bernhard Schölkopf, and Alexander Smola. 2012. A Kernel Two-Sample Test. J. Mach. Learn. Res. 13 (March 2012), 723–773.

- Gustedt (2003) Jens Gustedt. 2003. Randomized permutations in a coarse grained parallel environment. In Proceedings of the 6th European Conference on Computer Systems.

- Hagerup (1991) Torben Hagerup. 1991. Fast parallel generation of random permutations. In Automata, Languages and Programming, Javier Leach Albert, Burkhard Monien, and Mario Rodríguez Artalejo (Eds.). Springer Berlin Heidelberg, Berlin, Heidelberg, 405–416.

- Hall et al. (2003) Jesse D Hall, Nathan A Carr, and John C Hart. 2003. Cache and bandwidth aware matrix multiplication on the GPU. (2003).

- Harris et al. (2007) Mark Harris, Shubhabrata Sengupta, and John D Owens. 2007. Parallel prefix sum (scan) with CUDA. GPU gems 3, 39 (2007), 851–876.

- He et al. (2007) Bingsheng He, Naga K Govindaraju, Qiong Luo, and Burton Smith. 2007. Efficient gather and scatter operations on graphics processors. In Proceedings of the 2007 ACM/IEEE conference on Supercomputing. 1–12.

- Hoeffding (1963) Wassily Hoeffding. 1963. Probability Inequalities for Sums of Bounded Random Variables. J. Amer. Statist. Assoc. 58, 301 (1963), 13–30.

- JéJé (1992) Joseph JéJé. 1992. An introduction to parallel algorithms. Reading, MA: Addison-Wesley.

- Jiao and Vert (2015) Yunlong Jiao and Jean-Philippe Vert. 2015. The Kendall and Mallows kernels for permutations. In International Conference on Machine Learning. PMLR, 1935–1944.

- Knight (1966) William R. Knight. 1966. A Computer Method for Calculating Kendall’s Tau with Ungrouped Data. J. Amer. Statist. Assoc. 61, 314 (1966), 436–439.

- Knuth (1997) Donald E. Knuth. 1997. The Art of Computer Programming, Volume 2 (3rd Ed.): Seminumerical Algorithms. Addison-Wesley Longman Publishing Co., Inc., Boston, MA, USA.

- Langr et al. (2014) Daniel Langr, Pavel Tvrdik, Tomas Dytrych, and J. Draayer. 2014. Algorithm 947: Paraperm-Parallel Generation of Random Permutations with MPI. ACM Trans. Math. Software 41 (10 2014), 5:1–5:26.

- L’Ecuyer and Simard (2007) Pierre L’Ecuyer and Richard Simard. 2007. TestU01: AC library for empirical testing of random number generators. ACM Transactions on Mathematical Software (TOMS) 33, 4 (2007), 1–40.

- Luby and Rackoff (1988) Michael Luby and Charles Rackoff. 1988. How to Construct Pseudorandom Permutations from Pseudorandom Functions. SIAM J. Comput. 17, 2 (1988).

- Mania et al. (2018) Horia Mania, Aaditya Ramdas, Martin J Wainwright, Michael I Jordan, Benjamin Recht, et al. 2018. On kernel methods for covariates that are rankings. Electronic Journal of Statistics 12, 2 (2018), 2537–2577.

- Matias and Vishkin (1991) Yossi Matias and Uzi Vishkin. 1991. Converting high probability into nearly-constant time - With applications to parallel hashing. Proc. 23rd Ann. ACM Symp. on Theory of Computing (01 1991), 307–316.

- Matsumoto and Nishimura (1998) Makoto Matsumoto and Takuji Nishimura. 1998. Mersenne Twister: A 623-Dimensionally Equidistributed Uniform Pseudo-Random Number Generator. ACM Trans. Model. Comput. Simul. 8, 1 (Jan. 1998), 3–30.

- Merrill (2015) Duane Merrill. 2015. Cub. NVIDIA Research (2015).

- Merrill and Garland (2016) Duane Merrill and Michael Garland. 2016. Single-pass parallel prefix scan with decoupled look-back. NVIDIA, Tech. Rep. NVR-2016-002 (2016).

- Miller and Reif (1985) G. L. Miller and J. H. Reif. 1985. Parallel tree contraction and its application. In 26th Annual Symposium on Foundations of Computer Science (sfcs 1985). 478–489.

- Mitchell et al. (2021) Rory Mitchell, Joshua Cooper, Eibe Frank, and Geoffrey Holmes. 2021. Sampling Permutations for Shapley Value Estimation. arXiv preprint arXiv:2104.12199 (2021).

- Mitchell and Frank (2017) Rory Mitchell and Eibe Frank. 2017. Accelerating the XGBoost algorithm using GPU computing. PeerJ Computer Science 3 (2017), e127.

- Moreland and Angel (2003) Kenneth Moreland and Edward Angel. 2003. The FFT on a GPU. In Proceedings of the ACM SIGGRAPH/EUROGRAPHICS Conference on Graphics Hardware (San Diego, California) (HWWS ’03). Eurographics Association, Goslar, DEU, 112–119.

- NVIDIA Corporation (2020) NVIDIA Corporation. 2020. CUDA C++ Programming Guide. Version 11.1.

- Perry et al. (2014) Michelle Perry, Harrison B Prosper, and Anke Meyer-Baese. 2014. GPU Implementation of Bayesian Neural Network Construction for Data-Intensive Applications. Journal of Physics: Conference Series 513, 2 (jun 2014), 022027.

- Peters et al. (2009) Hagen Peters, Ole Schulz-Hildebrandt, and Norbert Luttenberger. 2009. Fast in-place sorting with cuda based on bitonic sort. In International Conference on Parallel Processing and Applied Mathematics. Springer, 403–410.

- Prediger et al. (2021) Lukas Prediger, Niki Loppi, Samuel Kaski, and Antti Honkela. 2021. d3p–A Python Package for Differentially-Private Probabilistic Programming. arXiv preprint arXiv:2103.11648 (2021).

- Press et al. (2007) William H. Press, Saul A. Teukolsky, William T. Vetterling, and Brian P. Flannery. 2007. Numerical Recipes 3rd Edition: The Art of Scientific Computing (3 ed.). Cambridge University Press, New York, NY, USA.

- Rajasekaran and Reif (1989) Sanguthevar Rajasekaran and John H. Reif. 1989. Optimal and Sublogarithmic Time Randomized Parallel Sorting Algorithms. SIAM J. Comput. 18, 3 (06 1989), 594–14. Copyright - Copyright] © 1989 © Society for Industrial and Applied Mathematics; Last updated - 2012-07-02.

- Rao (1961) C. Radhakrishna Rao. 1961. Generation of Random Permutations of Given Number of Elements Using Random Sampling Numbers. Sankhyā: The Indian Journal of Statistics, Series A (1961-2002) 23, 3 (1961), 305–307.

- Reif (1985) J. H. Reif. 1985. An optimal parallel algorithm for integer sorting. In 26th Annual Symposium on Foundations of Computer Science (sfcs 1985). 496–504.

- Reinders (2007) James Reinders. 2007. Intel threading building blocks: outfitting C++ for multi-core processor parallelism. ” O’Reilly Media, Inc.”.

- Salmon et al. (2011) J. K. Salmon, M. A. Moraes, R. O. Dror, and D. E. Shaw. 2011. Parallel random numbers: As easy as 1, 2, 3. In SC ’11: Proceedings of 2011 International Conference for High Performance Computing, Networking, Storage and Analysis. 1–12.

- Sandelius (1962) Martin Sandelius. 1962. A Simple Randomization Procedure. Journal of the Royal Statistical Society. Series B (Methodological) 24, 2 (1962), 472–481.

- Sanders (1998) Peter Sanders. 1998. Random permutations on distributed, external and hierarchical memory. Inform. Process. Lett. 67, 6 (1998).

- Satish et al. (2010) Nadathur Satish, Changkyu Kim, Jatin Chhugani, Anthony D. Nguyen, Victor W. Lee, Daehyun Kim, and Pradeep Dubey. 2010. Fast Sort on CPUs and GPUs: A Case for Bandwidth Oblivious SIMD Sort. In Proceedings of the 2010 ACM SIGMOD International Conference on Management of Data (Indianapolis, Indiana, USA) (SIGMOD ’10). Association for Computing Machinery, New York, NY, USA, 351–362.

- Shun et al. (2015) Julian Shun, Yan Gu, Guy E. Blelloch, Jeremy T. Fineman, and Phillip B. Gibbons. 2015. Sequential Random Permutation, List Contraction and Tree Contraction Are Highly Parallel. In Proceedings of the Twenty-Sixth Annual ACM-SIAM Symposium on Discrete Algorithms (San Diego, California) (SODA ’15). Society for Industrial and Applied Mathematics, USA, 431–448.

- Stroustrup (2013) Bjarne Stroustrup. 2013. The C++ programming language. Pearson Education.

- Szuppe (2016) Jakub Szuppe. 2016. Boost.Compute: A Parallel Computing Library for C++ Based on OpenCL. In Proceedings of the 4th International Workshop on OpenCL (Vienna, Austria) (IWOCL ’16). Association for Computing Machinery, New York, NY, USA, Article 15, 39 pages.

- Team (2018) RAPIDS Development Team. 2018. RAPIDS: Collection of Libraries for End to End GPU Data Science. https://rapids.ai

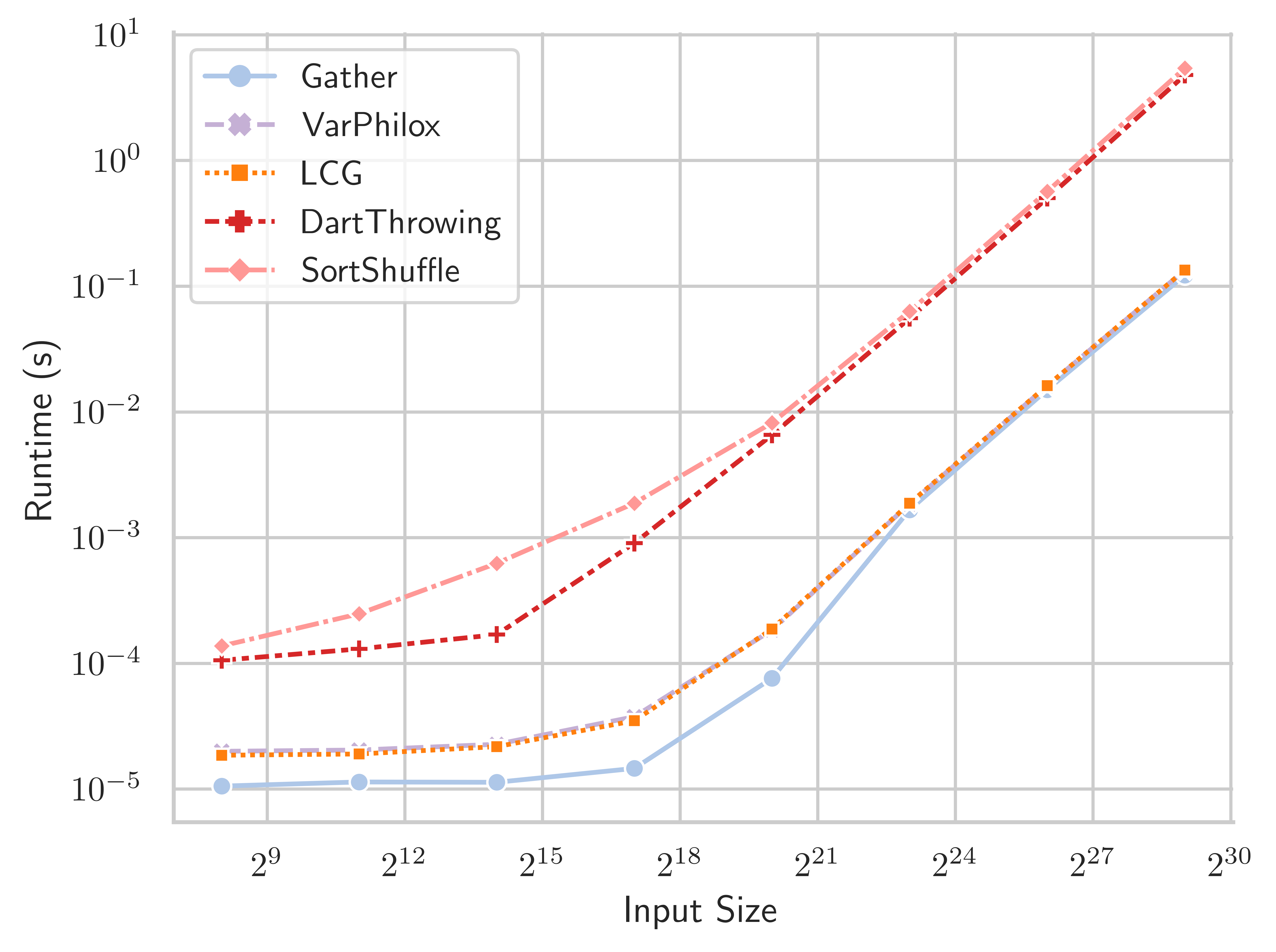

Appendix A Runtime of GPU and CPU shuffling algorithms

Figures 16 and 16 reproduce Figures 13 and 14, reporting runtime in seconds instead of throughput ((millions of keys)/time(s)).