Balancing Asymptotic and Transient Efficiency Guarantees

in Set Covering Games

Abstract

Game theoretic approaches have gained traction as robust methodologies for designing distributed local algorithms that induce a desired overall system configuration in multi-agent settings. However, much of the emphasis in these approaches is on providing asymptotic guarantees on the performance of a network of agents, and there is a gap in the study of efficiency guarantees along transients of these distributed algorithms. Therefore, in this paper, we study the transient efficiency guarantees of a natural game-theoretic algorithm in the class of set covering games, which have been used to model a variety of applications. Our main results characterize the optimal utility design that maximizes the guaranteed efficiency along the transient of the natural dynamics. Furthermore, we characterize the Pareto-optimal frontier with regards to guaranteed efficiency in the transient and the asymptote under a class of game-theoretic designs. Surprisingly, we show that there exists an extreme trade-off between the long-term and short-term guarantees in that an asymptotically optimal game-theoretic design can perform arbitrarily bad in the transient.

I Introduction

Recently, game theory has emerged as a significant framework in the study of multi-agent systems: the agents in the system are modeled as agents in a game, each with its own actions and individual rewards that are functions of the joint action of the other agents. Some prominent examples where these models have found success include wireless communication networks [1], UAV swarm task allocation [2], news subscription services [3], vaccinations during an epidemic [4], security systems [5], facility location [6], coordinating the charging of electric vehicles [7], and national defense [8], among others. Indeed, game theory has played a significant role in many applications and across various disciplines.

A central focus in using game-theoretic models to control multi-agent systems is the design of local objective (or reward) functions for the agents that result in a desirable emergent system-level outcome, as measured by a system welfare function [9]. Generally the emergent outcome is assumed to be a coordinated decision which is a Nash equilibrium of the game, wherein each agent cannot improve its own objective function by switching actions. A significant research effort has been devoted to designing local objective functions that ensure good performance of Nash equilibria. For example, [10] shows that for the class of set covering games, there exists a set of local objective functions which guarantee that the performance of any Nash equilibrium is within a multiplicative factor of of the optimal decision set. Furthermore, no other polynomial time algorithm can perform better [11].

Rather than focusing solely on the system welfare at the Nash equilibria, this work studies the welfare of decision sets along the transient path toward equilibrium. This may be important when the relevant system parameters evolve on faster time scales, agent modalities vary with time etc. The class of potential games [12] is particularly relevant to this goal as the class of best response/reply processes and its many variants (see, e.g., [13]) are known to converge to a Nash equilibrium asymptotically from any initial configuration. Best reply processes are intimately tied with the development of game theory, present in Cournot processes, Nash’s seminal paper [14], as well as stable and evolutionary equilibrium [15]. Efficiency guarantees along these best response processes are much less studied than the asymptotic guarantees that come with Nash equilibrium. In this work, we restrict our attention to an important subclass of potential games known as set covering games, in which agents with limited capabilities try to coordinate to cover as large of an area as possible. For set covering games, we characterize bounds on the efficiency for best response processes, and compare utility designs that maximize either the short- or long-efficiency.

While few works study the guarantees that can arise through best response processes, we highlight a subset of important works that deal with analyzing the emergent behavior of best response dynamics. The authors in [16, 17] analyze lower bounds for the efficiency guarantees resulting after a single best response round in linear congestion and cut games. These guarantees were verified through a linear programming approach in [18] and extended to quadratic and cubic congestion games. Best response dynamics are also studied in [19], where it was found that the efficiency resulting from best response dynamics can be arbitrarily bad for non-potential games. The speed of convergence to efficient states is studied in [20]. While similar in spirit, our paper extends the previous literature by moving from characterization results of prespecified utility designs to instead identifying optimal game-theoretic designs that maximize the efficiency guarantees along a best response process.

The contributions of this paper are all with respect to the class of set covering games and are as follows:

-

1.

We identify the local objective that optimizes the transient efficiency guarantee along a best response dynamic;

-

2.

We identify a trade-off between transient and asymptotic guarantees, and provide explicit characterizations of the Pareto frontier and Pareto-optimal agent objective functions.

The rest of the paper is organized as follows: In Section II, we introduce the necessary notation and definitions for best response dynamics, as well as for set covering games. We then outline our main results in Section III. Section IV contains the proof of the main result. We present a simulation study in Section V to examine how our findings translate to practice. Finally, we conclude in Section VI.

II Model

Notation. We use and to denote the sets of real and natural numbers respectively. Given a set , represents its cardinality. describes the indicator function for set ( if , otherwise).

II-A Set Covering Games and Best Response Dynamics

We present all of our results in the context of set covering games [10], a well-studied class of potential games. We first recall some definitions to outline our game-theoretic model, which is used to describe the given class of distributed scenarios. Set covering games are characterized by a finite set of resources that can be possibly covered by a set of agents . Each resource has an associated value determining its relative importance to the system. Each agent has a finite action set , representing the sets of resources that each agent can opt to select. The performance of a joint action comprised of every agent’s actions is evaluated by a system-level objective function , defined as the total value covered by the agents, i.e.,

| (1) |

The goal is to coordinate the agents to a joint action that maximizes this welfare . Thus, each agent is endowed with its own utility function that dictates its preferences among the joint actions. We use to denote the joint action without the action of agent . A given set covering game can be summarized as the tuple . We consider the utility functions of the form

| (2) |

where is the number of agents that choose resource in action profile , and the utility rule defines the resource independent agent utility determined by . This utility rule will be our design choice for defining the agents preferences towards each of their decisions. To motivate our results, we provide an example application of set covering games below, which represents just one among many [21, 22, 23].

Example 1 (Wireless Sensor Coverage [24]).

Consider a group of sensors that can sense a particular region depending on the orientation, physical placement of the sensor, etc. The choice of these parameters constitute the different possible actions for each sensor. We partition the entire space into disjoint regions and each region is attributed a weight to signify the importance of covering it. As a whole, the set of sensors wish to maximize the total weight of the regions covered. Using a game-theoretic model of this scenario allows us to abstract away much of the complexity that arises from having to consider specific sensor types and their respective capabilities, in contrast to [24]. In this scenario, the results in the paper allow us to study guarantees of performance of local coordination algorithms that the sensors may employ. We further note that short-term performance guarantees may also be desirable especially when there is some drift in the parameters of the game (e.g., in or ).

Next, we introduce some notation relevant to the study of best reply processes. For a given joint action , we say the action is a best response for agent if

| (3) |

Note that may be set valued. In this fashion, the underlying discrete-time dynamics of the best response process is

| (4) | ||||

where at each step, a single agent acts in a best response to the current joint action profile. It is important to stress that the fixed points of this best response process are Nash equilibria of the game, i.e., a joint action such that

| (5) |

In the case of potential games [12], the set of Nash equilibrium is also globally attractive with respect to the best response dynamics. A potential game is defined as any game for which there exists a potential function where for all agents and pairs of actions ,

| (6) |

In these games, the potential function decreases monotonically along the best response process in a similar manner to a Lyapunov function. For non-potential games, there is a possibility that the best response process ends up in a cycle [25], but this falls outside the scope of this paper.

In this work we assume that the best response process is such that the agents are ordered from to and perform successive best responses in that order. While other best response processes have been previously studied (see, e.g., covering walks [16]), we limit our analysis to this specific process for tractability. We also assume that each agent has a null action available to them representing their non-participation in the game .

Definition 1.

Given a game with agents, a -best response round is a step best reply process with an initial state and the corresponding dynamics

| (7) |

This process results in a set of possible action trajectories with a set of possible end action states resulting after running for time steps.

II-B Price of Best Response and Price of Anarchy

The primary focus of this paper is to analyze guarantees on the efficiency of possible end actions after a -best response round with respect to the system welfare . In this regard, we introduce the price of best response metric to evaluate the worst case ratio between the welfare of the outcome of the -best response round and the optimal welfare in a game.

| (8) |

Our study of the price of best response will be in direct comparison with the standard game-theoretic metric of price of anarchy, which is defined as

| (9) |

The price of anarchy is a well established metric, with a number of results on its characterization, complexity, and design [26]. We frame price of anarchy as a backdrop to many of our results, where Nash equilibria are the set of end states that are possible, following a -best response round in the limit as . Under this notation, we can analyze the transient guarantees, which have been relatively unexplored, in these distributed frameworks in comparison to the asymptotic setting.

To isolate the efficiency analysis over the utility rule and the number of agents , we consider the price of best response with respect to the class of all set covering games with a fixed utility rule and number of agents, and any resource set and corresponding values and set of agent actions :

| (10) |

Similarly, we define the price of anarchy over as

| (11) |

When appropriate, we may also remove the dependence on the number of agents by denoting (and likewise for ) to take the worst case guarantees across any number of agents.

We are interested in the transient and asymptotic system guarantees that can result from a given utility rule. Therefore we bring to attention two well-motivated utility rules to highlight the spectrum of guarantees that are possible. The first utility rule , introduced in [10], attains the optimal price of anarchy guarantee for the class of set covering games and is defined as

| (12) |

The second rule is the marginal contribution (MC) rule. The MC rule is a well studied [27] utility rule and is defined as

| (13) |

Note that the induced utility under this mechanism is , where the utility for agent is the added welfare from playing its null action to playing . Using ensures that all Nash equilibria of the game are local optima of the welfare function. However, it is known that the MC rule has sub-optimal asymptotic guarantees as [28]. Thus, under the standard tools of asymptotic analysis, has been considered to be ‘optimal’ in inducing desirable system behavior. Utilizing the notion of , we inquire if the favorable characteristics of translate to good short-term behavior of the system. Intuitively, since guarantees optimal long-term performance, we expect the same in the short-term as well. This discussion motivates the following three questions:

-

(a)

For a given , is always greater than ?.

-

(b)

If not, then for how many rounds does the best response algorithm under have to be run for the guarantees on the end state performance to surpass the asymptotic performance of marginal contribution? In other words, is there for which ?

-

(c)

For a given , what is the utility mechanism that maximizes the end state performance after running the best response algorithm for rounds, i.e. what is ?

In the next section, we answer these questions in detail.

III Main Results

In this section, we outline our main results for price of best response guarantees. In this setting, utility functions are designed by carefully selecting the utility rule and improvement in transient system level behavior corresponds with a higher price of best response. Hence, we characterize the optimal that achieves the highest possible . We formally state the optimality of the MC rule for the class of set covering games below.

Theorem 1.

Consider the class of set covering games with users. The following statements are true:

-

(a)

For any number of rounds , the transient performance guarantees associated with the utility mechanisms and satisfy

(14) (15) Furthermore, the marginal contribution rule achieves its asymptotic guarantees after a single round, i.e.,

(16) -

(b)

For any number of rounds , the marginal contribution rule optimizes the , i.e.,

(17) Alternatively, there is no utility rule with for any .

Proof.

To prove the statements outlined in items and of the theorem statement, we show, more generally, that for any and any , the following series of inequalities is true:

The fact that is a result of Lemma 1 (stated later in the paper) for and the definition of . For proving , we first show that

| (18) |

Let be joint actions such that is a best response to for agent . If the MC rule is used as in Equation (13), then

Then, after any best response rounds, , and therefore the claim is shown. For the other direction as well as the inequality , we construct a game construction (also shown in Figure 1) such that for any utility rule , . The game is constructed as follows with agents. Let the resource set be with , , , and . For agent , let with and . For agent , let with and . It can be seen in this game, if , the optimal joint action is resulting in a welfare of , and if , the optimal joint action is resulting in a welfare of . Additionally, starting from , note the best response path exists, ending in the action with a welfare . Since is a Nash equilibrium, both agents stay playing their first action for rounds and agent only switches to in the -round. Therefore for this game. This game construction can be extended to by fixing the actions of agents to be , where the only nonempty action available to them is that selects the value resource.

Since may not be the worst case game, and the final claim is shown. ∎

Observe that Theorem 1 answers Questions (a)–(c) posed in the previous section. Specifically, we have shown that for any number of rounds, the performance guarantees of never overcome the performance guarantees of . Remarkably, for any fixed number of rounds , remains greater than and the advantages of using only appear when the system settles to a Nash equilibrium as . Furthermore, the marginal contribution rule is in fact the utility mechanism that optimizes the price of best response and that this optimal efficiency guarantee is achieved after round of best response. Thus, the transient guarantees of are quite strong in comparison to . Note that this is in stark contrast with their price of anarchy values, where significantly outperforms [10, 28]. Clearly, there exists a trade-off between short-term and long-term performance guarantees in this setting.

In our next theorem, we characterize the trade-off between short-term and long-term performance guarantees by providing explicit expressions for the Pareto curve between and and the corresponding Pareto-optimal utility mechanisms. Surprisingly, we show that can be arbitrarily bad.

Theorem 2.

For a fixed , the optimal is

| (19) |

Furthermore, if for a given , then must be equal to .

The price of anarchy is only taken on the interval , since the greatest achievable is [10] and the price of anarchy of is (which attains the optimal as shown in Theorem 1). The corresponding Pareto-optimal utility rules are given in Lemma 2. The optimal trade-off curve between and is plotted in Figure 2.

Theorem 2 highlights the disparity between the efficiency guarantees of the asymptotic and transient values, quantified by the pair of achievable and . While there is a decline in guarantees when using the MC rule, the deterioration in that results from using the mechanism that optimizes is much more extreme. In fact, the transient performance of can be arbitrarily bad. This is quite surprising, it appears that a utility rule’s asymptotic performance guarantees do not reflect on its transient guarantees. This extreme trade-off has significant consequences on distributed design, especially when the short-term performance of the multi-agent system is critical, and prompts a more careful interpretation of the asymptotic results in such scenarios.

IV Proof of Theorem 2

In this section, we prove the result in Theorem 2. First, we characterize the -round price of best response for any utility rule in the next lemma. The proof of this lemma is based on a worst case game construction using a smoothness argument. The worst case game construction is well structured and is shown in Figure 3.

Lemma 1.

Given a utility rule and number of agents, the -round price of best response is

| (20) |

Proof.

To characterize , we first formulate it as an optimization program, where we search over all possible set covering games with a fixed and for the worst -round outcome. We give a proof sketch of the correctness of the proposed optimization program in (22). Without loss of generality, we can only consider games where each agent has actions and , in which is the action that optimizes the welfare and is the action that results from a -round best response. Informally, the optimization program involves searching for a set covering game that maximizes with the constraints and that is indeed the state that is achieved after a -round best response. Since the welfare as well as can be described fully by and , each game is parametrized through the resource values from a common resource set . Let

| (21) |

denote the set of agents that select the resource in . We define similarly. Additionally, we can define to denote the set of agents that select the resource in in . The common resource set is comprised of all the resources that have unique signature from and . Taking the dual of the program in the previous outline results in the following program.

| (22) | ||||

| (23) | ||||

Now we reduce the constraints of this program to give a closed form expression of . We first examine the constraints in (23) associated with with . Simplifying the corresponding constraints gives

| (24) |

for any subset of agents . Only considering gives the set of binding constraints for any agent .

Now we consider the converse constraints in (23) where . For this set of constraints, the binding constraint is postulated to be

| (25) |

corresponding to the resource with and . Under this assumption, the optimal dual variables are . This follows from the constraint in (24) and the fact that decreasing any results in a lower binding constraint in (25).

Now we can verify that the constraint in (25) with and is indeed the binding constraint under the dual variables . The resulting dual program assuming is

| (26) | ||||

| (27) | ||||

Since any valid utility rule has for any , the terms in (27) is maximized if for all which implies that . Also note that , as

| (28) |

is less binding if . Since for the binding constraint, if , the terms in (28) reduces to for some . Similarly, if , the terms in (28) reduces to . Note that , and so as well. Thus is binding. Taking any attains the maximum value for , and so we have shown the claim that the binding constraint for is in equation (25). Taking the binding constraint with equality gives

giving the expression for as stated. ∎

To characterize the trade-off, we now provide an explicit expression of Pareto optimal utility rules, i.e., utility rules that satisfy either or for all .

Lemma 2.

For a given , a utility rule that satisfies while maximizing is defined as in the following recursive formula:

| (29) | ||||

| (30) |

Proof.

According to a modified version of Theorem in [26], the price of anarchy () can be written as

| (31) |

We first show that if is Pareto optimal, then it must also be non-increasing. Otherwise, we show that another exists that achieves at least the same , but a higher , contradicting our assumption that is Pareto optimal. Assume, by contradiction, that there exists that is Pareto optimal and decreasing, i.e., there exists a , in which . Notice that switching the value with results in an unchanged according to (20) in Lemma 1 if . We show that with the values switched has a higher than .

For any , the expressions from (IV) that include or are

After switching, the relevant expressions for are

Since , switching the values results in a strictly looser set of constraints, and the value of the binding constraint in (IV) for is at most the value of the binding constraint for . Therefore . Note that if , then as well. This contradicts our assumption that is Pareto optimal.

Now we restrict our focus to non-increasing with . Observe that for any , the transformation , , doesn’t change the price of best response and price of anarchy. Thus, we can take without loss of generality. Given a that satisfies the above constraints, the price of anarchy is

| (32) |

as detailed in Corollary in [26]. Let

| (33) |

For to be Pareto optimal, we claim that must hold for all . Consider any other with . It follows that . By induction, we show that for all . The base case is satisfied, as . Under the assumption , we also have that

| (34) |

where by definition in (33), and so for all . Therefore the summation in (20) is diminished and , proving our claim. As must satisfy for all to be a valid utility rule, is set to be . Then we get the recursive definition for the maximal . Finally, we note that for infinite , is not achievable, as shown in [10]. ∎

With the two previous lemmas, we can move to showing the result detailed in Theorem 2.

Proof of Theorem 2.

We first characterize a closed form expression of the maximal utility rule , which is given in Lemma 2. We fix so that = . To calculate the expression for for a given , a corresponding time varying discrete time system to (30) is constructed as follows.

| (35) | ||||

| (36) | ||||

| (37) | ||||

| (38) |

where corresponds to . Solving for the explicit expression for gives

| (39) | ||||

| (40) |

Simplifying the expression and substituting for gives

| (41) |

Substituting the expression for the maximal into (20) gives the . Notice that for , , and therefore . Shifting variables , we get the statement in (19).

Finally, we prove that if for a given , then must be equal to . According to (19), among utility rules with , the best achievable can be written as

Since , it follows that . ∎

V Simulations

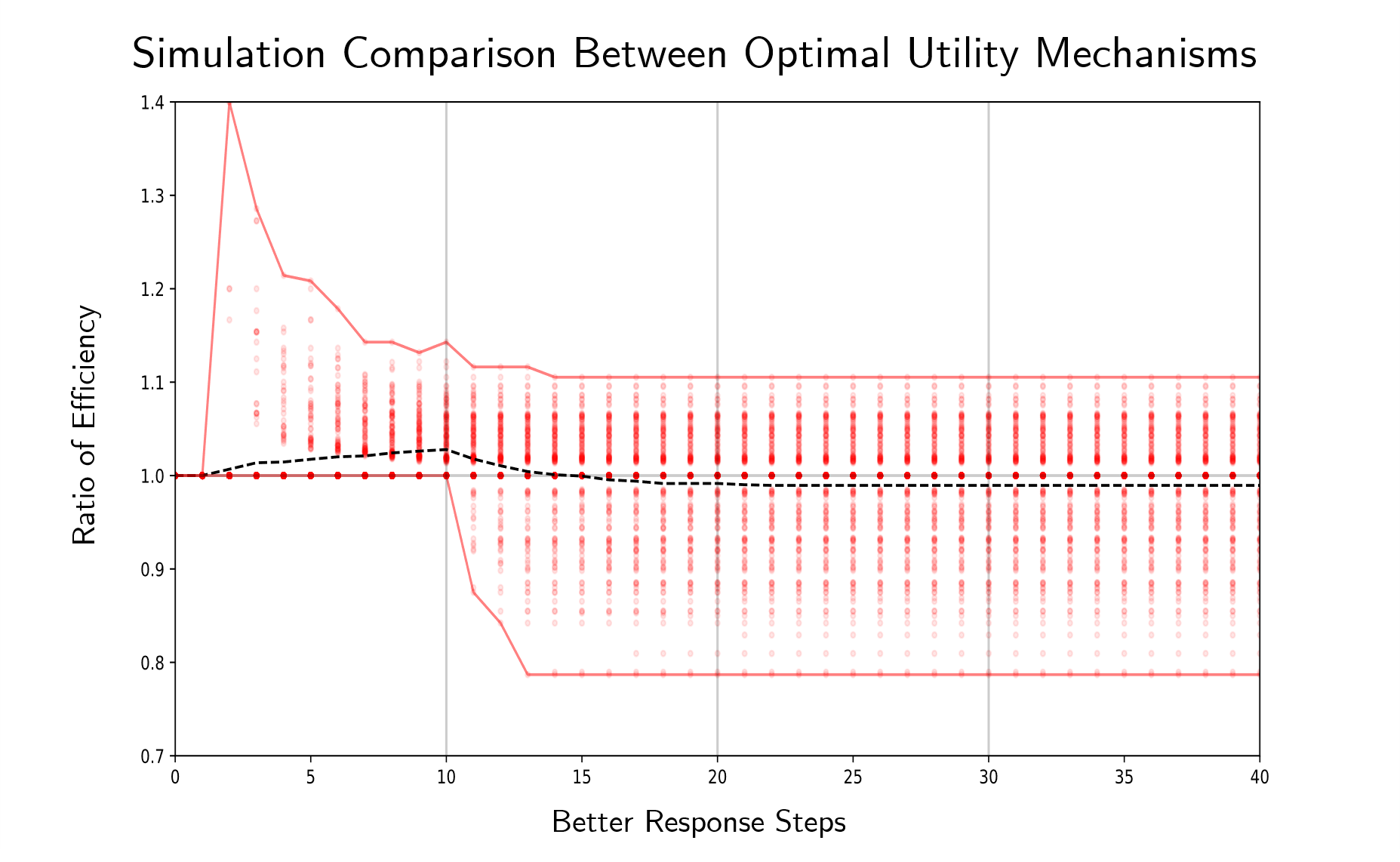

We analyze our theoretical results empirically through a Monte Carlo simulation, presented in Figure 5. We simulate instances where a random game is generated and the welfare along a -round best response path is recorded. Each game has agents and two sets of resources, where the values are drawn uniformly from the continuous interval . Each agent in the game has two singleton actions, where in one action the agent selects a resource in the first set and in the other action the agent selects a resource in the second set, as seen in Figure 4. The two resources in each agent’s actions are selected uniformly, at random. These parameters were chosen to induce a natural set of game circumstances that have a rich best response behavior. Additionally, the game is either endowed with the utility rule that optimizes or that optimizes . Then the best response round algorithm is performed, where each agent successively performs a best response. The ratio of the welfare achieved at each time step following the two utility rules , is displayed in Figure 5.

Several significant observations can be made from Figure 5. Observe that after one round (after best response steps), the performance of exceeds the performance on average. This is indicative of the trade-off shown in Theorem 2. Furthermore, running the best-response algorithm for additional steps recovers the performance difference between and , with having a slightly better end performance on average. These results support our claim that optimizing for asymptotic results may induce sub-optimal system behavior in the short term, and those results must be carefully interpreted for application in multi-agent scenarios. By analyzing the performance directly along these dynamics, we see that a richer story emerges in both the theoretical and the empirical results.

VI Conclusion

There are many works that focus on efficiency guarantees for the asymptotic behavior of multi-agent systems under the game-theoretic notion of Nash equilibrium. However, much less is understood about the efficiency guarantees in the transient behavior of such systems. In this paper, we examine a natural class of best response dynamics and characterize the efficiency along the resulting transient states. We characterize a utility design that performs optimally in the transient for the class of set covering games. Furthermore, we complement these results by providing an explicit characterization of the efficiency trade-off between -round best response and Nash equilibrium that can result from a possible utility design. From these results, we observe that there is a significant misalignment between the optimization of transient and asymptotic behavior for these systems. Interesting future directions include extending our results to a more general class of games and considering other classes of learning dynamics from the literature.

References

- [1] Z. Han, D. Niyato, W. Saad, T. Başar, and A. Hjørungnes, Game theory in wireless and communication networks: theory, models, and applications. Cambridge university press, 2012.

- [2] J. J. Roldán, J. Del Cerro, and A. Barrientos, “Should we compete or should we cooperate? applying game theory to task allocation in drone swarms,” in 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 5366–5371, IEEE, 2018.

- [3] C.-C. Hsu, A. Ajorlou, M. Yildiz, and A. Jadbabaie, “Information disclosure and network formation in news subscription services,” in 2020 59th IEEE Conference on Decision and Control (CDC), pp. 5586–5591, IEEE, 2020.

- [4] A. R. Hota and S. Sundaram, “Game-theoretic vaccination against networked sis epidemics and impacts of human decision-making,” IEEE Transactions on Control of Network Systems, vol. 6, no. 4, pp. 1461–1472, 2019.

- [5] K. Paarporn, R. Chandan, M. Alizadeh, and J. R. Marden, “Characterizing the interplay between information and strength in blotto games,” in 2019 IEEE 58th Conference on Decision and Control (CDC), pp. 5977–5982, 2019.

- [6] A. Vetta, “Nash equilibria in competitive societies, with applications to facility location, traffic routing and auctions,” in The 43rd Annual IEEE Symposium on Foundations of Computer Science, 2002. Proceedings., pp. 416–425, 2002.

- [7] J. Martinez-Piazuelo, N. Quijano, and C. Ocampo-Martinez, “Decentralized charging coordination of electric vehicles using multi-population games,” in 2020 59th IEEE Conference on Decision and Control (CDC), pp. 506–511, IEEE, 2020.

- [8] E. S. Lee, D. Shishika, and V. Kumar, “Perimeter-defense game between aerial defender and ground intruder,” in 2020 59th IEEE Conference on Decision and Control (CDC), pp. 1530–1536, IEEE, 2020.

- [9] J. R. Marden and J. S. Shamma, “Game theory and distributed control,” in Handbook of game theory with economic applications, vol. 4, pp. 861–899, Elsevier, 2015.

- [10] M. Gairing, “Covering games: Approximation through non-cooperation,” in International Workshop on Internet and Network Economics, pp. 184–195, Springer, 2009.

- [11] U. Feige, “A threshold of ln n for approximating set cover,” Journal of the ACM (JACM), vol. 45, no. 4, pp. 634–652, 1998.

- [12] D. Monderer and L. S. Shapley, “Potential games,” Games and economic behavior, vol. 14, no. 1, pp. 124–143, 1996.

- [13] H. P. Young, “The evolution of conventions,” Econometrica: Journal of the Econometric Society, pp. 57–84, 1993.

- [14] J. F. Nash et al., “Equilibrium points in n-person games,” Proceedings of the national academy of sciences, vol. 36, no. 1, pp. 48–49, 1950.

- [15] W. H. Sandholm, “Evolutionary game theory,” Complex Social and Behavioral Systems: Game Theory and Agent-Based Models, pp. 573–608, 2020.

- [16] G. Christodoulou, V. S. Mirrokni, and A. Sidiropoulos, “Convergence and approximation in potential games,” in Annual Symposium on Theoretical Aspects of Computer Science, pp. 349–360, Springer, 2006.

- [17] V. Bilò, A. Fanelli, M. Flammini, and L. Moscardelli, “Performances of one-round walks in linear congestion games,” in International Symposium on Algorithmic Game Theory, pp. 311–322, Springer, 2009.

- [18] V. Bilo, “A unifying tool for bounding the quality of non-cooperative solutions in weighted congestion games,” Theory of Computing Systems, vol. 62, no. 5, pp. 1288–1317, 2018.

- [19] V. S. Mirrokni and A. Vetta, “Convergence issues in competitive games,” in Approximation, randomization, and combinatorial optimization. algorithms and techniques, pp. 183–194, Springer, 2004.

- [20] A. Fanelli, M. Flammini, and L. Moscardelli, “The speed of convergence in congestion games under best-response dynamics,” in International Colloquium on Automata, Languages, and Programming, pp. 796–807, Springer, 2008.

- [21] D. S. Hochbaum and W. Maass, “Approximation schemes for covering and packing problems in image processing and vlsi,” Journal of the ACM (JACM), vol. 32, no. 1, pp. 130–136, 1985.

- [22] S. Mandal, N. Patra, and M. Pal, “Covering problem on fuzzy graphs and its application in disaster management system,” Soft Computing, vol. 25, no. 4, pp. 2545–2557, 2021.

- [23] J. M. Nash, “Optimal allocation of tracking resources,” in 1977 IEEE Conference on Decision and Control including the 16th Symposium on Adaptive Processes and A Special Symposium on Fuzzy Set Theory and Applications, pp. 1177–1180, IEEE, 1977.

- [24] C.-F. Huang and Y.-C. Tseng, “The coverage problem in a wireless sensor network,” Mobile networks and Applications, vol. 10, no. 4, pp. 519–528, 2005.

- [25] M. Goemans, V. Mirrokni, and A. Vetta, “Sink equilibria and convergence,” in 46th Annual IEEE Symposium on Foundations of Computer Science (FOCS’05), pp. 142–151, IEEE, 2005.

- [26] D. Paccagnan and J. R. Marden, “Utility design for distributed resource allocation–part ii: Applications to submodular, covering, and supermodular problems,” arXiv preprint arXiv:1807.01343, 2018.

- [27] A. Vetta, “Nash equilibria in competitive societies, with applications to facility location, traffic routing and auctions,” in The 43rd Annual IEEE Symposium on Foundations of Computer Science, 2002. Proceedings., pp. 416–425, IEEE, 2002.

- [28] V. Ramaswamy, D. Paccagnan, and J. R. Marden, “Multiagent maximum coverage problems: The trade-off between anarchy and stability,” in 2019 18th European Control Conference (ECC), pp. 1043–1048, IEEE, 2019.