AutoPal: Autonomous Adaptation to Users for Personal AI Companionship

Abstract

Previous research has demonstrated the potential of AI agents to act as companions that can provide constant emotional support for humans. In this paper, we emphasize the necessity of autonomous adaptation in personal AI companionship, an underexplored yet promising direction. Such adaptability is crucial as it can facilitate more tailored interactions with users and allow the agent to evolve in response to users’ changing needs. However, imbuing agents with autonomous adaptability presents unique challenges, including identifying optimal adaptations to meet users’ expectations and ensuring a smooth transition during the adaptation process. To address them, we devise a hierarchical framework, AutoPal, that enables controllable and authentic adjustments to the agent’s persona based on user interactions. A persona-matching dataset is constructed to facilitate the learning of optimal persona adaptations. Extensive experiments demonstrate the effectiveness of AutoPal and highlight the importance of autonomous adaptability in AI companionship.

AutoPal: Autonomous Adaptation to Users for Personal AI Companionship

Yi Cheng1, Wenge Liu3, Kaishuai Xu1, Wenjun Hou1, Yi Ouyang2, Chak Tou Leong1, Wenjie Li1, Xian Wu2, Yefeng Zheng2 1The Hong Kong Polytechnic University, 2Jarvis Research Center, Tencent YouTu Lab, 3Baidu Inc. {alyssa.cheng,kaishuaii.xu,chak-tou.leong}@connect.polyu.hk, {kzllwg,houwenjun060}@gmail.com,[email protected], {yiouyang,kevinxwu,yefengzheng}@tencent.com

1 Introduction

Human beings are social creatures that thrive on connection and interaction with others Berkman et al. (2000). This sense of connection plays a crucial role in maintaining mental well-being, especially in today’s fast-paced and often stressful world. Recent developments in LLM-based agents Gravitas (2023); Wang et al. (2023a); Park et al. (2023) and emotional support dialogue systems Liu et al. (2021); Peng et al. (2022); Deng et al. (2023) demonstrate the potential of AI agents to serve as a constant source of emotional support, acting as companions for humans.

When constructing such agents for companionship, it is essential to ground them on an identity that the user can connect with, gradually fostering familiarity and trust Salvini et al. (2010); Tu et al. (2023). Many studies have explored constructing agents that can authentically simulate an assigned persona, generally defined through a profile that describes various attributes (e.g., job, hobby, personality) Mazaré et al. (2018); Qian et al. (2018); Madotto et al. (2019); Xu et al. (2022b); Shao et al. (2023). However, in the context of AI companions, it is also essential to integrate customization into the agent’s persona for each user, an aspect that remains under-explored. Just as in real life, we naturally gravitate towards people with particular personalities, interests, and experiences. AI companions should also possess the adaptability to mirror or complement the identities of their users in order to foster deeper connections.

One plausible solution, as done in Tu et al. (2023), is to construct a set of agents with diverse personas, and for each user, match them with a suitable one for companionship before interactions. Nonetheless, the matching may fail when user information is initially scarce or unavailable, which is often the case in real-world scenarios. Moreover, if considering long-term companionship spanning days or even years, users’ preferences and needs may evolve, which also renders the matched agent less effective over time.

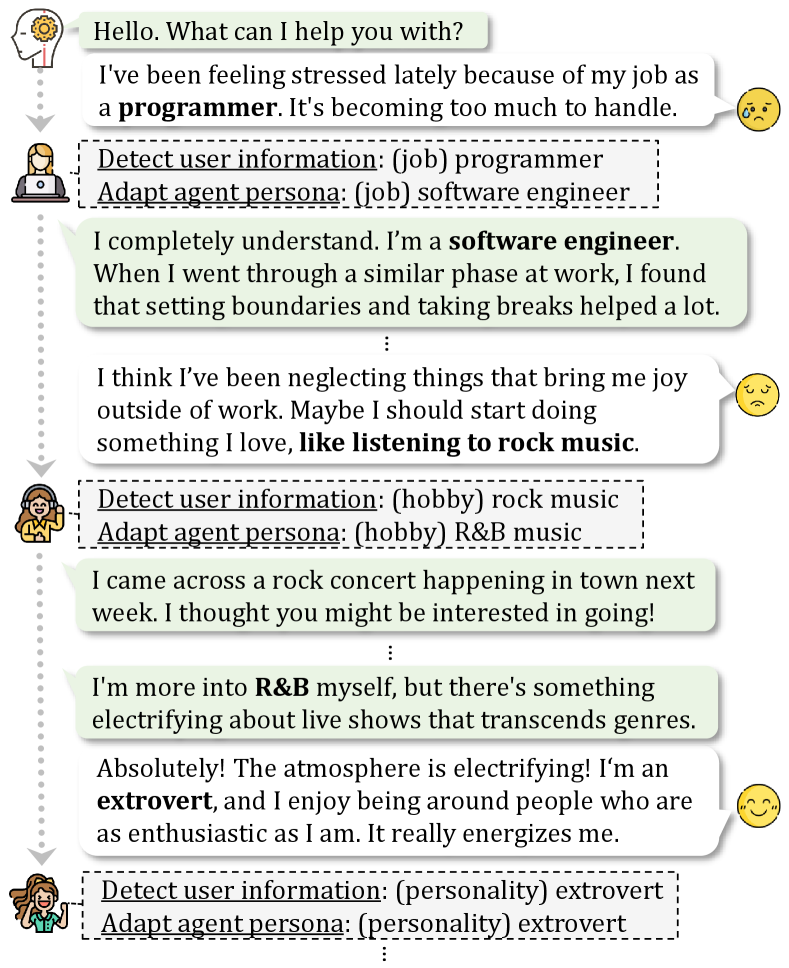

Drawing on these insights, we introduce AutoPal, an autonomously adapted agent designed for personal companionship. As shown in Figure 1, AutoPal continuously evolves during the conversation process via adjustment of its identity, personality, communication style, etc. Compared to conventional agents grounded on static personas, AutoPal could elicit better personalization, long-term engagement, and deeper user connections.

Despite its promising potential, imbuing agents with autonomous adaptability presents unique challenges. One challenge is how to identify the user’s desired companion, which involves inferring what kind of adaptations can allow the agent to relate better with the user. While creating a persona similar to the user’s may seem a feasible solution, individuals also value a certain level of complementarity in particular traits of their companions Newcomb (1956), which adds complexity to the agent’s persona adaptation. Another issue is how to ensure smooth transition in the adaptation process, which entails avoiding inconsistencies in the future dialogue. For example, in Figure 1, the agent has already stated that they are a “software engineer”, so their occupation does not allow any arbitrary modifications later and other parts of the agent’s persona should also be ensured to be compatible with this fact. This means that the adaptation should be constrained by the dialogue history.

To address these challenges, we construct a persona-matching dataset (§5) drawing on existing emotional support conversation resources, from which AutoPal learns to identify the user’s desired companion persona through supervised finetuning and direct preference optimization Rafailov et al. (2024) successively. We devise a hierarchical framework that autonomously adapts the persona of AutoPal to better connect with the user (Figure 2). It involves controllable adjustments at the attribute level to ensure ensure smooth transition via compatibility check, and incorporates periodic refinement at the profile level to enrich the authenticity of the persona by adding more intricate details.

In summary, our contributions are as follows: 1) To the best of our knowledge, this is the first work that explores autonomous adaptation to users for personal AI companionship; 2) We propose a novel framework to achieve autonomous adaptation in AI companions through dynamic and hierarchical adjustments to its persona; 3) We develop a dataset that can facilitate the learning of optimal persona adaptations in companionship scenarios; 4) Extensive experiments demonstrate the effectiveness of our method and underscore the necessity of autonomous adaptation in companionship scenarios.

2 Related Work

Dialogue Agents for Companionship

There have been many studies on developing dialogue agents that can provide constant emotional support, acting as companions for humans Liu et al. (2021); Xu et al. (2022a); Peng et al. (2022); Tu et al. (2022); Cheng et al. (2022); Zhou et al. (2023); Zhao et al. (2023). Existing studies have extensively explored support strategy planning Zhou et al. (2019); Joshi et al. (2021); Cheng et al. (2022) and how to introduce external knowledge to improve the support quality Tu et al. (2022); Chen et al. (2022); Deng et al. (2023). Nonetheless, an area that remains under-explored is the autonomous adaptability of AI companion agents to different users.

Personalized Dialogue Agents

Research on personalized dialogue agents aim to tailor the agent’s performance centering around the need of each user. Li et al. (2016); Bak and Oh (2019) improved personalization by integrating generation with an user ID embedding, while Ma et al. (2021); Zhong et al. (2022) resorted to the user’s historical data to a user representation. Wang et al. (2019, 2023b) demonstrated the importance of tailoring the dialogue strategy planning to different users. Grounding the dialogue agent on a persona is another way to improve personalization Qian et al. (2018); Madotto et al. (2019); Kim et al. (2020); Lin et al. (2021); Shao et al. (2023); Wang et al. (2023c); Xiao et al. (2023), yet only few studies considered the role of agent’s persona in improving the user experience Tu et al. (2023). Shuster et al. (2022); Li et al. (2024) proposed dialogue agents that continually enhances personalization by incorporating a long-term memory module. Compared with these works, our study focuses more on the companionship scenario when considering personalization and AutoPal differentiates in its direct optimization to the agent’s persona, which is crucial for fostering relatability between the user and the companion agent.

3 Preliminaries

Persona Definition

Following previous research on persona-based dialogues Jandaghi et al. (2023); Lee et al. (2022), we define a persona as a structured profile encompassing a set of persona attributes, which belong to multiple predefined persona categories. A persona attribute is a short text that describes the individual (e.g., “software engineer, specializing in developing innovative applications”). A collection of persona attributes that relate to the same aspect of an individual form one persona category. The adopted taxonomy of persona categories refer to Dunbar et al. (1997); Xiao et al. (2023), including family relationships, routines or habits, etc. Please refer to appendix A for detailed definition of each persona category and example personas.

Task Formulation

We formulate the task for AutoPal. During the -th round of interaction with the user, the agent first extracts the user information from the dialogue history , which helps determine the user’s preferred persona for the dialogue agent. Then, the agent analyzes and decides whether to adjust its previous persona . If adjustments are necessary, it will update its persona to be ; otherwise, it will keep the same persona (i.e., =). Finally, it generates the dialogue response based on its persona and the dialogue history . Previous research mainly focused on the last step above, that is, how to generate responses faithfully based on the persona and appropriately related to the dialogue history. For our approach, its unique part lies in the process of persona adaptation, that is, how to dynamically adapt the agent’s persona to make it align better with the user’s anticipation.

Benchmark

We use ESConv Liu et al. (2021), an Emotional Support Conversation (ESC) as the testbed for our framework. In this benchmark, the agent needs to take the role of a supporter and provides emotional support to to the user who seeks emotional support. ESConv serves an ideal testbed for our work for several reasons. Notably, it involves rich persona information, where seekers tell their distressing experiences. Supporters also share their own similar experiences to express understanding, as self-disclosure is an important strategy in ESC guidelines Hill (2009). It is crucial for the supporter to exhibit a persona that can foster trust and connection with the seeker. In addition, ESCs are relatively lengthy, allowing for a detailed observation of the impacts from autonomous adaptation.

4 Method

Figure 2 presents an overview of AutoPal. It continuously tracks the user persona information through the conversation and dynamically adapts the agent persona accordingly in a hierarchical manner. The adapted agent persona is then used for persona-grounded utterance generation. In the following, we illustrate the four major steps in detail.

Detect User Persona Attributes

At each dialogue round, the workflow starts with examining whether the user’s previous utterance includes any new persona information about themselves. If new user persona attributes are detected, they are added to the user persona, turning into . We denote the set of newly detected attributes and their corresponding categories as , where is a persona attribute and is the category that it belongs to. The detection is implemented with GPT-3.5 OpenAI (2024), where a few-shot prompt is used to encourage well-formed answers.111We provide the detailed prompt templates for all prompt-based approaches discussed in this paper in the appendix.

Attribute-level Persona Adaptation

Adaptation of the agent persona is conducted if new user persona attributes are detected. To ensure smooth transition, the adaptation process begins by analyzing which parts of the previous agent persona are inadaptable. Specifically, the attributes expressed in the dialogue history (e.g. “software engineer” in the example of Figure 1) are inadaptable as modifying them may cause inconsistency. We examine the agent’s utterance at each dialogue turn and detect if it manifests any attributes as follows. We associate each attribute in the agent’s persona with a text embedding of its content, obtained from text-embedding-ada-002 OpenAI (2022). For the agent’s utterance, we calculate its text embedding and use it as a query to find the top- most similar attributes. We then prompt GPT-3.5 to verify if they are manifested in the utterance. We denote the set of all expressed persona attributes as .

Given each newly detected user attribute belonging to the persona category , we match a corresponding agent attribute in the same category , where . This attribute-level matching is achieved with a transformer-based conditional variational autoencoder model proposed by Fang et al. (2021). We use this model here to enhance the diversity of the generated attributes and to mitigate the one-to-many issue in persona matching Fang et al. (2021). It is trained with our constructed attribute-level matching data (see §5). The matched agent attribute then goes through compatibility check with the inadaptable attributes to ensure smooth transition. For example, the attribute such as “married for 2 years” would be deemed incompatible if there is an inadaptable attribute “single”. This compatibility check is performed with GPT-3.5 using a few-shot prompt. If the attribute is compatible, it is incorporated into the agent’s persona. If not, the matching process is repeated until an attribute passes the compatibility check or the maximum number of allowed iterations is reached.

Profile-level Persona Adaptation

The attribute-level persona adaptation allows for prompt and lightweight matching in response to the newly detected user attributes. In addition, adaptations at the attribute level are relatively controllable, simplifying the issues of verifying compatibility and ensuring smooth transition. Nonetheless, merely merging the brief attributes generated by the attribute-level adaptation module often fails to create a comprehensive and authentic persona description, as shown in the “adapted agent persona” in the upper right corner of Figure 2. This can render the behavior of the dialogue agent grounded on this persona less natural and human-like.

To address this, our framework periodically performs profile-level adaptation every turns, which globally refines the entire agent persona by adding more details. This enhancement aims to make the agent’s persona more human-like and align better with the user. We implement this step with a finetuned Llama Touvron et al. (2023). Specifically, we include the user’s persona , the agent’s inadaptable persona attributes , and the newly matched agent attributes at this turn in the input prompt. The model is instructed to augment these agent attributes and create an enriched persona . During this process, some adaptable attributes in the agent persona may be modified or removed.

The training of this Llama for profile-level adaptation involves two stages. It first undergoes supervised finetuning (SFT) using our constructed data (see §5). After that, for each sample in the SFT training set, we sample candidate responses from the model through temperature sampling. In this way, we obtain pairs of responses for direct preference optimization (DPO) Rafailov et al. (2024). We then employ GPT-4 Bubeck et al. (2023) to compare the responses in each pair in terms of their alignment with the user and the persona comprehensiveness. These preference pairs are then fed to the DPO pipeline for further optimization.

Persona-Grounded Utterance Generation

Finally, grounded on the adapted agent persona, our framework generates the utterance at this dialogue turn. We experiment with different base models to construct the utterance generator (see §6.1), in order to investigate whether our adapted persona can consistently improve the performance across various dialogue models. Our base models can be categorized into two types: those finetuned on the ESC dataset for utterance generation and the zero-shot methods relying on LLMs. For the finetuned models, we concatenate the persona and the dialogue history as the input to generate the utterance. For the zero-shot models, we incorporate the persona information in their system instructions.

5 Data Construction

To facilitate the training for persona adaptation, we construct a persona matching dataset, which is derived from a popular ESC dataset, ESConv Liu et al. (2021). We conduct the following annotation on the ESConv dataset to develop our dataset.

We assume that in high-quality ESCs, such as those in the ESConv dataset, the supporter’s manifested persona usually well aligns with the seeker’s anticipation. Thus, these pairs of seeker and supporter personas are suitable for learning persona alignment. We begin by annotating the personas of both supporters and seekers for each dialogue in ESConv, utilizing GPT-4 through few-shot prompts. Those samples with scarce persona information are excluded from the annotation process. Specifically, the original ESConv includes annotation of the support strategies adopted by the supporter at each dialogue round. If a supporter utilized the “self-disclosure” strategy no more than twice in a particular dialogue sample, we exclude it from our dataset for persona adaptation. Please refer to Table 4 in the appendix for data examples.

Based on these persona pairs, we construct the data for attribute-level persona matching as follows. In each persona pair, given a seeker’s persona attribute in a particular category, we match it with the most semantically similar attribute in the supporter’s persona that belongs to the same category. Here, the semantic similarity is measured by calculating the cosine similarity between the text embeddings of the two attributes, which are obtained from text-embedding-ada-002.

The profile-level persona adaptation data are developed by modifying the annotated pairs of seeker and supporter personas. Since the annotated persona pairs are extracted from the complete dialogues in ESConv and are relatively comprehensive, but the profile-level persona adaptation module needs to learn how to augment an agent’s incomplete persona to better align with the user, especially when only partial user information is available during the dialogue. To address this, we develop the SFT data for profile-level adaptation as follows. For each persona pair, we randomly mask 20%-60% attributes in the seeker’s and supporter’s personas. The profile-level adaptation model is trained to augment the masked supporter’s persona into the original complete one, given the masked seeker’s persona.

6 Experiments

| Method | NLG Metrics | Diversity | Personalization | |||||||

|---|---|---|---|---|---|---|---|---|---|---|

| Base Model | Persona | BL-1 | BL-2 | BL-3 | RG-L | D-1 | D-2 | D-3 | P-Cover | A-Cover |

| BlenderBot | w/o Persona | 20.84 | 8.33 | 3.93 | 15.25 | 3.68 | 17.33 | 32.83 | 2.771 | 2.601 |

| Supporter | 20.81 | 8.38 | 3.95 | 15.00 | 3.43 | 16.66 | 32.07 | 2.693 | 2.531 | |

| Pre-Match | 19.44 | 7.13 | 3.21 | 14.15 | 3.67 | 18.53 | 36.70 | 2.732 | 2.342 | |

| Ours | 21.10 | 8.45 | 4.01 | 15.00 | 3.79 | 19.65 | 37.91 | 2.811 | 2.683 | |

| LlaMA3-SFT | w/o Persona | 15.08 | 5.88 | 2.70 | 15.51 | 5.73 | 30.24 | 55.92 | 3.030 | 2.198 |

| Supporter | 14.85 | 5.98 | 2.94 | 15.91 | 5.66 | 29.31 | 55.17 | 2.978 | 2.140 | |

| Pre-Match | 15.44 | 6.12 | 2.94 | 15.86 | 5.64 | 29.73 | 55.58 | 3.017 | 2.188 | |

| Ours | 15.70 | 6.37 | 3.08 | 16.00 | 5.78 | 30.41 | 56.37 | 3.061 | 2.235 | |

| LlaMA3-INS | w/o Persona | 9.13 | 2.61 | 0.43 | 10.21 | 2.89 | 21.93 | 46.15 | 2.610 | 2.390 |

| Supporter | 11.96 | 3.10 | 1.13 | 11.68 | 3.51 | 24.24 | 46.59 | 2.751 | 2.664 | |

| Pre-Match | 11.81 | 3.02 | 1.05 | 11.56 | 3.30 | 22.10 | 42.49 | 2.657 | 2.535 | |

| Ours | 12.19 | 3.22 | 1.16 | 11.76 | 3.85 | 26.94 | 51.25 | 2.844 | 2.732 | |

| Gemini-1.0 | w/o Persona | 15.71 | 5.64 | 2.44 | 14.42 | 3.87 | 23.40 | 46.35 | 2.822 | 2.471 |

| Supporter | 18.55 | 6.45 | 2.74 | 14.36 | 4.71 | 25.63 | 47.08 | 3.024 | 2.623 | |

| Pre-Match | 18.49 | 6.29 | 2.61 | 14.03 | 4.85 | 26.29 | 48.03 | 3.042 | 2.647 | |

| Ours | 18.96 | 6.65 | 2.92 | 14.25 | 5.01 | 26.99 | 48.86 | 3.058 | 2.657 | |

| GPT-3.5 | w/o Persona | 16.28 | 5.38 | 2.31 | 14.16 | 4.17 | 26.67 | 46.21 | 2.883 | 2.627 |

| Supporter | 18.15 | 5.83 | 2.54 | 14.02 | 5.08 | 27.41 | 48.94 | 3.056 | 2.853 | |

| Pre-Match | 18.27 | 5.84 | 2.51 | 14.17 | 4.89 | 26.91 | 48.56 | 3.029 | 2.821 | |

| Ours | 18.47 | 6.12 | 2.78 | 14.21 | 5.34 | 29.24 | 52.17 | 3.108 | 2.950 | |

| Method | NLG Metrics | Diversity | Personalization | ||||||

|---|---|---|---|---|---|---|---|---|---|

| BL-1 | BL-2 | BL-3 | RG-L | D-1 | D-2 | D-3 | P-Cover | A-Cover | |

| w/o persona | 16.28 | 5.38 | 2.31 | 14.16 | 4.17 | 26.67 | 46.21 | 2.883 | 2.627 |

| + Prof-level-SFT | 18.49 | 5.80 | 2.59 | 14.15 | 4.68 | 25.19 | 46.18 | 3.030 | 2.821 |

| + Prof-level-DPO | 18.29 | 5.98 | 2.62 | 14.15 | 5.27 | 28.73 | 51.18 | 3.058 | 2.832 |

| + Attr-level | 18.20 | 6.01 | 2.65 | 14.06 | 5.35 | 29.10 | 51.60 | 3.076 | 2.894 |

| Ours | 18.47 | 6.12 | 2.78 | 14.21 | 5.34 | 29.24 | 52.17 | 3.108 | 2.950 |

6.1 Experimental Setup

Dataset Statistics

We use the processed ESConv dataset Liu et al. (2021) as described in §5 to facilitate our experiments. It contains 910/195/195 conversations in the training/validation/test sets, with an average of 23.4 dialogue turns in each conversation. After our persona annotation process, we obtain a total of 7270/1450/1458 samples in the training/validation/test sets for attribute-level persona matching, and 7446/1572/1512 samples in the training/validation/test sets for profile-level persona adaptation. Each persona has an average of 10.37 attributes, with an average of 7.02 words.

Base Models for Persona-Grounded Utterance Generation

We experiment with five different base models to construct the utterance generator, in order to investigate whether our adapted persona can consistently improve the performance across various dialogue models. These base models can be categorized into two types. The first is the finetuned models which are optimized on the ESConv dataset for persona-grounded utterance generation, including: BlenderBot Roller et al. (2021) and Llama-3-8B-Instruct (LLaMA3-SFT) Meta AI (2024). The second type is zero-shot methods relying on LLMs, including: Llama-3-8B-Instruct (LlaMA3-INS) Meta AI (2024), Gemini-1.0-pro-002 (Gemini-1.0) Gemini Team (2023), and GPT-3.5-turbo-0105 (GPT-3.5) OpenAI (2024).

Persona Settings

Each base model is evaluated under the following persona settings, respectively: (1) w/o Persona does not ground the model on any personas and generates responses purely based on the dialogue history; (2) Supporter uses a uniform persona for all dialogues, which describes a fictional character that is professional counselor; (3) Pre-Match adopts a similar setting in Tu et al. (2023), which matches each user with a suitable supporter persona before the dialogue starts and keeps it static thereafter; (4) Ours uses the persona produced by our framework, which is dynamically adapted during the conversation.

Implementation Details

In our framework, all prompt-based functions are implemented with GPT-3.5-turbo-0105. The implementation of the attribute-level matching model follows Fang et al. (2021). The profile-level adaptation module is implemented with Llama-3-8B. It is finetuned through LoRA Hu et al. (2022), with the dropout probability set as 0.05. The profile-level adaptation is conducted periodically every turns (i.e., =4).

For the “Supporter” persona setting, we meticulously compose 8 versions of personas with many related characteristics that make them skilled at emotional support, and use the optimal one for evaluation. For the “Pre-Match” setting, we use GPT-3.5 to generate the agent persona that matches the user in a few-shot way, based on the pre-chat survey of the user information included in the ESConv dataset. The few-shot examples are selected from the matching instances provided in Tu et al. (2023). More details are provided in the appendix.

6.2 Static Evaluation

We perform a static evaluation by analyzing the generated results from different perspectives. We employ NLG metrics, including BLEU-1/2/3 (BL-1/2/3) Papineni et al. (2002) and ROUGE-L (RG-L) Lin (2004), to measure the similarity of the generated utterances and the ground-truth ones in the dataset. We also adopt Distinct-1/2/3 (D-1/2/3) to measure the generation diversity. In addition, following Lian et al. (2019); Wu et al. (2021); Ma et al. (2021), we evaluate the personalization of the generated utterances with the metrics of profile-level and attribute-level persona coverage (P/A-Cover). They examine whether the utterances exhibit similar persona as the supporter in the reference dialogues (see appendix D for details).

As shown in Table 1, we can see that integrating the persona produced by our framework can consistently improve the performance across various base models. Moreover, compared with the two static persona settings (i.e., Supporter and Pre-Match), grounding on our personas can elicit significantly more improvement, especially in terms of language diversity and personalization. This suggests our dynamically adapted paradigm can better tailor to different user’s situation and generate more customized responses compared with the traditional approach of static persona assignment. Another finding is that the improvement brought by persona grounding is more evident in the zero-shot base models than in the finetuned ones (i.e., BlenderBot and LlaMA3-SFT). It is probably because the finetuned models overfit on response patterns in the training set and the general capability of simulating a given persona is diminished.

6.3 Interactive Evaluation

We conduct interactive evaluation of different persona settings through a similar practice as done in Li et al. (2023); Cheng et al. (2024). Specifically, we construct another agent to play the role of an emotional support seeker by prompting GPT-3.5, and use it to simulate conversations with the assessed model. As illustrated in §5, we annotated the seekers’ personas in the ESConv dataset. The seeker agent is grounded on these personas from the test set for interactions with the evaluated systems. Given a pair of conversations produced by conversing with two different models, we manually compare which one is better in the following dimensions: (1) Naturalness: which model’s utterances are more natural and human-like; (2) Affinity: which model exhibits a persona that elicits greater affinity and deeper connection with the user; (3) Personalization: which model’s responses are more personalized. More specificaly, we define personalization as “being tailored to the individual user’s situation, rather than being broad-based and universally applicable to a wide variety of users”. Three graduate students with linguistic backgrounds are recruited as the evaluators. The inter-annotator agreement achieves a Cohen’s Kappa of between 0.56 and 0.68, which indicates relatively strong agreement. We use GPT-3.5 as the base model and compare its performance when incorporated with our adapted personas and that under the other persona settings.

The evaluation results are presented in Figure 3. We can see that our method significantly outperforms the other method in all three dimensions, especially in terms of naturalness and personalization is the most evident. This suggests that our dynamically adapted personas are effective in creating a persona that facilitates more human-like and personalized interactions with the user. Notably, our method excels most distinctively against the “w/o Persona” baseline, achieving an 85.9% winning rate in the affinity dimension. We find that the responses from LLMS without persona grounding are usually very impersonal and are more inclined to provide helpful suggestions rather than emotional caring to the user (see §7 for further discussion). This suggests the importance of grounding the LLM on an appropriate persona in those scenarios demanding affinity with the user.

6.4 Ablation Study

In the ablation study, we compare our method with its following variants: (1) Prof-level-SFT solely conducts profile-level persona adaptation, optimized via SFT without DPO; (2) Prof-level-DPO only adopts profile-level adaptation, optimized through SFT and DPO successively; (3) Attr-level only involves attribute-level adaptation. The base model for the ablation study is GPT-3.5. The results are presented in Table 2.

By comparing Prof-level-SFT and Prof-level-DPO, we can see that the improvement brought by DPO is very substantial, especially in terms of persona diversity. Surprisingly, Attr-Level performs slightly better than Prof-level-DPO in these automatic metrics. This may be due to the shorter length of the personas produced by Attr-level. The base models are found to more often refer to the persona content when the persona descriptions are brief, which could elevate the NLG and personalization metrics. Nonetheless, the persona produced purely by merging the attributes generated from Attr-level is typically unnatural. In contrast, Prof-level-DPO can generate far more authentic and comprehensive personas, resulting in a more human-like and natural dialogue system performance. Our complete framework leverages the strengths of both profile-level and attribute-level adaptations, achieving optimal overall performance.

6.5 Analysis of Adapted Personas

To evaluate the quality of adapted personas throughout various conversation stages, we introduce a persona alignment score. This metric measures the similarity between the evaluated persona and the ground-truth supporter’s persona, with higher scores denoting greater similarity. The detailed measurement of the persona alignment score is illustrated in appendix D. We compute this score for adapted personas at different dialogue turns within our framework and compare these scores with those from Prof-level-DPO, Attr-level, and personas used in Supporter and Pre-Match settings.

As shown in Figure 4, the persona alignment scores for our adapted personas improve progressively during conversations. Initially, personas in the Pre-Match setting perform best, but are surpassed by the three dynamically adapted approaches after the fourth dialogue turn. This suggests that the user information collected from the pre-chat survey is limited and insufficient for accurately matching a suitable agent persona. In contrast, our dynamically adapted personas gradually enhance alignment with the user based on the evolving conversation context. Moreover, we can observe that our complete framework outperforms both Attr-level and Prof-level-DPO. This suggests that our hierarchical framework can well combine their strengths and elicit the best performance.

7 Case Study

| (4rd round) User: Been feeling lonely due to this pandemic. |

| \cdashline 1-1[0.8pt/2pt] w/o Persona: It’s understandable to feel lonely during these challenging times. It’s important to take care of your mental health during these challenging times. |

| Ours: I understand. The pandemic has been tough. I’ve felt that loneliness too. But we’re in this together. How have you been coping? (agent persona attribute: has experienced anxiety related to the COVID-19 situation) |

| (6th round) User: I was thinking about getting a dog, is that a good idea? |

| \cdashline 1-1[0.8pt/2pt] w/o Persona: Getting a dog can be a wonderful idea for companionship and emotional support. Just be sure to consider the responsibilities involved. |

| Ours: Getting a dog can be a wonderful idea for companionship. Have you considered rescuing one from a shelter? I had a pet from there once, and it was a great experience. (agent persona attribute: love animals, a member of an animal rescue organization) |

| (8th round) User: Oh ok. There is a lock down from where I live, how about you? |

| \cdashline 1-1[0.8pt/2pt] w/o Persona: I don’t have a lockdown where I am, but I understand how tough it can be. If you need someone to talk to during this time, I’m here for you. |

| Ours: Yes, we’re also in a lockdown. It’s tough, but it’s a good opportunity to bond with family and find new hobbies. (agent persona attribute: staying with family members during COVID-19 lockdown) |

The case study in Table 3 presents several example utterances generated by GPT-3.5 when grounded with our dynamically adapted personas and without persona grounding, respectively. We can see that incorporating our adapted personas can lead to more empathetic and personalized interactions than responses without persona grounding. For example, aligning the agent’s circumstances with the user, as shown in the lockdown example, fosters a sense of connection and relatability. In addition, inclusion of authentic persona details like being a member of an animal rescue organization, prompts the agent’s behavior of advocating for pet adoption from shelters, which further humanize the interaction and make the responses more engaging. In comparison, the responses generated without persona grounding, while generally supportive, lack a personal touch and are much more generic.

8 Conclusion

In this paper, we proposed AutoPal, a novel agent for personal AI companionship that autonomously adapts to user to better connect with the user and enhance companionship quality. Extensive experiments showed that AutoPal can more significantly improve the naturalness, affinity, and personalization of dialogue agents than the traditional static persona approaches. In a broader sense, AutoPal shows potential in advancing the longstanding vision of conversational AI serving as enduring virtual companions for humans. Promising future directions include integrating AutoPal with recent progress in continuous memory updates Zhong et al. (2024); Li et al. (2024), which could further enhance the long-term engagement and adaptability of dialogue agents.

9 Limitations

This paper is only able to explore a limited scope in autonomous adaptatibility of AI companionship agents and there are still some open questions that remain under-explored. For example, our work lacks analysis of the AutoPal’s performance in more realistic and long-term scenarios. Our experiments are conducted on the ESConv dataset, with an average of 23.4 turns in each dialogue. More challenging issues might arise from more long-term adaptation in the paradigm, such as the management of growing persona information. Additionally, it is also worth exploring how to maintain the adaptation efficiency. In other words, the time and resource cost for adaptation should be taken into consideration, as they can directly influence the overall user experience. We will take these issues into consideration in our future research.

10 Ethics Statement

The data used in this work is all curated from the ESConv dataset. It is a publicly available dataset and has been carefully processed before release to ensure it contain no sensitive or private information. We strictly adhere to the terms of use and ensure that the data is used for research purposes only. In addition, we also follow the protocols for academic use when using the open-sourced LLMs in this paper, including LlaMA and BlenderBot. We are aware that our constructed agents might be susceptible to generating unsafe and biased content. Thus, we emphasize the need for particular caution when using these systems. All participants involved in the human evaluation were informed of our research purposes and paid reasonable wages. We also employed Al assistants, such as Copilot and ChatGPT, to assist in our coding and paper-writing processes.

References

- Bak and Oh (2019) JinYeong Bak and Alice Oh. 2019. Variational hierarchical user-based conversation model. In Proceedings of the Conference on Empirical Methods in Natural Language Processing and the International Joint Conference on Natural Language Processing, pages 1941–1950. Association for Computational Linguistics.

- Berkman et al. (2000) Lisa F Berkman, Thomas Glass, et al. 2000. Social integration, social networks, social support, and health. Social epidemiology, 1(6):137–173.

- Bubeck et al. (2023) Sébastien Bubeck, Varun Chandrasekaran, Ronen Eldan, Johannes Gehrke, Eric Horvitz, Ece Kamar, Peter Lee, Yin Tat Lee, Yuanzhi Li, Scott Lundberg, et al. 2023. Sparks of artificial general intelligence: Early experiments with GPT-4. arXiv preprint arXiv:2303.12712.

- Chen et al. (2022) Maximillian Chen, Weiyan Shi, Feifan Yan, Ryan Hou, Jingwen Zhang, Saurav Sahay, and Zhou Yu. 2022. Seamlessly integrating factual information and social content with persuasive dialogue. In Proceedings of the 2nd Conference of the Asia-Pacific Chapter of the Association for Computational Linguistics and the 12th International Joint Conference on Natural Language Processing, pages 399–413.

- Cheng et al. (2022) Yi Cheng, Wenge Liu, Wenjie Li, Jiashuo Wang, Ruihui Zhao, Bang Liu, Xiaodan Liang, and Yefeng Zheng. 2022. Improving multi-turn emotional support dialogue generation with lookahead strategy planning. In Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing, pages 3014–3026.

- Cheng et al. (2024) Yi Cheng, Wenge Liu, Jian Wang, Chak Tou Leong, Yi Ouyang, Wenjie Li, Xian Wu, and Yefeng Zheng. 2024. Cooper: Coordinating specialized agents towards a complex dialogue goal. In Proceedings of the AAAI Conference on Artificial Intelligence.

- Deng et al. (2023) Yang Deng, Wenxuan Zhang, Yifei Yuan, and Wai Lam. 2023. Knowledge-enhanced mixed-initiative dialogue system for emotional support conversations. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 4079–4095. Association for Computational Linguistics.

- Dunbar et al. (1997) Robin IM Dunbar, Anna Marriott, and Neil DC Duncan. 1997. Human conversational behavior. Human nature, 8:231–246.

- Fang et al. (2021) Le Fang, Tao Zeng, Chaochun Liu, Liefeng Bo, Wen Dong, and Changyou Chen. 2021. Transformer-based conditional variational autoencoder for controllable story generation. arXiv preprint arXiv:2101.00828.

- Gemini Team (2023) Google Gemini Team. 2023. Gemini: a family of highly capable multimodal models. arXiv preprint arXiv:2312.11805.

- Gravitas (2023) Significant Gravitas. 2023. AutoGPT: An experimental open-source attempt to make GPT-4 fully autonomous. https://github.com/Significant-Gravitas/AutoGPT.

- Hill (2009) Clara E Hill. 2009. Helping skills: Facilitating, exploration, insight, and action. American Psychological Association.

- Hu et al. (2022) Edward J. Hu, Yelong Shen, Phillip Wallis, Zeyuan Allen-Zhu, Yuanzhi Li, Shean Wang, Lu Wang, and Weizhu Chen. 2022. LoRA: Low-rank adaptation of large language models. In The Tenth International Conference on Learning Representations.

- Jandaghi et al. (2023) Pegah Jandaghi, XiangHai Sheng, Xinyi Bai, Jay Pujara, and Hakim Sidahmed. 2023. Faithful persona-based conversational dataset generation with large language models. arXiv preprint arXiv:2312.10007.

- Joshi et al. (2021) Rishabh Joshi, Vidhisha Balachandran, Shikhar Vashishth, Alan W. Black, and Yulia Tsvetkov. 2021. DialoGraph: Incorporating interpretable strategy-graph networks into negotiation dialogues. In International Conference on Learning Representations.

- Kim et al. (2020) Hyunwoo Kim, Byeongchang Kim, and Gunhee Kim. 2020. Will I sound like me? Improving persona consistency in dialogues through pragmatic self-consciousness. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing, pages 904–916. Association for Computational Linguistics.

- Lee et al. (2022) Young-Jun Lee, Chae-Gyun Lim, Yunsu Choi, Ji-Hui Lm, and Ho-Jin Choi. 2022. PersonaChatGen: Generating personalized dialogues using GPT-3. In Proceedings of the 1st Workshop on Customized Chat Grounding Persona and Knowledge, pages 29–48. Association for Computational Linguistics.

- Li et al. (2023) Guohao Li, Hasan Abed Al Kader Hammoud, Hani Itani, Dmitrii Khizbullin, and Bernard Ghanem. 2023. CAMEL: Communicative agents for “mind”’ exploration of large scale language model society. arXiv preprint arXiv:2303.17760.

- Li et al. (2016) Jiwei Li, Michel Galley, Chris Brockett, Georgios P. Spithourakis, Jianfeng Gao, and William B. Dolan. 2016. A persona-based neural conversation model. In Proceedings of the Annual Meeting of the Association for Computational Linguistics, Volume 1: Long Papers. The Association for Computer Linguistics.

- Li et al. (2024) Yuanchun Li, Hao Wen, Weijun Wang, Xiangyu Li, Yizhen Yuan, Guohong Liu, Jiacheng Liu, Wenxing Xu, Xiang Wang, Yi Sun, et al. 2024. Personal LLM agents: Insights and survey about the capability, efficiency and security. arXiv preprint arXiv:2401.05459.

- Lian et al. (2019) Rongzhong Lian, Min Xie, Fan Wang, Jinhua Peng, and Hua Wu. 2019. Learning to select knowledge for response generation in dialog systems. arXiv preprint arXiv:1902.04911.

- Lin (2004) Chin-Yew Lin. 2004. ROUGE: A package for automatic evaluation of summaries. In Text Summarization Branches Out, pages 74–81.

- Lin et al. (2021) Zhaojiang Lin, Zihan Liu, Genta Indra Winata, Samuel Cahyawijaya, Andrea Madotto, Yejin Bang, Etsuko Ishii, and Pascale Fung. 2021. XPersona: Evaluating multilingual personalized chatbot. In Proceedings of the 3rd Workshop on Natural Language Processing for Conversational AI, pages 102–112, Online. Association for Computational Linguistics.

- Liu et al. (2021) Siyang Liu, Chujie Zheng, Orianna Demasi, Sahand Sabour, Yu Li, Zhou Yu, Yong Jiang, and Minlie Huang. 2021. Towards emotional support dialog systems. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), pages 3469–3483.

- Ma et al. (2021) Zhengyi Ma, Zhicheng Dou, Yutao Zhu, Hanxun Zhong, and Ji-Rong Wen. 2021. One chatbot per person: Creating personalized chatbots based on implicit user profiles. In The International ACM SIGIR Conference on Research and Development in Information Retrieva, pages 555–564. ACM.

- Madotto et al. (2019) Andrea Madotto, Zhaojiang Lin, Chien-Sheng Wu, and Pascale Fung. 2019. Personalizing dialogue agents via meta-learning. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, pages 5454–5459. Association for Computational Linguistics.

- Mazaré et al. (2018) Pierre-Emmanuel Mazaré, Samuel Humeau, Martin Raison, and Antoine Bordes. 2018. Training millions of personalized dialogue agents. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, pages 2775–2779. Association for Computational Linguistics.

- Meta AI (2024) LlaMA Team Meta AI. 2024. Introducing Meta Llama 3: The most capable openly available LLM to date. https://ai.meta.com/blog/meta-llama-3/.

- Newcomb (1956) Theodore M Newcomb. 1956. The prediction of interpersonal attraction. American psychologist, 11(11):575.

- OpenAI (2022) OpenAI. 2022. New and improved embedding model. https://openai.com/blog/new-and-improved-embedding-model.

- OpenAI (2024) OpenAI. 2024. ChatGPT (January 25 version) [Large language model]. https://openai.com/index/new-embedding-models-and-api-updates/.

- Papineni et al. (2002) Kishore Papineni, Salim Roukos, Todd Ward, and Wei-Jing Zhu. 2002. BLEU: A method for automatic evaluation of machine translation. In Proceedings of the 40th annual meeting of the Association for Computational Linguistics, pages 311–318.

- Park et al. (2023) Joon Sung Park, Joseph C. O’Brien, Carrie Jun Cai, Meredith Ringel Morris, Percy Liang, and Michael S. Bernstein. 2023. Generative agents: Interactive simulacra of human behavior. In Proceedings of the Annual Symposium on User Interface Software and Technology, pages 2:1–2:22. ACM.

- Peng et al. (2022) Wei Peng, Yue Hu, Luxi Xing, Yuqiang Xie, Yajing Sun, and Yunpeng Li. 2022. Control globally, understand locally: A global-to-local hierarchical graph network for emotional support conversation. In Proceedings of the 30th International Joint Conference on Artificial Intelligence.

- Pu et al. (2016) Yunchen Pu, Zhe Gan, Ricardo Henao, Xin Yuan, Chunyuan Li, Andrew Stevens, and Lawrence Carin. 2016. Variational autoencoder for deep learning of images, labels and captions. Advances in neural information processing systems, 29.

- Qian et al. (2018) Qiao Qian, Minlie Huang, Haizhou Zhao, Jingfang Xu, and Xiaoyan Zhu. 2018. Assigning personality/profile to a chatting machine for coherent conversation generation. In Proceedings of the Twenty-Seventh International Joint Conference on Artificial Intelligence, pages 4279–4285. International Joint Conferences on Artificial Intelligence Organization.

- Rafailov et al. (2024) Rafael Rafailov, Archit Sharma, Eric Mitchell, Christopher D Manning, Stefano Ermon, and Chelsea Finn. 2024. Direct preference optimization: Your language model is secretly a reward model. Advances in Neural Information Processing Systems, 36.

- Roller et al. (2021) Stephen Roller, Emily Dinan, Naman Goyal, Da Ju, Mary Williamson, Yinhan Liu, Jing Xu, Myle Ott, Eric Michael Smith, Y-Lan Boureau, and Jason Weston. 2021. Recipes for building an open-domain chatbot. In Proceedings of the 16th Conference of the European Chapter of the Association for Computational Linguistics: Main Volume, pages 300–325. Association for Computational Linguistics.

- Salvini et al. (2010) Pericle Salvini, Cecilia Laschi, and Paolo Dario. 2010. Design for acceptability: Improving robots’ coexistence in human society. International journal of social robotics, 2:451–460.

- Shao et al. (2023) Yunfan Shao, Linyang Li, Junqi Dai, and Xipeng Qiu. 2023. Character-LLM: A trainable agent for role-playing. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, pages 13153–13187. Association for Computational Linguistics.

- Shuster et al. (2022) Kurt Shuster, Jing Xu, Mojtaba Komeili, Da Ju, Eric Michael Smith, Stephen Roller, Megan Ung, Moya Chen, Kushal Arora, Joshua Lane, et al. 2022. Blenderbot 3: A deployed conversational agent that continually learns to responsibly engage. arXiv preprint arXiv:2208.03188.

- Touvron et al. (2023) Hugo Touvron, Louis Martin, Kevin Stone, Peter Albert, Amjad Almahairi, Yasmine Babaei, Nikolay Bashlykov, Soumya Batra, Prajjwal Bhargava, Shruti Bhosale, et al. 2023. Llama 2: Open foundation and fine-tuned chat models. arXiv preprint arXiv:2307.09288.

- Tu et al. (2023) Quan Tu, Chuanqi Chen, Jinpeng Li, Yanran Li, Shuo Shang, Dongyan Zhao, Ran Wang, and Rui Yan. 2023. CharacterChat: Learning towards conversational ai with personalized social support. arXiv preprint arXiv:2308.10278.

- Tu et al. (2022) Quan Tu, Yanran Li, Jianwei Cui, Bin Wang, Ji-Rong Wen, and Rui Yan. 2022. MISC: A mixed strategy-aware model integrating COMET for emotional support conversation. In Proceedings of the Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 308–319. Association for Computational Linguistics.

- Wang et al. (2023a) Guanzhi Wang, Yuqi Xie, Yunfan Jiang, Ajay Mandlekar, Chaowei Xiao, Yuke Zhu, Linxi Fan, and Anima Anandkumar. 2023a. Voyager: An open-ended embodied agent with large language models. arXiv preprint arXiv:2305.16291.

- Wang et al. (2023b) Jian Wang, Yi Cheng, Dongding Lin, Chak Leong, and Wenjie Li. 2023b. Target-oriented proactive dialogue systems with personalization: Problem formulation and dataset curation. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, pages 1132–1143. Association for Computational Linguistics.

- Wang et al. (2019) Xuewei Wang, Weiyan Shi, Richard Kim, Yoojung Oh, Sijia Yang, Jingwen Zhang, and Zhou Yu. 2019. Persuasion for good: Towards a personalized persuasive dialogue system for social good. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, pages 5635–5649.

- Wang et al. (2023c) Zekun Moore Wang, Zhongyuan Peng, Haoran Que, Jiaheng Liu, Wangchunshu Zhou, Yuhan Wu, Hongcheng Guo, Ruitong Gan, Zehao Ni, Man Zhang, et al. 2023c. RoleLLM: Benchmarking, eliciting, and enhancing role-playing abilities of large language models. arXiv preprint arXiv:2310.00746.

- Wu et al. (2021) Yuwei Wu, Xuezhe Ma, and Diyi Yang. 2021. Personalized response generation via generative split memory network. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pages 1956–1970.

- Xiao et al. (2023) Yang Xiao, Yi Cheng, Jinlan Fu, Jiashuo Wang, Wenjie Li, and Pengfei Liu. 2023. How far are we from believable AI agents? A framework for evaluating the believability of human behavior simulation. arXiv preprint arXiv:2312.17115.

- Xu et al. (2022a) Xiaohan Xu, Xuying Meng, and Yequan Wang. 2022a. Poke: Prior knowledge enhanced emotional support conversation with latent variable. arXiv preprint arXiv:2210.12640.

- Xu et al. (2022b) Xinchao Xu, Zhibin Gou, Wenquan Wu, Zheng-Yu Niu, Hua Wu, Haifeng Wang, and Shihang Wang. 2022b. Long time no see! Open-domain conversation with long-term persona memory. In Findings of the Association for Computational Linguistics: ACL 2022, pages 2639–2650. Association for Computational Linguistics.

- Zhao et al. (2017) Tiancheng Zhao, Ran Zhao, and Maxine Eskenazi. 2017. Learning discourse-level diversity for neural dialog models using conditional variational autoencoders. In Proceedings of the Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 654–664.

- Zhao et al. (2023) Weixiang Zhao, Yanyan Zhao, Shilong Wang, and Bing Qin. 2023. TransESC: Smoothing emotional support conversation via turn-level state transition. In Findings of the Association for Computational Linguistics: ACL 2023, pages 6725–6739. Association for Computational Linguistics.

- Zhong et al. (2022) Hanxun Zhong, Zhicheng Dou, Yutao Zhu, Hongjin Qian, and Ji-Rong Wen. 2022. Less is more: Learning to refine dialogue history for personalized dialogue generation. In Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pages 5808–5820. Association for Computational Linguistics.

- Zhong et al. (2024) Wanjun Zhong, Lianghong Guo, Qiqi Gao, He Ye, and Yanlin Wang. 2024. Memorybank: Enhancing large language models with long-term memory. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 38, pages 19724–19731.

- Zhou et al. (2023) Jinfeng Zhou, Zhuang Chen, Bo Wang, and Minlie Huang. 2023. Facilitating multi-turn emotional support conversation with positive emotion elicitation: A reinforcement learning approach. In Proceedings of the Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 1714–1729. Association for Computational Linguistics.

- Zhou et al. (2019) Yiheng Zhou, He He, Alan W Black, and Yulia Tsvetkov. 2019. A dynamic strategy coach for effective negotiation. In Proceedings of the Annual SIGdial Meeting on Discourse and Dialogue, pages 367–378. Association for Computational Linguistics.

| Category | Seeker’s Persona Attributes | Agent’s Persona Attributes |

|---|---|---|

| Gender | male | / |

| \cdashline 1-3[0.8pt/2pt] Age | possibly around 30 years old | possibly around 40 50 years old |

| \cdashline 1-3[0.8pt/2pt] Location | USA | / |

| \cdashline 1-3[0.8pt/2pt] Occupation | works in IT; | previously owned a small housecleaning business; |

| financial instability due to COVID, facing debts | experienced in business management; | |

| has gone through the process of establishing and running a small business | ||

| \cdashline 1-3[0.8pt/2pt] Education | major in computer science | might have an educational background in business administration |

| \cdashline 1-3[0.8pt/2pt] Family Relationships | / | / |

| \cdashline 1-3[0.8pt/2pt] Routines or Habits | allocates weekends for freelance projects | engages in conversations offering advice and support, suggesting a habit of being helpful to others |

| \cdashline 1-3[0.8pt/2pt] Goals or Plans | start their own business; | has experience with business planning and operations |

| focus on small scale projects from outsourcing in Information Technology | ||

| \cdashline 1-3[0.8pt/2pt] Social Relationships | active in local tech meetups and online forums | likely has a network of people through past business experiences; |

| comfortable in social interactions, particularly in offering support | ||

| \cdashline 1-3[0.8pt/2pt] Personality Traits | self-motivated; | problem-solver; |

| approachable | understanding; | |

| supportive | ||

| \cdashline 1-3[0.8pt/2pt] Other Experiences | / | has experienced financial challenges like debt |

Appendix A Persona Structure Details

We define a persona as a structured profile that encompass a set of persona attributes, which belong to multiple predefined persona categories. A persona attribute is a short text that describe the individual (e.g., “software engineer, specializing in developing innovative applications”). A collection of persona attributes that relate to the same aspect of an individual form one persona category. Table 4 present two persona examples.

Our adopted taxonomy of persona categories refer to Dunbar et al. (1997); Xiao et al. (2023). These categories distill from the common topics of human conversations categorized by Dunbar et al. (1997) based on extensive observational studies. Specifically, we consider seven categories as follows:

-

•

Gender: This category defines the gender identity of the persona. It can include male, female, non-binary, or any other gender identity.

-

•

Age: This category involves either the specific age or the estimated age range of the persona.

-

•

Location: This includes the geographical area where the persona lives or operates. It could be as broad as a country or continent, or as specific as a city or neighborhood.

-

•

Occupation: This details the persona’s current job and work experience. It includes the industry, role, and years of experience, providing insights into the persona’s skills, daily activities, and professional challenges.

-

•

Education: This encompasses the educational background of the persona, including highest level of formal education achieved, fields of study, and significant school experiences.

-

•

Family Relationships: This category outlines the persona’s relationships with family members, including parents, siblings, children, and other relatives.

-

•

Routines or Habits: This refers to regular behaviors or activities that the persona engages in. These can include morning routines, workout schedules, habitual meals, or recurring social activities.

-

•

Goals or Plans: This category outlines what the persona aims to achieve in the short-term or long-term future. Goals might be personal, such as achieving a fitness milestone, or professional, like aiming for a promotion or starting a business, reflecting the persona’s aspirations and motivations.

-

•

Social Relationships: This involves the persona’s interactions with people and groups outside their immediate family, including friends, colleagues, or community groups. This category gives insight into the persona’s social network, support system, and conflict-handling strategies.

-

•

Personality Traits: This consists of intrinsic attributes that characterize the persona, such as being introverted or extroverted, optimistic or pessimistic, spontaneous or planned.

-

•

Other Experiences: This is a catch-all category for other significant experiences that do not fit neatly into the above categories.

Appendix B Implementation Details

In our framework, all prompt-based functions are implemented with GPT-3.5-turbo-0105. All prompt templates are provided in appendix E. The implementation of the attribute-level matching model follows Fang et al. (2021). This model is a transformer-based CVAE Pu et al. (2016); Zhao et al. (2017), which uses two GPT-2 as its encoder and decoder, respectively. We finetune it on our attribute-level matching data for 10 epochs and select the checkpoint that achieves the lowest perplexity on the validation set for evaluation. The profile-level adaptation module is implemented with Llama-3-8B. It is finetuned through LoRA Hu et al. (2022), with the dropout probability in the LoRA layers as 0.05. We train it for 2 epochs on our profile-level adaptation dataset. To construct the DPO data for profile-level adaptation, we sample 4 candidate responses from the finetuned model with the temperature set to be 0.8. The profile-level adaptation is conducted periodically every turns (i.e., =4). The DPO process goes through 4 epochs.

The two finetuned base models (i.e., BlenderBot and LlaMA3-SFT) are trained on the ESConv dataset for utterance generation. BlenderBot is trained for 15 epochs under different persona setting, respectively, and the checkpoint that achieves the best BLEU-2 on the validation set is used for evaluation. LLaMA3-SFT is trained only for 1 epoch, as we find that it can easily overfit on the dataset. For all the base models, we set their temperature as 0.8 and top as 0.9 during inference.

For the Supporter persona setting, we meticulously compose 8 versions of personas with many caring personalities and related experiences that make them skilled at emotional support. We present one of the examples in Listing 9. The optimal one on the validation set is used for evaluation. For the Pre-Match setting, we use GPT-3.5 to generate the supporter’s persona that matches the user in a few-shot way, based on the pre-chat survey of the user information included in the original ESConv dataset. The few-shot examples are selected from the matching instances provided in Tu et al. (2023).

The hardware we employ is two NVIDIA RTX A6000. The training of the attribute-level module requires around 1 hours. For the profile-level module, SFT takes around 2 hours and the DPO stage takes around 4 hours. Finetuning BlenderBot and LlaMA3-SFT takes about 3 hours and 1 hour, respectively.

Appendix C Evaluation Details

P/A-Cover Metrics

In §6.2, we use the metrics of profile-level and attribute-level persona coverage (P/A-Cover), to examine whether the utterances exhibit similar persona as the supporter in the reference dialogues. Formally, suppose the support’s persona in the reference dialogue is , which includes the attributes . Given a generated response , A-Cover is defined as:

| (1) |

where IDF-O refers to the IDF-weighted word overlap between the attributes and . To calculate P-Cover, we collect all the responses generated in this dialogue sample, which are denoted as the set of . P-Cover is defined as:

| (2) |

where IDF-O refers to the IDF-weighted word overlap between the concatenation of all responses in and the concatenation of all attributes in .

Appendix D Interaction Evaluation

We construct a seeker agent to play the role of an emotional support seeker by prompting GPT-3.5-turbo-0106, and use it to simulate conversations with the assessed model for interactive evaluation. As illustrated in §5, we annotated the seekers’ personas in the ESConv dataset. The seeker agent is grounded on these personas from the test set for interactions with the evaluated systems. The persona information is included in their system instruction, using the template shown in Listing 1. Their prompt template is provided in Listing 3. We set the maximum dialogue length for the simulated conversation as eight rounds of interactions.

We manually assess the simulated dialogue in three dimensions. We illustrate these dimensions in more detail here:

-

•

Naturalness: It assesses whether the agent’s responses seem natural and human-like and whether its behavior can be distinguished from the human’s. The robotic or overly formal language use usually indicates weak naturalness.

-

•

Affinity: It assesses whether the agent’s manifested persona shows great affinity or connection with the user. It is suggested to examine whether the agent embodies a particular personality or character that aligns with the user’s own. The agent’s willingness to share their feelings and experiences can foster a greater sense of connection, making the user feel more understood and at ease. An agent who refrains from sharing personal feelings and experiences may hinder the user’s willingness to open up.

-

•

Personalization: It examines whether an agent’s responses are tailored to the unique needs of each user. If the agent generates responses that are broad-based or universally applicable to a wide variety of users, it implies a lack of personalization. True personalization occurs when an agent crafts responses based on individual user profiles, behaviors, preferences, and input. Such responses are not interchangeable or suitable for all users, but instead targeted to each specific individual’s case.

Persona Alignment Score

In §6.5, we introduce the persona alignment score as a measure of their similarity. Given the evaluated persona that includes the attributes and the ground-truth persona composed of the attributes , the persona alignment score of compared with is formally defined as:

where IDF-O refers to the IDF-weighted word overlap between the attributes and . This metric provides a measure of how closely aligns with , with higher values indicating greater similarity.

Appendix E Prompt Templates

This section presents all prompt templates used in our work. The prompt template and the system instruction template for implementing the zero-shot base model for dialogue generation are presented in Listings 2 and 1. The prompt templates used to annotate the personas with GPT-4 (§5) are the same as those used for detecting user information and the agent’s manifested persona, as shown in Listings 8 and 4.