Automatic Image Labelling at Pixel Level

Abstract

The performance of deep networks for semantic image segmentation largely depends on the availability of large-scale training images which are labelled at the pixel level. Typically, such pixel-level image labellings are obtained manually by a labour-intensive process. To alleviate the burden of manual image labelling, we propose an interesting learning approach to generate pixel-level image labellings automatically. A Guided Filter Network (GFN) is first developed to learn the segmentation knowledge from a source domain, and such GFN then transfers such segmentation knowledge to generate coarse object masks in the target domain. Such coarse object masks are treated as pseudo labels and they are further integrated to optimize/refine the GFN iteratively in the target domain. Our experiments on six image sets have demonstrated that our proposed approach can generate fine-grained object masks (i.e., pixel-level object labellings), whose quality is very comparable to the manually-labelled ones. Our proposed approach can also achieve better performance on semantic image segmentation than most existing weakly-supervised approaches.

Index Terms:

Semantic Image Segmentation, Pixel-level Semantic Labellings, Guided Filter, Deep Networks.

I Introduction

Semantic image segmentation, which assigns one particular semantic label to each pixel in an image, is a critical task in computer vision. Recently, Deep Convolutional Neural Networks (DCNNs) have demonstrated their outstanding performance on the task of semantic image segmentation [1, 2, 3]. Obviously, their success is largely owed to the availability of large-scale training images whose semantic labels are given precisely at the pixel level. However, manually labelling large-scale training images at the pixel level is dreadfully labour-intensive and it could also be very difficult for humans to provide consistent labelling quality.

To alleviate the burden of manual image labelling, some weakly-supervised approaches have been developed to support deep semantic image segmentation. Instead of requiring precise pixel-level image labellings, such weakly-supervised methods employ the semantic labels that are coarsely given at the level of image bounding boxes [4] or image scribbles [5]. To further reduce human involvements in image labelling, numerous methods [6, 7, 8] train the deep networks from large-scale training images with coarse image-level labellings. Unfortunately, the performance of all these weakly-supervised methods is far from satisfactory because pixel-level image labellings play a critical role on learning discriminative networks for semantic segmentation. Thus it is very attractive to develop new algorithms that are able to automatically generate fine-grained object masks with detailed pixel-level structures/boundaries (i.e., pixel-level object labellings), so that we can learn more discriminative networks for deep semantic image segmentation.

Recently, many self-supervised learning methods [9, 10, 11] were proposed to learn visual features from large-scale unlabelled images by using their pseudo labels rather than using human annotations. This new technique has been widely applied to the task of object recognition [12, 13, 14], object detection [15] and image segmentation [16, 17]. The main idea is to design a pre-defined pretext task for DCNNs to solve, and the pseudo labels for the pretext task can be automatically generated by using attributes of data. Then the DCNNs is trained to learn object functions of the pretext task. After the self-supervised training finished, the learned visual features can be further transferred to downstream tasks as initialization parameters to improve performance [18].

Based on these observations, an interesting approach, called Guided Filter Network (GFN), is developed in this paper to automatically generate pixel-level image labellings. The proposed approach can be divided into two stages: the proxy stage, and the iterative learning stage. The proxy stage does not need any pixel-level image labellings in the target domain but requires one to design a pretext task to learn a segmentation knowledge in the source domain. In the iterative learning stage, the learned parameters are transferred to GFN for generating initial object masks for the images in the target domain. Such coarse object masks are further leveraged to optimiza/refine the GFN iteratively in the target domain. For a given object class of interest, we designed two strategies to obtain its source domain: (a) if itself or its semantically-similar category (i.e., its sibling classes under the same parent node, or its parents or its ancestors on a concept ontology) can be identified from public datasets (which provide pixel-level object labellings), e.g. PASCAL VOC [19], Microsoft COCO [20] and BSD [21], we can treat such semantically-similar category and its pixel-level labelled images in public datasets as its source domain; (b) if itself or its semantically-similar category cannot be identified from public datasets, we can first search some relevant images with simple backgrounds from the Internet and an Otsu detector [22] is utilized to extract the given object class of interest and generate pixel-level object labellings automatically.

Our proposed GFN contains three modules: Boundary Guided (BG) module, Guided Filter (GF) module and High-level Guided Low-level (HGL) module. GFN can recover the structures and the localization details of objects effectively. For a given object class of interest, GFN first learns a segmentation knowledge from the source domain. GFN then transfers such segmentation knowledge to generate coarse object masks from the images in the target domain. Those coarse object masks are treated as pseudo labels and they are further leveraged to optimize/refine the GFN iteratively, so that it is able to generate the fine-grained object masks with detailed pixel-level structures/boundaries (i.e., pixel-level object labellings) gradually.

II Related Work

Deep Convolutional Neural Networks (DCNNs) have achieved a great success on semantic image segmentation. One of the most popular CNN-based approaches is the Fully Convolution Network (FCN) [1]. Chen et al. [23] improves the FCN-style architecture by applying fully connected CRF as a post-processing step. Several deep models are proposed to exploit the contextual information for semantic image segmentation [2, 24]. Other methods [3, 25] have built a deep encoder-decoder structure to preserve object boundaries precisely. Although these existing approaches have achieved a substantial improvement on deep semantic image segmentation, a critical bottleneck is to collect large amounts of training images with pixel-level labellings and this bottleneck may seriously limit their practicality [26].

To tackle the deficiency of training images with pixel-level labellings, some weakly-supervised approaches are proposed, where deep models are learned from large-scale training images whose semantic labels are given coarsely at the level of bounding boxes or scribbles rather than at the pixel level. For example, Dai et al. [4] proposed to learn from the training images with coarse object masks at the level of bounding boxes. The basic idea is to perform an iteration process between automatically generating region proposals and training convolutional networks. Lin et al. [5] used the scribbles to label the training images for network training.

To further reduce human involvements in manual image labelling, some deep models directly learn from large-scale training images whose semantic labels are coarsely given at the image level. By adding an extra layer for multiple-instance learning (MIL), Pinheiro et al. [27] learned deep models which are able to assign more weights to the important pixels. Kolesnikov et al. [6] proposed to learn the segmentation network from the classification networks. Some researches [7, 8] used the training images with image-level labellings to learn the semantic segmentation networks. Unfortunately, the performance of all these weakly-supervised methods is far from satisfactory. One possible reason is that the learned segmentation networks may not be able to recover the original spatial structures of objects precisely because the intrinsic properties of pixel-level object masks are completely ignored.

Different from these methods, for a given object class of interest, our proposed approach takes the following steps to achieve deep semantic image segmentation: (a) the GFN first generates coarse object masks automatically by transferring the segmentation knowledge learned from the source domain to the target domain; (b) such coarse object masks are further leveraged to optimize/refine the GFN iteratively in the target domain, so that we can obtain fine-grained object masks with detailed pixel-level structures/boundaries gradually.

III Architecture of our Proposed GFN

In this section, we first introduce three components of our proposed Guided Filter Network (GFN): (a) Boundary Guided (BG) module; (b) Guided Filter (GF) module; and (c) High-level Guided Low-level (HGL) module. Then we elaborate our complete GFN architecture.

III-A Boundary Guided Module

In the task of semantic segmentation, numerous method [3, 25] usually combine low-level and high-level features to boost performance. Compared to simply fusing low-level features with high-level ones, Zhang et al. [28] pointed out that later fusion is more effective by introducing high-resolution details into high-level features and embedding semantic information into low-level features.

Inspired by the works in [28, 29], we establish a Boundary Guided (BG) module that can recover the localization details of objects by introducing low-level features into high-level ones and embedding semantic information into low-level features. The structure of BG module is shown in Figure 1, where a boundary extractor is first applied to obtain the semantic features of object boundaries. Based on the observation that the pixel values in the non-boundary areas change slowly while the pixel values around the boundary change significantly, the boundary extractor can be designed as:

| (1) |

where represents the feature maps, and are max-pooling and min-pooling operations respectively. In practice Eq. (1) can be implemented by . Thus, the boundary extractor makes that the values of pixels not around the non-boundary are close to zero while others are not (see Figure 2). After that, an element-wise multiplication is performed between the low-level features and the semantic features of object boundaries.

III-B Guided Filter Module

DCNNs have demonstrated their outstanding capability on the task of semantic image segmentation, but they have difficulty in obtaining good results with detailed pixel-level structures/boundaries. One core issue is that obtaining object-centric decisions from a classifier requires the invariance to spatial transformations, which inherently limits the spatial accuracy of the DCNNs [2]. Several methods have recently been proposed to alleviate this issue and they can be grouped into two main strategies. The first strategy is to build deep encoder-decoder networks which can preserve accurate object boundaries [3, 25]. The second strategy is to use CRF to refine the segmentation results iteratively [2, 30]. Although these approaches have achieved substantial improvements on semantic image segmentation, using multiple down-sampling layers may lead to more information loss.

The guided filter [31] is an edge-preserving operator and can transfer the structure of the guidance image (e.g., original RGB image or low-level features) to the filtering output. In this paper, we use the guided filter to extract the edge contour coefficient for each pixel from the guidance image, then such coefficients are used to weight the coarse object masks for generating the refined ones. More specifically, suppose that two inputs, the coarse object mask (e.g., the output of BG module) and the guidance image , are fed to the guided filtering. The outputs of the guided filter (i.e., the refined masks) are obtained as follows:

| (2) |

where is the index of a pixel in , is the index of a point in , and is the index of a local square window with radius . is the edge contour coefficient of the pixel in the image and it is calculated as:

| (3) |

where is the number of pixels in the local square window , and are the mean and variance of the image patch in , and is a regularization parameter. In this way, we seamlessly integrate the recognition capacity of DCNNs and the fine-grained localization ability of guided filter. The object masks can further be refined by using the guidance image to fine-tune the output of DCNNs.

III-C High-Level Guided Low-Level (HGL) Module

In the GF module, the low-level features are often used as the guidance image because they contain more object structures. In fact, the low-level features are too noisy to provide a high-quality guidance. Using the low-level features directly as the guidance image could be less effective for semantic image segmentation. Actually, the high-level features can guide the low-level features on recovering the localization details of objects. To this end, we propose High-level Guided Low-level (HGL) module, which integrates the high-level features to extract more discriminative low-level features:

| (4) |

where and are high-level features and low-level features, respectively. represents the element-wise multiplication. The detailed structure of HGL module is shown in Figure 1. By using the HGL module, the quality of the guidance image can be improved, which can further boost the performance of semantic image segmentation.

III-D Complete Network Architecture

The ResNet-101 model [32] has achieved promising performance on image classification and the Modified Aligned ResNet-101 used in DeepLabv3+ [3] has also demonstrated its strong potential for the task of semantic image segmentation. On top of that, we use Atrous Spatial Pyramid Pooling module (ASPP) proposed in the DeepLabv2 [2] to capture multi-scale information. With our designed BG, GF and HGL, we propose a Guided Filter Network (GFN) to achieve semantic image segmentation, as illustrated in Figure 1.

In this paper, we use the cross-entropy loss function for semantic image segmentation, which is formulated as follows:

| (5) |

where is the number of classes and is the ground truth probability of the class at the pixel . represents the posterior probability of pixel belonging to the class. and are the height and the width of the image, respectively.

IV Generation of Pixel-Level Semantic Labellings

The overall architecture of our proposed method is illustrated in Figure 3. To generate fine-grained object masks automatically, our proposed approach takes three key steps: (a) the GFN is first trained in the source domain, where the pixel-level labellings are available for the training images; (b) the GFN is then used to produce initial coarse object masks with object boundaries for the images in the target domain; (c) such coarse object masks are treated as pseudo labels and they are further leveraged to optimize/refine the GFN iteratively.

IV-A Pretext Tasks design for Generating Initial Object Masks

Compared with supervised learning methods that require manual labelling, the proposed approach requires initial object masks which can be automatically generated by transferring the segmentation knowledge learned from the source domain to the target domain. The learning of segmentation knowledge depends on the design of the pretext task, and a good pretext task can effectively improve the quality of the initial object masks. Considering that the goal of self-supervised learning is to generate initialization parameters for downstream tasks by proxy task that do not require human annotations, we design three pretext tasks to learn segmentation knowledge. After the pretext task training finished, the learned parameters are transferred to GFN to generate initial object masks in the target domain.

IV-A1 A transfer learning approach

For a given object class of interest, its semantically-similar category is first identified from public datasets, and the training images with pixel-level labellings for its semantically-similar category are used to train its GFN. Such learned GFN is further used to obtain the initial object masks for the images in the target domain. For example, for a given object class of interest “angraecum-mauritianum” (fine-grained plant species) in the “Orchid” family, we do not have its training images with pixel-level labellings, but we may have sufficient training images that are labelled at the pixel level for its semantically-similar category “plant” in public datasets such as PASCAL VOC, Microsoft COCO and BSD. Thus we can leverage the training images for the semantically-similar category “plant” (in the source domain) to train the GFN for the given object class “angraecum-mauritianum” (in the target domain) as illustrated in the left of Figure 3 (a), and the GFN can further be used to obtain the initial masks for the object class “angraecum-mauritianum”.

IV-A2 A simple-to-complex approach

For some object classes of interest, their semantically-similar categories cannot be identified from public datasets, thus our transfer learning approach cannot be used and a simple-to-complex approach is developed to handle this situation. To generate the initial object masks for a given object class of interest, our simple-to-complex approach consists of the following components: (a) some images with simple background are searched from the Internet; (b) Otsu detector [22], as illustrated in Figure 4, is performed on such simple images with homogeneous backgrounds to generate the masks for the given object class of interest; (c) such object masks are treated as the training images to train GFN to extract initial object masks from the images with more complex backgrounds.

IV-A3 A Class Activation Maps (CAMs) approach

One drawback of the simple-to-complex approach is the requirement of manual selection of the images with simple backgrounds. To solve this problem, we use CAMs [33] to generate the initial object masks. We follow the approach in [33] to learn CAMs whose architecture is typically a classification network with global average pooling (GAP) followed by a fully-connected layer. We train the CAMs from the images with image-level labellings by using a classification criteria. The learned CAMs is then used to generate the object masks from the images in the target domain, and such object masks are further refined by using dCRF [34]. The segmentation label for a training image can be obtained by selecting the class label associated with the maximum activation score at every pixel in the up-sampled CAMs [35].

IV-B Iterative Learning of GFN for labellings generation

For a given set of object classes of interest, after the GFN is learned from the training images in the source domain (pretext task), it is then used to obtain the initial masks for the object classes of interest in the target domain. Because of the significant difference between the source domain and the target domain, the GFN may not be able to obtain the initial object masks accurately in the target domain. One reasonable solution is to fine-tune the GFN iteratively in the target domain. For this purpose, we treat the refined object masks as a pseudo label to optimize/refine GFN iteratively. Note that noisy labellings may lead to a drift in semantic image segmentation when they are used to supervise the training of GFN directly. Thus we define the reliability of a labelling (generated by our GFN) based on the area ratio, e.g., the proportion of the area between the automatically-labelled region and the whole image. The area ratio can be formulated as:

| (6) |

where represents the sum of pixels of the automatically-labelled region, and is the sum of pixels of the whole image. Only a subset of the pixels in the automatically-labelled region within a ratio between 0.1 and 0.9 are selected for network training, and this parameter can appropriately be adjusted according to the ratio of the object’s area in the image. We choose such parameters because the objects in our image set are neither too big nor too small. By continuously leveraging such training images with automatically-generated pixel-level labellings to optimize/refine our GFN in the target domain, the generated object masks may become more accurate gradually. After several iterations, we can finally obtain the fine-grained object masks with detailed pixel-level structures/boundaries in the target domain.

V Experimental Results and Analysis

This section describes our experimental results and our analysis for algorithm evaluation over multiple image datasets.

V-A Training details

To train the GFN, a mini-batch size of 8 images is used. The network parameters are initialized by the ResNet-101 model [32] which is pre-trained on ImageNet [36]. The initial learning rate is set as 0.007 and divided by 10 in every 5 epochs. The weight decay and the momentum are set to 0.0002 and 0.9, respectively. In addition, in the Guided Filter (GF) Module, the values of and are first determined by grid search on the validation set. We then use the same parameters to train GFN.

V-B Generating pixel-level labellings over “Orchid”, FGVC Aircraft, CUB-200-2011 and Stanford Cars Image Sets

-

•

PASCAL VOC 2012: The PASCAL VOC 2012 segmentation benchmark [19] contains 20 foreground object classes and one background class. It has 1,464, 1,449, and 1,456 images labelled at the pixel level for network training, validation, and testing, respectively. The dataset is augmented by the extra labellings provided by [37], resulting in 10,582 training images.

-

•

“Orchid” Plant Image Set: We have crawled large-scale plant images which include 252 plant species (object classes) in the “Orchid” family, where 149,500 plant images are used to train the base network and other 500 images for testing.

-

•

FGVC Aircraft: FGVC Aircraft dataset [38] contains 10,000 images for 100 fine-grained aircraft classes. We divide 10,000 images into 9,000 training images and 1,000 test images.

-

•

CUB-200-2011: The CUB-200-2011 dataset [39] contains 200 fine-grained bird species. It has 11,788 images, of which 11,288 images for training, 500 images for testing.

-

•

Stanford Cars: The Stanford Cars dataset [40] contains 16,185 images for 196 fine-grained classes. We divide 16,185 images into 15,685 training images and 500 test images.

Note that the test images of the last four datasets are manually labelled at the pixel level. We use our proposed approach to generate pixel-level labellings, where the initial object masks are generated by our transfer learning approach.

It is worth noting that our transfer learning approach can generate initial object masks directly, where PASCAL VOC 2012 is treated as the source domain and our “Orchid” plant, FGVC Aircraft, CUB-200-2011, and Stanford Cars image sets are treated as the target domain. The reason is that the categories of “potted plant”, “aeroplane”, “bird”, and “car” in the PASCAL VOC 2012 is semantically-similar with all 252 plant species in our “Orchid” plant image set, 100 fine-grained “aircraft” classes in the FGVC Aircraft image set, 200 fine-grained “bird” classes in the CUB-200-2011 image set, and 196 fine-grained “car” classes in the Stanford Cars image set, respectively. Thus the knowledge learned from the PASCAL VOC 2012 dataset is transferable to our target datasets.

V-C Generating pixel-level labellings over Branch Image Set

Our Branch image set contains 2,134 images, including 500 complex images and 1,634 simple images. Images are further divided into the training set and the test set, which have 2,034 and 100 images respectively. The 100 test images are manually labelled at the pixel level as the ground truth.

For the “branch” class, its semantically-similar category cannot be identified from public datasets, we use our proposed approach to generate pixel-level labellings, where the initial object masks are generated by our simple-to-complex approach.

We have also evaluated the impacts of the number of simple images with pixel-level labellings on the performance of our proposed method. As illustrated in Table I, one can easily observe that the performance of our proposed method can be improved when more simple images are incorporated for network training.

| Number of Simple Images | 205 | 409 | 817 | 1634 |

|---|---|---|---|---|

| IoU () | 58.5 | 60.5 | 64.8 | 70.1 |

V-D Generating pixel-level labellings over Pascal Voc 2012

For the PASCAL VOC 2012 dataset, we use our proposed approach to generate pixel-level labellings, where the initial object masks are generated by the CAMs approach [33].

Figure 5 provides some of our segmentation results on the PASCAL VOC 2012 training set. From these experimental results, we observe that the automatically-generated labellings have precise segmentation masks even some labellings are better than ground truth. We attribute this to our proposed Guided Filter Network (GFN) that can transfer the structure of the object class of interest into the segmentation result to reason refined masks. Meanwhile, this indicates that manually labelling large-scale training images at the pixel level is very difficult to provide consistent labelling quality.

V-E Select the number of iterations

We use mIoU to verify the effectiveness of our proposed method and calculate its mIoU after every iteration until the mIoU no longer grows. The model with the best mIoU is then selected to generate the final pixel-level labellings. For the iterative training method, we start from the segmentation results for the first iteration, in later iterations, the segmentation results for the previous iteration are employed to train the segmentation network for the next iteration.

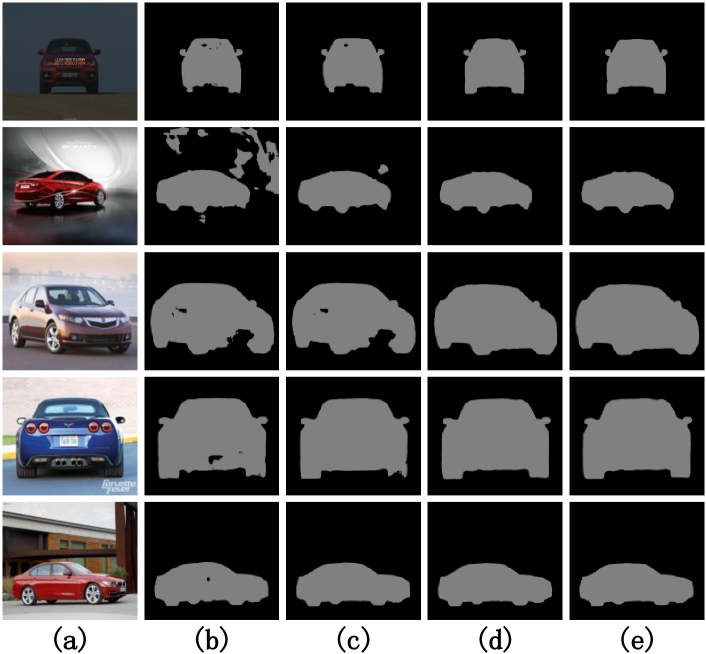

Figure 6 shows the performance of the recursive refinement. The performance of all these networks improves as the training round increases at first, and then saturates after few training rounds. Some examples for each step of our proposed approach are shown in Figure 7, Figure 8, Figure 9, Figure 10 and Figure 11.

V-F Evaluation of automatically-generated labellings

We use mIoU as our main evaluation metric. Table II summarizes the evaluation results on the mIoU over “Orchid” Plant, CUB-200-2011, Stanford Cars, FGVC Aircraft, Branch, and PASCAL VOC 2012 image sets. One can easily observe that our proposed approach can achieve good performance on generating pixel-level labellings (e.g., fine-grained object masks) with acceptable mIoU. Figure 12 and Figure 13 describe several fine-grained object masks with detailed pixel-level structures/boundaries on “Orchid” Plant, FGVC Aircraft, CUB-200-2011, Stanford Cars, Branch, and PASCAL VOC image sets. From these experimental results, one can easily observe that our proposed approach can automatically generate fine-grained object masks, and the annotation quality is very close to that of manually-labelled ones.

| Dataset | mIoU () | Dataset | mIoU () |

|---|---|---|---|

| “Orchid” Plant | 80.3 | CUB-200-2011 | 84.6 |

| Stanford | 87.8 | FGVC Aircraft | 91.5 |

| Branch | 70.1 | PASCAL VOC 2012 | 62.3 |

There is an experiment that could be carried out to shed some light in terms of performance. We provide the comparison results when the manually-labelled images and the automatically-labelled images (whose annotations at the pixel level are automatically generated by our proposed method) are employed for network training. Thus, we have evaluated two approaches on their mIoU when: (a) manually-labelled images are used, where we choose 800 manually-labelled images in the FGVC Aircraft image set as the training images and 200 as the test images; (b) automatically-labelled images are used, where the pixel-level labellings for these 800 training images are automatically generated by our proposed method. We use our GFN as the baseline model, where the parameters are initialized by the ResNet-101 model which is pre-trained on ImageNet. Table III summarizes the evaluation results on mIoU, one can easily observe that our proposed approach (i.e., leveraging automatically-labelled images for network training) can achieve very competitive performance.

| Training Images | mIoU () |

|---|---|

| Manually-Labelled Ones | 92.3 |

| Automatically-Labelled Ones | 88.3 |

V-G Comparison with Weakly-Supervised Methods

V-G1 PASCAL VOC 2012

Our proposed approach share some similar principles with weakly-supervised methods, e.g. fine-tuning image-level coarse labellings into pixel-level ones, and our proposed method is compared against numerous up-to-date weakly supervised approaches. The segmentation results on PASCAL VOC 2012 validation set are quantified and compared as illustrated in Table IV. We achieve the mIoU score of 62.3 on PASCAL VOC 2012 validation set, which outperforms most the previous approaches using the same supervision settings. In order to accomplish a fair comparison, we use VGG16 [41] as an encoder. We achieve the mIoU score of 59.4 on PASCAL VOC 2012 validation set, which outperforms other methods in weakly-supervised semantic image segmentation. Moreover, we want to emphasize that the proposed method only use image-level labelling as supervision without the information of external sources.

From Table IV, we observe that the results of [42] and [43] on the PASCAL VOC 2012 validation set are slightly better than our method. One possible reason is that the PASCAL VOC 2012 containing very few images per class, and our proposed approach can improve the segmentation performance by incorporating more images for network training (see Table I). When large-scale images are used for training, these methods may be far inferior to our method. The specific experimental verification is shown in the Section V-G2 of this paper.

| Methods | mIoU(%) |

|---|---|

| Image-level labels with external source | |

| STC [44] | 49.3 |

| Co-segmentation [45] | 56.4 |

| Webly-supervised [6] | 53.4 |

| Crawled-Video [46] | 58.1 |

| AF-MCG [47] | 54.3 |

| Joon et al. [48] | 55.7 |

| DCSP-VGG16 [49] | 58.6 |

| DCSP-ResNet-101 [49] | 60.8 |

| Mining-pixels [50] | 58.7 |

| Image-level labels w/o external source | |

| MIL-FCN [51] | 25.7 |

| EM-Adapt [52] | 38.2 |

| BFBP [53] | 46.6 |

| DCSM [54] | 44.1 |

| SEC [6] | 50.7 |

| AF-SS [47] | 52.6 |

| SPN [26] | 50.2 |

| Two-phase [55] | 53.1 |

| Anirban et al. [56] | 52.8 |

| AdvErasing [7] | 55.0 |

| DCNA-VGG16 [8] | 55.4 |

| MCOF [57] | 60.3 |

| MDC [58] | 60.4 |

| DSRG [59] | 61.4 |

| AffinityNet [35] | 61.7 |

| Zeng et al. [42] | 64.3 |

| SEAM [43] | 64.5 |

| Ours-VGG16 | 59.4 |

| Ours-ResNet-101 | 62.3 |

V-G2 Branch+Aircraft+Plant

We have compared our proposed approach with some weakly-supervised methods [52, 6, 35, 43] on generating fine-grained object masks on the Branch+Aircraft+Plant image set. As illustrated in Table V, one can easily observe that our proposed approach can achieve better performance on generating fine-grained object masks than the state-of-the-art weakly-supervised methods. Interestingly, one can also find that our proposed approach actually gives slightly better performance on PASCAL VOC 2012 validation set, and there is a significant improvement on Branch+Aircraft+Plant image set. This is due to the PASCAL VOC 2012 containing very few images per class, and our proposed approach can improve the segmentation performance by incorporating more images for network training (see Table I).

Unlike these weakly-supervised methods which directly leverage coarsely-labelled images for network training, our proposed approach first fine-tune image-level coarse labellings into pixel-level fine-grained ones, and then leverage such training images with pixel-level labellings to learn more discriminative networks for semantic image segmentation.

-

•

Branch+Aircraft+Plant: We merged the Branch Image set, FGVC Aircraft dataset and “Orchid” Plant Image set, 25,822 images for training (2,034 images from Branch image set, 9,000 images from FGVC Aircraft dataset, 14,788 images from “Orchid” plant image set) and 1,600 for testing (of which 100 branch images are from Branch image set, 1,000 images from FGVC Aircraft dataset, 500 images from “Orchid” plant image set). To avoid data imbalance, we only select 14,788 “Orchid” plant images for network training.

| Methods | bkg | aircraft | plant | branch | mIoU |

|---|---|---|---|---|---|

| EM-Adapt [52] | 33.6 | 28.4 | 35.8 | 11.2 | 27.3 |

| SEC [6] | 62.0 | 61.0 | 65.7 | 28.3 | 54.3 |

| AffinityNet [35] | 84.3 | 58.0 | 51.4 | 20.9 | 53.6 |

| SEAM [43] | 87.7 | 57.8 | 50.0 | 19.4 | 53.7 |

| Ours-VGG16 | 92.7 | 88.3 | 74.3 | 65.4 | 80.2 |

| Ours-ResNet-101 | 94.8 | 91.1 | 78.5 | 68.5 | 83.2 |

V-H Ablation study

In this subsection, the effectiveness of our proposed GFN is firstly evaluated over the PASCAL VOC 2012 segmentation benchmark [19] against numerous state-of-the-art techniques for semantic image segmentation, and we adopt the mean intersection-over-union (mIoU) as the evaluation metric on segmentation accuracy.

V-H1 Ablation study on GF Module

We use DeepLabv2 as our base network, and the baseline comparison is used to evaluate the effectiveness of our proposed GF. As shown in Table VI, our proposed GF improves the segmentation performance from 71.79 to 73.82, which indicates the effects of our proposed GF module.

V-H2 Ablation study on HGL Module

The low-level features are often used as the guidance image because they contain the object structures. However, the low-level features are too noisy to provide a high-quality guidance. Therefore, we integrate the HGL into the GF. As presented in Table VI, one can easily observe that integrating HGL into GF can boost the segmentation performance by a margin of 0.98 (from 73.82 to 74.80).

V-H3 Ablation study on BG Module

By further integrating the BG module into the HGL module, the segmentation performance is advanced from 74.80 to 75.19 as shown in Table VI. The BG module facilitates the feature fusion by introducing more semantic information into the low-level features and embedding more spatial information into the high-level features. Interestingly, one can easily observe that BG actually only provides a boost of 0.39 on the segmentation performance, which is very limited. One possible reason is that part of the spatial information is already encoded by the combination of GF, HGL and ASPP. To enable better assessment of the contribution of each module, three proposed modules are evaluated separately. As shown in Table VI, three proposed modules can effectively improve the segmentation performance from different aspects. In addition, Wu et al. [60] proposed an end-to-end trainable guided filter module and applied it to semantic image segmentation. Compared with [60], our method achieves better performance on the PASCAL VOC 2012 dataset (75.19% vs. 73.58% [60]). The reason is that directly using the low-level features as the guidance image may introduce more noise information.

| Index | Baseline | GF | HGL | BG | mIoU(%) |

|---|---|---|---|---|---|

| 1 | ✓ | 71.79 | |||

| 2 | ✓ | ✓ | 73.82 | ||

| 3 | ✓ | ✓ | 72.79 | ||

| 4 | ✓ | ✓ | 74.49 | ||

| 5 | ✓ | ✓ | ✓ | 74.80 | |

| 6 | ✓ | ✓ | ✓ | ✓ | 75.19 |

It should be emphasized that the three proposed modules have different contributions for different tasks. Based on the results over PASCAL VOC 2012 in Table VI, the BG module is the major source of improvement for fully-supervised learning. As presented in Table VII, three proposed modules can effectively improve the segmentation performance over “Orchid” Plant image set from different aspects. Interestingly, one can easily observe that the GF module is the major source of improvement for weakly-supervised learning. The reason is that the proposed GF module can transfer the structure of the original image to the filter output, so as to refine the object boundaries and improve the segmentation quality.

| Index | Baseline | GF | HGL | BG | mIoU(%) |

|---|---|---|---|---|---|

| 1 | ✓ | 67.43 | |||

| 2 | ✓ | ✓ | 76.29 | ||

| 3 | ✓ | ✓ | 68.14 | ||

| 4 | ✓ | ✓ | 69.08 | ||

| 5 | ✓ | ✓ | ✓ | ✓ | 80.30 |

V-H4 Replacing GFN for labellings generation

The GFN can be seen as a segmentation network which can be used for both fully- and weakly-supervised semantic segmentation. The GFN plays a bigger role in weakly-supervised semantic segmentation than in fully-supervised semantic segmentation. The main reason is that fully-supervised learning has been able to obtain good object boundaries, so GFN only further optimized the object details. Since weakly-supervised learning has no effective supervised information, it is difficult to recover the structures/boundaries of the object. The proposed GFN can transfer the structure of the original image to the filter output, so as to refine the object boundaries and improve the segmentation quality. It would be useful to use a common network (e.g., DeepLabv3+) instead of GFN to highlight the true contribution of GFN to generate pixel-level labellings. As shown in Table VIII, our approach is superior to DeepLabv3+ for generating pixel-level labellings.

| Methods | “Orchid” Plant | FGVC Aircraft |

|---|---|---|

| DeepLabv3+ | 69.72 | 85.47 |

| GFN | 80.30 | 91.50 |

VI Conclusions

A novel approach is proposed in this paper to automatically generate pixel-level object labellings (e.g., fine-grained object masks). Specifically, we first train the GFN in the source domain. Such GFN is then used to identify coarse object masks from the images in the target domain. Such coarse object masks are treated as pseudo labels and they are further leveraged to optimize/refine the GFN iteratively in the target domain. Our experiments on six image sets have demonstrated that our proposed approach can generate fine-grained object masks with detailed pixel-level structures/boundaries, whose quality is very comparable to the manually-labelled ones. In addition, our proposed method can achieve better performance on semantic image segmentation than most existing weakly-supervised approaches.

References

- [1] J. Long, E. Shelhamer, and T. Darrell, “Fully convolutional networks for semantic segmentation,” in The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2015.

- [2] L.-C. Chen, G. Papandreou, I. Kokkinos, K. Murphy, and A. L. Yuille, “Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs,” IEEE transactions on pattern analysis and machine intelligence, vol. 40, no. 4, pp. 834–848, 2017.

- [3] L.-C. Chen, Y. Zhu, G. Papandreou, F. Schroff, and H. Adam, “Encoder-decoder with atrous separable convolution for semantic image segmentation,” in Proceedings of the European Conference on Computer Vision (ECCV), 2018, pp. 801–818.

- [4] J. Dai, K. He, and J. Sun, “Boxsup: Exploiting bounding boxes to supervise convolutional networks for semantic segmentation,” in The IEEE International Conference on Computer Vision (ICCV), December 2015.

- [5] D. Lin, J. Dai, J. Jia, K. He, and J. Sun, “Scribblesup: Scribble-supervised convolutional networks for semantic segmentation,” in The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2016.

- [6] A. Kolesnikov and C. H. Lampert, “Seed, expand and constrain: Three principles for weakly-supervised image segmentation,” in European Conference on Computer Vision. Springer, 2016, pp. 695–711.

- [7] Y. Wei, J. Feng, X. Liang, M.-M. Cheng, Y. Zhao, and S. Yan, “Object region mining with adversarial erasing: A simple classification to semantic segmentation approach,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2017, pp. 1568–1576.

- [8] T. Zhang, G. Lin, J. Cai, T. Shen, C. Shen, and A. C. Kot, “Decoupled spatial neural attention for weakly supervised semantic segmentation,” IEEE Transactions on Multimedia, 2019.

- [9] M. Noroozi and P. Favaro, “Unsupervised learning of visual representations by solving jigsaw puzzles,” in European Conference on Computer Vision. Springer, 2016, pp. 69–84.

- [10] R. Zhang, P. Isola, and A. A. Efros, “Colorful image colorization,” in European conference on computer vision. Springer, 2016, pp. 649–666.

- [11] D. Pathak, P. Krahenbuhl, J. Donahue, T. Darrell, and A. A. Efros, “Context encoders: Feature learning by inpainting,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 2536–2544.

- [12] X. Zhai, A. Oliver, A. Kolesnikov, and L. Beyer, “S4l: Self-supervised semi-supervised learning,” in Proceedings of the IEEE international conference on computer vision, 2019, pp. 1476–1485.

- [13] A. Kolesnikov, X. Zhai, and L. Beyer, “Revisiting self-supervised visual representation learning,” in Proceedings of the IEEE conference on Computer Vision and Pattern Recognition, 2019, pp. 1920–1929.

- [14] I. Misra and L. v. d. Maaten, “Self-supervised learning of pretext-invariant representations,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 6707–6717.

- [15] K. He, H. Fan, Y. Wu, S. Xie, and R. Girshick, “Momentum contrast for unsupervised visual representation learning,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 9729–9738.

- [16] G. Larsson, M. Maire, and G. Shakhnarovich, “Learning representations for automatic colorization,” in European conference on computer vision. Springer, 2016, pp. 577–593.

- [17] J. Novosel, P. Viswanath, and B. Arsenali, “Boosting semantic segmentation with multi-task self-supervised learning for autonomous driving applications,” in Proc. of NeurIPS-Workshops, pp. 1–11.

- [18] L. Jing and Y. Tian, “Self-supervised visual feature learning with deep neural networks: A survey,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2020.

- [19] M. Everingham, S. A. Eslami, L. Van Gool, C. K. Williams, J. Winn, and A. Zisserman, “The pascal visual object classes challenge: A retrospective,” International Journal of Computer Vision, vol. 111, no. 1, pp. 98–136, 2015.

- [20] T.-Y. Lin, M. Maire, S. Belongie, J. Hays, P. Perona, D. Ramanan, P. Dollár, and C. L. Zitnick, “Microsoft coco: Common objects in context,” in European Conference on Computer Vision. Springer, 2014, pp. 740–755.

- [21] B. C. Russell, A. Torralba, K. P. Murphy, and W. T. Freeman, “Labelme: a database and web-based tool for image annotation,” International Journal of Computer Vision, vol. 77, no. 1-3, pp. 157–173, 2008.

- [22] N. Otsu, “A threshold selection method from gray-level histograms,” IEEE transactions on Systems, Man, and Cybernetics, vol. 9, no. 1, pp. 62–66, 1979.

- [23] L.-C. Chen, G. Papandreou, I. Kokkinos, K. Murphy, and A. L. Yuille, “Semantic image segmentation with deep convolutional nets and fully connected crfs,” arXiv preprint arXiv:1412.7062, 2014.

- [24] F. Yu and V. Koltun, “Multi-scale context aggregation by dilated convolutions,” arXiv preprint arXiv:1511.07122, 2015.

- [25] O. Ronneberger, P. Fischer, and T. Brox, “U-net: Convolutional networks for biomedical image segmentation,” in International Conference on Medical Image Computing and Computer-assisted Intervention. Springer, 2015, pp. 234–241.

- [26] S. Kwak, S. Hong, and B. Han, “Weakly supervised semantic segmentation using superpixel pooling network,” in Thirty-First AAAI Conference on Artificial Intelligence, 2017.

- [27] P. O. Pinheiro and R. Collobert, “From image-level to pixel-level labeling with convolutional networks,” in The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2015.

- [28] Z. Zhang, X. Zhang, C. Peng, X. Xue, and J. Sun, “Exfuse: Enhancing feature fusion for semantic segmentation,” in Proceedings of the European Conference on Computer Vision (ECCV), 2018, pp. 269–284.

- [29] G. Ghiasi and C. C. Fowlkes, “Laplacian pyramid reconstruction and refinement for semantic segmentation,” in European Conference on Computer Vision. Springer, 2016, pp. 519–534.

- [30] G. Papandreou, L.-C. Chen, K. P. Murphy, and A. L. Yuille, “Weakly- and semi-supervised learning of a deep convolutional network for semantic image segmentation,” in The IEEE International Conference on Computer Vision (ICCV), December 2015.

- [31] K. He, J. Sun, and X. Tang, “Guided image filtering,” IEEE transactions on pattern analysis and machine intelligence, vol. 35, no. 6, pp. 1397–1409, 2013.

- [32] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2016, pp. 770–778.

- [33] B. Zhou, A. Khosla, A. Lapedriza, A. Oliva, and A. Torralba, “Learning deep features for discriminative localization,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 2921–2929.

- [34] P. Krähenbühl and V. Koltun, “Efficient inference in fully connected crfs with gaussian edge potentials,” in Advances in neural information processing systems, 2011, pp. 109–117.

- [35] J. Ahn and S. Kwak, “Learning pixel-level semantic affinity with image-level supervision for weakly supervised semantic segmentation,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 4981–4990.

- [36] J. Deng, W. Dong, R. Socher, L.-J. Li, K. Li, and L. Fei-Fei, “Imagenet: A large-scale hierarchical image database,” in Computer Vision and Pattern Recognition (CVPR), 2009 IEEE Conference on. Ieee, 2009, pp. 248–255.

- [37] B. Hariharan, P. Arbeláez, L. Bourdev, S. Maji, and J. Malik, “Semantic contours from inverse detectors,” in 2011 International Conference on Computer Vision. IEEE, 2011, pp. 991–998.

- [38] S. Maji, E. Rahtu, J. Kannala, M. Blaschko, and A. Vedaldi, “Fine-grained visual classification of aircraft,” arXiv preprint arXiv:1306.5151, 2013.

- [39] T. Xiao, Y. Xu, K. Yang, J. Zhang, Y. Peng, and Z. Zhang, “The application of two-level attention models in deep convolutional neural network for fine-grained image classification,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2015, pp. 842–850.

- [40] J. Krause, M. Stark, J. Deng, and L. Fei-Fei, “3d object representations for fine-grained categorization,” in Proceedings of the IEEE International Conference on Computer Vision Workshops, 2013, pp. 554–561.

- [41] K. Simonyan and A. Zisserman, “Very deep convolutional networks for large-scale image recognition,” arXiv preprint arXiv:1409.1556, 2014.

- [42] Y. Zeng, Y. Zhuge, H. Lu, and L. Zhang, “Joint learning of saliency detection and weakly supervised semantic segmentation,” in Proceedings of the IEEE International Conference on Computer Vision, 2019, pp. 7223–7233.

- [43] Y. Wang, J. Zhang, M. Kan, S. Shan, and X. Chen, “Self-supervised equivariant attention mechanism for weakly supervised semantic segmentation,” arXiv preprint arXiv:2004.04581, 2020.

- [44] Y. Wei, X. Liang, Y. Chen, X. Shen, M.-M. Cheng, J. Feng, Y. Zhao, and S. Yan, “Stc: A simple to complex framework for weakly-supervised semantic segmentation,” IEEE transactions on pattern analysis and machine intelligence, vol. 39, no. 11, pp. 2314–2320, 2016.

- [45] T. Shen, G. Lin, L. Liu, C. Shen, and I. D. Reid, “Weakly supervised semantic segmentation based on co-segmentation.” in BMVC, 2017.

- [46] S. Hong, D. Yeo, S. Kwak, H. Lee, and B. Han, “Weakly supervised semantic segmentation using web-crawled videos,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017, pp. 7322–7330.

- [47] X. Qi, Z. Liu, J. Shi, H. Zhao, and J. Jia, “Augmented feedback in semantic segmentation under image level supervision,” in European Conference on Computer Vision. Springer, 2016, pp. 90–105.

- [48] S. J. Oh, R. Benenson, A. Khoreva, Z. Akata, M. Fritz, and B. Schiele, “Exploiting saliency for object segmentation from image level labels,” in Computer Vision and Pattern Recognition (CVPR), 2017 IEEE Conference on. IEEE, 2017, pp. 5038–5047.

- [49] A. Chaudhry, P. K. Dokania, and P. H. Torr, “Discovering class-specific pixels for weakly-supervised semantic segmentation,” arXiv preprint arXiv:1707.05821, 2017.

- [50] Q. Hou, P. K. Dokania, D. Massiceti, Y. Wei, M.-M. Cheng, and P. H. Torr, “Mining pixels: Weakly supervised semantic segmentation using image labels,” arXiv preprint arXiv:1612.02101, 2016.

- [51] D. Pathak, E. Shelhamer, J. Long, and T. Darrell, “Fully convolutional multi-class multiple instance learning,” arXiv preprint arXiv:1412.7144, 2014.

- [52] G. Papandreou, L.-C. Chen, K. P. Murphy, and A. L. Yuille, “Weakly-and semi-supervised learning of a deep convolutional network for semantic image segmentation,” in Proceedings of the IEEE international conference on computer vision, 2015, pp. 1742–1750.

- [53] F. Saleh, M. S. Aliakbarian, M. Salzmann, L. Petersson, S. Gould, and J. M. Alvarez, “Built-in foreground/background prior for weakly-supervised semantic segmentation,” in European Conference on Computer Vision. Springer, 2016, pp. 413–432.

- [54] W. Shimoda and K. Yanai, “Distinct class-specific saliency maps for weakly supervised semantic segmentation,” in European Conference on Computer Vision. Springer, 2016, pp. 218–234.

- [55] D. Kim, D. Cho, D. Yoo, and I. So Kweon, “Two-phase learning for weakly supervised object localization,” in Proceedings of the IEEE International Conference on Computer Vision, 2017, pp. 3534–3543.

- [56] A. Roy and S. Todorovic, “Combining bottom-up, top-down, and smoothness cues for weakly supervised image segmentation,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017, pp. 3529–3538.

- [57] X. Wang, S. You, X. Li, and H. Ma, “Weakly-supervised semantic segmentation by iteratively mining common object features,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 1354–1362.

- [58] Y. Wei, H. Xiao, H. Shi, Z. Jie, J. Feng, and T. S. Huang, “Revisiting dilated convolution: A simple approach for weakly-and semi-supervised semantic segmentation,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 7268–7277.

- [59] Z. Huang, X. Wang, J. Wang, W. Liu, and J. Wang, “Weakly-supervised semantic segmentation network with deep seeded region growing,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 7014–7023.

- [60] H. Wu, S. Zheng, J. Zhang, and K. Huang, “Fast end-to-end trainable guided filter,” in The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2018.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/759a0b9a-a602-42cb-9d4e-73a12af9ca2e/x14.png) |

Xiang Zhang received the MS degree in computer science from Northwest University, Xi’an, China, in 2018. He is now a PhD candidate at the same university. His research interests include statistical machine learning, large-scale visual recognition, plant species identification, and fine-grained semantic image segmentation. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/759a0b9a-a602-42cb-9d4e-73a12af9ca2e/x15.png) |

Wei Zhang received the PhD degree in computer science from Fudan University, China, in 2008. He is currently an associate professor with the School of Computer Science, Fudan University. He was a visiting scholar with the UNC-Charlotte, in 2016-2017. His current research interests include deep learning, computer vision, and video object segmentation. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/759a0b9a-a602-42cb-9d4e-73a12af9ca2e/x16.png) |

Jinye Peng received his MS degree in computer science from Northwest University, Xi’an, China, in 1996 and his PhD degree from Northwest Polytechnical University, Xi’an, China, in 2002. He joined Northwest Polytechnical University as Full Professor at 2006. His research interests include image retrieval, face recognition, and machine learning. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/759a0b9a-a602-42cb-9d4e-73a12af9ca2e/x17.png) |

Jianping Fan is a professor at UNC-Charlotte. He received his MS degree in theory physics from Northwest University, Xi’an, China in 1994 and his PhD degree in optical storage and computer science from Shanghai Institute of Optics and Fine Mechanics, Chinese Academy of Sciences, Shanghai, China, in 1997. He was a Researcher at Fudan University, Shanghai, China, during 1997-1998. From 1998 to 1999, he was a Researcher with Japan Society of Promotion of Science (JSPS), Osaka University, Japan. From 1999 to 2001, he was a Postdoc Researcher in the Department of Computer Science, Purdue University, West Lafayette, IN. His research interests include image/video privacy protection, automatic image/video understanding, and large-scale deep learning. |