Automatic Generation of Interpolants for Lattice Samplings: Part I — Theory and Analysis

Abstract.

Interpolation is a fundamental technique in scientific computing and is at the heart of many scientific visualization techniques. There is usually a trade-off between the approximation capabilities of an interpolation scheme and its evaluation efficiency. For many applications, it is important for a user to be able to navigate their data in real time. In practice, the evaluation efficiency (or speed) outweighs any incremental improvements in reconstruction fidelity. In this two-part work, we first analyze from a general standpoint the use of compact piece-wise polynomial basis functions to efficiently interpolate data that is sampled on a lattice. In the sequel, we detail how we generate efficient implementations via automatic code generation on both CPU and GPU architectures. Specifically, in this paper, we propose a general framework that can produce a fast evaluation scheme by analyzing the algebro-geometric structure of the convolution sum for a given lattice and basis function combination. We demonstrate the utility and generality of our framework by providing fast implementations of various box splines on the Body Centered and Face Centered Cubic lattices, as well as some non-separable box splines on the Cartesian lattice. We also provide fast implementations for certain Voronoi splines that have not yet appeared in the literature. Finally, we demonstrate that this framework may also be used for non-Cartesian lattices in 4D.

1. Introduction

Given a set of discrete data points that reside on a lattice, one of the key fundamental operations that one can perform on those data is interpolation. This appears in many different contexts in science and engineering: one may use interpolation to fill in data between points in time-series data, to fill in missing data between pixels in an image, or missing data between voxels in a volumetric image. In fact, many scientific visualization algorithms require a continuous representation of a signal as an input.

As simple as it may seem, interpolation of lattice sampled data can quickly become complicated. To many, interpolation is synonymous with linear interpolation, and if we wish to interpolate in higher dimensions, we can simply linearly interpolate in each dimension separately (i.e. a tensor product extension). However, there is much more freedom available that we may take advantage of when we move past one dimension. For example, there are many alternative interpolants (or basis functions) we may choose instead of a simple linear interpolant, many with higher accuracy and/or higher smoothness than a simple linear interpolant. Not only this, but we may have more freedom in terms of where samples are placed when the dimension of the space is . We say ‘may’ because for some problems, one is forced to use a specific lattice structure - typically the Cartesian lattice, whereas other problems allow lattice choice as a degree of freedom.

In this work, we build a framework for interpolation on lattices, and show how to do it fast. Our work originated in scientific visualization, in particular working with volumetric data, but the observations we make are generally applicable to other domains as well as higher dimensions. We will mainly keep to the 3-dimensional case, but we will note how to generalize to higher dimensions when it is necessary.

Returning to the context of scientific visualization, interpolation speed is important in practice because it facilitates interactivity which, in turn, allows for fast iteration over data-sets — the ability to quickly (visually) explore data is one of the key strengths of visualization. But more often than not, in practice, interpolation boils down to “use trilinear interpolation”, and it is clear why: trilinear interpolation is fast, it is implemented in hardware for all modern graphics processing units; it is straightforward to use, it inlvolves a single texture fetch instruction for modern GPUs; and it looks “good enough” in practice. However, linear interpolation has a number of shortcomings. Perhaps the most obvious is smoothness. Trilinear interpolation is based on a piece-wise linear univariate function extended via tensor product along all three dimensions; this ensures a continuous approximation but introduces discontinuities in the first and higher order derivatives. While this could be mitigated by using filtering methods, there is additional memory overhead associated with such methods (Alim et al., 2010). Linear interpolation also introduces visual artifacts when interpolating at lower resolutions. This is again related to the smoothness of the trilinear interpolant, but also has deeper roots in sampling and approximation theory. In short, the geometry and support of the chosen interpolant dictate how a reconstruction attenuates high-frequency content in the Fourier domain. This gives rise to different types of artifacts in data reconstruction (Csébfalvi and Rácz, 2016). Thus, there is value in exploring the design space of interpolants with the goal of minimizing error; in practice, one also needs to account for the computational costs associated with different interpolants.

Before moving further, we need to clarify what we mean by non-Cartesian computing. We use the term “non-Cartesian computing” to indicate a move away from tensor-product extensions. This can take the form of non-Cartesian lattices — take Figures 1 and 2 as examples that show the typical cubic Cartesian (CC) and square lattices in three and two dimensions, as well as more efficient non-Cartesian variants, namely the Body Centered Cubic (BCC) lattice, and the Face Centered Cubic (FCC) lattice, both of which have received a good amount of attention in the field of scientific visualization. We may also use the term “non-Cartesian computing” to denote the move away from tensor-product interpolants to non-separable interpolants. In the Cartesian world, one typically uses tensor product B-splines as basis functions; however, non tensor-product splines exist, and have been investigated in the literature (Csébfalvi and Rácz, 2016). One example is the ZP-element which requires fewer memory accesses per reconstruction than the cubic tensor-product B-spline but has similar approximation properties and provides smoother, more isotropic reconstructions (Entezari and Möller, 2006). When comparing different sampling lattices, one may argue that a true comparison for these lattices would be their Voronoi splines (Mirzargar and Entezari, 2010), which, until this work, have yet to have a dedicated GPU implementation.

What do we gain by adding additional complexity to our interpolation and data processing schemes? The seemingly benign difference in lattice and basis function changes the properties of the resulting approximation scheme. In fact, in 2D, an optimal hexagonal sampling scheme (Fig. 2) yields better approximations when combined with an appropriate interpolant, whereas on the BCC lattice (Fig. 1)— the optimal sampling lattice in 3D — the improvement is around (Entezari, 2006). Despite these advantages, the benefits of non-Cartesian computing have remained elusive. This is largely due to the complex algebro-geometric interplay between the lattices and the basis function they are paired with. These basis functions are typically composed of box splines which have intricate piece-wise polynomial structures which are challenging to evaluate in an efficient manner. Most treatments have dealt with evaluation and implementation issues in a somewhat adhoc manner.

Our goal in this work is to make the advantage of non-Cartesian computing more tangible — explicitly, we want to make the use of alternative interpolants/lattices more practical and usable. Our main contribution is a holistic analysis of approximation spaces with a careful focus on fast implementation. We outline a framework for translating interpolation bases into fast interpolation schemes for piece-wise polynomial interpolants. We then show how to use this pipeline to create unified fast implementations of many of the interpolants in the non-Cartesian volumetric visualization literature. It is important to mention that we obtain a form of evaluation that is agnostic to platform. We could generate CPU code, GPU code, or Verilog from our intermediate representation. Our GPU implementation takes advantage of the in-built tensor product linear filtering hardware (i.e. linear texture fetches) on contemporary GPUs to reduce the amount of memory accesses needed for reconstruction, however this does not always lead to optimal results.

We provide the theoretical analysis in this paper, and in the subsequent work we provide extensive implementation details. In our supplementary material we share the worksheet we use to automate the analysis as well as the code generation module, and pre-generated CUDA PTX code. To summarise, in this part, our contributions are as follows:

-

•

We provide a unified framework of analyses for combinations of splines and lattices from the context of scientific visualization.

-

•

We show how to use trilinear interpolation to reduce memory fetches and increase performance of our implementations.

-

•

We provide implementations for many interpolants in the literature that have not yet had robust GPU implementations.

-

•

We show the relative performance between GPU implementations, and show generality by moving towards a 4 dimensional example.

The remainder of this paper is organized as follows. In Section 2, we review the literature in our native context, scientific visualisation, but we will also pay note to some of the work done in one dimension and in image processing. In Section 3, we provide the background necessary for our framework. In Section 4, we attack the problem at its root, the convolution sum. We manipulate the convolution sum until it emits a form suitable for fast evaluation — this can be thought of as unrolling the convolution sum loop. In Section 5 we generate code for various interpolants (box splines and Voronoi splines) some of which have not yet appeared in the literature. We then evaluate their performance on the CPU and GPU with respect to the application of volume rendering.

2. Related Work

Interpolation is a fundamental operation in scientific visualization. Many visualization tasks (volumetric rendering, iso-surface extraction, flow visualization etc.) rely on the interpolation of a continuous function from a discrete set of values. In one dimension, the family of B-splines are the prototypical interpolant — the linear B-spline is the least computationally expensive in this family, and has received the most attention in practice (Unser, 1999). However, it is well understood what B-splines are members of the maximal order minimal support (MOMS) family, which are the optimal interpolants for a given support (Blu et al., 2001). This is not the only option though, there are other constraints one may wish to design around. For example: arithmetical complexity; total number of memory accesses (i.e. support) needed for a single point reconstruction; and smoothness are all parameters that can be tweaked. If one requires infinite differentiability, then CINAPACT splines are an appropriate interpolant candidate (Akram et al., 2015).

The design space for uni-variate interpolants is well understood. Appropriate choice of interpolant is not so clear in higher dimensions. For the domain of scientific visualization, the move to three dimensions allows practitioners the freedom to consider non-seperable splines. In this case, it is clear that the tensor product B-splines are no longer MOMS splines; the generalized ZP-element shows this, since it has lower support than (but the same approximation order as) the cubic B-spline (Entezari and Möller, 2006).

There are multiple works that investigate non-separable basis functions on non-Cartesian lattices; box splines and weighted linear combinations of box splines often appear as popular candidates (de Boor et al., 1993; Domonkos and Csébfalvi, 2010; Csebfalvi, 2013). However, it is fairly well known that evaluating a box spline numerically is quite difficult; the recursive form for box splines is unstable if naively implemented (de Boor, 1993). One can rectify this by ensuring that any decision made while evaluating a point on the spline’s separating hyperplanes is consistent (Kobbelt, 1997). Even then, recursive box spline evaluation is unsuitable for use on the GPU — the conditional nature and large branching behaviour of the recursive form will lead to branch divergence and stall execution units on the GPU, leaving its resources underutilized. It is more convenient to work with either the Bernstein Bézier (BB) form, or the explicit piece-wise polynomial (PP) form of a box spline which has been characterized in closed form (Horacsek and Alim, 2016). Mathematically these two forms are equivalent, the BB form is simply one specific factorization of the PP form that lends itself to evaluation with De Castlejau’s algorithm. Generally, a polynomial can be factorized in many different ways.

The BB form has been used in fast GPU evaluation schemes for box splines on non-Cartesian lattices. In the work of Kim et al. (Kim, 2017), the symmetry of a box spline is used to create look-up tables of the BB polynomial coefficients. Since these coefficients are rational, they are stored as pairs of integers and any division occurs at run time. This is particularly convenient on the GPU, as it allows one to write one function for De Castlejau’s algorithm, and each separate region of the spline to be evaluated is a set of integers that can be looked up based on an analysis of the regions of the spline’s mesh at runtime. However, this is somewhat wasteful since most regions within a spline’s mesh are related by a rotation and/or a shift (i.e. an affine change of variables). As we will see in Section 4, this change of variables allows us to re-write the polynomial within the region of evaluation as a transformation followed by a polynomial evaluation of some chosen reference polynomial (provided the spline has an appropriate structure). This reference polynomial can be evaluated in BB form, however, we choose to use a Horner factorization of the PP form — this allows us to reduce the amount of operations needed to calculate a given polynomial (Ceberio and Kreinovich, 2004). This is a generalization of the approach used in the work of Finkbeiner et al. (Finkbeiner et al., 2010).

On the BCC lattice multiple box splines have been investigated for volumetric visualization. The linear and quintic box splines were among the first used by Entezari et al. (Entezari et al., 2008, 2009) for volumetric data visualization. These splines are particularly interesting as they mimic the geometry of the BCC lattice and they attain the same order of approximation as the trilinear and tricubic splines on the CC lattice, but with smaller support. Then there is the quartic box spline of Kim et al. which has even smaller support than the quintic box spline, but the same order of approximation (Kim, 2013c). Additionally, in that work, Kim et al. proposed a 12-direction box spline with tensor product structure; this box spline has a large support size, but is reasonably fast compared to the other proposed box splines due to its tensor product flavour — in our implementation, we call the 12-direction box spline the tricubic box spline on the BCC lattice.

The FCC lattice has not received as much attention from researchers as the BCC lattice; we are only aware of few works that investigate box splines on the FCC lattice. In particular, Kim et al. have investigated a six direction box spline that respects the geometry of the FCC lattice (Kim et al., 2008a; Kim, 2013a). However, it is also true that the 12-direction box-spline (Kim, 2013c) is usable on the FCC lattice. The proposed splines of Csebfalvi et al. may also be generalized to the FCC lattice (Csebfalvi, 2013; Domonkos and Csébfalvi, 2010).

We are only aware few works that attempt the use of a non tensor-product box spline on the CC lattice for visualization. The work of Entezari et al. generalizes the Zwart-Powell (ZP) element of two dimensions to three dimensions. This also maintains the same order of approximation as the cubic tensor product spline, but with smaller support (Entezari and Möller, 2006). Even though this spline tends toward higher fidelity approximations, we are not currently aware of any GPU implementation of this spline, and we provide one in the supplementary material.

There is also the work of Csebfalvi et al., where a shifted and re-weighted box spline is designed so as to map easily to linear interpolation among the cosets of the BCC lattice (Csebfalvi, 2013). This method has the advantage of being extremely easy to implement on the GPU, has respectable reconstruction quality and runs at decent speeds compared to trilinear interpolation. There has also been some work investigating the use of splines designed for one lattice on another (Csébfalvi and Rácz, 2016). The use of direction vectors that do not correspond to principle lattice directions helps distribute frequency content more evenly in the Fourier domain.

Another intuitive idea is to look at the Voronoi cell of a lattice and convolve that with itself to obtain an interpolant, Figure 1 shows the CC, BCC and FCC lattices with their Voronoi cells. This produces a valid approximation scheme, but is quite expensive to compute (Mirzargar and Entezari, 2010; Van De Ville et al., 2004; Mirzargar and Entezari, 2011). Until this work, there was no GPU implementation available for these splines. These fit within our pipeline, and we provide an implementation for the BCC and FCC lattices — Voronoi splines on the CC lattice correspond to the tensor product B-spline.

Finally, while not strictly related to spline evaluation, it is important to discuss quasi-interpolation, since an approximation space may not harness the full approximation power of a given basis function without proper pre-filtering. Most of the basis functions discussed so far are not interpolating. If a basis is stable (i.e it forms a Riesz basis) then it is possible to process the input data so that the resulting reconstruction interpolates data (Alim, 2012). However, this does not always yield the best reconstruction. Moreover, some bases cannot be made interpolating, so what is to be done in those cases? Quasi-interpolants ensure that a reconstruction harnesses the full approximation order of a space — in general it is a good idea to prefilter data with a quasi-interpolant if a basis is not interpolating. This is related to an error kernel analysis, where the data convolved with a filter is guaranteed to decay with the order of the basis (Blu and Unser, 1999; Alim, 2012). While this is an important ingredient to a good reconstruction scheme, in this work we are not concerned with the approximation properties of a basis function, only on the speed at which it can be evaluated.

3. Background

When we work with data that are uniformly sampled in one dimension there is only one possible sampling scheme — take equally spaced samples along the indepenent axis. The straightforward extension of this to multiple dimensions is to sample evenly in all cardinal directions, i.e. a Cartesian sampling. However, the situation is generally more complex in higher dimensions.

To move away from Cartesian lattices and account for this complexity, a function can be sampled according to an invertible matrix. This matrix — known as the generating matrix — represents a lattice structure. That is, if we have a real valued function in some dimension , we define the sampled version of that function with respect to a given lattice as . Here, is a real valued scale parameter that controls the granularity or coarseness of the sampling pattern (i.e. the sampling rate), and is the generating matrix of the lattice. In any dimension, the identity matrix generates a Cartesian sampling. In two dimensions the hexagonal and quincunx lattices are generated by the matrices

| (1) |

respectively (Figure 2). In three dimensions, the BCC and FCC lattices are generated by

| (2) |

respectively (Figure 1). It is worth noting that a lattice may be generated by any number of different generating matrices — one may construct an equivalent lattice by carefully choosing vectors in . In general, finding a minimal basis for a given generating matrix is an NP-hard problem (Conway and Sloane, 2013), as such it is difficult to find a relationship between matrices that generate the same lattice.

While lattice theory is quite deep and general, we only adhere to the case where and has full rank. This is because, in practice, we use lattices as array-like data structures and it is not possible to index via real valued numbers. As a consequence, it is not possible for us to directly represent functions on the hexagonal lattice within our framework. However, it is possible to stretch an integer lattice into another non-integer lattice; for example, in Figure 2, the hexagonal lattice can be seen as a distorted quincunx lattice.

Another fundamental object related to a lattice is its Voronoi region, which is the set of points closest to the lattice site situated at the origin (both Figure 1 and Figure 2 show each lattice’s Voronoi region). The Voronoi region of a lattice embodies all the properties of a lattice — from it one may derive the shortest vector of a lattice (i.e. the shortest vector problem which is NP-complete) as well as set of shortest spanning vectors, the symmetry group of the lattice, packing efficiency etc. (Conway and Sloane, 2013; Viterbo and Biglieri, 1996). Unsurprisingly, computing the Voronoi region of a lattice is NP-complete as well, but for reasonably specified bases in lower dimensions, the problem is tractable via the Diamond Cutting algorithm (Viterbo and Biglieri, 1996).

To reconstruct a function on a given lattice, we choose a basis function , shift it to each lattice site, and modulate it by the coefficient stored at that lattice site. The space of all functions spanned by the lattice shifts of is defined as the set

| (3) |

where is a real-valued coefficient sequence associated with the lattice. Obtaining a good approximation to equates to finding an appropriate set ; this is highly dependent on the choice of and . If we are so lucky that is interpolating on (i.e. and when ), then we may choose . However, in general, some preprocessing must be performed in order to achieve optimal error decay between the original function and its approximation. This is beyond the scope of this article, but the interested reader may refer to some of the related work (Unser, 2000; Blu and Unser, 1999; Alim, 2012). Since any asymptotic analysis is outside of the scope of this paper, without loss of generalization we set .

As for specific choices of , box splines have been investigated extensively in scientific visualization. Box splines have many equivalent definitions, but perhaps the most intuitive is the convolutional definition where one successively convolves an indicator function along the direction vectors (de Boor et al., 1993). We collect of these vectors in an matrix

| (4) |

In our case, we are interested only in matrices where and the dimension of the range of is equal to ; we require these for a valid approximation scheme (de Boor et al., 1993). With this, a box spline can be defined recursively as

| (5) |

where is interpreted as removing a direction vector from the matrix . When , we define as

| (6) |

which provides a base case for the recursion.

The box spline is compactly supported within a polytope defined by the Minkowski sum of the direction vectors in . We denote the support as ; it is given by

| (7) |

is piecewise polynomial within its support. The polynomial pieces are delineated by all -dimensional hyperplanes spanned by the direction vectors in . Denoting as the set of all such hyperplanes, we can define a fine mesh that is formed by the lattice shifts of :

| (8) |

Each region of the mesh is a convex polytope that contains a polynomial, typically these are different polynomials, however, it is possible that some regions may share the same polynomial. In general, it is possible to derive the explicit piece-wise polynomial form for each of these regions (Horacsek and Alim, 2016)— we will use this explicit form in Section 4 to determine a simpler representation of the convolution sum in Equation 3.

Our framework also accounts for Voronoi splines, which can be intuitively defined as successive convolutions of a lattice’s indicator region with itself. More precisely, Voronoi splines are defined as

| (9) | |||||

| (10) |

where denotes convolution, and if is in the Voronoi region of the lattice , and otherwise. Voronoi splines are also piece-wise polynomial, and their piece-wise polynomial form may be obtained by first redefining as the sum of a collection of constant valued box splines; higher order may be obtained by the convolution definition (Mirzargar and Entezari, 2010). Unrolling the recursion, we obtain where denotes successive convolutions. If is the sum of box-splines, then one may use the multinomial theorem to expand into the sum of many box-spline convolutions; convolving a box-spline with another box-spline simply produces a (possibly shifted) box-spline. Thus, by summing all these box-splines, we arrive at the final piece-wise polynomial form. However, these calculations are not trivial. For the BCC lattice, is the sum of 16 box-splines, (analogous to the tri-linear B-spline on the CC lattice) is the sum of 136 box-splines, is the sum of 816 and , the sum of 3876 (Mirzargar and Entezari, 2010). While these numbers are not intractably large, keep in mind that one must compute a box-spline’s polynomial representation at every iteration, which can take a non-trivial amount of time; on the order of a few dozen minutes, for the splines that sum to . Spread across 4 CPU cores, this took us about a month to compute.

4. Methodology

The convolution sum in Equation 3 is the focus of this section. There are two important parts to evaluating a function of this form; the first is the representation of the coefficient set (i.e. the memory layout), the second is the evaluation of the basis function . Concerning , we choose a specific representation in which each corresponds to a fetch from an -dimensional array. Thus, for any lattice, we choose to treat it as a union of shifted Cartesian lattices (also known as a Cartesian coset decomposition). This allows us to use texture memory on GPUs to store each coset, as shown in Figure 3. Provided we combine all reads from a given coset, this also allows us to take advantage of data locality (i.e. cache). It also allows us to use trilinear fetches as building blocks for our interpolation schemes — that is, we attempt to write our interpolation schemes as weighted sums of trilinear texture fetches on the different cosets of the lattice. This does not necessarily reduce computation per evaluation, but rather reduces the number of texture fetches needed to evaluate the convolution sum, and therefore reduces bandwidth.

With the memory layout solidified, we turn to the basis function — we apply a series of manipulations to “unroll” the convolution sum. We build some insight into how to transform into a more convenient form for evaluation with some running examples. We consider the linear tensor product spline and the Zwart-Powell (ZP) element on the two-dimensional Cartesian lattice. These have direction matrices

respectively. On the quincunx lattice, we use a tensor product style spline defined by

These box splines are shown in Figure 4. While this is nowhere near an exhaustive list of interpolants, the examples we provide are simple enough to understand in 2D and show the intricacies of the procedure.

4.1. Manipulating the Convolution Sum

We start by reiterating the convolution sum in Equation (3):

| (11) |

At a high level, our methodology consists of incrementally applying a series of simple algebraic manipulations to this sum so that it can be more effectively mapped to an implementation; be it CPU or GPU. Along the way, we pause and discuss the effects of certain choices and properties of on an evaluation scheme.

The first manipulation we apply is one that has been used to derive fast interpolants on the BCC and FCC lattices (Csebfalvi, 2013; Domonkos and Csébfalvi, 2010). In particular, these interpolation schemes take advantage of the fact that an integer lattice can be decomposed into a sum of sub-lattices. We build upon this observation by noting that we may decompose the convolution sum up over any coset structure of the lattice. This decomposition is independent of our data representation. For example, one may take a cubic Cartesian lattice and decompose it as two shifted BCC lattices. Within our framework, on the GPU each of these two BCC lattices will in turn have two 3D textures associated with it.

Formally, if is an integer subsampling matrix that yields a sub-lattice of , then there exist integer vectors such that . Note that the lattice sites constitute the coset corresponding to the shift vector . Without loss of generality, we take to be zero vector of . We can now write the convolution sum as

| (12) |

which decomposes the sum over the coset structure of the lattice induced by . Figure 2 shows the Cartesian coset structure of the quincunx lattice. In three dimensions, the BCC lattice emits similar behaviour to this; it consists of two interleaved Cartesian grids where one of the Cartesian grids is shifted by the vector . The FCC lattice is similarly decomposed into Cartesian cosets, as shown in Figure 1.

The matrix is a parameter choice that must be made depending on what is most appropriate for the device on which we are implementing an interpolation scheme. Currently, for evaluation on the GPU, a reasonable choice is a such that where is a diagonal matrix. In other words, yields a Cartesian coset structure, and we may store the lattice as a collection of Cartesian lattices, specifically 3D textures (see Figure 3). However, it is not always strictly advantageous to do so; if does not have partition of unity on the sub-lattice generated by , then this complicates the geometric decomposition of the spline. We will revisit this when we discuss the sub-regions of evaluation of a spline. While an integer invertible will still produce a valid evaluation scheme, in practice must be chosen so that it both produces a simple sub-lattice and respects the geometry of .

Next, we note that in all practical instances we have a compact basis function — either we choose to be compact, or the finite nature of our data imply a bound on the support of . Thus we change our perspective and rewrite the convolution sum over the support of shifted to the evaluation point . From this perspective, any lattice site that falls within the support of this function will contribute to the reconstruction. To simplify notation, we define the set

| (13) |

As such, is the set of lattice sites on coset that contribute to the reconstruction at point . Therefore, we may write

| (14) |

So far, we have not strictly required to be compact, but now we impose the following constraints on : it has partition of unity on , and it is piece-wise polynomial with compact polyhedral support. Partition of unity — the ability for a reconstruction space to reproduce a constant function — is a basic requirement for a valid approximation scheme, along with polynomial decay in the Fourier domain (Strang and Fix, 2011). Compactness is generally not restrictive, almost all used in practice are compact. The polynomial restriction is slightly restrictive, as it does not allow us to consider the class of exponential box splines (Ron, 1988), the CINAPACT splines (Akram et al., 2015), or the cosine weighted spline (Csebfalvi, 2013) (which is an exponential box spline).

From Equation (14), it is clear how the support of affects the inner summation of this equation — if there are more lattice sites within the support of , then the inner summation will run over more terms. The effect this has on performance depends on the underlying architecture of the machine and the complexity of . If we can commit memory reads for the while beginning to compute parts of that do not depend on the coefficients of the summation, we can effectively hide the computation of in the latency of the memory fetches. Latency hiding of this form is common in GPU compute applications. Moreover, the out-of-order execution units on modern CPUs allow for this behaviour without explicitly coding for it. However, if memory accesses are not an issue (i.e. if we are reading from fast memory, such as cache), then the cost of reconstruction will be dominated by the complexity of , and it would be advantageous to choose with larger support but lower evaluation time complexity.

We now shift our focus to the geometry of . Without loss of generality, we limit our discussion to since all other cases are shifted versions of this base case. Let us choose some such that there exists some -neighborhood around for which remains constant. Then there is some larger region containing for which does not change. That is, if we pick any then . The closure of these regions tessellate space; Figure 5 shows examples of this. For box splines, these correspond to the regions within the finer mesh of a the box spline on the lattice (de Boor et al., 1993). In this work, we refer to these as the sub-regions of the spline.

Note the periodic nature of the sub-regions. They naturally fit into equivalence classes under the following definition: we say that two mesh regions and belong to the same equivalence class if for any , there exists some lattice shift such that . Note that there are only finitely many equivalence classes, say of them, and we denote them as . We will call the choice of representative mesh regions sub-regions of evaluation. We define a region of evaluation as a convex polyhedral collection of sub-regions with hyper-volume . Note that, since we have hyper-volume , a region of evaluation will tessellate space with one convex polyhedron about the lattice . Additionally, while the sub-region equivalence classes are determined by the (decomposed) lattice and , there are multiple possible regions of evaluation for a given (see Figure 5 and Figure 6). We choose all our regions of evaluation so that they contain or touch the origin, we call the choice of such a region .

The reason we focus on a single region of evaluation is that it allows us to exploit the shift invariant nature of the approximation space. That is, say we derive a fast evaluation function with for (but perhaps not outside ). The function necessarily depends on some subset of coefficients for some fixed . Since the region of evaluation tessellates space, for any there exists some such that . Thus, we can derive a fast evaluation scheme for all points by shifting the evaluation to and shifting the coefficients — this is a consequence of the “shift invariance” property of shift invariant spaces. We define the function

| (15) |

to formalize this notion, and assert that this must exist if tessellates space and has hypervolume equal to .

Thanks to this shift invariance, we may focus solely on the region of evaluation. To each representative sub-region of evaluation, we associate a function that is defined as follows:

| (16) |

For our piece-wise polynomial splines, we may explicitly construct the polynomial representation of . We use a greedy Horner scheme to optimize the evaluation of , computation on the GPU is relatively cheap, at least compared to bandwidth access.

We combine all these sub-region functions to a single “approximation” function, , with the definition

| (17) |

We can finally write the convolution sum per coset as

| (18) |

To obtain the final approximation, one only needs to sum this over all cosets.

While this is an optimized form, it still contains multiple branches — we have different cases for each sub-region. Modern CPUs have been heavily optimized to efficiently execute branch heavy code, whereas GPUs have not. The conditional behaviour of is a major problem for GPU implementations. However, it is possible to use the symmetry of to eliminate branches. A similar idea has been used to derive fast interpolation schemes on non-Cartesian lattices (Kim and Peters, 2009). The approach taken in other works has been to encode each different polynomial in BB form, use the sub-regions to determine which coefficient sequence to use in deCasteljau’s algorithm (Kim and Peters, 2009). In this work, we use a slightly more efficient idea — we use the symmetry of the basis function to encode a change of variable relationship between subregions. This allows us to rely on a small set of polynomials, rather than having one for each sub-region. We first discuss how to determine which sub-region a point lies within without any branches. We then discuss how to eliminate branches in Equation 17.

4.2. Branch Free Sub-region Membership

Suppose we have some , since is a convex polyhedron, and is the closed union of finitely many convex polyhedra, we know there is a finite set of planes that divide the region of evaluation into the sub-regions of evaluation. We will say we have of these planes, and we will call the set of planes . Here is the normalized orientation of the plane, and is the distance from the origin; explicitly, these planes are the planes that “cut” the region of evaluation into sub-regions. Thus, if we have some point , we can construct an integer in the range by assigning its bit a value of if is on the left side of the plane , and otherwise. This allows us to associate an integer with every single sub-region of evaluation. However, this does not map to the entire range , that is, it is possible that there are more integers in the range than there are sub-regions. Further, if is large, then may be vastly bigger than . Therefore, we first compress the range down to some range — that is, we first construct as above, then take its remainder upon division with (i.e. ) where is chosen so that each subregion maps to a unique integer in . To find such an , we simply perform a bruteforce search. We then create another (surjective) map that takes to a sub-region’s index. This is realized by an array of entries. On a GPU, we store this in fast constant or shared memory. On a CPU, this will hopefully be cached very quickly. Thus, to determine this index we only need plane comparisons, one remainder operation and one fast memory lookup (the conditional behaviour of the plane comparison is done on an instruction predicate level, and does not cause any branching).

For most of the cases we consider, the number of planes turns out to be quite small. The largest for box splines in 3D we observe is , which corresponds to the quartic BCC spline (Kim, 2013c), and seems to have little effect on reconstruction speed. We did however, notice that for all orders of the Voronoi BCC spline, is prohibitively large, . While this does not pose a problem to the theory of this method, if we did not impose the compression of the range to the mapping to sub-regions would consume over of memory. We can additionally apply this procedure recursively. That is, we determine which octant a reconstruction lies within, then transform it to the positive octant; this approach only works provided the spline has the reflective symmetry necessary, which the BCC Voronoi spline does indeed have, and allows us to reduce our lookup tables further. Note that this does introduce some overhead, as one needs to incorporate another transform in the generated code.

4.3. Branch Free Evaluation via Symmetry Analysis

To build the intuition for this optimization, we begin with an example. Say we have two regions and that “appear” similar, Figure 7 shows an example for two sub-regions. Intuitively we can see that is simply a rotation (or a reflection) of . One could easily model this with a rotation matrix and, to be more general, a translation vector . That is, we would want . If this were true, we could store and in a lookup table and implement the piece-wise structure in Equation 17 as a lookup instead of branch by first looking up and , applying them to , then evaluating a single . The situation, however, is slightly more complicated, as we have failed to match the coefficients between and . To make this consistent, we need some “renaming” map that renames the to be consistent with the transformation and shift . That is, we desire and such that

If we find such and then we know for any then we have , and by renaming the memory look-ups, we can use to evaluate the sub-region’s function. Here, must be an invertible map, and must be an invertible rigid body transformation.

To find such parameters, we first choose a region as our reference sub-region and search for and for every other sub-region . The shift is chosen to be the zero vector, or the center of mass for the sub-region; is chosen from the symmetry group of the spline. We implement this as a combinatorial brute force search. That is, we enumerate all possible pairs of and and check whether this combination yields a valid transformation. Here, “valid” means that there exists a such that the above simplification holds. For splines with polynomial sub-regions, we can associate a polynomial with each coefficient lookup in , then we apply the transformation to and determine the polynomial associated with each of the transformed sub-region evaluation function. This forms a bipartite graph where the untransformed coefficients are colored in, say blue, and the transformed coefficients are coloured in red; we assign an edge between two points in those sets if their associated polynomial is identical. If there exists a perfect matching between two sets, then we say it is “valid”. Moreover, the same perfect matching yields the function . Perhaps unsurprisingly, is a rigid body transformation in all the cases we consider in this work — that is, .

In general, there may be instances where it is not possible to find and for two sub-regions . This is the case for the 6 direction box spline on the FCC lattice — there are sub-regions with vastly different geometry (Kim, 2013b) . In general, we may have such unique regions that are not re-writeable in terms of one another, and we collect them in the set . To handle these cases, we use branch predication — we calculate the polynomial within all sub-regions and use a predicate operator to choose the correct region’s result at the end of the calculation. This performs unnecessary computation, but avoids the heavy overhead of branching on the GPU (Kim et al., 2013). Algorithm 1 summarizes how to use the techniques we have discussed to perform point-wise evaluation.

4.4. Reducing Bandwidth Requirements

While the above form is appropriate for execution on the GPU, each call to incurs a heavy amount of memory accesses for larger splines. All contemporary GPUs include hardware accelerated linear texture fetches. A naive implementation of the previous sections would use nearest neighbor interpolation to facilitate the coeffecient fetches, however, trilinear texture fetches are only a small constant factor slower (approximately on our hardware) and combine up to 8 texture fetches in one instruction.

In general, it is not always possible to use trilinear interpolation to reduce 8 texture fetches into 1 — this is only possible when the basis is separable. However, it is possible to rewrite two memory fetches (that reside on adjacent points of a given Cartesian coset) as a single tri-linear fetch. This offloads some of the reconstruction bandwidth pressure, but may require more computation. Fortunately, on GPUs, computation is relatively cheap compared to bandwidth.

To demonstrate this, let us fix a single , and suppose that we have two two lattice sites and that are adjacent on the same Cartesian coset. Then we attempt to find two functions , and such that

| (19) |

The term is exactly linear interpolation; this can be easily translated into single texture fetch instruction (and some additional computation for and Explicitly, the solutions and are given by

which is easily verified. Note that we require for this to be valid — to see why this is true in our case, we fix within a given sub-region. Since we wish to use Equation (19) to replace two fetches in the convolution sum (3), we know that the basis shifts associated with two coefficients in that sum must contribute to the final sum over the entire sub-region — they cannot be identically zero. The only other troublesome case is if for some in the sub-region under investigation. For all the cases we consider, our basis functions are strictly positive, so we need not worry about this — however, for some interpolating bases, this may present a problem. If we are working with volumetric data, with and defined as the texture coordinates of the lattice sites and in GPU memory, then we can write Equation 19 as

where is the linear texture fetch built into most GPUs. This is demonstrated in Figure 8. It also possible to perform a similar optimization with groups of 4 points that are arranged as a square (on a Cartesian coset) and groups of 8 arranged as a cube (again, on a Cartesian coset). Algebraically, these cases are much more involved, but the basic principle of solving for two functions and remains the same.

While we may be able to re-write groups of fetches as a single linear fetch, there are some details that need to be addressed. The first subtlety is that, while it may be possible to cover two memory fetches with one, it may also be possible to group those two memory fetches in a larger group. If every grouped memory fetch is a subset of the total collection of memory fetches, then we seek a minimal set covering of the total set.

The set covering problem is NP-complete, however, we only perform this step once as a precomputation. We enumerate all groups of 1, 2, 4 and 8 that can be combined into a single texture fetch and use Algorithm X to find a solution to the minimal set covering problem (Knuth, 2000). Once we have a set covering we may still choose an ordering of the memory fetches. We make the assumption that, when a texture fetch occurs, all the points that contribute to that texture fetch are cached — any neighboring texture fetch may then reuse some of the values that have been cached. To take advantage of this, we create a complete directed-graph in which each node corresponds to one linear texture fetch. To each edge of the graph we assign the number of cache-misses incurred by performing the pair of memory fetches (in order). We then seek a route through this graph that minimizes cache misses — this reduces to the travelling salesman problem; another NP-complete problem. Again, this is a one-time precomputation, and Figure 9 shows an example of the set covering for the quadratic tensor product spline.

5. Experiments & Results

In this work, we validate our methodology by demonstrating that our framework works for many different test cases. While performance is important, and we do report limited performance metrics in this work, we defer in-depth performance analysis to Part II (Horacsek and Alim, 2021). To this end, we choose two simple applications that demonstrate the generality of our framework. The first is volumetric rendering, in which we generate implementations for various different spline lattice combinations, many of which have not had fast GPU implementations. The second application is simply function approximation, however, we do this on the lattice on the CPU. To our knowledge, there relatively little works that investigate function approximation in this space. We are not aware of any that assess the convergence of such spaces, nor any that provide implementations.

We do not “implement” Algorithm 1 directly; we use it simply as a template for code generation. That is, the input to our pipeline is a list of (polyhedral region, polynomial) pairs, and the output is generated LLVM code (Lattner and Adve, 2004). For our volumetric rendering experiments, we use the LLVM code to generate PTX code, for our other tests we emit x86_64 assembly. We have constrained ourselves to the CUDA ecosystem as it is the most popular GPGPU platform, however, LLVM contains both a backend for AMD GPUs and a platform agnostic SPIR-V backend that is capable of targing OpenCL + OpenGL devices (Kessenich et al., 2018). The details of the code generation stage, and a more detailed breakdown of performance is detailed in part II (Horacsek and Alim, 2021).

The processing time for a given interpolant varies based on the spline, higher order splines with large support take much longer, but the process takes typically on the order of hours on an Intel i7 7700 with 16GB of DDR4 RAM at 2333 MHz. However, the processing time of the pipeline is a one time computation, and need not be repeated once an effecient form has been derived. We run our tests on the generated code.

5.1. Volume Rendering

Volume rendering is an illustrative problem as it demonstrates an average case application — as rays march through the cells in the volume, encountering a new cell will cause a cache miss, however, for points sampled within a cell we get cache hits. Moreover it does not favour any given lattice structure — a problem that uses slices of a volume along principle lattice directions may favour one case over another.

All of our tests were run with the same CPU as above and an NVIDIA GTX 1060 with 6GB ram and no memory or core over-clocks. Compute kernels were set to use a maximum of 128 registers. Table 1 enumerates the list of interpolants for which we generated code. When generating code, there is a small number of parameters to choose — we tuned each interpolant separately in order to maximize their performance as detailed in Part II (Horacsek and Alim, 2021).

Each test cased was budgeted approximately the same amount of memory to ensure fairness between test results. For our test function, we sampled the Marshner Lobb function (Marschner and Lobb, 1994) at each lattice site, this is the de-facto standard when comparing interpolation kernels — as one moves further from the center of the volume, the spatial frequency increases, as such reconstruction errors visually depict how frequency content is distorted. Typically, one renders the Marshner Lobb as an iso-surface, however we stick to volumetric rendering to expose the worst case behaviour of the algorithm. The isosurface for a given isovalue may change slightly among grids, thus one grid may terminate earlier than others, our volumetric rendering scheme ensures uniformity of marched rays. Scale was chosen to be equal in each case. Our transfer function was chosen so that the volume was mostly transparent, thus forcing all rays to traverse the entire volume.

| Interpolant Name | Lattice | Direction Matrix | Order | # Lookups | Reference |

| CC Voronoi Spline 1 | CC |

|

2 | 8 | |

| FCC Voronoi Spline 1† | CC | N\A | 2 | 16 | (Mirzargar and Entezari, 2010) (Mirzargar and Entezari, 2011) |

| Linear Rhombic† Dodecahedron | CC |

|

2 | 16 | (Entezari et al., 2004) (Csébfalvi and Rácz, 2016) |

| CC Voronoi Spline 2 | CC |

|

3 | 27 | |

| BCC Voronoi Spline 1† | CC | N\A | 2 | 32 | (Mirzargar and Entezari, 2010) (Mirzargar and Entezari, 2011) |

| Truncated Rhombic Dodecahedron | CC |

|

4 | 53 | (Entezari and Möller, 2006) |

| FCC Voronoi Spline 2† | CC | N\A | 3 | 54 | (Mirzargar and Entezari, 2010) (Mirzargar and Entezari, 2011) |

| CC Voronoi Spline 3 | CC |

|

4 | 64 | |

| Linear Rhombic Dodecahedron | BCC |

|

2 | 4 | (Entezari et al., 2004) |

| BCC Voronoi Spline 1† | BCC | N\A | 2 | 8 | (Mirzargar and Entezari, 2010) (Mirzargar and Entezari, 2011) |

| BCC Voronoi Spline 2† | BCC | N\A | 3 | 27 | (Mirzargar and Entezari, 2010) (Mirzargar and Entezari, 2011) |

| Quartic Truncated Rhombic Dodecahedron | BCC |

|

4 | 30 | (Kim, 2013c) |

| Qunitic Rhombic Dodecahedron | BCC |

|

4 | 32 | (Entezari et al., 2004) |

| FCC Voronoi Spline 1† | FCC | N\A | 2 | 8 | (Mirzargar and Entezari, 2010) (Mirzargar and Entezari, 2011) |

| Cubic Truncated Octohedron | FCC |

|

3 | 16 | (Kim et al., 2008b) |

| FCC Voronoi Spline 2† | FCC | N\A | 3 | 27 | (Mirzargar and Entezari, 2010) (Mirzargar and Entezari, 2011) |

5.1.1. Quantitative Results

For every combination of basis function and lattice tested, we obtained reasonable render times, shown in Figure 10. The only case in which performance degraded beyond what we consider reasonable is the BCC Voronoi 2 spline on the BCC lattice — this is likely due to the complex polynomial structure of the spline combined with the large amount of memory fetches one needs to reconstruct a single value. We do not consider this spline to be real time, however it may be used in progressive rendering approaches to refine a rendered image once user interaction has stopped.

A question one might have, based on these results, is how the generated code scales up as the interpolants become more complex. That is, if we require double the memory accesses per reconstruction, it is reasonable to think that a reconstruction would take double the amount of time to complete. Figure 11 shows a plot relating the number of points that contribute to a reconstructed point versus the render time, and a least squares fit (with the single outlier point removed). The least squares fit has a slope of approximately 0.6. At first glance this appears quite promising — one might expect a slope of 1, and 0.6 implies that the cost of doubling the complexity of the spline is less than one might expect. However, the correlation coefficient of these data is approximately 0.45 (with p-value 0.07), thus this correlation is quite weak. Unfortunately, there are too many confounding variables — remember, we take the best results over all configurations of spline spaces (i.e we mix branch predication, tri-linear lookups, etc.). We will return to this in Part II.

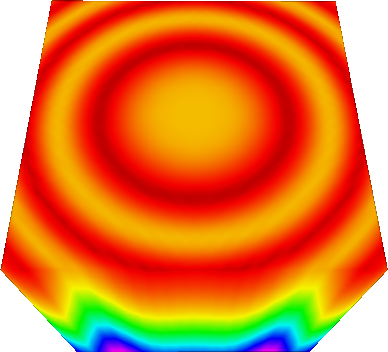

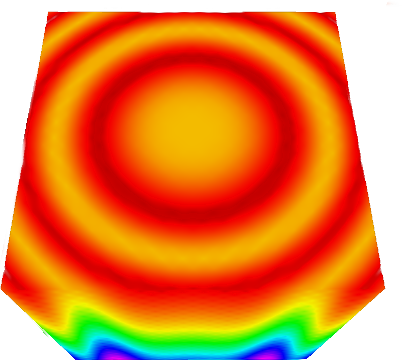

5.1.2. Qualitative Results

While it is not the goal of this work to showcase qualitative visual results, we compare the splines of each lattice on their respective lattice. Figure 12 shows the qualitative difference for the rendered volumes. These are at similar resolutions, and it is clear that the BCC and FCC outperform the CC lattice. Between the BCC and FCC lattice the comparison is more difficult, there are subtle difference in reconstruction, yet the artifacting seems wholly more isotropic on the BCC lattice.

5.2. Approximation on the lattice

To demonstrate that we are not bound to 2 and 3 dimensions, we derive a fast evaluation scheme for the 4-dimensional lattice. The generating lattice, as well as the direction matrix for our spline are

| (20) |

respectively. The geometry of this lattice is similar to that of the FCC lattice; the lattice consists of 8 Cartesian cosets, shifted to the 3-facets of the 4-dimensional hypercube. The spline we choose is a modification of the one presented by Kim et al., however some direction vectors have been removed to make the space computable in a reasonable amount of time (Kim and Peters, 2011). The spline is a fourth order spline, but not interpolating, as such it must be pre-filtered to ensure that the error will decay as expected. We used a filter with value at the origin, and at all lattice sites at distance from the origin. We approximated a Gaussian with mean and . We started with a grid scale and successively halved 10 times, at each iteration we sampled the function on the grid , then measured the error over the domain via Monte Carlo integration with 10,000 samples. The overall decomposition analysis for the spline took approximately 2 days to compute, and the code generation (Horacsek and Alim, 2021) took approximately a day to compute. Again, this is a one time pre-computation; reconstruction, on the other hand, is many orders of magnitude faster, a single point evaluation takes less than a millisecond.

5.2.1. Results

Since the spline is fourth order spline, we expect to see the error decay by a factor of at every iteration. Figure 13 demonstrates exactly this behaviour. An equivalent tensor-product spline on the 4-dimensional Cartesian lattice would require 256 memory accesses for the same order, whereas this case requires only 240. Perhaps astonishingly, the same spline has the same order on the lattice (the dual of the ) requiring only 60 memory accesses. The lattice attains the optimal hyper-sphere packing in 4-dimensions, as such, the lattice would be the optimal sampling lattice. However, we stick to the lattice, as it is more difficult to derive interpolants for; its coset structure contains more shifted lattices than the lattice, and is somewhat of a “stress-test” for our methodology.

6. Conclusion

We presented a generalized framework for analysing multidimensional splines on non-Cartesian (and Cartesian grids), with the target of producing fast evaluation schemes for said spline spaces. While this is the main contribution of the work, we also produced performant code for the notoriously complex Voronoi splines on the FCC and BCC lattice which have not yet had efficient implementations. We also demonstrated the computational behaviour of our approach as spline size increased, showing reasonable computational increase as the support of a spline increases—however we saw that it is difficult to predict performance based on support size alone. Finally, we investigated the performance in 4-dimensions, and provided an imlpementation (and quasi-interpolant filter) for a spline on the lattice. The entire pipeline of our worksheet is implemented within a SageMath worksheet, and is available on github (Developers, 2016; Horacsek, 2021). Further details related to the code generation step of our pipeline, as well as detailed performance results are presented in part II of this work (Horacsek and Alim, 2021). Future theoretical work is focused on extending this framework to more classes of splines (i.e. the exponential box splines), designing optimized interpolants and assessing the quality of interpolants in a systematic manner.

References

- Akram et al. (2015) Bita Akram, Usman R. Alim, and Faramarz F. Samavati. Cinapact-splines: A family of infinitely smooth, accurate and compactly supported splines. In George Bebis, Richard Boyle, Bahram Parvin, Darko Koracin, Ioannis Pavlidis, Rogerio Feris, Tim McGraw, Mark Elendt, Regis Kopper, Eric Ragan, Zhao Ye, and Gunther Weber, editors, Advances in Visual Computing, pages 819–829, Cham, 2015. Springer International Publishing. ISBN 978-3-319-27857-5.

- Alim et al. (2010) Usman Alim, Torsten Möller, and Laurent Condat. Gradient estimation revitalized. IEEE Transactions on Visualization and Computer Graphics, 16(6):1495–1504, 2010.

- Alim (2012) Usman R. Alim. Data Processing on the Body-Centered Cubic Lattice. PhD thesis, Simon Fraser University, Burnaby BC, Canada, 07/2012 2012.

- Blu and Unser (1999) Thierry Blu and Michael Unser. Quantitative fourier analysis of approximation techniques. i. interpolators and projectors. IEEE Transactions on signal processing, 47(10):2783–2795, 1999.

- Blu et al. (2001) Thierry Blu, P Thcvenaz, and Michael Unser. Moms: Maximal-order interpolation of minimal support. IEEE Transactions on Image Processing, 10(7):1069–1080, 2001.

- Ceberio and Kreinovich (2004) Martine Ceberio and Vladik Kreinovich. Greedy algorithms for optimizing multivariate horner schemes. ACM SIGSAM Bulletin, 38(1):8–15, 2004.

- Conway and Sloane (2013) John Horton Conway and Neil James Alexander Sloane. Sphere packings, lattices and groups, volume 290. Springer Science & Business Media, 2013.

- Csebfalvi (2013) Balazs Csebfalvi. Cosine-weighted b-spline interpolation: A fast and high-quality reconstruction scheme for the body-centered cubic lattice. IEEE transactions on visualization and computer graphics, 19(9):1455–1466, 2013.

- Csébfalvi and Rácz (2016) Balázs Csébfalvi and Gergely Rácz. Retailoring box splines to lattices for highly isotropic volume representations. Computer Graphics Forum, 35(3):411–420, 2016. ISSN 1467-8659. doi: 10.1111/cgf.12917. URL http://dx.doi.org/10.1111/cgf.12917.

- de Boor (1993) Carl de Boor. On the evaluation of box splines. Numerical Algorithms, 5(1):5–23, 1993. ISSN 1017-1398. doi: 10.1007/BF02109280.

- de Boor et al. (1993) Carl de Boor, Klaus Höllig, and Sherman Riemenschneider. Box Splines. Springer-Verlag New York, Inc., New York, NY, USA, 1993. ISBN 0-387-94101-0.

- Developers (2016) The Sage Developers. Sage Mathematics Software (Version 7.2), 2016. http://www.sagemath.org.

- Domonkos and Csébfalvi (2010) Balázs Domonkos and Balázs Csébfalvi. DC-splines: Revisiting the trilinear interpolation on the body-centered cubic lattice. In Proceedings of the 15th Vision, Modeling, and Visualization Workshop (VMV), pages 275–282, Siegen, Germany, November 2010.

- Entezari et al. (2008) A. Entezari, D. Van De Ville, and T. Moller. Practical box splines for reconstruction on the body centered cubic lattice. Visualization and Computer Graphics, IEEE Transactions on, 14(2):313–328, March 2008. ISSN 1077-2626. doi: 10.1109/TVCG.2007.70429.

- Entezari (2006) Alireza Entezari. Towards computing on non-cartesian lattices. IEEE Transactions on Visualization and Computer Graphics, 2006.

- Entezari and Möller (2006) Alireza Entezari and Torsten Möller. Extensions of the Zwart-Powell box spline for volumetric data reconstruction on the cartesian lattice. IEEE Transactions on Visualization and Computer Graphics, 12(5), 2006.

- Entezari et al. (2004) Alireza Entezari, Ramsay Dyer, and Torsten Möller. Linear and cubic box splines for the body centered cubic lattice. In Proceedings of the conference on Visualization’04, pages 11–18. IEEE Computer Society, 2004.

- Entezari et al. (2009) Alireza Entezari, Mahsa Mirzargar, and Leila Kalantari. Quasi-interpolation on the body centered cubic lattice. In Computer Graphics Forum, volume 28, pages 1015–1022. Wiley Online Library, 2009.

- Finkbeiner et al. (2010) Bernhard Finkbeiner, Alireza Entezari, Dimitri Van De Ville, and Torsten Möller. Efficient volume rendering on the body centered cubic lattice using box splines. Computers & Graphics, 34(4):409–423, 2010.

- Horacsek and Alim (2016) J.J. Horacsek and U.R. Alim. Fast and exact evaluation of box splines via the PP-form. ArXiv e-prints, June 2016.

- Horacsek (2021) Joshua Horacsek. Fast spline. https://github.com/jjh13/fast-spline, 2021.

- Horacsek and Alim (2021) Joshua Horacsek and Usman Alim. Generating fast interpolants for volumetric data: Part ii — implementation and code generation. 2021.

- Kessenich et al. (2018) John Kessenich, Boaz Ouriel, and Raun Krisch. Spir-v specification. Khronos Group, 3, 2018.

- Kim (2013a) Minho Kim. GPU isosurface raycasting of FCC datasets. Graphical Models, 75(2):90 – 101, 2013a. ISSN 1524-0703. doi: http://dx.doi.org/10.1016/j.gmod.2012.11.001. URL http://www.sciencedirect.com/science/article/pii/S152407031200077X.

- Kim (2013b) Minho Kim. Gpu isosurface raycasting of fcc datasets. Graphical Models, 75(2):90–101, 2013b.

- Kim (2013c) Minho Kim. Quartic box-spline reconstruction on the BCC lattice. IEEE transactions on visualization and computer graphics, 19(2):319–330, 2013c.

- Kim (2017) Minho Kim. Analysis of symmetry groups of box-splines for evaluation on gpus. Graphical Models, 93:14 – 24, 2017. ISSN 1524-0703. doi: https://doi.org/10.1016/j.gmod.2017.08.001. URL http://www.sciencedirect.com/science/article/pii/S1524070317300553.

- Kim and Peters (2009) Minho Kim and Jorg Peters. Fast and stable evaluation of box-splines via the bb-form. Numerical Algorithms, 50(4):381–399, 2009. ISSN 1017-1398. doi: 10.1007/s11075-008-9231-6.

- Kim and Peters (2011) Minho Kim and Jörg Peters. Symmetric box-splines on root lattices. Journal of computational and applied mathematics, 235(14):3972–3989, 2011.

- Kim et al. (2008a) Minho Kim, Alireza Entezari, and Jörg Peters. Box spline reconstruction on the face-centered cubic lattice. Visualization and Computer Graphics, IEEE Transactions on, 14(6):1523–1530, 2008a.

- Kim et al. (2008b) Minho Kim, Alireza Entezari, and Jörg Peters. Box spline reconstruction on the face-centered cubic lattice. IEEE Transactions on Visualization and Computer Graphics, 14(6):1523–1530, 2008b.

- Kim et al. (2013) Minho Kim, Hyunjun Kim, and Aaliya Sarfaraz. Efficient gpu isosurface ray-casting of bcc datasets. 2013.

- Knuth (2000) Donald E Knuth. Dancing links. arXiv preprint cs/0011047, 2000.

- Kobbelt (1997) Leif Kobbelt. Stable evaluation of box‚splines. Numerical Algorithms, 14(4):377–382, 1997. ISSN 1017-1398. doi: 10.1023/A:1019133501773.

- Lattner and Adve (2004) Chris Lattner and Vikram Adve. LLVM: A compilation framework for lifelong program analysis and transformation. pages 75–88, San Jose, CA, USA, Mar 2004.

- Marschner and Lobb (1994) Stephen R Marschner and Richard J Lobb. An evaluation of reconstruction filters for volume rendering. In Proceedings of the conference on Visualization’94, pages 100–107. IEEE Computer Society Press, 1994.

- Mirzargar and Entezari (2010) Mahsa Mirzargar and Alireza Entezari. Voronoi splines. IEEE Transactions on Signal Processing, 58(9):4572–4582, 2010.

- Mirzargar and Entezari (2011) Mahsa Mirzargar and Alireza Entezari. Quasi interpolation with voronoi splines. IEEE transactions on visualization and computer graphics, 17(12):1832–1841, 2011.

- Ron (1988) Amos Ron. Exponential box splines. Constructive Approximation, 4(1):357–378, 1988.

- Strang and Fix (2011) Gilbert Strang and George Fix. A fourier analysis of the finite element variational method. In Constructive aspects of functional analysis, pages 793–840. Springer, 2011.

- Unser (1999) Michael Unser. Splines: A perfect fit for signal and image processing. IEEE Signal processing magazine, 16(6):22–38, 1999.

- Unser (2000) Michael Unser. Sampling-50 years after shannon. Proceedings of the IEEE, 88(4):569–587, 2000.

- Van De Ville et al. (2004) Dimitri Van De Ville, Thierry Blu, Michael Unser, Wilfried Philips, Ignace Lemahieu, and Rik Van de Walle. Hex-splines: A novel spline family for hexagonal lattices. IEEE Transactions on Image Processing, 13(6):758–772, 2004.

- Viterbo and Biglieri (1996) Emanuele Viterbo and Ezio Biglieri. Computing the voronoi cell of a lattice: the diamond-cutting algorithm. IEEE transactions on information theory, 42(1):161–171, 1996.