
Automatic Exposure Selection and Fusion for High Dynamic Range Photography via Smartphones

Abstract

High Dynamic Range (HDR) photography involves fusing a bracket of images taken at different exposure settings in order to compensate for the low dynamic range of digital cameras such as the ones used in smartphones. In this paper, a method for automatically selecting the exposure settings of such images is introduced based on the camera characteristic function. In addition, a new fusion method is introduced based on an optimization formulation and weighted averaging. Both of these methods are implemented on a smartphone platform as an HDR app to demonstrate the practicality of the introduced methods. Comparison results with several existing methods are presented indicating the effectiveness as well as the computational efficiency of the introduced solution.

Keywords: Automatic exposure selection, High Dynamic Range photography on smartphones, exposure bracketing

1 Introduction

Many camera sensors, in particular the ones used in smartphones, have limited dynamic or contrast ranges. High Dynamic Range (HDR) techniques compensate for the low dynamic range of such sensors by capturing a number of images at different exposures, called an exposure bracket, and by fusing these images to form an HDR image Ref1, Ref2.

Although an exposure bracket may contain many images taken at different exposure settings, Barakat et al. Ref3 showed that in most cases an exposure bracket consisting of three images is adequate for capturing the full contrast of a scene. Exposure bracketing is thus normally done by taking three images: one at the auto-exposure (AE) setting, together with a brighter-looking image and a darker-looking image taken at $\pm n$ EV exposure settings, where the exposure level $n$ is manually selected or user-specified. An automatic exposure selection method makes it possible to generate HDR images without requiring users to select the exposure level $n$. Automatic exposure selection methods in the literature can be grouped into two major categories: scene irradiance-based methods Ref3, Ref4 and ad-hoc methods Ref5-Ref7.

Fusion approaches in the literature can also be placed into two major categories: (i) irradiance mapping Ref8 followed by tonal mapping, and (ii) direct exposure fusion Ref9. Tonal mapping methods work either globally or locally. Global tonal mapping methods, e.g. Ref10, use a monotonically increasing curve to compress an irradiance map. Such methods do not retain local image details. On the other hand, local tonal mapping methods retain local image details. Recent tonal mapping algorithms, e.g. Ref11-Ref13, decompose the luminance of an irradiance map image into a base layer and a detail layer, where the base layer consists of large-scale variations and the detail layer of small-scale variations. Direct exposure fusion methods, e.g. Ref15-Ref21, use local image characteristics to generate weight maps and then fuse bracket images using such maps. It is worth noting that some exposure fusion works have addressed the generation of HDR images in the presence of changing scenes Ref22, Ref23.

In this paper, a new HDR photography method for scenes that remain static during the time different exposures are captured is introduced and implemented on smartphones. This method consists of two parts: exposure selection and exposure fusion. In the exposure selection part, the scene is analyzed to determine three exposure times automatically for under-exposed, normal-exposed, and over-exposed images. These images are then blended or fused in the exposure fusion part using an optimization framework and weighted averaging.

The rest of the paper is organized as follows. In sections 2 and 3, the exposure selection and the exposure fusion parts are described, respectively. Section 4 provides the smartphone implementation or app for generating HDR images. The results and comparisons with several existing methods are then presented in section 5 and finally the paper is concluded in section 6.

2 Automatic Exposure Selection Method

Exposure bracketing involves the use of three images: a normal-exposed image ($I_N$), an over-exposed image ($I_O$), and an under-exposed image ($I_U$). The exposure selection method we introduced in Ref7 is employed here for the smartphone implementation. The steps to find the optimal exposure deviations about the normal exposure are shown in Fig. 1.

Initially, all the camera parameters are set to automatic and an auto-exposed image $I_{AE}$ is captured. The luminance of $I_{AE}$ is clustered into dark, normal, and bright regions. These regions represent under-exposed, well-exposed, and over-exposed parts of the image, respectively. The optimal exposure times are then obtained in order to make the dark, the normal, and the bright regions of $I_{AE}$ better exposed in $I_O$, $I_N$, and $I_U$, respectively. Unlike conventional automatic exposure methods, which assume a linear relationship between the exposure time and the brightness level, in this work the camera characteristic function is used to establish this relationship, and the exposure times are found by mapping the gray-level means of the clustered regions to the optimal gray level (usually 128 for 256-level images). More details of the exposure selection process are provided in Ref7.
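The mapping from cluster means to exposure times can be illustrated with a minimal sketch. It assumes a hypothetical gamma-type camera characteristic function (the function actually used is the one described in Ref7 and is not reproduced here) and a plain 1-D k-means for the three luminance clusters. The names `response`, `inverse_response`, `kmeans3`, and `exposure_times` are illustrative and do not come from the paper.

```python
def response(exposure, gamma=2.2):
    """Hypothetical camera characteristic function: relative exposure in [0, 1] -> gray level."""
    return 255.0 * min(max(exposure, 0.0), 1.0) ** (1.0 / gamma)


def inverse_response(gray, gamma=2.2):
    """Inverse of the assumed response: gray level -> relative exposure."""
    return (gray / 255.0) ** gamma


def kmeans3(values, iters=20):
    """Cluster gray levels into dark, normal, and bright groups (1-D k-means, k = 3)."""
    lo, hi = min(values), max(values)
    means = [lo, (lo + hi) / 2.0, hi]
    for _ in range(iters):
        groups = [[], [], []]
        for v in values:
            idx = min(range(3), key=lambda i: abs(v - means[i]))
            groups[idx].append(v)
        # Keep the previous mean if a cluster happens to be empty
        means = [sum(g) / len(g) if g else m for g, m in zip(groups, means)]
    return means


def exposure_times(luma, t_auto, target=128.0):
    """Exposure times that bring each cluster mean to the target gray level."""
    dark, normal, bright = kmeans3(luma)

    def scale(mean):
        # Exposure is assumed proportional to exposure time
        return inverse_response(target) / inverse_response(max(mean, 1.0))

    return {
        "over": t_auto * scale(dark),      # longer time, brightens the dark region
        "normal": t_auto * scale(normal),
        "under": t_auto * scale(bright),   # shorter time, darkens the bright region
    }


luma = [12, 15, 20, 118, 125, 130, 240, 245, 250]
times = exposure_times(luma, t_auto=1 / 100)
```

With a nonlinear response, the ratio of exposure times differs from the ratio of gray levels, which is the reason for going through the characteristic function rather than scaling the time linearly.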

3 Fusion Method to Generate HDR Images

This section describes the fusion of the under-exposed, normal-exposed, and over-exposed images $I_U$, $I_N$, and $I_O$ using the algorithm described in the next subsections. Let $Y_U$, $Cb_U$, $Cr_U$, $Y_N$, $Cb_N$, $Cr_N$, $Y_O$, $Cb_O$, $Cr_O$, and $Y$, $Cb$, $Cr$ represent the Y, Cb, and Cr components of the images $I_U$, $I_N$, $I_O$, and the fused or output image, respectively. Fusion is conducted for the luminance and chrominance components separately as described in the following two subsections.

3.1 Luminance fusion

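Here gradient information is used to guide the luminance fusion. The rationale behind using gradient information is that a well-exposed image provides a better representation of edges, that is to say it provides higher gradient values than a poorly-exposed image Ref18. The gradients are extracted from the well-exposed regions of the luminance components $Y_U$, $Y_N$, and $Y_O$ and merged into gradient maps along the horizontal ($G_h$) and vertical ($G_v$) directions. An initial estimate ($X$) of the fused luminance ($Y$) is also obtained by averaging $Y_U$, $Y_N$, and $Y_O$. Using the extracted gradients and the initial estimate, the fused luminance ($Y$) is obtained by solving the optimization problem stated below.

$$\min_{Y}\ \|Y - X\|_F^2 + \lambda\left(\|D_h * Y - G_h\|_F^2 + \|D_v * Y - G_v\|_F^2\right) \tag{1}$$

where $\|\cdot\|_F$ denotes the Frobenius matrix norm, $D_h$ and $D_v$ indicate the horizontal and vertical gradient operators, $*$ denotes convolution, and $\lambda$ is a weighting parameter. The solution to this optimization problem is given by (see Appendix A):

$$Y(u,v) = \mathcal{F}^{-1}\left\{\frac{\mathcal{F}(X) + \lambda\left[\overline{\mathcal{F}(D_h)}\,\mathcal{F}(G_h) + \overline{\mathcal{F}(D_v)}\,\mathcal{F}(G_v)\right]}{1 + \lambda\left[|\mathcal{F}(D_h)|^2 + |\mathcal{F}(D_v)|^2\right]}\right\}(u,v) \tag{2}$$

where $u$ and $v$ are the pixel coordinates, $\mathcal{F}$ and $\mathcal{F}^{-1}$ denote the forward and inverse Fourier transforms, respectively, the overline denotes complex conjugate, and the products and the division are element-wise.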
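A minimal sketch of a frequency-domain solver of this kind is given below. It assumes the objective $\|Y - X\|_F^2 + \lambda(\|D_h * Y - G_h\|_F^2 + \|D_v * Y - G_v\|_F^2)$ with circular boundary handling and forward-difference gradient operators; the placement of $\lambda$, the choice of operators, and the names `dft2`, `circ_grad`, and `solve_luminance` are assumptions made for illustration. A naive DFT is used in place of an FFT so that the example needs only the standard library, which is practical only for tiny images.

```python
import cmath


def dft2(a, inverse=False):
    """Naive 2-D discrete Fourier transform of a list of lists (tiny images only)."""
    rows, cols = len(a), len(a[0])
    sign = 1 if inverse else -1
    out = [[0j] * cols for _ in range(rows)]
    for u in range(rows):
        for v in range(cols):
            total = 0j
            for m in range(rows):
                for n in range(cols):
                    angle = sign * 2 * cmath.pi * (u * m / rows + v * n / cols)
                    total += a[m][n] * cmath.exp(1j * angle)
            out[u][v] = total / (rows * cols) if inverse else total
    return out


def circ_grad(img, dm, dn):
    """Circular forward difference: img[m + dm][n + dn] - img[m][n]."""
    rows, cols = len(img), len(img[0])
    return [[img[(m + dm) % rows][(n + dn) % cols] - img[m][n]
             for n in range(cols)] for m in range(rows)]


def solve_luminance(x, g_h, g_v, lam):
    """Minimise ||Y-X||^2 + lam*(||Dh*Y-Gh||^2 + ||Dv*Y-Gv||^2), circular boundaries."""
    rows, cols = len(x), len(x[0])
    delta = [[1.0 if (m, n) == (0, 0) else 0.0 for n in range(cols)]
             for m in range(rows)]
    # Applying an operator to a unit impulse and transforming the result
    # gives its frequency response; this is image-independent.
    e_h = dft2(circ_grad(delta, 0, 1))
    e_v = dft2(circ_grad(delta, 1, 0))
    fx, fgh, fgv = dft2(x), dft2(g_h), dft2(g_v)
    fy = [[(fx[u][v] + lam * (e_h[u][v].conjugate() * fgh[u][v]
                               + e_v[u][v].conjugate() * fgv[u][v]))
           / (1 + lam * (abs(e_h[u][v]) ** 2 + abs(e_v[u][v]) ** 2))
           for v in range(cols)] for u in range(rows)]
    return [[val.real for val in row] for row in dft2(fy, inverse=True)]


x = [[10, 20, 30, 40], [15, 25, 35, 45], [20, 30, 40, 50], [25, 35, 45, 55]]
# If the target gradients are exactly those of X, the minimiser is X itself.
y = solve_luminance(x, circ_grad(x, 0, 1), circ_grad(x, 1, 0), lam=2.0)
```

Because the gradient operators have zero response at the zero frequency, the mean of the output always equals the mean of the initial estimate in this sketch; the gradient terms only reshape local contrast.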

For luminance fusion, the luminance images $Y_U$, $Y_N$, and $Y_O$ are available and the output image $Y$ is estimated. As stated earlier, $X$, $G_h$, and $G_v$ are obtained from the available image data and used in the solution provided in (2) to estimate $Y$. In this work, the initial estimate $X$ is chosen to be the average of the images captured at different exposure settings because the average luminance conveys the brightness of a scene as illustrated in Fig. 2. In other words, $X$ is set to be

$$X = \frac{Y_U + Y_N + Y_O}{3} \tag{3}$$

The horizontal ($G_h$) and the vertical ($G_v$) gradients are extracted from the luminance components using the clustering outcome obtained in the exposure selection step. In other words, the gradient in the dark, normal, and bright regions is obtained from the over-exposed, normal-exposed, and under-exposed images, respectively. For this purpose, three binary cluster maps ($R$) are generated which correspond to the three clusters obtained from the exposure selection step. For a pixel $k$ of the auto-exposed image $I_{AE}$, if its gray level belongs to cluster 1, 2, or 3 (the dark, normal, and bright areas, respectively), then the corresponding pixel in $R_1$, $R_2$, or $R_3$ is set to 1, where $R_1$, $R_2$, and $R_3$ represent the binary cluster maps for the under-exposed, normal-exposed, and over-exposed clusters, respectively. Hence, for a pixel $k$

$$R_{i,k} = \begin{cases} 1, & L_k = i \\ 0, & \text{otherwise} \end{cases} \qquad i = 1, 2, 3 \tag{4}$$

where $L_k$ denotes the cluster label of the pixel $k$ of $I_{AE}$. Then, $G_h$ and $G_v$ for the pixel $k$ are computed as follows:

$$\begin{aligned} G_{h,k} &= R_{1,k}\,(D_h * Y_O)_k + R_{2,k}\,(D_h * Y_N)_k + R_{3,k}\,(D_h * Y_U)_k \\ G_{v,k} &= R_{1,k}\,(D_v * Y_O)_k + R_{2,k}\,(D_v * Y_N)_k + R_{3,k}\,(D_v * Y_U)_k \end{aligned} \tag{5}$$

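The method described above causes sharp transitions on cluster borders since image gradients get multiplied by binary cluster maps. In order to avoid such sharp transitions, a low-pass filter is applied to every binary map. Here, the so-called guided filter Ref13 is applied to the binary masks to smooth the transitions at cluster borders. In other words, the smooth cluster maps ($\tilde{R}$) for the three clusters are obtained as follows:

$$\tilde{R}_i = \mathrm{GF}(R_i, X; r, \epsilon), \qquad i = 1, 2, 3 \tag{6}$$

where $\mathrm{GF}$ denotes the guided filter, $X$ acts as a guide, and $r$ and $\epsilon$ indicate the filter parameters. The smoothed cluster maps are then normalized. The normalized weights for a pixel $k$ are computed as

$$W_{i,k} = \frac{\tilde{R}_{i,k}}{\tilde{R}_{1,k} + \tilde{R}_{2,k} + \tilde{R}_{3,k}}, \qquad i = 1, 2, 3 \tag{7}$$

Once the normalized weights are computed, $G_h$ and $G_v$ are obtained as follows:

$$\begin{aligned} G_{h,k} &= W_{1,k}\,(D_h * Y_O)_k + W_{2,k}\,(D_h * Y_N)_k + W_{3,k}\,(D_h * Y_U)_k \\ G_{v,k} &= W_{1,k}\,(D_v * Y_O)_k + W_{2,k}\,(D_v * Y_N)_k + W_{3,k}\,(D_v * Y_U)_k \end{aligned} \tag{8}$$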
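The chain from cluster labels to merged gradients can be sketched on a single image row. This is a simplified illustration, not the implementation used in the app: a clamped moving average stands in for the guided filter, the data are 1-D, and the names `binary_maps`, `box_smooth`, `normalized_weights`, and `merge_gradients` are hypothetical.

```python
def binary_maps(labels):
    """One 0/1 map per cluster label (1 = dark, 2 = normal, 3 = bright)."""
    return [[1.0 if lab == c else 0.0 for lab in labels] for c in (1, 2, 3)]


def box_smooth(values, radius=1):
    """Moving average with edge clamping; a simple stand-in for the guided filter."""
    n = len(values)
    out = []
    for k in range(n):
        window = [values[min(max(j, 0), n - 1)]
                  for j in range(k - radius, k + radius + 1)]
        out.append(sum(window) / len(window))
    return out


def normalized_weights(maps):
    """Smooth each map, then rescale so the three weights sum to 1 at every pixel."""
    smooth = [box_smooth(m) for m in maps]
    totals = [sum(col) for col in zip(*smooth)]
    return [[s / t for s, t in zip(row, totals)] for row in smooth]


def merge_gradients(weights, grad_over, grad_normal, grad_under):
    """Dark pixels take gradients from the over-exposed image,
    normal pixels from the normal-exposed image,
    bright pixels from the under-exposed image."""
    sources = (grad_over, grad_normal, grad_under)
    return [sum(w[k] * g[k] for w, g in zip(weights, sources))
            for k in range(len(grad_over))]


labels = [1, 1, 1, 2, 2, 3, 3, 3]
weights = normalized_weights(binary_maps(labels))
merged = merge_gradients(weights, [4.0] * 8, [2.0] * 8, [1.0] * 8)
```

Away from cluster borders the merged gradient equals that of a single source image, while pixels next to a border receive a blend, which is what removes the sharp transitions.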

3.2 Chrominance fusion

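Unlike the luminance component, the chrominance components are fused through weighted averaging. The saturation value of a pixel is used as a weight to fuse its chrominance components. The saturation for a pixel $k$ is computed from its red, green, and blue components as follows:

| (9) |

Let $S_{U,k}$, $S_{N,k}$, and $S_{O,k}$ represent the saturation of the pixel $k$ in the under-exposed, normal-exposed, and over-exposed images $I_U$, $I_N$, and $I_O$, respectively. The normalized weights are obtained as follows:

$$w_{i,k} = \frac{S_{i,k}}{S_{U,k} + S_{N,k} + S_{O,k}}, \qquad i \in \{U, N, O\} \tag{10}$$

The chrominance components of the pixel $k$ are then fused according to these equations:

$$Cb_k = \sum_{i \in \{U,N,O\}} w_{i,k}\, Cb_{i,k}, \qquad Cr_k = \sum_{i \in \{U,N,O\}} w_{i,k}\, Cr_{i,k} \tag{11}$$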
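A small sketch of saturation-weighted chrominance averaging for one pixel location follows. The saturation measure used here, the HSI-style $1 - 3\min(R,G,B)/(R+G+B)$, is an assumption for illustration and may differ from the measure in (9). The equal-weight fallback for fully gray pixels and the names `saturation` and `fuse_chroma` are also hypothetical.

```python
def saturation(r, g, b):
    """HSI-style saturation in [0, 1]; an assumed stand-in for the paper's measure."""
    total = r + g + b
    return 0.0 if total == 0 else 1.0 - 3.0 * min(r, g, b) / total


def fuse_chroma(pixels):
    """pixels: (saturation, cb, cr) for the same location in each exposure."""
    total = sum(p[0] for p in pixels)
    if total == 0:
        # Assumption: if every exposure is gray at this pixel, weight them equally
        weights = [1.0 / len(pixels)] * len(pixels)
    else:
        weights = [p[0] / total for p in pixels]
    cb = sum(w * p[1] for w, p in zip(weights, pixels))
    cr = sum(w * p[2] for w, p in zip(weights, pixels))
    return cb, cr


under = (saturation(40, 20, 20), 120.0, 140.0)
normal = (saturation(120, 60, 60), 118.0, 150.0)
over = (saturation(250, 250, 250), 128.0, 128.0)   # clipped to white, no color left
cb, cr = fuse_chroma([under, normal, over])
```

In this example the over-exposed pixel has zero saturation and therefore contributes nothing, so the fused chrominance is the average of the other two exposures.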

4 Smartphone Implementation

A smartphone app is developed in this work to demonstrate the practicality of the introduced automatic exposure selection and fusion methods. This app is developed for smartphones running the Android operating system by utilizing the following software tools: Android Studio and the Android Native Development Kit (Android NDK), which allows incorporating C/C++ code into Android Studio. The entire processing is divided into two parts: the capturing part, which is done via Java code, and the processing part, which is done via C code. More details about the two parts are provided in the following two subsections.

4.1 Capturing

The capturing part involves taking the auto-exposed image $I_{AE}$ followed by taking the under-exposed, normal-exposed, and over-exposed images $I_U$, $I_N$, and $I_O$. A button named Capture is provided in the app to launch image capture. Once the Capture button is pressed by the user, the capturing process starts as described in Algorithm 1. The output of Algorithm 1 consists of three images in the YCbCr format, corresponding to the under-exposed, normal-exposed, and over-exposed conditions, as well as the clustering outcome in the form of three binary maps.

| Algorithm 1: Capturing |
| Input: None. |
| Output: Three YCbCr images and three binary maps. |
| 1. Perform an initial capture. |
| 2. Cluster the luminance component of this initial capture, record the 3 cluster means, and generate three binary maps. |
| 3. Initialize three CaptureRequests and set the CaptureRequest exposure times. |
| 4. Initialize a burst CaptureSession with the three defined CaptureRequests. |
| 5. Launch the CaptureSession and wait for the camera to acquire the three images. |
| 6. Pass the three images together with the three binary maps through JNI (Java Native Interface) to the C fusion code. |

4.2 Fusion part

When the three images are made available by the capturing part, the processing outlined in section 3, which is coded in C, is performed and an HDR image is generated as described in Algorithm 2. This image is then sent back to the Java environment to be saved in memory.

| Algorithm 2: Fusion |
| Input: Three images in YCbCr format and three binary maps. |
| Output: HDR image. |
| 1. Fuse the luminance component. |
| a. Obtain X via (3). |
| b. Calculate the horizontal and vertical gradients of the luminance components of the under-exposed, normal-exposed, and over-exposed images. |
| c. Apply the guided filter to get smooth cluster maps via (6) and normalize the weights via (7). |
| d. Acquire the gradients via (8). |
| e. Estimate Y via (2). |
| 2. Fuse the chrominance components. |
| a. Obtain the saturation for each pixel of the under-exposed, normal-exposed, and over-exposed images via (9) and calculate the normalized chrominance weights via (10). |
| b. Calculate Cb, Cr via (11). |
| 3. Combine Y, Cb, and Cr to form the output image in the YCbCr format and then convert to the RGB format. |
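The final conversion step of Algorithm 2 can be sketched as follows. The text does not state which YCbCr variant the app uses; this sketch assumes the full-range BT.601 (JFIF) definition for 8-bit values, and the function name `ycbcr_to_rgb` is illustrative.

```python
def ycbcr_to_rgb(y, cb, cr):
    """Full-range BT.601 (JFIF) YCbCr -> RGB for 8-bit values (assumed variant)."""
    r = y + 1.402 * (cr - 128.0)
    g = y - 0.344136 * (cb - 128.0) - 0.714136 * (cr - 128.0)
    b = y + 1.772 * (cb - 128.0)

    def clamp(v):
        # Round to the nearest integer and clip to the valid 8-bit range
        return int(min(max(round(v), 0), 255))

    return clamp(r), clamp(g), clamp(b)


gray = ycbcr_to_rgb(128, 128, 128)
reddish = ycbcr_to_rgb(76, 85, 255)
```

Clamping matters here because fused Y, Cb, and Cr values come from different operations (an optimization and a weighted average) and their combination can fall slightly outside the displayable range.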

5 Results and Discussion

This section provides the results of the experiments conducted to evaluate the introduced automatic exposure selection and fusion methods for generating HDR images.

An Android app was designed to capture 34 images of a scene at 8M size with fixed ISO, fixed lens position, and auto-white-balance enabled, across different exposure times. The 34 exposure times, varying from 0.5 sec to 1/4000 sec in steps of 1/3 standard exposure value, were used to generate a ground-truth irradiance map image of the scene using the method introduced in Ref8. The above process was repeated for 10 different indoor and outdoor scenes. The complete dataset is made available for public use at http://www.utdallas.edu/kehtar/ImageEnhancement/HDR/Scenes.
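As a quick check of the capture settings, 34 exposure times spaced 1/3 EV apart span 33 steps, i.e. 11 EV, so starting from 0.5 s the shortest time is $0.5 / 2^{11} = 1/4096$ s, which corresponds to the nominal 1/4000 s. The helper name `exposure_ladder` below is illustrative.

```python
def exposure_ladder(t_longest=0.5, count=34, step_ev=1.0 / 3.0):
    """Exposure times from t_longest downward, each step_ev stops shorter than the last."""
    return [t_longest * 2.0 ** (-i * step_ev) for i in range(count)]


times = exposure_ladder()
span_ev = (len(times) - 1) / 3.0   # total range covered, in exposure values
```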

The first set of experiments mentioned next addressed selecting the optimal parameters while the second and third sets of experiments addressed the performance and the results obtained by a modern smartphone, respectively.

5.1 Parameter selection

The parameters of the exposure fusion part included the regularization parameter $\lambda$ of the optimization in (2), as well as the guided filter parameters, i.e. the filter radius $r$ and the regularization parameter $\epsilon$ in (6). For the guided filter, since maximal smoothing within short distances was desired, the minimal value of 1 for $r$ together with a large value of 1 for $\epsilon$ were considered. The kernels and were used as the horizontal and vertical gradient operators, respectively.

The experiments revealed that the optimal $\lambda$ was highly dependent on the image size. To address this issue, resized copies of the images were generated by scaling the dimensions from 0.1 to 1 in steps of 0.1. The fusion algorithm was then applied to all the images, both the original and the resized ones. $\lambda$ was varied according to (12) and the widely used image quality measure Tone-Mapped Quality Index (TMQI) Ref24 was computed for every $\lambda$,

| (12) |

which ties $\lambda$ to the diameter of the input image through a scaling factor. The average TMQI over all the images for different scale values is shown in Fig. 3. This figure indicates that the scale value of 2 generated the best TMQI. Hence, $\lambda$ was set according to (12) with a scale value of 2 for the subsequent experiments.

Furthermore, in order to gain computational efficiency, another experiment was conducted by applying the guided filtering operation to down-sampled binary maps (instead of the original size) and TMQI was recomputed. The binary cluster maps were scaled to a smaller size and, after applying the guided filter, the filtering outcome was rescaled to the original size. All the other steps of the exposure fusion were kept the same. The dimensions of the binary cluster maps were lowered as follows:

$$\rho = 2^{-m} \tag{13}$$

where $\rho$ denotes the down-sampling ratio and $m$ represents a scaling factor. The average TMQI over all the images for different values of $m$ is shown in Fig. 4. As can be seen from this figure, TMQI dropped monotonically as $m$ was increased. However, since the decrease in TMQI was larger as $m$ varied from 3 to 4 compared to the previous decreases, $m = 3$ was chosen in the smartphone app. In other words, for computational efficiency reasons on a smartphone platform, the binary cluster maps were down-sampled by a factor of 8 before applying the guided filter.

5.2 Comparison

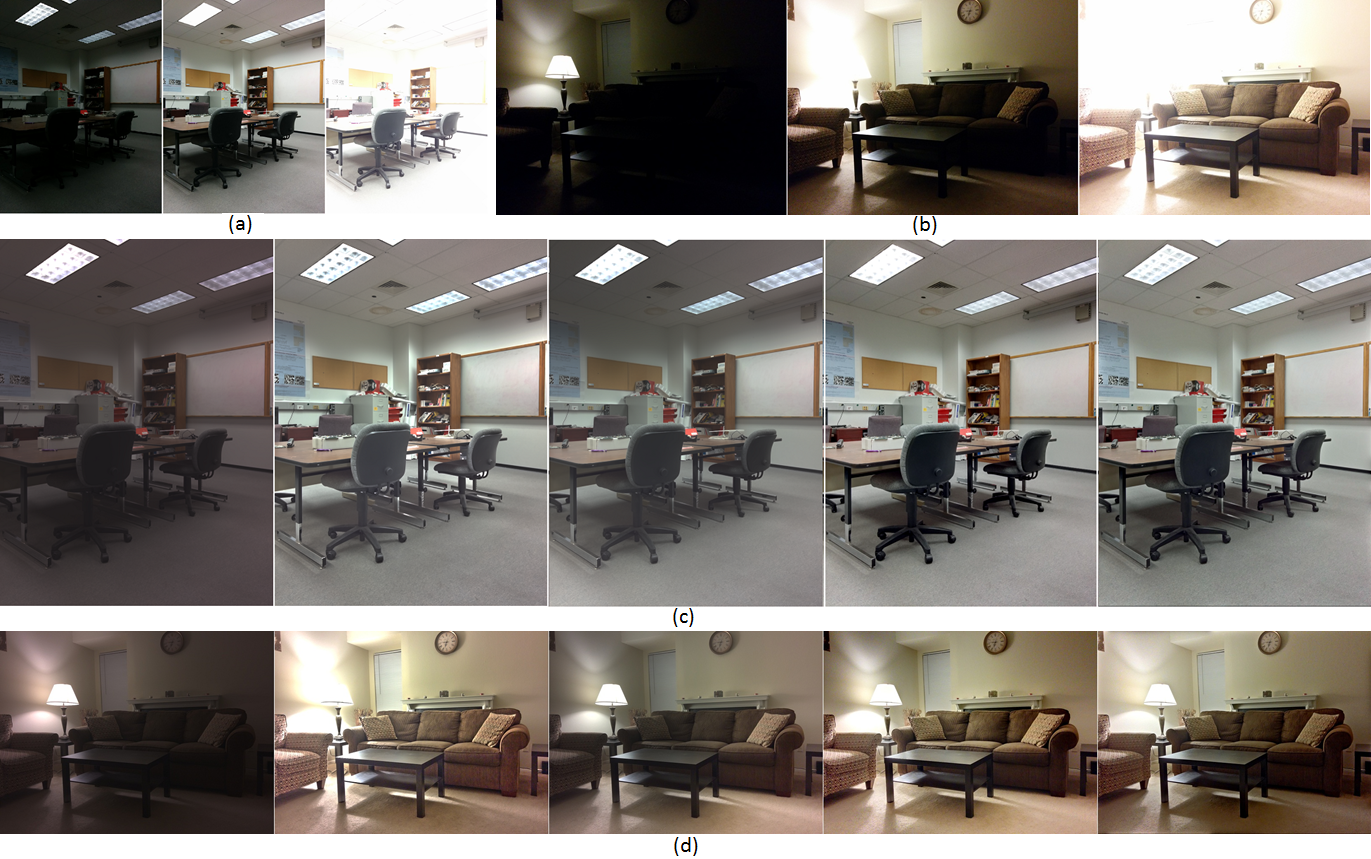

This section provides objective and subjective comparisons with several representative existing methods. The method introduced in this paper, named OB, together with iCam Ref10 , Weighted Least Squares (WLS) Ref11 , Guided Filtering (GF) Ref13 , and Exposure Fusion (EF) Ref15 methods were applied to the image sequences. Sample image sequences as well as the corresponding fusion results are displayed in Fig. 5. As can be seen from Figs. 5 (c) and (d), the fusion results using the EF and OB methods were found to be better compared to the other methods. However, the light region in Fig. 5 (c) appeared over-exposed and the wall region above the light in Fig. 5 (d) lost some color information when using the EF method whereas these effects did not occur when using the OB method.

The TMQI measure averaged over all 10 scenes is provided in Fig. 6. As can be seen from this figure, the performance of the EF and OB methods was found to be comparable and higher than that of the other methods.

The processing times of the aforementioned methods were measured by applying them to the images in the dataset resized to 1 megapixel. These times were found to be 2.67, 22.61, 1.07, 2.37, and 0.99 seconds for the iCam, WLS, GF, EF, and OB methods, respectively, when using a 2.66 GHz machine with all the methods coded in Matlab. It is also worth mentioning that the processing times for the iCam, WLS, and GF methods, which all fall under the tonal mapping category of HDR, only reflect the time associated with the tonal mapping and do not include the time associated with the scene irradiance map generation. Since the TMQI measure for the iCam, WLS, and GF methods was found to be lower than that for the EF and OB methods, the processing time comparison stated below was limited to the EF and OB methods.

The computational complexities of the EF and OB methods are $O(c_{EF} N^2 \log N)$ and $O(c_{OB} N^2 \log N)$, respectively, where $c_{EF}$ and $c_{OB}$ denote constants and $N$ denotes the image size (height or width). Although the two methods have the same big $O$, the constants $c_{EF}$ and $c_{OB}$ have a noticeable impact on the processing time as $N$ increases. Fig. 7 shows the average processing times of the EF and OB methods over the resized versions of the captured scenes in terms of the image size expressed in pixels. The processing times were measured for $N$ = 64, 128, 256, 512, 1024, 2048. In addition, $c N^2 \log N$ curves were fitted to the processing times, generating the values of 8.47e-8 and 2.25e-7 for the constants $c_{OB}$ and $c_{EF}$, respectively. As can be seen from this figure, the two dashed lines fit the processing times closely. As a result, the processing time of the OB method is on average about 0.38 times that of the EF method (the ratio of the two fitted constants), that is the OB method is about 60% faster than the EF method. More computational gain can be achieved by noting that the Fourier transforms of $D_h$ and $D_v$ in (2) are input image-independent and can be computed offline and stored in memory.
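The fitted constants can be cross-checked against the 1-megapixel timings reported earlier (2.37 s for EF and 0.99 s for OB). The sketch below assumes a model of the form $c\,N^2 \log_2 N$ and assumes that the smaller constant belongs to the OB method, which is what those timings suggest; the base of the logarithm and the name `predicted_time` are assumptions.

```python
import math

C_OB = 8.47e-8   # fitted constant, assumed to belong to the OB method
C_EF = 2.25e-7   # fitted constant, assumed to belong to the EF method


def predicted_time(c, n):
    """Model c * N^2 * log2(N) for an N x N image, in seconds."""
    return c * n * n * math.log2(n)


for n in (64, 128, 256, 512, 1024, 2048):
    print(n, round(predicted_time(C_OB, n), 3), round(predicted_time(C_EF, n), 3))

ratio = C_OB / C_EF   # OB time as a fraction of EF time under this model
```

Under these assumptions the model gives roughly 0.89 s (OB) and 2.36 s (EF) at $N = 1024$, close to the measured 1-megapixel values, and a ratio of about 0.38.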

Although the introduced solution is intended for use in scenes that do not change during the time that different exposure images are captured, the exposure selection and capturing parts are designed and implemented in such a way that there is minimal delay in capturing the three exposure images. Thus, in practice, one experiences little or no misalignment between the three exposure images due to changes that may occur in a scene. Furthermore, the exposure fusion part is tolerant to slight misalignments since it extracts the fusion information locally from the auto-exposed image rather than the other exposure images. An example of HDR images generated by the EF and OB methods in the presence of misalignments is shown in Fig. 8. As can be seen from this figure, the ghost effect is noticeable around the cables and the edges of the monitor and the mouse in the image generated by the EF method, while no ghost artifacts are present in the image generated by the OB method. It is worth noting that it is possible to use computationally efficient image registration techniques, e.g. Ref4, Ref25, to align different exposure images before performing fusion for situations where severe misalignments may occur.

5.3 Smartphone results

In this subsection, the actual smartphone implementation outcome of the OB method is reported. This HDR app can be downloaded from http://www.utdallas.edu/kehtar/HDRApp.apk and run on an Android smartphone. A sample exposure selection and fusion outcome obtained by running the developed app on a smartphone is shown in Fig. 9 together with the corresponding under-exposed, normal-exposed, and over-exposed images.

The average processing time for images of size pixels on a modern smartphone was found to be about 1.8s. Note that the intention here has been to show the practicality of running the introduced solution on smartphones. It should be realized that it is possible to achieve lower processing times as the app code running on the smartphone does not utilize vector processing on the NEON coprocessor present on nearly all modern smartphones.

6 Conclusion

A method for automatic exposure selection and a method for fusion of exposure bracket images were introduced in this paper. The exposure selection was done by analyzing the brightness of a scene via clustering and the camera characteristic function. For exposure fusion, the luminance and chrominance of three bracket images were blended via an optimization formulation and weighted averaging, respectively. The smartphone implementation and comparisons with the existing methods have shown the practicality and performance of the introduced solution.

Appendix A Optimization Solution

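This appendix provides the solution of the optimization problem with only one gradient term. The derivation for two terms is straightforward and not included here to save space. The optimization formulation for one gradient term is given by

$$\min_{Y}\ \|Y - X\|_F^2 + \lambda\,\|D * Y - G\|_F^2 \tag{14}$$

where $G$ represents the gradient of $Y$ and $D$ indicates the gradient operator. In vector form, Equation (14) can be written as:

$$\min_{y}\ \|y - x\|_2^2 + \lambda\,\|Cy - g\|_2^2 \tag{15}$$

where $y$, $x$, and $g$ represent the column vector versions of $Y$, $X$, and $G$, respectively, and $C$ denotes the block-circulant matrix representation of $D$. By taking the derivative with respect to $y$ and setting it to zero, the following solution is obtained

$$y = (I + \lambda C^T C)^{-1}(x + \lambda C^T g) \tag{16}$$

where $I$ denotes the identity matrix. Since $C$ is a block-circulant matrix, it can be represented in diagonal form as:

$$C = W^{-1} E W \tag{17}$$

where $E$ is the diagonal version of $C$ and $W$ is the DFT (Discrete Fourier Transform) operator. The diagonal values of $E$ correspond to the DFT coefficients of $D$ ($D$ should be zero-padded properly before applying the DFT). Since $C$ is real, $C^T = W^{-1} E^{*} W$, where $E^{*}$ denotes the complex conjugate of $E$. Hence, (16) can be rewritten as:

$$y = (I + \lambda W^{-1} E^{*} E W)^{-1}(x + \lambda W^{-1} E^{*} W g) \tag{18}$$

By multiplying both sides of (18) by $W$, the following equation results

$$W y = (I + \lambda E^{*} E)^{-1}(W x + \lambda E^{*} W g) \tag{19}$$

Since $Wy$, $Wx$, and $Wg$ correspond to the DFT of $y$, $x$, and $g$, respectively, (19) can be stated as follows:

$$\mathcal{F}(Y) = \frac{\mathcal{F}(X) + \lambda\,\overline{\mathcal{F}(D)}\,\mathcal{F}(G)}{1 + \lambda\,|\mathcal{F}(D)|^2} \tag{20}$$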

References

- (1) Mann, S., Picard, R.: Being ‘undigital’ with digital cameras: extending dynamic range by combining differently exposed pictures. In: IS&T, 48th annual conference, USA, pp. 422-428 (1995).

- (2) Reinhard, E., Heidrich, W., Debevec, P., Pattanaik, S., Ward, G., Myszkowski, K.: High Dynamic Range Imaging, 2nd edn. Morgan Kaufmann Publishers, San Francisco (2010).

- (3) Barakat, N., Hone, A.N., Darcie, T.E.: Minimal-bracketing sets for high-dynamic-range image capture. IEEE Trans. Image Process. 17(10), 1864-1875 (2008).

- (4) Gupta, M., Iso, D., Nayar, S.K.: Fibonacci exposure bracketing for high dynamic range imaging. In: IEEE Intl. Conf. Computer Vision (ICCV), Australia, pp. 1473-1480 (2013).

- (5) Huang, K., Chiang, J.: Intelligent exposure determination for high quality HDR image generation. In: IEEE Intl. Conf. Image Process. (ICIP), Australia, pp. 3201-3205 (2013).

- (6) Pourreza-Shahri, R., Kehtarnavaz, N.: Automatic exposure selection for high dynamic range photography. In: IEEE Intl. Conf. Consumer Electronics (ICCE), USA, pp. 469-497 (2015).

- (7) Pourreza-Shahri, R., Kehtarnavaz, N.: Exposure bracketing via automatic exposure selection. In: IEEE Intl. Conf. Image Proc. (ICIP), Canada, pp. 320-323 (2015).

- (8) Debevec, P., Malik, J.: Recovering high dynamic range radiance maps from photographs. In: 24th Annual Conf. Computer Graphics and Interactive Techniques (SIGGRAPH), USA, pp. 369-378 (1997).

- (9) Cvetković, S., Klijn, J., With, P.H.N.: Tone-mapping functions and multiple-exposure techniques for high dynamic-range images. IEEE Trans. Consumer Electron. 54(2), 904-911 (2008).

- (10) Kuang, J., Johnson, G.M., Fairchild, M.D.: iCAM06: A refined image appearance model for HDR image rendering. J. Visual Communication 18, 406-414 (2007).

- (11) Farbman, Z., Fattal, R., Lischinski, D., Szeliski, R.: Edge-preserving decompositions for multi-scale tone and detail manipulation. ACM Trans. Graphics 21(3), 249–256 (2008).

- (12) Durand, F., Dorsey, J.: Fast bilateral filtering for the display of high-dynamic-range images. ACM Trans. Graphics 21(3), 257–266 (2002).

- (13) He, K., Sun, J., Tang, X.: Guided image filtering. IEEE Trans. Pattern Analysis and Machine Intelligence 35(6), 1397-1409 (2013).

- (14) Mertens, T., Kautz, J., Van Reeth, F.: Exposure fusion: a simple and practical alternative to high dynamic range photography. Computer Graphics Forum 28(1), 161–171 (2009).

- (15) Li, S., Kang, X.: Fast multi-exposure image fusion with median filter and recursive filter. IEEE Trans. Consumer Electron. 58(2), 626–632 (2012).

- (16) Song, M., Tao, D., Chen, C., Bu, J., Luo, J., Zhang, C.: Probabilistic exposure fusion. IEEE Trans. Image Process. 21(1), 341–357 (2012).

- (17) Zhang, W., Cham, W.-K.: Gradient-directed multi-exposure composition. IEEE Trans. Image Process. 21(4), 2318–2323 (2012).

- (18) Shen, R., Cheng, I., Shi, J., Basu, A.: Generalized random walks for fusion of multi-exposure images. IEEE Trans. Image Process. 20(12), 3634-3646 (2011).

- (19) Shen, R., Cheng, I., Basu, A.: QoE-based multi-exposure fusion in hierarchical multivariate gaussian CRF. IEEE Trans. Image Process. 22(6), 2469–2478 (2013).

- (20) Xu, L., Du, J., Zhang, Z.: Feature-based multiexposure image-sequence fusion with guided filter and image alignment. J. Electron. Imaging 24(1), 013022 (2015).

- (21) Hu, J., Gallo, O., Pulli, K.: Exposure stacks of live scenes with hand-held cameras. In: Europ. Conf. Computer Vision (ECCV), Italy, pp. 499-512 (2012).

- (22) Tico, M., Gelfand, N., Pulli, K.: Motion-blur-free exposure fusion. In: IEEE Intl. Conf. Image Process. (ICIP), China, pp. 3321-3324 (2010).

- (23) Yeganeh, H., Wang, Z.: Objective quality assessment of tone-mapped images. IEEE Trans. Image Process. 22(2), 657-667 (2013).

- (24) Ward, G.: Fast, robust image registration for compositing high dynamic range photographs from handheld exposures. J. Graphic Tools 8(2), 17-30 (2003).