Automated Federated Learning in Mobile Edge Networks — Fast Adaptation and Convergence

Abstract

Federated Learning (FL) can be used in mobile edge networks to train machine learning models in a distributed manner. Recently, FL has been interpreted within a Model-Agnostic Meta-Learning (MAML) framework, which brings FL significant advantages in fast adaptation and convergence over heterogeneous datasets. However, existing research simply combines MAML and FL without explicitly addressing how much benefit MAML brings to FL and how to maximize such benefit over mobile edge networks. In this paper, we quantify the benefit from two aspects: optimizing FL hyperparameters (i.e., sampled data size and the number of communication rounds) and resource allocation (i.e., transmit power) in mobile edge networks. Specifically, we formulate the MAML-based FL design as an overall learning time minimization problem, under the constraints of model accuracy and energy consumption. Facilitated by the convergence analysis of MAML-based FL, we decompose the formulated problem and then solve it using analytical solutions and the coordinate descent method. With the obtained FL hyperparameters and resource allocation, we design a MAML-based FL algorithm, called Automated Federated Learning (AutoFL), that is able to conduct fast adaptation and convergence. Extensive experimental results verify that AutoFL outperforms other benchmark algorithms regarding the learning time and convergence performance.

Index Terms:

Fast adaptation and convergence, federated learning, model-agnostic meta-learning, mobile edge networks

I Introduction

In recent years, modern mobile user equipments (UEs) such as smartphones and wearable devices have been equipped with powerful sensing and computing capabilities [1]. This gives them access to a wealth of data suitable for model learning, which brings countless opportunities for meaningful applications such as Artificial Intelligence (AI) medical diagnosis [2] and air quality monitoring [3]. Traditionally, learning models requires data to be processed in a cloud data center [4]. However, due to the long distance between the devices where data are generated and the servers in data centers, cloud-based Machine Learning (ML) for mobile UEs may incur unacceptable latencies and communication overhead. Therefore, Mobile Edge Computing (MEC) [5, 6] has been proposed to facilitate the deployment of servers near the base station (BS) in mobile edge networks, so as to bring intelligence to the network edge.

In mobile edge networks, a traditional ML paradigm inevitably uploads raw data from mobile UEs to a server, which could be deployed near the BS, for model learning. However, the unprecedented amount of data created by mobile UEs is private in nature, leading to increasing concerns about data security and user privacy. To address this issue, Federated Learning (FL) has been proposed [7] as a new ML paradigm. FL in mobile edge networks refers to training models across multiple distributed UEs without ever uploading their raw data to the server. In particular, UEs compute local updates to the current global model based on their own local data; these updates are then aggregated and fed back by an edge server, so that all UEs have access to the same global model for their next local updates. Such a procedure constitutes one communication round and is repeated until a certain model accuracy is reached.

Despite its promising benefits, FL also comes with new challenges in practice. In particular, the datasets across UEs are heterogeneous. Not only does the number of data samples generated by UEs vary, but these data samples are usually not independent and identically distributed (non-i.i.d.). Learning from such heterogeneous data is not easy, because conventional FL algorithms usually develop a common model for all UEs [8–10], so that the global model obtained by minimizing the average loss could perform arbitrarily poorly once applied to the local dataset of a specific UE. That is, the global model derived from conventional FL algorithms may adapt weakly to local UEs. Such weak adaptation further restricts the convergence rate of the FL algorithms.

Multiple techniques are emerging as promising solutions to the data heterogeneity problem, such as adding user context [8], transfer learning [9], multi-task learning [10], and Model-Agnostic Meta-Learning (MAML) [11]. Of all these techniques, MAML is the only one that not only addresses the heterogeneous data problem, but also greatly speeds up the FL learning process. MAML is a well-known meta-learning [12] approach that learns from past experience to adapt to new tasks much faster. Such adaptations make it possible for MAML to develop well-behaved user-specific models. More specifically, MAML aims to find a sensitive initial point, learned from past experience, from which fast adaptation requires only a few data points on each UE. With the learned initial model, MAML dramatically speeds up the learning process by replacing hand-engineered algorithms with an automated, data-driven approach [13]. Fortunately, in both MAML and FL, existing algorithms use a variant of the gradient descent method locally and send an overall update to a coordinator to update the global model. This similarity makes it possible to interpret FL within a MAML framework [14, 15, 16, 17]. Such a simple MAML-based FL is termed Personalized Federated Learning (PFL), and the algorithm that realizes PFL is termed Personalized Federated Averaging (Per-FedAvg). However, Per-FedAvg is a pure ML algorithm, and how to apply it in practical mobile edge networks remains unclear. Recently, several attempts have been made to study the implementation of Per-FedAvg in practice. The work in [18] proposes a framework to execute Per-FedAvg for intelligent IoT applications without considering Per-FedAvg’s strength in saving learning time. The work in [19], although it aims to achieve fast learning for IoT applications at the edge, does not consider the resource allocation in a practical mobile edge network. Therefore, to what degree a MAML-based FL algorithm expedites FL in mobile edge networks, and under what conditions such benefit can be achieved, remain unexplored.

In order to quantify the benefit MAML brings to FL in mobile edge networks, we consider two aspects to minimize the overall learning time: optimizing learning-related hyperparameters and optimizing resource allocation. On the one hand, the typical FL hyperparameters, including the sampled data sizes across UEs and the number of communication rounds, have a significant impact on the overall learning time, and thus need to be carefully specified. On the other hand, the resource allocation should account for UEs’ practical wireless communication environments and limited battery lifetimes. In particular, due to the random channel gain and noise over wireless channels, the transmit power allocated to a UE decides whether the updates transmitted from that UE can be successfully decoded by the edge server. Restricted by the limited battery lifetime, it also determines whether the remaining energy on that UE is sufficient to support its local training.

It is non-trivial to solve the formulated optimization problem considering the above two quantitative dimensions, given that the relationship between the variables (i.e., the sampled data sizes across UEs, the number of communication rounds, and the transmit power on UEs) and the model accuracy is implicit. Therefore, we start with the convergence analysis of the MAML-based FL algorithm, by which the three variables are bounded as functions of the model accuracy $\epsilon$. After that, the formulated optimization problem can be approximately decoupled into three sub-problems, each of which accounts for one of the three variables. Specifically, solving the first sub-problem gives insight into the required number of communication rounds. As for the other two sub-problems, we use the coordinate descent method [20] to compute the sampled data size and the transmit power of all UEs iteratively. In other words, we first give an initial value of the transmit power of UEs and use it to compute the sampled data sizes in the second sub-problem; then, with the attained sampled data sizes, we compute the transmit power in the third sub-problem. This process repeats until a certain model accuracy is achieved.

The solution to the optimization problem guides the design of Automated Federated Learning (AutoFL). AutoFL uses the results derived from the optimization problem to design the learning process, thereby quantifying as well as maximizing the benefit MAML brings to FL over mobile edge networks. More specifically, in each round, the BS first sends the current model parameter to a random subset of UEs. According to the given model accuracy $\epsilon$, the sampled data sizes and the transmit power of the selected UEs are determined by our proposed solution. Each selected UE then trains its local model with the determined sampled data size and transmits the local update to the edge server with the determined transmit power. The server receives local updates from the selected UEs and decides whether these updates can be successfully decoded. The successfully decoded updates are then aggregated to update the global model. Such a communication round is executed repeatedly until the model accuracy $\epsilon$ is achieved. In this way, AutoFL is able to inherit the advantages of MAML over mobile edge networks, thereby conducting model learning with fast adaptation and convergence.

To summarize, in this paper we make the following contributions:

• We provide a comprehensive problem formulation for the MAML-based FL design over mobile edge networks, which accounts for the impact of FL hyperparameters and network parameters on the model accuracy and learning time at the same time. Specifically, we jointly optimize the UEs’ sampled data size and transmit power, as well as the number of communication rounds, to quantify and maximize the benefit MAML brings to FL, by which the overall learning time is minimized under the constraints of model accuracy and energy consumption.

• We analyse the convergence of the MAML-based FL algorithm as the first step to solve the formulated problem. The convergence analysis makes the relationships between the optimization variables and the model accuracy explicit. In particular, it characterizes the sampled data size, the transmit power, and the number of communication rounds as functions of the model accuracy $\epsilon$.

• Applying the results of the convergence analysis, we decouple the formulated problem into three sub-problems. By solving these sub-problems, the number of communication rounds can be characterized using a closed-form solution, while the sampled data sizes and the transmit power of all UEs in each round can be obtained using the coordinate descent method. With the optimized hyperparameters and resource allocation, we further propose AutoFL with fast adaptation and convergence.

• By conducting extensive experiments with the MNIST and CIFAR-10 datasets, we demonstrate the effectiveness and advantages of AutoFL over Per-FedAvg and FedAvg, two baseline FL algorithms, in terms of learning time, model accuracy, and training loss.

The rest of this paper is organized as follows. We first give the system model and problem formulation in Section II. Then we give the convergence analysis to make the formulated problem tractable in Section III. We recast the optimization problem and then propose the solutions to guide the design of AutoFL in Section IV. Extensive experimental results are presented and discussed in Section V. Finally, we conclude this paper in Section VI.

II System Model and Problem Formulation

Consider a typical mobile edge network with an edge server co-deployed with a BS and $n$ UEs indexed by $i \in \{1, \ldots, n\}$, as illustrated in Fig. 1. In this section, we first explain why the FL problem can be interpreted within the MAML framework. Based on this framework, we introduce our system model and problem formulation.

II-A Interpreting FL within the MAML framework

In FL, we consider a set of $n$ UEs which are connected with the server via the BS, where each UE has access only to its local data [7]. For a sample data point $(x, y)$ with input $x$, the goal of the server is to find the model parameter $w$ that characterizes the output $y$ with loss function $f(w)$, such that the value of $f(w)$ is minimized. More specifically, if we define $f_i(w)$ as the loss function of UE $i$, the goal of the server is to solve

$$\min_{w \in \mathbb{R}^d} f(w) := \frac{1}{n} \sum_{i=1}^{n} f_i(w). \tag{1}$$

In particular, for each UE $i$, we have

$$f_i(w) := \mathbb{E}_{(x, y) \sim p_i}\left[ l_i(w; x, y) \right], \tag{2}$$

where $l_i(w; x, y)$ is the error between the true label $y$ and the prediction of model $w$ using input $x$. Each UE $i$ has a local dataset $\mathcal{D}_i$ with $D_i = |\mathcal{D}_i|$ data samples. Since the datasets captured by the UEs are naturally heterogeneous, the probability distribution $p_i$ of $\mathcal{D}_i$ is not identical across UEs.

MAML, on the other hand, is one of the most attractive techniques in meta-learning. MAML is proposed to learn an initial model that adapts quickly to a new task through one or more gradient steps with only a few data points from that new task. Each task can be regarded as an object with its own dataset to learn, just like a UE in FL. MAML allows us to replace hand-engineered algorithms with data-driven approaches to learn initial parameters automatically.

To show how to exploit the fundamental idea behind the MAML framework [11] to design an automated variant of the FL algorithm, let us first briefly recap the MAML formulation. In MAML, if we regard the tasks as UEs and assume that each UE takes the initial model and updates it using one step of gradient descent with respect to its own loss function, problem (1) then changes to

$$\min_{w \in \mathbb{R}^d} F(w) := \frac{1}{n} \sum_{i=1}^{n} f_i\big(w - \alpha \nabla f_i(w)\big), \tag{3}$$

where $\alpha$ is the learning rate at a UE. For UE $i$, its optimization objective can be expressed as

$$F_i(w) := f_i\big(w - \alpha \nabla f_i(w)\big). \tag{4}$$

Footnote 1: A more general case is to perform multiple steps of gradient descent. However, this would incur the expensive cost of computing multiple Hessians. Therefore, for simplicity, throughout the paper we consider only a single step of gradient descent.

Such a transformation from problem (1) to (3) implies that the FL problem can be interpreted within the MAML framework. The FL algorithm proposed to solve (3) is termed as Personalized Federated Averaging (Per-FedAvg) [21, 14]. Per-FedAvg is inspired by FedAvg, which is proposed in [7] as a classic and general FL algorithm to solve (1) in a distributed manner.

Per-FedAvg is summarized in Algorithm 1. In each round, the central BS randomly picks a set of UEs and sends them the current model parameter. Each selected UE first adapts the global model to its local data and obtains an intermediate parameter. Then, starting from this intermediate parameter, the UE updates its local model using one or more steps of gradient descent. The resulting local model parameters are sent to the server for model aggregation, and the server updates the global model (the meta model) by aggregating the received local models. This process repeats in subsequent rounds until a certain model accuracy is achieved or a predefined maximum number of rounds is reached.
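The round structure described above can be illustrated with a minimal sketch. It is not the authors' Algorithm 1: it assumes scalar quadratic local losses $f_i(w) = \frac{1}{2} a_i (w - c_i)^2$ so that gradients and Hessians are available in closed form, a single outer gradient step per UE, and plain averaging at the server. The names `per_fedavg_round`, `ues`, `frac`, and `beta` are hypothetical.

```python
import random


def per_fedavg_round(w, ues, alpha, beta, frac, rng):
    """One Per-FedAvg-style round on scalar quadratic losses (illustrative only).

    Each hypothetical UE is a pair (a, c) with f_i(w) = 0.5 * a * (w - c)**2,
    so grad f_i(w) = a * (w - c) and the Hessian is the constant a.
    """
    num_selected = max(1, int(frac * len(ues)))
    selected = rng.sample(ues, num_selected)
    local_models = []
    for a, c in selected:
        # Inner step: adapt the global model to the local loss.
        theta = w - alpha * a * (w - c)
        # Outer step: gradient of F_i(w) = f_i(w - alpha * grad f_i(w)) via the chain rule.
        meta_grad = (1.0 - alpha * a) * a * (theta - c)
        local_models.append(w - beta * meta_grad)
    # Server aggregation (assumed here to be a simple average of the local models).
    return sum(local_models) / len(local_models)


rng = random.Random(0)
ues = [(1.0, -1.0), (2.0, 0.5), (0.5, 3.0), (1.5, 1.0)]
w = 0.0
for _ in range(200):
    w = per_fedavg_round(w, ues, alpha=0.1, beta=0.1, frac=0.5, rng=rng)
```

With full participation (`frac=1.0`) each round reduces to one gradient descent step on the meta-objective in (3), so under these assumptions the iterate approaches the minimizer of the averaged meta-loss.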

Per-FedAvg is a MAML-based FL algorithm, which suggests a general approach to using the MAML method for solving the data heterogeneity problem in FL. It is proposed with a focus on personalization in FL, which is a natural feature inherited from MAML. Besides personalization, MAML is also a few-shot learning approach, as only a few samples are required to learn new skills after just minutes of experience. Per-FedAvg inherits this feature of MAML as well, and can therefore adapt quickly from only a few samples. To what degree this fast adaptation benefits FL, and under what conditions such benefit can be achieved, is exactly what this paper is about.

II-B Machine Learning Model

We consider the above described MAML-based FL. In detail, we concentrate on the situation where UEs communicate in a synchronous manner, so as to avoid using outdated parameters for global model update and make high-quality refinement in each round. Meanwhile, for each UE, we consider the case that only one step of stochastic gradient descent (SGD) is performed, following the same setting as [11].

For the MAML-based FL under consideration, our goal is to optimize the initial model using only a few data points at each UE. Hence, we only obtain an estimate of the desired gradient with SGD. Here, the desired gradient on a UE is computed using all data points in its local dataset, while the estimated gradient on that UE is computed using SGD with a sampled dataset. Note that different sampled datasets, distinguished by their superscripts, are used to estimate the gradients and the Hessian involved in the update. The size of a sampled dataset is referred to as the sampled data size.

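More specifically, in order to solve (3), each UE $i$ computes the desired gradient in round $k$ as follows:

$$\nabla F_i(w_k) = \big(I - \alpha \nabla^2 f_i(w_k)\big) \nabla f_i\big(w_k - \alpha \nabla f_i(w_k)\big). \tag{5}$$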

At every round, computing the gradient by using all data points of UE is often computationally expensive. Therefore, we take a subset from to obtain an unbiased estimate for , which is given by

| (6) |

Similarly, the outer gradient update and Hessian update in (5) can be replaced by their unbiased estimates and respectively. Here, and are sampled from as well. Therefore, using SGD, we can finally obtain an estimated local gradient on UE in round , which is given by

| (7) |

It is worth noting that is a biased estimator of . This is because the stochastic gradient contains another stochastic gradient inside. Hence, to improve the estimate accuracy, used for inner gradient update is independent from the sampled datasets and used for outer gradient and Hessian update respectively. Meanwhile, in this paper we assume and are also independent from each other.
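As a hedged illustration of the estimator discussed above, the following sketch draws three independent mini-batches for the inner gradient, the outer gradient, and the Hessian. It assumes a scalar least-squares loss $l(w; x, y) = \frac{1}{2}(wx - y)^2$, for which the per-sample gradient is $(wx - y)x$ and the per-sample Hessian is $x^2$. The function names and the batch names `d_in`, `d_out`, and `d_h` are hypothetical and do not reproduce the paper's notation.

```python
import random


def grad(w, batch):
    """Average gradient of 0.5 * (w * x - y)**2 over a batch of (x, y) pairs."""
    return sum((w * x - y) * x for x, y in batch) / len(batch)


def hess(batch):
    """Average Hessian of the same loss; it does not depend on w."""
    return sum(x * x for x, _ in batch) / len(batch)


def estimate_meta_grad(w, data, alpha, size, rng):
    """Stochastic meta-gradient using three independently drawn mini-batches."""
    d_in = rng.sample(data, size)   # inner gradient step
    d_out = rng.sample(data, size)  # outer gradient
    d_h = rng.sample(data, size)    # Hessian
    theta = w - alpha * grad(w, d_in)
    return (1.0 - alpha * hess(d_h)) * grad(theta, d_out)


def exact_meta_grad(w, data, alpha):
    """Meta-gradient computed with the full local dataset, as in (5)."""
    theta = w - alpha * grad(w, data)
    return (1.0 - alpha * hess(data)) * grad(theta, data)


rng = random.Random(0)
data = [(x, 2.0 * x + rng.gauss(0.0, 0.1)) for x in (rng.uniform(-1.0, 1.0) for _ in range(50))]
estimate = estimate_meta_grad(0.0, data, alpha=0.05, size=10, rng=rng)
```

Because the outer stochastic gradient is evaluated at a point that itself depends on a stochastic gradient, the estimate is generally biased for small batches; when the batch size equals the dataset size, it coincides with the full-data value.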

II-C Communication and Computation Model

When exploring the MAML-based FL in realistic mobile edge networks, the communication and computation model should be captured carefully. Particularly, we consider that UEs access the BS through a channel partitioning scheme, such as orthogonal frequency division multiple access (OFDMA). In order to successfully upload the local update to the BS, two conditions need to be satisfied: (1) the UE is selected, and (2) the transmitted local update is successfully decoded. In this respect, we first introduce as a selection indicator, where indicates the event that UE is chosen in round , and otherwise. Next, we characterize the transmission quality of the wireless links. For the signals transmitted from UE , the SNR received at the BS can be expressed as , where is the transmit power of UE during round , is the path loss exponent. is the channel gain between UE and the BS with being the distance between UE and the BS and being the small-scale channel coefficient. is the noise power spectral density. In order for the BS to successfully decode the local update from UE , it is required that the received SNR exceeds a decoding threshold , i.e., . Assume that the small-scale channel coefficients across communication rounds follow Rayleigh distribution, then according to [22], the update success transmission probability, defined as , can be estimated as follows:

| (8) |

where . Then the achievable uplink rate of UE transmitting its local update to the BS in round is given by

| (9) |

where is the bandwidth allocated to each UE. Based on , the uplink transmission delay of UE in round can be specified as follows:

| (10) |

where is the size of in number of bits. Since the transmit power of the BS is much higher than that of the UEs, the downlink transmission delay is much smaller than the uplink transmission delay. Meanwhile, we care more about the transmit power allocation on individual UEs rather than that on the BS, so here we ignore the downlink transmission delay for simplicity.

Further, we calculate the computation time for each UE, which is consumed for computing the local update. Given the CPU-cycle frequency of UE by , the computation time of UE is expressed as

| (11) |

In (11), denotes the number of CPU cycles for UE to execute one sample of data points and denotes the sampled data size of UE in round .

Based on the uplink transmission delay and the computation time, we then give the energy consumption of each UE in a round, which consists of two parts: (1) the energy for transmitting the local update and (2) the energy for computing the local update. The energy consumption of each UE in a round can be computed as follows [23, 24]:

| (12) |

where is the effective capacitance coefficient of UE ’s computing chipset. From (12), we observe that in round for each UE , both the sampled data size and transmit power have significant impacts on its energy consumption. This observation motivates us to jointly consider these two variables when quantifying the benefit MAML brings to FL in mobile edge networks.
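The per-UE quantities in this subsection can be sketched as follows. Since the displayed equations are not reproduced here, the concrete forms are assumptions based on commonly used models: a Shannon-type uplink rate, a noise power equal to the noise power spectral density times the bandwidth, and a computation energy of half the effective capacitance times the number of CPU cycles times the squared CPU frequency. All function and parameter names are hypothetical.

```python
import math


def received_snr(power_w, fading_gain, distance_m, path_loss_exp, noise_psd, bandwidth_hz):
    """SNR at the BS (assumed form: p * c * d^(-kappa) / (N0 * B))."""
    return power_w * fading_gain * distance_m ** (-path_loss_exp) / (noise_psd * bandwidth_hz)


def uplink_rate(bandwidth_hz, snr):
    """Achievable uplink rate in bits per second (assumed Shannon form)."""
    return bandwidth_hz * math.log2(1.0 + snr)


def comm_time(update_bits, rate_bps):
    """Uplink transmission delay of one local update."""
    return update_bits / rate_bps


def comp_time(cycles_per_sample, num_samples, cpu_hz):
    """Computation time for processing the sampled data once."""
    return cycles_per_sample * num_samples / cpu_hz


def round_energy(power_w, t_com, capacitance, cycles_per_sample, num_samples, cpu_hz):
    """Transmission energy plus computation energy of one UE in one round."""
    e_com = power_w * t_com
    e_cmp = 0.5 * capacitance * cycles_per_sample * num_samples * cpu_hz ** 2
    return e_com + e_cmp


# Illustrative numbers only; they are not taken from the paper's experiments.
snr = received_snr(0.1, 1.0, 100.0, 3.0, 1e-17, 1e6)
rate = uplink_rate(1e6, snr)
t_com = comm_time(1e6, rate)
t_cmp = comp_time(1e4, 100, 1e9)
energy = round_energy(0.1, t_com, 2e-28, 1e4, 100, 1e9)
```

Under these assumed forms, raising the transmit power shortens the uplink delay but increases the transmission energy per unit time, while raising the sampled data size increases both the computation time and the computation energy, which is the coupling that motivates the joint optimization.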

II-D Problem Formulation

In order to quantify the benefit MAML brings to FL in mobile edge networks, we focus on the learning time minimization under the constraint of model accuracy and UEs’ energy consumption. Particularly, the learning time is the duration over all communication rounds, in which the duration of round is determined by the slowest UE as follows,

| (13) |

Note that the maximum in (13) can equivalently be taken over all UEs rather than only over the selected UEs. This is because a UE that is not chosen in a round contributes a duration of zero, which does not affect the result of the maximum. Let the UEs’ sampled data sizes and transmit powers be collected into a sampled data size vector and a transmit power vector, respectively. Then, the problem can be formulated as
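The remark above, that unselected UEs do not affect the round duration, can be checked with a small sketch. It assumes that the per-UE duration is the selection indicator multiplied by the sum of the communication and computation times; the function name `round_duration` is hypothetical.

```python
def round_duration(selected, comm_times, comp_times):
    """Duration of one round: the slowest selected UE determines the round time.

    selected[i] is 1 if UE i is chosen in this round and 0 otherwise, so an
    unselected UE contributes zero and cannot change the maximum.
    """
    return max(s * (tc + tp) for s, tc, tp in zip(selected, comm_times, comp_times))


selected = [1, 0, 1]
comm_times = [1.0, 9.0, 2.0]
comp_times = [0.5, 9.0, 0.25]
duration = round_duration(selected, comm_times, comp_times)
```

Here the second UE is slow but not selected, so it has no influence on the result.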

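(P1) Minimize the overall learning time over the sampled data size vector, the transmit power vector, and the total number of communication rounds, subject to:

(C1.1) the required model accuracy, i.e., an $\epsilon$-approximate convergence guarantee;

(C1.2) the maximum energy consumption of each UE in each round;

(C1.3) the maximum transmit power of each UE in each round;

(C1.4) the sampled data size of each UE in each round not exceeding the number of data points generated by that UE.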

In problem (P1), we optimize not only the sampled data sizes and the transmit power, but also the total number of rounds. The reason is that both the sampled data sizes and the transmit power affect the duration of each round, and thereby the number of rounds needed to achieve a certain model accuracy $\epsilon$. Besides, (C1.1) characterizes an $\epsilon$-approximate convergence performance. (C1.2) limits the energy consumption of each UE in each round to be no larger than a predefined maximum value. (C1.3) bounds the transmit power of each UE in each round by its maximum value. (C1.4) is the sampled data size constraint: the sampled data size of a UE in each round is smaller than or equal to the number of data points generated by that UE. The solution to problem (P1) can be exploited for designing an optimized MAML-based FL algorithm, which is implemented iteratively to update the global model parameter. According to the communication and computation model, only the local updates that come from the selected UEs and are successfully decoded by the BS can contribute to updating the global model parameter. That is, in each round, we have

| (15) |

where the indicator function equals one if the event in its argument is true, and equals zero otherwise.
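A minimal sketch of this update rule is given below. It assumes that the server takes a plain average of the local models that are both selected and successfully decoded (received SNR above the decoding threshold), and that the global model is kept unchanged when no update is decoded. The latter fallback and the name `aggregate` are assumptions made for illustration.

```python
def aggregate(global_model, local_models, selected, snrs, threshold):
    """Average the local models that are both selected and successfully decoded."""
    decoded = [
        model
        for model, is_selected, snr in zip(local_models, selected, snrs)
        if is_selected and snr > threshold
    ]
    if not decoded:
        # Assumed fallback: keep the current global model if nothing was decoded.
        return list(global_model)
    dim = len(global_model)
    return [sum(model[j] for model in decoded) / len(decoded) for j in range(dim)]


local_models = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
new_model = aggregate([0.0, 0.0], local_models, selected=[1, 1, 0], snrs=[10.0, 1.0, 10.0], threshold=5.0)
```

In this example only the first UE is both selected and decoded, so the aggregated model equals its local model.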

Based on this update rule, (C1.1) can be analysed for the MAML-based FL to make the relationship between the decision variables and the model accuracy explicit, thereby facilitating the solution of problem (P1). To this end, the convergence analysis of the MAML-based FL is given in the following section.

III Convergence Analysis of MAML-based FL

III-A Preliminaries

For generality, we concentrate on non-convex loss functions and aim to find an $\epsilon$-approximate first-order stationary point (FOSP) for the loss function minimization problem (3) in the MAML-based FL, which is defined as follows.

Definition 1.

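A random vector $w_\epsilon \in \mathbb{R}^d$ is called an $\epsilon$-FOSP if it satisfies $\mathbb{E}\left[\|\nabla F(w_\epsilon)\|^2\right] \le \epsilon$.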

Based on this definition, we then elaborate on the assumptions, the convergence bounds, and the discussions of the convergence analysis, from which we can reformulate (C1.1) in problem (P1) as an explicit constraint with respect to the optimization variables.

Without loss of generality, the assumptions used for the convergence analysis of the MAML-based FL algorithm are consistent with those of Per-FedAvg [21, 14] and are given in the following.

Assumption 1.

For every UE , is twice continuously differentiable. Its gradient is -Lipschitz continuous, that is,

| (16) |

Assumption 2.

For every UE , the Hessian matrix of is -Lipschitz continuous, that is,

| (17) |

Assumption 3.

For any , and , computed w.r.t. a single data point , have bounded variance, that is,

| (18) |

Assumption 4.

For any model parameter, the gradient and Hessian matrix of the local loss functions and of the average loss function have bounded variance, that is,

| (19) |

Before the convergence analysis, we first introduce three lemmas inherited from [14, 21], quantifying the smoothness of $F_i(w)$ and $F(w)$, the deviation between $\nabla F_i(w)$ and its estimate $\tilde{\nabla} F_i(w)$, and the deviation between $\nabla F_i(w)$ and $\nabla F(w)$, respectively.

Lemma 1.

Lemma 2.

III-B Analysis of Convergence Bound

Let , where denotes the normalized update successful probability of UE in round . Then, with Lemmas 1, 2 and 3, the expected convergence result of the MAML-based FL within a general mobile edge network we describe in Section II can now be obtained by the following theorem.

Theorem 1.

Given the transmit power vector , the sampled data size vector , the number of communication rounds , and the optimal global loss , the normalized update successful probability , and , then we have the following FOSP conditions,

| (25) |

where is the average of the local updates that are successfully decoded by the BS in round . That is, we have

| (26) |

Proof:

See the Appendix. ∎

Unlike the convergence guarantee of Per-FedAvg in [14] which is characterized by and , the convergence guarantee obtained from Theorem 1 is characterized by , and , where is a function of the transmit power . That is, the convergence bound of the proposed MAML-based FL in our paper is described in terms of , and . Therefore, our convergence analysis can combine the FL hyperparameters as well as the resource allocation in mobile edge networks.

III-C Discussions

From Theorem 1, we are able to characterize the number of communication rounds, the sampled data sizes, and the transmit power with respect to the predefined model accuracy $\epsilon$. According to Theorem 1 and (C1.1) in problem (P1), the right-hand side of (25) should be equal to or smaller than $\epsilon$. Consequently, we further present the following corollary.

Corollary 1.

Suppose the conditions in Theorem 1 are satisfied. If we set the number of total communication rounds as , the global learning rate as , the number of data samples as , then we find an -FOSP for the MAML-based FL in problem (P1).

Proof:

The chosen number of communication rounds and global learning rate ensure that the first term on the right-hand side of (25) is on the order of $\epsilon$.

Then we examine the second term. Since is the normalized update successful probability with the consideration of UEs, its order of magnitude is defined by rather than , i.e., . Therefore, it is natural to take attentions on the term for an -FOSP. By setting (i.e., ), will dominate the value of . This is because, in this case, , which is a high-order infinitesimal of . Finally, combining the magnitudes of the first and second terms, we can conclude that the joint of , , and yields an -FOSP for the MAML-based FL. ∎

Remark 1.

Guided by Corollary 1, we can recast (C1.1) in problem (P1) as a series of constraints, which give explicit relationships between the optimization variables and the model accuracy $\epsilon$. In this way, we can solve problem (P1) efficiently in the next section.

IV Automated Federated Learning (AutoFL)

In this section, we first resort to Theorem 1 and Corollary 1 for problem (P1) decomposition, and then solve the resultant subproblems respectively. Based on the obtained solutions, we finally propose Automated Federated Learning (AutoFL) algorithm, by which the benefit MAML brings to FL can be quantified in mobile edge networks.

IV-A Problem Decoupling

The decomposition of problem (P1) follows the primal decomposition [25]. Specifically, the master problem is optimized with respect to the number of communication rounds, and is given as follows:

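(P2) Minimize the overall learning time over the number of communication rounds, subject to:

(C2.1) a contracted version of (C1.1), in which the upper bound from Theorem 1, without its second term, is required to be no larger than the model accuracy $\epsilon$.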

Note that we refer to Theorem 1 and use a contracted version of (C1.1), i.e., (C2.1), as the constraint in problem (P2) for tractability. This contraction replaces the left-hand side of (C1.1) with its upper bound from Theorem 1, without the second term. We drop the second term because only the number of communication rounds is considered as the decision variable in (P2), so the second term of the upper bound can be regarded as a constant and removed as long as its value is no larger than $\epsilon$. Meanwhile, the above contraction shrinks the feasible region of the number of communication rounds. Therefore, as long as (C2.1) is satisfied, (C1.1) must be satisfied. Moreover, if there exists an optimal solution to problem (P2), it is also feasible for the original problem (P1). We can regard the optimal solution to (P2) as an approximately optimal solution to (P1).

The reason that we consider the number of communication rounds as a decision variable is to decouple (P1). Once the number of rounds is determined and treated as a constant in the following decoupling, the original objective of minimizing the overall learning time over all rounds reduces to minimizing the learning time in each round. As such, problem (P2), which has a slave problem with respect to the sampled data size and the transmit power, can be regarded as an approximation of problem (P1).

From Theorem 1, we find that the sampled data size and the transmit power are coupled even though (C1.1) is replaced by the right-hand-side term in (25). We therefore optimize them in the slave problem with the coordinate descent method [20]. That is, the slave problem is first solved with the sampled data sizes as variables and the transmit power as constants, and then solved with the transmit power as variables and the sampled data sizes as constants. This procedure is repeated in an iterative manner. In this way, the slave problems related to the sampled data size and the transmit power can be further decomposed among rounds with the assistance of Corollary 1. Specifically, in any round, the sampled data size of each UE is optimized by solving problem (P3):

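(P3) Optimize the sampled data size of each UE in the current round, with the transmit power held fixed, subject to:

(C3.1) the lower bound on the sampled data size obtained from Corollary 1;

(C3.2) the energy consumption constraint on each UE;

(C3.3) the sampled data size of each UE being no greater than the number of data points generated by that UE.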

(C3.1) is obtained from Corollary 1, which states that a sampled data size of the prescribed order yields an $\epsilon$-FOSP. (C3.1) uses an inequality instead of an equality to indicate that, as long as the sampled data size is not smaller than this lower bound, an $\epsilon$-FOSP is guaranteed. This replacement is reasonable because the larger the sampled data size is, the higher the accuracy AutoFL achieves, thereby leading to a smaller $\epsilon$. Note that (C3.1) is also a contraction of (C1.1), so the feasible domain of the sampled data size is also shrunk. That is, the optimal sampled data size, as long as it exists in (P3), is also feasible in problem (P1), and can be regarded as an approximately optimal solution to (P1). (C3.2) is the energy consumption constraint on each UE, and (C3.3) indicates that the sampled data size of a UE is no greater than the number of data points generated by that UE. Furthermore, solving the following problem determines the transmit power of each UE in a given round:

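(P4) Optimize the transmit power of each UE in the current round, with the sampled data sizes held fixed, subject to:

(C4.1) the condition obtained from Corollary 1 that guarantees an $\epsilon$-FOSP;

(C4.2) the energy consumption constraint on each UE;

(C4.3) the transmit power constraint on each UE.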

where (C4.1) is obtained from Corollary 1, in terms of the fact that dominates the value of the second term in the right-hand-side of (1) to guarantee an -FOSP for the MAML-based FL. Here we replace with to show that in each round , (C4.1) should be satisfied. The reason that we replace with is to indicate that, as long as (C4.1) is satisfied, an -FOSP would be guaranteed. Note that (C4.1) is also a contraction of (C1.1). That is, as long as there exists an optimal solution to (P4), this solution is also feasible and is close to the optimal solution to (P1). We can regard the optimal solution to (P4) as an optimal approximation to (P1). (C4.2) and (C4.3) are the energy constraint and transmit power constraint on each UE, respectively.

Based on the above decomposition, we can solve the original problem (P1) by solving problem (P2), in which problems (P3) and (P4) are nested and addressed with the coordinate descent method. This decoupling provides only approximately optimal results. However, the evaluation results show that, with the approximately optimal results obtained from (P2), (P3) and (P4), the proposed MAML-based FL algorithm always outperforms Per-FedAvg in learning time and convergence performance. Next, we deal with problems (P2), (P3), and (P4), respectively.
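The alternating structure described above can be illustrated with a generic coordinate descent loop. This is a sketch on a toy problem, not the authors' solver for (P3) and (P4): the two callables stand in for the per-variable sub-problem solutions, and the stopping rule based on a tolerance is an assumption. The toy objective is $g(x, y) = (x - 1)^2 + (y + 2)^2 + xy$, whose coordinate-wise minimizers are $x = 1 - y/2$ and $y = -2 - x/2$.

```python
def coordinate_descent(solve_first, solve_second, second_init, tol=1e-9, max_iters=100):
    """Alternate between two sub-problem solvers until both variables stop changing.

    solve_first(second) returns the best first variable for a fixed second one,
    and solve_second(first) returns the best second variable for a fixed first one.
    """
    second = second_init
    first = solve_first(second)
    for _ in range(max_iters):
        new_second = solve_second(first)
        new_first = solve_first(new_second)
        converged = abs(new_first - first) < tol and abs(new_second - second) < tol
        first, second = new_first, new_second
        if converged:
            break
    return first, second


# Toy example: closed-form coordinate-wise minimizers of g(x, y).
x_opt, y_opt = coordinate_descent(
    solve_first=lambda y: 1.0 - y / 2.0,
    solve_second=lambda x: -2.0 - x / 2.0,
    second_init=0.0,
)
```

In the setting of this section, the first solver would play the role of the sampled data size sub-problem with the transmit power held fixed, and the second solver the role of the transmit power sub-problem with the sampled data sizes held fixed, starting from an initial transmit power.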

IV-B Problem Solution

IV-B1 Communication Round Optimization

Once the transmit power and the sampled data size are obtained from the slave problem, the objective of the master problem (P2) is monotonically increasing with respect to . Therefore, to minimize the total training time, the optimal value of is attained at its lower bound:

| (30) |

Note that we do not use the formulation of to predict the actual optimal number of global communication rounds. Determining this value in advance is impractical because, in practice, the optimal number of communication rounds can easily be observed once the training loss starts to converge. Many factors can affect the actual value of , including even the version of PyTorch or TensorFlow used in the experiments. Therefore, the theoretical formulation of in (30), with the initial global model , the global optimal loss , the global learning rate , and the model accuracy to be decided, is only used as guidance indicating the order of magnitude of the practical optimal number of communication rounds.

IV-B2 Data Sample Size Optimization

It is easy to observe from problem (P3) that for each UE , its optimal lies at the lower bound . However, whether this lower bound can be reached depends on the relationship between ’s lower bound and the upper bounds defined by (C3.2) and (C3.3). Specifically, the upper bound defined by (C3.2) is , while the upper bound defined by (C3.3) is . Consequently, for each UE , we need to consider two cases: and , within each of which we further distinguish two sub-cases: and . The optimal solution of for problem (P3) is discussed as follows.

Case 1: In this case, UE generates sufficient data points, i.e., their number is larger than or equal to the lower bound required for a model accuracy . That is, for each UE , we have

| (31) |

Under this case, we need to further discuss the relationship between and :

- When , the lower bound of can be obtained with enough data points to achieve the model accuracy . Therefore, the optimal sampled data size .

- •

Case 2: In this case, UE generates too few data points, i.e., their number is smaller than the lower bound required for a model accuracy . That is, for each UE , we have

| (32) |

Under this case, we also need to further discuss the relationship between and :

- When , the optimal value of is ;

- When , the optimal value of is .

The above process of computing for problem (P3) can be summarized as the SampledDataSize algorithm, which is omitted due to space limitations.
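Since the SampledDataSize algorithm itself is omitted, the case analysis above can be illustrated with a minimal sketch. It assumes that the objective of (P3) grows with the sampled data size, so the accuracy-driven lower bound is chosen whenever it fits under both upper bounds, and that the largest feasible size is taken otherwise. The function name `sampled_data_size` and its three arguments are hypothetical stand-ins for the quantities in (C3.1), (C3.2), and (C3.3); this is not the authors' implementation.

```python
import math


def sampled_data_size(lower_bound, energy_cap, num_local_points):
    """Pick a sampled data size for one UE (illustrative sketch only).

    lower_bound:      smallest size that meets the accuracy target, cf. (C3.1)
    energy_cap:       largest size allowed by the energy budget, cf. (C3.2)
    num_local_points: number of data points held by the UE, cf. (C3.3)
    """
    upper_bound = math.floor(min(energy_cap, num_local_points))
    if upper_bound < 1:
        raise ValueError("the UE cannot sample even one data point")
    target = math.ceil(lower_bound)
    # Assumption: the learning time grows with the sampled size, so the
    # lower bound is preferred; if it is unreachable, fall back to the
    # largest size that the energy budget and the local data allow.
    return min(target, upper_bound)
```

For example, `sampled_data_size(10.2, 50, 100)` returns 11 (the lower bound is reachable), while `sampled_data_size(10, 50, 8)` returns 8 because the UE holds too few data points.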

IV-B3 Transmit Power Optimization

Finally, we turn to problem (P4), where the transmit power for UE in round is optimized with fixed . To this end, we first convert the problem into a more concise form. Given that denotes the normalized probability of a successful update, we have . Then the inequality is always true. Consequently, we can further transform (C4.1) to . Combining the transformed constraint with (C3.1), (C3.2), and (C3.3), we find that as long as , (C4.1) is always satisfied. Therefore, problem (P4) can be further transformed into a new optimization problem without constraint (C4.1).

With the above concise formulation of (P4), we analyze the monotonicity of in the objective function of problem (P4). Specifically, the derivative of can be computed as follows:

| (33) |

which means monotonically decreases with .

Further, as for the constraint (C4.2), the derivative of its left-hand-side term can be proved to be always larger than 0, which is shown as follows,

| (34) |

where the inequality is derived from the fact that , for . Therefore, the left-hand-side term of (C4.2) monotonically increases with . This means that (C4.2) defines another upper bound of , just as (C4.3) does.
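The two monotonicity properties suggest a simple numerical procedure: because the objective decreases with the transmit power while the energy term increases with it, the best feasible power is the smaller of the power cap and the point where the energy budget becomes tight. The sketch below finds that point by bisection. It is a stand-in for illustration, not the paper's PowerComputation algorithm; `tx_energy` is an assumed Shannon-rate upload energy model, and all parameter names are hypothetical.

```python
import math


def tx_energy(power, bits, bandwidth, gain, noise_psd):
    """Upload energy = power * airtime under a Shannon-rate model (assumed)."""
    rate = bandwidth * math.log2(1.0 + power * gain / (noise_psd * bandwidth))
    return power * bits / rate


def max_feasible_power(energy_budget, p_max, energy_fn, tol=1e-9):
    """Largest p in (0, p_max] with energy_fn(p) <= energy_budget.

    Assumes energy_fn is increasing in p. Returns 0.0 when no positive
    power fits within the budget.
    """
    if energy_fn(p_max) <= energy_budget:
        return p_max  # the power cap, cf. (C4.3), is the binding constraint
    lo, hi = 0.0, p_max
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if energy_fn(mid) <= energy_budget:
            lo = mid  # mid is feasible, search for a larger power
        else:
            hi = mid  # mid violates the energy budget, cf. (C4.2)
    return lo
```

A caller would typically bind the channel parameters first, for instance `max_feasible_power(budget, p_max, lambda p: tx_energy(p, bits, bandwidth, gain, noise_psd))`.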

IV-C AutoFL Implementation

After devising the algorithms for determining the number of communication rounds , the sampled data size , and the transmit power , we combine these sub-algorithms to design the AutoFL algorithm, which is shown in Algorithm 2. Note that, to reduce the computational complexity, we apply the coordinate descent method to problems (P3) and (P4) only once per round. More specifically, given the current transmit power , problem (P3) with respect to is solved for UE in round using the SampledDataSize algorithm, as in line 6 of AutoFL. Then, based on the obtained , problem (P4) with respect to is addressed for UE in round with the PowerComputation algorithm, as in line 7 of AutoFL. The result of is further used in the next round to compute for UE , which is in turn used to compute again. This process repeats until the model accuracy is achieved. As in line 15 of AutoFL, once the model accuracy is reached, the algorithm terminates and the required number of rounds is output.
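The alternating structure described above can be sketched as a small driver loop. This is only a skeleton under stated assumptions: `size_step`, `power_step`, and `reached_target` are hypothetical callables standing in for the SampledDataSize algorithm, the PowerComputation algorithm, and the accuracy check, and the local training and aggregation steps of Algorithm 2 are left out.

```python
def alternate_per_round(init_power, size_step, power_step, reached_target,
                        max_rounds=1000):
    """Run one coordinate-descent pass per round until the target is met.

    size_step(power)    -> sampled data size with the power held fixed
    power_step(size)    -> transmit power with the data size held fixed
    reached_target(rnd) -> True once the accuracy target is reached
    Returns the number of rounds used and the (size, power) history.
    """
    power = init_power
    history = []
    for rnd in range(1, max_rounds + 1):
        size = size_step(power)   # update the data size first
        power = power_step(size)  # then update the power for this round
        history.append((size, power))
        if reached_target(rnd):
            return rnd, history
    return max_rounds, history
```

Running a single pass per round keeps the per-round cost low; the power found in one round simply becomes the starting point for the next.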

V Performance Evaluation

In this section, we evaluate the performance of AutoFL to (1) verify its effectiveness in saving learning time, (2) examine its training loss and accuracy to demonstrate its effectiveness in fast adaptation and convergence, and (3) present its advantages under different network and dataset settings.

V-A Simulation Settings

V-A1 Network settings

| Parameter | Value | Parameter | Value | Parameter | Value |

|---|---|---|---|---|---|

| (MNIST) | 1 MHz | J | |||

| (MNIST) | W | ||||

| (CIFAR-10) | 0.06 | dB | |||

| (CIFAR-10) | 0.02 | dBm/Hz |

Unless otherwise specified, we consider a mobile edge network that consists of UEs located in a cell of radius m and a BS located at its center. Assume that all UEs are uniformly distributed in the cell. The Rayleigh distribution parameter of is . The other parameters used in simulations are listed in Table I.

V-A2 Datasets

We evaluate the training performance using two datasets, the MNIST dataset [26] for handwritten digit classification and CIFAR-10 [27]. The network model we use for MNIST is a 2-layer NN with a hidden layer of size 100, while the network model we use for CIFAR-10 is LeNet-5 [28], which has two convolutional layers and three fully connected layers. The datasets are partitioned randomly with and for training and testing respectively. In the simulation we use both i.i.d datasets and non-i.i.d datasets. To generate i.i.d datasets, the original training dataset is randomly partitioned into 20 portions and each UE is assigned a portion. As for the non-i.i.d datasets, the original training set is first partitioned into 10 portions according to the labels. Each UE has only of the 10 labels and is allocated a different local data size in the range of , where . The value of reflects the non-i.i.d level of local datasets. The smaller is, the higher the non-i.i.d level is. Unless otherwise defined, we use in the evaluation. All experiments are conducted with PyTorch [29] version 1.7.1.
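A label-based non-i.i.d split of this kind can be sketched as follows. The sketch assumes NumPy, draws each UE's labels and local data size at random, and lets different UEs draw from the same per-label pools, which may differ from the exact procedure used in the experiments. The function name and all parameters are hypothetical.

```python
import numpy as np


def partition_non_iid(labels, num_ues, labels_per_ue, min_size, max_size, seed=0):
    """Assign each UE sample indices drawn from only a few labels.

    Returns a list with one index array per UE.
    """
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    classes = np.unique(labels)
    # Group the sample indices by label (10 groups for MNIST or CIFAR-10).
    by_class = {c: np.flatnonzero(labels == c) for c in classes}
    parts = []
    for _ in range(num_ues):
        own = rng.choice(classes, size=labels_per_ue, replace=False)
        pool = np.concatenate([by_class[c] for c in own])
        # Each UE gets its own local data size within the given range.
        size = min(int(rng.integers(min_size, max_size + 1)), pool.size)
        parts.append(rng.choice(pool, size=size, replace=False))
    return parts
```

Lowering `labels_per_ue` makes the local datasets less alike, which corresponds to a higher non-i.i.d level in the sense used above.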

V-A3 Baselines

We compare the performance of AutoFL with Per-FedAvg [14] and FedAvg [7]. FedAvg is the first algorithm proposed for FL and is the most general FL algorithm. Per-FedAvg, on the other hand, is the most general MAML-based FL algorithm. In Per-FedAvg, we first fix the sampled data size of all UEs to be . As for the transmit power settings in Per-FedAvg, all UEs use the maximal power , given that each UE trains on only 5 data samples, so more power can be reserved for transmitting the local updates to the BS. As for FedAvg, we consider two settings. In the first, we set the sampled data sizes of the UEs to be and the transmit power in all rounds to be , which is the same as the settings for Per-FedAvg; we name this variant FedAvg-1. In the second, we set the sampled data sizes and transmit power of the UEs to be the same as in AutoFL; we name this variant FedAvg-2. Later, we also vary the sampled data sizes in Per-FedAvg and FedAvg-1 to examine how the number of sampled data points influences the performance gap between Per-FedAvg and AutoFL.

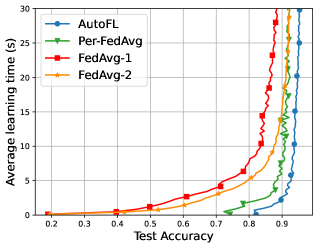

V-B Learning Time Comparisons

We first compare the learning time of AutoFL with that of Per-FedAvg, FedAvg-1, and FedAvg-2 with respect to the test accuracy. The results are shown in Fig. 2. We analyse the results from three aspects. (i) First, at the same accuracy, AutoFL consumes the least learning time. For example, when the algorithms start to converge, Per-FedAvg takes at least twice as much time as AutoFL. This is a reasonable result, as AutoFL is designed to minimize the overall learning time. Meanwhile, as the test accuracy increases, the average learning time of all algorithms grows rapidly. The experimental results verify our theoretical analysis and confirm the effectiveness of AutoFL in saving the overall learning time. (ii) Second, we observe that the two MAML-based FL algorithms outperform the two conventional FL algorithms. This advantage is even more pronounced on the non-i.i.d CIFAR-10 dataset than on the MNIST datasets. This result attests to the advantage of MAML in accelerating FL training. Meanwhile, the two algorithms with designed sampled data size and transmit power, AutoFL and FedAvg-2, outperform Per-FedAvg and FedAvg-1, respectively. This indicates that the joint optimization of the sampled data size and transmit power is a promising way to improve the FL performance over mobile edge networks. (iii) Third, the results on i.i.d datasets are more stable than those on non-i.i.d datasets, and the average learning time under i.i.d datasets is smaller than that under non-i.i.d datasets. This is because, in the i.i.d case, the local model of each individual UE is more representative of the learned global model, which benefits the learning performance.

Thereafter, we vary the sampled data size of Per-FedAvg to see how the gap between Per-FedAvg and AutoFL changes with the number of data samples used by Per-FedAvg. Note that when the number of samples increases, the transmit power of UEs in Per-FedAvg is not always . Therefore, here we use the PowerComputation algorithm to compute the transmit power with the predefined data sample sizes. Fig. 3 shows the results. From Fig. 3 we observe that, no matter how the number of data samples changes in Per-FedAvg, its overall learning time remains larger than that of AutoFL. As the sampled data size increases from to , for the same test accuracy, the average learning time of Per-FedAvg becomes progressively smaller. This phenomenon is more obvious on the CIFAR-10 dataset. However, when the number of sampled data points is , the learning time performance of Per-FedAvg suddenly deteriorates. We attribute this to the fact that a larger sampled data size requires a larger transmit power, and once the transmit power of a certain UE reaches its upper bound, it cannot be increased further. Due to insufficient transmit power, the updates transmitted from this UE fail to reach the server for the global model update. Therefore, although the number of samples on each UE has increased, the learning time may become longer. This result suggests that, although the original problem (P1) is NP-hard and AutoFL is designed to approximate the optimal solutions to (P1), the approximate results are still effective in consuming as little time as possible.

V-C Convergence Performance Comparisons

Next, we compare the convergence performance of AutoFL, Per-FedAvg, FedAvg-1, and FedAvg-2 in terms of training loss and test accuracy. Specifically, the smaller the training loss is, the better the convergence performance is. Conversely, the larger the test accuracy is, the better. Fig. 4 shows the convergence performance using the i.i.d MNIST, non-i.i.d MNIST and non-i.i.d CIFAR-10 datasets, respectively.

From Fig. 4, it is observed that AutoFL outperforms the other three algorithms on both metrics. This phenomenon is more obvious on the non-i.i.d CIFAR-10 dataset. Besides, AutoFL has the fastest convergence rate, which is consistent with the learning time results, where AutoFL also performs best. This result indicates that the in AutoFL is smaller than that in Per-FedAvg and FedAvg. For example, for the non-i.i.d MNIST datasets shown in Fig. 4, AutoFL starts to converge after about rounds, while Per-FedAvg starts to converge after about rounds and the two FedAvg algorithms start to converge after about rounds.

V-D Effect of Network and Dataset Settings

To understand how different network and dataset settings, such as the radius of the cell and the data heterogeneity, affect the convergence of AutoFL, we conduct a set of experiments on the MNIST dataset.

- Effect of the radius of the cell : Figs. 5a and 5b show the average and the highest achievable test accuracy for different cell radii . is measured as the number of rounds after which the algorithm starts to converge, with test accuracy std . From Figs. 5a and 5b, we observe that increases at a growing rate with , while the test accuracy decreases at a growing rate with . The underlying reason is that, as increases, the number of UEs whose local updates can be successfully decoded at the BS decreases. This reduced participation of UEs in the global model update leads to an increased and a decreased test accuracy. Besides, comparing AutoFL with Per-FedAvg, and FedAvg-2 with FedAvg-1, we find that optimizing the transmit power and sampled data size on individual UEs is beneficial to FL convergence, especially when the wireless environment deteriorates with increasing .

- Effect of the metric of data heterogeneity : The non-i.i.d level is used to measure the data heterogeneity. Specifically, the smaller is, the larger the data heterogeneity is. Figs. 5c and 5d show the average and the highest achievable model accuracy with respect to the non-i.i.d level . We can observe from the figures that as the non-i.i.d level decreases, is decreasing while the test accuracy is increasing. This result is reasonable as a higher degree of data heterogeneity across UEs has a more negative impact on the learning process. As expected, Figs. 5c and 5d also demonstrate that AutoFL achieves larger gains for more heterogeneous datasets across UEs.
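The convergence round used in these comparisons is defined through the standard deviation of the test accuracy. One way such a measurement could be computed is sketched below; the sliding-window rule, the window length, and the threshold are assumptions made for illustration, since the exact criterion is not spelled out here, and the function name is hypothetical.

```python
import statistics


def convergence_round(test_acc, window, std_threshold):
    """Return the first round (1-indexed) that opens a stable window.

    A window of `window` consecutive rounds counts as stable when the
    population standard deviation of its test accuracies does not exceed
    `std_threshold`. Returns None if no such window exists.
    """
    for end in range(window, len(test_acc) + 1):
        recent = test_acc[end - window:end]
        if statistics.pstdev(recent) <= std_threshold:
            return end - window + 1
    return None
```

With the accuracies `[0.1, 0.3, 0.5, 0.7, 0.8, 0.8, 0.8, 0.8]`, a window of 3, and a threshold of 0.01, the function returns 5, the first round of the first flat stretch.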

VI Conclusions

In this paper, we have quantified the benefit MAML brings to FL over mobile edge networks. The quantification is achieved from two aspects: the determination of FL hyperparameters (i.e., sampled data sizes and the number of communication rounds) and the resource allocation (i.e., transmit power) on individual UEs. In this regard, we have formulated an overall learning time minimization problem, constrained by the model accuracy and energy consumption at individual UEs. We have solved this optimization problem by first analysing the convergence rate of MAML-based FL, which is used to bound the three variables as functions of the model accuracy . With these upper bounds, the optimization problem can be decoupled into three sub-problems, each of which considers one of the variables and uses the corresponding upper bound as its constraint. The first sub-problem guides the optimization of the number of communication rounds. The second and third sub-problems are solved using the coordinate descent method to obtain the sampled data size and the transmit power for each UE in each round, respectively. Based on the solutions, we have proposed AutoFL, a MAML-based FL algorithm that not only quantifies the benefit MAML brings to FL but also maximizes this benefit to achieve fast adaptation and convergence over mobile edge networks. Extensive experimental results have verified that AutoFL outperforms Per-FedAvg and FedAvg in terms of fast adaptation and convergence.

Appendix

In order to prove Theorem 1, we first introduce an intermediate inference derived from the Lipschitz gradient assumption. That is, if is L-Lipschitz continuous, then is equivalent to

| (35) |
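For orientation, the standard quadratic upper bound implied by an $L$-Lipschitz gradient is recalled below. It is stated for a generic differentiable function $f$ on $\mathbb{R}^{d}$, and the notation here is an assumption that may differ from the form used in (35).

```latex
f(y) \;\le\; f(x) + \nabla f(x)^{\top}(y - x) + \frac{L}{2}\,\lVert y - x \rVert^{2},
\qquad \forall\, x, y \in \mathbb{R}^{d}.
```

Bounds of this type are what allow the decrease of the loss in one round to be controlled by the gradient norm and the squared step length.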

Note that although we assume that each UE only performs one step of gradient descent given the current global model parameter, in the appendix we first consider the general case where () steps of local gradient descents are performed. Then for each UE , we have

| (36) | ||||

| (37) |

where . After we find the -FOSP, we use to obtain the desired result shown in Theorem 1. In this case, we introduce the following lemma that has been proved in [14] to facilitate our proof.

Lemma 4.

If the conditions in Assumptions 2-4 hold, then for any and any , we have

| (38) |

where .

Recall that , from Lemma 1, we know is -Lipschitz continuous, and thus, by (35), we have

| (39) |

where the last inequality is obtained given the fact that

| (40) |

Taking expectation on both sides of (39) yields

| (41) |

From the above inequality, it is obvious that the key is to bound the term . Let

| (42) |

where

| (43) |

Let be the information up to round , local step . Our next step is to bound the moments of , , , conditioning on . Recall the Cauchy-Schwarz inequality

| (44) |

- As for , applying the Cauchy-Schwarz inequality (44) with and , we obtain

| (45) |

where . Hence, by using Lemma 2 along with the tower rule, we have

| (46) |

- As for , applying the Cauchy-Schwarz inequality (44) with and , along with the smoothness of , we obtain

| (47) |

Again, taking expectation on both sides of (47) along with the tower rule, we obtain

| (48) |

where the last step is obtained from (38) in Lemma 4 along with the fact that .

- As for , first recall that if we have numbers with mean , and variance . If we denote the number of UEs that successfully update their local parameters to the global model as , (), according to [14], we have

| (49) |

Using this, we have

| (50) |

Therefore, by taking expectation on both sides of (50) along with the tower rule, we have

| (51) |

where the inequality is also obtained from (38) in Lemma 4. Next, applying the Cauchy-Schwarz inequality (44) with and , we have

| (52) |

At this point, taking expectation on both sides of (52) along with the use of (51) yields

| (53) |

Now, getting back to (Appendix), we first lower bound the term

| (54) |

From (42), we have

| (55) |

This inequality follows the same thought as [14] by using the fact that

| (56) |

Meanwhile, the bounds of the terms on the right-hand-side of (55) can be derived similarly to what the authors did in [14]. That is,

| (57) |

Meanwhile, using the Cauchy-Schwarz inequality (44), we have

| (58) |

Combining (57) and (58) yields

| (59) |

where the last step is derived from the fact that . Next, we characterize an upper bound for the other term in (Appendix):

| (60) |

To do this, we still use the equality (42), that is,

| (61) |

Hence, taking expectations on both sides of (Appendix) along with (46) and (Appendix) we have

| (62) |

Again, consider the Cauchy-Schwarz inequality with and , we have

| (63) |

By Lemma 3, we have

| (64) |

Recall the relationship between the expectation of a random vector , and its variance, , that . Given that , we have

| (65) |

Combining (Appendix), (Appendix) and (65) yields

| (66) |

Substituting (Appendix) and (Appendix) in (Appendix) implies

| (67) |

where

| (68) |

The last step of (67) is obtained by . Summing (67) from to , we have

| (69) |

which is obtained by the fact that . Finally, summing (69) from to , we have

| (70) |

As a result, we have

| (71) |

Given that we only consider one step of local SGD in this paper, we use , and then the desired result is obtained.

References

- [1] M. Ghahramani, M. Zhou, and G. Wang, “Urban sensing based on mobile phone data: approaches, applications, and challenges,” IEEE/CAA Journal of Automatica Sinica, vol. 7, no. 3, pp. 627–637, 2020.

- [2] R. Pryss, M. Reichert, J. Herrmann, B. Langguth, and W. Schlee, “Mobile crowd sensing in clinical and psychological trials–a case study,” in IEEE International Symposium on Computer-Based Medical Systems, 2015, pp. 23–24.

- [3] R. K. Ganti, F. Ye, and H. Lei, “Mobile crowdsensing: current state and future challenges,” IEEE Communications Magazine, vol. 49, no. 11, pp. 32–39, 2011.

- [4] P. Li, J. Li, Z. Huang, T. Li, C.-Z. Gao, S.-M. Yiu, and K. Chen, “Multi-key privacy-preserving deep learning in cloud computing,” Future Generation Computer Systems, vol. 74, pp. 76–85, 2017.

- [5] Y. Mao, C. You, J. Zhang, K. Huang, and K. B. Letaief, “A survey on mobile edge computing: The communication perspective,” IEEE Communications Surveys & Tutorials, vol. 19, no. 4, pp. 2322–2358, 2017.

- [6] X. Wang, Y. Han, V. C. Leung, D. Niyato, X. Yan, and X. Chen, “Convergence of edge computing and deep learning: A comprehensive survey,” IEEE Communications Surveys & Tutorials, vol. 22, no. 2, pp. 869–904, 2020.

- [7] B. McMahan, E. Moore, D. Ramage, S. Hampson, and B. A. y Arcas, “Communication-efficient learning of deep networks from decentralized data,” in Artificial Intelligence and Statistics, 2017, pp. 1273–1282.

- [8] Y. Mansour, M. Mohri, J. Ro, and A. T. Suresh, “Three approaches for personalization with applications to federated learning,” arXiv preprint arXiv:2002.10619, 2020.

- [9] J. Schneider and M. Vlachos, “Mass personalization of deep learning,” arXiv preprint arXiv:1909.02803, 2019.

- [10] V. Smith, C.-K. Chiang, M. Sanjabi, and A. S. Talwalkar, “Federated multi-task learning,” in Advances in Neural Information Processing Systems (NeurIPS), vol. 30, 2017.

- [11] C. Finn, P. Abbeel, and S. Levine, “Model-agnostic meta-learning for fast adaptation of deep networks,” in International Conference on Machine Learning (ICML), vol. 70, 2017, pp. 1126–1135.

- [12] J. Vanschoren, “Meta-learning,” in Automated Machine Learning. Springer, Cham, 2019, pp. 35–61.

- [13] F. Hutter, L. Kotthoff, and J. Vanschoren, Automated machine learning: methods, systems, challenges. Springer Nature, 2019.

- [14] A. Fallah, A. Mokhtari, and A. Ozdaglar, “Personalized federated learning with theoretical guarantees: A model-agnostic meta-learning approach,” in Conference on Neural Information Processing Systems (NeurIPS), 2020.

- [15] Y. Jiang, J. Konečný, K. Rush, and S. Kannan, “Improving federated learning personalization via model agnostic meta learning,” arXiv preprint arXiv:1909.12488, 2019.

- [16] Y. Deng, M. M. Kamani, and M. Mahdavi, “Adaptive personalized federated learning,” arXiv preprint arXiv:2003.13461, 2020.

- [17] C. T. Dinh, N. H. Tran, and T. D. Nguyen, “Personalized federated learning with Moreau envelopes,” arXiv preprint arXiv:2006.08848, 2020.

- [18] Q. Wu, K. He, and X. Chen, “Personalized federated learning for intelligent IoT applications: A cloud-edge based framework,” IEEE Open Journal of the Computer Society, vol. 1, pp. 35–44, 2020.

- [19] S. Yue, J. Ren, J. Xin, S. Lin, and J. Zhang, “Inexact-ADMM based federated meta-learning for fast and continual edge learning,” in Proceedings of the Twenty-second International Symposium on Theory, Algorithmic Foundations, and Protocol Design for Mobile Networks and Mobile Computing, 2021, pp. 91–100.

- [20] S. J. Wright, Coordinate descent algorithms. Springer, 2015, vol. 151, no. 1.

- [21] A. Fallah, A. Mokhtari, and A. Ozdaglar, “Personalized federated learning: A meta-learning approach,” arXiv preprint arXiv:2002.07948, 2020.

- [22] H. H. Yang, Z. Liu, T. Q. Quek, and H. V. Poor, “Scheduling policies for federated learning in wireless networks,” IEEE Transactions on Communications, vol. 68, no. 1, pp. 317–333, 2019.

- [23] N. H. Tran, W. Bao, A. Zomaya, M. N. Nguyen, and C. S. Hong, “Federated learning over wireless networks: Optimization model design and analysis,” in IEEE International Conference on Computer Communications (INFOCOM), 2019, pp. 1387–1395.

- [24] Y. Pei, Z. Peng, Z. Wang, and H. Wang, “Energy-efficient mobile edge computing: three-tier computing under heterogeneous networks,” Hindawi Wireless Communications and Mobile Computing, vol. 2020, 2020.

- [25] D. P. Palomar and M. Chiang, “A tutorial on decomposition methods for network utility maximization,” IEEE Journal on Selected Areas in Communications (JSAC), vol. 24, no. 8, pp. 1439–1451, 2006.

- [26] Y. LeCun, C. Cortes, and C. J. C. Burges, “The MNIST database,” http://yann.lecun.com/exdb/mnist/, 1998.

- [27] “The CIFAR-10 dataset,” https://, 2014.

- [28] Y. LeCun, L. Bottou, Y. Bengio, and P. Haffner, “Gradient-based learning applied to document recognition,” Proceedings of the IEEE, vol. 86, no. 11, pp. 2278–2324, 1998.

- [29] A. Paszke, S. Gross, F. Massa, A. Lerer, J. Bradbury, G. Chanan, T. Killeen, Z. Lin, N. Gimelshein, L. Antiga et al., “Pytorch: An imperative style, high-performance deep learning library,” in Advances in Neural Information Processing Systems (NeurIPS), 2019, pp. 8026–8037.