Augmenting Prototype Network with TransMix for Few-shot Hyperspectral Image Classification

Abstract

Few-shot hyperspectral image classification aims to identify the classes of each pixel in the images by only marking few of these pixels. And in order to obtain the spatial-spectral joint features of each pixel, the fixed-size patches centering around each pixel are often used for classification. However, observing the classification results of existing methods, we found that boundary patches corresponding to the pixels which are located at the boundary of the objects in the hyperspectral images, are hard to classify. These boundary patchs are mixed with multi-class spectral information. Inspired by this, we propose to augment the prototype network with TransMix for few-shot hyperspectrial image classification(APNT). While taking the prototype network as the backbone, it adopts the transformer as feature extractor to learn the pixel-to-pixel relation and pay different attentions to different pixels. At the same time, instead of directly using the patches which are cut from the hyperspectral images for training, it randomly mixs up two patches to imitate the boundary patches and uses the synthetic patches to train the model, with the aim to enlarge the number of hard training samples and enhance their diversity. And by following the data agumentation technique TransMix, the attention returned by the transformer is also used to mix up the labels of two patches to generate better labels for synthetic patches. Compared with existing methods, the proposed method has demonstrated sate of the art performance and better robustness for few-shot hyperspectral image classification in our experiments. All the codes are available at https://github.com/HENULWY/APNT.

Index Terms:

Hyperspectral image classification, Cross-domain few-shot learning, TransMix, TransformerI Introduction

Hyperspectral images (HSIs) capture radiation information of ground objects over dozens or even hundreds of continuous spectral channels. It can obtain spectral features that reflect the unique characteristics of the targets. Compared to natural images with only RGB three channels, HSIs integrate spatial and spectral features of the ground objects, which can capture more subtle differences between them. Due to this advantage, HSIs have great application value in the fields such as environmental monitoring[1] and resource utilization and management[2, 3].

HSI classification, which is one fundamental task for HSI applications, is to classify each pixel of HSIs and predict their classes. Because the spectral vectors behind each pixel of HSIs contain rich radiation information, the works for HSI classification in early stage focus mainly on the spectral features, and directly take these spectral vectors as the samples to be classified [4, 5]. But caused by the factors such as illumination and atmosphere, the phenomenon of same objects with different spectrum and different objects with same spectrum is widely present in HSIs. Therefore, later methods for HSIs classification pay much attention to integrating the spatial features with the spectral features of each pixel [6, 7], where the spatial features provide additional useful information about the shape, context, and layout around each pixel. To capture the spatial features, the fixed-size (e.g., ) patches centering around each pixel are often generated from HSIs and then taken as the samples to be classified.

In recent years, with the powerful feature extraction ability and a great success in a series of fields, deep learning has been widely used for HSI classification [8, 9, 10]. Based on these typical deep learning models of convolutional neural networks (CNN), recurrent neural networks (RNN) and graph neural networks (GNN), many deep learning methods have been designed to obtain more distinguishable spatial and spectral features from HSIs. Some methods adopt the two branches architecture, which uses different neural networks to extract the spatial and spectral features respectively and then fuses them together [11, 12, 13]. At the same time, many methods strive to use a single feature extractor such as 3DCNN to capture the spatial-spectrial joint features [14, 15].

In light of that deep learning methods often require a large number of labeled training samples and it is expensive and time-consuming to manually mark these samples, few-shot learning methods have also aroused lots of interest recently [16, 17, 18, 19, 20, 21]. Few-shot learning, which is a branch of deep learning, aims to learn to identify some classes of samples by marking only few of them, e.g., three or five samples per class. This kind of ability has been viewed as one skill possessed by humans. With the aids of available abundant labeld samples, current few-shot learning methods mainly follow the way of transfer learning, that is, acquire prior knowledge from available abundant labeled samples and then transfer the knowledge to target tasks which contain only few labeled samples. To transfer knowledge effectively, few-shot learning methods often adopt the meta-learning technique which constructs lots of similar tasks by imitating the target tasks and uses the constructed tasks to train a model that can easily adapt to target tasks. Based on typical few-shot learning models such as prototype network [16], many few-shot methods have been designed for HSI classification. For example, DFSL [22] took the 3D residual network as feature extractor to extract better spatial-spectral joint features from HSIs. Different from DFSL, SSPN [23] used the local pattern coding technique to combine the spatial and spectral information, and then applied 1D convolutional neural network as extractor to obtain features. Instead of using the Euclidean distance, HSEMD-Net [24] used the Earth Mover distance to learn prototype representations for each hyperspectral class. Meanwhile, RL-Net [25] followed the relational network [26] which is an improved version of prototype network for few-shot HSI classification.

When classifying HSIs under few-shot setting, early methods usually assume that the samples from which the prior knowledge are learned and the samples of the target tasks are coming from the same domain. This often means that these samples should be captured by the same sensors and in the same environment. To relax this assumption, a set of crosss-domain few-shot methods have been further proposed for HSI classification. Their purposes are to enhance model’s cross-domain generalization ability. For example, DCFSL [27] augmented DFSL method with a domain discriminator to obtain domain-independent features; SSFT [28] changed the feature distribution by a feature-wise transformation module with the aim to obtain generalized features; CMFSL [29] used the task-adapted class-covariance metric to obtain better features under few-shot setting; Gia-CFSL [30] enhanced prototype network with domain alignment strategy to increase domain adaptation. By using supervised contrastive learning, RPCL[31] imposed triple constraints on prototypes of the support set to stabilize and refine the prototypes. Moreover, MRLF proposed to learn task-specific relations between samples, including the contrastive and affinitive relations, to futher improve the feature discriminability [32]. In addition, considering the availability of the large number of natural images, HFSL [33] took natural image datasets for pre-training to obtain prior classification knowledge, allowing the model to better distinguish hyperspectral samples.

However, while taking the fixed-size patches around each pixel as samples to obtain spatial-spectral joint features, current few-shot research works for HSI classification, including these cross-domain methods, have paid less attention to the difference between the patches around the boundary pixels and the others. As shown in Fig. 1, each ground object in HSIs occupies a part of the images, which covers a set of pixels. The boundary patches built for the boundary pixels of the object will be different from these patches for the pixels located far from the boundary. These boundary patches will constitute the pixels belonging to the adjacent ground objects, which will be also mixed with multi-class spectral information. This means that the classification results of these boundary patches will be affected by these pixels from adjacent objects and the different pixels in the patches will have different importance. Generally, these boundary patches are the hard samples to be classified. Our primary experimental results shown in Fig. 1 have also indcated that current methods have shown lower performance for these boundary patches. What’s worse, these boundary patches only constitute a minority in the training samples, so they have less contributions to improve the robustness of the classification model.

To address above issue, we augment the prototype network with TransMix for few-shot HSI classification (APNT) in this paper. In light of that boundary patches are the samples mixed by the pixels from different objects, our main idea is to randomly mix up two different training samples online and use the synthetic samples to train the model. The typical few-shot learning model prototype network, which takes the few labeled samples as supports to caculate the class prototypes and predicts the unlabeled query samples according to their distance to the prototypes, is adopted as the backbone of our proposed network. To learn about the different importantce of the pixels in the patches, the proposed network enhance the prototype network by using transformer as the feature extractor. Through treating each patch as a sequence of pixels which are the spectral vectors, this can enable the model to pay different attention to different pixels in the patche. Moreover, inspired by TransMix [34] which is a transformer based mix-up technique, the attention returned from transformer will be further used to derive the labels for the synthetic samples, to better reflect the contributions of each sample to the synthetic samples. In sumary, the proposed method is expected to enhance the robustness of prototype network when classifying these boundary pixels in HSIs. The main contributions of this work are as follows.

-

1.

We propose to randomly mix up two HSI patches by imitating the boundary patches which are centering around the boundary pixels of objects in HSIs and use these synthetic patches to train the model, in order to enlarge the number of hard training samples and enhance their diversity for few-shot HSI classification.

-

2.

A lightweight TransMix based prototype network is designed for mixing up patches online for few-shot HSI classification, which adopts transformer as feature extractor to pay different attention to different pixels in the patches, and takes the attention to mix up the labels of two patches to generate better labels for synthetic patches.

-

3.

Extensive experiments have been done and the proposed method has shown the state of art performance when compared with latest methods. The experiments also reveal that without the pre-training on existing datasets, the proposed method also has comparable performance by using only these few labeled samples in the target tasks. This will make it more convenient for its use in a range of applications.

The remainder of this paper is structured as follows. Section 2 details the proposed method. Section 3 describes the datasets, the design and the results of the experiments. Finally, the conclusions are in section 4.

II The proposed method

In this section, we state the problem of few-shot HSI classification, and detail the proposed method.

II-A Problem Statement

For the problem of few-shot HSI classification, there are two datasets given: source dataset and target dataset. In practical application, all the samples in the source dataset are labeled, which are used for training the classification model. While, there are only few labeled samples in the target dataset. The samples are the pixels of the HSIs. In order to fully preserve spatial information, the fixed-size patches centering around each pixel instead of the pixeles themselves are often treated as the samples. The few-shot learning task is to predict the classes of these unlabeled samples in the target dataset by using all these labeled samples in source dataset and target dataset. Nevertheless, another dataset in which all the samples are also labeled is often selected as the target dataset in the research environment, to facilitate the performance evaluation of the few-shot learning methods. Such dataset can be also called testing dataset.

To elaborate the problem formally, it is often assumed that there are classses in the source dataset and classses in the target dataset , where is smaller than . To imitate the setting of target dataset, lots of the tasks will be constructed by randomly selecting samples each time, and used to train the classification model in an episode manner. The refers to the number of classes selected, which is often set to the number of classes in the target dataset. is the number of few labeled samples per class, and is the number of query samples per class to be classified, where is smaller than . The set consisiting of the labeled samples is often called support set , while these query samples will form the query set . Such few-shot tasks are often called the -way -shot tasks, whose purpose are to predict the classes of these query samples by using these support samples.

II-B The Model Architecture and Learning Process

The architecture of the proposed APNT method and the flowchart of the learning process is shown in Fig. 2. APNT model mainly consists of three components: query sample mixing, transformer based feature extractor, and TransMix based few-shot learning loss. Given an input task consisting of the support and query set, the query sample mixing module will mix up each query sample with another randomly selected query sample in the way of CutMix[35], and output the synthetic query samples. Subsequently, the transformer based feature extractor extracts the embedding features of the support samples and the synthetic query samples, and also returns the attention maps reflecting the importance of each pixel of the original query samples. Following that, the mean of the embedding features of the support samples in the same classes will be caculated as the prototpyes of each class. In the meanwhile, the TransMix based few-shot learning loss module computes the cross entropy loss of the synthetic query samples according to their synthetic labels which are derived by using the attention maps returned by transformer.

Similar with the work of RPCL[31], We adopt the fine-tuning strategy to train the network. That is, we first pre-train the network by using the tasks constructed from , and then fine-tune it by using the tasks from . This also means there are some labeled samples selected from to participate in the training. Consistent with previous works, there are five samples per class selected, which are augmented to 200 samples per class in our experiments. The tasks from will be constructed from these augmented smaples. Finally, the remaining samples in the target dataset are used for testing. And after extracting sample features with the trained transformer, the classes of these query samples are pedicted by using KNN algorithm during testing. It is worth noting that because the spectral dimensions of the samples from source and target datasets are different, there are also the mapping modules to unify the dimensionality.

II-C Query Samples Mixing

When given an input task consisting of a support set and a query set, either the support or query samples will be first processed by the mapping modules to transform their channel dimensionality. After that, each sample in the query set is mixed up with another randomly selected sample to generate the synthetic query samples. We choose to only mix up the query samples without support samples for the purpose of ensuring the correctness of the class prototypes caculated from these support samples. For two query samples and with the labels of and , the mixing up process can be formally stated as Eq. 1.

| (1) |

where denotes a binary mask indicating where to drop out the pixels from the sample with the spatial size of . is a binary mask filled with ones, and is the element-wise multiplication. is the synthetic query sample, and is the corresponding synthetic label, where and are the proportion of and in the synthetic label.

The proposed method follows CutMix [35] to mix up the query samples. That is, a randomly sampled patch in is removed and filled with the patch cropped from the same region of . CutMix uses the proportion of the sampled patch to the entire sample as the value of (i.e., ) to mix up the labels. Differently, we will caculate the values of and by using the attention maps returned from transformer, to take the different importance of the pixels into consideration. The details will be described in the following subsection of .

II-D Transformer based Feature Extraction

Once the synthetic query samples are generated, the original query samples, the synthetic query samples, and the support samples are input into the transformer module. The embedding features of the support samples and the synthetic query samples will be extracted. At the same time, the attention maps of the original query samples are also required. The whole process can be seen in Fig. 3.

When inputting the samples, i.e., the patches with the three-dimensional size of , into transformer, each sample will be first pull into a sequence of which the composing items are the spectral vectors of the pixels. And then, the token vectors with dimension, which are used to learn global features of each sample, are randomly generated and appended into the sequences of each sample. After that, the features and the attention maps of each sample are obtained by directly inputting the updated sequences into transformer module.

A shown in Fig. 3, the transformer module of APNT is composed of a set of stacked transformer encoders. Each encoder consists of a self-attention layer and a feedforward layer. For the self-attention layer, there exist three learnable projection matrixs , and . They will project the input sequences into different embedding spaces, as shown in Eq. 2.

| (2) |

where , and are the projected matrices of the sequence . With these projected matrices, the attention maps of each input sequence , which capture the attention of each item in the sequence to the other items, can be caculated as Eq. 3. And the features of each item in the sequence are updated by Eq. 4.

| (3) |

| (4) |

There are multiple heads of self-attention compution in transformer. The features returned from each head will be concatenated together and restored to the original dimension through a linear operation. The updated features will be further processed through the feedforward layer without changing the dimensions. That is, a new sequence of will be produced by a transformer encoder for each sample. At the same time, the attention maps from different heads will be averaged. Through two transformer encoders, the updated taken vectors in the sequence generated by the last transformer encoder are selected as the feature vectors of the samples. And the attention vectors capturing attentions of the samples to their constituent pixels will be also selected from the attention maps returned by the last transformer encoder. When reshaping the obtained attention vectors into the shape of , the required attention maps reflecting the importance of each pixel are obtained.

II-E TransMix based Few-shot Learning Loss

After obtaining the attention maps of these query samples, the sum of the attention values belonging to the mixed region is directly used to derive the values of and for label mixing shown in Eq. 1. This is because that the sum of all values in a attention map is 1. This derivation process can be experssed as Eq. 5.

| (5) |

where and represent the attention maps of the query smaple of and . represents the binary mask mentioned in Eq. 1. From Eq. 5, it can be seen that the values of and represent the probability that the synthetic sample shown in Eq. 1 belongs to the classes of and .

In addition, assuming the feature of each support smaple is , the class prototype is computed as Eq.6.

| (6) |

where represents the set of features of class support samples.

Then, through predicting the synthetic query sample as the classes denoted by the labels of and , the few-shot learning losses are caculated according to Eq. 7 and Eq. 8. The in Eq. 7 and 8 represents the embedding feature of synthetic query sample . And means the probability that the model accurately predicts the synthetic query sample to the class of . Meanwhile, denotes the Euclidean distance between the feature of synthetic query sample and the class prototype of .

| (7) |

| (8) |

Because the synthetic query samples only partially belong to the classes of the samples used for mixing, the total loss used for updating the model is caculated as Eq. 9, which uses the values of and to balance the losses of and .

| (9) |

III Experiments

In order to validate the effectiveness of the proposed method, extensive experiments have been done. In this section, we describe the experimental setup and results.

III-A Experimental Setup

Dataset

In order to fairly evaluate the performance of the proposed method, the datasets which had been widely used in related works were selected for training and testing in our work. We used the Indian Pine, University of Pavia, and Salinas datasets as the target datasets, and used the Chikusei dataset as the source dataset. We give a brief introduction about these datasets.

(a) Chikusei: It was taken by Hyperspec-VNIR-C sensor in Chikusei, Japan. The dataset contains 128 bands with the wavelength ranging from to , and pixels with a spatial resolution of . As shown in Fig. 4, there are 19 classes of land cover, including urban and rural areas.

(b) Indian Pines (IP): It was imaged by an airborne visible infrared imaging spectrometer in Indiana, USA. This dataset contains 200 bands with the wavelength ranging from . The size is pixels, with a spatial resolution of about . As shown in Fig. 5, there are 16 classes of land cover including crops and natural vegetation.

(c) Salinas (SA): This dataset was also captured by the airborne visible infrared imaging spectrometer in the Salinas Valley, California, USA. It contains 204 wavebands with a size of pixels, and a spatial resolution of about . As shown in Fig. 6, there are 16 classes of land cover, which include vegetables, exposed soil, etc.

(d) Pavia University (PU): This dataset was imaged by the German airborne reflective optical spectral imager in the city of Pavia, Italy. The dataset contains 103 wavebands, with a size of pixels and a spatial resolution of about . As shown in Fig. 7, there are 9 classes of land cover, including trees, asphalt roads, bricks, etc.

Metric

Also for the fairness of comparison, we adopted the metrics of overall accuracy rate (OA), average accuracy rate (AA) and kappa coefficient which have been widely used to evaluate the performance of proposed method. The definitions of these metrics can be referred to the work of [28].

Implementation and Configuration

For the proposed method, the mapping modules are implemented with a Conv2D layer with kernel. The feature extractor is implemented by two transformer encoders, each of which includes a self-attention layer and a fully connected feedforward layer. The specific parameters related to the implementation are shown in the Table I, where Head_Dim denotes the number of attention channels, and Feed_Dim represents the hidden layer dimension of the feedforward layer.

All linear and convolutional layers were normalized using Xavier, and the Adam optimizer was used to optimize the model, with an initial learning rate of 0.001. All comparison experiments used the same 10 random seeds to fairly compare the mean of AA, OA, and kappa. The patches with the size of pixels were cut from HSIs. Moreover, as mentioned early, there are five samples per class selected from the target dataset to fine-tune the model trained on the source dataset. And they are augmented to 200 samples per class through cropping and resizing, which is consistent with the work of [31]. There are 3000 training iterations adopted in our experiments. Among them, only the samples from source datasets are used in the first 1000 training iterations, and the remaining 2000 iterations are run on these augmented samples from target datasets.

| Model Architecture | SIZE | |

|---|---|---|

| INPUT | 99 | |

| MAPPING | Conv2d | 11(100) |

| TRANSFORMER ENCODER1 | Head | 8 |

| Head_Dim | 64 | |

| Feed_Dim | 1024 | |

| TRANSFORMER ENCODER2 | Head | 8 |

| Head_Dim | 64 | |

| Feed_Dim | 1024 | |

| OUTPUT | Feature | 1160 |

| Attention Map | 99 | |

III-B Comparison with Related Methods

To verify the performance of the proposed method, we selected several typical classification methods for comparison, including DFSL[22], DCFSL[27], CMFSL[29], Gia-CFSL[30], RPCL[31], MRLF[32], and HFSL[33]. In the experiment, according to the specific training process of each model, DFSL only used these augmented samples from target dataset for training. DCFSL, CMFSL, Gia-CFSL, MRLF, RPCL and our proposed method used both the samples from source datasets and target datasets, while HFSL used the natural image dataset of Mini-ImageNet for pre-training and was fine-tuned on these augmented samples from target datasets. In addition, in order to prove that the proposed method still had good performance without pre-training on source datasets, the performance of APNT* method which is only trained on these augmented samples from target datasets is also evaluated.

| DFSL | DCFSL | CMFSL | Gia-CFSL | MRLF | RPCL | HFSL | APNT* | APNT | |

| 1 | 96.75 | 96.34 | 96.34 | 92.44 | 98.05 | 99.51 | 99.51 | 100.0 | 100.0 |

| 2 | 36.38 | 48.10 | 48.70 | 43.08 | 51.78 | 63.53 | 55.06 | 69.09 | 68.67 |

| 3 | 38.34 | 53.27 | 59.96 | 51.24 | 55.43 | 64.85 | 61.38 | 63.61 | 62.15 |

| 4 | 77.16 | 81.59 | 78.88 | 75.60 | 77.28 | 90.99 | 88.15 | 91.81 | 92.16 |

| 5 | 73.92 | 73.77 | 77.13 | 70.98 | 80.61 | 80.61 | 74.62 | 78.56 | 79.12 |

| 6 | 86.25 | 86.14 | 80.36 | 83.08 | 91.26 | 89.85 | 79.26 | 91.02 | 91.70 |

| 7 | 97.10 | 99.57 | 100.00 | 99.57 | 98.7 | 100.0 | 99.57 | 100.0 | 100.0 |

| 8 | 81.82 | 82.98 | 90.53 | 85.48 | 83.07 | 90.13 | 98.50 | 97.80 | 97.53 |

| 9 | 75.56 | 100.0 | 100.0 | 100.0 | 98.67 | 100.0 | 98.67 | 98.67 | 100.0 |

| 10 | 52.22 | 63.45 | 63.52 | 64.38 | 66.61 | 68.61 | 63.06 | 70.92 | 73.22 |

| 11 | 59.96 | 60.09 | 60.65 | 63.96 | 63.53 | 65.81 | 64.04 | 64.88 | 66.11 |

| 12 | 36.56 | 44.73 | 48.16 | 50.49 | 53.91 | 64.54 | 64.35 | 60.00 | 61.48 |

| 13 | 98.00 | 99.00 | 99.35 | 97.95 | 99.05 | 97.15 | 99.05 | 97.00 | 97.25 |

| 14 | 84.63 | 81.72 | 81.33 | 81.94 | 83.76 | 89.37 | 90.27 | 90.62 | 90.21 |

| 15 | 74.10 | 71.44 | 72.31 | 64.51 | 82.10 | 85.88 | 91.15 | 91.76 | 89.97 |

| 16 | 100.0 | 98.86 | 99.66 | 99.32 | 97.50 | 98.18 | 96.59 | 96.36 | 95.91 |

| OA | 61.69 | 65.85 | 66.85 | 65.70 | 69.46 | 74.55 | 71.74 | 75.68 | 76.06 |

| AA | 73.05 | 77.57 | 78.56 | 76.50 | 80.24 | 84.31 | 82.79 | 85.13 | 85.34 |

| Kappa | 56.78 | 61.59 | 62.67 | 61.28 | 65.69 | 71.34 | 68.18 | 72.68 | 73.10 |

| DFSL | DCFSL | CMFSL | Gia-CFSL | MRLF | RPCL | HFSL | APNT* | APNT | |

| 1 | 73.43 | 79.07 | 81.50 | 78.23 | 78.77 | 82.41 | 75.64 | 90.55 | 91.41 |

| 2 | 89.25 | 85.43 | 83.45 | 87.73 | 83.54 | 78.65 | 88.62 | 86.22 | 87.58 |

| 3 | 48.09 | 62.82 | 68.72 | 63.13 | 68.83 | 67.76 | 82.35 | 81.44 | 82.60 |

| 4 | 84.72 | 92.69 | 90.14 | 91.41 | 93.11 | 93.66 | 93.08 | 93.93 | 93.18 |

| 5 | 99.65 | 99.47 | 99.71 | 99.24 | 99.71 | 99.45 | 99.96 | 98.94 | 99.41 |

| 6 | 67.81 | 74.82 | 76.04 | 72.36 | 79.72 | 78.88 | 84.29 | 87.06 | 84.79 |

| 7 | 64.48 | 75.12 | 80.96 | 76.83 | 89.26 | 81.51 | 85.43 | 98.41 | 99.10 |

| 8 | 67.37 | 65.22 | 77.57 | 70.85 | 73.39 | 86.42 | 95.26 | 85.40 | 87.94 |

| 9 | 92.92 | 98.45 | 98.13 | 98.27 | 96.36 | 95.55 | 97.91 | 89.76 | 90.66 |

| OA | 79.63 | 81.28 | 82.01 | 82.31 | 82.41 | 81.58 | 87.14 | 88.09 | 88.83 |

| AA | 76.41 | 81.46 | 82.29 | 84.02 | 84.74 | 84.92 | 89.17 | 90.19 | 90.74 |

| Kappa | 73.05 | 75.74 | 77.05 | 76.92 | 77.40 | 76.58 | 83.27 | 84.60 | 85.55 |

| DFSL | DCFSL | CMFSL | Gia-CFSL | MRLF | RPCL | HFSL | APNT* | APNT | |

| 1 | 73.92 | 99.27 | 97.13 | 99.06 | 99.65 | 99.53 | 98.84 | 99.39 | 99.32 |

| 2 | 96.85 | 98.89 | 99.16 | 99.34 | 99.97 | 99.00 | 92.47 | 99.98 | 100.00 |

| 3 | 96.28 | 93.21 | 90.72 | 89.88 | 89.37 | 90.90 | 96.15 | 96.91 | 95.27 |

| 4 | 99.11 | 99.52 | 99.17 | 98.76 | 99.47 | 99.40 | 99.60 | 99.45 | 99.58 |

| 5 | 80.72 | 91.26 | 93.03 | 89.16 | 91.18 | 91.40 | 95.79 | 92.58 | 94.77 |

| 6 | 91.63 | 99.38 | 99.46 | 97.63 | 99.93 | 97.92 | 98.83 | 96.11 | 95.59 |

| 7 | 97.73 | 99.40 | 97.73 | 99.29 | 99.06 | 98.93 | 95.25 | 97.91 | 97.67 |

| 8 | 82.33 | 75.83 | 70.05 | 76.80 | 79.85 | 83.62 | 72.06 | 81.66 | 78.84 |

| 9 | 94.44 | 98.70 | 99.20 | 98.32 | 99.90 | 99.85 | 99.23 | 99.91 | 99.98 |

| 10 | 80.96 | 84.91 | 84.27 | 81.31 | 87.17 | 86.72 | 91.37 | 91.26 | 90.64 |

| 11 | 93.38 | 98.14 | 96.69 | 96.43 | 98.67 | 98.25 | 98.42 | 99.01 | 99.44 |

| 12 | 97.94 | 99.78 | 98.36 | 98.76 | 99.27 | 98.95 | 98.90 | 98.09 | 97.01 |

| 13 | 95.79 | 99.15 | 99.67 | 98.11 | 98.40 | 99.13 | 99.01 | 99.07 | 99.08 |

| 14 | 98.87 | 98.59 | 98.81 | 97.89 | 98.78 | 98.10 | 96.80 | 94.88 | 94.61 |

| 15 | 71.13 | 74.36 | 77.11 | 74.89 | 78.08 | 76.63 | 82.29 | 81.31 | 84.31 |

| 16 | 90.57 | 89.51 | 87.08 | 82.14 | 92.5 | 91.81 | 91.51 | 98.76 | 97.51 |

| OA | 86.95 | 89.14 | 87.99 | 88.45 | 90.79 | 91.15 | 89.43 | 91.94 | 91.65 |

| AA | 90.08 | 93.74 | 92.98 | 92.36 | 94.77 | 94.39 | 94.16 | 95.39 | 95.23 |

| Kappa | 85.51 | 87.94 | 86.67 | 87.16 | 89.69 | 90.15 | 88.28 | 91.04 | 90.72 |

Table II to Table IV report the specific classification results of each class, as well as the AA, OA, and kappa values of each method on the three target datasets. From the comparison results, it can be seen that APNT* and APNT have superior classification results compared to other methods. Among them, APNT outperforms the other results by , , and on the IP, PU, and SA datasets, respectively, reaching the optimal results on class 1, 4, 6, 7, 9, 10, and 11 of IP dataset, class 1, 3, and 7 of PU dataset, and class 2, 9, 11, and 15 of SA dataset. This result demonstrates the effectiveness of the proposed method. In the meanwhile, APNT* outperforms the other models by , , and on the IP, PU, and SA datasets respectively, reaching the optimal results on class 1, 2, 7, 14, and 15 of IP dataset, class 4 and 6 of PU dataset, and class 3 and 16 of SA dataset. Because APNT* does not use the sampes from source dataset for pre-training, this result shows that the proposed method can eliminate the dependence on existing large-scale datasets and can achieve comparative performance by only using these few-shot labeled samples in target tasks. This will facilitate the use of the proposed method in a range of applications.

The classification maps corresponding to each method are shown in Figures 8 to 10. In these maps, colored pixels are labeled, and black pixels are unlabeled and displayed as the background. By observing the results displayed in the classification maps, it can be observed that the classification maps generated by the proposed method are most similar to the real ground map. In particular, we can notice the reduction of misclassified boundary points. These results also confirm the effective improvement of the classification results by the proposed method.

III-C Evaluation on Boundary Patches

To validate the improvement of the predicting on boundary pixels, we processed the datasets and extracted all the boundary patches for testing. In the hyperspectral datasets, when all the pixels in the patches don’t belong to the same class, we define such patches as boundary patches. Generally speaking, these boundary patches are the hard samples, and it is more difficult to predict their classes.

We calculated the overall accuracy of various methods on all the boundary patches, which are shown in Fig. 11. As can be seen from the results, consistent with the assumption, APNT has the highest overall prediction performance on the boundary patches over three datasets.

III-D Ablation Experiments

While applying transformer to pay different attention to different pixels and extract better features, the proposed method also mixs up the query samples by following TransMix and use the synthetic samples to train the model. We divide our contribution into two parts: the transformer based feature extractor and the TransMix based sample mixing. To verify the contribution of each part, the ablation experiments were also conducted. By comparing the proposed method with the method with 3DCNN feature extractor which is used in the work of [31], we validate the contribution of transformer based feature extractor. At the same time, by comparing the proposed method with that of using CutMix [35] instead of TransMix [34] to mix up query samples, the contribution of TransMix based query sample mixing is also validated.

Table V shows the results of the ablation experiments, where Trans denotes the transformer. From the first and second column, it can be seen that when adopting the same mixing technique of CutMix, better performance are achieved in most cases by adopting transformer as feature extractor. This indicates that compared with the 3DCNN feature extractor, transformer model can learn more point-to-point relationships in the patches. Furthermore, when using transformer as the feature extractor but adopting TransMix instead of CutMix for query sample mixing, there are also better performance which can be seen from the second and third column of Table V. This proves that compared with CutMix which mixs up the labels of two samples just according to the proportion of synthetic areas, TransMix can generate better mixing labels by using the attention on each pixel which reflect their relative importance.

| Var | CNN+CutMix | Trans+CutMix | Trans+TransMix |

|---|---|---|---|

| Dataset | IP | ||

| OA | 75.27 +- 3.23 | 76.04 +- 2.96 | 76.06 +- 2.50 |

| AA | 85.32 +- 1.67 | 85.26 +- 1.09 | 85.34 +- 1.00 |

| Kappa | 72.23 +- 3.53 | 73.08 +- 3.19 | 73.10 +- 2.70 |

| Dataset | PU | ||

| OA | 83.63 +- 5.17 | 88.60 +- 3.52 | 88.83 +- 4.38 |

| AA | 87.83 +- 2.50 | 90.17 +- 1.67 | 90.74 +- 2.03 |

| Kappa | 79.12 +- 5.83 | 85.22 +- 4.30 | 85.55 +- 5.30 |

| Dataset | SA | ||

| OA | 90.89 +- 2.59 | 91.30 +- 2.00 | 91.65 +- 1.59 |

| AA | 95.10 +- 1.17 | 94.99 +- 1.11 | 95.23 +- 1.00 |

| Kappa | 89.88 +- 2.85 | 90.34 +- 2.21 | 90.72 +- 1.75 |

III-E Sensitive Analysis of Parameters

There are several parameters which affect the performance of the proposed method. We have done the experiments to study how sensitive the proposed method is to these parameters. In this section, we report the analysis results of two kinds of parameters, namely the number of transformer encoders and the size of the HSI patch.

III-E1 Effect of the Number of Transformer Encoders

To analyze the impact of the number of transformer encoders on classification performance, we set the number of transformer encoders in the for testing. The changes of the performance of the proposed method on three datasets are shown in Fig. 12. It can be seen that as the number of transformer encoders increases, the performance in OA, AA, and kappa do not significantly improve, and even slightly decreases. This indicates that larger number of transformer encoders does’t mean better performance. This may be because that limited by the number of training samples, the model has fully learned the classification knowledge in the samples when adopting fewer transformer encoders. It can be seen that when the number of transformer encoders in the model is 2, it achieves the best performance on PU and SA datasets, and achieves the second-best performance on IP dataset. On balance, in order to reduce the scale of model parameters, we finally set the number of transformer encoders to 2 in our experiments.

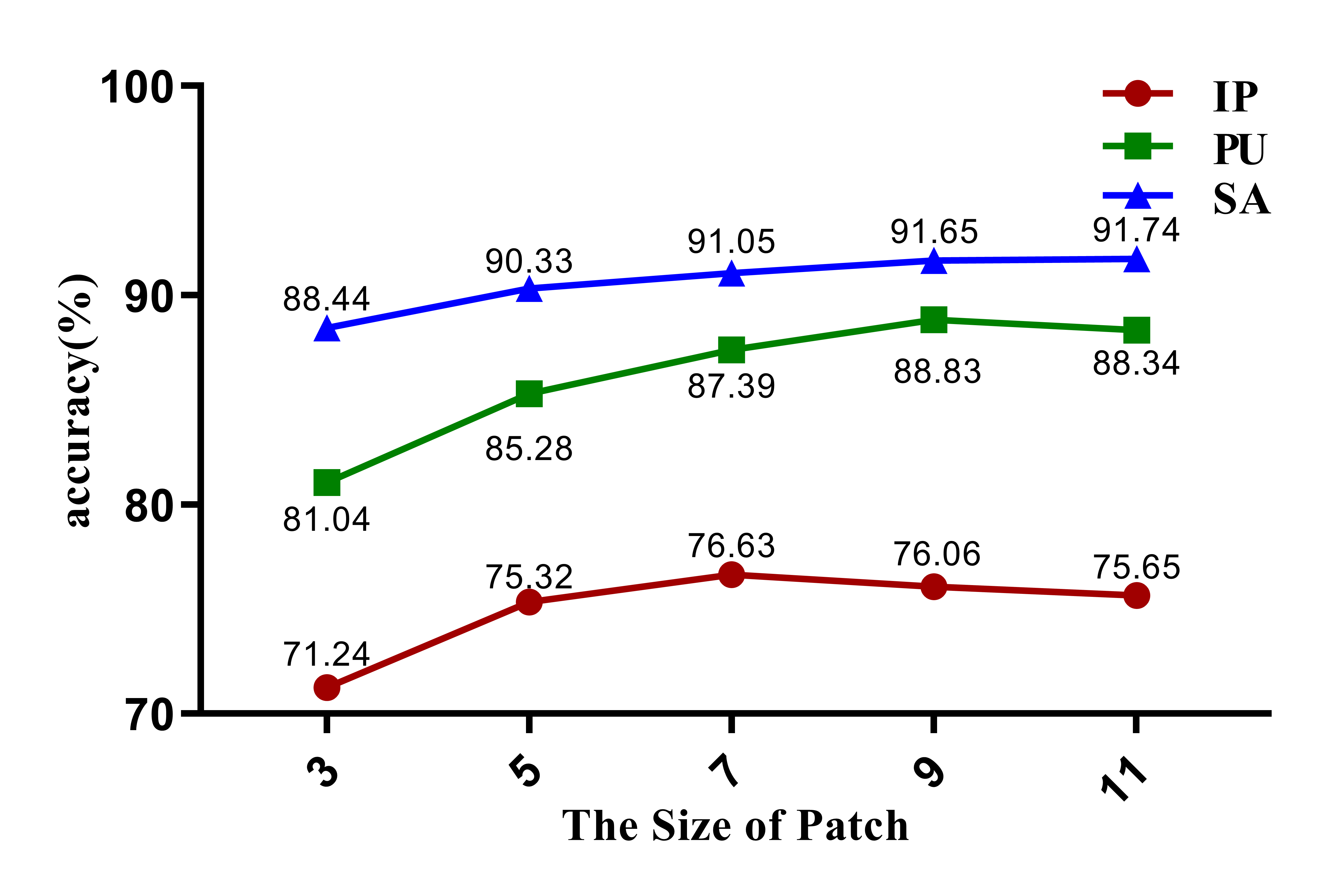

III-E2 Effect of the Patch Size

The proposed method uses transformer to learn the relationship among pixels in the patches and extract the spatial-spectral joint features from the patches. Then, increasing the patch size will introduce more spatial information. In order to illustrate the influence of patch size on classification performance, we tested the values in . The changes of the performance of the proposed method on three datasets are shown in Fig. 13. It can be seen that with the change of patch size, the best effect is achieved when the patch size is 7 on IP dataset, while it is better to set the patch size to 11 on PU and SA dataset. When the size is 9, the proposed method achieves the second-best effect on all three datasets. In order to facilitate the comparison with other models, we set the patch size to 9 in our experiments.

III-F Analysis of Parameter and Computational Time

To further illustrate the performance of the proposed method on time and space consumption, Table VI lists the size of model parameters (M) and the inference time including the training and testing time (s) of these comparsion methods. It can be seen that although our APNT method is not the lightest compared to other few-shot methods, it has a faster training speed and competitive testing time. This may be attributed to the parallel execution characteristics possessed by the Transformer model.

TIME OF DIFFERENT METHODS

| Method | CMFSL | Gia-CFSL | MRLF | RPCL | HFSL | APNT* | APNT |

|---|---|---|---|---|---|---|---|

| Dataset | IP | ||||||

| Train(s) | 1444.91 | 3697.22 | 2652.73 | 587.21 | 5148.49 | 213.56 | 459.97 |

| Test(s) | 1.35 | 1.18 | 1.43 | 1.79 | 4.69 | 0.91 | 1.30 |

| Params(M) | 2.49 | 3.49 | 6.07 | 0.34 | 12.95 | 3.36 | 3.36 |

| Dataset | PU | ||||||

| Train(s) | 826.91 | 2232.29 | 1843.12 | 278.42 | 2291.59 | 122.12 | 300.55 |

| Test(s) | 4.49 | 4.47 | 5.50 | 4.22 | 11.94 | 2.97 | 4.61 |

| Params(M) | 2.49 | 3.44 | 6.04 | 0.30 | 12.37 | 3.32 | 3.32 |

| Dataset | SA | ||||||

| Train(s) | 1185.34 | 5701.69 | 2796.91 | 510.10 | 6492.53 | 256.97 | 509.99 |

| Test(s) | 4.99 | 9.95 | 11.80 | 6.46 | 25.68 | 5.10 | 7.08 |

| Params(M) | 2.49 | 3.49 | 6.08 | 0.34 | 12.97 | 3.35 | 3.35 |

IV Conclusion

In this paper, we augment the prototype network with TransMix for few-shot HSI classification. Focusing on the practical problem of low predicting accuracy at boundary pixels, the proposed method uses the transformer as the feature extractor of prototype network, in order to pay different attention to different pixels in the patches adopted for obtaining spatial-spectral joint features. In the meanwhile, it randomly mixs up two patches to imitate the boundary patches which are mixed with multi-class spectral information, and uses these synthetic patches to train the model. It is expected to enlarge the number of hard training samples and enhance the diversity of training samples. And to mix up the labels of two patches, the attention captured by transformer are used to generate better labels for the synthetic patches. The proposed method requires less training time and achieves better results on these datasets widely used for HSI classification experiments. Particularly, in comparative experiments, we found that it can also achieve good results without using auxiliary datasets for pre-training. This shows that the proposed method can remove the dependence on the auxiliary datasets, and can be easily appied in practice.

References

- [1] I. B. Strachan, E. Pattey, and J. B. Boisvert, “Impact of nitrogen and environmental conditions on corn as detected by hyperspectral reflectance,” Remote Sensing of environment, vol. 80, no. 2, pp. 213–224, 2002.

- [2] S. Chabrillat, R. Milewski, T. Schmid, M. Rodriguez, P. Escribano, M. Pelayo, and A. Palacios-Orueta, “Potential of hyperspectral imagery for the spatial assessment of soil erosion stages in agricultural semi-arid spain at different scales,” in 2014 IEEE Geoscience and Remote Sensing Symposium. IEEE, 2014, pp. 2918–2921.

- [3] P. Kuflik and S. R. Rotman, “Band selection for gas detection in hyperspectral images,” in 2012 IEEE 27th Convention of Electrical and Electronics Engineers in Israel. IEEE, 2012, pp. 1–4.

- [4] F. Melgani and L. Bruzzone, “Classification of hyperspectral remote sensing images with support vector machines,” IEEE Transactions on geoscience and remote sensing, vol. 42, no. 8, pp. 1778–1790, 2004.

- [5] J. Li, J. M. Bioucas-Dias, and A. Plaza, “Semisupervised hyperspectral image classification using soft sparse multinomial logistic regression,” IEEE Geoscience and Remote Sensing Letters, vol. 10, no. 2, pp. 318–322, 2012.

- [6] N. Falco, J. A. Benediktsson, and L. Bruzzone, “Spectral and spatial classification of hyperspectral images based on ica and reduced morphological attribute profiles,” IEEE Transactions on Geoscience and Remote Sensing, vol. 53, no. 11, pp. 6223–6240, 2015.

- [7] Y. Qian, M. Ye, and J. Zhou, “Hyperspectral image classification based on structured sparse logistic regression and three-dimensional wavelet texture features,” IEEE Transactions on Geoscience and Remote Sensing, vol. 51, no. 4, pp. 2276–2291, 2012.

- [8] S. Li, W. Song, L. Fang, Y. Chen, P. Ghamisi, and J. A. Benediktsson, “Deep learning for hyperspectral image classification: An overview,” IEEE Transactions on Geoscience and Remote Sensing, vol. 57, no. 9, pp. 6690–6709, 2019.

- [9] M. Paoletti, J. Haut, J. Plaza, and A. Plaza, “Deep learning classifiers for hyperspectral imaging: A review,” ISPRS Journal of Photogrammetry and Remote Sensing, vol. 158, pp. 279–317, 2019.

- [10] S. Yu, S. Jia, and C. Xu, “Convolutional neural networks for hyperspectral image classification,” Neurocomputing, vol. 219, pp. 88–98, 2017.

- [11] H. Zhang, Y. Li, Y. Zhang, and Q. Shen, “Spectral-spatial classification of hyperspectral imagery using a dual-channel convolutional neural network,” Remote sensing letters, vol. 8, no. 5, pp. 438–447, 2017.

- [12] F. Zhou, R. Hang, Q. Liu, and X. Yuan, “Hyperspectral image classification using spectral-spatial lstms,” Neurocomputing, vol. 328, pp. 39–47, 2019.

- [13] D. Hong, L. Gao, J. Yao, B. Zhang, A. Plaza, and J. Chanussot, “Graph convolutional networks for hyperspectral image classification,” IEEE Transactions on Geoscience and Remote Sensing, vol. 59, no. 7, pp. 5966–5978, 2020.

- [14] B. Fang, Y. Li, H. Zhang, and J. C.-W. Chan, “Collaborative learning of lightweight convolutional neural network and deep clustering for hyperspectral image semi-supervised classification with limited training samples,” ISPRS Journal of Photogrammetry and Remote Sensing, vol. 161, pp. 164–178, 2020.

- [15] C. Shi and C.-M. Pun, “Multi-scale hierarchical recurrent neural networks for hyperspectral image classification,” Neurocomputing, vol. 294, pp. 82–93, 2018.

- [16] J. Snell, K. Swersky, and R. Zemel, “Prototypical networks for few-shot learning,” Advances in neural information processing systems, vol. 30, 2017.

- [17] B. Zhang, X. Li, Y. Ye, Z. Huang, and L. Zhang, “Prototype completion with primitive knowledge for few-shot learning,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 3754–3762.

- [18] K. J. Liang, S. B. Rangrej, V. Petrovic, and T. Hassner, “Few-shot learning with noisy labels,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 9089–9098.

- [19] Y. Liu, W. Zhang, C. Xiang, T. Zheng, D. Cai, and X. He, “Learning to affiliate: Mutual centralized learning for few-shot classification,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 14 411–14 420.

- [20] C. Doersch, A. Gupta, and A. Zisserman, “Crosstransformers: spatially-aware few-shot transfer,” Advances in Neural Information Processing Systems, vol. 33, pp. 21 981–21 993, 2020.

- [21] A. Li, W. Huang, X. Lan, J. Feng, Z. Li, and L. Wang, “Boosting few-shot learning with adaptive margin loss,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2020, pp. 12 576–12 584.

- [22] B. Liu, X. Yu, A. Yu, P. Zhang, G. Wan, and R. Wang, “Deep few-shot learning for hyperspectral image classification,” IEEE Transactions on Geoscience and Remote Sensing, vol. 57, no. 4, pp. 2290–2304, 2018.

- [23] H. Tang, Y. Li, X. Han, Q. Huang, and W. Xie, “A spatial–spectral prototypical network for hyperspectral remote sensing image,” IEEE Geoscience and Remote Sensing Letters, vol. 17, no. 1, pp. 167–171, 2019.

- [24] J. Sun, X. Shen, and Q. Sun, “Hyperspectral image few-shot classification network based on the earth mover’s distance,” IEEE Transactions on Geoscience and Remote Sensing, vol. 60, pp. 1–14, 2022.

- [25] X. Ma, S. Ji, J. Wang, J. Geng, and H. Wang, “Hyperspectral image classification based on two-phase relation learning network,” IEEE Transactions on Geoscience and Remote Sensing, vol. 57, no. 12, pp. 10 398–10 409, 2019.

- [26] F. Sung, Y. Yang, L. Zhang, T. Xiang, P. H. Torr, and T. M. Hospedales, “Learning to compare: Relation network for few-shot learning,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 1199–1208.

- [27] Z. Li, M. Liu, Y. Chen, Y. Xu, W. Li, and Q. Du, “Deep cross-domain few-shot learning for hyperspectral image classification,” IEEE Transactions on Geoscience and Remote Sensing, vol. 60, pp. 1–18, 2022.

- [28] J. Bai, S. Huang, Z. Xiao, X. Li, Y. Zhu, A. C. Regan, and L. Jiao, “Few-shot hyperspectral image classification based on adaptive subspaces and feature transformation,” IEEE Transactions on Geoscience and Remote Sensing, vol. 60, pp. 1–17, 2022.

- [29] B. Xi, J. Li, Y. Li, R. Song, D. Hong, and J. Chanussot, “Few-shot learning with class-covariance metric for hyperspectral image classification,” IEEE Transactions on Image Processing, vol. 31, pp. 5079–5092, 2022.

- [30] Y. Zhang, W. Li, M. Zhang, S. Wang, R. Tao, and Q. Du, “Graph information aggregation cross-domain few-shot learning for hyperspectral image classification,” IEEE Transactions on Neural Networks and Learning Systems, 2022.

- [31] Q. Liu, J. Peng, Y. Ning, N. Chen, W. Sun, Q. Du, and Y. Zhou, “Refined prototypical contrastive learning for few-shot hyperspectral image classification,” IEEE Transactions on Geoscience and Remote Sensing, vol. 61, pp. 1–14, 2023.

- [32] C. Liu, L. Yang, Z. Li, W. Yang, Z. Han, J. Guo, and J. Yu, “Multi-view relation learning for cross-domain few-shot hyperspectral image classification,” arXiv preprint arXiv:2311.01212, 2023.

- [33] Y. Wang, M. Liu, Y. Yang, Z. Li, Q. Du, Y. Chen, F. Li, and H. Yang, “Heterogeneous few-shot learning for hyperspectral image classification,” IEEE Geoscience and Remote Sensing Letters, vol. 19, pp. 1–5, 2021.

- [34] J.-N. Chen, S. Sun, J. He, P. H. Torr, A. Yuille, and S. Bai, “Transmix: Attend to mix for vision transformers,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 12 135–12 144.

- [35] S. Yun, D. Han, S. J. Oh, S. Chun, J. Choe, and Y. Yoo, “Cutmix: Regularization strategy to train strong classifiers with localizable features,” in Proceedings of the IEEE/CVF international conference on computer vision, 2019, pp. 6023–6032.