Received May 18, 2021, accepted Jun 21, 2021, date of publication xxxx 00, 0000, date of current version xxxx 00, 0000. 10.1109/ACCESS.2021.3093456

This work was supported by JSPS KAKENHI Grant Number 21J14143.

Corresponding author: Shunsuke Kitada (e-mail: [email protected]).

Attention Meets Perturbations:

Robust and Interpretable Attention

with Adversarial Training

Abstract

Although attention mechanisms have been applied to a variety of deep learning models and have been shown to improve the prediction performance, it has been reported to be vulnerable to perturbations to the mechanism. To overcome the vulnerability to perturbations in the mechanism, we are inspired by adversarial training (AT), which is a powerful regularization technique for enhancing the robustness of the models. In this paper, we propose a general training technique for natural language processing tasks, including AT for attention (Attention AT) and more interpretable AT for attention (Attention iAT). The proposed techniques improved the prediction performance and the model interpretability by exploiting the mechanisms with AT. In particular, Attention iAT boosts those advantages by introducing adversarial perturbation, which enhances the difference in the attention of the sentences. Evaluation experiments with ten open datasets revealed that AT for attention mechanisms, especially Attention iAT, demonstrated (1) the best performance in nine out of ten tasks and (2) more interpretable attention (i.e., the resulting attention correlated more strongly with gradient-based word importance) for all tasks. Additionally, the proposed techniques are (3) much less dependent on perturbation size in AT.

Index Terms:

natural language processing, attention mechanism, adversarial training, interpretability, binary classification, question answering, natural language inference=-15pt

I Introduction

Attention mechanisms [1] are widely applied in natural language processing (NLP) field through deep neural networks (DNNs). As the effectiveness of attention mechanisms became apparent in various tasks [2, 3, 4, 5, 6, 7], they were applied not only to recurrent neural networks (RNNs) but also to convolutional neural networks (CNNs). Moreover, Transformers [8] which make proactive use of attention mechanisms have also achieved excellent results. However, it has been pointed out that DNN models tend to be locally unstable, and even tiny perturbations to the original inputs [9] or attention mechanisms can mislead the models [10]. Specifically, Jain and Wallace [10] used a practical bi-directional RNN (BiRNN) model to investigate the effect of attention mechanisms and reported that learned attention weights based on the model are vulnerable to perturbations.111In Jain and Wallace [10], the vulnerability of attention mechanisms to perturbations is confirmed with an RNN-based model [10]. In this paper, we focus on the model, and Transformer [8]-based model such as BERT [11] and their successor models [12, 13] will be future work.

The Transformer [8] and its follow-up models [12, 13] have self-attention mechanisms that estimate the relationship of each word in the sentence. These models take advantage of the effect of the mechanisms and have shown promising performances. Thus, there is no doubt that the effect of the mechanisms is extremely large. However, they are not easy to train, as they require huge amounts of GPU memory to maintain the weights of the model. Recently, there have been proposals to reduce memory consumption [14], and we acknowledge the advantages of the models. On the other hand, the application of attention mechanisms to DNN models, such as RNN and CNN models, which have been widely used and do not require relatively high training requirements, has not been sufficiently studied.

In this paper, we focus on improving the robustness of commonly used BiRNN models (as described detail in Section III) to perturbations in the attention mechanisms. Furthermore, we demonstrate that the result of overcoming the vulnerability of the attention mechanisms is an improvement in the prediction performance and model interpretability.

To tackle the models’ vulnerability to perturbation, Goodfellow et al. [15] proposed adversarial training (AT) that increases robustness by adding adversarial perturbations to the input and the training technique forcing the model to address its difficulties. Previous studies [15, 16] in the image recognition field have theoretically explained the regularization effect of AT and shown that it improves the robustness of the model for unseen images.

AT is also widely used in the NLP field as a powerful regularization technique [18, 19, 20, 21]. In pioneering work, Miyato et al. [18] proposed a simple yet effective technique to improving the text classification performance by applying AT to a word embedding space. Later, interpretable AT (iAT) was proposed to increase the interpretability of the model by restricting the direction of the perturbations to existing words in the word embedding space [19]. The attention weight of each word is considered an indicator of the importance of each word [22], and thus, in terms of interpretability, we assume that the weight is considered a higher-order feature than the word embedding. Therefore, AT for attention mechanisms that adds an adversarial perturbation to deceive the attention mechanisms is expected to be more effective than AT for word embedding.

From motivations above, we propose a new general training technique for attention mechanisms based on AT, called adversarial training for attention (Attention AT) and more interpretable adversarial training for attention (Attention iAT). The proposed techniques are the first attempt to employ AT for attention mechanisms. The proposed Attention AT/iAT is expected to improve the robustness and the interpretability of the model by appropriately overcoming the adversarial perturbations to attention mechanisms [23, 24, 25]. Because our proposed AT techniques for attention mechanisms is model-independent and a general technique, it can be applied to various DNN models (e.g., RNN and CNN) with attention mechanisms. Our technique can also be applied to any similarity functions for attention mechanisms, e.g, additive function [1] and scaled dot-product function [8], which is famous for calculating the similarity in attention mechanisms.

To demonstrate the effects of these techniques, we evaluated them compared to several other state-of-the-art AT-based techniques [18, 19] with ten common datasets for different NLP tasks. These datasets included binary classification (BC), question answering (QA), and natural language inference (NLI). We also evaluated how the attention weights obtained through the proposed AT technique agreed with the word importance calculated by the gradients [26]. Evaluating the proposed techniques, we obtained the following findings concerning AT for attention mechanisms in NLP:

-

•

AT for attention mechanisms improves the prediction performance of various NLP tasks.

-

•

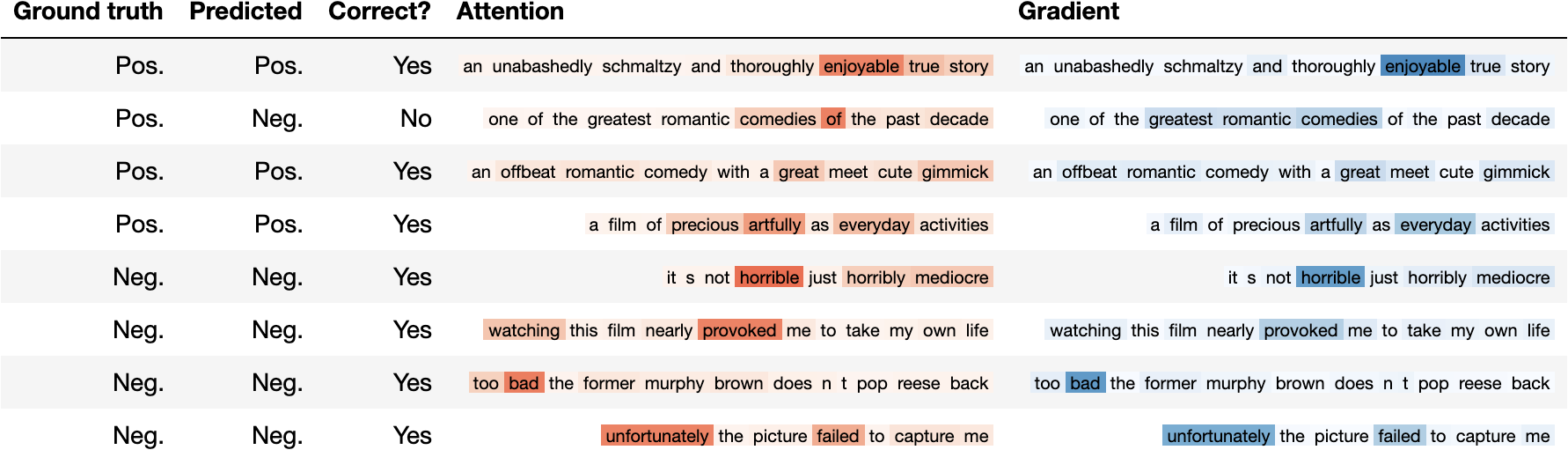

AT for attention mechanisms helps the model learn cleaner attention (as shown in Figure 1) and demonstrates a stronger correlation with the word importance calculated from the model gradients.

-

•

The proposed training techniques are much less independent concerning perturbation size in AT.

Especially, our Attention iAT demonstrated the best performance in nine out of ten tasks and more interpretable attention, i.e., resulting attention weight correlated more strongly with the gradient-based word importance [26]. The implementation required to reproduce these techniques and the evaluation experiments are available on GitHub.222https://github.com/shunk031/attention-meets-perturbation

II Related Work

II-A Attention Mechanisms

Attention mechanisms were introduced by Bahdanau et al. [1] for the task of machine translation. Today, these mechanisms contribute to improving the prediction performance of various tasks in the NLP field, such as sentence-level classification [2], sentiment analysis [3], question answering [4], and natural language inference [5]. There are a wide variety of attention mechanisms; for instance, additive [1] and scaled dot-product [8] functions are used as similarity functions.

Attention weights are often claimed to offer insights into the inner workings of DNNs [22]. However, Jain and Wallace [10] reported that learned attention weights are often uncorrelated with the word importance calculated through the gradient-based method [26], and perturbations interfere with interpretation. In this paper, we demonstrate that AT for attention mechanisms can mitigate these issues.

II-B Adversarial Training

AT [9, 15, 27] is a powerful regularization technique that has been primarily explored in the field of image recognition to improve the robustness of models for input perturbations. In the NLP field, AT has been applied to various tasks by extending the concept of adversarial perturbations, e.g., text classification [18, 19], part-of-speech tagging [20], and machine reading comprehension [21, 28]. As mentioned earlier, these techniques apply AT for word embedding. Other AT-based techniques for the NLP tasks include those related to parameter updating [29] and generative adversarial network (GAN)-based retrieval-enhancement method [30]. Our proposal is an adversarial training technique for attention mechanisms and is different from these methods.

Miyato et al. [18] proposed Word AT, a technique that applied AT to the word embeddings. The adversarial perturbations are generated according to the back-propagation gradients. These perturbations are expected to regularize the model. Since then, Sato et al. [19] proposed Word iAT, and it has been known to achieve almost the same performance as Word AT that does not expect interpretability [19]. The Word iAT technique aims to increase the model’s interpretability by determining the perturbation’s direction so that it is closer to other word embeddings in the vocabulary. Both reports demonstrated improved task performance via AT. However, the specific effect of AT on attention mechanisms has yet to be investigated. In this paper, we aim to address this issue by providing analyses of the effects of AT for attention mechanisms using various NLP tasks.

AT is considered to be related to other regularization techniques (e.g., dropout [31], batch normalization [32]). Specifically, dropout can be considered a kind of noise addition. Word dropout [33] and character dropout [34], known as wildcard training, are variants for NLP tasks. These techniques can be considered random noise for the target task. In contrast, AT has been demonstrated to be effective because it creates particularly vulnerable perturbations that the model is trained to overcome [15].

It has been reported that DNN models that introduce adversarial training to overcome adversarial perturbations capture human-like features [23, 24, 25]. These features help to make the prediction of DNN models easier to interpret for humans. In this paper, we demonstrate that the proposed AT to attention mechanisms provides cleaner attention that is more easily interpreted by humans.

III Common Model Architecture

Our goal is to improve the performance of NLP models (i.e., predictability and interpretability) by aiming at the robustness of the attention mechanisms. To demonstrate the effectiveness of exploiting AT to attention for words, we adopted the BiRNN-based model used by Jain and Wallace [10] as our common model architecture and set their performance as our performance baseline, as described in Section I. This is because they performed extensive experiments across a variety of public NLP tasks to investigate the effect of attention mechanisms, and their model has demonstrated desirable prediction performance. However, the attention mechanism in the model has been reported to be vulnerable to perturbations.

Based on the model of Jain and Wallace [10], we investigated three common NLP tasks, BC, QA, and NLI. Because Jain and Wallace [10] considered the same tasks. A BC task is a single sequence task that takes one input text, while QA and NLI tasks are pair sequence tasks that take two input sequences. Then, we defined two base models, a single sequence model and pair sequence model, for those tasks, as shown in Figure 2.

III-A Model with Attention Mechanisms for Single Sequence Task

For a single sequence task, such as the BC task, the input is a word sequence of one-hot encoding , where and are the number of words in the sentence and vocabulary size. We introduce the following short notation for the sequence as . Let be a -dimensional word embedding that corresponds to . We represent each word with the word embeddings to obtain . Next, we use the BiRNN encoder to obtain -dimensional hidden states :

| (1) |

where is the initial hidden state and is regarded as a zero vector. Next, we use the additive formulation of attention mechanisms proposed by Bahdanau et al. [1] to compute the attention score for the -th word , defined as:

| (2) |

where and are the parameters of the model. Then, from the attention scores , the attention weights for all words are computed as

| (3) |

The weighted instance representation is calculated as

| (4) |

Finally, is fed to a dense layer Dec, and the output activation function is then used to obtain the predictions:

| (5) |

where is a sigmoid function, and is the label set size.

III-B Model with Attention Mechanisms for Pair Sequence Task

For a pair sequence task, such as the QA and NLI tasks, the input is and . and are the number of words in each sentence. and represent the paragraph and question in the QA and the hypothesis and premise in the NLI. We used two separate BiRNN encoders to obtain the hidden states and :

| (6) |

where and are the initial hidden states and are regarded as zero vectors. Next, we computed the attention weight of each word of as:

| (7) |

where and denote the projection matrices, and are the parameter vectors. Similar to Eq. 3, the attention weight can be calculated from . The presentation is obtained from a sum of words in .

| (8) |

is fed to a Dec, and then a softmax function is used as to obtain the prediction (in the same manner as in Eq. 5).

III-C Training Model with Attention Mechanisms

Let be an input sequence with attention score , where is a concatenated attention score for all . We model the conditional probability of the class as , where represents all model parameters. For training the model, we minimize the following negative log likelihood as a loss function with respect to the model parameters:

| (9) |

IV Adversarial Training for Attention Mechanisms

The main contribution of this paper is to explore the idea of employing AT for attention mechanisms. In this paper, we propose a new training technique for attention mechanisms based on AT, called Attention AT and Attention iAT. The proposed techniques aim to achieve better regularization effects and to provide better interpretation of attention in the sentence. These techniques are the first application of AT to the attention in each word, which is expected to be more interpretable, with reference to AT for word embeddings [18] and a technique more focused on interpretability [19]. In this paper, we generate adversarial perturbations based on the model described in Section III.

IV-A Attention AT: Adversarial Training for Attention

We describe the proposed Attention AT, which features adversarial perturbations in the attention mechanisms rather than in the word embeddings [18, 19]. The adversarial perturbation on the mechanisms is defined as the worst-case perturbation on attention mechanisms of a small bounded norm that maximizes loss function of the current model:

| (10) |

where is the input sequence with attention score , its perturbation , is the target output, and represents the current model parameters. We apply the fast gradient method [11, 18], i.e., first-order approximation to obtain an approximate worst-case perturbation of norm , through a single gradient computation as follows:

| (11) |

is a hyper-parameter to be determined using the validation dataset. We find this against the current model parameterized by at each training step and construct an adversarial perturbation for attention score :

| (12) |

IV-B Attention iAT: Interpretable Adversarial Training for Attention

We describe the proposed Attention iAT for further boosting the prediction performance and the interpretability of NLP tasks. Rather than utilizing AT to attention mechanisms (as described in Section IV-A), Attention iAT effectively exploits differences in the attention to each word in a sentence for the training. As a result, this technique provides cleaner attention in the sentence and improves the interpretability of the attention. These effects contribute to improving the performance of various NLP tasks.

| Task | Dataset | # class | # train | # valid | # test | # vocab | Avg. # words | ||

| Binary Classification (BC) | SST | [17] | 2 | 6,920 | 872 | 1,821 | 13,723 | 18 | |

| IMDB | [35] | 2 | 17,186 | 4,294 | 4,353 | 12,485 | 171 | ||

| 20News | [36] | 2 | 1,145 | 278 | 357 | 5,986 | 110 | ||

| AGNews | [37] | 2 | 51,000 | 9,000 | 3,800 | 13,713 | 35 | ||

| Question Answering (QA) | CNN news | [38] | 584 | 380,298 | 3,924 | 3,198 | 70,192 | 773 | |

| bAbI | Task 1 | 6 | 8,500 | 1,500 | 1,000 | 22 | 39 | ||

| Task 2 | [39] | 6 | 8,500 | 1,500 | 1,000 | 36 | 98 | ||

| Task 3 | 6 | 8,500 | 1,500 | 1,000 | 37 | 313 | |||

| Natural Language Inference (NLI) | SNLI | [40] | 3 | 549,367 | 9,842 | 9,824 | 20,979 | 22 | |

| Multi NLI | [41] | 3 | 314,161 | 78,541 | 19,647 | 53,112 | 34 | ||

In terms of formulation, the proposed Attention iAT is analogous to interpretable AT for word embeddings (Word iAT) [19], which increases the interpretability of AT for word embeddings in formulas. However, the implications and effects for training the model are very different; in the proposed Attention iAT, the attention difference enhancement, described later, enhances the difference in attention for each word. The difference and its effect will be explained later in this section and discussed in Section VII-C.

Suppose denotes the attention score corresponding to the -th position in the sentence. We define the difference vector as the difference between the attention to the -th word in a sentence and the attention to any -th word :

| (13) |

in single sequence task, and in a pair sequence task. By normalizing the norm of the vector, we define a normalized difference vector of the attention for the -th word:

| (14) |

The number of dimensions in is the number of the vocabulary (fixed length) for Word iAT, while the dimension of words in a sentence (variable length) for Attention iAT. The dimensionality of in Attention iAT is much smaller compared to Word iAT.333This normalization is done on a sentence-by-sentence basis, so it does not matter that the dimension is varies (). We define perturbation for attention to the -th word with trainable parameters and the normalized difference vector of the attention as follows:

| (15) |

By combining for all , we can calculate perturbation for the sentence:

| (16) |

Then, similar to in Eq. 10, we introduce and seek the worst-case weights of the difference vectors that maximize the loss functions as follows:

| (17) |

Contrary to Attention iAT, in Word iAT, the difference in Eq. 13 is defined as the distance between the -th word in a sentence and the -th word in the vocabulary in the word embedding space. Based on the distance, Word iAT determines the direction of perturbation for the -th word as a linear sum of the word directional vectors in the vocabulary. In contrast, Attention iAT does not compute the distance to word embeddings in the vocabulary. Instead, this technique computes the difference in attention to other words in the sentence and determines the direction of the perturbation. The adversarial perturbation of Attention iAT, defined in this way, works to increase the difference in attention to each word. We call this process in Attention iAT as attention difference enhancement. Owing to the process, Attention iAT improves the interpretability of attention and contributes to the performance of the model’s prediction. The detail discussions are shared in Section VII-C.

IV-C Training a Model with Adversarial Training

At each training step, we generate adversarial perturbation in the current model. To this end, we define the loss function for adversarial training as follows:

| (20) |

where is the coefficient that controls the balance between two loss functions. Note that can be for Attention AT or for Attention iAT.

V Experiments

In this section, we describe the evaluation tasks and datasets, the details of the models, and the evaluation criteria.

V-A Tasks and Datasets

We evaluated the proposed techniques using the open benchmark tasks (i.e., four BCs, four QAs, and two NLIs) used in Jain and Wallace [10]. In our experiment, we added MultiNLI [41] as an additional NLI task for more detailed analysis (see the details in Appendix A). Table I presents the statistics for all datasets. We split the dataset into a training set, a validation set, and a test set.444Jain and Wallace [10] split the dataset into only a training set and a test set, so we did not get the same results. We performed preprocessing, including tokenization with spaCy555spaCy · Industrial-strength Natural Language Processing in Python https://spacy.io/, mapping out vocabulary words to a special <unk> token, and mapping all words with numeric characters to qqq in the same manner as Jain and Wallace [10].

V-B Model Settings

We compared the two proposed training techniques to four conventional training techniques. They were implemented using the same model architecture as described in Section III. Following Jain and Wallace [10], we used bi-directional long short-term memory (LSTM) [42] as the BiRNN-based encoder, including Enc, , and . A total of six training techniques were evaluated in the experiments:

-

•

Vanilla [10]: a model with attention mechanisms trained without the use of AT.

-

•

Word AT [18]: word embeddings trained with AT.

-

•

Word iAT [19]: word embeddings trained with iAT.

-

•

Attention RP: attention to the word embeddings is trained with random perturbation (RP).

-

•

Attention AT (Proposed): attention to the word embeddings is trained with AT.

-

•

Attention iAT (Proposed): attention to the word embeddings is trained with iAT.

We implemented the training techniques above using the AllenNLP library with Interpret [43, 44]. Through the experiments, we set the hyper-parameter related to AT or iAT in Eq. 20. To ensure a fair comparison of the training techniques, we followed the configurations (e.g., initialization of word embedding, hidden size of the encoder, optimizer settings) used in the literature [10] (see the details in Appendix B).

V-C Evaluation Criteria

First, we compared the prediction performance of each model for each task. As an evaluation metric of the prediction performance, we used the F1 score666The F1 score is a metric that harmonizes precision and recall.. Therefore, this score takes both false positives and false negatives into account., accuracy, and the micro-F1 score for the BC, QA, and NLI, respectively, as in [10].

Next, we compared how the attention weights obtained through the proposed AT-based technique agreed with the importance of words calculated by the gradients [26]. To evaluate the agreement, we compared the Pearson’s correlations between the attention weights and the word importance of the gradient-based method. In [10], the Kendall tau, which represents rank correlation, was used to evaluate the relationship between attention and the word importance obtained by the gradients. Recently, however, it has been pointed out that rank correlations often misrepresent the relationship between the two due to the noise in the order of the low rankings [46]; we concurred with this, so we used Pearson’s correlations.

VI Results

| Model | SST | IMDB | 20News | AGNews | ||||

|---|---|---|---|---|---|---|---|---|

| F1 [%] | Corr. | F1 [%] | Corr. | F1 [%] | Corr. | F1 [%] | Corr. | |

| Vanilla [10] | 79.27 | 0.652 | 88.77 | 0.788 | 95.05 | 0.891 | 95.27 | 0.822 |

| Word AT [18] | 79.61 | 0.647 | 89.65 | 0.838 | 95.11 | 0.892 | 95.59 | 0.813 |

| Word iAT [19] | 79.57 | 0.643 | 89.64 | 0.839 | 95.14 | 0.893 | 95.62 | 0.809 |

| Attention RP | 81.90 | 0.531 | 89.79 | 0.628 | 96.09 | 0.883 | 96.08 | 0.792 |

| Attention AT (Proposed) | 81.72 | 0.852 | 90.00 | 0.819 | 96.69 | 0.868 | 96.12 | 0.835 |

| Attention iAT (Proposed) | 82.20 | 0.876 | 90.21 | 0.861 | 96.58 | 0.897 | 96.19 | 0.891 |

| Model | CNN news | bAbI | ||||||

|---|---|---|---|---|---|---|---|---|

| Acc. [%] | Corr. | Task 1 | Task 2 | Task 3 | ||||

| Acc. [%] | Corr. | Acc. [%] | Corr. | Acc. [%] | Corr. | |||

| Vanilla [10] | 64.95 | 0.765 | 99.90 | 0.714 | 45.10 | 0.459 | 52.00 | 0.387 |

| Word AT [18] | 65.67 | 0.779 | 100.00 | 0.797 | 79.50 | 0.657 | 55.10 | 0.439 |

| Word iAT [19] | 65.66 | 0.776 | 99.90 | 0.798 | 79.80 | 0.658 | 54.90 | 0.437 |

| Attention RP | 65.78 | 0.614 | 100.00 | 0.592 | 80.60 | 0.584 | 55.35 | 0.373 |

| Attention AT (Proposed) | 65.93 | 0.771 | 100.00 | 0.807 | 82.30 | 0.632 | 56.00 | 0.514 |

| Attention iAT (Proposed) | 66.17 | 0.784 | 100.00 | 0.821 | 85.40 | 0.710 | 57.10 | 0.589 |

| Model | SNLI | Multi NLI | ||||

| Micro-F1 [%] | Corr. | Micro-F1 [%] | Corr. | |||

| Avg. | Matched | Mismatched | ||||

| Vanilla [10] | 78.64 | 0.764 | 60.26 | 59.80 | 60.71 | 0.541 |

| Word AT [18] | 79.03 | 0.812 | 60.72 | 60.58 | 60.86 | 0.601 |

| Word iAT [19] | 79.12 | 0.815 | 60.73 | 60.59 | 60.87 | 0.603 |

| Attention RP | 79.23 | 0.569 | 60.97 | 61.02 | 60.91 | 0.547 |

| Attention AT (Proposed) | 79.19 | 0.792 | 61.17 | 61.20 | 61.13 | 0.626 |

| Attention iAT (Proposed) | 79.32 | 0.818 | 61.34 | 61.75 | 60.93 | 0.668 |

In this section, we share the results of the experiments. Table II presents the prediction performance and the Pearson’s correlations between the attention weight for the words and word importance calculated from the model gradient. The most significant results are shown in bold.

VI-A Comparison of Prediction Performance

In terms of prediction performance, the model that applied the proposed Attention AT/iAT demonstrated a clear advantage over the model without AT (as shown in Vanilla [10]) as well as other AT-based techniques (Word AT [18] and Word iAT [19]). The proposed technique achieved the best results in almost all benchmarks. For 20News and AGNews in the BC and bAbI task 1 in QA, the conventional techniques, including the Vanilla model, were sufficiently accurate (the score was higher than 95%), so the performance improvement of the proposed techniques to the tasks was limited to some extent. Meanwhile, Attention AT/iAT contributed to solid performance improvements in other complicated tasks.

VI-B Comparison of Correlation between Attention Weights and Gradients on Word Importance

In terms of model interpretability, the attention to the words obtained with the Attention AT/iAT techniques notably correlated with the importance of the word as determined by the gradients. Attention iAT demonstrated the highest correlation among the techniques in all benchmarks. Figure 3 visualizes the attention weight for each word and gradient-based word importance in the SST test dataset. Attention AT yielded clearer attention compared to the Vanilla model or Attention iAT. Specifically, Attention AT tended to strongly focus attention on a few words. Regarding the correlation of word importance based on attention weights and gradient-based word importance, Attention iAT demonstrated higher similarities than the other models.

VI-C Effects of Perturbation Size

Figure 4 shows the effect of the perturbation size on the validation performance of SST (BC), CNN news (QA), and Multi NLI (NLI) with a fixed . We observed that the performances of the conventional Word AT/iAT techniques deteriorated according to the increase in the perturbation size; meanwhile, our Attention AT/iAT techniques maintained almost the same prediction performance. We observed similar trends in other datasets as described in Section V-A.

VII Discussion

VII-A Comparison of Adversarial Training for Attention Mechanisms and Word Embedding

Attention AT/iAT is based on our hypothesis that attention is more important in finding significant words in document processing than the word embeddings themselves. Therefore, we sought to achieve prediction performance and model interpretability by introducing AT to the attention mechanisms. We confirmed that the application of AT to the attention mechanisms (Attention AT/iAT) was more effective than word embedding (Word AT/iAT) and supports the correctness of our hypothesis, as shown in Table II. In particular, the Attention iAT technique was not only more accurate in its model than the Word AT/iAT techniques but also demonstrated a higher correlation with the importance of the words predicted based on the gradient.

As shown in Figure 3, Attention AT tended to display more attention to the sentence than the Vanilla model. The results showed that training with adversarial perturbations to the attention mechanism allowed for cleaner attention without changing word meanings or grammatical functions. Furthermore, we confirmed that the proposed Attention AT/iAT techniques were more robust regarding the variation of perturbation size than conventional Word AT/iAT, as shown in Figure 4. Although it is difficult to directly compare perturbations to attention and word embedding because of the difference in the range of the perturbation size to the part, the model that added perturbations to attention behaved robustly even when the perturbations were relatively large.

VII-B Comparison of Random Perturbations and Adversarial Perturbations

Attention RP demonstrated better prediction performance than Word AT/iAT. The results revealed that augmentation for the attention mechanism is very effective, even with simple random noise. In contrast, the correlations between the attention weight for the word and the gradient-based word importance were significantly reduced, as shown in Table II. We consider that Attention RP is successful in learning robust discriminative boundaries through random perturbation and improving to the desired classification performance. However, as the gradient is smoothed out by the perturbation around the (supervised) data points, the correlation with the word importance by the gradient is considered to be degraded. In other words, Attention RP can achieve a certain level of classification performance, but it does not lead to which words are useful from their gradients.

VII-C Comparison of Attention AT, Attention iAT, and Word iAT

In the experiments, Attention iAT showed a better performance compared Attention AT in the prediction performance and the correlation with the gradient-based word importance. Attention iAT exploits the difference in the attention weight of each word in a sentence to determine adversarial perturbations. Because the norm of the difference in attention weight (as shown in Eq. 14) is normalized to one, adversarial perturbations in attention mechanisms will make these differences clear, especially in the case of sentences with a small difference in the attention to each word. That is, even in situations where there is little difference in attention between each element of , the difference is amplified by Eq. 14. Therefore, even for the same perturbation size , more effective perturbations weighted by were successfully obtained for each word. However, in the case of sentences where there was originally a difference in the clear attention to each word, the regularization of in Eq. 14 had practically no effect because it did not change their ratio nearly as much. Thus, we posit that the Attention iAT technique enhances the effectiveness of AT applied to attention mechanisms by generating effective perturbations for each word.

The Attention iAT technique was inspired by Word iAT. Word iAT generates perturbations in the direction that maximizes the loss function while restricting the direction of the perturbation to become a linear combination of the direction of word embedding in the vocabulary. Word iAT indirectly improves the interpretability of the model by indicating which words in the vocabulary to which the perturbation is similar. However, we are confident that Attention iAT is a direct improvement in the interpretability of the model, because it can show more clearly which words to pay attention. This is owing to the attention difference enhancement process described in Eq. 14. Thus, the proposed techniques are highly effective in that they lead to a more substantive improvement in interpretability.

VII-D Limitations

Our proposal is a general-purpose robust training technique for DNN models, which are commonly used for NLP tasks. Therefore, we have chosen here an RNN with an attention mechanism that has been put to practical use [10]. For this reason, models such as BERT [47] that deal with self-attention were outside the scope of this study, and will be the subject of future work. We also did not deal with tasks (such as machine translation) that were not used in the literature [10] as baselines. Additionally, for the same reason in as [48], we did not consider the variants of attention mechanisms, such as bi-attentive architecture [5], multi-headed architecture [8], because they could have different interpretability properties.

VIII Conclusion

We proposed robust and interpretable attention training techniques that exploit AT. In the experiments with various NLP tasks, we confirmed that AT for attention mechanisms achieves better performance than techniques using AT for word embedding in terms of the prediction performance and the interpretability of the model. Specifically, the Attention iAT technique introduced adversarial perturbations that emphasized differences in the importance of words in a sentence and combined high accuracy with interpretable attention, which was more strongly correlated with the gradient-based method of word importance. The proposed technique could be applied to various models and NLP tasks. This paper provides strong support and motivation for utilizing AT with attention mechanisms in NLP tasks.

In the experiment, we demonstrated the effectiveness of the proposed techniques for RNN models that are reported to be vulnerable to attention mechanisms, but we will confirm the effectiveness of the proposed technique for large language models with attention mechanisms such as Transformer [8] or BERT [47] in the future. Because the proposed techniques are model-independent and general techniques for attention mechanisms, we can expect they will improve predictability and the interpretability for language models.

Appendix A Tasks and Dataset

A-A Binary Classification

The following datasets were used for evaluation. The Stanford Sentiment Treebank (SST) [17]777https://nlp.stanford.edu/sentiment/trainDevTestTrees_PTB.zip was used to ascertain positive or negative sentiment from a sentence. IMDB Large Movie Reviews (IMDB) [35]888https://s3.amazonaws.com/text-datasets/imdb_full.pkl,999https://s3.amazonaws.com/text-datasets/imdb_word_index.json was used to identify positive or negative sentiment from movie reviews. 20 Newsgroups (20News) [36]101010https://ndownloader.figshare.com/files/5975967 were used to ascertain the topic of news articles as either baseball (set as a negative label) or hockey (set as a positive label). The AG News (AGNews) [37]111111The dataset can be found on Xiang Zhang’s Google Drive. was used to identify the topic of news articles as either world (set as a negative label) or business (set as a positive label).

A-B Question Answering

The following datasets were used for evaluation. The CNN news article corpus (CNN news) [38]121212The dataset can be found on Deep Mind Q&A Google Drive. was used to identify answer entities from a paragraph. The bAbI dataset (bAbI) [39]131313https://research.fb.com/downloads/babi/ contains 20 different question-answer tasks, and we considered three tasks: (task 1) basic factoid question answered with a single supporting fact, (task 2) factoid question answered with two supporting facts, and (task 3) factoid question answered with three supporting facts. The model was trained for each task.

A-C Natural Language Inference

The following datasets were used for evaluation. The Stanford Natural Language Inference (SNLI) [40]141414https://nlp.stanford.edu/projects/snli/snli_1.0.zip is used to identify whether a hypothesis sentence entails, contradicts, or is neutral concerning a given premise sentence. Multi-Genre NLI (MultiNLI) [41]151515https://www.nyu.edu/projects/bowman/multinli/multinli_1.0.zip uses the same format as SNLI and is comparable in size, but it includes a more diverse range of text, as well as an auxiliary test set for cross-genre transfer evaluation.

Appendix B Implementation Detail

For all datasets, we either used pretrained GloVe [50] or fastText [51] word embedding with 300 dimensions except the bAbI dataset. For the bAbI dataset, we trained 50 dimensional word embeddings from scratch during training. We used a one-layer LSTM as the encoder with a hidden size of 64 for the bAbI dataset and 256 for the other datasets. All models were regularized using regularization () applied to all parameters. We trained the model using the maximum likelihood loss utilizing the Adam [52] optimizer with a learning rate of 0.001.

Acknowledgment

We would like to appreciate the editors and anonymous reviewers for their helpful feedback. We also thank Quan Huu Cap and Mahmoud Daif for feedback and fruitful discussions.

References

- [1] D. Bahdanau, K. Cho, and Y. Bengio, “Neural machine translation by jointly learning to align and translate,” CoRR preprint arXiv:1409.0473, 2014. [Online]. Available: https://arxiv.org/abs/1409.0473

- [2] Z. Lin, M. Feng, C. N. dos Santos, M. Yu, B. Xiang, B. Zhou, and Y. Bengio, “A structured self-attentive sentence embedding,” in Proc. of the 5th International Conference on Learning Representations, ICLR, Conference Track Proceedings, 2017. [Online]. Available: https://openreview.net/forum?id=BJC_jUqxe¬eId=BJC_jUqxe

- [3] Y. Wang, M. Huang, and L. Zhao, “Attention-based LSTM for aspect-level sentiment classification,” in Proc. of the 2016 Conference on Empirical Methods in Natural Language Processing, ser. Association for Computational Linguistics (ACL), 2016, pp. 606–615. [Online]. Available: http://dx.doi.org/10.18653/v1/D16-1058

- [4] X. He and D. Golub, “Character-level question answering with attention,” in Proc. of the 2016 Conference on Empirical Methods in Natural Language Processing, ser. Association for Computational Linguistics (ACL), 2016, pp. 1598–1607. [Online]. Available: http://dx.doi.org/10.18653/v1/D16-1166

- [5] A. Parikh, O. Täckström, D. Das, and J. Uszkoreit, “A decomposable attention model for natural language inference,” in Proc. of the 2016 Conference on Empirical Methods in Natural Language Processing, ser. Association for Computational Linguistics (ACL), 2016, pp. 2249–2255. [Online]. Available: http://dx.doi.org/10.18653/v1/D16-1244

- [6] T. Luong, H. Pham, and C. D. Manning, “Effective approaches to attention-based neural machine translation,” in Proc. of the 2015 Conference on Empirical Methods in Natural Language Processing, ser. Association for Computational Linguistics (ACL), 2015, pp. 1412–1421. [Online]. Available: http://dx.doi.org/10.18653/v1/D15-1166

- [7] A. M. Rush, S. Chopra, and J. Weston, “A neural attention model for abstractive sentence summarization,” in Proc. of the 2015 Conference on Empirical Methods in Natural Language Processing, ser. Association for Computational Linguistics (ACL), 2015, pp. 379–389. [Online]. Available: http://dx.doi.org/10.18653/v1/D15-1044

- [8] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, Ł. Kaiser, and I. Polosukhin, “Attention is all you need,” in Proc. of the 30th International Conference on Neural Information Processing Systems, 2017, pp. 5998–6008. [Online]. Available: https://arxiv.org/abs/1706.03762

- [9] C. Szegedy, W. Zaremba, I. Sutskever, J. Bruna, D. Erhan, I. Goodfellow, and R. Fergus, “Intriguing properties of neural networks,” in 2nd International Conference on Learning Representations, ICLR, Conference Track Proceedings, 2013. [Online]. Available: https://arxiv.org/abs/1312.6199

- [10] S. Jain and B. C. Wallace, “Attention is not explanation,” in Proc. of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), ser. Association for Computational Linguistics (ACL), 2019, pp. 3543–3556. [Online]. Available: http://dx.doi.org/10.18653/v1/N19-1357

- [11] Y. Liu, X. Chen, C. Liu, and D. Song, “Delving into transferable adversarial examples and black-box attacks,” CoRR preprint arXiv:1611.02770, 2016. [Online]. Available: https://arxiv.org/abs/1611.02770

- [12] Y. Liu, M. Ott, N. Goyal, J. Du, M. Joshi, D. Chen, O. Levy, M. Lewis, L. Zettlemoyer, and V. Stoyanov, “Roberta: A robustly optimized bert pretraining approach,” CoRR preprint arXiv:1907.11692, 2019. [Online]. Available: https://arxiv.org/abs/1907.11692

- [13] Z. Lan, M. Chen, S. Goodman, K. Gimpel, P. Sharma, and R. Soricut, “Albert: A lite bert for self-supervised learning of language representations,” in International Conference on Learning Representations, 2020. [Online]. Available: https://openreview.net/forum?id=H1eA7AEtvS

- [14] Y. Tay, M. Dehghani, D. Bahri, and D. Metzler, “Efficient transformers: A survey,” CoRR preprint arXiv:2009.06732, 2020. [Online]. Available: https://arxiv.org/abs/2009.06732

- [15] I. J. Goodfellow, J. Shlens, and C. Szegedy, “Explaining and harnessing adversarial examples,” in 3rd International Conference on Learning Representations, ICLR, Conference Track Proceedings, 2014. [Online]. Available: http://arxiv.org/abs/1412.6572

- [16] U. Shaham, Y. Yamada, and S. Negahban, “Understanding adversarial training: Increasing local stability of supervised models through robust optimization,” Neurocomputing, vol. 307, pp. 195–204, 2018. [Online]. Available: https://doi.org/10.1016/j.neucom.2018.04.027

- [17] R. Socher, A. Perelygin, J. Wu, J. Chuang, C. D. Manning, A. Ng, and C. Potts, “Recursive deep models for semantic compositionality over a sentiment treebank,” in Proc. of the 2013 Conference on Empirical Methods in Natural Language Processing, ser. Association for Computational Linguistics (ACL), 2013, pp. 1631–1642. [Online]. Available: https://www.aclweb.org/anthology/D13-1170/

- [18] T. Miyato, A. M. Dai, and I. Goodfellow, “Adversarial training methods for semi-supervised text classification,” in 5th International Conference on Learning Representations, ICLR, Conference Track Proceedings, 2016. [Online]. Available: https://openreview.net/forum?id=r1X3g2_xl

- [19] M. Sato, J. Suzuki, H. Shindo, and Y. Matsumoto, “Interpretable adversarial perturbation in input embedding space for text,” in Proc. of the 27th International Joint Conference on Artificial Intelligence, ser. AAAI Press, 2018, pp. 4323–4330. [Online]. Available: https://dl.acm.org/doi/10.5555/3304222.3304371

- [20] M. Yasunaga, J. Kasai, and D. Radev, “Robust multilingual part-of-speech tagging via adversarial training,” in Proc. of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long Papers), ser. Association for Computational Linguistics (ACL), 2018, pp. 976–986. [Online]. Available: http://dx.doi.org/10.18653/v1/N18-1089

- [21] Y. Wang and M. Bansal, “Robust machine comprehension models via adversarial training,” in Proc. of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 2 (Short Papers), ser. Association for Computational Linguistics (ACL), 2018, pp. 575–581. [Online]. Available: http://dx.doi.org/10.18653/v1/N18-2091

- [22] J. Li, W. Monroe, and D. Jurafsky, “Understanding neural networks through representation erasure,” CoRR preprint arXiv:1612.08220, 2016. [Online]. Available: https://arxiv.org/abs/1612.08220

- [23] D. Tsipras, S. Santurkar, L. Engstrom, A. Turner, and A. Madry, “Robustness may be at odds with accuracy,” in International Conference on Learning Representations, no. 2019, 2019. [Online]. Available: https://openreview.net/forum?id=SyxAb30cY7

- [24] T. Itazuri, Y. Fukuhara, H. Kataoka, and S. Morishima, “What do adversarially robust models look at?” CoRR preprint arXiv:1905.07666, 2019. [Online]. Available: https://arxiv.org/abs/1905.07666

- [25] T. Zhang and Z. Zhu, “Interpreting adversarially trained convolutional neural networks,” in International Conference on Machine Learning. PMLR, 2019, pp. 7502–7511. [Online]. Available: http://proceedings.mlr.press/v97/zhang19s.html

- [26] K. Simonyan, A. Vedaldi, and A. Zisserman, “Deep inside convolutional networks: Visualising image classification models and saliency maps,” in 2nd International Conference on Learning Representations, ICLR, Workshop Track Proceedings, 2013. [Online]. Available: https://arxiv.org/abs/1312.6034

- [27] B. Wang, J. Gao, and Y. Qi, “A theoretical framework for robustness of (deep) classifiers against adversarial examples,” CoRR preprint arXiv:1612.00334, 2016. [Online]. Available: https://arxiv.org/abs/1612.00334

- [28] K. Liu, X. Liu, A. Yang, J. Liu, J. Su, S. Li, and Q. She, “A robust adversarial training approach to machine reading comprehension,” in Proc. of the AAAI Conference on Artificial Intelligence, vol. 34, no. 05, 2020, pp. 8392–8400. [Online]. Available: https://doi.org/10.1609/aaai.v34i05.6357

- [29] S. Barham and S. Feizi, “Interpretable adversarial training for text,” CoRR preprint arXiv:1905.12864, 2019. [Online]. Available: https://arxiv.org/abs/1905.12864

- [30] Q. Zhu, L. Cui, W.-N. Zhang, F. Wei, and T. Liu, “Retrieval-enhanced adversarial training for neural response generation,” in Proc. of the 57th Annual Meeting of the Association for Computational Linguistics. Association for Computational Linguistics, 2019, pp. 3763–3773. [Online]. Available: http://dx.doi.org/10.18653/v1/P19-1366

- [31] N. Srivastava, G. Hinton, A. Krizhevsky, I. Sutskever, and R. Salakhutdinov, “Dropout: a simple way to prevent neural networks from overfitting,” The journal of machine learning research, vol. 15, no. 1, pp. 1929–1958, 2014. [Online]. Available: https://jmlr.org/papers/v15/srivastava14a.html

- [32] S. Ioffe and C. Szegedy, “Batch normalization: Accelerating deep network training by reducing internal covariate shift,” in International Conference on Machine Learning, 2015, pp. 448–456. [Online]. Available: http://proceedings.mlr.press/v37/ioffe15.html

- [33] M. Iyyer, V. Manjunatha, J. Boyd-Graber, and H. Daumé III, “Deep unordered composition rivals syntactic methods for text classification,” in Proc. of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), 2015, pp. 1681–1691. [Online]. Available: http://dx.doi.org/10.3115/v1/P15-1162

- [34] D. Shimada, R. Kotani, and H. Iyatomi, “Document classification through image-based character embedding and wildcard training,” in Proc. of IEEE International Conference on Big Data. IEEE, 2016, pp. 3922–3927. [Online]. Available: https://doi.org/10.1109/BigData.2016.7841067

- [35] A. L. Maas, R. E. Daly, P. T. Pham, D. Huang, A. Y. Ng, and C. Potts, “Learning word vectors for sentiment analysis,” in Proc. of the 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies, ser. Association for Computational Linguistics (ACL), vol. 1, 2011, pp. 142–150. [Online]. Available: https://www.aclweb.org/anthology/P11-1015/

- [36] K. Lang, “Newsweeder: Learning to filter netnews,” in Machine Learning Proceedings. Elsevier, 1995, pp. 331–339. [Online]. Available: https://doi.org/10.1016/B978-1-55860-377-6.50048-7

- [37] X. Zhang, J. Zhao, and Y. LeCun, “Character-level convolutional networks for text classification,” in Proc. of the 28th International Conference on Neural Information Processing Systems, ser. MIT Press, vol. 1, 2015, pp. 649–657. [Online]. Available: https://dl.acm.org/doi/10.5555/2969239.2969312

- [38] K. M. Hermann, T. Kocisky, E. Grefenstette, L. Espeholt, W. Kay, M. Suleyman, and P. Blunsom, “Teaching machines to read and comprehend,” in Proc. of the 28th International Conference on Neural Information Processing Systems, ser. MIT Press, vol. 1, 2015, pp. 1693–1701. [Online]. Available: https://dl.acm.org/doi/10.5555/2969239.2969428

- [39] J. Weston, A. Bordes, S. Chopra, and T. Mikolov, “Towards AI-complete question answering: A set of prerequisite toy tasks,” in 4th International Conference on Learning Representations, ICLR, Conference Track Proceedings, 2016. [Online]. Available: https://arxiv.org/abs/1502.05698

- [40] S. Bowman, G. Angeli, C. Potts, and C. D. Manning, “A large annotated corpus for learning natural language inference,” in Proc. of the 2015 Conference on Empirical Methods in Natural Language Processing, ser. Association for Computational Linguistics (ACL), 2015, pp. 632–642. [Online]. Available: http://dx.doi.org/10.18653/v1/D15-1075

- [41] A. Williams, N. Nangia, and S. R. Bowman, “A broad-coverage challenge corpus for sentence understanding through inference,” in Proc. of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (Long Papers), ser. Association for Computational Linguistics (ACL), 2017. [Online]. Available: http://dx.doi.org/10.18653/v1/N18-1101

- [42] S. Hochreiter and J. Schmidhuber, “Long short-term memory,” Neural Computation, vol. 9, no. 8, pp. 1735–1780, 1997. [Online]. Available: https://doi.org/10.1162/neco.1997.9.8.1735

- [43] M. Gardner, J. Grus, M. Neumann, O. Tafjord, P. Dasigi, N. Liu, M. Peters, M. Schmitz, and L. Zettlemoyer, “Allennlp: A deep semantic natural language processing platform,” CoRR preprint arXiv:1803.07640, 2018. [Online]. Available: http://dx.doi.org/10.18653/v1/W18-2501

- [44] E. Wallace, J. Tuyls, J. Wang, S. Subramanian, M. Gardner, and S. Singh, “AllenNLP Interpret: A framework for explaining predictions of NLP models,” in Proc. of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP): System Demonstrations, ser. Association for Computational Linguistics (ACL), 2019, pp. 7–12. [Online]. Available: http://dx.doi.org/10.18653/v1/D19-3002

- [45] J. Dodge, S. Gururangan, D. Card, R. Schwartz, and N. A. Smith, “Show your work: Improved reporting of experimental results,” in Proc. of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), ser. Association for Computational Linguistics (ACL), 2019, pp. 2185–2194. [Online]. Available: http://dx.doi.org/10.18653/v1/D19-1224

- [46] A. K. Mohankumar, P. Nema, S. Narasimhan, M. M. Khapra, B. V. Srinivasan, and B. Ravindran, “Towards transparent and explainable attention models,” in Proc. of the 58th Annual Meeting of the Association for Computational Linguistics, ser. Association for Computational Linguistics (ACL), 2020, pp. 4206–4216. [Online]. Available: http://dx.doi.org/10.18653/v1/2020.acl-main.387

- [47] J. Devlin, M.-W. Chang, K. Lee, and K. Toutanova, “Bert: Pre-training of deep bidirectional transformers for language understanding,” in Proc. of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), ser. Association for Computational Linguistics (ACL), 2019, pp. 4171–4186. [Online]. Available: http://dx.doi.org/10.18653/v1/N19-1423

- [48] S. Serrano and N. A. Smith, “Is attention interpretable?” in Proc. of the 57th Annual Meeting of the Association for Computational Linguistics, ser. Association for Computational Linguistics (ACL), 2019, pp. 2931–2951. [Online]. Available: http://dx.doi.org/10.18653/v1/P19-1282

- [49] T. Miyato, S.-i. Maeda, M. Koyama, and S. Ishii, “Virtual adversarial training: a regularization method for supervised and semi-supervised learning,” IEEE transactions on pattern analysis and machine intelligence, vol. 41, no. 8, pp. 1979–1993, 2018. [Online]. Available: https://doi.org/10.1109/TPAMI.2018.2858821

- [50] J. Pennington, R. Socher, and C. Manning, “Glove: Global vectors for word representation,” in Proc. of the 2014 Conference on Empirical Methods in Natural Language Processing, ser. Association for Computational Linguistics (ACL), 2014, pp. 1532–1543. [Online]. Available: http://dx.doi.org/10.3115/v1/D14-1162

- [51] P. Bojanowski, E. Grave, A. Joulin, and T. Mikolov, “Enriching word vectors with subword information,” Transactions of the Association for Computational Linguistics, vol. 5, pp. 135–146, 2017. [Online]. Available: http://dx.doi.org/10.1162/tacl_a_00051

- [52] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” CoRR preprint arXiv:1412.6980, 2014. [Online]. Available: https://arxiv.org/abs/1412.6980