Attention Mechanism Meets with Hybrid Dense Network for Hyperspectral Image Classification

Abstract

The nonlinear relation between spectral information and the corresponding objects (complex physiognomies) makes pixel-wise classification challenging for conventional methods. Convolutional Neural Networks (CNN) are better suited to handling this nonlinearity in Hyperspectral Image Classification (HSIC). However, fixed kernel sizes make traditional CNNs too specific, neither flexible nor conducive to feature learning, which degrades classification accuracy. Convolutions with different kernel sizes may overcome this problem by capturing more discriminative and relevant information. In light of this, the proposed solution combines the core idea of 3D and 2D Inception nets with the attention mechanism to boost the performance of CNNs for HSIC in a hybrid scenario. The resulting attention-fused hybrid network (AfNet) is based on three attention-fused parallel hybrid sub-nets with different kernels in each block, repeatedly using high-level features to enhance the final ground-truth maps. In short, AfNet is able to selectively filter out the discriminative features critical for classification. Several tests on HSI datasets showed competitive results for AfNet compared to state-of-the-art models. The proposed pipeline achieved an overall accuracy of 97% for Indian Pines, 100% for Botswana, and 99% for the Pavia University, Pavia Center, and Salinas datasets.

Index Terms:

Convolutional Neural Network (CNN); Hyperspectral Image Classification (HSIC); Inception Network; Attention Mechanism.

I Introduction

Hyperspectral Imaging (HSI) systems are based on collecting the electromagnetic spectrum, ranging from the visible to the near-infrared region, reflected by the objects of interest [1]. The images are usually generated by a pre-configured HSI camera installed on either mobile (e.g., satellites, drones, aircraft) or static (e.g., indoor rooms, labs) setups, depending on the problem at hand [2, 3, 4, 5, 6, 7, 8]. HSI sensors thereby gather a huge amount of data from hundreds of contiguous spectral bands [9, 10].

Such a large and rich HSI dataset, in which the data of the different spectral bands are related by their (spatial) geo-located position, may contain hidden information and patterns. HSI Classification (HSIC) [11] aims to discover, detect, identify, and recognize such patterns. However, the size of the spectral dataset usually increases combinatorially with the problem size (e.g., the area, the resolution), leading to the curse of dimensionality and making traditional HSIC methods inefficient [12]. To mitigate such issues, several dimensionality reduction and feature selection techniques have been proposed [13, 14]. Conventional feature extraction/selection methods rely on hand-crafted features; however, due to the spatial variability of spectral information [15], extracting the most discriminative and informative features is still a big challenge [16].

Hand-crafted features may be insubstantial in the case of HSI data; it is therefore challenging to achieve a trade-off between discriminability and robustness with features that also differ considerably across HSI datasets. Furthermore, the process of feature design and selection is strongly affected by the knowledge and skills of the designer [17, 16, 18, 19]. To address this, an automatic approach to hierarchically identify features was developed by Hinton in 2006 [17, 20, 21], based on a deep learning model built up in growing semantic layers until a desirable representation is achieved. Similarly, other models have been proposed for feature learning and classification [22, 17, 23, 24], automatically learning and improving the underlying representation from the available data without any prior knowledge. They can extract both linear and non-linear features and are thus capable of handling HSI data in both the spatial and spectral domains [25].

In nature, HSI datasets are usually non-linear due to undesired light-scattering phenomena caused by land cover objects and particles in the atmosphere [26], which renders linear transformation or feature learning methods [27] unsuitable for HSIC. To overcome the non-linearity issues, Convolutional Neural Networks (CNN) were proposed to extract both high- and low-level features, which ultimately leads to abstract and invariant features [28]. 2D CNNs achieved remarkable performance in general but are less effective for HSIC because they miss channel-related information, i.e., 2D CNNs are not able to learn spectrally discriminative features. Unlike 2D CNNs, 3D CNNs can jointly extract spatial-spectral information for HSIC, providing higher accuracy than 2D CNNs [29]. However, 3D CNN models are computationally intensive and time-consuming due to the high number of parameters involved in the 3D convolutional filters of each layer.

For instance, in [30], a Spatial-Spectral Residual Network (SSRN) implemented a 3D CNN residual network based on ResNet [31]. Although SSRN achieves remarkable classification results, the summation used to aggregate features at each layer requires the output feature maps to have the same scale as the residual feature maps. Hence each layer has its own weights, which overall leads to an explosion of network parameters [26]. High accuracy thus comes at the expense of computational power, i.e., SSRN is more complex than a traditional 3D CNN. Similarly, [17] proposed a fast and compact 3D CNN (FC-3D-CNN) model to overcome the limitations of computational cost and reduce the number of training parameters. FC-3D-CNN achieves better results than SSRN in a computationally efficient manner due to the reduced spectral information used in the experimental process.

In general, CNN models tend to perform poorly when pixels of different classes have similar texture over contiguous spectral bands [32]. To deal with that, [33, 34, 35] proposed hybrid models that combine the power of 2D and 3D convolutional layers to extract high- and low-level features, i.e., abstract and invariant features. The hybrid models achieve better accuracy than state-of-the-art 2D and 3D CNN solutions. Despite the high accuracy, hybrid models still require a large number of parameters compared to the 3D CNN network [17] while, on the other hand, 3D CNN/SSRN has a longer processing time than hybrid models. Inception models have shown that the network topology significantly affects both complexity and accuracy [36]. Recently, graph convolutional networks (GCN) have shown their superiority in HSIC: Hong et al. [37] proposed a novel GCN trained in a mini-batch fashion, called miniGCN, which solves the problem of large-scale graph computation and learning. Apart from the complexity and accuracy trade-off, all inception models share one common property, a split-transform-merge strategy, which has proved to be a good strategy for HSIC. Traditional 2D, 3D, hybrid, and inception models use a fixed convolution kernel size; however, the HSI class distribution is complicated, so a conventional CNN with a fixed kernel size is not flexible enough. Convolutions with different spatial sizes may capture more discriminative and important information for pixel-based HSIC.

Attention mechanisms have been extensively used to suppress redundant information while extracting features for classification. SENet [38] was the first network proposed to suppress redundant features by weighting features along the channel direction. The work [39] proposed CBAM (convolutional block attention module), which combines spatial attention with channel attention through pooling, whereas the work [40] proposed NLNet, which combines convolution operations with matrix multiplication to capture long-range relationships in the global space. A combination of SENet and NLNet was proposed in [41], resulting in a simplified lightweight module, GCNet, for effectively extracting global context. Very recently, transformer frameworks have developed rapidly, and a spectral version, called SpectralFormer, was proposed for the first time for HSIC, yielding state-of-the-art classification performance [42].

Attention networks have achieved remarkable results for HSIC, depending on the internal architecture of the attention module. However, these works, to some extent, put attention weights on only one or two dimensions and ignore the remaining HSI dimensions. For instance, single and double attention networks were proposed in [43, 44]: the work [43] only considers the spectral information and ignores the spatial information, whereas the work [44] was proposed to reduce the interference between the spatial and channel information. The work [45] jointly explores the spectral and spatial information, with the spectral-spatial dimensions weighted by a spectral-spatial attention module. Applying attention in only one or two dimensions may improve the performance; however, integrating all channel information is recommended for better classification.

Wang et al. [46] significantly improved the squeeze-and-excitation attention mechanism proposed in [38], reducing the model complexity by a local cross-channel interaction strategy without dimensionality reduction. Zheng et al. [47] worked to overcome the limitations of inconsistent class ratios and over-parameterization using a stratified sample-based training strategy, while a spectral attention module was proposed to perform soft band selection and further refine the redundant spectral information. However, all these spatial or spectral attention models are to some extent isolated: spatial attention does not connect to the spectral dimension, whereas spectral attention ignores the spatial connection and uses only the correlation between different spectral bands.

Moreover, accuracy has been shown to improve with the depth of the model, which in turn requires more parameters and a higher computational burden and makes optimization an NP-hard problem. Therefore, to overcome the aforesaid issues, this work investigates the possibility of combining the core ideas of 2D and 3D inception models into a hybrid attention architecture to boost pixel-based HSIC performance. We tested the model on several publicly available HSI datasets, on which it shows competitive results compared to state-of-the-art methods. Our proposed pipeline achieved an overall accuracy of 97% for the Indian Pines dataset, 100% for Botswana, and 99% for the Pavia University, Pavia Center, and Salinas datasets. In a nutshell, the contributions of this work are summarized as follows.

1. A densely connected hybrid inception net is proposed to enrich the spatial-spectral feature learning process. Different from the conventional inception model, which consists of a single branch in each block, the proposed densely connected hybrid blocks are composed of parallel filters of multiple sizes, which significantly improves the propagation and reuse of features with fewer tunable parameters. Moreover, the proposed hybrid inception net comprehensively utilizes features of different scales from the HSI dataset.

2. A triple-branch attention fusion block is introduced, which boosts the robustness of the discriminative network. Compared with recently published attention blocks for HSIC, the proposed pipeline simultaneously models interactions across different spectral bands and spatial regions by re-weighting the significance of features. The triple-branch attention block adaptively emphasizes the important information and significantly suppresses redundant and ineffective information.

3. A hybrid spectral convolutional block is used to reduce the number of parameters required by the HSIC model. Moreover, the activation inducted convolutional layer can further improve the non-linear representation capacity of the whole network.

The rest of the paper is structured as follows. Section II presents the pipeline proposed in this paper. Section III describes the experimental settings along with the metrics used to compute the accuracies. Sections III-A to III-C present the experimental datasets used to validate the proposed methodology and report the experimental results in comparison with state-of-the-art methods from the literature. Finally, Section IV concludes the paper with possible future research directions.

II Problem Formulation

Let the HSI cube consist of samples associated with classes and band images, where each sample carries the label of its class. In nature, HSI data exhibit high intra-class variability and inter-class similarity, as well as overlapping and nested regions. Therefore, the HSI cube is first divided into small spatial patches to overcome these issues. For each patch, the ground truth is taken from the central pixel of the patch. Principal Component Analysis (PCA) is applied before creating the patches to eliminate the redundancy among the band images, i.e., to reduce the number of spectral bands.
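The preprocessing described above (PCA along the spectral axis, then one patch per labelled pixel with the label of the central pixel) can be sketched as follows. This is a minimal NumPy illustration, not the authors' implementation: the function names, the zero padding at the image border, the use of label 0 for unlabelled pixels, and the toy sizes are all assumptions.

```python
import numpy as np

def pca_reduce(cube, n_components):
    """Project the spectral axis of an (H, W, B) cube onto its top principal components."""
    h, w, b = cube.shape
    flat = cube.reshape(-1, b).astype(np.float64)
    flat -= flat.mean(axis=0)                      # centre each band
    # The right singular vectors of the centred data are the principal axes.
    _, _, vt = np.linalg.svd(flat, full_matrices=False)
    return (flat @ vt[:n_components].T).reshape(h, w, n_components)

def extract_patches(cube, labels, window):
    """Return one (window, window, B) patch per labelled pixel, labelled by its centre pixel."""
    margin = window // 2
    # Assumption: borders are zero-padded so that edge pixels also get a full patch.
    padded = np.pad(cube, ((margin, margin), (margin, margin), (0, 0)), mode="constant")
    rows, cols = np.nonzero(labels)                # assumption: label 0 marks unlabelled pixels
    patches = np.stack([padded[r:r + window, c:c + window, :] for r, c in zip(rows, cols)])
    return patches, labels[rows, cols]

# Toy data standing in for a real HSI cube and its ground-truth map.
rng = np.random.default_rng(0)
cube = rng.random((12, 10, 30))
labels = rng.integers(0, 4, size=(12, 10))

reduced = pca_reduce(cube, n_components=5)
patches, targets = extract_patches(reduced, labels, window=5)
```

Each row of `patches` is centred on a labelled pixel, so `patches[:, 2, 2, :]` reproduces the reduced spectra of those pixels.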

The patching process creates neighboring patches centered at the spatial location covering spatial windows [17, 33]. The total of patches given by . Thus, in total, these patches cover the width from to and height from to [33]. The patches are first convolved with a kernel function which computes the sum of the dot product between the input patch and kernel function to introduces the nonlinearity [17, 33, 48, 21]. The activation maps for spatial-spectral position at feature map and layer can be represented as .

where be the total number of feature maps at layer, and be the depth of the kernel and bias, respectively. Moreover, , , and be the height, width, and depth of the kernel [17]. Similarly, 2D modules do the same process with 2D input as well as the 2D kernel function. In both 3D and 2D layers, the kernel is striding over the input to cover the whole spatial dimension. More specifically, as the proposed model combines the power of 3D and 2D kernel functions, thus, 2D convolution represents the activation value of feature map at spatial position on layer and can be formulated as and finally can be formed as follows.

where and be the height and width of the kernel, respectively. In short, the proposed hybrid AfNet convolutional filters are as follows with the input of . The size of 3D filters are , , , , , , and , and for each layer on each block with different number of filters, i.e., for first block, for second block and for third block. Similarly the size of 2D filters are , , , , and , for each layer on each block with different number of filters, i.e., for first block, for second block and for third block. A 2D fusion module has been used to incorporate the information learned hierarchically at different blocks. Finally, a 2D convolutional layer is used with kernel size with total of filters to better represent the low to high level information.
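For reference, a common way to write the 3D and 2D convolution activations described above is sketched below. The symbols are assumptions chosen for illustration and may differ from the notation used in this paper: $v_{l,j}^{x,y,z}$ is the activation at position $(x,y,z)$ of feature map $j$ in layer $l$, $\phi$ is the activation function, $b_{l,j}$ the bias, $d_{l-1}$ the number of feature maps in the previous layer, and $2\delta+1$, $2\gamma+1$, and $2\nu+1$ the height, width, and depth of the kernel.

```latex
% 3D convolution (assumed notation)
v_{l,j}^{x,y,z} = \phi\!\left( b_{l,j}
  + \sum_{\tau=1}^{d_{l-1}} \sum_{\lambda=-\nu}^{\nu} \sum_{\rho=-\gamma}^{\gamma} \sum_{\sigma=-\delta}^{\delta}
    w_{l,j,\tau}^{\sigma,\rho,\lambda}\; v_{l-1,\tau}^{x+\sigma,\,y+\rho,\,z+\lambda} \right)

% 2D convolution: the same sum without the depth index
v_{l,j}^{x,y} = \phi\!\left( b_{l,j}
  + \sum_{\tau=1}^{d_{l-1}} \sum_{\rho=-\gamma}^{\gamma} \sum_{\sigma=-\delta}^{\delta}
    w_{l,j,\tau}^{\sigma,\rho}\; v_{l-1,\tau}^{x+\sigma,\,y+\rho} \right)
```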

To decrease the number of spectral-spatial feature maps, nineteen densely connected 3D and 2D convolutional layers are used prior to the flatten layer to make sure the convolutional process discriminate the spatial information while considering different spectral bands with no loss and less number of parameters to boost the performance [17]. The weights are randomized initially and optimized using Adam optimizer based on back-propagation with a soft-max loss function. Later the randomized weights are updated using a mini-batch size of for epochs. The overall structure of the proposed hybrid AfNet using the Indian Pines dataset as an example is presented in Figure 1.

II-A Dense Connections and Attention Blocks

A CNN extracts features with different characteristics at each layer: lower- and middle-layer features have relatively high resolution and carry more location and detail information, but they may have weaker semantics and more noise because they have passed through fewer convolutional layers. High-level features, in contrast, hold strong semantic information but have low resolution and poor perception of detail. Therefore, cross-layer feature fusion can be considered an effective strategy to preserve feature quality and ultimately improve classification performance [5].

Dense connectivity (i.e., a connectivity pattern different from that of a traditional sequential network) was first proposed in DenseNet and is widely used in many real-life applications. In a dense block, all layers are directly connected in order to maximize the flow of feature information between layers: each layer accepts the features of all preceding layers as input and passes its output to the subsequent layers. In contrast to these traditional dense connections, this paper proposes dense connections combined with the attention mechanism among different Inception Network blocks, as shown in Figure 1. There, the output of the 2nd convolutional layer of block-1 is densely connected with the 2nd layer of block-2, while the other layers of each block are densely connected in the traditional way, followed by a nonlinear transformation in both cases.

Let us assume be the output of the ith block and be the output of the previous convolutional block. Thus the output of the ith convolutional block is not only related to the output of (i-1)th block but also includes the middle output of all previous blocks. Similarly, each 3D CNN block is densely connected along with the attention mechanism with the 2D CNN blocks, respectively as just explained above. However, while connecting the 3D feature maps with 2D feature maps, a reshape and max-pooling with kernel is used. Finally, a concatenation (fusion) layer is deployed to fuse the output maps obtained from all three blocks, and subsequently, a 2D convolutional layer is used to further refine the feature maps obtained from the densely connected network. The attention blocks are flexible in the proposed model and can be positioned anywhere in the network as explained in Figure 1.
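The 3D-to-2D hand-over and the final fusion described above can be illustrated with plain array operations. This is a sketch under stated assumptions rather than the AfNet code: folding the spectral depth into the channel axis is one common way to reshape 3D feature maps, the pooling window `k=2` is a placeholder, and all function names and tensor sizes are hypothetical.

```python
import numpy as np

def to_2d_maps(features_3d):
    """Fold the spectral depth of (H, W, D, C) feature maps into channels: (H, W, D*C)."""
    h, w, d, c = features_3d.shape
    return features_3d.reshape(h, w, d * c)

def max_pool_2d(features, k):
    """Non-overlapping k x k max-pooling over the two spatial axes of (H, W, C) maps."""
    h, w, c = features.shape
    h2, w2 = h // k, w // k
    trimmed = features[:h2 * k, :w2 * k, :]        # drop rows/columns that do not fill a window
    return trimmed.reshape(h2, k, w2, k, c).max(axis=(1, 3))

def fuse(blocks):
    """Concatenate per-block (H, W, C_i) maps along the channel axis."""
    return np.concatenate(blocks, axis=-1)

rng = np.random.default_rng(0)
# Hypothetical outputs of three 3D blocks with matching spatial size.
block_outputs = [rng.random((8, 8, 4, 3)) for _ in range(3)]

folded = [to_2d_maps(b) for b in block_outputs]    # each (8, 8, 12)
pooled = [max_pool_2d(f, k=2) for f in folded]     # each (4, 4, 12); k=2 is an assumption
fused = fuse(pooled)                               # (4, 4, 36)
```

Concatenation keeps the features of every block available to the following 2D convolution, which is the property the dense connections rely on.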

II-B Overview

For high-level intuition of the proposed model, the overall structure has been illustrated in Figure 1 in which each block of the network is densely connected with the help of an attention mechanism. The proposed AfNet is an end-to-end framework for HSIC in which the input is the original HSI dataset and output is regarded as the probability of each HSI pixel for classes.

Since an HSI is composed of hundreds of contiguous spectral bands, some of which are highly correlated with each other, many bands provide no new information for classification. Moreover, some noisy bands exist in HSI. Therefore, to eliminate the noisy and redundant bands, a PCA transformation is applied before feature learning and classification, which also significantly reduces the processing time and memory requirements.

The HSI cube has been divided into overlapping 3D cubes to take full advantage of spatial-spectral information present in the HSI dataset. These 3D cubes are composed of the target pixel and its neighboring pixels to perform pixel-level feature learning and classification. Let’s assume each 3D patch formed from HSI is where denotes the spatial size of each patch and be the number of PCs preserved in the spectral dimension. In AfNet, a densely connected convolutional (3D and 2D) layers with rectified linear unit (ReLu) and without normalization is a basic building unit of the network.

The 3D patches first pass through nine attention-based, inter-connected 3D convolutional layers. The resulting feature maps are then fed to nine attention-based, inter-connected 2D convolutional layers, which are designed to improve the propagation and reuse of features with fewer tunable parameters. Moreover, the hybrid structure can discriminate the spatial information while considering different spectral bands with no loss, which increases the generalization performance. In addition, the proposed network is built by stacking multiscale parallel filters of various sizes. On top of that, an attention module handles the skip connections from the first block to the second and all subsequent blocks, which improves the flow of information. As a result, the fused features provide a superior feature space compared to stacked single-sized convolutional layers.

In other words, attention blocks are incorporated to selectively retain the information (features) that is critical for classification, while information that is useless for classification is weakened or eliminated. This yields a more discriminative feature representation and a higher class probability for each pixel. Following the fusion, a 2D convolutional layer is employed to aggregate the obtained features once again. Afterward, the feature maps are converted into feature vectors by flattening, and finally, class labels are generated using Softmax.
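To make the idea of re-weighting features concrete, the sketch below applies a squeeze-and-excitation-style channel gate in the spirit of SENet [38]. It is only an illustration of attention as feature re-weighting and is not the triple-branch attention block of AfNet; the function names, the reduction ratio, and the random (untrained) weights are assumptions.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def channel_attention(features, w1, w2):
    """Re-weight the channels of (H, W, C) feature maps with squeeze-and-excitation-style gates."""
    squeezed = features.mean(axis=(0, 1))          # (C,) global average per channel
    hidden = np.maximum(squeezed @ w1, 0.0)        # (C // r,) bottleneck with ReLU
    gates = sigmoid(hidden @ w2)                   # (C,) weights strictly between 0 and 1
    return features * gates, gates                 # gates broadcast over the spatial axes

rng = np.random.default_rng(0)
features = rng.random((5, 5, 8))
w1 = rng.normal(size=(8, 2))    # hypothetical, untrained weights (reduction ratio 4)
w2 = rng.normal(size=(2, 8))

weighted, gates = channel_attention(features, w1, w2)
```

Channels with gates close to 1 pass through almost unchanged, while channels with gates close to 0 are suppressed; in a trained network the weights `w1` and `w2` would be learned together with the convolutional filters.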

III Experimental Settings

The experimental results reported in this work were obtained on Google Colab, an online platform for running Python environments with a Graphics Processing Unit (GPU), up to 358 GB of cloud storage, and up to 25 GB of Random Access Memory (RAM). In all experiments (for the proposed method and for all comparative methods alike), the samples are randomly divided into training, validation, and test sets in the proportions 15%/15%/70%.
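A random 15%/15%/70% split of sample indices can be sketched as follows. The function name, the fixed seed, and the rounding of the subset sizes are assumptions made for illustration; the sample count 10249 is the number of labelled Indian Pines samples listed in Table I.

```python
import numpy as np

def split_indices(n_samples, train=0.15, val=0.15, seed=0):
    """Randomly partition sample indices into training, validation, and test subsets."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_samples)
    n_train = int(round(train * n_samples))
    n_val = int(round(val * n_samples))
    # Everything not used for training or validation goes to the test set.
    return order[:n_train], order[n_train:n_train + n_val], order[n_train + n_val:]

train_idx, val_idx, test_idx = split_indices(10249)
```

The three index arrays are disjoint and together cover every sample exactly once.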

For a fair comparative analysis and to make the claims more reliable, the learning rate for all methods is set to 0.001, and ReLU is used as the activation function for all hidden layers except the final (output) layer, on which the Softmax activation function is used. The same patch size is used for all experimental results, and the most informative spectral dimensions are selected using PCA to reduce the computational burden in terms of time and space. All models are trained for 100 epochs without batch normalization.

To evaluate the experimental results, several metrics are used: Average Accuracy (AA), Overall Accuracy (OA), and Kappa (κ). The κ metric is a statistical measure that takes into account the mutual information regarding the agreement between the classification maps and the ground-truth maps. AA represents the average class-wise classification performance, whereas OA is computed as the number of correctly classified examples out of the total test examples.

| (1) |

| (2) |

| (3) |

where FP, FN, TP, and TN denote the false positives, false negatives, true positives, and true negatives, respectively, and the class-wise terms range over all classes present in the HSI dataset.
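The three metrics can be computed from a confusion matrix as sketched below. The code uses the standard textbook definitions of OA, AA, and Cohen's kappa, which are assumed here to match the ones used in the paper; the function name and the small confusion matrix are made up for illustration.

```python
import numpy as np

def classification_scores(confusion):
    """Return (OA, AA, kappa) for a confusion matrix with true classes as rows."""
    confusion = np.asarray(confusion, dtype=np.float64)
    total = confusion.sum()
    correct = np.diag(confusion)
    oa = correct.sum() / total                          # fraction of correctly classified samples
    aa = np.mean(correct / confusion.sum(axis=1))       # mean of the per-class accuracies
    # Agreement expected by chance, from the row and column marginals.
    expected = (confusion.sum(axis=0) @ confusion.sum(axis=1)) / total ** 2
    kappa = (oa - expected) / (1.0 - expected)
    return oa, aa, kappa

# Made-up three-class example: rows are true classes, columns are predictions.
confusion = [[50, 0, 0],
             [0, 40, 10],
             [0, 5, 45]]
oa, aa, kappa = classification_scores(confusion)
```

For this example OA and AA are both 0.9 because the classes are balanced, while kappa is 0.85 after discounting the chance agreement of 1/3.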

III-A Experimental Datasets and Initial Experiments

Several publicly available hyperspectral datasets have been used to evaluate the performance of our proposed pipeline. These datasets were acquired at different times and locations using different sensors, such as the Hyperion sensor on the NASA EO-1 satellite, the Reflective Optics System Imaging Spectrometer (ROSIS), and the Airborne Visible/Infrared Imaging Spectrometer (AVIRIS). Further information regarding the experimental datasets can be found in [17, 49, 50, 51]. As explained earlier, the performance of our proposed pipeline is evaluated using four publicly available HSI datasets, namely Indian Pines, Pavia University, Botswana, and Salinas. For each of these datasets, the samples are randomly split into three subsets, i.e., training, validation, and test sets. Table I provides a summary description of each dataset used in the following experiments.

| Dataset | Year | Source | Spatial dimensions | Spectral bands | Wavelength (nm) | Samples | Classes | Sensor | Resolution (m) |
|---|---|---|---|---|---|---|---|---|---|
| Botswana | 2001-2004 | NASA EO-1 | | 242 | 400-2500 | 3248 | 14 | Satellite | 30 |
| Indian Pines | 1992 | NASA AVIRIS | | 220 | 400-2500 | 10249 | 16 | Aerial | 20 |
| Salinas | 1998 | NASA AVIRIS | | 224 | 360-2500 | 54129 | 16 | Aerial | 3.7 |
| Pavia University | 2001 | ROSIS-03 sensor | | 115 | 430-860 | 42776 | 9 | Aerial | 1.3 |

III-A1 Indian Pines

The Indian Pines dataset was acquired on June 12, 1992 over the Purdue University Agronomy farms, northwest of West Lafayette, and the surrounding area using the AVIRIS sensor. The dataset was mainly acquired to support soil research initiated by Prof. Marion Baumgardner and his graduate students. Most of the scene lies in the Indian Creek and Pine Creek watersheds, hence the name Indian Pines, and it includes two flight lines: 1) flown East-West, 2) flown North-South. There are three intensive test sites: 1) the northern portion of the North-South flight line, 2) near the center, 3) the southern portion.

Indian Pines dataset consists of spatial dimensions per spectral band and in total 224 spectral bands in the wavelength range meters. The scene used in this research is a subset of a larger scene. It consists of 1/3 forest, 2/3 agriculture, and natural perennial vegetation, a rail line, two major dual-lane highways, low-density housing, other structures, and small roads as earlier explained. Some crops e.g., corn, soybeans are in the early stages of growth i.e., less than 5% coverage due to the reason that the dataset was acquired in June. The ground truths are available and distinguished into 16 non-mutual exclusive classes. The image cube and true ground truths label maps are shown in Figures 2(a) and 2(b) whereas Figures 2(c), 2(d), and 2(e) show the classification performance in terms of classification maps (ground truth label maps) for three different models, i.e. Hybrid Attention Fused Network (AfNet), 3D Attention Inception Net and 2D Attention Inception Net. These maps clearly show that the proposed method performs better than 3D as well as 2D Attention Inception Networks. The higher accuracies are emphasized.

III-A2 Botswana

The Hyperion sensor on the NASA EO-1 satellite acquired the Botswana dataset over the Okavango Delta, Botswana, in 2001-2004. The EO-1 sensor acquired the data at 30 m pixel resolution over a 7.7 km strip in 242 spectral bands covering the 400-2500 nm portion of the spectrum in 10 nm windows.

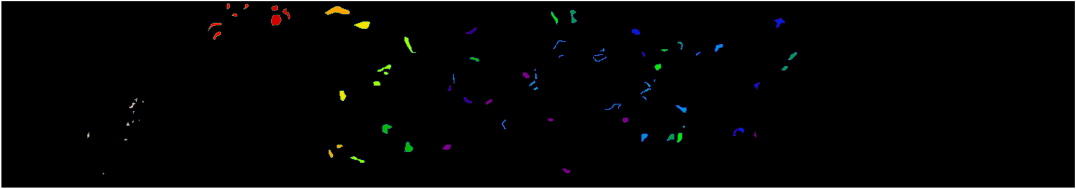

To mitigate the effects of bad detectors, inter-detector miscalibration, and intermittent anomalies, extensive preprocessing was carried out by the UT Center for Space Research. During processing, uncalibrated and noisy bands (i.e., those covering water absorption features) were removed, and the remaining 145 spectral bands are used for experimental purposes. The data used in this study were acquired on May 31, 2001, and consist of observations from 14 mutually exclusive classes, which represent the land cover types in seasonal swamps, occasional swamps, and drier woodlands located in the distal portion of the Delta. The image cube and ground-truth label maps are shown in Figures 3(a) - 3(b), whereas Figures 3(c) - 3(d) show the classification performance in terms of classification maps for three different models, i.e., Hybrid Attention Fused Network (AfNet), 3D Attention Inception Net, and 2D Attention Inception Net. These maps clearly show that the proposed method performs better than the 3D as well as the 2D Attention Inception Networks. The higher accuracies are emphasized.

III-A3 Pavia University

Pavia university dataset was acquired using ROSIS sensor during a flight campaign over Pavia, northern Italy. The total number of spectral bands is 103 in which each spectral band covers spatial dimensions per spectral band. Some of the samples contain no information in the above spatial dimensions, thus have to be discarded before the analysis. The geometric resolution is 1.3 meters. Pavia Center image ground truths differentiate 9 mutually exclusive classes. The image cube and true ground truths label maps are shown in Figures 4(a) - 4(b) whereas Figures 4(c) - 4(d) show the classification performance in terms of classification maps (ground truth label maps) for three different models, i.e. Hybrid Attention Fused Network (AfNet), 3D Attention Inception Net and 2D Attention Inception Net. These maps clearly show that the proposed method performs better than 3D as well as 2D Attention Inception Networks. The higher accuracies are emphasized.

III-A4 Salinas

The Salinas dataset was acquired using the AVIRIS sensor over Salinas Valley, California, and is characterized by high spatial resolution with 3.7 meters per pixel with 224 spectral bands. The area covered by each spectral band is samples. As with the Indian Pines dataset, 20 water absorption bands which are [108-112], [154-167], and 224 were discarded. Salinas dataset is only available as sensor radiance data. It includes vegetables, bare soils, and vineyard fields. Salinas ground truths contain 16 mutually exclusive classes. The image cube and true ground truths label maps are shown in Figures 5(a) - 5(b) whereas Figures 5(c) - 5(d) show the classification performance in terms of classification maps (ground truth label maps) for three different models, i.e. Hybrid Attention Fused Network (AfNet), 3D Attention Inception Net and 2D Attention Inception Net. These maps clearly show that the proposed method performs better than 3D as well as 2D Attention Inception Networks. The higher accuracies are emphasized.

III-B Artefacts of Spatial Dimensions

To process the HSI cube in any CNN, the spatial dimensions are an important component and have a significant impact on the classification results [17]. This section experimentally illustrates the impact of the spatial dimensions on the classification results, i.e., Overall Accuracy (OA), Average Accuracy (AA), and Kappa (κ), irrespective of the processing time and computational cost, which gradually increase with the spatial dimensions. OA, AA, and κ are reported on all four experimental datasets to explore the impact of the spatial dimensions on the proposed hybrid AfNet. All experimental settings and tuning parameters for this experiment remain the same except the spatial dimensions, for which we tested the models on four different window sizes.

| Dataset | Measure | Spatial Dimensions | | | |
|---|---|---|---|---|---|
| IP | κ (%) | 91.79 | 94.34 | 95.04 | 95.97 |
| | OA (%) | 92.82 | 95.04 | 95.65 | 96.47 |
| | AA (%) | 83.26 | 79.53 | 86.62 | 86.13 |
| | Tr Time | 83.92 | 83.88 | 143.23 | 263.93 |
| | Te Time | 1.60 | 1.83 | 2.62 | 3.39 |
| BS | κ (%) | 98.57 | 98.76 | 99.18 | 98.90 |
| | OA (%) | 98.68 | 98.85 | 99.25 | 98.98 |
| | AA (%) | 97.83 | 98.01 | 98.85 | 98.774 |
| | Tr Time | 22.42 | 42.14 | 83.95 | 144.14 |
| | Te Time | 0.94 | 0.91 | 1.30 | 1.62 |
| SA | κ (%) | 99.54 | 99.72 | 99.94 | 99.85 |
| | OA (%) | 99.59 | 99.74 | 99.94 | 99.86 |
| | AA (%) | 99.76 | 99.85 | 99.96 | 99.84 |
| | Tr Time | 248.53 | 443.90 | 716.78 | 1043.87 |
| | Te Time | 10.77 | 10.90 | 21.23 | 21.40 |
| PU | κ (%) | 99.27 | 99.57 | 99.79 | 99.47 |
| | OA (%) | 99.45 | 99.67 | 99.84 | 99.60 |
| | AA (%) | 98.92 | 99.38 | 99.68 | 99.28 |
| | Tr Time | 203.92 | 323.88 | 552.19 | 821.60 |
| | Te Time | 4.74 | 10.71 | 10.81 | 11.74 |

All these experimental results are presented in Figure 6 and Table II, in which one can observe that the classification accuracy generally improves as the spatial size increases, because larger spatial dimensions contain more samples. However, this trend does not hold for all spatial dimensions: a larger patch may contain redundant or interfering samples, or overlapping regions, which bring nothing new to the classifier but confuse it and deteriorate the classification performance. Thus, in a nutshell, an appropriate spatial dimension with respect to the characteristics of the data is important to attain reliable accuracy.

III-C Artefacts of Training Samples

CNNs have been extensively utilized for HSIC; however, deep CNNs require a large number of annotated training samples for appropriate learning. The collection of annotated samples for HSI is expensive and critical, as it demands human experts or the exploration of real-time scenarios, and the limited availability of annotated training samples hinders HSIC performance. Thus, an appropriate number of annotated training samples is an important factor for HSIC performance. This section provides the performance evaluation in terms of OA, AA, and κ for different percentages of training samples. These percentages are 5/5/90% (Train/Validation/Test), 7/7/86%, 10/10/80%, 12/12/76%, and 15/15/70%. We intentionally did not use fewer than 5% training samples because several classes in the datasets do not have enough samples. For instance, the Oats class of the IP dataset has only 20 samples in total, so selecting 1-4% of the samples would include at most one training sample from this class, which is not enough to train the model. One option to avoid this limitation is to select a fixed number of training samples rather than a percentage. However, this leads to another issue, known as the "Class Imbalance" issue, which is not the problem under study and may be considered a potential future research direction.
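The arithmetic behind the 5% lower bound can be checked with a small per-class (stratified) split. This is a sketch under stated assumptions rather than the authors' sampling code: the function name `stratified_split` is hypothetical, percentages are passed as integers, and fractional counts are rounded half up, which the source does not specify.

```python
import random
from collections import defaultdict


def stratified_split(labels, train_pct, val_pct, seed=0):
    """Split sample indices per class into train/validation/test index lists.

    `train_pct` and `val_pct` are integer percentages; the remainder of each
    class goes to the test set.
    """
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    rng = random.Random(seed)
    train, val, test = [], [], []
    for label in sorted(by_class):
        idxs = by_class[label]
        rng.shuffle(idxs)
        # Round half up with integer arithmetic (an assumed rounding rule).
        n_train = (train_pct * len(idxs) + 50) // 100
        n_val = (val_pct * len(idxs) + 50) // 100
        train += idxs[:n_train]
        val += idxs[n_train:n_train + n_val]
        test += idxs[n_train + n_val:]
    return train, val, test


if __name__ == "__main__":
    # Toy label list: a 20-sample class (like Oats in IP) next to a larger class.
    labels = ["oats"] * 20 + ["other"] * 200
    for pct in (2, 5, 15):
        train, _, _ = stratified_split(labels, pct, pct)
        n_oats = sum(1 for i in train if labels[i] == "oats")
        print(f"{pct}% -> {n_oats} oats training sample(s)")
```

Under this rounding rule, a 20-sample class contributes a single training sample at 5% and none at 2%, which illustrates why smaller percentages were not considered.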

| Dataset | Measure | Percentage of Training Samples | | | | |
|---|---|---|---|---|---|---|
| | | 5% | 7% | 10% | 12% | 15% |
| IP | κ (%) | 77.41 | 84.32 | 84.06 | 90.15 | 91.79 |
| | OA (%) | 80.34 | 86.26 | 86.04 | 91.37 | 92.82 |
| | AA (%) | 65.78 | 74.08 | 80.33 | 88.48 | 83.26 |
| | Tr Time | 22.41 | 42.88 | 33.44 | 42.88 | 83.93 |
| | Te Time | 1.95 | 1.87 | 2.89 | 2.91 | 1.60 |
| BS | κ (%) | 95.25 | 96.97 | 98.16 | 98.06 | 98.57 |
| | OA (%) | 95.62 | 97.20 | 98.30 | 98.21 | 98.68 |
| | AA (%) | 96.10 | 96.62 | 98.32 | 97.15 | 97.83 |
| | Tr Time | 16.69 | 22.42 | 20.26 | 22.47 | 22.42 |
| | Te Time | 1.07 | 1.08 | 0.90 | 0.91 | 0.94 |
| SA | κ (%) | 98.39 | 97.72 | 99.19 | 97.79 | 99.54 |
| | OA (%) | 98.56 | 97.95 | 99.27 | 98.01 | 99.59 |
| | AA (%) | 99.16 | 98.07 | 99.56 | 98.25 | 99.76 |
| | Tr Time | 83.81 | 2243.88 | 143.90 | 208.87 | 248.53 |
| | Te Time | 7.33 | 82.28 | 10.77 | 10.82 | 10.77 |
| PU | κ (%) | 98.14 | 98.36 | 99.26 | 98.26 | 99.27 |
| | OA (%) | 98.59 | 98.76 | 99.44 | 98.68 | 99.45 |
| | AA (%) | 97.39 | 97.90 | 99.05 | 98.19 | 98.92 |
| | Tr Time | 1164.25 | 1583.49 | 2243.48 | 166.92 | 203.92 |
| | Te Time | 57.44 | 82.54 | 49.62 | 5.58 | 4.74 |

Table III and Figure 7 show the classification performance of the proposed method with different percentages of annotated training samples. One can observe that the classification performance generally improves as the number of annotated training samples increases. However, this trend holds only up to a certain stage and not across the entire range of percentages. This is due to redundancy among the training samples: a larger set of annotated samples may contain samples that are spectrally similar to each other, which brings no new information or may even confuse the learning. The performance evaluation indicates the quality of the spatial-spectral features learned by AfNet. From these results, one can also conclude that 7-10% training samples are enough to obtain satisfactory results. A fixed spatial dimension is used for all these experiments; the rest of the experimental protocol remains the same except for the number of training samples, as detailed in Table III. Similar observations can be made regarding the computational time: as the number of training samples increases, the training and testing times generally increase along with the accuracy.

IV Conclusion

Convolutional Neural Networks (CNNs) are known to overcome nonlinearity issues; however, fixed kernel sizes make them too specific and inflexible, which is not conducive to feature learning and thus impairs classification accuracy. CNNs with different kernel sizes may capture more discriminative and important features. Taking this advantage into account, this work proposed a hybrid (3D-2D) Inception net with an attention mechanism to boost the classification performance. The proposed attention-fused hybrid network (AfNet) uses six attention-based parallel hybrid sub-nets with different kernels in each sub-block to enhance the final ground-truth maps. AfNet selectively filters out the discriminative features, i.e., the features critical for classification. AfNet has been tested on several hyperspectral datasets and shows competitive results compared to state-of-the-art models, except for a few expensive choices.

References

- [1] D. Hong, W. He, N. Yokoya, J. Yao, L. Gao, L. Zhang, J. Chanussot, and X. Zhu, “Interpretable hyperspectral artificial intelligence: When nonconvex modeling meets hyperspectral remote sensing,” IEEE Geosci. Remote Sens. Mag., vol. 9, no. 2, pp. 52–87, 2021.

- [2] M. H. Khan, Z. Saleem, M. Ahmad, A. Sohaib, H. Ayaz, and M. Mazzara, “Hyperspectral imaging for color adulteration detection in red chili,” Applied Sciences, vol. 10, no. 17, 2020. [Online]. Available: https://www.mdpi.com/2076-3417/10/17/5955

- [3] Z. Saleem, M. H. Khan, M. Ahmad, A. Sohaib, H. Ayaz, and M. Mazzara, “Prediction of microbial spoilage and shelf-life of bakery products through hyperspectral imaging,” IEEE Access, vol. 8, pp. 176986–176996, 2020.

- [4] H. Ayaz, M. Ahmad, A. Sohaib, M. N. Yasir, M. A. Zaidan, M. Ali, M. H. Khan, and Z. Saleem, “Myoglobin-based classification of minced meat using hyperspectral imaging,” Applied Sciences, vol. 10, no. 19, 2020. [Online]. Available: https://www.mdpi.com/2076-3417/10/19/6862

- [5] D. Hong, L. Gao, N. Yokoya, J. Yao, J. Chanussot, D. Qian, and B. Zhang, “More diverse means better: Multimodal deep learning meets remote-sensing imagery classification,” IEEE Trans. Geosci. Remote Sens., vol. 59, no. 5, pp. 4340–4354, 2021.

- [6] H. Ayaz, M. Ahmad, M. Mazzara, and A. Sohaib, “Hyperspectral imaging for minced meat classification using nonlinear deep features,” Applied Sciences, vol. 10, no. 21, 2020. [Online]. Available: https://www.mdpi.com/2076-3417/10/21/7783

- [7] M. Zulfiqar, M. Ahmad, A. Sohaib, M. Mazzara, and S. Distefano, “Hyperspectral imaging for bloodstain identification,” Sensors, vol. 21, no. 9, 2021. [Online]. Available: https://www.mdpi.com/1424-8220/21/9/3045

- [8] H. Hussain Khan, Z. Saleem, M. Ahmad, A. Sohaib, H. Ayaz, M. Mazzara, and R. A. Raza, “Hyperspectral imaging-based unsupervised adulterated red chili content transformation for classification: Identification of red chili adulterants,” Neural Computing and Applications, vol. 33, pp. 1–15, 11 2021.

- [9] B. Rasti, D. Hong, R. Hang, P. Ghamisi, X. Kang, J. Chanussot, and J. Benediktsson, “Feature extraction for hyperspectral imagery: The evolution from shallow to deep: Overview and toolbox,” IEEE Geosci. Remote Sens. Mag., vol. 8, no. 4, pp. 60–88, 2020.

- [10] T. V. Bandos, L. Bruzzone, and G. Camps-Valls, “Classification of hyperspectral images with regularized linear discriminant analysis,” IEEE Transactions on Geoscience and Remote Sensing, vol. 47, no. 3, pp. 862–873, 2009.

- [11] Y. Bengio, A. Courville, and P. Vincent, “Representation learning: A review and new perspectives,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 35, no. 8, pp. 1798–1828, 2013.

- [12] M. Ahmad, S. Protasov, and A. M. Khan, “Hyperspectral band selection using unsupervised non-linear deep auto encoder to train external classifiers,” arXiv preprint arXiv:1705.06920, 2017.

- [13] D. Hong, N. Yokoya, J. Chanussot, J. Xu, and X. X. Zhu, “Learning to propagate labels on graphs: An iterative multitask regression framework for semi-supervised hyperspectral dimensionality reduction,” ISPRS journal of photogrammetry and remote sensing, vol. 158, pp. 35–49, 2019.

- [14] M. Ahmad, S. Shabbir, R. A. Raza, M. Mazzara, S. Distefano, and A. M. Khan, “Hyperspectral image classification: Artifacts of dimension reduction on hybrid cnn,” arXiv preprint arXiv:2101.10532, 2021.

- [15] D. Hong, N. Yokoya, J. Chanussot, and X. Zhu, “An augmented linear mixing model to address spectral variability for hyperspectral unmixing,” IEEE Trans. on Image Process., vol. 28, no. 4, pp. 1923–1938, 2019.

- [16] S. Shabbir and M. Ahmad, “Hyperspectral image classification–traditional to deep models: A survey for future prospects,” arXiv preprint arXiv:2101.06116, 2021.

- [17] M. Ahmad, A. M. Khan, M. Mazzara, S. Distefano, M. Ali, and M. S. Sarfraz, “A fast and compact 3-d cnn for hyperspectral image classification,” IEEE Geoscience and Remote Sensing Letters, pp. 1–5, 2020.

- [18] D. Hong, N. Yokoya, N. Ge, J. Chanussot, and X. X. Zhu, “Learnable manifold alignment (lema): A semi-supervised cross-modality learning framework for land cover and land use classification,” ISPRS journal of photogrammetry and remote sensing, vol. 147, pp. 193–205, 2019.

- [19] J. Yao, D. Hong, L. Xu, D. Meng, J. Chanussot, and Z. Xu, “Sparsity-enhanced convolutional decomposition: A novel tensor-based paradigm for blind hyperspectral unmixing,” IEEE Transactions on Geoscience and Remote Sensing, vol. 60, pp. 1–14, 2022, Art no. 5505014, DOI: 10.1109/TGRS.2021.3069845.

- [20] G. E. Hinton and R. R. Salakhutdinov, “Reducing the dimensionality of data with neural networks,” Science, vol. 313, no. 5786, pp. 504–507, 2006. [Online]. Available: https://science.sciencemag.org/content/313/5786/504

- [21] D. Hong, N. Yokoya, G.-S. Xia, J. Chanussot, and X. X. Zhu, “X-modalnet: A semi-supervised deep cross-modal network for classification of remote sensing data,” ISPRS Journal of Photogrammetry and Remote Sensing, vol. 167, pp. 12–23, 2020.

- [22] B. Zhang, S. Li, X. Jia, L. Gao, and M. Peng, “Adaptive markov random field approach for classification of hyperspectral imagery,” IEEE Geoscience and Remote Sensing Letters, vol. 8, no. 5, pp. 973–977, 2011.

- [23] Q. Zou, L. Ni, T. Zhang, and Q. Wang, “Deep learning based feature selection for remote sensing scene classification,” IEEE Geoscience and Remote Sensing Letters, vol. 12, no. 11, pp. 2321–2325, 2015.

- [24] D. Hong, J. Hu, J. Yao, J. Chanussot, and X. X. Zhu, “Multimodal remote sensing benchmark datasets for land cover classification with a shared and specific feature learning model,” ISPRS Journal of Photogrammetry and Remote Sensing, vol. 178, pp. 68–80, 2021.

- [25] B. Zhang, W. Yang, L. Gao, and D. Chen, “Real-time target detection in hyperspectral images based on spatial-spectral information extraction,” EURASIP journal on advances in signal processing, vol. 2012, no. 1, pp. 1–15, 2012.

- [26] D. Nyasaka, J. Wang, and H. Tinega, “Learning hyperspectral feature extraction and classification with resnext network,” CoRR, vol. abs/2002.02585, 2020. [Online]. Available: https://arxiv.org/abs/2002.02585

- [27] M. Ahmad, A. Khan, and R. Hussain, “Graph-based spatial-spectral feature learning for hyperspectral image classification,” IET image processing, 2017.

- [28] L. Gómez-Chova, D. Tuia, G. Moser, and G. Camps-Valls, “Multimodal classification of remote sensing images: A review and future directions,” Proceedings of the IEEE, vol. 103, no. 9, pp. 1560–1584, 2015.

- [29] H. Gao, D. Yao, Y. Yang, C. Li, H. Liu, and Z. Hua, “Multiscale 3-D-CNN based on spatial–spectral joint feature extraction for hyperspectral remote sensing images classification,” Journal of Electronic Imaging, vol. 29, no. 1, pp. 1–17, 2020. [Online]. Available: https://doi.org/10.1117/1.JEI.29.1.013007

- [30] Z. Zhong, J. Li, Z. Luo, and M. Chapman, “Spectral–spatial residual network for hyperspectral image classification: A 3-d deep learning framework,” IEEE Transactions on Geoscience and Remote Sensing, vol. 56, no. 2, pp. 847–858, 2018.

- [31] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016, pp. 770–778.

- [32] L. He, J. Li, C. Liu, and S. Li, “Recent advances on spectral-spatial hyperspectral image classification: An overview and new guidelines,” IEEE Transactions on Geoscience and Remote Sensing, vol. PP, pp. 1–19, 11 2017.

- [33] S. K. Roy, G. Krishna, S. R. Dubey, and B. B. Chaudhuri, “Hybridsn: Exploring 3-d–2-d cnn feature hierarchy for hyperspectral image classification,” IEEE Geoscience and Remote Sensing Letters, vol. 17, no. 2, pp. 277–281, 2020.

- [34] M. Ahmad, S. Shabbir, R. A. Raza, M. Mazzara, S. Distefano, and A. M. Khan, “Hyperspectral image classification: Artifacts of dimension reduction on hybrid cnn,” arXiv preprint arXiv:2101.10532, 2021.

- [35] M. Ahmad, M. Mazzara, and S. Distefano, “3d/2d regularized cnn feature hierarchy for hyperspectral image classification,” arXiv preprint arXiv:2104.12136, 2021.

- [36] B.-C. Kuo, C.-H. Li, and J.-M. Yang, “Kernel nonparametric weighted feature extraction for hyperspectral image classification,” IEEE Transactions on Geoscience and Remote Sensing, vol. 47, no. 4, pp. 1139–1155, 2009.

- [37] D. Hong, L. Gao, J. Yao, B. Zhang, P. Antonio, and J. Chanussot, “Graph convolutional networks for hyperspectral image classification,” IEEE Trans. Geosci. Remote Sens., vol. 59, no. 7, pp. 5966–5978, 2021.

- [38] J. Hu, L. Shen, and G. Sun, “Squeeze-and-excitation networks,” in 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2018, pp. 7132–7141.

- [39] S. Woo, J. Park, J.-Y. Lee, and I. S. Kweon, “Cbam: Convolutional block attention module,” in Proceedings of the European Conference on Computer Vision (ECCV), September 2018.

- [40] X. Wang, R. Girshick, A. Gupta, and K. He, “Non-local neural networks,” in 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2018, pp. 7794–7803.

- [41] Y. Cao, J. Xu, S. Lin, F. Wei, and H. Hu, “Gcnet: Non-local networks meet squeeze-excitation networks and beyond,” 10 2019, pp. 1971–1980.

- [42] D. Hong, Z. Han, J. Yao, L. Gao, B. Zhang, A. Plaza, and J. Chanussot, “Spectralformer: Rethinking hyperspectral image classification with transformers,” IEEE Trans. Geosci. Remote Sens., 2021, DOI: 10.1109/TGRS.2021.3130716.

- [43] B. Fang, Y. Li, H. Zhang, and J. C.-W. Chan, “Hyperspectral images classification based on dense convolutional networks with spectral-wise attention mechanism,” Remote Sensing, vol. 11, no. 2, 2019. [Online]. Available: https://www.mdpi.com/2072-4292/11/2/159

- [44] W. Ma, Q. Yang, Y. Wu, W. Zhao, and X. Zhang, “Double-branch multi-attention mechanism network for hyperspectral image classification,” Remote Sensing, vol. 11, no. 11, 2019. [Online]. Available: https://www.mdpi.com/2072-4292/11/11/1307

- [45] E. Pan, Y. Ma, X. Mei, X. Dai, F. Fan, X. Tian, and J. Ma, “Spectral-spatial classification of hyperspectral image based on a joint attention network,” in IGARSS 2019 - 2019 IEEE International Geoscience and Remote Sensing Symposium, 2019, pp. 413–416.

- [46] Q. Wang, B. Wu, P. Zhu, P. Li, W. Zuo, and Q. Hu, “Eca-net: Efficient channel attention for deep convolutional neural networks,” 06 2020, pp. 11531–11539.

- [47] Z. Zheng and Y. Zhong, “S3net: Towards real-time hyperspectral imagery classification,” in IGARSS 2019 - 2019 IEEE International Geoscience and Remote Sensing Symposium, 2019, pp. 3293–3296.

- [48] Y. Li, H. Zhang, and Q. Shen, “Spectral–spatial classification of hyperspectral imagery with 3d convolutional neural network,” Remote Sensing, vol. 9, p. 67, 01 2017.

- [49] M. F. Baumgardner, L. L. Biehl, and D. A. Landgrebe, “220 band aviris hyperspectral image data set: June 12, 1992 indian pine test site 3,” Sep 2015. [Online]. Available: https://purr.purdue.edu/publications/1947/1

- [50] Hyperspectral Datasets Description, 2021 (accessed 2021-01-01), http://www.ehu.eus/ccwintco/index.php/Hyperspectral_Remote_Sensing_Scenes.

- [51] D. Hong, N. Yokoya, J. Chanussot, J. Xu, and X. X. Zhu, “Joint and progressive subspace analysis (jpsa) with spatial-spectral manifold alignment for semisupervised hyperspectral dimensionality reduction,” IEEE Trans. Cybern., vol. 51, no. 7, pp. 3602–3615, 2021.