Asymptotically Optimal Search for a Change Point Anomaly under a Composite Hypothesis Model

Abstract

We address the problem of searching for a change point in an anomalous process among a finite set of processes. Specifically, we address a composite hypothesis model in which each process generates measurements following a common distribution with an unknown parameter (vector). This parameter belongs to either a normal or abnormal space depending on the current state of the process. Before the change point, all processes, including the anomalous one, are in a normal state; after the change point, the anomalous process transitions to an abnormal state. Our goal is to design a sequential search strategy that minimizes the Bayes risk by balancing sample complexity and detection accuracy.

We propose a deterministic search algorithm with the following notable properties. First, we analytically demonstrate that when the distributions of both normal and abnormal processes are unknown, the algorithm is asymptotically optimal in minimizing the Bayes risk as the error probability approaches zero. In the second setting, where the parameter under the null hypothesis is known, the algorithm achieves asymptotic optimality with improved detection time based on the true normal state. Simulation results are presented to validate the theoretical findings.

Index Terms:

Anomaly detection, change point detection, controlled sensing, active hypothesis testing.I Introduction

We consider the problem of detecting a change point that occurs in an anomalous process among processes. At each time step, the decision maker observes a subset of processes (). Using terminology from target search, we refer to these processes as cells, with the anomalous process after the change point being the target, located in one of the cells. We adopt a composite hypothesis model, where the observations follow a distribution dependent on an unknown parameter (vector). When probing a specific cell, random continuous values are measured, drawn from a common distribution . This distribution has an unknown parameter that belongs to either or , depending on whether the target is absent or present, respectively. Before the change point, all cells are in a benign state, i.e., with parameters in . After the change point, the distribution of the observations for one of the cells may change, with its parameter shifting to . We assume the change point is an unknown deterministic parameter. The policy for selecting which subset of cells to probe at each time step must be designed jointly with the change detection algorithm. Therefore, the objective is to design a sequential search strategy that minimizes the Bayes risk, accounting for sample complexity and detection accuracy, by determining both the subset of cells to observe and when to terminate the search and declare the anomalous cell.

I-A Main Results

The problem of searching for a change point anomaly, as considered in this paper, is closely related to both the sequential design of experiments and change point detection. Within the sequential design of experiments, search problems involving a finite set of processes require critical decisions about which processes to sample sequentially at each time step. This process continues until testing concludes, and the anomaly’s location is reliably identified. This class of problems has been referred to in recent years as controlled sensing for hypothesis testing [2] or active hypothesis testing [3]. These frameworks trace their origins to Chernoff’s seminal work on sequential design of experiments [4]. Chernoff primarily addressed binary hypothesis testing, introducing a randomized test strategy, the Chernoff test, which is asymptotically optimal as the error probability approaches zero. Variations and extensions of this framework are discussed in Section I-B. However, these approaches typically assume a fixed distribution setting, where the anomalous state is present from the outset.

In contrast, the problem addressed in this paper involves a target process that transitions from a benign to an anomalous state at an unknown change point. This aspect aligns the problem with change point detection, where the objective is to identify a change in the distribution as quickly as possible after it occurs, while maintaining a low false alarm rate to avoid premature declarations. The CUSUM algorithm, introduced in [5], serves as a foundational method in change point detection for single processes, achieving optimality under certain performance criteria. Variants of this problem are also discussed in Section I-B. Despite these connections, the specific problem of searching for a change point anomaly across multiple processes under a composite hypothesis model considered here has not been analyzed before. Below, we summarize the main contributions of this work.

First, in terms of algorithm development, We propose a novel algorithm, Searching for Change Point Anomaly (SCPA), to address the problem at hand. The algorithm is deterministic with low-complexity implementations and operates through three distinct phases: Exploration, exploitation, and sequential testing. In the exploration phase, all cells are probed in a round-robin fashion to estimate the unknown parameter. During the exploitation phase, probing focuses on the cell where the change point is most likely to occur. Finally, in the sequential testing phase, this cell is sampled until sufficient confidence is reached to declare it as anomalous. A notable challenge arises from early observations in the pre-change regime, which could delay detection. This is mitigated by strategically resetting the statistic measure. Detailed descriptions of the algorithm are provided in Section III.

In terms of theoretical performance analysis, we establish the asymptotic optimality of SCPA as the error probability approaches zero. Traditional change point analysis often restricts the change point by assuming prior distributions on or adopting weaker performance metrics, such as fixed, non-vanishing error rates. Here, we introduce a novel approach to tackle the problem by setting in a manner that ensures robust guarantees for vanishing error probabilities. Specifically, we impose an asymptotic constraint on , enabling it to be arbitrarily large while ensuring it occurs within a timeframe that does not excessively delay detection. This approach enables strong guarantees on the vanishing of error probabilities, aligning with the classical Chernoff’s sequential experimental design [4], and addresses gaps in existing approaches where globally optimal solutions are unavailable under general settings [6, 7]. Current modifications often use techniques like increasing curved boundaries [8] or constraining local false alarm probabilities [7]. In contrast, our analysis provides explicit conditions under which SCPA achieves asymptotic optimality. This setting is particularly applicable to real-world scenarios, such as cyber anomaly detection, where algorithms operate during intervals where anomalies are likely to manifest (e.g., before corrective actions like patch installations are implemented). More generally, it applies to systems operating within time intervals where the focus is on ensuring vanishing error probabilities within periods after the change point. This robust framework contrasts with approaches accepting fixed error rates across the entire time horizon.

We demonstrate the asymptotic optimality of SCPA in two scenarios: (i) When the distributions of normal and anomalous processes are unknown, and (ii) when the parameter value under the null hypothesis is known and identical across all non-anomalous processes. In both cases, our algorithm matches the performance of the active hypothesis testing setting in [9], which is a special case of our problem where the anomaly is present from the start without temporal change. Finally, we present simulation results that validate our theoretical findings and demonstrate the practical effectiveness of the SCPA algorithm.

I-B Related Work

As highlighted earlier, our problem is closely related to both sequential experimental design and change point detection. Sequential experimental design, originally introduced by Chernoff [4], has been extensively studied and expanded upon in works such as [3, 2, 10, 11, 12, 13, 14, 15, 16, 9, 17, 18, 19, 20, 21, 22, 23, 24]. The specific challenge of anomaly detection across multiple processes has been addressed in [25, 20, 14, 16, 21, 22, 23, 10, 12, 9, 13, 15]. The detection of abnormal processes over densities with an unknown parameter was explored in [10], focusing on independent process states across cells. In [25], anomaly detection among processes was studied, where processes could be observed at each time step. Observations followed one of two known distributions, depending on whether the process was normal or abnormal. A deterministic search policy was proven asymptotically optimal in minimizing detection time under an error probability constraint. An extension of [25] was considered in [9], where observations followed a common distribution with an unknown parameter, varying based on the process state. A sequential search strategy achieving asymptotic optimality was developed. For Poisson point processes with unknown rates, [13] established an asymptotically optimal policy for single-location probing, requiring a randomized selection rule and linear exploration time. Non-parametric detection over finite observation spaces was studied in [26], showing asymptotic optimality with logarithmic exploration time when the null distribution is known. Extensions to M-ary and composite hypothesis testing for single processes were investigated in [27, 28]. In contrast to these anomaly detection frameworks with fixed distribution settings, our setting addresses a dynamic scenario involving a change point where the anomalous process transitions between states. Additional works on sequential detection across independent processes include [29, 30, 31, 10, 32, 33].

This work also relates to the field of change point detection. Page [34] introduced CUSUM charts, which leverage cumulative data for enhanced detection efficiency. Shiryaev [35] developed a Bayesian framework minimizing average detection delay with known geometric priors. Lorden [36] proposed a minimax framework optimizing worst-case detection delay, with CUSUM achieving asymptotic optimality. Subsequent improvements to Lorden’s criterion were presented in [37, 38]. Pollak [39] introduced a single-maximization criterion, showing the asymptotic optimality of Shiryaev-Roberts and CUSUM algorithms [28]. Non-Bayesian quickest detection was explored in [40], demonstrating CUSUM’s optimality. In [41], the problem of detecting distribution changes in random vector sequences was considered, addressing unknown post-change parameters influenced by control actions, requiring both a stopping rule and a sequential control policy. [42] addressed streaming data changing from a known to an unknown parametric distribution, combining CUSUM with post-change parameter estimation. Shiryaev [43] examined change detection in a single process under a Bayesian loss framework, while Lorden [44] and Pollak [45] proposed minimax criteria for i.i.d. observations with known pre- and post-change distributions but unknown deterministic change points. Asymptotic performance of Shiryaev’s procedures was studied in [46]. Extensions and variations appear in [6, 47, 48, 49]. Multi-stream settings were addressed in [50], where streams share a known distribution pre-change, and one stream diverges to a different known distribution post-change. [51] generalized to exponential distributions with a common pre-change parameter, where one stream shifts to an unknown parameter. However, these studies focused on declaring a change without pinpointing the affected stream.

II System Model and Problem Formulation

We begin this section by presenting the system model, followed by formulating the objective.

II-A System Model

We consider the problem of detecting a change point in an anomalous process within a finite set of processes, where is large yet finite. Initially, before the change point, all processes—including the anomalous one—are in a normal state. After the change point, the anomalous process transitions to an abnormal state. Specifically, the system consists of discrete time stochastic processes , with taking real values at times . The distribution of the anomalous process changes at an unknown deterministic change point . If process is the anomalous one, we denote this as hypothesis being true. Drawing an analogy from target search, we often refer to the anomalous process as the target, which can be located in any of the cells.

We focus on a composite hypothesis setting, where the observation distribution depends on an unknown parameter (vector). Let denote the unknown parameter specifying the observation distribution for cell . When cell is observed at time , an observation is drawn independently from a common density , where and represents the parameter space for all cells. We define two disjoint open subsets of : and , representing the parameter spaces for the non-anomalous distribution and the anomalous distribution, respectively. Specifically, under the non-anomalous distribution, , while under the anomalous distribution, . Under hypothesis , the observations , are drawn independently from with . For cell , the observations are drawn independently from with , and the observations are drawn independently from with . The prior probability that is true is denoted by , where . To avoid trivial solutions, we assume for all . At each time step, only cells () can be observed. Let denote the probability measure under hypothesis , and let represent the expectation operator with respect to the measure .

The stopping time is defined as the time when the decision-maker concludes the search by declaring the target’s location. Let be a decision rule, where indicates that the decision-maker declares to be true. Define as the selection rule specifying the cells chosen for observation at time . The time-series vector of selection rules is denoted by . Let represent the vector of observations obtained from the selected cells at time , and let represent the collection of all cell selections and corresponding observations up to time . A deterministic selection rule at time is a mapping from to . Alternatively, a randomized selection rule maps to probability mass functions over .

An admissible strategy for the sequential change point anomaly detection problem is characterized by the tuple . Note that we seek a search strategy that does not rely on knowledge of and assumes no prior knowledge about it.

II-B Objective

We begin by defining the error probabilities associated with a sequential decision strategy . In classical change point detection involving a single process, the decision rule declares a change has occurred once sufficient evidence is gathered. In this setting, only false alarm events can occur, which correspond to declaring a change before it actually happens. After the change point, all subsequent decisions are correct. The objective in this case is to strike a balance between minimizing the detection delay, i.e., the time elapsed between the actual change point and the declaration time, and minimizing the false alarm probability.

In contrast, for anomaly change point detection involving multiple processes, as considered in this paper, the objective is not only to detect whether a change has occurred but also to identify the anomalous process in which the change occurred. As a result, errors arise from two types of events, depending on whether the change point has occurred at or before the stopping time : false alarms and missed detections. The false alarm probability is the probability of declaring an anomaly in one of the cells before the change point occurs (when all processes are still in their normal states). Conversely, the missed detection probability is the probability of incorrectly identifying a non-anomalous cell as the target after the change point has occurred (when one process is in an anomalous state, but the decision-maker fails to detect it and instead declares another process as anomalous).

Formally, under strategy and hypothesis , the false alarm probability is given by:

| (1) |

and the miss detection probability is given by:

| (2) |

Hence, the error probability under hypothesis is given by:

| (3) |

Finally, the overall error probability under strategy is given by:

| (4) |

Note that in the special case of , i.e., a single anomalous process, missed detection events do not occur. Consequently, the error probability reduces to the false alarm probability (1).

Let be the average detection delay under , where . We adopt the Bayes risk framework, as utilized in both active hypothesis testing and change point detection formulations [4, 25, 28, 52, 9, 29], by assigning a cost of for each observation made after the change point and a loss of for a wrong declaration. The risk under strategy when hypothesis is true and change point , is thus given by:

| (5) |

The average risk is given by:

| (6) |

The objective is to find a strategy that minimizes the Bayes risk over all possible realizations of without knowledge of its value:

| (7) |

III The Search Algorithm for Change Point Anomaly (SCPA)

In this section we present the Search algorithm for Change Point Anomaly (SCPA) for solving (7). To streamline the presentation, we describe the algorithm for (i.e., sampling one cell at each time slot), which is common in dynamic search problems (e.g., [53, 26, 9] and subsequent studies). Later, in Section III-B, we explain its extension to . We also assume that the parameter space is finite, consistent with the assumption made in [4] and subsequent studies. Later, in Section IV, we will provide asymptotic analysis of the algorithm performance.

Let us provide below notations used in the algorithm description and throughout the paper. For every we define

| (8) |

as the log-likelihood ratio (LLR), testing against at time . The term

| (9) |

denotes the Kullback-Leibler (KL) divergence between the two distributions, and .

III-A Description of the Algorithm

The SCPA algorithm is structured into exploration and exploitation epochs, as described below.

-

1.

Phase 1: Sequential Round-Robin Exploration: Observe the cells sequentially in a round-robin manner, collecting (the observation from cell at time ), and estimate the cell parameter using the last observations, , through an unconstrained maximum likelihood estimator (MLE)

(10) Here, is a predefined parameter, and its selection will be discussed later in the algorithm description.

If (i.e., there is exactly one cell suspected of having experienced a change point), proceed to Phase 2. Otherwise, return to Phase 1. -

2.

Phase 2: Exploitation with Targeted Parameter Estimation: Let denote the most recent time at which Phase 1 was exited, and let represent the index of the single cell whose MLE falls outside . Observe cell . Based on the observations estimate the cell parameter using the unconstrained MLE:

(11) If , return to Phase 1. Otherwise, proceed to Phase 3.

-

3.

Phase 3: Sequential Testing: Let

(12) be the constrained MLE for cell to be in a normal state, and

(13) be the sum of the adaptive LLR (SALLR) of cell at time n used to confirm hypothesis .

If stop the test and declare as the anomalous cell, otherwise go to Phase 2.

III-B Insights and Discussion on the SCPA Algorithm

III-B1 Considerations and Implementation Details

Note that the SCPA algorithm is deterministic and transitions between phases based on the current state as determined by the MLE. In the exploration phase, we estimate the cell parameter using only the most recent observations. The value of is a predefined parameter, which can be chosen freely. Since we only use a finite number of observations, after the change point, we exclude benign observations from the abnormal cell that occurred before the change point. This approach is particularly beneficial when the change point happens after a long time, as it reduces the need to accumulate too many abnormal observations to counteract the bias from earlier normal ones, thus ensuring that our performance remains unaffected by the timing of the change point. Selecting too small may result in instability, causing the algorithm to repeatedly enter and exit Phase 1, while a larger may lead to prolonged stays in Phase 1 after the change point. For simplicity, we chose in our theoretical and numerical evaluations, though any fixed natural constant can be used theoretically.

In Phases 2 and 3, we avoid using all the normal observations of the anomalous cell to prevent performance degradation, while ensuring enough observations from the target cell are accumulated to confirm the correct hypothesis. Note that in Phase 3, the summation begins at rather than , ensuring it relies on observations from to , excluding . This adjustment accounts for the parameter estimation in the numerator and arises from technical considerations necessary to establish the theoretical asymptotic performance presented in the appendix.

III-B2 Tackling Multi-Process Probing and Detection of Multiple Anomalies

Next, we discuss the generalization of the SCPA algorithm to accommodate the case of . The extension works as follows: In Phase 1, at each time step, cells are sequentially probed in a round-robin fashion in groups of . The transition to Phase 2 occurs under the same condition as before, when exactly one cell is suspected of having an anomaly. In Phase 2, the remaining cells are ranked by their SALLR values, selecting the next cells with the highest likelihood of being anomalous. The suspected cell and these cells are then probed. If the suspected cell’s parameter estimate falls within the normal range, or if any of the cells exhibit parameter estimates outside the normal range (based on observations collected since entering this phase), we return to Phase 1. Otherwise, we proceed to Phase 3. In Phase 3, the algorithm compares the SALLR of the suspected cell with the second-highest SALLR. If the difference is greater than or equal to , the suspected cell is declared abnormal, and the test stops. Otherwise, the process returns to Phase 2.

Next, we address the extension of SCPA to handle multiple (say ) anomalies. The implementation of Phase 1 remains unchanged, but the algorithm proceeds to Phase 2 when exactly cells are suspected of being anomalous. In Phase 2, these cells are sequentially observed. If any of the cells no longer appear anomalous based on their parameter estimates, the algorithm returns to Phase 1. Otherwise, after each observation in Phase 2, the SALLR values of the cells are evaluated. When the maximum SALLR exceeds the threshold, the corresponding cell is declared anomalous. The algorithm then continues observing the remaining suspected cells in Phase 2 until all anomalies are detected.

III-B3 Using Generalized LLR

In Phase 3, the sum of the adaptive LLR from equation (13) is used to evaluate the suspected cell. Alternatively, the sum of the Generalized LLR (GLLR) can be applied in a similar manner:

| (14) |

The ALLR is advantageous for bounding the error probability effectively using martingale techniques. However, it depends on parameter estimates derived from a limited number of initial observations, which remain fixed and cannot be updated even as additional data is collected. On the other hand, the GLLR (14) is known to achieve better empirical performance, as it updates parameter estimates with the latest observations. Despite this practical advantage, the GLLR lacks the theoretical performance guarantees provided by the ALLR.

IV Performance Analysis

In this section, we provide an asymptotic analysis of the algorithm’s performance. The analysis assumes the setting of and a single anomaly, which are common assumptions in dynamic search problem analysis (e.g., [53, 26, 9] and subsequent studies). We begin in Section IV-A by examining the case where no additional information about the process states is available—that is, both the pre-change and post-change distribution parameters are unknown. In Section IV-B, we consider the case where the parameter under the null hypothesis is known and identical across all non-anomalous processes.

In traditional change point analysis, deriving optimality properties often involves restricting the occurrence of the change point, . This is typically done by assuming a prior distribution on or adopting weaker performance measures, such as allowing a fixed error rate that does not vanish asymptotically. In contrast, this work introduces an assumption for that enables strong guarantees on the vanishing of error probabilities, aligning with the classical frameworks of sequential experimental design by Chernoff [4]. Specifically, we impose an asymptotic constraint on , allowing it to be arbitrarily large while ensuring its occurrence is not excessively delayed relative to the detection time.

This assumption is particularly relevant in practical anomaly detection systems. For example, in cyber anomaly detection, algorithms often operate during suspicious intervals where anomalies, such as malicious or undesired behavior, are expected to manifest before corrective actions (e.g., patch installations) take effect. More generally, this assumption applies to systems operating within time intervals, where the focus is on ensuring vanishing error probabilities within intervals where the change has occurred (i.e., when the assumption on the asymptotic bound on holds), rather than accepting fixed error rates over the entire time horizon. This approach provides a robust framework for maintaining strong performance guarantees under realistic operational conditions. The asymptotic constraint on assumed throughout the analysis in this section is formally defined as follows:

Assumption 1: Let be the cost per observation defined in (5). Then, the change point is of order for some .

This assumption implies that grows slower than , specifically, . Later, we demonstrate that the detection time is of order , ensuring that does not occur too late relative to the detection time as approaches zero.

IV-A change point Anomaly Search without Side Information

We start by analyzing the SCPA algorithm in the setting where the parameters of both the anomalous and non-anomalous distributions are unknown. The following theorem establishes its asymptotic optimality:

Theorem 1.

Let and be the Bayes risks under the SCPA algorithm and any other policy , respectively. Then, the Bayes risk satisfies:111The notation as refers to .

where .

Proof.

The proof can be found in Appendix VII-B. The asymptotic optimality of the SCPA algorithm relies on several key results demonstrated in the Appendix. First, we establish that the algorithm achieves an error probability of order . Second, we show that the post-change exploration time is of order . The bounded post-change exploration time of the SCPA algorithm is particularly noteworthy, as it contrasts sharply with the logarithmic exploration time commonly observed in many active search strategies (see, for example, [26, 10]). Additionally, recall that the asymptotic constraint on results in a pre-change time of order . Finally, we demonstrate that the post-change detection time asymptotically satisfies

Taken together, these results yield the asymptotic Bayes risk stated earlier. Since serves as a lower bound on the Bayes risk of any algorithm, these findings collectively establish the asymptotic optimality of SCPA. For a detailed proof, see Appendix VII-B. ∎

IV-B change point Anomaly Search Under a Known Model of Normality

Here, we assume that the parameter under the null hypothesis, , is known and equal for the non-anomalous distribution across all cells. This setup models many anomaly detection scenarios, where the decision maker can infer the pre-change distribution parameters, which represent the typical distribution before the change, while there is uncertainty regarding the distribution after the change point (i.e., under abnormal conditions). To utilize this information, we substitute the estimation of the parameter in the SALLR statistics (13) with its known true value:

| (16) |

The following theorem establishes the asymptotic optimality of SCPA algorithm in this setting.

Theorem 2.

Let and be the Bayes risks under the SCPA algorithm and any other policy , respectively. Then, the Bayes risk satisfies:

| (17) |

Proof.

The proof is given in Appendix VII-A. ∎

V Numerical Examples

We validate the theoretical findings of SCPA through numerical examples. In our simulations, we modeled a single anomaly occurring in one of the cells after the change point, with the following setup: The a priori probability of the target being present in cell was set to for all . When cell was observed at time , an observation was independently drawn from either the distribution or , depending on whether the target was absent or present, respectively.

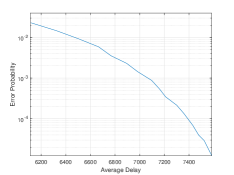

We examine the cases for both and , with the results shown in Fig. 1 and Fig. 2, respectively, for the average detection delay, and in Fig. 3 and Fig. 4, respectively, for the log error probability. The results indicate similar outcomes for both realizations of the change point, as it does not influence the detection time. This observation is consistent with our theoretical findings. Additionally, we observe a linear relationship between the detection time and , which further aligns with the theoretical findings.

We proceed to compare the proposed SCPA algorithm with the CUSUM algorithm, both used for change point detection, with SALLR based on the closest abnormal and normal parameters. At each step, if the SALLR is negative, the next cell is probed. Otherwise, we determine whether to stop and declare the cell anomalous (if the SALLR exceeds ) or to probe the cell again and repeat the process. The SCPA algorithm was evaluated in two scenarios: without side information and with side information, as detailed in Sections IV-A and IV-B, respectively. The results are presented in Fig. 5 and Fig. 6. In both simulations with side information, the SCPA algorithm demonstrated a clear advantage, achieving a smaller error probability and a shorter detection time from the start of the measurements. Without side information, CUSUM achieved faster detection times for higher error probability values. However, asymptotically, as the error probability decreased, the SCPA algorithm without side information exhibited superior performance.

VI Conclusion

We addressed the anomaly detection problem in a set of processes, where only a subset of cells can be probed at each step. Observations depend on unknown cell parameters, classified as normal or abnormal. Before the change point, all cells are normal; after the change point, one cell transitions to the abnormal state. Our goal was to develop a sequential strategy minimizing detection time under an error probability constraint. We analyzed two scenarios: without prior information and with known normal parameters. We proved asymptotic optimality in both cases and showed improved detection times with additional information. Simulations validated the theoretical guarantees and efficiency of the proposed SCPA algorithm.

VII Appendix

For clarity, we begin by proving Theorem 2, followed by highlighting the key steps required to extend the results to the context of Theorem 1.

VII-A Proof of Theorem 2

To prove the theorem, we introduce the following definitions:

Definition 1.

Let be the minimal integer such that and for all , the parameter estimates satisfy: , for all under hypothesis .

Definition 2.

denotes the total amount of time between and the change point, .

Remark 1.

Later in the proof, we will show that the expected time spent during the exploration phase is . Note that for all , we probe only cell , which occurs during the exploitation phase. Therefore, the time spent in the exploration phase after the change point is bounded by . As a result, to show that the expected exploration phase time is , it is sufficient to prove that the expectation of is . The detailed proof is provided rigorously later.

Remark 2.

In the following lemma, when we say that SCPA is implemented indefinitely, we mean that the algorithm operates without stopping, disregarding the stopping rule.

Lemma 1.

Assume that SCPA is implemented indefinitely. Then, there exist and such that

| (18) |

Proof.

At any given moment, we are either in Phase 1 (exploration) or Phase 2 (exploitation). Therefore, if , it implies that after the change point, we remained in either Phase 1 or Phase 2 for at least time indices. Let denote the time spent in Phase 1 after . Then, we have:

| (19) |

We now bound the first term on the RHS of (VII-A). A complete round of Phase 1 requires time indices. Therefore, there are at least rounds of Phase 1 after the anomaly occurs. Each round of Phase 1 can lead to one of the following outcomes: (i) Return to Phase 1. (ii) Move to Phase 2 with . (iii) Move to Phase 2 with . Based on the above, at least rounds end with one of these outcomes. Thus, it suffices to demonstrate that the probability of each outcome is bounded.

(i) For at least rounds that ended by returning to Phase 1: If a round of Phase 1 ends with a return to Phase 1, it implies either that the observations ended by: for all , or that there exist two cells with (so we have with ). Following the same arguments as before, we deduce that after the change point, we have at least rounds of Phase 1 that end with for all , or at least rounds of Phase 1 that end with some that . We will show that each of them is bounded.

If there are at least rounds ending with for all , it implies that there are at least observations of cell resulting in . Lets be the total number of these observations and represent their corresponding time indices. Therefore, by the definition of the MLE in (10) we know that whenever , for all , and by summation we have,

| (20) |

Thus, applying the Chernoff bound and leveraging the independence of , we obtain:

| (21) |

for all .

Note that a moment-generating function (MGF) is equal to 1 at . Since is strictly negative, the derivative of the MGF of with respect to is strictly negative at . Therefore, there exist and such that . Consequently, we can find and such that for all . Hence,

| (22) |

where the last inequality holds because , and for an appropriately chosen , we obtain the desired result.

For at least rounds of Phase 1, some satisfies . Note that this may vary across rounds, but there are at least rounds where the same occurs. Consequently, we have observations of that resulted in . Let denote the time indices of these observations, and represent their corresponding estimates during Phase 1. By the MLE definition (10), we have whenever , for all , which implies:

| (23) |

By applying the Chernoff bound and leveraging the independence of , we obtain:

| (24) |

For all . Since for all , by applying similar arguments as in the previous case, we can find and such that for all . Hence, (VII-A) can be bounded by:

| (25) |

for some suitable .

(ii) For at least rounds that ended by moving to Phase 2 with : Since , we are guaranteed to return to Phase 1. This means that the observations of cell in Phase 2 result in the MLE, as defined in (11), being within the normal parameter space, . Denote by the number of times we enter Phase 2 under these conditions up to time . Therefore, we have under this assumption. For let be the time at which we exit the exploration phase (as defined in the exploitation phase), and be the time at which we exit Phase 2 and return to Phase 1. Thus, for all we have:

| (26) |

when and . Therefore, (26) implies:

| (27) |

By summing over all , we obtain:

| (28) |

By applying the Chernoff bound and the i.i.d property of we obtain:

| (29) |

Since for all , there exist and such that . Thus, we can find and such that for all . As a result, we have:

| (30) |

where the second inequality follows from .

(iii) For at least rounds that ended by moving to Phase 2 with :

If we moved to Phase 2 with , this implies that at least observations of cell resulted in . Therefore, the probability is bounded using the same arguments as in the first case of (i) in step 1.

Next, we bound the second term on the RHS of (VII-A). We can enter Phase 2 in one of two ways: (i) , or (ii) . Spending less than time indices in Phase 1 implies that we spent more than time indices in Phase 2. In this case, we either spent more than time indices in Phase 2 with , or we spent more than time indices in Phase 2 with . It is sufficient to show that each of these cases is bounded.

(i) For more than time on Phase 2 with : As in (ii) in step 1, denote by the number of times we entered Phase 2 with cell up to time . Since , we know that we will return to Phase 1. Let and represent the time we entered and the time we returned to Phase 1, respectively, for all . Notice that is the total time spent in Phase 2 with cell up to time , which is assumed to be greater than . Using similar arguments as in (ii) in step 1, there exists such that the probability of this case is bounded by:

| (31) |

(ii) For more than time on Phase 2 with :

Under this assumption, one of the following occurs: (a) We have more than rounds of Phase 1 that end with moving to Phase 2 where . (b) We enter Phase 2 with and remain in Phase 2 for more than time indices. If neither (a) nor (b) occurs, we will conclude that we entered Phase 2 with the wrong cell at most times, and in each case, we stayed for at most time indices. Therefore, the total time spent in Phase 2 with the wrong cell is at most , which contradicts the assumption in (ii). Hence, to prove the lemma, it remains to show that both (a) and (b) are bounded.

For Case (a), we have more than observations of cell that result in . Therefore, following the same reasoning as in the first case of (i) in step 1, let denote the number of these observations up to time , with the condition that . Using the Chernoff bound and the properties of (independence and negative expectation), we can find a constant such that the probability of this case is bounded by:

| (32) |

For Case (b), if we spent more than time units in Phase 2 with , this implies that after time units in Phase 2, we did not return to Phase 1. Let denote the time at which we entered Phase 2. Thus, at time , the MLE, as defined in (11), satisfies . Therefore,

| (33) |

By applying the Chernoff bound and using the i.i.d property of , we have:

| (34) |

Using the fact that , we can find and , such that (VII-A) is bounded by

| (35) |

for , which completes the proof of the lemma. ∎

Definition 3.

Let be the smallest integer such that for all :

| (36) |

and let denote the total amount of time between and .

Lemma 2.

Assume that SCPA is implemented indefinitely. Then, for every fixed there exist and such that

| (37) |

Proof.

Let

| (38) |

and,

| (39) |

denote the ALLR and the normalized ALLR, respectively, of cell at time . Note that for all , is a zero-mean random variable under hypothesis , and as such, we refer to it as the normalized ALLR.

For , by the definition of and in the context of the exploitation phase notation, we have . Let . Then,

| (40) |

for all

As a result, , implies that

.

Therefore, for every there exists such that,

| (41) |

Hence, it suffices to show that each term on the RHS of (2) is bounded.

Next, we bound the first term on the RHS of (2). Fix . Then,

| (42) |

Again, it suffices to prove that each term on the RHS of (2) is bounded. The first term is bounded by Lemma 1. For the second term, we have two cases: (i) The last exit from Phase 1 occurred after the change point, i.e., . (ii) The last exit from Phase 1 occurred before the change point, i.e., . Under Case (i), . Since can be arbitrarily small and has a finite expectation, by applying the Chernoff bound we obtain that the probability of Case (i) decreases exponentially with . Hence, we have and such that the probability of (i) is bounded by . We fix again . Then, (ii) is bounded by:

| (43) |

The first term on the RHS of (2) can be bounded using similar arguments as in Case (i). For any and , arbitrarily small values can be chosen, and has a finite expectation. For the second term, we observe that after the time index , there was no return to Phase 1, i.e., . Consequently, the observations resulted in . By the MLE definition in (10), we have:

| (44) |

Hence, by applying the Chernoff bound and using the i.i.d property of , we obtain:

| (45) |

for all . Since , we can find suvh that (2) is bounded by:

| (46) |

for .

Lemma 3.

The error probability under SCPA is .

Proof.

Recall that the error probabuility is given by:

| (48) |

Let for all , and for all , denote the miss-detect and false-alarm probabilities, respectively. Thus, .

Therefore, it suffices to show that . We start by analyzing the false-alarm error. Note that

| (49) |

where we define for fixed ,

| (50) |

Also, notice that is a non-negative martingale:

| (51) |

Hence, by Lemma 1 in [54] for a non-negative martingale, we have,

| (52) |

where the last equality is due to the fact that , since for , .

Next, we analyze the miss-detect error. Note that

| (53) |

The last inequality arises from the fact that the event depends solely on samples up to , making it independent of samples from onward. Additionally, the inequality follows from Lemma 1 in [54] for non-negative martingales, applied to , along with the property that for all and fixed . Finally, note that . Therefore, we can apply Lemma 1 and obtain:

| (54) |

which completes the proof of the lemma. ∎

Next, we complete the proof of the theorem. From Lemma 1, we have , and from Lemma 2, we have .

The detection time under the SCPA algorithm is upper bounded by . Therefore, . Combining all together, we obtain:

| (55) |

To upper bound the Bayes risk under the SCPA algorithm, we apply the bound on the error by Lemma 3, and (55) to have

| (56) |

Lastly, we apply the lower bound on the Bayes risk under simple hypotheses for any algorithm [25]: . Combining the upper and lower bounds concludes the proof.

VII-B Proof of Theorem 1

In this section, we extend the proof for the case when no additional information on the parameters is given, and for all , is unknown. Without loss of generality, we prove the theorem when hypothesis is true.

To bound the detection time, we define and as in Definition 3, where and . Additionally, we introduce the following definition:

Definition 4.

For any , is the smallest integer satisfying for . Specifically,

| (57) |

Also, let denote the total amount of time between and .

Note that for all , and . The following lemma bounds for all .

Lemma 4.

Assume that SCPA is implemented indefinitely. Then, for every and for every fixed there exist and such that

| (58) |

Next, we show that the error probability is . Similar to the previous case, it suffices to prove that for all and , respectively. Note that for , and for , for all . Therefore,

| (61) |

Hence, with the same notations as in the previous case for fixed , we have:

| (62) |

where again we used Lemma 1 in [54] for a non-negative martingale, . The last inequality follows due to . The same holds for , which proves the error bound.

To complete the proof, note that implies that there exists with , such that

| (63) |

Recall that is a finite space. Therefore, using Lemma 4, for every we can find and such that for all we have:

| (64) |

Using (64), we obtain that (63) is bounded by:

| (65) |

for suitable . Since , using the bound on and , we have:

| (66) |

Finally, combining the error bound and (66) yields:

| (67) |

which, together with the lower bound on the Bayes risk, completes the proof.

References

- [1] L. L. Didi, T. Gafni, and K. Cohen, “Active change point anomaly detection over composite hypotheses,” in 2024 60th Annual Allerton Conference on Communication, Control, and Computing, pp. 1–6, 2024.

- [2] S. Nitinawarat, G. K. Atia, and V. V. Veeravalli, “Controlled sensing for multihypothesis testing,” IEEE Transactions on automatic control, vol. 58, no. 10, pp. 2451–2464, 2013.

- [3] M. Naghshvar and T. Javidi, “Active sequential hypothesis testing,” 2013.

- [4] H. Chernoff, “Sequential design of experiments,” The Annals of Mathematical Statistics, vol. 30, no. 3, pp. 755–770, 1959.

- [5] E. S. Page, “Continuous inspection schemes,” Biometrika, vol. 41, pp. 100–115, 1954.

- [6] V. V. Veeravalli and T. Banerjee, “Quickest change detection,” in Academic press library in signal processing, vol. 3, pp. 209–255, Elsevier, 2014.

- [7] A. G. Tartakovsky, “Asymptotic performance of a multichart cusum test under false alarm probability constraint,” in Proceedings of the 44th IEEE Conference on Decision and Control, pp. 320–325, IEEE, 2005.

- [8] A. A. Borovkov, “Asymptotically optimal solutions in the change-point problem,” Theory of Probability & Its Applications, vol. 43, no. 4, pp. 539–561, 1999.

- [9] B. Hemo, T. Gafni, K. Cohen, and Q. Zhao, “Searching for anomalies over composite hypotheses,” IEEE Trans. Sig. Proc., vol. 68, pp. 1181–1196, 2020.

- [10] K. Cohen and Q. Zhao, “Asymptotically optimal anomaly detection via sequential testing,” IEEE Transactions on Signal Processing, vol. 63, no. 11, pp. 2929–2941, 2015.

- [11] Y. Kaspi, O. Shayevitz, and T. Javidi, “Searching with measurement dependent noise,” IEEE Transactions on Information Theory, vol. 64, no. 4, pp. 2690–2705, 2017.

- [12] Y. Song and G. Fellouris, “Asymptotically optimal, sequential, multiple testing procedures with prior information on the number of signals,” Electronic Journal of Statistics, vol. 11, no. 1, pp. 338–363, 2017.

- [13] N. K. Vaidhiyan and R. Sundaresan, “Learning to detect an oddball target,” IEEE Transactions on Information Theory, vol. 64, no. 2, pp. 831–852, 2017.

- [14] B. Huang, K. Cohen, and Q. Zhao, “Active anomaly detection in heterogeneous processes,” IEEE Transactions on Information Theory, vol. 65, no. 4, pp. 2284–2301, 2018.

- [15] C. Zhong, M. C. Gursoy, and S. Velipasalar, “Deep actor-critic reinforcement learning for anomaly detection,” in IEEE Global Communications Conference (GLOBECOM), pp. 1–6, 2019.

- [16] A. Gurevich, K. Cohen, and Q. Zhao, “Sequential anomaly detection under a nonlinear system cost,” IEEE Transactions on Signal Processing, vol. 67, no. 14, pp. 3689–3703, 2019.

- [17] C. Wang, K. Cohen, and Q. Zhao, “Information-directed random walk for rare event detection in hierarchical processes,” IEEE Transactions on Information Theory, 2020.

- [18] T. Gafni, K. Cohen, and Q. Zhao, “Searching for unknown anomalies in hierarchical data streams,” IEEE Signal Processing Letters, vol. 28, pp. 1774–1778, 2021.

- [19] A. Tajer, J. Heydari, and H. V. Poor, “Active sampling for the quickest detection of markov networks,” IEEE Transactions on Information Theory, vol. 68, no. 4, pp. 2479–2508, 2021.

- [20] T. Lambez and K. Cohen, “Anomaly search with multiple plays under delay and switching costs,” IEEE Transactions on Signal Processing, vol. 70, pp. 174–189, 2021.

- [21] A. Tsopelakos and G. Fellouris, “Sequential anomaly detection under sampling constraints,” IEEE Transactions on Information Theory, 2022.

- [22] A. Tsopelakos and G. Fellouris, “Asymptotically optimal sequential anomaly identification with ordering sampling rules,” arXiv preprint arXiv:2309.14528, 2023.

- [23] T. Gafni, B. Wolff, G. Revach, N. Shlezinger, and K. Cohen, “Anomaly search over discrete composite hypotheses in hierarchical statistical models,” IEEE Transactions on Signal Processing, vol. 71, pp. 202–217, 2023.

- [24] H. Szostak and K. Cohen, “Deep multi-agent reinforcement learning for decentralized active hypothesis testing,” IEEE Access, 2024.

- [25] K. Cohen and Q. Zhao, “Active hypothesis testing for anomaly detection,” IEEE Trans. Inf. Theory, vol. 61, no. 3, pp. 1432–1450, 2015.

- [26] S. Nitinawarat and V. V. Veeravalli, “Universal scheme for optimal search and stop,” in 2015 Information Theory and Applications Workshop (ITA), pp. 322–328, IEEE, 2015.

- [27] G. Schwarz, “Asymptotic shapes of bayes sequential testing regions,” The Annals of mathematical statistics, pp. 224–236, 1962.

- [28] T. L. Lai, “Nearly optimal sequential tests of composite hypotheses,” The Annals of Statistics, pp. 856–886, 1988.

- [29] L. Lai, H. V. Poor, Y. Xin, and G. Georgiadis, “Quickest search over multiple sequences,” IEEE Transactions on Information Theory, vol. 57, no. 8, pp. 5375–5386, 2011.

- [30] A. Tajer and H. V. Poor, “Quick search for rare events,” IEEE Transactions on Information Theory, vol. 59, no. 7, pp. 4462–4481, 2013.

- [31] K. Cohen, Q. Zhao, and A. Swami, “Optimal index policies for quickest localization of anomaly in cyber networks,” in 2013 IEEE Global Conference on Signal and Information Processing, pp. 221–224, 2013.

- [32] K. Cohen and Q. Zhao, “Anomaly detection over independent processes: Switching with memory,” in 2014 52nd Annual Allerton Conference on Communication, Control, and Computing (Allerton), pp. 33–37, 2014.

- [33] J. Heydari, A. Tajer, and H. Vincent Poor, “Quickest linear search over correlated sequences,” IEEE Transactions on Information Theory, vol. 62, no. 10, pp. 5786–5808, 2016.

- [34] E. S. Page, “Continuous inspection schemes,” Biometrika, vol. 41, no. 1/2, pp. 100–115, 1954.

- [35] A. N. Shiryaev, “On optimum methods in quickest detection problems,” Theory of Probability & Its Applications, vol. 8, no. 1, pp. 22–46, 1963.

- [36] G. Lorden, “Procedures for reacting to a change in distribution,” The annals of mathematical statistics, pp. 1897–1908, 1971.

- [37] G. V. Moustakides, “Optimal stopping times for detecting changes in distributions,” the Annals of Statistics, vol. 14, no. 4, pp. 1379–1387, 1986.

- [38] Y. Ritov, “Decision theoretic optimality of the cusum procedure,” The Annals of Statistics, pp. 1464–1469, 1990.

- [39] M. Pollak, “Optimal detection of a change in distribution,” The Annals of Statistics, pp. 206–227, 1985.

- [40] E. Bayraktar and L. Lai, “Byzantine fault tolerant distributed quickest change detection,” SIAM Journal on Control and Optimization, vol. 53, no. 2, pp. 575–591, 2015.

- [41] V. V. Veeravalli, G. Fellouris, and G. V. Moustakides, “Quickest change detection with controlled sensing,” IEEE Journal on Selected Areas in Information Theory, vol. 5, pp. 1–11, 2024.

- [42] L. Xie, G. V. Moustakides, and Y. Xie, “Window-limited cusum for sequential change detection,” IEEE Transactions on Information Theory, vol. 69, no. 9, pp. 5990–6005, 2023.

- [43] A. N. Shiryaev, “On optimum methods in quickest detection problems,” Theory of Probability & Its Applications, vol. 8, no. 1, pp. 22–46, 1963.

- [44] G. Lorden, “Procedures for reacting to a change in distribution,” The Annals of Mathematical Statistics, vol. 42, no. 6, pp. 1897–1908, 1971.

- [45] M. Pollak, “Optimal detection of a change in distribution,” The Annals of Statistics, vol. 13, no. 1, pp. 206–227, 1985.

- [46] A. G. Tartakovsky and V. V. Veeravalli, “General asymptotic bayesian theory of quickest change detection,” Theory of Probability & Its Applications, vol. 49, no. 3, pp. 458–497, 2005.

- [47] L. Xie, S. Zou, Y. Xie, and V. V. Veeravalli, “Sequential (quickest) change detection: Classical results and new directions,” IEEE Journal on Selected Areas in Information Theory, vol. 2, no. 2, pp. 494–514, 2021.

- [48] T. Banerjee, H. Firouzi, and A. O. Hero, “Quickest detection for changes in maximal knn coherence of random matrices,” IEEE Transactions on Signal Processing, vol. 66, no. 17, pp. 4490–4503, 2018.

- [49] A. Puzanov and K. Cohen, “Deep reinforcement one-shot learning for change point detection,” in 2018 56th Annual Allerton Conference on Communication, Control, and Computing (Allerton), pp. 1047–1051, 2018.

- [50] Q. Xu, Y. Mei, and G. V. Moustakides, “Optimum multi-stream sequential change-point detection with sampling control,” IEEE Transactions on Information Theory, vol. 67, no. 11, pp. 7627–7636, 2021.

- [51] Q. Xu and Y. Mei, “Asymptotic optimality theory for active quickest detection with unknown postchange parameters,” Sequential Analysis, vol. 42, no. 2, pp. 150–181, 2023.

- [52] A. Wald, Sequential Analysis. New York, NY, USA:Wiley, 1947.

- [53] K. Cohen, Q. Zhao, and A. Swami, “Optimal index policies for anomaly localization in resource-constrained cyber systems,” IEEE Transactions on Signal Processing, vol. 62, no. 16, pp. 4224–4236, 2014.

- [54] H. Robbins and D. Siegmund, “A class of stopping rules for testing parametric hypotheses,” in Proceedings of the Sixth Berkeley Symposium on Mathematical Statistics and Probability (Univ. California, Berkeley, CA, 1970/1971), vol. 4, pp. 37–41, 1972.