Astronomical Image Processing at Scale With Pegasus and Montage

Abstract

Image processing at scale is a powerful tool for creating new data sets, integrating them with existing data sets, and performing analysis and quality assurance investigations. Workflow managers offer advantages in this type of processing, which involves multiple data access and processing steps. Generally, they automate the workflow by locating data and resources, recovering from failures, and monitoring performance. In this focus demo, we show how the Pegasus Workflow Manager Python API manages image processing to create mosaics with the Montage Image Mosaic Engine. Pegasus has been developed and maintained at USC/ISI since 2001. Montage was in fact one of the first applications used to design Pegasus and optimize its performance. Pegasus has since found application in many areas of science. LIGO exploited it in making discoveries of black holes. The Vera C. Rubin Observatory used it to compare the cost and performance of processing images on cloud platforms. While these are examples of projects at large scale, small team investigations on local clusters of machines can benefit from Pegasus as well.

1Caltech/IPAC-NExScI, Pasadena, CA, 91125; [email protected]

2Caltech/IPAC-NExScI, Pasadena, CA, 91125

3USC Information Sciences Institute, Marina del Rey, CA, 90292

1 Introduction

We aim to show how astronomers can take advantage of open source tools to build workflows that run on on-premises machines or clusters and then scale, as far as possible without modification, to distributed platforms. The demo was a proof-of-concept image mosaic workflow run on a local laptop; the same workflow was run without any modifications or optimizations on the Open Science Grid (OSG; https://opensciencegrid.org/) high-throughput platform.

The tools we have chosen for this demo are the Pegasus Workflow Manager (https://pegasus.isi.edu) and the Montage Image Mosaic Engine (http://montage.ipac.caltech.edu). Both are open source, operate by design on multiple platforms, support a Python API, are (reasonably) easy to use, and operate well with one another. The demo itself creates a 1° × 1° mosaic of M17 in a Jupyter Notebook.

2 Pegasus and Montage

Pegasus is a fully featured workflow management system (Deelman et al. 2021) that enables scientists to develop portable scientific workflows, structured as directed acyclic graphs through Python, Java, or R APIs. These workflows can then be run on heterogeneous resources, including local machines, clouds, HPC systems, and campus clusters. Some important features of Pegasus are: monitoring and debugging capabilities via command-line interface (CLI) tools or a web dashboard; automatic handling of data movement between jobs (e.g., pulling input data from an AWS S3 bucket or sending one job’s output to another job); and running workflows with data integrity and fault tolerance in mind.
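To make the data integrity feature concrete, the following is a minimal sketch of checksum-based verification using only the Python standard library. It is not Pegasus code: the helper names `sha256_of` and `verify`, the file name, and the stand-in file contents are all hypothetical, and the choice of SHA-256 is an assumption made for illustration.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Return the hex SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify(path, expected_hex):
    """True if the file on disk still matches the checksum recorded earlier."""
    return sha256_of(path) == expected_hex


with tempfile.TemporaryDirectory() as tmp:
    tile = Path(tmp) / "tile.fits"        # hypothetical file name
    tile.write_bytes(b"SIMPLE  =  T")     # stand-in bytes, not a valid FITS file
    recorded = sha256_of(tile)            # checksum taken when the file is produced
    ok_before = verify(tile, recorded)
    tile.write_bytes(b"SIMPLE  =  F")     # simulate corruption during a transfer
    ok_after = verify(tile, recorded)

print(ok_before, ok_after)
```

The idea is that a checksum recorded when a file is produced can be compared against the file that a later job actually receives, so that silent corruption is caught before the job runs.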

When working with Pegasus, scientists run a set of computations whose workflow can, as a starting point, be sketched on paper. The abstract workflow can be described programmatically using one of the APIs, in our case Python. Once that is done, the workflow is serialized to a YAML representation, which Pegasus consumes and compiles into an executable workflow intended for a specific execution environment, such as a cloud platform or a campus cluster. HTCondor sits underneath Pegasus and is responsible for running the jobs.

Montage is an open source toolkit for assembling FITS images into custom mosaics (Berriman & Good 2017). It is written in C for performance and portability. Python binary extensions provide compiled performance on parallel platforms. The modular design of Montage, with independent modules for each step in the mosaic process, allows Montage to play well with workflow managers and enables embarrassingly parallel processing.

3 Try It Out

We have created a Docker image with everything needed to run the Montage workflow just described in a Jupyter Notebook (https://github.com/pegasus-isi/ADASS21-montage-docker-image). This Docker image contains an installation of Pegasus and HTCondor, the Montage binaries, and the workflow notebook. You can interact with this container through Jupyter Notebooks via the browser. This container environment supports testing different workflow configurations, and contains Pegasus tutorial notebooks.

4 The Demo Notebook

The notebook demonstrates proof-of-concept parallelization through creation of a small mosaic. The demo was run on a desktop machine for convenience. Because of the overhead imposed on the process by HTCondor, the real power of the approach will lie in its use on high-performance platforms.

The process involves populating three structures, maintained by Pegasus as SQLite databases:

- The "replica catalog": the input files for the processing and how to access them.

- The "transformations": the Montage modules and where to run them.

- The "workflow": the specific steps in the processing, including transforms, argument lists, which files are input and which are output, etc. Pegasus deduces when a job can run largely from when its precursor files become available.
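The dependency deduction described in the last item can be illustrated with a small standard-library sketch. This is not the Pegasus implementation or API: it only shows the general idea of deriving job order from declared input and output files. The job table is hypothetical (the module names are real Montage tools, but the file names and the simplified four-job pipeline are made up), and `graphlib` requires Python 3.9 or later.

```python
from graphlib import TopologicalSorter

# Hypothetical job table: job name -> (input files, output files).
jobs = {
    "mProject_1": ({"raw_1.fits"}, {"proj_1.fits"}),
    "mProject_2": ({"raw_2.fits"}, {"proj_2.fits"}),
    "mDiff_1_2": ({"proj_1.fits", "proj_2.fits"}, {"diff_1_2.fits"}),
    "mAdd": ({"proj_1.fits", "proj_2.fits"}, {"mosaic.fits"}),
}


def infer_dependencies(jobs):
    """Map each job to the set of jobs that produce one of its inputs."""
    producer = {}
    for name, (_, outputs) in jobs.items():
        for filename in outputs:
            producer[filename] = name
    # Inputs with no producer (the raw images) are assumed to come from
    # outside the workflow, which is the role a replica catalog plays.
    return {
        name: {producer[f] for f in inputs if f in producer}
        for name, (inputs, _) in jobs.items()
    }


deps = infer_dependencies(jobs)
order = list(TopologicalSorter(deps).static_order())
print(order)
```

In this toy example the two `mProject` jobs have no predecessors and could run in parallel, while `mDiff_1_2` and `mAdd` become runnable only once both projected images exist.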

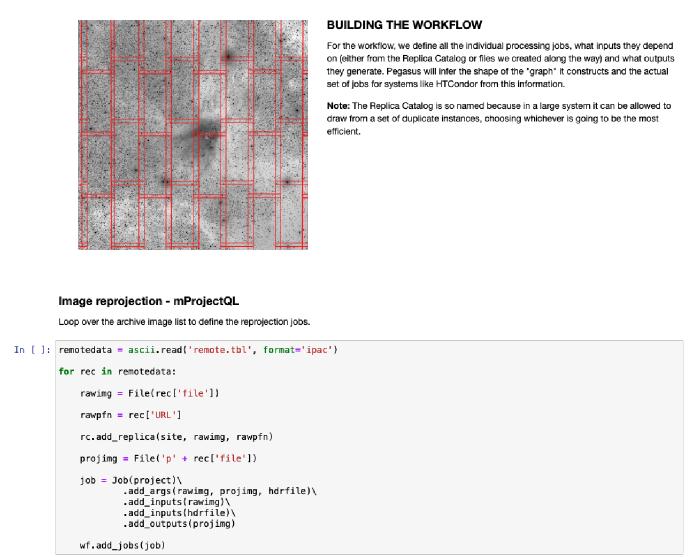

Then the Notebook asks Pegasus to devise a processing plan, and, if successful, to submit that plan to HTCondor. Figure 1 shows a screenshot of one step in the process.

5 Running the Workflow on a Parallel Platform: The Open Science Grid

We ran the Montage workflow on the OSG (Sfiligoi et al. 2009) with minimal modifications to the Python workflow script to utilize OSG-specific features:

- Data movements were managed by OSG's StashCache data infrastructure, which caches files in an opportunistic manner.

- The workflow used Red Hat Enterprise Linux 7 Singularity containers, which are cached within OSG's CernVM File System (CVMFS). Setup required changing only a few lines in our workflow generation script.

Table 1 summarizes the metrics that Pegasus reported for the 332 jobs (including jobs added by Pegasus to handle data staging and cleanup).

| Metric | Value |
|---|---|
| Number of Jobs | 332 |
| Workflow Wall Time | 54m 43s |
| Cumulative Job Wall Time | 36m 48s |
| Cumulative Job Wall Time (from submit side) | 2h 38m |
| Integrity Checking Time Spent | 3.57s (for 357 files) |

The wall times reveal considerable queuing overhead associated with running the workflows on OSG. Because the overheads are incurred at the time of job submission, it is beneficial to cluster jobs together to pay a single overhead for a "cluster" of jobs. Pegasus can do this clustering automatically based on user-specified criteria. Pegasus also adds overheads such as integrity checking, but these, by contrast, are negligible and in this case took only 3.57 seconds.
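As a rough illustration of why clustering helps, the sketch below takes the two cumulative wall times from Table 1, treats their difference as overhead, and applies a toy model in which each submitted cluster pays the average overhead once. The model and the function `clustered_overhead` are assumptions made for this example, not a Pegasus calculation: in practice the gap may include delays other than queuing, overhead varies from job to job, and not all 332 jobs are equally suitable for clustering.

```python
import math

# Values copied from Table 1.
n_jobs = 332
job_wall_s = 36 * 60 + 48             # cumulative job wall time: 36m 48s
submit_wall_s = 2 * 3600 + 38 * 60    # same quantity from the submit side: 2h 38m

# Gap between the two views, treated here as overhead (an assumption).
overhead_s = submit_wall_s - job_wall_s
per_job_overhead_s = overhead_s / n_jobs


def clustered_overhead(n_jobs, cluster_size, per_submission_overhead_s):
    """Total overhead if each cluster of jobs pays the overhead once (toy model)."""
    n_submissions = math.ceil(n_jobs / cluster_size)
    return n_submissions * per_submission_overhead_s


print(f"overhead: {overhead_s} s total, {per_job_overhead_s:.1f} s per job on average")
for size in (1, 5, 20):
    total_s = clustered_overhead(n_jobs, size, per_job_overhead_s)
    print(f"cluster size {size:2d}: about {total_s / 60:.0f} min of overhead")
```

Under these assumptions the overhead comes to about 22 s per job on average, which is several times the average job run time of under 7 s, so grouping even a handful of jobs per submission would remove most of it.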

6 Conclusions

We have successfully run Pegasus and Montage on a laptop and on the OSG and we have made our setup available to others for replication and reuse. Our next steps include optimizing performance on parallel platforms through clustering and running the workflow on commercial cloud platforms.

Acknowledgments

Pegasus is funded by the National Science Foundation under OAC SI2-SSI program grant 1664162. Previously, NSF funded Pegasus under OCI SDCI program grant 0722019 and OCI SI2-SSI program grant 1148515. Montage is funded by the National Science Foundation grant awards 1835379, 1642453 and 1440620. OSG is supported by the National Science Foundation grant award 2030508.

References

- Berriman, G. B., & Good, J. C. 2017, PASP, 129, 058006, arXiv:1702.02593

- Deelman, E., Ferreira da Silva, R., Vahi, K., Rynge, M., Mayani, R., Tanaka, R., Whitcup, W., & Livny, M. 2021, Journal of Computational Science, 52, 101200

- Sfiligoi, I., Bradley, D. C., Holzman, B., Mhashilkar, P., Padhi, S., & Wurthwein, F. 2009, in 2009 WRI World Congress on Computer Science and Information Engineering, vol. 2 of 2, 428