Assembling a Pipeline for 3D Face Interpolation

Abstract

This paper describes a pipeline built with open source tools for interpolating 3D facial expressions taken from images. The presented approach allows anyone to create 3D face animations from two input photos: one showing the initial facial expression and the other showing the final facial expression. Given the input photos, corresponding 3D face models are constructed and texture-mapped, using the photos as textures aligned with the facial features. Animations are then generated by morphing the models, that is, by interpolating their geometries and textures. This work was performed as an MS project at the University of California, Merced.

I Introduction

Realistic 3D animated characters are commonly used in video games and movies. Behind the scenes, large data sets of images are often used to achieve realistic appearances, and designers are often responsible for editing the characters to improve the final results. While significant advances have been achieved in facial animation, it is still difficult to create human-like 3D faces that always look realistic. People are very good at recognizing the differences between real humans and digital humans.

3D character animation technologies are also starting to be used in a variety of innovative products. For example, the newest iPhone (Apple's smartphone) has an application that takes pictures or videos of people and places 3D models over their faces, moving in coordination with the real faces. Such an application illustrates the growing need for simple and efficient approaches to realistic face animation. This paper describes a pipeline built with open source tools for creating animations by interpolating 3D facial expressions taken from pictures.

II Related Work

A significant amount of previous work has relied on datasets of human faces in order to build face models. For example, Blanz and Vetter [1] applied pattern classification to their dataset of human faces in order to reconstruct a 3D face model from a single 2D face image. Booth et al. [2] introduced the Large Scale Facial Model (LSFM), which automatically constructs 3D models of a wide variety of human faces from a data set of 9,633 distinct facial identities. Tran and Liu [3] also proposed a framework to construct 3D models from a large set of face images, but without requiring the collection of 3D face scan data. Huber et al. [4] presented the Surrey Facial Model, a multi-resolution model that provides face models at different resolution levels.

Facial animation can be accomplished by various approaches. Chuang and Bregler [5] describe an approach to facial animation that is based on motion capture data and blend shape interpolation. Noh and Neumann [6] proposed Expression Cloning, an approach that animates a face model by transferring vertex motion data from a source model to a target model. Lee et al. [7] introduced an approach that generates facial expressions based on muscle information from real face data.

There are also products available for creating and manipulating 3D facial models. For example, Lau et al. [8] presented Face Poser, a system for interactive modeling of 3D facial expressions, and Poser [9] is a software system that facilitates modeling and editing 3D faces through a comprehensive graphical user interface.

In this work a solution based on available open source tools is presented. 3D face models are created from photos based on the Surrey Facial Model [4]. In order to achieve a simple approach for face animation, this work focuses on animating the interpolation between a pair of input facial expressions.

II-A Implementation Details

This project was implemented in C++ with Visual Studio 2017 under Windows 10. The following C++ libraries were used:

- dlib library [10]: This library is used to process the input face images and to place feature landmarks on them. The landmarks follow the ibug [16] facial point annotation (Fig. 1), which defines 68 feature landmarks for human faces. These landmarks are later used to generate the 3D face models (with the eos library [11]) and to morph 2D images. Figures 2 and 3 illustrate example images with their respective facial landmarks.

Figure 1: Facial landmarks.

Figure 2: Facial landmarks on Albert Einstein’s face.

Figure 3: Facial landmarks on Michael Jackson’s face. -

•

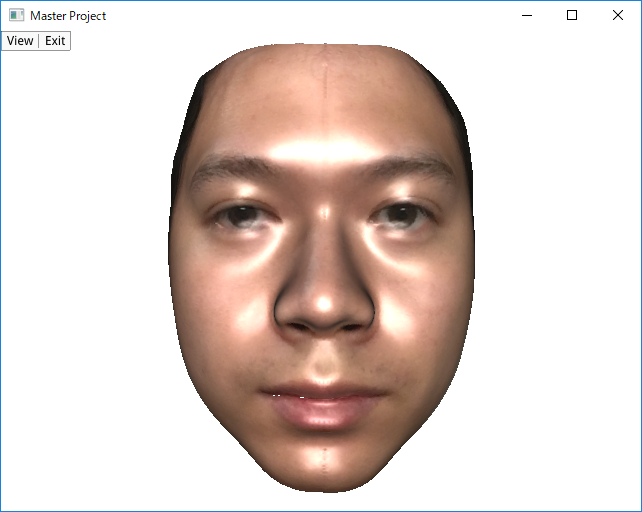

eos library [11]: This library is used to create 3D face models from input photos of human faces. The obtained models follow the Surrey Facial Model, which is based on principal component analysis applied to a large data set of face models. These models can be created in several resolutions. In this project we experimented with face models built with 3448, 16759, and 29587 vertices. This library depends on the OpenCV, Eigen, and Boost libraries. Figures 4 and 5 illustrate example models which were generated from their corresponding input photos.

[1] Front view; [2] Side view

Figure 4: 3D Face Model of Albert Einstein.

[1] Front view; [2] Side view

Figure 5: 3D Face Model of Michael Jackson.

- OpenCV library [12]: This library was used to support image operations. In particular, it was used to triangulate the facial landmarks in order to interpolate image attributes based on triangle coordinates, such that the interpolated image information preserves the facial features, as explained later in Section III-B. Figure 6 illustrates the triangulations obtained on example photos and Figure 7 illustrates the resulting interpolation, which preserves the facial features.

[1] Albert Einstein; [2] Michael Jackson

Figure 6: Triangulation on example photos.

Figure 7: Morphed image of 2 example photos.

- Eigen library [14]: This library provides functions for linear algebra, matrix operations, geometrical transformations, numerical solvers and related algorithms.

- Boost library [13]: This library provides various functions that complement the standard C++ libraries.

- Standalone Interactive Graphics (SIG) library [15]: This library provides a C++ scene graph framework for developing applications with interactive 3D graphics. The main animation visualizer was built with this library, which handled the graphical user interface and the scene graph operations, including the computation of the interpolations of images and 3D objects.
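The 68 ibug landmarks placed by dlib follow a fixed index layout, which is what allows landmark i in one photo to be paired directly with landmark i in the other photo of the same person. As a minimal sketch, assuming the usual 0-based indexing of the 68-point ibug scheme, the hypothetical helper `landmarkRegion` below (not part of dlib or eos) names the facial region each index belongs to:

```cpp
#include <cassert>
#include <string>

// Hypothetical helper, not part of dlib or eos: returns the facial region of a
// 0-based landmark index in the 68-point ibug annotation scheme.
std::string landmarkRegion(int index) {
    assert(index >= 0 && index < 68 && "the ibug scheme has 68 landmarks");
    if (index <= 16) return "jaw";       // 0-16: jaw line
    if (index <= 26) return "eyebrows";  // 17-26: both eyebrows
    if (index <= 35) return "nose";      // 27-35: nose bridge and lower nose
    if (index <= 47) return "eyes";      // 36-47: both eyes, 6 points each
    return "mouth";                      // 48-67: outer (48-59) and inner (60-67) lips
}
```

Because both photos yield the same 68 indices, no matching step is needed before triangulation or model fitting.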

III Overall Method

Our overall method consists of three main steps. First, 3D face models are created from pairs of input photos. In this work we used 4 pairs in order to obtain 4 animations between different facial expressions. These pairs are presented in Figure 8.

Second, for each pair, the corresponding texture images are morphed in order to obtain a texture image for a given interpolation factor $t \in [0, 1]$, which spans the results from the initial model ($t = 0$) to the goal model ($t = 1$).

Finally, the coordinates of the 3D models are interpolated according to the same parameter $t$ and associated with the interpolated texture image computed in the previous step. These steps are detailed in the next subsections.
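Assuming both steps use plain linear interpolation (stated for the mesh vertices in Section III-C; for the texture it is the usual landmark-based morphing formulation), they can be written with an interpolation parameter $t \in [0,1]$ as below. The notation is illustrative rather than the paper's own: $\mathbf{p}_i^0, \mathbf{p}_i^1$ are the 68 landmarks of the two photos, $\mathbf{v}_j^0, \mathbf{v}_j^1$ the $n$ mesh vertices, $I_0, I_1$ the input textures, and $W_0, W_1$ the piecewise-affine maps (one affine map per Delaunay triangle) that take a pixel $\mathbf{x}$ of the morphed texture back to each input texture.

```latex
\begin{aligned}
\mathbf{p}_i(t) &= (1-t)\,\mathbf{p}_i^{0} + t\,\mathbf{p}_i^{1}, & i &= 1,\dots,68,\\
I_t(\mathbf{x}) &= (1-t)\,I_0\!\left(W_0(\mathbf{x})\right) + t\,I_1\!\left(W_1(\mathbf{x})\right),\\
\mathbf{v}_j(t) &= (1-t)\,\mathbf{v}_j^{0} + t\,\mathbf{v}_j^{1}, & j &= 1,\dots,n.
\end{aligned}
```

Setting $t = 0$ reproduces the initial model and $t = 1$ the goal model, since $W_0$ (respectively $W_1$) then reduces to the identity.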

III-A Generation of 3D Face Models

The input 3D face models are generated from 2 photos of the same person but with different facial expressions. One of the photos is used as the initial face and the other photo is used as the goal face. At this point the coordinates of the facial landmarks are computed for each photo by using the dlib library. Figure 9 illustrates one pair of photos with the facial landmarks placed on them.

[1] Original photo; [2] Eye closed photo

The 3D face models are then generated with the eos library, using the original photos together with the facial landmarks computed in the previous step. Figures 10 and 11 illustrate the generated 3D face models.

[1] Front view; [2] Side view

[1] Front view; [2] Side view

III-B Morphed Texture Images

To morph the texture images, the coordinates of the facial landmarks are first computed for each texture image with the dlib library. The landmarks are then triangulated with a Delaunay triangulation (Fig. 12), and the texture coordinates are interpolated using barycentric coordinates, according to the position of each image pixel within its containing triangle. The texture colors are interpolated in the same way. In this way the facial features are preserved during interpolation. Figure 13 illustrates the morphed image obtained from the original and eye-closed texture images of the 3D face models.
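The per-pixel step can be sketched as follows. This is a minimal, self-contained illustration assuming the common piecewise-affine formulation of landmark-based morphing; it is not the project's code, does not call OpenCV, and the names `Vec2`, `Triangle`, `lerpTriangle`, `barycentric`, `mapToSource` and `blend` are hypothetical. For a pixel inside a triangle of the intermediate texture, its barycentric coordinates are reused to locate the matching point in the same triangle of an input texture, and the colors sampled from the two inputs are then blended:

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>

struct Vec2 {
    double x;
    double y;
};
using Triangle = std::array<Vec2, 3>;

// Intermediate triangle: each corner is the linear interpolation of the
// corresponding landmark positions, (1 - t) * a + t * b.
Triangle lerpTriangle(const Triangle& a, const Triangle& b, double t) {
    Triangle out{};
    for (std::size_t i = 0; i < 3; ++i) {
        out[i] = {(1.0 - t) * a[i].x + t * b[i].x,
                  (1.0 - t) * a[i].y + t * b[i].y};
    }
    return out;
}

// Barycentric coordinates (l0, l1, l2) of p with respect to triangle t,
// so that p = l0 * t[0] + l1 * t[1] + l2 * t[2] and l0 + l1 + l2 = 1.
std::array<double, 3> barycentric(const Vec2& p, const Triangle& t) {
    const double det = (t[1].y - t[2].y) * (t[0].x - t[2].x) +
                       (t[2].x - t[1].x) * (t[0].y - t[2].y);
    assert(std::abs(det) > 1e-12 && "degenerate triangle");
    const double l0 = ((t[1].y - t[2].y) * (p.x - t[2].x) +
                       (t[2].x - t[1].x) * (p.y - t[2].y)) / det;
    const double l1 = ((t[2].y - t[0].y) * (p.x - t[2].x) +
                       (t[0].x - t[2].x) * (p.y - t[2].y)) / det;
    return {l0, l1, 1.0 - l0 - l1};
}

// Maps pixel p of a triangle in the morphed texture to the corresponding
// location in the same triangle of one input texture.
Vec2 mapToSource(const Vec2& p, const Triangle& morphed, const Triangle& source) {
    const std::array<double, 3> l = barycentric(p, morphed);
    return {l[0] * source[0].x + l[1] * source[1].x + l[2] * source[2].x,
            l[0] * source[0].y + l[1] * source[1].y + l[2] * source[2].y};
}

// Linear blend of one color channel sampled from the two input textures.
double blend(double c0, double c1, double t) {
    assert(t >= 0.0 && t <= 1.0);
    return (1.0 - t) * c0 + t * c1;
}
```

In a complete implementation this mapping would be evaluated for every pixel of every Delaunay triangle, once per input texture. In OpenCV, `cv::Subdiv2D` can provide the Delaunay triangulation, and `cv::getAffineTransform` with `cv::warpAffine` can perform the equivalent per-triangle warp on whole image patches.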

[1] Texture image of original 3D model; [2] Texture image of eye closed 3D model

III-C Interpolation of Mesh Coordinates

Since the meshes corresponding to the two input images have the same connectivity and number of vertices, an interpolated 3D model can be obtained simply by linearly interpolating the corresponding vertices of the two input meshes. Figure 14 illustrates one interpolated 3D face model with its corresponding morphed texture image computed in the previous step.
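Because both meshes share the same connectivity, the interpolation reduces to a per-vertex blend. The following is a minimal sketch assuming the vertices are stored as a flat list of 3D positions in the same order for both meshes; `Vertex` and `interpolateVertices` are hypothetical names, not eos or SIG types:

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

using Vertex = std::array<float, 3>;

// Linearly interpolates two meshes that share the same connectivity:
// v(t) = (1 - t) * v0 + t * v1 for every vertex. Triangle indices are not
// touched because they are identical for both meshes.
std::vector<Vertex> interpolateVertices(const std::vector<Vertex>& from,
                                        const std::vector<Vertex>& to,
                                        float t) {
    assert(from.size() == to.size() && "meshes must have the same vertex count");
    assert(t >= 0.0f && t <= 1.0f);
    std::vector<Vertex> result(from.size());
    for (std::size_t i = 0; i < from.size(); ++i) {
        for (std::size_t k = 0; k < 3; ++k) {
            result[i][k] = (1.0f - t) * from[i][k] + t * to[i][k];
        }
    }
    return result;
}
```

Only the vertex positions change from frame to frame, so the triangle list of the shared topology can be reused unchanged for every interpolated model.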

[1] Front view; [2] Side view

IV Results

Figures 15 to 18 at the end of this paper illustrate the interpolated 3D face models obtained for all 4 pairs that were considered. The in-between models shown were obtained with the interpolation parameter ranging from 0 to 1 in increments of 0.1.
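Increments of 0.1 from 0 to 1 give 11 parameter values, including the two input models at 0 and 1. A minimal sketch of generating them (the helper name `interpolationParameters` is hypothetical) computes each value as k / steps instead of repeatedly adding 0.1, so rounding errors do not accumulate and the last value is exactly 1:

```cpp
#include <cassert>
#include <vector>

// Interpolation parameters 0, 1/steps, ..., 1. Each value is computed
// directly from the integer k, avoiding accumulated floating-point error.
std::vector<double> interpolationParameters(int steps) {
    assert(steps > 0);
    std::vector<double> params;
    params.reserve(static_cast<std::size_t>(steps) + 1);
    for (int k = 0; k <= steps; ++k) {
        params.push_back(static_cast<double>(k) / steps);
    }
    return params;
}
```

With `steps = 10`, this yields the 11 values used for one animation sequence.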

The morphed 3D models for all pairs look natural both with respect to the mesh and texture image deformations. Table 1 presents performance measurements showing how long it takes to compute one interpolated 3D model frame at different resolutions with a growing number of vertices. A 3D face model with 29587 vertices is still processed fast enough to produce smooth animations.
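Per-frame timings of this kind can be obtained by timing a batch of frames and averaging, which reduces the influence of timer resolution. The sketch below is a generic illustration with `std::chrono`, not the benchmarking code used for Table 1; the helper `meanFrameMilliseconds` is hypothetical, and in practice the timed callable would be one full interpolation step (vertices and texture):

```cpp
#include <cassert>
#include <chrono>

// Hypothetical benchmark helper: calls `frame` `repetitions` times and returns
// the mean wall-clock time per call in milliseconds.
template <typename Frame>
double meanFrameMilliseconds(Frame frame, int repetitions) {
    assert(repetitions > 0);
    const auto start = std::chrono::steady_clock::now();
    for (int i = 0; i < repetitions; ++i) {
        frame();
    }
    const std::chrono::duration<double, std::milli> elapsed =
        std::chrono::steady_clock::now() - start;
    return elapsed.count() / repetitions;
}
```

`std::chrono::steady_clock` is used because it is monotonic and therefore unaffected by system clock adjustments during the measurement.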

Table 1: Run time of one interpolated 3D model frame at different resolutions.

V Discussion

The Surrey Facial Model [11] used in this work allowed us to construct natural-looking 3D face models; however, it did not produce good results for all types of faces. Problems were encountered especially for people with rounded faces. The Surrey Facial Model was constructed from 169 scans, and nearly 60% of the scanned subjects were Caucasian. This might explain why it was difficult for the Surrey Facial Model to achieve precise face scaling for all types of faces.

While the obtained results were smooth and of good quality, additional facial objects must also be considered in order to achieve complete results. For example, models of the eyeballs, teeth, tongue, eyebrows, eyelashes and hair are important for achieving complete animated faces.

Improved rendering techniques should also be considered. The presented results were rendered using only a standard Phong illumination model with a single frontal light source. Dedicated shaders that reproduce the illumination characteristics of skin should be included in order to replicate skin properties and achieve improved illumination results.
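For reference, the standard Phong reflection model with a single light can be sketched as below. This is a generic textbook formulation, not the SIG library's shader; the function names and the coefficients `ka`, `kd`, `ks` and `shininess` are illustrative assumptions. Each surface point receives a constant ambient term, a diffuse term proportional to the cosine between the normal and the light direction, and a specular highlight around the mirror direction, with no term modeling light scattered beneath the surface:

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<double, 3>;

double dot3(const Vec3& a, const Vec3& b) {
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

// Phong reflection for one light: ambient + diffuse + specular.
// n: unit surface normal, l: unit vector toward the light,
// v: unit vector toward the viewer.
double phongIntensity(const Vec3& n, const Vec3& l, const Vec3& v,
                      double ka, double kd, double ks, double shininess) {
    assert(std::abs(dot3(n, n) - 1.0) < 1e-9 && "normal must be unit length");
    const double nDotL = dot3(n, l);
    double intensity = ka + kd * std::max(0.0, nDotL);
    if (nDotL > 0.0) {
        // Mirror the light direction about the normal: r = 2 (n.l) n - l.
        const Vec3 r{2.0 * nDotL * n[0] - l[0],
                     2.0 * nDotL * n[1] - l[1],
                     2.0 * nDotL * n[2] - l[2]};
        intensity += ks * std::pow(std::max(0.0, dot3(r, v)), shininess);
    }
    return intensity;
}
```

With a frontal light and viewer (`n`, `l` and `v` all pointing toward the camera), a point facing the camera receives `ka + kd + ks`, while a point facing away from the light receives only the ambient term `ka`.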

VI Conclusion

The described approach presents a simple pipeline to achieve fast and natural 3D facial animation between given expressions. The approach can be replicated with the use of open source tools and animations can be obtained just from input face photos of different expressions.

References

- [1] V. Blanz and T. Vetter, “A morphable model for the synthesis of 3D faces”, Proceedings of the 26th Annual Conference on Computer Graphics and Interactive Techniques, pages 187-194, ACM Press/Addison-Wesley Publishing Co., 1999.

- [2] J. Booth, A. Roussos, S. Zafeiriou, A. Ponniah, and D. Dunaway, “A 3D Morphable Model Learnt from 10,000 Faces”, 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016.

- [3] L. Tran and X. Liu, “On Learning 3D Face Morphable Model from In-the-wild Images”, 2018.

- [4] P. Huber, G. Hu, R. Tena, P. Mortazavian, W. P. Koppen, W. J. Christmas, M. Rätsch, and J. Kittler, “A Multiresolution 3D Morphable Face Model and Fitting Framework”, Proceedings of the 11th Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, 2016.

- [5] E. Chuang and C. Bregler, “Performance Driven Facial Animation using Blendshape Interpolation”, Computer Science Technical Report, Stanford University, 2002.

- [6] J. Noh and U. Neumann, “Expression Cloning”, Proceedings of SIGGRAPH ’01, 2001.

- [7] H. Lee, E. Kim, G. Hur, and H. Choi, “Generation of 3D facial expressions using 2D facial image”, Fourth Annual ACIS International Conference on Computer and Information Science (ICIS’05), pages 228-232, 2005.

- [8] M. Lau, J. Chai, Y. Xu, and H. Shum, “Face poser: Interactive modeling of 3D facial expressions using model priors”, pages 161-170, 2007.

- [9] “Poser”, Poser. [Online]. Available: https://my.smithmicro.com/poser-3d-animation-software.html .

- [10] “Dlib c++ Library”, Dlib c++ Library. [Online]. Available: http://dlib.net/ .

- [11] Patrik Huber, “patrikhuber/eos,” GitHub, 14-Dec-2018. [Online]. Available: https://github.com/patrikhuber/eos .

- [12] “OpenCV library”, OpenCV library. [Online]. Available: https://opencv.org/ .

- [13] “boost c++ libraries”, boost c++ libraries. [Online]. Available: https://www.boost.org/ .

- [14] “Eigen”, Eigen. [Online]. Available: http://eigen.tuxfamily.org/ .

- [15] Marcelo Kallmann, “mkallmann/sig”, bitbucket. [Online]. Available: https://bitbucket.org/mkallmann/sig .

- [16] “ibug”, ibug. [Online]. Available: https://ibug.doc.ic.ac.uk/resources/facial-point-annotations/ .