Artworks Reimagined: Exploring Human-AI Co-Creation through Body Prompting

Abstract.

Image generation using generative artificial intelligence is a popular activity. However, it is almost exclusively performed in the privacy of an individual’s home via typing on a keyboard. In this article, we explore body prompting as input for image generation. Body prompting extends interaction with generative AI beyond textual inputs to reconnect the creative act of image generation with the physical act of creating artworks. We implement this concept in an interactive art installation, Artworks Reimagined, designed to transform artworks via body prompting. We deployed the installation at an event with hundreds of visitors in a public and private setting. Our results from a sample of visitors () show that body prompting was well-received and provides an engaging and fun experience. We identify three distinct patterns of embodied interaction with the generative AI and present insights into participants’ experience of body prompting and AI co-creation. We provide valuable recommendations for practitioners seeking to design interactive generative AI experiences in museums, galleries, and other public cultural spaces.

The study setup and user flow through four application screens (top) in which users give consent (1), select an artwork (2), pose (3), and exit the camera booth via an end screen (4). At the event, this was followed by an interview in which the results were revealed (5).

1. Introduction

Image generation with artificial intelligence (AI) has gained popularity, with

millions of practitioners exploring text-to-image generation systems for recreation and creative exploration (Oppenlaender, 2022; McCormack et al., 2024; Kahil, 2023; Sanchez, 2023).

However, text-to-image generation is almost exclusively conducted in the privacy of an individual’s home, and limited to typing on a computer keyboard. We argue the current focus of image generation on typing and discrete prompts

by one solitary creator limits opportunities for engaging experiences, due to four key constraints of text-to-image generation:

literacy,

input control,

social creativity,

and

the setting.

Limited by literacy:

Frequently regarded as an instrumentalist use of technology,

text-to-image generation is a process in which AI is used as a tool to visually translate concepts or ideas (Coeckelbergh, 2023).

For this translation to

be effective, English literacy is required.

Further, some image generators require the use of specific keywords to produce high-quality outputs (Oppenlaender, 2023), which demands expertise in “prompt engineering” (Oppenlaender, 2024, 2022, 2023; Oppenlaender et al., 2023a)

and familiarity with art concepts, such as styles and media (Oppenlaender, 2023).

These language-based requirements

exclude certain populations,

such as the illiterate, young children, and all those who are not familiar with the English language.

Limited input control and expressivity:

The level of control over text-to-image generation is limited by language (McCormack et al., 2023).

Prompt engineering is tedious and it is not possible to fully control text-to-image generation with discrete prompts (Oppenlaender et al., 2023a), leaving much of the initial image generation to randomness (Hauhio et al., 2023).

In practice, the first generated image is often one step in a longer creative process that involves many tools, such as image editors, image-to-image generation, inpainting, and others (Oppenlaender, 2024; Palani and Ramos, 2024; Sanchez, 2023).

ControlNet was a remarkable breakthrough technology (Zhang et al., 2023), allowing fine-grained control over image generation via human poses and other input forms.

A pose is a skeleton-like structure with key points that correspond to major joints and body parts.

ControlNet gained widespread popularity among AI art practitioners.

However, rarely do these poses originate from the practitioners’ own bodies. Instead, poses are transferred from existing images, taken from openpose datasets, or created with tedious manual editing.

In addition to questions about copyright (Samuelson, 2023; Oppenlaender et al., 2023b; Samuelson, 2024), this

“raises issues of embodiment, materiality, and material agency that traditionally come into play when making a visual artwork” (McCormack et al., 2023).

Limited collaboration and social creativity:

Contemporary literature views creativity as a social activity (Frich et al., 2019; Amabile, 1983), with the concept of the lone creator being dispelled as a myth (Lemley, 2012).

Yet, image production with generative AI is almost exclusively completed by a solitary creator.

The social and performative aspects of art creation are lost due to the privacy of the image generation setting and the constrained text input modality.

Collaborative websites, such as Artbreeder111https://www.artbreeder.com,

have demonstrated that collaborative image generation can be an engaging experience, with wide audience appeal.

Limited suitability for public settings:

Confined to the personal and narrow triangular interaction space between user, keyboard, and computer display,

the practice of text-to-image generation does not lend itself well to public settings, such as

Galleries, Libraries, Archives, and Museums (GLAM).

For such public settings, input modalities

should be dynamic and intuitive, offering glimpses of the generative process to the audience and building anticipation for the final image.

Typing on a keyboard in front of a computer screen is not expressive, in this regard, and does not provide an engaging audience experience.

Addressing these limitations could make image generation more inclusive and accessible to a wider audience (Kinnula et al., 2021). Prior work aimed to break free from these four limitations by exploring audience participation in image generation in a museum context. Kun et al.’s interactive GenFrame allowed museum visitors to shape the image generation process via three knobs controlling different parameters of the image generation process (Kun et al., 2024a, b). However, this interaction and the input modality are limited, leaving much of the agency in the act of co-creation to the AI system, not the user.

In this paper, we explore body prompting as a novel input modality for image generation. A body prompt is an embodied input for the image generation system in form of a human pose. Body prompting extends image generation interaction beyond textual inputs to reconnect the creative act of image generation with the expressive physical act of art creation. We enable an audience in a public setting to “reimagine” an existing artwork with their body pose, thereby creating their own version of it. To this end, we designed and implemented Artworks Reimagined, an interactive art installation conceived for the European Researchers’ Night 2023 that, using generative AI, transforms and reinterprets classical artworks via body prompting.

We evaluate body prompting in a user study conducted in a real-world setting, specifically, at a public event with hundreds of visitors. However, some people may be shy and feel inhibited when performing in public, and society is biased against introverts (Cain, 2012). For this reason, we designed and tested two separate body prompting settings: a public staging area where people could body-prompt in front of an audience, and a private booth where people could body-prompt and view the results hidden from the public eye (cf. Figure 2). Our investigation focused on observing participants’ interactions, behavior, and body prompting strategies, followed by a semi-structured interview. This study is exploratory, emphasizing how people naturally engage with generative technology via body prompting when encountering it in a public setting without specific instructions. Our study is guided by the following research questions:

-

RQ1:

Generated images: What images do participants create with body prompting? Is there a difference in images between the public and private setting?

-

RQ2:

User experience: How do participants experience body prompting? And how do they experience AI co-creation via body prompting?

-

RQ3:

Participant behavior: What are the decisions and preferences of participants in regard to body prompting? What goals do participants pursue when body prompting?

-

RQ4:

Body prompting in public: How do personality traits, as measured by the “Big Five” (Digman, 1990), affect body prompting and the use of the installation?

After reviewing related work in Section 2, we present the design of Artworks Reimagined and the study methodology in Section 3. We then provide an overview of the images generated and a synthesis of observations from the event in Section 4. We discuss our findings and provide design recommendations for practitioners and researchers in Section 5. We conclude in Section 6.

2. Related Work

Image generation is predominantly performed via typing prompts on a keyboard in a private space. Therefore, in the vast majority of cases, prompting is “an isolated and individual experience” (Mahdavi Goloujeh et al., 2024). In this section, we focus on related work that sought to break free from this reigning interaction paradigm to develop new inputs and bring the activity of image generation into the public sphere. We first review research on interaction with public displays (Section 2.1), and then examine embodied experiences (Section 2.2) and generative AI in public installations (Section 2.4).

2.1. Interaction with Public Displays

Public displays offer opportunities to study how users interact with technology. However, public displays bring unique challenges, such as display blindness (Müller et al., 2009), interaction blindness (Hosio et al., 2016), and the first-click problem (Kukka et al., 2013). The latter arises when users are unsure how to initiate interaction with a public display. In this section, we focus on challenges related to the public setting of our study.

Privacy concerns require consideration. Brudy et al. identified ‘shoulder surfing’ – where unauthorized individuals may view sensitive information – as a pertinent risk associated with public displays (Brudy et al., 2014). Further exploring privacy issues, Memarovic examined how the public’s concerns about photography in the vicinity of public displays influence user interaction (Memarovic, 2015). Their study emphasized the importance of transparent policies regarding the storage and usage of photos taken in these contexts, highlighting a general need for clear communication about the handling of users’ data. In a comparative study, Collier et al. addressed differences between private and public self-service technologies (Collier et al., 2014). Their findings suggest that perceptions of control and convenience significantly vary depending on the setting, with public technologies often viewed as less controllable and convenient than their private counterparts (Collier et al., 2014). This perception could influence user engagement and satisfaction with the public display in our study. Hosio et al. studied privacy in interactive public displays (Hosio et al., 2019; Alorwu et al., 2022). They found that people are surprisingly willing to volunteer highly sensitive personal information in public settings.

These studies reveal a complex landscape of user behavior and expectations when interacting with displays in public spaces. Addressing these concerns is crucial for designing an interactive public installation.

2.2. Interactive Experiences via Embodied Interaction

Embodied interaction can provide an engaging experience. This is particularly relevant in the context public cultural spaces, such as GLAM institutions. In museums, the contact with more traditional art forms, namely painting or sculpture, tends to occur passively through a cognitive engagement with the artworks. Digitally enriched museum experiences (Calvi and Vermeeren, 2023) open new opportunities to experience and interact with such artworks through embodied cognitive engagement via human-technology interaction. Gestures and posing are an engaging way of meaning making in the GLAM context (Steier et al., 2015; Steier, 2014; Fu et al., 2024). In the remainder of this section, we review prior work on embodied interaction in the context of GLAM institutions and other public settings.

Trajkova et al. explored engaging museum visitors with embodied interaction (Trajkova et al., 2020). Their study was aimed at human-data interaction, enabling people to explore data sets and interactive visualizations with gestures and body movements.

Oppenlaender and Hosio presented a system for museum visitors to provide feedback for artworks, including selfie photos and selfie videos (Oppenlaender and Hosio, 2019). The latter was perceived as a surprising way of interacting with an artwork and its artist. Kozinets et al. also explored museum selfies (Kozinets et al., 2017), finding selfies to be a way to construct narratives about oneself. However, their study did not feature embodied interaction with dynamically generated artifacts in the museum context.

Positive effects of interactive technological engagement with artworks have been reported. For example, aesthetic appreciation (including interest, intensity, pleasure, and learning) was higher in artworks with interactive elements compared to non-interactive physical objects (Jonauskaite et al., 2024). In Coeckelbergh’s framework, body prompting can be conceptualized as a performance-based approach to interaction and AI image generation (Coeckelbergh, 2023). Body prompting is a poietic performance within human-technology co-creation. The performance-based approach emphasizes the process of interaction in which novel artistic (quasi-)subjects and objects emerge and are produced in and by the processes instead of instruments and tools (Coeckelbergh, 2023).

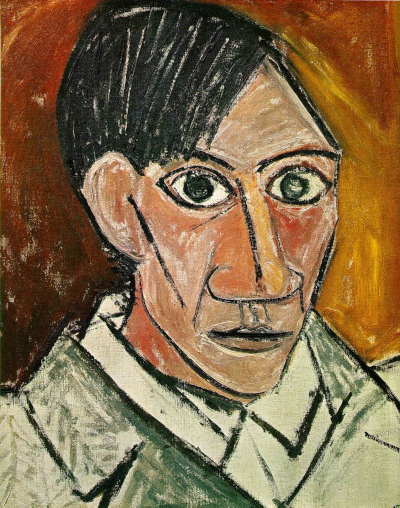

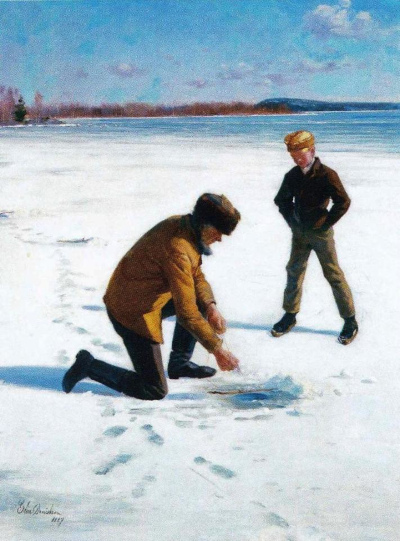

2.3. Re-creating and Reinterpreting Artworks

Art museums are increasingly digitizing their collections and making them accessible on the internet thus fostering different reinterpretations and re-creations of the paintings (Barranha, 2018). Several experiments have shown that people enjoy re-creating and reinterpreting famous artworks. Launched at the height of the COVID-19 pandemic, The Getty Museum Challenge was a widely successful initiative, which invited the public to re-create an artwork with household items (Romero, 2020). In 2023, the Mauritshuis Museum in The Hague launched another challenge, which invited the public to reinterpret Vermeer’s “The Girl with a Pearl Earring” using different processes, including generative AI (Mauritshuis Museum, 2023). The AI-generated images were later exhibited in the Museum in place of Vermeer’s famous painting, in a digital loop display which featured a wide range of experiments produced with different media. In parallel to such institutional initiatives, generative AI has also been used by researchers to reinterpret artists’ self-portraits (Barranha, 2023).

These examples of re-creating and reinterpreting artworks suggest that new activities designed to explore art collections with the use of generative AI can foster participatory creative experiences. However, the Getty and Mauritshuis Museum challenges were completed from the participants’ homes, re-creating or reinterpreting the artwork either with body poses and household items or generative AI. In our work, we explored how visitors could be engaged to re-create or reinterpret an artwork with generative AI and their bodies at a public event.

2.4. Generative AI in Public Exhibitions

Generative AI has been explored in the context of public exhibitions. In this section, we review three interactive installations that used generative AI.

The artist duo Varvara & Mar presented an art installation in which the human impact on a landscape is gradually revealed using eye-tracking and generative AI (Canet Sola and Guljajeva, 2024). While their interactive art installation requires bodily presence, it is driven by gaze-based interaction with the public display, not body posing.

Benjamin and Lindley explored embodiment in Shadowplay (Benjamin and Lindley, 2024). Users of this AI art installation produced shadows on a wall, which served as input for the image generation AI. Our system differs in that the users’ body directly serves as input to re-create or reinterpret an existing artwork via body prompting.

GenFrame by Kun et al. (Kun et al., 2024a, b) is a more recent generative art piece designed for the museum context. GenFrame’s picture display gives the appearance of a traditional artwork, however the audience can control the image generation with three rotating knobs. Like our work, GenFrame was implemented with ControlNet and StableDiffusion. Nevertheless, the interaction affordances of GenFrame are limited by design. Our work explores body prompting as an expressive way of providing input to the image generation process.

3. Method

This section first provides background information on the public event that was the context for our study and our motivation and design goals for Artworks Reimagined. We then describe the study design and procedure, research materials, data collection and analysis, and technical implementation.

3.1. Background and Design Goals

The European Researchers’ Night is an annual event, which aims to interest the general public in research. It is one of the biggest science events in Finland, held at several universities nation-wide. The event gives the public the opportunity to discover the wonders of science in fun and inspiring ways. As a multidisciplinary event, Researchers’ Night offers a wide range of activities from workshops to laboratories, presentations, and lectures. The event program caters to the general public, including visiting school groups and parents with children.

Our study was born from our previous experience hosting an exhibit at the European Researchers’ Night in 2022 (Oppenlaender et al., 2023c, b). At the time, text-to-image generation was still a new phenomenon, and many visitors had not tried it before. The exhibition stand consisted of two laptops on which visitors could try out three text-to-image generation systems (Midjourney, Stable Diffusion, and DALL-E). One laptop was connected to a large public display. During this event, we observed that while interesting for the person writing the prompts, textual interaction with the image generation system was not very appealing for bystanders in a public audience, due to prompts being written in private. In the following year, we designed an interactive art installation to address this issue.

The design of our installation had three goals. First, we sought to overcome the limitations in engagement that we had observed in 2022 and provide a highly engaging experience for visitors of the local Researchers’ Night event. Second, we aimed to allow visitors to reconfigure an existing artwork with an input modality other than typing. To this end, we selected posing (“body prompting”) as input modality. Visitors would be able to reimagine an existing artwork by posing in front of a camera. Third, like a camera booth, the installation was designed to be easy to use and self-guiding, allowing its user to go through the steps necessary to take a selfie photo.

3.2. Artworks Reimagined

We created an interactive art installation, called Artworks Reimagined, that guides its users through four simple steps (depicted in Figure 1 and described in detail in Section 3.3). The physical setup of the installation is depicted in Figure 2. Divider walls were used to define four separate areas. The public posing stage allowed participants to select an artwork on the touch screen and pose in front of the camera. This setup was mirrored in the private camera booth which was cordoned off and protected from viewing with divider walls. Results from the public booth were viewed on a large screen controlled by a laptop, whereas results from the private booth were viewed in private on two laptops equipped with privacy screens.

We selected artwork images for the installation from two sources. The first source included paintings from the “Golden Age of Finnish Art” (circa 1880-1910). We identified artworks by visiting Wikipedia pages of prominent artists from that time period.222https://en.wikipedia.org/wiki/Golden_Age_of_Finnish_Art The second source was a list of popular paintings on WikiArt.333https://www.wikiart.org/en/popular-paintings/alltime When selecting artworks, we avoided nudity and other depictions not appropriate for public viewing in the presence of children. This convenience sample of 60 artworks (29 from the Finnish Golden Age and 31 from WikiArt) represents a mix of well-known international masterpieces and artworks with a deep local context.

The study setup consisted of four separate areas: 1) the public viewing and interview area, 2) the public posing stage with public display, 3) the private posing area with display, and 4) the private viewing area with two laptops equipped with privacy screens.

3.3. Study Design and Procedure

The aim of our study was to observe and understand how body prompting is used and experienced in the context of a public event. Therefore, the research was conducted “in-the-wild”, focusing on observing participants in an environment outside of the controlled laboratory, without interference from researchers. The research was exploratory and participants were not given specific posing instructions.

The study procedure is summarized in Figure 1. Participants were invited to walk up to the public display. The first application screen consisted of a consent form informing the participants about the extent and use of the collected data. Participants were specifically informed that only an anonymous, stick figure-like pose would be collected, not the original photo. After providing consent by clicking a button, participants selected an artwork from the list by touching the screen. Next, participants were informed that a timer would count down from 10 seconds. After confirming this message, participants could see themselves on the screen and assume a pose while the timer counted down to zero. The posing was supported by showing the artwork for a brief moment before displaying the camera feed, and a small version of the artwork was additionally shown in the corner of the screen while posing. Participants were not given any instructions on what specific pose to assume and were completely free to deviate from the source artwork. Finally, participants were shown a short unique code from a curated list of Finnish and English words, generated with a language model, and informed to exit the booth in the final application screen. The short code was used to associate the subsequent interview data with the generated image.

Following the interaction with the installation, one of five research assistants invited the participants to a semi-structured interview recorded using a mobile phone. The assistants showed the participant(s) the artwork and detected body prompt during the interview and recorded participant(s)’ reactions and comments in response to seeing the generated artwork.

Architecture.

The study was conducted in accordance with the policies of the University of Jyväskylä’s Institutional Review Board (IRB) and the Finnish National Board on Research Integrity (TENK). Children under the age of 18 were allowed to use the installation under the supervision of adults, but were excluded from the interviews. In this article, we only focus on the data collected from 79 adults who agreed to an interview.

3.4. Data Collection and Analysis

We developed an interview guide consisting of 12 questions. Besides basic demographics, the research assistants asked participants about their motivation for selecting the artwork, the body prompt, and the choice of private or public posing area. The interviewers also inquired how participants experienced body prompting and what they wanted to express with their body prompt. The structured questions were followed by open-ended follow-up questions (“can you describe why?”). A Likert-scale question captured how enjoyable the experience was for the participant (from 1 – Not At All Pleasant to 5 – Extremely Pleasant). The interview also included the ten-item questionnaire on key personality traits (commonly referred to as “Big Five”) (Digman, 1990).

The generated image was revealed on the screen at the end of the interview and participants were asked whether the image expressed their original intent, thus giving insights into co-creative aspects of body prompting, and any other thoughts about their body prompt and the generated image.

The interview data was transcribed using a text-to-speech service, translated from Finnish into English using Google Translate, and manually reviewed and corrected by three research assistants who listened to the recordings and manually corrected translation errors. The qualitative data was analyzed following a grounded theory approach (Glaser and Strauss, 1967). Three authors (one Postdoc in Cognitive Science, one PostDoc in Computer Science, and one PhD student with professional experience in user experience design) discussed a preliminary coding scheme for each question as a starting point for inductive coding. The three authors then individually coded the responses of a sample of 30 participants. In a discussion following this individual coding session, the authors noticed that coding the data was straightforward in all cases (McDonald et al., 2019). That means, in all but a very few cases, it was easy to identify themes in the data, and in two other questionnaire items, the responses were too diverse to identify common themes. Therefore, it was decided that one author would inductively code the interview data.

The generated images and the body prompts (i.e., poses) were rated by the three authors along three criteria: a) dynamism of the source artwork (low/medium/high), b) dynamism of the participant’s pose (low/medium/high), and c) a comparison of the source artwork and generated image in terms of whether a change in narrative took place. For a) and b), the authors collaboratively agreed on a codebook, as listed in Table 2 in Appendix A. With the metric of ‘dynamism’, we intend to capture the expressiveness of the artwork and body prompt. A static pose was rated to be of low expressiveness, whereas a highly dynamic pose was rated as being very expressive. For c), the authors agreed to code a change in narrative if a subject engaged in a different activity or had a different expression (e.g., static versus humorous), or if the model hallucinated artifacts that caused or strongly contributed to a change in narrative. After a first rating round, the ratings were discussed and each author revised their ratings for internal consistency.

Employing Fleiss’ Kappa for inter-rater reliability assessment, our study found high agreement levels among the three raters across all three variables: source artwork dynamism (), pose dynamism (), and narrative change (). These results underscore the raters’ cohesive interpretations and a consistent and robust evaluation process for the studied variables. After calculating inter-rater agreement, a majority vote between the three authors was used to produce the final set of ratings.

In addition, one author analyzed and coded the difference between the source artwork and the body prompt, characterizing the latter as either being an imitation (re-creation) of the source artwork or an attempt to ‘reimagine’ (reinterpret) the artwork with a body prompt that significantly differs from the source artwork. The latter equates to wanting to change the narrative between source artwork and generated artwork via the body prompt. It was further coded whether this narrative change in the resulting image originated from the generative model (e.g., by hallucinating artifacts or facial expressions) or the user (via the body prompt), with options ‘model’, ‘user’, ‘both’, and ‘n/a’ (meaning no major change in narrative took place).

3.5. Technical Implementation

We developed a software architecture based on T2I-Adapter (Mou et al., 2023) with four different inputs (depicted in Figure 3):

- (1)

-

(2)

Artwork: Style information was extracted from the selected source artwork and used as an input for the T2I-Adapter.

-

(3)

Prompt: A textual prompt was generated from the selected source artwork using CLIP-Interrogator (version 0.6.0).444https://github.com/pharmapsychotic/clip-interrogator

- (4)

Images were generated with Stable Diffusion v1.5 (Rombach et al., 2022) with 50 steps and cfg 8.0. The generated images were upscaled twice with Real-ESRGAN for viewing on the large public display. GFPGAN was used to enhance faces. The image generation architecture was packaged as a cog container and uploaded to Replicate.com where it is publicly available for inference via an API.555https://replicate.com/joetm/camerabooth-openpose-style

Two React-based web applications were developed, one for body prompting and one for viewing the results. Both applications were hosted in AWS S3 buckets, using an ssl-encrypted AWS Cloudfront to provide a secure connection.

The first application guided users through the process with a linear application flow (c.f. Figure 1). The application captured the photo from the webcam MediaStream using the MediaDevices API once the timer hit zero. An AWS Gateway was set up to proxy requests from the web application to Replicate’s API. Results from the API were received with a webhook (AWS Lambda function) and stored in a JSON file hosted in an S3 bucket. The application was automatically reset after 60 seconds once the final application screen was reached. The main purpose of this timed reset was to reduce queuing for participants at the public viewing area — the bottleneck of our study design — and to give more time to the subsequent interview. The source artworks were shuffled after each image generation.

The second application enabled the research assistants to scroll through the generated images with keyboard arrow keys. The application used long-polling to fetch the list of generated images. A red square on the screen indicated the number of newly generated images available for viewing. The participants pose was shown in the corner of the screen. Generated images were viewed either on a public display or in private on two laptops with privacy screens, depending on which posing area was chosen by the participant.

Example of body prompts and resulting generated images.

4. Results

This section first provides information on the participants and then proceeds to answer our research questions.

4.1. Participants

Interviews were conducted with 79 participants after they used the installation. Participants were on average 37 years old (, , ) and included 46 women, 32 men, and one non-binary participant.

Participants’ Big Five personality traits — Openness, Conscientiousness, Extraversion, Agreeableness, and Neuroticism — were assessed on five-point Likert scales. Participants’ above average openness scores (, ) suggest a general tendency towards creativity and openness to experience within the cohort. Conscientiousness followed closely (, ), indicating a high level of reliability and organization among participants. Extraversion and Agreeableness scores were slightly lower ( and , respectively, and ), pointing to moderate levels of sociability and empathy. Neuroticism received the lowest average score (, ), suggesting a lower degree of emotional instability and stress sensitivity in the group. These findings offer a nuanced view of the personality composition within the participant sample, highlighting a tendency towards openness and conscientiousness, and providing context for our exploration into the behaviors of the study cohort.

Due to the study being conducted in the field without control over the number of participants per condition (i.e., group versus solo participation, body prompting strategy, artwork choice, and public versus private posing area), we focus on describing the images in Section 4.2 and qualitatively analyzing the interview data in sections 4.3–4.6. For a rich description, we contextualize participant quotes with the codes listed in Table 1.

| Code | Participants | Posing Area | Posing Strategy | Participation |

| A | 22 | Public | Reimagine | Group |

| B | 13 | Private | Reimagine | Group |

| C | 12 | Public | Reimagine | Alone |

| D | 9 | Private | Reimagine | Alone |

| E | 8 | Public | Imitate | Alone |

| F | 6 | Private | Imitate | Alone |

| G | 5 | Private | Imitate | Group |

| H | 4 | Public | Imitate | Group |

For participating groups, interviews were held with one group member, but other group members were allowed to comment. For simplicity, we refer to both groups and single participants as ‘participant’ in this work. In the following section, we describe the images generated at the event.

4.2. RQ1: Generated Images

In total, 172 images were created during the six-hour event (112 images in public and 60 images in the private booth). Due to the diversity of source artworks, the body prompts are difficult to compare. In this section, we provide a brief descriptive overview of the 172 generated images and body prompts. This section mainly reports on general trends, while specific outstanding instances are highlighted in Section 4.6.1. A gallery of all generated images is available at https://artworksreimagined.com.

Overall, the style of the source artwork was reproduced well, to a degree where it was clearly recognizable which source artwork was used as style reference (see Figure 4). However, the generated images captured the source artwork to varying degrees, leaving room for interpretation and surprise.

The body prompts had varying degrees of expressiveness. Some participants simply stood motionless in front of the camera in a neutral pose with arms dangling beside their hips. Other participants were more dynamic and expressive, raising limbs into the air. A few participants went to great lengths to produce their body prompts (as depicted in Figure 5, bottom center). The generative model’s output would reflect the same number of posing subjects. Overall, there were no noticeable differences between the body prompts performed in public and private, with a few exception (described in Section 4.6.1).

We classified whether a change in narrative had taken place between source artwork and generated image. In almost half of the generated images (46.8%) a change originated from the participant’s body prompt, which means the pose introduced significant modifications in the generated image. In another 29.1% of the generated images, no change in narrative took place and the new generated image appeared to be a reproduction of the source artwork’s original idea, with some minor changes (e.g., number of subjects).

About a quarter of the participants (24.1%) encountered surprising generative artifacts in the generated image. Co-creation with AI can involve surprising hallucinations by the generative model. With hallucinations, we specifically refer to artifacts introduced by the generative model that cannot be found in the source artwork or in the participant’s body prompt, often resulting in a change in narrative between the source artwork and the generated image. In this study, hallucinatory artifacts included door frames, wooden sticks, fields with houses in the distances, animals, and more. However, these generated elements in the image did not necessarily deviate from the narrative portrayed in the source artwork.

About 8% of the participants encountered surprising artifacts originating from the generative model that clearly changed the narrative. Another 16.2% of participants encountered images where the narrative change originated both from the model and the participant. These were often images where the participant’s pose deviated from the source artwork, with the AI adding surprising elements, such as additional characters or changes to the subjects. In other instances, the model added minor hallucinatory elements that still resulted in a change of narrative. For instance, in the generated image in Figure 5, bottom right, the model appears to have added COVID face masks which are not present in the source artwork. In another funny example, the generative model seemingly changed the gender of a subject from a blonde woman to bald man (see Figure 5, middle row, center).

Examples of changes in narrative.

4.3. RQ2: User Experience

4.3.1. Experiencing body prompting

Participants generally found the experience of body prompting highly pleasant ( on a Likert-scale from 1 to 5, ). Creating images via body prompting was deemed to be overall a great experience (, ). When asked to describe why, participants provided different explanations. The most common reasons were that participants found it fun, interesting, and easy. The ease of the interaction via body prompting was commented on often. The in-app instructions were easy to understand and follow, providing a “fluent” (A38, D70) and “effortless” (C43) user experience.

In regard to body prompting, the installation provided a novel experience with interesting results. “Combining the own posture with an artwork” (D49) was perceived as entertaining activity and made for an interesting combination (D75). The physical interaction with the generative AI was perceived as interesting:

“It’s interesting to try what you can do with the AI and just actually physically do something and see it coming out.” (D76)

Body prompting was perceived as fun and lighthearted, with “not too much pressure participating” (A35). Many participants called it a “fun experiment” (e.g., C28, G79) and some participants appreciated that “you get to be creative and go crazy” (C28). Many participants wanted to try the installation a second time and experiment with different body prompts, such as F62 who wanted to “try a completely different pose than in the artwork”. Speaking about Hokusai’s “The Great Wave off Kanagawa”, H30 commented they “wanted to see if AI transforms us into humans or will it convert us to waves as there were no humans in the original artwork”. As depicted in Figure 5 (top right), the AI indeed added two humans in this case, although in other cases, it did not.

A small minority of participants voiced some discomfort and concerns about body prompting. Most of these comments, however, related to the study design and the technical limitations of the installation. For instance, three participants commented on the wait time which was a fixed part of the study design. Some participants commented on the countdown timer. On the one hand, F57 thought “it was quite a long time to stand and look at your own picture”. On the other hand, some participants wanted to have more time to decide on their body prompt. G66, for instance, thought the picture was taken too quickly and “there was no time to think”. Addressing another technical limitation, A24 thought it was a fun experience, but voiced disappointment about the facial expression not being captured by the body prompt. While privacy-by-design was explicitly appreciated by two participants (G61 and B65), a few other participants still voiced concerns about being photographed. This included comments from E18 and G78 who categorically do not like being photographed. G78 mentioned the picture-taking being “a little bit distressing”. A2 thought it was daring to perform in public, and C43 voiced concerns about being watched: “When I started to do my pose I started to think is there someone watching. Other than that it was fun to see what is coming up”.

We could observe that body prompting by a group of people generally tended to be more entertaining, both for the participants performing the body prompting and the audience. Groups of participants developed their own dynamics leading to playful interactions with the image generation system. Selecting the right source artwork often involved short discussions in which participants agreed on their body prompt. These group negotiations could, obviously, not be observed in single participants. However, some participants instead asked the audience on what body prompt to perform, turning the audience from passive spectators to active participants.

Overall, the novelty of the experience was appreciated by the participants, as exemplified by D47 who said that “this was a Friday afternoon’s fun thing, an experiment, and something new for me”. The fact that no specific body prompting instructions were given during the event was seen as positive by many participants who appreciated being able to apply their creativity. For instance, B77 thought it was “unclear what to expect” which was “a good thing for creativity”. Some participants further referred to the creative aspects of body prompting. B77 thought it was a creative experience, “I would have needed help for making the piece of art [with traditional means]. I felt that there was room for creativity”. B77 mentioned body prompting required “quick enough creativity” in the sense that it “does not have to be perfect”. F62 voiced an interest in exploring emotions with body prompting: “how artificial intelligence modifies – and how a work of art can be modified according to one’s own emotional scale. It would be interesting to use this to express feelings”.

4.3.2. Experiencing AI co-creation via body prompting

In this section, we describe how participants experienced the co-creative embodied interaction with the generative AI. Note that this experience is, in our study, influenced by the pre-selected source artwork and not every combination of source artwork and body prompt worked equally well. The participants’ experiences are, therefore, diverse and difficult to summarize. Experiences with AI co-creation in our study can broadly be divided into whether the generated image met (or exceeded) the participants’ expectations or not. Because the latter category is more insightful due to friction in the experience, we place emphasis on this latter category.

Among the participants who stated their expectations were met (), participants commented the generated image was as they had imagined. C3, for instance, said the generated imagine “expresses [the body prompt] pretty well”. Some participants found the image better than expected, often commenting on the funniness of the image. In this participant group, the generated image matched the dynamism of the body prompt. Participants in this group could recognize themselves and described the experience as interesting and fun.

For the participants who encountered some friction (), the installation was also fun and interesting and overall a very good experience, with some limitations. Participants often commented on the installation’s technical limitations, including its inability to reproduce facial expressions and exact hand gestures. This left room for the generative model to add its own interpretation. A23 said that “the first thing that catches [her] eye is those hands, they somehow look a bit unnatural”. In other cases, issues with distorted body parts were even more pronounced, as A35 remarked, “the fingers look like spaghetti” and B68 exclaimed “look at your head, it’s like an alien!”. A24 also encountered issues with the facial expressions, commenting that “the face is completely distorted”. Some generated images had an uncanniness and strangeness that is often associated with AI-generated visuals. For some participants, the weird generative artifacts made the image “pleasant to look at but hard to relate” (A16). On the other hand, the uncanniness was positive for some participants, such as A24, who, despite the distorted face, thought “the face is amazing”, “better than [we] thought”, and “a positive surprise”. Many participants had an expectation that the system would produce their likeness. C1, for instance, commented “I expected that the face would resemble my own face”. This was even more pronounced in children, as discussed in Section 4.6.2.

For a few participants, the uncanny artifacts shifted the mood of the image, sometimes making it more gloomy, sinister, or eerie than anticipated. For instance, after seeing the unnatural hands, A35 said “there is something disturbing” and A33 commented “it’s scarier than we thought”. A17 thought there was “a more serious atmosphere” in the generated image. Some participants said this mood change was unexpected, with a few participants commenting that they had wanted the image to be “more cheerful” (F62). B67 commented, after finding the outcome “sad and gloomy”, that the generated image “does not reflect what we meant”. A13 also commented that “it was supposed to be funny, and this is more grim”. On the other hand, one participant (A5) thought the generated image had a nice “Christmas feeling” that reminded him of a “Christmas carol”.

Some participants expressed their surprise at the generated image. For instance, G79 pointed out a gender change in the subjects, as depicted in Figure 5 (middle row, center): “I assumed there was a man and a woman in the picture, but they both look like men. The environment also surprises.” C1 also commented she “did not expect this outcome” and C4 said “it was fun to try but quite surprising”.

Participants generally found it difficult to understand why the generative model did what it did (even though the body prompt pose was presented to them). One participant tried to explain the mismatch between their likeness and the generated subject as being a mixture of characteristics of the source artwork and body prompt: “it has probably taken characteristics of both the original and me, so now it’s fun to compare the original to this” (F74). Most participants could not articulate reasons for deviations in the generated image from their body prompt or the source artwork, and very few tried to verbalize such explanations. This is partly due to many participants approaching the installation in a casual interaction without strong expectations. Participants also had difficulty imagining what the generated image would look like. E12 commented on the strong differential between expectation and outcome. According to her, “it has very hard to imagine how the outcome would be. That creates very strong expectations”. Without any reference in mind, she commented that it “affected the way you see the picture” and she also mentioned that seeing the generated images from other people gave her a better reference point and an anchor for her own body-prompted image generation.

4.4. RQ3: Participant Behavior

The participants’ key decisions can be summarized as follows:

-

•

Artwork choice: 52 participants (66%) selected an international artwork from Wikiart and 27 (34%) a local Finnish artwork.

-

•

Camera booth choice: 46 participants (58%) used the installation’s public posing stage, compared to 33 participants (42%) who posed in the private booth.

-

•

Participation mode: 44 participants (56%) participated as a group compared to 35 participants (44%) who body prompted individually.

In the following, we describe why participants selected a given source artwork which will provide context for understanding their body prompts.

4.4.1. Selecting the source artwork

Overall, participants spent some time on selecting their artwork which is indicative of the thought they put into their selection. Participants selected the source artwork for a variety of reasons.

Twelve participants mentioned they selected the artwork because it was familiar. A few participants () were attracted by specific objects in the source artwork. Others were attracted to the style of the artwork () and its mood (). C1 mentioned memories of her childhood attracted her to the artwork, when she “lived by the lake and did laundry”. C10 mentioned being attracted to the painting because it was modern and abstract. B61, on the other hand, preferred realistic source artworks over more abstract ones.

A large proportion of the participants () mentioned they selected the source artwork to contrast or complement their chosen approach to body prompting. For instance, the number of subjects in the artwork was often () chosen to match the participants’ group size. For a few participants, the number of subjects in the artworks was perceived as a limiting factor. A24, for instance, selected an artwork with three subjects to match their group size, because : “it was the only one with three characters”.

Some participants mentioned choosing an artwork with a simple pose, as it would be “easier” to mimic. On the other hand, in some cases, the source artwork became the source of inspiration for the body prompt. A23, for instance, mentioned the artwork “immediately gave [her] an idea as to how [the body prompt] could be implemented” and A7 stated that “maybe the artwork influenced us rather than the other way around”.

Participants often selected their source artwork prompt to match their posing strategy, aiming to either contrast (reimagine) or complement (imitate) the source artwork, as described in the following section.

4.4.2. Deciding on a body prompting strategy

When asked “Why did you pose the way you did?,” three distinct body prompting strategies emerged.

About 30% of participants body prompted to re-create the source artwork in an attempt to “mimic” (G79), “imitate” (G64), “resemble” (H11), “mirror” (F74), and “fit the artwork” (C3). Other participants simply did not want to “invent anything different” (A14). This may reflect a misunderstanding of the technology’s purpose, or simply a natural human behavior to mimic what we see displayed. However, in some cases, imitating the pose was not an easy task. E12, for instance, said she “was trying to replicate the [artwork], but the character had a leg completely up, and I did my best to replicate the pose”. Other participants also responded that their body prompt was inspired by the source artwork, such as C36 who “felt like [the pose] came from the artwork”. A13 were more specific in their imitation of the artwork: “we saw the artwork had a fishing rod. We thought lets do the same fishing rod. We were just imitating whatever was there in the artwork”. A34 responded being motivated by seeking harmony with the source artwork, “because it would adapt well to it, as it is a similar pose”. This was also reflected in E15, who said: “Well, it had to be just like that grandma pose”. B54 also mentioned being “most inspired by the artwork itself” and the pose “felt natural”.

Among the participants who imitated the source artwork, one motivation was to match its mood and atmosphere. C36, for instance, thought the artwork was “energetic and had some ‘Let’s go’ feeling” and this energy inspired her pose. B55 was touched by the smile in the source artwork and wanted to reciprocate it. F62 was also moved by the source artwork and its atmosphere, which inspired her body prompt and “kind of took [her] along”.

The second posing strategy among another third of the participants was to reinterpret the artwork. Participants in this group often reported wanting to “contrast” the source artwork to create a novel image that would significantly diverge. A5, for instance, mentioned seeking “a contrast between the posing and the artwork” to force a change in narrative and create a novel artwork. A33 reported wanting “to recreate the painting in a new way”. D75 mentioned wanting “to create a different atmosphere” which hints at the underlying motivation to cause a change in narrative between the original artwork and the generated image.

Experimentation was one motivator in this group, as participants were curious to see whether the installation could reproduce a diverging body prompt and what the reimagined artwork would look like. Another reason for the body prompt was to produce a funny or weird picture. This group had some pragmatic participants who assumed their pose “because nobody stopped us from doing so” (G78). Another motivation among a few participants in this group was to make a pose that was easily recognizable. B60, for instance, said he assumed the pose so that he could be recognized among multiple people in the group.

Finally, a third of the participants said they assumed the body prompt for no specific reason. These participants had no deep thoughts about their body prompt and simply went with their intuition in the moment, with the body prompt being “the first thing that came to mind” (A23, A24, C3) or body prompted “impulsively, on the spur of the moment” (B77), and F70 mentioned “just throwing [himself] into the moment”.

Among the participants in this group, a few responded that the body prompt was their “normal” (neutral) pose which is part of their personality (F70). As above, curiosity was also a key factor, even if they had no strong body prompting strategy. As A34 stated, she “just wanted to see the end result”, and “did not think about the pose so much”. B60 acknowledged that he “was just interested to see how the system reacts to my pose”. The choice of body prompt was not as important to this group of participants. The body prompt was sometimes assumed “in a hurry” (A38), without deeper reflection, or based on someone else’s suggestion. C44, for instance, reported that “someone suggested me to do a crazy pose”. This reflects the quick decision not being planned or “premeditated” (B71) and often improvised in the moment, without a specific strategy.

4.4.3. Deciding on a specific body prompt and teamwork

Participants in groups often collaborated by discussing and negotiating which image to select, which strategy to follow, and which body prompt to perform. The body prompt itself also often involved collaboration. For instance, two participants body-prompted back-to-back in a pose of strength and power (see Figure 5). Another pair of participants collaborated by forming a heart with their arms (see Figure 4). In some cases, communication was also observed between the performer and the audience who made suggestions on how to act. This happened more often when participants posed alone than in a group. This communication highlights the collaborative aspects of the installation and the importance of meta-communication in the co-creative process (Kantosalo and Takala, 2020).

When asked what they wanted to express with their body prompt, participant answers were extremely diverse. Expressing affection was mentioned by a few participants who wanted to hug others (H39) or portray “friendship” (A40) and “family” (A41). Participants had fun at the event which was also reflected in their body prompts. B59, for instance, wanted to show that they were ““having fun”, and B73 mentioned she “wanted to make something silly”. For some, there was a significant acting involved in their body prompt as they assumed a pose (presumably) different from their own personality. A45, for instance, was inspired by the source artwork and wanted to express “elegance and athleticism” with a pose describing “big movements”. A35 aimed to pose as an “authoritarian evil power” trying to “scare people”. This speaks to the entertaining character and playfulness of the installation.

4.5. RQ4: Body Prompting in Public

Our installation was designed to provide a choice of body prompting in public or private environments. Almost half of the participants () had no strong opinion about this choice and did not reveal many concerns about privacy. A significant number of participants () selected to body prompt in public or private for the pragmatic reason of having a shorter wait in line. H11, for instance, selected the public booth “because it was free and the other one was occupied” while group A35 “wanted the others to see what [they were] doing” (A35). Seeing the artwork on the big public screen, as opposed to the smaller laptop screen, also motivated some participants to select the public booth.

A minority (, 14%) mentioned they selected the private booth out of shyness and not wanting to pose in public considering that it provided a safe space where they “could be more [themselves] or do crazier things” (D76) and there was not “everyone staring” (G66, B73), which was perceived as embarrassing by a few participants (e.g., D51, F62). The private booth provided an environment to this group of participants with “no rush” (B72) and “not so much pressure” (B71, F53). However, F62 called her preference for the private booth “unnecessary shyness”, as seeing others perform in public was fun and enjoyable to her, but concerns kept creeping up when she was faced with the decision to pose in public herself.

The Big Five personality factors were not found to correlate with the choice of posing area (public vs private), participation mode (group vs alone), source artwork (international vs local), dynamism of the source artwork, and expressiveness of the body prompt (Spearman’s rank correlation, ), with two significant, but weak exceptions. The first correlation was found to be in the personality trait of agreeableness: participants who were more agreeable tended to select a more dynamic source artwork; , , Cohen (CI [0.04, 1.01]). Second, there was a reverse correlation between extraversion and the expressiveness (dynamism) of the body prompt; , , Cohen (CI [-1.01, -0.10]). This indicates that for participants with high level of extraversion, the expressiveness of their body prompt tended to be low. The finding may appear counterintuitive since extraversion is typically associated with sociability, enthusiasm, and positive emotions, traits that may be associated with more dynamic and expressive poses. The inverse correlation could suggest that extraverted individuals, perhaps due to their comfort with social interactions, may not feel the need to convey their personality as strongly through a single body prompt, or they might approach body prompting with a different mindset compared to more introverted individuals. However, the correlation was very weak, as the expressiveness of the body prompt is influenced by multiple factors beyond a participant’s level of extraversion, such as the context, the individual’s mood, or the cultural background.

4.6. Other Observations

4.6.1. Key moments during the deployment

A few exceptional body prompts were performed during the event. One notable instance was a re-enactment of a marriage proposal in the public posing area (see Figure 4, center) which was a heart-warming moment.

While the majority of generated images accurately represented the participant’s body prompt, in a few cases, image generation seemingly failed to reproduce the pose. An example of this is depicted in Figure 5 (bottom row, middle) and Table 2 where a group consisting of four participants performed a highly complex body prompt in the private booth. It featured one person on the shoulders of another and one person hanging in between two others. Unfortunately, while the generative model picked up that there was a tree-like structure with light and shadows in the source artwork, the resulting image (depicted in Figure 5, bottom center) did not reproduce the group’s intricate body prompt. This is likely due to the source artwork being abstract and containing no recognizable human pose.

4.6.2. Age appropriateness of the installation

The Researchers’ Night is an event visited by many families with children. Although children were not part of our interview study, they were allowed to use the installation in the presence of adult caretakers. This allowed an important observation: some children became very upset after using the installation. Two young children even started crying after seeing their generated image because they expected to see their likeness in the generated image. This happened even when the selected source artwork was abstract, as in the case of Jean-Michel Basquiat’s artworks. Clearly, the children had a different perception of the generated images and different expectations. For adults, the reimagined abstract artwork was interesting, but young children were often disappointed because they could not recognize themselves in the generated image.

5. Discussion

We evaluated body prompting as a novel interaction mechanism for generative AI art installations in public spaces. Overall, body prompting is a viable way of interacting with generative AI in a public setting, providing a highly engaging and fun experience for both participants and the audience. Our study identified three body prompting strategies employed by participants, focused on imitating the source artwork, re-imagining, or body prompting by “just being themselves”. Personality traits did not play an important role in the participants’ decisions and body prompts and the installation provided a novel experience which was appreciated by both extraverts and introverts.

5.1. Human-AI Co-Creation via Body Prompting

Generative AI has ushered in an era of human-AI co-creation (Kantosalo et al., 2020; Rezwana and Maher, 2023; Capel and Brereton, 2023). The human agency in this co-creative process varies on a spectrum (Parasuraman et al., 2000), from full human autonomy to full machine automation. In our study, participants experienced human-AI co-creation along this spectrum. Depending on the chosen source artwork and body prompt, the generative model had varying levels of influence on the generated image. In combination with the participants’ body prompting strategy, their experiences included either re-creation or reinterpretation, as discussed in Section 2.3. The participants pursued their strategy with varying levels of tenacity, but most interactions were casual, as participants picked a level of interaction suitable for their desired engagement (Pohl, 2015; Pohl and Murray-Smith, 2013).

While it can be argued whether the body-prompted images are art (McCormack et al., 2023; Coeckelbergh, 2023), the poietic act of performing a body prompt changed the dynamic of the creative process of image generation, placing the human in the center of the co-creative process in a public setting. In Kun et al.’s GenFrame, participants turned knobs to control image generation parameters, but otherwise relegated the creative process fully to the machine (Kun et al., 2024a). In our co-creative study, the AI became “more than just a machine”, with AI and humans being co-creators and co-performers (Coeckelbergh, 2023). This highlights the importance of interaction design in co-creative systems (Rezwana and Maher, 2023).

5.2. Recommendations for the Design of Body Prompting Installations

In this section, we reflect on the few shortcomings in the design of our study and installation, and draw on this experience to provide recommendations for practitioners interested in the design of installations and researchers conducting user studies involving interactive image generation in a GLAM context.

5.2.1. Multi-display setup and user experience design

A first recommendation concerns the setup with two separate displays, one for input and one for output. The main reason for this split was that we wanted to incorporate the interview procedure into the image generation process to bridge wait time. However, at times, the interviews became the bottleneck of the installation and participants started queuing to see the results. While this was not a significant issue at our event where visitors also had to queue at other exhibits, it should be avoided in future studies.

Design recommendation: Future installations should provide immediate feedback to participants without forced integration of a user study. Bottlenecks in the user flow should be avoided. We recommend to show generated images on the same screen as soon as they are available. If there is a choice between private and public body prompting, this needs to be explained at the event, for example with signage.

5.2.2. Manage expectations

As mentioned in Section 4.6.2,

some participants experienced frustration. This was particular pronounced with young children who were often disappointed and did not share their parents’ appreciation for the AI-generated images.

This raises a point related to managing expectations: an “AI Camera Booth” may raise expectations that the resulting images will resemble the body prompting person.

Our study’s purpose was slightly different from a “camera booth”, and we carefully designed communication materials to avoid setting this expectation.

Design recommendation:

Communication materials should be carefully designed to set the right expectations.

The purpose of the installation should be made clear to its visitors. If what is being provided is advertised as a “camera booth”, the technology should be able to reproduce the likeness of its users. If the focus is on reinterpreting existing artworks, the term camera booth should be avoided.

Facial expressions and hand gestures should be included in the body prompt.

5.2.3. Enable expressiveness

A body pose can only carry a limited amount of information.

In our case, some participants reported that the generated image was

more serious and sinister than expected.

This could be alleviated by including the facial expression in the body prompt and providing more fine-grained control over the image generation.

Design recommendation:

Include controls for users to set the mood of the generated image in addition to the body prompt,

for instance with Kun et al.’s rotating knobs (Kun et al., 2024a) or other means, such as a mood selectors or sliders in the application.

5.2.4. Enhancing user experience with AI co-creativity

Hallucinations add a surprising and playful element to the co-creative experience. Participants who encountered and noticed these hallucinations were entertained and intrigued.

Uncontrollable AI (Hauhio, 2024) adds value to the user experience and may foster creativity.

However, the trade-off between user and system agency should be carefully balanced (Moruzzi and Margarido, 2024).

Design recommendation:

Hallucinations and weird artifacts are part of the generative AI user experience and add an intriguing component.

We recommend not seeking to eliminate hallucinations, but embracing them in a playful way.

5.2.5. Interaction with the installation

To body prompt, users first needed to select a source artwork.

While this interaction could take place with gestures, we decided on a touch-screen display. However, users may be unaware of interactive content (Henderson et al., 2017).

We observed this interaction blindness in one case at the event where participants in the private booth faced the “first-click problem” – they did not realize the display was a touchscreen and used a mouse and keyboard that happened to be available.

With the public installation, this was not an issue since participants could observe other people’s use of the installation.

Design recommendation:

The first-click problem should be addressed with instructions that are carefully designed to fit the study purpose and not bias participants..

While we addressed this problem with a makeshift banner during the event, the private booth requires a slightly different design and instructions than the public posing area.

5.2.6. Takeaways and souvenirs

Many participants liked the resulting images and inquired about ways of downloading them. These participants developed a sense of ownership of the generated images.

In our event, we strived for a minimal setup and could not facilitate the download of images. Instead, participants were told to take an image of the artwork with their mobile phone.

Design recommendation:

Provide a means for visitors to take their body prompted image home with them. This could be in the form of a technical solution, such as a QR code for downloading the generated images, or via traditional means, such as print-outs.

5.2.7. Nudity and inappropriate imagery

Stable Diffusion has been shown to generate unsafe images and hateful memes (Qu et al., 2023).

Our own early testing during the development of the installation confirmed that generated images can contain accidental nudity and unsafe imagery, if not safeguarded.

Design recommendation:

Guardrails should be implemented to prevent unsafe generations in public installations.

We mitigated this issue by

carefully selecting source artworks, avoiding nudity and blood, and using a “negative” prompt designed to prevent the image generation model from producing explicit imagery.

The design of this prompt was based on best practices used in the community of text-to-image generation practitioners.

5.2.8. Materials

The selection of artworks with a popular, but also local context proved to be a good design choice, as the local art was well received.

However, in some cases, there were combinations of body prompts and source artworks that did not work well together.

Design recommendation:

Provide a variety of source artworks for visitors to experiment with, but ensure that they are related to the human figure.

In particular, provide source images that feature different

subjects and compositions to cater to the body prompting strategy of imitation.

Provide source artworks with different levels of complexity and body prompting difficulty, leaving the choice to the user.

At the same time, in order to allow for a more consistent comparison of the AI-generated images, it is critical to establish more specific criteria for the selection of the source artworks, for e.g. prioritizing figurative painting to the detriment of abstract art.

5.2.9. Personality traits

Personality traits, such as extraversion and openness, were not a significant factor in our study.

This is, in part, due to the specific context of the event that attracts a self-selected sample of participants with tendencies to being extraverted. The Researchers’ Night is visited by people curious to make new experiences, and our installation successfully provided these experiences.

In this regard, the value of having a separate private posing area is questionable.

A related issue is the nature of the private booth: it is hidden from view and easy to overlook. However, even if the booth had been better advertised, the general tendency among participants was that public booth was the preferred option, which is reflected in the visitor numbers and participant responses.

Design recommendation:

We recommend not creating a private booth, and instead focus all efforts on designing the public posing area for effective engagement with the audience.

6. Conclusion

Generative AI opens new opportunities and alternative ways to engage diverse audiences in image generation. Embodied interaction for co-creating images with generative AI is an intriguing possibility, providing a novel, fun, and highly engaging experience. Our study explored embodied interaction (body prompting) as a way to interact with image generation AI in public spaces. As demonstrated by our study, such interaction acts as a meaningful connector between historical artistic practices, such as the act of posing for a portrait, and new creative processes involving AI technology. As observed in our installation, generative AI provides a means for effectively fostering personal expression and collaborative co-creation. Our recommendations serve as guidance for future studies and the design of installations involving generative AI in the context of GLAM institutions and other public settings.

Acknowledgements.

This work is supported by the Natural Sciences and Engineering Research Council of Canada (NSERC). We thank the research assistants Aleksandra Hämäläinen, Saila Karjalainen, and Petri Salo for their contributions to this research project.References

- (1)

- Alorwu et al. (2022) Andy Alorwu, Niels van Berkel, Jorge Goncalves, Jonas Oppenlaender, Miguel Bordallo López, Mahalakshmy Seetharaman, and Simo Hosio. 2022. Crowdsourcing sensitive data using public displays—opportunities, challenges, and considerations. Personal and Ubiquitous Computing 26, 3 (jun 2022), 681–696. https://doi.org/10.1007/s00779-020-01375-6

- Amabile (1983) Teresa M. Amabile. 1983. The Social Psychology of Creativity. Springer, New York, NY. https://doi.org/10.1007/978-1-4612-5533-8

- Barranha (2018) Helena Barranha. 2018. Derivative Narratives: The Multiple Lives of a Masterpiece on the Internet. Museum International 70, 1–2 (2018), 22–33. https://doi.org/10.1111/muse.12190

- Barranha (2023) Helena Barranha. 2023. Reinterpreting Artists’ Self-Portraits through AI Derivative Creations. In EVA Berlin 2023. Elektronische Medien & Kunst, Kultur und Historie, Dominik Lengyel and Andreas Bienert (Eds.). BTU Brandenburgische Technische Universität Cottbus-Senftenberg, Berlim, Germany, 286–294.

- Benjamin and Lindley (2024) Jesse Josua Benjamin and Joseph Lindley. 2024. Shadowplay: An Embodied AI Art Installation. In Proceedings of the Eighteenth International Conference on Tangible, Embedded, and Embodied Interaction (TEI ’24). Association for Computing Machinery, New York, NY, USA, Article 93, 3 pages. https://doi.org/10.1145/3623509.3635318

- Brudy et al. (2014) Frederik Brudy, David Ledo, Saul Greenberg, and Andreas Butz. 2014. Is Anyone Looking? Mitigating Shoulder Surfing on Public Displays through Awareness and Protection. In Proceedings of The International Symposium on Pervasive Displays (PerDis ’14). Association for Computing Machinery, New York, NY, USA, 1–6. https://doi.org/10.1145/2611009.2611028

- Cain (2012) Susan Cain. 2012. Quiet: The Power of Introverts in a World That Can’t Stop Talking. Crown Publishers/Random House.

- Calvi and Vermeeren (2023) Licia Calvi and Arnold P.O.S. Vermeeren. 2023. Digitally enriched museum experiences – what technology can do. Museum Management and Curatorship 0, 0 (2023), 1–22. https://doi.org/10.1080/09647775.2023.2235683

- Canet Sola and Guljajeva (2024) Mar Canet Sola and Varvara Guljajeva. 2024. Visions Of Destruction: Exploring Human Impact on Nature by Navigating the Latent Space of a Diffusion Model via Gaze. In Proceedings of the Eighteenth International Conference on Tangible, Embedded, and Embodied Interaction (TEI ’24). Association for Computing Machinery, New York, NY, USA, Article 94, 5 pages. https://doi.org/10.1145/3623509.3635319

- Cao et al. (2021) Zhe Cao, Gines Hidalgo, Tomas Simon, Shih-En Wei, and Yaser Sheikh. 2021. OpenPose: Realtime Multi-Person 2D Pose Estimation Using Part Affinity Fields. IEEE Transactions on Pattern Analysis & Machine Intelligence 43, 01 (2021), 172–186. https://doi.org/10.1109/TPAMI.2019.2929257

- Capel and Brereton (2023) Tara Capel and Margot Brereton. 2023. What is Human-Centered about Human-Centered AI? A Map of the Research Landscape. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems (CHI ’23). Association for Computing Machinery, New York, NY, USA, Article 359, 23 pages. https://doi.org/10.1145/3544548.3580959

- Coeckelbergh (2023) Mark Coeckelbergh. 2023. The Work of Art in the Age of AI Image Generation: Aesthetics and Human-Technology Relations as Process and Performance. Journal of Human-Technology Relations 1 (Jun. 2023). https://doi.org/10.59490/jhtr.2023.1.7025

- Collier et al. (2014) Joel E. Collier, Daniel L. Sherrell, Emin Babakus, and Alisha Blakeney Horky. 2014. Understanding the differences of public and private self-service technology. Journal of Services Marketing 28, 1 (2014), 60–70.

- Digman (1990) John M. Digman. 1990. Personality Structure: Emergence of the Five-Factor Model. Annual Review of Psychology 41, Volume 41, 1990 (1990), 417–440. https://doi.org/10.1146/annurev.ps.41.020190.002221

- Frich et al. (2019) Jonas Frich, Lindsay MacDonald Vermeulen, Christian Remy, Michael Mose Biskjaer, and Peter Dalsgaard. 2019. Mapping the Landscape of Creativity Support Tools in HCI. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (CHI ’19). Association for Computing Machinery, New York, NY, USA, 1–18. https://doi.org/10.1145/3290605.3300619

- Fu et al. (2024) Kexue Fu, Ruishan Wu, Yuying Tang, Yixin Chen, Bowen Liu, and RAY LC. 2024. “Being Eroded, Piece by Piece”’: Enhancing Engagement and Storytelling in Cultural Heritage Dissemination by Exhibiting GenAI Co-Creation Artifacts. In Proceedings of the 2024 ACM Designing Interactive Systems Conference (DIS ’24). Association for Computing Machinery, New York, NY, USA, 2833–2850. https://doi.org/10.1145/3643834.3660711

- Glaser and Strauss (1967) Barney G. Glaser and Anselm L. Strauss. 1967. The discovery of grounded theory : strategies for qualitative research.

- Hauhio (2024) Iikka Hauhio. 2024. Enhancing Human Creativity with Aptly Uncontrollable Generative AI. In Proceedings of the 15th International Conference on Computational Creativity. Association for Computational Creativity (ACC).

- Hauhio et al. (2023) Iikka Hauhio, Anna Kantosalo, Simo Linkola, and Hannu Toivonen. 2023. The Spectrum of Unpredictability and its Relation to Creative Autonomy. In Proceedings of the 14th International Conference on Computational Creativity (ICCC ’23’). Association for Computational Creativity (ACC), 148–152.

- Henderson et al. (2017) Jane Henderson, Shaishav Siddhpuria, Keiko Katsuragawa, and Edward Lank. 2017. Fostering large display engagement through playful interactions. In Proceedings of the 6th ACM International Symposium on Pervasive Displays (PerDis ’17). Association for Computing Machinery, New York, NY, USA, Article 20, 8 pages. https://doi.org/10.1145/3078810.3078818

- Hosio et al. (2019) Simo Hosio, Andy Alorwu, Niels van Berkel, Miguel Bordallo López, Mahalakshmy Seetharaman, Jonas Oppenlaender, and Jorge Goncalves. 2019. Fueling AI with Public Displays? A Feasibility Study of Collecting Biometrically Tagged Consensual Data on a University Campus. In Proceedings of the 8th ACM International Symposium on Pervasive Displays (PerDis ’19). Association for Computing Machinery, New York, NY, USA, Article 14, 7 pages. https://doi.org/10.1145/3321335.3324943